Trimming screening tests and modern psychometrics Paul K. Crane, MD MPH General Internal Medicine University of Washington

Outline • Background on screening tests • 2 x 2 tables • ROC curves • Consideration of strategies for shortening tests • A word or two on testlets

I. Background on Screening

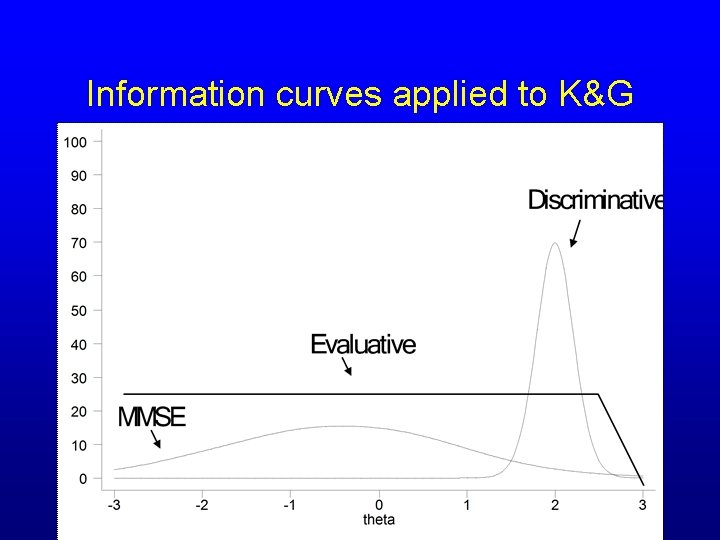

Purposes of measurement • Discriminative (e.g., screening) • Evaluative (e.g., longitudinal analyses) • Predictive (e.g., prognostication) – After Kirshner and Guyatt (1985)

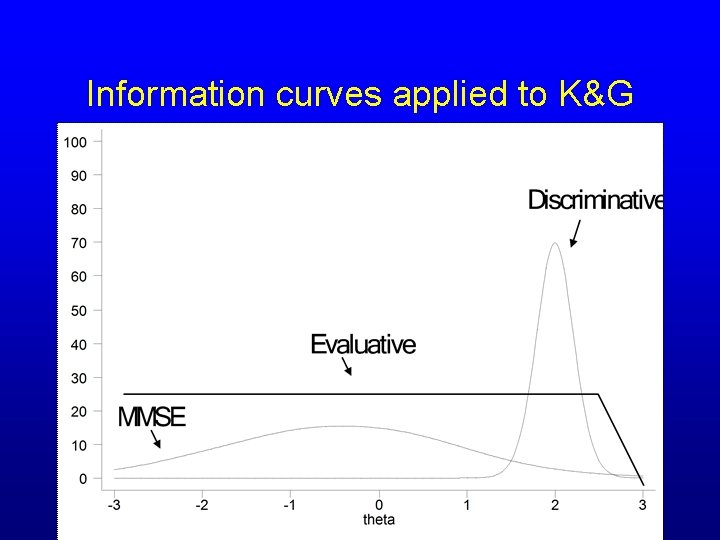

Information curves applied to K&G

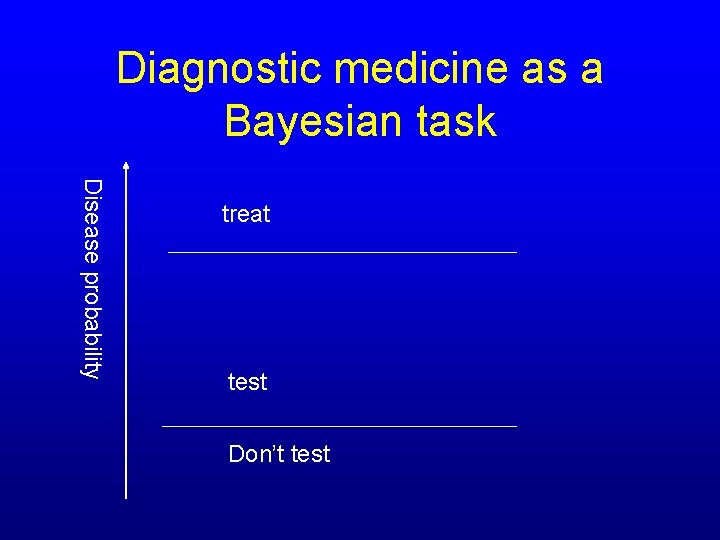

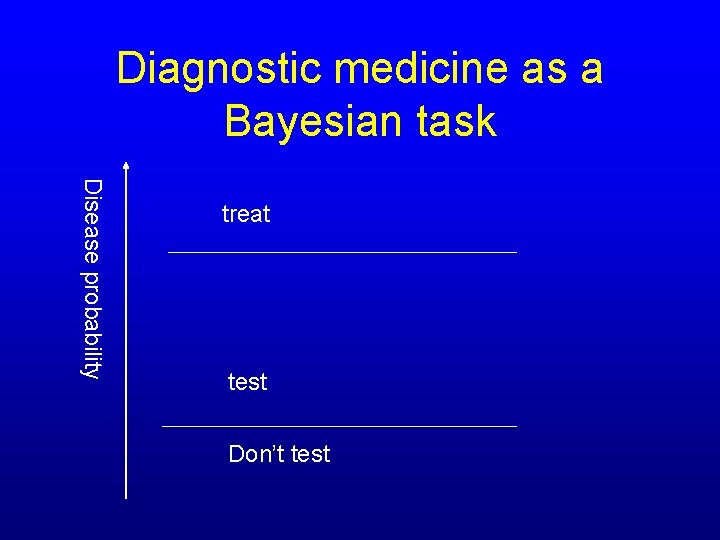

Diagnostic medicine as a Bayesian task [Figure: disease-probability axis divided into three regions: don’t test, test, treat]

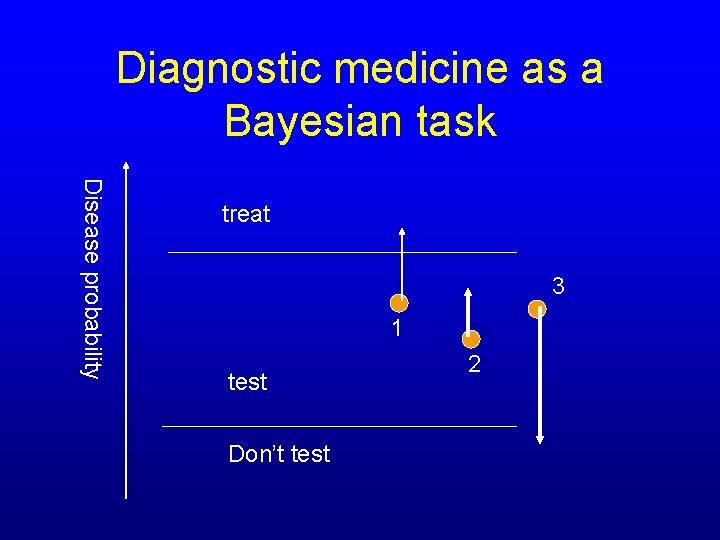

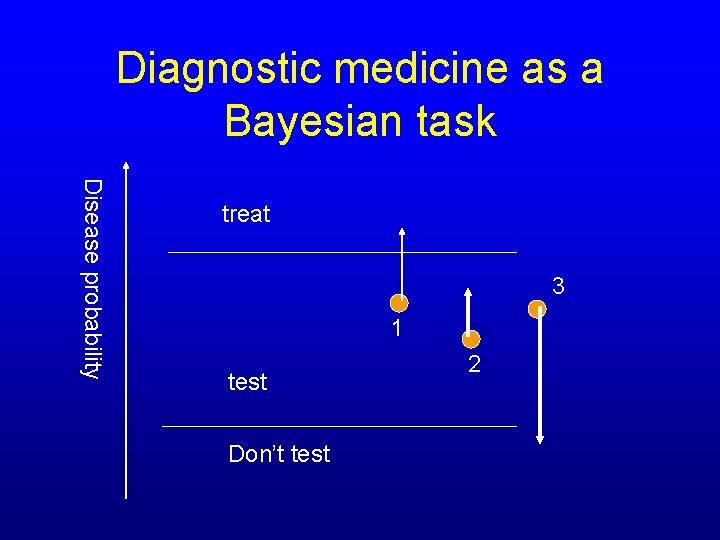

Diagnostic medicine as a Bayesian task [Figure: the same disease-probability axis (don’t test, test, treat) with three numbered example points, 1, 2 and 3]

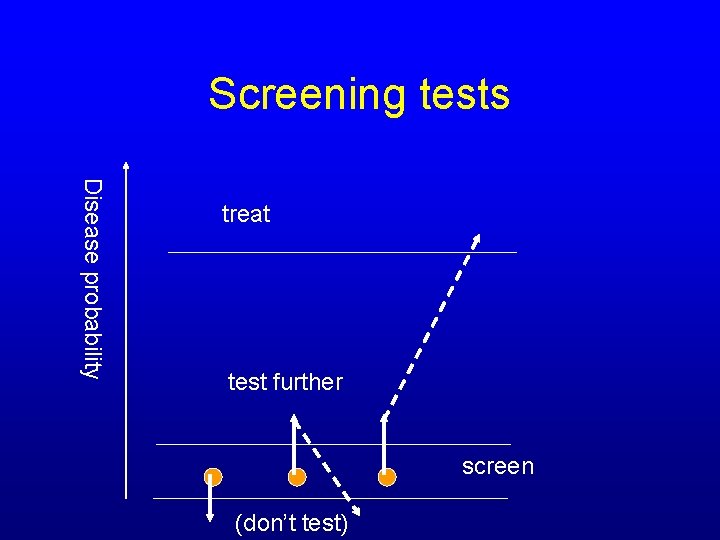

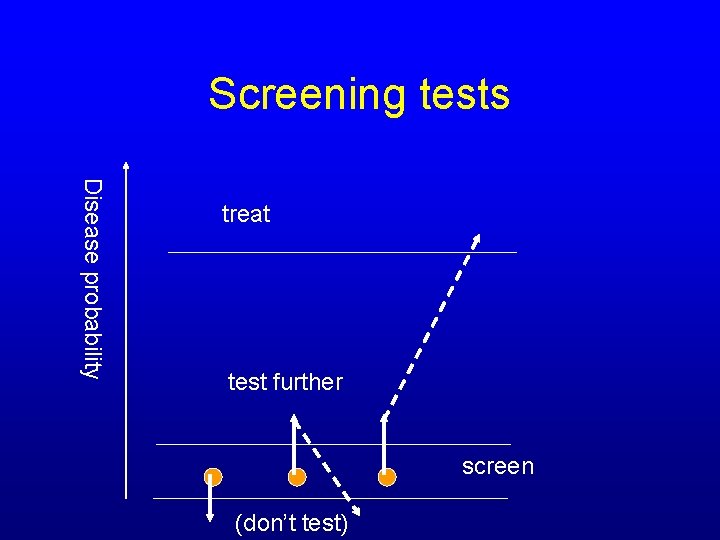

Screening tests are the same – only different • Screening implies applying the test to asymptomatic individuals in whom there is no specific reason to suspect the disease – In terms of the previous slides, the test/don’t test threshold is 0 • Often the result of a screening test is a need for further testing rather than a specific diagnosis – Need for biopsy rather than need for chemotherapy

Screening tests [Figure: disease-probability axis divided into three regions: further screen (don’t test), test, treat]

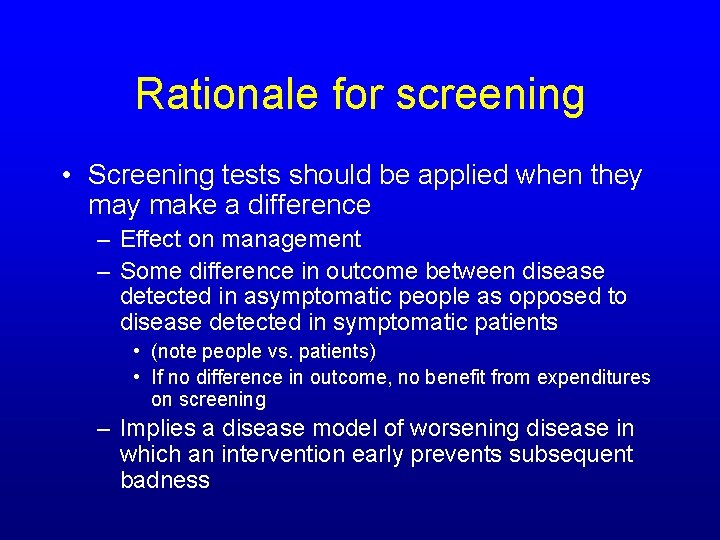

Rationale for screening • Screening tests should be applied when they make a difference – Effect on management – Some difference in outcome between disease detected in asymptomatic people as opposed to disease detected in symptomatic patients • (note people vs. patients) • If no difference in outcome, no benefit from expenditures on screening – Implies a disease model of worsening disease in which an intervention early prevents subsequent badness

Screening isn’t always a good idea • Lung cancer in smokers with CT scans (disease grows too fast) • Liver cancer with CEA in Hep C patients (yield too low, false negatives too common – test isn’t accurate enough) • Breast cancer in young women (disease is less prevalent but more aggressive, breast biology leads to higher false-positive rates in young women, which in combination lead to unacceptable morbidity for a negligible mortality benefit)

What about dementia? • No DMARD equivalents (so far); marginal benefit to early detection – Planning, QOL decisions, etc. – Potential harm in early detection? • There are those who advocate population-based screening now (Borson 2004) – USPSTF says evidence insufficient to recommend for or against screening – Spiegel letter to editor (2007); Brodaty paper (2006) • Primary purpose is research

What about CIND / MCI? • Even less rationale for population-based screening – In several studies, while patients with MCI have an increased risk of progressing to AD, for any individual with MCI their risk for reverting to normal is higher than their risk for AD. – No intervention known to reduce rates of conversion • Again, research rationale

Research rationale • Parameter of interest is the rate of disease in the general population (or other denominator) • Most valid way: gold standard test applied to entire population – Chicago study, ROS, some others – Problems: expensive • General idea: apply a screening test / strategy to determine who should receive gold standard eval – Most of the epidemiological studies of cognitive functioning use this strategy

2-stage sampling • 1st stage: everyone in enumerated sample receives a screening test/strategy • 2nd stage: some decision rule is applied to the 1st stage results to identify people who receive further evaluations to definitively rule in or rule out disease • Analysis: disease status from 2nd stage extrapolated back to the underlying sample from the 1st stage

Variations on a theme • Simplest: single cut-point, no sampling over the cutpoint – EURODEM, ACT • Slight elaboration: single cut-point, 100% below, small % above – Can address possibility of false negatives – CSHA • Fancier still: age/education adjusted cutpoints, sampling (Cache County) (also case-cohort design, which is even more fancy)

Validity of the screening protocol • Imagine an epidemiological risk factor study • Risk factor is correlated with educational quality (e.g., smoking, obesity, untreated hypertension…) • Educational quality is associated with DIF on the screening test – People with lower education have lower scores for a given degree of actual cognitive deficit • Borderline people with higher education more likely to escape detection by the screening test; ignored by the study • Rates extrapolated back: biased study

DIF in screening tests • DIF thus becomes a key feature for the validity of epidemiological studies that employ 2-stage sampling designs – Crane et al. Int Psychogeriatr 2006; 18: 505-15. • Overwhelmingly ignored in the literature – Entire session on epidemiological studies of HTN at VasCog 2007 in which education and SES were not mentioned at all • Not really the focus this year, but could be an important feature of validation

Test accuracy in 2-stage sampling • Begg and Greenes. Assessment of diagnostic tests when disease verification is subject to selection bias. Biometrics. 1983; 39: 207-215 (web site) • Straightforward way to extend back to the original population
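The extrapolation back to the first-stage sample can be sketched in a few lines. This is a minimal illustration of the Begg and Greenes style of correction, assuming that the decision to verify depends only on the screening result; the function name, argument names and counts are invented for the example and are not taken from any of the studies mentioned.

```python
def begg_greenes(n_pos, n_neg, ver_pos_d, ver_pos_nd, ver_neg_d, ver_neg_nd):
    """Correct sensitivity/specificity for partial verification.

    n_pos, n_neg: screen-positive / screen-negative counts in stage 1.
    ver_*_d, ver_*_nd: diseased / non-diseased counts among those who
    received the gold standard in stage 2, by screening result.
    Assumes verification depends only on the screening result.
    """
    # Disease probability given screening result, from the verified subsample
    p_d_pos = ver_pos_d / (ver_pos_d + ver_pos_nd)
    p_d_neg = ver_neg_d / (ver_neg_d + ver_neg_nd)

    # Expected full 2 x 2 table for the whole stage-1 sample
    exp_tp = n_pos * p_d_pos
    exp_fp = n_pos * (1 - p_d_pos)
    exp_fn = n_neg * p_d_neg
    exp_tn = n_neg * (1 - p_d_neg)

    sens = exp_tp / (exp_tp + exp_fn)
    spec = exp_tn / (exp_tn + exp_fp)
    prevalence = (exp_tp + exp_fn) / (n_pos + n_neg)
    return sens, spec, prevalence


# Hypothetical study: 1000 screened, 200 screen positive (all verified),
# 800 screen negative (10% verified).
sens, spec, prev = begg_greenes(
    n_pos=200, n_neg=800,
    ver_pos_d=100, ver_pos_nd=100,
    ver_neg_d=4, ver_neg_nd=76,
)

# Naive estimates that use only the verified people, for comparison
naive_sens = 100 / (100 + 4)
naive_spec = 76 / (76 + 100)
```

In this made-up example the naive sensitivity is far too high and the naive specificity far too low, because screen positives are over-represented among those verified.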

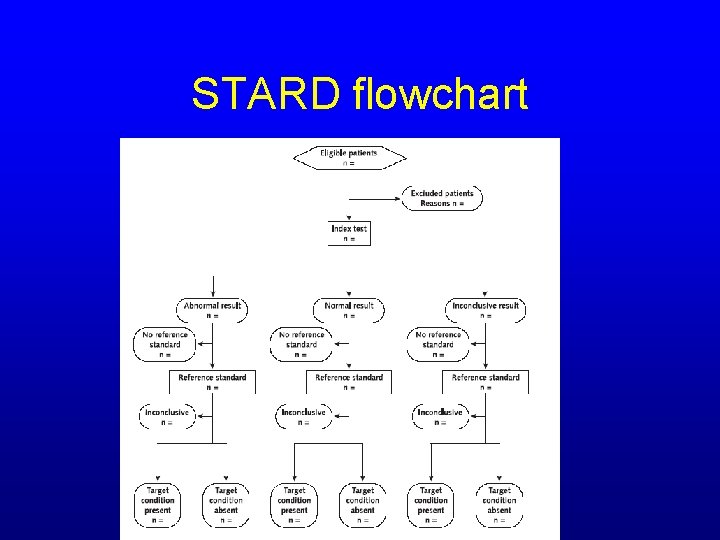

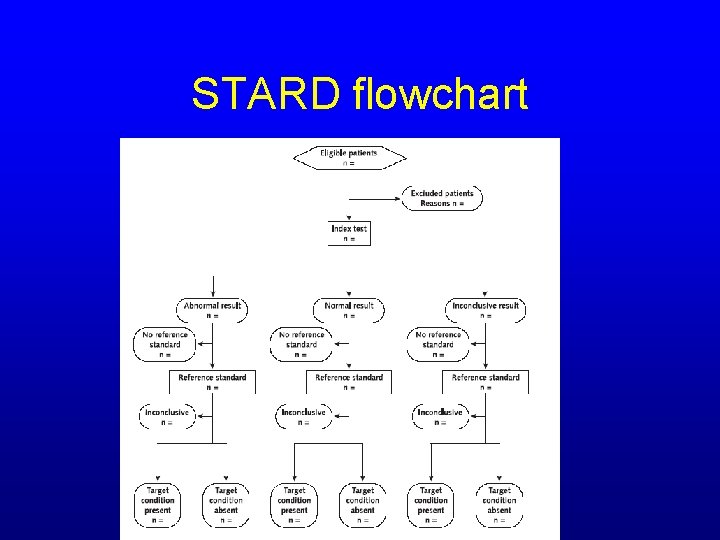

Quality of papers on diagnostic tests • STARD initiative. Ann Int Med 2003 (web site) • Provides a guideline for high-quality articles about diagnostic or screening tests • We should play by these rules • There is a checklist (p. 42) and a flow chart (p. 43; next slide) – Nothing too surprising – Reviews on quality of papers about diagnostic and screening tests: quality is terrible.

STARD flowchart

II. 2 x 2 tables

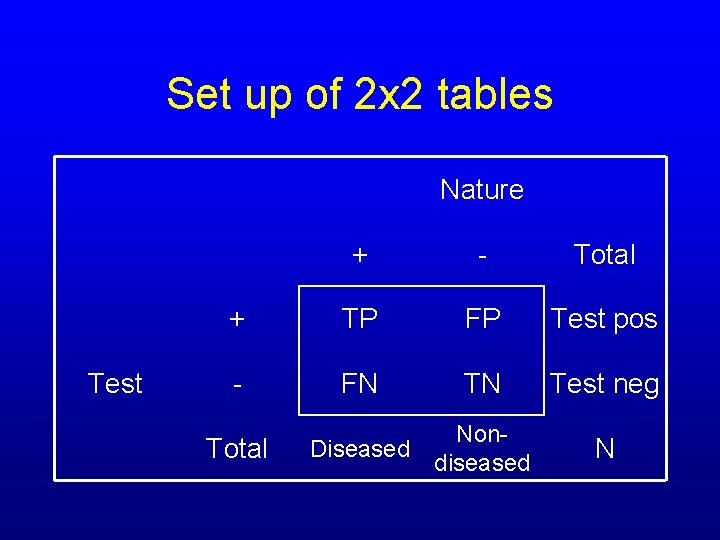

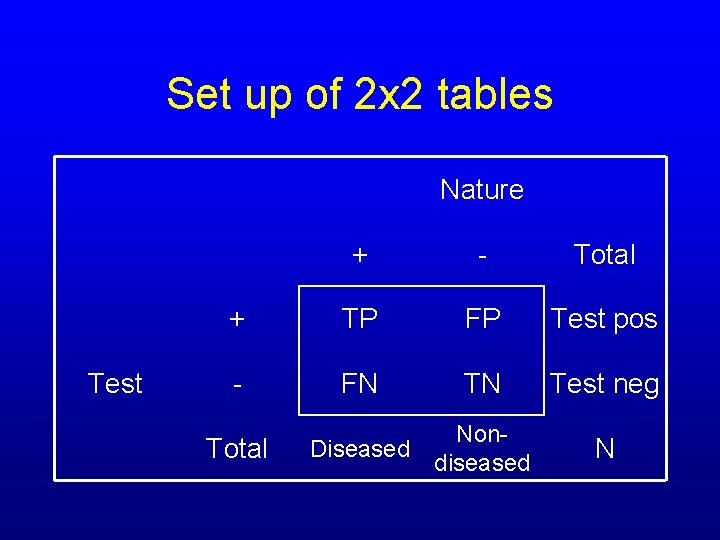

Set up of 2 x 2 tables

| | Nature + | Nature - | Total |
|---|---|---|---|
| Test + | TP | FP | Test pos |
| Test - | FN | TN | Test neg |
| Total | Diseased | Nondiseased | N |

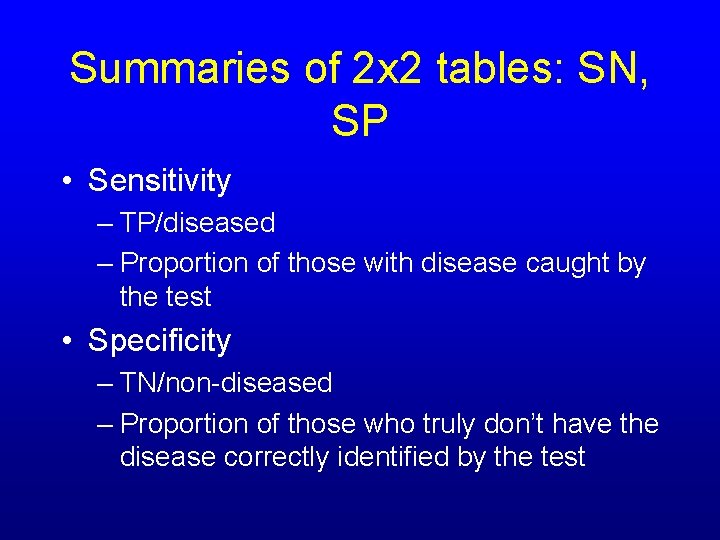

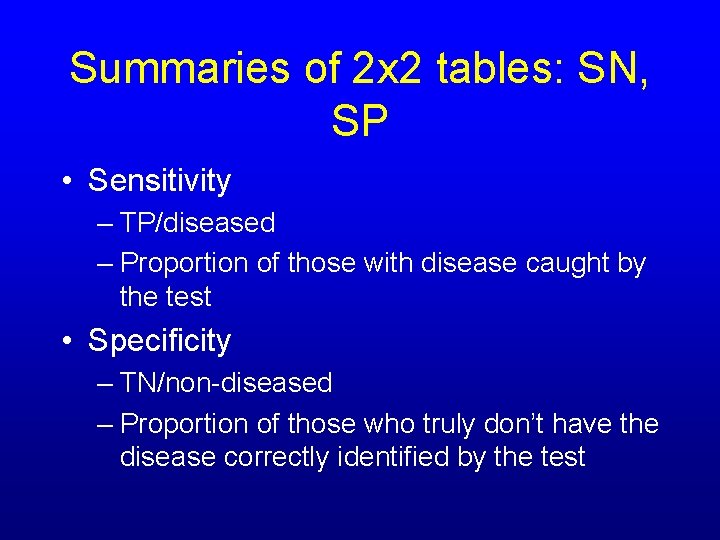

Summaries of 2 x 2 tables: SN, SP • Sensitivity – TP/diseased – Proportion of those with disease caught by the test • Specificity – TN/non-diseased – Proportion of those who truly don’t have the disease correctly identified by the test

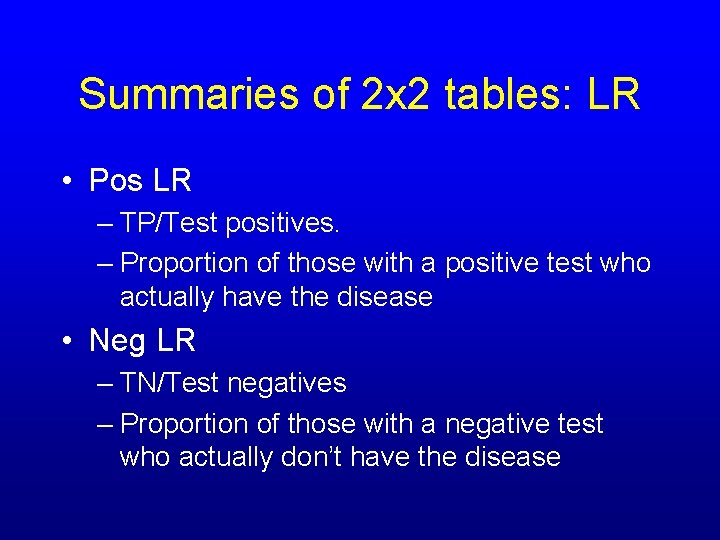

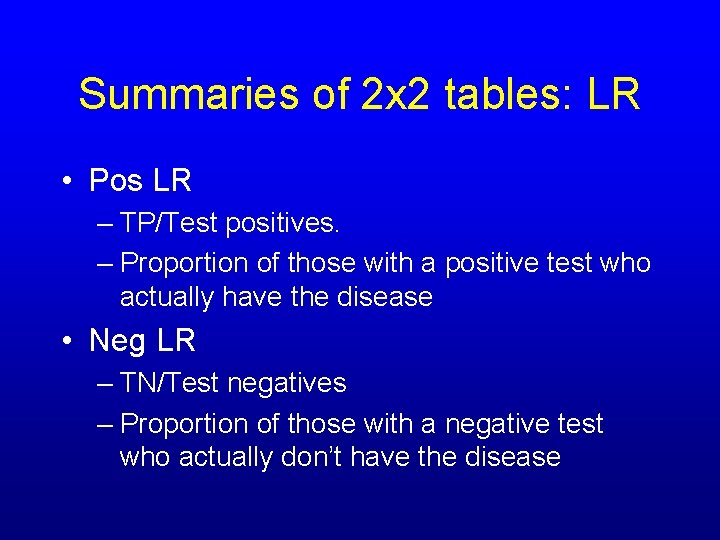

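Summaries of 2 x 2 tables: predictive values and LR • Positive predictive value (PPV) – TP/Test positives – Proportion of those with a positive test who actually have the disease • Negative predictive value (NPV) – TN/Test negatives – Proportion of those with a negative test who actually don’t have the disease • Likelihood ratios are different quantities – Pos LR = SN/(1 – SP) – Neg LR = (1 – SN)/SP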
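The 2 x 2 summaries can all be computed from the four cells. The sketch below uses invented counts; the class and method names are illustrative only. Likelihood ratios use their standard definitions, LR+ = SN/(1 – SP) and LR– = (1 – SN)/SP, alongside the row-based proportions TP/Test positives and TN/Test negatives.

```python
from dataclasses import dataclass


@dataclass
class TwoByTwo:
    tp: int  # test positive, diseased
    fp: int  # test positive, non-diseased
    fn: int  # test negative, diseased
    tn: int  # test negative, non-diseased

    def sensitivity(self) -> float:
        return self.tp / (self.tp + self.fn)

    def specificity(self) -> float:
        return self.tn / (self.tn + self.fp)

    def ppv(self) -> float:
        # TP / test positives
        return self.tp / (self.tp + self.fp)

    def npv(self) -> float:
        # TN / test negatives
        return self.tn / (self.tn + self.fn)

    def lr_pos(self) -> float:
        return self.sensitivity() / (1 - self.specificity())

    def lr_neg(self) -> float:
        return (1 - self.sensitivity()) / self.specificity()


# Hypothetical counts: 100 diseased, 900 non-diseased
table = TwoByTwo(tp=80, fp=90, fn=20, tn=810)
summary = {
    "SN": table.sensitivity(),
    "SP": table.specificity(),
    "PPV": table.ppv(),
    "NPV": table.npv(),
    "LR+": table.lr_pos(),
    "LR-": table.lr_neg(),
}
```

With these counts the test looks good by sensitivity and specificity, yet fewer than half of the screen positives have the disease, because the disease is uncommon in the sample.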

SPIN, SNOUT • Need a (positive result on a) SPecific test to rule something IN • Need a (negative result on a) SENsitive test to rule something OUT • Decent rule of thumb but doesn’t apply pre-test probabilities
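The rule of thumb leaves out the pre-test probability; the standard odds form of Bayes' rule puts it back in. A minimal sketch, with an illustrative function name and made-up pre-test probabilities:

```python
def post_test_probability(pre_test_prob: float, likelihood_ratio: float) -> float:
    """Post-test probability via odds: post-odds = pre-odds x LR."""
    pre_odds = pre_test_prob / (1 - pre_test_prob)
    post_odds = pre_odds * likelihood_ratio
    return post_odds / (1 + post_odds)


# Same positive result (LR+ = 8) in two hypothetical settings
screening_setting = post_test_probability(0.01, 8.0)  # asymptomatic population
clinic_setting = post_test_probability(0.30, 8.0)     # symptomatic patients
```

The same positive result leaves the disease unlikely in the low-prevalence screening setting and likely in the clinic setting, which is why a positive screen usually leads to further testing rather than treatment.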

II. ROC curves

ROC curves • "Signal Detection Theory" • World War II: analysis of radar images • Radar operators had to decide whether a blip on the screen represented an enemy target, a friendly ship, or just noise • Signal detection theory measured the ability of radar receiver operators to do this, hence the name Receiver Operating Characteristic • In the 1970s, signal detection theory was recognized as useful for interpreting medical test results http://gim.unmc.edu/dxtests/roc3.htm

ROC basics • ROC curves plot – sensitivity vs. (1 – specificity) – (the true positive rate vs. the false positive rate) – at each possible cutpoint • Useful for visualizing the impact of various potential cutoff points on a continuous measure (continuous → binary) • Economic decision on cutpoint; no single right answer
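As a concrete illustration of plotting sensitivity against 1 – specificity at each possible cutpoint, here is a small sketch in plain Python. The scores and disease labels are invented, and it assumes that lower scores indicate worse cognition, so a person screens positive when the score is at or below the cutpoint.

```python
def roc_points(scores, diseased):
    """Return (1 - specificity, sensitivity) at every observed cutpoint.

    A person screens positive when score <= cutpoint (low = impaired).
    """
    n_d = sum(1 for d in diseased if d)
    n_nd = len(diseased) - n_d
    points = [(0.0, 0.0)]  # cutpoint below every score: nobody screens positive
    for cut in sorted(set(scores)):
        tp = sum(1 for s, d in zip(scores, diseased) if d and s <= cut)
        fp = sum(1 for s, d in zip(scores, diseased) if not d and s <= cut)
        points.append((fp / n_nd, tp / n_d))
    return points


def auc(points):
    """Area under the ROC curve by the trapezoid rule."""
    area = 0.0
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        area += (x1 - x0) * (y0 + y1) / 2
    return area


# Hypothetical screening scores for 4 diseased and 6 non-diseased people
scores = [60, 65, 70, 72, 78, 80, 85, 88, 90, 95]
diseased = [True, True, True, False, True, False, False, False, False, False]

points = roc_points(scores, diseased)
area = auc(points)
```

The area equals the proportion of diseased/non-diseased pairs in which the diseased person has the lower score, here 23 of 24 pairs.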

Limitations of ROC curves • Not intended to help with choosing particular items or for improving tests • Doesn’t tell us which parts of the test (items) are helpful in the region of interest • Doesn’t help us in combining the best items from several tests

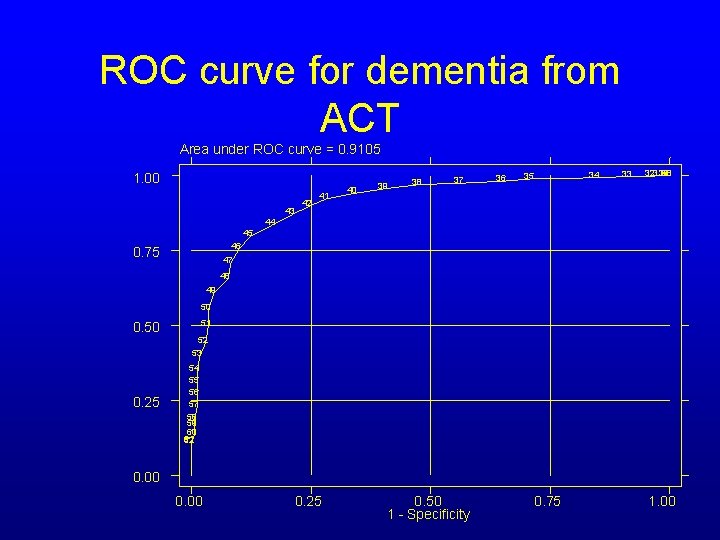

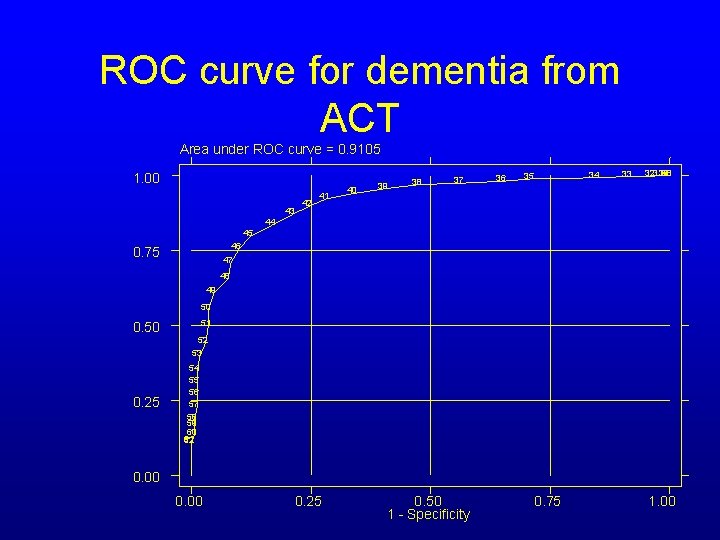

ROC curve for dementia from ACT [Figure: ROC curve with points labeled by cutpoint score; x-axis: 1 - Specificity; area under ROC curve = 0.9105]

Optimality from an ROC curve • Always tradeoffs between sensitivity and specificity • Also numbers of people who need to be evaluated with the gold standard test (number of individuals who will screen positive) • Optimal point depends on consequences of missing cases (false negatives), costs of working up false positives • Breast cancer: 10:1 for sufficient sensitivity
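One way to make the tradeoff explicit is to attach a cost to each false negative and each false positive and pick the cutpoint with the lowest total. The sketch below is only an illustration: the data, the cost values and the function name are assumptions, and real costs would have to come from the clinical or research context.

```python
def best_cutpoint(scores, diseased, cost_fn, cost_fp):
    """Return (cutpoint, total_cost, n_fn, n_fp) minimizing total cost.

    Screen positive when score <= cutpoint (low score = impaired).
    Ties go to the lowest cutpoint.
    """
    best = None
    for cut in sorted(set(scores)):
        n_fn = sum(1 for s, d in zip(scores, diseased) if d and s > cut)
        n_fp = sum(1 for s, d in zip(scores, diseased) if not d and s <= cut)
        cost = cost_fn * n_fn + cost_fp * n_fp
        if best is None or cost < best[1]:
            best = (cut, cost, n_fn, n_fp)
    return best


# Hypothetical screening scores for 4 diseased and 6 non-diseased people
scores = [60, 65, 70, 72, 78, 80, 85, 88, 90, 95]
diseased = [True, True, True, False, True, False, False, False, False, False]

# Missing a case is 10 times worse than working up a false positive
miss_is_costly = best_cutpoint(scores, diseased, cost_fn=10, cost_fp=1)

# Working up a false positive is 10 times worse than missing a case
workup_is_costly = best_cutpoint(scores, diseased, cost_fn=1, cost_fp=10)
```

The same data give a higher cutpoint (more screen positives) when misses are expensive and a lower one when work-ups are expensive, which is the sense in which there is no single right answer.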

What about normal/CIND/dementia? • Chengjie Xiong: ROC surface (Stat Med 2006; 25: 1251-1273) – May have the same issues in terms of tradeoffs – Does missing a case of CIND have the same impact as missing a case of dementia? – Should we try to use the same tool to do both tasks? Dementia/normal is an easier target than CIND/normal. Dementia/CIND is hard and primarily depends on whether deficits have a functional impact, which in turn is very hard to tease out

III. Shortening of psychometric tests: strategies used in the literature

Search strategy • #1: “short*” • #2: “psychometric test*” • #1 AND #2 • Convenience sample of resulting articles – One or two examples of each technique

CTT strategies • Bengtssen et al. (2007): item-total correlations > 0.80; missing > 5% – Standard CTT approaches to limiting an item pool (also commonly see low item-total correlations excluded) – Doesn’t use disease status

Brute force strategies • Christensen et al. (2007) looked at all pairs of 2 tests for each subdomain and compared based on alpha and correlation with the subdomain (Psychological Assessment; WAIS-3 SF)

Regression strategies • Regress on total score (for evaluative tests) or use logistic regression approaches – Sapin et al. (2004) used linear regression to predict a longer measure; nice series of validation steps including an independent validation sample – Eberhard-Gran et al. (2007) used stepwise linear regression; no external validation • Problems: overfitting, ignores collinearity of items – Need a 2nd confirmatory sample and/or some bootstrapping approach for model optimism – Also need a modeling strategy: Forwards/backwards stepwise? Best subsets?

EFA strategies • Rosen et al. (2007) looked at loadings from EFA and chose items with the highest loadings – No use of external (disease status) information – Highest loadings (parallel to highest item discriminations) have nothing to do with item difficulty; may well end up selecting highly discriminating items with no relevance to disease/no disease

CFA strategies • Bunn et al. (2007) used MPLUS: CFA on a new sample, modified paths and eliminated items to improve fit statistics – No independent sample confirmation – No disease status reference

IRT strategies • Gill et al. (2007) – Bayesian IRT but I can’t figure out how they reduced their scale • Beevers et al. (2007) used nonparametric IRT – single sample. If the items looked bad they threw them out. Psych Assessment – Both of these papers relied only on IRT parameters to reduce the scale, not anything external (e.g., disease status)

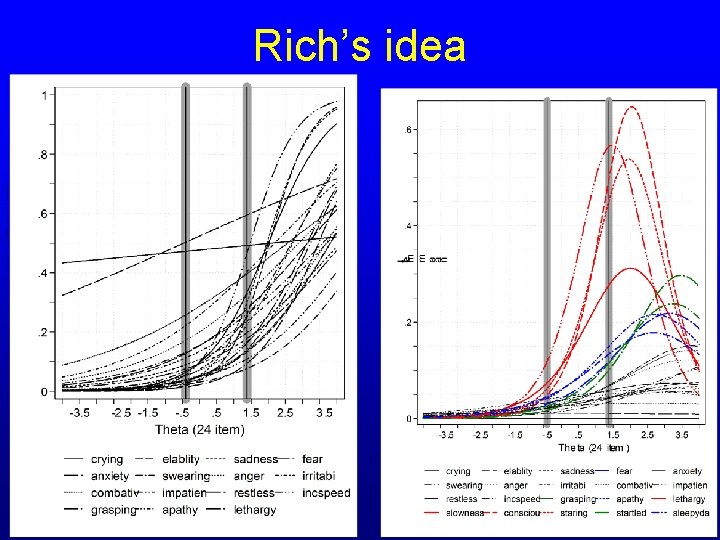

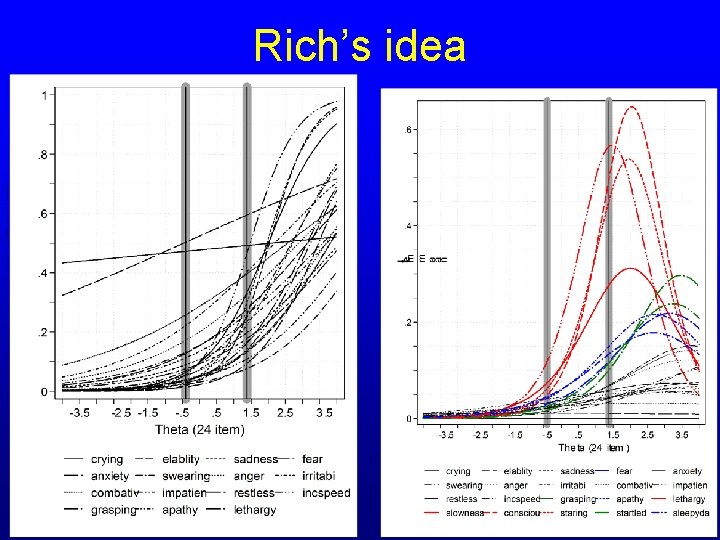

Combining IRT with external information • Combine item characteristic / information curves with some indicator of disease status – Paul’s old idea: ROC curves, identify region of interest, determine items with maximal information in that region – Rich’s new and simpler (and thus likely better) idea: box plots for diseased and non-diseased individuals superimposed on the ICCs/IICs • Takes advantage of the fact that item difficulty and person ability are on the same scale
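The "maximal information in a region of interest" idea can be sketched with the standard 2PL item information function, I(θ) = a²P(θ)(1 – P(θ)). Everything specific here is an assumption for illustration: the item names and parameters are invented, and the region of interest is simply taken as given rather than derived from ROC curves or box plots of diseased and non-diseased scores.

```python
from math import exp


def p_correct(theta, a, b):
    """2PL probability of a correct response."""
    return 1 / (1 + exp(-a * (theta - b)))


def item_information(theta, a, b):
    """2PL item information: a^2 * P * (1 - P)."""
    p = p_correct(theta, a, b)
    return a * a * p * (1 - p)


def rank_items(items, theta_lo, theta_hi, steps=50):
    """Rank items by average information on [theta_lo, theta_hi], best first."""
    grid = [theta_lo + (theta_hi - theta_lo) * i / steps for i in range(steps + 1)]
    avg = {
        name: sum(item_information(t, a, b) for t in grid) / len(grid)
        for name, (a, b) in items.items()
    }
    return sorted(avg, key=avg.get, reverse=True)


# Hypothetical items: name -> (discrimination a, difficulty b)
items = {
    "easy": (1.5, -2.0),
    "mid": (1.5, 0.0),
    "hard": (1.5, 1.5),
    "weak_easy": (0.5, -2.0),
}

# Hypothetical region of interest around the screening threshold
ranking = rank_items(items, theta_lo=-2.5, theta_hi=-1.5)
```

Three of the items share the same discrimination, yet only the one located in the region of interest contributes much information there, which is the reason selection on loadings or discrimination alone can miss the target.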

Rich’s idea

Paul’s idea

Extensions of IRT / external information approaches • Targeted creation / addition of new items in particular regions of theta scale seems like a reasonable strategy • We have only talked about fixed forms – CAT is a reasonable extension – CAT likely more relevant for evaluative tests – Could terminate early if results became clear – reduced burden for those not close to the threshold

Other strategies • Decision trees – A bit like PCA – Based entirely on relationships with disease • Random forests – Machine learning technique; extension of decision trees – Microarray and GWA applications – Jonathan Gruhl – expertise obtained since he first heard of this topic on Monday

General comments • Literature is pretty wide open • Seems like IRT provides some useful tools • IRT wedded to distribution of scores of diseased / non-diseased individuals seems like a good strategy • Machine learning tools are interesting – Ignore covariation between items and the theory-item link • Hope to compare/contrast strategies with CSHA data

IV. Testlet response theory

Rationale • IRT posits unidimensionality: a single underlying latent trait (domain) explains observed covariation between items • Various tools to address this assumption – Literature essentially always concludes that scales are sufficiently unidimensional to do what the investigator wanted to do in the first place • See JS Lai, D Cella, P Crane, “Factor analysis techniques for assessing sufficient unidimensionality of cancer related fatigue.” Qual Life Res 2006; 15: 1179-90.

Dimensionality of cognitive screening tests • Initial MMSE and 3MS papers do not mention different cognitive domains • Initial CASI paper (also by Evelyn Teng) describes 9 domains: long-term memory, orientation, attention, concentration, short-term memory, language, visual construction, fluency, abstraction and judgment

Single factor (IRT) model

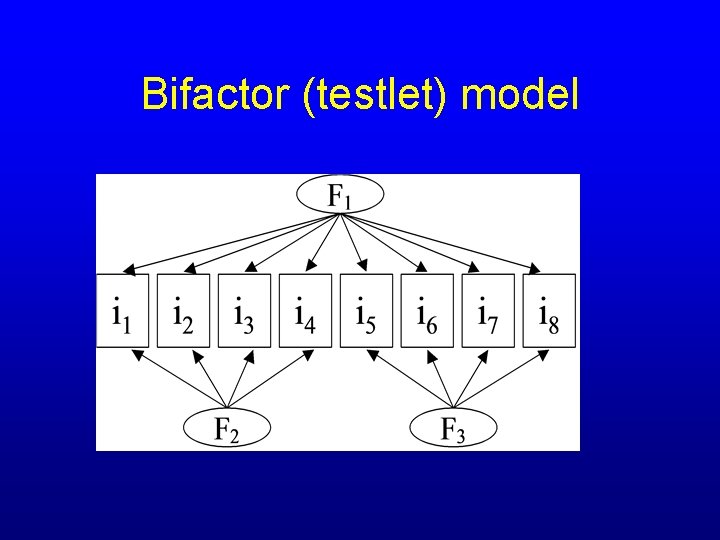

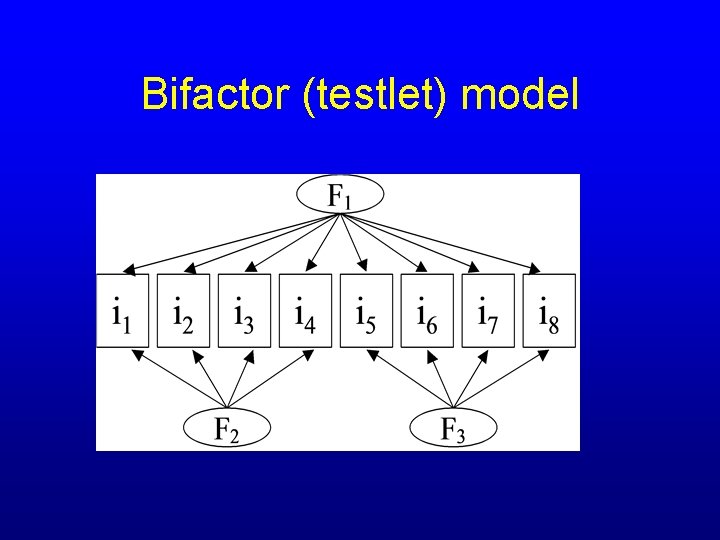

Bifactor (testlet) model

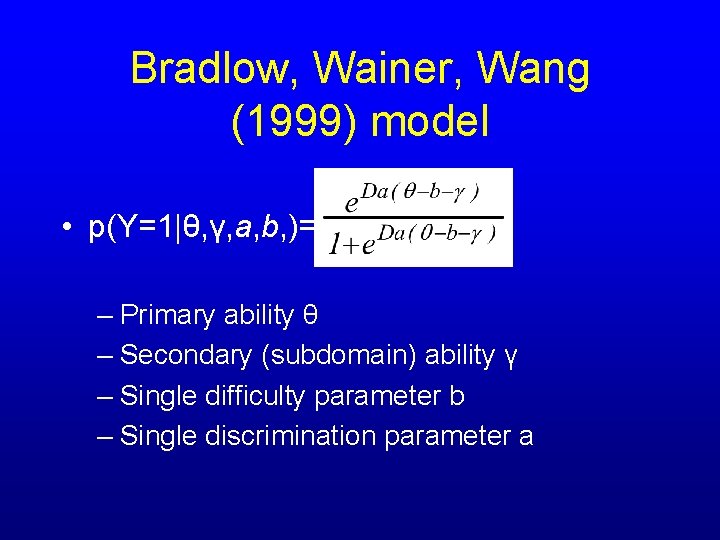

Bradlow, Wainer, Wang (1999) model • p(Y=1|θ, γ, a, b) = – Primary ability θ – Secondary (subdomain) ability γ – Single difficulty parameter b – Single discrimination parameter a

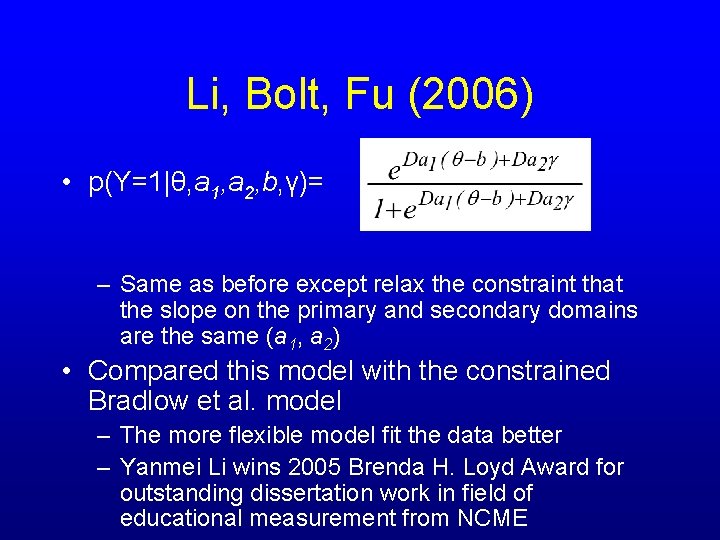

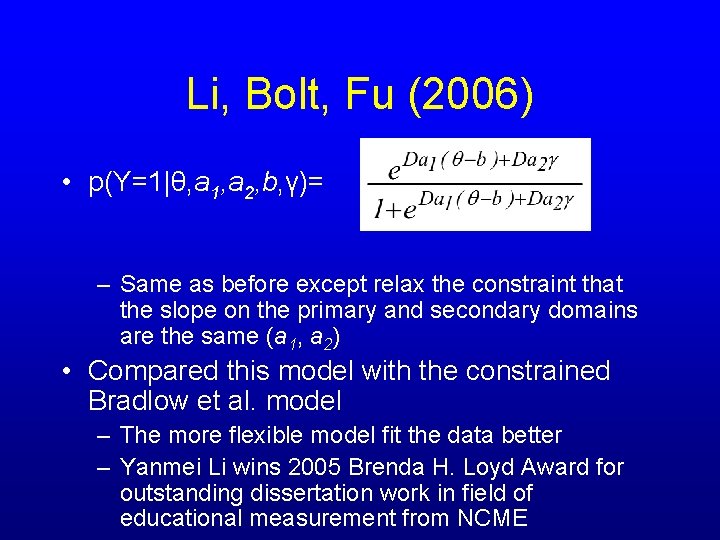

Li, Bolt, Fu (2006) • p(Y=1|θ, a1, a2, b, γ) = – Same as before except relax the constraint that the slopes on the primary and secondary domains are the same (a1, a2) • Compared this model with the constrained Bradlow et al. model – The more flexible model fit the data better – Yanmei Li wins 2005 Brenda H. Loyd Award for outstanding dissertation work in field of educational measurement from NCME
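For reference, the two testlet models can be written out as follows. The first line is the commonly cited two-parameter form of the Bradlow, Wainer and Wang model for person i and item j in testlet d(j). The second line is only a sketch of the relaxed model with separate slopes; its sign and intercept conventions are an assumption and may differ from the parameterization used in Li, Bolt and Fu (2006).

```latex
% Constrained testlet model: one slope a_j applies to both theta and gamma
P(Y_{ij}=1 \mid \theta_i, \gamma_{i d(j)}, a_j, b_j)
  = \frac{\exp\!\big[a_j(\theta_i - b_j - \gamma_{i d(j)})\big]}
         {1 + \exp\!\big[a_j(\theta_i - b_j - \gamma_{i d(j)})\big]}

% Relaxed model (assumed parameterization): separate slopes a_{1j}, a_{2j}
P(Y_{ij}=1 \mid \theta_i, \gamma_{i d(j)}, a_{1j}, a_{2j}, b_j)
  = \frac{\exp\!\big[a_{1j}\theta_i + a_{2j}\gamma_{i d(j)} - b_j\big]}
         {1 + \exp\!\big[a_{1j}\theta_i + a_{2j}\gamma_{i d(j)} - b_j\big]}
```

Up to the sign convention on γ (which is symmetric around zero), setting a_{1j} = a_{2j} and rescaling the intercept recovers the constrained model, which is the sense in which the second model relaxes a constraint of the first.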

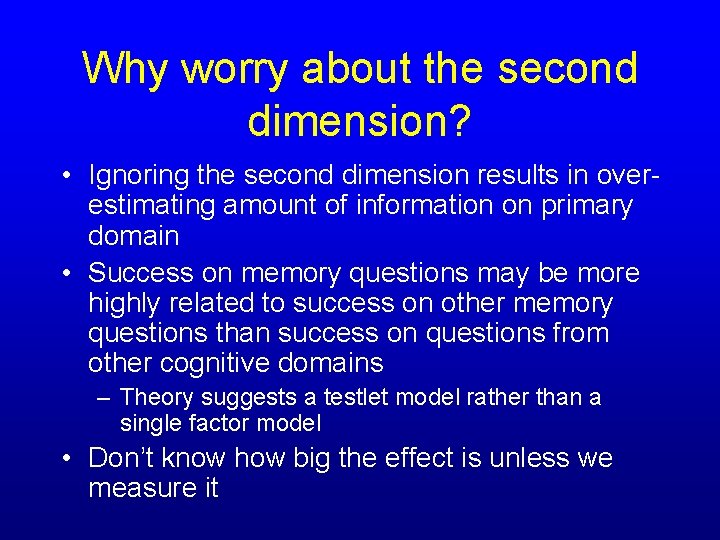

Why worry about the second dimension? • Ignoring the second dimension results in overestimating amount of information on primary domain • Success on memory questions may be more highly related to success on other memory questions than success on questions from other cognitive domains – Theory suggests a testlet model rather than a single factor model • Don’t know how big the effect is unless we measure it

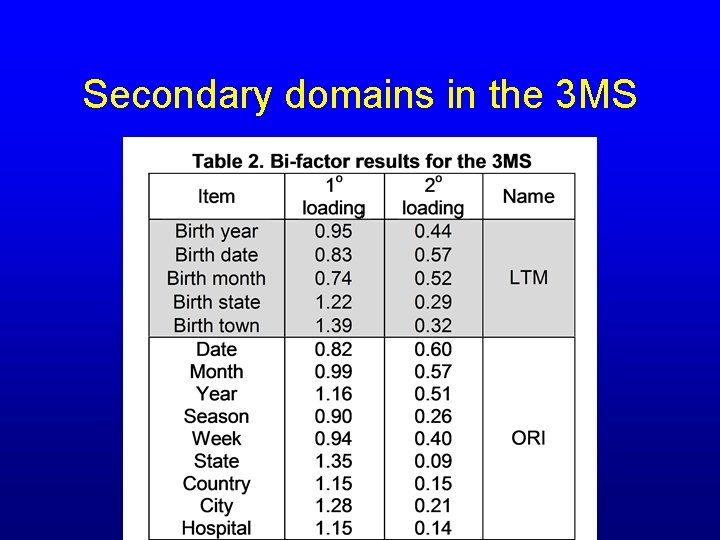

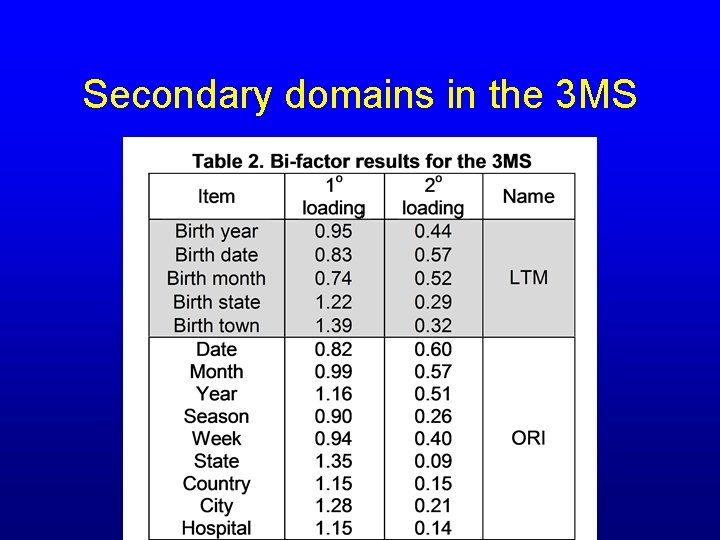

Secondary domains in the 3MS

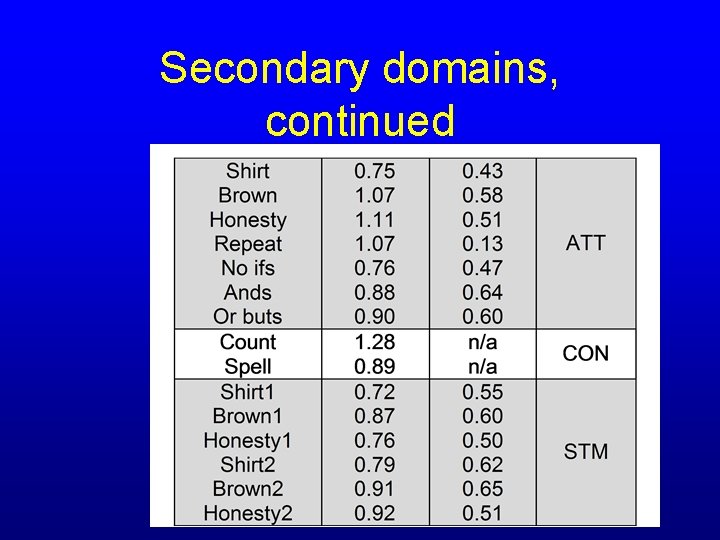

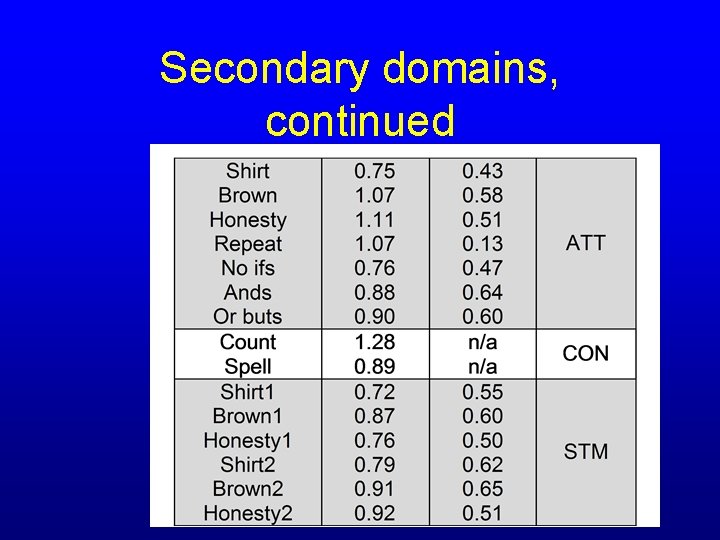

Secondary domains, continued

Secondary domains, slide 3

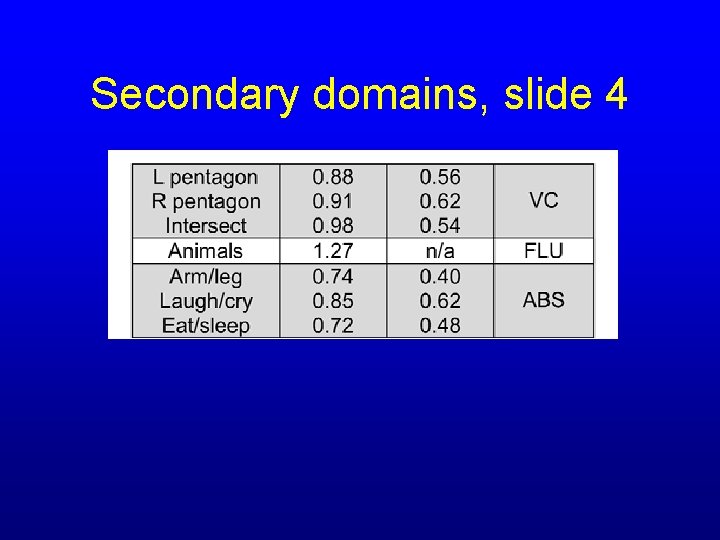

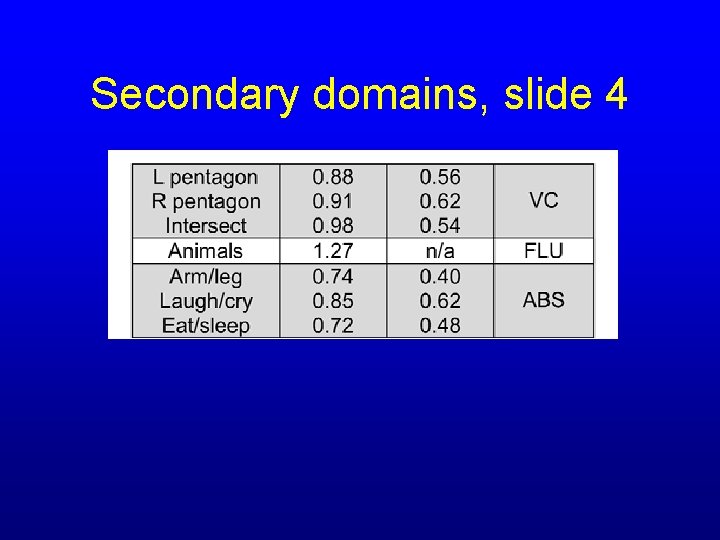

Secondary domains, slide 4

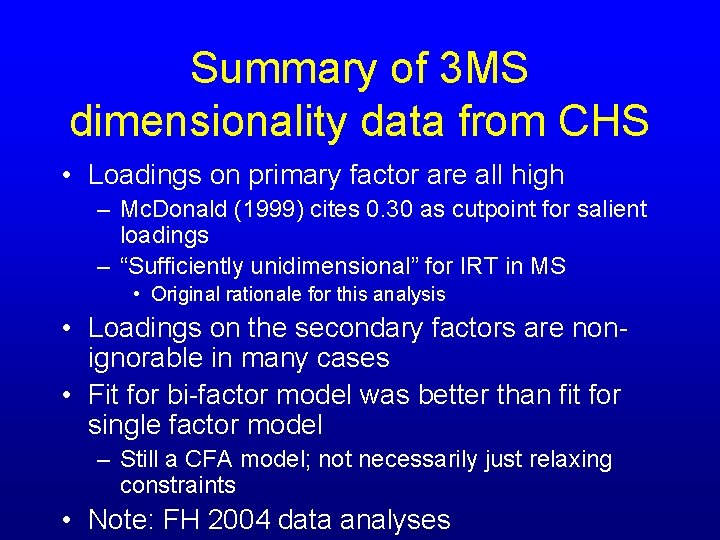

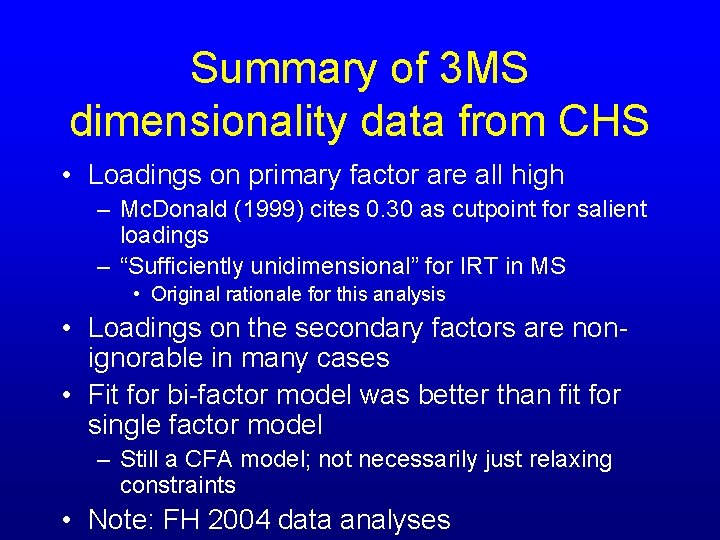

Summary of 3MS dimensionality data from CHS • Loadings on primary factor are all high – McDonald (1999) cites 0.30 as cutpoint for salient loadings – “Sufficiently unidimensional” for IRT in MS • Original rationale for this analysis • Loadings on the secondary factors are non-ignorable in many cases • Fit for bi-factor model was better than fit for single factor model – Still a CFA model; not necessarily just relaxing constraints • Note: FH 2004 data analyses

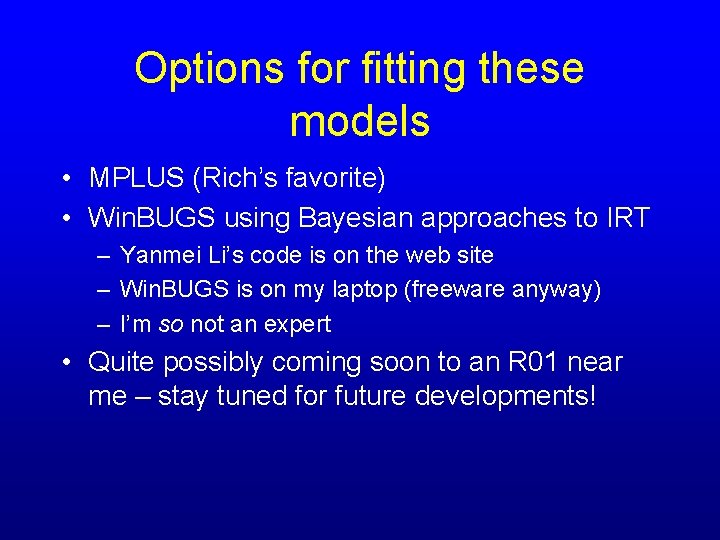

Options for fitting these models • MPLUS (Rich’s favorite) • WinBUGS using Bayesian approaches to IRT – Yanmei Li’s code is on the web site – WinBUGS is on my laptop (freeware anyway) – I’m so not an expert • Quite possibly coming soon to an R01 near me – stay tuned for future developments!

Introduction to psychometric

Introduction to psychometric Objective of pruning

Objective of pruning Osha tree trimming safety book

Osha tree trimming safety book Tasc psychometrics made easy

Tasc psychometrics made easy Psychometrics 101

Psychometrics 101 Introduction to psychometrics

Introduction to psychometrics Graphic design tools and equipment

Graphic design tools and equipment Edging in forging

Edging in forging Passmore psychometrics in coaching download

Passmore psychometrics in coaching download Ace different tests help iq but

Ace different tests help iq but Screening and selecting employees international

Screening and selecting employees international Checklist method of idea generation

Checklist method of idea generation Restaurant use case diagram

Restaurant use case diagram Waterfall approach vs shower approach

Waterfall approach vs shower approach Zoho and background screening

Zoho and background screening Yeast artificial chromosome

Yeast artificial chromosome How to work out payback period

How to work out payback period Blue-white selection

Blue-white selection Class diagram for airport check-in and security screening

Class diagram for airport check-in and security screening Preimplantation genetic screening pros and cons

Preimplantation genetic screening pros and cons Screening decisions and preference decisions

Screening decisions and preference decisions Alberta screening and prevention program

Alberta screening and prevention program Shrek ordinary world

Shrek ordinary world Parametric test and non parametric test

Parametric test and non parametric test City and guilds evolve

City and guilds evolve Chapter 24 diagnostic tests and specimen collection

Chapter 24 diagnostic tests and specimen collection Chapter 20 more about tests and intervals

Chapter 20 more about tests and intervals Romeo and juliet test

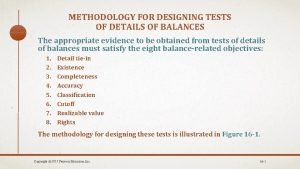

Romeo and juliet test Test of detail

Test of detail Family and friends unit 2

Family and friends unit 2 Gl progress test sample papers science

Gl progress test sample papers science Biochemical data, medical tests, and procedures (bd)

Biochemical data, medical tests, and procedures (bd) Physical activity and physical fitness assessments grade 9

Physical activity and physical fitness assessments grade 9 Projective test advantages and disadvantages

Projective test advantages and disadvantages Advantage of focus groups

Advantage of focus groups Family and friends movers

Family and friends movers Chapter 23 specimen collection and diagnostic testing

Chapter 23 specimen collection and diagnostic testing List and describe 3 tests of cerebellar function

List and describe 3 tests of cerebellar function Leeds pathology tests and tubes

Leeds pathology tests and tubes 2019 phonics screening test

2019 phonics screening test Magnesium in preeclampsia mechanism

Magnesium in preeclampsia mechanism What is a screening interview

What is a screening interview Tscc screening form

Tscc screening form Vbd screening full form

Vbd screening full form Denver developmental screening test

Denver developmental screening test Crafft screening tool

Crafft screening tool Dettato con doppie

Dettato con doppie Reynolds intellectual screening test sample questions

Reynolds intellectual screening test sample questions Reliability of a screening test

Reliability of a screening test Down syndrome screening results

Down syndrome screening results Psp mvr

Psp mvr Postural screening worksheet

Postural screening worksheet Phytochemical screening methods

Phytochemical screening methods Peehip wellness screening form 2021

Peehip wellness screening form 2021 Pediatric vision screening devices

Pediatric vision screening devices Fine-grained screening

Fine-grained screening Obra screening illinois

Obra screening illinois Ejemplos de firewall

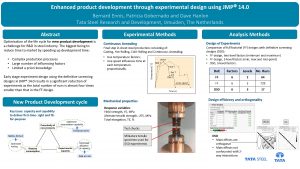

Ejemplos de firewall Enhanced product development

Enhanced product development Dst test

Dst test Developmental screening vs surveillance

Developmental screening vs surveillance Trivandrum developmental screening chart

Trivandrum developmental screening chart