TriMedia TM5250 Optimization Training Part 3


TriMedia TM5250 Optimization Training Part 3, Optimizations by Hand

Torsten Fink & Manoj Koul, TriMedia Center of Excellence, January 2006

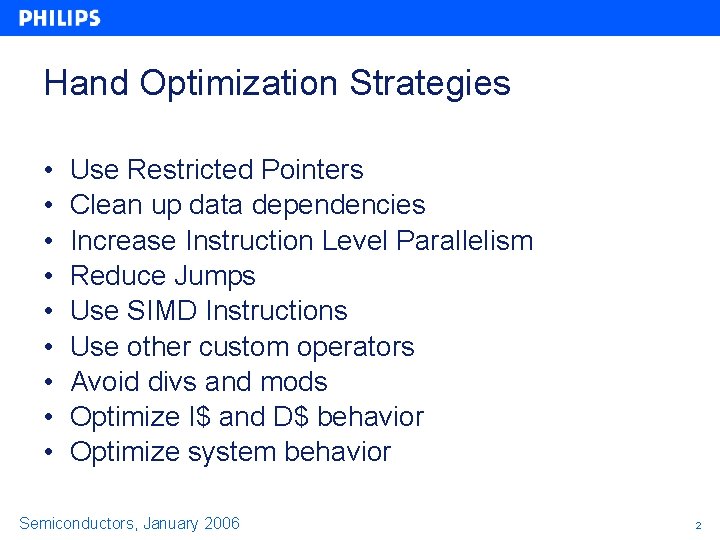

Hand Optimization Strategies

- Use restricted pointers
- Clean up data dependencies
- Increase instruction-level parallelism (ILP)
- Reduce jumps
- Use SIMD instructions
- Use other custom operators
- Avoid divs and mods
- Optimize I$ and D$ behavior
- Optimize system behavior

Semiconductors, January 2006

Restricted Pointers

- Restricted pointers are a hint to the compiler (ANSI C99).
- Add the “restrict” keyword to pointer declarations.

If pointers are passed into a function, the compiler does not know whether they can overlap:

    void foo1(short *a, char *b, char *c)
    {
        int i;
        for (i = 0; i < 1000; i++) {
            a[i] = b[i] * c[i];
        }
    }

Use restricted pointers if it is guaranteed that the pointers don’t alias/overlap:

    void foo2(short * restrict a, char * restrict b, char * restrict c) { …. }
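The pattern above can be sketched in portable C99 as follows. The function name `mul_restrict`, the `signed char` element type and the explicit length parameter are illustrative assumptions, not part of the training material.

```c
#include <stddef.h>

/* Element-wise product of two 8-bit arrays into a 16-bit array.
 * The restrict qualifiers promise the compiler that dst, b and c
 * never overlap, so loads for later iterations may be scheduled
 * ahead of earlier stores. Calling this with overlapping buffers
 * is undefined behavior. */
void mul_restrict(short * restrict dst,
                  const signed char * restrict b,
                  const signed char * restrict c,
                  size_t n)
{
    size_t i;
    for (i = 0; i < n; i++) {
        dst[i] = (short)(b[i] * c[i]);
    }
}
```

The qualifier is a promise made by the caller, not something the compiler checks, so it belongs only on interfaces where non-overlap is part of the contract.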

![Restricted Pointers 2 Without restricted pointers Cycle X X1 X2 X3 X4 X5 X6 Restricted Pointers [2] Without restricted pointers: Cycle, X: X+1: X+2: X+3: X+4: X+5: X+6:](https://slidetodoc.com/presentation_image/430be7527553dc336a9ad90936ea6bb5/image-4.jpg)

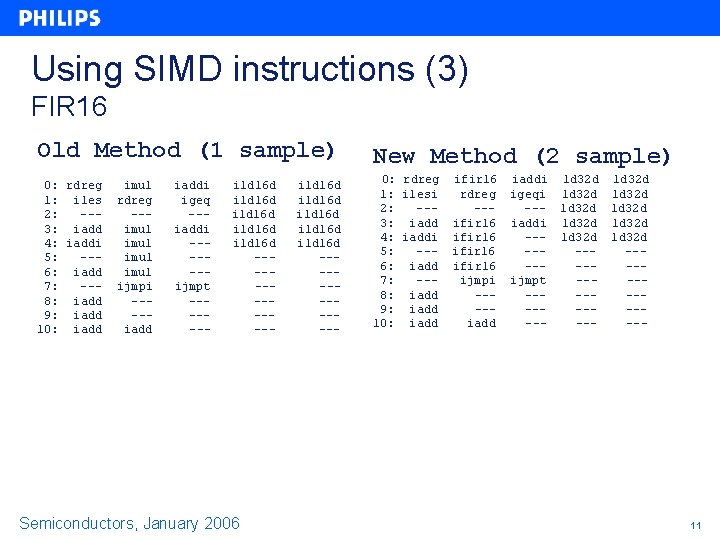

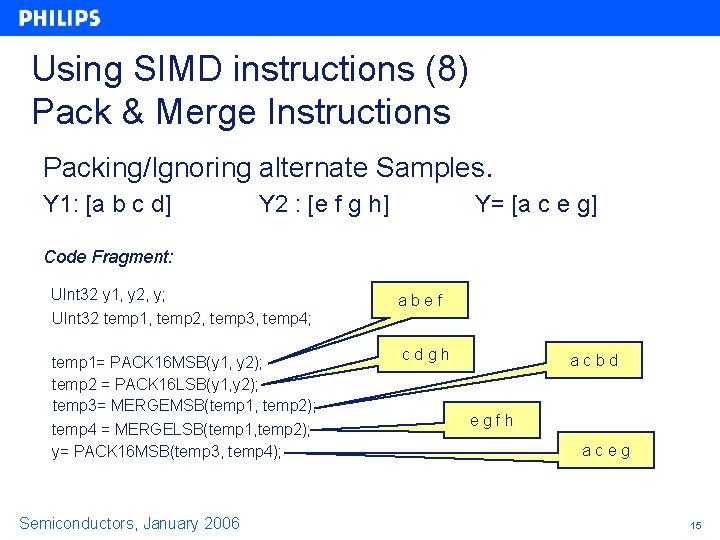

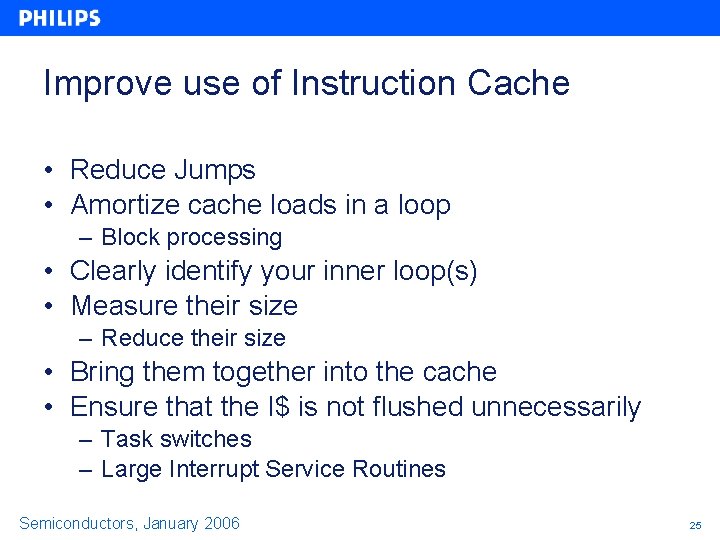

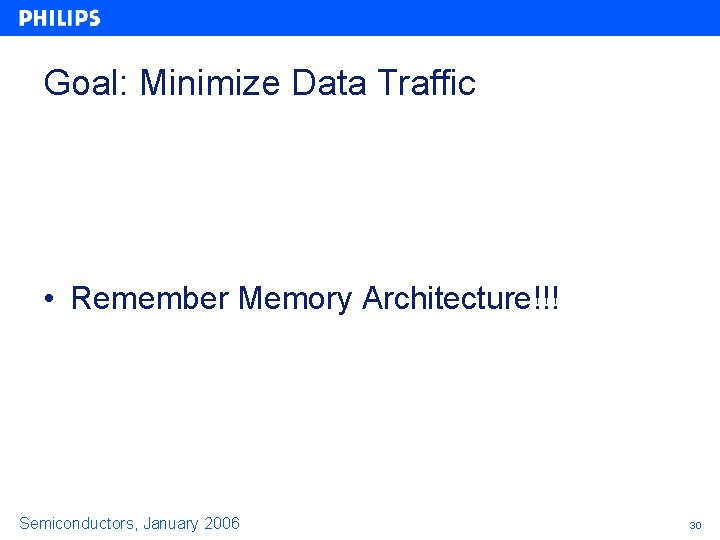

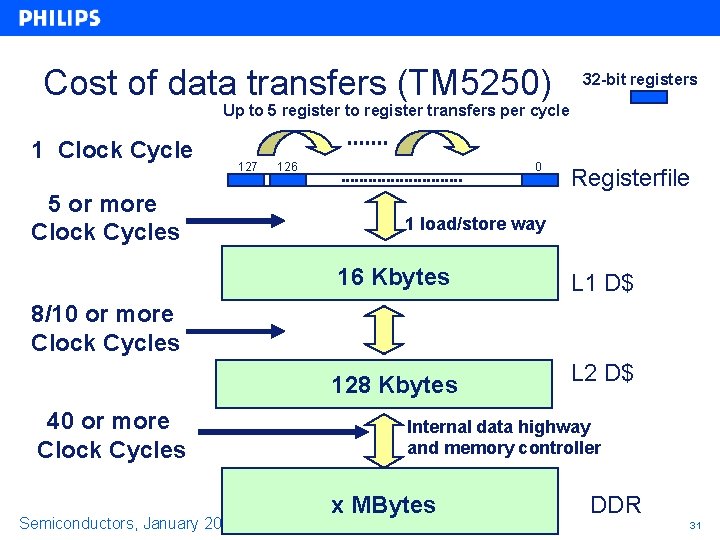

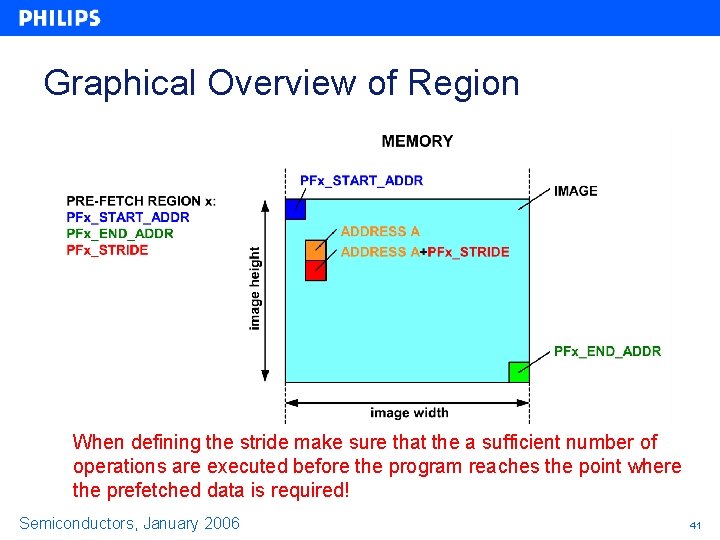

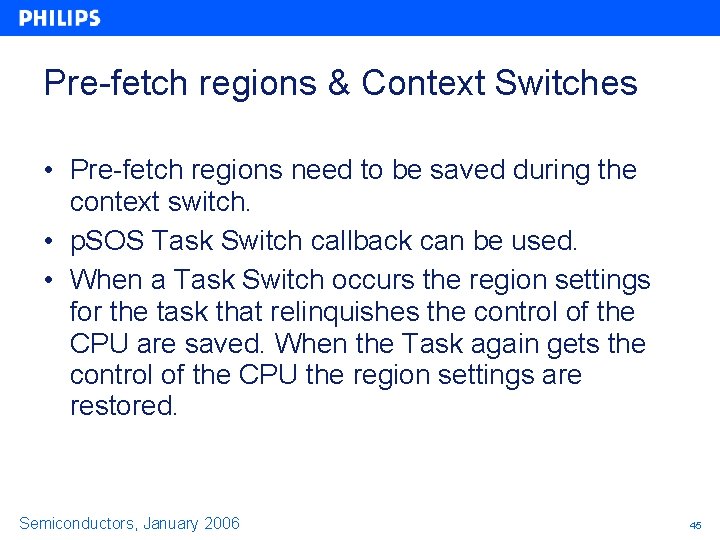

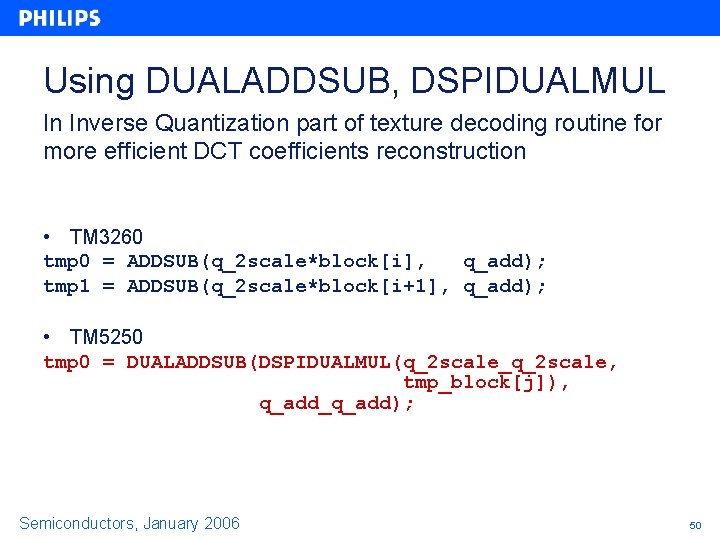

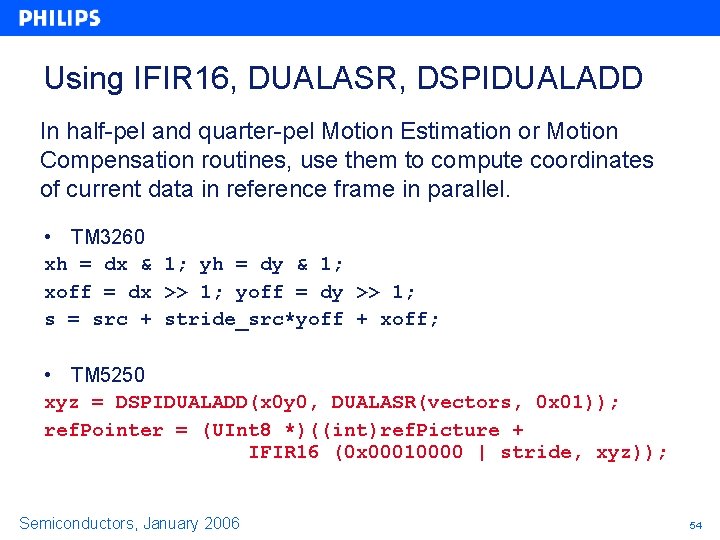

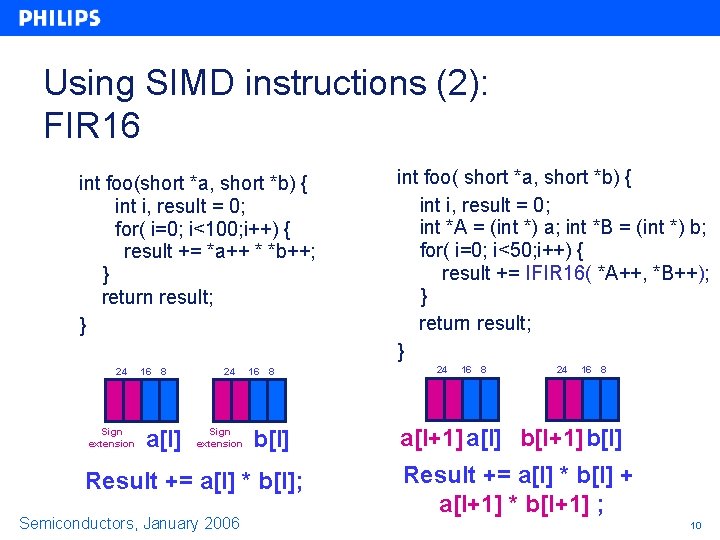

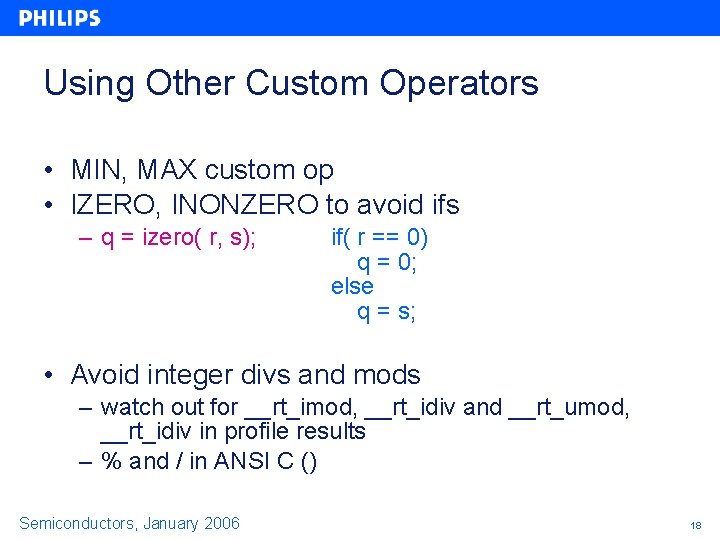

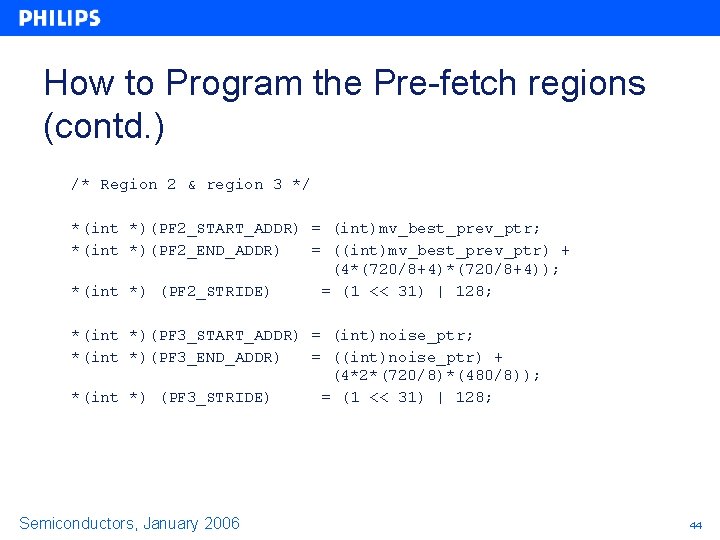

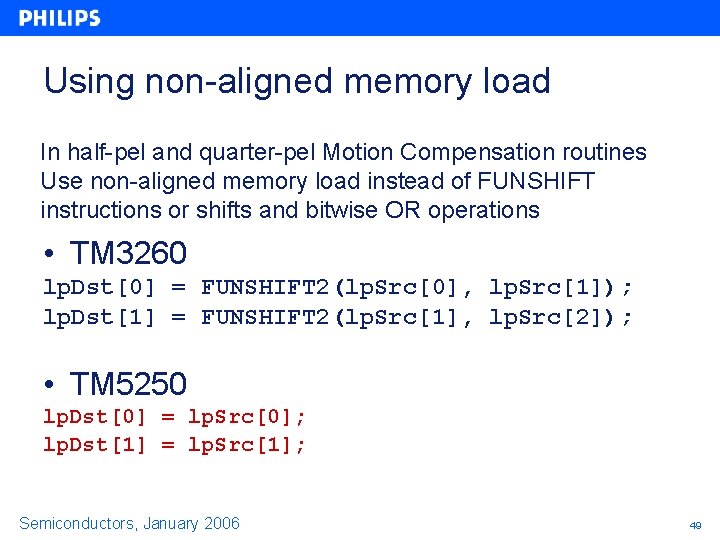

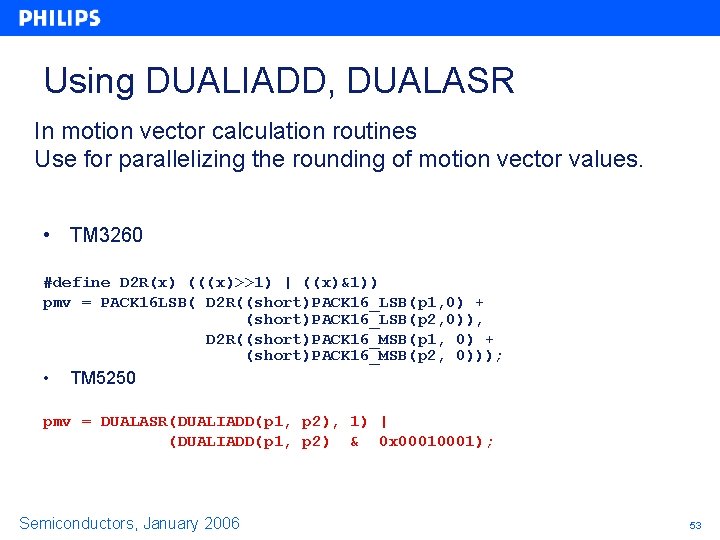

Restricted Pointers [2]: Without restricted pointers. Schedule over cycles X to X+10, issue slots 1 - 5, showing the imulm, st16d and ild8d operations: loads cannot be executed before the store.

![Restricted Pointers 3 With restricted pointers Cycle X X1 X2 X3 X4 X5 X6 Restricted Pointers [3] With restricted pointers: Cycle, X: X+1: X+2: X+3: X+4: X+5: X+6:](https://slidetodoc.com/presentation_image/430be7527553dc336a9ad90936ea6bb5/image-5.jpg)

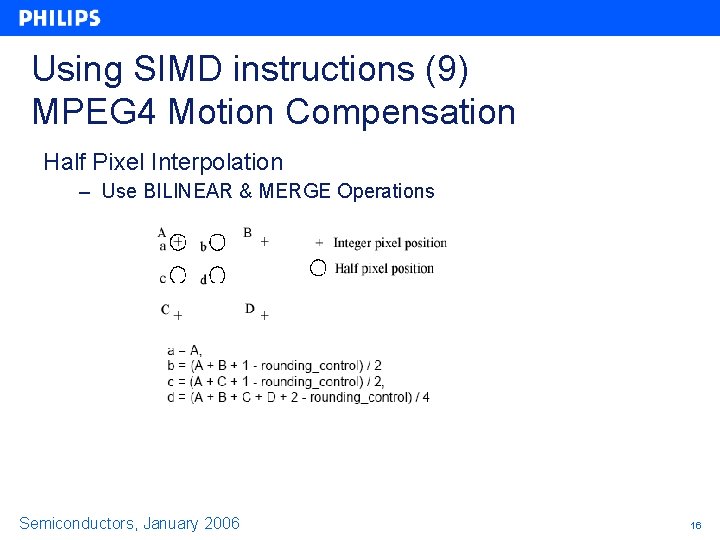

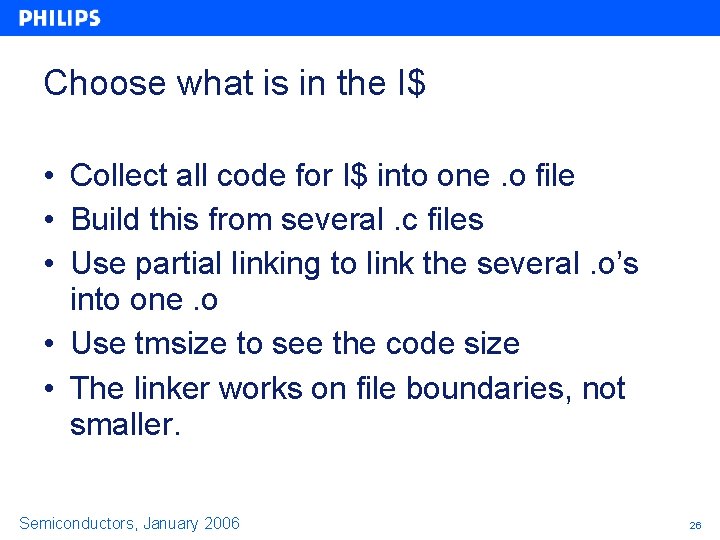

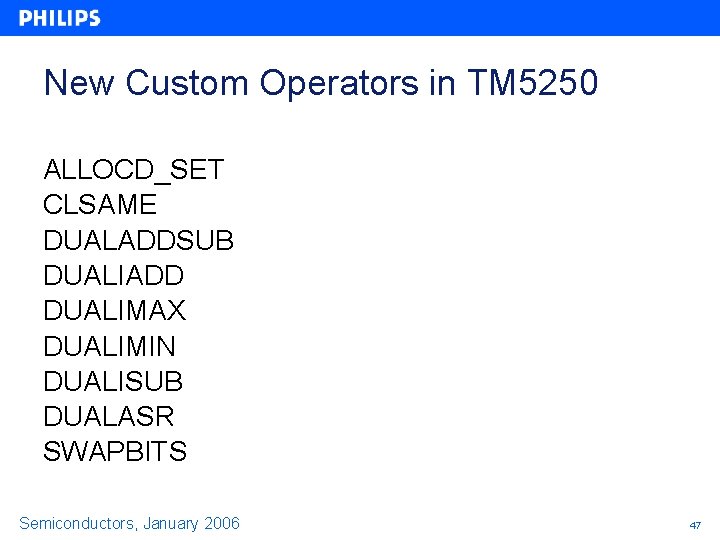

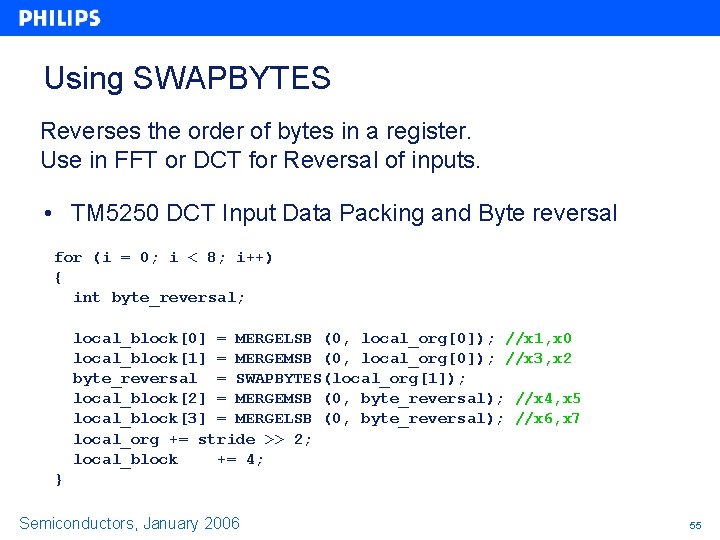

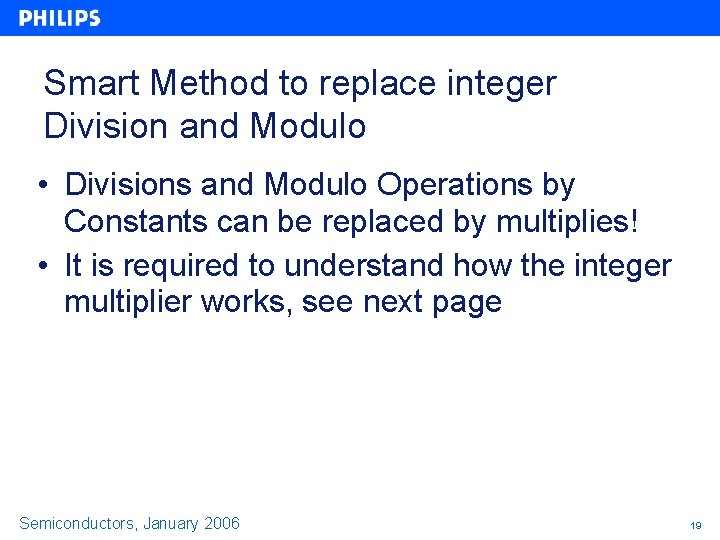

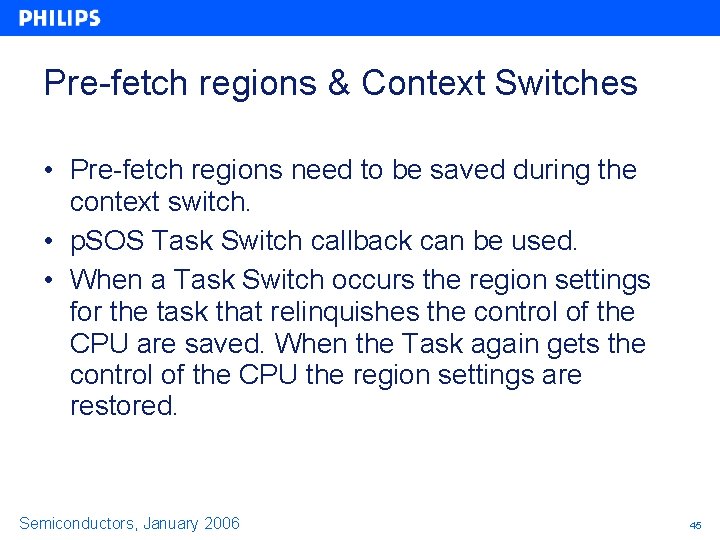

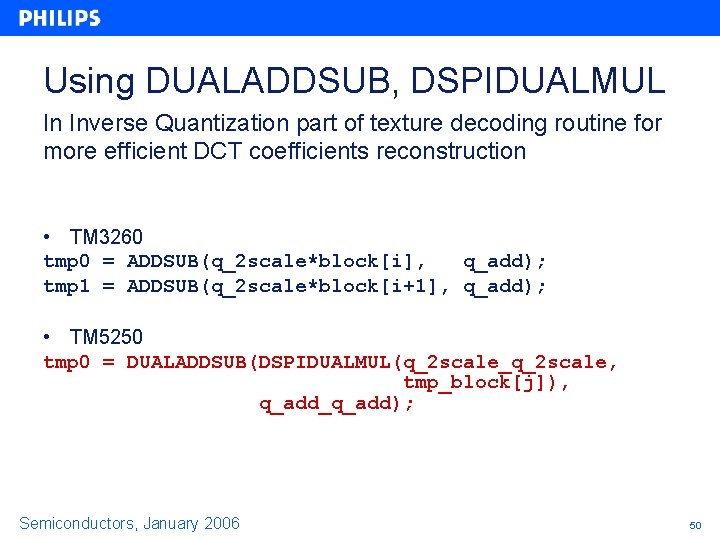

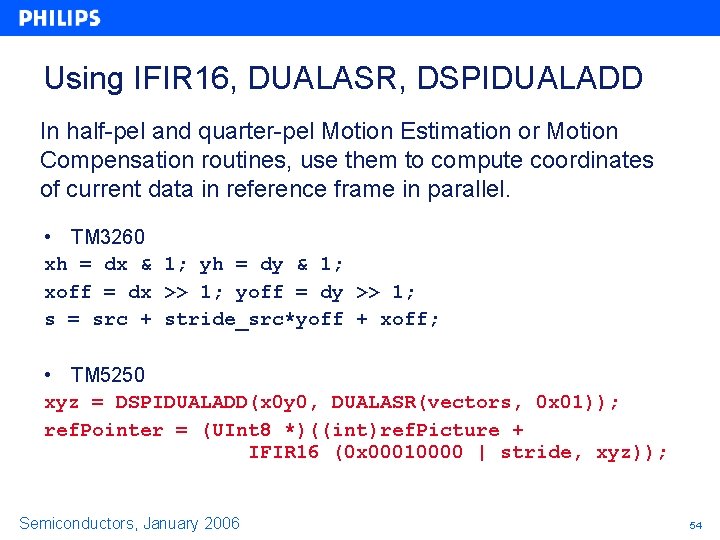

Restricted Pointers [3]: With restricted pointers. Schedule over cycles X to X+10, issue slots 1 - 5, showing the imulm, st16d and ild8d operations: loads can be executed before the store.

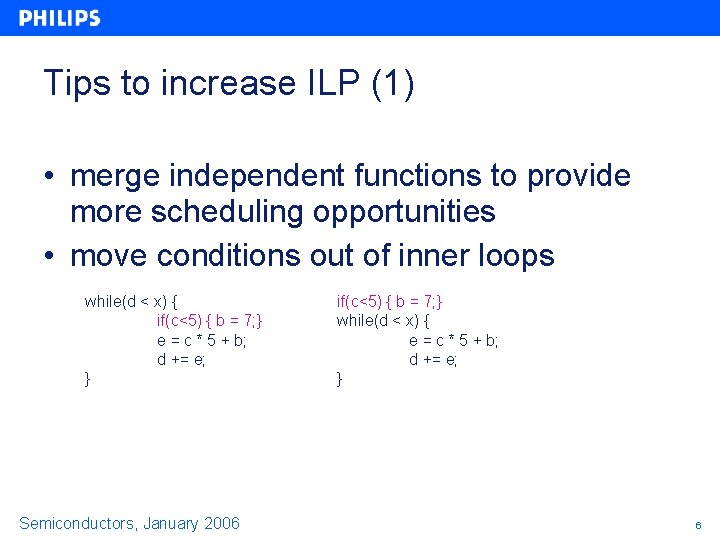

Tips to increase ILP (1)

- Merge independent functions to provide more scheduling opportunities.
- Move conditions out of inner loops.

Before:

    while (d < x) {
        if (c < 5) { b = 7; }
        e = c * 5 + b;
        d += e;
    }

After:

    if (c < 5) { b = 7; }
    while (d < x) {
        e = c * 5 + b;
        d += e;
    }

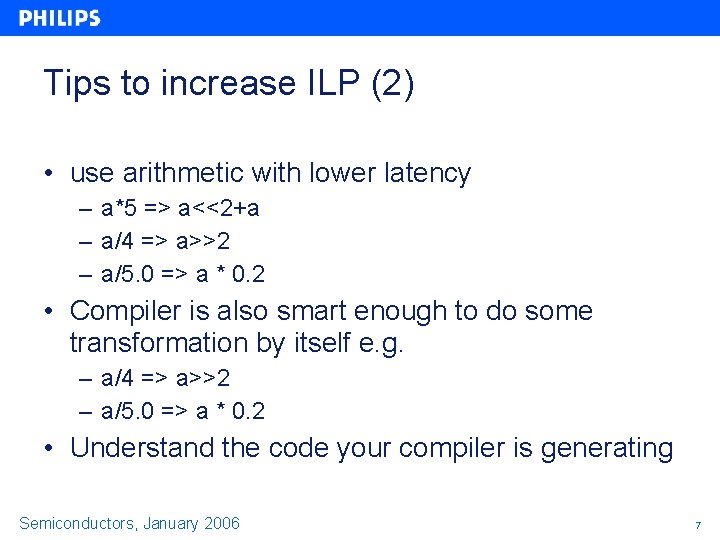

Tips to increase ILP (2)

- Use arithmetic with lower latency:
  - a*5 => (a<<2)+a
  - a/4 => a>>2 (exact for unsigned or non-negative a)
  - a/5.0 => a * 0.2
- The compiler is also smart enough to do some transformations by itself, e.g.:
  - a/4 => a>>2
  - a/5.0 => a * 0.2
- Understand the code your compiler is generating.
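A small sketch of these rewrites in plain C, with the two pitfalls made explicit. The function names are hypothetical, and the signed variant assumes that `>>` on a negative value is an arithmetic shift, which the C standard leaves implementation-defined.

```c
#include <stdint.h>

/* a*5 as shift-and-add. The parentheses matter: in C, + binds
 * tighter than <<, so a<<2+a would parse as a << (2 + a). */
uint32_t times5(uint32_t a)
{
    return (a << 2) + a;
}

/* Unsigned a/4 as a right shift: exact for every unsigned value. */
uint32_t div4_unsigned(uint32_t a)
{
    return a >> 2;
}

/* Signed a/4: C division truncates toward zero, while an arithmetic
 * shift rounds toward minus infinity, so negative inputs get a bias
 * of 3 before shifting (assumes arithmetic right shift). */
int32_t div4_signed(int32_t a)
{
    return (a + ((a < 0) ? 3 : 0)) >> 2;
}
```

Replacing a/5.0 by a * 0.2 is not bit-exact either, because 0.2 has no exact binary representation; that is acceptable for most signal processing code but worth knowing when comparing against a reference.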

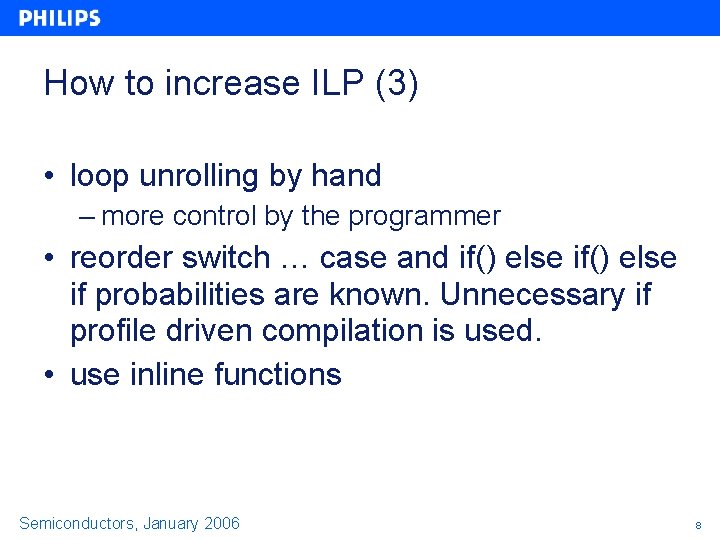

How to increase ILP (3)

- Loop unrolling by hand
  - gives the programmer more control
- Reorder switch … case and if() … else chains if the branch probabilities are known. This is unnecessary if profile-driven compilation is used.
- Use inline functions.

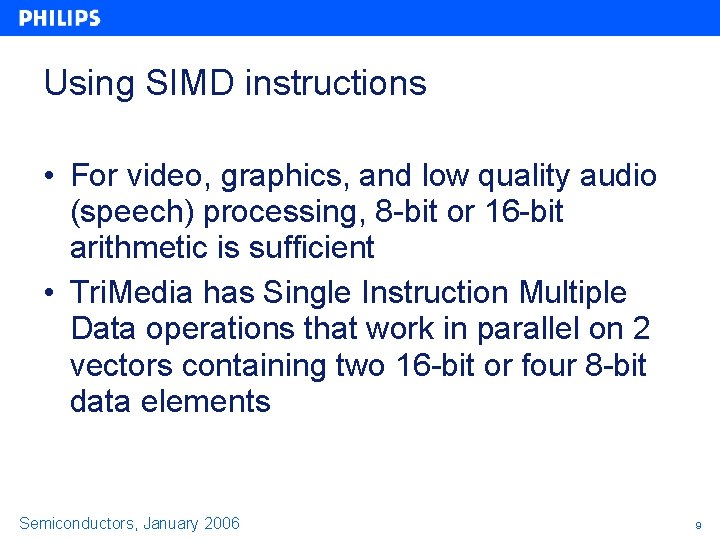

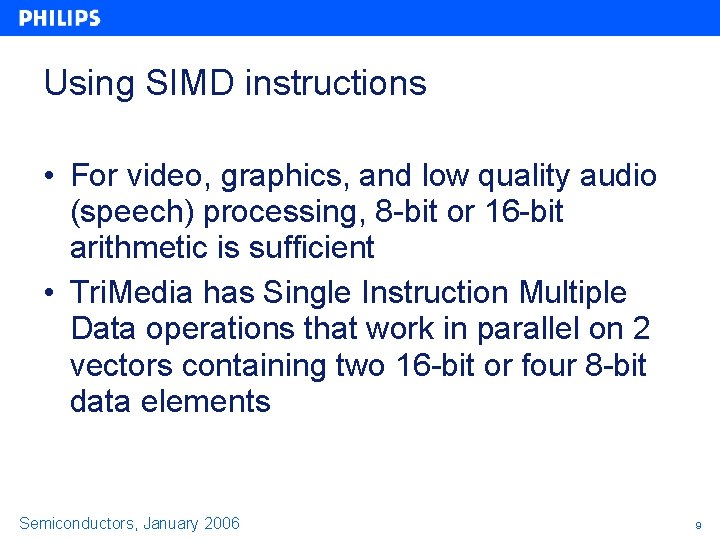

Using SIMD instructions

- For video, graphics, and low-quality audio (speech) processing, 8-bit or 16-bit arithmetic is sufficient.
- TriMedia has Single Instruction Multiple Data operations that work in parallel on 2 vectors containing two 16-bit or four 8-bit data elements.

Using SIMD instructions (2): FIR16

Scalar version, one sample per iteration. Each 16-bit a[I] and b[I] is sign-extended to 32 bits, then Result += a[I] * b[I]:

    int foo(short *a, short *b)
    {
        int i, result = 0;
        for (i = 0; i < 100; i++) {
            result += *a++ * *b++;
        }
        return result;
    }

SIMD version, two samples per iteration. Each 32-bit word holds a[I+1] and a[I] (or b[I+1] and b[I]), and Result += a[I] * b[I] + a[I+1] * b[I+1]:

    int foo(short *a, short *b)
    {
        int i, result = 0;
        int *A = (int *) a;
        int *B = (int *) b;
        for (i = 0; i < 50; i++) {
            result += IFIR16(*A++, *B++);
        }
        return result;
    }
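For readers without the TriMedia toolchain, the following portable sketch emulates the two-sample step. It assumes that IFIR16 computes the sum of the signed products of the upper and lower 16-bit halves of its operands; `ifir16_emul` and `dot16_pairs` are hypothetical names, and the narrowing casts rely on the usual two's-complement behavior.

```c
#include <stdint.h>
#include <string.h>

/* Portable stand-in for the assumed IFIR16 semantics:
 * signed hi(a)*hi(b) + signed lo(a)*lo(b). */
static int32_t ifir16_emul(uint32_t a, uint32_t b)
{
    int16_t a_hi = (int16_t)(a >> 16), a_lo = (int16_t)(a & 0xFFFFu);
    int16_t b_hi = (int16_t)(b >> 16), b_lo = (int16_t)(b & 0xFFFFu);
    return (int32_t)a_hi * b_hi + (int32_t)a_lo * b_lo;
}

/* Dot product of n 16-bit samples (n must be even), consuming two
 * samples per step. memcpy is used instead of an int* cast so the
 * sketch has no alignment or aliasing problems on a host machine. */
int32_t dot16_pairs(const int16_t *a, const int16_t *b, int n)
{
    int32_t result = 0;
    int i;
    for (i = 0; i < n; i += 2) {
        uint32_t wa, wb;
        memcpy(&wa, &a[i], sizeof wa);
        memcpy(&wb, &b[i], sizeof wb);
        result += ifir16_emul(wa, wb);
    }
    return result;
}
```

Because both operands are packed the same way, the result does not depend on host endianness: each half of one word always meets the matching half of the other.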

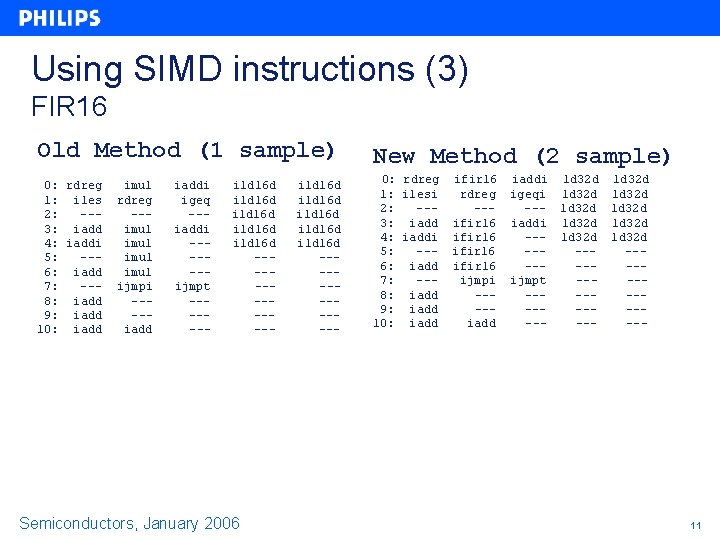

Using SIMD instructions (3): FIR16. Schedule comparison over cycles 0 to 10 of the old method (1 sample per iteration, using imul and ild16d) and the new method (2 samples per iteration, using ifir16 and ld32d).

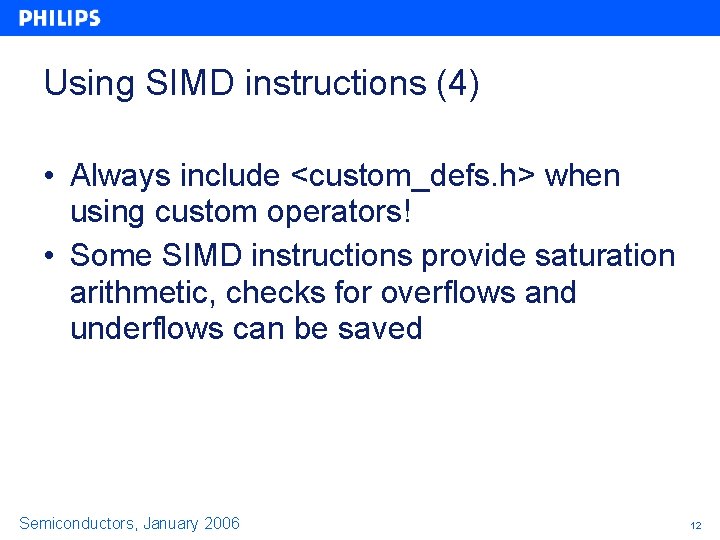

Using SIMD instructions (4)

- Always include <custom_defs.h> when using custom operators!
- Some SIMD instructions provide saturation arithmetic, so explicit checks for overflows and underflows can be saved.

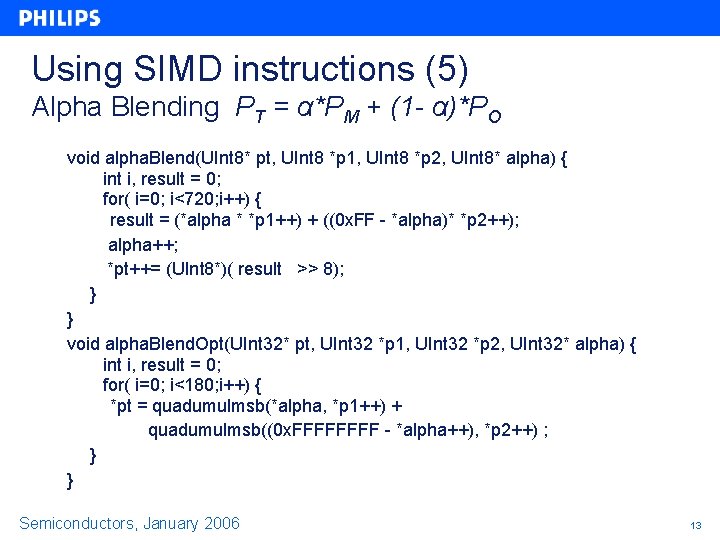

Using SIMD instructions (5): Alpha Blending

PT = α*PM + (1 - α)*PO

Scalar version, one pixel per iteration:

    void alphaBlend(UInt8 *pt, UInt8 *p1, UInt8 *p2, UInt8 *alpha)
    {
        int i, result = 0;
        for (i = 0; i < 720; i++) {
            result = (*alpha * *p1++) + ((0xFF - *alpha) * *p2++);
            alpha++;
            *pt++ = (UInt8)(result >> 8);
        }
    }

SIMD version, four pixels per iteration:

    void alphaBlendOpt(UInt32 *pt, UInt32 *p1, UInt32 *p2, UInt32 *alpha)
    {
        int i;
        for (i = 0; i < 180; i++) {
            *pt++ = quadumulmsb(*alpha, *p1++) + quadumulmsb((0xFFFF - *alpha), *p2++);
            alpha++;
        }
    }

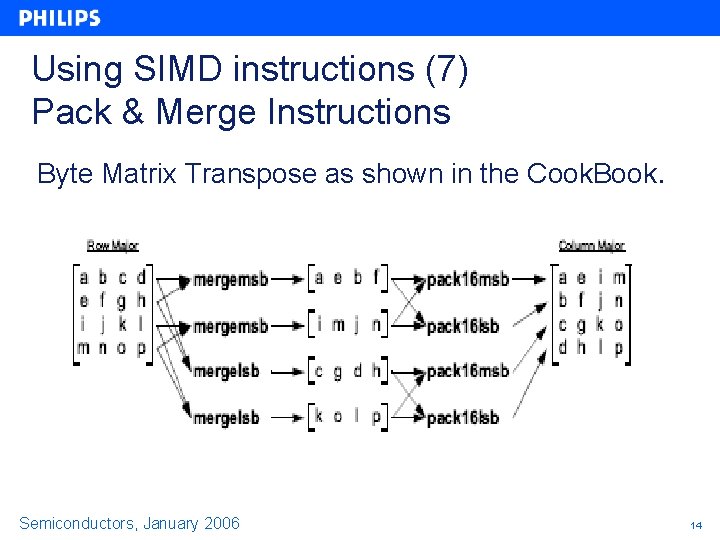

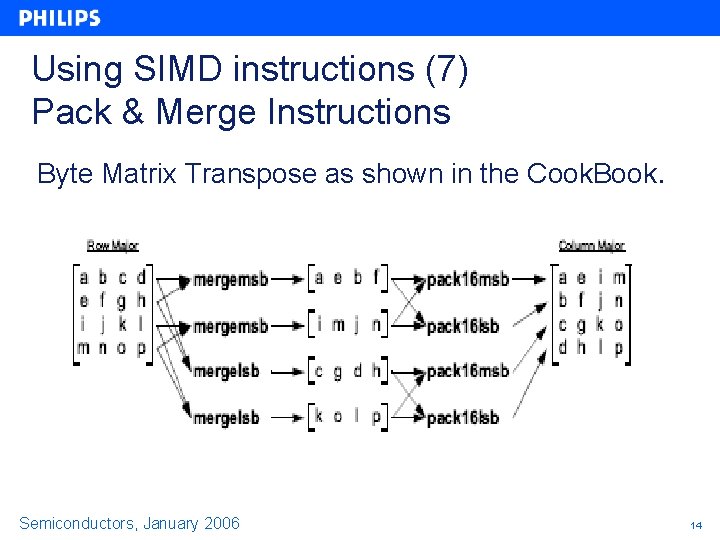

Using SIMD instructions (7): Pack & Merge Instructions

Byte matrix transpose as shown in the CookBook.

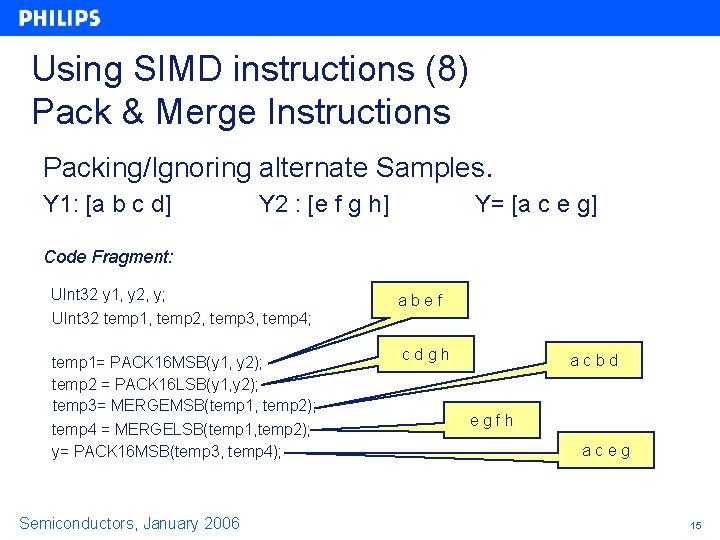

Using SIMD instructions (8): Pack & Merge Instructions

Packing/ignoring alternate samples: Y1 = [a b c d], Y2 = [e f g h], Y = [a c e g].

Code fragment:

    UInt32 y1, y2, y;
    UInt32 temp1, temp2, temp3, temp4;
    temp1 = PACK16MSB(y1, y2);        /* a b e f */
    temp2 = PACK16LSB(y1, y2);        /* c d g h */
    temp3 = MERGEMSB(temp1, temp2);   /* a c b d */
    temp4 = MERGELSB(temp1, temp2);   /* e g f h */
    y = PACK16MSB(temp3, temp4);      /* a c e g */

Using SIMD instructions (9): MPEG4 Motion Compensation

Half-pixel interpolation: use BILINEAR & MERGE operations.

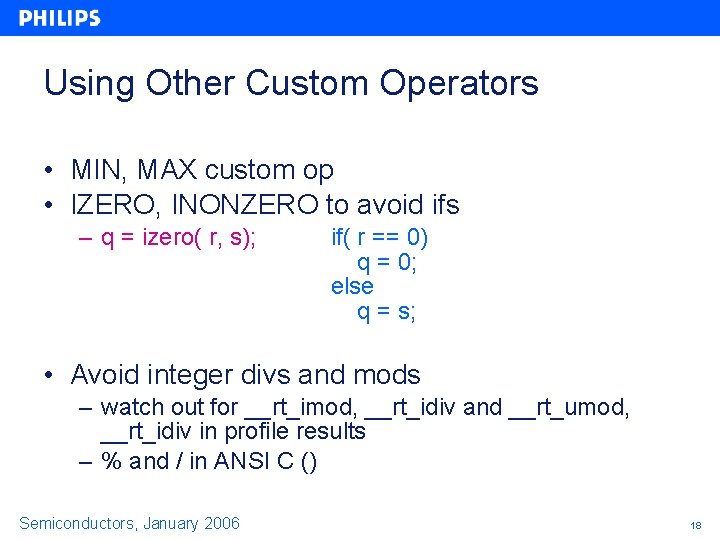

Using SIMD instructions (10): Restricting the number of Loads

Using a palette lookup table in DVD sub-picture creation. Extracts the 2-pixel luma and alpha value based on indexing from the 2 bit/pel data in the sub-picture. The table ytab is a 4 x 4 table of possible combinations of contrast and color indexes from the palette table; each entry corresponds to 2 pixel luma values and the corresponding alpha value.

    unsigned int YYaa1top, YYaa2top, YYaa3top, YYaa4top;
    unsigned int YYaa5top, YYaa6top, YYaa7top, YYaa8top;
    unsigned int sixteenpixelsAtop = *sourcetop++;   /* 2 bit/pel subpicture data */
    unsigned int sixteenpixelsBtop = (sixteenpixelsAtop >> 4) & K0f0f;
    sixteenpixelsAtop &= K0f0f;

Each of YYaa1top to YYaa8top is then assigned one table lookup of the form ytab[UBYTESEL(sixteenpixelsBtop, BYTEPOSNn)] or ytab[UBYTESEL(sixteenpixelsAtop, BYTEPOSNn)], with n from 1 to 4.

Using Other Custom Operators

- MIN, MAX custom ops
- IZERO, INONZERO to avoid ifs
  - q = izero(r, s); is equivalent to: if (r == 0) q = 0; else q = s;
- Avoid integer divs and mods
  - watch out for __rt_imod, __rt_idiv and __rt_umod, __rt_udiv in profile results
  - these come from % and / in ANSI C

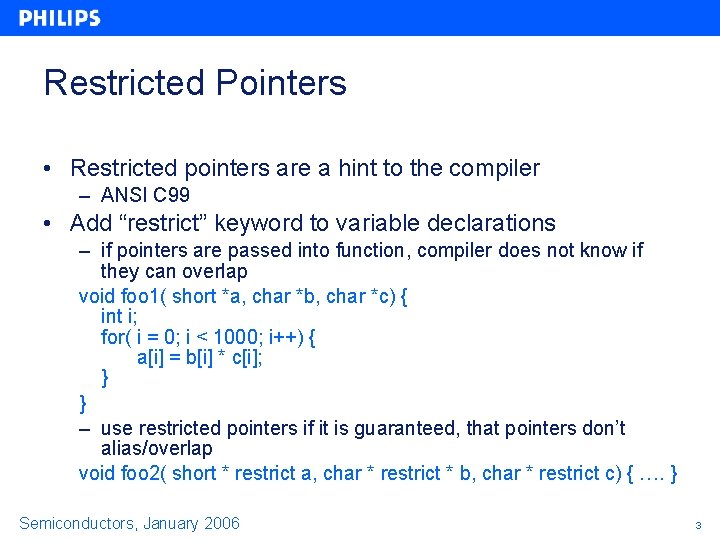

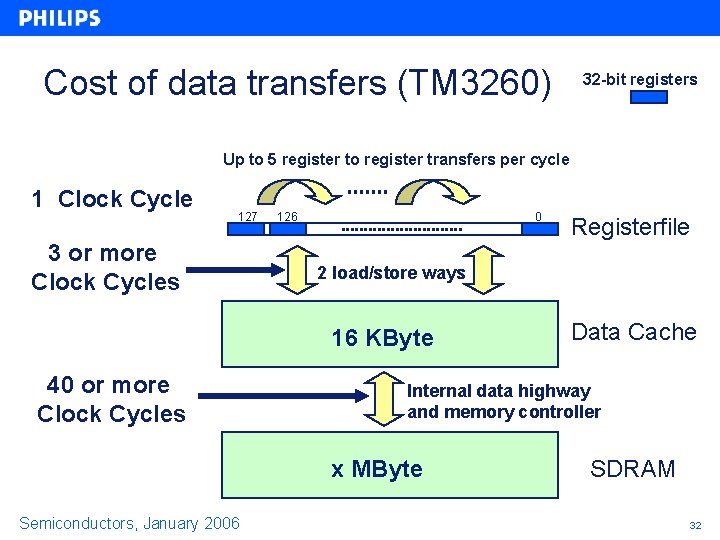

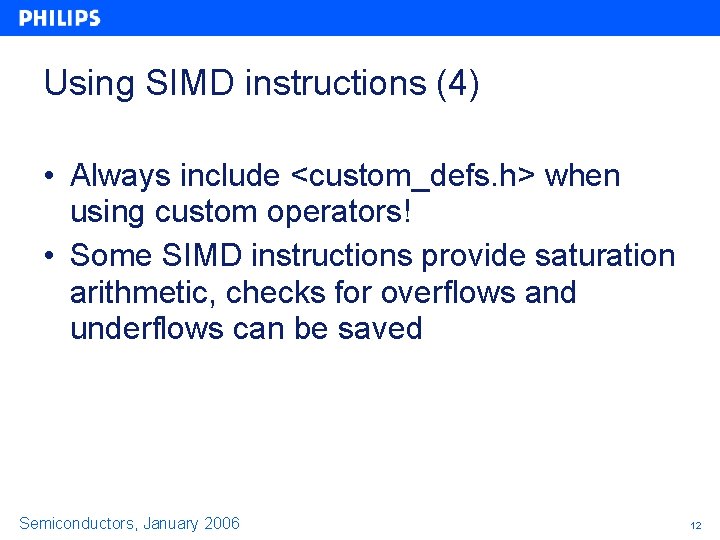

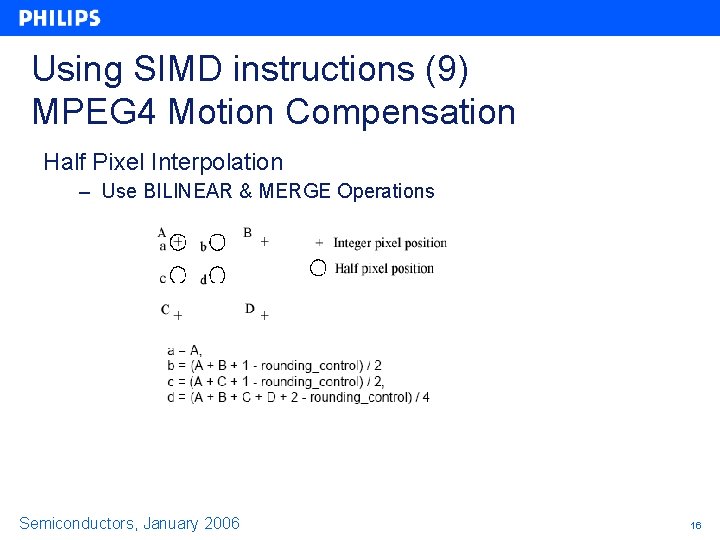

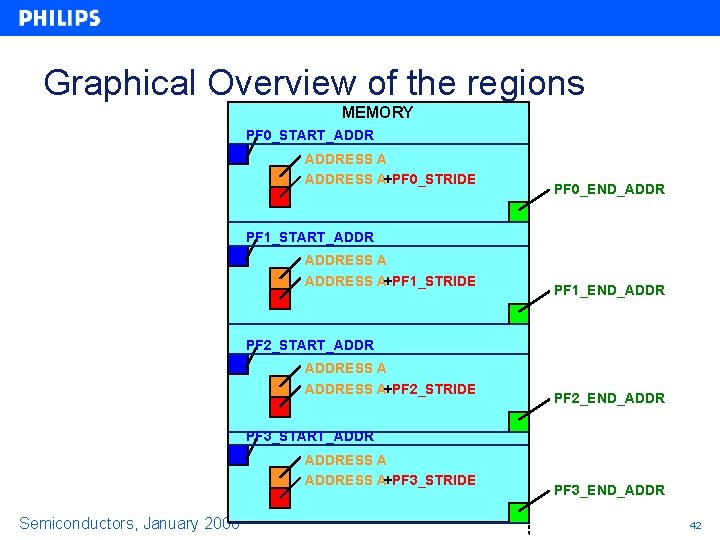

Smart Method to replace integer Division and Modulo

- Divisions and modulo operations by constants can be replaced by multiplies!
- It is required to understand how the integer multiplier works, see next page.

![Smart Method to replace integer Division and Modulo2 Integer multiply unit calculates 64 Smart Method to replace integer Division and Modulo[2] • Integer multiply unit calculates 64](https://slidetodoc.com/presentation_image/430be7527553dc336a9ad90936ea6bb5/image-20.jpg)

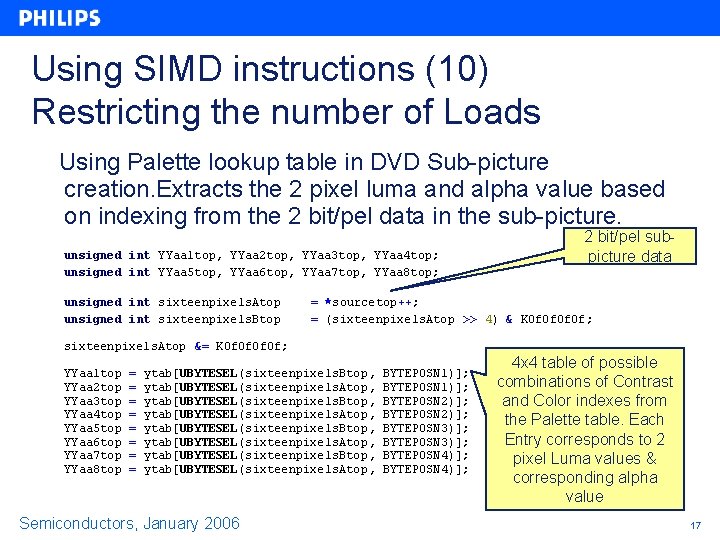

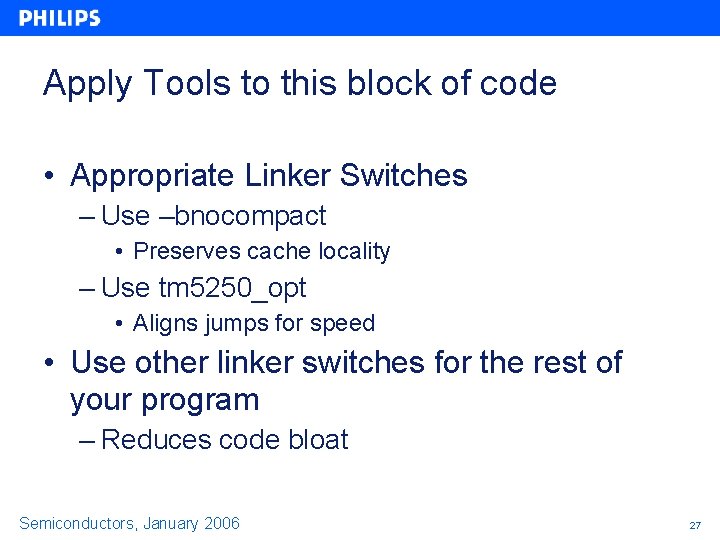

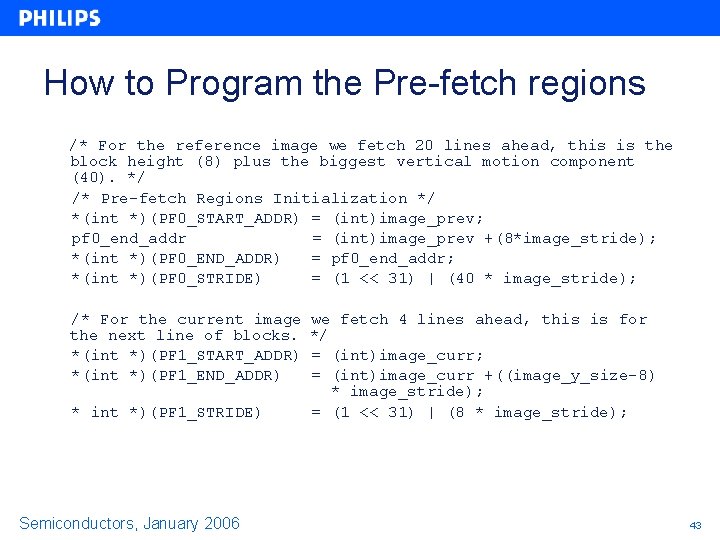

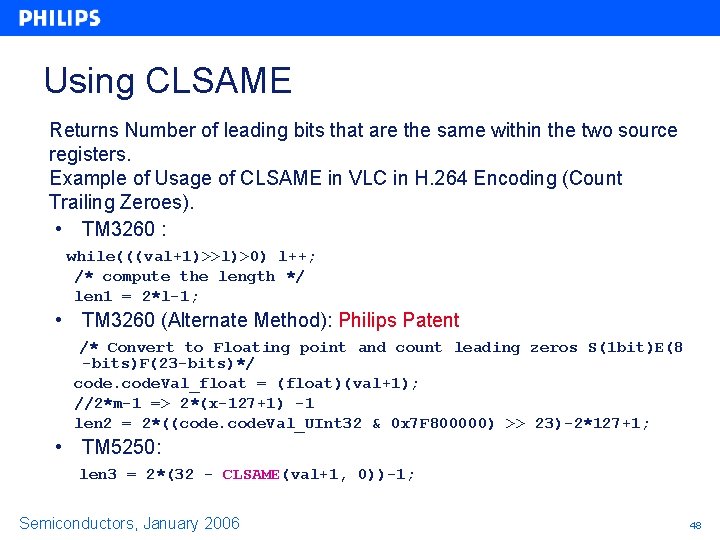

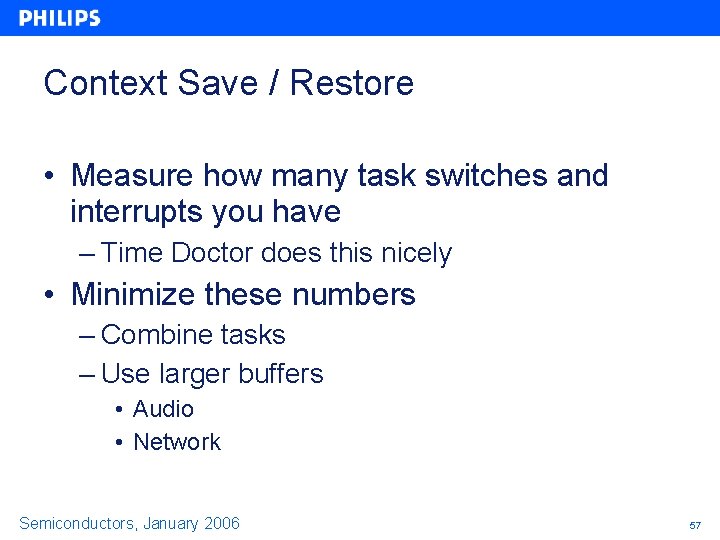

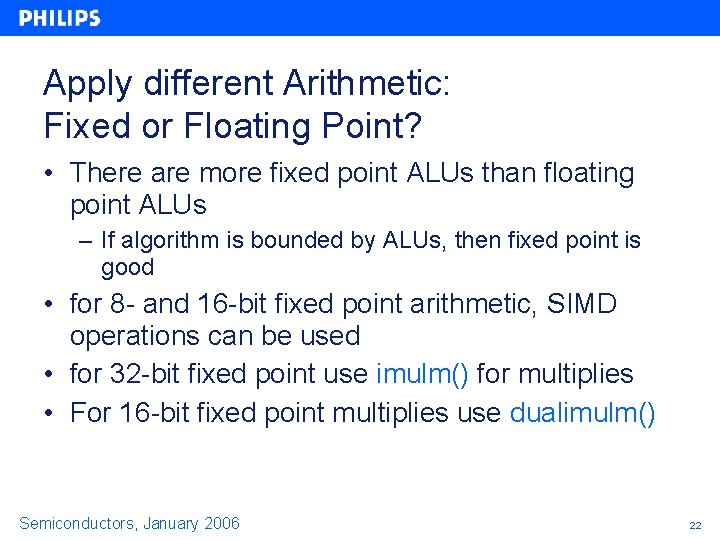

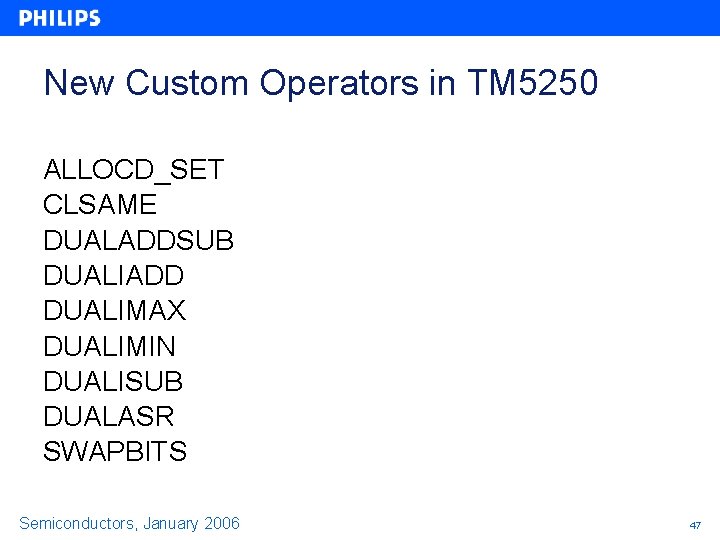

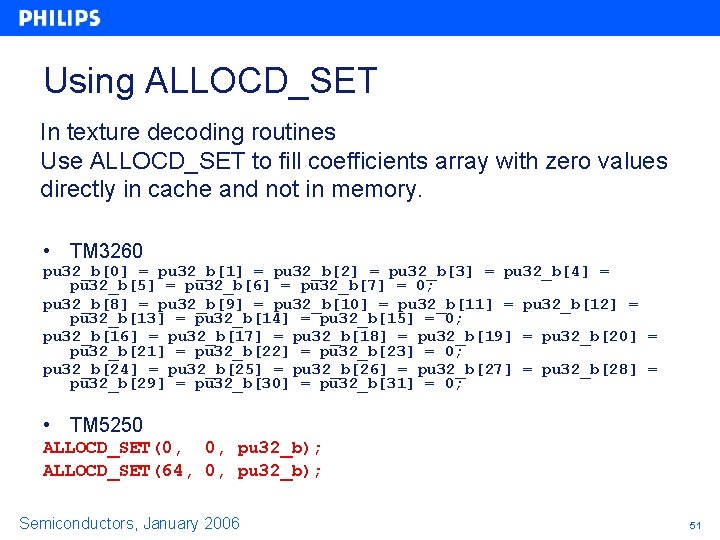

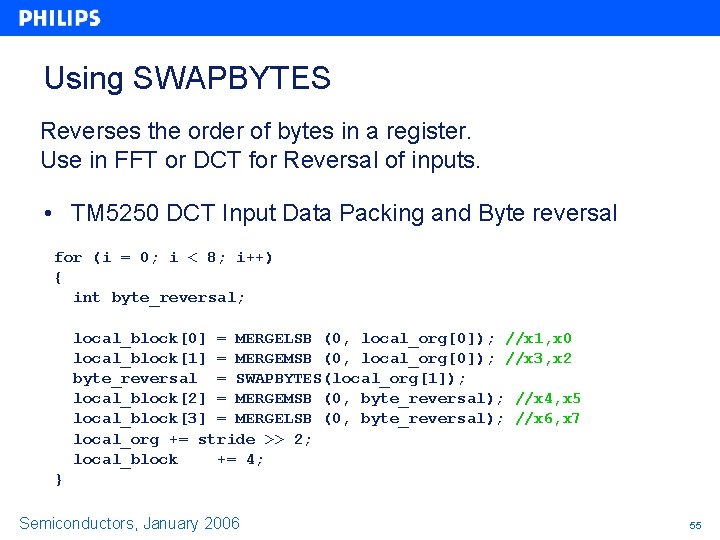

Smart Method to replace integer Division and Modulo [2]

- The integer multiply unit calculates a 64-bit result.
- The programmer can select the upper or lower 32 bits of the multiply result C = A*B:
  - C = IMUL(A, B) returns the lower 32 bits
  - C = IMULM(A, B) returns the upper 32 bits
- Use IMUL, IMULM for signed and UMUL, UMULM for unsigned multiplies.

Diagram: A (bits 31..0) x B (bits 31..0) gives a 64-bit product; bits 63..32 are the xMULM result and bits 31..0 are the xMUL result.

![Smart Method to replace integer Division and Modulo3 Integer multiply unit calculates 64 Smart Method to replace integer Division and Modulo[3] • Integer multiply unit calculates 64](https://slidetodoc.com/presentation_image/430be7527553dc336a9ad90936ea6bb5/image-21.jpg)

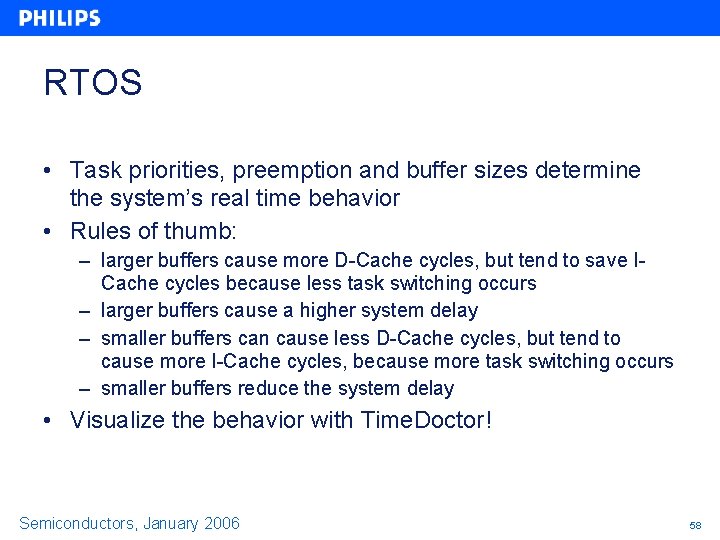

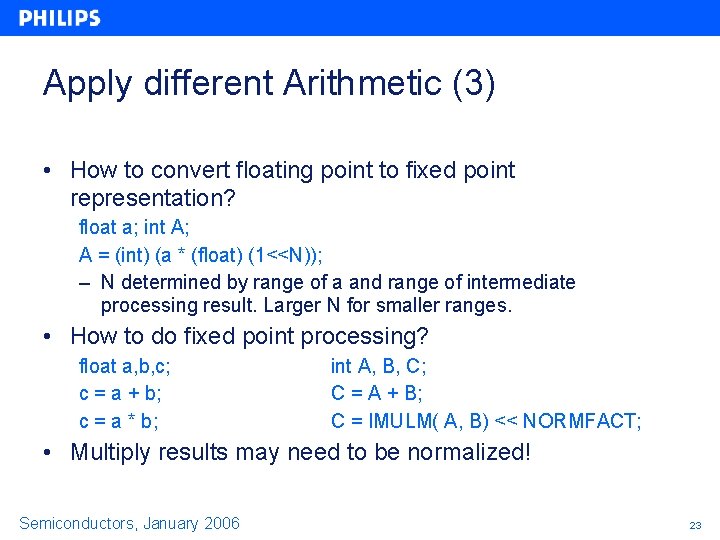

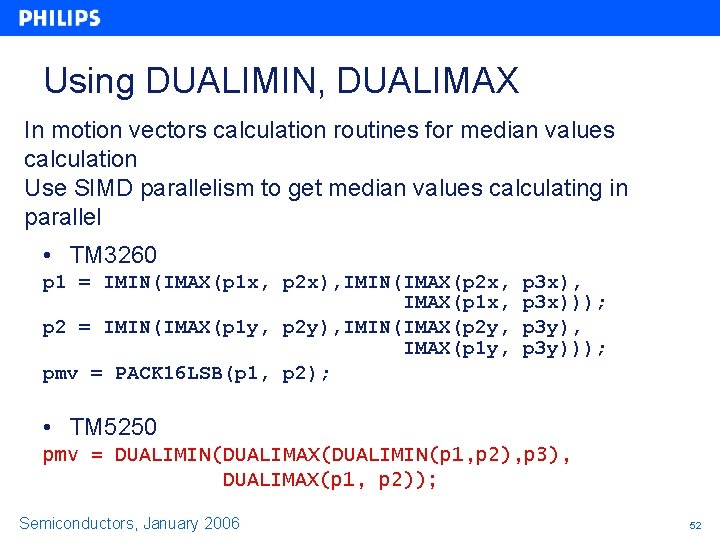

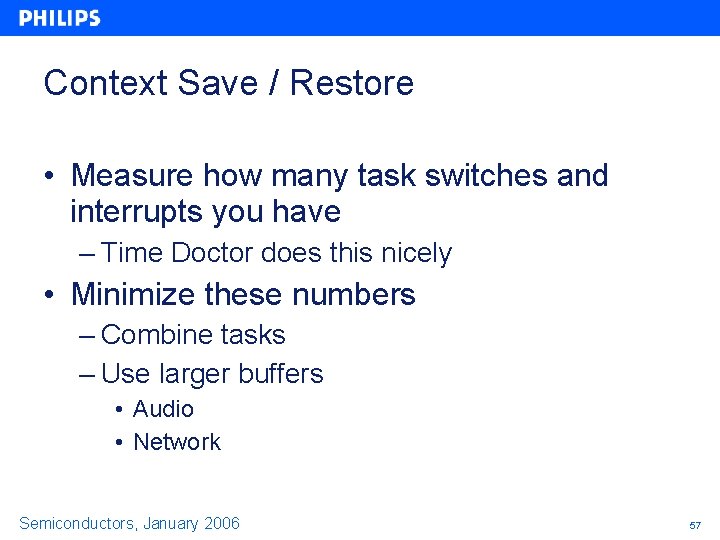

Smart Method to replace integer Division and Modulo [3]

- The integer multiply unit calculates a 64-bit result, so A = B / C becomes:
  - A = (B * ((2^32 / C) + 1)) >> 32
  - A = IMULM(B, (2^32 / C) + 1)
- Example: B = 517, C = 52
  - (2^32 / C) + 1 = 82595525
  - 517 x 82595525: the upper 32 bits (bits 63..32, the xMULM result) are 9
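The worked example can be checked on any host with a 64-bit multiply. The sketch below uses hypothetical helper names (`umulm_emul`, `recip32`, `div_by_mul`, `mod_by_mul`) and emulates the unsigned upper-half multiply rather than calling the TriMedia operator. One caveat that the slide does not state: with this reciprocal the quotient is guaranteed exact when b * c < 2^32, and may be off by one for larger dividends.

```c
#include <stdint.h>

/* Upper 32 bits of the unsigned 64-bit product (the role of UMULM). */
static uint32_t umulm_emul(uint32_t a, uint32_t b)
{
    return (uint32_t)(((uint64_t)a * b) >> 32);
}

/* Reciprocal constant floor(2^32 / c) + 1, computed once per divisor.
 * c must be at least 2 so that the constant fits in 32 bits. */
uint32_t recip32(uint32_t c)
{
    return (uint32_t)((UINT64_C(1) << 32) / c) + 1u;
}

/* b / c using only a multiply; m is recip32(c). */
uint32_t div_by_mul(uint32_t b, uint32_t m)
{
    return umulm_emul(b, m);
}

/* b % c derived from the quotient: b - (b / c) * c. */
uint32_t mod_by_mul(uint32_t b, uint32_t c, uint32_t m)
{
    return b - div_by_mul(b, m) * c;
}
```

For a divisor that is known at compile time, `recip32(c)` collapses to a literal such as 82595525 for c = 52, which leaves one multiply per division in the inner loop.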

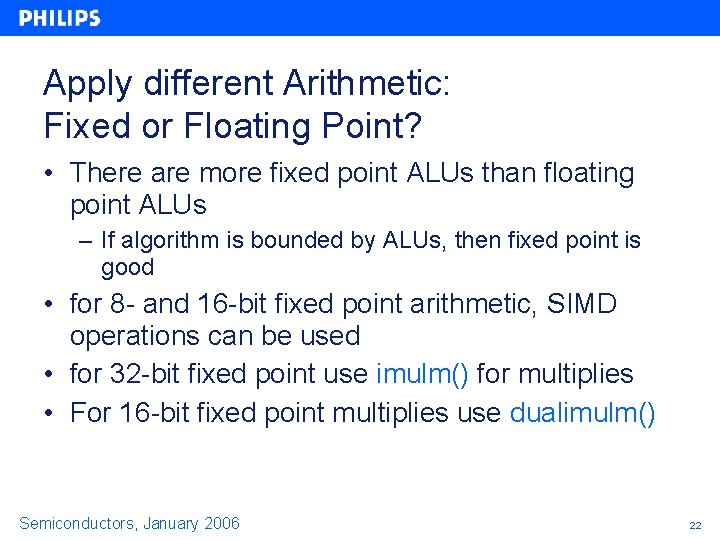

Apply different Arithmetic: Fixed or Floating Point?

- There are more fixed point ALUs than floating point ALUs.
  - If the algorithm is bounded by ALUs, then fixed point is good.
- For 8- and 16-bit fixed point arithmetic, SIMD operations can be used.
- For 32-bit fixed point multiplies use imulm().
- For 16-bit fixed point multiplies use dualimulm().

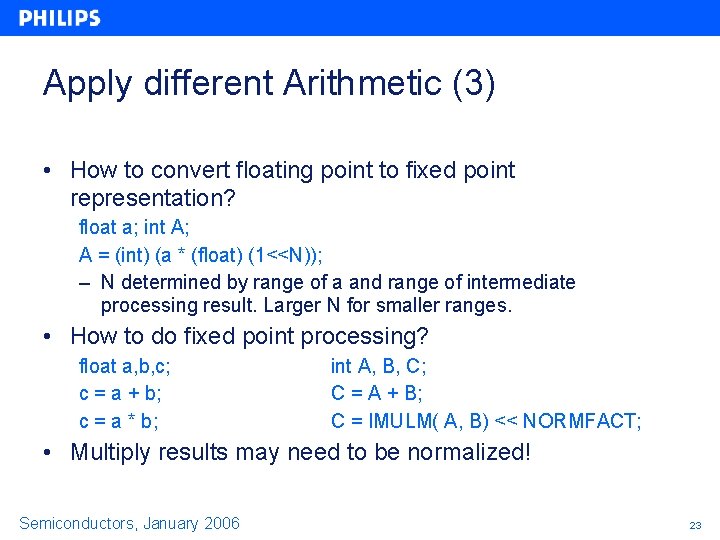

Apply different Arithmetic (3)

How to convert floating point to fixed point representation? N is determined by the range of a and the range of the intermediate processing results; use a larger N for smaller ranges.

    float a;
    int A;
    A = (int) (a * (float) (1<<N));

How to do fixed point processing? The floating point code

    float a, b, c;
    c = a + b;
    c = a * b;

becomes

    int A, B, C;
    C = A + B;
    C = IMULM(A, B) << NORMFACT;

Multiply results may need to be normalized!
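A host-side sketch of the same idea, under stated assumptions: `QBITS` plays the role of N and is fixed at 16 here, all helper names are hypothetical, and the multiply shifts the full 64-bit product right by N instead of taking the upper half and shifting left, which keeps the low-order bits. Under this reading, the slide's NORMFACT would correspond to 32 - N. The code also assumes arithmetic right shift for negative values.

```c
#include <stdint.h>

#define QBITS 16  /* N: number of fractional bits (assumed value) */

/* Float to fixed point with QBITS fractional bits. */
int32_t to_fixed(float a)
{
    return (int32_t)(a * (float)(1 << QBITS));
}

/* Fixed point back to float, for checking results. */
float to_float(int32_t A)
{
    return (float)A / (float)(1 << QBITS);
}

/* Addition needs no normalization: both operands share one scale. */
int32_t fx_add(int32_t A, int32_t B)
{
    return A + B;
}

/* The raw product carries 2*QBITS fractional bits, so it is shifted
 * right by QBITS to return to the common scale. */
int32_t fx_mul(int32_t A, int32_t B)
{
    return (int32_t)(((int64_t)A * B) >> QBITS);
}
```

With N = 16 the representable range is roughly -32768 to 32767.99998, which illustrates the trade-off named above: more fractional bits leave less headroom for intermediate results.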

Instruction Cache Optimizations

Improve use of Instruction Cache

- Reduce jumps
- Amortize cache loads in a loop
  - Block processing
- Clearly identify your inner loop(s)
- Measure their size
  - Reduce their size
- Bring them together into the cache
- Ensure that the I$ is not flushed unnecessarily
  - Task switches
  - Large Interrupt Service Routines

Choose what is in the I$

- Collect all code for the I$ into one .o file.
- Build this from several .c files.
- Use partial linking to link the several .o files into one .o file.
- Use tmsize to see the code size.
- The linker works on file boundaries, not on anything smaller.

Apply Tools to this block of code

- Appropriate linker switches
  - Use -bnocompact
    - Preserves cache locality
  - Use tm5250_opt
    - Aligns jumps for speed
- Use other linker switches for the rest of your program
  - Reduces code bloat

Smaller size C code

- Use jump tables
- Parameterize functions
- Be conservative with loop unrolling etc.

Data Cache Optimizations + general load/store Optimization

Goal: Minimize Data Traffic

- Remember the memory architecture!

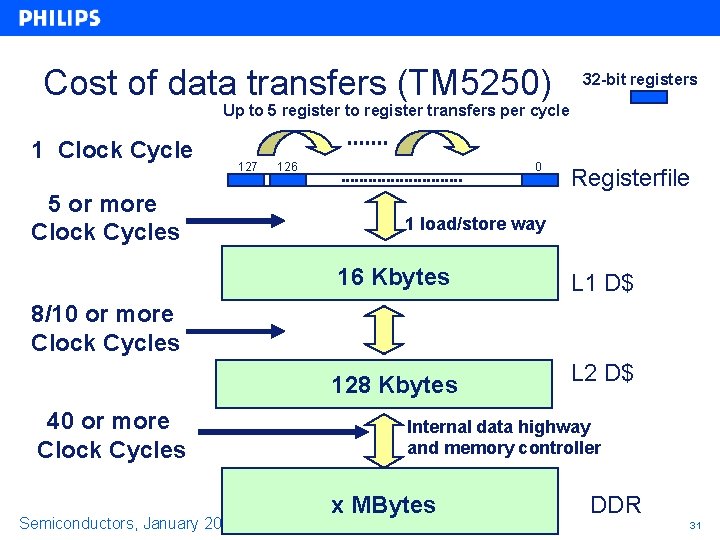

Cost of data transfers (TM5250)

- Register file (32-bit registers 0 to 127): up to 5 register-to-register transfers per cycle, 1 clock cycle.
- L1 D$ (16 Kbytes), 1 load/store way: 5 or more clock cycles.
- L2 D$ (128 Kbytes): 8/10 or more clock cycles.
- DDR (x MBytes) through the internal data highway and memory controller: 40 or more clock cycles.

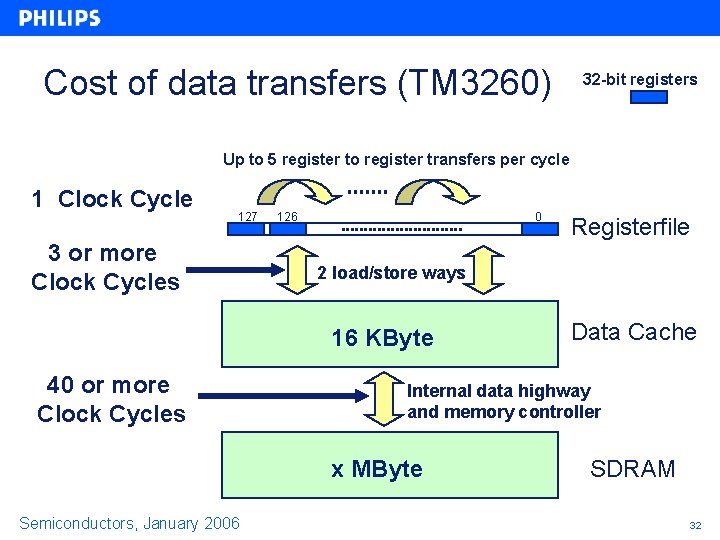

Cost of data transfers (TM3260)

- Register file (32-bit registers 0 to 127): up to 5 register-to-register transfers per cycle, 1 clock cycle.
- Data cache (16 KByte), 2 load/store ways: 3 or more clock cycles.
- SDRAM (x MByte) through the internal data highway and memory controller: 40 or more clock cycles.

Techniques to Improve D$ Usage

- Limit traffic between SDRAM and D$
  - Make data structures smaller
  - Re-arrange data to fit in the cache better
- Limit traffic between register file and D$
  - Keep data in registers

![Compacting data structures Use smallest possible data type char data5 5 23 65 Compacting data structures • Use smallest possible data type: char data[5]={ 5, 23, 65,](https://slidetodoc.com/presentation_image/430be7527553dc336a9ad90936ea6bb5/image-34.jpg)

Compacting data structures

- Use the smallest possible data type: char data[5] = {5, 23, 65, 21, 56} instead of int data[5] = {5, 23, 65, 21, 56}.
- Use table lookups when only some values of a larger set are used.

Original table:

    int data[1000] = { 2354, 3597, 2248, 824, 242, 3597, 2248, 242, 824, 3597, 2354, 2248, …, 3597};

Compacted tables:

    int dataTab[6] = { 2354, 3597, 2248, 824, 242, 3597};
    char dataLUT[1000] = { 0, 1, 2, 3, 4, 5, 2, 4, 3, 1, 0, 2, …, 1};

The access a = data[X]; then becomes a = dataTab[dataLUT[X]];
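A self-contained sketch of the lookup-table compaction, using only the first twelve entries that the slide spells out. The names `data_tab`, `data_lut` and `lookup` are hypothetical, and unlike the slide this version folds the repeated value 3597 into a single table slot, so index 5 maps to slot 1.

```c
#include <stdint.h>

/* The distinct values that actually occur in the large table. */
static const int data_tab[5] = { 2354, 3597, 2248, 824, 242 };

/* One byte per element, holding an index into data_tab. */
static const uint8_t data_lut[12] = { 0, 1, 2, 3, 4, 1, 2, 4, 3, 1, 0, 2 };

/* Replaces a direct read of the large int table. */
int lookup(unsigned x)
{
    return data_tab[data_lut[x]];
}
```

With 4-byte ints, the 1000-entry table from the slide shrinks from 4000 bytes to about 1020 bytes at the cost of one dependent load per access, so the trade pays off mainly when the original table does not fit in the data cache.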

Keep Things in Registers: Reducing the number of loads and stores

- Problem with loads/stores:
  - only one load/store unit is available
  - latency of five clock cycles or more per load/store
- Solutions:
  - keep intermediate results in registers
  - load more than one char or short at a time and extract the data with custom operators

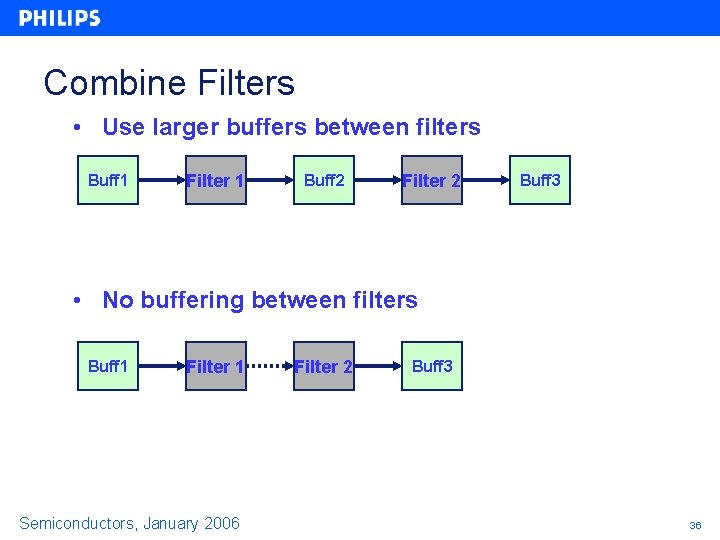

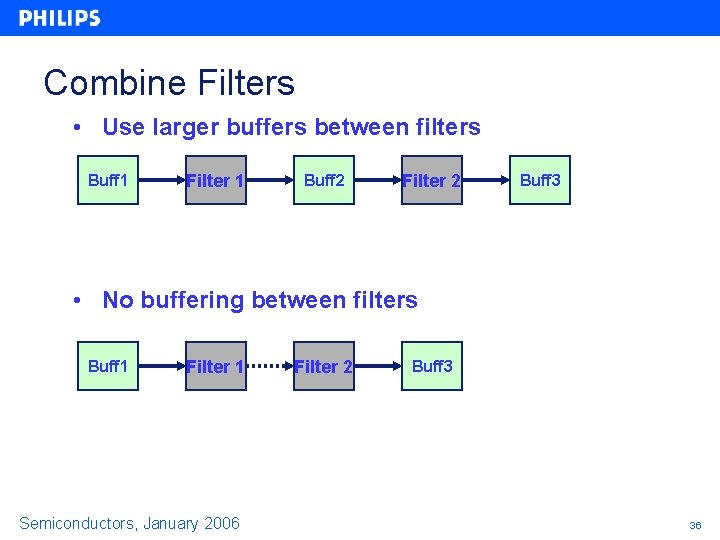

Combine Filters

- Use larger buffers between filters: Buff1 -> Filter1 -> Buff2 -> Filter2 -> Buff3
- No buffering between filters: Buff1 -> Filter1 -> Filter2 -> Buff3

Change Processing Flow

- Trade-off between I$ and D$ optimization:
  - Sometimes locality can only be optimized for one but not the other.
- Analyze whether the data or the instruction cache is more problematic in hot spots.
- Rules of thumb:
  - If the critical section contains a lot of code, try to keep the code in the cache as long as possible.
  - If the critical section is relatively short, try to keep the data in the cache as long as possible.

Level 2 Cache Pre-fetching

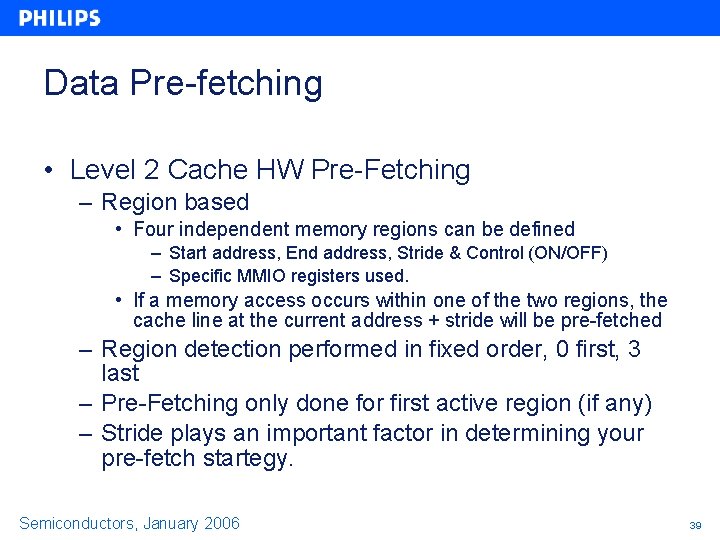

Data Pre-fetching

- Level 2 cache HW pre-fetching
  - Region based
- Four independent memory regions can be defined
  - Start address, end address, stride & control (ON/OFF)
  - Specific MMIO registers are used.
- If a memory access occurs within one of the regions, the cache line at the current address + stride will be pre-fetched.
  - Region detection is performed in fixed order, 0 first, 3 last.
  - Pre-fetching is only done for the first active region (if any).
  - The stride is an important factor in determining your pre-fetch strategy.

![Data Prefetching 2 L 2 Cache supports Software Prefetching Supported through Prefetch Data Pre-fetching [2] • L 2 Cache supports Software Pre-fetching – Supported through Pre-fetch](https://slidetodoc.com/presentation_image/430be7527553dc336a9ad90936ea6bb5/image-40.jpg)

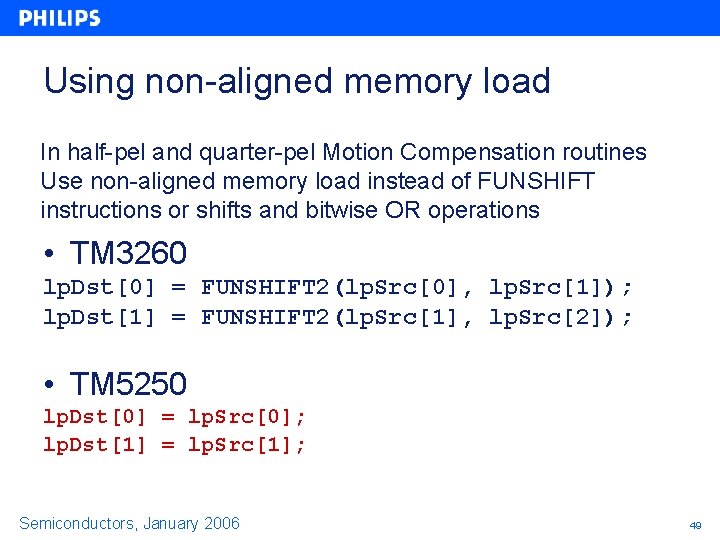

Data Pre-fetching [2]

- The L2 cache supports software pre-fetching
  - Supported through pre-fetch instructions
  - Much improved over the TM3260
  - Ignored if the data is already in the Level 2 cache.
- Pre-fetches beyond 6 pending ones are discarded.

Graphical Overview of Region

When defining the stride, make sure that a sufficient number of operations are executed before the program reaches the point where the pre-fetched data is required!

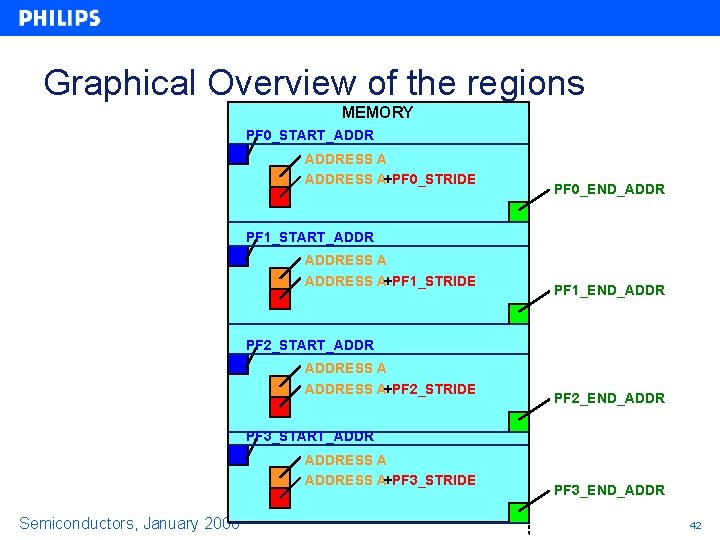

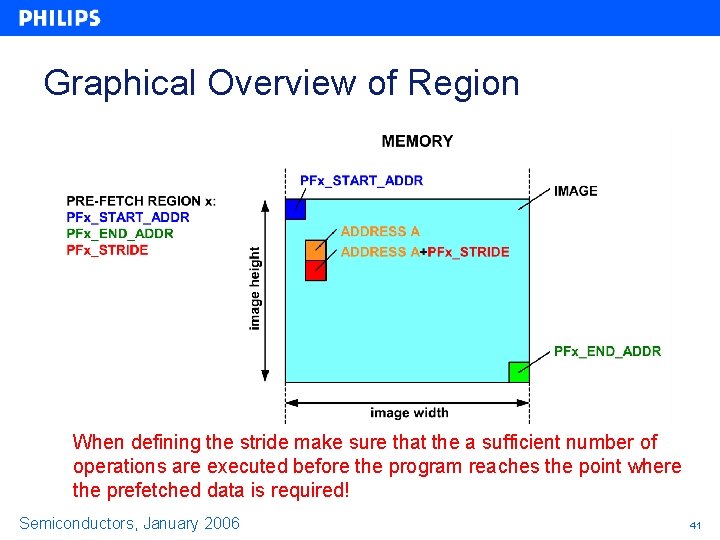

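Graphical Overview of the regions

Memory map with four regions; an access at address A inside region n triggers a pre-fetch at A + PFn_STRIDE:

- Region 0: PF0_START_ADDRESS to PF0_END_ADDR, pre-fetch at A+PF0_STRIDE
- Region 1: PF1_START_ADDRESS to PF1_END_ADDR, pre-fetch at A+PF1_STRIDE
- Region 2: PF2_START_ADDRESS to PF2_END_ADDR, pre-fetch at A+PF2_STRIDE
- Region 3: PF3_START_ADDRESS to PF3_END_ADDR, pre-fetch at A+PF3_STRIDE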

How to Program the Pre-fetch regions

Regions 0 and 1:

    /* For the reference image we fetch 20 lines ahead, this is the block
       height (8) plus the biggest vertical motion component (40). */
    /* Pre-fetch Regions Initialization */
    *(int *)(PF0_START_ADDR) = (int)image_prev;
    pf0_end_addr = (int)image_prev + (8*image_stride);
    *(int *)(PF0_END_ADDR) = pf0_end_addr;
    *(int *)(PF0_STRIDE) = (1 << 31) | (40 * image_stride);

    /* For the current image we fetch 4 lines ahead, this is for the
       next line of blocks. */
    *(int *)(PF1_START_ADDR) = (int)image_curr;
    *(int *)(PF1_END_ADDR) = (int)image_curr + ((image_y_size-8) * image_stride);
    *(int *)(PF1_STRIDE) = (1 << 31) | (8 * image_stride);

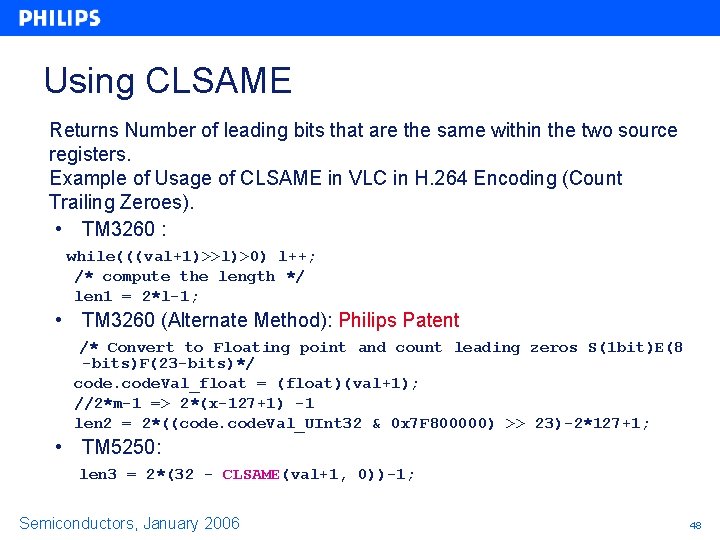

How to Program the Pre-fetch regions (contd.)

Regions 2 and 3:

    /* Region 2 & region 3 */
    *(int *)(PF2_START_ADDR) = (int)mv_best_prev_ptr;
    *(int *)(PF2_END_ADDR) = ((int)mv_best_prev_ptr) + (4*(720/8+4));
    *(int *)(PF2_STRIDE) = (1 << 31) | 128;
    *(int *)(PF3_START_ADDR) = (int)noise_ptr;
    *(int *)(PF3_END_ADDR) = ((int)noise_ptr) + (4*2*(720/8)*(480/8));
    *(int *)(PF3_STRIDE) = (1 << 31) | 128;

Pre-fetch regions & Context Switches

- Pre-fetch regions need to be saved during a context switch.
- The pSOS task switch callback can be used.
- When a task switch occurs, the region settings of the task that relinquishes control of the CPU are saved. When that task regains control of the CPU, its region settings are restored.

New Instruction Set Usage Examples

New Custom Operators in TM5250

- ALLOCD_SET
- CLSAME
- DUALADDSUB
- DUALIADD
- DUALIMAX
- DUALIMIN
- DUALISUB
- DUALASR
- SWAPBITS

Using CLSAME

Returns the number of leading bits that are the same within the two source registers.

Example of usage of CLSAME in VLC in H.264 encoding (count leading zeros).

TM3260:

    while (((val+1) >> l) > 0) l++;   /* compute the length */
    len1 = 2*l - 1;

TM3260 (alternate method, Philips patent):

    /* Convert to floating point and count leading zeros:
       S(1 bit) E(8 bits) F(23 bits) */
    codeVal_float = (float)(val+1);
    /* 2*m-1 => 2*(x-127+1)-1 */
    len2 = 2*((codeVal_UInt32 & 0x7F800000) >> 23) - 2*127 + 1;

TM5250:

    len3 = 2*(32 - CLSAME(val+1, 0)) - 1;
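To see that the loop and the CLSAME form agree, the sketch below emulates the operator in portable C. `clsame_emul` is a hypothetical stand-in that assumes CLSAME counts identical leading bits and returns 32 for equal inputs (that case is not exercised here); inputs are limited to val < 2^31 - 1 so that the shift in the loop version stays below 32.

```c
#include <stdint.h>

/* Assumed semantics: number of identical leading bits of a and b. */
static unsigned clsame_emul(uint32_t a, uint32_t b)
{
    uint32_t diff = a ^ b;
    unsigned n = 0;
    while (n < 32 && !(diff & 0x80000000u)) {
        diff <<= 1;
        n++;
    }
    return n;
}

/* Code length via the bit-by-bit loop (TM3260 style). */
unsigned code_len_loop(uint32_t val)
{
    unsigned l = 0;
    while (((val + 1) >> l) > 0) {
        l++;
    }
    return 2 * l - 1;
}

/* Code length via leading-bit count (TM5250 style): comparing
 * against 0 turns "leading same bits" into "leading zeros". */
unsigned code_len_clsame(uint32_t val)
{
    return 2 * (32 - clsame_emul(val + 1, 0)) - 1;
}
```

The loop takes one iteration per significant bit of val + 1, while the CLSAME form is a fixed handful of operations, which matters in a VLC encoder where this length is computed per symbol.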

Using non-aligned memory load

In half-pel and quarter-pel motion compensation routines, use non-aligned memory loads instead of FUNSHIFT instructions or shifts and bitwise OR operations.

TM3260:

    lpDst[0] = FUNSHIFT2(lpSrc[0], lpSrc[1]);
    lpDst[1] = FUNSHIFT2(lpSrc[1], lpSrc[2]);

TM5250:

    lpDst[0] = lpSrc[0];
    lpDst[1] = lpSrc[1];

Using DUALADDSUB, DSPIDUALMUL

In the inverse quantization part of the texture decoding routine, use them for more efficient reconstruction of DCT coefficients.

TM3260:

    tmp0 = ADDSUB(q_2scale*block[i], q_add);
    tmp1 = ADDSUB(q_2scale*block[i+1], q_add);

TM5250:

    tmp0 = DUALADDSUB(DSPIDUALMUL(q_2scale_q_2scale, tmp_block[j]), q_add_q_add);

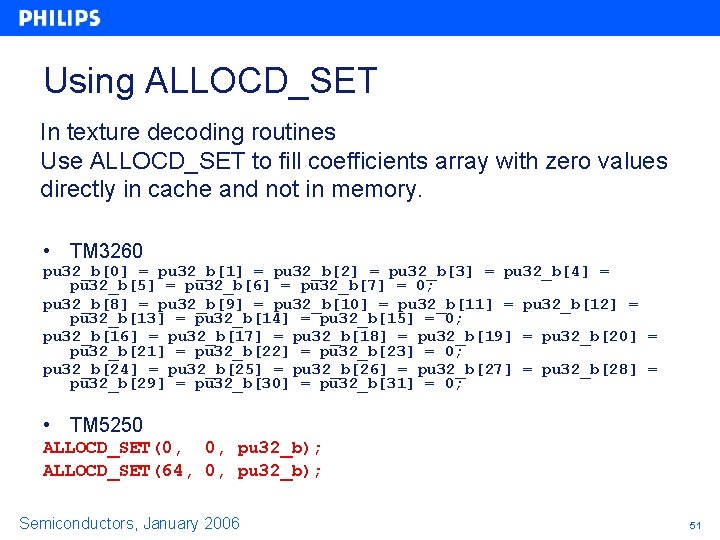

Using ALLOCD_SET

In texture decoding routines, use ALLOCD_SET to fill the coefficients array with zero values directly in the cache and not in memory.

TM3260:

    pu32_b[0] = pu32_b[1] = pu32_b[2] = pu32_b[3] = pu32_b[4] = pu32_b[5] = pu32_b[6] = pu32_b[7] = 0;
    pu32_b[8] = pu32_b[9] = pu32_b[10] = pu32_b[11] = pu32_b[12] = pu32_b[13] = pu32_b[14] = pu32_b[15] = 0;
    pu32_b[16] = pu32_b[17] = pu32_b[18] = pu32_b[19] = pu32_b[20] = pu32_b[21] = pu32_b[22] = pu32_b[23] = 0;
    pu32_b[24] = pu32_b[25] = pu32_b[26] = pu32_b[27] = pu32_b[28] = pu32_b[29] = pu32_b[30] = pu32_b[31] = 0;

TM5250:

    ALLOCD_SET(0, 0, pu32_b);
    ALLOCD_SET(64, 0, pu32_b);

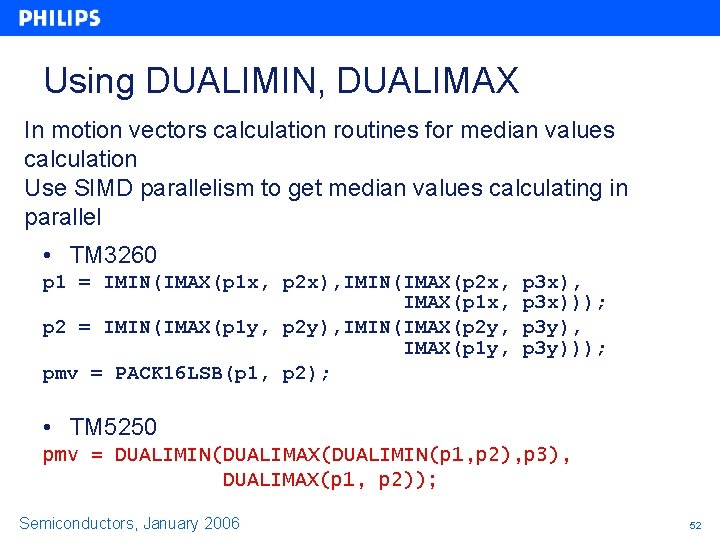

Using DUALIMIN, DUALIMAX

In motion vector calculation routines, use SIMD parallelism to calculate the median values of both vector components in parallel.

TM3260:

    p1 = IMIN(IMAX(p1x, p2x), IMIN(IMAX(p2x, p3x), IMAX(p1x, p3x)));
    p2 = IMIN(IMAX(p1y, p2y), IMIN(IMAX(p2y, p3y), IMAX(p1y, p3y)));
    pmv = PACK16LSB(p1, p2);

TM5250:

    pmv = DUALIMIN(DUALIMAX(DUALIMIN(p1, p2), p3), DUALIMAX(p1, p2));
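Both expressions compute a median of three; the TM5250 form simply needs fewer min/max steps and applies them to two packed 16-bit lanes at once. The scalar sketch below, with hypothetical helper names, shows the two formulations side by side for a single lane.

```c
static int imin(int a, int b) { return a < b ? a : b; }
static int imax(int a, int b) { return a > b ? a : b; }

/* TM3260-style form: the minimum of the three pairwise maxima. */
int median3_pairwise(int a, int b, int c)
{
    return imin(imax(a, b), imin(imax(b, c), imax(a, c)));
}

/* TM5250-style form: min(max(min(a, b), c), max(a, b)),
 * four min/max operations instead of five. */
int median3_short(int a, int b, int c)
{
    return imin(imax(imin(a, b), c), imax(a, b));
}
```

On the packed version each min/max handles the x and y components together, so the per-vector cost drops further and the final PACK16LSB step disappears.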

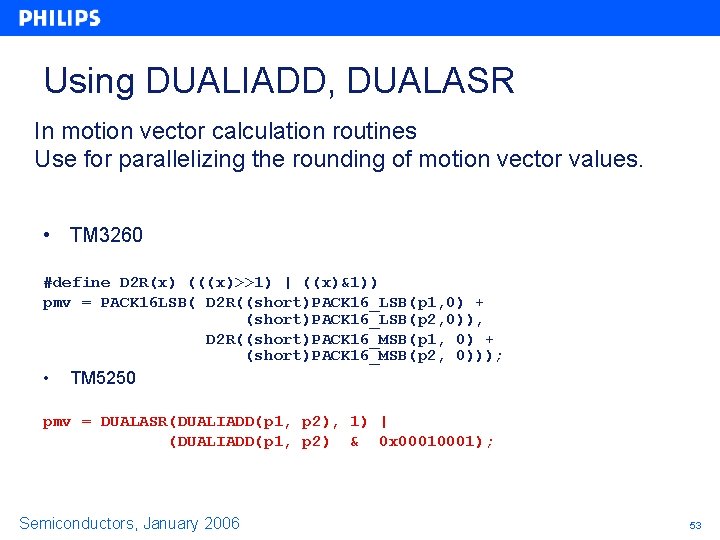

Using DUALIADD, DUALASR

In motion vector calculation routines, use them to parallelize the rounding of motion vector values.

TM3260:

    #define D2R(x) (((x)>>1) | ((x)&1))
    pmv = PACK16LSB(
        D2R((short)PACK16_LSB(p1, 0) + (short)PACK16_LSB(p2, 0)),
        D2R((short)PACK16_MSB(p1, 0) + (short)PACK16_MSB(p2, 0)));

TM5250:

    pmv = DUALASR(DUALIADD(p1, p2), 1) | (DUALIADD(p1, p2) & 0x0001);

Using IFIR16, DUALASR, DSPIDUALADD

In half-pel and quarter-pel motion estimation or motion compensation routines, use them to compute the coordinates of the current data in the reference frame in parallel.

TM3260:

    xh = dx & 1;
    yh = dy & 1;
    xoff = dx >> 1;
    yoff = dy >> 1;
    s = src + stride_src*yoff + xoff;

TM5250:

    xyz = DSPIDUALADD(x0y0, DUALASR(vectors, 0x01));
    refPointer = (UInt8 *)((int)refPicture + IFIR16(0x00010000 | stride, xyz));

Using SWAPBYTES

Reverses the order of bytes in a register. Use it in FFT or DCT for reversal of inputs.

TM5250, DCT input data packing and byte reversal:

    for (i = 0; i < 8; i++) {
        int byte_reversal;
        local_block[0] = MERGELSB(0, local_org[0]);    /* x1, x0 */
        local_block[1] = MERGEMSB(0, local_org[0]);    /* x3, x2 */
        byte_reversal = SWAPBYTES(local_org[1]);
        local_block[2] = MERGEMSB(0, byte_reversal);   /* x4, x5 */
        local_block[3] = MERGELSB(0, byte_reversal);   /* x6, x7 */
        local_org += stride >> 2;
        local_block += 4;
    }

System Level Optimizations

Context Save / Restore

- Measure how many task switches and interrupts you have
  - Time Doctor does this nicely
- Minimize these numbers
  - Combine tasks
  - Use larger buffers
    - Audio
    - Network

RTOS

- Task priorities, preemption and buffer sizes determine the system’s real-time behavior.
- Rules of thumb:
  - Larger buffers cause more D-Cache cycles, but tend to save I-Cache cycles because less task switching occurs.
  - Larger buffers cause a higher system delay.
  - Smaller buffers can cause fewer D-Cache cycles, but tend to cause more I-Cache cycles because more task switching occurs.
  - Smaller buffers reduce the system delay.
- Visualize the behavior with TimeDoctor!

Results from Real World

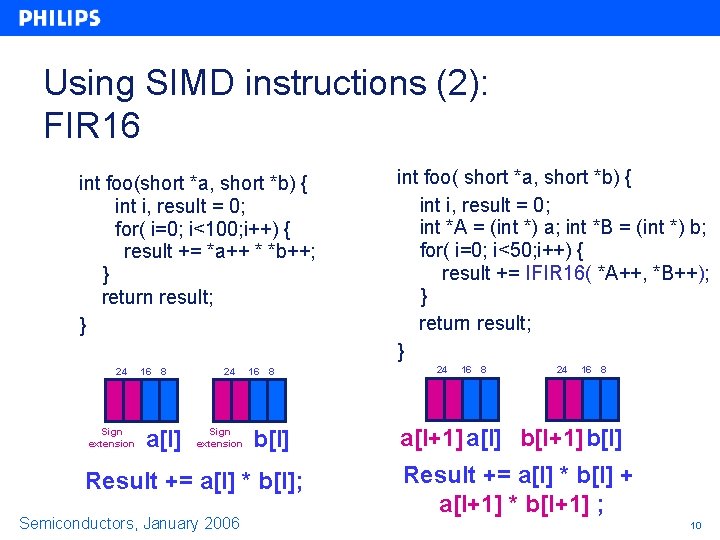

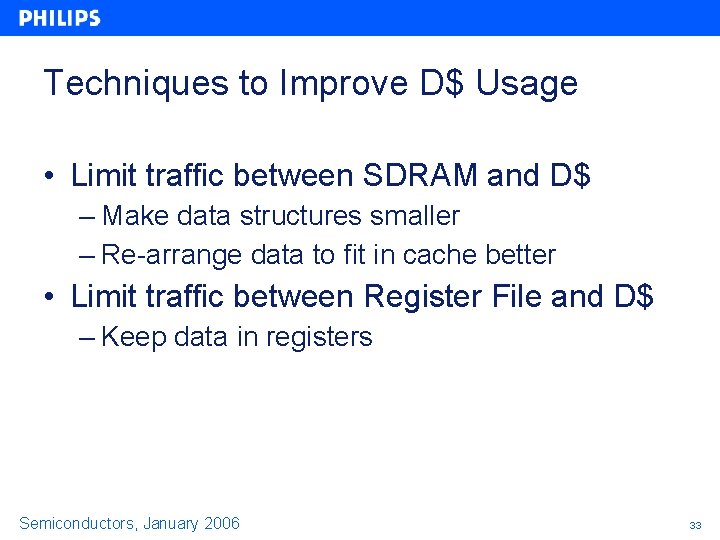

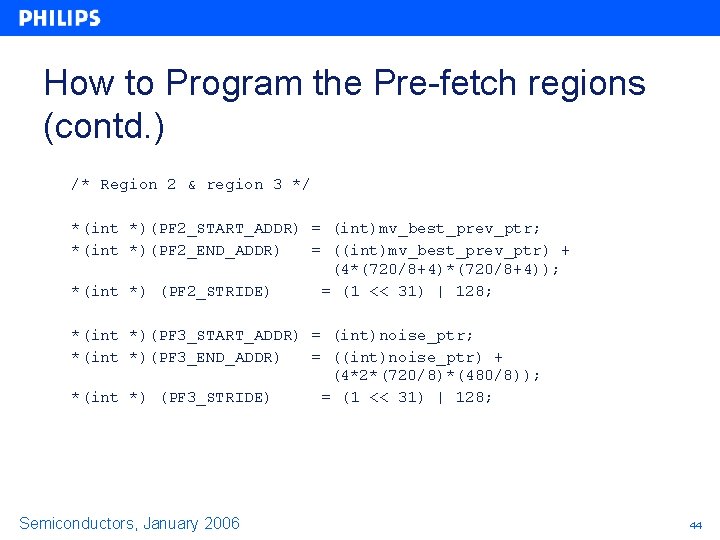

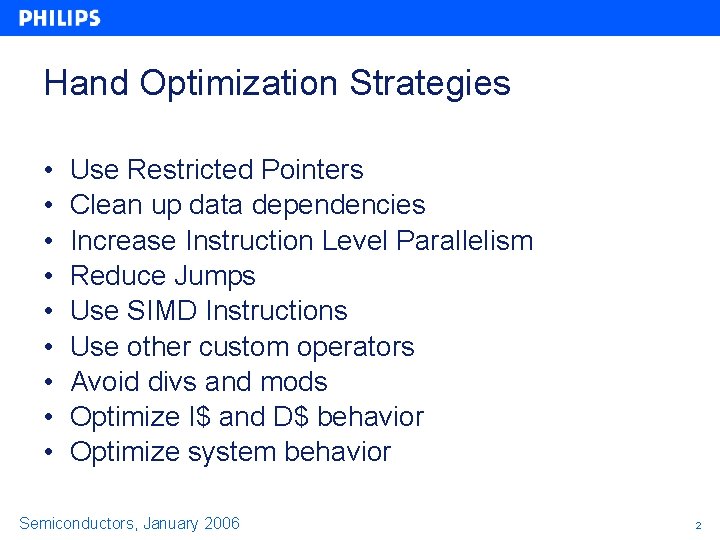

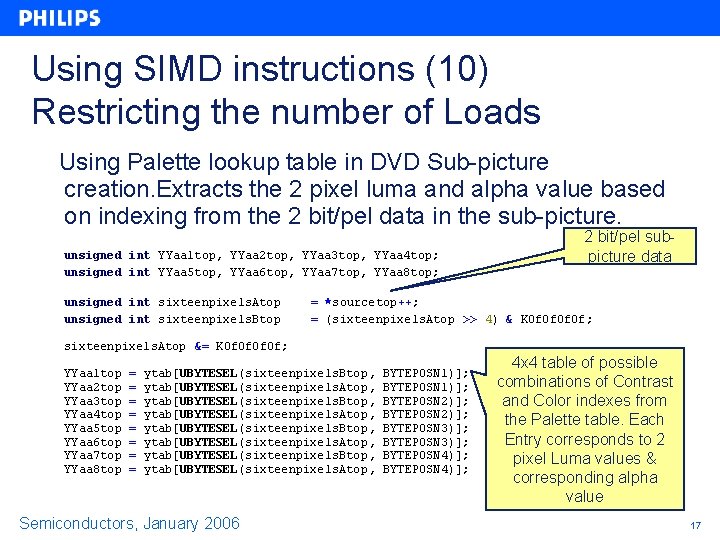

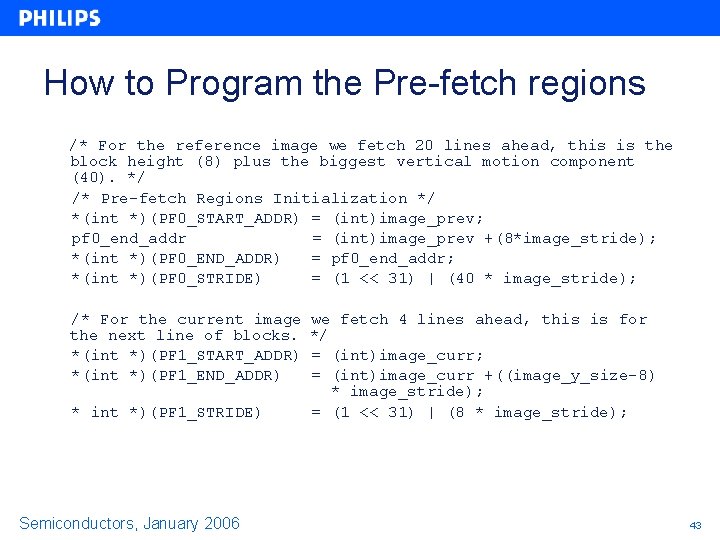

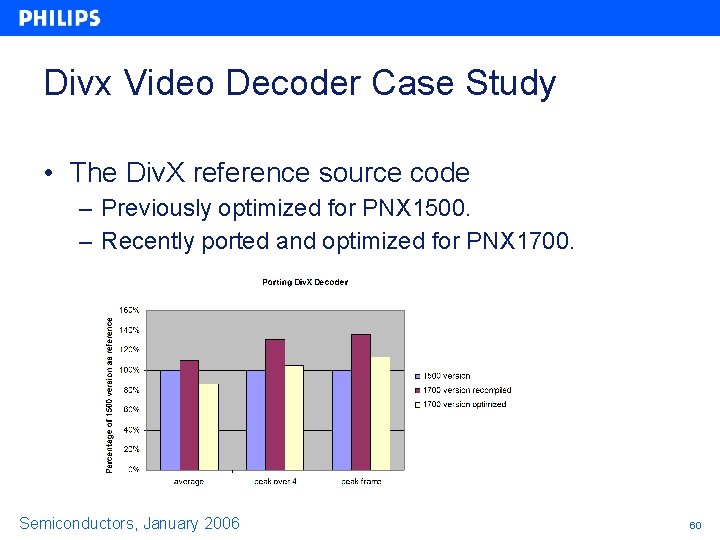

DivX Video Decoder Case Study

- The DivX reference source code
  - Previously optimized for PNX1500.
  - Recently ported and optimized for PNX1700.

![Divx Video Decoder Case Study 2 Measured over seven streams including HD streams Divx Video Decoder Case Study [2] • Measured over seven streams including HD streams.](https://slidetodoc.com/presentation_image/430be7527553dc336a9ad90936ea6bb5/image-61.jpg)

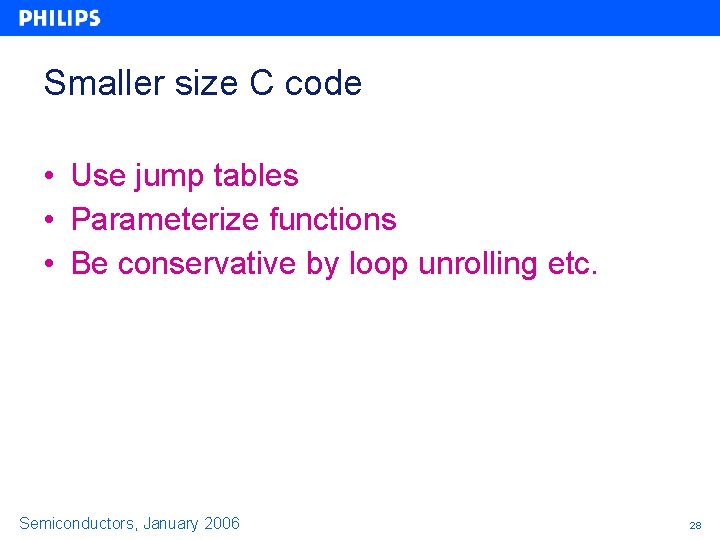

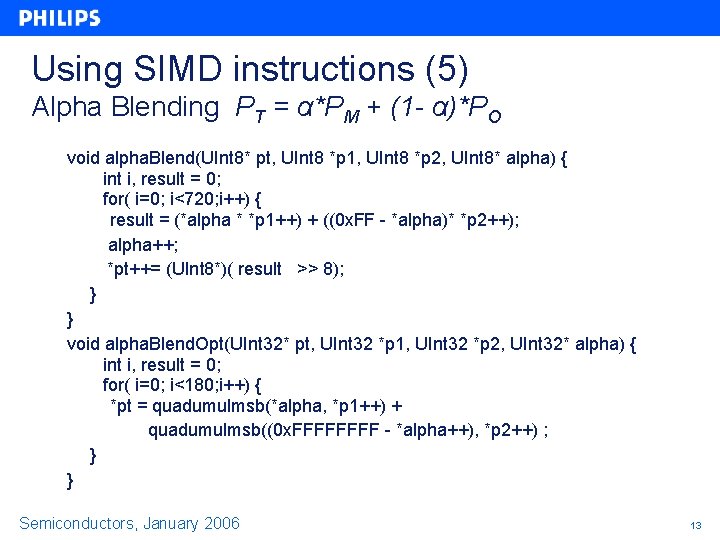

DivX Video Decoder Case Study [2]

- Measured over seven streams, including HD streams.
- Simple recompile
  - The average load rose by 10% in comparison to PNX1500.
- After optimization
  - The average required cycle count was reduced by 13% from the reference on the PNX1500.
  - The peak-over-4 cycle count of the optimized PNX1700 code was up by 5% compared to the PNX1500 code.
- Of these, data cache stall cycles are about half compared to PNX1500.
- This implies that the increase in cycle count is due to the longer pipeline.