Transfer and Multitask Learning
Steve Clanton

- Slides: 10


Multiple Tasks and Generalization
• “The ability of a system to recognize and apply knowledge and skills learned in previous tasks to novel tasks.”
• “aims to improve the learning of the target predictive function using knowledge” from a related learning task or domain
• “solve learning tasks [better] using information gained from solving related tasks”
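The second quote can be made concrete with the domain/task notation used in Pan & Yang's survey (listed in the references). This is a compact restatement for orientation, not a quotation: a domain pairs a feature space with a marginal distribution, a task pairs a label space with a predictive function, and transfer applies when the source and target differ in either one.

```latex
\[
\mathcal{D} = \{\mathcal{X},\, P(X)\}, \qquad \mathcal{T} = \{\mathcal{Y},\, f(\cdot)\}
\]
\[
\text{Given } (\mathcal{D}_S, \mathcal{T}_S) \text{ and } (\mathcal{D}_T, \mathcal{T}_T)
\text{ with } \mathcal{D}_S \neq \mathcal{D}_T \text{ or } \mathcal{T}_S \neq \mathcal{T}_T:
\quad \text{improve } f_T(\cdot) \text{ using knowledge from } \mathcal{D}_S \text{ and } \mathcal{T}_S .
\]
```

Read this way, "different domains" means the feature space or its distribution changes, and "different tasks" means the label space or the predictive function changes.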

What are related tasks? (different domains)
Example 1: cross-company software defect prediction
• Projects could be nearly identical but use different metrics
• Projects could use the same metrics but have very different distributions

What are related tasks? (different tasks)
• Example 3: Regression, multiclass classification, and binary classification
• Example 4: Predicting driving direction, predicting the position of lines on the road

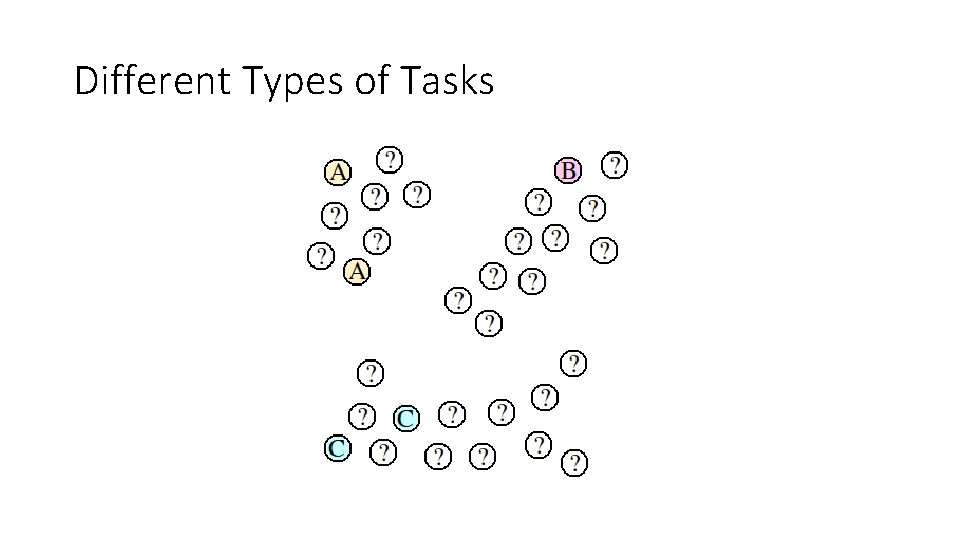

Different Types of Tasks

How to Transfer Domain Knowledge
• Instance transfer (e.g. feature weighting)
• Feature-representation transfer
• Parameter transfer
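As one illustration of instance transfer, the sketch below re-weights source-domain instances so that they stand in for the target domain (in Pan & Yang's survey, instance transfer is described in terms of re-weighting source data). It is a toy example under strong assumptions: both domains are one-dimensional Gaussians with known parameters, and the names `importance_weights` and `weighted_mean` are hypothetical helpers, not part of any library.

```python
import math
import random


def gaussian_pdf(x, mean, std):
    """Density of a normal distribution at x."""
    z = (x - mean) / std
    return math.exp(-0.5 * z * z) / (std * math.sqrt(2.0 * math.pi))


def importance_weights(xs, source, target):
    """Weight each source instance by p_target(x) / p_source(x)."""
    return [gaussian_pdf(x, *target) / gaussian_pdf(x, *source) for x in xs]


def weighted_mean(values, weights):
    """Self-normalised weighted average."""
    return sum(v * w for v, w in zip(values, weights)) / sum(weights)


random.seed(0)
source = (0.0, 1.0)  # assumed (mean, std) of the source domain
target = (1.0, 1.0)  # assumed (mean, std) of the target domain

# Only source-domain samples are available.
xs = [random.gauss(*source) for _ in range(20000)]
ys = [x * x for x in xs]  # toy quantity to estimate under the target domain

weights = importance_weights(xs, source, target)
naive = sum(ys) / len(ys)                # ignores the shift: estimates E_S[x^2] = 1
reweighted = weighted_mean(ys, weights)  # estimates E_T[x^2] = 1^2 + 1 = 2

print(f"naive estimate:      {naive:.3f}")
print(f"reweighted estimate: {reweighted:.3f}")
```

In practice the density ratio is not known and has to be estimated, which is where most of the difficulty in instance transfer lies.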

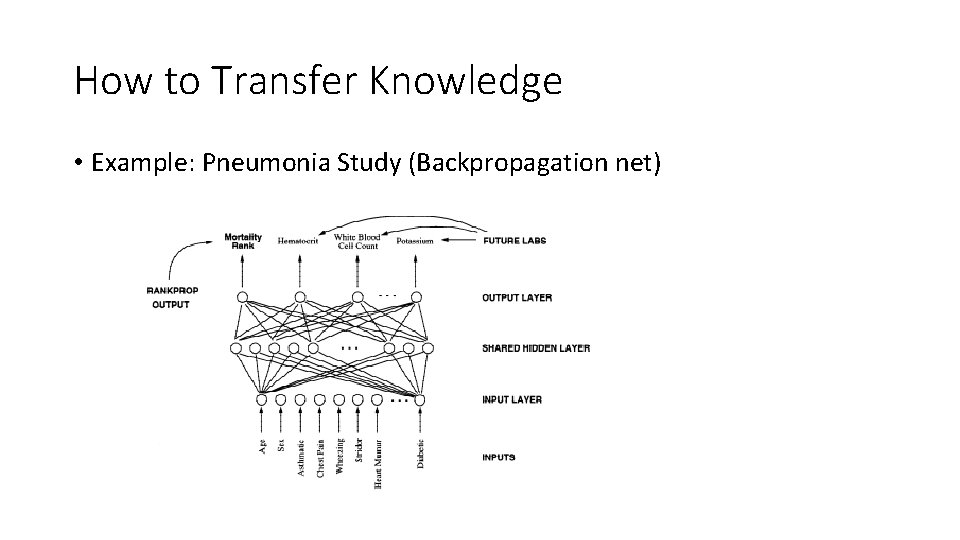

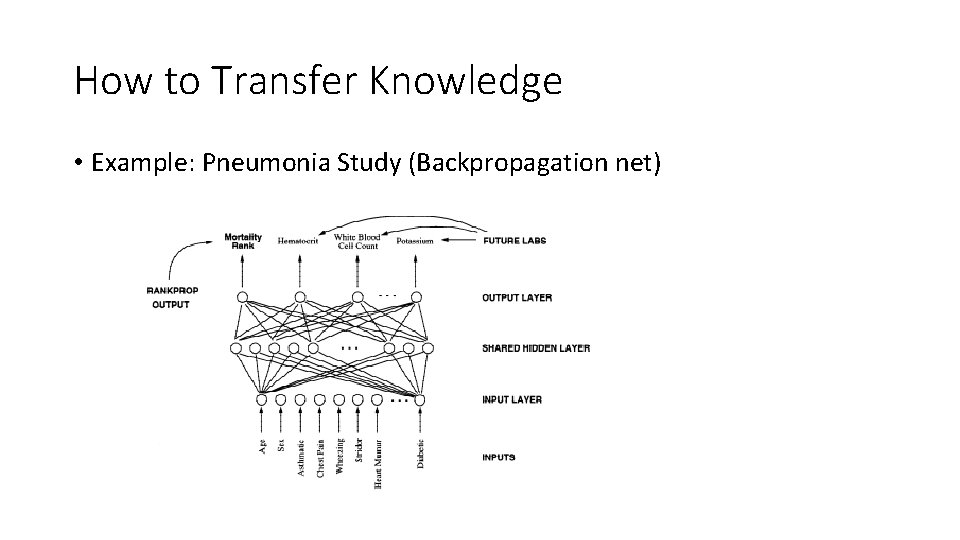

How to Transfer Knowledge
• Example: Pneumonia Study (Backpropagation net)
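The general shape of a multitask backpropagation net, as described in Caruana's paper, is one hidden layer shared by several output units, one per task. The sketch below shows that shape on synthetic data; it is not the pneumonia study itself. The two tasks, the layer sizes, the learning rate, and the class name `MultitaskNet` are all assumptions made for illustration.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


class MultitaskNet:
    """One shared hidden layer feeding one sigmoid output unit per task."""

    def __init__(self, n_in, n_hidden, n_tasks, rng):
        # The last weight in every row is the bias.
        self.w_hidden = [[rng.uniform(-0.5, 0.5) for _ in range(n_in + 1)]
                         for _ in range(n_hidden)]
        self.w_out = [[rng.uniform(-0.5, 0.5) for _ in range(n_hidden + 1)]
                      for _ in range(n_tasks)]

    def forward(self, x):
        xb = list(x) + [1.0]
        hidden = [sigmoid(sum(w * v for w, v in zip(row, xb)))
                  for row in self.w_hidden]
        hb = hidden + [1.0]
        outputs = [sigmoid(sum(w * v for w, v in zip(row, hb)))
                   for row in self.w_out]
        return hidden, outputs

    def train_step(self, x, targets, lr):
        xb = list(x) + [1.0]
        hidden, outputs = self.forward(x)
        hb = hidden + [1.0]
        # Output deltas for squared error with sigmoid units.
        d_out = [(o - t) * o * (1.0 - o) for o, t in zip(outputs, targets)]
        # Each hidden unit sums the error signal from every task:
        # this is where the tasks share what they learn.
        d_hid = [h * (1.0 - h) * sum(d * self.w_out[k][j]
                                     for k, d in enumerate(d_out))
                 for j, h in enumerate(hidden)]
        for k, d in enumerate(d_out):
            for j, v in enumerate(hb):
                self.w_out[k][j] -= lr * d * v
        for j, d in enumerate(d_hid):
            for i, v in enumerate(xb):
                self.w_hidden[j][i] -= lr * d * v


def mean_loss(net, data):
    """Mean over examples of the squared error summed over tasks."""
    total = 0.0
    for x, targets in data:
        _, outputs = net.forward(x)
        total += sum((o - t) ** 2 for o, t in zip(outputs, targets))
    return total / len(data)


rng = random.Random(0)
data = []
for _ in range(200):
    x = (rng.random(), rng.random())
    main_label = 1.0 if x[0] + x[1] > 1.0 else 0.0   # main task (synthetic)
    extra_label = 1.0 if x[0] + x[1] > 0.8 else 0.0  # related extra task (synthetic)
    data.append((x, (main_label, extra_label)))

net = MultitaskNet(n_in=2, n_hidden=4, n_tasks=2, rng=rng)
initial_loss = mean_loss(net, data)
for epoch in range(200):
    rng.shuffle(data)
    for x, targets in data:
        net.train_step(x, targets, lr=0.5)
final_loss = mean_loss(net, data)
print(f"loss before: {initial_loss:.3f}, after: {final_loss:.3f}")
```

At prediction time only the main-task output needs to be read; the extra output exists to shape the shared hidden layer during training.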

How to Transfer Domain Knowledge
• Backpropagation nets
• K-nearest neighbor
• Kernel regression
• Decision trees
In all of these, the related tasks act as an inductive bias.
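For k-nearest neighbor, one way to read the multitask idea is to choose a single set of feature weights for the distance metric that does well on the main task and the extra tasks together. The sketch below does this with a tiny grid search and leave-one-out 1-NN error. The synthetic data, the candidate weights, and the helper names `nearest_index` and `loo_errors` are assumptions for illustration only.

```python
import random


def nearest_index(i, points, weights):
    """Index of the nearest neighbour of points[i] under a weighted squared distance."""
    best_j, best_d = None, float("inf")
    for j, p in enumerate(points):
        if j == i:
            continue
        d = sum(w * (a - b) ** 2 for w, a, b in zip(weights, points[i], p))
        if d < best_d:
            best_j, best_d = j, d
    return best_j


def loo_errors(points, labels_per_task, weights):
    """Leave-one-out 1-NN error count, summed over all tasks."""
    errors = 0
    for i in range(len(points)):
        j = nearest_index(i, points, weights)
        errors += sum(labels[i] != labels[j] for labels in labels_per_task)
    return errors


rng = random.Random(1)
# Feature 0 is informative for both tasks; feature 1 is pure noise.
points = [(rng.random(), rng.random()) for _ in range(150)]
main_task = [x0 > 0.5 for x0, _ in points]
extra_task = [x0 > 0.3 for x0, _ in points]

# Candidate feature weights for the shared distance metric.
candidates = [(1.0, 0.0), (1.0, 1.0), (0.0, 1.0)]
scores = {w: loo_errors(points, [main_task, extra_task], w) for w in candidates}
best_weights = min(candidates, key=scores.get)

for w in candidates:
    print(w, scores[w])
print("chosen feature weights:", best_weights)
```

The extra task contributes additional evidence about which features matter, which is the sense in which it biases the learner.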

Benefits: What’s better?
• Potential to handle noise through averaging (learn with less data)
• Potential to discover an important latent feature
• Eavesdropping: tell the learner a concept is important
• Representation bias: add stability

References
• Pan, S. J. & Yang, Q. 2010, "A Survey on Transfer Learning", IEEE Transactions on Knowledge and Data Engineering, vol. 22, no. 10, pp. 1345-1359.
• Caruana, R. 1997, "Multitask Learning", Machine Learning, vol. 28, no. 1, pp. 41-75.
• Hassan Mahmud, M. M. 2009, "On universal transfer learning", Theoretical Computer Science, vol. 410, no. 19, pp. 1826-1846.