Traditional Software Testing is a Failure Linda Shafer

- Slides: 37

Traditional Software Testing is a Failure!

Linda Shafer, University of Texas at Austin, www.athensgroup.com, donshafer@athensgroup.com

Don Shafer, Chief Technology Officer, Athens Group, Inc., www.athensgroup.com, donshafer@athensgroup.com

Seminar Dedication

Enrico Fermi referred to these brilliant Hungarian scientists as “the Martians,” based on speculation that a spaceship from Mars dropped them all off in Budapest in the early 1900s.

Copyright © 2002 Linda and Don Shafer

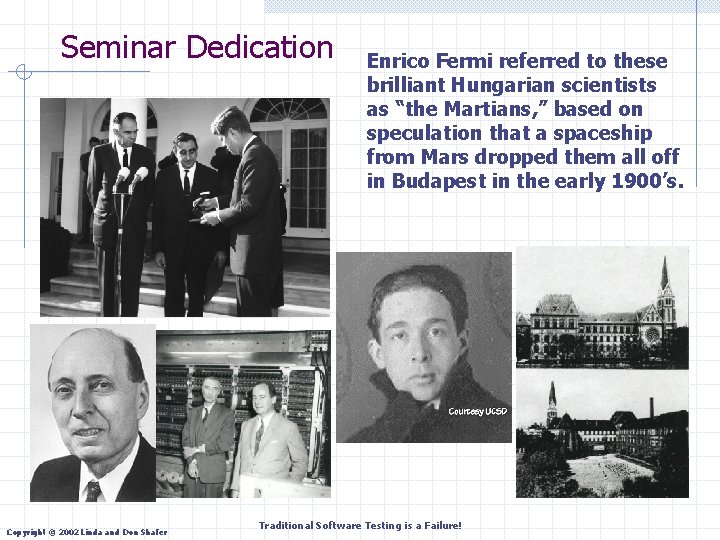

And let us not forget…

1994: John Harsányi (b. 5/29/1920, Budapest, Hungary; d. 2000), “For his pioneering analysis of equilibrium in theory of non-cooperative games.” Shared prize with John Nash of A Beautiful Mind.

Special Thanks to:

- Rex Black, RBCS, Inc., 31520 Beck Road, Bulverde, TX 78163 USA. Phone: +1 (830) 438-4830. Fax: +1 (830) 438-4831. www.rexblackconsulting.com, rex_black@rexblackconsulting.com
- Gary Cobb’s testing presentations for SWPM, as excerpted from Software Engineering: A Practitioner’s Approach, by Roger Pressman, McGraw-Hill, Fourth Edition, 1997 (Chapter 16, “Software Testing Techniques”; Chapter 22, “Object-Oriented Testing”)
- UT SQI SWPM Software Testing Sessions from 1991 to 2002

… for all they provided in past test guidance and direct use of some material from the University of Texas at Austin Software Project Management Certification Program.

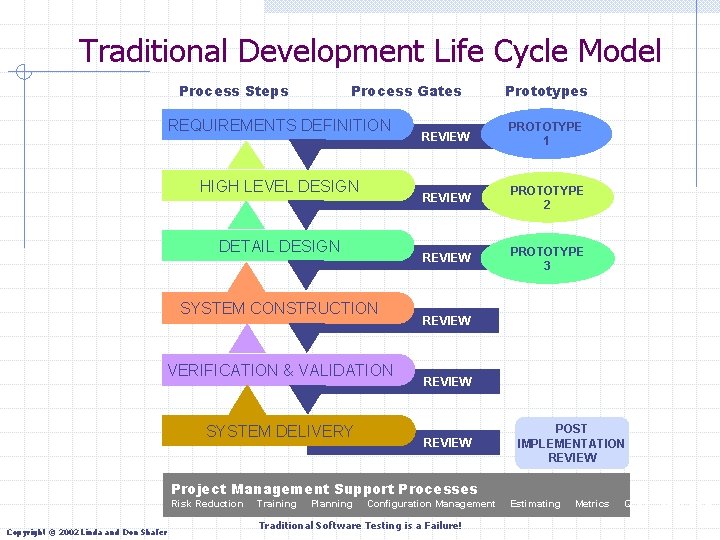

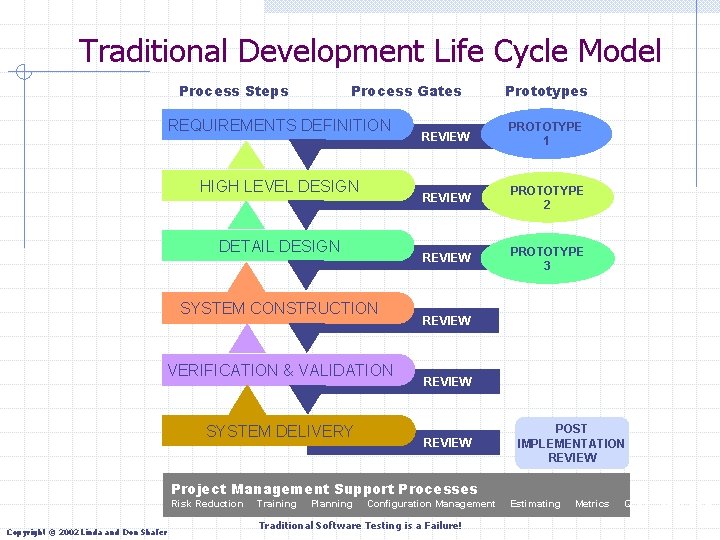

Traditional Development Life Cycle Model

- Process Steps: Requirements Definition, High Level Design, Detail Design, System Construction, Verification & Validation, System Delivery
- Process Gates: reviews interleaved with Prototypes 1, 2 and 3, ending with a Post Implementation Review
- Project Management and Support Processes: Risk Reduction, Training, Planning, Configuration Management, Estimating, Metrics, Quality Assurance

Software Failures – NIMBY!

It took the European Space Agency 10 years and $7 billion to produce Ariane 5, a giant rocket capable of hurling a pair of three-ton satellites into orbit with each launch and intended to give Europe overwhelming supremacy in the commercial space business. All it took to explode that rocket less than a minute into its maiden voyage last June, scattering fiery rubble across the mangrove swamps of French Guiana, was a small computer program trying to stuff a 64-bit number into a 16-bit space.
(James Gleick, 1996, http://www.around.com/ariane.html)

Columbia and other space shuttles have a history of computer glitches that have been linked to control systems, including left-wing steering controls, but NASA officials say it is too early to determine whether those glitches could have played any role in Saturday's shuttle disaster. … NASA designed the shuttle to be controlled almost totally by onboard computer hardware and software systems. It found that direct manual intervention was impractical for handling the shuttle during ascent, orbit or re-entry because of the required precision of reaction times, systems complexity and size of the vehicle. According to a 1991 report by the General Accounting Office, the investigative arm of Congress, "sequencing of certain shuttle events must occur within milliseconds of the desired times, as operations 10 to 400 milliseconds early or late could cause loss of crew, loss of vehicle, or mission failure."
(http://www.computerworld.com/softwaretopics/software/story/0,10801,78135,00.html)

After the hydraulic line on their V-22 Osprey aircraft ruptured, the four Marines on board had 30 seconds to fix the problem or perish. Following established procedures, when the reset button on the Osprey's primary flight control system lit up, one of the pilots, either Lt. Col. Michael Murphy or Lt. Col. Keith Sweaney, pushed it. Nothing happened. But as the button was pushed eight to 10 times in 20 seconds, a software failure caused the tilt-rotor aircraft to swerve out of control, stall and then crash near Camp Lejeune, N.C. … The crash was caused by "a hydraulic system failure compounded by a computer software anomaly," he said. Berndt released the results of a Corps legal investigation into the crash at an April 5 Pentagon press briefing.
(http://www.fcw.com/fcw/articles/2001/0409/news-osprey-0409-01.asp)
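The Ariane 5 account above comes down to a narrowing conversion: a value that needs 64 bits is assigned to a 16-bit slot. The following is a minimal Python sketch of the general hazard, not a reconstruction of the flight software; the function names and sample values are hypothetical. It contrasts a conversion that silently keeps only the low 16 bits with one that refuses out-of-range input.

```python
import struct

INT16_MIN, INT16_MAX = -32768, 32767


def to_int16_unchecked(value: float) -> int:
    """Mimic a raw narrowing conversion: keep only the low 16 bits."""
    low_bits = int(value) & 0xFFFF
    # Reinterpret those 16 bits as a signed two's-complement number.
    return struct.unpack("<h", struct.pack("<H", low_bits))[0]


def to_int16_checked(value: float) -> int:
    """Refuse to convert values that do not fit in 16 signed bits."""
    as_int = int(value)
    if not INT16_MIN <= as_int <= INT16_MAX:
        raise OverflowError(f"{value!r} does not fit in a signed 16-bit integer")
    return as_int


if __name__ == "__main__":
    print(to_int16_unchecked(1234.0))   # small values survive unchanged
    print(to_int16_unchecked(40000.0))  # large values come back wrong, with no warning
    try:
        to_int16_checked(40000.0)
    except OverflowError as exc:
        print("rejected:", exc)
```

A test that only exercises small values passes against both versions, which is one reason range and boundary conditions deserve explicit test cases.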

Software Failures – Offshore

- Material Handling
- Drive Off under Dynamic Positioning

“Computer science also differs from physics in that it is not actually a science. It does not study natural objects. Neither is it, as you might think, mathematics; although it does use mathematical reasoning pretty extensively. Rather, computer science is like engineering - it is all about getting something to do something, rather than just dealing with abstractions as in pre-Smith geology.”
(Richard Feynman, Feynman Lectures on Computation, 1996, p. xiii)

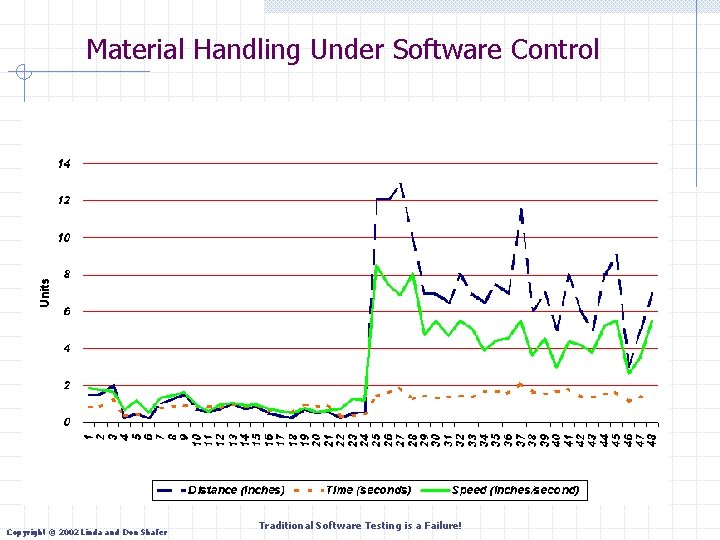

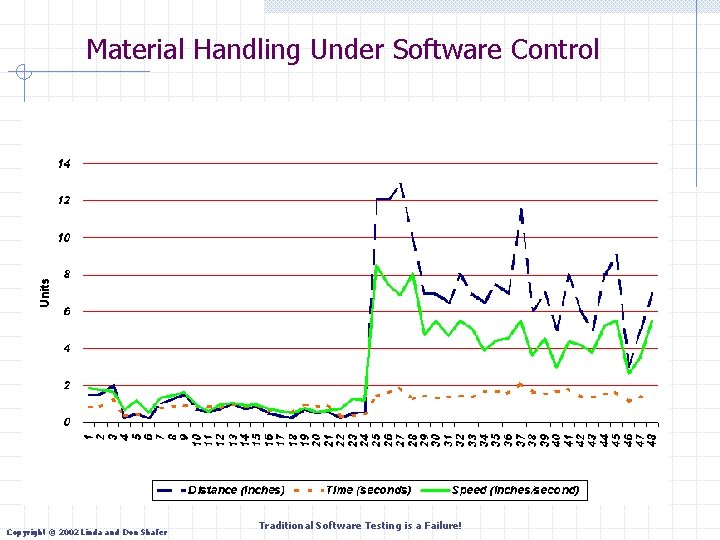

Material Handling Under Software Control

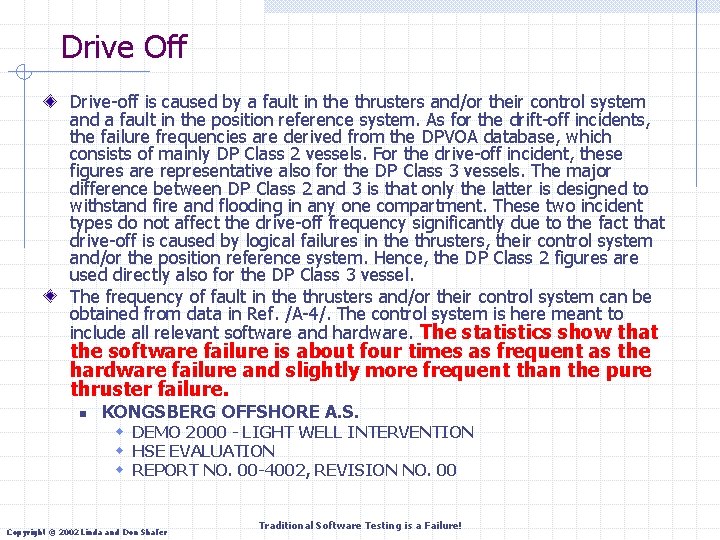

Drive Off

Drive-off is caused by a fault in the thrusters and/or their control system and a fault in the position reference system. As for the drift-off incidents, the failure frequencies are derived from the DPVOA database, which consists of mainly DP Class 2 vessels. For the drive-off incident, these figures are representative also for the DP Class 3 vessels.

The major difference between DP Class 2 and 3 is that only the latter is designed to withstand fire and flooding in any one compartment. These two incident types do not affect the drive-off frequency significantly due to the fact that drive-off is caused by logical failures in the thrusters, their control system and/or the position reference system. Hence, the DP Class 2 figures are used directly also for the DP Class 3 vessel.

The frequency of fault in the thrusters and/or their control system can be obtained from data in Ref. /A-4/. The control system is here meant to include all relevant software and hardware. The statistics show that the software failure is about four times as frequent as the hardware failure and slightly more frequent than the pure thruster failure.

Source: Kongsberg Offshore A.S., DEMO 2000 - Light Well Intervention, HSE Evaluation, Report No. 00-4002, Revision No. 00

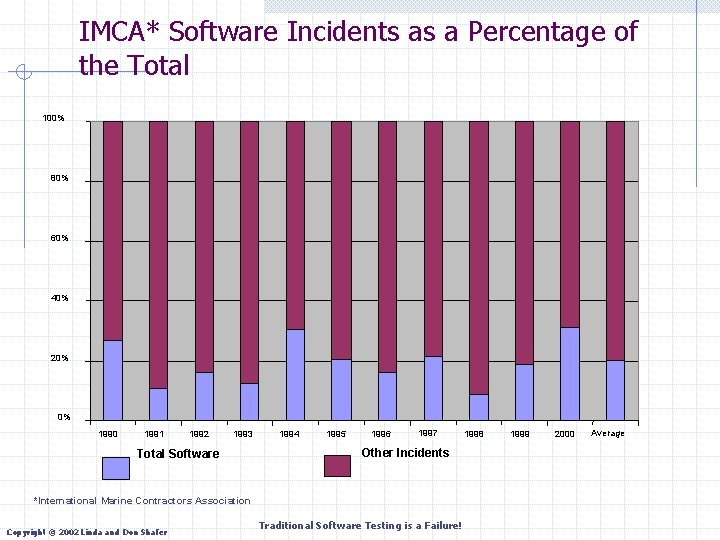

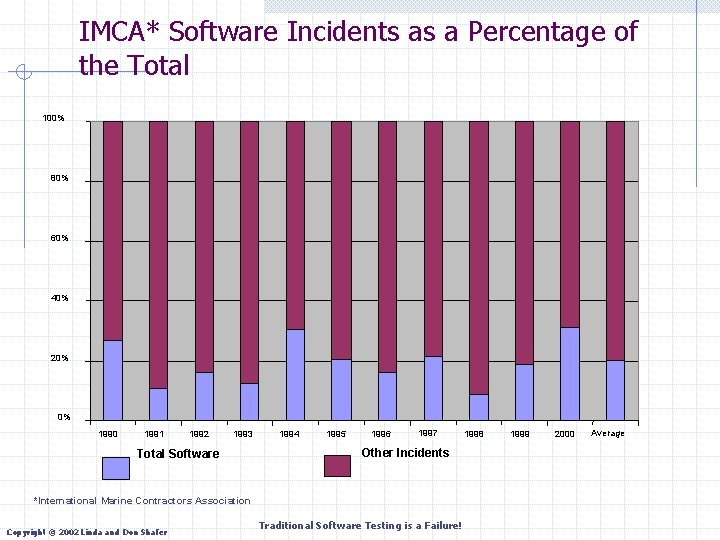

IMCA* Software Incidents as a Percentage of the Total

[Chart: percentage of incidents (0% to 100%) per year, 1990 to 2000, plus the average; series: Total Software, Other Incidents]

*International Marine Contractors Association

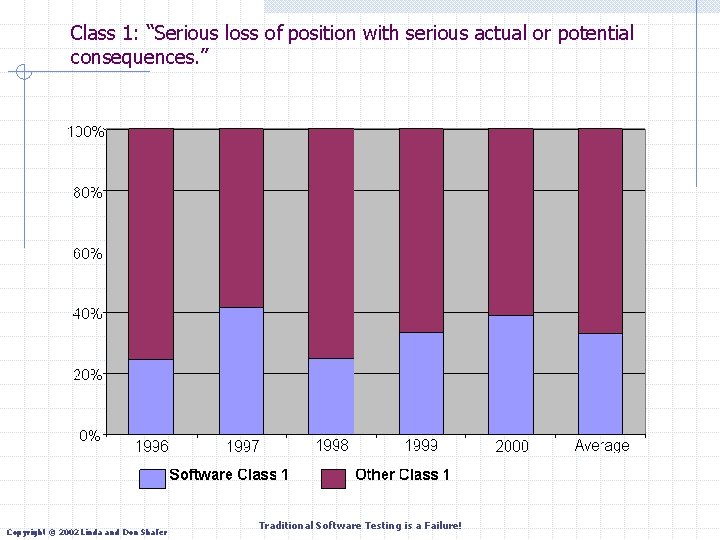

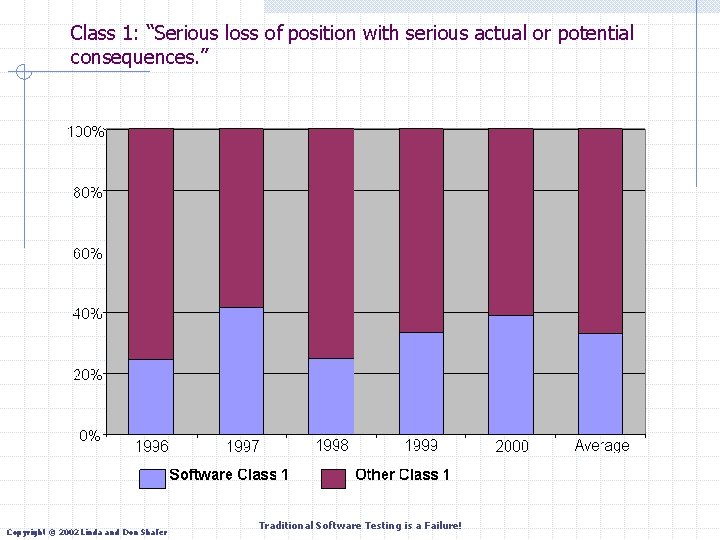

Class 1: “Serious loss of position with serious actual or potential consequences.”


General Testing Remarks

- a critical element of software quality assurance
- represents the ultimate review of specification, design and coding
- may use up to 40% of the development resources
- the primary goal of testing is to think of as many ways as possible to bring the newly-developed system down
- demolishing the software product that has just been built
- requires learning to live with humanness of practitioners
- requires an admission of failure in order to be good at it

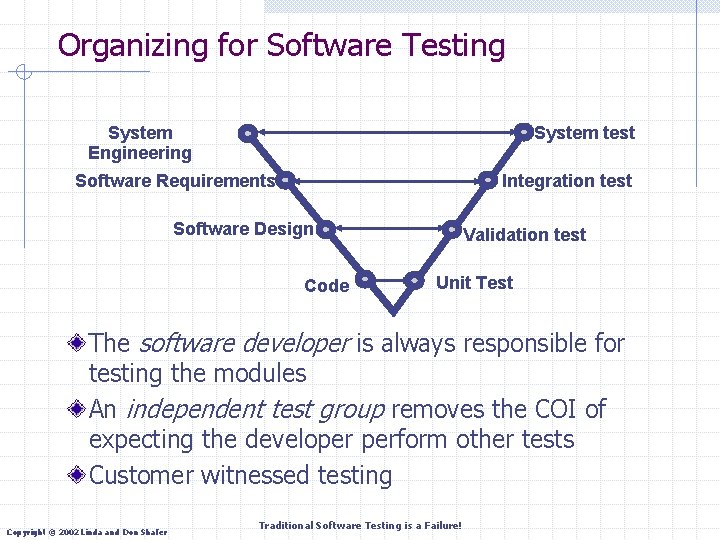

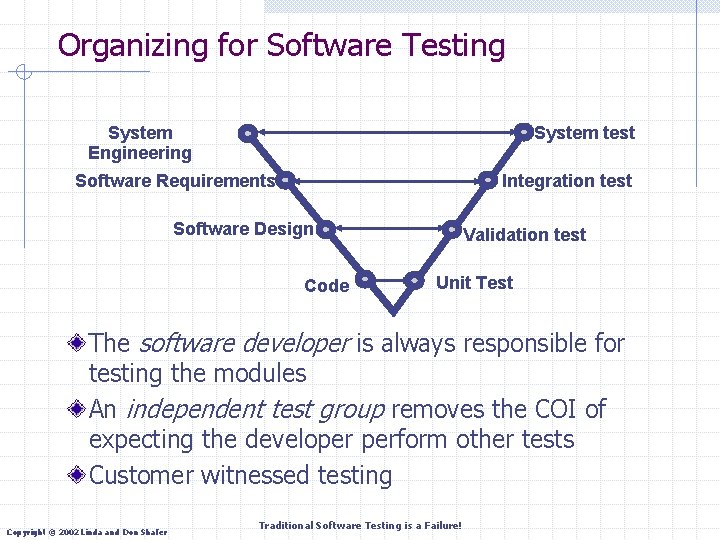

Organizing for Software Testing

[Diagram labels: System Engineering, Software Requirements, Software Design, Code; Unit Test, Integration test, Validation test, System test]

- The software developer is always responsible for testing the modules
- An independent test group removes the conflict of interest (COI) of expecting the developer to perform the other tests
- Customer-witnessed testing

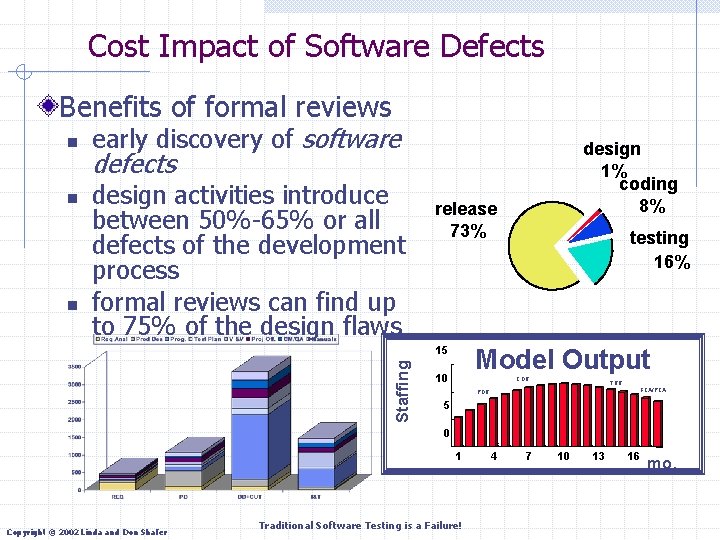

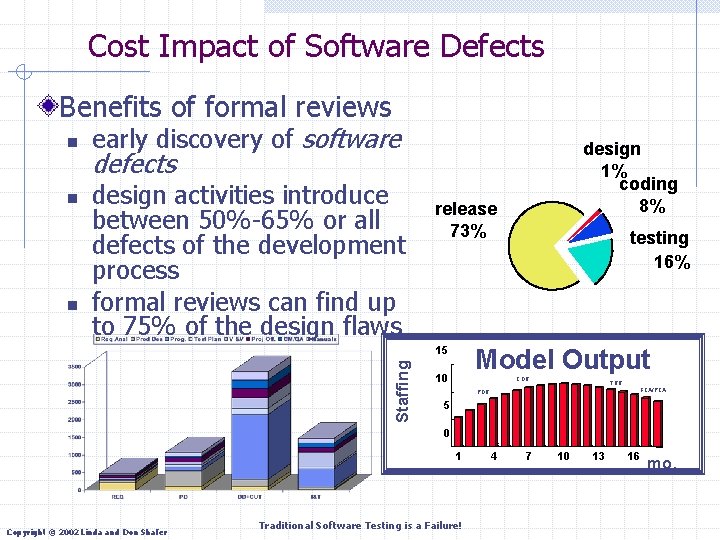

Cost Impact of Software Defects

Benefits of formal reviews

- early discovery of software defects
- design activities introduce between 50% and 65% of all defects of the development process
- formal reviews can find up to 75% of the design flaws

[Chart labels: design 1%, coding 8%, testing 16%, release 73%]

[Chart: Model Output, Staffing (0 to 15) over months 1 to 16, with milestones PDR, CDR, TRR, FCA/PCA]

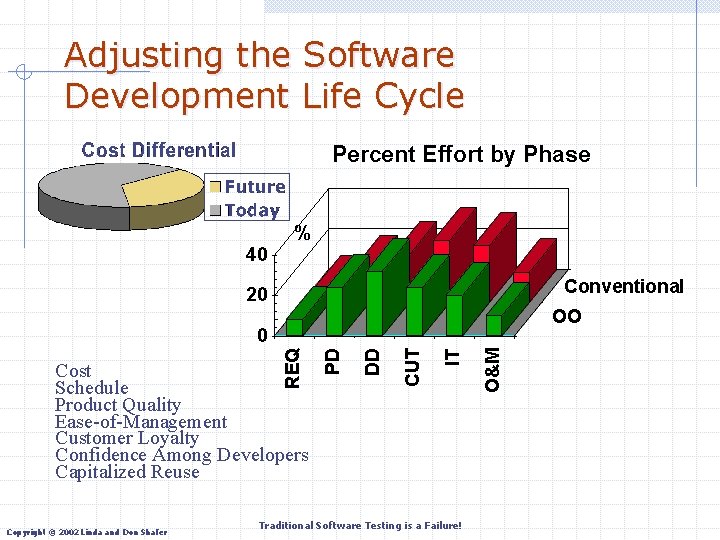

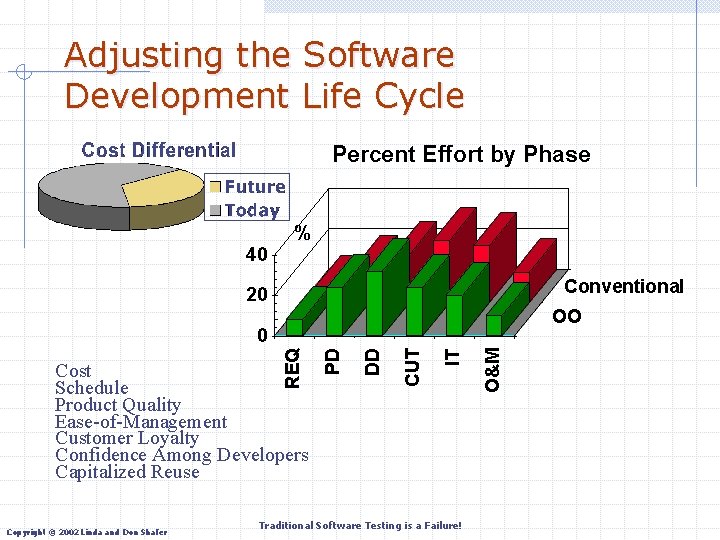

Adjusting the Software Development Life Cycle

[Chart: Percent Effort by Phase (0% to 40%) for Conventional and OO development; phases: REQ, PD, DD, CUT, IT, O&M]

- Cost
- Schedule
- Product Quality
- Ease-of-Management
- Customer Loyalty
- Confidence Among Developers
- Capitalized Reuse

Questions People Ask About Testing

- Should testing instill guilt?
- Is testing really destructive?
- Why aren’t there methods of eliminating bugs at the time they are being injected?
- Why isn’t a successful test one that inputs data correctly, gets all the right answers and outputs them correctly?
- Why do test cases need to be designed?
- Why can’t you test most programs completely?
- Why can’t the software developer simply take responsibility for finding/fixing all his/her errors?
- How many testers does it take to change a light bulb?

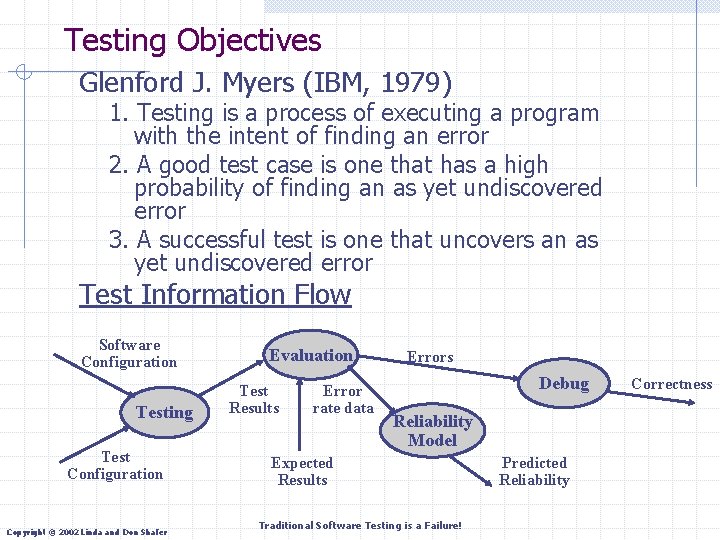

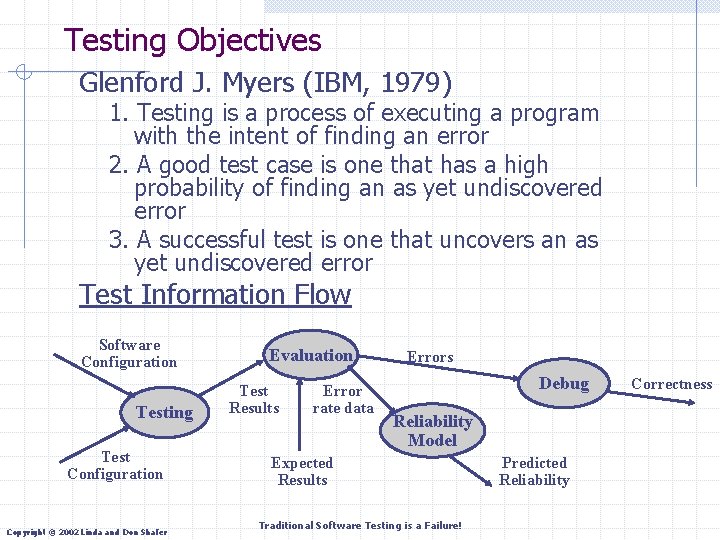

Testing Objectives

Glenford J. Myers (IBM, 1979):

1. Testing is a process of executing a program with the intent of finding an error
2. A good test case is one that has a high probability of finding an as yet undiscovered error
3. A successful test is one that uncovers an as yet undiscovered error

Test Information Flow

[Diagram labels: Software Configuration, Test Configuration, Testing, Test Results, Expected Results, Evaluation, Errors, Error rate data, Debug, Reliability Model, Predicted Reliability, Correctness]

Testing Principles

- All tests should be traceable to customer requirements
- Tests should be planned long before testing begins
- The Pareto principle applies to software testing
  - 80% of all errors will likely be in 20% of the modules
- Testing should begin “in the small” and progress toward testing “in the large”
- Exhaustive testing is not possible
- Testing should be conducted by an independent third party

“The test team should always know how many errors still remain in the code - for when the manager comes by.”
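The Pareto principle above can be checked against a project's own defect log. The sketch below is one possible way to do that, assuming a simple mapping from module name to defect count; the module names and counts are invented for illustration and do not come from the presentation.

```python
from __future__ import annotations


def modules_covering(defects_by_module: dict[str, int], share: float = 0.8) -> list[str]:
    """Return the worst modules, in order, whose defects add up to `share` of the total."""
    total = sum(defects_by_module.values())
    ranked = sorted(defects_by_module.items(), key=lambda item: item[1], reverse=True)
    selected: list[str] = []
    running = 0
    for name, count in ranked:
        if total == 0 or running >= share * total:
            break
        selected.append(name)
        running += count
    return selected


if __name__ == "__main__":
    # Hypothetical defect counts for ten modules (100 defects in total).
    log = {"parser": 50, "scheduler": 35, "ui": 4, "db": 3, "net": 2,
           "auth": 2, "log": 1, "cfg": 1, "util": 1, "io": 1}
    hot = modules_covering(log)
    print(hot, f"{len(hot) / len(log):.0%} of modules")
```

If the modules returned are a small fraction of the code base, that is where additional test design effort is likely to pay off first.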

About Software Testing

Software testing - a multi-step strategy

- series of test case design methods that help ensure effective error detection
- testing is not a safety net or replacement of software quality
  - SQA typically conducts independent audits of a product’s compliance to standards
  - SQA assures that the test team follows the policies & procedures
- testing is not 100% effective in uncovering defects in software
  - A really good testing effort will ship no more than 2.5 defects/KSLOC (thousand source lines of code)
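To put the 2.5 defects/KSLOC figure above in perspective, a short calculation helps. This is only arithmetic on that one number; the product size used in the example is a hypothetical value chosen for illustration.

```python
def shipped_defects(sloc: int, defects_per_ksloc: float = 2.5) -> float:
    """Estimate defects remaining at release for a product of `sloc` source lines."""
    if sloc < 0:
        raise ValueError("sloc must be non-negative")
    return sloc / 1000 * defects_per_ksloc


if __name__ == "__main__":
    # Hypothetical 200,000-line product tested to the 2.5 defects/KSLOC level.
    print(shipped_defects(200_000))
```

Even at that level of testing, a product of this assumed size would ship with defects numbering in the hundreds, which is the sense in which testing is not a safety net.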

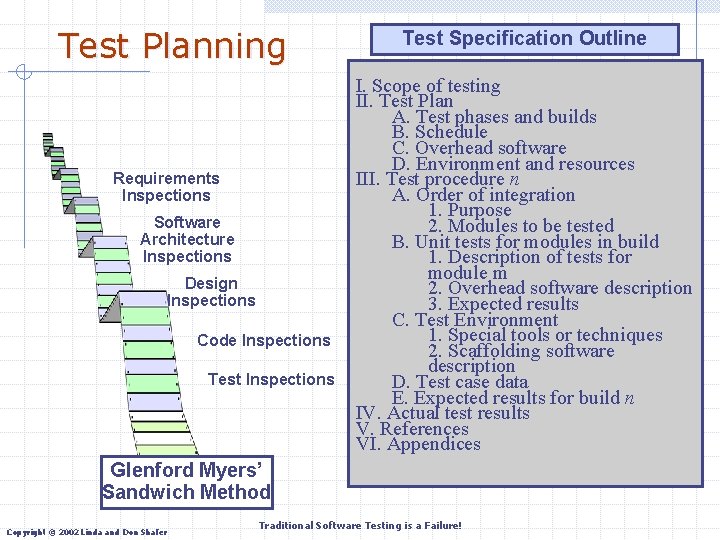

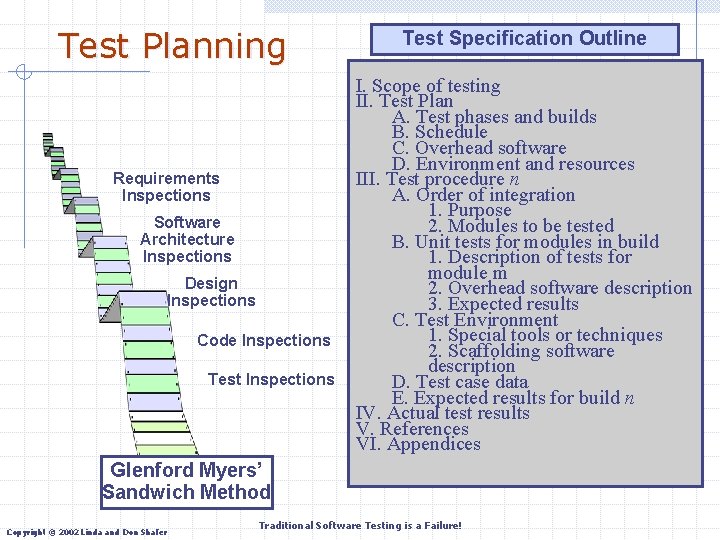

Test Planning

- Requirements Inspections
- Software Architecture Inspections
- Design Inspections
- Code Inspections

Test Specification Outline

- I. Scope of testing
- II. Test Plan
  - A. Test phases and builds
  - B. Schedule
  - C. Overhead software
  - D. Environment and resources
- III. Test procedure n
  - A. Order of integration
    - 1. Purpose
    - 2. Modules to be tested
  - B. Unit tests for modules in build
    - 1. Description of tests for module m
    - 2. Overhead software description
    - 3. Expected results
  - C. Test Environment
    - 1. Special tools or techniques
    - 2. Scaffolding software description
  - D. Test case data
  - E. Expected results for build n
- IV. Actual test results
- V. References
- VI. Appendices

Glenford Myers’ Sandwich Method

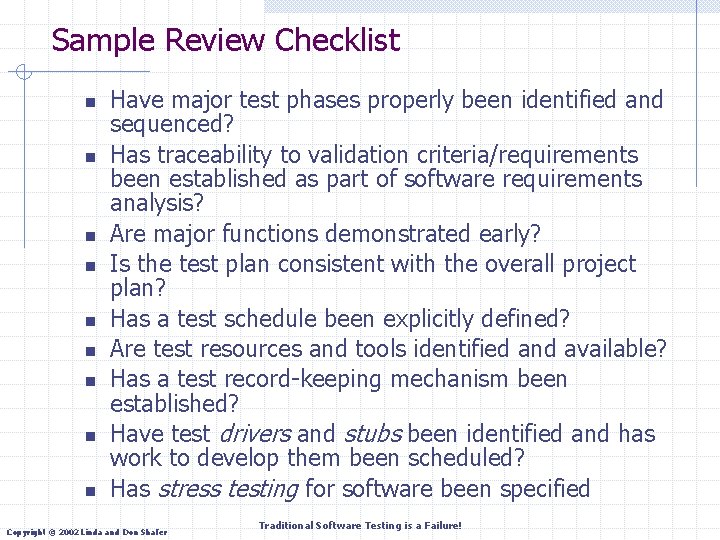

Sample Review Checklist

- Have major test phases properly been identified and sequenced?
- Has traceability to validation criteria/requirements been established as part of software requirements analysis?
- Are major functions demonstrated early?
- Is the test plan consistent with the overall project plan?
- Has a test schedule been explicitly defined?
- Are test resources and tools identified and available?
- Has a test record-keeping mechanism been established?
- Have test drivers and stubs been identified and has work to develop them been scheduled?
- Has stress testing for software been specified?

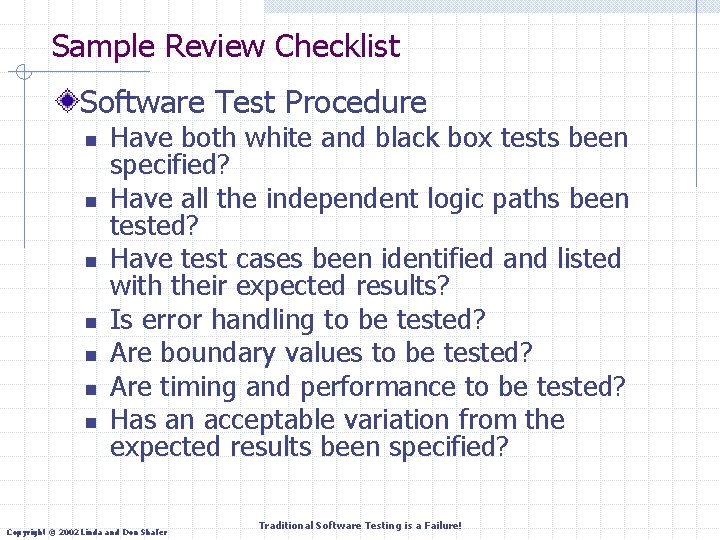

Sample Review Checklist: Software Test Procedure

- Have both white and black box tests been specified?
- Have all the independent logic paths been tested?
- Have test cases been identified and listed with their expected results?
- Is error handling to be tested?
- Are boundary values to be tested?
- Are timing and performance to be tested?
- Has an acceptable variation from the expected results been specified?
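The checklist item on boundary values can be made concrete. A common convention, assumed here rather than taken from the slides, is to test just below, at, and just above each end of a valid integer range. The helper name and the example range are hypothetical.

```python
def boundary_values(low: int, high: int) -> list[int]:
    """Return test inputs around both ends of the valid integer range [low, high]."""
    if low > high:
        raise ValueError("low must not exceed high")
    candidates = [low - 1, low, low + 1, high - 1, high, high + 1]
    # A narrow range produces duplicates; keep each input once, in order.
    return sorted(set(candidates))


if __name__ == "__main__":
    # Hypothetical field that accepts values from 1 to 100.
    for value in boundary_values(1, 100):
        expected = "accept" if 1 <= value <= 100 else "reject"
        print(value, expected)
```

Listing the expected outcome next to each input, as the loop does, also addresses the checklist item about recording expected results with every test case.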

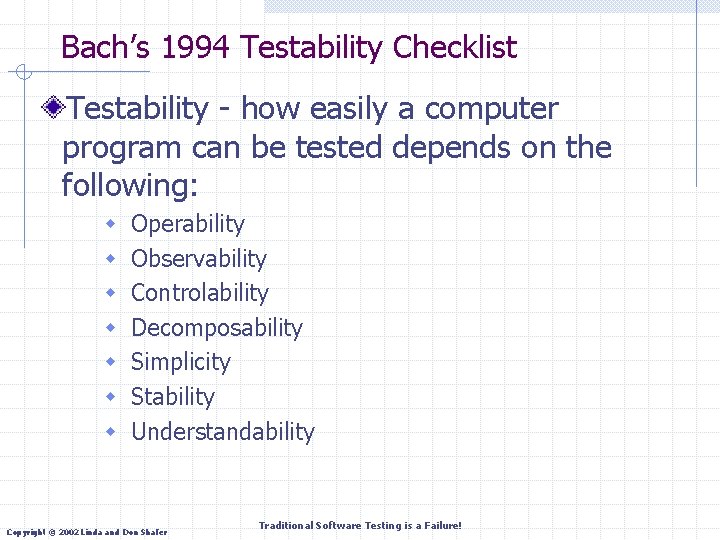

Bach’s 1994 Testability Checklist

Testability - how easily a computer program can be tested - depends on the following:

- Operability
- Observability
- Controllability
- Decomposability
- Simplicity
- Stability
- Understandability

Some of the Art of Test Planning

- Test Execution Time Estimation
- How Long to Get the Bugs Out?
- Realism, Not Optimism
- Rules of Thumb
- Lightweight Test Plan

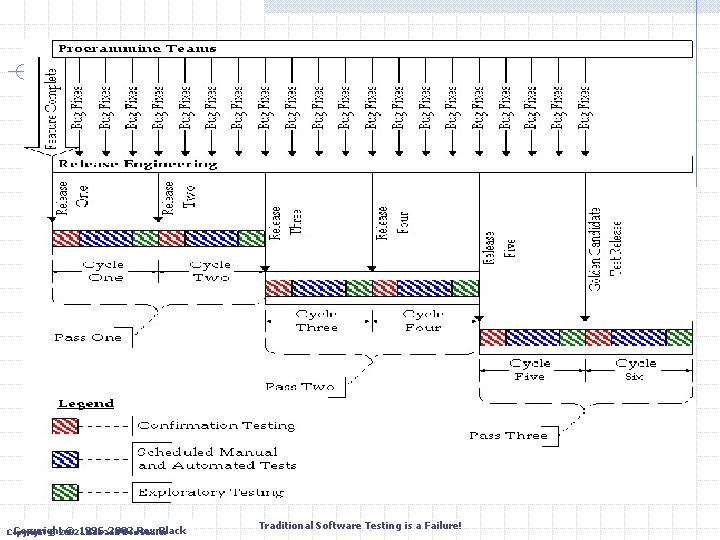

How Can We Predict Test Execution Time?

When will you be done executing the tests?

- Part of the answer is when you’ll have run all the planned tests once
  - Total estimated test time (sum for all planned tests)
  - Total person-hours of tester time available per week
  - Time spent testing by each tester
- The other part of the answer is when you’ll have found the important bugs and confirmed the fixes
- Estimate total bugs to find, bug find rate, bug fix rate, and closure period (time from find to close) for bugs
  - Historical data really helps
  - Formal defect removal models are even more accurate

Copyright © 1996-2002 Rex Black
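The first half of that answer is simple arithmetic on the three inputs listed: total estimated test time, tester time available, and the time each tester actually spends testing. A minimal sketch follows; the function name, the five-day week, and the sample figures are assumptions made for illustration.

```python
import math


def weeks_for_one_pass(test_hours: list[float], testers: int,
                       testing_hours_per_day: float, days_per_week: int = 5) -> int:
    """Weeks needed to run every planned test once, rounded up to whole weeks."""
    weekly_capacity = testers * testing_hours_per_day * days_per_week
    if weekly_capacity <= 0:
        raise ValueError("weekly capacity must be positive")
    return math.ceil(sum(test_hours) / weekly_capacity)


if __name__ == "__main__":
    # Hypothetical plan: 120 tests estimated at 2 hours each, 3 testers.
    plan = [2.0] * 120
    print(weeks_for_one_pass(plan, testers=3, testing_hours_per_day=4))
    print(weeks_for_one_pass(plan, testers=3, testing_hours_per_day=6))
```

The result covers a single pass only. Confirmation testing and repeated cycles, the second half of the answer, have to be added on top.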

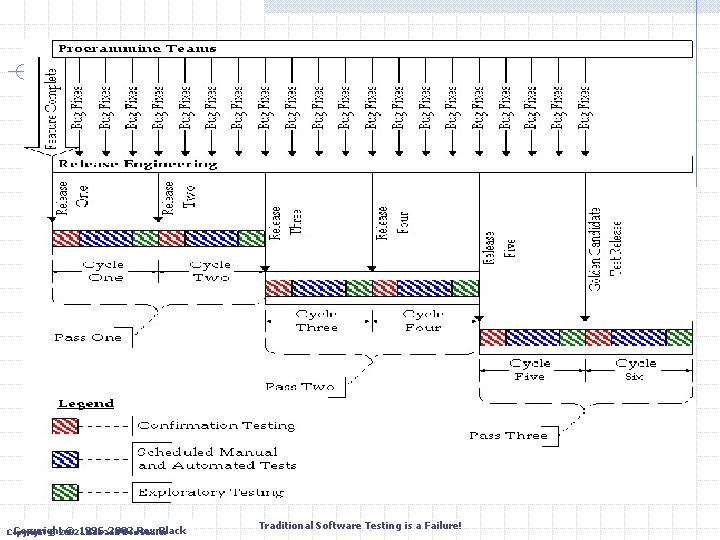

How Long to Run the Tests?

- It depends a lot on how you run tests
  - Scripted vs. exploratory
  - Regression testing strategy (repeat tests or just run once?)
- What I often do
  - Plan for consistent test cycles (tests run per test release) and passes (running each test once)
  - Realize that buggy deliverables and uninstallable builds slow test execution… and plan accordingly
- Try to understand the amount of confirmation testing, as a large number of bugs leads to lots of confirmation testing
- Check number of cycles with bug prediction
- Testers spend less than 100% of their time testing
  - E-mail, meetings, reviewing bugs and tests, etc.
  - I plan six hours of testing in a nine-to-ten-hour day (contractor)
  - Four hours of testing in an eight-hour day is common (employee)


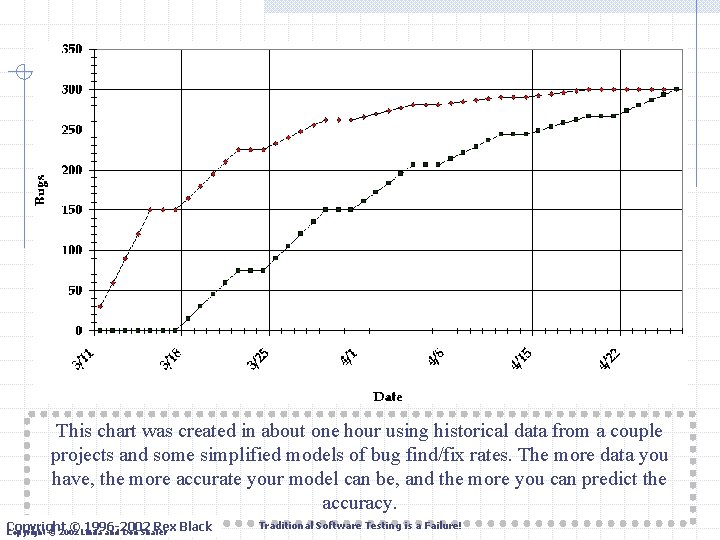

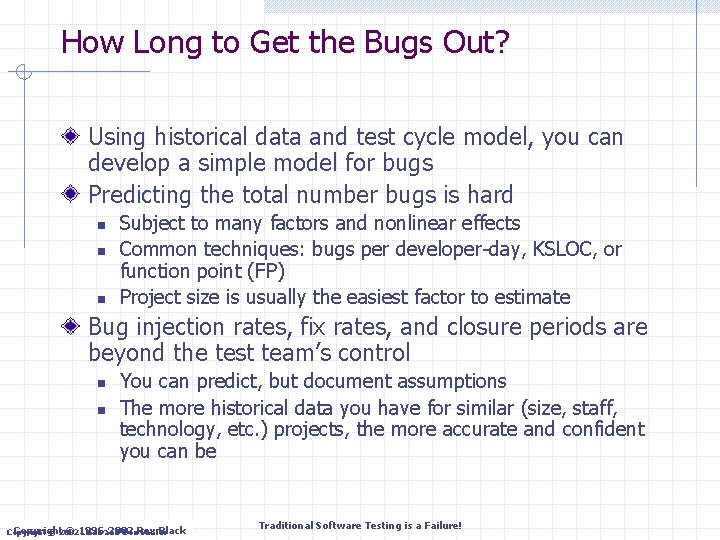

How Long to Get the Bugs Out?

- Using historical data and a test cycle model, you can develop a simple model for bugs
- Predicting the total number of bugs is hard
  - Subject to many factors and nonlinear effects
  - Common techniques: bugs per developer-day, KSLOC, or function point (FP)
  - Project size is usually the easiest factor to estimate
- Bug injection rates, fix rates, and closure periods are beyond the test team’s control
  - You can predict, but document assumptions
  - The more historical data you have for similar (size, staff, technology, etc.) projects, the more accurate and confident you can be
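One way to build such a simple model is a week-by-week simulation driven by the quantities named above: predicted total bugs, find rate, fix rate, and closure period. The sketch below is a deliberately simplified illustration, not the model used in the presentation. It assumes constant weekly rates, and every number in the example is hypothetical.

```python
from __future__ import annotations


def estimate_total_bugs(ksloc: float, bugs_per_ksloc: float) -> int:
    """Size-based prediction: bugs expected for a product of `ksloc` thousand lines."""
    return round(ksloc * bugs_per_ksloc)


def weeks_to_close_all(total_bugs: int, find_per_week: int,
                       fix_per_week: int, closure_weeks: int = 1) -> int:
    """Simulate week by week until every predicted bug is found and closed."""
    if min(find_per_week, fix_per_week) <= 0:
        raise ValueError("rates must be positive")
    found_by_week: list[int] = []  # cumulative bugs found at the end of each week
    found = closed = week = 0
    while closed < total_bugs:
        week += 1
        found = min(total_bugs, found + find_per_week)
        found_by_week.append(found)
        # Only bugs found at least `closure_weeks` ago can be closed this week.
        eligible_index = week - 1 - closure_weeks
        eligible = found_by_week[eligible_index] if eligible_index >= 0 else 0
        closed = min(eligible, closed + fix_per_week)
    return week


if __name__ == "__main__":
    # Assumptions to document: 20 KSLOC, 5 bugs/KSLOC, 25 found and 20 fixed per week.
    total = estimate_total_bugs(20, 5.0)
    print(total, weeks_to_close_all(total, find_per_week=25, fix_per_week=20))
```

Because the rates are outside the test team's control, the inputs belong in the plan as stated assumptions, to be replaced with historical data where it exists.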

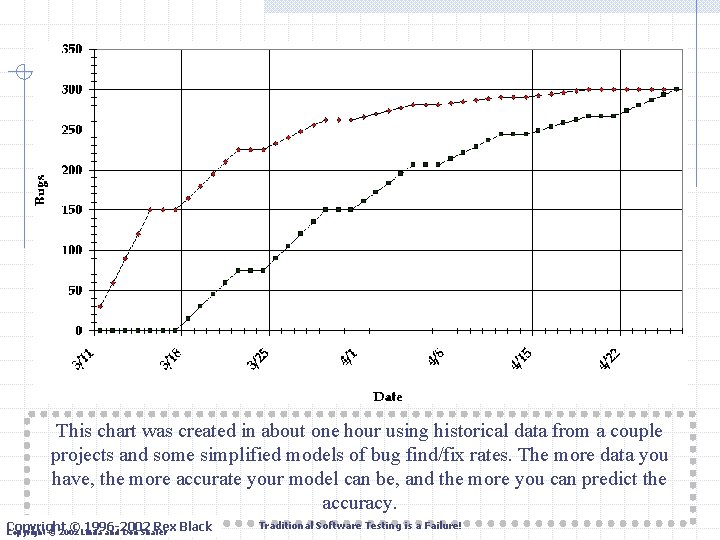

This chart was created in about one hour using historical data from a couple of projects and some simplified models of bug find/fix rates. The more data you have, the more accurate your model can be, and the better you can predict its accuracy.

Objective: Realism, Not Optimism

- Effort, dependencies, resources accurately forecast
- Equal likelihood for each task to be early as late
- Best-case and worst-case scenarios known
- Plan allows for corrections to early estimates
- Risks identified and mitigated, especially…
  - Gaps in skills
  - Risky technology
  - Logistical issues and other tight critical paths
  - Excessive optimism

“[Optimism is the] false assumption that… all will go well, i.e., that each task will take only as long as it ‘ought’ to take.” (Fred Brooks, The Mythical Man-Month, 1975)

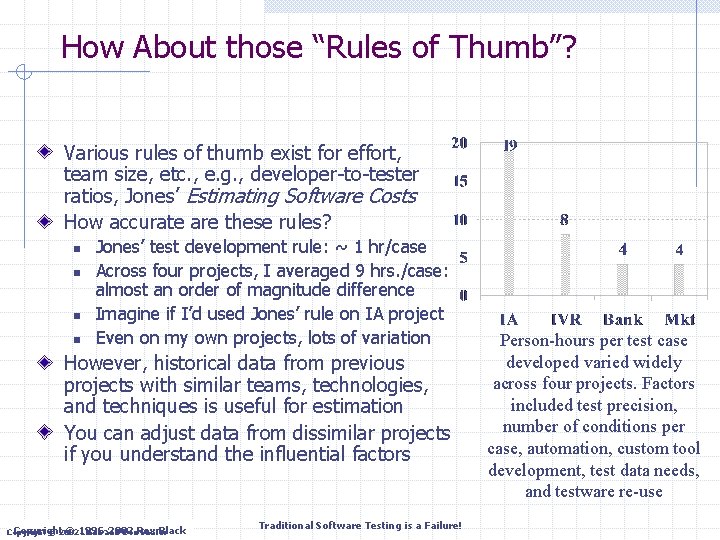

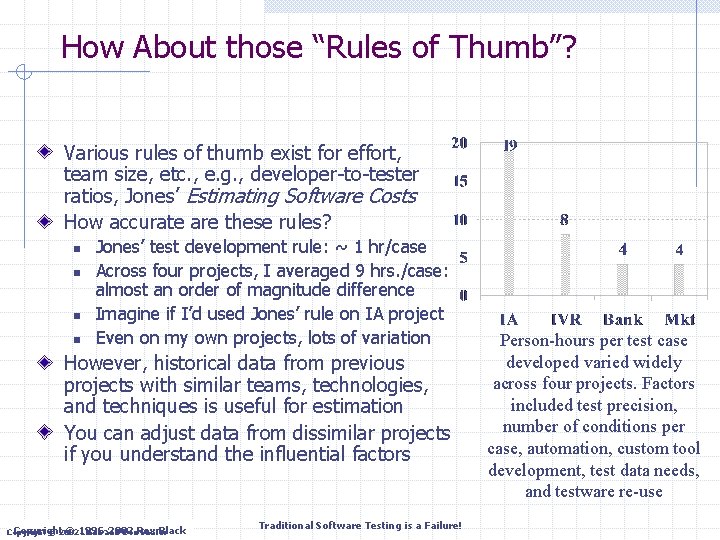

How About those “Rules of Thumb”?

- Various rules of thumb exist for effort, team size, etc., e.g., developer-to-tester ratios, Jones’ Estimating Software Costs
- How accurate are these rules?
  - Jones’ test development rule: ~1 hr/case
  - Across four projects, I averaged 9 hrs/case: almost an order of magnitude difference
- Imagine if I’d used Jones’ rule on IA project
- Even on my own projects, lots of variation
- However, historical data from previous projects with similar teams, technologies, and techniques is useful for estimation
- You can adjust data from dissimilar projects if you understand the influential factors

[Chart: person-hours per test case developed, by project] Person-hours per test case developed varied widely across four projects. Factors included test precision, number of conditions per case, automation, custom tool development, test data needs, and testware re-use.
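The gap between the 1 hr/case rule and the 9 hrs/case average is easier to appreciate as total effort. The sketch below compares the two for a planned number of test cases and adds a range based on per-project history. The four per-project rates are hypothetical placeholders chosen only so that they average 9; the slide does not give the individual values.

```python
import statistics


def effort_range(cases: int, historical_rates: list[float]) -> tuple[float, float, float]:
    """Return (low, mean, high) person-hours to develop `cases` test cases."""
    if not historical_rates:
        raise ValueError("need at least one historical rate")
    low = cases * min(historical_rates)
    mean = cases * statistics.mean(historical_rates)
    high = cases * max(historical_rates)
    return low, mean, high


if __name__ == "__main__":
    planned_cases = 300                # hypothetical project size
    rule_of_thumb = planned_cases * 1.0  # the ~1 hr/case rule
    history = [4.0, 7.0, 10.0, 15.0]   # hypothetical hrs/case, averaging 9
    print(rule_of_thumb, effort_range(planned_cases, history))
```

Reporting the low and high values alongside the mean keeps the variation between projects visible instead of hiding it behind a single number.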

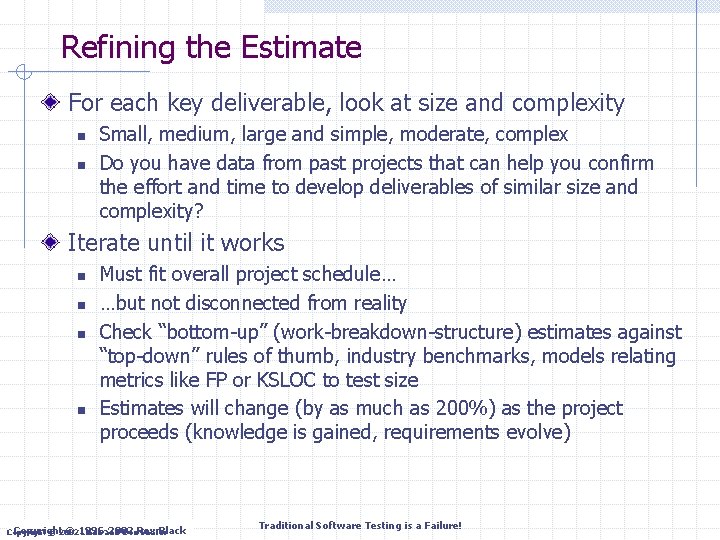

Refining the Estimate

- For each key deliverable, look at size and complexity
  - Small, medium, large and simple, moderate, complex
  - Do you have data from past projects that can help you confirm the effort and time to develop deliverables of similar size and complexity?
- Iterate until it works
  - Must fit overall project schedule…
  - …but not disconnected from reality
- Check “bottom-up” (work-breakdown-structure) estimates against “top-down” rules of thumb, industry benchmarks, models relating metrics like FP or KSLOC to test size
- Estimates will change (by as much as 200%) as the project proceeds (knowledge is gained, requirements evolve)

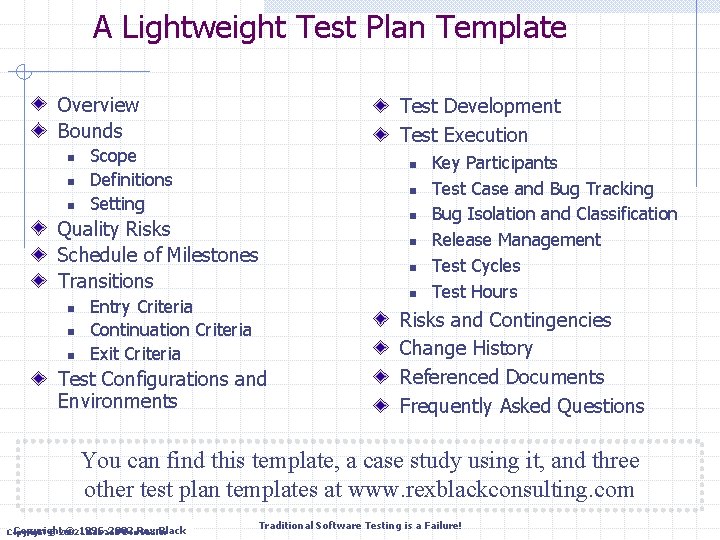

A Lightweight Test Plan Template

- Overview
- Bounds
  - Scope
  - Definitions
  - Setting
- Quality Risks
- Schedule of Milestones
- Transitions
  - Entry Criteria
  - Continuation Criteria
  - Exit Criteria
- Test Configurations and Environments
- Test Development
- Test Execution
  - Key Participants
  - Test Case and Bug Tracking
  - Bug Isolation and Classification
  - Release Management
  - Test Cycles
  - Test Hours
- Risks and Contingencies
- Change History
- Referenced Documents
- Frequently Asked Questions

You can find this template, a case study using it, and three other test plan templates at www.rexblackconsulting.com

How well did you test?

Questions?

Linda Shafer Bio: Linda Shafer has been working with the software industry since 1965, beginning with NASA in the early days of the space program. Her experience includes roles of programmer, designer, analyst, project leader, manager, and SQA/SQE. She has worked for large and small companies, including IBM, Control Data Corporation, Los Alamos National Laboratory, Computer Task Group, Sterling Information Group, and Motorola. She has also taught for and/or been in IT shops at The University of Houston, The University of Texas at Austin, The College of William and Mary, The Office of the Attorney General (Texas) and Motorola University. Ms. Shafer's publications include 25 refereed articles and three books. She currently works for the Software Quality Institute and coauthored an SQI Software Engineering Series book published by Prentice Hall in 2002: Quality Software Project Management. She is on the International Press Committee of the IEEE and an author in the Software Engineering Series books for IEEE. Her MBA is from the University of New Mexico.

Don Shafer Bio: Don Shafer is a co-founder, corporate director and Chief Technology Officer of Athens Group. Incorporated in June 1998, Athens Group is an employee-owned consulting firm, integrating technology strategy and software solutions. Prior to Athens Group, Shafer led groups developing and marketing hardware and software products for Motorola, AMD and Crystal Semiconductor. He was responsible for managing a $129 million-a-year PC product group that produced award-winning audio components. From the development of low-level software drivers in yet-to-be-released Microsoft operating systems to the selection and monitoring of Taiwan semiconductor fabrication facilities, Shafer has led key product and process efforts. In the past three years he has led Athens engineers in developing industry standard semiconductor fab equipment software interfaces, definition of 300 mm equipment integration tools, advanced process control state machine data collectors and embedded system control software agents. His latest patents are on joint work done with Agilent Technologies in state-based machine control. He earned a BS degree from the USAF Academy and an MBA from the University of Denver. Shafer’s work experience includes positions held at Boeing and Los Alamos National Laboratories. He is currently an adjunct professor in graduate software engineering at Southwest Texas. His faculty web site is http://www.cs.swt.edu/~donshafer/. With two other colleagues in 2002, he wrote Quality Software Project Management for Prentice-Hall, now used in both industry and academia. Currently he is working on an SCM book for the IEEE Software Engineering Series.