Trading Flash Translation Layer For Performance and Lifetime

- Slides: 33

By 王江涛

Outline
1. Introduction
2. Flash Translation Layer
3. Address Mapping
4. Wear Leveling
5. Conclusion

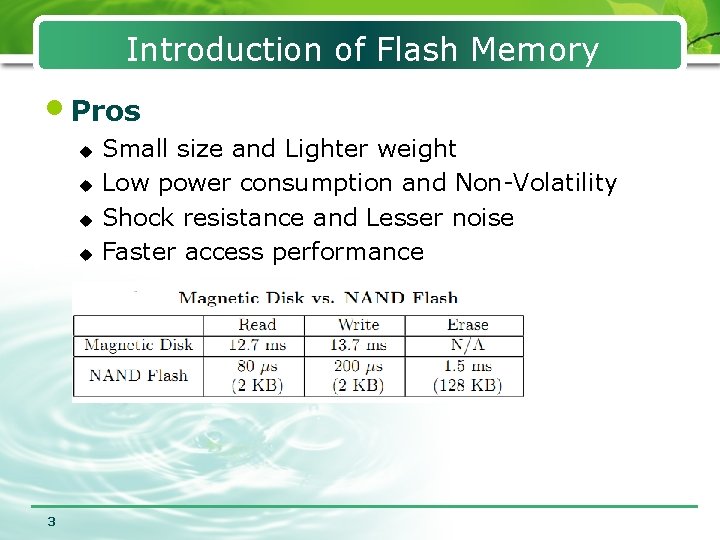

Introduction to Flash Memory
• Pros
  - Small size and light weight
  - Low power consumption and non-volatility
  - Shock resistance and low noise
  - Faster access than magnetic disks

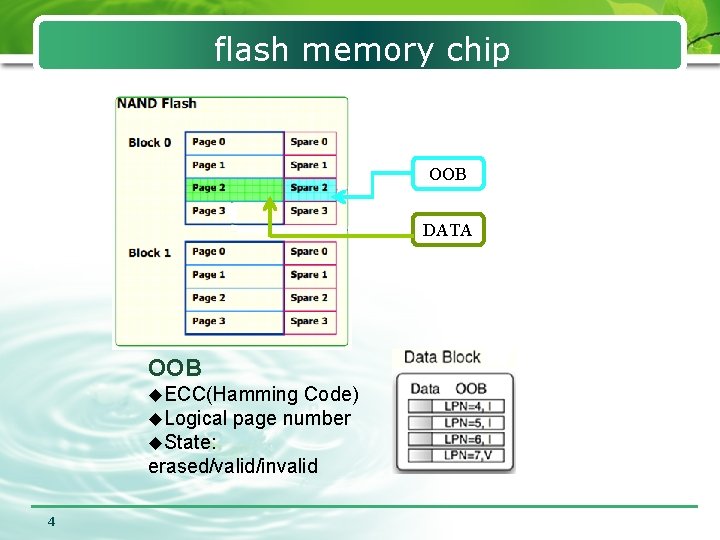

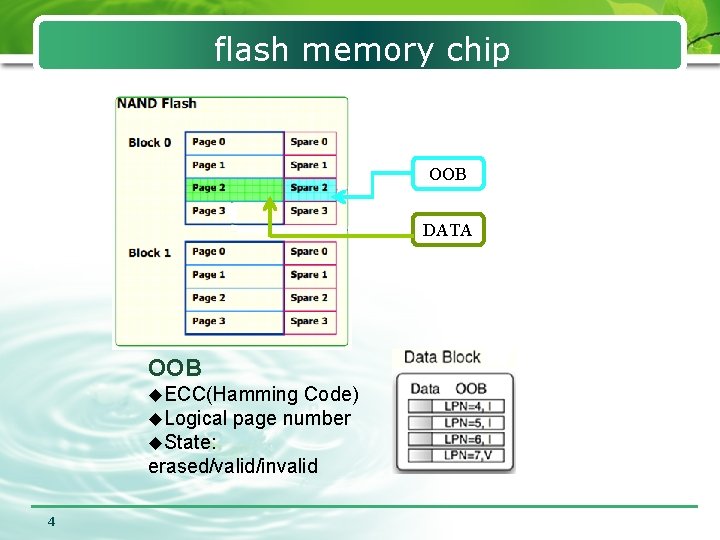

Flash memory chip (figure): each page consists of a DATA area and an OOB (out-of-band) area. The OOB area stores:
  - ECC (Hamming code)
  - Logical page number
  - State: erased / valid / invalid
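To make the page layout concrete, here is a minimal Python sketch of one page with its DATA area and OOB metadata. The class and field names are hypothetical, and the ECC field is only a placeholder: a single XOR parity byte stands in for a real Hamming code.

```python
from dataclasses import dataclass, field
from enum import Enum
from functools import reduce
from typing import Optional


class PageState(Enum):
    ERASED = "erased"    # freshly erased, may be programmed
    VALID = "valid"      # holds the current copy of a logical page
    INVALID = "invalid"  # holds a stale copy, reclaimable by garbage collection


@dataclass
class OOB:
    lpn: Optional[int] = None            # logical page number stored in this page
    ecc: int = 0                         # placeholder XOR parity, NOT a real Hamming code
    state: PageState = PageState.ERASED


@dataclass
class FlashPage:
    data: bytes = b""
    oob: OOB = field(default_factory=OOB)

    def program(self, lpn: int, data: bytes) -> None:
        # A page can only be programmed once after it has been erased.
        if self.oob.state is not PageState.ERASED:
            raise ValueError("page must be erased before it is programmed")
        parity = reduce(lambda a, b: a ^ b, data, 0)
        self.data = data
        self.oob = OOB(lpn=lpn, ecc=parity, state=PageState.VALID)
```

Because the logical page number is kept in the OOB area, the mapping can in principle be rebuilt by scanning the OOB areas after a crash.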

Introduction to Flash Memory
• Cons
  - Write granularity is a page
  - Erase before write, and erase granularity is a block
  - Pages within a block must be written sequentially
  - Limited number of erase/write cycles
• Out-of-place update
  - When a page is to be overwritten, a new free (erased) page is allocated and the new data is written there
  - A software layer called the FTL records the change in the page's physical location
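The out-of-place update above can be sketched as a toy page-mapped flash in Python. All names are hypothetical; pages are allocated strictly in order (which also respects sequential programming within a block), and garbage collection is deliberately omitted.

```python
from typing import Any, Dict, List, Optional


class OutOfPlaceFlash:
    """Toy flash with out-of-place updates (hypothetical names, no GC)."""

    def __init__(self, num_pages: int) -> None:
        self.state: List[str] = ["erased"] * num_pages  # per-physical-page state
        self.data: List[Optional[Any]] = [None] * num_pages
        self.l2p: Dict[int, int] = {}                    # FTL mapping: LPN -> PPN
        self.next_free = 0                               # next erased page, in order

    def write(self, lpn: int, value: Any) -> int:
        if self.next_free >= len(self.state):
            raise RuntimeError("no free page left; garbage collection needed")
        ppn = self.next_free
        self.next_free += 1
        old = self.l2p.get(lpn)
        if old is not None:
            self.state[old] = "invalid"   # the old copy becomes stale; it is not erased now
        self.data[ppn] = value
        self.state[ppn] = "valid"
        self.l2p[lpn] = ppn               # the FTL records the new physical location
        return ppn

    def read(self, lpn: int) -> Any:
        return self.data[self.l2p[lpn]]
```

Overwriting logical page 0 twice leaves one invalid and one valid physical page; reclaiming the invalid one is the job of garbage collection.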

Part 2: Flash Translation Layer

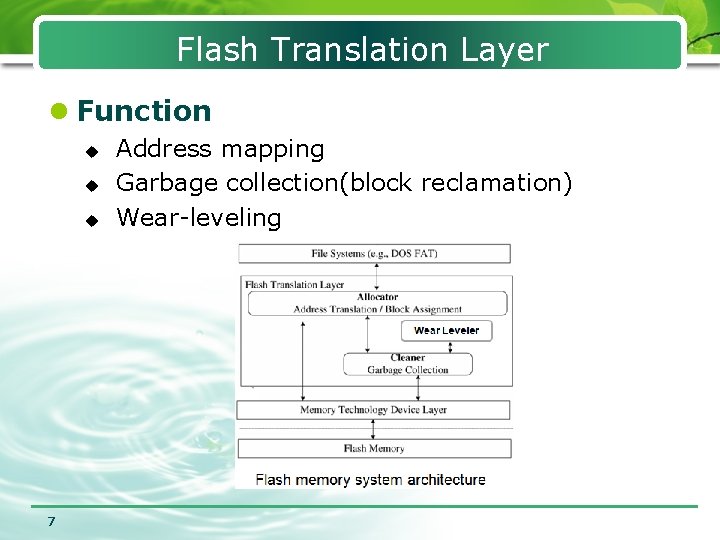

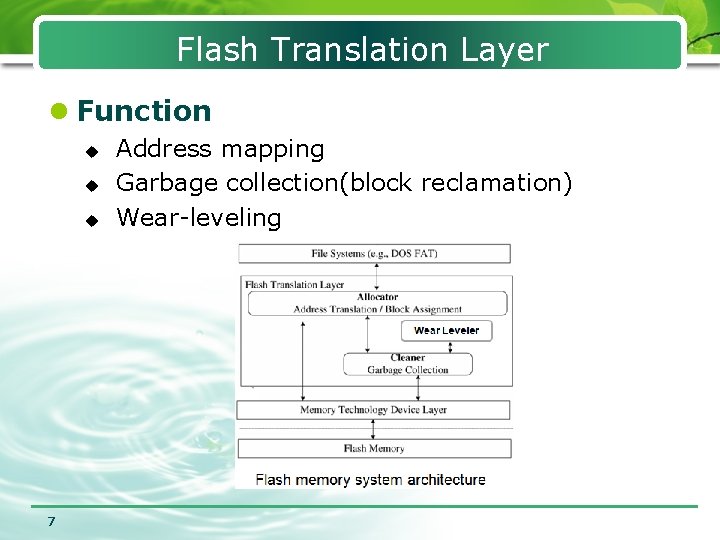

Flash Translation Layer
• Functions
  - Address mapping
  - Garbage collection (block reclamation)
  - Wear leveling

Part 3: Address Mapping

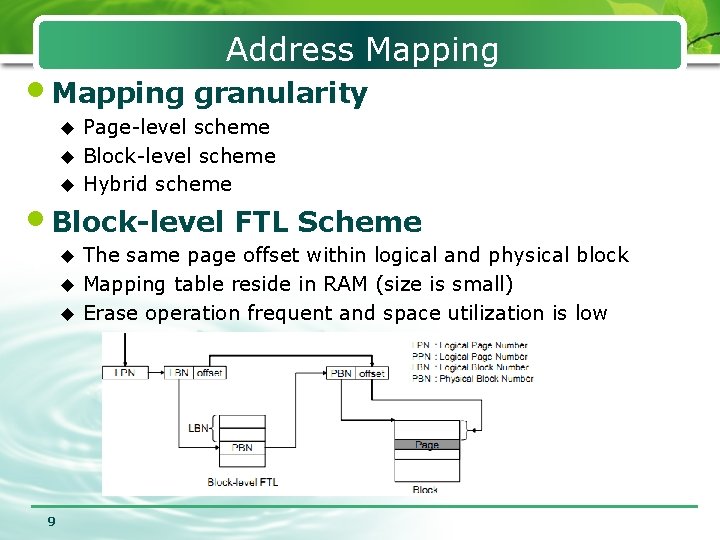

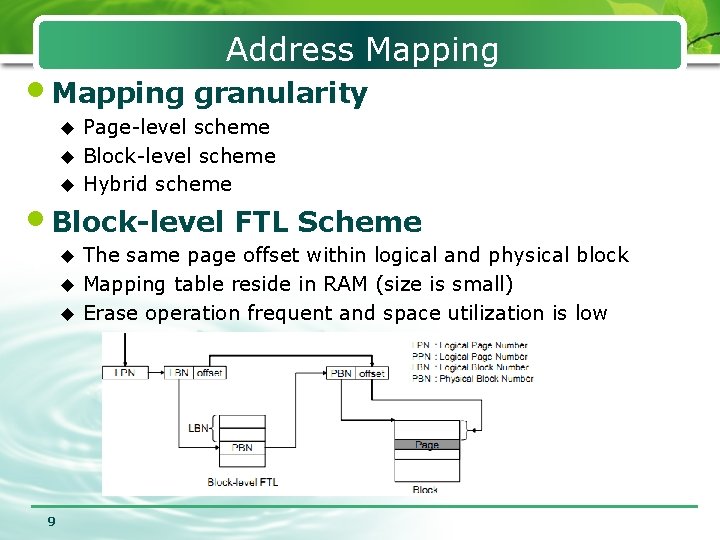

Address Mapping
• Mapping granularity
  - Page-level scheme
  - Block-level scheme
  - Hybrid scheme
• Block-level FTL scheme
  - A page keeps the same page offset within the logical block and the physical block
  - The mapping table resides in RAM (its size is small)
  - Erase operations are frequent and space utilization is low
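Block-level translation keeps only one entry per block, so the page offset has to be preserved. A minimal Python sketch, assuming a dict as the in-RAM block map and a hypothetical function name:

```python
from typing import Dict


def block_level_translate(lpn: int, block_map: Dict[int, int], pages_per_block: int) -> int:
    """Translate a logical page number with a block-level map (LBN -> PBN)."""
    lbn, offset = divmod(lpn, pages_per_block)  # logical block number and page offset
    pbn = block_map[lbn]                        # one RAM entry per block
    return pbn * pages_per_block + offset       # same offset inside the physical block


# Example with 4 pages per block: LPN 6 is page 2 of logical block 1 -> PBN 2 -> PPN 10.
ppn = block_level_translate(6, {0: 5, 1: 2}, 4)
```

Because the offset is fixed, overwriting even one page forces the whole block to be copied to a new physical block and the old one erased, which is where the frequent erases come from.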

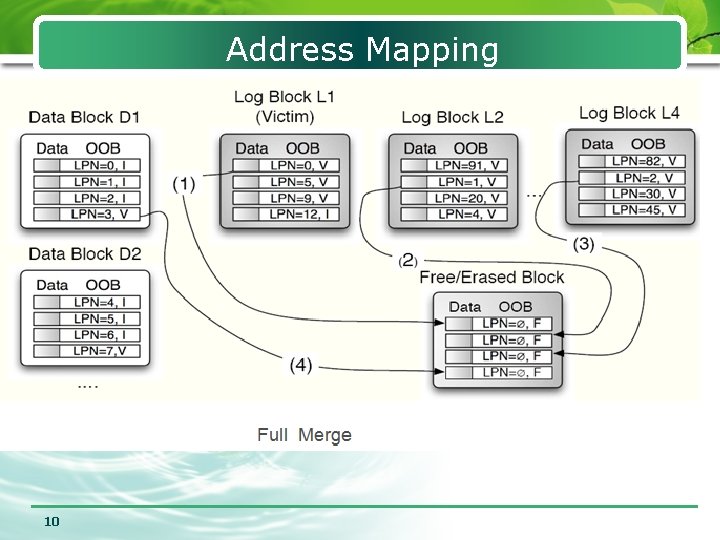

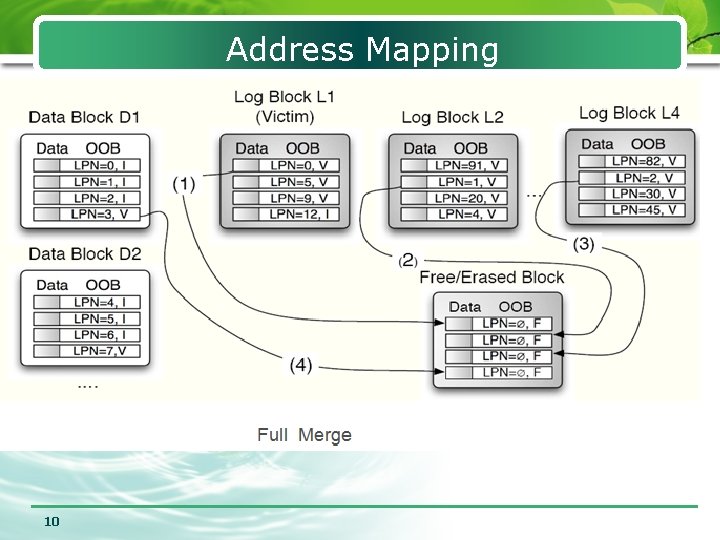

Address Mapping
• Hybrid FTL scheme
  - DBA (data block area): stores user data (block-level mapping)
  - LBA (log block area): stores overwriting data (page-level mapping)
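A read in a hybrid FTL first checks the page-level map of the log blocks and otherwise falls back to the block-level map of the data blocks. The sketch below assumes 4 pages per block and plain dicts for both maps; the names are hypothetical, and log-block merging is not shown.

```python
from typing import Dict

PAGES_PER_BLOCK = 4  # assumed geometry for illustration


def hybrid_translate(lpn: int,
                     data_block_map: Dict[int, int],
                     log_page_map: Dict[int, int]) -> int:
    """Return the PPN for an LPN under a hybrid (log block) scheme."""
    # Overwritten pages live in log blocks and are tracked page by page.
    if lpn in log_page_map:
        return log_page_map[lpn]
    # Otherwise use the block-level map of the data block area.
    lbn, offset = divmod(lpn, PAGES_PER_BLOCK)
    return data_block_map[lbn] * PAGES_PER_BLOCK + offset
```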

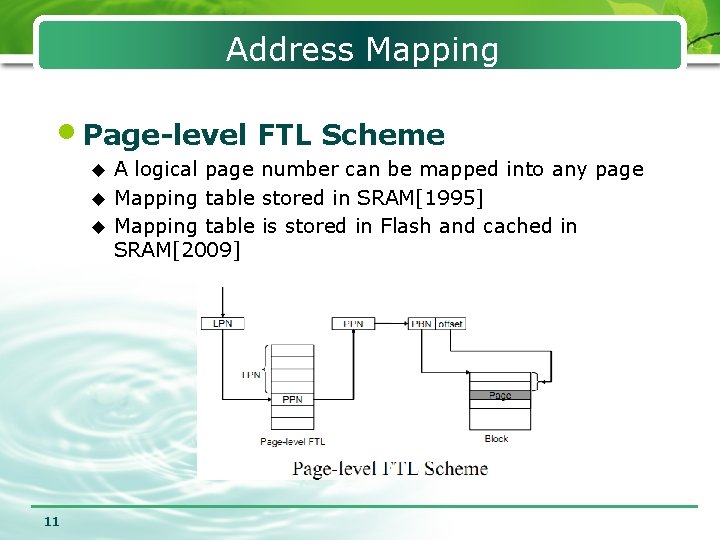

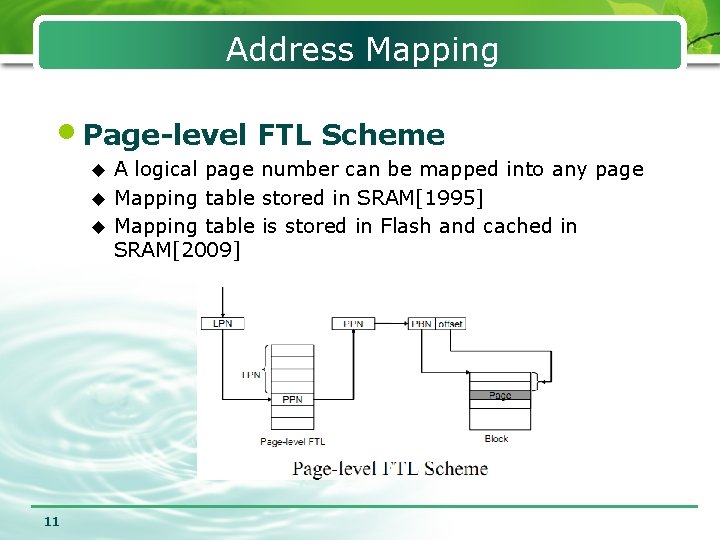

Address Mapping
• Page-level FTL scheme
  - A logical page number can be mapped to any physical page
  - The mapping table is stored in SRAM [1995]
  - The mapping table is stored in flash and cached in SRAM [2009]
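A quick size calculation shows why the page-level table moved from SRAM into flash. The parameters are assumptions chosen for illustration (a 32 GiB device, 4 KiB pages, 64 pages per block, 4-byte entries), not figures from the slides.

```python
from typing import Tuple


def mapping_table_bytes(capacity_bytes: int, page_size: int, pages_per_block: int,
                        entry_bytes: int = 4) -> Tuple[int, int]:
    """Return (page-level, block-level) mapping table sizes in bytes."""
    num_pages = capacity_bytes // page_size
    num_blocks = num_pages // pages_per_block
    return num_pages * entry_bytes, num_blocks * entry_bytes


page_tbl, block_tbl = mapping_table_bytes(32 * 2**30, 4 * 2**10, 64)
# page_tbl == 32 MiB, block_tbl == 512 KiB
```

The page-level table is larger by exactly the number of pages per block, which is why later designs keep the full table in flash and cache only part of it in SRAM.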

Page-level FTL Scheme
• Related work
  - DFTL: A Flash Translation Layer Employing Demand-based Selective Caching of Page-level Address Mapping (ASPLOS 2009)
  - CFTL: A Workload-Aware Adaptive Hybrid Flash Translation Layer with an Efficient Caching Strategy (MASCOTS 2011)
  - LazyFTL: A Page-level Flash Translation Layer Optimized for NAND Flash Memory (SIGMOD 2011)

DFTL
• Divides flash memory into an MBA and a DBA
  - MBA (mapping block area): stores the full mapping table on flash
  - DBA (data block area): stores user data
• Uses page-level mapping
• Dynamically swaps page-level mapping entries into and out of SRAM

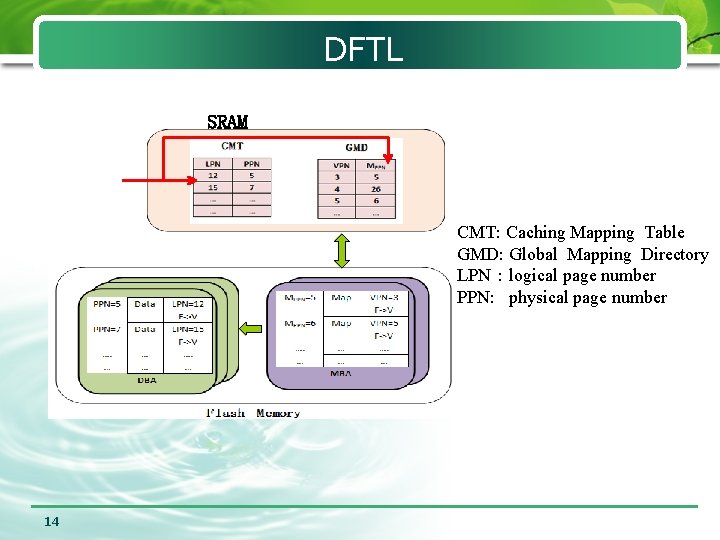

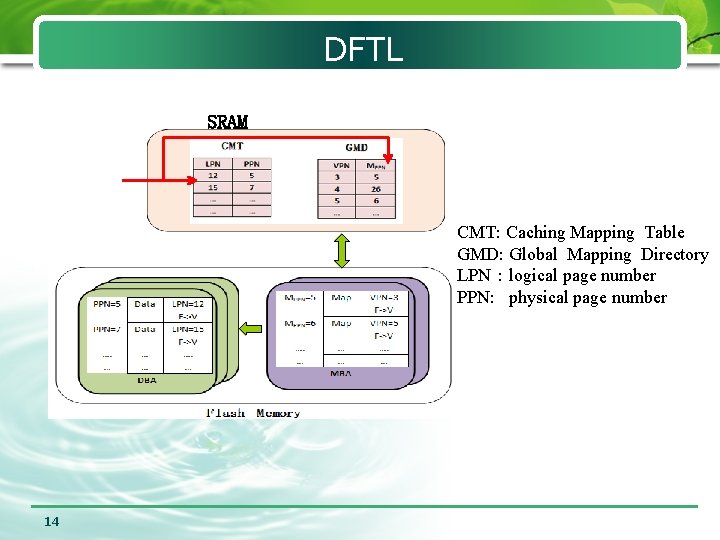

DFTL (figure: mapping structures in SRAM)
• CMT: Cached Mapping Table
• GMD: Global Mapping Directory
• LPN: logical page number
• PPN: physical page number
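The demand-based caching can be sketched as an LRU cache (the CMT) in front of mapping pages stored in flash, located through a directory (the GMD). This is a simplified sketch, not DFTL's implementation: the class name and the 512-entries-per-mapping-page geometry are assumptions, flash is simulated by a dict keyed by mapping-page number (standing in for the GMD lookup), and mapping pages are updated in place here, whereas real flash would rewrite them out of place.

```python
from collections import OrderedDict
from typing import Dict, List

ENTRIES_PER_TPAGE = 512  # assumed: mapping entries per on-flash mapping page


class DemandCachedMap:
    """Toy DFTL-style cache: CMT in SRAM, full table in flash mapping pages."""

    def __init__(self, cmt_capacity: int, flash_tpages: Dict[int, List[int]]) -> None:
        self.capacity = cmt_capacity
        self.cmt: OrderedDict = OrderedDict()  # LPN -> (PPN, dirty), LRU order
        self.flash_tpages = flash_tpages       # mapping-page number -> PPN list (simulated)
        self.tpage_reads = 0
        self.tpage_writes = 0

    def _evict_if_full(self) -> None:
        if len(self.cmt) < self.capacity:
            return
        lpn, (ppn, dirty) = self.cmt.popitem(last=False)  # least recently used entry
        if dirty:                                         # write back only modified entries
            tpn, off = divmod(lpn, ENTRIES_PER_TPAGE)
            self.flash_tpages[tpn][off] = ppn
            self.tpage_writes += 1

    def lookup(self, lpn: int) -> int:
        if lpn in self.cmt:
            self.cmt.move_to_end(lpn)                     # hit: refresh LRU position
            return self.cmt[lpn][0]
        self._evict_if_full()
        tpn, off = divmod(lpn, ENTRIES_PER_TPAGE)         # directory locates the mapping page
        self.tpage_reads += 1                             # a miss costs an extra flash read
        ppn = self.flash_tpages[tpn][off]
        self.cmt[lpn] = (ppn, False)
        return ppn

    def update(self, lpn: int, new_ppn: int) -> None:
        if lpn not in self.cmt:
            self._evict_if_full()
        self.cmt[lpn] = (new_ppn, True)                   # dirty until written back
        self.cmt.move_to_end(lpn)
```

The extra mapping-page read on every CMT miss is the source of the read overhead listed among DFTL's cons.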

DFTL
• Pros
  - Pages are programmed sequentially within a block
  - Avoids full merges
• Cons
  - Mapping pages are updated frequently during garbage collection
  - Poor reliability of mapping information
  - High read cost (a CMT miss requires an extra flash read of a mapping page)

Workload-Aware Adaptive FTL (CFTL)
• Divides flash memory into an MBA and a DBA
  - MBA: stores the full mapping table on flash
  - DBA: stores user data
• Uses both page-level and block-level mapping
  - Dynamically swaps page-level mapping entries into and out of SRAM
  - Converts between the two schemes based on data access patterns
    - Read-intensive data: block-level mapping
    - Write-intensive data: page-level mapping
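The conversion rule above (read-intensive data to block-level mapping, write-intensive data to page-level mapping) can be illustrated with a simple per-region policy. This is a hypothetical sketch: the counters, the 0.5 write-ratio threshold, and the function name are assumptions, and CFTL's actual access-pattern detection is not reproduced here.

```python
from dataclasses import dataclass


@dataclass
class RegionStats:
    reads: int = 0
    writes: int = 0


def choose_mapping(stats: RegionStats, write_ratio_threshold: float = 0.5) -> str:
    """Pick a mapping scheme for a logical region (hypothetical policy)."""
    total = stats.reads + stats.writes
    if total == 0:
        return "block"   # untouched data: one compact block-level entry is enough
    if stats.writes / total > write_ratio_threshold:
        return "page"    # write-intensive: flexible page-level mapping avoids merges
    return "block"       # read-intensive: block-level mapping saves SRAM and lookups
```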

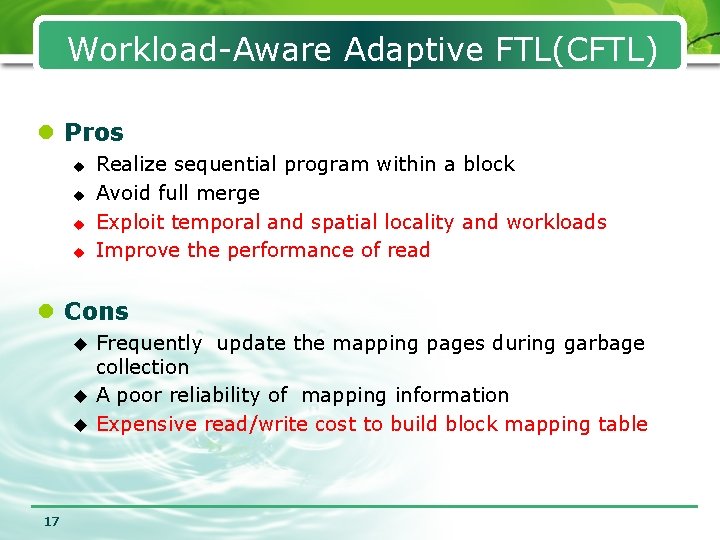

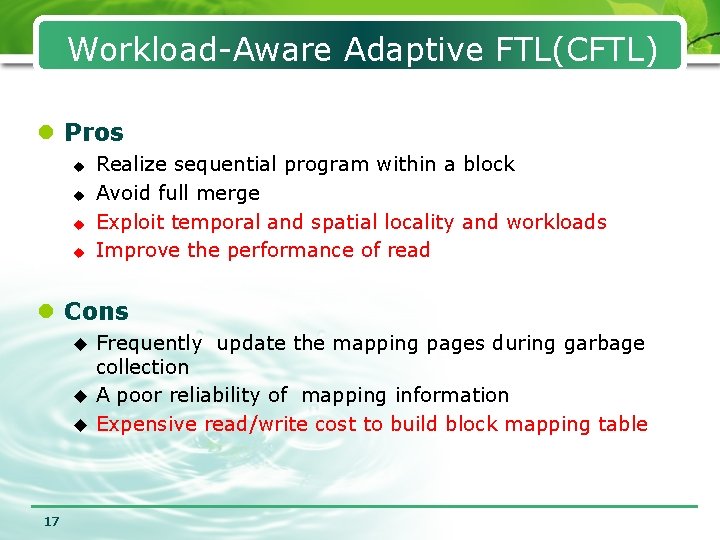

Workload-Aware Adaptive FTL (CFTL)
• Pros
  - Pages are programmed sequentially within a block
  - Avoids full merges
  - Exploits temporal and spatial locality in workloads
  - Improves read performance
• Cons
  - Mapping pages are updated frequently during garbage collection
  - Poor reliability of mapping information
  - Building the block-level mapping table has expensive read/write costs

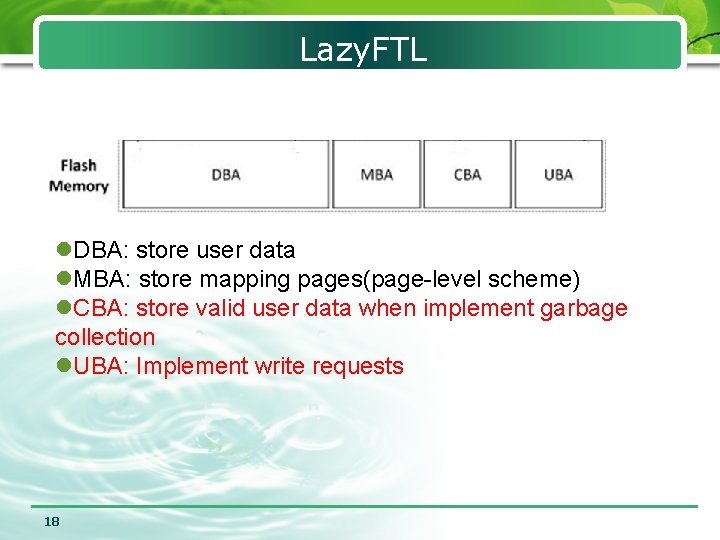

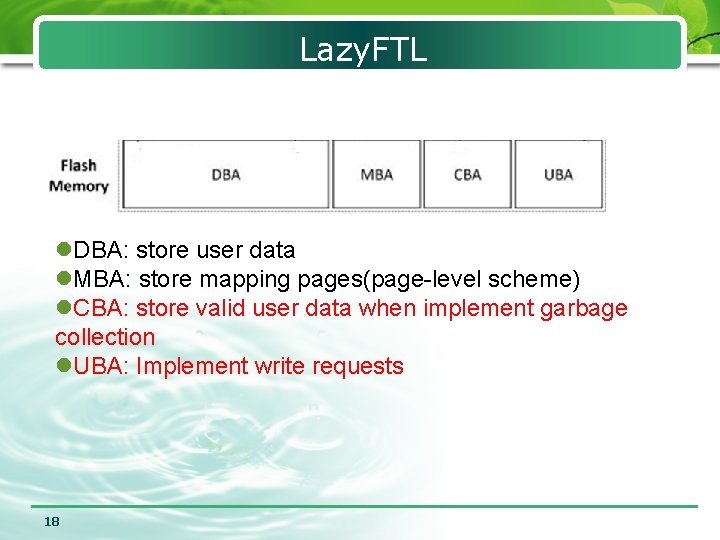

LazyFTL
• DBA (data block area): stores user data
• MBA (mapping block area): stores mapping pages (page-level scheme)
• CBA (cold block area): stores valid user data moved during garbage collection
• UBA (update block area): serves write requests

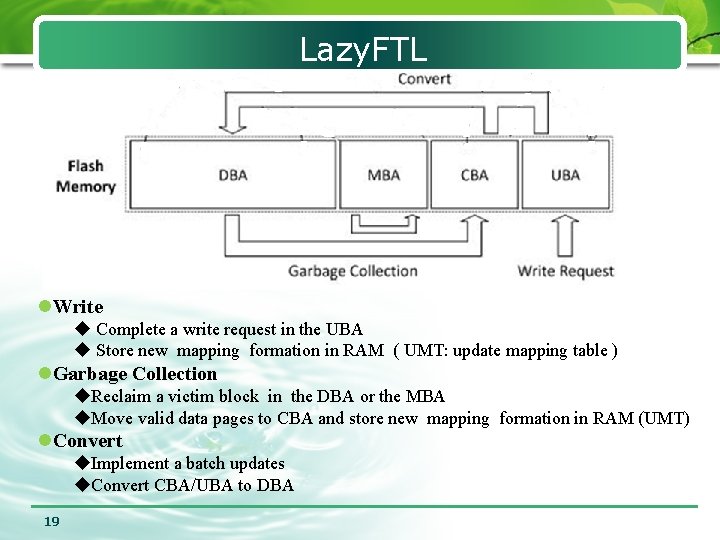

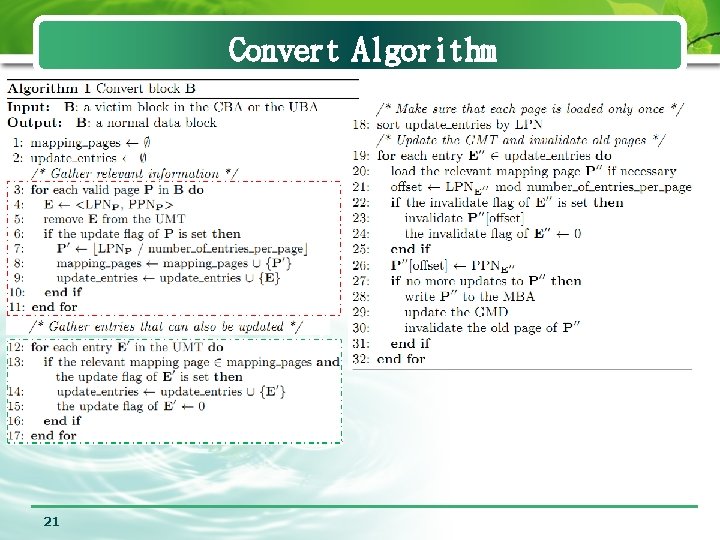

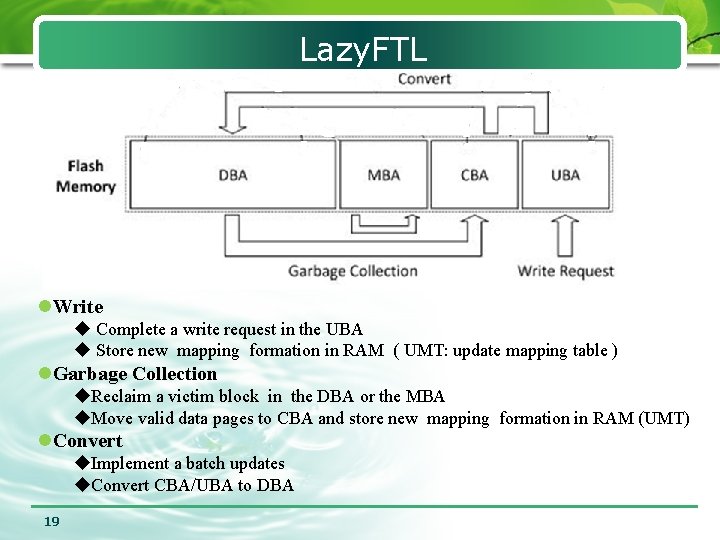

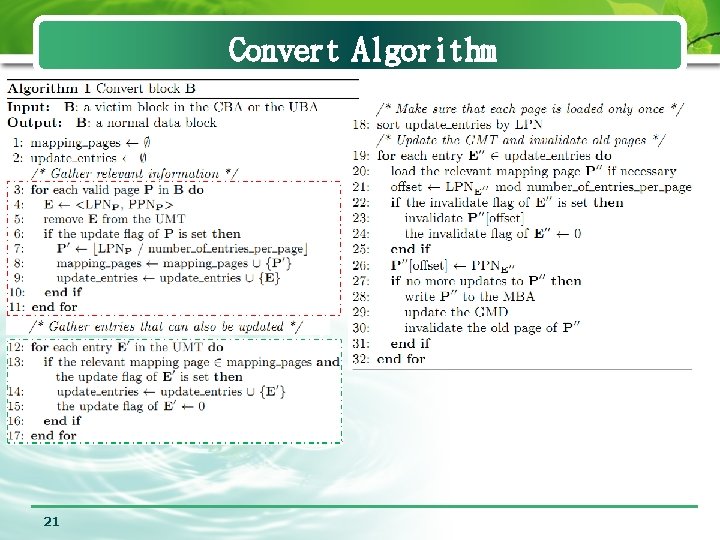

LazyFTL
• Write
  - Completes a write request in the UBA
  - Stores the new mapping information in RAM (UMT: update mapping table)
• Garbage collection
  - Reclaims a victim block in the DBA or the MBA
  - Moves valid data pages to the CBA and stores the new mapping information in RAM (UMT)
• Convert
  - Performs a batch update of the mapping pages
  - Converts CBA/UBA blocks into DBA blocks
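The write and convert steps can be sketched as follows: writes land in the UBA and only update the in-RAM UMT, and a convert groups the pending UMT entries by mapping page so that each affected mapping page is rewritten once. This is an illustrative sketch under stated assumptions (hypothetical names, a tiny 4-entry mapping page, flash simulated by dicts); garbage collection into the CBA is omitted.

```python
from collections import defaultdict
from typing import Dict

ENTRIES_PER_MAPPING_PAGE = 4  # assumed, tiny for illustration


class LazyMapSketch:
    def __init__(self) -> None:
        self.mapping_pages: Dict[int, Dict[int, int]] = defaultdict(dict)  # simulated MBA
        self.umt: Dict[int, int] = {}   # UMT in RAM: LPN -> new PPN
        self.uba_next = 1000            # hypothetical next free page in the UBA
        self.mapping_page_writes = 0

    def write(self, lpn: int) -> int:
        ppn = self.uba_next             # complete the write in the UBA
        self.uba_next += 1
        self.umt[lpn] = ppn             # record the new mapping only in RAM
        return ppn

    def lookup(self, lpn: int) -> int:
        if lpn in self.umt:             # the newest mapping is in the UMT
            return self.umt[lpn]
        return self.mapping_pages[lpn // ENTRIES_PER_MAPPING_PAGE][lpn]

    def convert(self) -> None:
        # Batch update: group pending entries by the mapping page they belong to.
        groups: Dict[int, Dict[int, int]] = defaultdict(dict)
        for lpn, ppn in self.umt.items():
            groups[lpn // ENTRIES_PER_MAPPING_PAGE][lpn] = ppn
        for mpn, entries in groups.items():
            self.mapping_pages[mpn].update(entries)
            self.mapping_page_writes += 1   # one rewrite per affected mapping page
        self.umt.clear()                    # the UBA blocks can now be treated as DBA blocks
```

Writing LPNs 0, 1, 2, 3 and 5 and then converting costs two mapping-page writes instead of five, which is the saving the update buffer is meant to provide.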

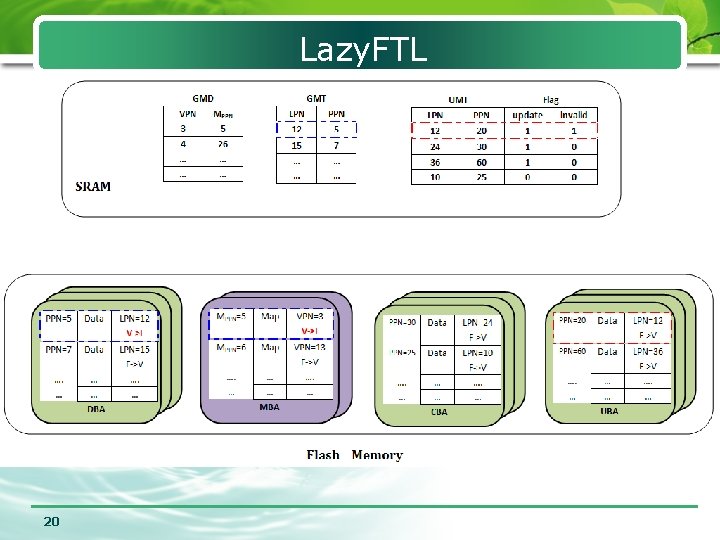

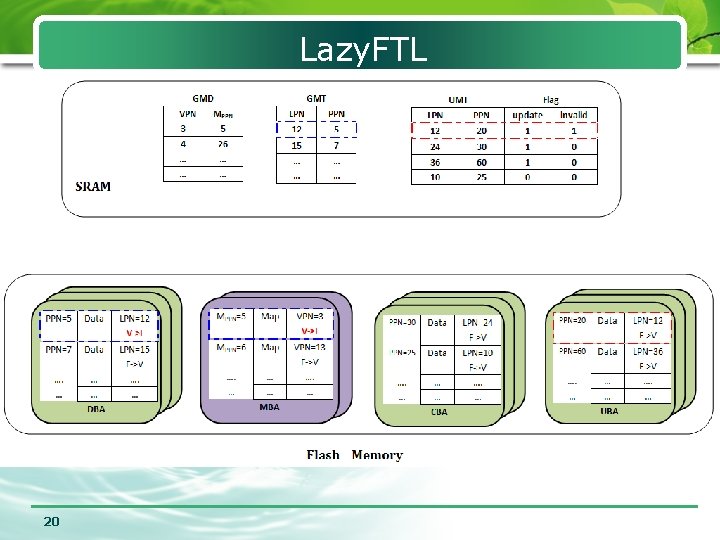

LazyFTL

Convert Algorithm

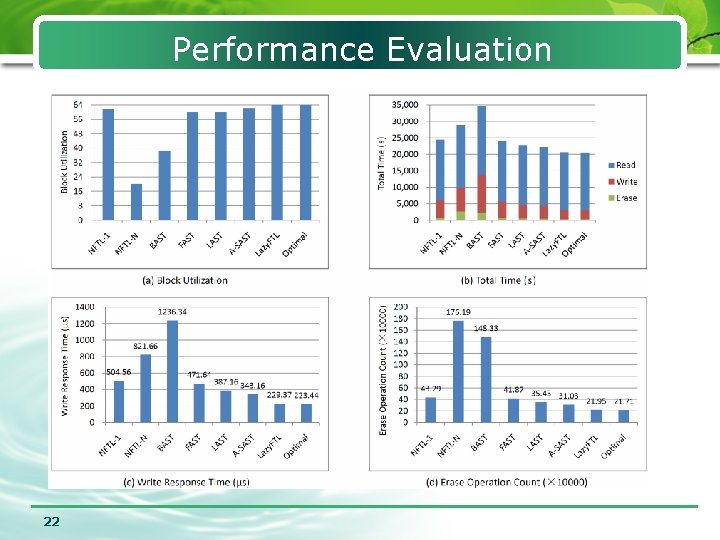

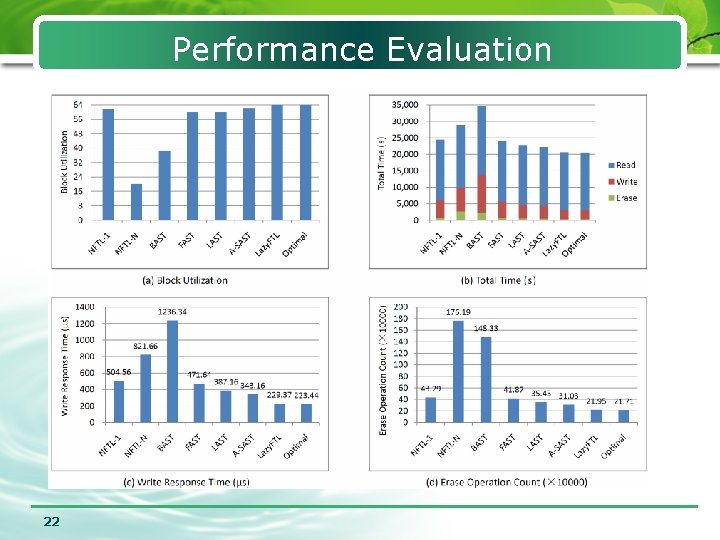

Performance Evaluation

LazyFTL
• Pros
  - Uses an update buffer to reduce frequent updates of the mapping pages
  - Achieves consistency and reliability of mapping information
  - Improves write performance by reducing erase operations
• Cons
  - Increases the cost of read operations
  - Slows down garbage collection
  - Does not consider hot/cold data for wear leveling

Part 4: Wear Leveling

Wear Leveling
• Introduction
  - Any one part of flash memory can withstand only a limited number of erase/write cycles
  - Locality of data access inevitably degrades wear evenness in flash
• Some definitions
  - Hot and cold data blocks (by access frequency)
  - Old and young blocks (by erase count)
• Basic principle
  - Prevent old blocks from being erased (cool them down)
  - Actively start erasing young blocks (heat them up)

Wear Leveling
• Cold data migration
  - Move cold data from young blocks to old blocks
  - Select young blocks as victims during garbage collection
• Related work (hybrid FTL scheme)
  - A Low-Cost Wear-Leveling Algorithm for Block-Mapping Solid-State Disks (lazy scheme) (LCTES 2011)

Lazy Scheme
• Overview
  - Cold data migration
  - Considers recency (recent wear history) and frequency
• Recency
  - Update recency (of a logical block): the time since the latest update to the logical block
  - Erase recency (of a physical block): the time since the latest erase operation on the physical block
• Frequency
  - Elder block: erase count larger than the average erase count
  - Junior block: erase count smaller than the average erase count
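The recency and frequency definitions translate directly into code. In this sketch "time" is assumed to be a logical clock (for example, a global write counter), and the class and function names are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class PhysicalBlock:
    pbn: int
    erase_count: int
    last_erase_time: int  # logical clock value at the latest erase


def classify(blocks: List[PhysicalBlock]) -> Tuple[List[int], List[int]]:
    """Split blocks into elder (above average) and junior (below average) PBNs."""
    if not blocks:
        return [], []
    avg = sum(b.erase_count for b in blocks) / len(blocks)
    elder = [b.pbn for b in blocks if b.erase_count > avg]
    junior = [b.pbn for b in blocks if b.erase_count < avg]
    return elder, junior  # blocks exactly at the average are neither


def erase_recency(block: PhysicalBlock, now: int) -> int:
    return now - block.last_erase_time  # time since the latest erase of this physical block


def update_recency(last_update_time: int, now: int) -> int:
    return now - last_update_time       # time since the latest update of a logical block
```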

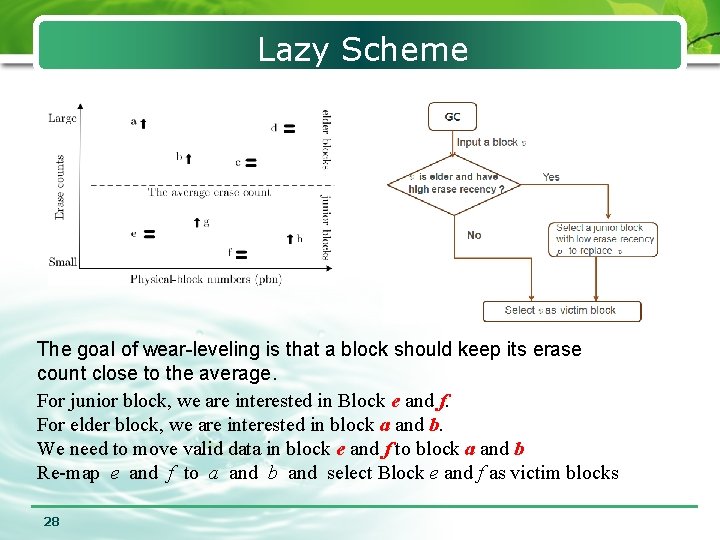

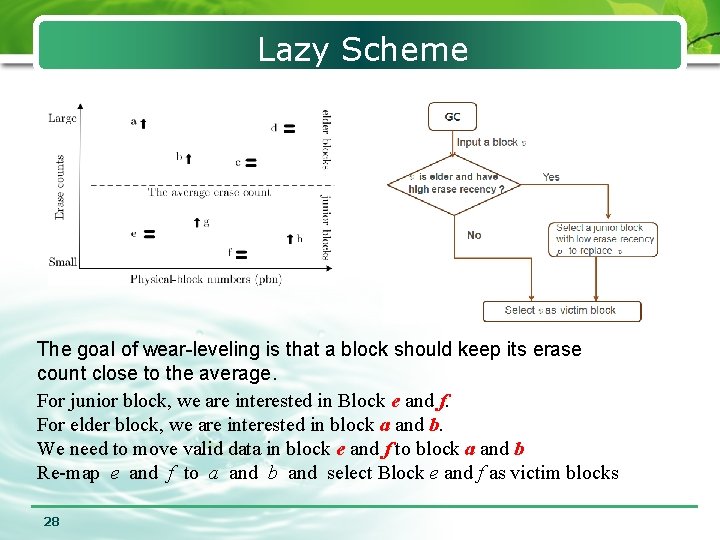

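Lazy Scheme
The goal of wear leveling is to keep each block's erase count close to the average. Among the junior blocks, we are interested in blocks e and f; among the elder blocks, we are interested in blocks a and b. We move the valid data in blocks e and f to blocks a and b, re-map the logical blocks of e and f to a and b, and select blocks e and f as victim blocks. The cold data then keeps the elder blocks from being erased (cooling them down), while the junior blocks get erased and reused (heating them up).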
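The selection step in this example can be sketched as planning moves from junior blocks holding the coldest logical blocks (largest update recency) to elder blocks. This follows the slide's description only and is not the paper's algorithm; the function name, the parameter `k`, and the assumption that the target elder blocks are currently free are all hypothetical.

```python
from typing import Dict, List, Tuple


def plan_lazy_remap(erase_counts: Dict[str, int],
                    update_recency: Dict[int, int],
                    l2p: Dict[int, str],
                    free_pbns: List[str],
                    k: int = 2) -> List[Tuple[int, str, str]]:
    """Return (lbn, junior_pbn, elder_pbn) moves; junior blocks become GC victims."""
    avg = sum(erase_counts.values()) / len(erase_counts)
    # Logical blocks stored on junior blocks, coldest (least recently updated) first.
    cold_lbns = sorted((lbn for lbn, pbn in l2p.items() if erase_counts[pbn] < avg),
                       key=lambda lbn: update_recency[lbn], reverse=True)
    # Assumed free elder blocks, most worn first, receive the cold data.
    elders = sorted((pbn for pbn in free_pbns if erase_counts[pbn] > avg),
                    key=lambda pbn: erase_counts[pbn], reverse=True)
    return [(lbn, l2p[lbn], elder) for lbn, elder in zip(cold_lbns[:k], elders[:k])]


counts = {"a": 9, "b": 8, "c": 5, "e": 1, "f": 2, "g": 3}
moves = plan_lazy_remap(counts, {0: 50, 1: 40, 2: 5, 3: 1},
                        {0: "e", 1: "f", 2: "g", 3: "c"}, ["a", "b"])
# moves == [(0, "e", "a"), (1, "f", "b")]
```

With these made-up erase counts, the data in junior blocks e and f goes to elder blocks a and b, matching the scenario described above.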

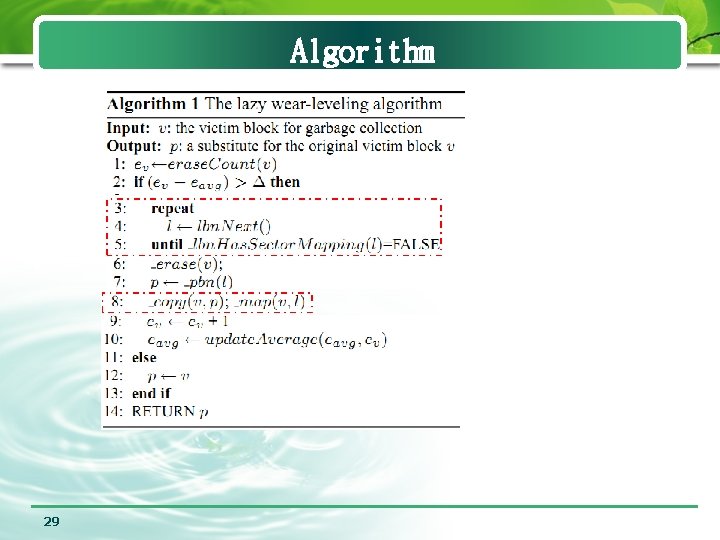

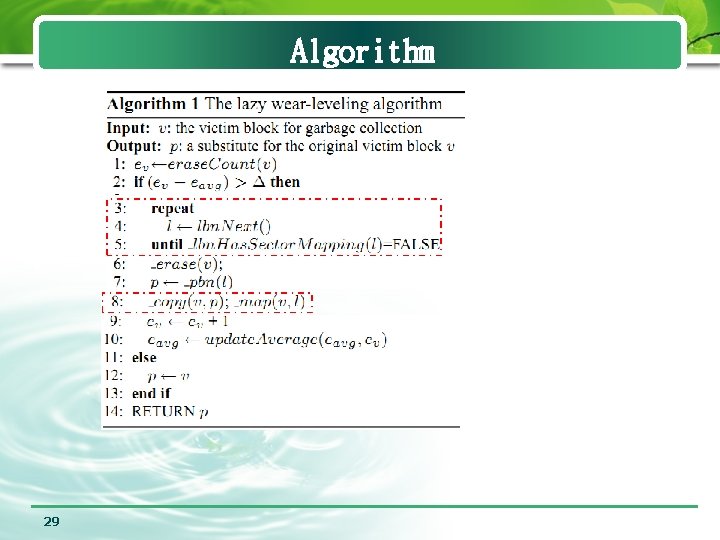

Algorithm

Lazy Scheme
• Pros
  - Does not store wear information in RAM; all of it is kept in flash instead
  - Reuses the available address-mapping information and needs no extra data structures for wear leveling
• Cons
  - It must visit every logical block uniformly when selecting a logical block for re-mapping

Part 5: Conclusion

Conclusion
• The page-mapping scheme shows the best performance because it reduces erase operations
• An efficient wear-leveling scheme needs to be designed for page-mapping solid-state disks
• Some FTL operations can be executed without interrupting ongoing flash accesses by exploiting the internal parallelism of flash memory
• Write caching can reduce erase operations: SSD as primary storage, with PCM as an auxiliary write cache

Thank you