Towards Practical Page Coloring Based Multicore Cache Management

Xiao Zhang, Sandhya Dwarkadas, Kai Shen

The Multi-Core Challenge
• Multi-core chips
  – Dominant on the market
  – The last-level cache is commonly shared by sibling cores, but the sharing is not well controlled
• Challenge: performance isolation
  – Poor performance due to cache conflicts
  – Unpredictable performance
  – Denial-of-service attacks
Image source: http://www.intel.com

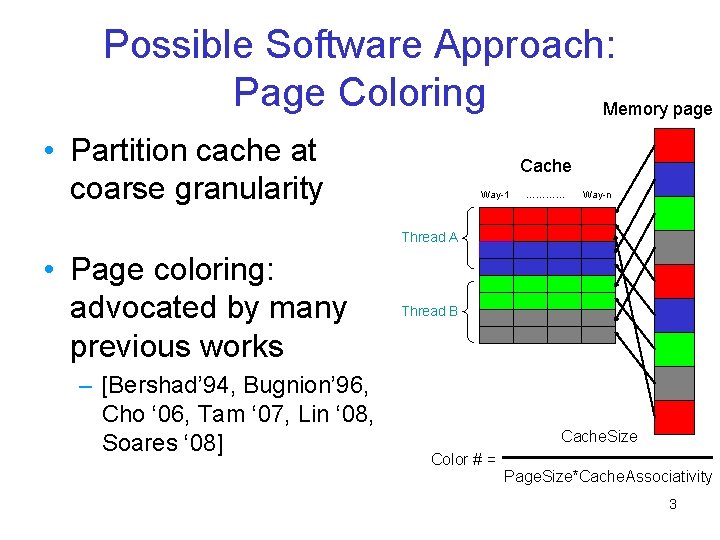

Possible Software Approach: Page Coloring
• Partition the cache at coarse granularity
• Page coloring: advocated by many previous works
  – [Bershad '94, Bugnion '96, Cho '06, Tam '07, Lin '08, Soares '08]
• Number of colors: $\text{Color \#} = \dfrac{\text{CacheSize}}{\text{PageSize} \times \text{CacheAssociativity}}$
[Figure: memory pages of Thread A and Thread B mapped onto a cache with ways Way-1 through Way-n.]

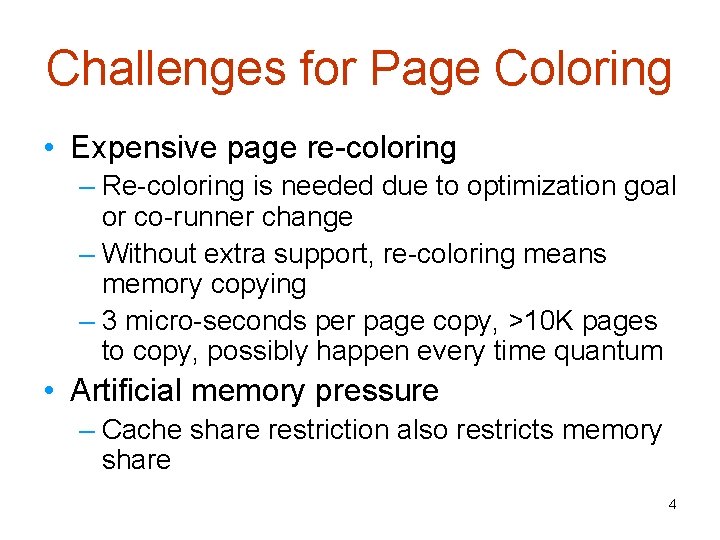
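
The color formula above can be checked with a few lines of arithmetic. This is a minimal sketch, not the authors' code: the 4 MB cache size comes from the evaluation platform mentioned later in the slides, while the 4 KB page size, the 16-way associativity, and the helper names `num_colors` and `page_color` are assumptions made for illustration.

```python
def num_colors(cache_size: int, page_size: int, associativity: int) -> int:
    """Number of page colors = CacheSize / (PageSize * CacheAssociativity)."""
    return cache_size // (page_size * associativity)


def page_color(physical_frame_number: int, colors: int) -> int:
    """Color of a physical page, assuming colors are taken from the low
    bits of the physical frame number (a common convention, assumed here)."""
    return physical_frame_number % colors


# Assumed parameters: 4 MB shared L2, 4 KB pages, 16-way set associative.
colors = num_colors(4 * 1024 * 1024, 4 * 1024, 16)
print(colors, page_color(130, colors))
```

Under these assumed parameters the cache splits into 64 colors, so giving an application a set of colors gives it a proportional slice of the cache.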

Challenges for Page Coloring
• Expensive page re-coloring
  – Re-coloring is needed when the optimization goal or the set of co-runners changes
  – Without extra support, re-coloring means copying memory
  – About 3 microseconds per page copy and more than 10K pages to copy (over 30 ms in total), possibly in every time quantum
• Artificial memory pressure
  – Restricting an application's cache share also restricts its memory share

Hotness-based Page Coloring
• Basic idea
  – Restrict page coloring to a small group of hot pages
• Challenge
  – How to identify hot pages efficiently
• Outline
  – Efficient hot page identification
  – Cache partition policy
  – Hot page coloring

Method to Track Page Hotness
• Hardware access bits + sequential table scan
  – Generally available on x86, set automatically by hardware
  – One bit per Page Table Entry (PTE)
• Conventional wisdom: scanning the whole page table is expensive
  – Not entirely true: per-entry scan latency is overlapped by hardware prefetching
  – A sequential table scan spends a large portion of its time on non-accessed pages, which can be improved

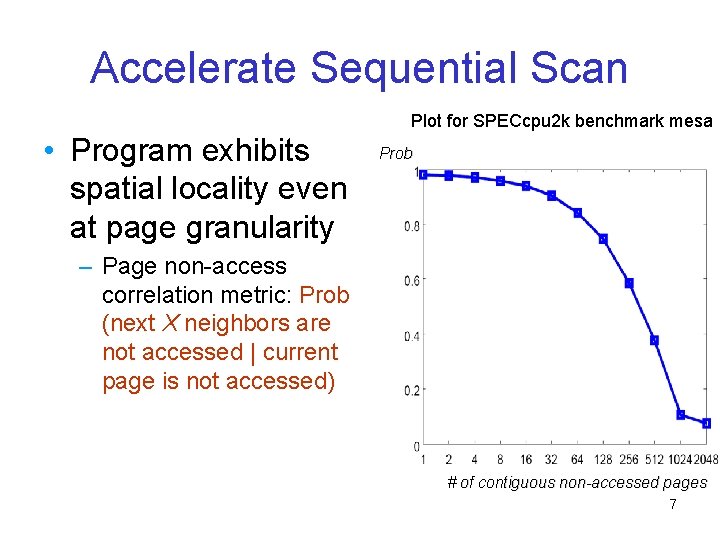

Accelerate Sequential Scan
• Programs exhibit spatial locality even at page granularity
  – Page non-access correlation metric: Prob(next X neighbors are not accessed | current page is not accessed)
[Figure: plot for the SPECcpu2k benchmark mesa; x-axis: number of contiguous non-accessed pages (X), y-axis: probability.]

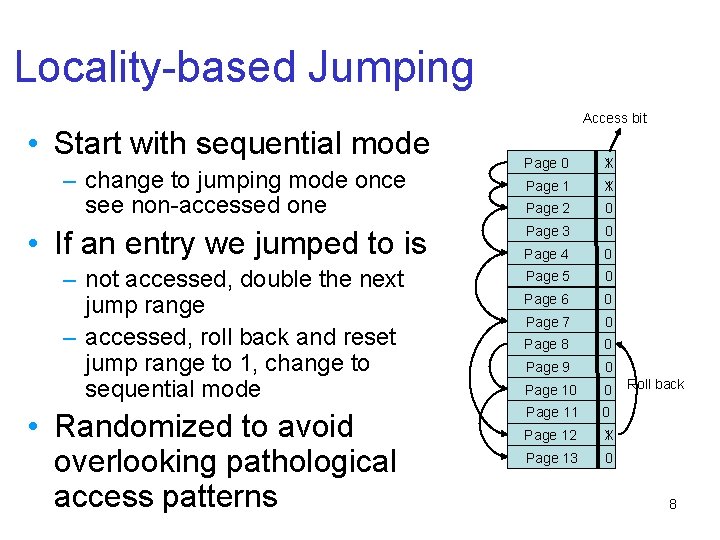
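
The non-access correlation metric can be estimated directly from a snapshot of access bits. The sketch below is one plausible way to compute it, assuming the access bits are available as a list of 0/1 values in page order; the function name `non_access_correlation` and the handling of pages near the end of the table are illustrative choices, not taken from the slides.

```python
def non_access_correlation(access_bits, x):
    """Estimate Prob(next x neighbors not accessed | current page not accessed).

    Only pages that have x successors in the table are counted.
    Returns None when no non-accessed page qualifies.
    """
    candidates = 0
    hits = 0
    for i in range(len(access_bits) - x):
        if access_bits[i]:
            continue  # condition requires the current page to be non-accessed
        candidates += 1
        if not any(access_bits[i + 1 : i + 1 + x]):
            hits += 1
    return hits / candidates if candidates else None


bits = [1, 1, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1, 0]
print(non_access_correlation(bits, 1), non_access_correlation(bits, 4))
```

A value that stays high as X grows is what justifies skipping entries after a non-accessed page.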

Locality-based Jumping
• Start in sequential mode; switch to jumping mode on seeing a non-accessed entry
• If the entry jumped to is
  – not accessed: double the next jump range
  – accessed: roll back, reset the jump range to 1, and switch to sequential mode
• Jumps are randomized to avoid overlooking pathological access patterns
[Figure: access bits for pages 0 to 13. Pages 0, 1, and 12 have access bit 1 (each marked X); all other pages have access bit 0. A "Roll back" annotation is shown.]

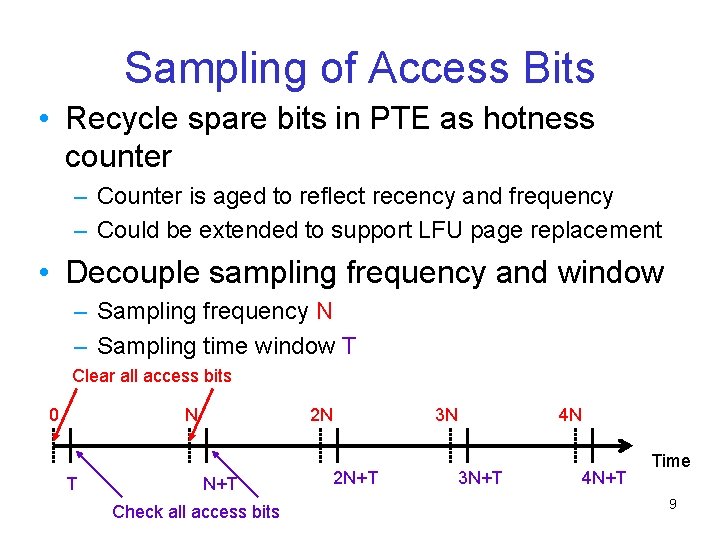
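
The jumping rules can be sketched as a short scan loop. This is an interpretation under stated assumptions rather than the authors' implementation: the jump range is taken to mean the number of entries skipped, a roll back is taken to mean sequentially rescanning the entries skipped by the last jump, the tail of the table is scanned sequentially when a jump would run past the end, and the randomization mentioned in the slide is omitted. The name `jumping_scan` is hypothetical.

```python
def jumping_scan(access_bits):
    """Return (indices of pages found accessed, number of entries inspected)."""
    n = len(access_bits)
    hot = []
    inspected = 0
    i = 0
    while i < n:
        # Sequential mode: inspect entry i.
        inspected += 1
        if access_bits[i]:
            hot.append(i)
            i += 1
            continue
        # Non-accessed entry seen: switch to jumping mode.
        last = i   # last entry actually inspected
        skip = 1   # jump range = number of entries to skip (assumption)
        while True:
            target = last + skip + 1
            if target >= n:
                # Jump would leave the table: scan the tail sequentially.
                for j in range(last + 1, n):
                    inspected += 1
                    if access_bits[j]:
                        hot.append(j)
                i = n
                break
            inspected += 1
            if not access_bits[target]:
                last = target
                skip *= 2  # not accessed: double the next jump range
                continue
            # Accessed: roll back over the entries skipped by this jump,
            # then resume sequential mode with the jump range reset to 1.
            for j in range(last + 1, target):
                inspected += 1
                if access_bits[j]:
                    hot.append(j)
            hot.append(target)
            i = target + 1
            break
    return hot, inspected


bits = [1, 1, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1, 0]
print(jumping_scan(bits))
```

On the 14-entry example from the slide, this sketch inspects 11 entries and still finds pages 0, 1, and 12. Accessed pages that sit inside a skipped range between two non-accessed landing points would be missed, which is the accuracy trade-off the randomization is meant to limit.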

Sampling of Access Bits
• Recycle spare bits in the PTE as a hotness counter
  – The counter is aged to reflect both recency and frequency
  – Could be extended to support LFU page replacement
• Decouple sampling frequency and sampling window
  – Sampling frequency: one sample every N
  – Sampling time window: T
[Figure: timeline. All access bits are cleared at times 0, N, 2N, 3N, 4N and checked at times T, N+T, 2N+T, 3N+T, 4N+T.]

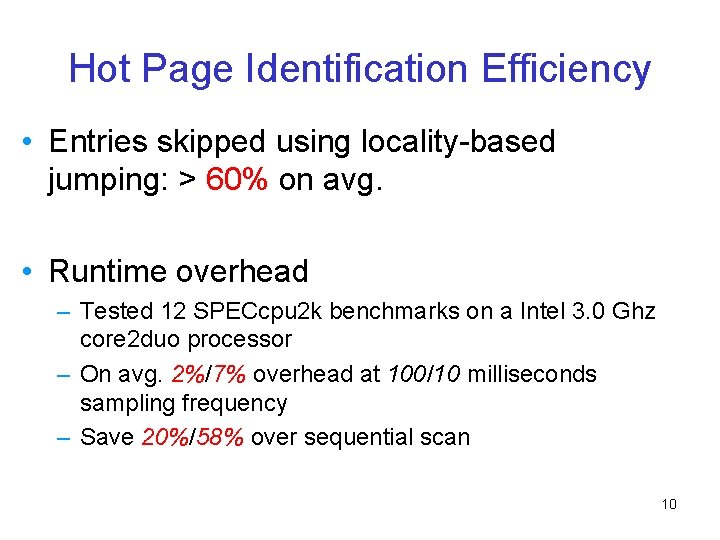
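
The slides do not spell out the aging formula. One common scheme that reflects both recency and frequency is the classic shift-based aging counter, sketched here purely as an assumption: on each sample the counter is shifted right by one bit and the freshly read access bit is placed in the most significant position. The counter width of 4 bits and the name `age_counter` are hypothetical.

```python
def age_counter(counter: int, access_bit: int, width: int = 4) -> int:
    """Shift-based aging: halve the old value, put the new sample on top."""
    return (counter >> 1) | ((1 if access_bit else 0) << (width - 1))


counter = 0
for bit in [1, 1, 0, 1]:  # access bits read at four consecutive samples
    counter = age_counter(counter, bit)
print(counter)
```

With this scheme a page accessed in the most recent window always outranks one that was only accessed earlier, while repeated accesses accumulate in the lower bits.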

Hot Page Identification Efficiency
• Entries skipped using locality-based jumping: more than 60% on average
• Runtime overhead
  – Tested 12 SPECcpu2k benchmarks on an Intel 3.0 GHz Core 2 Duo processor
  – On average 2% overhead when sampling every 100 ms and 7% when sampling every 10 ms
  – Saves 20% and 58%, respectively, over a sequential scan

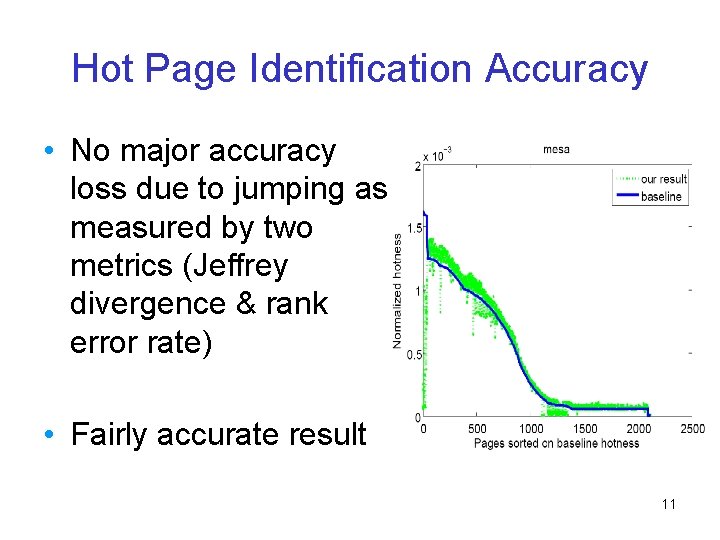

Hot Page Identification Accuracy
• No major accuracy loss due to jumping, as measured by two metrics (Jeffrey divergence and rank error rate)
• Fairly accurate results

Roadmap
• Efficient hot page identification: locality jumping
• Cache partition policy: MRC-based
• Hot page coloring

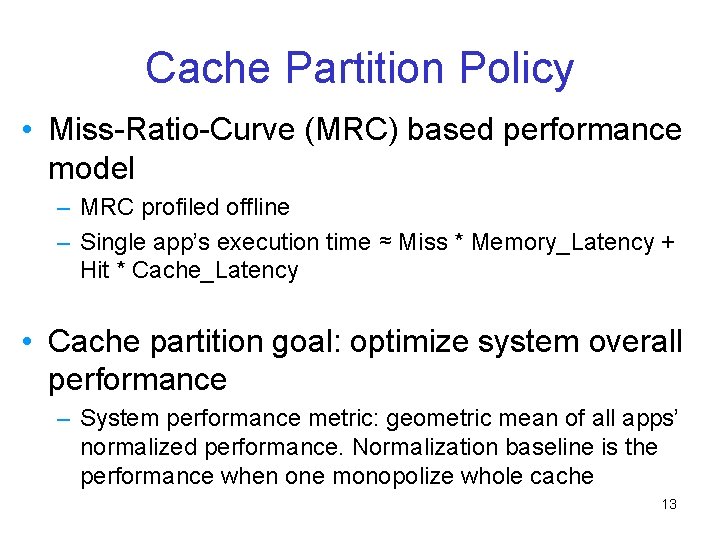

Cache Partition Policy
• Miss-Ratio-Curve (MRC) based performance model
  – MRC profiled offline
  – A single app's execution time ≈ Miss × Memory_Latency + Hit × Cache_Latency
• Cache partition goal: optimize overall system performance
  – System performance metric: geometric mean of all apps' normalized performance
  – The normalization baseline is an app's performance when it monopolizes the whole cache

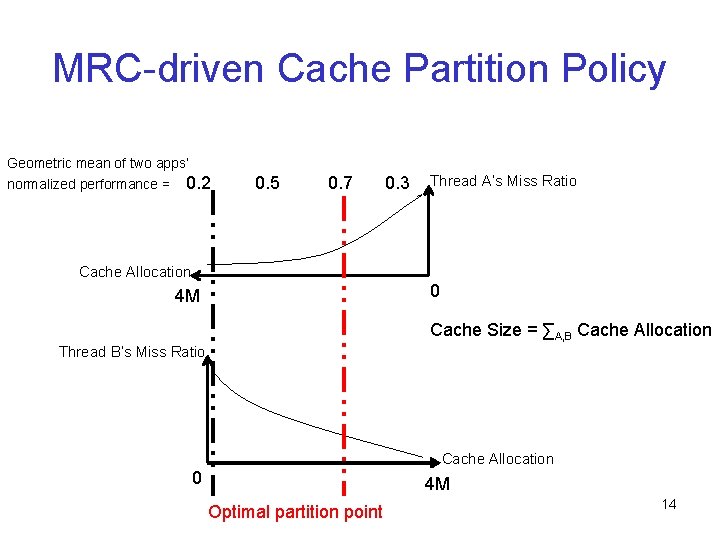

MRC-driven Cache Partition Policy
• Objective: geometric mean of the two apps' normalized performance
• Constraint: Cache Size = ∑ over A, B of Cache Allocation
[Figure: miss ratio versus cache allocation (0 to 4 MB) for Thread A and for Thread B, annotated with the values 0.2, 0.5, 0.7, and 0.3 and with the optimal partition point.]

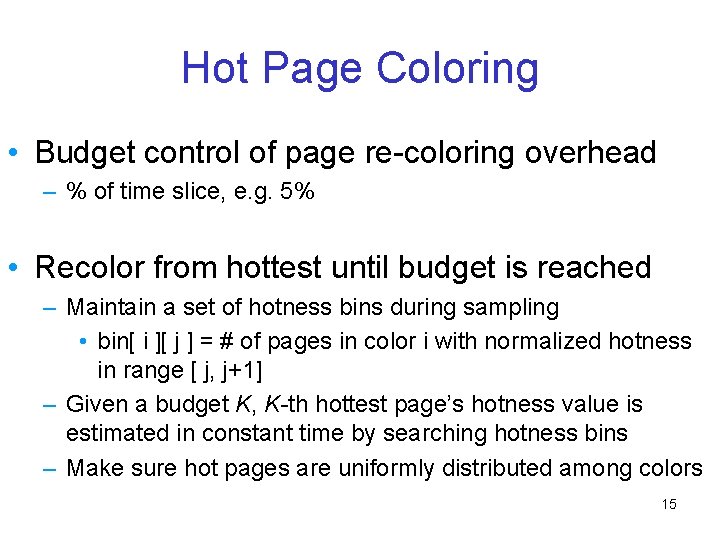
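
The MRC-driven policy for two applications can be sketched as an exhaustive search over partition points. This is a minimal illustration under assumptions, not the authors' code: each MRC is given as a list where entry k is the miss ratio with k colors, the execution-time model is applied per memory access (miss ratio in place of miss count), each app gets at least one color, and the latency values and miss ratios are made up for the example.

```python
import math


def exec_time(miss_ratio, mem_latency=200.0, cache_latency=15.0):
    """Per-access cost: Miss * Memory_Latency + Hit * Cache_Latency."""
    return miss_ratio * mem_latency + (1.0 - miss_ratio) * cache_latency


def best_partition(mrc_a, mrc_b):
    """Return (colors for A, geometric mean) maximizing the geometric mean
    of the two apps' normalized performance."""
    total = len(mrc_a) - 1  # total number of colors in the cache
    base_a = exec_time(mrc_a[total])  # baseline: app owns the whole cache
    base_b = exec_time(mrc_b[total])
    best_k, best_score = None, -1.0
    for k in range(1, total):  # A gets k colors, B gets the rest
        perf_a = base_a / exec_time(mrc_a[k])
        perf_b = base_b / exec_time(mrc_b[total - k])
        score = math.sqrt(perf_a * perf_b)
        if score > best_score:
            best_k, best_score = k, score
    return best_k, best_score


# Hypothetical MRCs for a 4-color cache: A is cache-sensitive, B is not.
mrc_a = [1.0, 0.5, 0.2, 0.1, 0.1]
mrc_b = [1.0, 0.3, 0.3, 0.3, 0.3]
print(best_partition(mrc_a, mrc_b))
```

In this made-up example the search gives three colors to the cache-sensitive app and one to the insensitive app, since neither then loses performance relative to owning the whole cache.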

Hot Page Coloring
• Budget control of page re-coloring overhead
  – Expressed as a percentage of the time slice, e.g. 5%
• Recolor from the hottest page down until the budget is reached
  – Maintain a set of hotness bins during sampling
    • bin[i][j] = number of pages in color i with normalized hotness in the range [j, j+1]
  – Given a budget K, the hotness value of the K-th hottest page is estimated in constant time by searching the hotness bins
  – Make sure hot pages are uniformly distributed among colors

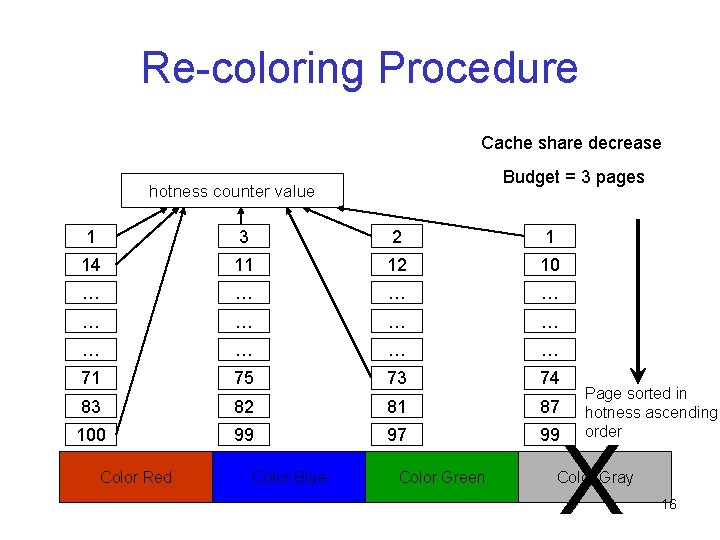
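
The hotness bins make it possible to estimate a re-coloring threshold without sorting pages. The sketch below illustrates the idea under assumptions: normalized hotness is taken to lie in the range [0, num_levels), pages are given as (color, hotness) pairs, and the names `build_bins` and `kth_hottest_level` are hypothetical. The search cost depends only on the fixed number of bins, not on the number of pages, which is the sense in which it is constant time.

```python
def build_bins(pages, num_colors, num_levels):
    """bins[i][j] = number of pages in color i with hotness in [j, j+1]."""
    bins = [[0] * num_levels for _ in range(num_colors)]
    for color, hotness in pages:
        level = min(int(hotness), num_levels - 1)
        bins[color][level] += 1
    return bins


def kth_hottest_level(bins, k):
    """Estimate the hotness level of the k-th hottest page by walking the
    bins from the hottest level downward."""
    num_levels = len(bins[0])
    seen = 0
    for level in range(num_levels - 1, -1, -1):
        seen += sum(row[level] for row in bins)
        if seen >= k:
            return level
    return 0  # fewer than k pages in total


# Hypothetical sample: (color, normalized hotness) for five pages.
pages = [(0, 9.5), (1, 8.2), (0, 8.7), (1, 3.1), (0, 0.4)]
bins = build_bins(pages, num_colors=2, num_levels=10)
print(kth_hottest_level(bins, 3))
```

Pages at or above the returned level are then the candidates for re-coloring within a budget of K pages.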

Re-coloring Procedure
[Figure: example of re-coloring when the cache share decreases, with a budget of 3 pages. Pages of colors Red, Blue, Green, and Gray are listed in ascending order of hotness counter value (values shown range from 1 to 100); color Gray is marked X.]

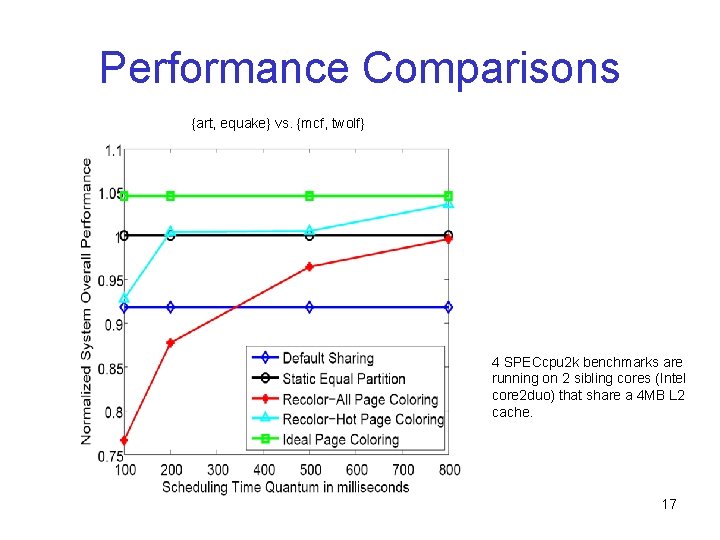

Performance Comparisons
• Workloads: {art, equake} vs. {mcf, twolf}
• Four SPECcpu2k benchmarks run on two sibling cores (Intel Core 2 Duo) that share a 4 MB L2 cache

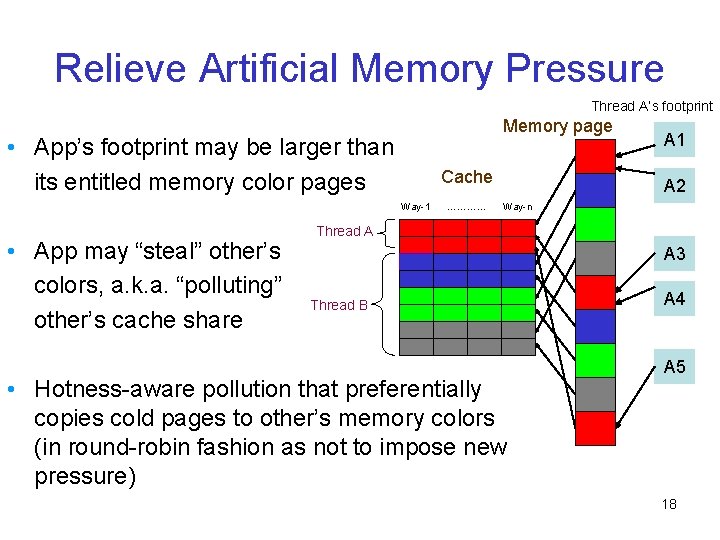

Relieve Artificial Memory Pressure
• An app's footprint may be larger than the memory pages of its entitled colors
• The app may "steal" others' colors, i.e. "pollute" others' cache share
• Hotness-aware pollution: preferentially copy cold pages to others' memory colors (in round-robin fashion so as not to impose new pressure)
[Figure: Thread A's footprint (pages A1 to A5) mapped onto a cache (Way-1 through Way-n) shared by Thread A and Thread B.]

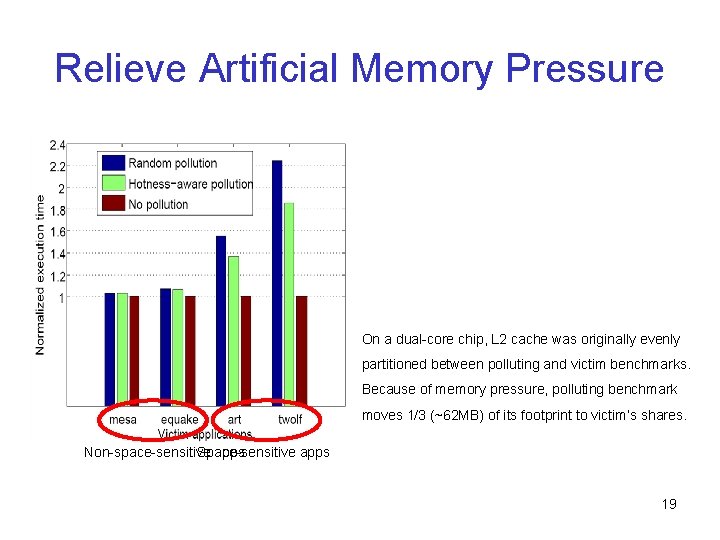

Relieve Artificial Memory Pressure
• On a dual-core chip, the L2 cache is initially partitioned evenly between the polluting and the victim benchmarks
• Because of memory pressure, the polluting benchmark moves 1/3 (~62 MB) of its footprint to the victim's share
[Figure: results grouped into non-space-sensitive and space-sensitive apps.]

Summary
• Contributions
  – Efficient hot page identification that can potentially be used by multiple applications
  – Hotness-based page coloring to mitigate two drawbacks: memory pressure and re-coloring cost
• Caveat: a large time quantum is still required to amortize the overhead
• Ongoing work
  – Exploring other possible approaches, e.g. execution-throttling-based cache management [USENIX '09]
