Towards Perfect Supervised and Unsupervised Machine Translation: Statistical Methods and Linguistic Knowledge

- Slides: 45

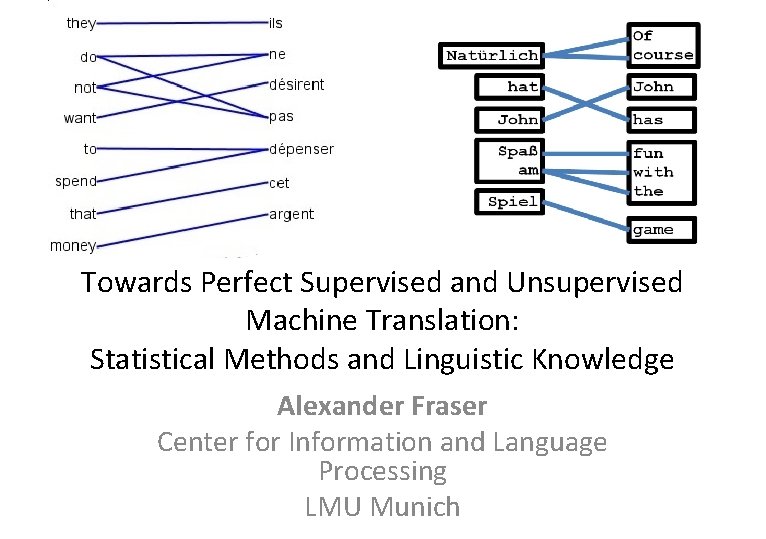

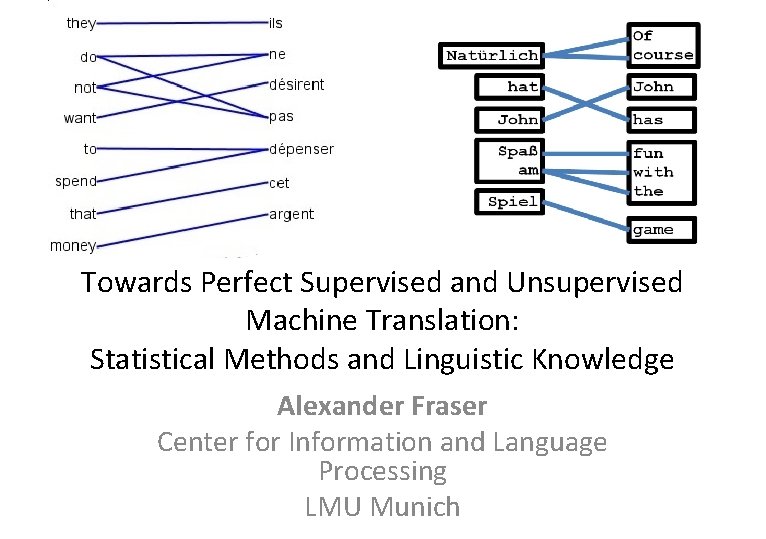

Towards Perfect Supervised and Unsupervised Machine Translation: Statistical Methods and Linguistic Knowledge • Alexander Fraser • Center for Information and Language Processing, LMU Munich

Alexander Fraser • I'm American, from Boston – However, despite this, I speak 4 European languages and Arabic • Ph.D. in Computer Science – 2007, University of Southern California / Information Sciences Institute – Worked in the Intelligent Systems Division (AI department: Daniel Marcu, Kevin Knight, Ed Hovy) – Also extensive industry experience (first statistical machine translation product) and additional international non-profit experience • In Germany since 2007 – Currently Professor of Information and Language Processing at LMU Munich – Interdisciplinary: mainly teaching Machine Learning (ML) and Natural Language Processing (mostly ML approaches), but also some linguistics and basic computer science/programming

Motivation: Machine Translation • How can we break through language barriers? • How can we… – …find all of the information there is on a topic on the web, no matter what language it is written in? – …understand newspapers around the world? – …translate things that otherwise would not be translated at all due to manpower or financial constraints? – …automate boring, repetitive translation tasks, allowing human translators to focus on fun and challenging translations? • Solution: high-quality machine translation!

Data-Driven Machine Translation • The previous approach was so-called rule-based machine translation – Human experts write the rules • The current state of the art uses supervised machine learning: learn how to translate from examples – Examples are pairs of sentences (a sentence and its translation) • Phrase-Based Statistical Machine Translation (PBSMT): previously the best, still used in some scenarios • Neural Machine Translation (NMT): a deep learning approach

Why is data-driven MT research interesting? • Structured prediction – Sentiment analysis is not structured prediction: label a movie review with one of 3 classes (positive, neutral or negative sentiment) – Machine translation is structured prediction: label a 30-word English input sentence with a 28-word German translation (!) • Uses world and contextual knowledge (later in the talk) • Evaluation – There are many right answers, but the training data contains just one of the alternatives! • Applicability – MT is basically a language modeling problem. Anything with text outputs is also a language modeling problem. – Feature engineering on text is done with representations from language models and MT (e.g., ULMFiT, BERT, MASS, …). Our research: multilingual representations – We can apply MT models to problems like image captioning with little change: just combine an image encoder with our standard text decoder

Four weaknesses of data-driven MT • I will talk about four problems with data-driven MT: 1. Phrase-based SMT is unable to deal with syntactic divergence (i.e., when the word order of the target language is very different from that of the source) • Solution: a new SMT translation model 2. Morphological richness causes data sparsity in MT • Solution: generalize over morphological phenomena 3. MT is strongly dependent on the domain of the training data • Solution: develop new domain-adaptation techniques for MT 4. MT is supervised, requiring a large number of parallel sentences • Solution: develop unsupervised MT

1. Improving the modeling of syntax in SMT • Novel model: the Operation Sequence Model • A new model overcoming problems with the phrase-based model • Joint work with Durrani and Schmid – Durrani's 2013 Ph.D. thesis won the GSCL prize for the best NLP thesis in Germany from 2011-2013 – Additional work with Philipp Koehn and Hinrich Schütze – Numerous papers at *ACL conferences

www.uni-stuttgart.de Motivation: Long-Distance Reordering in German-to-English SMT • Er hat ein Buch gelesen = He read a book • Er hat gestern Nachmittag mit seiner kleinen Tochter, die aufmerksam zugehört hat, und seinem Sohn, der lieber am Computer ein Videogame gespielt hätte, ein spannendes Buch gelesen (He read an exciting book yesterday afternoon with his little daughter, who listened attentively, and his son, who would rather have played a video game on the computer) • We want a model that – captures "hat … gelesen = read" – captures the generalization that an arbitrary amount of material can occur between hat and gelesen (in the so-called "Mittelfeld") – is a simple left-to-right model

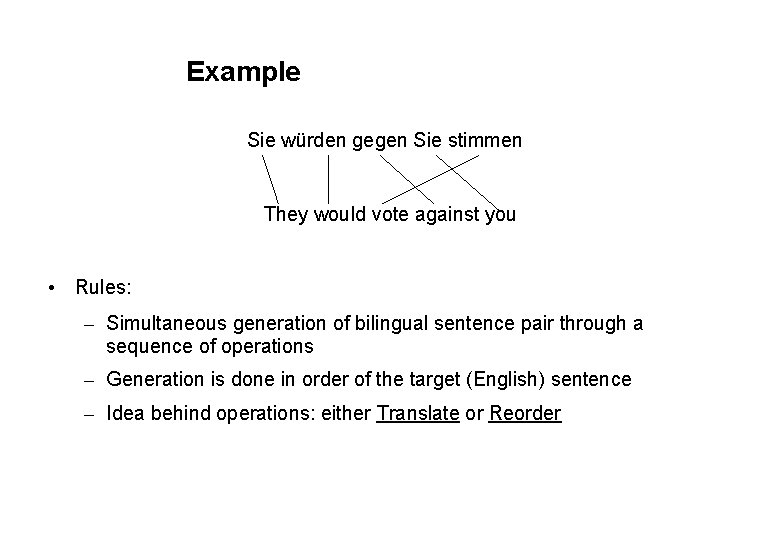

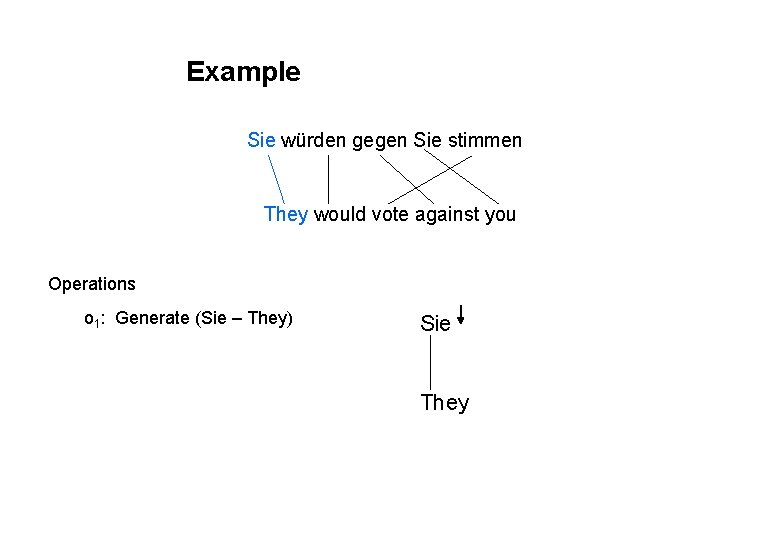

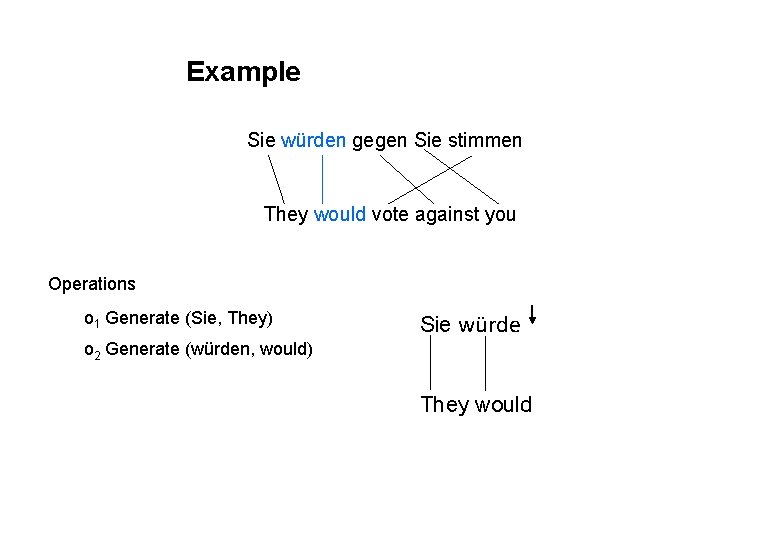

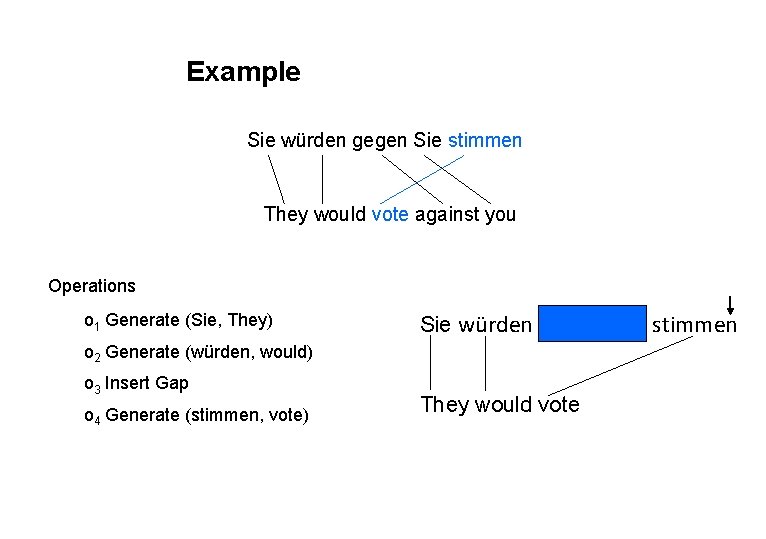

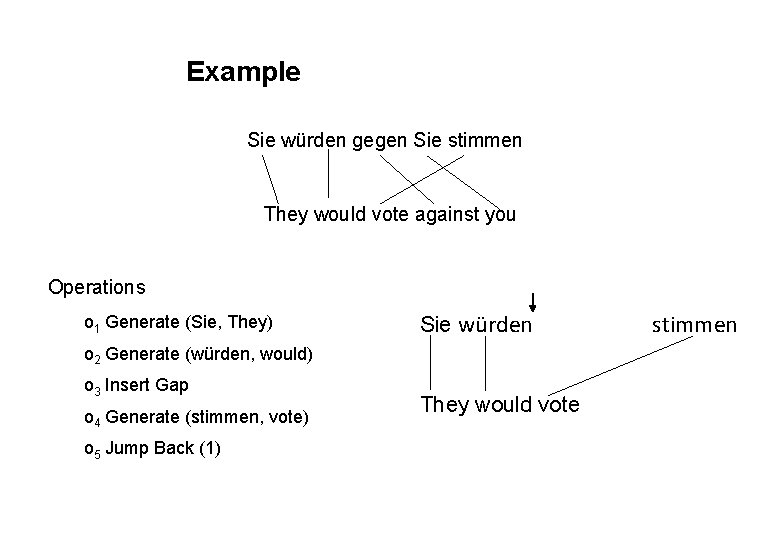

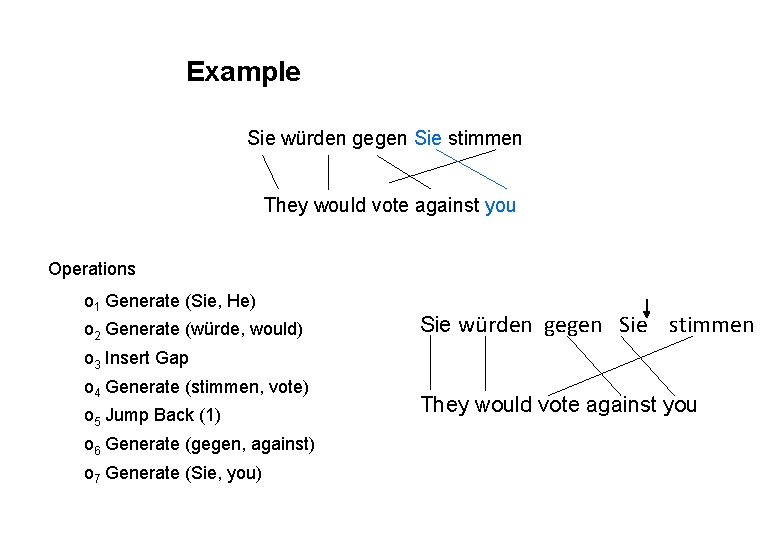

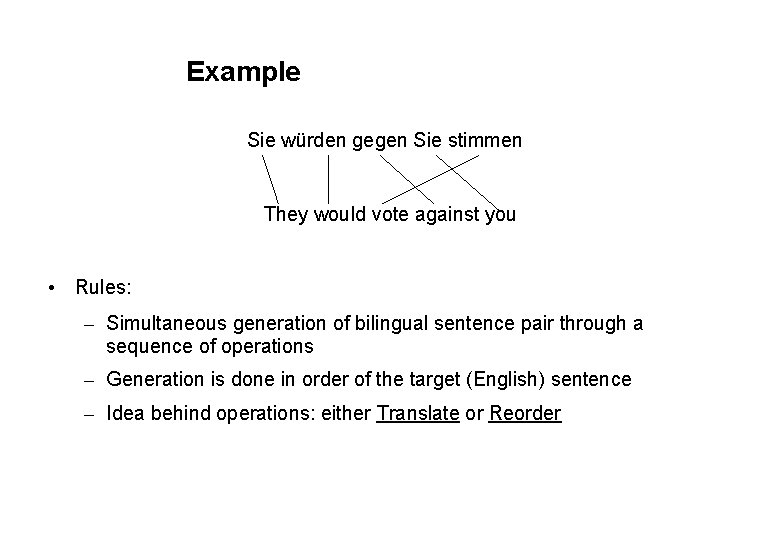

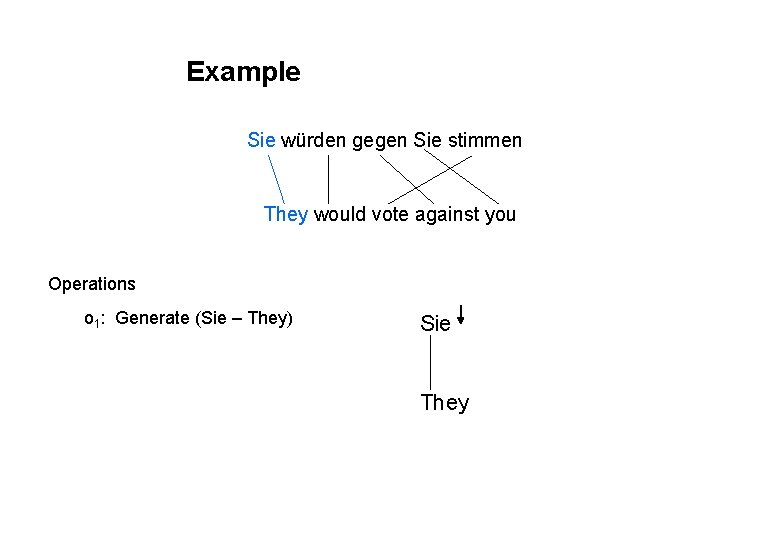

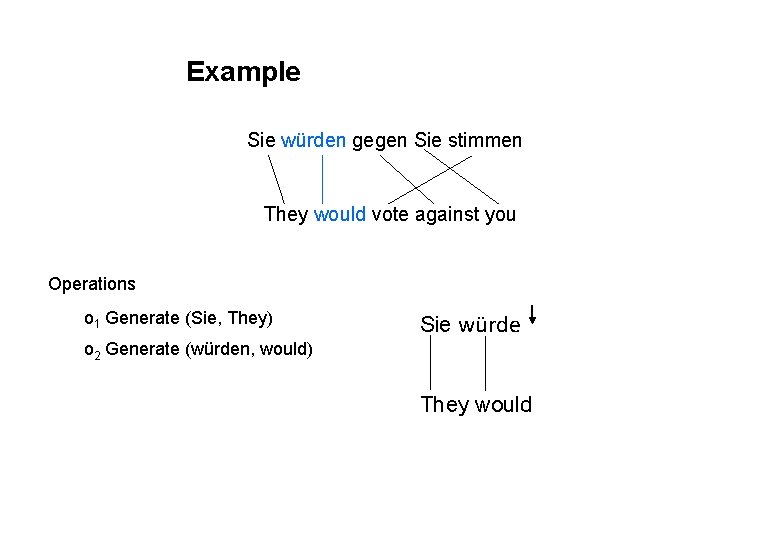

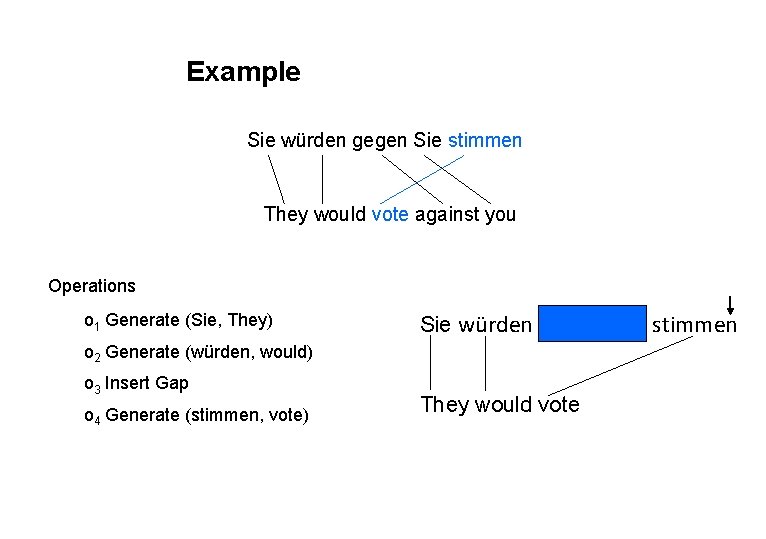

Example: Sie würden gegen Sie stimmen = They would vote against you • Rules: – Simultaneous generation of the bilingual sentence pair through a sequence of operations – Generation is done in the order of the target (English) sentence – Idea behind the operations: either Translate or Reorder

Example (cont.): Sie würden gegen Sie stimmen = They would vote against you • Operations: o1 Generate (Sie, They) • Source so far: Sie • Target so far: They

Example (cont.): Sie würden gegen Sie stimmen = They would vote against you • Operations: o1 Generate (Sie, They), o2 Generate (würden, would) • Source so far: Sie würden • Target so far: They would

Example (cont.): Sie würden gegen Sie stimmen = They would vote against you • Operations: o1 Generate (Sie, They), o2 Generate (würden, would), o3 Insert Gap • Source so far: Sie würden <GAP> • Target so far: They would

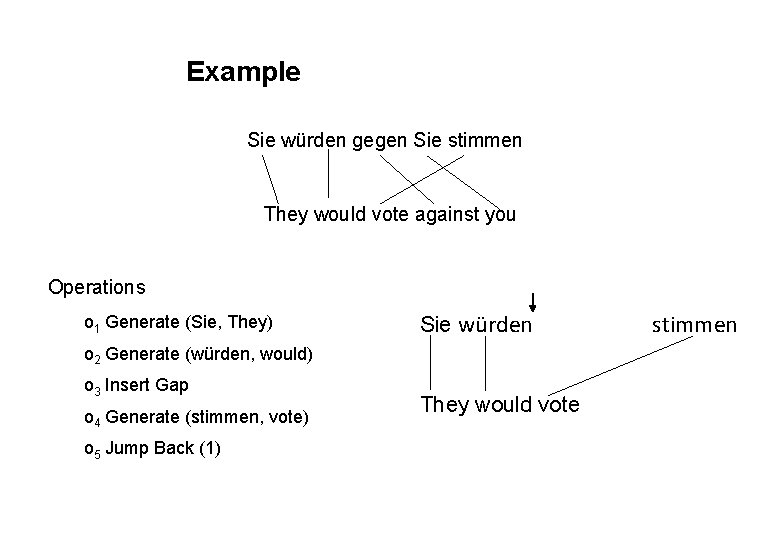

Example (cont.): Sie würden gegen Sie stimmen = They would vote against you • Operations: o1 Generate (Sie, They), o2 Generate (würden, would), o3 Insert Gap, o4 Generate (stimmen, vote) • Source so far: Sie würden <GAP> stimmen • Target so far: They would vote

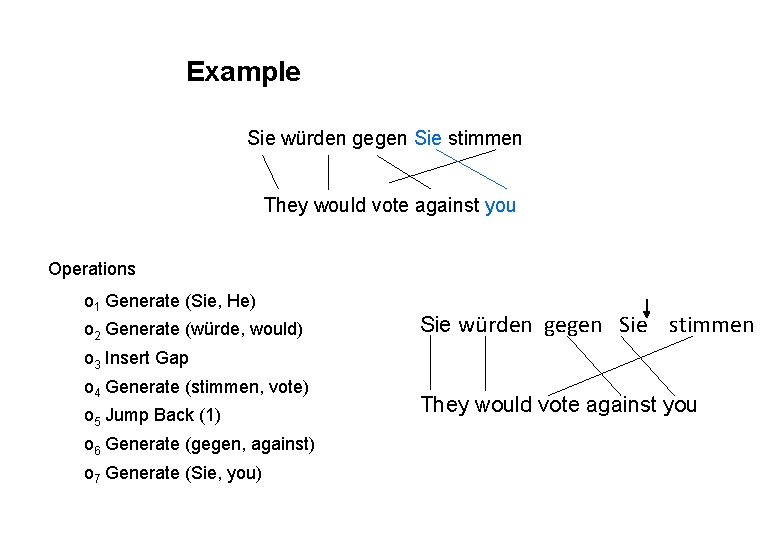

Example (cont.): Sie würden gegen Sie stimmen = They would vote against you • Operations: o1 Generate (Sie, They), o2 Generate (würden, would), o3 Insert Gap, o4 Generate (stimmen, vote), o5 Jump Back (1) • Source so far: Sie würden <GAP> stimmen (now positioned at the gap) • Target so far: They would vote

Example (cont.): Sie würden gegen Sie stimmen = They would vote against you • Operations: o1 Generate (Sie, They), o2 Generate (würden, would), o3 Insert Gap, o4 Generate (stimmen, vote), o5 Jump Back (1), o6 Generate (gegen, against) • Source so far: Sie würden gegen <GAP> stimmen • Target so far: They would vote against

Example (cont.): Sie würden gegen Sie stimmen = They would vote against you • Operations: o1 Generate (Sie, They), o2 Generate (würden, would), o3 Insert Gap, o4 Generate (stimmen, vote), o5 Jump Back (1), o6 Generate (gegen, against), o7 Generate (Sie, you) • Source: Sie würden gegen Sie stimmen • Target: They would vote against you
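The operation sequence in this example can be replayed mechanically to recover both sentences. The following Python sketch is a simplified interpreter that assumes only the three operation types used here (Generate, Insert Gap, Jump Back); the full model of Durrani et al. has further operations (e.g., for unaligned words and for jumping forward). The tuple encoding, the name `execute`, and the reading of Jump Back (k) as "move to the k-th open gap to the left of the cursor" are assumptions for illustration.

```python
from typing import List, Tuple

GAP = "<GAP>"

def execute(ops: List[Tuple]) -> Tuple[str, str]:
    """Replay a simplified operation sequence and return (source, target)."""
    source: List[str] = []  # source words, possibly containing open gaps
    target: List[str] = []  # target words, always generated left to right
    cursor = 0              # current insertion point in the source
    for op in ops:
        if op[0] == "generate":
            _, src, tgt = op
            source.insert(cursor, src)
            cursor += 1
            target.append(tgt)
        elif op[0] == "insert_gap":
            source.insert(cursor, GAP)
            cursor += 1
        elif op[0] == "jump_back":
            # Hypothetical reading: k = 1 is the closest open gap to the left.
            gaps = [i for i in range(cursor) if source[i] == GAP]
            cursor = gaps[-op[1]]
        else:
            raise ValueError(f"unknown operation: {op[0]}")
    words = [w for w in source if w != GAP]  # drop gap markers at the end
    return " ".join(words), " ".join(target)

OPS = [
    ("generate", "Sie", "They"),
    ("generate", "würden", "would"),
    ("insert_gap",),
    ("generate", "stimmen", "vote"),
    ("jump_back", 1),
    ("generate", "gegen", "against"),
    ("generate", "Sie", "you"),
]
print(execute(OPS))  # ('Sie würden gegen Sie stimmen', 'They would vote against you')
```

Because the target side is only ever appended to, all reordering is carried by the gap and jump operations on the source side, which is what lets the model be a simple left-to-right model over the target.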

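Model • Joint probability model over operation sequences: each operation is conditioned on the operations before it, $p(o_1, \ldots, o_J) = \prod_{j=1}^{J} p(o_j \mid o_{j-8}, \ldots, o_{j-1})$ • Context window: 9-gram model (the current operation plus the 8 preceding ones) • Operation sequence for the example: o1 Generate (Sie, They), o2 Generate (würden, would), o3 Insert Gap, o4 Generate (stimmen, vote), o5 Jump Back (1), o6 Generate (gegen, against), o7 Generate (Sie, you)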
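Because the model is an n-gram model whose tokens are operations rather than words, it can be sketched like any n-gram language model. This minimal Python version uses unsmoothed maximum-likelihood counts and encodes operations as strings (both assumptions); a real system trains a smoothed n-gram model over operation sequences extracted from word-aligned parallel data. The toy corpus is invented, and n = 2 keeps it readable where the slide uses n = 9.

```python
import math
from collections import Counter
from typing import Iterator, List, Sequence, Tuple

BOS = "<s>"

def _ngrams(seq: Sequence[str], n: int) -> Iterator[Tuple[tuple, str]]:
    """Yield (context, operation) pairs, padding the start with BOS tokens."""
    padded = [BOS] * (n - 1) + list(seq)
    for j in range(n - 1, len(padded)):
        yield tuple(padded[j - n + 1:j]), padded[j]

def train(sequences: List[List[str]], n: int) -> Tuple[Counter, Counter]:
    """Count n-grams and their (n-1)-gram contexts over operation sequences."""
    ngram_counts, ctx_counts = Counter(), Counter()
    for seq in sequences:
        for ctx, op in _ngrams(seq, n):
            ngram_counts[ctx + (op,)] += 1
            ctx_counts[ctx] += 1
    return ngram_counts, ctx_counts

def log_prob(seq: List[str], ngram_counts: Counter, ctx_counts: Counter, n: int) -> float:
    """Sum of log p(o_j | previous n-1 operations).

    Unsmoothed: raises an error for unseen contexts or n-grams.
    """
    return sum(
        math.log(ngram_counts[ctx + (op,)] / ctx_counts[ctx])
        for ctx, op in _ngrams(seq, n)
    )

# Toy corpus of two operation sequences (invented for illustration).
corpus = [
    ["Generate(Sie,They)", "Generate(würden,would)", "InsertGap"],
    ["Generate(Sie,They)", "Generate(werden,will)"],
]
counts = train(corpus, n=2)
print(log_prob(corpus[0], *counts, n=2))  # log(0.5), about -0.693
```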

Results and outlook • The operation sequence model overcomes problems with the phrase-based model • Unlike the phrase-based model, it models well minimal translation units that are highly dependent on one another but not contiguous • Reordering is integrated with lexical generation • The operation sequence model is available as a feature function in the latest version of Moses (open-source statistical machine translation toolkit) • The model is widely acknowledged to lead to real improvements in systems in large-scale evaluation campaigns such as WMT and IWSLT • It is standardly used in all competitive PBSMT systems • What I didn't talk about: our related work on synchronous grammars, particularly Synchronous Context-Free Grammars (SCFG) and Synchronous Tree Substitution Grammars (STSG)

2. Morphological productivity: translating from English to German is difficult! • A huge problem in translating into German is morphological productivity – Words in noun phrases have context-dependent inflection in German – New German compounds are created every day! • DFG two-phase project and Health in My Language (HimL) Horizon 2020 project • DFG project: four Ph.D. theses (all by excellent female researchers)

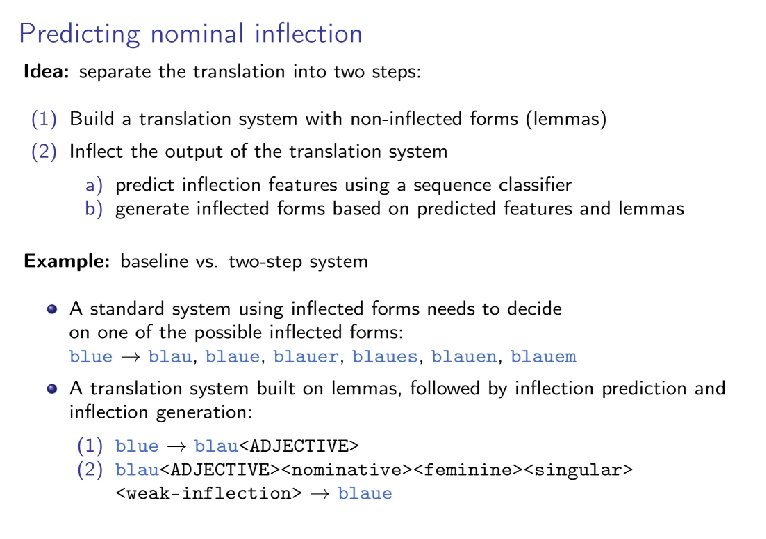

Full English-to-German linguistic pipeline • Use classifiers to predict the German word order of English clauses • Predict German verbal features like person, number, tense, aspect • Translate English words to German lemmas (with split compounds) • Create compounds by merging adjacent lemmas – Use a sequence classifier to decide where and how to merge lemmas into compounds • Determine how to inflect German noun phrases (and prepositional phrases) – Use a sequence classifier to predict nominal features – I'll discuss this part briefly
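The compound-creation step can be pictured as applying per-boundary merge decisions to a lemma sequence. In this Python sketch the decisions, including an optional linking element such as the "s" in Arbeitsmarkt, are assumed to come from the sequence classifier; the label format and the function name `merge_compounds` are hypothetical, and real merging must also handle other changes (e.g., Schule + Buch = Schulbuch drops a final -e).

```python
from typing import List, Optional

def merge_compounds(lemmas: List[str], links: List[Optional[str]]) -> List[str]:
    """Merge adjacent German lemmas into compounds.

    links[i] describes the boundary between lemmas[i] and lemmas[i + 1]:
    None means "do not merge", "" means "merge directly", and any other
    string is a linking element (Fugenelement) inserted at the boundary.
    """
    assert len(links) == len(lemmas) - 1, "need one decision per boundary"
    words = [lemmas[0]]
    for lemma, link in zip(lemmas[1:], links):
        if link is None:
            words.append(lemma)
        else:
            # Non-initial compound parts lose their capital letter.
            words[-1] = words[-1] + link + lemma.lower()
    return words

print(merge_compounds(["der", "Arbeit", "Markt"], [None, "s"]))  # ['der', 'Arbeitsmarkt']
print(merge_compounds(["Haus", "Tür"], [""]))                    # ['Haustür']
```

Keeping lemmas split during translation and merging afterwards means a compound never seen in the training data can still be produced from parts that were seen.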

Improving morphological generalization • German is a morphologically rich language • The morphology of German causes problems of sparsity • For instance, new German compounds are created every day!

Results and outlook • The pipeline morphosyntax approach results in robust improvements on standard tasks (such as political/news) – See: Fraser et al. EACL 2012, Weller et al. ACL 2013, Cap et al. EACL 2014, and four more *ACL papers • Also improvements on medical translation tasks – HimL: EU Horizon 2020 Innovation Action with Edinburgh and Prague – Worked on consumer health information and deployed systems at two non-profits – Links up with my pre-Ph.D. past: ICTs and health information in developing countries at SatelLife • No time to talk about: – We also implemented joint inference directly in the Moses PBSMT decoder (ACL and EACL papers) – Joint inference over morphology and word stems in Neural Machine Translation, with large gains in performance (ACL and ACL WMT papers) – A related idea on linguistic segmentation won a shared task at the prestigious ACL Conference on Machine Translation in 2017

3. Domain adaptation for MT • MT works well when translating sentences from the same domain as the parallel training data • What about new domains? • In domains like consumer health or medicine, we have little or no parallel data – How can we deal with this problem? • I organized a "summer workshop" (= crash research project, 13 people for 6 weeks) at Johns Hopkins on this topic – Co-organizers: Hal Daumé (Maryland), Marine Carpuat (National Research Council Canada), Chris Quirk (Microsoft Research) • I was awarded an ERC Starting Grant in panel PE6 (Computer Science) to continue this work and try a number of new approaches to this problem – I will present our work on "Document as Domain" in some detail – Then I will quickly present the other of our two main areas, mining from comparable corpora, which helps to set up "Unsupervised MT"

ERC StG: Domain Adaptation for MT • My ERC grant is on domain adaptation for MT • Traditional domain adaptation techniques in SMT and NMT have used the corpus as a proxy for domain • If we have plentiful parallel data in the legal domain, we can translate legal documents • But what if we do not have such data?

Roadmap: Domain Adaptation • I will first briefly introduce NMT • Then I will contrast three approaches to domain adaptation • The running example is the translation of this English snippet into German: Input: … that is a beautiful seal • But first some basics (not our work!)

Transformer NMT - Encoder • The Transformer is the state-of-the-art sentence-level model for NMT • It has largely replaced formulations based on Recurrent Neural Networks • The left-hand side shows the encoder • Inputs are a sequence of words • The first layer is a set of word embeddings (one per word type) • This input is processed using 6 layers of feed-forward networks with attention • Attention allows the network to focus on what is important for each position • Graphic from Vaswani et al. 2017, p. 3
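The attention used in the Transformer is scaled dot-product attention, softmax(QKᵀ/√d_k)V (Vaswani et al. 2017). A minimal pure-Python sketch of a single head, leaving out the learned projections, multi-head splitting, residual connections, and batching of the real model; the optional `mask` argument is included so the same function also covers the decoder's masked self-attention.

```python
import math
from typing import List, Optional

Matrix = List[List[float]]

def softmax(scores: List[float]) -> List[float]:
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(Q: Matrix, K: Matrix, V: Matrix,
              mask: Optional[List[List[bool]]] = None) -> Matrix:
    """Single-head scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V.

    mask[i][j] == False hides key j from query i (each row needs at least one True).
    """
    d_k = len(K[0])
    output = []
    for i, q in enumerate(Q):
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d_k) for k in K]
        if mask is not None:
            scores = [s if mask[i][j] else -math.inf for j, s in enumerate(scores)]
        weights = softmax(scores)
        # Each output row is a weighted average of the value vectors.
        output.append([sum(w * v[c] for w, v in zip(weights, V)) for c in range(len(V[0]))])
    return output

# Self-attention over three toy 2-dimensional "word" vectors (Q = K = V = X).
X = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
print(attention(X, X, X))
```

Every position scores every other position, which is why attention cost grows quadratically with sequence length.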

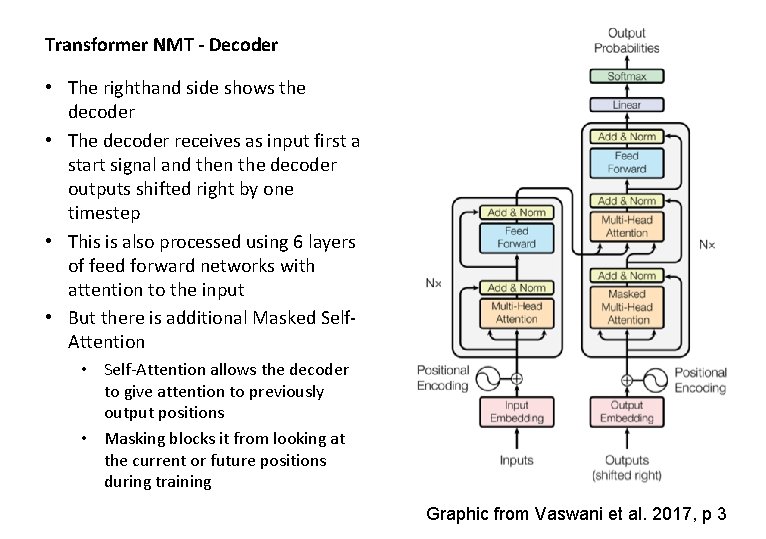

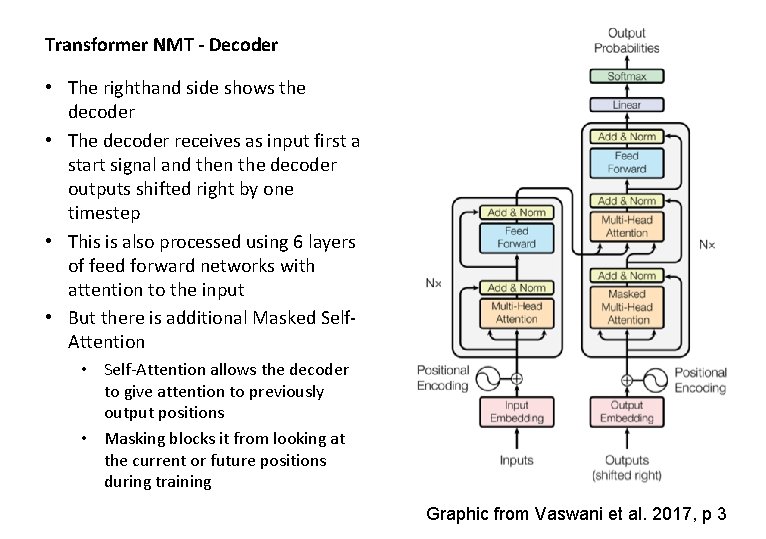

Transformer NMT - Decoder • The right-hand side shows the decoder • The decoder receives as input first a start signal and then the decoder outputs shifted right by one timestep • This is also processed using 6 layers of feed-forward networks with attention to the input • But there is additional Masked Self-Attention • Self-attention allows the decoder to attend to previously output positions • Masking blocks it from looking at the current or future positions during training • Graphic from Vaswani et al. 2017, p. 3
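The shifted input and the mask can be sketched together in a few lines of Python. The `<s>` start symbol and the function names are illustrative assumptions. With the input shifted right, position i sees only the start signal and outputs 0..i-1, so the word it is trained to predict at position i stays hidden from it.

```python
from typing import List

def shift_right(target: List[str], start: str = "<s>") -> List[str]:
    """Decoder input: start symbol followed by the target minus its last token."""
    return [start] + target[:-1]

def causal_mask(n: int) -> List[List[bool]]:
    """mask[i][j] is True if position i may attend to position j (j <= i)."""
    return [[j <= i for j in range(n)] for i in range(n)]

target = ["das", "ist", "ein", "Siegel"]
dec_in = shift_right(target)
mask = causal_mask(len(dec_in))
for i, word in enumerate(target):
    visible = [tok for tok, ok in zip(dec_in, mask[i]) if ok]
    print(f"predict {word!r} from {visible}")
# predict 'das' from ['<s>']
# predict 'ist' from ['<s>', 'das']
# predict 'ein' from ['<s>', 'das', 'ist']
# predict 'Siegel' from ['<s>', 'das', 'ist', 'ein']
```

This lets all positions be trained in parallel while still matching left-to-right generation at test time.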

No domain knowledge • … that is a beautiful seal. • … das ist ein schöner Seehund. • Looks great? • Here is some context: I asked the notary. She said that is a beautiful seal. • Try this in Google Translate – it gets it wrong!

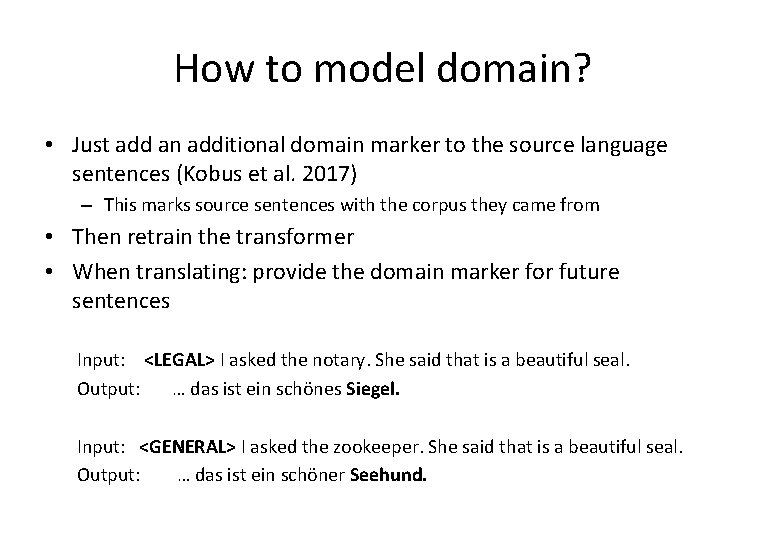

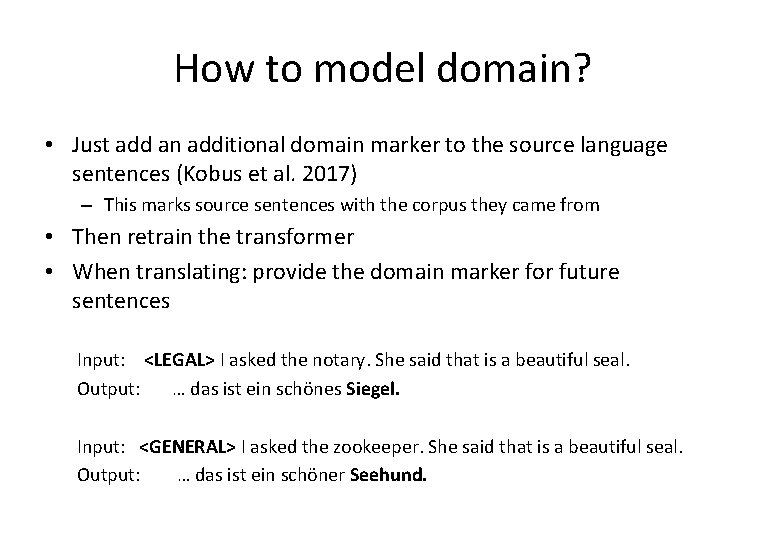

How to model domain? • Just add an additional domain marker to the source language sentences (Kobus et al. 2017) – This marks source sentences with the corpus they came from • Then retrain the transformer • When translating: provide the domain marker for future sentences Input: <LEGAL> I asked the notary. She said that is a beautiful seal. Output: … das ist ein schönes Siegel. Input: <GENERAL> I asked the zookeeper. She said that is a beautiful seal. Output: … das ist ein schöner Seehund.
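On the data side, domain tags amount to prefixing each source sentence with a pseudo-token naming its corpus. A minimal Python sketch; the tag spelling and function names are assumptions, and in practice the tag must also be part of the model's source vocabulary so that it is not split into subwords.

```python
from typing import Iterable, List, Tuple

def tag_source(sentence: str, domain: str) -> str:
    """Prefix a source sentence with a pseudo-token such as <LEGAL>."""
    return f"<{domain.upper()}> {sentence}"

def tag_corpus(pairs: Iterable[Tuple[str, str]], domain: str) -> List[Tuple[str, str]]:
    # Only the source side is tagged; the target side is left unchanged.
    return [(tag_source(src, domain), tgt) for src, tgt in pairs]

legal = [("that is a beautiful seal", "das ist ein schönes Siegel")]
print(tag_corpus(legal, "legal"))
# [('<LEGAL> that is a beautiful seal', 'das ist ein schönes Siegel')]
```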

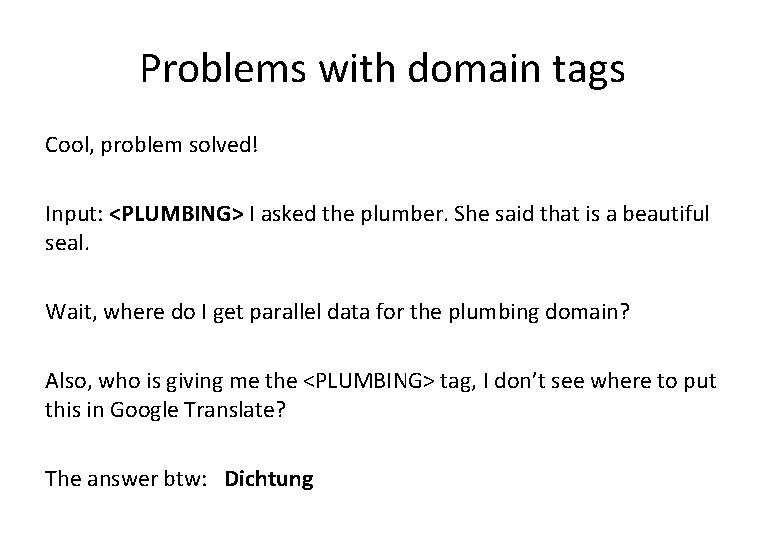

Problems with domain tags • Cool, problem solved! Input: <PLUMBING> I asked the plumber. She said that is a beautiful seal. • Wait, where do I get parallel data for the plumbing domain? • Also, who gives me the <PLUMBING> tag? I don't see where to put it in Google Translate! • The answer, by the way: Dichtung

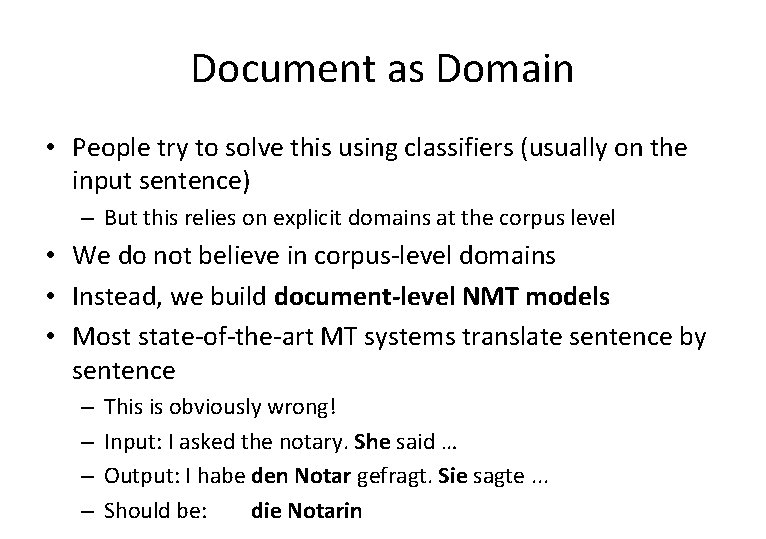

Document as Domain • People try to solve this using classifiers (usually on the input sentence) – But this relies on explicit domains at the corpus level • We do not believe in corpus-level domains • Instead, we build document-level NMT models • Most state-of-the-art MT systems translate sentence by sentence – This is obviously wrong! Input: I asked the notary. She said … Output: Ich habe den Notar gefragt. Sie sagte … Should be: die Notarin (the following "She" shows that the notary is female)
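A document-level model needs every sentence paired with its surrounding context. This Python sketch pairs each sentence with up to `k` preceding sentences from the same document; the window size, the restriction to preceding sentences, and the function name are assumptions, and the context actually used by Stojanovski and Fraser may differ.

```python
from typing import List, Tuple

def with_context(document: List[str], k: int = 2) -> List[Tuple[List[str], str]]:
    """Return (context, sentence) pairs using up to k previous sentences as context."""
    return [(document[max(0, i - k):i], sent) for i, sent in enumerate(document)]

doc = ["I asked the notary.", "She said that is a beautiful seal."]
for context, sentence in with_context(doc):
    print(context, "->", sentence)
# [] -> I asked the notary.
# ['I asked the notary.'] -> She said that is a beautiful seal.
```

With "I asked the notary." in the context, the model has a chance to pick both "Siegel" and the female form "Notarin".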

Document-level Domain Adaptation for NMT • We would like to condition the translation of all words on their document-level context • The baseline model does this very well within single sentences – However, attention is quadratic in the sentence length, so we can't simply treat a document as one long sentence! • We have existing work on pronoun translation: Input: That is a beautiful dog. It ran away. Output: … Er … (because "Hund" is masculine) • New idea: model domain at the document level

Domain Adaptation Without Knowing the Domain • We work with two models here; I will present these on the next slide • The encoder shown on the right is from our document-level NMT model, which we originally proposed for pronoun translation in 2019 • Its right-hand part is almost the same encoder I already explained • Its left-hand part encodes the context (the sentences in the document that we are not currently translating) • The first 5 layers are shared • The two representations are combined using a gate • (There is also a decoder version of this, not presented) • Stojanovski and Fraser 2019, p. 3
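A gate of this kind can be sketched per position as g = σ(W[h; c] + b) and h' = g ⊙ h + (1 − g) ⊙ c, where h is the sentence representation and c the context representation. This is a common formulation of gated context combination and an assumption here, not necessarily the exact parameterization in the paper; the weights are passed in explicitly rather than learned.

```python
import math
from typing import List

Vector = List[float]

def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))

def gate_combine(h: Vector, c: Vector, W: List[Vector], b: Vector) -> Vector:
    """Combine sentence encoding h and context encoding c with a gate.

    W has one row per output dimension and len(h) + len(c) columns.
    """
    hc = h + c  # list concatenation gives [h; c]
    g = [sigmoid(sum(w * x for w, x in zip(row, hc)) + bias) for row, bias in zip(W, b)]
    # g close to 1 keeps the sentence encoding, g close to 0 takes the context.
    return [gi * hi + (1.0 - gi) * ci for gi, hi, ci in zip(g, h, c)]

# With zero weights the gate is 0.5 everywhere, i.e. a plain average.
print(gate_combine([1.0, 2.0], [3.0, 4.0], W=[[0.0] * 4, [0.0] * 4], b=[0.0, 0.0]))  # [2.0, 3.0]
```

Because the gate is computed from both inputs, the model can decide per dimension and per word how much document context to use.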

Domain Adaptation Without Knowing the Domain • First model: • At the word level, add a document embedding • This is part of the input embedding • This is motivated by Kobus's domain tags, but we learn it end-to-end (like the embedding layer) • We use no knowledge of domain/corpus • Second model (not shown): • Create a summarized representation of the document using max pooling over windows of 10 words for all context sentences • This effectively combines the contextual word embeddings • Also trained end-to-end, also with no knowledge of domain/corpus • Stojanovski and Fraser 2019, p. 3
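Both document representations can be sketched with plain Python lists. The first function adds one document vector to every word embedding (first model); the second max-pools contextual word vectors over consecutive windows of 10 words (second model). Non-overlapping windows and the function names are assumptions; in the actual models all vectors are produced and trained end-to-end.

```python
from typing import List

Vector = List[float]

def add_document_embedding(word_embs: List[Vector], doc_emb: Vector) -> List[Vector]:
    """First model: add the same document embedding to each word embedding."""
    return [[w + d for w, d in zip(emb, doc_emb)] for emb in word_embs]

def windowed_max_pool(context_vecs: List[Vector], window: int = 10) -> List[Vector]:
    """Second model: element-wise max over consecutive windows of context words."""
    pooled = []
    for start in range(0, len(context_vecs), window):
        chunk = context_vecs[start:start + window]
        pooled.append([max(dims) for dims in zip(*chunk)])
    return pooled

# 25 toy 2-dimensional context vectors are summarized by 3 pooled vectors.
context = [[float(i), float(-i)] for i in range(25)]
print(windowed_max_pool(context))  # [[9.0, 0.0], [19.0, -10.0], [24.0, -20.0]]
```

Pooling shrinks the context the encoder must attend over by roughly the window size, which keeps document-level modeling affordable.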

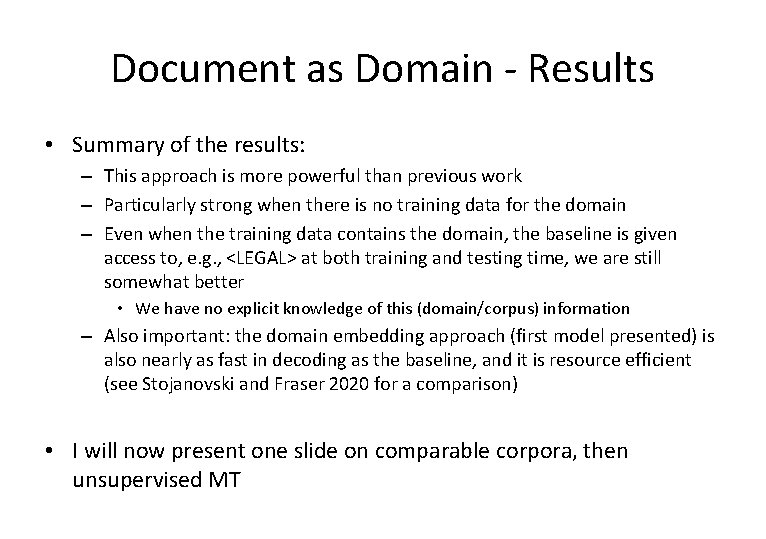

Document as Domain - Results • Summary of the results: – This approach is more powerful than previous work – Particularly strong when there is no training data for the domain – Even when the training data contains the domain and the baseline is given access to the domain tag (e.g., <LEGAL>) at both training and test time, we are still somewhat better • We use no explicit knowledge of this (domain/corpus) information – Also important: the domain embedding approach (the first model presented) is nearly as fast in decoding as the baseline, and it is resource-efficient (see Stojanovski and Fraser 2020 for a comparison) • I will now present one slide on comparable corpora, then unsupervised MT

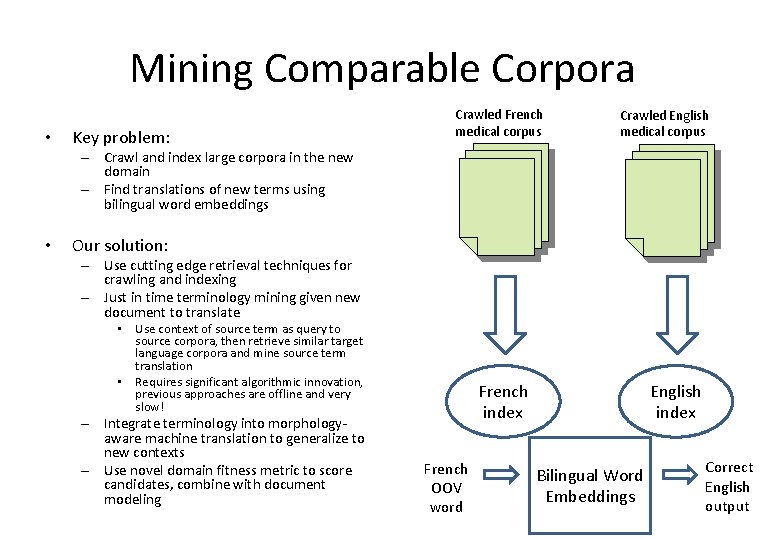

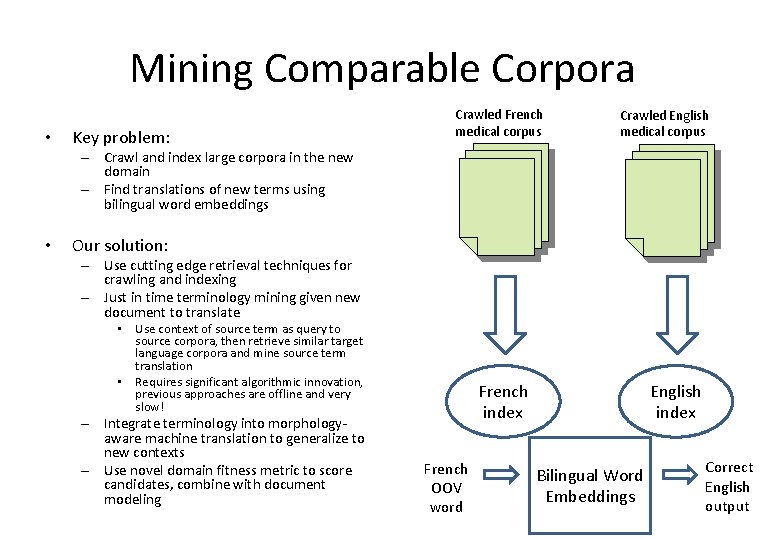

Mining Comparable Corpora • Key problem: – Crawl and index large corpora in the new domain – Find translations of new terms using bilingual word embeddings • Our solution: – Use cutting-edge retrieval techniques for crawling and indexing – Just-in-time terminology mining given a new document to translate • Use the context of the source term as a query to the source corpora, then retrieve similar target-language corpora and mine the translation of the source term • Requires significant algorithmic innovation: previous approaches are offline and very slow! – Integrate terminology into morphology-aware machine translation to generalize to new contexts – Use a novel domain fitness metric to score candidates, combined with document modeling • (Figure labels: crawled French medical corpus, crawled English medical corpus, French index, French OOV word, bilingual word embeddings, English index, correct English output)
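Finding translations with bilingual word embeddings comes down to nearest-neighbour search by cosine similarity in a shared space. The three-dimensional vectors below are invented toy values, not trained embeddings; real systems use large vocabularies and often refined retrieval criteria, and combine this score with the context-based mining described above.

```python
import math
from typing import Dict, List, Tuple

Vector = List[float]

def cosine(u: Vector, v: Vector) -> float:
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))

def translate_candidates(src_vec: Vector, tgt_emb: Dict[str, Vector],
                         k: int = 2) -> List[Tuple[str, float]]:
    """Rank target words by cosine similarity to a source word in the shared space."""
    scored = [(word, cosine(src_vec, vec)) for word, vec in tgt_emb.items()]
    return sorted(scored, key=lambda item: item[1], reverse=True)[:k]

# Toy vectors, assumed to be already mapped into one bilingual space.
english = {
    "kidney": [0.9, 0.1, 0.0],
    "liver": [0.7, 0.6, 0.1],
    "car": [0.0, 0.2, 0.9],
}
french_oov = [0.88, 0.15, 0.02]  # hypothetical vector for the French word "rein"
for word, score in translate_candidates(french_oov, english):
    print(f"{word}: {score:.3f}")
```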

Results • We have extensive work on mining translations of terms that are translated by meaning, i.e., semantically (e.g., Hangya and others; publications at NAACL, ACL) • Mostly lightly supervised systems • We already have systems for unsupervised mining of: – Translations of words that are transliterated (Sajjad's Ph.D. thesis and several *ACL papers) – Parallel sentences from comparable corpora (first paper on supervised extraction in 2004 with Munteanu; our 2018 publications present the first unsupervised extraction approach) – Out-of-vocabulary (OOV) words, i.e., words that we need to translate but that are not in our training data (Huck et al. ACL 2019) • Our success here leads us directly to the fourth problem

Unsupervised Machine Translation I • Wouldn't it be great to translate into languages for which no large amounts of translations into any language are available? • For instance, minority languages • Take the case of Upper Sorbian (spoken in Upper Lusatia, the Oberlausitz, in eastern Germany) – Can we build MT from German to Upper Sorbian without parallel data?

Unsupervised Machine Translation II • The answer is yes! – We can use unsupervised techniques to create bilingual word embeddings between Upper Sorbian and German – Use these to generate rough translations of real Upper Sorbian sentences into (broken, noisy) German sentences – Then build a neural machine translation system that is robust to noise in the input, to translate real German into real Upper Sorbian – We can iterate translation in both directions to further improve the system
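Robustness to broken input is often obtained by training on artificially corrupted source sentences. The Python sketch below applies word dropout and a local shuffle in which no word moves more than k positions, in the style of Lample et al. (2018); whether this group uses exactly this noise model is an assumption, and the parameter values are illustrative only.

```python
import random
from typing import List, Optional

def add_noise(tokens: List[str], p_drop: float = 0.1, k: int = 3,
              rng: Optional[random.Random] = None) -> List[str]:
    """Corrupt a sentence with word dropout and a bounded local shuffle."""
    rng = rng or random.Random()
    # Word dropout: remove each token independently with probability p_drop.
    kept = [t for t in tokens if rng.random() >= p_drop]
    # Local shuffle: sort by index + U[0, k + 1), so no token moves more than k places.
    keys = [i + rng.random() * (k + 1) for i in range(len(kept))]
    order = sorted(range(len(kept)), key=lambda i: keys[i])
    return [kept[i] for i in order]

print(add_noise("er hat gestern ein spannendes Buch gelesen".split(), rng=random.Random(1)))
```

Training the German-to-Upper-Sorbian system on noisy German inputs like these pushes it to tolerate the imperfect German produced by word-by-word translation.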

Unsupervised Machine Translation III • We have fairly good systems already • We are working on: – Improving bilingual word embeddings – Increasing robustness to noise – Scaling of GPU training – Implementing semi-supervised pipelines – Pretraining using transformer language models (like mBERT) – Many other ideas! • I am the main organizer of the shared task on this at the ACL Conference on Machine Translation this year • Finally, this also has important implications for building cross-lingual systems – We have state-of-the-art Spanish sentiment analysis built using only English training data! (Hangya et al. ACL 2018) – Also ongoing work on detecting hate speech

Summary • I've presented four areas where I am already a well-known leader: – Statistical modeling of translation – Syntax and morphology for MT – Domain adaptation for MT – Unsupervised machine translation

Scientific Outlook I • I will continue work on new models for MT – In particular, there are significant computational hurdles as we make models more complex – There are aspects of Big Data and scientific computation that are highly relevant • I'm also excited about the possibilities to shift modalities – Speech and image processing are both clear areas of interest – We are all using similar models now!

Scientific Outlook II • I'm also interested in linguistic levels going beyond morphology and syntax (semantics, discourse) • At the semantics level I have several collaborations • At the discourse level, we are working on coreference – We have work on translating pronouns in subtitles (this is a spoken genre) – It would be interesting to expand this to use images/video • There is a lot of potential to collaborate here – Better linguistic and situational knowledge -> better translation • I'd also like to continue applying our techniques to new domains and languages – For instance, continue work on bio / medical / consumer health – Work on new low-resource languages (e.g., Hiligaynon), and also historical languages

Scientific Outlook III • Finally, I am interested in deploying MT in human translation pipelines – Computer-aided Translation (CAT) – Massively multilingual content management. For instance, can we keep an encyclopedia (Wikipedia) in sync in multiple languages? – Creative literary translation (and improving the life of literary translators) • Enabling CAT in a domain-adaptation scenario is a long-time interest of mine • I expect to have to do significant modeling of how humans work with a translation workbench – How can we offer suggestions without disrupting their flow? – See, for example, Michael Carl's work on using eye-trackers • This is an area where I see a lot of potential for working with a mix of people experienced in intelligent interfaces and user modeling

Thank You! • Thanks for your attention • Credits to my entire team, thank you! • Contact: fraser@cis.lmu.de • (or see my webpage for current and former team members; all publications are available there)