Towards a Tracking Machine Learning challenge: status

- Slides: 23

Towards a Tracking Machine Learning challenge: status

David Rousseau (ATLAS-LAL) and Andreas Salzburger (CERN), with Paolo Calafiura (LBNL), David Clark (LBNL), Markus Elsing (CERN), Armin Farbin (UTA), Cécile Germain (LAL/LRI), Isabelle Guyon (ChaLearn/LRI), Vincenzo Innocenti (CERN), Jean-Roch Vlimant (Caltech)

Simons DS@HEP Workshop, 6th July 2016

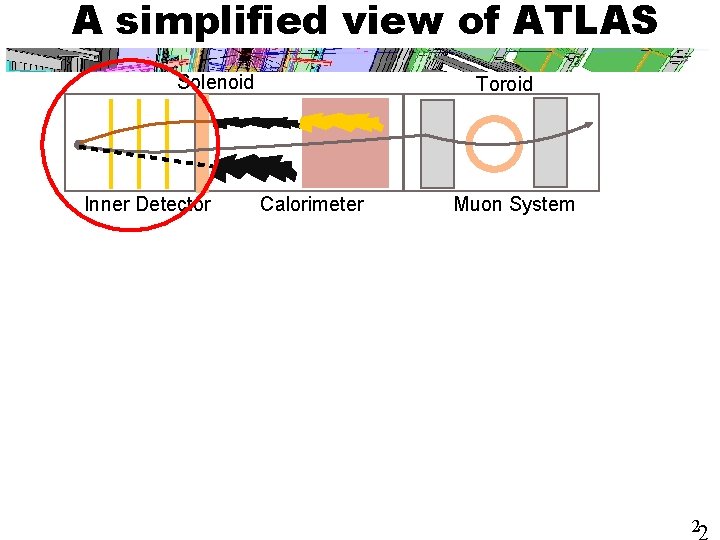

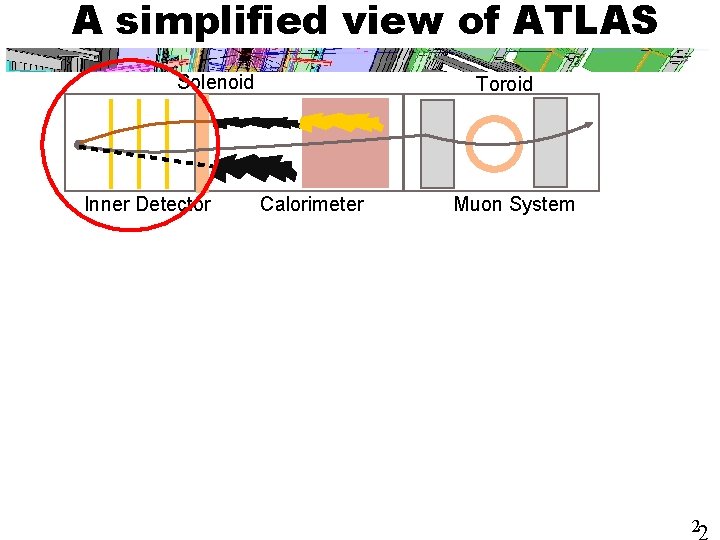

A simplified view of ATLAS

Figure labels: Solenoid, Inner Detector, Toroid, Calorimeter, Muon System

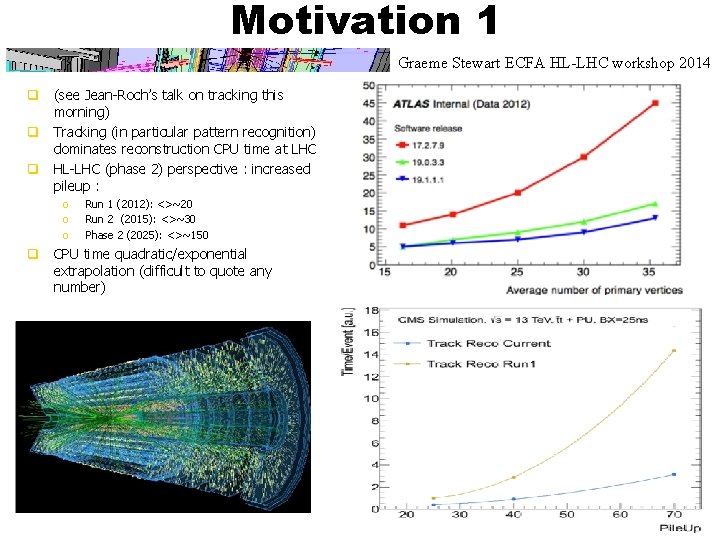

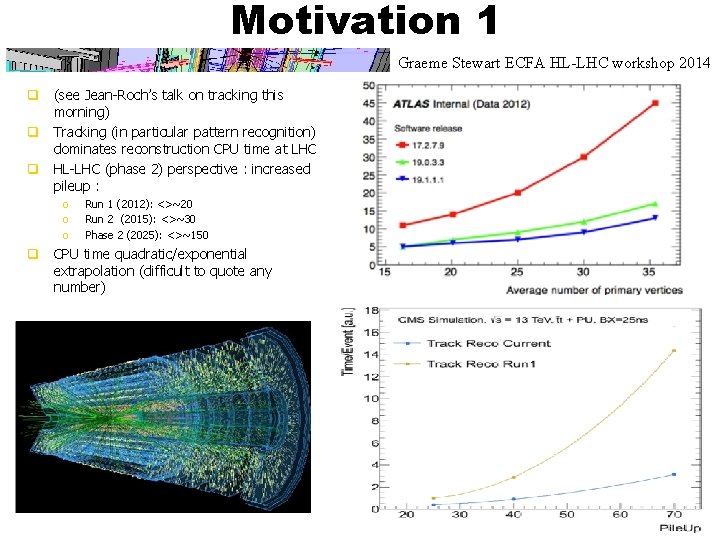

Motivation 1

(Figure credit: Graeme Stewart, ECFA HL-LHC workshop 2014)

- (See Jean-Roch's talk on tracking this morning)
- Tracking (in particular pattern recognition) dominates reconstruction CPU time at the LHC
- HL-LHC (Phase 2) perspective: increased pileup
  - Run 1 (2012): <μ> ~ 20
  - Run 2 (2015): <μ> ~ 30
  - Phase 2 (2025): <μ> ~ 150
- CPU time extrapolation is quadratic/exponential (difficult to quote any number)

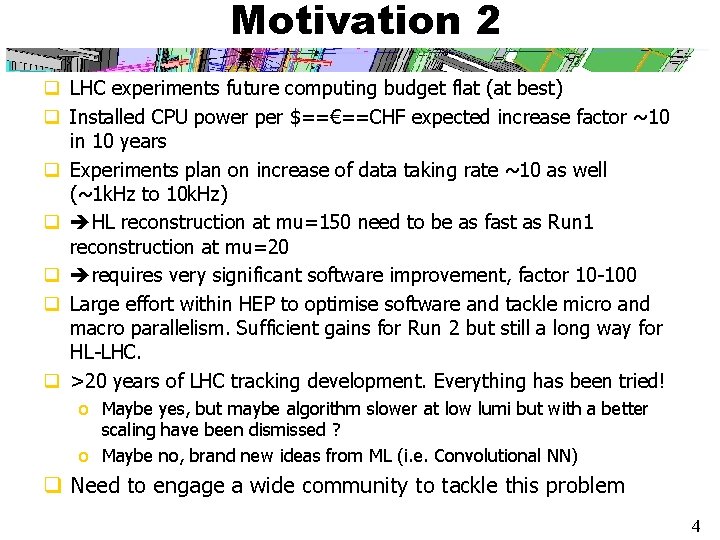

Motivation 2

- LHC experiments' future computing budget is flat (at best)
- Installed CPU power per $ = € = CHF is expected to increase by a factor ~10 in 10 years
- Experiments plan to increase the data-taking rate by ~10 as well (~1 kHz to 10 kHz)
- HL-LHC reconstruction at μ=150 needs to be as fast as Run 1 reconstruction at μ=20
- This requires very significant software improvement, a factor 10-100
- Large effort within HEP to optimise software and tackle micro and macro parallelism. Sufficient gains for Run 2, but still a long way to go for HL-LHC.
- >20 years of LHC tracking development. Has everything been tried?
  - Maybe yes, but maybe algorithms that are slower at low luminosity but scale better have been dismissed?
  - Maybe no: brand new ideas from ML (i.e. convolutional NN)
- Need to engage a wide community to tackle this problem
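The budget argument above is simple arithmetic, and a few lines make it explicit. The sketch below uses only the rough figures quoted on the slides. The linear and quadratic scaling laws are illustrative assumptions, since the slides themselves say the real extrapolation is difficult to quantify.

```python
# Back-of-the-envelope check of the CPU budget argument.
# Inputs are the rough figures quoted in the slides; the scaling laws
# (linear, quadratic in pileup) are illustrative assumptions only.

cpu_per_budget_gain = 10.0  # installed CPU per unit of money, ~x10 in 10 years
data_rate_gain = 10.0       # data-taking rate, ~1 kHz -> ~10 kHz
mu_run1 = 20.0              # average pileup in Run 1
mu_hl_lhc = 150.0           # average pileup expected at HL-LHC

# With a flat budget, CPU time available per event relative to Run 1.
per_event_budget_ratio = cpu_per_budget_gain / data_rate_gain

# How much busier each event is.
pileup_ratio = mu_hl_lhc / mu_run1

# Assumed growth of reconstruction time per event with pileup.
slowdown_linear = pileup_ratio
slowdown_quadratic = pileup_ratio ** 2


def required_speedup(slowdown, budget_ratio):
    """Software speed-up needed to stay within the per-event CPU budget."""
    return slowdown / budget_ratio


for label, slowdown in (("linear", slowdown_linear),
                        ("quadratic", slowdown_quadratic)):
    factor = required_speedup(slowdown, per_event_budget_ratio)
    print(f"{label} scaling: software must be ~{factor:.1f}x faster")
```

The hardware gain and the rate increase cancel, so the per-event budget stays at its Run 1 value. Under the quadratic assumption the required software gain is about 56, which sits inside the factor 10-100 range quoted above.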

Interacting with Machine Learners

- Suppose we want to improve the tracking of our experiment
- We (in HEP) read the literature, go to workshops, hear/read about an interesting technique (e.g. RANSAC, ConvNets…). Then:
  - Try to figure out by ourselves what can work, and start coding the traditional way
  - Better: find an expert in the new technique, have regular coffee/beer, get confirmation that the new technique might work, and get implementation tips
- …repeat with each technique…
- Much, much better:
  - Release a data set, with a figure of merit, and have the experts do the coding themselves
  - They have the software and the know-how, so they will be (much) faster even if they know nothing about our domain at the start
  - Engage multiple techniques and experts simultaneously, in a comparable way (e.g. 2000 people participated in the Higgs Machine Learning challenge)
  - Even better if people can collaborate
  - A challenge is a dataset with a buzz

Motivating Machine Learners

- (ML people in the room, please disagree…)
- Why would ML experts spend days/weeks/months working for free on our problem?
  - Interesting new problem (for them)
  - Potential for publications (beware of experiment policies)
  - Prestige
    - High Energy Physics, "CERN", "Higgs"
    - Kaggle: the HiggsML winner was hired by DeepMind, an XGBoost co-author got a US visa and a PhD grant
  - (money)
- The key is the dataset and associated material (figure of merit)
- The challenge is just a way to advertise the dataset, and organise the collaboration between experts
- The learning threshold to participate should be as low as possible (to entice the experts to spend time on our challenge rather than another):
  - Relatively easy for a classification problem
  - Less so for a tracking challenge (no off-the-shelf solution)
- In particular, things should be presented with ML vocabulary (e.g. "classifier" instead of "MVA", "feature" instead of "variable", "false positive" instead of "accepted background", etc.)

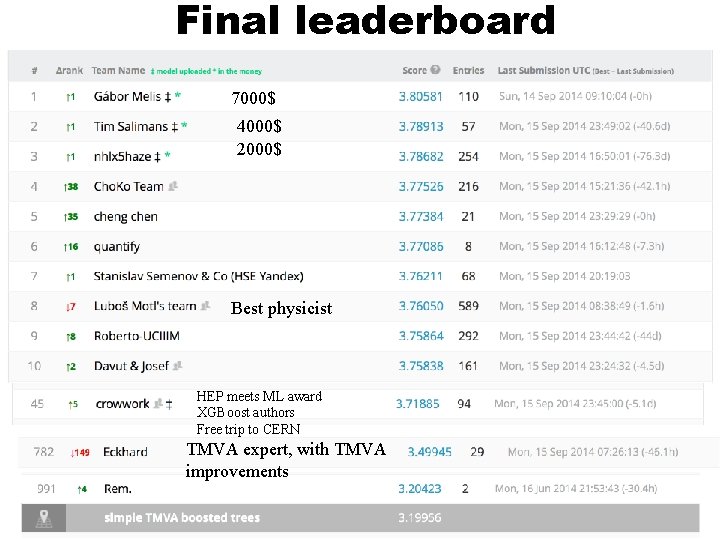

Higgs Machine Learning challenge

- See DR's talk at CTD 2015, Berkeley
- An ATLAS Higgs signal vs background classification problem, optimising statistical significance
- Ran in summer 2014
- 2000 participants (largest on Kaggle at that time)
- Outcome
  - Best significance 20% better than with TMVA
  - BDT was the algorithm of choice in this case, where the number of variables and the number of training events are limited (NN very slightly better but much more difficult to tune)
  - XGBoost is the best BDT on the market (quite widespread nowadays)
  - Wealth of ideas, documented in JMLR proceedings vol. 42
  - Still working out what works in real life and what does not
  - Raised awareness about ML in HEP

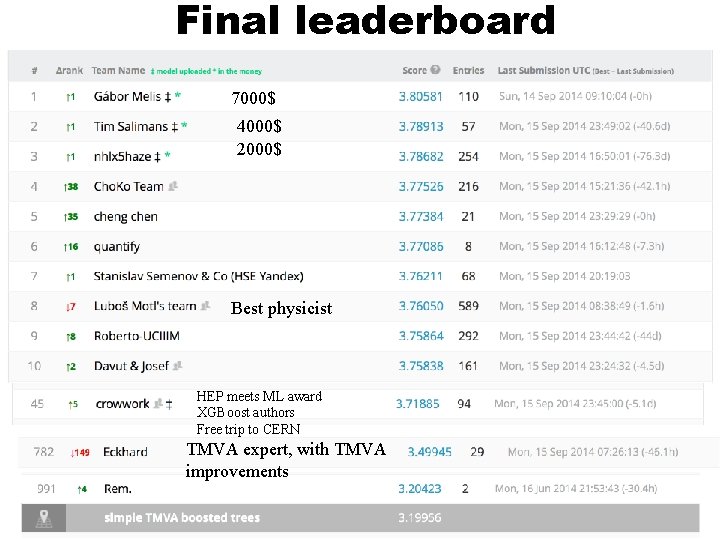

Final leaderboard

Figure annotations: 7000$, 4000$, 2000$; Best physicist; HEP meets ML award; XGBoost authors; Free trip to CERN; TMVA expert, with TMVA improvements

From domain to challenge and back

- Domain (e.g. HEP): domain experts solve the domain problem
- Challenge organisation: simplify the domain problem into a challenge problem (18 months?)
- Challenge: the crowd solves the challenge problem (4 months?)
- Reimport the challenge solution into the domain solution (>n months/years?)

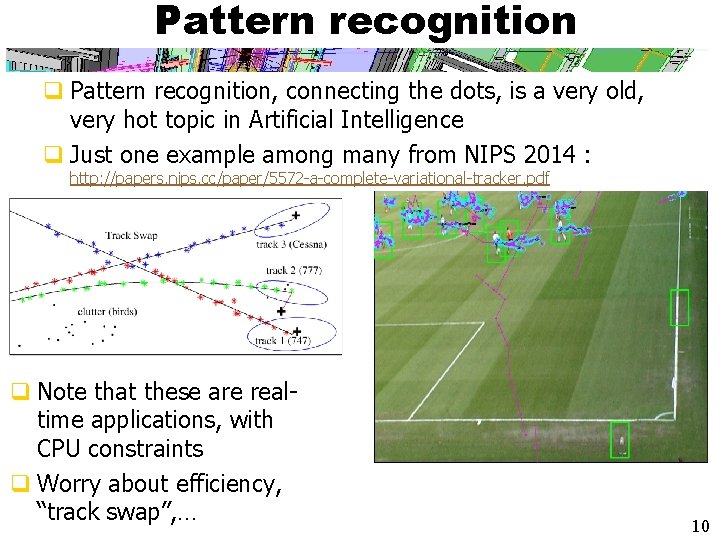

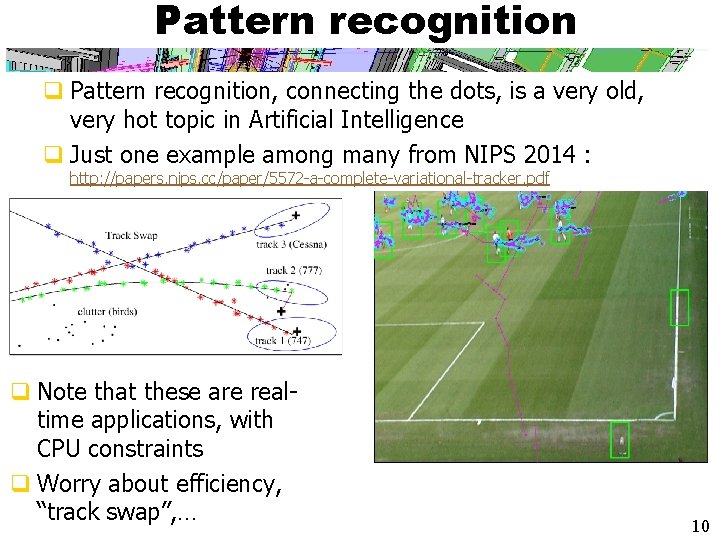

Pattern recognition

- Pattern recognition, connecting the dots, is a very old, very hot topic in Artificial Intelligence
- Just one example among many from NIPS 2014: http://papers.nips.cc/paper/5572-a-complete-variational-tracker.pdf
- Note that these are real-time applications, with CPU constraints
- Worry about efficiency, "track swap", …

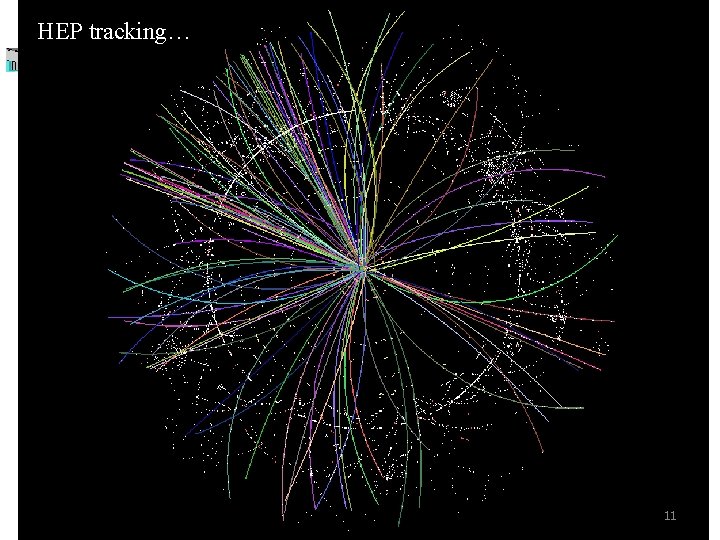

HEP tracking…

…fascinates ML experts (right?)

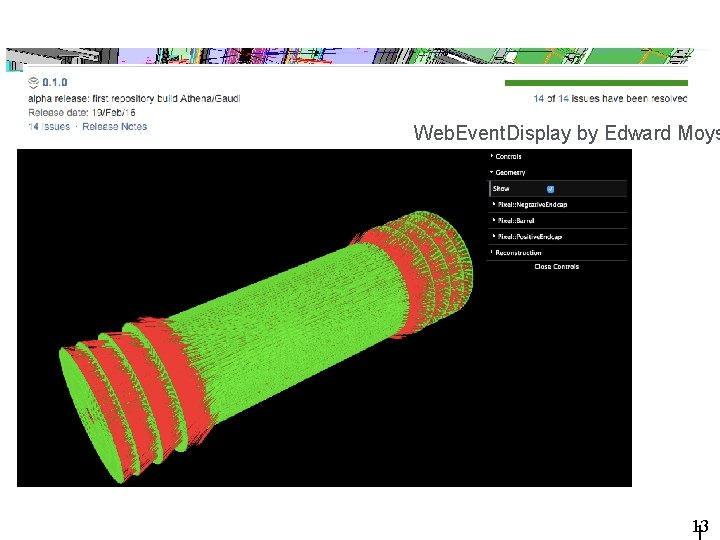

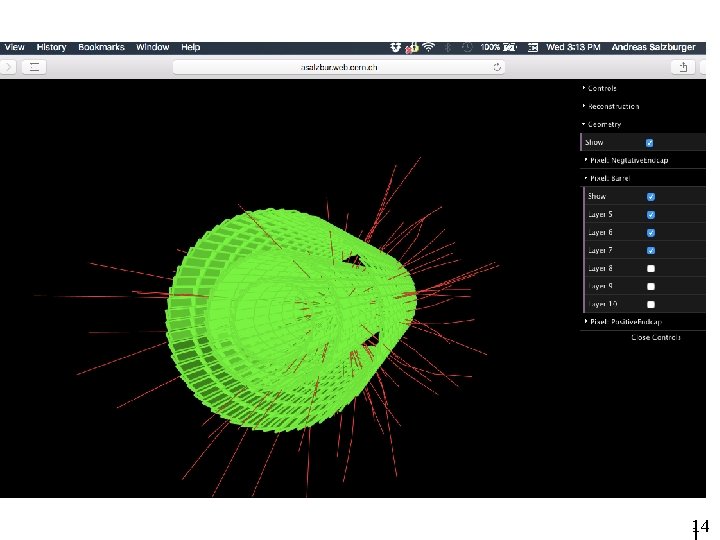

WebEventDisplay by Edward Moys

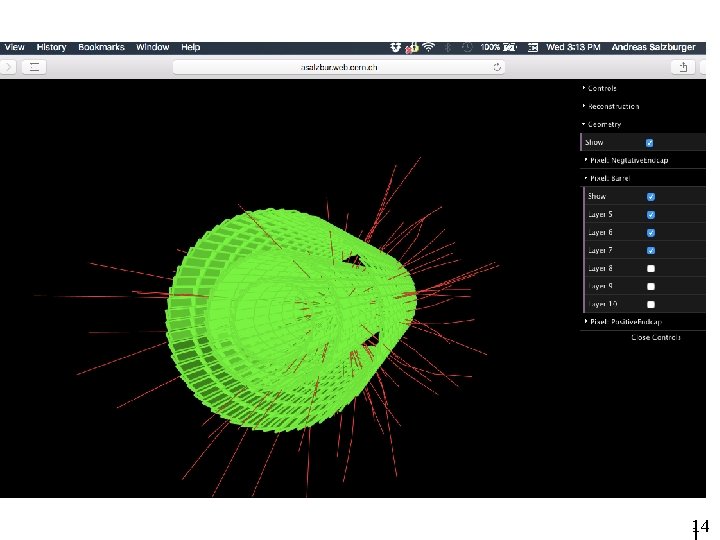


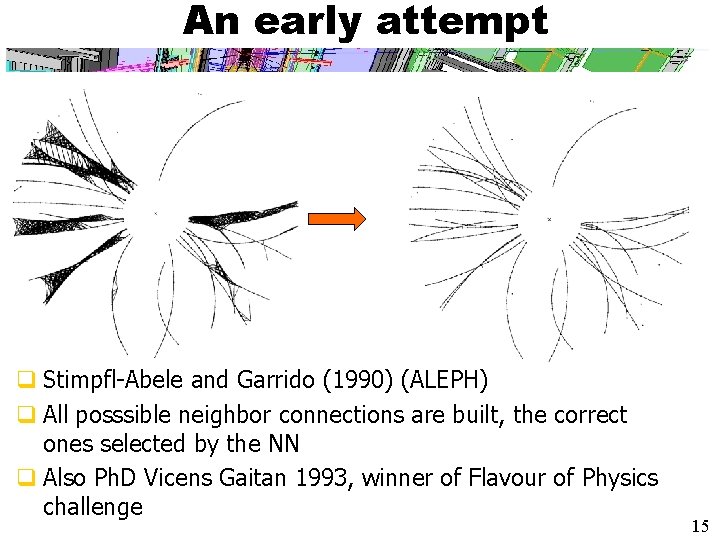

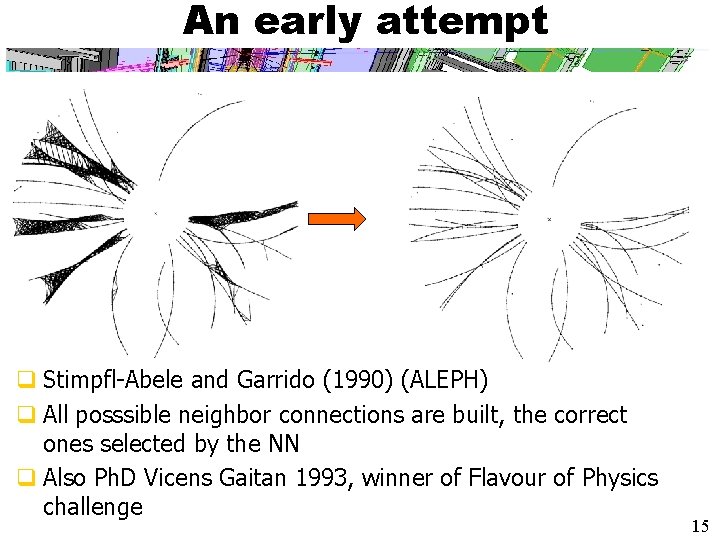

An early attempt

- Stimpfl-Abele and Garrido (1990) (ALEPH)
- All possible neighbor connections are built, and the correct ones are selected by the NN
- Also the PhD thesis of Vicens Gaitan (1993), winner of the Flavour of Physics challenge
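The first half of that idea, building candidate connections between neighboring hits, can be sketched in a few lines. This is a toy illustration only. The layer layout, the azimuthal window `max_dphi` and the function name are assumptions made for the example and are not taken from the 1990 paper, and the neural network that would select the correct connections is not shown.

```python
import math
from itertools import product


def build_neighbor_connections(hits_by_layer, max_dphi):
    """Build all candidate segments between hits on adjacent layers.

    hits_by_layer: list of layers (inner to outer), each a list of
                   (hit_id, phi) tuples with phi in radians.
    max_dphi:      hypothetical azimuthal window limiting what counts
                   as a "neighbor".
    Returns a list of (inner_hit_id, outer_hit_id) pairs.
    """
    segments = []
    for inner, outer in zip(hits_by_layer, hits_by_layer[1:]):
        for (id_a, phi_a), (id_b, phi_b) in product(inner, outer):
            dphi = abs(phi_a - phi_b)
            dphi = min(dphi, 2.0 * math.pi - dphi)  # handle wrap-around
            if dphi <= max_dphi:
                segments.append((id_a, id_b))
    return segments


# Toy event: two well separated tracks crossing three layers.
toy_layers = [
    [(0, 0.00), (1, 1.00)],
    [(2, 0.05), (3, 1.02)],
    [(4, 0.10), (5, 1.05)],
]
candidate_segments = build_neighbor_connections(toy_layers, max_dphi=0.2)
print(candidate_segments)
```

In a dense event most candidate segments are wrong combinations, and it is the selection step (the NN in the approach above) that has to reject them.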

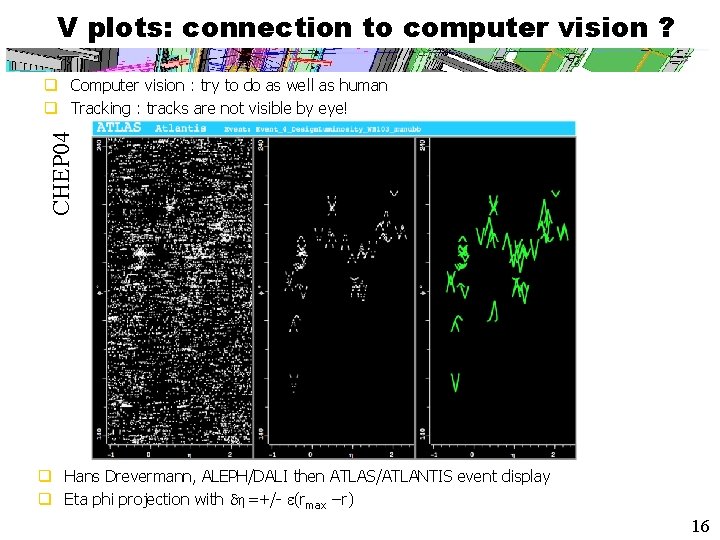

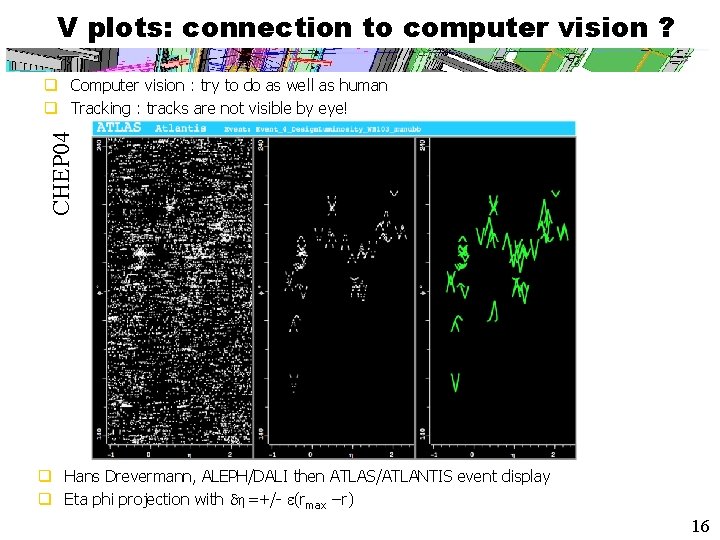

V plots: connection to computer vision?

(CHEP 04)

- Computer vision: try to do as well as a human
- Tracking: tracks are not visible by eye!
- Hans Drevermann, ALEPH/DALI then ATLAS/ATLANTIS event display
- η-φ projection with $\delta\eta = \pm\varepsilon\,(r_{\max} - r)$
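The projection formula can be made concrete with a small sketch. It assumes each hit is given as (r, φ, η) and is drawn twice, at η shifted by plus and minus ε(r_max − r). The function name and the input layout are hypothetical and chosen only for illustration.

```python
def v_plot_points(hits, r_max, epsilon):
    """Return the (phi, eta) points to draw for a V plot.

    hits:    list of (r, phi, eta) tuples (assumed input layout).
    r_max:   outer radius of the tracking volume.
    epsilon: scale factor of the eta displacement.
    Each hit yields two points, at eta -/+ epsilon * (r_max - r).
    """
    points = []
    for r, phi, eta in hits:
        d_eta = epsilon * (r_max - r)
        points.append((phi, eta - d_eta))
        points.append((phi, eta + d_eta))
    return points


# Two hits at the same (phi, eta): one halfway in, one at the outer radius.
toy_hits = [(0.5, 1.0, 0.2), (1.0, 1.0, 0.2)]
toy_points = v_plot_points(toy_hits, r_max=1.0, epsilon=0.1)
print(toy_points)
```

With this definition a hit at r_max is drawn as a single point, and hits at smaller radii open up symmetrically around it, which gives each track the "V" shape the plot is named after.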

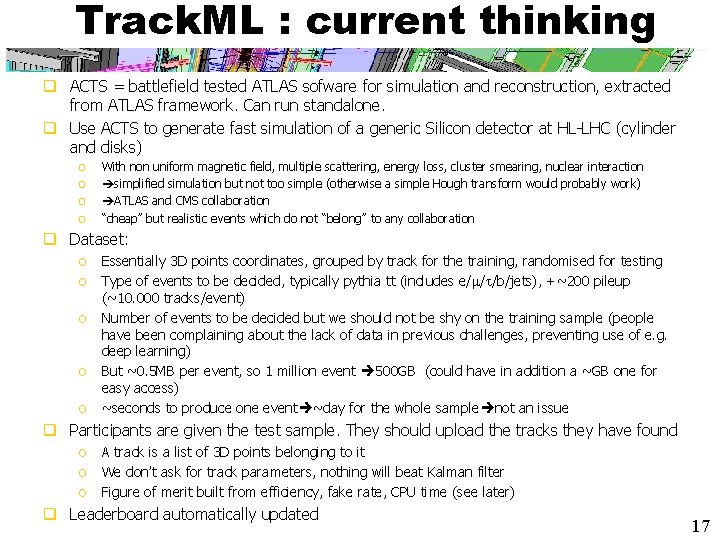

TrackML: current thinking

- ACTS = battlefield-tested ATLAS software for simulation and reconstruction, extracted from the ATLAS framework. Can run standalone.
- Use ACTS to generate a fast simulation of a generic silicon detector at HL-LHC (cylinders and disks)
  - With non-uniform magnetic field, multiple scattering, energy loss, cluster smearing, nuclear interactions
  - Simplified simulation, but not too simple (otherwise a simple Hough transform would probably work)
  - ATLAS and CMS collaboration
  - "Cheap" but realistic events which do not "belong" to any collaboration
- Dataset:
  - Essentially 3D point coordinates, grouped by track for the training, randomised for testing
  - Type of events to be decided, typically Pythia ttbar (includes e/μ/τ/b/jets) + ~200 pileup (~10,000 tracks/event)
  - Number of events to be decided, but we should not be shy about the training sample (people have been complaining about the lack of data in previous challenges, preventing the use of e.g. deep learning)
  - But ~0.5 MB per event, so 1 million events is 500 GB (could have in addition a ~GB one for easy access)
  - ~seconds to produce one event, ~day for the whole sample: not an issue
- Participants are given the test sample. They should upload the tracks they have found
  - A track is a list of the 3D points belonging to it
  - We don't ask for track parameters: nothing will beat a Kalman filter
  - Figure of merit built from efficiency, fake rate, CPU time (see later)
- Leaderboard automatically updated

Figure of merit

- We are more interested in CPU gain than in efficiency or fake rate reduction, provided they are "good enough"
  - Our current algorithms correctly find tracks at HL-LHC; they are just not fast enough, by factors
- The f.o.m. should favour the algorithm which is the most likely to become part of the HL-LHC ATLAS/CMS reconstruction
  - (even if we keep only the algorithm ideas and rewrite the software)
- Actually, efficiency (probability to find a track) is more important than fake rate (wrong association of points), because fakes can be removed later on by the final fit
- The bulk of the tracks are uninteresting ones!
- Need to give more weight in the evaluation to:
  - Higher momentum tracks
  - Tracks in dense jets
  - Tracks from displaced vertices, b, tau (not K0s, conversions?)
  - Electrons (?)
- Zero weight to very low pT tracks
- Most tracks from pileup vertices should still be found
- Current idea is to group tracks into categories; the f.o.m. retained would be the one in the worst category
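The "worst category" idea can be sketched as follows. This is a sketch under stated assumptions, not the challenge's figure of merit, which had not been defined at the time. The matching rule (more than half of the hits shared, in both directions), the category names and the weights are all hypothetical, and fake rate and CPU time are left out.

```python
def match_found_tracks(truth_tracks, reco_tracks, min_fraction=0.5):
    """Return the ids of truth tracks considered found.

    truth_tracks: {track_id: set of hit ids}
    reco_tracks:  list of sets of hit ids (the submission)
    Assumed rule: a truth track is found if a submitted track shares
    more than min_fraction of the hits of both tracks.
    """
    found = set()
    for reco in reco_tracks:
        for track_id, hits in truth_tracks.items():
            shared = len(reco & hits)
            if (shared > min_fraction * len(hits)
                    and shared > min_fraction * len(reco)):
                found.add(track_id)
    return found


def worst_category_efficiency(truth_info, found):
    """Weighted efficiency per category; the f.o.m. is the lowest one.

    truth_info: {track_id: (category, weight)}, zero weight allowed.
    Returns (figure_of_merit, {category: efficiency}).
    """
    total, passed = {}, {}
    for track_id, (category, weight) in truth_info.items():
        total[category] = total.get(category, 0.0) + weight
        if track_id in found:
            passed[category] = passed.get(category, 0.0) + weight
    efficiencies = {c: passed.get(c, 0.0) / t for c, t in total.items() if t > 0}
    if not efficiencies:
        raise ValueError("no category carries any weight")
    return min(efficiencies.values()), efficiencies


toy_truth = {1: {0, 1, 2, 3}, 2: {4, 5, 6, 7}, 3: {8, 9, 10, 11}}
toy_reco = [{0, 1, 2, 3}, {4, 5, 6, 20}, {8, 30, 31, 32}]
toy_info = {1: ("high_pt", 2.0), 2: ("dense_jet", 1.0), 3: ("dense_jet", 1.0)}

toy_found = match_found_tracks(toy_truth, toy_reco)
toy_fom, toy_eff = worst_category_efficiency(toy_info, toy_found)
print(toy_found, toy_fom, toy_eff)
```

Taking the minimum over categories means an algorithm cannot compensate for poor performance on, say, tracks in dense jets by doing very well on the bulk of easy tracks.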

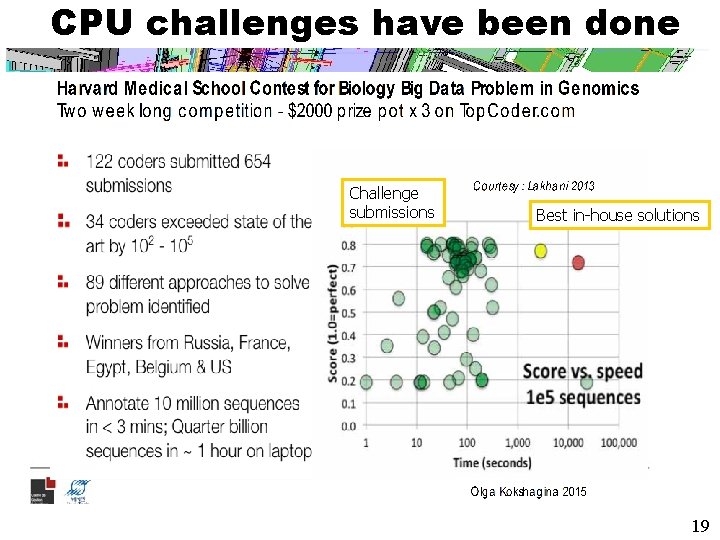

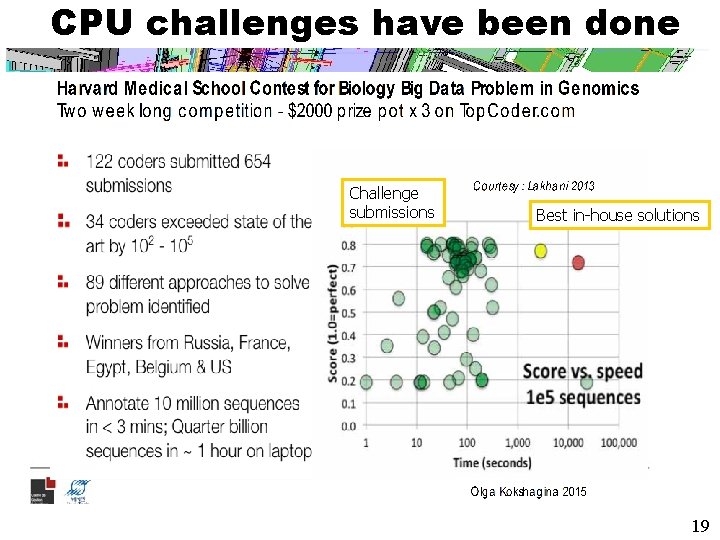

CPU challenges have been done

Figure labels: challenge submissions, best in-house solutions

CPU measurement

- Contrary to the HiggsML or Flavour of Physics challenges, we need to evaluate CPU time
  - CPU time to find the tracks
  - Cap on memory used (e.g. one x86-64 core with 2 GB)
  - Training time unlimited
- Some platforms (see AutoML, Codalab, Topcoder) now allow participants to upload software that is then compiled and run automatically
  - Well-defined hardware (CPU and memory available)
  - Uniform comparison
  - Could also use an Amazon instance
    - (Amazon could also host the large dataset and provide resources for the training)
- Positive side effect: limits the diversity of software languages and libraries
- We are more interested in the detailed algorithm (as it would be explained in a technical paper) than in the software itself (but we do want to see the software)
- We are more interested in new approaches than in super-optimised versions of old approaches
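As an illustration of what an evaluation harness might record, the sketch below times a participant's track-finding function and checks its peak memory against a cap. It is a simplification under stated assumptions. `find_tracks` and the result layout are hypothetical, `tracemalloc` only sees allocations made through Python and itself slows the code down, and a real platform would more likely enforce the limits at the operating-system or container level.

```python
import time
import tracemalloc


def measure(find_tracks, event, memory_cap_bytes):
    """Run find_tracks(event) and report CPU time and peak Python memory.

    find_tracks:      participant-supplied callable (hypothetical API).
    memory_cap_bytes: cap to compare the traced peak against.
    """
    tracemalloc.start()
    try:
        start = time.process_time()  # CPU time of this process, not wall time
        tracks = find_tracks(event)
        cpu_seconds = time.process_time() - start
        _current, peak_bytes = tracemalloc.get_traced_memory()
    finally:
        tracemalloc.stop()
    return {
        "tracks": tracks,
        "cpu_seconds": cpu_seconds,
        "peak_bytes": peak_bytes,
        "within_memory_cap": peak_bytes <= memory_cap_bytes,
    }


def trivial_finder(event):
    """Placeholder algorithm: put every hit into one single track."""
    return [list(event)]


report = measure(trivial_finder, [0, 1, 2], memory_cap_bytes=2 * 1024 ** 3)
print(report)
```

Only the track-finding call is timed, in line with the point above that training time is unlimited.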

Starting kit = all that we provide on top of the data set

- Difficult to get right: between PR and technical documentation
- Web pages (see current Kaggle challenges), videos…
- Document with HEP tracking for novices… guiding people to more complex algorithms, without scaring them
- Software which allows getting a very first solution in <1 hour, addressing different communities:
  - Jupyter notebook
  - Simple Python
  - scikit-learn
  - Caffe (e.g. David Clark's attempt: https://github.com/davidclark1/TrackNet)
  - ACTS nicely packaged
  - Etc.
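A "very first solution" in simple Python could be as naive as the sketch below, which groups hits by azimuthal angle. It is a deliberately crude baseline written for illustration. The hit layout `(hit_id, x, y, z)`, the function name and the bin count are assumptions, and the method treats tracks as straight lines from the origin, so it ignores the bending in the magnetic field and would do poorly on realistic events.

```python
import math
from collections import defaultdict


def phi_binning_baseline(hits, n_bins=360, min_hits=3):
    """Toy track finder: hits falling in the same phi bin form one track.

    hits: list of (hit_id, x, y, z) tuples (assumed layout).
    Returns a list of tracks, each a list of hit ids.
    """
    bins = defaultdict(list)
    for hit_id, x, y, _z in hits:
        phi = math.atan2(y, x)  # azimuthal angle in [-pi, pi]
        index = int((phi + math.pi) / (2.0 * math.pi) * n_bins) % n_bins
        bins[index].append(hit_id)
    return [ids for ids in bins.values() if len(ids) >= min_hits]


def straight_track(first_id, phi, radii=(1.0, 2.0, 3.0)):
    """Generate hits along a straight line from the origin (toy data)."""
    return [(first_id + i, r * math.cos(phi), r * math.sin(phi), 0.0)
            for i, r in enumerate(radii)]


toy_hits = straight_track(0, phi=0.5) + straight_track(3, phi=2.0)
baseline_tracks = phi_binning_baseline(toy_hits)
print(baseline_tracks)
```

The value of such a baseline is less its performance than the fact that it exercises the whole chain, from reading hits to producing a list of tracks that can be scored.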

Challenge sequencing

- Building collaborations is more important than the competition
- Strive to promote "coopetition" so that participants collaborate (tricky): for example, in the HiggsML challenge XGBoost was released very early and used by many participants
- Foresee long-term interaction between participants and HEP
- Dataset and evaluation should remain available after the challenge is done
- Think of a multi-step challenge, for example:
  - Isabelle Guyon proposes a two-step competition (so as not to overemphasise "technical" speed): a first step with only a minimum requirement on the CPU, where people should maximise the f.o.m., and a second step on algorithm optimisation
  - This allows us to reorient the challenge and adjust the figure of merit if we see it has been "hacked" (see the Flavour of Physics challenge)
  - Also to reshuffle the deck: best ideas exchanged between teams
  - Can also separate sub-tasks
- Platform to exchange code, e.g.:
  - Kaggle scripting
  - GitHub
  - RAMP (CDS Paris-Saclay hackathon platform)
- Can foresee several prizes for different categories
- Foresee releasing the sample publicly (e.g. CERN Open Data Portal) just after the challenge
- Foresee a publication outlet (e.g. a satellite NIPS workshop proceedings, as for HiggsML)
- Anticipate from the very beginning the final re-import stage

Conclusion

- Tracking Machine Learning challenge taking shape
- Broad lines are defined, but there is still a lot of work:
  - Converge on the pseudo-detector
  - Define the figure of merit (including CPU)
  - Write documentation
  - Starting kit software
  - (Many) development/test platforms
  - Challenge sequencing (one or several stages)
  - Budget (>>10 k€), sponsoring
  - Legal matters
  - Outreach, challenge publicity, social media
  - Post-challenge
  - Connection with the Common Tracking Forum (and HSF)
- We are now narrowing in on a v0 of the challenge
- Most important is what remains after the challenge