Towards a Scalable and Robust DHT
Baruch Awerbuch, Johns Hopkins University
Christian Scheideler, Technical University of Munich

Holy Grail of Distributed Systems
Scalability and robustness. Adversarial behavior is an increasingly pressing issue!

Why is this difficult?
Scalability: minimize the resources needed for operations.
Robustness: maximize the resources needed for an attack.
Scalable solutions seem to be easy to attack!

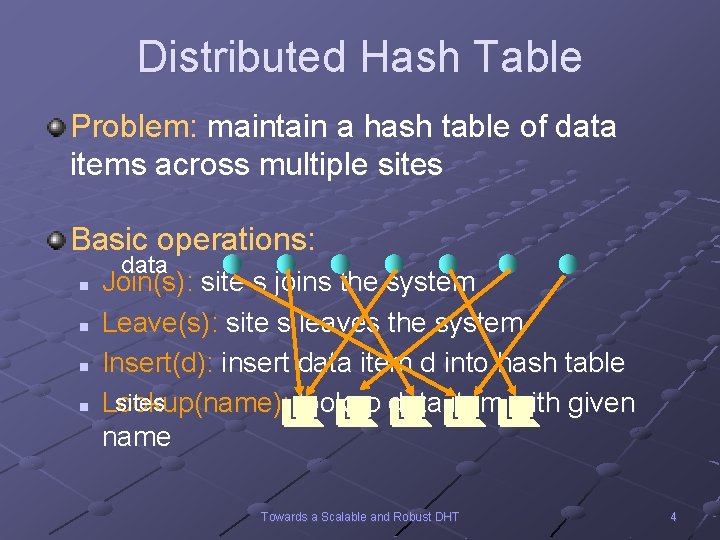

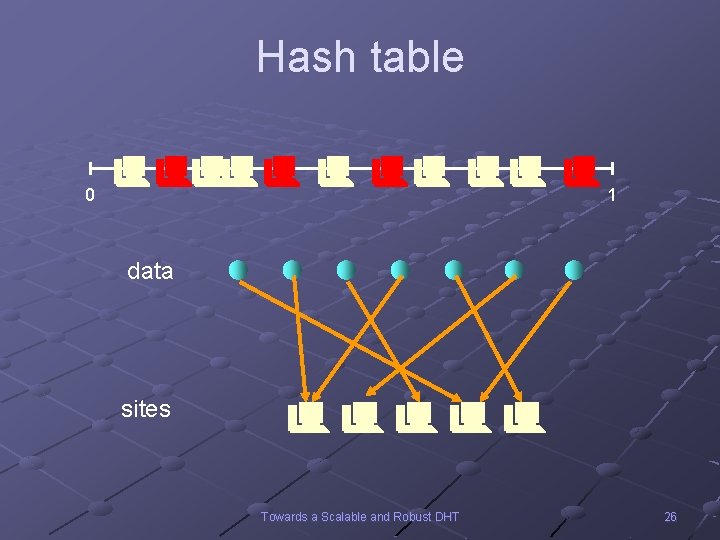

Distributed Hash Table
Problem: maintain a hash table of data items across multiple sites.
Basic operations:
- Join(s): site s joins the system.
- Leave(s): site s leaves the system.
- Insert(d): insert data item d into the hash table.
- Lookup(name): look up the data item with the given name.

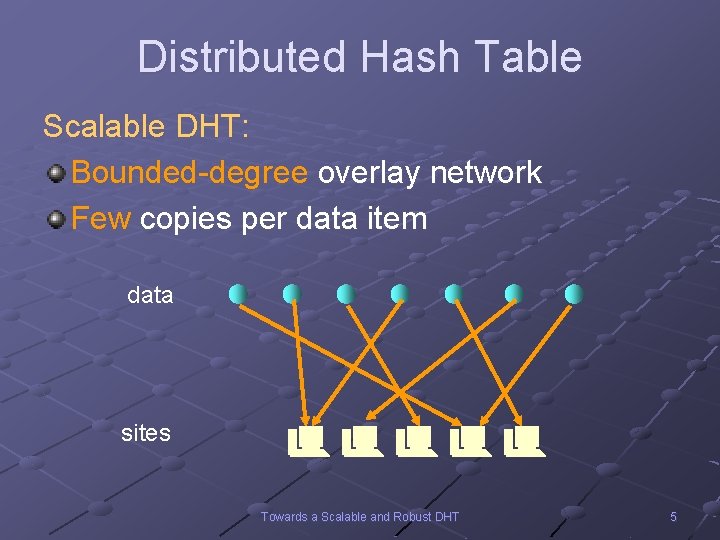

Distributed Hash Table
Scalable DHT:
- Bounded-degree overlay network.
- Few copies per data item.

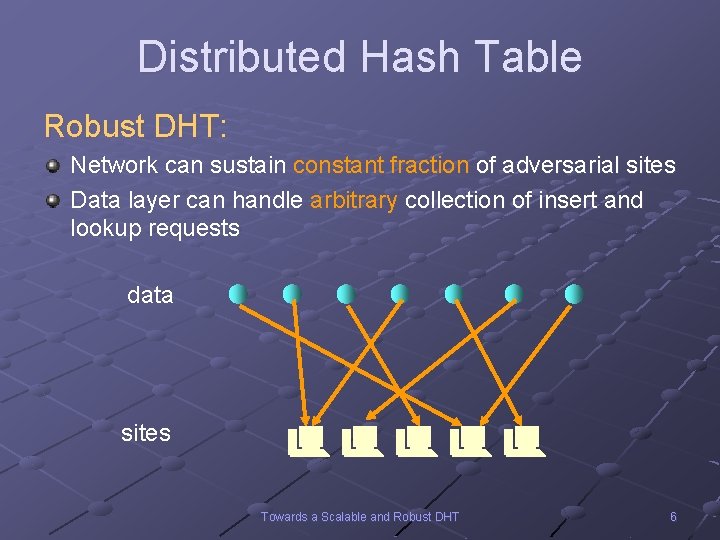

Distributed Hash Table
Robust DHT:
- The network can sustain a constant fraction of adversarial sites.
- The data layer can handle an arbitrary collection of insert and lookup requests.

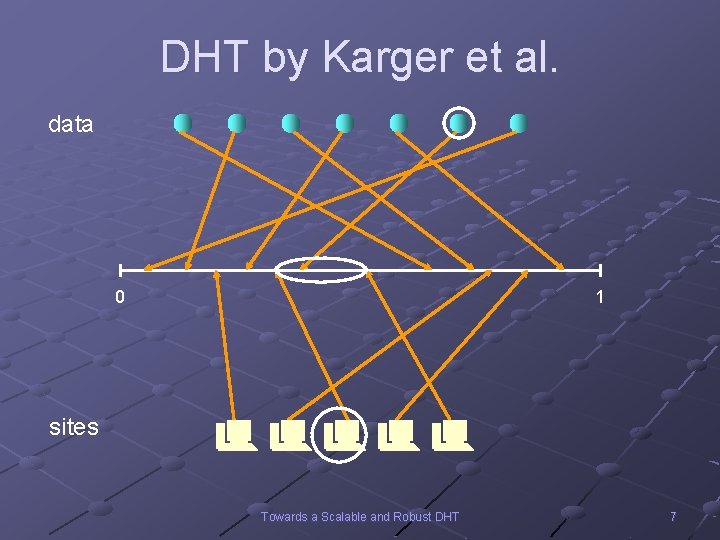

DHT by Karger et al.
(Figure: data items and sites mapped to points in [0, 1).)
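To make the four DHT operations concrete, here is a minimal single-process sketch in the spirit of Karger et al.'s consistent hashing, assuming that both sites and data items are hashed to points in [0, 1) and that an item is stored at the first site at or after its point (wrapping around). The class `ConsistentHashDHT`, the helper `point`, and the use of SHA-256 are illustrative choices, not taken from the slides; there is no networking, replication, or adversary here.

```python
import bisect
import hashlib


def point(key: str) -> float:
    """Map a name to a pseudo-random point in [0, 1) using the top 53 bits of SHA-256."""
    digest = hashlib.sha256(key.encode()).digest()
    return (int.from_bytes(digest[:8], "big") >> 11) / 2**53


class ConsistentHashDHT:
    """Toy model: sites and data items share the unit ring [0, 1).

    An item is stored at the first site whose point is at or after the
    item's point, wrapping around from 1 back to 0.
    """

    def __init__(self) -> None:
        self.points: list[float] = []        # sorted site points
        self.site_at: dict[float, str] = {}  # site point -> site name
        self.store: dict[str, dict] = {}     # site name -> {item name: value}

    def _owner(self, x: float) -> str:
        i = bisect.bisect_left(self.points, x) % len(self.points)
        return self.site_at[self.points[i]]

    def join(self, s: str) -> None:
        x = point(s)
        bisect.insort(self.points, x)
        self.site_at[x] = s
        self.store[s] = {}
        # Move over the items that now fall into the new site's arc.
        for other, items in self.store.items():
            if other == s:
                continue
            for name in [n for n in items if self._owner(point(n)) == s]:
                self.store[s][name] = items.pop(name)

    def leave(self, s: str) -> None:
        assert len(self.points) > 1, "the last site cannot leave in this toy model"
        x = point(s)
        self.points.remove(x)
        del self.site_at[x]
        # Hand the departing site's items to their new owner (its successor).
        for name, value in self.store.pop(s).items():
            self.store[self._owner(point(name))][name] = value

    def insert(self, name: str, value) -> None:
        self.store[self._owner(point(name))][name] = value

    def lookup(self, name: str):
        return self.store[self._owner(point(name))].get(name)


dht = ConsistentHashDHT()
for s in ["site-a", "site-b", "site-c"]:
    dht.join(s)
dht.insert("alice", 1)
dht.leave("site-b")
print(dht.lookup("alice"))  # 1
```

Only the items in one arc move when a site joins or leaves, which is what keeps these operations cheap; the slides' point is that this placement says nothing yet about adversarial sites.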

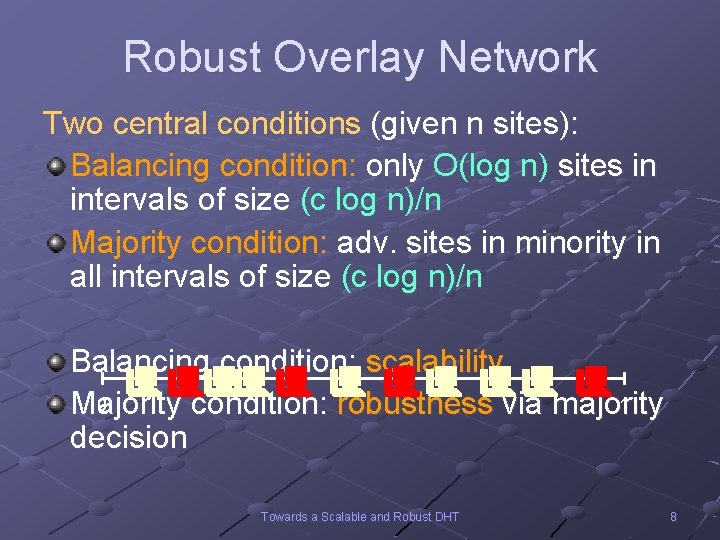

Robust Overlay Network
Two central conditions (given n sites):
- Balancing condition: only O(log n) sites in any interval of size (c log n)/n.
- Majority condition: adversarial sites are in the minority in all intervals of size (c log n)/n.
The balancing condition gives scalability; the majority condition gives robustness via majority decision.
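The two conditions can be checked directly for a given placement of nodes. The sketch below is a brute-force checker, assuming n is the number of honest nodes, a hypothetical constant c = 2, and a ring [0, 1) with half-open windows [a, a + L) where L = (c log n)/n. It returns the largest number of sites in any window (balancing) and whether adversarial sites are a strict minority in every window (majority). The function names are illustrative only.

```python
import math


def in_window(p: float, a: float, length: float) -> bool:
    """True if point p lies in the half-open window [a, a + length) on the ring [0, 1)."""
    return (p - a) % 1.0 < length


def check_conditions(honest, adversarial, c=2.0):
    """Return (max sites in any window, whether adversaries are a strict minority everywhere).

    Windows have size (c log n)/n with n = len(honest). The contents of
    [a, a + length) change only when a passes a node point p or p - length,
    so those left endpoints cover every distinct window (up to float rounding).
    """
    n = len(honest)
    length = c * math.log(n) / n
    nodes = list(honest) + list(adversarial)
    starts = {p % 1.0 for p in nodes} | {(p - length) % 1.0 for p in nodes}
    max_sites, majority_ok = 0, True
    for a in starts:
        h = sum(in_window(p, a, length) for p in honest)
        b = sum(in_window(p, a, length) for p in adversarial)
        max_sites = max(max_sites, h + b)
        if b >= h:  # adversarial sites are not in the minority in this window
            majority_ok = False
    return max_sites, majority_ok
```

For example, 500 evenly spaced honest nodes pass both checks, while adding 50 adversarial nodes at the same point breaks the majority condition in the window around that point.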

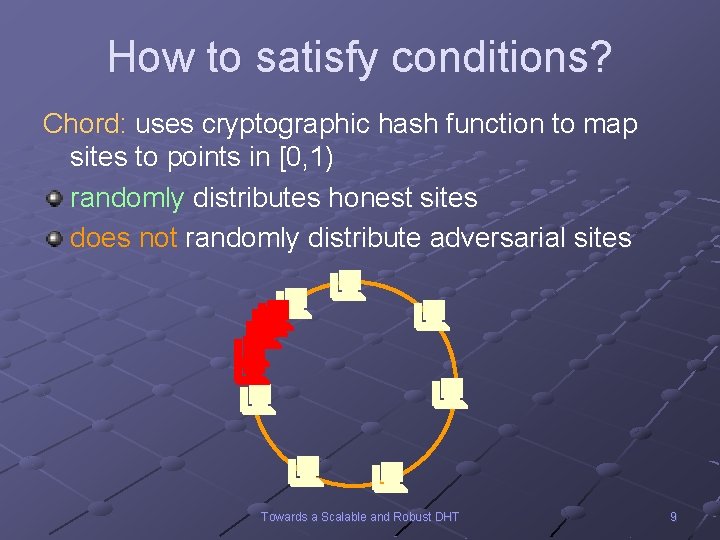

How to satisfy the conditions?
Chord uses a cryptographic hash function to map sites to points in [0, 1). This
- randomly distributes honest sites, but
- does not randomly distribute adversarial sites, since the adversary can pick site identities whose hashes land where it wants.
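Why hashing alone does not mix adversarial sites can be shown in a few lines. This is a hypothetical sketch, assuming identities can be chosen freely: `chord_point` hashes an identity to [0, 1) (SHA-256 here as a stand-in; Chord itself hashes into an m-bit identifier circle), and `grind_ids` plays an adversary that simply tries identities until enough of them land in a target interval. For an interval of width w, this costs about count / w hash evaluations, which is cheap for the attacker.

```python
import hashlib


def chord_point(site_id: str) -> float:
    """Chord-style placement: hash the identity to a point in [0, 1) (top 53 bits of SHA-256)."""
    digest = hashlib.sha256(site_id.encode()).digest()
    return (int.from_bytes(digest[:8], "big") >> 11) / 2**53


def grind_ids(lo: float, hi: float, count: int, prefix: str = "adv"):
    """Hypothetical attack: try identities until `count` of them hash into [lo, hi)."""
    found, tries = [], 0
    while len(found) < count:
        candidate = f"{prefix}-{tries}"
        tries += 1
        if lo <= chord_point(candidate) < hi:
            found.append(candidate)
    return found, tries


ids, tries = grind_ids(0.25, 0.26, 20)
print(len(ids), tries)  # roughly 2000 tries for 20 hits in an interval of width 0.01
```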

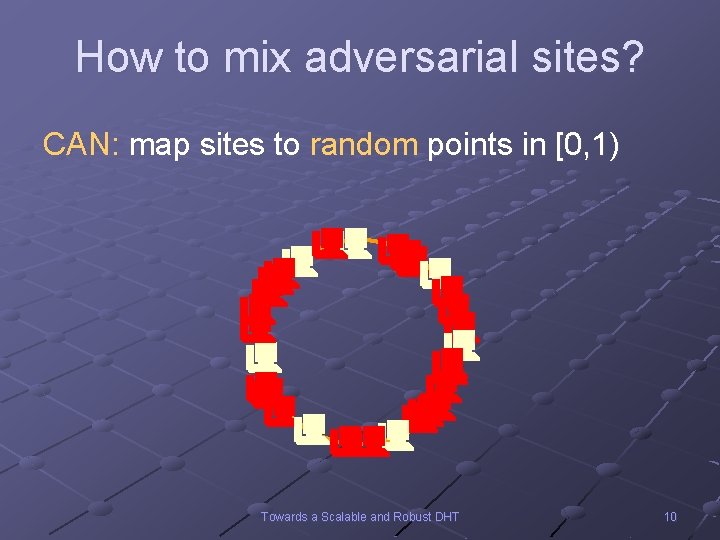

How to mix adversarial sites?
CAN: map sites to random points in [0, 1).


How to mix adversarial sites?
Group spreading [AS 04]: map sites to random points in [0, 1) and limit the lifetime of points. Too expensive!

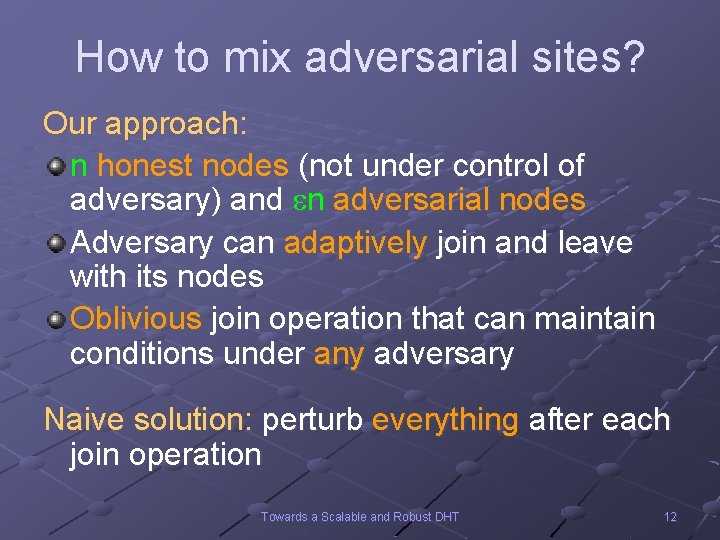

How to mix adversarial sites?
Our approach: n honest nodes (not under the control of the adversary) and εn adversarial nodes. The adversary can adaptively join and leave with its nodes.
Goal: an oblivious join operation that can maintain both conditions under any adversary.
Naive solution: perturb everything after each join operation.

![How to mix adversarial sites? Card shuffling [Diaconis & Shahshahani 81]: random transposition (n How to mix adversarial sites? Card shuffling [Diaconis & Shahshahani 81]: random transposition (n](http://slidetodoc.com/presentation_image_h2/a98142335104190bb5c7de573c54098f/image-13.jpg)

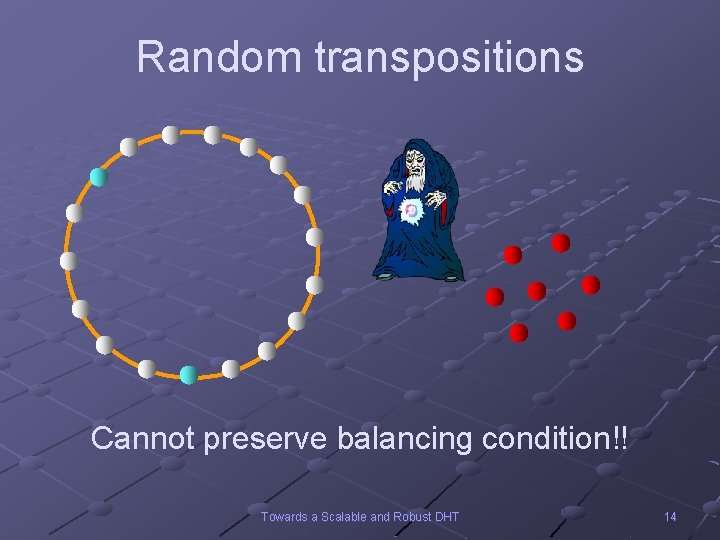

How to mix adversarial sites?
Card shuffling [Diaconis & Shahshahani 81]: random transpositions.
Θ(n log n) random transpositions yield a random permutation. So Θ(log n) transpositions per join operation, since n joins would then perform Θ(n log n) transpositions in total?
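The random-transposition walk referred to above is easy to state in code. A minimal sketch, assuming each step picks two positions independently and uniformly at random and swaps their contents (i == j is allowed and leaves the deck unchanged, as in the Diaconis-Shahshahani walk). The helper `empirical_distribution` is a hypothetical illustration that counts how often each permutation appears for a tiny deck, so one can see the walk approach the uniform distribution.

```python
import random
from collections import Counter


def random_transposition_shuffle(deck, steps, rng):
    """Apply `steps` random transpositions: swap the cards at two uniformly random positions."""
    deck = list(deck)
    n = len(deck)
    for _ in range(steps):
        i, j = rng.randrange(n), rng.randrange(n)  # i == j is an identity step
        deck[i], deck[j] = deck[j], deck[i]
    return deck


def empirical_distribution(n, steps, trials, seed=0):
    """Estimate the probability of each permutation of range(n) after `steps` transpositions."""
    rng = random.Random(seed)
    counts = Counter(
        tuple(random_transposition_shuffle(range(n), steps, rng)) for _ in range(trials)
    )
    return {perm: cnt / trials for perm, cnt in counts.items()}


dist = empirical_distribution(3, steps=10, trials=30000)
print(sorted(dist.values()))  # each close to 1/6
```

Note that the walk only permutes which node sits at which of a fixed set of positions; the next slide explains why spreading these swaps over join operations still fails.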

Random transpositions
They cannot preserve the balancing condition!

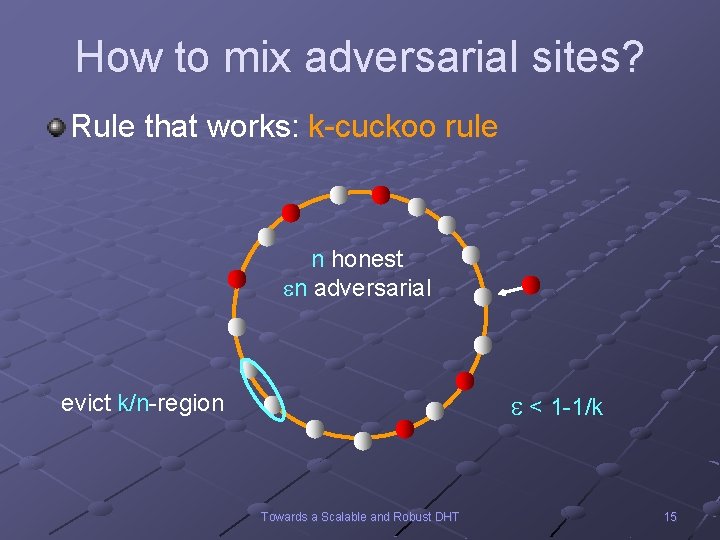

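How to mix adversarial sites?
Rule that works: the k-cuckoo rule. With n honest and εn adversarial nodes, where ε < 1 - 1/k, a joining node is placed at a random point x in [0, 1); all nodes in the k/n-region containing x are evicted and moved to new random points.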
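A toy simulation helps to see the k-cuckoo rule at work. This is a sketch under several assumptions not fixed by the slides: the k/n-regions are taken to be a fixed partition of [0, 1) into round(n/k) equal-width regions, evicted nodes are moved to fresh random points without evicting anyone themselves, and the adversary strategy (repeatedly make an adversarial node outside a target window leave and rejoin) is hypothetical. `worst_window` reports the largest adversarial share among windows of size (c log n)/n that start at a node, an approximation of the majority condition rather than a proof of it.

```python
import math
import random


class CuckooOverlay:
    """Toy model of the k-cuckoo rule on [0, 1) with round(n/k) equal-width regions (assumption)."""

    def __init__(self, n: int, k: int, seed: int = 0):
        self.num_regions = max(1, round(n / k))
        self.rng = random.Random(seed)  # the system's randomness
        self.pos = {}  # node id -> point in [0, 1)

    def _region(self, x: float) -> int:
        return min(int(x * self.num_regions), self.num_regions - 1)

    def join(self, node) -> None:
        x = self.rng.random()
        r = self._region(x)
        # Evict every node currently in the k/n-region that contains x ...
        evicted = [v for v, p in self.pos.items() if self._region(p) == r]
        self.pos[node] = x
        # ... and move each evicted node to a fresh random point (no cascading).
        for v in evicted:
            self.pos[v] = self.rng.random()

    def leave(self, node) -> None:
        del self.pos[node]


def worst_window(overlay, adversarial, length):
    """Largest adversarial share among windows [p, p + length) that start at a node point p."""
    pts = [(p, v in adversarial) for v, p in overlay.pos.items()]
    worst = 0.0
    for start, _ in pts:
        inside = [is_adv for p, is_adv in pts if (p - start) % 1.0 < length]
        worst = max(worst, sum(inside) / len(inside))
    return worst


def simulate(n=200, k=4, eps=0.2, rounds=2000, c=2.0, seed=1):
    overlay = CuckooOverlay(n, k, seed)
    adv_rng = random.Random(seed + 1)  # the adversary's own coin flips
    honest = [("h", i) for i in range(n)]
    adversarial = {("a", i) for i in range(int(eps * n))}
    for v in honest + sorted(adversarial):
        overlay.join(v)
    length = c * math.log(n) / n
    for _ in range(rounds):
        # Hypothetical adaptive attack: rejoin a node outside the target window [0, length).
        outside = sorted(v for v in adversarial if overlay.pos[v] >= length)
        if not outside:
            break
        v = adv_rng.choice(outside)
        overlay.leave(v)
        overlay.join(v)
    return overlay, adversarial, length


overlay, adversarial, length = simulate()
print(f"worst adversarial share in a window: {worst_window(overlay, adversarial, length):.2f}")
```

Each join costs one random point plus the relocation of the few nodes in one region, in contrast to the naive "perturb everything" approach.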

Are we done?
Dilemma: we cannot randomly distribute the data, because then the data would be unsearchable. So we need a hash function, but then we are open to adversarial attacks on insert and lookup operations.

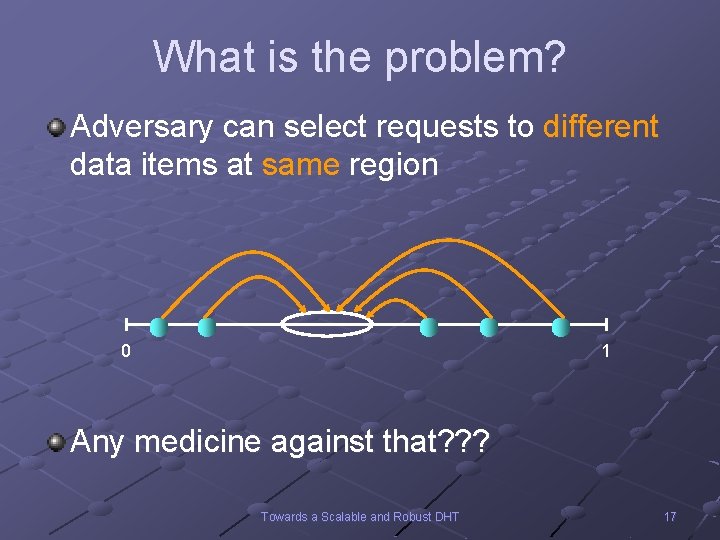

What is the problem?
The adversary can select requests to different data items that map to the same region of [0, 1).
Any medicine against that?

Yes!!

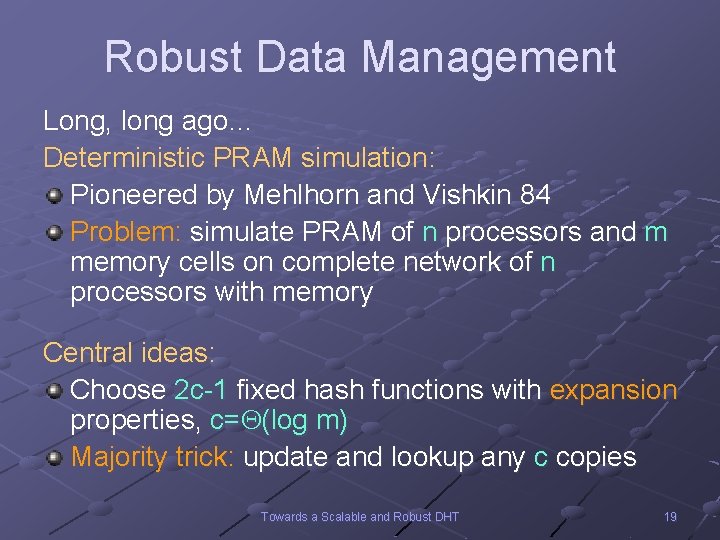

Robust Data Management
Long, long ago… deterministic PRAM simulation, pioneered by Mehlhorn and Vishkin in 1984.
Problem: simulate a PRAM with n processors and m memory cells on a complete network of n processors with memory.
Central ideas:
- Choose 2c-1 fixed hash functions with expansion properties, where c = Θ(log m).
- Majority trick: update and look up any c copies.
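The majority trick works because any two sets of c copies out of 2c-1 must share at least one copy (c + c > 2c - 1), so a lookup always sees the most recent update if copies carry timestamps. The sketch below illustrates only that intersection argument, assuming a global step counter as the timestamp; `MajorityStore` is a hypothetical name, the copies are kept in one process instead of at the nodes given by 2c-1 hash functions, and a random c-subset stands in for "any c copies".

```python
import random
from dataclasses import dataclass


@dataclass
class Copy:
    value: object = None
    stamp: int = -1  # time of the last update that reached this copy


class MajorityStore:
    """2c-1 copies per item; every update and lookup touches an arbitrary c of them."""

    def __init__(self, c: int, seed: int = 0):
        self.c = c
        self.copies = {}  # item name -> list of 2c-1 Copy objects
        self.clock = 0
        self.rng = random.Random(seed)

    def _pick(self, name):
        cells = self.copies.setdefault(name, [Copy() for _ in range(2 * self.c - 1)])
        return self.rng.sample(cells, self.c)  # stands in for "any c copies"

    def update(self, name, value):
        self.clock += 1
        for cell in self._pick(name):
            cell.value, cell.stamp = value, self.clock

    def lookup(self, name):
        # The chosen c copies intersect the last update's c copies, so the
        # newest timestamp among them belongs to the latest value.
        return max(self._pick(name), key=lambda cell: cell.stamp).value


store = MajorityStore(c=3)
for i in range(5):
    store.update("x", i)
print(store.lookup("x"))  # 4
```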

Robust Data Management
Why deterministic strategies? Randomness is expensive in dynamic systems with an adversarial presence!
The setting is more complex for dynamic networks:
- Congestion during routing
- Contention at destination regions

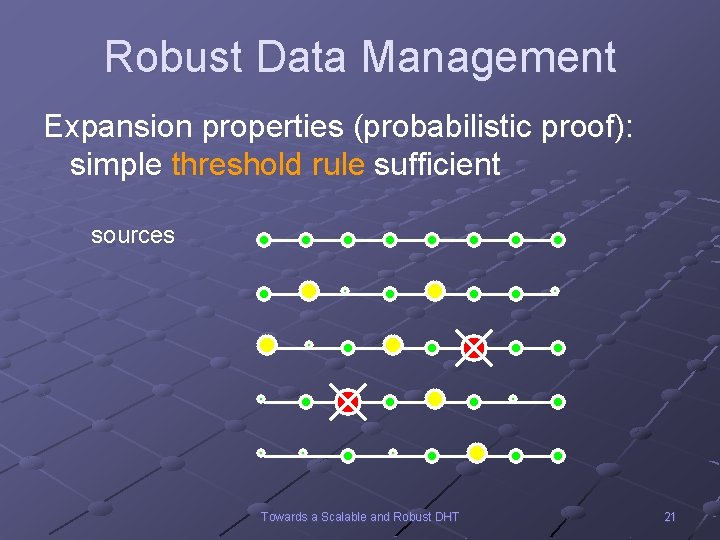

Robust Data Management
Given the expansion properties (shown by a probabilistic proof), a simple threshold rule is sufficient.

Robust Data Management
Strategy: run several attempts. In each attempt:
- For each remaining request, route 2c-1 packets, one for each copy.
- Discard packets at congested nodes.
- If c packets of a request are successful, then the request is successful.
How many attempts?
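The attempt loop can be simulated in a few lines. This is a sketch under stated assumptions: `copy_locations` is a hypothetical stand-in for the 2c-1 fixed hash functions (a per-name seeded generator, so locations are the same in every attempt), and the threshold rule is taken to be "a node that receives more than `threshold` packets in an attempt is congested and discards all of them". `serve` returns the number of attempts used and any requests still unserved.

```python
import random
from collections import Counter


def copy_locations(name, c, n, seed=0):
    """Hypothetical stand-in for 2c-1 fixed hash functions: node index of each copy of `name`."""
    rng = random.Random(f"{seed}:{name}")
    return [rng.randrange(n) for _ in range(2 * c - 1)]


def serve(requests, c, n, threshold, max_attempts=100):
    """Run attempts until every request has c packets delivered (or attempts run out)."""
    remaining = set(requests)
    attempts = 0
    while remaining and attempts < max_attempts:
        attempts += 1
        # Each remaining request sends one packet to each of its 2c-1 copies.
        packets = {name: copy_locations(name, c, n) for name in remaining}
        load = Counter(node for locs in packets.values() for node in locs)
        # Simple threshold rule: overloaded nodes discard all their packets.
        congested = {node for node, cnt in load.items() if cnt > threshold}
        for name, locs in packets.items():
            delivered = sum(node not in congested for node in locs)
            if delivered >= c:  # a majority of copies was reached
                remaining.discard(name)
    return attempts, remaining


n = 1024
attempts, remaining = serve([f"item-{i}" for i in range(n)], c=3, n=n, threshold=10)
print(attempts, len(remaining))
```

Even if the adversary picks items whose first copy all hash to the same node, that node merely becomes congested; the other copies still provide a majority, which is the intuition behind the theorems on the next slide.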

Robust Data Management
Answer: O(log n) attempts with congestion threshold O(polylog n).
Theorem 1: For any set of n lookup requests, one request per node, the lookup protocol can serve all requests in O(polylog n) communication rounds.
Theorem 2: The same holds for insert requests.

Conclusion
We presented a high-level solution for a scalable and robust DHT.
Open problems:
- Low-level attacks (DoS!)
- The adversary controls the join-leave behavior of both adversarial and honest nodes
- Elementary random number generator

Questions?

Hash table
(Figure: data items and sites mapped to points in [0, 1).)
