Topic 8: Advances in Parallel Computer Architectures

2022/2/12, course cpeg323-05F, Topic-final-323.ppt

Reading List

- Slides: Topic 8 x

Why Study Parallel Architecture?

Role of a computer architect: to design and engineer the various levels of a computer system to maximize performance and programmability within the limits of technology and cost.

Parallelism:

- Provides an alternative to a faster clock for performance
- Applies at all levels of system design
- Is a fascinating perspective from which to view architecture
- Is increasingly central in information processing

Inevitability of Parallel Computing

- Application demands
- Technology trends
- Architecture trends
- Economics

Application Trends

- Demand for cycles fuels advances in hardware, and vice versa
- Range of performance demands
- Goal of applications in using parallel machines: speedup
- Productivity requirement
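
The speedup goal named above is conventionally measured, for a fixed problem size, as the ratio of single-processor execution time to p-processor execution time. A minimal sketch follows; the function names and the timings are illustrative assumptions, not figures from the slides.

```python
def speedup(time_serial: float, time_parallel: float) -> float:
    """Fixed-problem-size speedup: T(1) / T(p)."""
    if time_serial <= 0 or time_parallel <= 0:
        raise ValueError("execution times must be positive")
    return time_serial / time_parallel


def efficiency(time_serial: float, time_parallel: float, processors: int) -> float:
    """Speedup divided by processor count; 1.0 means ideal linear scaling."""
    if processors < 1:
        raise ValueError("processor count must be at least 1")
    return speedup(time_serial, time_parallel) / processors


if __name__ == "__main__":
    # Hypothetical timings: 120 s on 1 processor, 20 s on 8 processors.
    print(speedup(120.0, 20.0))        # 6.0
    print(efficiency(120.0, 20.0, 8))  # 0.75
```

Efficiency below 1.0, as in this made-up case, indicates that part of the added hardware is lost to communication, load imbalance, or serial work.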

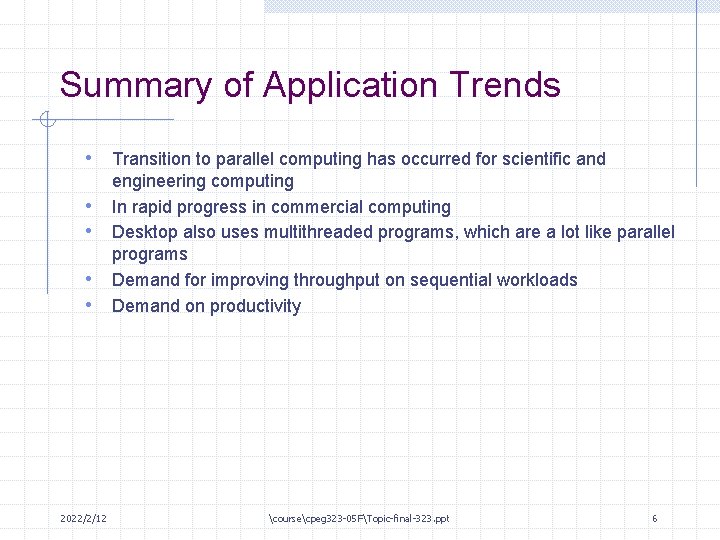

Summary of Application Trends

- Transition to parallel computing has occurred for scientific and engineering computing
- In rapid progress in commercial computing
- Desktop also uses multithreaded programs, which are a lot like parallel programs
- Demand for improving throughput on sequential workloads
- Demand on productivity

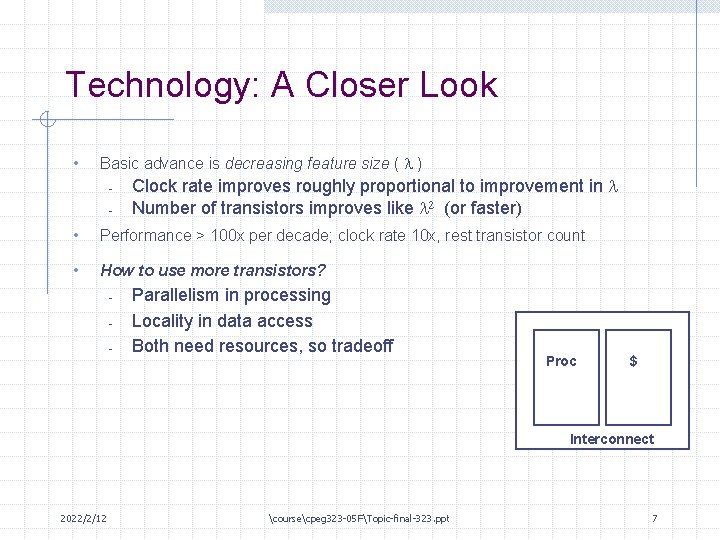

Technology: A Closer Look

- Basic advance is decreasing feature size (λ)
  - Clock rate improves roughly in proportion to the improvement in λ
  - Number of transistors improves like λ² (or faster)
- Performance > 100x per decade; clock rate 10x, rest transistor count
- How to use more transistors?
  - Parallelism in processing
  - Locality in data access
  - Both need resources, so there is a tradeoff

(Diagram labels: Proc, $, Interconnect)
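
The scaling argument on this slide can be put into numbers. The sketch below assumes a first-order model in which clock rate scales with the linear shrink factor and transistor count with its square; that model, the function names, and the 2x shrink are assumptions for illustration, and effects such as die-size growth are ignored.

```python
def scaling_gains(shrink: float) -> tuple[float, float]:
    """Return (clock_gain, transistor_gain) for a linear feature-size shrink.

    shrink = old_feature_size / new_feature_size, so shrink > 1 means
    smaller features. Assumed model: clock ~ shrink, transistors ~ shrink**2.
    """
    if shrink <= 0:
        raise ValueError("shrink factor must be positive")
    return shrink, shrink ** 2


def residual_gain(total_gain: float, clock_gain: float) -> float:
    """Performance gain left over once the clock-rate gain is factored out."""
    return total_gain / clock_gain


if __name__ == "__main__":
    clock, transistors = scaling_gains(2.0)  # features half as wide
    print(clock, transistors)                # 2.0 4.0
    # Slide figures: >100x performance per decade, of which 10x is clock rate.
    print(residual_gain(100.0, 10.0))        # 10.0
```

The residual factor of 10 is the share the slide attributes to transistor count, that is, to architectural use of the extra transistors.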

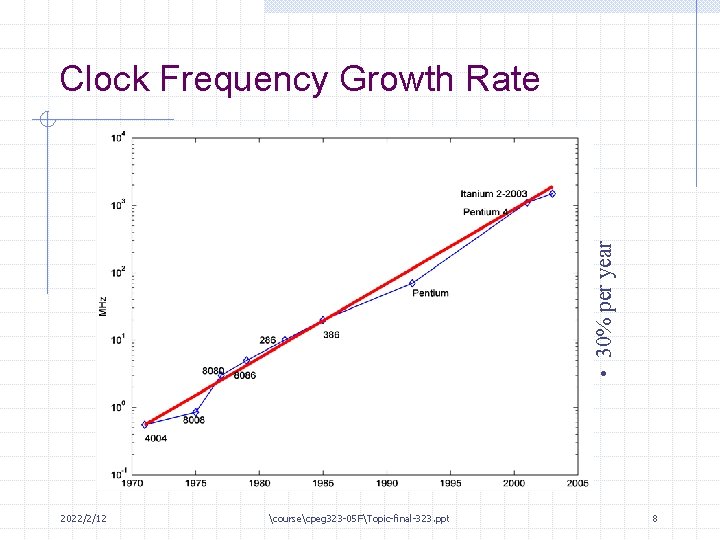

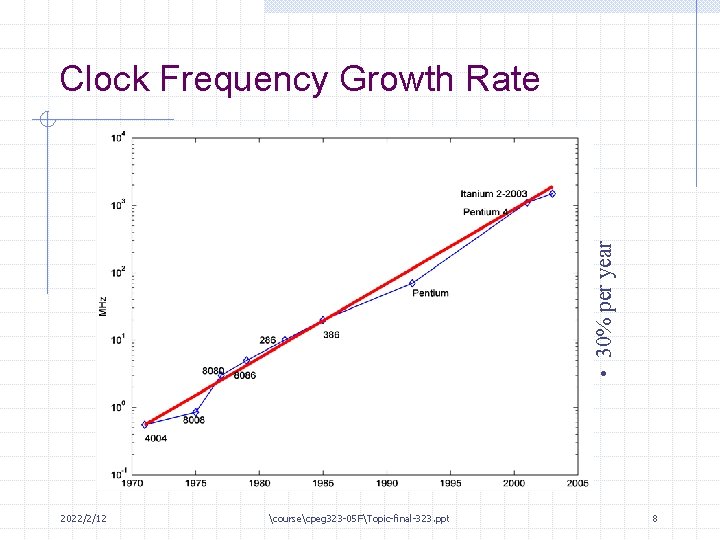

Clock Frequency Growth Rate

- 30% per year

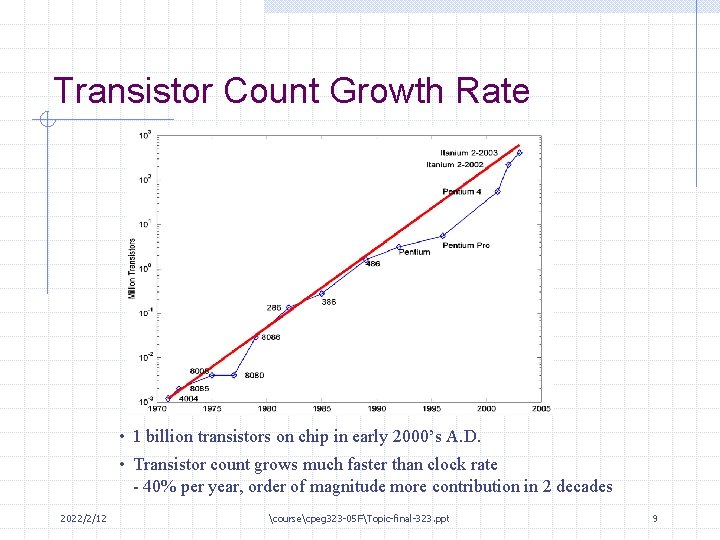

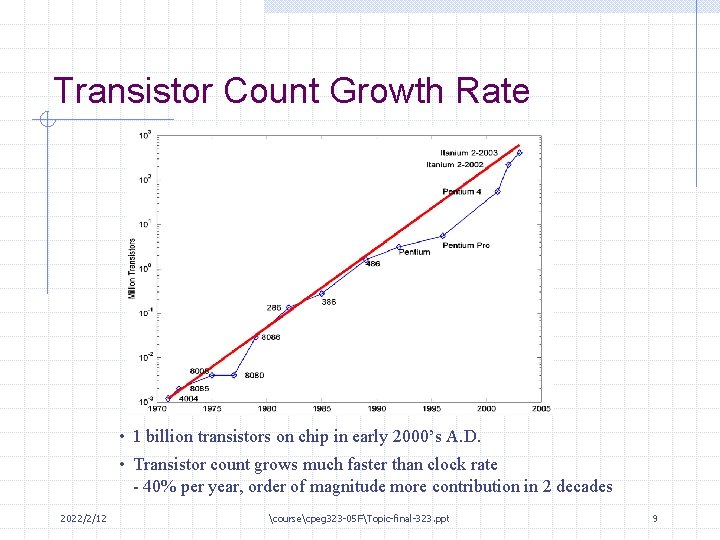

Transistor Count Growth Rate

- 1 billion transistors on chip in early 2000's A.D.
- Transistor count grows much faster than clock rate
  - 40% per year, order of magnitude more contribution in 2 decades
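
To see how the two quoted annual rates diverge over time, the sketch below compounds 30% per year (clock frequency) and 40% per year (transistor count). It assumes idealized constant rates; the function name is illustrative, and real growth data will not follow these curves exactly.

```python
def compound_growth(annual_rate: float, years: int) -> float:
    """Total growth factor after compounding annual_rate for the given years."""
    if years < 0:
        raise ValueError("years must be non-negative")
    return (1.0 + annual_rate) ** years


if __name__ == "__main__":
    for years in (10, 20):
        clock = compound_growth(0.30, years)        # clock frequency
        transistors = compound_growth(0.40, years)  # transistor count
        print(
            f"{years} years: clock x{clock:.1f}, "
            f"transistors x{transistors:.1f}, "
            f"ratio {transistors / clock:.1f}"
        )
```

Because the rates compound, a ten-point difference in annual growth keeps widening the gap between transistor budget and clock rate with every additional year.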

Similar Story for Storage

- Divergence between memory capacity and speed more pronounced
- Larger memories are slower
  - Need deeper cache hierarchies
- Parallelism and locality within memory systems
- Disks too: parallel disks plus caching

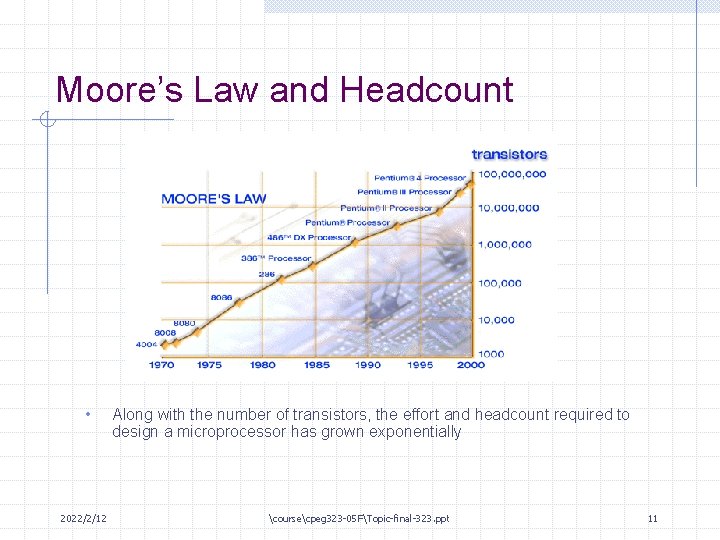

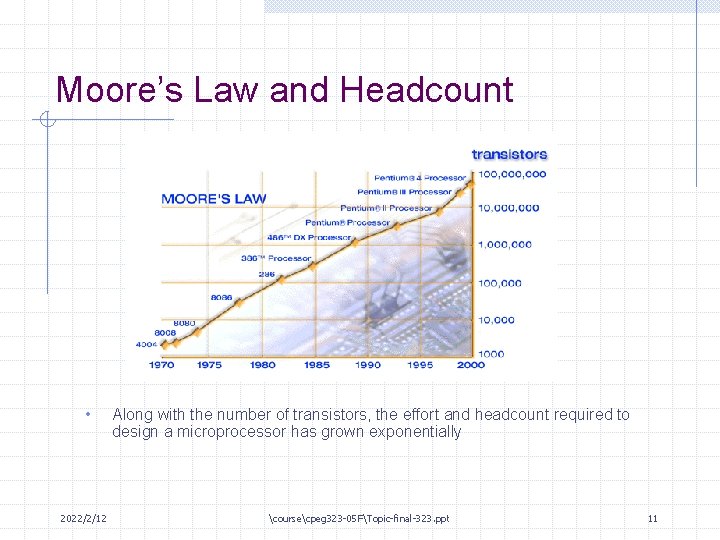

Moore’s Law and Headcount

- Along with the number of transistors, the effort and headcount required to design a microprocessor have grown exponentially

Architectural Trends

- Architecture: performance and capability
- Tradeoff between parallelism and locality
  - Current microprocessor: 1/3 compute, 1/3 cache, 1/3 off-chip connect
- Understanding microprocessor architectural trends
- Four generations of architectural history: tube, transistor, IC, VLSI

Technology Progress Overview

- Processor speed improvement: 2x per year (since 85). 100x in last decade.
- DRAM memory capacity: 2x in 2 years (since 96). 64x in last decade.
- Disk capacity: 2x per year (since 97). 250x in last decade.
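
Decade growth factors and doubling periods are two views of the same exponential, so one can be converted into the other. The sketch below does that conversion for the three decade factors quoted on this slide; the function names are illustrative assumptions, and the code only restates the slide's figures in another unit.

```python
import math


def doubling_time_years(growth_factor: float, span_years: float) -> float:
    """Doubling period implied by growing growth_factor-fold over span_years."""
    if growth_factor <= 1.0:
        raise ValueError("growth factor must exceed 1")
    return span_years * math.log(2.0) / math.log(growth_factor)


def annual_rate(growth_factor: float, span_years: float) -> float:
    """Equivalent constant annual growth rate (0.25 means 25% per year)."""
    return growth_factor ** (1.0 / span_years) - 1.0


if __name__ == "__main__":
    # Decade factors as quoted on the slide.
    decade_factors = {"processor speed": 100.0, "DRAM capacity": 64.0, "disk capacity": 250.0}
    for name, factor in decade_factors.items():
        print(
            f"{name}: doubles every {doubling_time_years(factor, 10.0):.2f} years, "
            f"{annual_rate(factor, 10.0):.0%} per year"
        )
```

Comparing the implied doubling periods with the "2x per ..." rates quoted next to them is a quick consistency check on figures taken from different sources or time windows.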

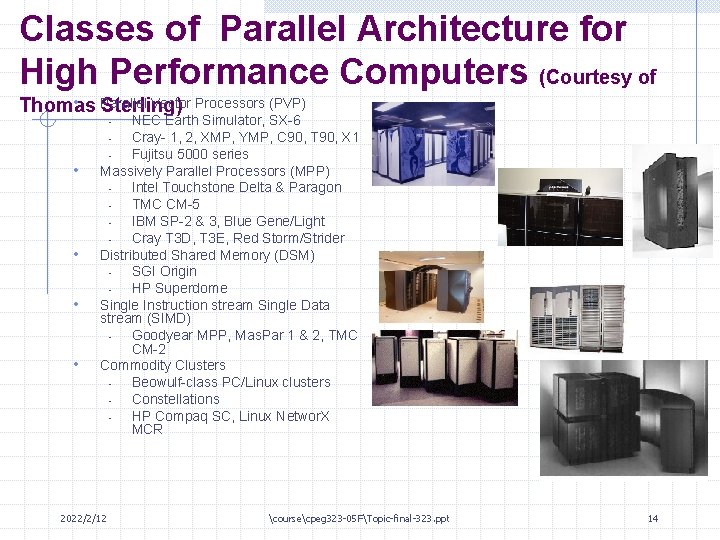

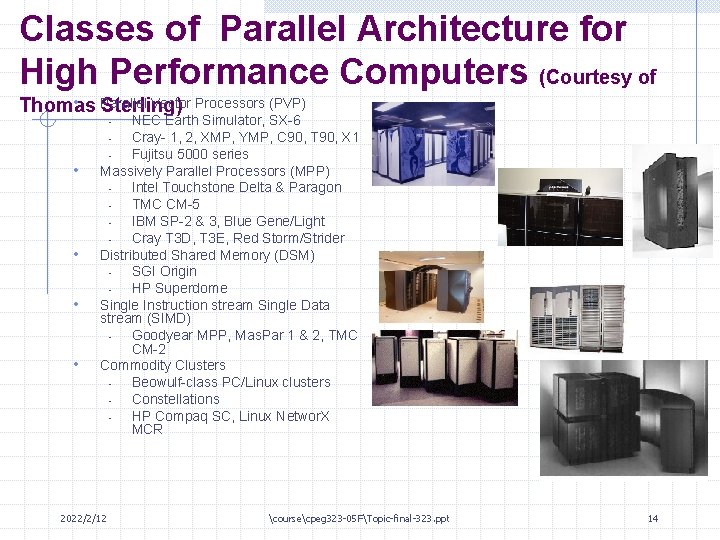

Classes of Parallel Architecture for High Performance Computers (Courtesy of Thomas Sterling)

- Parallel Vector Processors (PVP)
  - NEC Earth Simulator, SX-6
  - Cray-1, 2, XMP, YMP, C90, T90, X1
  - Fujitsu 5000 series
- Massively Parallel Processors (MPP)
  - Intel Touchstone Delta & Paragon
  - TMC CM-5
  - IBM SP-2 & 3, Blue Gene/Light
  - Cray T3D, T3E, Red Storm/Strider
- Distributed Shared Memory (DSM)
  - SGI Origin
  - HP Superdome
- Single Instruction stream Multiple Data stream (SIMD)
  - Goodyear MPP, MasPar 1 & 2, TMC CM-2
- Commodity Clusters
  - Beowulf-class PC/Linux clusters
- Constellations
  - HP Compaq SC, Linux NetworX MCR

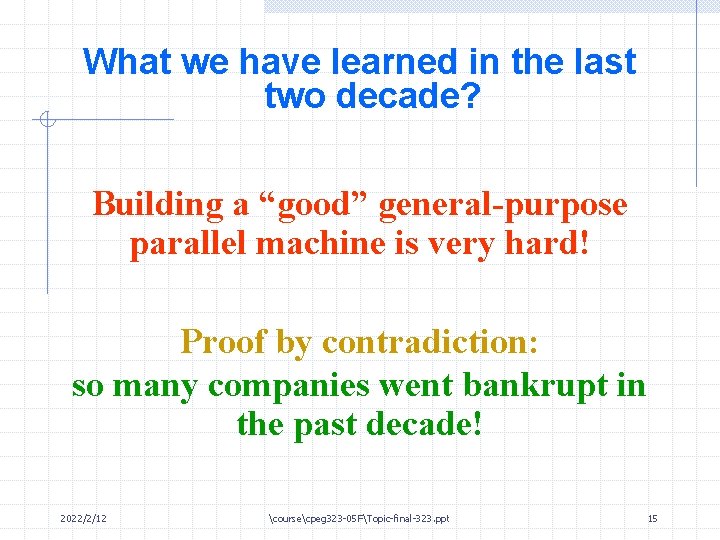

What have we learned in the last two decades?

Building a “good” general-purpose parallel machine is very hard!

Proof by contradiction: so many companies went bankrupt in the past decade!

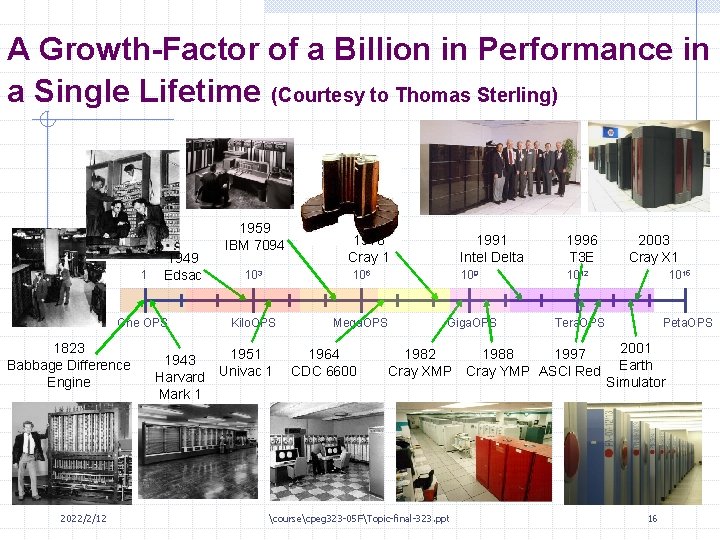

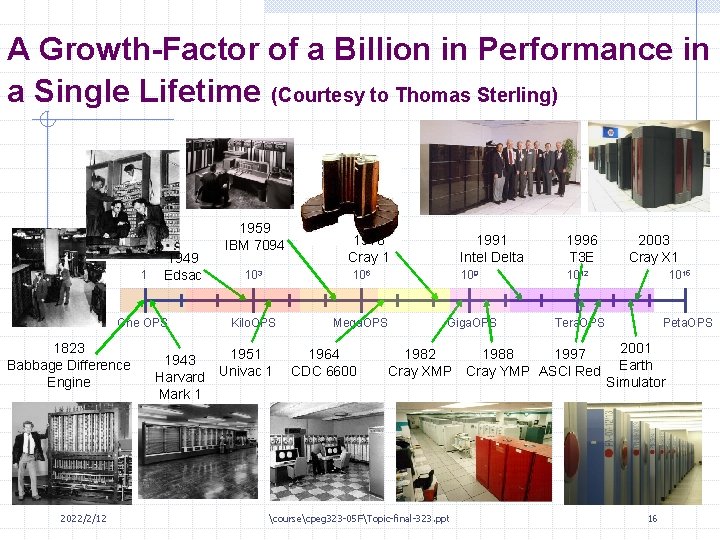

A Growth-Factor of a Billion in Performance in a Single Lifetime (Courtesy of Thomas Sterling)

Performance scale: 1 (One OPS), 10^3 (KiloOPS), 10^6 (MegaOPS), 10^9 (GigaOPS), 10^12 (TeraOPS), 10^15 (PetaOPS)

Machines on the timeline:

- 1823 Babbage Difference Engine
- 1943 Harvard Mark 1
- 1949 Edsac
- 1951 Univac 1
- 1959 IBM 7094
- 1964 CDC 6600
- 1976 Cray 1
- 1982 Cray XMP
- 1988 Cray YMP
- 1991 Intel Delta
- 1996 T3E
- 1997 ASCI Red
- 2001 Earth Simulator
- 2003 Cray X1

![Applications Demands (courtesy of Erik P. DeBenedictis, 2004)](https://slidetodoc.com/presentation_image_h2/af6d572183d6e0459a41bcf25a94dab4/image-17.jpg)

Applications Demands [Courtesy of Erik P. DeBenedictis 2004]

Figure: required system performance versus year (2000, 2010, 2020). Performance axis ticks: 100 Teraflops, 100 Petaflops, 1 Exaflops, 10 Exaflops, 100 Exaflops, 1 Zettaflops.

Applications shown:

- Plasma fusion simulation [Jardin 03]
- Full global climate [Malone 03]
- Compute as fast as the engineer can think [NASA 99]
- Geodata Earth Station Range [NASA 02]
- No schedule provided by source [HEC 04]
- Biomolecular simulation [SCaLeS 03]
  - Simulation of more complex biomolecular structures, protein folding: 1 PFLOPS
  - Simulation of large biomolecular structures (ms scale): 250 TFLOPS
  - Simulation of medium biomolecular structures (us scale): 50 TFLOPS

References:

- [Jardin 03] S. C. Jardin, “Plasma Science Contribution to the SCaLeS Report,” Princeton Plasma Physics Laboratory, PPPL-3879 UC-70, available on Internet.
- [Malone 03] Robert C. Malone, John B. Drake, Philip W. Jones, Douglas A. Rotman, “High-End Computing in Climate Modeling,” contribution to SCaLeS report.
- [NASA 99] R. T. Biedron, P. Mehrotra, M. L. Nelson, F. S. Preston, J. J. Rehder, J. L. Rogers, D. H. Rudy, J. Sobieski, and O. O. Storaasli, “Compute as Fast as the Engineers Can Think!” NASA/TM-1999-209715, available on Internet.
- [NASA 02] NASA Goddard Space Flight Center, “Advanced Weather Prediction Technologies: NASA’s Contribution to the Operational Agencies,” available on Internet.
- [SCaLeS 03] Workshop on the Science Case for Large-scale Simulation, June 24-25, proceedings on Internet at http://www.pnl.gov/scales/.
- [DeBenedictis 04] Erik P. DeBenedictis, “Matching Supercomputing to Progress in Science,” July 2004. Presentation at Lawrence Berkeley National Laboratory, also published as Sandia National Laboratories SAND report SAND2004-3333P. Sandia technical reports are available by going to http://www.sandia.gov and accessing the technical library.
- [HEC 04] Federal Plan for High-End Computing, May 2004.

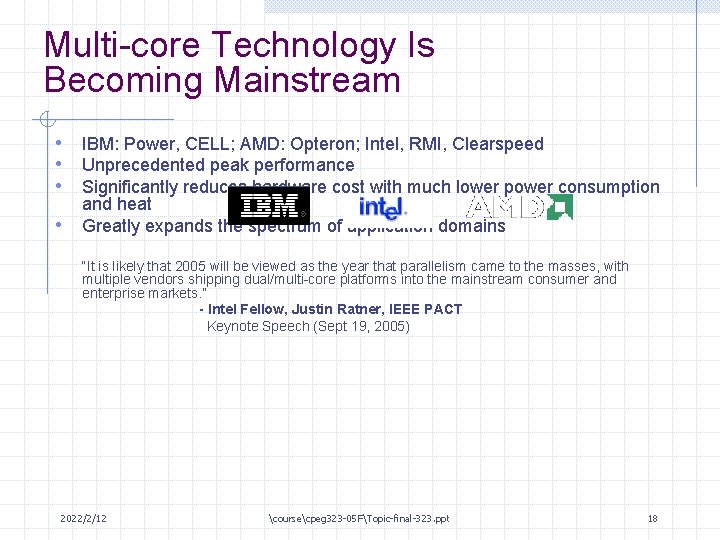

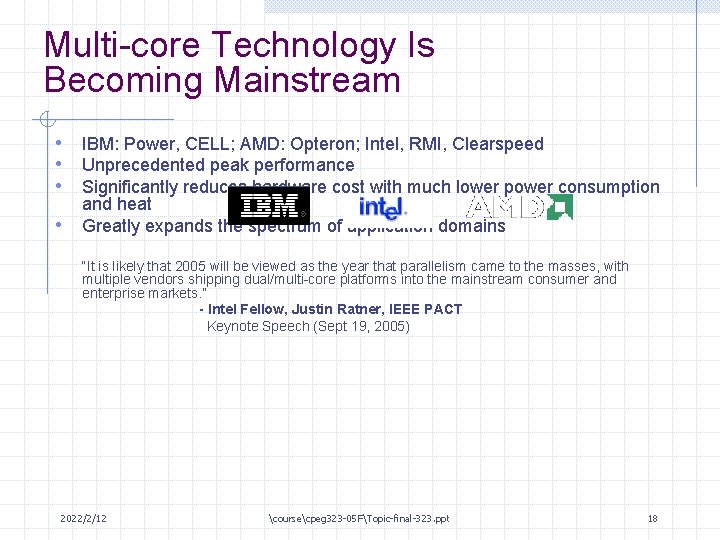

Multi-core Technology Is Becoming Mainstream

- IBM: Power, CELL; AMD: Opteron; Intel, RMI, ClearSpeed
- Unprecedented peak performance
- Significantly reduces hardware cost with much lower power consumption and heat
- Greatly expands the spectrum of application domains

“It is likely that 2005 will be viewed as the year that parallelism came to the masses, with multiple vendors shipping dual/multi-core platforms into the mainstream consumer and enterprise markets.” (Intel Fellow Justin Rattner, IEEE PACT Keynote Speech, Sept 19, 2005)

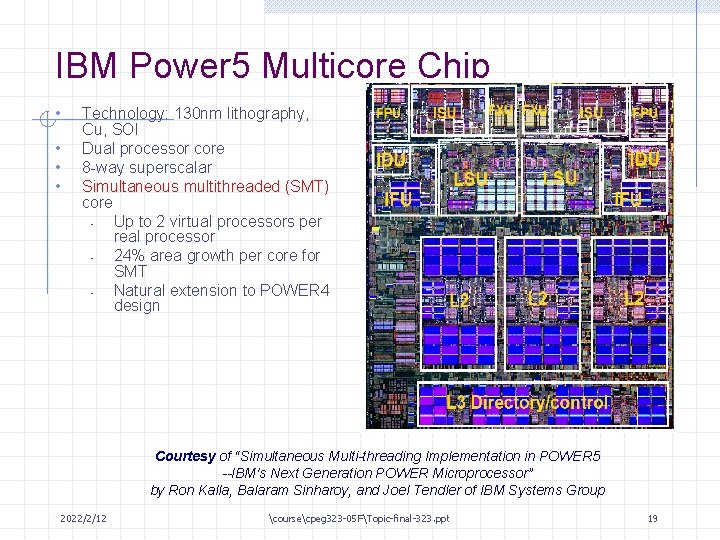

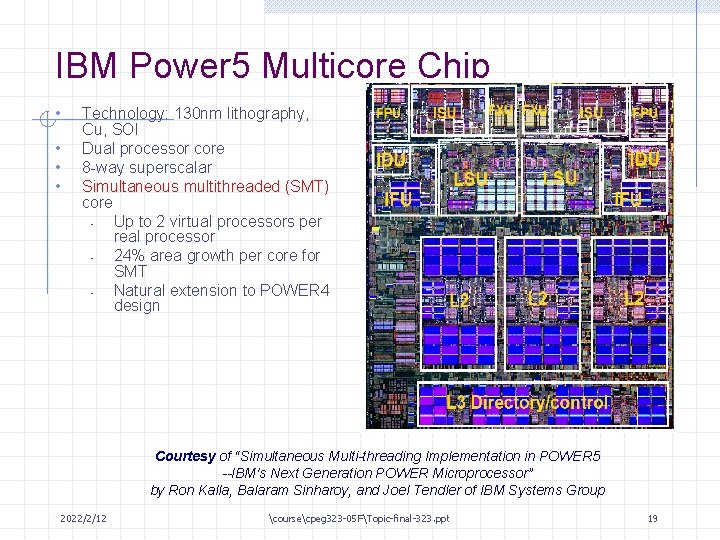

IBM Power 5 Multicore Chip

- Technology: 130 nm lithography, Cu, SOI
- Dual processor core
- 8-way superscalar
- Simultaneous multithreaded (SMT) core
  - Up to 2 virtual processors per real processor
  - 24% area growth per core for SMT
  - Natural extension to POWER4 design

Courtesy of “Simultaneous Multi-threading Implementation in POWER5: IBM's Next Generation POWER Microprocessor” by Ron Kalla, Balaram Sinharoy, and Joel Tendler of IBM Systems Group

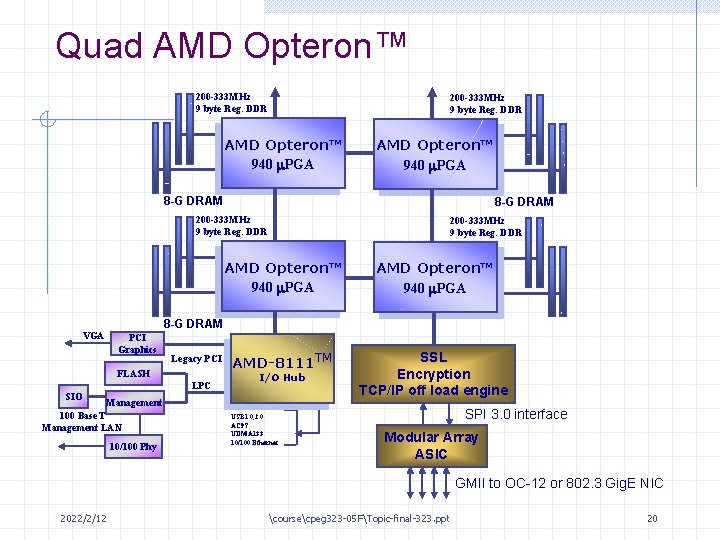

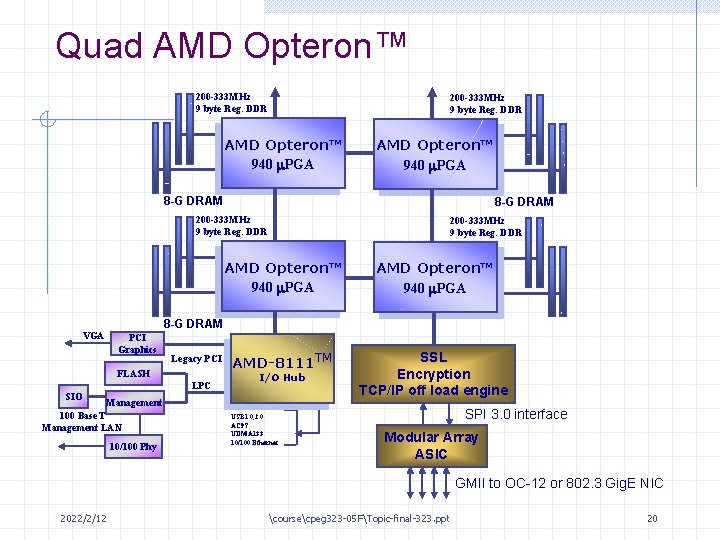

Quad AMD Opteron™

Block diagram labels:

- Processors: AMD Opteron™ 940 mPGA, each with 200-333 MHz 9-byte registered DDR and 8-G DRAM
- Graphics: VGA, PCI graphics
- I/O hub: AMD-8111™ with legacy PCI, FLASH, LPC, SIO, USB 1.0/2.0, AC97, UDMA133, 10/100 Ethernet
- Management: 100BaseT management LAN, 10/100 Phy
- Network: SSL encryption, TCP/IP offload engine, SPI 3.0 interface, Modular Array ASIC, GMII to OC-12 or 802.3 GigE NIC

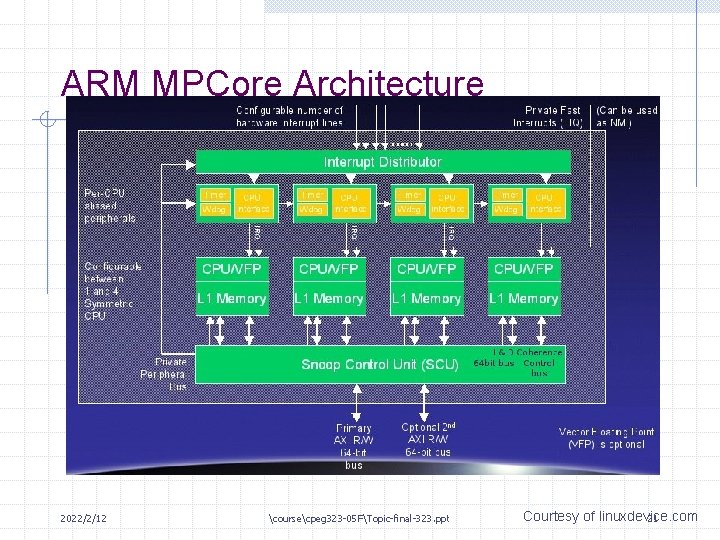

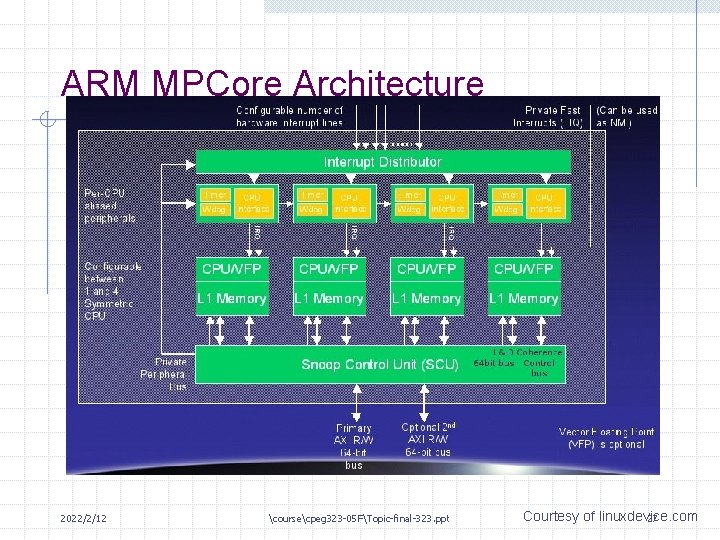

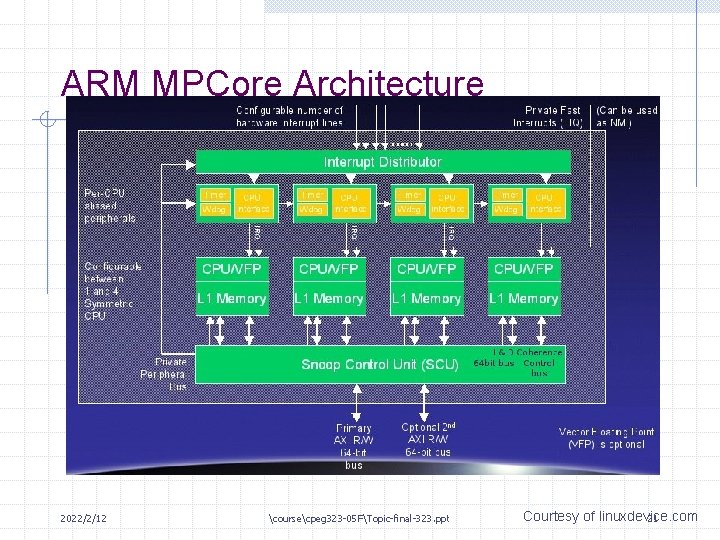

ARM MPCore Architecture

Courtesy of linuxdevice.com

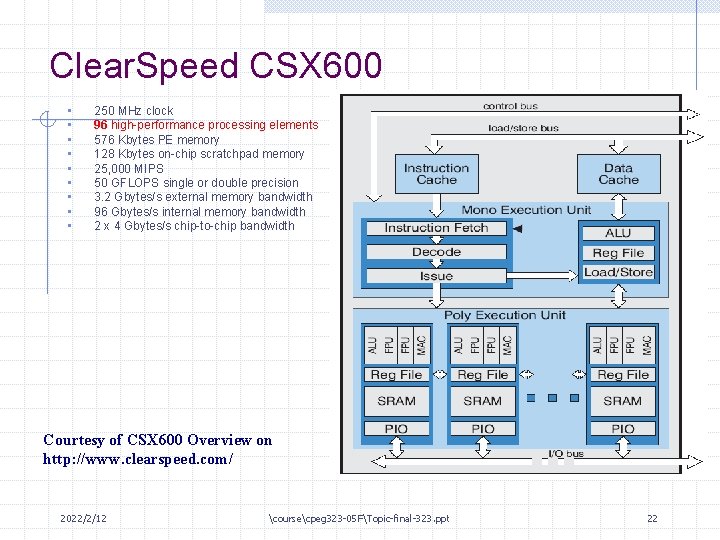

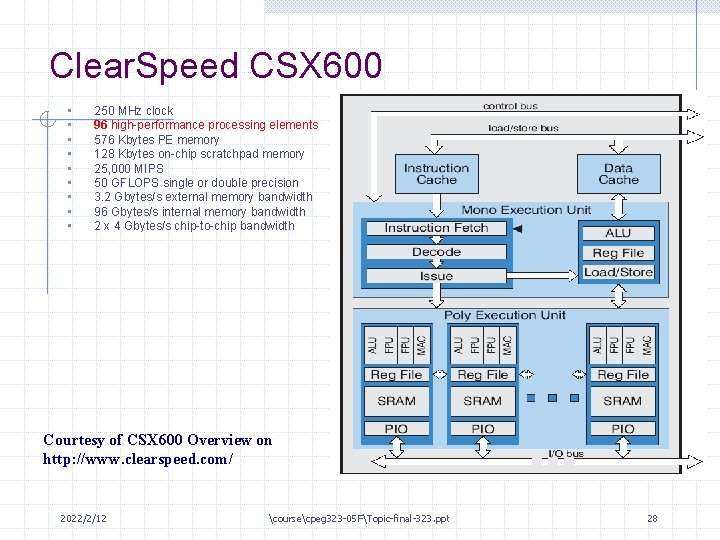

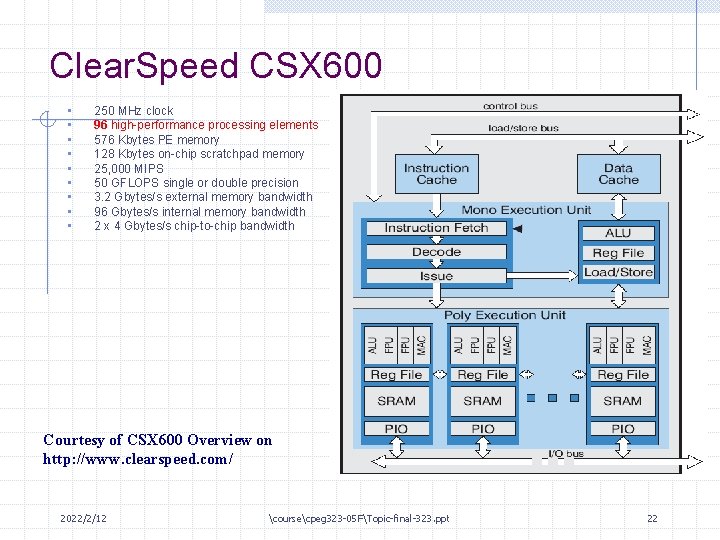

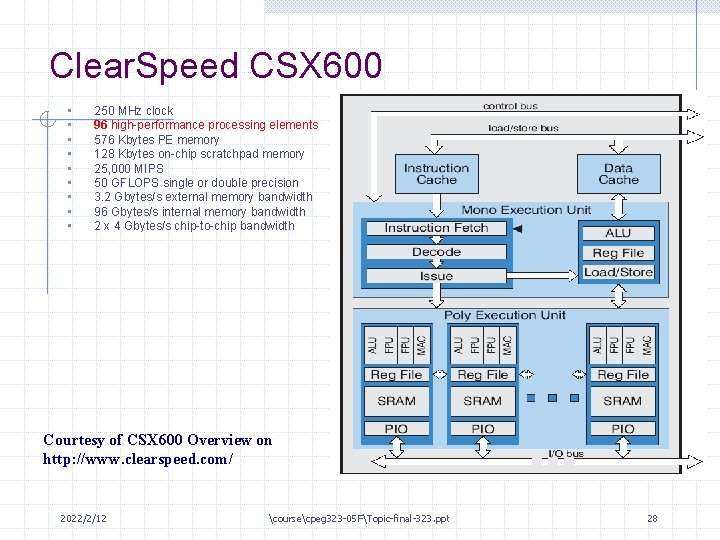

ClearSpeed CSX600

- 250 MHz clock
- 96 high-performance processing elements
- 576 Kbytes PE memory
- 128 Kbytes on-chip scratchpad memory
- 25,000 MIPS
- 50 GFLOPS single or double precision
- 3.2 Gbytes/s external memory bandwidth
- 96 Gbytes/s internal memory bandwidth
- 2 x 4 Gbytes/s chip-to-chip bandwidth

Courtesy of CSX600 Overview on http://www.clearspeed.com/
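
The chip-level figures above can be broken down per processing element (PE) with simple division. The sketch below does so under the assumptions that memory and bandwidth are spread evenly across the 96 PEs and that each PE issues one instruction per cycle; the constant and function names are illustrative, not ClearSpeed terminology.

```python
CLOCK_HZ = 250e6          # 250 MHz clock
NUM_PES = 96              # processing elements
PE_MEMORY_KB = 576        # total PE memory
PEAK_FLOPS = 50e9         # 50 GFLOPS
INTERNAL_BW_BYTES = 96e9  # 96 Gbytes/s internal memory bandwidth


def per_pe_breakdown() -> dict[str, float]:
    """Divide the chip-level figures evenly across the PEs (an assumption)."""
    pe_cycles_per_second = NUM_PES * CLOCK_HZ
    return {
        "memory_kb_per_pe": PE_MEMORY_KB / NUM_PES,
        # Assumes one instruction per PE per cycle.
        "instructions_per_second": pe_cycles_per_second,
        "flops_per_pe_per_cycle": PEAK_FLOPS / pe_cycles_per_second,
        "bytes_per_pe_per_cycle": INTERNAL_BW_BYTES / pe_cycles_per_second,
    }


if __name__ == "__main__":
    for name, value in per_pe_breakdown().items():
        print(f"{name}: {value:,.2f}")
```

Under these assumptions each PE has 6 Kbytes of local memory, moves 4 bytes per cycle, and the aggregate issue rate of 24 billion instructions per second is close to the quoted 25,000 MIPS.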

![Applications Demands Courtesy of Erik P De Benedictis 2004 1 Zettaflops Applications Plasma Fusion Applications Demands [Courtesy of Erik P. De. Benedictis 2004] 1 Zettaflops Applications Plasma Fusion](https://slidetodoc.com/presentation_image_h2/af6d572183d6e0459a41bcf25a94dab4/image-23.jpg)

Applications Demands [Courtesy of Erik P. De. Benedictis 2004] 1 Zettaflops Applications Plasma Fusion Simulation [Jardin 03] 100 Exaflops No schedule provided by source [HEC 04] Full Global Climate [Malone 03] 10 Exaflops 1 Exaflops Compute as fast as the engineer can think [NASA 99] 100 Petaflops 100 Teraflops 2000 Geodata Earth Station Range [NASA 02] System Performance 1000 [SCa. Le. S 03] 2010 Simulation of more complex biomolecular structures protein folding 1 PFLOPS simulation of large biomolecular structures (ms scale) 250 TFLOPS simulation of medium biomolecular structures (us scale) 50 TFLOPS 2020 [Jardin 03] S. C. Jardin, “Plasma Science Contribution to the SCa. Le. S Report, ” Princeton Plasma Physics Laboratory, PPPL-3879 UC-70, available on Internet. [Malone 03] Robert C. Malone, John B. Drake, Philip W. Jones, Douglas A. Rotman, “High-End Computing in Climate Modeling, ” contribution to SCa. Le. S report. [NASA 99] R. T. Biedron, P. Mehrotra, M. L. Nelson, F. S. Preston, J. J. Rehder, J. L. Rogers, D. H. Rudy, J. Sobieski, and O. O. Storaasli, “Compute as Fast as the Engineers Can Think!” NASA/TM-1999 -209715, available on Internet. [NASA 02] NASA Goddard Space Flight Center, “Advanced Weather Prediction Technologies: NASA’s Contribution to the Operational Agencies, ” available on Internet. [SCa. Le. S 03] Workshop on the Science Case for Large-scale Simulation, June 24 -25, proceedings on Internet a http: //www. pnl. gov/scales/. [De. Benedictis 04], Erik P. De. Benedictis, “Matching Supercomputing to Progress in Science, ” July 2004. Presentation at Lawrence Berkeley National Laboratory, also published as Sandia National Laboratories SAND report SAND 2004 -3333 P. Sandia technical reports are available by going to http: //www. sandia. gov and accessing the technical library. [HEC 04] Federal Plan for High-End Computing, May, 2004. 2022/2/12 coursecpeg 323 -05 FTopic-final-323. ppt 23

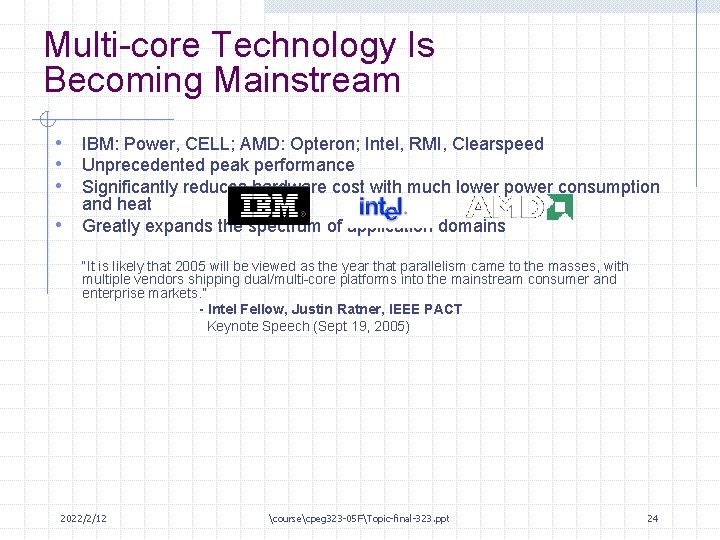

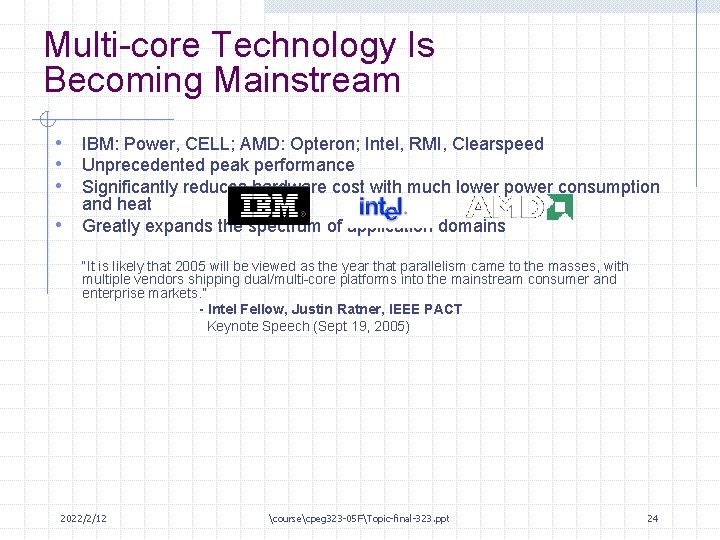

Multi-core Technology Is Becoming Mainstream • IBM: Power, CELL; AMD: Opteron; Intel, RMI, Clearspeed • Unprecedented peak performance • Significantly reduces hardware cost with much lower power consumption • and heat Greatly expands the spectrum of application domains “It is likely that 2005 will be viewed as the year that parallelism came to the masses, with multiple vendors shipping dual/multi-core platforms into the mainstream consumer and enterprise markets. ” - Intel Fellow, Justin Ratner, IEEE PACT Keynote Speech (Sept 19, 2005) 2022/2/12 coursecpeg 323 -05 FTopic-final-323. ppt 24

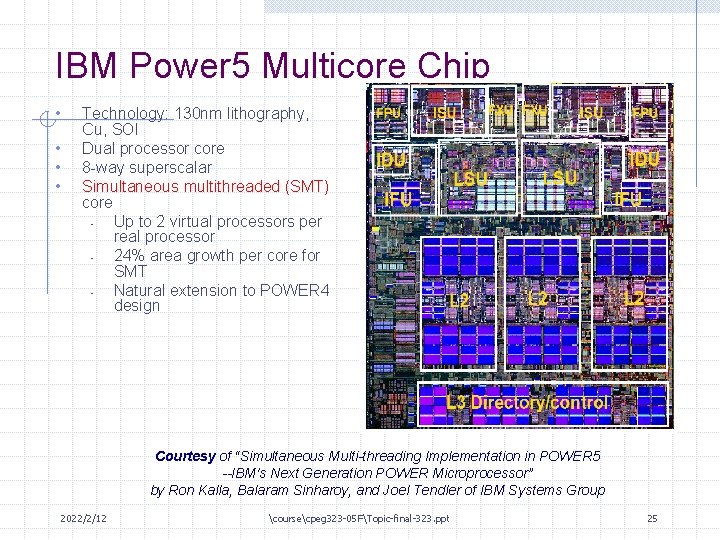

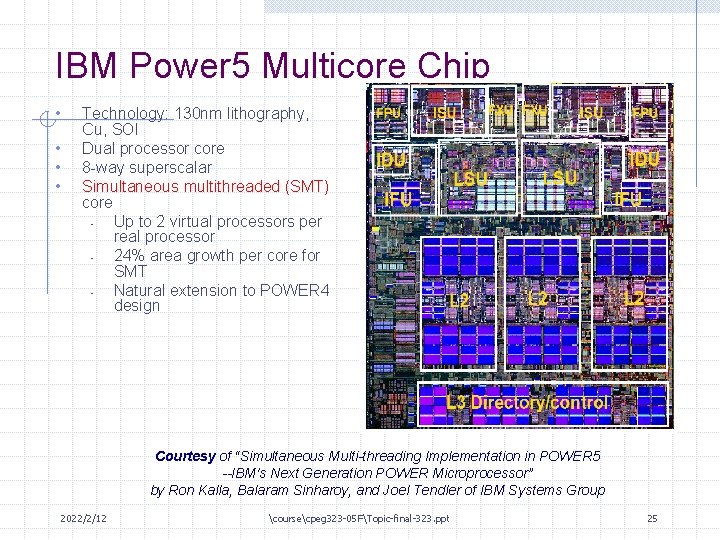

IBM Power 5 Multicore Chip • • Technology: 130 nm lithography, Cu, SOI Dual processor core 8 -way superscalar Simultaneous multithreaded (SMT) core Up to 2 virtual processors per real processor 24% area growth per core for SMT Natural extension to POWER 4 design Courtesy of “Simultaneous Multi-threading Implementation in POWER 5 --IBM's Next Generation POWER Microprocessor” by Ron Kalla, Balaram Sinharoy, and Joel Tendler of IBM Systems Group 2022/2/12 coursecpeg 323 -05 FTopic-final-323. ppt 25

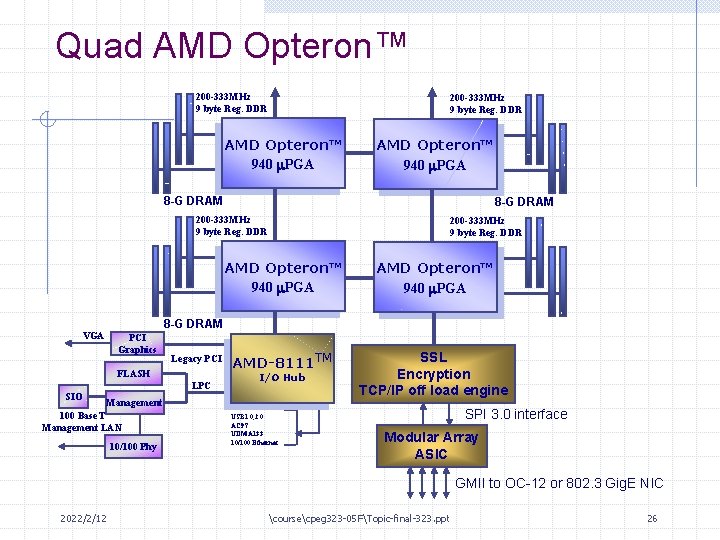

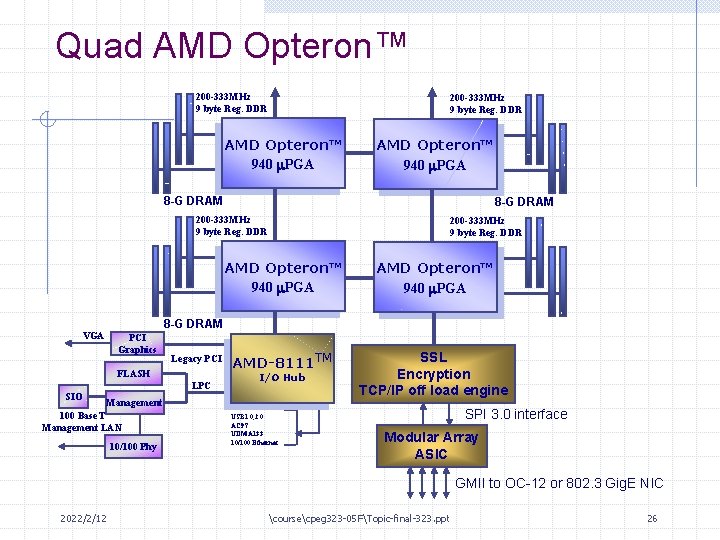

Quad AMD Opteron™ 200 -333 MHz 9 byte Reg. DDR AMD Opteron™ 940 m. PGA 8 -G DRAM 200 -333 MHz 9 byte Reg. DDR VGA 200 -333 MHz 9 byte Reg. DDR AMD Opteron™ 940 m. PGA AMD-8111 TM SSL Encryption TCP/IP off load engine 8 -G DRAM PCI Graphics Legacy PCI FLASH LPC I/O Hub SIO Management 100 Base. T Management LAN 10/100 Phy USB 1. 0, 2. 0 AC 97 UDMA 133 10/100 Ethernet SPI 3. 0 interface Modular Array ASIC GMII to OC-12 or 802. 3 Gig. E NIC 2022/2/12 coursecpeg 323 -05 FTopic-final-323. ppt 26

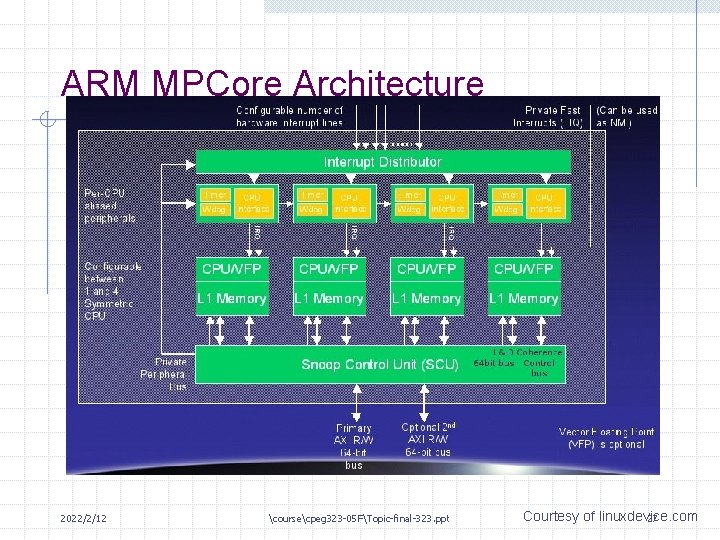

ARM MPCore Architecture 2022/2/12 coursecpeg 323 -05 FTopic-final-323. ppt Courtesy of linuxdevice. com 27

Clear. Speed CSX 600 • • • 250 MHz clock 96 high-performance processing elements 576 Kbytes PE memory 128 Kbytes on-chip scratchpad memory 25, 000 MIPS 50 GFLOPS single or double precision 3. 2 Gbytes/s external memory bandwidth 96 Gbytes/s internal memory bandwidth 2 x 4 Gbytes/s chip-to-chip bandwidth Courtesy of CSX 600 Overview on http: //www. clearspeed. com/ 2022/2/12 coursecpeg 323 -05 FTopic-final-323. ppt 28

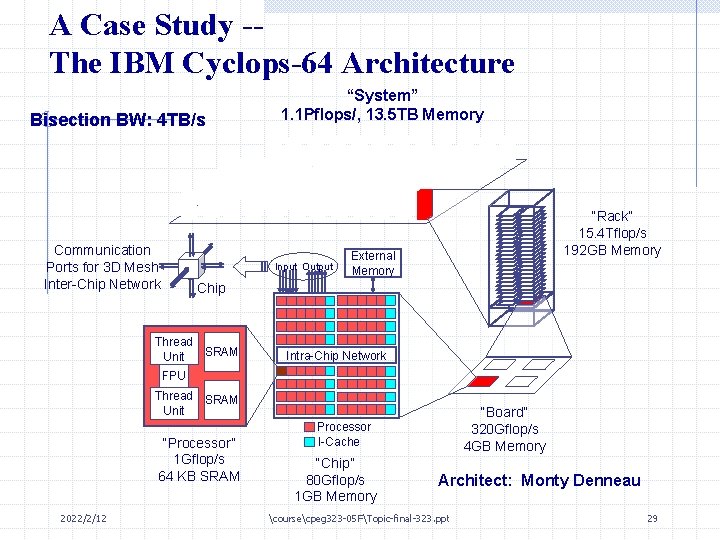

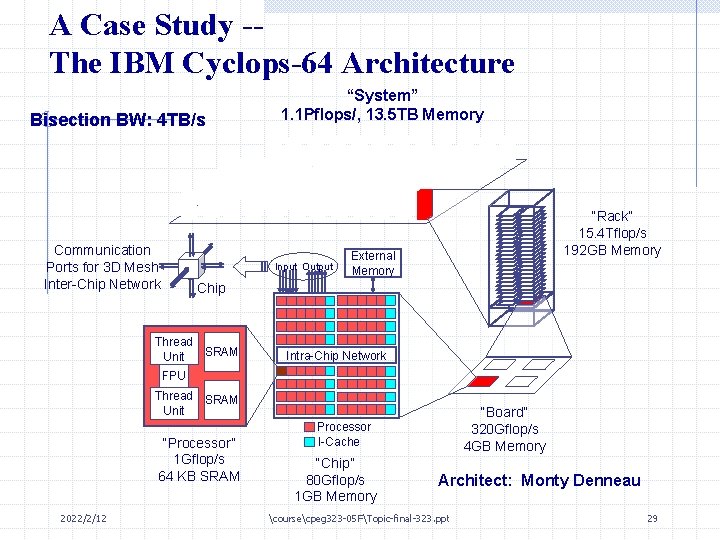

A Case Study: The IBM Cyclops-64 Architecture

Architect: Monty Denneau

System hierarchy (from the figure):

- “Processor”: 1 Gflop/s, 64 KB SRAM
- “Chip”: 80 Gflop/s, 1 GB memory
- “Board”: 320 Gflop/s, 4 GB memory
- “Rack”: 15.4 Tflop/s, 192 GB memory
- “System”: 1.1 Pflop/s, 13.5 TB memory
- Bisection BW: 4 TB/s

Other figure labels: thread units, FPU, SRAM, I-cache, intra-chip network, external memory, communication ports for 3D mesh inter-chip network, input/output.
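
The hierarchy figures imply how many units of each level make up the next one. The sketch below derives those ratios from the quoted peak rates and memory sizes; the ratios are inferred by division rather than stated on the slide, the names are illustrative, and 1 TB is assumed to mean 1024 GB.

```python
# Peak rates in Gflop/s and memory in GB, as quoted on the slide.
PEAK_GFLOPS = {
    "processor": 1.0,
    "chip": 80.0,
    "board": 320.0,
    "rack": 15_400.0,
    "system": 1_100_000.0,
}
MEMORY_GB = {
    "chip": 1.0,
    "board": 4.0,
    "rack": 192.0,
    "system": 13.5 * 1024,  # assumes 1 TB = 1024 GB
}


def level_ratios(values: dict[str, float]) -> dict[str, float]:
    """Ratio of each level to the level below it, in insertion order."""
    names = list(values)
    return {
        f"{lower}->{upper}": values[upper] / values[lower]
        for lower, upper in zip(names, names[1:])
    }


if __name__ == "__main__":
    print("peak-rate ratios:", level_ratios(PEAK_GFLOPS))
    print("memory ratios:  ", level_ratios(MEMORY_GB))
```

Where the peak-rate and memory ratios agree after rounding, they suggest the unit count at that level; small mismatches most likely come from the rounded figures on the slide.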

Data Points of a 1 Petaflop C64 Machine

- Cyclops chip: 533 MHz, 5.1 MB SRAM, 1-2 GB DRAM
- Disk space: 300 GB/node
- Total system power: 2 MW (chilled-water cooling)
- Size: 20’ x 48’
- Mean time to failure: 2 weeks
- Cost: 20 million?

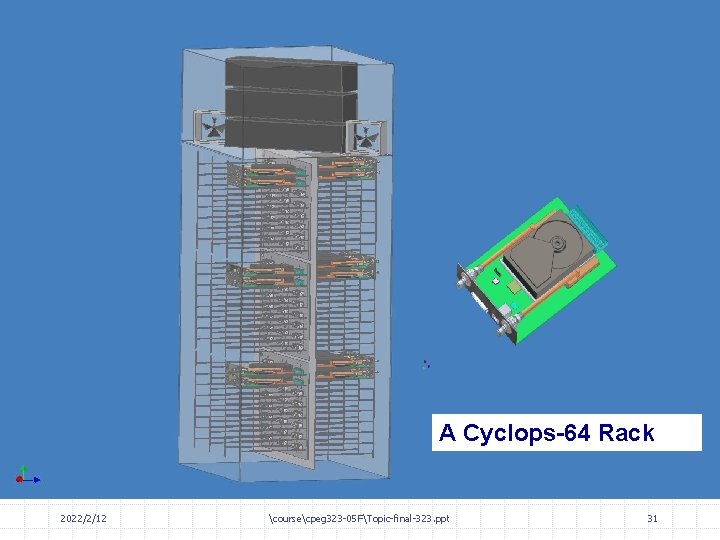

A Cyclops-64 Rack

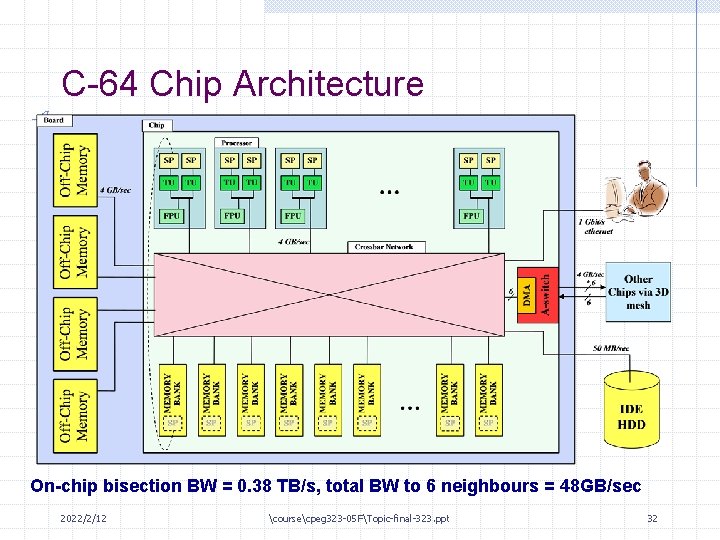

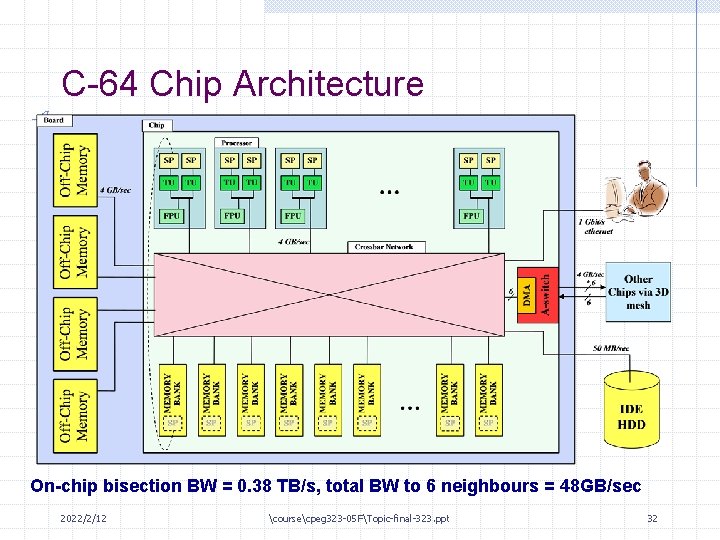

C-64 Chip Architecture

On-chip bisection BW = 0.38 TB/s; total BW to 6 neighbours = 48 GB/s
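
The two bandwidth figures can be related with a little arithmetic. The sketch below assumes the off-chip total is split evenly across the six neighbour links and that 0.38 TB/s means 380 GB/s; both are assumptions, and the function names are illustrative.

```python
ON_CHIP_BISECTION_GB_S = 380.0  # 0.38 TB/s, assuming 1 TB = 1000 GB
OFF_CHIP_TOTAL_GB_S = 48.0      # total bandwidth to all neighbours
NUM_NEIGHBOURS = 6


def per_link_bandwidth(total_gb_s: float, links: int) -> float:
    """Bandwidth per link if the total is divided evenly (an assumption)."""
    if links < 1:
        raise ValueError("need at least one link")
    return total_gb_s / links


def on_to_off_chip_ratio(on_chip_gb_s: float, off_chip_gb_s: float) -> float:
    """How many times larger on-chip bisection bandwidth is than off-chip."""
    return on_chip_gb_s / off_chip_gb_s


if __name__ == "__main__":
    print(per_link_bandwidth(OFF_CHIP_TOTAL_GB_S, NUM_NEIGHBOURS))  # 8.0
    ratio = on_to_off_chip_ratio(ON_CHIP_BISECTION_GB_S, OFF_CHIP_TOTAL_GB_S)
    print(f"{ratio:.1f}")
```

The gap between on-chip and off-chip bandwidth is one reason locality within a chip matters for programs running on a machine of this kind.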

Mrs. Clops

Summary