Topic Modeling Techniques for Library Chat Reference Data

Topic Modeling Techniques for Library Chat Reference Data: Challenges, Solutions, and Future Directions
HyunSeung Koh & Mark Fienup, Ph.D.s
hyunseung.koh@uni.edu & mark.fienup@uni.edu
United States

Agenda
● Story
● Library Chat Research
● Goal
● Data Set
● Preprocessing
● Topic Modeling Techniques
● Evaluating and Selecting Topic Models
● Diagnosing the Quality of Topics
● Comparisons with Evaluation Techniques
● Conclusions
● Future Directions

Story
● As a newly hired assessment librarian, I was looking for the most effective and efficient ways to understand a wide range of topics and issues in the library
● One answer was to explore chat transcripts and interactions, which are part of the library's front-end (customer/user) services
● However, analyzing chat transcripts was time-consuming
● Asking for help

Library Chat Research
● Coding-based qualitative content analysis (e.g., Fuller & Dryden, 2015; Passonneau & Coffey, 2011)
● Conversation or language usage analysis (e.g., Dempsey, 2016; Waugh, 2013)
● Simple descriptive count- or frequency-based analyses, accompanied by qualitative coding-based content analyses (e.g., Brown, 2017; Maximiek, Rushton, & Brown, 2010)
● Advanced quantitative research methods, such as cluster analysis and topic modeling techniques (e.g., Kohler, 2017; Stieve & Wallace, 2018; Tempelman-Kluit & Pearce, 2014)

Goal
● To obtain rich, actionable insights in a timely manner from vast amounts of chat reference data, we chose topic modeling techniques, which let us analyze unstructured text data with little or no human intervention (before in-depth qualitative analyses).

Data Set
One academic library's chat reference data, collected from April 10, 2015 to May 31, 2019
● LibAnswers > LibChat
● LibraryH3lp
● QuestionPoint
● LivePerson

Preprocessing: Data Format
● Excel spreadsheet; the raw data needed to be “cleaned”
● Some of the later chat dialogs included an Initial Question column

Preprocessing: Cleaning of Raw Chats
1) removing timestamps
2) removing chat patron and librarian identifiers
3) removing HTTP tags (e.g., URLs)
4) removing non-ASCII characters
5) removing stopwords
6) lemmatizing words using nltk.WordNetLemmatizer()
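The six cleaning steps could be chained roughly as in this minimal sketch. The raw transcript layout is not shown on the slides, so the timestamp pattern, the "Patron:"/"Librarian:" speaker labels, and the tiny stopword list are all hypothetical stand-ins. Lemmatization is passed in as a function so the sketch runs without downloading NLTK data; `clean_chat` is an illustrative name, not the authors' code.

```python
import re

# Hypothetical patterns: the slides do not show the raw transcript layout,
# so the timestamp and speaker formats below are illustrative assumptions.
TIMESTAMP_RE = re.compile(r"\b\d{1,2}:\d{2}(?::\d{2})?\s*(?:[AaPp][Mm])?")
SPEAKER_RE = re.compile(r"^\s*(?:patron|librarian)\s*:\s*",
                        re.IGNORECASE | re.MULTILINE)
# URLs plus any leftover HTML tags.
LINK_RE = re.compile(r"https?://\S+|www\.\S+|<[^>]+>")

# Tiny illustrative stopword list; a real run would use a full list
# (for example, NLTK's English stopwords corpus).
STOPWORDS = {"a", "an", "the", "i", "you", "to", "is", "can", "how", "do",
             "for", "of", "on", "and", "my", "me", "it", "in", "what", "this"}


def clean_chat(text, lemmatize=lambda word: word):
    """Apply the six cleaning steps to one chat transcript; returns tokens."""
    text = TIMESTAMP_RE.sub(" ", text)                     # 1) timestamps
    text = SPEAKER_RE.sub("", text)                        # 2) speaker identifiers
    text = LINK_RE.sub(" ", text)                          # 3) URLs / HTML tags
    text = text.encode("ascii", errors="ignore").decode()  # 4) non-ASCII characters
    tokens = re.findall(r"[a-z]+", text.lower())           # letters only; digits dropped
    tokens = [t for t in tokens if t not in STOPWORDS]     # 5) stopwords
    return [lemmatize(t) for t in tokens]                  # 6) lemmatization hook


sample = ("10:15 AM Patron: How do I request an interlibrary loan?\n"
          "10:16 AM Librarian: Use https://example.edu/ill to submit requests.")
print(clean_chat(sample))
# ['request', 'interlibrary', 'loan', 'use', 'submit', 'requests']
```

To match step 6, one could pass `nltk.stem.WordNetLemmatizer().lemmatize` as `lemmatize` (after `nltk.download("wordnet")`), which would typically map "requests" to "request".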

Preprocessing: Data Sets Considered
● Questions only
● Whole chats with all parts of speech (POS)
● Whole chats filtered by POS tagging to nouns and adjectives
● Whole chats filtered by POS tagging to nouns, adjectives, and verbs
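The three whole-chat variants differ only in which POS tags are kept. A minimal sketch, assuming tokens are already tagged with Penn Treebank tags (the tag set `nltk.pos_tag` produces by default); the example is hand-tagged so it runs without NLTK's tagger model, and `filter_by_pos` is a hypothetical helper.

```python
# Penn Treebank tag prefixes (the tag set nltk.pos_tag produces by default).
POS_FILTERS = {
    "all": None,                           # Whole chats with all POS
    "noun_adj": ("NN", "JJ"),              # NN, NNS, NNP, NNPS, JJ, JJR, JJS
    "noun_adj_verb": ("NN", "JJ", "VB"),   # adds VB, VBD, VBG, VBN, VBP, VBZ
}


def filter_by_pos(tagged_tokens, variant):
    """Keep words whose tag starts with one of the variant's prefixes."""
    prefixes = POS_FILTERS[variant]
    if prefixes is None:
        return [word for word, _ in tagged_tokens]
    return [word for word, tag in tagged_tokens if tag.startswith(prefixes)]


# Hand-tagged example; in practice the pairs might come from nltk.pos_tag(tokens).
tagged = [("renew", "VB"), ("my", "PRP$"), ("library", "NN"),
          ("books", "NNS"), ("online", "JJ"), ("today", "NN")]
for variant in POS_FILTERS:
    print(variant, filter_by_pos(tagged, variant))
```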

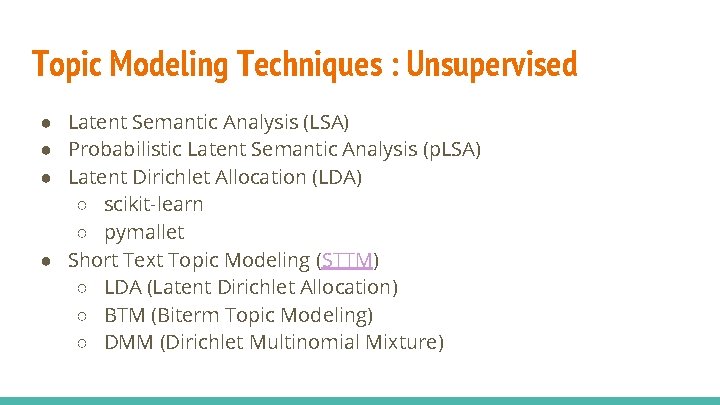

Topic Modeling Techniques: Unsupervised
● Latent Semantic Analysis (LSA)
● Probabilistic Latent Semantic Analysis (pLSA)
● Latent Dirichlet Allocation (LDA)
  ○ scikit-learn
  ○ pymallet
● Short Text Topic Modeling (STTM)
  ○ LDA (Latent Dirichlet Allocation)
  ○ BTM (Biterm Topic Model)
  ○ DMM (Dirichlet Multinomial Mixture)

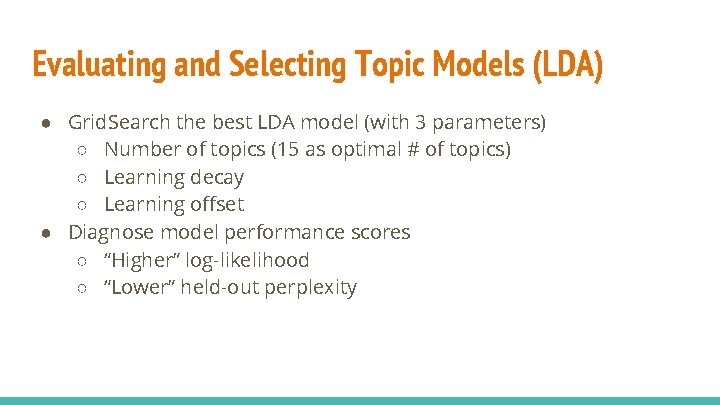

Evaluating and Selecting Topic Models (LDA)
● GridSearch for the best LDA model (over 3 parameters)
  ○ Number of topics (15 was the optimal number of topics)
  ○ Learning decay
  ○ Learning offset
● Diagnose model performance scores
  ○ “Higher” log-likelihood
  ○ “Lower” held-out perplexity
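The grid search might look like the following scikit-learn sketch. Assumptions: the grid values are placeholders (the slide reports only that 15 topics was optimal); `learning_decay` and `learning_offset` only affect scikit-learn's online variational updates, so the sketch sets `learning_method="online"`; an 80/20 split supplies the held-out set for perplexity. `grid_search_lda` is a hypothetical helper.

```python
from sklearn.decomposition import LatentDirichletAllocation
from sklearn.feature_extraction.text import CountVectorizer
from sklearn.model_selection import GridSearchCV, train_test_split


def grid_search_lda(docs, n_topics=(10, 15, 20), decays=(0.5, 0.7, 0.9),
                    offsets=(10.0, 50.0), seed=0):
    """Grid-search LDA; return best params, best CV log-likelihood, held-out perplexity."""
    X = CountVectorizer().fit_transform(docs)
    X_train, X_test = train_test_split(X, test_size=0.2, random_state=seed)
    param_grid = {
        "n_components": list(n_topics),
        "learning_decay": list(decays),     # only used by online updates
        "learning_offset": list(offsets),   # only used by online updates
    }
    base = LatentDirichletAllocation(learning_method="online", max_iter=10,
                                     random_state=seed)
    # No `scoring` argument: GridSearchCV falls back to LDA.score(), the
    # approximate log-likelihood, so "higher is better" as on the slide.
    search = GridSearchCV(base, param_grid, cv=3)
    search.fit(X_train)
    perplexity = search.best_estimator_.perplexity(X_test)  # lower is better
    return search.best_params_, search.best_score_, perplexity
```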

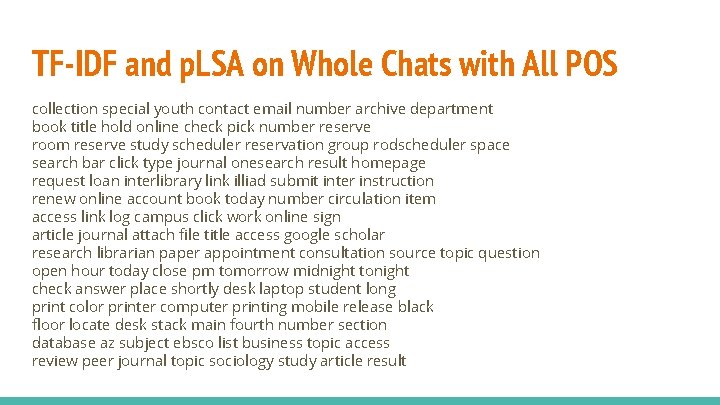

TF-IDF and pLSA on Whole Chats with All POS
● collection special youth contact email number archive department
● book title hold online check pick number reserve
● room reserve study scheduler reservation group rodscheduler space
● search bar click type journal onesearch result homepage
● request loan interlibrary link illiad submit inter instruction
● renew online account book today number circulation item
● access link log campus click work online sign
● article journal attach file title access google scholar
● research librarian paper appointment consultation source topic question
● open hour today close pm tomorrow midnight tonight
● check answer place shortly desk laptop student long
● print color printer computer printing mobile release black
● floor locate desk stack main fourth number section
● database az subject ebsco list business topic access
● review peer journal topic sociology study article result

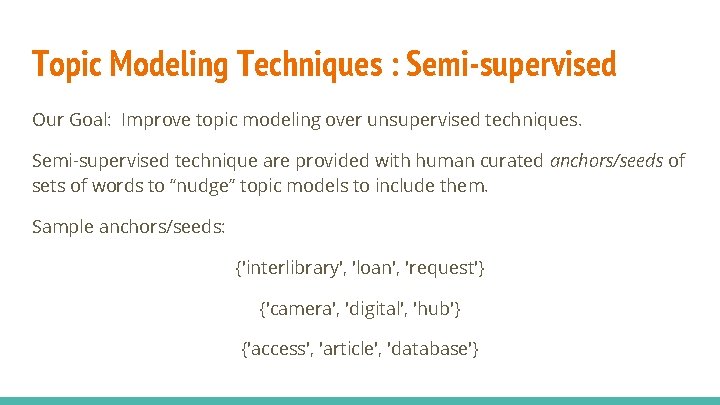

Topic Modeling Techniques: Semi-supervised
Our goal: improve topic modeling over unsupervised techniques.
Semi-supervised techniques are given human-curated anchors/seeds (sets of words) that “nudge” the topic models to include them.
Sample anchors/seeds:
● {'interlibrary', 'loan', 'request'}
● {'camera', 'digital', 'hub'}
● {'access', 'article', 'database'}
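The sample anchor sets could be converted into the inputs that semi-supervised libraries expect with a helper like the hypothetical `build_seed_topics` below. The `{word_id: topic_id}` seed format and the `fit` keyword arguments in the comments follow the GuidedLDA and corextopic READMEs as we understand them; treat them as assumptions to check against the installed versions. The vocabulary is made up for illustration.

```python
def build_seed_topics(anchor_sets, vocabulary):
    """Map anchor words to topic indices: {word_id: topic_id}.

    Also returns the anchor words missing from the vocabulary, since those
    cannot nudge any topic.
    """
    word2id = {word: i for i, word in enumerate(vocabulary)}
    seed_topics, missing = {}, []
    for topic_id, anchors in enumerate(anchor_sets):
        for word in sorted(anchors):
            if word in word2id:
                # A word listed in several sets keeps its last topic.
                seed_topics[word2id[word]] = topic_id
            else:
                missing.append(word)
    return seed_topics, missing


anchors = [{"interlibrary", "loan", "request"},
           {"camera", "digital", "hub"},
           {"access", "article", "database"}]
vocab = ["access", "article", "camera", "database", "digital",
         "interlibrary", "loan", "request", "renew"]
seeds, missing = build_seed_topics(anchors, vocab)
# GuidedLDA (per its README): model.fit(X, seed_topics=seeds, seed_confidence=0.75)
# CorEx (corextopic README): model.fit(X, words=vocab, anchors=[sorted(a) for a in anchors])
```

Reporting missing anchors matters in practice: a seed such as "hub" that was removed by preprocessing silently has no effect.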

Topic Modeling Techniques: GuidedLDA & CorEx
● GuidedLDA
  ○ With 9 anchor words, confidence 0.00
  ○ With 9 anchor words, confidence 0.75
● CorEx (Correlation Explanation)
  ○ With no anchor words, without bigrams
  ○ With 9 anchor words, without bigrams

How to Select Anchors/Seeds?
Methodology:
1) Execute unsupervised topic modeling techniques
2) Combine the topics produced by the unsupervised techniques
3) Analyze the combined topics to find common bi-occurrences and tri-occurrences of words
4) A human curates a set of anchors/seeds from these bi-occurrences and tri-occurrences
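Step 3 can be sketched in plain Python: treat each topic's word list as a set and count how often each word pair and word triple recurs across the combined topics. `common_cooccurrences`, the `min_count` threshold, and the toy topic lists are illustrative assumptions; step 4 remains a human judgment.

```python
from collections import Counter
from itertools import combinations


def common_cooccurrences(topic_lists, min_count=2):
    """Count word pairs and triples that recur across topics from several models."""
    pairs, triples = Counter(), Counter()
    for topic in topic_lists:
        words = sorted(set(topic))          # sorted so ('a', 'b') == ('a', 'b')
        pairs.update(combinations(words, 2))
        triples.update(combinations(words, 3))

    def frequent(counter):
        return [(key, n) for key, n in counter.most_common() if n >= min_count]

    return frequent(pairs), frequent(triples)


# Toy topics from two hypothetical unsupervised runs, combined (step 2).
model_a = [["request", "loan", "interlibrary", "link"], ["room", "reserve", "study", "group"]]
model_b = [["interlibrary", "loan", "request", "illiad"], ["study", "room", "space", "reserve"]]
bi, tri = common_cooccurrences(model_a + model_b)
```

The recurring triples here ({interlibrary, loan, request} and {reserve, room, study}) are the kind of candidates a curator would review.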

Diagnostic Measures: Word Intrusion Task
“In the word intrusion task, the subject is presented with six randomly ordered words. The task of the user is to find the word which is out of place or does not belong with the others, i.e., the intruder.”
“An additional finding was that there was not a clear association between traditional measures of topic models, such as held-out loglikelihood and more intuitive measures such as the word intrusion task.” (Boyd-Graber, Mimno, & Newman, 2014, p. 238)
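A sketch of how word intrusion items might be generated from topic word lists and scored. Following the quoted setup, each item shows six words: five top words of one topic plus one intruder, shuffled. As a simplification (an assumption here), the intruder is drawn from the top words of other topics that are absent from the target topic's list; `make_intrusion_item` and `model_precision` are hypothetical names.

```python
import random


def make_intrusion_item(topics, target, n_top=5, rng=None):
    """Build one word-intrusion question: n_top words of topics[target] plus one intruder."""
    rng = rng or random.Random()
    shown = list(topics[target][:n_top])
    # Simplification: the intruder is a top word of another topic that does
    # not appear anywhere in the target topic's word list.
    candidates = [word for k, topic in enumerate(topics) if k != target
                  for word in topic[:n_top] if word not in topics[target]]
    intruder = rng.choice(candidates)
    words = shown + [intruder]
    rng.shuffle(words)                      # present the six words in random order
    return words, intruder


def model_precision(answers, intruder):
    """Share of subjects who picked the true intruder (higher = more coherent topic)."""
    return sum(answer == intruder for answer in answers) / len(answers)
```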

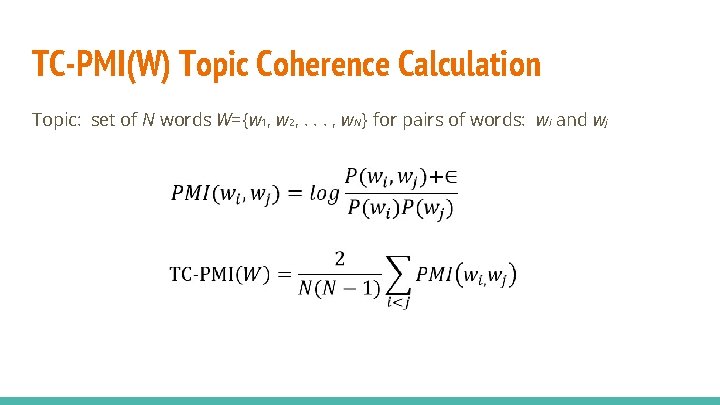

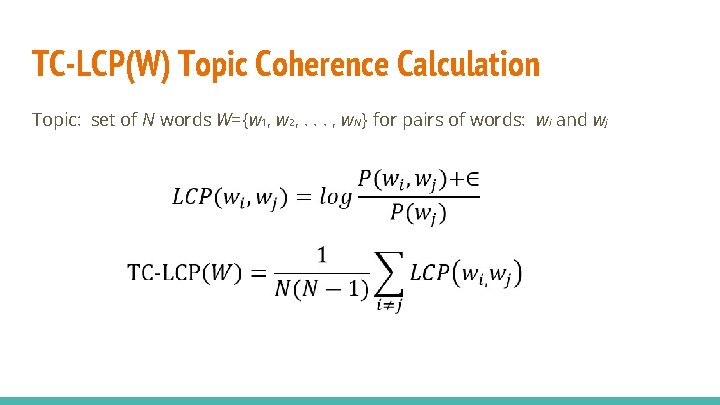

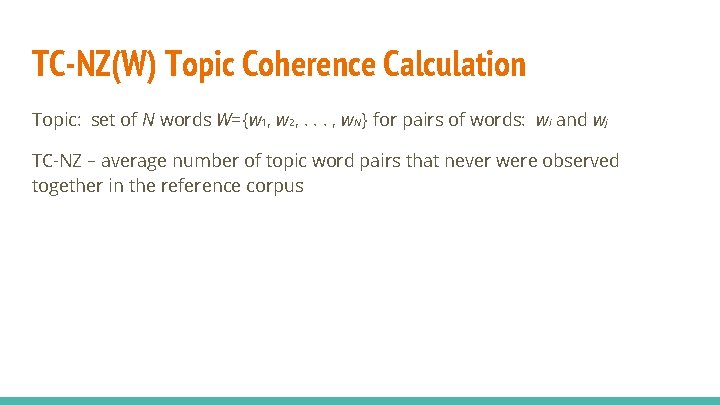

Diagnostic Measures: Topic Coherence
● TC-PMI: normalized Pointwise Mutual Information
● TC-LCP: normalized Log Conditional Probability
● TC-NZ: number of topic word pairs never observed together in the corpus
● STTM Mean Coherence PMI: used for comparison

TC-PMI(W) Topic Coherence Calculation
Topic: a set of N words $W = \{w_1, w_2, \ldots, w_N\}$, scored over pairs of words $w_i$ and $w_j$
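Assuming TC-PMI uses the standard normalized PMI averaged over all pairs of the N topic words, with probabilities estimated from binarized chat counts ($D(w)$ chats contain $w$, $D(w_i, w_j)$ contain both, out of $D$ chats), one way to write it is below. By convention here, NPMI is taken as -1 for a pair that never co-occurs (its limiting value).

```latex
P(w) = \frac{D(w)}{D}, \qquad P(w_i, w_j) = \frac{D(w_i, w_j)}{D}

\mathrm{NPMI}(w_i, w_j) = \frac{\log \dfrac{P(w_i, w_j)}{P(w_i)\,P(w_j)}}{-\log P(w_i, w_j)}, \qquad 1 \le i < j \le N

\text{TC-PMI}(W) = \frac{2}{N(N-1)} \sum_{j=2}^{N} \sum_{i=1}^{j-1} \mathrm{NPMI}(w_i, w_j)
```

NPMI lies in [-1, 1], which fits the TC-PMI values in the comparison tables.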

TC-LCP(W) Topic Coherence Calculation
Topic: a set of N words $W = \{w_1, w_2, \ldots, w_N\}$, scored over pairs of words $w_i$ and $w_j$
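Similarly, assuming TC-LCP averages the log conditional probability of each lower-ranked word $w_j$ given a higher-ranked word $w_i$ (the exact normalization behind "normalized" is not stated on the slide, so this shows the plain average), with a small $\epsilon$ to keep $\log 0$ finite and the same binarized chat probabilities $P(\cdot)$:

```latex
\mathrm{LCP}(w_i, w_j) = \log \frac{P(w_i, w_j) + \epsilon}{P(w_i)} \approx \log P(w_j \mid w_i), \qquad 1 \le i < j \le N

\text{TC-LCP}(W) = \frac{2}{N(N-1)} \sum_{j=2}^{N} \sum_{i=1}^{j-1} \mathrm{LCP}(w_i, w_j)
```

Every term is at most about 0, so values closer to zero mean more coherent topics.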

TC-NZ(W) Topic Coherence Calculation
Topic: a set of N words $W = \{w_1, w_2, \ldots, w_N\}$, scored over pairs of words $w_i$ and $w_j$
TC-NZ: average number of topic word pairs that were never observed together in the reference corpus
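Read literally, TC-NZ counts never-co-occurring pairs per topic and then averages over the $K$ topics of a model, so model-level values should come in steps of $1/K$. With $K = 15$ topics, a single zero pair in the whole model gives $1/15 \approx 0.067$; every TC-NZ value in the comparison tables (0.067, 0.133, 0.200, 0.267, ..., 7.067) is a multiple of $1/15$, which is consistent with this reading:

```latex
\text{TC-NZ}(W) = \sum_{j=2}^{N} \sum_{i=1}^{j-1} \mathbf{1}\left[ D(w_i, w_j) = 0 \right]

\overline{\text{TC-NZ}} = \frac{1}{K} \sum_{k=1}^{K} \text{TC-NZ}(W_k), \qquad
K = 15:\ \ \frac{1}{15} \approx 0.067,\ \ \frac{4}{15} \approx 0.267,\ \ \frac{106}{15} \approx 7.067
```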

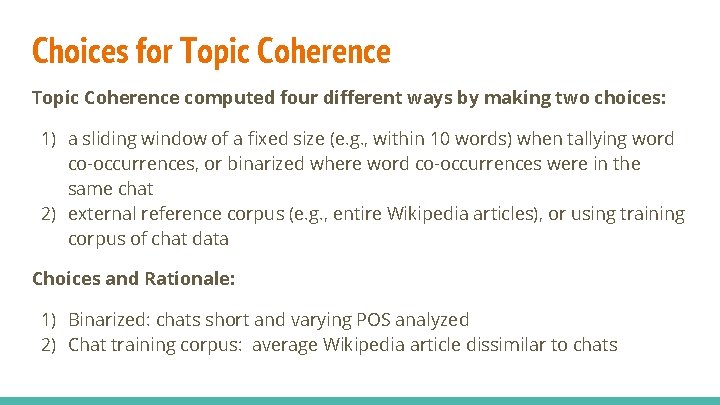

Choices for Topic Coherence
Topic coherence can be computed four different ways, depending on two choices:
1) a sliding window of a fixed size (e.g., within 10 words) when tallying word co-occurrences, or binarized counts, where two words co-occur if they appear in the same chat
2) an external reference corpus (e.g., all Wikipedia articles), or the training corpus of chat data
Choices and rationale:
1) Binarized: chats are short, and we analyzed data sets with varying POS filters
2) Chat training corpus: the average Wikipedia article is dissimilar to chats
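A minimal sketch of the chosen configuration, binarized co-occurrence on the chat training corpus, computing all three measures for a ranked topic and averaging them over a model. It assumes the standard NPMI and log conditional probability definitions (NPMI set to -1 for pairs that never co-occur) and tokenized chats as input; the sliding-window and Wikipedia variants are not shown, and the function names are hypothetical.

```python
import math
from collections import Counter
from itertools import combinations


def doc_frequencies(chats):
    """Binarized counts: each chat adds at most 1 to a word's or a pair's count."""
    word_df, pair_df = Counter(), Counter()
    for tokens in chats:
        words = sorted(set(tokens))
        word_df.update(words)
        pair_df.update(combinations(words, 2))
    return word_df, pair_df, len(chats)


def topic_coherence(topic, word_df, pair_df, n_docs, eps=1e-12):
    """Return (mean NPMI, mean LCP, zero-pair count) for one ranked topic.

    Assumes every topic word occurs in the reference corpus (true when the
    training chats are the reference corpus).
    """
    npmi, lcp, zero_pairs = [], [], 0
    for i, j in combinations(range(len(topic)), 2):   # i < j: w_i ranks higher
        wi, wj = topic[i], topic[j]
        p_i, p_j = word_df[wi] / n_docs, word_df[wj] / n_docs
        p_ij = pair_df[tuple(sorted((wi, wj)))] / n_docs
        if p_ij == 0:
            zero_pairs += 1
            npmi.append(-1.0)                 # limiting value of NPMI
        elif p_ij == 1:
            npmi.append(1.0)                  # pair appears in every chat
        else:
            npmi.append(math.log(p_ij / (p_i * p_j)) / -math.log(p_ij))
        lcp.append(math.log((p_ij + eps) / p_i))
    return sum(npmi) / len(npmi), sum(lcp) / len(lcp), zero_pairs


def model_coherence(topics, chats):
    """Average TC-PMI, TC-LCP, and TC-NZ over all topics of one model."""
    word_df, pair_df, n_docs = doc_frequencies(chats)
    scores = [topic_coherence(t, word_df, pair_df, n_docs) for t in topics]
    return tuple(sum(s[m] for s in scores) / len(scores) for m in range(3))
```

Binarizing also sidesteps a subtlety the slide alludes to: after POS filtering, a fixed sliding window no longer spans the same stretch of the original chat.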

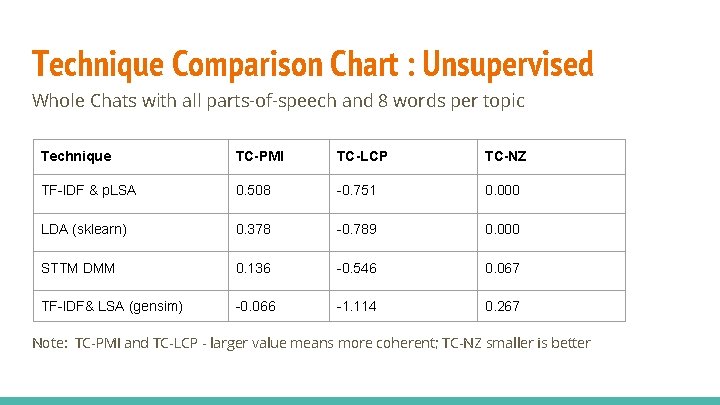

Technique Comparison Chart: Unsupervised
Whole Chats with all parts-of-speech and 8 words per topic

| Technique | TC-PMI | TC-LCP | TC-NZ |
|---|---|---|---|
| TF-IDF & pLSA | 0.508 | -0.751 | 0.000 |
| LDA (sklearn) | 0.378 | -0.789 | 0.000 |
| STTM DMM | 0.136 | -0.546 | 0.067 |
| TF-IDF & LSA (gensim) | -0.066 | -1.114 | 0.267 |

Note: For TC-PMI and TC-LCP, a larger value means more coherent; for TC-NZ, smaller is better.

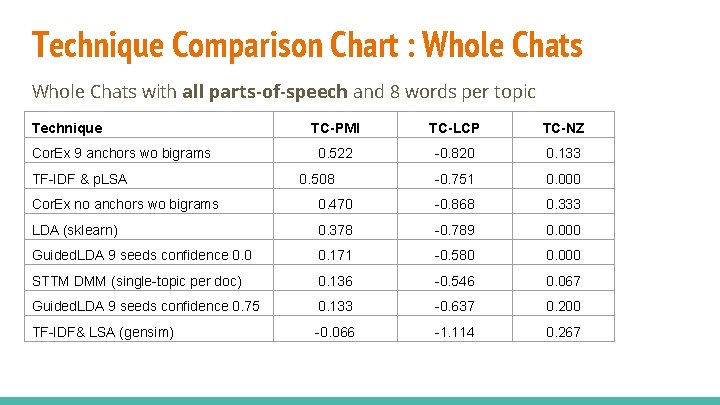

Technique Comparison Chart: Whole Chats with all parts-of-speech and 8 words per topic

| Technique | TC-PMI | TC-LCP | TC-NZ |
|---|---|---|---|
| CorEx 9 anchors w/o bigrams | 0.522 | -0.820 | 0.133 |
| TF-IDF & pLSA | 0.508 | -0.751 | 0.000 |
| CorEx no anchors w/o bigrams | 0.470 | -0.868 | 0.333 |
| LDA (sklearn) | 0.378 | -0.789 | 0.000 |
| GuidedLDA 9 seeds confidence 0.0 | 0.171 | -0.580 | 0.000 |
| STTM DMM (single-topic per doc) | 0.136 | -0.546 | 0.067 |
| GuidedLDA 9 seeds confidence 0.75 | 0.133 | -0.637 | 0.200 |
| TF-IDF & LSA (gensim) | -0.066 | -1.114 | 0.267 |

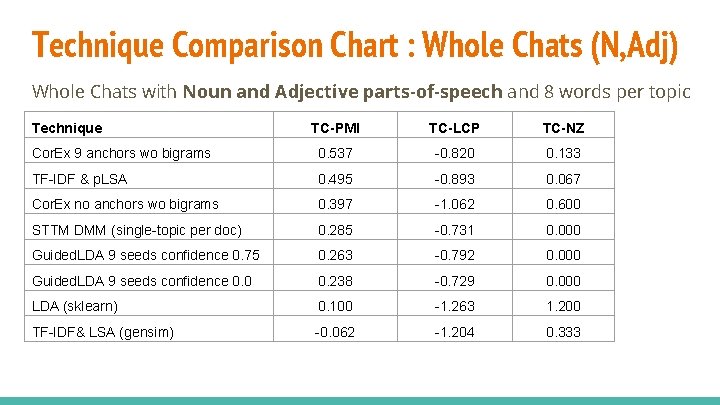

Technique Comparison Chart: Whole Chats (N, Adj)
Whole Chats with Noun and Adjective parts-of-speech and 8 words per topic

| Technique | TC-PMI | TC-LCP | TC-NZ |
|---|---|---|---|
| CorEx 9 anchors w/o bigrams | 0.537 | -0.820 | 0.133 |
| TF-IDF & pLSA | 0.495 | -0.893 | 0.067 |
| CorEx no anchors w/o bigrams | 0.397 | -1.062 | 0.600 |
| STTM DMM (single-topic per doc) | 0.285 | -0.731 | 0.000 |
| GuidedLDA 9 seeds confidence 0.75 | 0.263 | -0.792 | 0.000 |
| GuidedLDA 9 seeds confidence 0.0 | 0.238 | -0.729 | 0.000 |
| LDA (sklearn) | 0.100 | -1.263 | 1.200 |
| TF-IDF & LSA (gensim) | -0.062 | -1.204 | 0.333 |

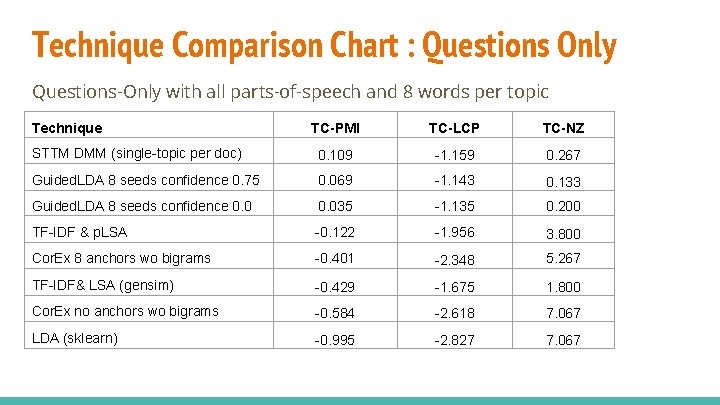

Technique Comparison Chart: Questions Only
Questions-Only with all parts-of-speech and 8 words per topic

| Technique | TC-PMI | TC-LCP | TC-NZ |
|---|---|---|---|
| STTM DMM (single-topic per doc) | 0.109 | -1.159 | 0.267 |
| GuidedLDA 8 seeds confidence 0.75 | 0.069 | -1.143 | 0.133 |
| GuidedLDA 8 seeds confidence | 0.035 | -1.135 | 0.200 |
| TF-IDF & pLSA | -0.122 | -1.956 | 3.800 |
| CorEx 8 anchors w/o bigrams | -0.401 | -2.348 | 5.267 |
| TF-IDF & LSA (gensim) | -0.429 | -1.675 | 1.800 |
| CorEx no anchors w/o bigrams | -0.584 | -2.618 | 7.067 |
| LDA (sklearn) | -0.995 | -2.827 | 7.067 |

Conclusions
● Unsupervised
  ○ pLSA
  ○ STTM DMM - single question only
● Semi-supervised
  ○ CorEx with anchor words
  ○ GuidedLDA with anchor words - questions only
● Whole-chat dataset better than questions only, but parts-of-speech analysis probably not worthwhile

Future Directions
● To increase the amount of data and diversify the types of data, we would need to collect more data from diverse institutions.
● Semi-supervised approaches look promising, but they require subjective human judgment to select anchor words. To overcome this, we would need to identify a library-specific corpus and automate the selection of anchor words.
● We would need to identify and use new methods for diagnosing or evaluating models and their output, to help us obtain novel types of insights (e.g., MALLET’s Diagnostic Measures).

Contributors
● John Russell, Digital Humanities Librarian and Associate Director of the Center for Humanities and Information, Pennsylvania State University
● Scott Enderle, Digital Humanities Specialist, University of Pennsylvania
● Ellie Kohler, Library Data Analyst, Virginia Tech University Libraries
● Megan Ozeran, Data Analytics & Visualization Librarian, University of Illinois at Urbana-Champaign
● Xanda Schofield, Assistant Professor of Computer Science and Mathematics, Harvey Mudd College

Acknowledgment
This project was made possible in part by the Institute of Museum and Library Services [National Leadership Grants for Libraries, LG-34-190074-19].

Q&A Thank you!
