
Topic #5: Selection Theory
Paul L. Schumann, Ph.D., Professor of Management
MGMT 440: Human Resource Management
© 2008 by Paul L. Schumann. All rights reserved.

Outline
- Selection in HR
- Selection Process
- Review of Correlation
- Criteria for Selection Methods
- Using Selection Methods
- Utility Analysis
- Taylor-Russell Table
- Decision-Making in Selection

Selection in HR
- Selection is the HR term for hiring.
- Problem: we don't know an applicant's job performance until after we hire the applicant.
- Solution: we need to predict the applicant's job performance.
- Selection methods: the predictors of job performance used to make the selection decision.
  - Examples of selection methods: résumé evaluations, employment interviews, tests of various KSAs (knowledge, skills, and abilities), …
- Selection methods (predictors of job performance) → predicted job performance of each applicant → selection decision (positive or negative: hire or don't hire)

Selection in HR
- We use the selection methods (which are measures of the applicant's qualifications) to predict the job performance of each applicant, and we use those predictions to make the selection decision.
- Positive selection decision: hire the applicant if we predict the applicant will have good job performance.
- Negative selection decision: don't hire the applicant if we predict the applicant will have poor job performance.

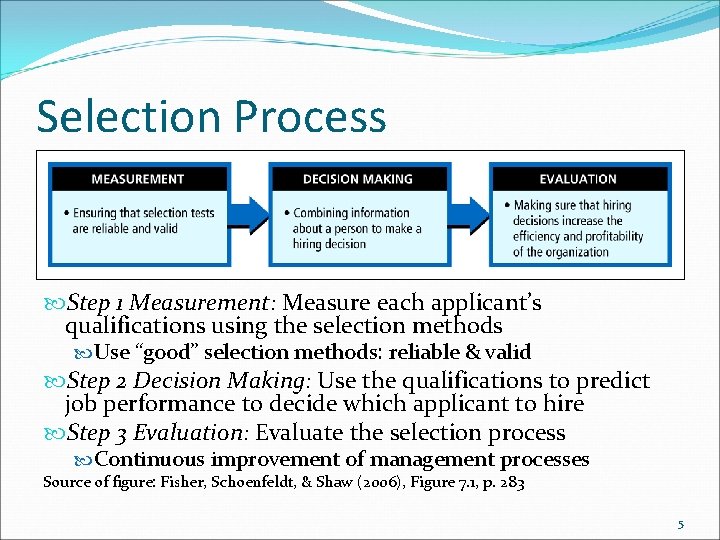

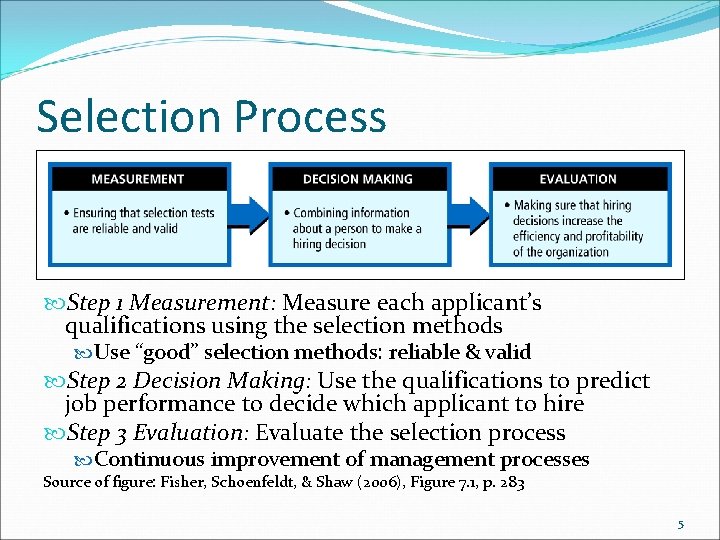

Selection Process
- Step 1, Measurement: measure each applicant's qualifications using the selection methods.
  - Use "good" selection methods: reliable & valid.
- Step 2, Decision making: use the qualifications to predict job performance and decide which applicant to hire.
- Step 3, Evaluation: evaluate the selection process.
  - Continuous improvement of management processes
- Source of figure: Fisher, Schoenfeldt, & Shaw (2006), Figure 7.1, p. 283

Review of Correlation
- Correlation measures the degree of relationship between 2 variables.
- Example: What is the relationship (correlation) between the job interview & job performance?
  - Do applicants who do better on the interview also do better on the job?
  - Strong correlation means the job interview accurately predicts job performance.
    - Use the job interview as a selection method.
  - Weak correlation means the job interview does not predict job performance.
    - Don't use the job interview as a selection method.

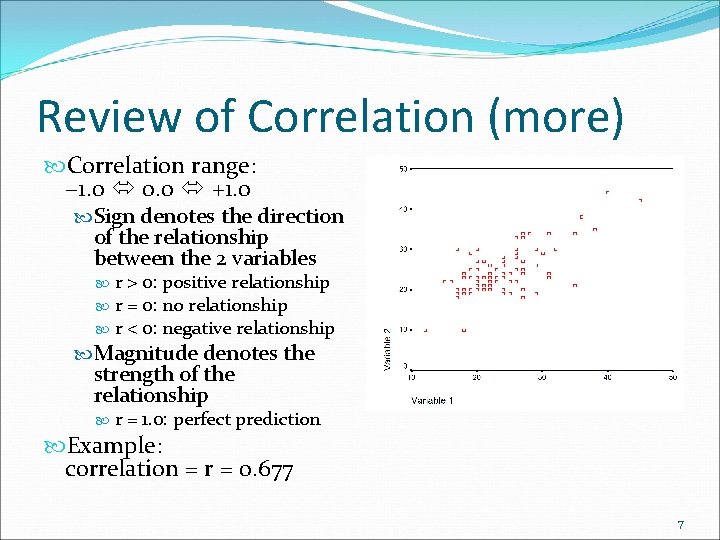

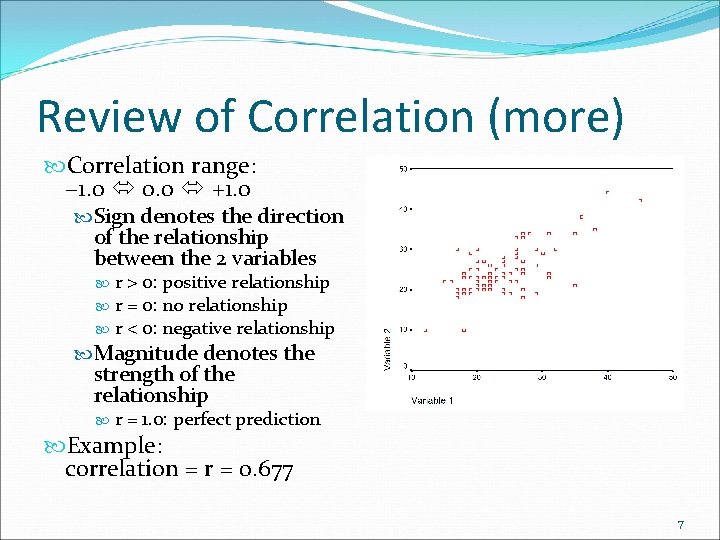

Review of Correlation (more)
- Correlation range: −1.0 ≤ r ≤ +1.0
- The sign denotes the direction of the relationship between the 2 variables:
  - r > 0: positive relationship
  - r = 0: no (linear) relationship
  - r < 0: negative relationship
- The magnitude (absolute value) denotes the strength of the relationship:
  - |r| = 1.0: perfect prediction
- Example: correlation = r = 0.677
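To show what the correlation coefficient r actually computes, here is a minimal Python sketch of the standard Pearson formula. The helper names `pearson_r` and `describe` and the sample ratings are hypothetical, for illustration only; in practice Excel or SPSS does this calculation.

```python
import math


def pearson_r(x, y):
    """Pearson correlation coefficient r between two equal-length lists."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two lists of equal length with at least 2 values")
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    # Sum of cross-products of deviations from the means
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    # Sums of squared deviations for each variable
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("r is undefined when one variable does not vary")
    return sxy / math.sqrt(sxx * syy)


def describe(r):
    """Read off the direction (sign) and strength (magnitude) of r."""
    direction = "positive" if r > 0 else "negative" if r < 0 else "no"
    return f"r = {r:.3f}: {direction} relationship, strength |r| = {abs(r):.3f}"


# Hypothetical interview ratings and job performance ratings for 5 employees
interview = [62, 75, 81, 90, 70]
performance = [3, 4, 4, 6, 3]
print(describe(pearson_r(interview, performance)))
```

Reversing the order of one list flips the sign of r but leaves |r| unchanged, which is why the sign and the magnitude are read separately.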

2 Criteria for Selection Methods
1. Reliability: consistency of measurement
   - Does the measuring tool give us the same measurement every time we measure the same thing?
   - Example: a reliable ruler vs. an unreliable ruler
   - Example: a reliable programming skills test?
2. Validity: Does the measuring tool measure what we really want to measure?
   - Example: Is a ruler a valid measure of temperature? No.
   - Example: Is the programming skills test a valid predictor of job performance of computer programmers? Maybe.

Criterion 1: Reliability
- Reliability: consistency of measurement
- 3 methods of determining reliability:
  1. Test-retest reliability: measure the same thing twice.
     - Example: give the programming skills test to the same people (applicants or employees) twice and correlate their two sets of scores.
  2. Inter-rater reliability: see if 2 raters agree in their ratings.
     - Example: have 2 interviewers witness the same job interviews; each interviewer rates each applicant; then correlate the two interviewers' ratings.
  3. Internal consistency reliability: "coefficient alpha"
     - Checks whether the items within a single test consistently measure the same thing.
     - Example: programming skills test
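Coefficient alpha (Cronbach's alpha) compares the variances of the individual test items with the variance of people's total scores: α = k/(k − 1) × (1 − sum of item variances / variance of total scores), where k is the number of items. Below is a minimal Python sketch, assuming the scores are arranged as one list per test item (a layout chosen here for illustration); `cronbach_alpha` and the sample scores are hypothetical.

```python
from statistics import pvariance


def cronbach_alpha(item_scores):
    """Coefficient alpha for a test.

    item_scores[i][j] is respondent j's score on test item i
    (one inner list per item; this layout is an assumption).
    """
    k = len(item_scores)
    if k < 2:
        raise ValueError("alpha needs at least 2 items")
    n = len(item_scores[0])
    if any(len(item) != n for item in item_scores):
        raise ValueError("every item needs a score for every respondent")
    # Each respondent's total score across all items
    totals = [sum(item[j] for item in item_scores) for j in range(n)]
    total_var = pvariance(totals)
    if total_var == 0:
        raise ValueError("alpha is undefined when total scores do not vary")
    sum_item_var = sum(pvariance(item) for item in item_scores)
    # Population variances are used throughout; the n vs. n - 1 choice cancels in the ratio
    return (k / (k - 1)) * (1 - sum_item_var / total_var)


# Hypothetical scores of 4 applicants on a 3-item programming skills test
items = [
    [3, 4, 2, 5],
    [2, 4, 3, 5],
    [3, 5, 2, 4],
]
print(f"coefficient alpha = {cronbach_alpha(items):.3f}")
```

When every item ranks people the same way, alpha reaches 1.0; items that disagree with one another pull it down.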

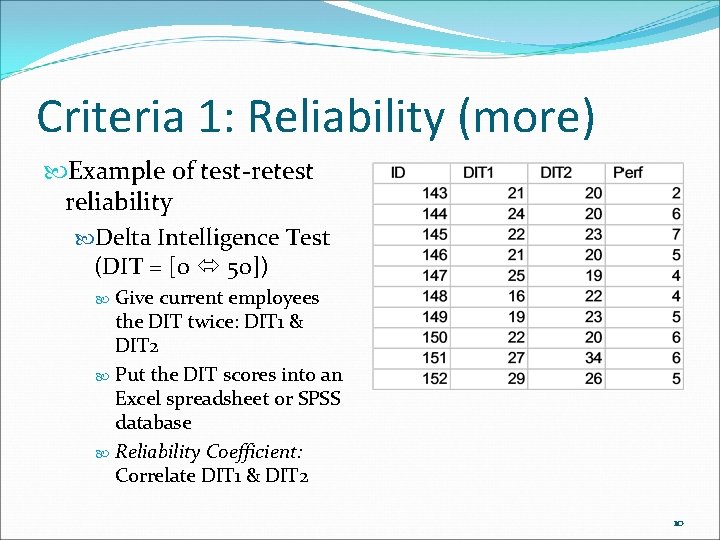

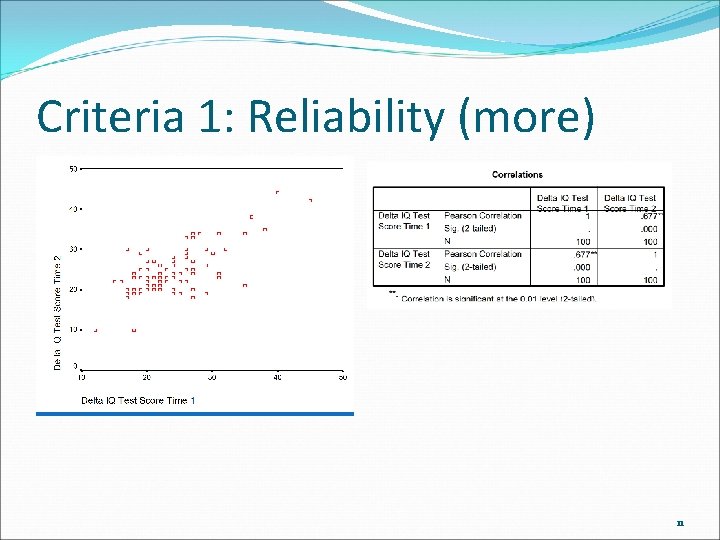

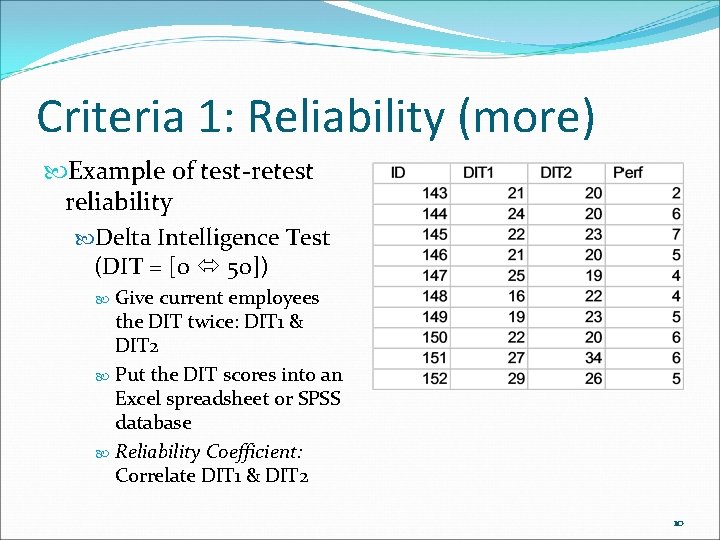

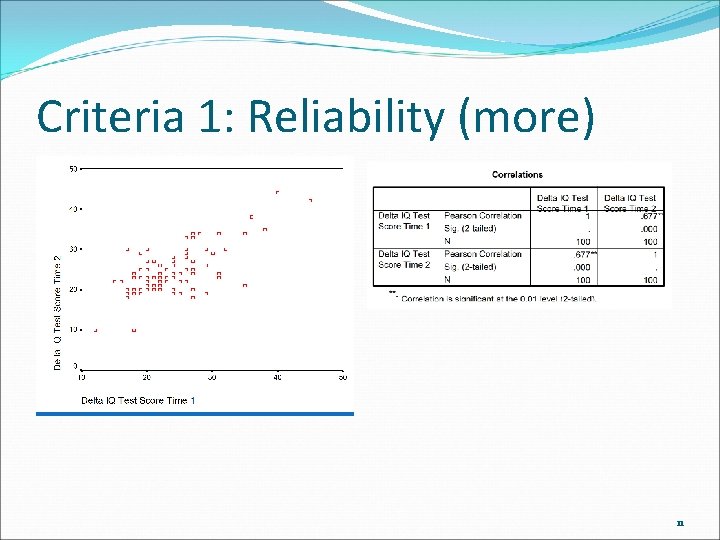

Criterion 1: Reliability (more)
- Example of test-retest reliability: Delta Intelligence Test (DIT; scores range from 0 to 50)
  - Give current employees the DIT twice: DIT 1 & DIT 2.
  - Put the DIT scores into an Excel spreadsheet or SPSS database.
  - Reliability coefficient: correlate DIT 1 & DIT 2.

Criterion 1: Reliability (more)

Criterion 2: Validity
- Validity: Are we measuring what we want to measure?
- 3 methods of determining validity:
  1. Content validity: judge whether the content of the selection method is a good match with the content of the job.
     - Example: Are the questions on the programming skills test asking about programming skills that are used on the job?
     - Example: The questions on the test are mostly about writing programs in COBOL, while the job uses C++ and Java.
     - Based on this mismatch between the content of the test and the content of the job, we might judge the programming skills test to have poor validity for our purposes.

Criterion 2: Validity (more)
- 3 methods of determining validity (more):
  2. Criterion-related concurrent validity:
     - Measure 2 things at the same time (concurrently):
       - Selection method (predictor of job performance)
         - Example: programming skills test
       - Job performance
         - Example: job performance ratings of programmers
     - Use our current employees as our sample (we can't observe applicants' job performance at the time they apply).
       - Example: use our current programmers; give them the test.
     - Correlate selection method scores with job performance ratings.

Criterion 2: Validity (more)
- 3 methods of determining validity (more):
  3. Criterion-related predictive validity:
     - Measure 2 things at different times:
       - Measure now: selection method (predictor of job performance)
         - Example: programming skills test
       - Measure in the future: job performance
         - Example: job performance ratings of programmers
     - Use the applicants we hire as our sample.
       - Example: give applicants the test and keep their scores on file.
     - Correlate selection method scores with job performance ratings after the new hires have been on the job for a while.

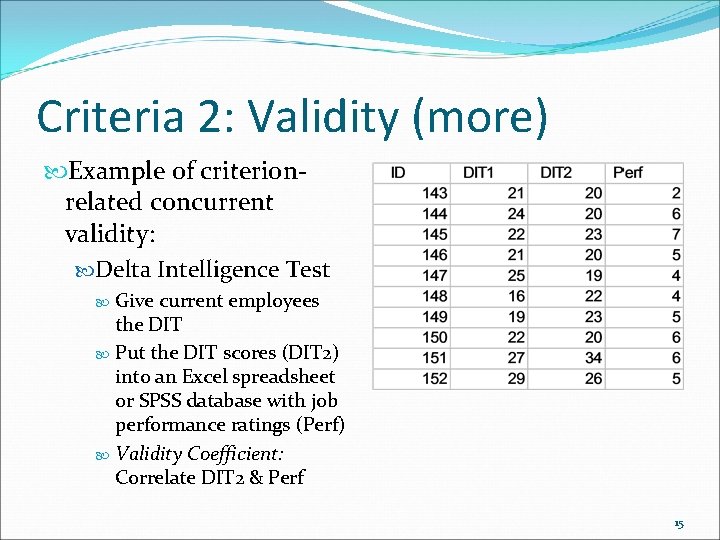

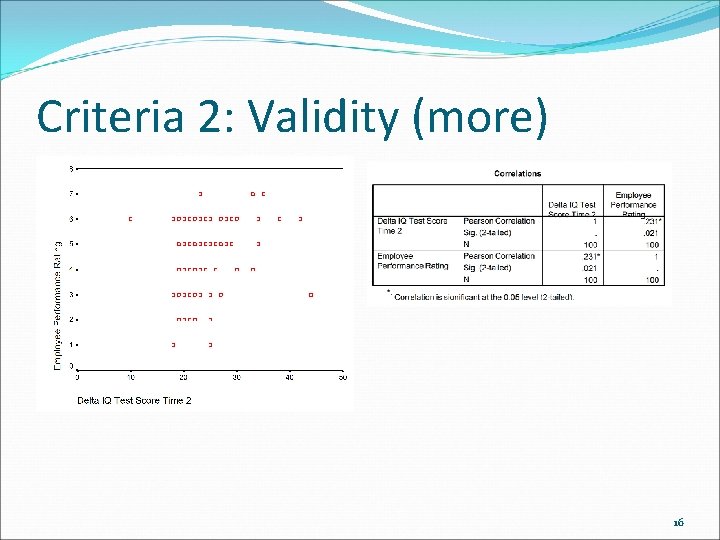

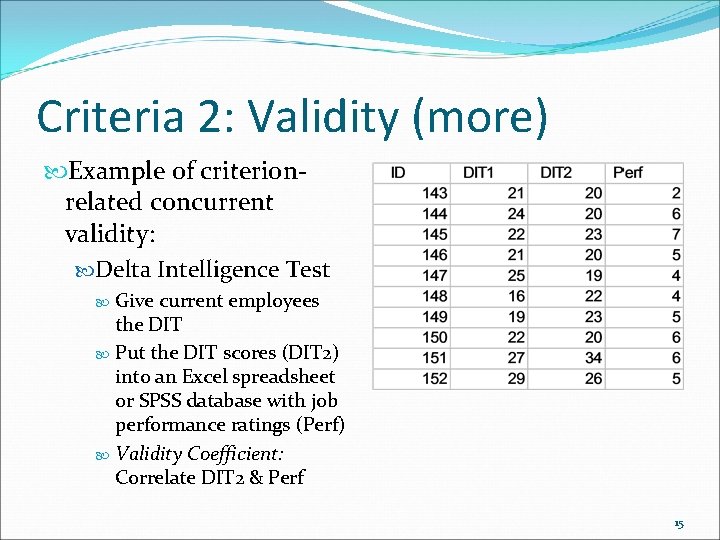

Criterion 2: Validity (more)
- Example of criterion-related concurrent validity: Delta Intelligence Test
  - Give current employees the DIT.
  - Put the DIT scores (DIT 2) into an Excel spreadsheet or SPSS database along with the employees' job performance ratings (Perf).
  - Validity coefficient: correlate DIT 2 & Perf.

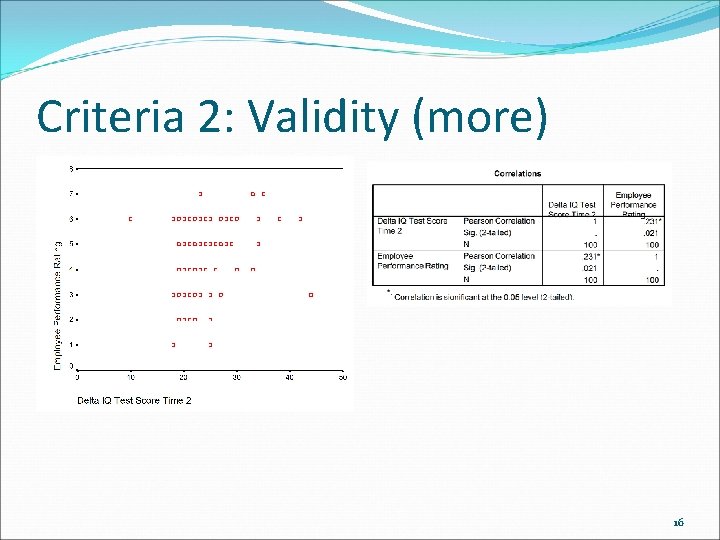

Criterion 2: Validity (more)

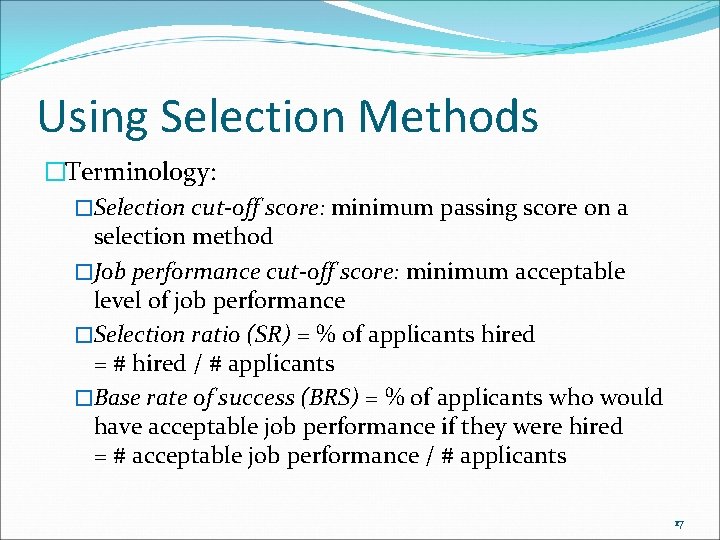

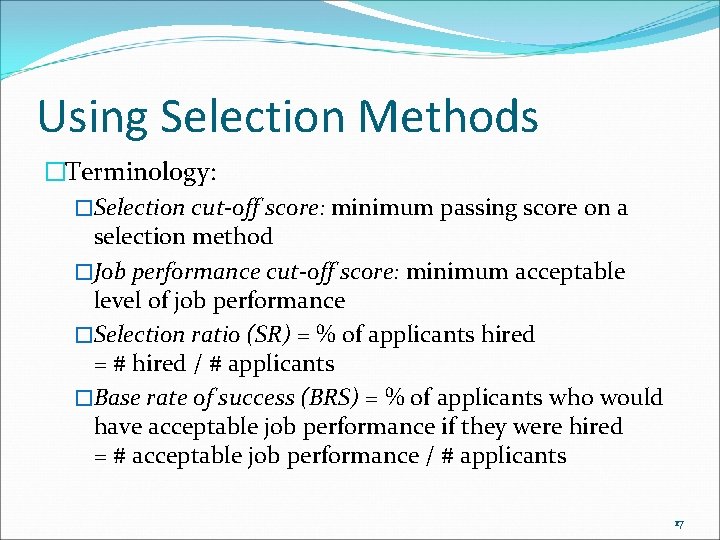

Using Selection Methods
- Terminology:
  - Selection cut-off score: minimum passing score on a selection method
  - Job performance cut-off score: minimum acceptable level of job performance
  - Selection ratio (SR) = % of applicants hired = # hired / # applicants
  - Base rate of success (BRS) = % of applicants who would have acceptable job performance if they were hired = # applicants with acceptable job performance / # applicants

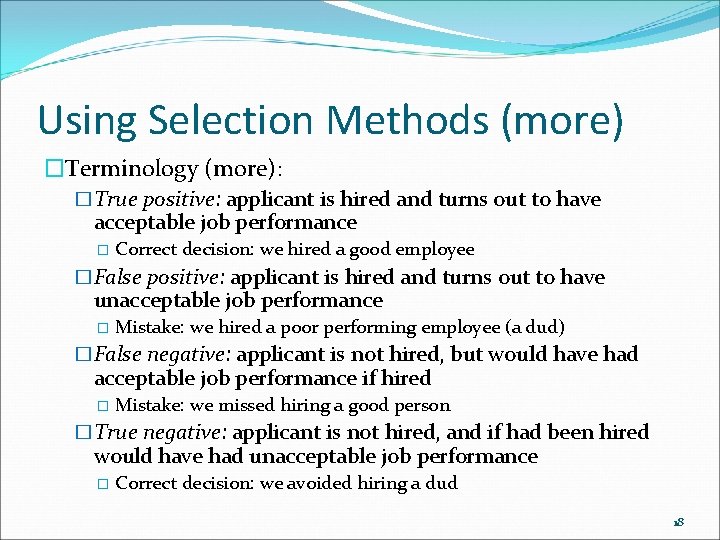

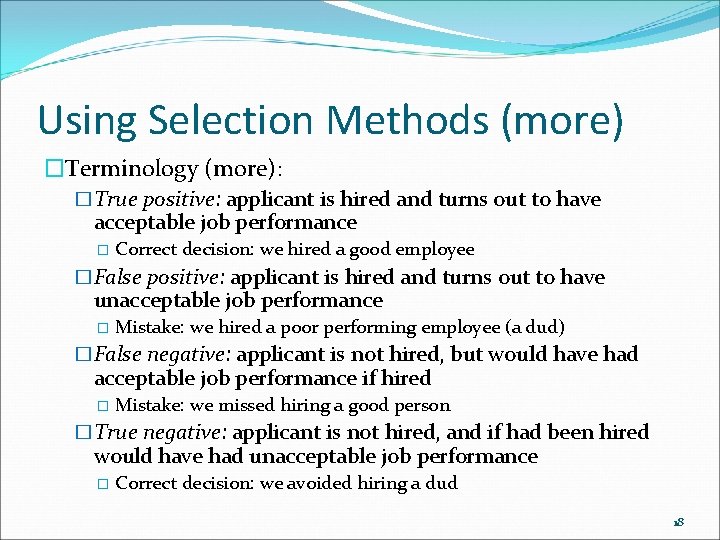

Using Selection Methods (more)
- Terminology (more):
  - True positive: the applicant is hired and turns out to have acceptable job performance.
    - Correct decision: we hired a good employee.
  - False positive: the applicant is hired and turns out to have unacceptable job performance.
    - Mistake: we hired a poorly performing employee (a "dud").
  - False negative: the applicant is not hired but would have had acceptable job performance if hired.
    - Mistake: we missed hiring a good person.
  - True negative: the applicant is not hired and would have had unacceptable job performance if hired.
    - Correct decision: we avoided hiring a dud.
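When job performance is known for everyone in a sample (for example, current employees in a concurrent validity study), each person can be sorted into one of the four outcomes, and SR and BRS follow directly from the counts. Below is a minimal Python sketch; the function names and sample data are hypothetical, and it assumes both cut-offs work as minimum passing levels, so a score equal to the cut-off passes.

```python
from collections import Counter


def classify(predictor_score, performance, selection_cutoff, performance_cutoff):
    """Label one person's selection outcome.

    Both cut-offs are treated as minimum passing levels (a score equal to
    the cut-off passes), matching the definitions of the cut-off scores.
    """
    hired = predictor_score >= selection_cutoff
    acceptable = performance >= performance_cutoff
    if hired and acceptable:
        return "true positive"
    if hired:
        return "false positive"
    if acceptable:
        return "false negative"
    return "true negative"


def selection_summary(people, selection_cutoff, performance_cutoff):
    """people: list of (predictor_score, performance_rating) pairs.

    Returns (outcome counts, selection ratio SR, base rate of success BRS).
    """
    if not people:
        raise ValueError("need at least one person")
    counts = Counter(
        classify(score, perf, selection_cutoff, performance_cutoff)
        for score, perf in people
    )
    n = len(people)
    sr = (counts["true positive"] + counts["false positive"]) / n   # # hired / # applicants
    brs = (counts["true positive"] + counts["false negative"]) / n  # # acceptable / # applicants
    return counts, sr, brs


# Hypothetical (DIT score, performance rating) pairs for 4 employees
sample = [(25, 5), (30, 2), (10, 4), (15, 1)]
counts, sr, brs = selection_summary(sample, selection_cutoff=22, performance_cutoff=3)
print(dict(counts), f"SR = {sr:.0%}", f"BRS = {brs:.0%}")
```

Note that SR depends only on the selection cut-off, while BRS depends only on the performance cut-off and the applicant pool.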

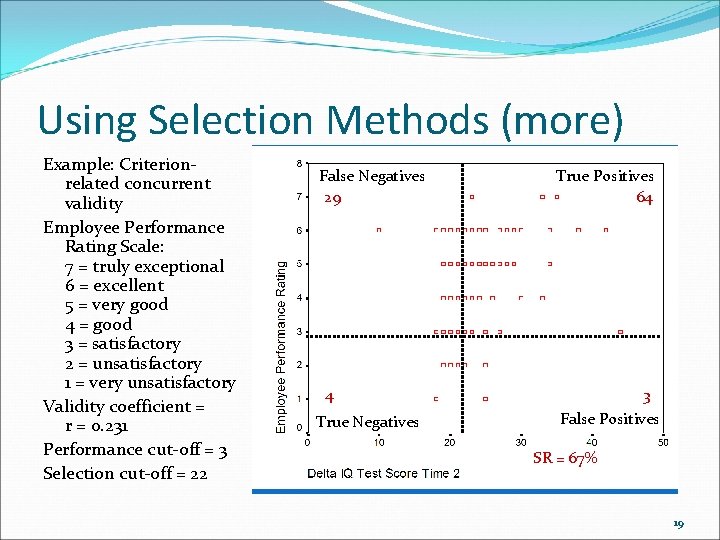

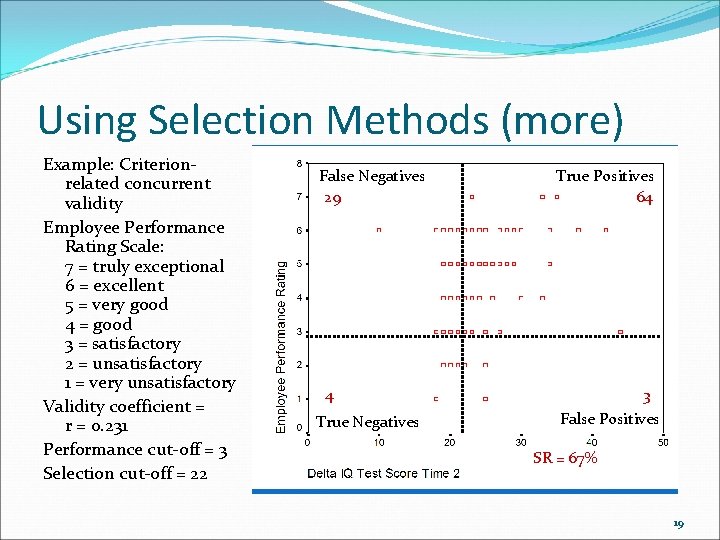

Using Selection Methods (more)
- Example: criterion-related concurrent validity
- Employee performance rating scale: 7 = truly exceptional, 6 = excellent, 5 = very good, 4 = good, 3 = satisfactory, 2 = unsatisfactory, 1 = very unsatisfactory
- Validity coefficient = r = 0.231
- Performance cut-off = 3; selection cut-off = 22

| | Not hired (score below 22) | Hired (score 22 or above) |
|---|---|---|
| Acceptable performance (rating 3 or above) | False negatives: 29 | True positives: 64 |
| Unacceptable performance (rating below 3) | True negatives: 4 | False positives: 3 |

- SR = 67%

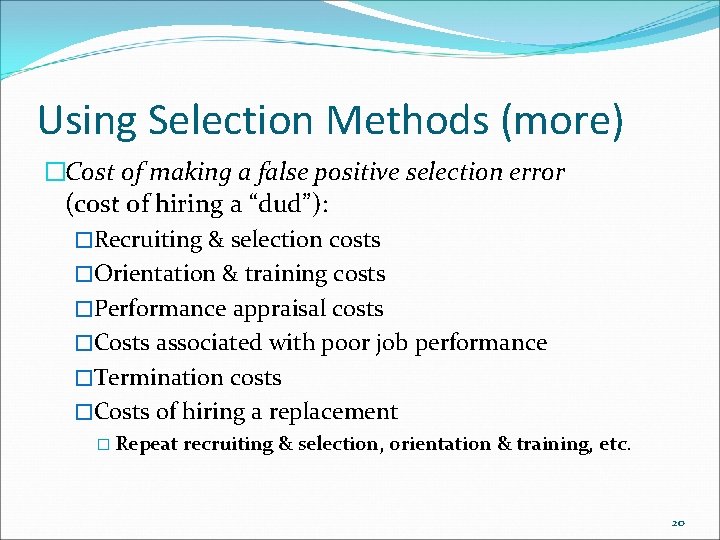

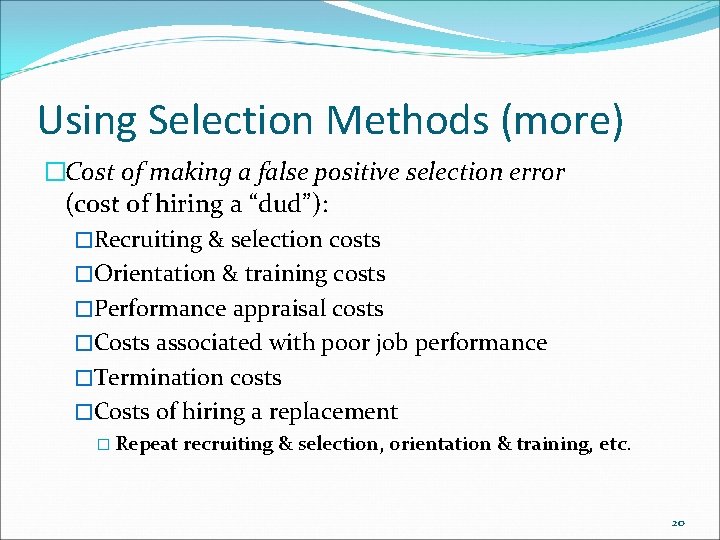

Using Selection Methods (more)
- Cost of making a false positive selection error (cost of hiring a "dud"):
  - Recruiting & selection costs
  - Orientation & training costs
  - Performance appraisal costs
  - Costs associated with poor job performance
  - Termination costs
  - Costs of hiring a replacement
    - Repeat recruiting & selection, orientation & training, etc.

Using Selection Methods (more)
- Cost of making a false negative selection error (cost of failing to hire an applicant who would have had good job performance):
  - Recruiting & selection costs
  - Opportunity costs: the cost of letting good talent slip through our fingers
  - Costs associated with needing a bigger pool of applicants
    - Increases recruiting & selection costs

Using Selection Methods (more)
- What can we do to manage the costs associated with both types of selection mistakes?
  - Change our hiring standards
    - Raise our hiring standards: raise the selection cut-off score
      - Example: raise the minimum passing score on the DIT
    - Lower our hiring standards: lower the selection cut-off score
  - Change which selection methods we use
    - Switch from a less valid selection method to a more valid selection method
    - Switch from a more valid selection method to a less valid selection method

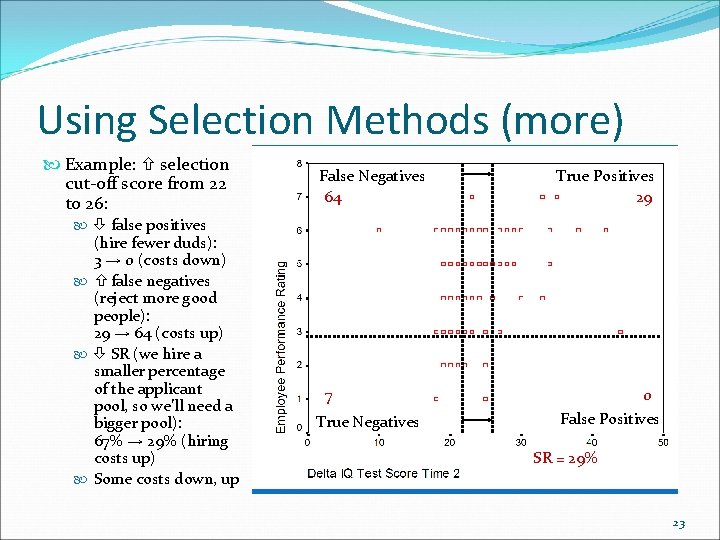

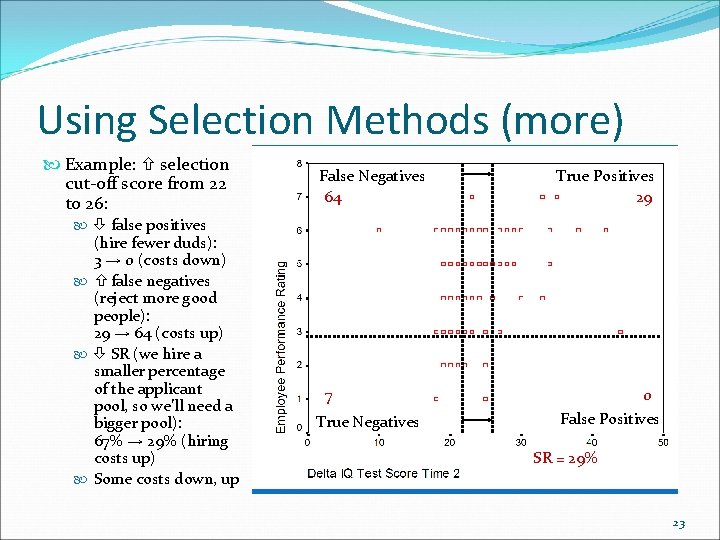

Using Selection Methods (more)
- Example: raise the selection cut-off score from 22 to 26

| | Not hired (score below 26) | Hired (score 26 or above) |
|---|---|---|
| Acceptable performance | False negatives: 64 | True positives: 29 |
| Unacceptable performance | True negatives: 7 | False positives: 0 |

- False positives (we hire fewer duds): 3 → 0 (costs go down)
- False negatives (we reject more good people): 29 → 64 (costs go up)
- SR (we hire a smaller percentage of the applicant pool, so we'll need a bigger pool): 67% → 29% (hiring costs go up)
- Some costs go down, and some go up.
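The arithmetic behind the two cut-off scenarios can be checked directly from the slide counts (100 people in each scenario). Below is a short Python sketch; `outcome_rates` is a hypothetical helper, and its third rate (true positives divided by everyone hired) is the same quantity the Taylor-Russell table reports.

```python
def outcome_rates(tp, fp, fn, tn):
    """Rates implied by the four outcome counts."""
    n = tp + fp + fn + tn
    hired = tp + fp
    return {
        "SR": hired / n,        # share of applicants hired
        "BRS": (tp + fn) / n,   # share who would perform acceptably
        # share of hires who perform acceptably (undefined if no one is hired)
        "share of hires acceptable": tp / hired if hired else float("nan"),
    }


# Outcome counts from the slides' scatter plots
scenarios = {
    "selection cut-off 22": {"tp": 64, "fp": 3, "fn": 29, "tn": 4},
    "selection cut-off 26": {"tp": 29, "fp": 0, "fn": 64, "tn": 7},
}
for name, counts in scenarios.items():
    rates = outcome_rates(**counts)
    print(name, {key: round(value, 3) for key, value in rates.items()})
```

SR falls from 0.67 to 0.29 while BRS stays at 0.93 (64 + 29 = 93 of 100 in both cases): the applicant pool is the same, so only the hiring decisions change. The share of hires with acceptable performance rises from 64 of 67 (about 95.5%) to 29 of 29 (100%).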

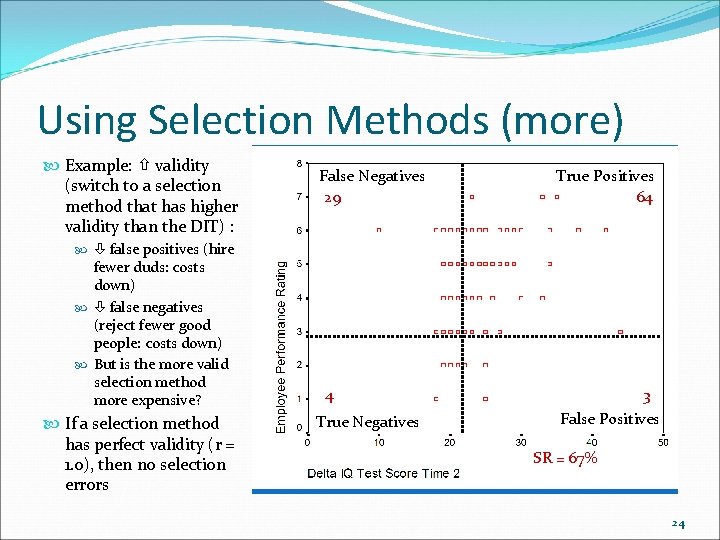

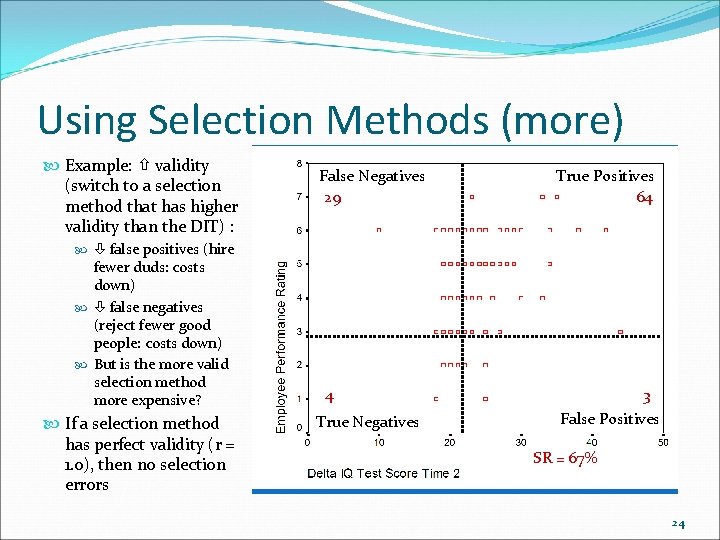

Using Selection Methods (more)
- Example: switch to a selection method that has higher validity than the DIT
  - False positives: we hire fewer duds (costs go down)
  - False negatives: we reject fewer good people (costs go down)
  - But is the more valid selection method more expensive?
- If a selection method had perfect validity (r = 1.0), there would be no selection errors.
- Figure quadrant counts: false negatives 29, true positives 64, true negatives 4, false positives 3; SR = 67%

Utility Analysis
- We see that there are tradeoffs to be analyzed.
- If we raise our hiring standards (raise the selection cut-off score), there are:
  - Fewer false positives: we hire fewer duds (costs go down)
  - More false negatives: more good talent slips away (costs go up)
  - A lower selection ratio: we reject more applicants, and so need a bigger pool of applicants (costs go up)
- If we switch to a more valid selection method, there are:
  - Fewer false positives: we hire fewer duds (costs go down)
  - Fewer false negatives: less good talent slips away (costs go down)
  - Possibly higher method costs: the more valid method might be more expensive (costs go up)

Utility Analysis (more)
- Goal of utility analysis: determine the value of the selection system, or of changes in the selection system.
- Utility analysis is a method of cost-benefit analysis.
  - "Value" is measured in money terms.
  - How does the current selection system add value to the organization?
  - How would changes to the selection system add value to the organization?
    - Example: evaluate the effect of changing our hiring standards (e.g., raise the selection cut-off score).
    - Example: evaluate the effect of switching selection methods (e.g., switch to a more valid selection method).

Utility Analysis (more)
- Complete utility analysis evaluates both the benefits and the costs (in money terms) of changing a selection procedure.
  - It uses a mathematical formula (we'll leave the formula for HR majors & minors when they take MGMT 441: Staffing).
- Partial utility analysis uses the Taylor-Russell table.
  - The table tells us what percentage of our hires will turn out to have acceptable job performance.
  - It doesn't use money as the unit of measurement.
  - It doesn't directly consider the costs.

Taylor-Russell Table
- The table tells us what percentage of our hires will turn out to have acceptable job performance.
- If the table gives an answer of ".67" in a particular situation, it means that 67% of the people hired in that situation will turn out to have acceptable job performance.
  - This implies that 100% − 67% = 33% of the people we hire in that situation will turn out to have unacceptable job performance:
    - 67% good hires (true positives)
    - 33% poor hires (false positives)

Taylor-Russell Table
- To use the table, we need to estimate 3 things:
  - Base rate of success (BRS): What percentage of our applicant pool could do the job well?
    - Estimate how good a job of recruiting we've done: do we have a good pool of applicants, a bad pool, or something in between?
    - If everyone in the pool could do the job well (BRS = 100%), we could hire anyone in the pool at random.
  - Level of validity (r): What's the validity coefficient for our selection method?
  - Selection ratio (SR): What percentage of the applicant pool are we going to hire?

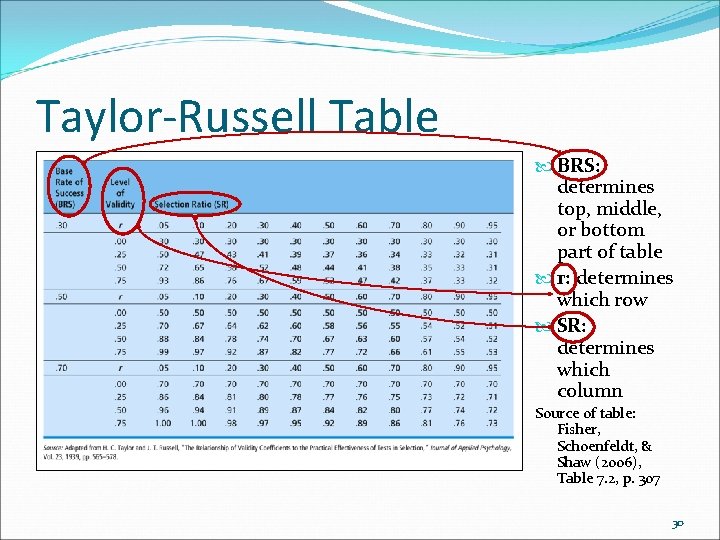

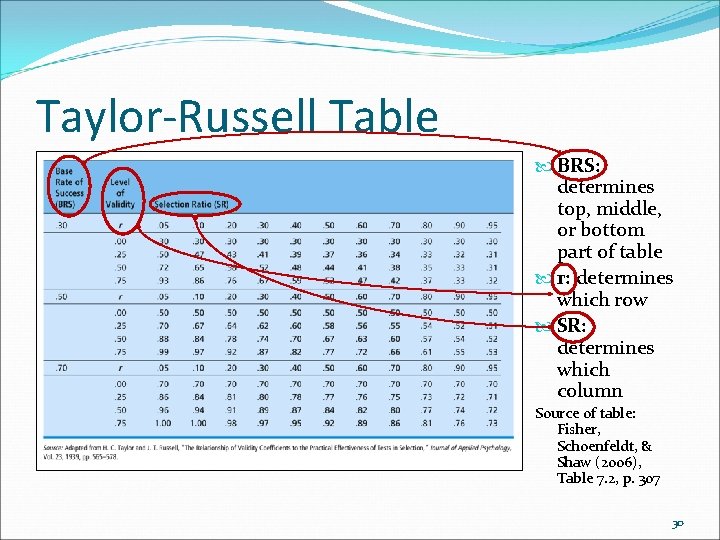

Taylor-Russell Table
- BRS: determines the top, middle, or bottom part of the table
- r: determines which row
- SR: determines which column
- Source of table: Fisher, Schoenfeldt, & Shaw (2006), Table 7.2, p. 307

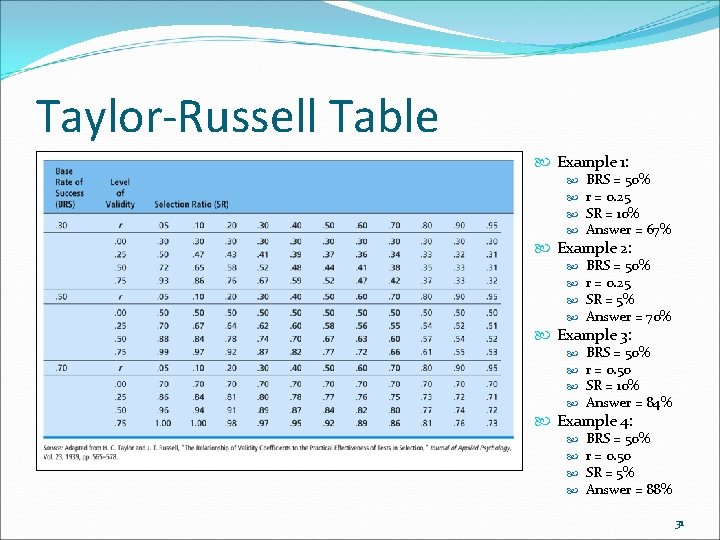

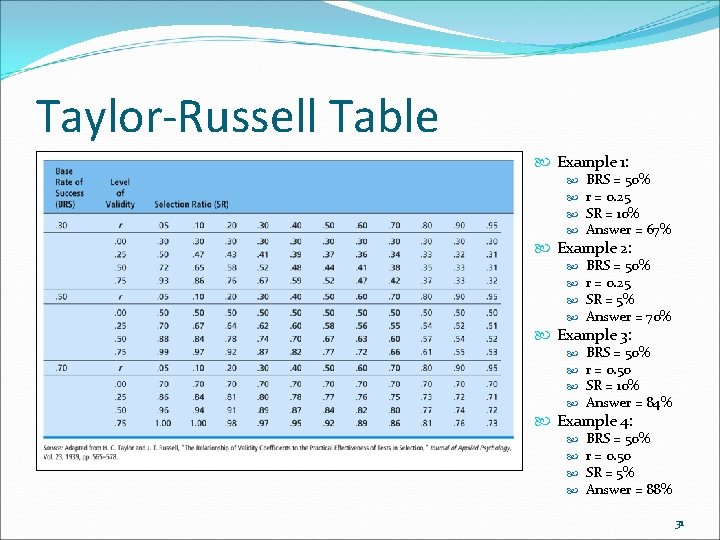

Taylor-Russell Table

| Example | BRS | r | SR | Answer (% of hires with acceptable job performance) |
|---|---|---|---|---|
| 1 | 50% | 0.25 | 10% | 67% |
| 2 | 50% | 0.25 | 5% | 70% |
| 3 | 50% | 0.50 | 10% | 84% |
| 4 | 50% | 0.50 | 5% | 88% |
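The Taylor-Russell tables were computed under the assumption that selection method scores (X) and job performance (Y) follow a bivariate normal distribution with correlation r. Under that assumption, a table entry equals P(X > x_c and Y > y_c) / SR, where the cut-offs x_c and y_c are set so that a fraction SR of applicants passes the selection method and a fraction BRS would perform acceptably. The Python sketch below approximates that probability by numerical integration; `taylor_russell` is a hypothetical helper, not the textbook's procedure, and its results can differ from the printed table in the second decimal place because of rounding.

```python
import math
from statistics import NormalDist

STD_NORMAL = NormalDist()  # mean 0, standard deviation 1


def taylor_russell(brs, r, sr, steps=4000, span=10.0):
    """Approximate share of hires with acceptable performance.

    Assumes standardized predictor X and performance Y are bivariate normal
    with correlation r:  P(Y > y_c | X > x_c) = P(X > x_c, Y > y_c) / SR.
    """
    if not (0 < brs < 1 and 0 < sr < 1 and -1 < r < 1):
        raise ValueError("need 0 < BRS < 1, 0 < SR < 1, and -1 < r < 1")
    x_c = STD_NORMAL.inv_cdf(1 - sr)   # selection cut-off: a fraction SR scores above it
    y_c = STD_NORMAL.inv_cdf(1 - brs)  # performance cut-off: a fraction BRS scores above it
    cond_sd = math.sqrt(1 - r * r)     # SD of Y given X = x

    def integrand(x):
        # Density of X at x times P(Y > y_c | X = x)
        return STD_NORMAL.pdf(x) * (1 - STD_NORMAL.cdf((y_c - r * x) / cond_sd))

    # Composite Simpson's rule over [x_c, x_c + span]; the normal tail beyond is negligible
    h = span / steps  # steps must be even
    total = integrand(x_c) + integrand(x_c + span)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * integrand(x_c + i * h)
    joint = total * h / 3
    return joint / sr


# The four examples from the slide
for brs, r, sr in [(0.50, 0.25, 0.10), (0.50, 0.25, 0.05), (0.50, 0.50, 0.10), (0.50, 0.50, 0.05)]:
    print(f"BRS = {brs:.0%}, r = {r:.2f}, SR = {sr:.0%}: {taylor_russell(brs, r, sr):.2f}")
```

For the four examples, this sketch lands within about 0.01 of the table entries. With r = 0 it returns the BRS itself, which matches the intuition that a selection method with no validity is no better than hiring at random.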

Decision-Making in Selection
- Most organizations use more than one selection method to make the selection decision.
- How do you combine information about an applicant from multiple selection methods to make the selection decision?
  - Example: an organization uses 3 selection methods to hire a computer programmer:
    - Résumé evaluation: résumés are rated on a 100-point scale
    - Programming skills test: test scores go up to 100%
    - Interview: interviews are rated by interviewers on a 100-point scale

Decision-Making in Selection
- Additive (compensatory) model
  - All applicants go through all selection methods.
  - Add together the scores on the selection methods to get a total score for each applicant.
    - Example: Total = résumé score + test score + interview score
  - Establish a selection cut-off score for the total score.
  - Hire the applicant if the applicant's total score meets or exceeds the cut-off.
    - Note that a high score on one selection method can compensate for a low score on another selection method.
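Below is a minimal Python sketch of the additive (compensatory) model, using equal weights as in the slide's formula (Total = résumé score + test score + interview score). The applicant's scores, the total cut-off of 210, and the helper name are hypothetical. The cut-off is treated as a minimum passing score, so a total equal to it passes.

```python
def additive_decision(scores, total_cutoff):
    """Additive (compensatory) model with equal weights.

    Returns (hire?, total score). A total equal to the cut-off passes.
    """
    total = sum(scores.values())
    return total >= total_cutoff, total


# Hypothetical applicant (all methods on 100-point scales) and cut-off
applicant = {"resume": 55, "test": 95, "interview": 80}
hire, total = additive_decision(applicant, total_cutoff=210)
print(f"total = {total}, hire = {hire}")
```

The weak résumé score (55) is offset by the strong test score (95): the total of 230 clears the cut-off. This is exactly the compensation the slide describes.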

Decision-Making in Selection
- Multiple cut-off method
  - All applicants go through all selection methods.
  - Establish a selection cut-off score for each selection method.
  - Hire the applicant if their score on every selection method meets or exceeds the corresponding cut-off score.
    - Example: pass the résumé evaluation and pass the test and pass the interview
  - Wasteful?
    - If an applicant fails the résumé evaluation, why spend time and money on the test and the interview?
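Below is a minimal Python sketch of the multiple cut-off method. The per-method cut-offs, the applicant, and the helper name are hypothetical. As with the other sketches, a score equal to a cut-off counts as passing.

```python
def multiple_cutoff_decision(scores, cutoffs):
    """Multiple cut-off method: hire only if every score meets its own cut-off.

    Returns (hire?, list of methods the applicant failed).
    """
    failed = [method for method, cutoff in cutoffs.items() if scores[method] < cutoff]
    return not failed, failed


# Hypothetical cut-offs and applicant (100-point scales)
cutoffs = {"resume": 60, "test": 70, "interview": 65}
applicant = {"resume": 55, "test": 95, "interview": 80}
print(multiple_cutoff_decision(applicant, cutoffs))
```

With these hypothetical cut-offs, the applicant is rejected because of the résumé score alone. Unlike in the additive model, the strong test score cannot compensate.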

Decision-Making in Selection
- Multiple hurdle method
  - Put the selection methods into a sequence.
    - Each selection method is a "hurdle."
  - Establish a selection cut-off score for each hurdle.
    - Only applicants who pass the first hurdle go on to the second hurdle.
    - Only applicants who then pass the second hurdle go on to the third hurdle.
    - Only applicants who then pass the third hurdle go on to the next one, and so on.
  - Use cost to determine the sequence.
    - Put the cheapest hurdle first, etc.
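Below is a minimal Python sketch of the multiple hurdle method. It orders the hurdles by cost per applicant (cheapest first) and keeps a running total of assessment costs. All cut-offs, costs, applicant names, and scores are hypothetical.

```python
def multiple_hurdle(applicants, hurdles):
    """Screen applicants through hurdles in order of cost (cheapest first).

    applicants: list of dicts with a "name" and a "scores" dict
    hurdles: list of (method, cutoff, cost_per_applicant) tuples
    Returns the names of applicants who pass every hurdle and the total cost.
    """
    remaining = list(applicants)
    total_cost = 0
    for method, cutoff, cost in sorted(hurdles, key=lambda h: h[2]):
        # Only applicants still in the running are assessed (and paid for) here
        total_cost += cost * len(remaining)
        remaining = [a for a in remaining if a["scores"][method] >= cutoff]
    return [a["name"] for a in remaining], total_cost


# Hypothetical cut-offs, costs per applicant, and applicants (not from the slides)
hurdles = [("interview", 65, 200), ("test", 70, 50), ("resume", 60, 10)]
applicants = [
    {"name": "A", "scores": {"resume": 55, "test": 95, "interview": 80}},
    {"name": "B", "scores": {"resume": 80, "test": 90, "interview": 70}},
    {"name": "C", "scores": {"resume": 75, "test": 60, "interview": 90}},
]
hired, cost = multiple_hurdle(applicants, hurdles)
print(hired, cost)
```

In this example, the résumé screen costs 3 × 10, the test 2 × 50, and the interview 1 × 200, for a total of 330. Running all three methods on all three applicants, as in the multiple cut-off method, would cost 3 × 260 = 780 and lead to the same hiring decision (only B is hired).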
