Topic 4 Syntactic Analysis Parsing EE 456 Compiling

Topic #4: Syntactic Analysis (Parsing) EE 456 – Compiling Techniques Prof. Carl Sable Fall 2003

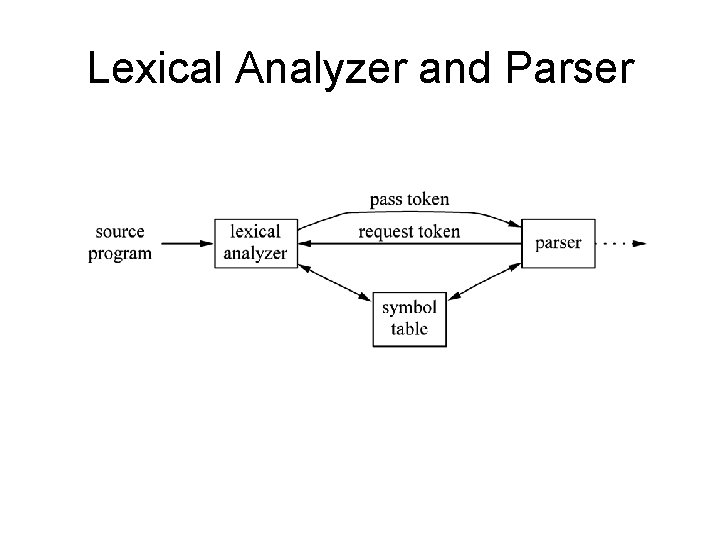

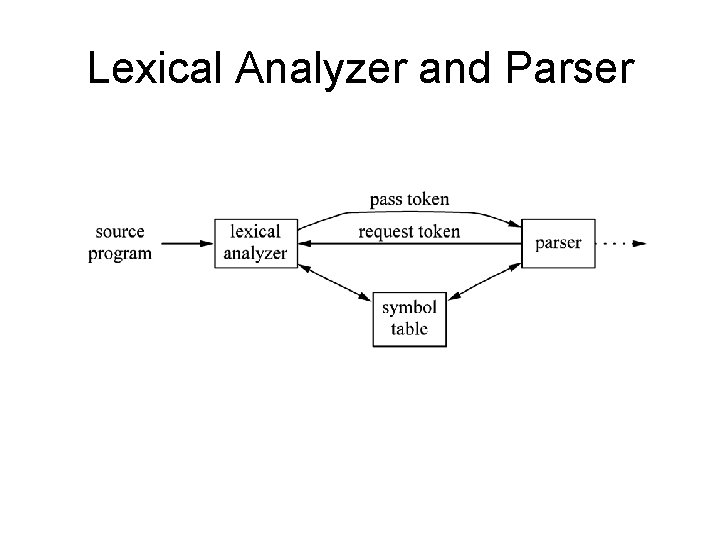

Lexical Analyzer and Parser

Parser • Accepts string of tokens from lexical analyzer (usually one token at a time) • Verifies whether or not string can be generated by grammar • Reports syntax errors (recovers if possible)

Errors • Lexical errors (e.g., misspelled word) • Syntax errors (e.g., unbalanced parentheses, missing semicolon) • Semantic errors (e.g., type errors) • Logical errors (e.g., infinite recursion)

Error Handling • Report errors clearly and accurately • Recover quickly if possible • Poor error recovery may lead to an avalanche of errors

Error Recovery • Panic mode: discard tokens one at a time until a synchronizing token is found • Phrase-level recovery: perform a local correction that allows parsing to continue • Error productions: augment the grammar to handle predicted, common errors • Global correction: use a complex algorithm to compute the least-cost sequence of changes leading to parseable code

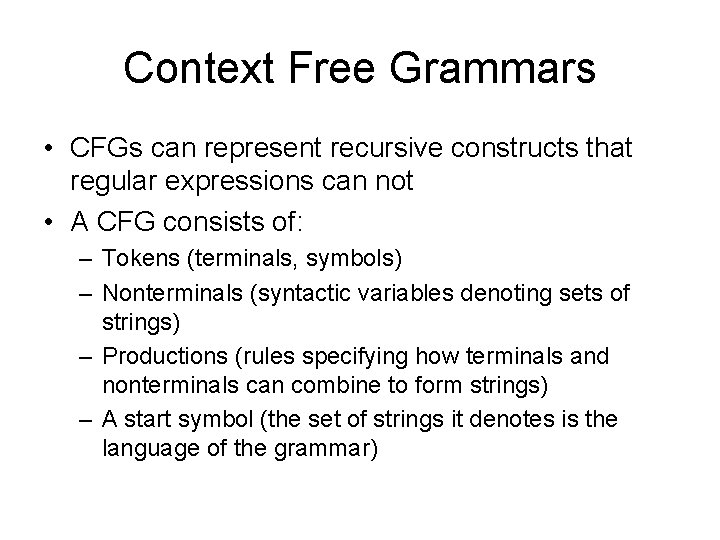

Context Free Grammars • CFGs can represent recursive constructs that regular expressions cannot • A CFG consists of: – Tokens (terminals, symbols) – Nonterminals (syntactic variables denoting sets of strings) – Productions (rules specifying how terminals and nonterminals can combine to form strings) – A start symbol (the set of strings it denotes is the language of the grammar)

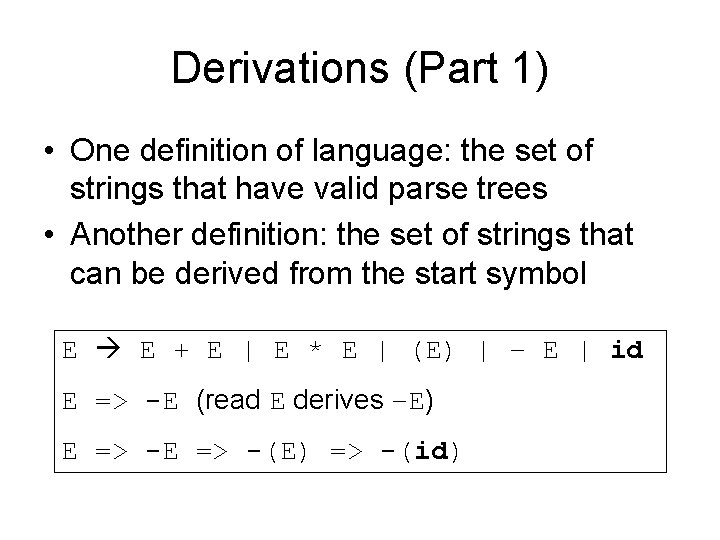

Derivations (Part 1) • One definition of language: the set of strings that have valid parse trees • Another definition: the set of strings that can be derived from the start symbol • Grammar: E → E + E | E * E | (E) | –E | id • E => –E (read "E derives –E") • E => –E => –(E) => –(id)

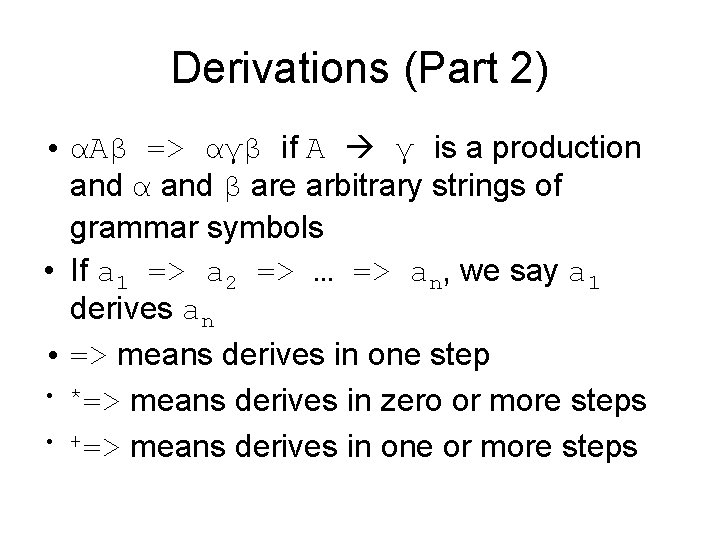

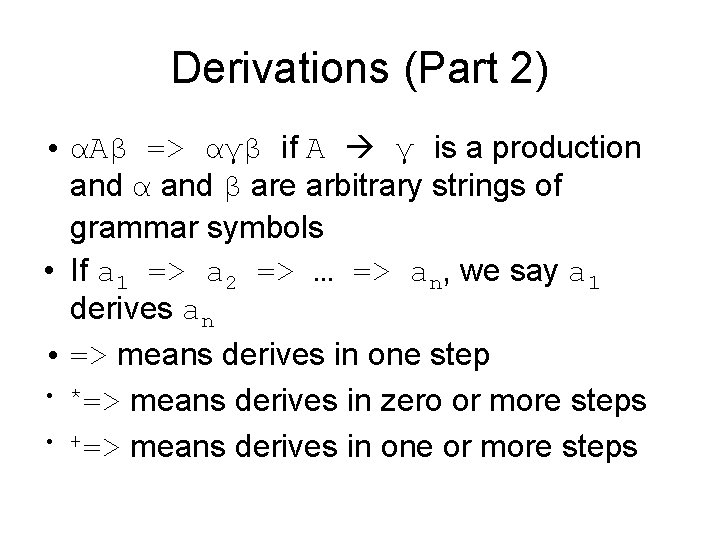

Derivations (Part 2) • αAβ => αγβ if A → γ is a production and α and β are arbitrary strings of grammar symbols • If α1 => α2 => … => αn, we say α1 derives αn • => means derives in one step • *=> means derives in zero or more steps • +=> means derives in one or more steps

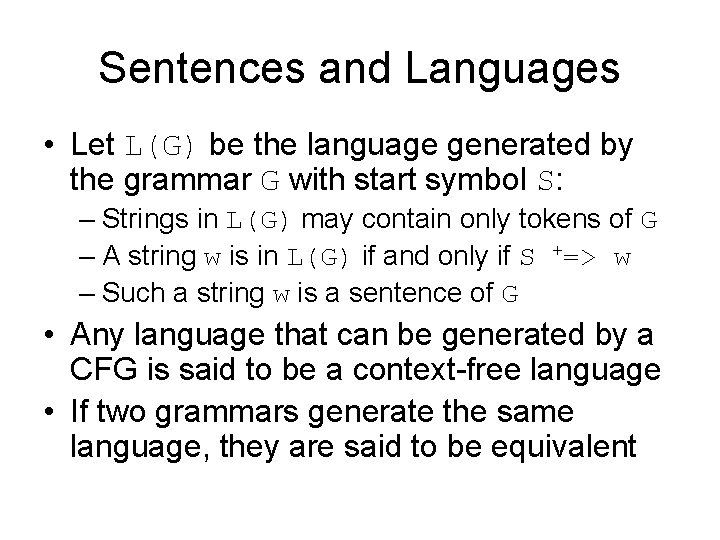

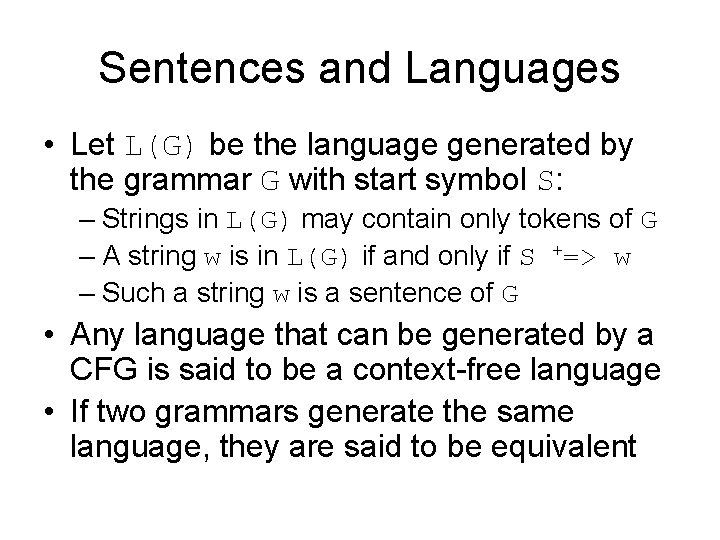

Sentences and Languages • Let L(G) be the language generated by the grammar G with start symbol S: – Strings in L(G) may contain only tokens of G – A string w is in L(G) if and only if S +=> w – Such a string w is a sentence of G • Any language that can be generated by a CFG is said to be a context-free language • If two grammars generate the same language, they are said to be equivalent

Sentential Forms • If S *=> α, where α may contain nonterminals, we say that α is a sentential form of G • A sentence is a sentential form with no nonterminals

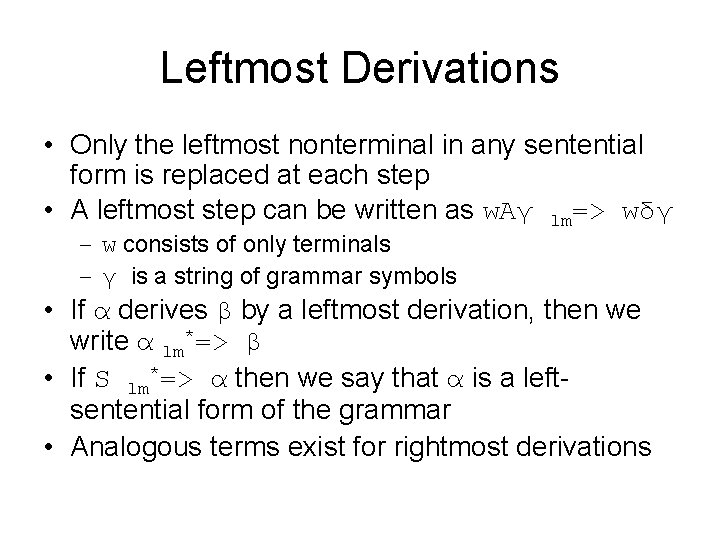

Leftmost Derivations • Only the leftmost nonterminal in any sentential form is replaced at each step • A leftmost step can be written as wAγ lm=> wδγ – w consists of only terminals – A → δ is the production applied – γ is a string of grammar symbols • If α derives β by a leftmost derivation, then we write α lm*=> β • If S lm*=> α then we say that α is a left-sentential form of the grammar • Analogous terms exist for rightmost derivations

Parse Trees • A parse tree can be viewed as a graphical representation of a derivation • Every parse tree has a unique leftmost derivation (not true of every sentence) • An ambiguous grammar has: – more than one parse tree for at least one sentence – more than one leftmost derivation for at least one sentence

Capability of Grammars • Can describe most programming language constructs • An exception: requiring that variables are declared before they are used – Therefore, grammar accepts superset of actual language – Later phase (semantic analysis) does type checking

Regular Expressions vs. CFGs • Every construct that can be described by an RE can also be described by a CFG • Why use REs at all? – Lexical rules are simpler to describe this way – REs are often easier to read – More efficient lexical analyzers can be constructed

Verifying Grammars • A proof that a grammar generates a language has two parts: – Must show that every string generated by the grammar is part of the language – Must show that every string that is part of the language can be generated by the grammar • Rarely done for complete programming languages!

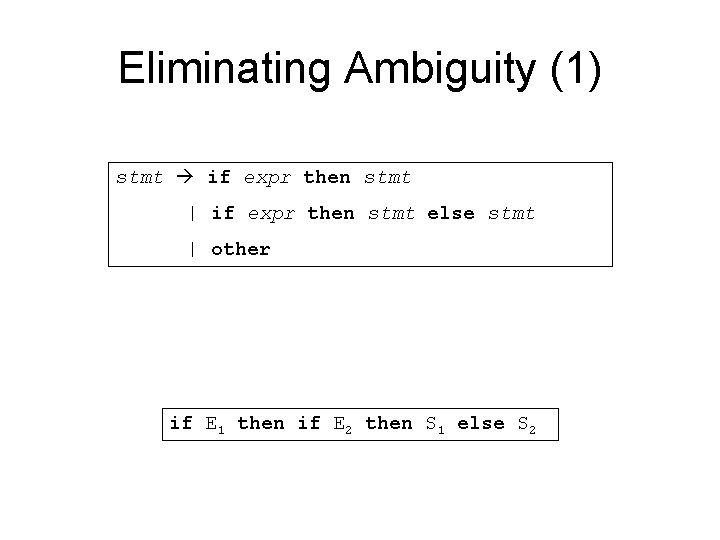

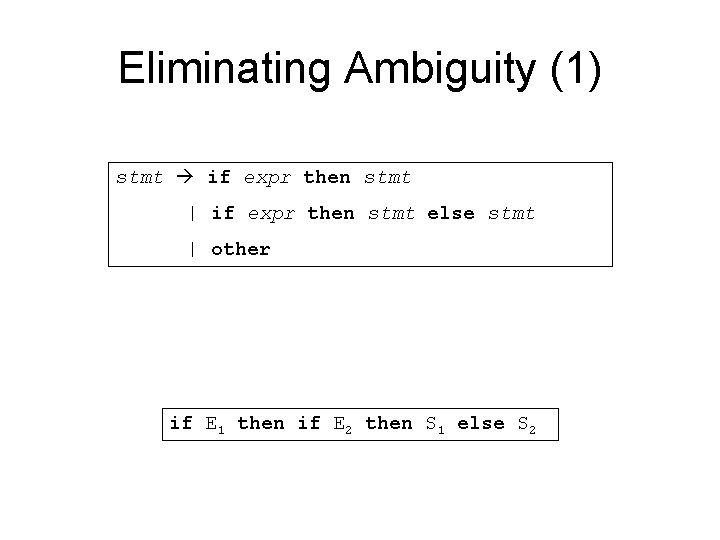

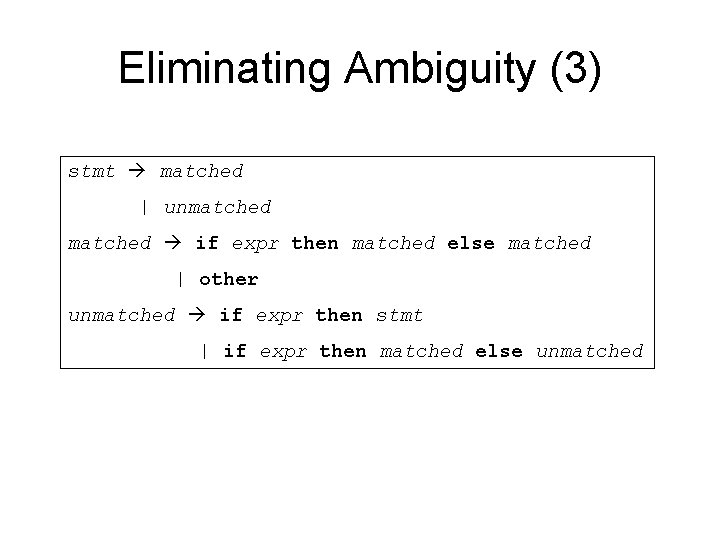

Eliminating Ambiguity (1) • stmt → if expr then stmt | if expr then stmt else stmt | other • Ambiguous sentence: if E1 then if E2 then S1 else S2

Eliminating Ambiguity (2)

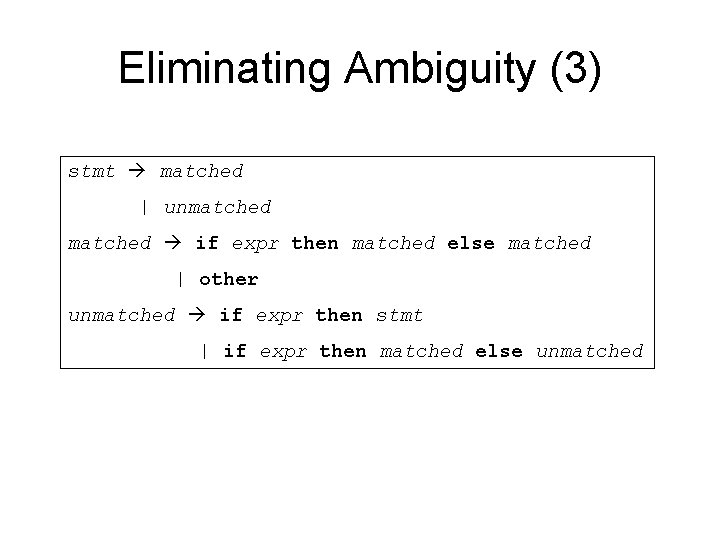

Eliminating Ambiguity (3) • stmt → matched | unmatched • matched → if expr then matched else matched | other • unmatched → if expr then stmt | if expr then matched else unmatched

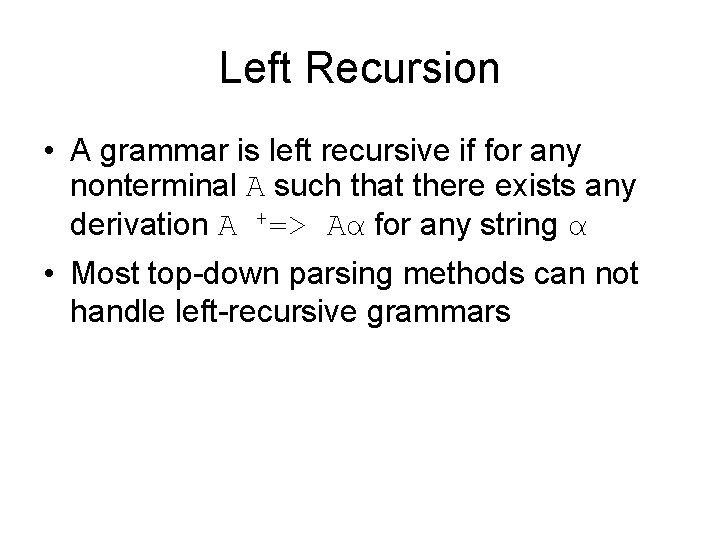

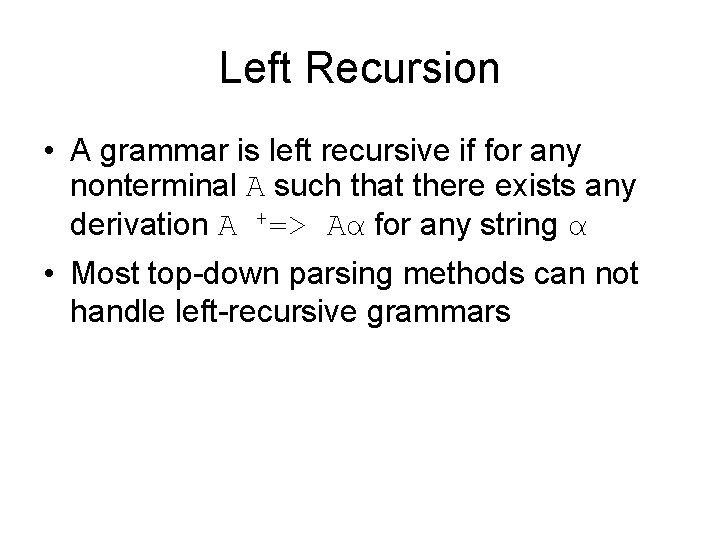

Left Recursion • A grammar is left recursive if it has a nonterminal A such that there exists a derivation A +=> Aα for some string α • Most top-down parsing methods cannot handle left-recursive grammars

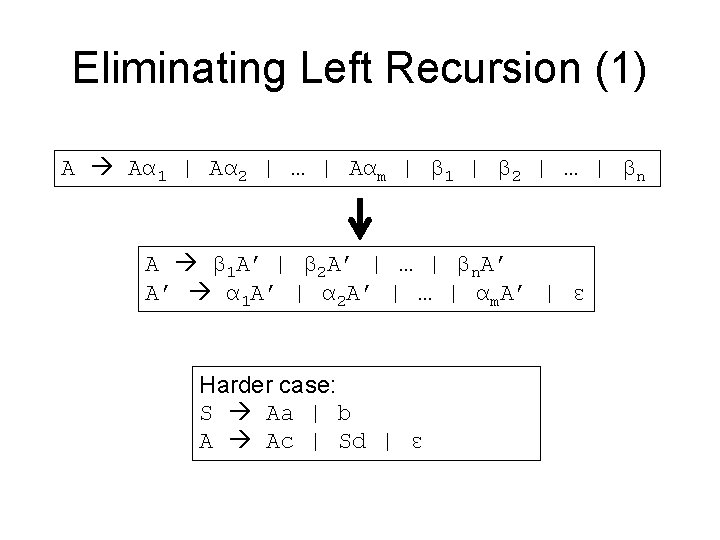

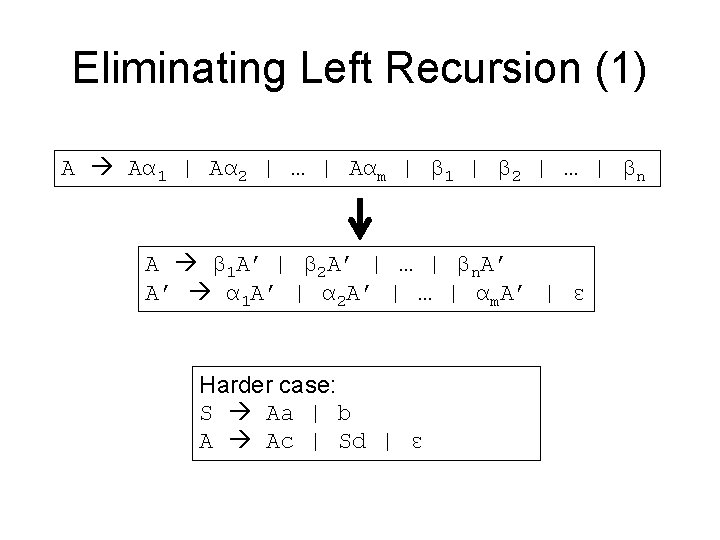

Eliminating Left Recursion (1) • Productions of the form A → Aα1 | Aα2 | … | Aαm | β1 | β2 | … | βn are replaced by: – A → β1A’ | β2A’ | … | βnA’ – A’ → α1A’ | α2A’ | … | αmA’ | ε • Harder case: S → Aa | b and A → Ac | Sd | ε

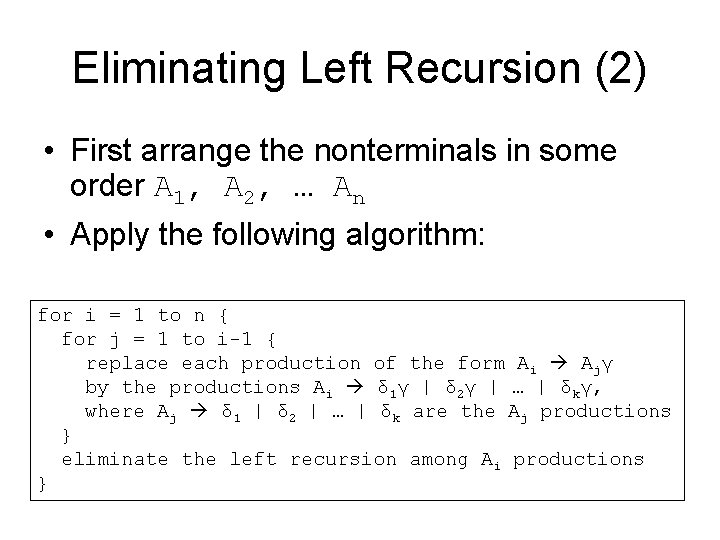

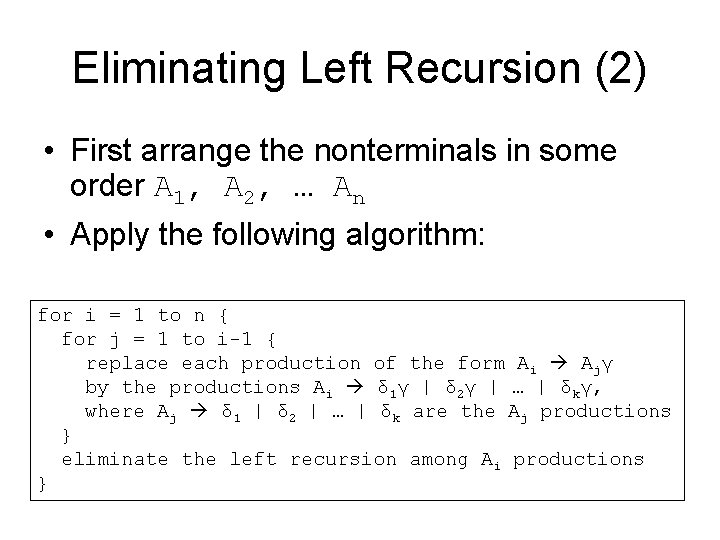

Eliminating Left Recursion (2) • First arrange the nonterminals in some order A1, A2, …, An • Apply the following algorithm:

    for i = 1 to n {
        for j = 1 to i-1 {
            replace each production of the form Ai → Ajγ
            by the productions Ai → δ1γ | δ2γ | … | δkγ,
            where Aj → δ1 | δ2 | … | δk are the Aj productions
        }
        eliminate the left recursion among Ai productions
    }
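The loop above can be sketched in Python. This is a minimal illustration under stated assumptions, not the course's implementation: the dictionary representation of a grammar, the function names `eliminate_immediate` and `eliminate_left_recursion`, and the trailing `'` used to name new nonterminals are all hypothetical. As with the textbook algorithm, the result is only guaranteed for grammars without cycles; ε-productions can also cause trouble in general.

```python
EPS = "ε"  # marker for the empty string (assumed representation)

def eliminate_immediate(a, alternatives):
    """Rewrite A -> Aα | β as A -> βA' and A' -> αA' | ε."""
    recursive = [alt[1:] for alt in alternatives if alt and alt[0] == a]
    others = [alt for alt in alternatives if not alt or alt[0] != a]
    if not recursive:
        return {a: alternatives}
    new = a + "'"
    return {
        # Drop the ε marker when something is appended after it.
        a: [[s for s in beta if s != EPS] + [new] for beta in others],
        new: [alpha + [new] for alpha in recursive] + [[EPS]],
    }

def eliminate_left_recursion(grammar, order):
    """grammar maps each nonterminal to a list of alternatives (symbol lists)."""
    g = {a: [list(alt) for alt in alts] for a, alts in grammar.items()}
    for i, ai in enumerate(order):
        for aj in order[:i]:
            expanded = []
            for alt in g[ai]:
                if alt and alt[0] == aj:
                    # Replace Ai -> Aj γ by Ai -> δ γ for every Aj -> δ.
                    for delta in g[aj]:
                        head = [s for s in delta if s != EPS]
                        expanded.append((head + alt[1:]) or [EPS])
                else:
                    expanded.append(alt)
            g[ai] = expanded
        g.update(eliminate_immediate(ai, g[ai]))
    return g

# The "harder case": S -> Aa | b, A -> Ac | Sd | ε
harder = {"S": [["A", "a"], ["b"]], "A": [["A", "c"], ["S", "d"], [EPS]]}
for lhs, alts in eliminate_left_recursion(harder, ["S", "A"]).items():
    print(lhs, "->", " | ".join(" ".join(alt) for alt in alts))
```

With the order S, A, the sketch leaves the S productions unchanged and rewrites the A productions as A → bdA' | A' and A' → cA' | adA' | ε.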

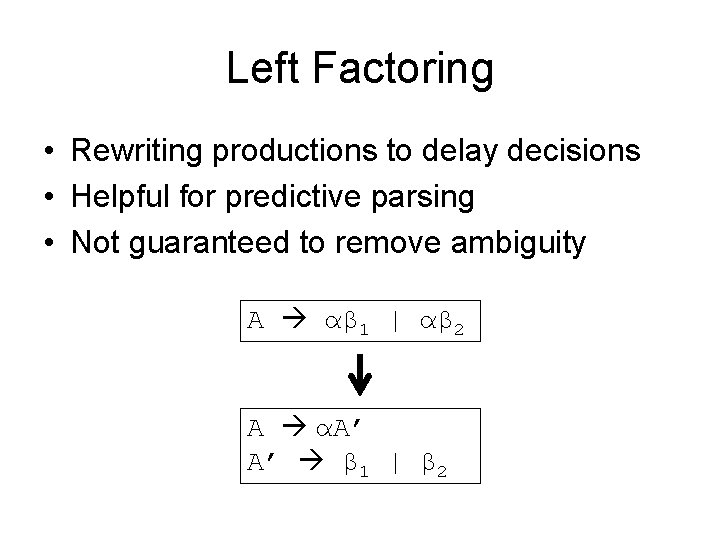

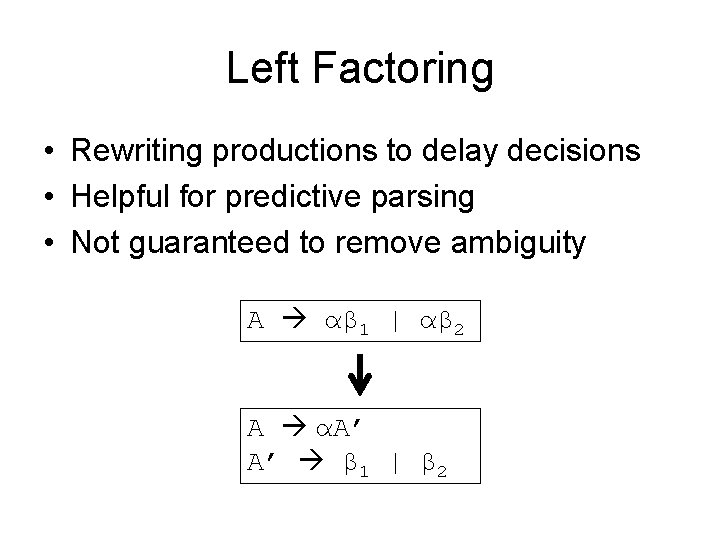

Left Factoring • Rewriting productions to delay decisions • Helpful for predictive parsing • Not guaranteed to remove ambiguity • Example: A → αβ1 | αβ2 becomes A → αA’ and A’ → β1 | β2
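One pass of left factoring can be sketched as follows. The helper names `common_prefix` and `left_factor_once`, the list-of-symbols representation, and the primed names for new nonterminals are assumptions made for this example. The sketch groups alternatives by their first symbol only, so the new nonterminal may itself need another pass.

```python
EPS = "ε"  # marker for the empty string (assumed representation)

def common_prefix(seqs):
    """Longest prefix shared by every sequence in seqs."""
    prefix = []
    for column in zip(*seqs):
        if len(set(column)) != 1:
            break
        prefix.append(column[0])
    return prefix

def left_factor_once(a, alternatives):
    """Factor A -> αβ1 | αβ2 into A -> αA' and A' -> β1 | β2."""
    groups = {}
    for alt in alternatives:
        groups.setdefault(alt[0], []).append(alt)
    result = {a: []}
    new = a
    for alts in groups.values():
        if len(alts) == 1:
            result[a].append(alts[0])      # nothing to factor
            continue
        alpha = common_prefix(alts)
        new += "'"                         # fresh name: A', A'', ...
        result[a].append(alpha + [new])
        result[new] = [alt[len(alpha):] or [EPS] for alt in alts]
    return result

# Abstract if-then-else grammar: S -> iEtS | iEtSeS | a
print(left_factor_once("S", [["i", "E", "t", "S"],
                             ["i", "E", "t", "S", "e", "S"],
                             ["a"]]))
```

For this input the sketch produces S → iEtSS' | a and S' → ε | eS. The factored grammar is still ambiguous, which is consistent with the note that left factoring is not guaranteed to remove ambiguity.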

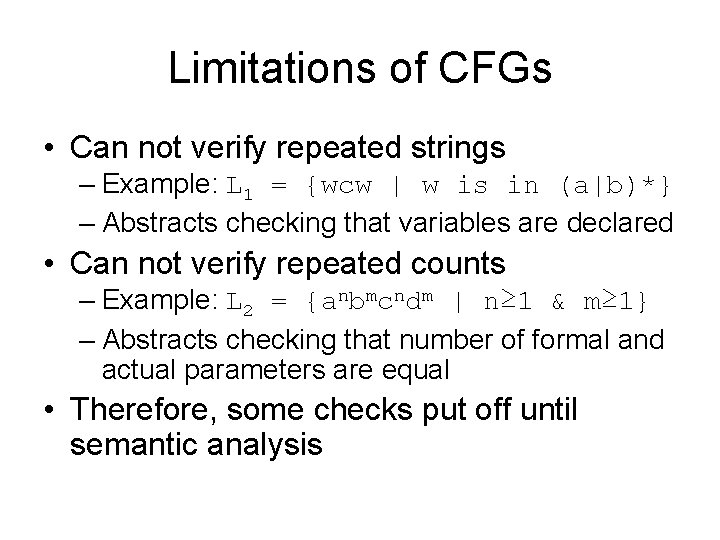

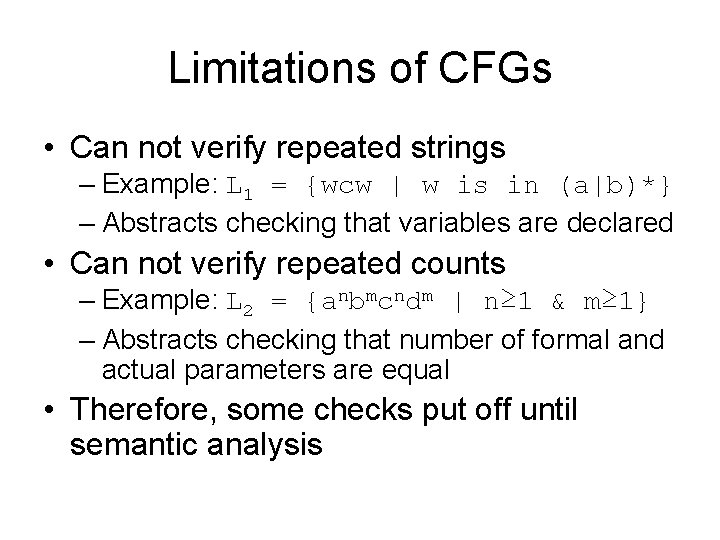

Limitations of CFGs • Cannot verify repeated strings – Example: L1 = {wcw | w is in (a|b)*} – Abstracts checking that variables are declared • Cannot verify repeated counts – Example: L2 = {a^n b^m c^n d^m | n ≥ 1 and m ≥ 1} – Abstracts checking that the numbers of formal and actual parameters are equal • Therefore, some checks are put off until semantic analysis

Top Down Parsing • Can be viewed two ways: – Attempt to find a leftmost derivation for the input string – Attempt to create a parse tree, starting at the root and creating nodes in preorder • General form is recursive descent parsing – May require backtracking – Backtracking parsers are not used frequently because backtracking is rarely needed

Predictive Parsing • A special case of recursive-descent parsing that does not require backtracking • Must always know which production to use based on current input symbol • Can often create appropriate grammar: – removing left-recursion – left factoring the resulting grammar

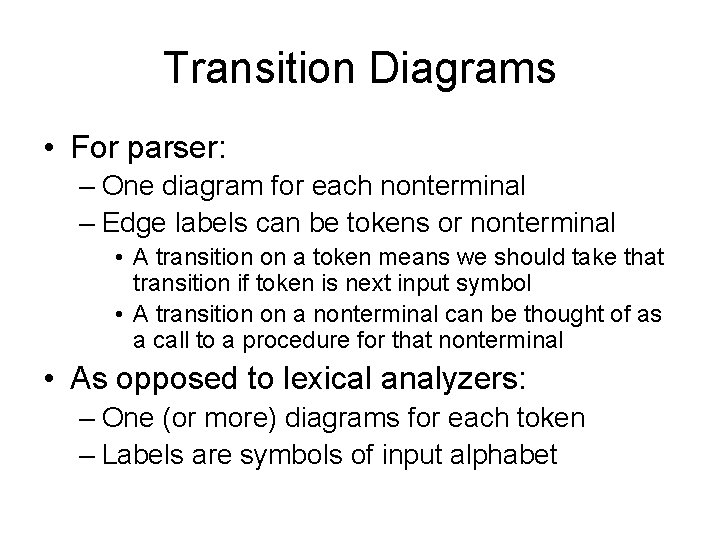

Transition Diagrams • For parser: – One diagram for each nonterminal – Edge labels can be tokens or nonterminal • A transition on a token means we should take that transition if token is next input symbol • A transition on a nonterminal can be thought of as a call to a procedure for that nonterminal • As opposed to lexical analyzers: – One (or more) diagrams for each token – Labels are symbols of input alphabet

Creating Transition Diagrams • First eliminate left recursion from grammar • Then left factor grammar • For each nonterminal A: – Create an initial and final state – For every production A → X1 X2 … Xn, create a path from initial to final state with edges labeled X1, X2, …, Xn.

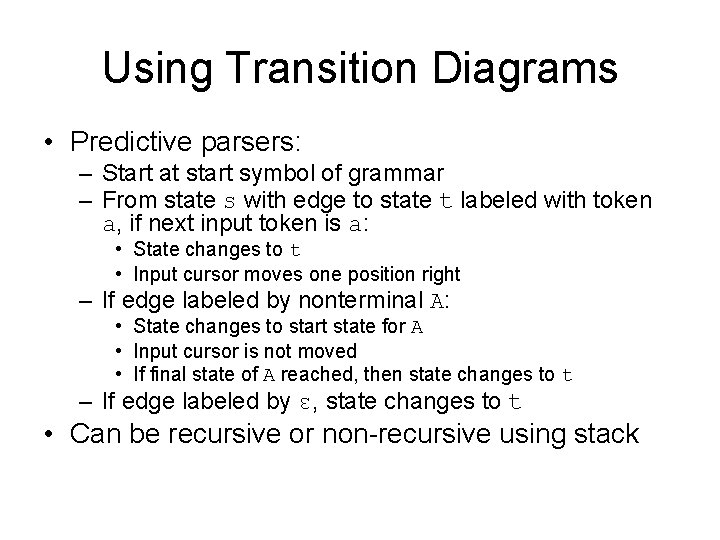

Using Transition Diagrams • Predictive parsers: – Start at start symbol of grammar – From state s with edge to state t labeled with token a, if next input token is a: • State changes to t • Input cursor moves one position right – If edge labeled by nonterminal A: • State changes to start state for A • Input cursor is not moved • If final state of A reached, then state changes to t – If edge labeled by ε, state changes to t • Can be recursive or non-recursive using stack

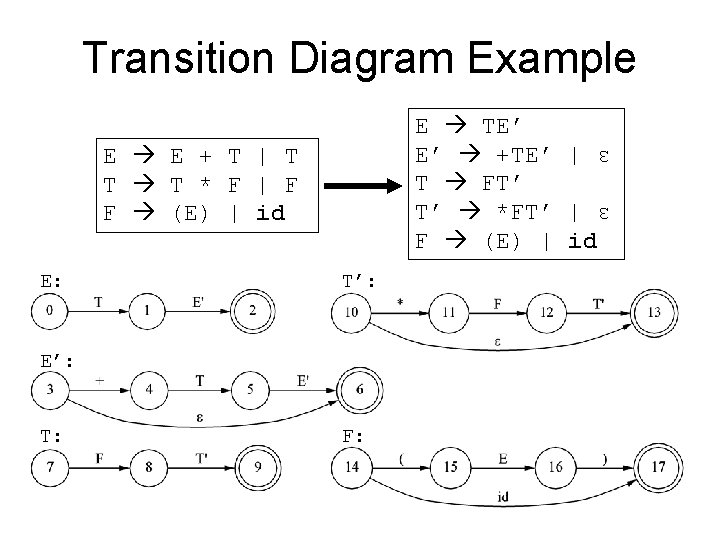

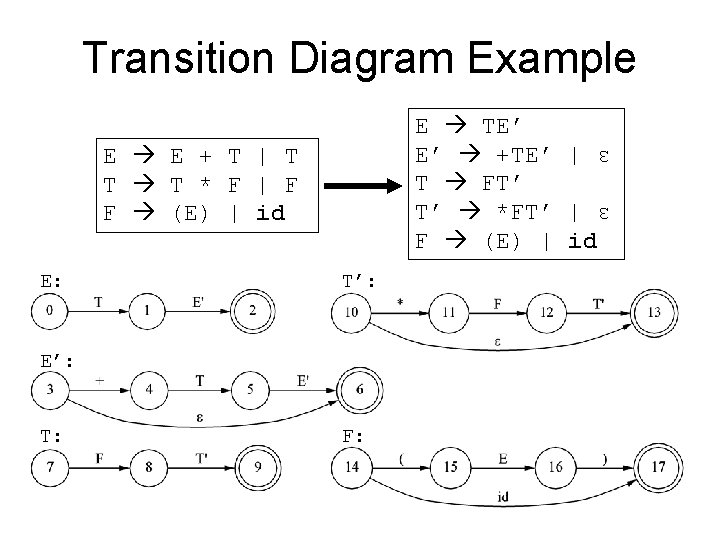

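Transition Diagram Example • Original grammar: E → E + T | T; T → T * F | F; F → (E) | id • After eliminating left recursion: E → TE’; E’ → +TE’ | ε; T → FT’; T’ → *FT’ | ε; F → (E) | id • One diagram each for E, E’, T, T’, and F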
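Because a transition on a nonterminal behaves like a procedure call, the diagrams for the transformed grammar map directly onto a recursive-descent predictive parser with one method per nonterminal. The sketch below is only an illustration: the class name `Parser`, the exception `ParseError`, the token spellings, and the use of `E_prime` and `T_prime` for E’ and T’ are assumptions.

```python
class ParseError(Exception):
    pass

class Parser:
    """Recursive-descent recognizer for the transformed expression grammar."""

    def __init__(self, tokens):
        self.tokens = list(tokens) + ["$"]   # "$" marks end of input
        self.pos = 0

    def peek(self):
        return self.tokens[self.pos]

    def match(self, expected):
        # Transition on a token: taken only if it is the next input symbol.
        if self.peek() != expected:
            raise ParseError(f"expected {expected!r}, found {self.peek()!r}")
        self.pos += 1

    def parse(self):
        self.E()
        self.match("$")

    def E(self):            # E -> T E'
        self.T()
        self.E_prime()

    def E_prime(self):      # E' -> + T E' | ε
        if self.peek() == "+":
            self.match("+")
            self.T()
            self.E_prime()

    def T(self):            # T -> F T'
        self.F()
        self.T_prime()

    def T_prime(self):      # T' -> * F T' | ε
        if self.peek() == "*":
            self.match("*")
            self.F()
            self.T_prime()

    def F(self):            # F -> ( E ) | id
        if self.peek() == "(":
            self.match("(")
            self.E()
            self.match(")")
        else:
            self.match("id")

Parser("id + id * id".split()).parse()   # returns normally on valid input
```

Each ε alternative is handled by simply returning when the next token does not start the other alternative, so no backtracking is needed.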

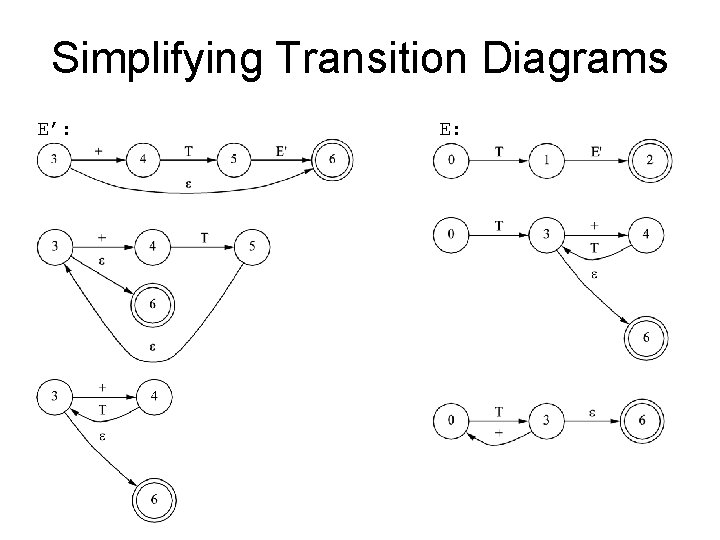

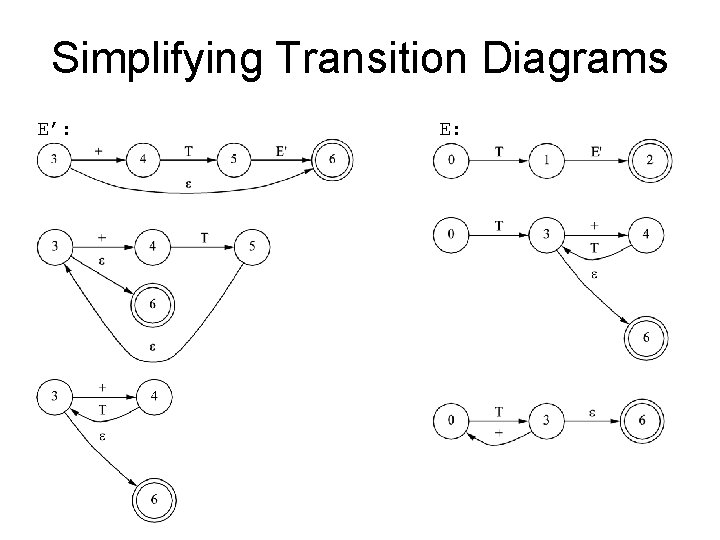

Simplifying Transition Diagrams (figures: simplified diagrams for E’ and E)

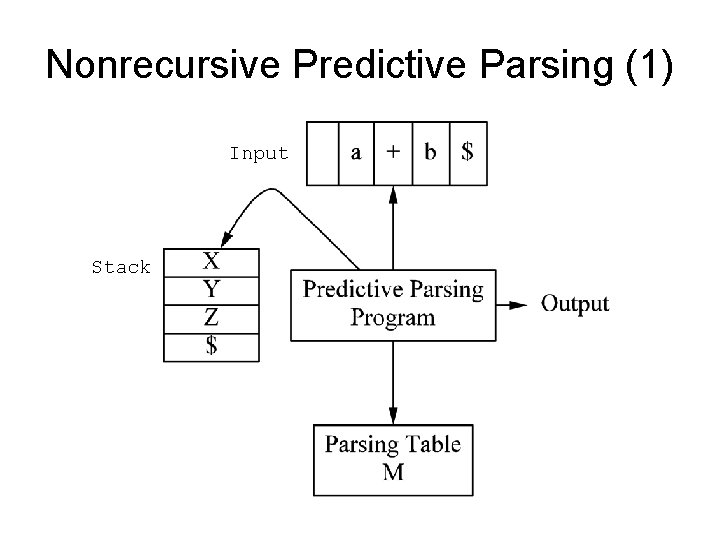

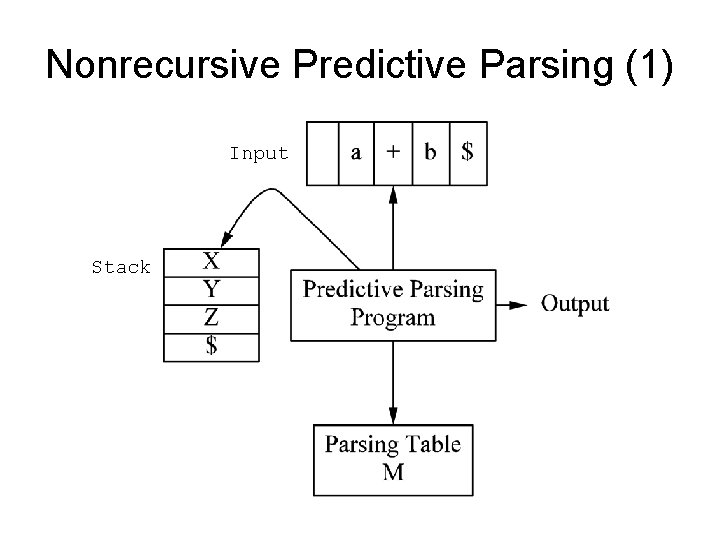

Nonrecursive Predictive Parsing (1) (figure labels: Input, Stack)

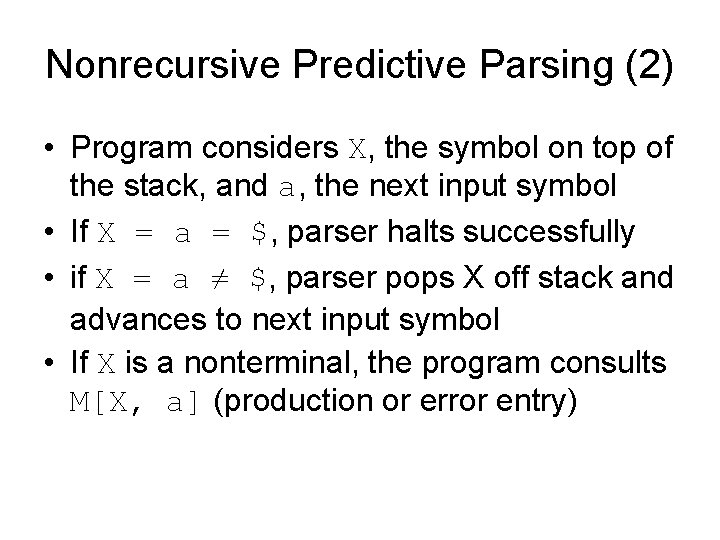

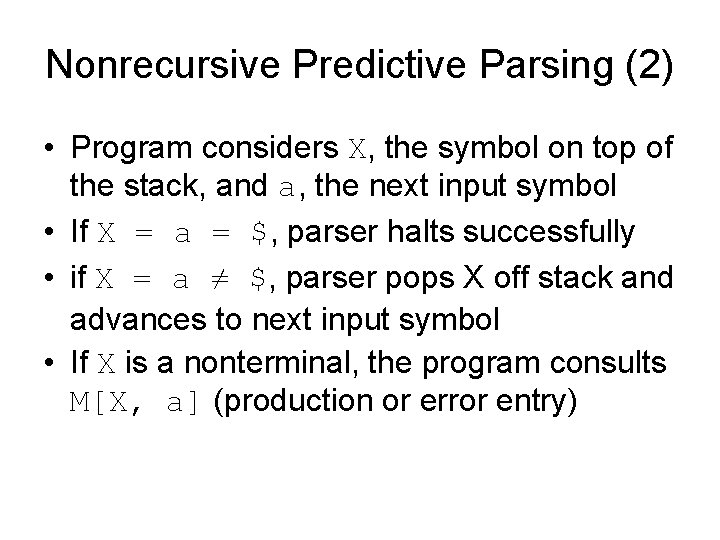

Nonrecursive Predictive Parsing (2) • Program considers X, the symbol on top of the stack, and a, the next input symbol • If X = a = $, parser halts successfully • If X = a ≠ $, parser pops X off stack and advances to next input symbol • If X is a nonterminal, the program consults M[X, a] (production or error entry)

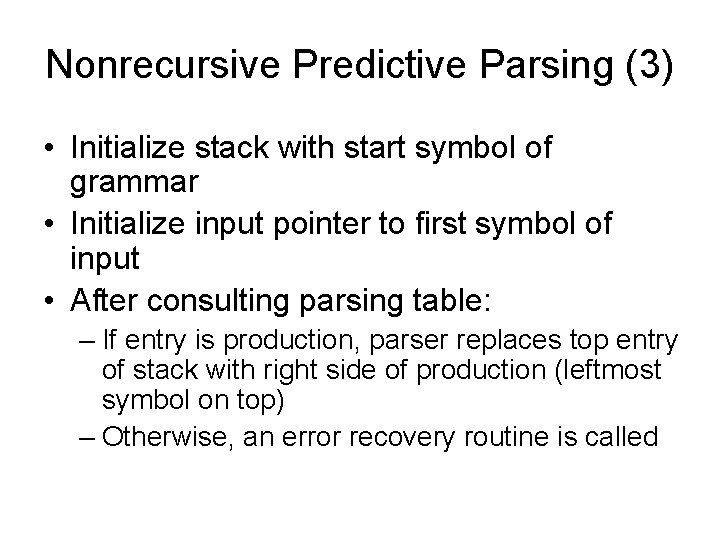

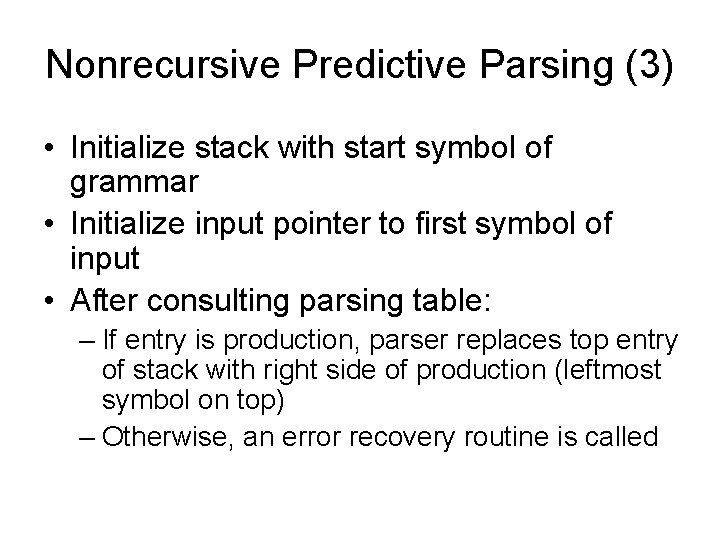

Nonrecursive Predictive Parsing (3) • Initialize stack with start symbol of grammar • Initialize input pointer to first symbol of input • After consulting parsing table: – If entry is production, parser replaces top entry of stack with right side of production (leftmost symbol on top) – Otherwise, an error recovery routine is called

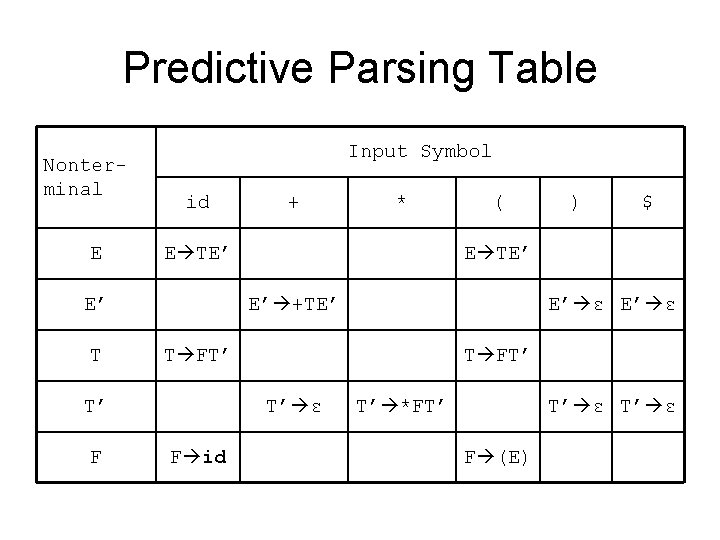

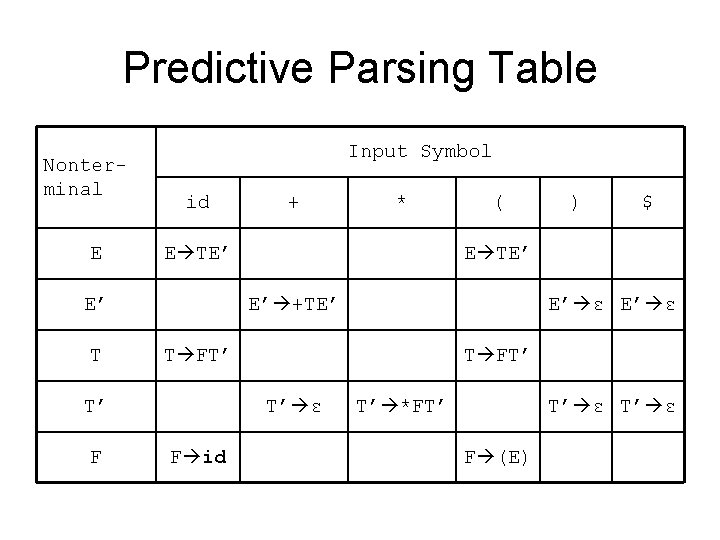

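Predictive Parsing Table

| Nonterminal | id | + | * | ( | ) | $ |
|---|---|---|---|---|---|---|
| E | E → TE’ | | | E → TE’ | | |
| E’ | | E’ → +TE’ | | | E’ → ε | E’ → ε |
| T | T → FT’ | | | T → FT’ | | |
| T’ | | T’ → ε | T’ → *FT’ | | T’ → ε | T’ → ε |
| F | F → id | | | F → (E) | | |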

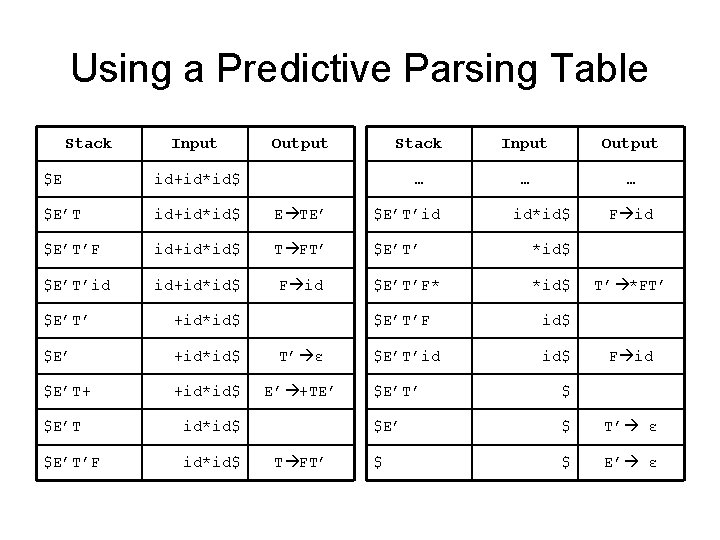

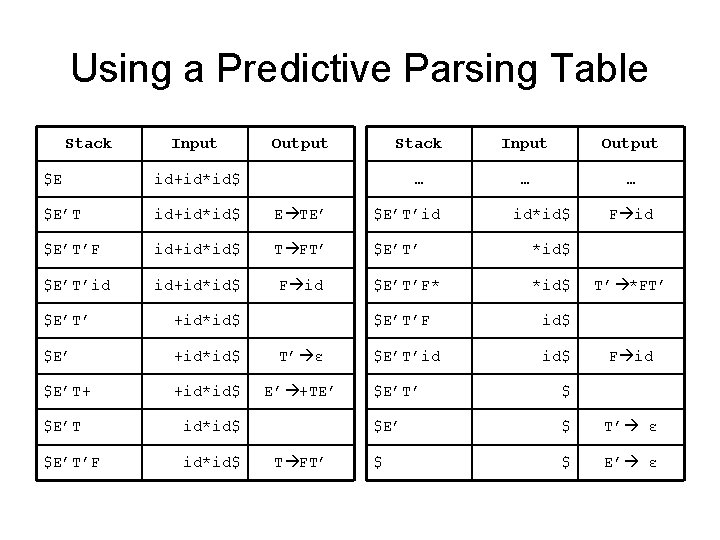

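Using a Predictive Parsing Table

| Stack | Input | Output |
|---|---|---|
| $E | id+id*id$ | |
| $E’T | id+id*id$ | E → TE’ |
| $E’T’F | id+id*id$ | T → FT’ |
| $E’T’id | id+id*id$ | F → id |
| $E’T’ | +id*id$ | |
| $E’ | +id*id$ | T’ → ε |
| $E’T+ | +id*id$ | E’ → +TE’ |
| $E’T | id*id$ | |
| $E’T’F | id*id$ | T → FT’ |
| $E’T’id | id*id$ | F → id |
| $E’T’ | *id$ | |
| $E’T’F* | *id$ | T’ → *FT’ |
| $E’T’F | id$ | |
| $E’T’id | id$ | F → id |
| $E’T’ | $ | |
| $E’ | $ | T’ → ε |
| $ | $ | E’ → ε |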
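The stack-based driver described on the preceding slides can be sketched in Python. The table below is the predictive parsing table for the expression grammar; the names `TABLE`, `NONTERMINALS`, and `predictive_parse`, the straight apostrophe in `E'` and `T'`, and the choice to report errors with `SyntaxError` instead of calling a recovery routine are assumptions of this sketch.

```python
EPS = "ε"

# M[X, a]: right side of the production to apply (missing key = error entry)
TABLE = {
    ("E", "id"): ["T", "E'"], ("E", "("): ["T", "E'"],
    ("E'", "+"): ["+", "T", "E'"], ("E'", ")"): [EPS], ("E'", "$"): [EPS],
    ("T", "id"): ["F", "T'"], ("T", "("): ["F", "T'"],
    ("T'", "+"): [EPS], ("T'", "*"): ["*", "F", "T'"],
    ("T'", ")"): [EPS], ("T'", "$"): [EPS],
    ("F", "id"): ["id"], ("F", "("): ["(", "E", ")"],
}
NONTERMINALS = {"E", "E'", "T", "T'", "F"}

def predictive_parse(tokens, start="E"):
    """Return the productions applied, in order (a leftmost derivation)."""
    stack = ["$", start]
    tokens = list(tokens) + ["$"]
    ip = 0
    output = []
    while True:
        x, a = stack[-1], tokens[ip]
        if x == a == "$":
            return output                      # halt successfully
        if x not in NONTERMINALS:
            if x != a:
                raise SyntaxError(f"expected {x!r}, found {a!r}")
            stack.pop()                        # match terminal, advance input
            ip += 1
        else:
            rhs = TABLE.get((x, a))
            if rhs is None:
                raise SyntaxError(f"no entry for M[{x}, {a}]")
            stack.pop()
            # Push the right side reversed so its leftmost symbol ends on top.
            stack.extend(s for s in reversed(rhs) if s != EPS)
            output.append(f"{x} -> {' '.join(rhs)}")

for production in predictive_parse(["id", "+", "id", "*", "id"]):
    print(production)
```

For the input id + id * id, the printed productions follow the Output column of the trace above, top to bottom.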

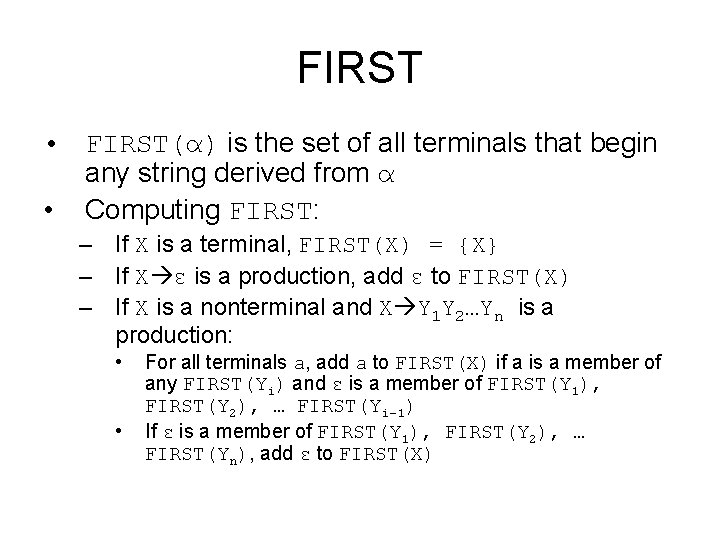

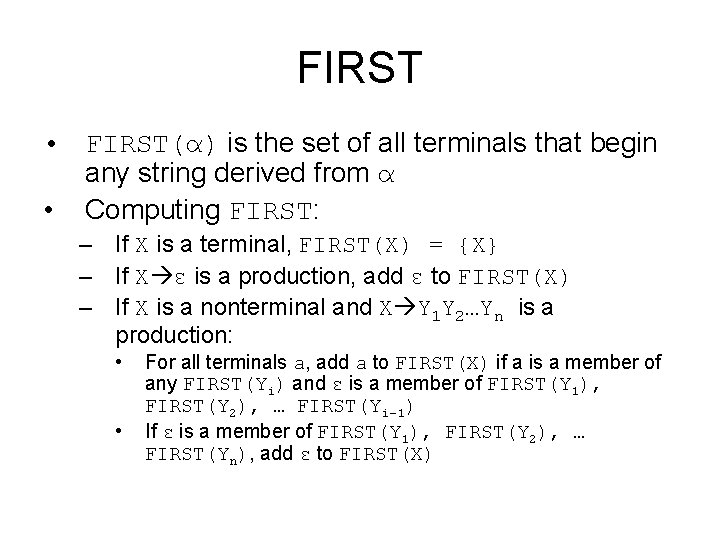

FIRST • FIRST(α) is the set of all terminals that begin any string derived from α (plus ε if α can derive ε) • Computing FIRST: – If X is a terminal, FIRST(X) = {X} – If X → ε is a production, add ε to FIRST(X) – If X is a nonterminal and X → Y1 Y2 … Yn is a production: • For all terminals a, add a to FIRST(X) if a is a member of some FIRST(Yi) and ε is a member of all of FIRST(Y1), FIRST(Y2), …, FIRST(Yi-1) • If ε is a member of all of FIRST(Y1), FIRST(Y2), …, FIRST(Yn), add ε to FIRST(X)

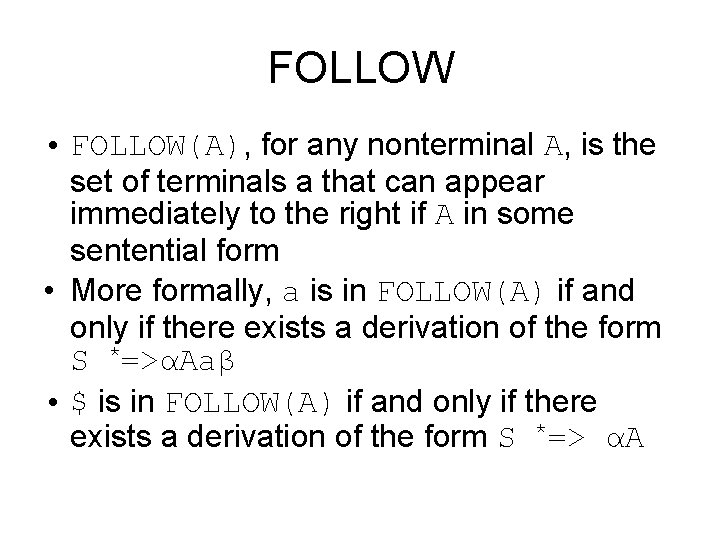

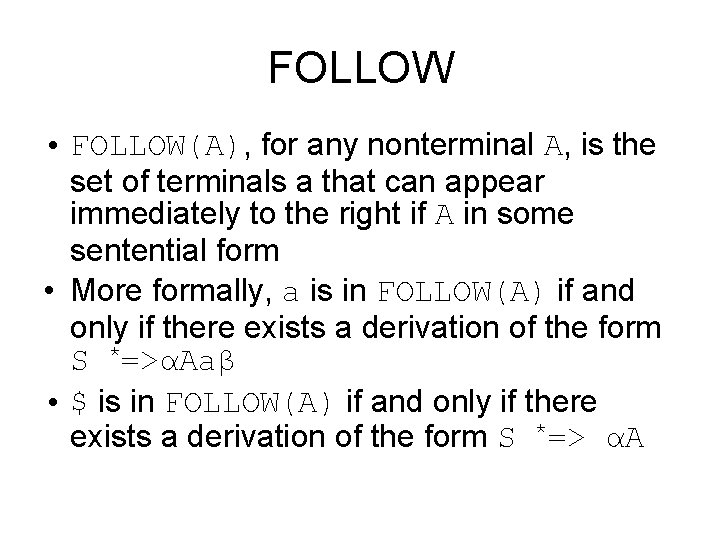

FOLLOW • FOLLOW(A), for any nonterminal A, is the set of terminals a that can appear immediately to the right of A in some sentential form • More formally, a is in FOLLOW(A) if and only if there exists a derivation of the form S *=> αAaβ • $ is in FOLLOW(A) if and only if there exists a derivation of the form S *=> αA

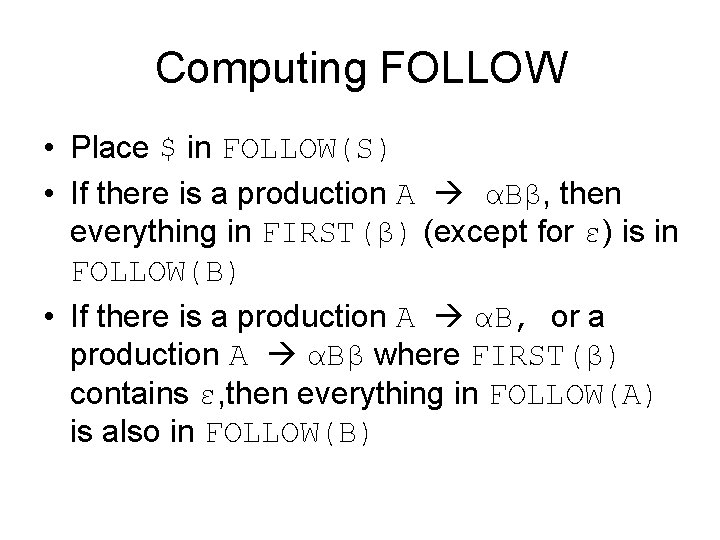

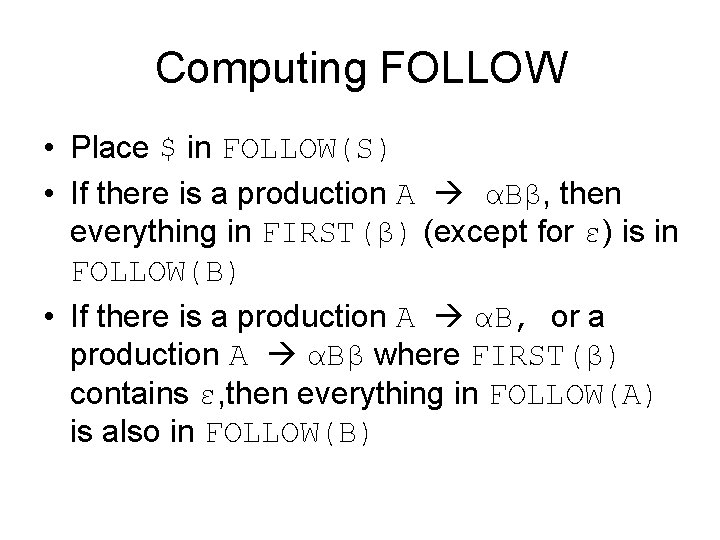

Computing FOLLOW • Place $ in FOLLOW(S) • If there is a production A → αBβ, then everything in FIRST(β) (except for ε) is in FOLLOW(B) • If there is a production A → αB, or a production A → αBβ where FIRST(β) contains ε, then everything in FOLLOW(A) is also in FOLLOW(B)

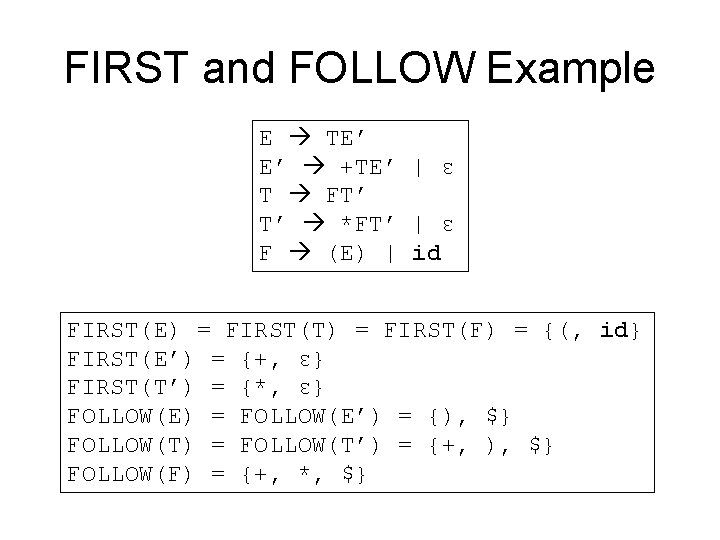

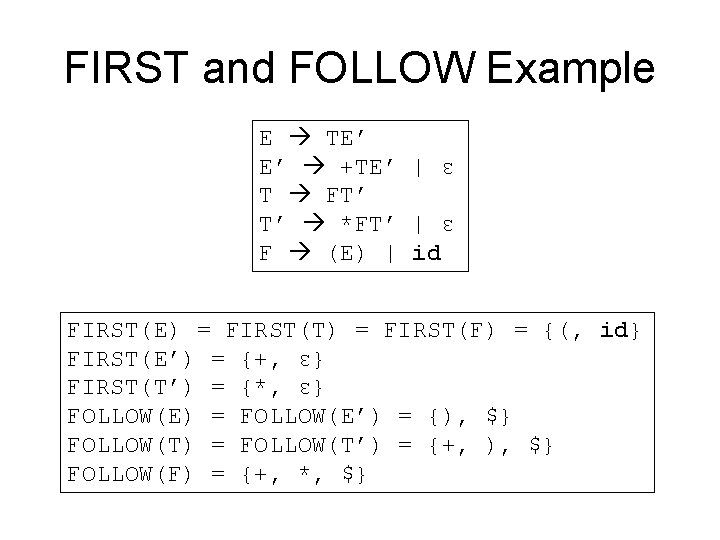

FIRST and FOLLOW Example • Grammar: E → TE’; E’ → +TE’ | ε; T → FT’; T’ → *FT’ | ε; F → (E) | id • FIRST(E) = FIRST(T) = FIRST(F) = {(, id} • FIRST(E’) = {+, ε} • FIRST(T’) = {*, ε} • FOLLOW(E) = FOLLOW(E’) = {), $} • FOLLOW(T) = FOLLOW(T’) = {+, ), $} • FOLLOW(F) = {+, *, ), $}
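The FIRST and FOLLOW rules can be applied repeatedly until no set changes. The sketch below does this for the expression grammar; the dictionary representation and the names `first_of`, `compute_first`, and `compute_follow` are assumptions made for illustration, and `E'` and `T'` stand for E’ and T’.

```python
EPS = "ε"
GRAMMAR = {
    "E": [["T", "E'"]],
    "E'": [["+", "T", "E'"], [EPS]],
    "T": [["F", "T'"]],
    "T'": [["*", "F", "T'"], [EPS]],
    "F": [["(", "E", ")"], ["id"]],
}

def first_of(symbols, first):
    """FIRST of a string of symbols, given FIRST sets for the nonterminals."""
    result = set()
    for s in symbols:
        if s == EPS:
            continue
        f = first.get(s, {s})          # FIRST of a terminal is the terminal
        result |= f - {EPS}
        if EPS not in f:
            return result
    result.add(EPS)                    # every symbol can derive ε
    return result

def compute_first(grammar):
    first = {a: set() for a in grammar}
    changed = True
    while changed:                     # iterate to a fixed point
        changed = False
        for a, alts in grammar.items():
            for alt in alts:
                new = first_of(alt, first)
                if not new <= first[a]:
                    first[a] |= new
                    changed = True
    return first

def compute_follow(grammar, first, start):
    follow = {a: set() for a in grammar}
    follow[start].add("$")
    changed = True
    while changed:
        changed = False
        for a, alts in grammar.items():
            for alt in alts:
                for i, b in enumerate(alt):
                    if b not in grammar:
                        continue       # FOLLOW is defined for nonterminals
                    beta = first_of(alt[i + 1:], first)
                    new = beta - {EPS}
                    if EPS in beta:    # β is empty or can derive ε
                        new |= follow[a]
                    if not new <= follow[b]:
                        follow[b] |= new
                        changed = True
    return follow

FIRST = compute_first(GRAMMAR)
FOLLOW = compute_follow(GRAMMAR, FIRST, "E")
for nt in GRAMMAR:
    print(nt, sorted(FIRST[nt]), sorted(FOLLOW[nt]))
```

Running the fixed-point loop is a simple way to check hand-computed sets against the rules.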

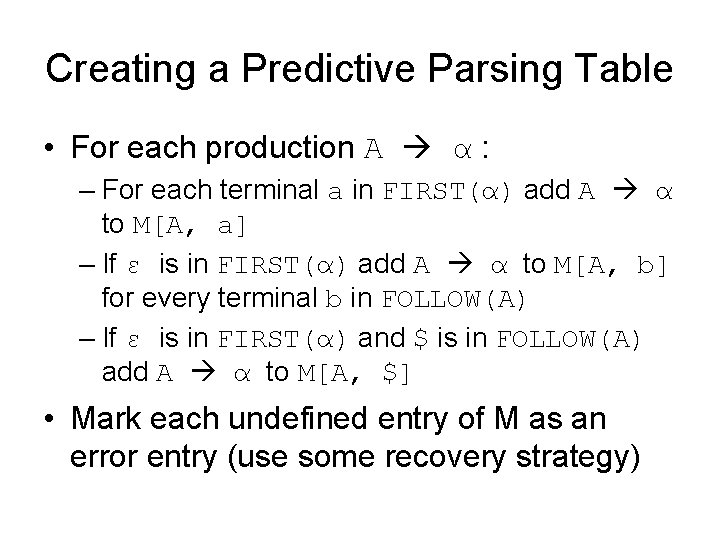

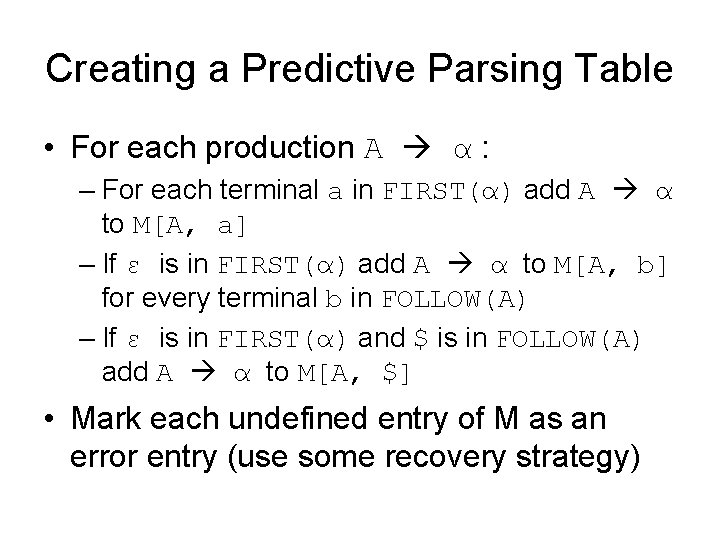

Creating a Predictive Parsing Table • For each production A → α: – For each terminal a in FIRST(α), add A → α to M[A, a] – If ε is in FIRST(α), add A → α to M[A, b] for every terminal b in FOLLOW(A) – If ε is in FIRST(α) and $ is in FOLLOW(A), add A → α to M[A, $] • Mark each undefined entry of M as an error entry (use some recovery strategy)
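The construction above can be sketched as a short Python function. The FIRST and FOLLOW sets are supplied by hand here so the example stays self-contained. The names `build_table` and `is_ll1` and the dictionary layout are assumptions. Each table entry is a list, so a multiply-defined entry shows up as a list with more than one production.

```python
EPS = "ε"

def first_of(symbols, first):
    """FIRST of a string of symbols, given FIRST sets for the nonterminals."""
    result = set()
    for s in symbols:
        if s == EPS:
            continue
        f = first.get(s, {s})
        result |= f - {EPS}
        if EPS not in f:
            return result
    result.add(EPS)
    return result

def build_table(grammar, first, follow):
    """Map (nonterminal, terminal) to the list of applicable right sides."""
    table = {}
    for a, alts in grammar.items():
        for alt in alts:
            f = first_of(alt, first)
            targets = f - {EPS}
            if EPS in f:
                targets |= follow[a]   # covers "$" when it is in FOLLOW(A)
            for t in targets:
                table.setdefault((a, t), []).append(alt)
    return table                       # missing keys are the error entries

def is_ll1(table):
    return all(len(entries) == 1 for entries in table.values())

grammar = {
    "E": [["T", "E'"]], "E'": [["+", "T", "E'"], [EPS]],
    "T": [["F", "T'"]], "T'": [["*", "F", "T'"], [EPS]],
    "F": [["(", "E", ")"], ["id"]],
}
first = {"E": {"(", "id"}, "T": {"(", "id"}, "F": {"(", "id"},
         "E'": {"+", EPS}, "T'": {"*", EPS}}
follow = {"E": {")", "$"}, "E'": {")", "$"},
          "T": {"+", ")", "$"}, "T'": {"+", ")", "$"},
          "F": {"+", "*", ")", "$"}}

table = build_table(grammar, first, follow)
print(is_ll1(table), len(table))
```

Keeping every candidate production in a list, instead of overwriting, makes conflicts visible when the grammar is not suitable for predictive parsing.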

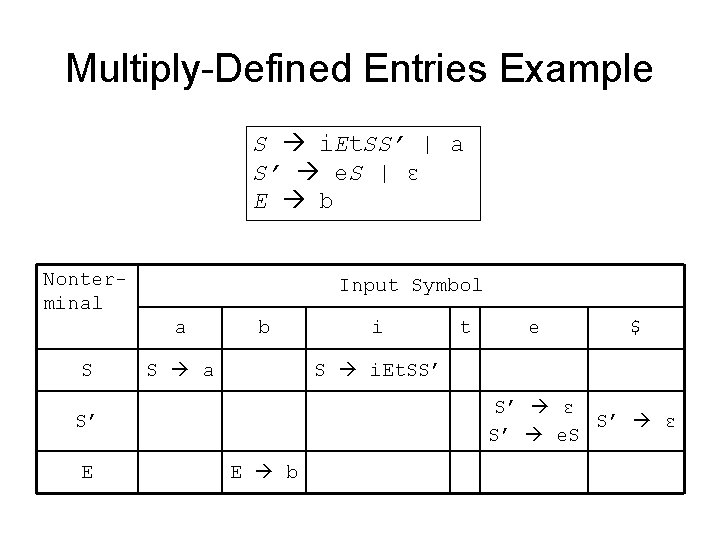

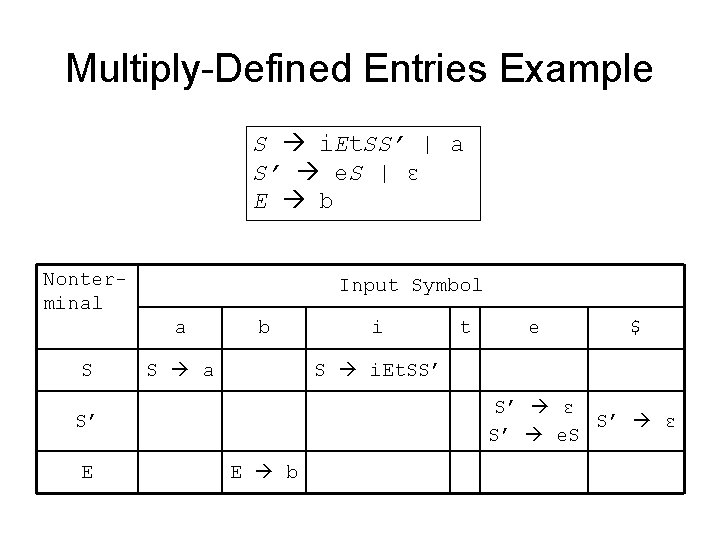

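Multiply-Defined Entries Example • Grammar: S → iEtSS’ | a; S’ → eS | ε; E → b

| Nonterminal | a | b | e | i | t | $ |
|---|---|---|---|---|---|---|
| S | S → a | | | S → iEtSS’ | | |
| S’ | | | S’ → ε, S’ → eS | | | S’ → ε |
| E | | E → b | | | | |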

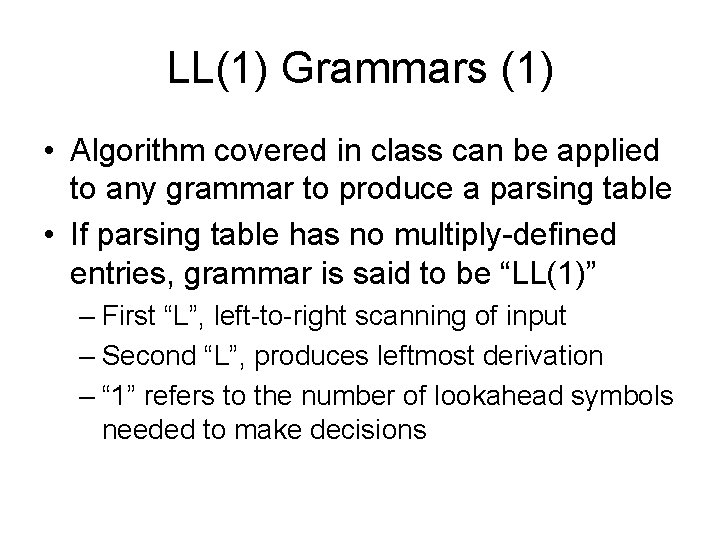

LL(1) Grammars (1) • Algorithm covered in class can be applied to any grammar to produce a parsing table • If parsing table has no multiply-defined entries, grammar is said to be “LL(1)” – First “L”, left-to-right scanning of input – Second “L”, produces leftmost derivation – “1” refers to the number of lookahead symbols needed to make decisions

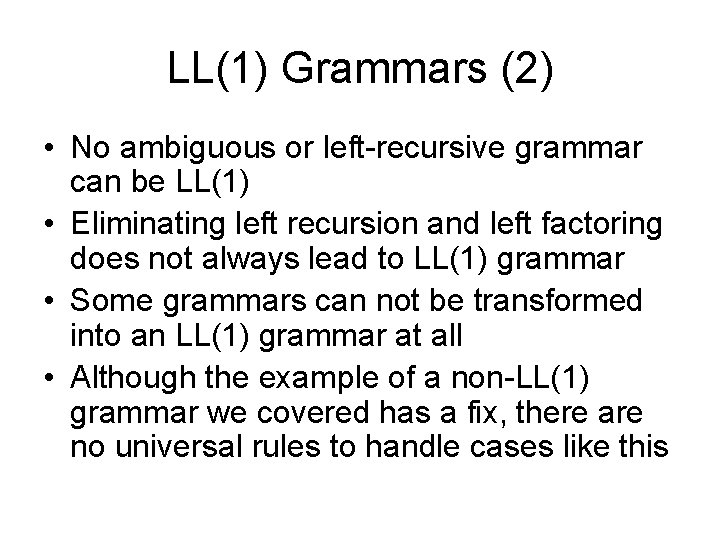

LL(1) Grammars (2) • No ambiguous or left-recursive grammar can be LL(1) • Eliminating left recursion and left factoring does not always lead to LL(1) grammar • Some grammars can not be transformed into an LL(1) grammar at all • Although the example of a non-LL(1) grammar we covered has a fix, there are no universal rules to handle cases like this

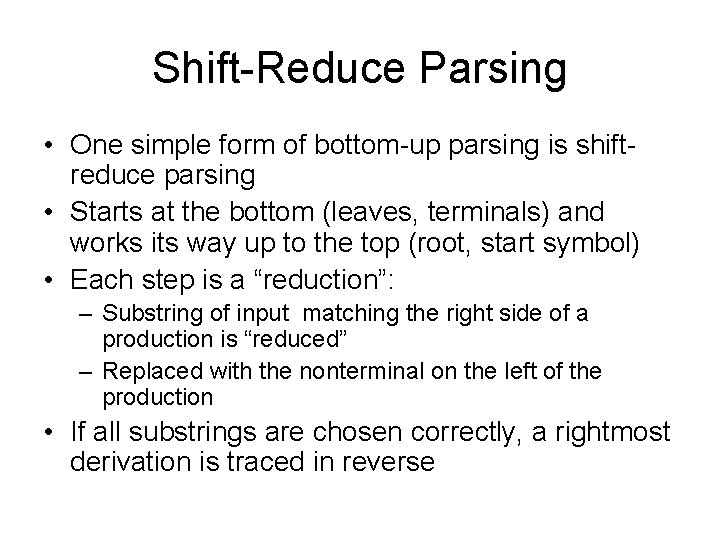

Shift-Reduce Parsing • One simple form of bottom-up parsing is shift-reduce parsing • Starts at the bottom (leaves, terminals) and works its way up to the top (root, start symbol) • Each step is a “reduction”: – Substring of input matching the right side of a production is “reduced” – Replaced with the nonterminal on the left of the production • If all substrings are chosen correctly, a rightmost derivation is traced in reverse

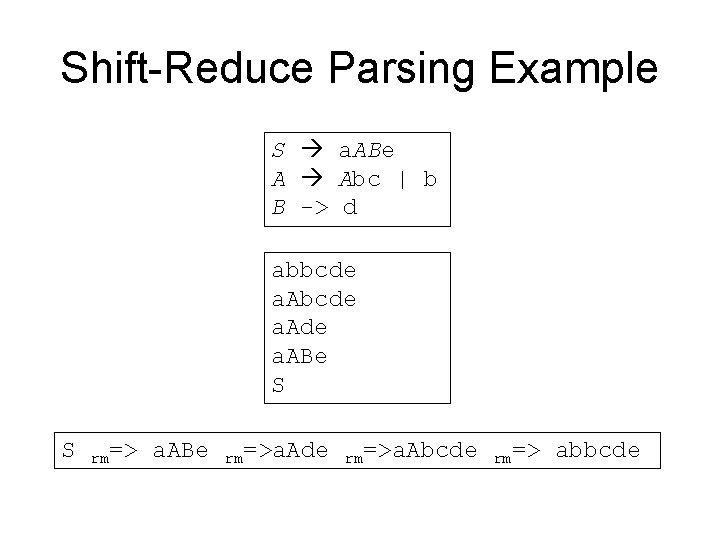

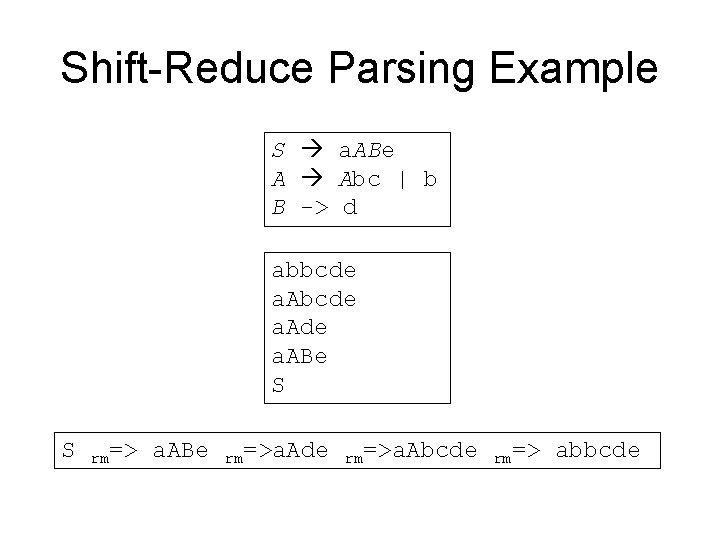

Shift-Reduce Parsing Example • Grammar: S → aABe; A → Abc | b; B → d • Reductions: abbcde, aAbcde, aAde, aABe, S • Rightmost derivation traced in reverse: S rm=> aABe rm=> aAde rm=> aAbcde rm=> abbcde

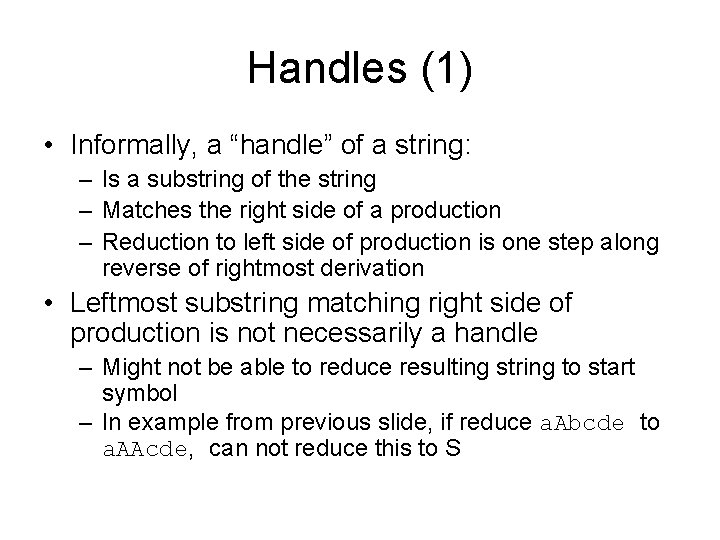

Handles (1) • Informally, a “handle” of a string: – Is a substring of the string – Matches the right side of a production – Reduction to left side of production is one step along reverse of rightmost derivation • Leftmost substring matching right side of production is not necessarily a handle – Might not be able to reduce resulting string to start symbol – In example from previous slide, if we reduce aAbcde to aAAcde, we cannot reduce this to S

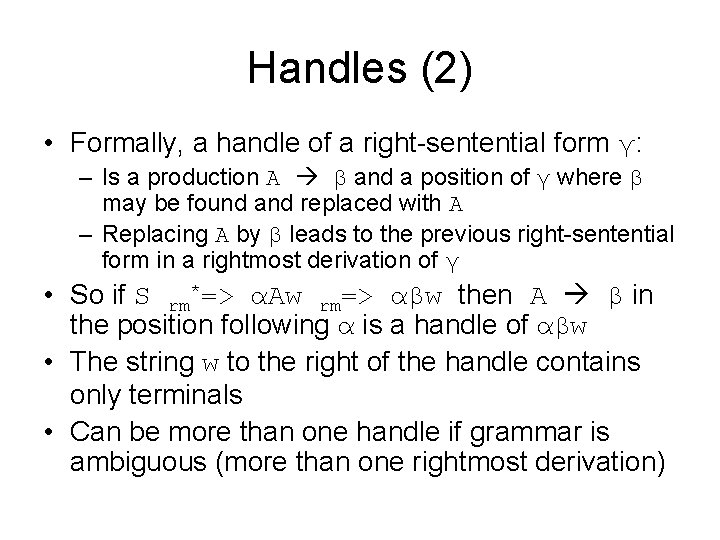

Handles (2) • Formally, a handle of a right-sentential form γ: – Is a production A → β and a position in γ where β may be found and replaced with A – Replacing β by A produces the previous right-sentential form in a rightmost derivation of γ • So if S rm*=> αAw rm=> αβw, then A → β in the position following α is a handle of αβw • The string w to the right of the handle contains only terminals • Can be more than one handle if grammar is ambiguous (more than one rightmost derivation)

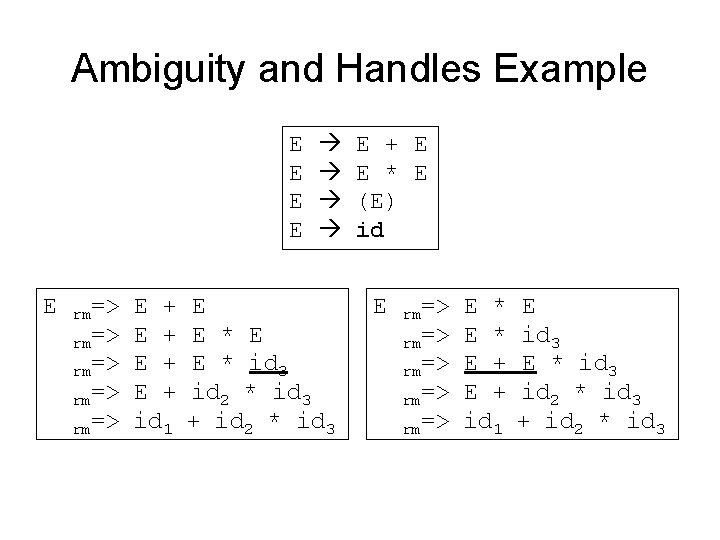

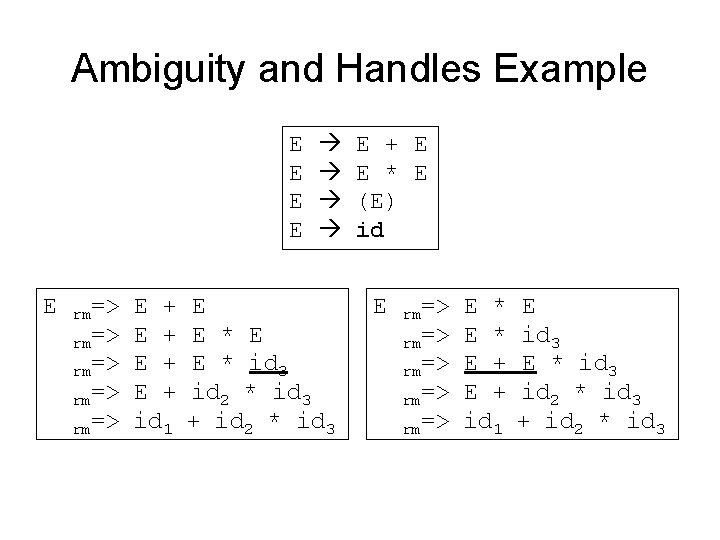

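Ambiguity and Handles Example • Grammar: E → E + E | E * E | (E) | id • First rightmost derivation: E rm=> E + E rm=> E + E * E rm=> E + E * id3 rm=> E + id2 * id3 rm=> id1 + id2 * id3 • Second rightmost derivation: E rm=> E * E rm=> E * id3 rm=> E + E * id3 rm=> E + id2 * id3 rm=> id1 + id2 * id3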

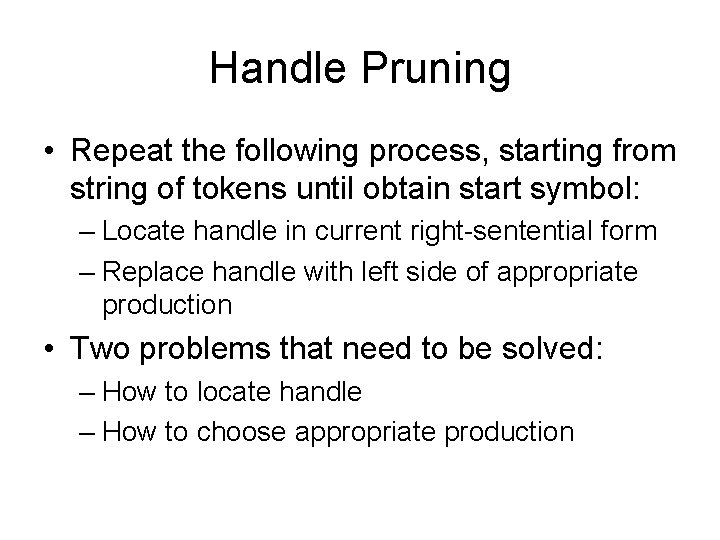

Handle Pruning • Repeat the following process, starting from string of tokens until obtain start symbol: – Locate handle in current right-sentential form – Replace handle with left side of appropriate production • Two problems that need to be solved: – How to locate handle – How to choose appropriate production

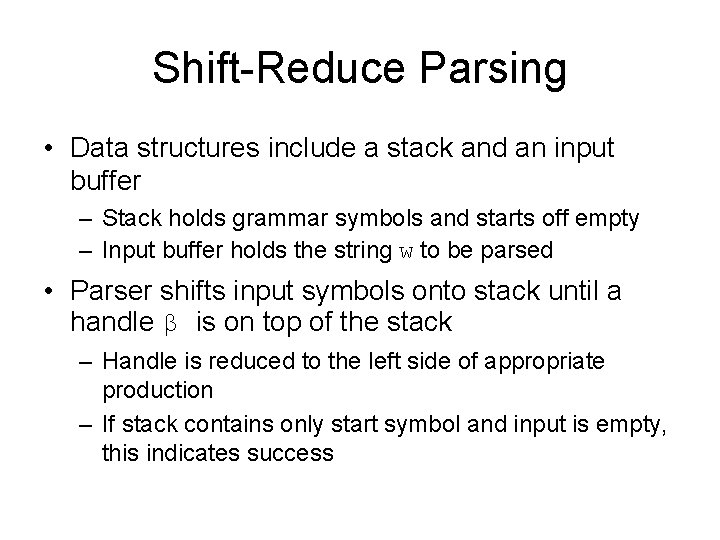

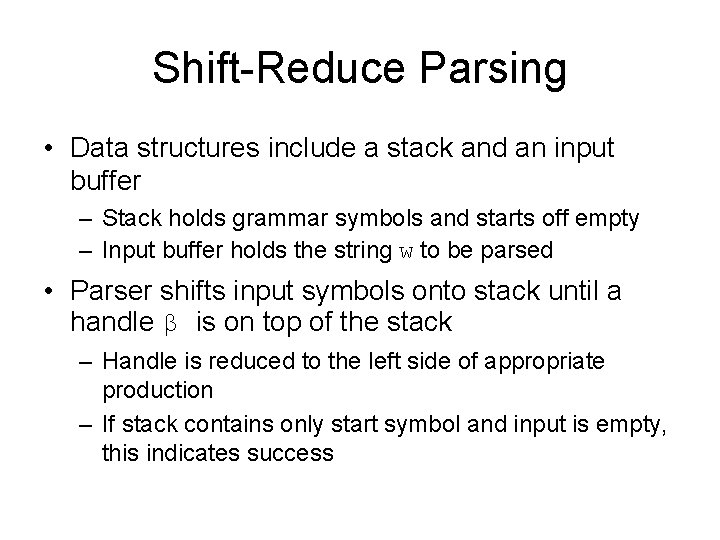

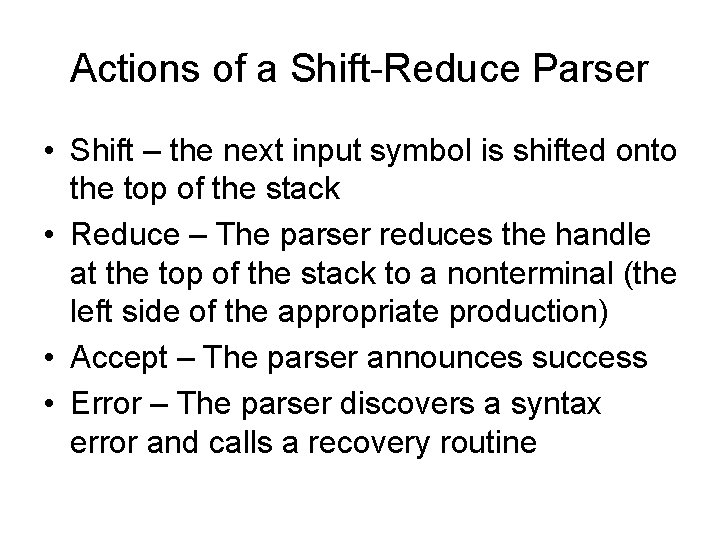

Shift-Reduce Parsing • Data structures include a stack and an input buffer – Stack holds grammar symbols and starts off empty – Input buffer holds the string w to be parsed • Parser shifts input symbols onto stack until a handle β is on top of the stack – Handle is reduced to the left side of appropriate production – If stack contains only start symbol and input is empty, this indicates success

Actions of a Shift-Reduce Parser • Shift – the next input symbol is shifted onto the top of the stack • Reduce – The parser reduces the handle at the top of the stack to a nonterminal (the left side of the appropriate production) • Accept – The parser announces success • Error – The parser discovers a syntax error and calls a recovery routine

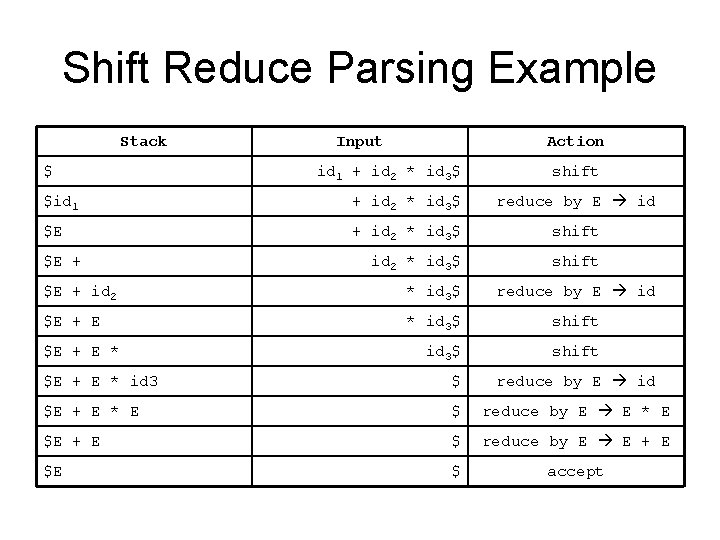

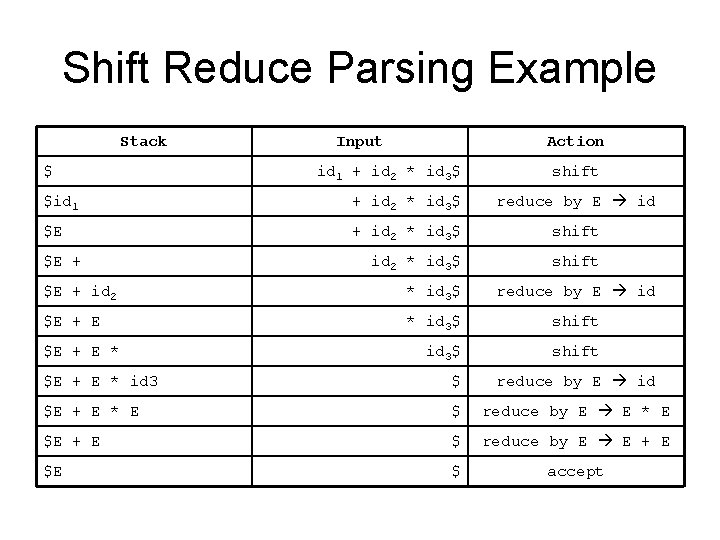

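Shift Reduce Parsing Example

| Stack | Input | Action |
|---|---|---|
| $ | id1 + id2 * id3 $ | shift |
| $ id1 | + id2 * id3 $ | reduce by E → id |
| $ E | + id2 * id3 $ | shift |
| $ E + | id2 * id3 $ | shift |
| $ E + id2 | * id3 $ | reduce by E → id |
| $ E + E | * id3 $ | shift |
| $ E + E * | id3 $ | shift |
| $ E + E * id3 | $ | reduce by E → id |
| $ E + E * E | $ | reduce by E → E * E |
| $ E + E | $ | reduce by E → E + E |
| $ E | $ | accept |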

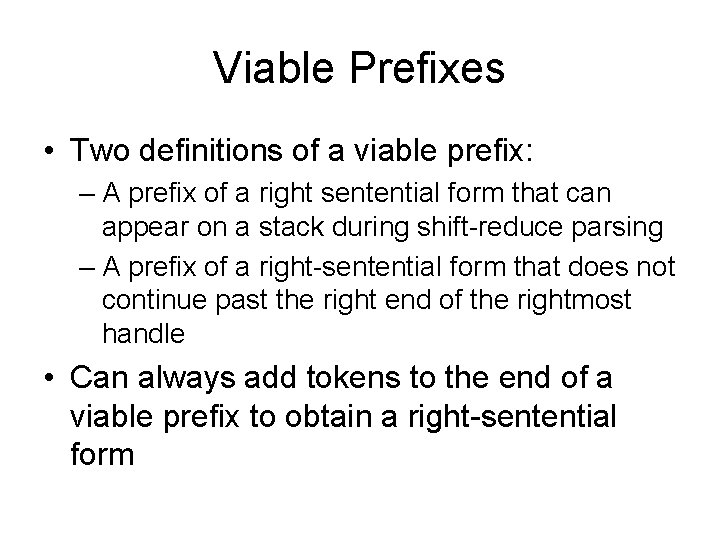

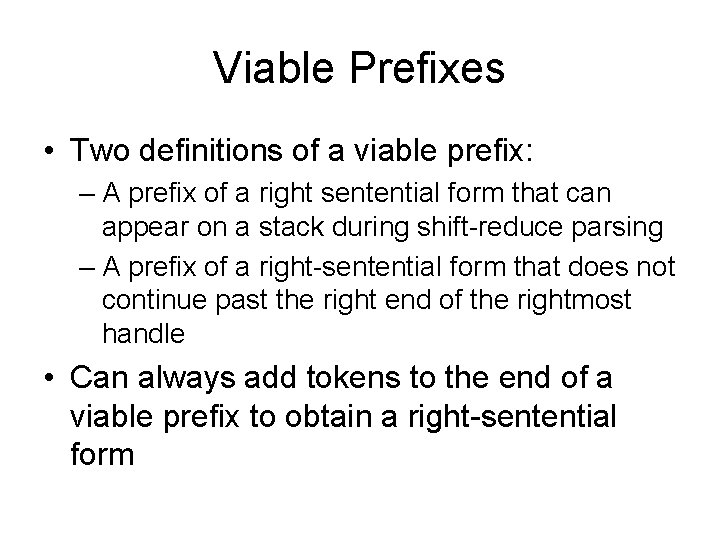

Viable Prefixes • Two definitions of a viable prefix: – A prefix of a right sentential form that can appear on a stack during shift-reduce parsing – A prefix of a right-sentential form that does not continue past the right end of the rightmost handle • Can always add tokens to the end of a viable prefix to obtain a right-sentential form

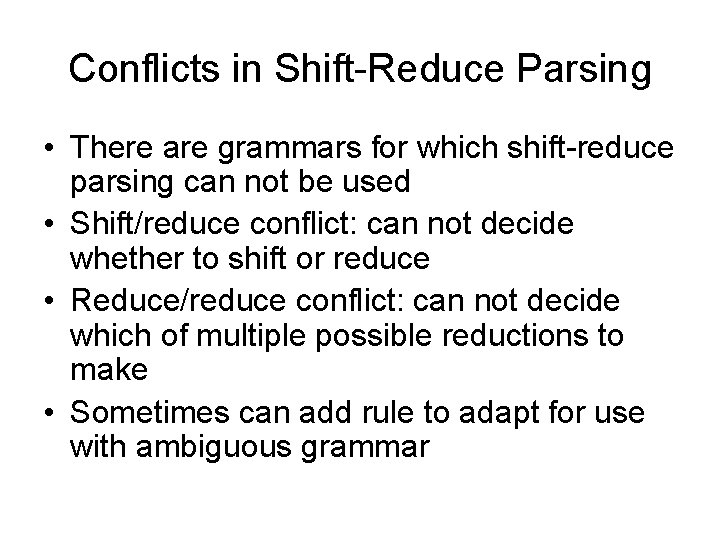

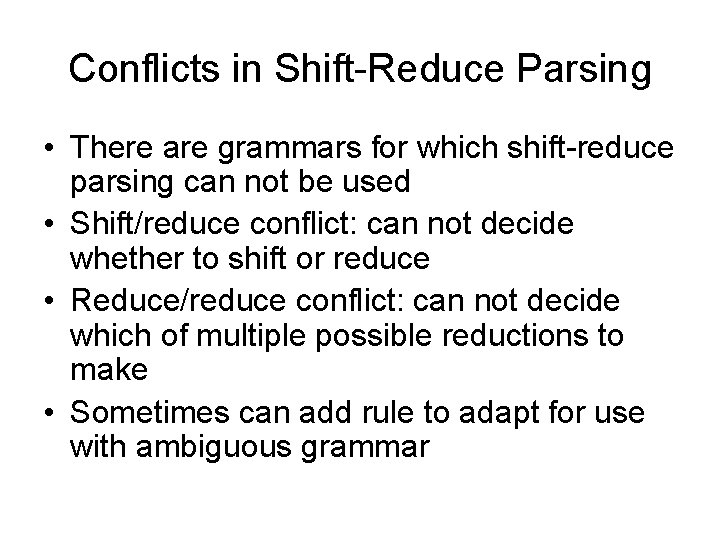

Conflicts in Shift-Reduce Parsing • There are grammars for which shift-reduce parsing can not be used • Shift/reduce conflict: can not decide whether to shift or reduce • Reduce/reduce conflict: can not decide which of multiple possible reductions to make • Sometimes can add rule to adapt for use with ambiguous grammar

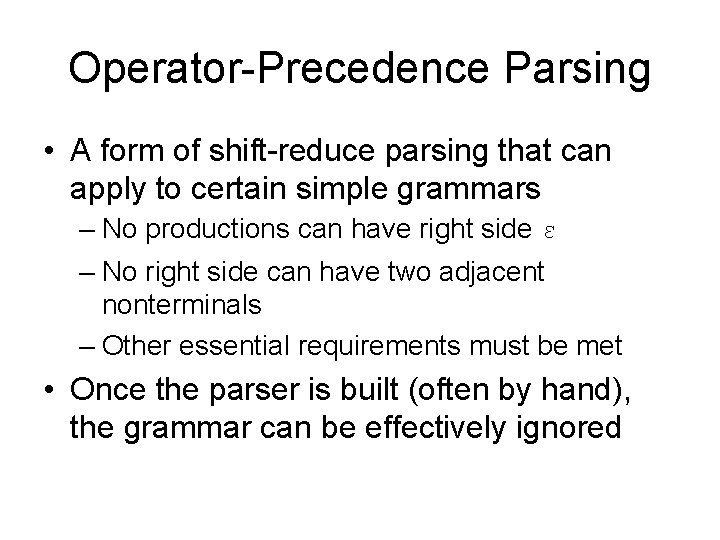

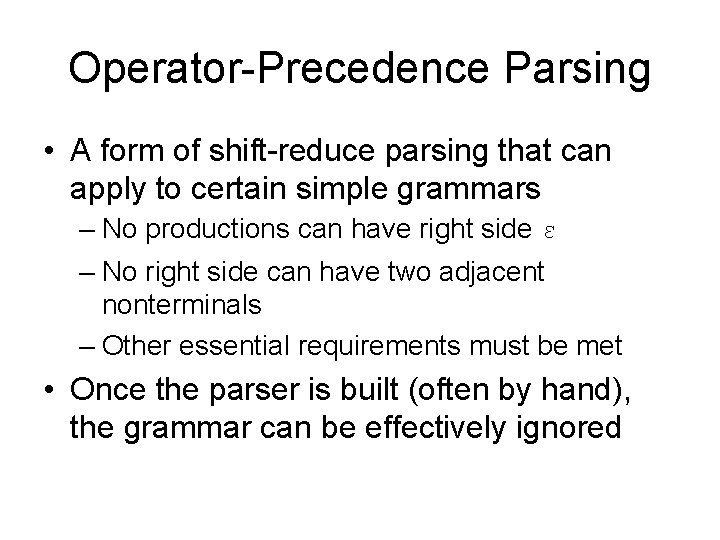

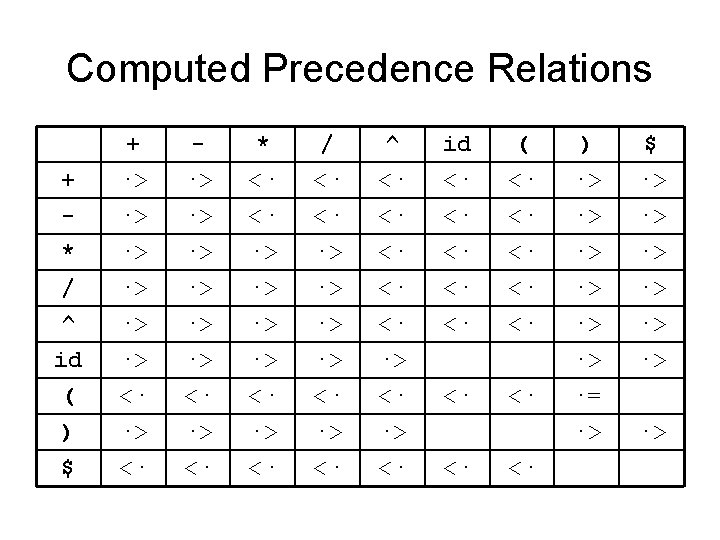

Operator-Precedence Parsing • A form of shift-reduce parsing that can apply to certain simple grammars – No productions can have right side ε – No right side can have two adjacent nonterminals – Other essential requirements must be met • Once the parser is built (often by hand), the grammar can be effectively ignored

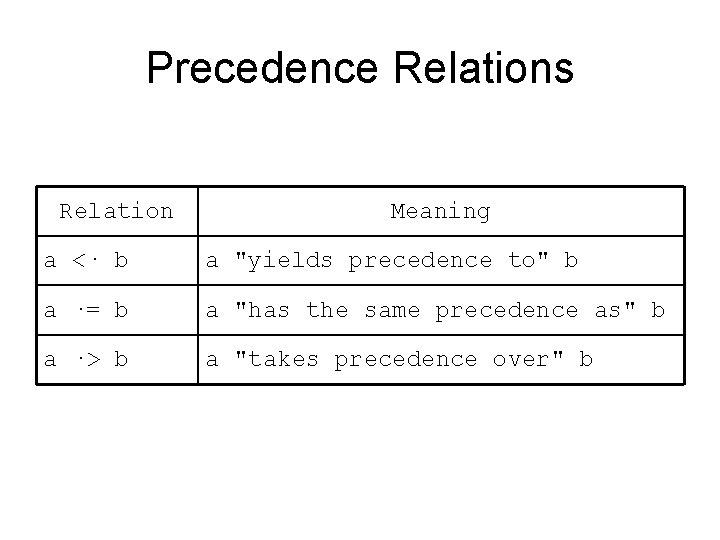

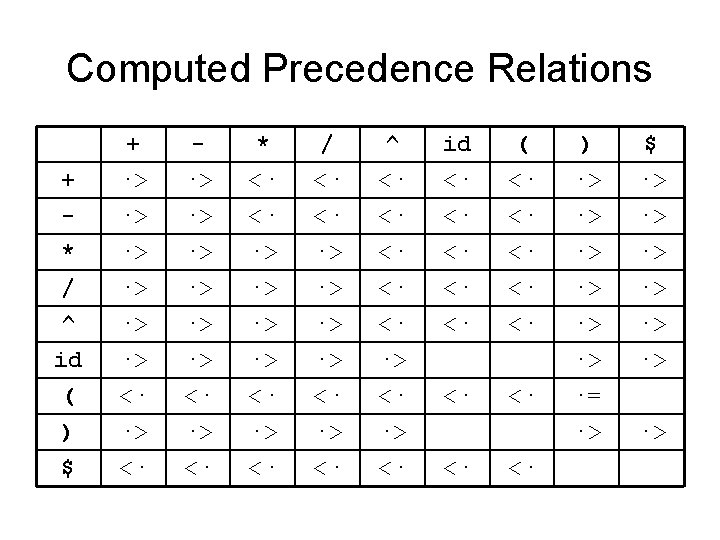

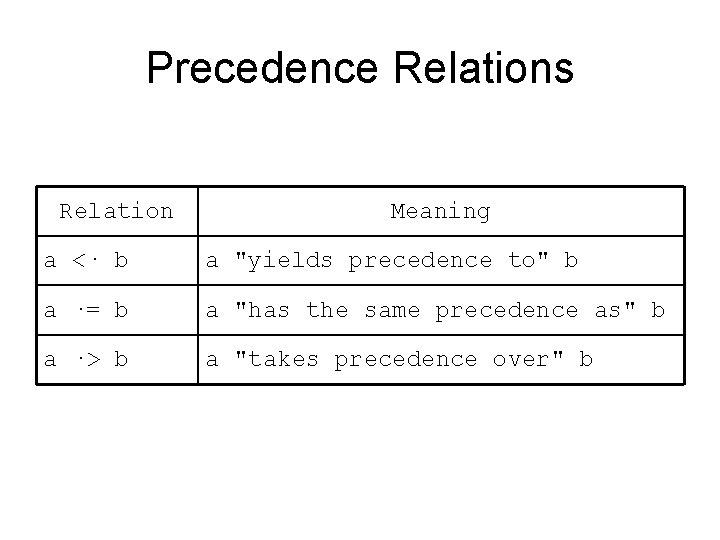

Precedence Relations

| Relation | Meaning |
|---|---|
| a <· b | a "yields precedence to" b |
| a ·= b | a "has the same precedence as" b |
| a ·> b | a "takes precedence over" b |

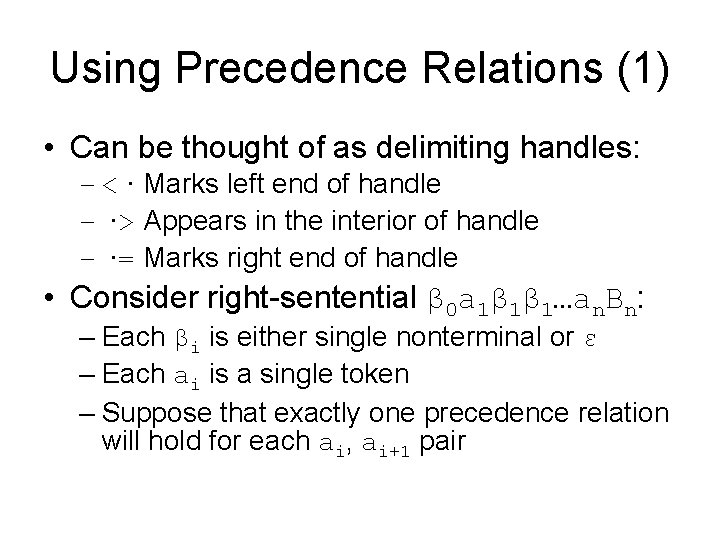

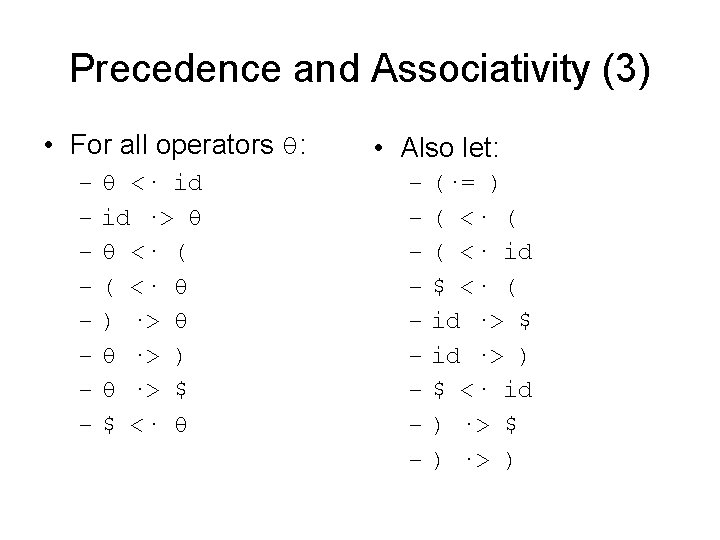

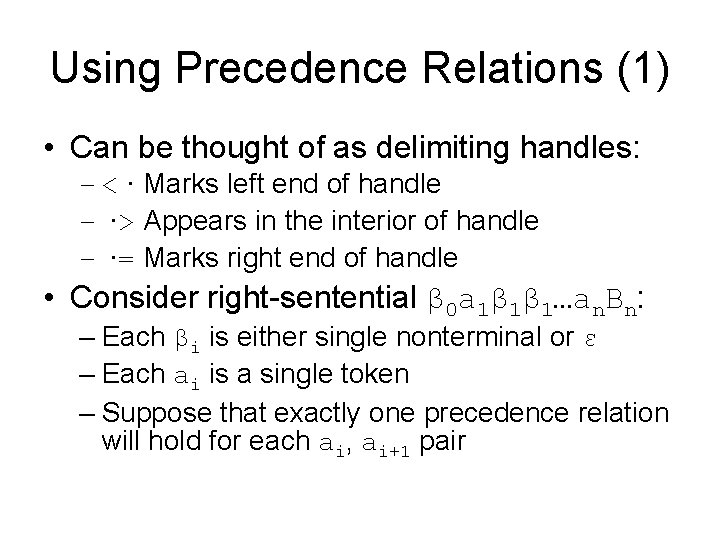

Using Precedence Relations (1) • Can be thought of as delimiting handles: – <· marks the left end of the handle – ·= appears in the interior of the handle – ·> marks the right end of the handle • Consider the right-sentential form β0 a1 β1 a2 β2 … an βn: – Each βi is either a single nonterminal or ε – Each ai is a single token – Suppose that exactly one precedence relation holds for each pair ai, ai+1

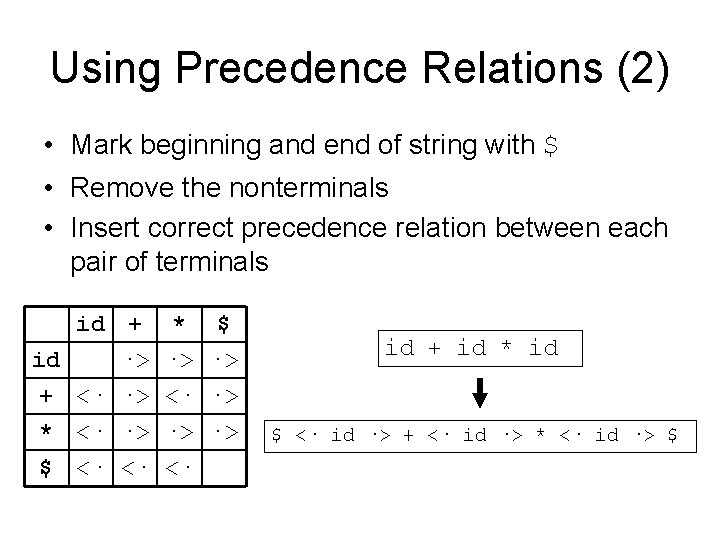

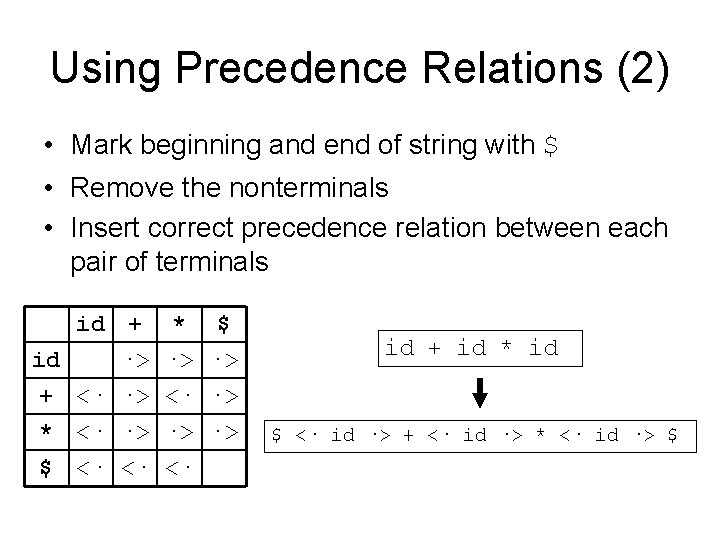

Using Precedence Relations (2) • Mark beginning and end of string with $ • Remove the nonterminals • Insert correct precedence relation between each pair of terminals

| | id | + | * | $ |
|---|---|---|---|---|
| id | | ·> | ·> | ·> |
| + | <· | ·> | <· | ·> |
| * | <· | ·> | ·> | ·> |
| $ | <· | <· | <· | |

Example: for the input id + id * id, the result is $ <· id ·> + <· id ·> * <· id ·> $

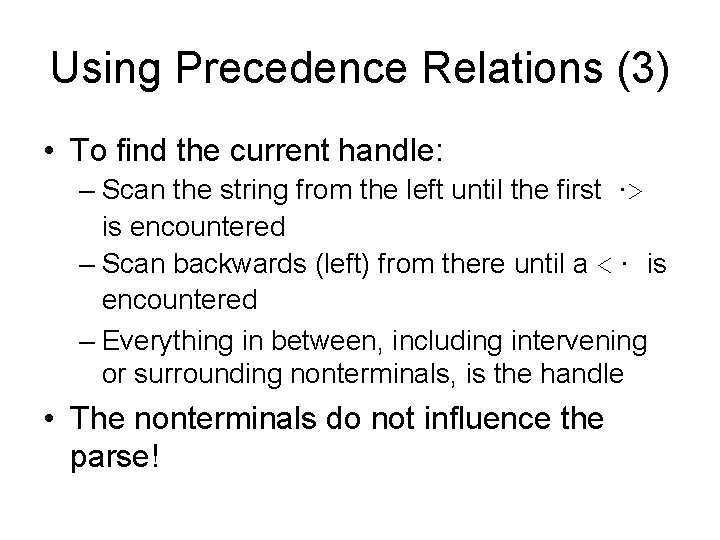

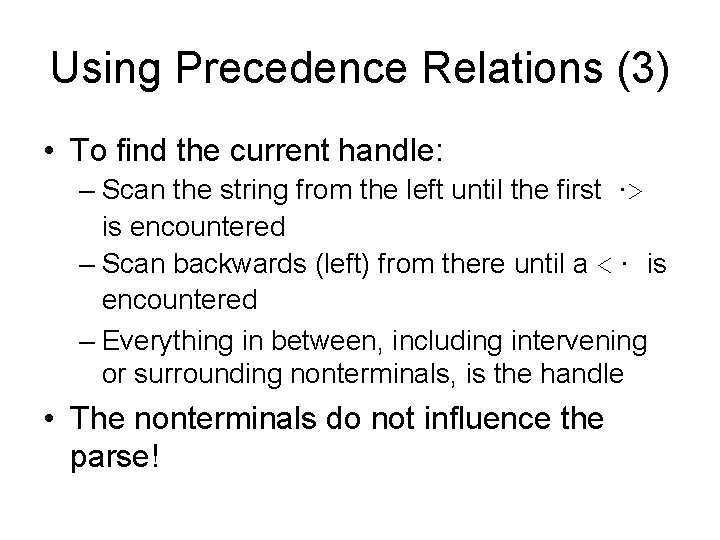

Using Precedence Relations (3) • To find the current handle: – Scan the string from the left until the first ·> is encountered – Scan backwards (left) from there until a <· is encountered – Everything in between, including intervening or surrounding nonterminals, is the handle • The nonterminals do not influence the parse!

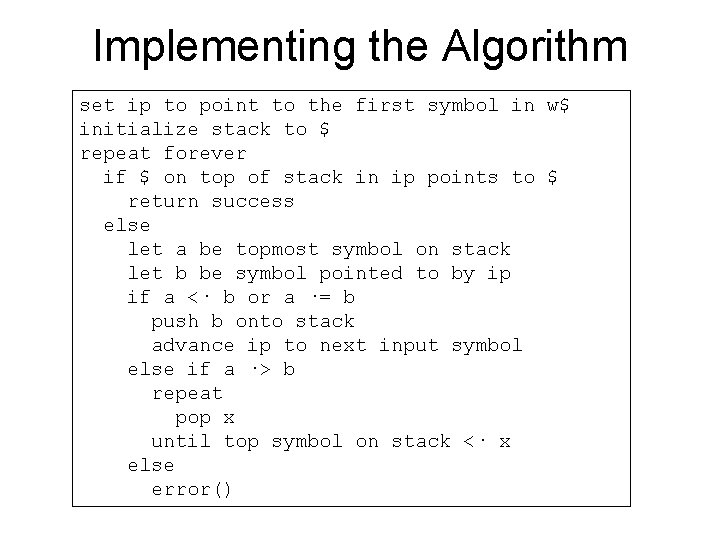

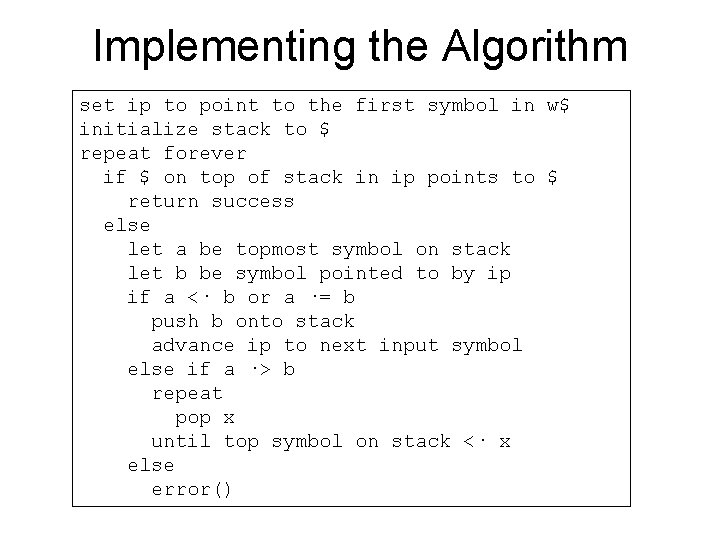

Implementing the Algorithm

    set ip to point to the first symbol in w$
    initialize stack to $
    repeat forever
        if $ is on top of the stack and ip points to $
            return success
        else
            let a be the topmost symbol on the stack
            let b be the symbol pointed to by ip
            if a <· b or a ·= b
                push b onto the stack
                advance ip to the next input symbol
            else if a ·> b
                repeat
                    pop x
                until the top symbol on the stack <· x
            else
                error()
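The algorithm can be sketched in Python for the small id, +, * relation table used on the earlier slide. The names `REL` and `op_precedence_parse`, and the encoding of <·, ·= and ·> as "<", "=" and ">", are assumptions of this sketch. As on the slides, only terminals are kept on the stack, so the function reports each handle as the list of terminals it contains.

```python
LT, EQ, GT = "<", "=", ">"     # stand-ins for <·, ·= and ·>

# REL[(a, b)]: relation between terminal a on the stack and input terminal b
REL = {
    ("id", "+"): GT, ("id", "*"): GT, ("id", "$"): GT,
    ("+", "id"): LT, ("+", "+"): GT, ("+", "*"): LT, ("+", "$"): GT,
    ("*", "id"): LT, ("*", "+"): GT, ("*", "*"): GT, ("*", "$"): GT,
    ("$", "id"): LT, ("$", "+"): LT, ("$", "*"): LT,
}

def op_precedence_parse(tokens):
    """Return the handles (terminals only) in the order they are reduced."""
    tokens = list(tokens) + ["$"]
    stack = ["$"]
    ip = 0
    handles = []
    while True:
        a, b = stack[-1], tokens[ip]
        if a == "$" and b == "$":
            return handles                     # success
        rel = REL.get((a, b))
        if rel in (LT, EQ):
            stack.append(b)                    # shift
            ip += 1
        elif rel == GT:
            handle = []
            while True:                        # pop until top <· last popped
                x = stack.pop()
                handle.insert(0, x)
                if REL.get((stack[-1], x)) == LT:
                    break
            handles.append(handle)
        else:
            raise SyntaxError(f"no precedence relation between {a!r} and {b!r}")

print(op_precedence_parse(["id", "+", "id", "*", "id"]))
```

For id + id * id the three id handles are reduced first, then the handle containing *, then the one containing +, which reflects * taking precedence over +.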

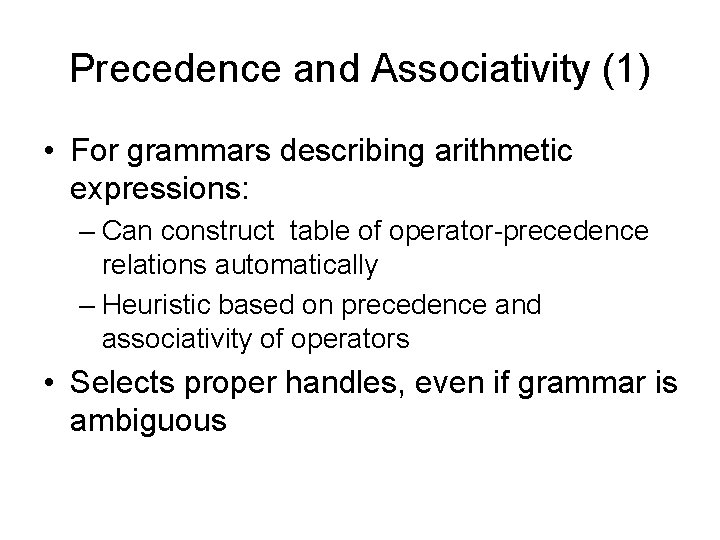

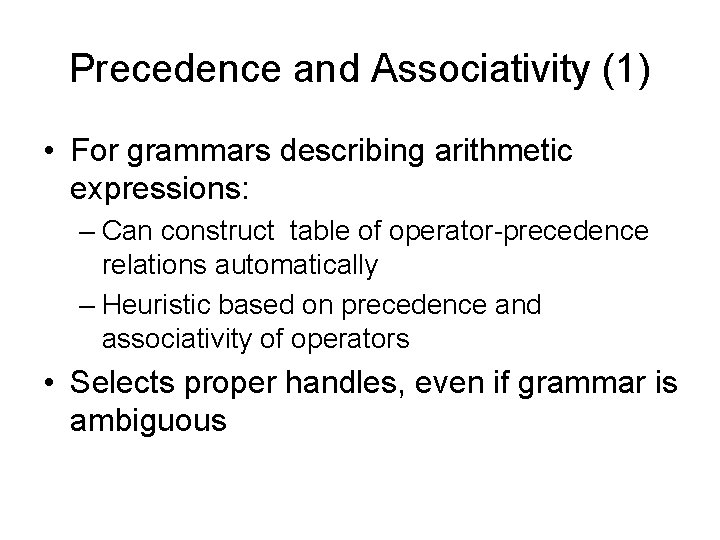

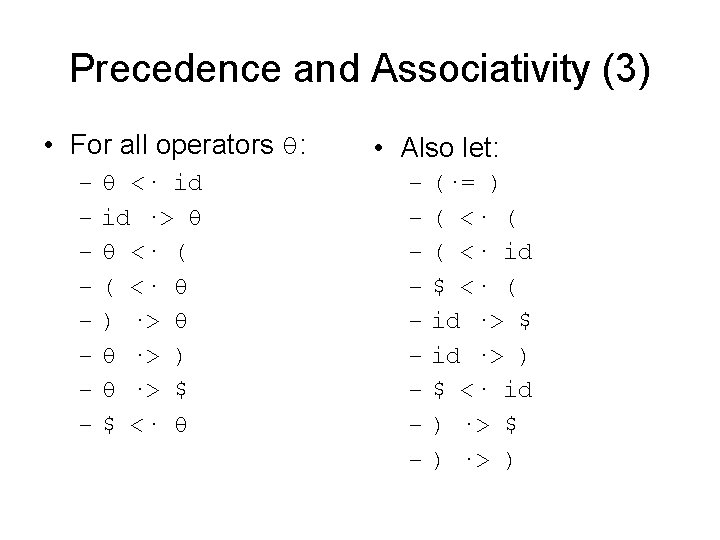

Precedence and Associativity (1) • For grammars describing arithmetic expressions: – Can construct table of operator-precedence relations automatically – Heuristic based on precedence and associativity of operators • Selects proper handles, even if grammar is ambiguous

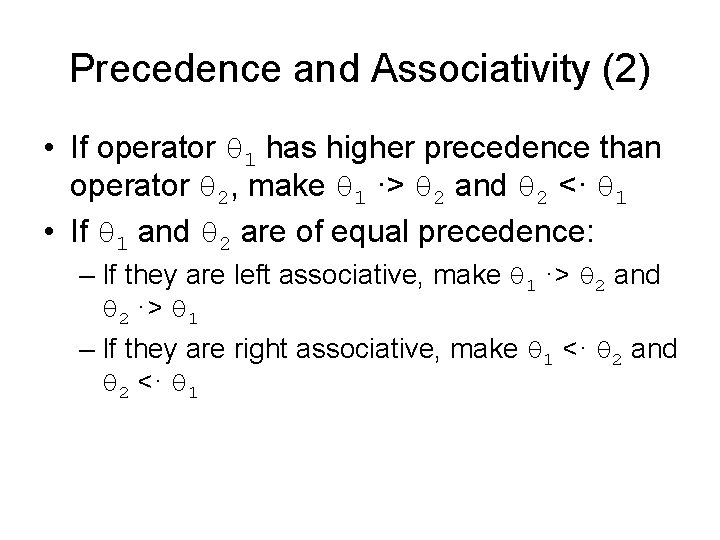

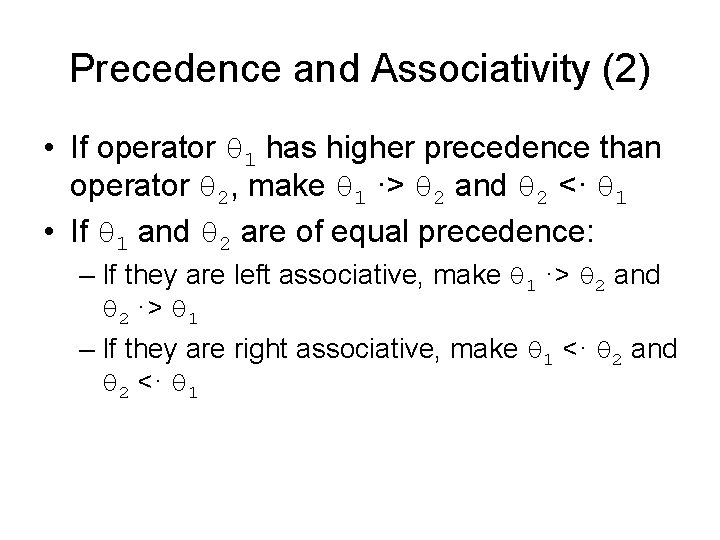

Precedence and Associativity (2) • If operator θ1 has higher precedence than operator θ2, make θ1 ·> θ2 and θ2 <· θ1 • If θ1 and θ2 are of equal precedence: – If they are left associative, make θ1 ·> θ2 and θ2 ·> θ1 – If they are right associative, make θ1 <· θ2 and θ2 <· θ1

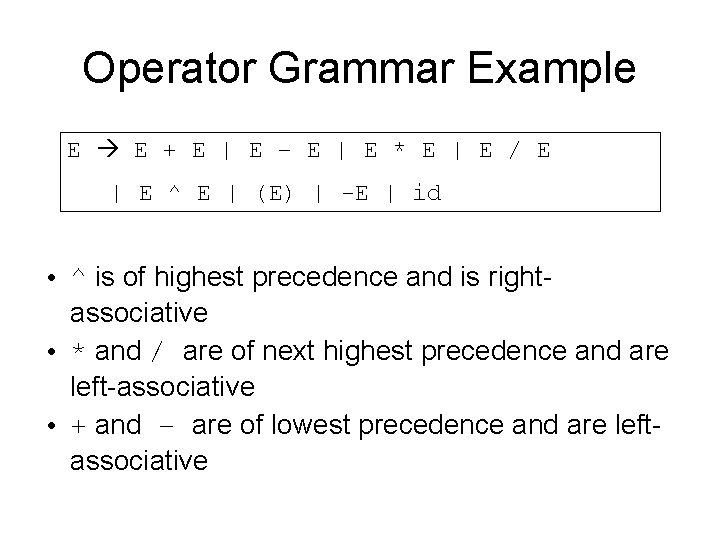

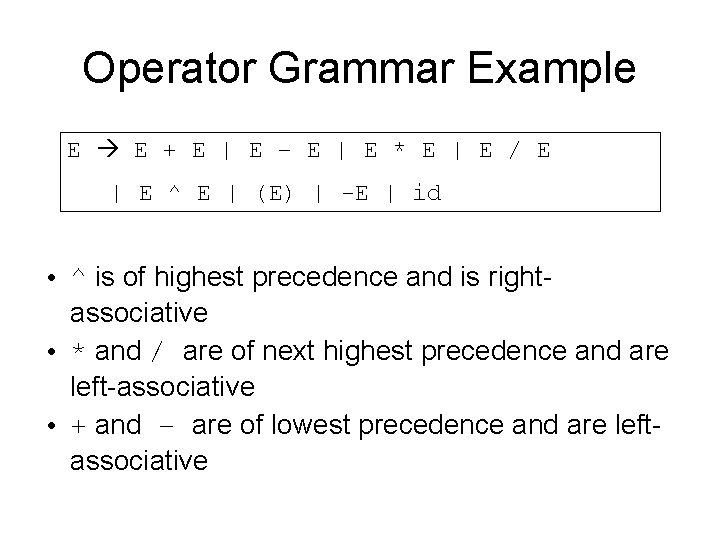

Operator Grammar Example • E → E + E | E – E | E * E | E / E | E ^ E | (E) | –E | id • ^ is of highest precedence and is right-associative • * and / are of next highest precedence and are left-associative • + and – are of lowest precedence and are left-associative
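The precedence and associativity rules translate into a small function that returns the relation between two binary operators. The tables `PREC` and `ASSOC`, the function name `relation`, the numeric precedence levels, and the ASCII `-` for the minus operator are assumptions for this sketch. Unary minus, parentheses, id and $ are not covered.

```python
# Higher number = higher precedence (levels chosen for this example)
PREC = {"+": 1, "-": 1, "*": 2, "/": 2, "^": 3}
ASSOC = {"+": "left", "-": "left", "*": "left", "/": "left", "^": "right"}

def relation(on_stack, incoming):
    """Return '<' for <· or '>' for ·> between two binary operators."""
    if PREC[on_stack] > PREC[incoming]:
        return ">"                      # θ1 ·> θ2
    if PREC[on_stack] < PREC[incoming]:
        return "<"                      # θ1 <· θ2
    # Equal precedence: left-associative reduces first, right-associative shifts.
    return ">" if ASSOC[on_stack] == "left" else "<"

for pair in [("+", "*"), ("*", "+"), ("+", "-"), ("^", "^")]:
    print(pair, relation(*pair))
```

Filling a table by calling `relation` for every ordered pair of operators gives the operator-to-operator portion of the precedence table.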

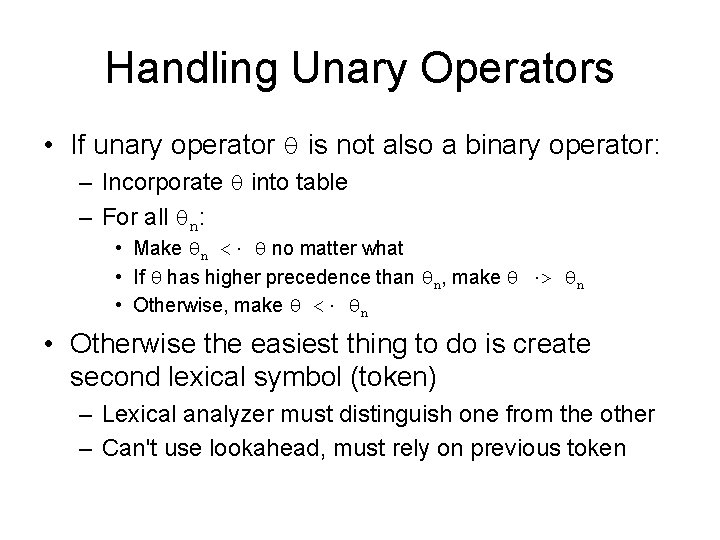

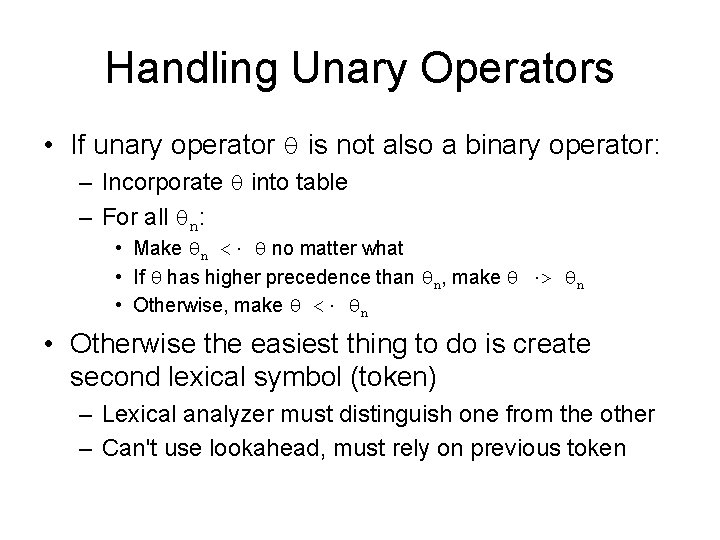

Handling Unary Operators • If unary operator θ is not also a binary operator: – Incorporate θ into table – For all θn: • Make θn <· θ no matter what • If θ has higher precedence than θn, make θ ·> θn • Otherwise, make θ <· θn • Otherwise the easiest thing to do is create second lexical symbol (token) – Lexical analyzer must distinguish one from the other – Can't use lookahead, must rely on previous token

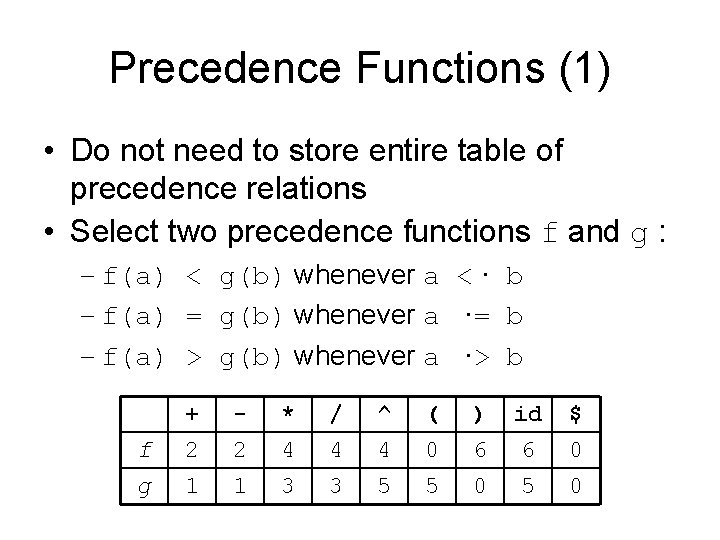

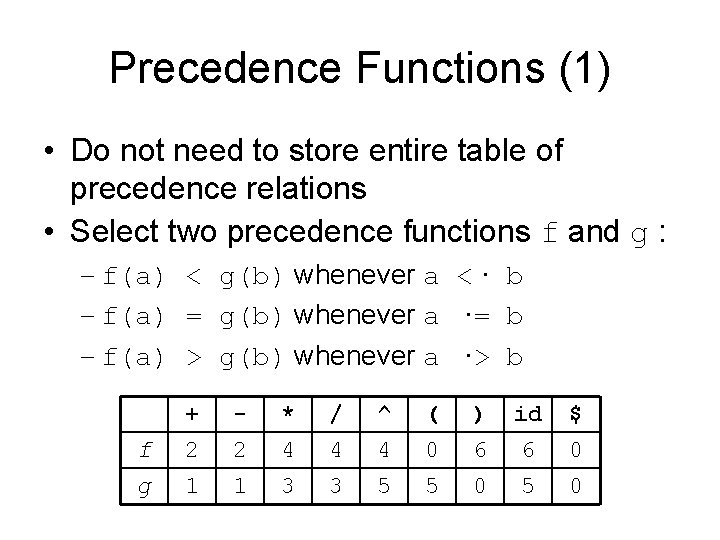

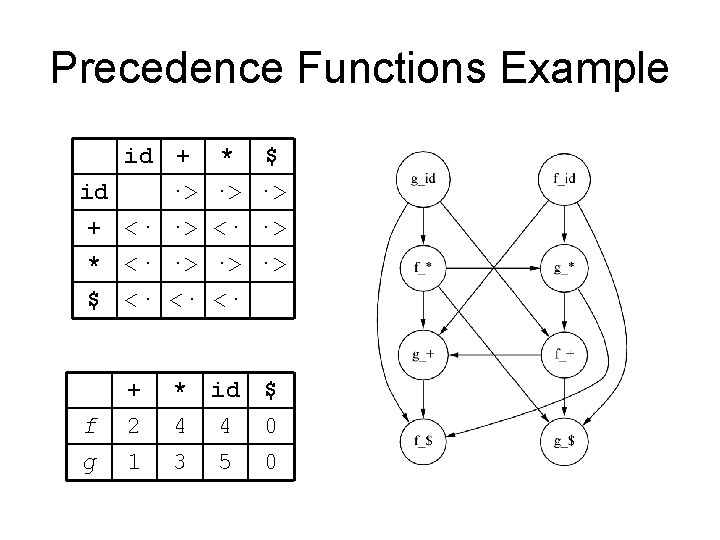

Precedence Functions (1) • Do not need to store entire table of precedence relations • Select two precedence functions f and g: – f(a) < g(b) whenever a <· b – f(a) = g(b) whenever a ·= b – f(a) > g(b) whenever a ·> b

| | + | * | / | ^ | ( | ) | id | $ |
|---|---|---|---|---|---|---|---|---|
| f | 2 | 4 | 4 | 4 | 0 | 6 | 6 | 0 |
| g | 1 | 3 | 3 | 5 | 5 | 0 | 5 | 0 |

Precedence Functions (2) • Precedence relation between a and b is determined by comparing f(a) to g(b) • Loss of error detection capability (errors caught later when no reduction for handle is found) • It is not always possible to construct valid precedence functions • When it is possible, functions can be computed automatically

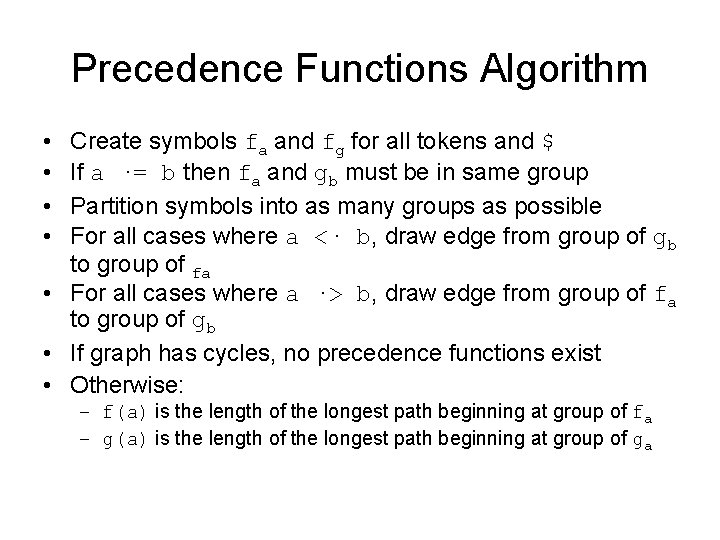

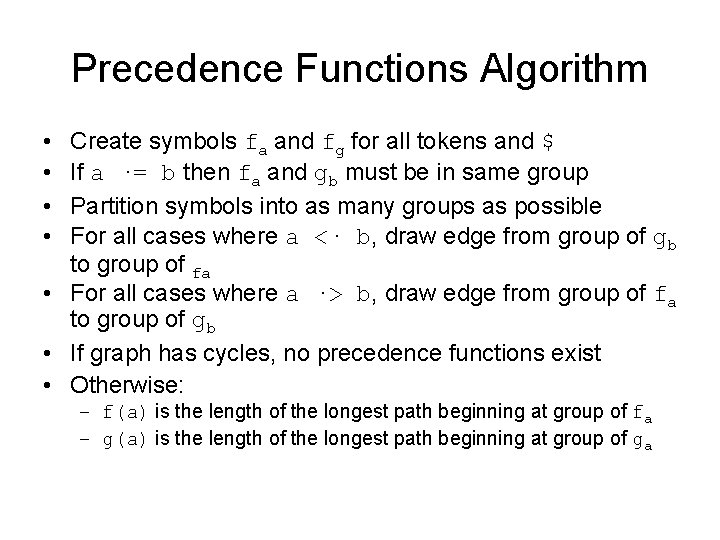

Precedence Functions Algorithm • Create symbols fa and ga for all tokens a and for $ • Partition the symbols into as many groups as possible, subject to: if a ·= b then fa and gb must be in the same group • For all cases where a <· b, draw an edge from the group of gb to the group of fa • For all cases where a ·> b, draw an edge from the group of fa to the group of gb • If the graph has cycles, no precedence functions exist • Otherwise: – f(a) is the length of the longest path beginning at the group of fa – g(a) is the length of the longest path beginning at the group of ga
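The graph construction can be sketched in Python. The function name `precedence_functions`, the tuple encoding of the nodes fa and ga, the tiny union-find used for grouping, and the "<", "=", ">" encoding of the relations are all assumptions made for illustration.

```python
def precedence_functions(symbols, rel):
    """Compute f and g from relations rel[(a, b)] in {'<', '=', '>'}."""
    # One node per f_a and g_a; nodes related by ·= share a group.
    parent = {(k, a): (k, a) for a in symbols for k in "fg"}

    def find(n):
        while parent[n] != n:
            n = parent[n]
        return n

    for (a, b), r in rel.items():
        if r == "=":
            parent[find(("f", a))] = find(("g", b))

    edges = {find(n): set() for n in parent}
    for (a, b), r in rel.items():
        fa, gb = find(("f", a)), find(("g", b))
        if r == "<":
            edges[gb].add(fa)          # a <· b: edge from g_b to f_a
        elif r == ">":
            edges[fa].add(gb)          # a ·> b: edge from f_a to g_b

    state = {}                         # group -> "visiting" or path length

    def longest(n):
        if state.get(n) == "visiting":
            raise ValueError("cycle: no precedence functions exist")
        if n not in state:
            state[n] = "visiting"
            state[n] = max((1 + longest(m) for m in edges[n]), default=0)
        return state[n]

    f = {a: longest(find(("f", a))) for a in symbols}
    g = {a: longest(find(("g", a))) for a in symbols}
    return f, g

REL = {
    ("id", "+"): ">", ("id", "*"): ">", ("id", "$"): ">",
    ("+", "id"): "<", ("+", "+"): ">", ("+", "*"): "<", ("+", "$"): ">",
    ("*", "id"): "<", ("*", "+"): ">", ("*", "*"): ">", ("*", "$"): ">",
    ("$", "id"): "<", ("$", "+"): "<", ("$", "*"): "<",
}
f, g = precedence_functions(["id", "+", "*", "$"], REL)
print(f, g)
```

A depth-first search with a "visiting" marker both detects cycles and computes the longest path from each group, so one traversal covers the last two steps of the algorithm.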

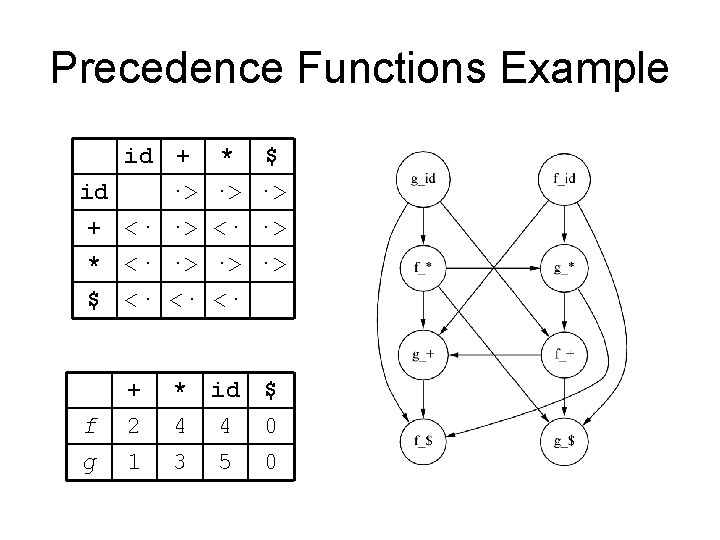

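Precedence Functions Example

| | id | + | * | $ |
|---|---|---|---|---|
| id | | ·> | ·> | ·> |
| + | <· | ·> | <· | ·> |
| * | <· | ·> | ·> | ·> |
| $ | <· | <· | <· | |

| | + | * | id | $ |
|---|---|---|---|---|
| f | 2 | 4 | 4 | 0 |
| g | 1 | 3 | 5 | 0 |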

Detecting and Handling Errors • Errors can occur at two points: – If no precedence relation holds between the terminal on top of stack and current input – If a handle has been found, but no production is found with this handle as right side • Errors during reductions can be handled with diagnostic message • Errors due to lack of precedence relation can be handled by recovery routines specified in table

LR Parsers • LR parsers use an efficient, bottom-up parsing technique useful for a large class of CFGs • Too difficult to construct by hand, but automatic generators to create them exist (e.g., Yacc) • LR(k) grammars – “L” refers to left-to-right scanning of input – “R” refers to rightmost derivation (produced in reverse order) – “k” refers to the number of lookahead symbols needed for decisions (if omitted, assumed to be 1)

Benefits of LR Parsing • Can be constructed to recognize virtually all programming language constructs for which a CFG can be written • Most general non-backtracking shift-reduce parsing method known • Can be implemented efficiently • Handles a class of grammars that is a superset of those handled by predictive parsing • Can detect syntactic errors as soon as possible with a left-to-right scan of input

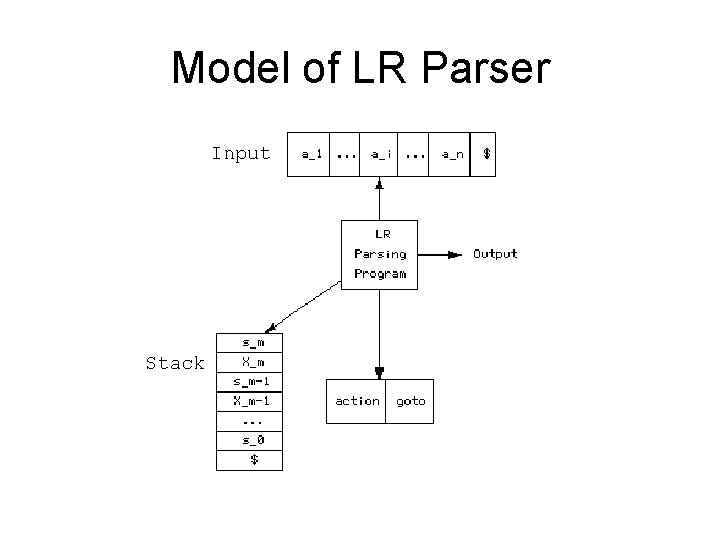

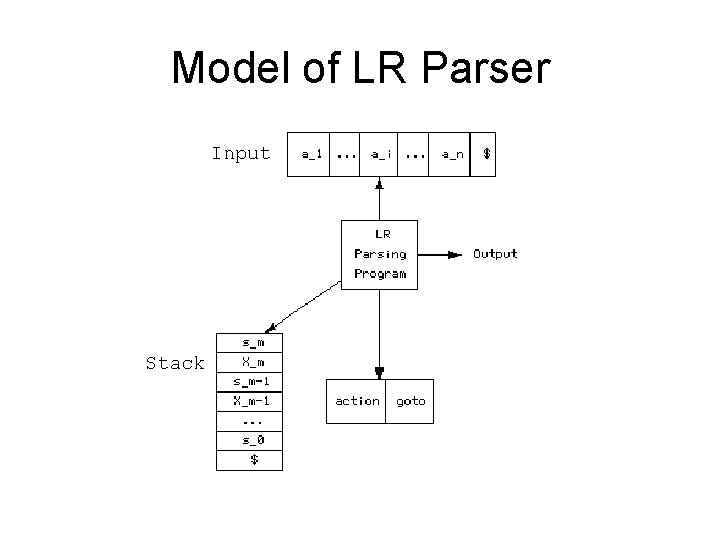

Model of LR Parser (figure labels: Input, Stack)

LR Parser (1) • Driver program is the same for all LR Parsers • Stack consists of states (si) and grammar symbols (Xi) – Each state summarizes information contained in stack below it – Grammar symbols do not actually need to be stored on stack in most implementations • State symbol on top of stack and next input symbol used to determine shift/reduce decision

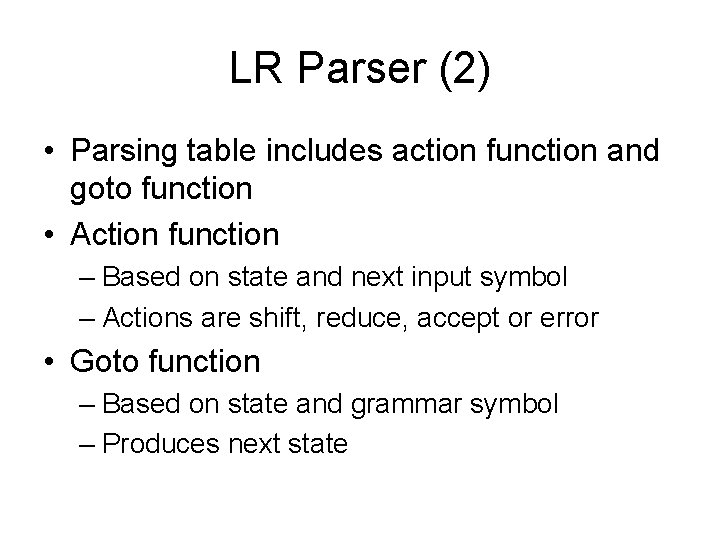

LR Parser (2) • Parsing table includes action function and goto function • Action function – Based on state and next input symbol – Actions are shift, reduce, accept or error • Goto function – Based on state and grammar symbol – Produces next state

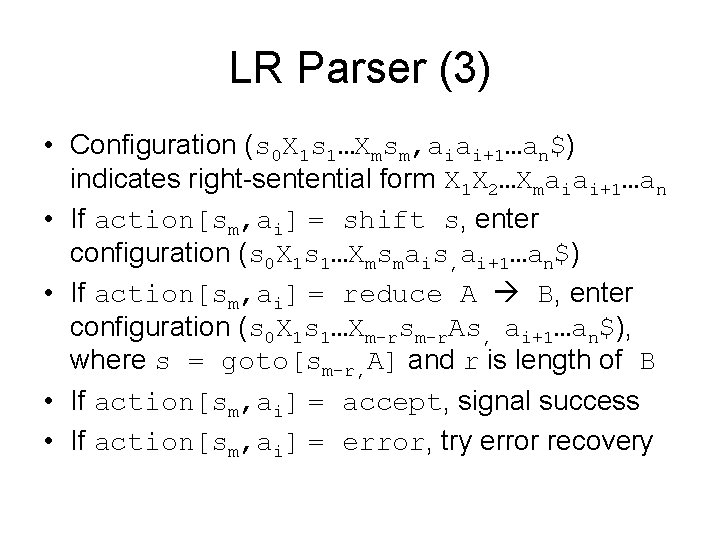

LR Parser (3) • Configuration (s0 X1 s1 … Xm sm, ai ai+1 … an $) indicates right-sentential form X1 X2 … Xm ai ai+1 … an • If action[sm, ai] = shift s, enter configuration (s0 X1 s1 … Xm sm ai s, ai+1 … an $) • If action[sm, ai] = reduce A → β, enter configuration (s0 X1 s1 … Xm-r sm-r A s, ai ai+1 … an $), where s = goto[sm-r, A] and r is the length of β (a reduction consumes no input) • If action[sm, ai] = accept, signal success • If action[sm, ai] = error, try error recovery

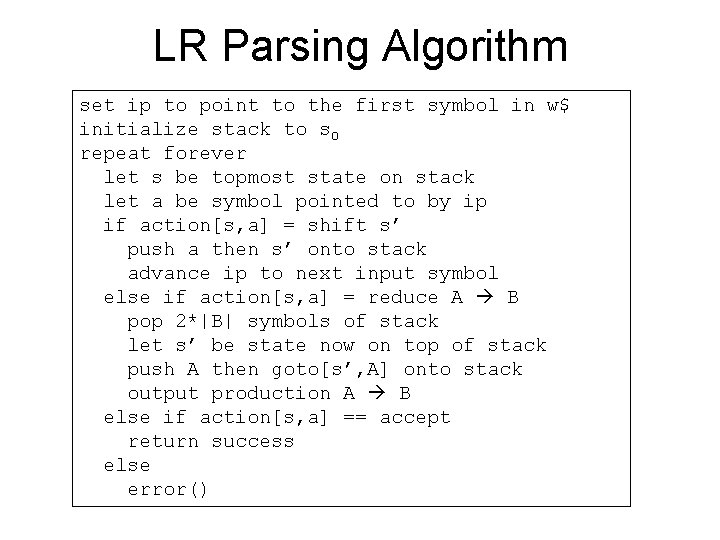

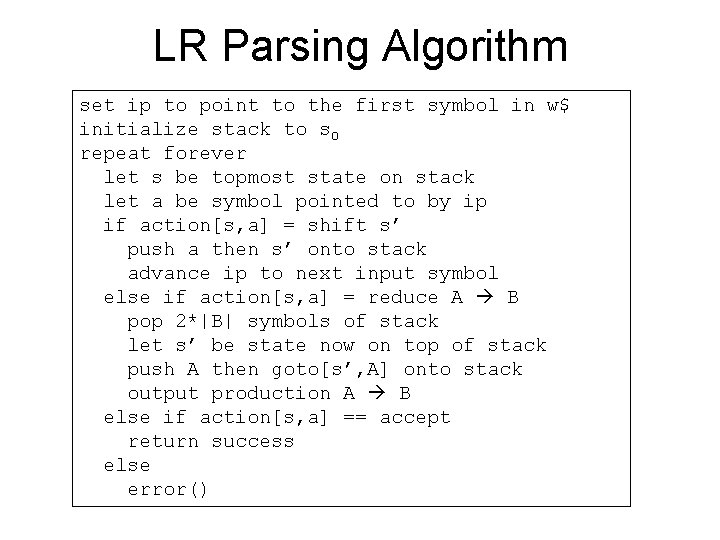

LR Parsing Algorithm

    set ip to point to the first symbol in w$
    initialize stack to s0
    repeat forever
        let s be the topmost state on the stack
        let a be the symbol pointed to by ip
        if action[s, a] = shift s’
            push a then s’ onto the stack
            advance ip to the next input symbol
        else if action[s, a] = reduce A → β
            pop 2*|β| symbols off the stack
            let s’ be the state now on top of the stack
            push A then goto[s’, A] onto the stack
            output the production A → β
        else if action[s, a] = accept
            return success
        else
            error()

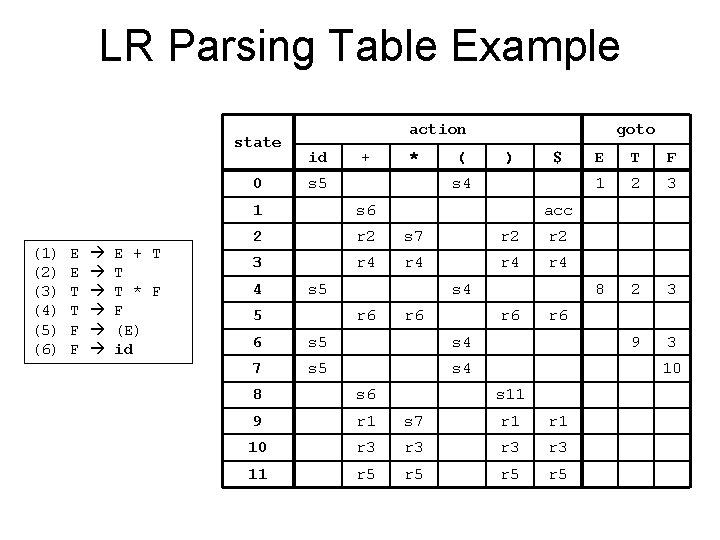

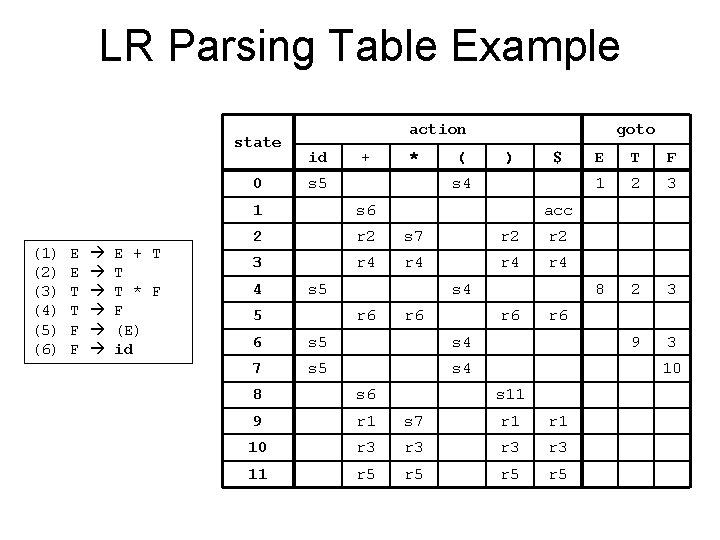

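LR Parsing Table Example • Grammar: (1) E → E + T (2) E → T (3) T → T * F (4) T → F (5) F → (E) (6) F → id • Columns id through $ are the action function; columns E, T, F are the goto function

| State | id | + | * | ( | ) | $ | E | T | F |
|---|---|---|---|---|---|---|---|---|---|
| 0 | s5 | | | s4 | | | 1 | 2 | 3 |
| 1 | | s6 | | | | acc | | | |
| 2 | | r2 | s7 | | r2 | r2 | | | |
| 3 | | r4 | r4 | | r4 | r4 | | | |
| 4 | s5 | | | s4 | | | 8 | 2 | 3 |
| 5 | | r6 | r6 | | r6 | r6 | | | |
| 6 | s5 | | | s4 | | | | 9 | 3 |
| 7 | s5 | | | s4 | | | | | 10 |
| 8 | | s6 | | | s11 | | | | |
| 9 | | r1 | s7 | | r1 | r1 | | | |
| 10 | | r3 | r3 | | r3 | r3 | | | |
| 11 | | r5 | r5 | | r5 | r5 | | | |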

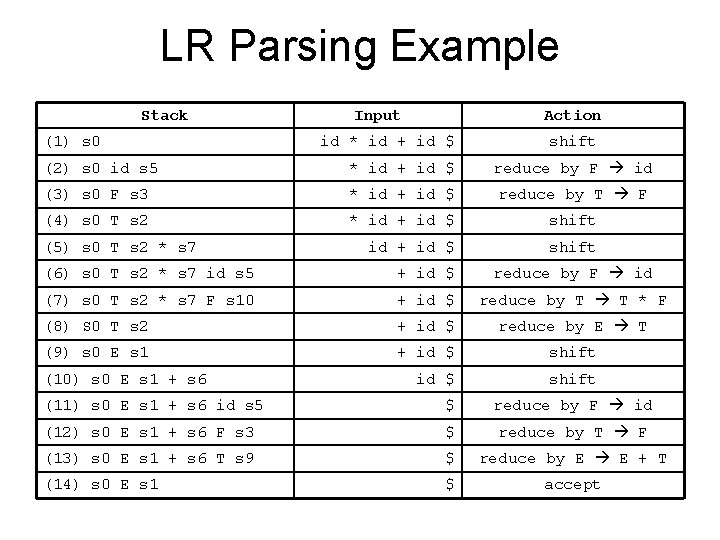

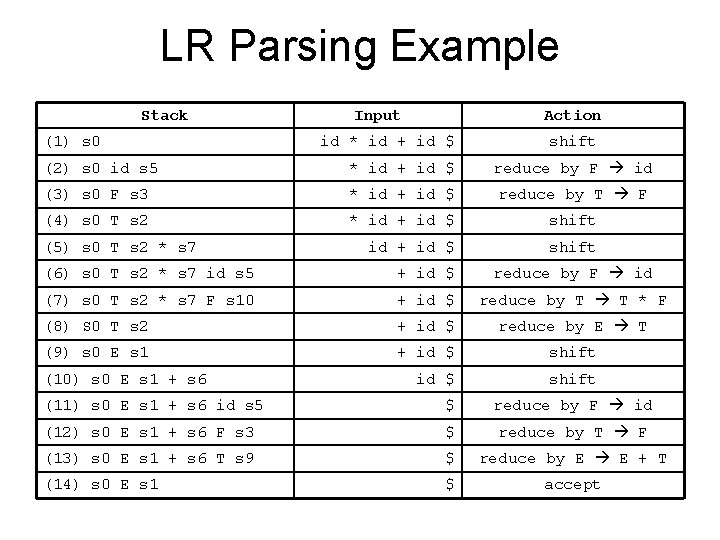

LR Parsing Example

| Step | Stack | Input | Action |
|---|---|---|---|
| (1) | s0 | id * id + id $ | shift |
| (2) | s0 id s5 | * id + id $ | reduce by F → id |
| (3) | s0 F s3 | * id + id $ | reduce by T → F |
| (4) | s0 T s2 | * id + id $ | shift |
| (5) | s0 T s2 * s7 | id + id $ | shift |
| (6) | s0 T s2 * s7 id s5 | + id $ | reduce by F → id |
| (7) | s0 T s2 * s7 F s10 | + id $ | reduce by T → T * F |
| (8) | s0 T s2 | + id $ | reduce by E → T |
| (9) | s0 E s1 | + id $ | shift |
| (10) | s0 E s1 + s6 | id $ | shift |
| (11) | s0 E s1 + s6 id s5 | $ | reduce by F → id |
| (12) | s0 E s1 + s6 F s3 | $ | reduce by T → F |
| (13) | s0 E s1 + s6 T s9 | $ | reduce by E → E + T |
| (14) | s0 E s1 | $ | accept |
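The LR driver is small once the action and goto tables exist. The sketch below hard-codes the tables for the six-production expression grammar. The names `ACTION`, `GOTO`, `PRODUCTIONS`, and `lr_parse`, and the string encoding of entries such as "s5" and "r2", are assumptions. Following the note that grammar symbols need not be stored, the stack holds states only, so a reduction pops |β| entries instead of 2*|β|.

```python
# Production number -> (left side, length of right side)
PRODUCTIONS = {1: ("E", 3), 2: ("E", 1), 3: ("T", 3),
               4: ("T", 1), 5: ("F", 3), 6: ("F", 1)}

ACTION = {
    0: {"id": "s5", "(": "s4"},
    1: {"+": "s6", "$": "acc"},
    2: {"+": "r2", "*": "s7", ")": "r2", "$": "r2"},
    3: {"+": "r4", "*": "r4", ")": "r4", "$": "r4"},
    4: {"id": "s5", "(": "s4"},
    5: {"+": "r6", "*": "r6", ")": "r6", "$": "r6"},
    6: {"id": "s5", "(": "s4"},
    7: {"id": "s5", "(": "s4"},
    8: {"+": "s6", ")": "s11"},
    9: {"+": "r1", "*": "s7", ")": "r1", "$": "r1"},
    10: {"+": "r3", "*": "r3", ")": "r3", "$": "r3"},
    11: {"+": "r5", "*": "r5", ")": "r5", "$": "r5"},
}
GOTO = {0: {"E": 1, "T": 2, "F": 3}, 4: {"E": 8, "T": 2, "F": 3},
        6: {"T": 9, "F": 3}, 7: {"F": 10}}

def lr_parse(tokens):
    """Return the production numbers used, in the order they are reduced."""
    tokens = list(tokens) + ["$"]
    stack = [0]                        # states only
    ip = 0
    output = []
    while True:
        act = ACTION[stack[-1]].get(tokens[ip])
        if act is None:
            raise SyntaxError(f"unexpected {tokens[ip]!r} in state {stack[-1]}")
        if act == "acc":
            return output
        if act[0] == "s":              # shift: push new state, advance input
            stack.append(int(act[1:]))
            ip += 1
        else:                          # reduce by production n
            n = int(act[1:])
            lhs, length = PRODUCTIONS[n]
            del stack[-length:]
            stack.append(GOTO[stack[-1]][lhs])
            output.append(n)

print(lr_parse(["id", "*", "id", "+", "id"]))
```

Read in reverse, the list of production numbers is a rightmost derivation of the input, which is the sense in which the "R" in LR is produced in reverse order.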

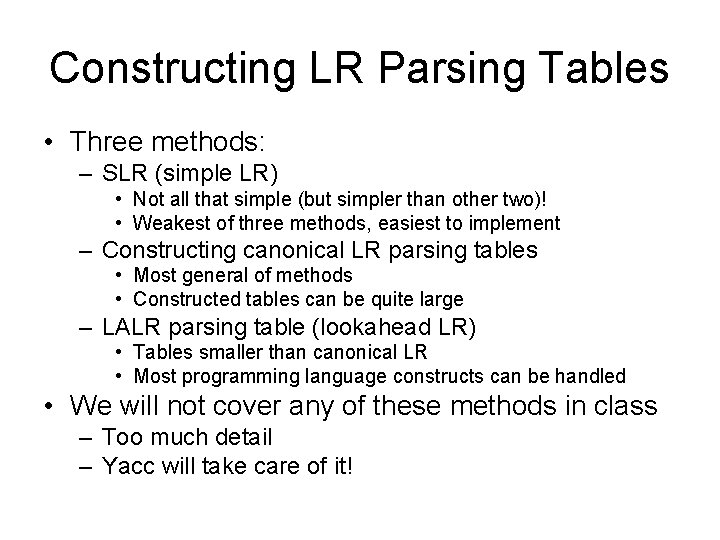

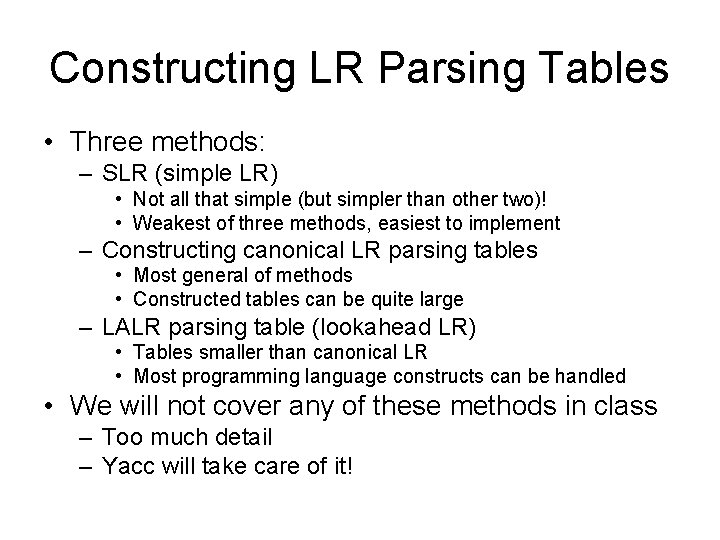

Constructing LR Parsing Tables • Three methods: – SLR (simple LR) • Not all that simple (but simpler than other two)! • Weakest of three methods, easiest to implement – Constructing canonical LR parsing tables • Most general of methods • Constructed tables can be quite large – LALR parsing table (lookahead LR) • Tables smaller than canonical LR • Most programming language constructs can be handled • We will not cover any of these methods in class – Too much detail – Yacc will take care of it!