TOPIC 3 COMPUTER ARCHITECTURE AND THE FETCH-EXECUTE CYCLE

- Slides: 8

CONTENT:
3.1 Von Neumann architecture
3.2 Registers: purpose and use
3.3 Fetch-execute cycle
3.4 Parallel processors

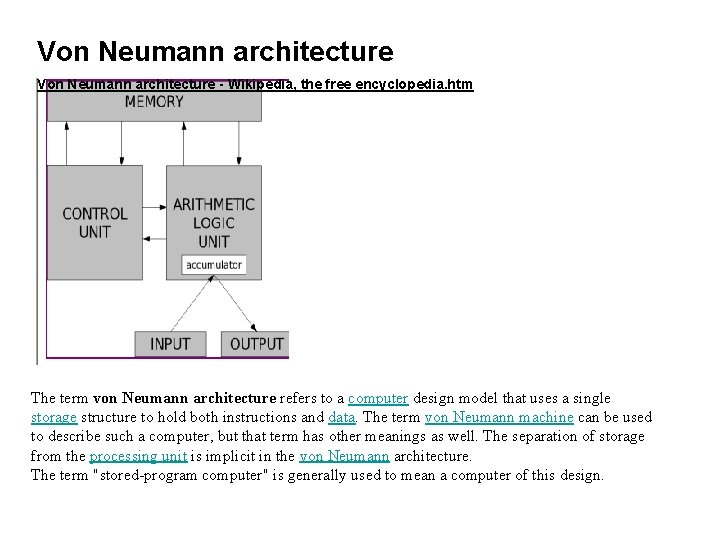

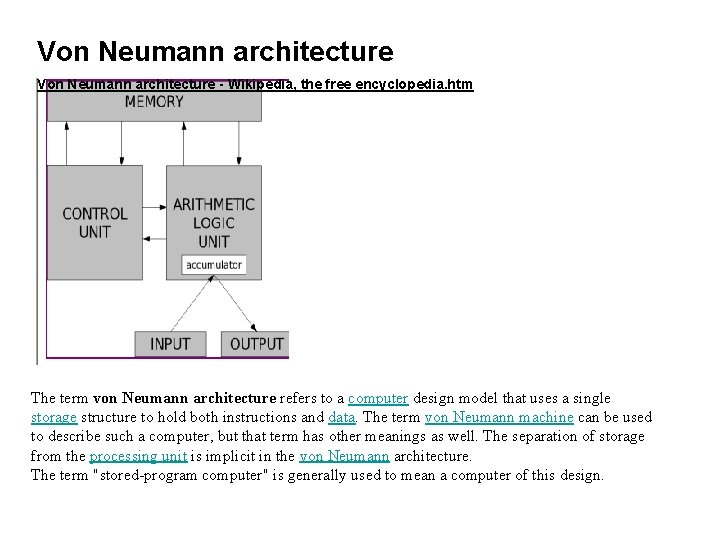

Von Neumann architecture (source: Wikipedia)
The term von Neumann architecture refers to a computer design model that uses a single storage structure to hold both instructions and data. The term von Neumann machine can be used to describe such a computer, but that term has other meanings as well. The separation of storage from the processing unit is implicit in the von Neumann architecture. The term "stored-program computer" is generally used to mean a computer of this design.

The earliest computing machines had fixed programs. Some very simple computers still use this design, either for simplicity or for training purposes. For example, a desk calculator is (in principle) a fixed-program computer: it can do basic mathematics, but it cannot be used as a word processor or to run video games. To change the program of such a machine, you have to re-wire, re-structure, or even re-design it. Indeed, the earliest computers were not so much "programmed" as "designed". "Reprogramming", when it was possible at all, was a very manual process, starting with flow charts and paper notes, followed by detailed engineering designs, and then the often arduous process of implementing the physical changes.

The idea of the stored-program computer changed all that. By creating an instruction set architecture and expressing the computation as a series of instructions (the program), the machine becomes much more flexible. By treating those instructions in the same way as data, a stored-program machine can easily change the program, and can do so under program control. The terms "von Neumann architecture" and "stored-program computer" are generally used interchangeably, and these notes follow that usage. By contrast, the Harvard architecture stores the program in an easily modifiable form, but not in the same storage as general data.
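The stored-program idea can be illustrated with a small Python sketch of a hypothetical machine. The instruction set (HALT, LOAD, ADD, STORE), the encoding `opcode * 100 + address` and the names `run` and `program` are invented for illustration and do not come from any real computer. Because instructions and data share one memory list, the program can overwrite one of its own instructions with an ordinary STORE, which is what "changing the program under program control" means.

```python
# Hypothetical encoding (illustration only): word = opcode * 100 + address.
HALT, LOAD, ADD, STORE = 0, 1, 2, 3

def run(memory):
    """Run the program stored in `memory` from address 0; return the accumulator."""
    acc, pc = 0, 0
    while True:
        opcode, address = divmod(memory[pc], 100)
        pc += 1
        if opcode == HALT:
            return acc
        if opcode == LOAD:
            acc = memory[address]
        elif opcode == ADD:
            acc += memory[address]
        elif opcode == STORE:
            memory[address] = acc  # may overwrite data *or* an instruction
        else:
            raise ValueError(f"unknown opcode {opcode} at address {pc - 1}")

def program():
    """Instructions and data side by side in a single memory."""
    return [
        105,  # 0: LOAD 5   acc <- word at address 5 (it encodes "ADD 7")
        303,  # 1: STORE 3  copy that word over the instruction at address 3
        106,  # 2: LOAD 6   acc <- 10
        0,    # 3: HALT     (replaced at run time by "ADD 7")
        0,    # 4: HALT
        207,  # 5: data that happens to be the instruction "ADD 7"
        10,   # 6: data
        5,    # 7: data
    ]

mem = program()
print(run(mem), mem[3])  # 15 207: the program rewrote its own instruction 3
```

Without the STORE at address 1, execution would stop at the HALT in address 3 with an accumulator of 10; no rewiring is needed to get the different behaviour.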

Von Neumann bottleneck
The separation between the CPU and memory leads to what is known as the von Neumann bottleneck. The bandwidth (data transfer rate) between the CPU and memory is very small in comparison with the amount of memory. In modern machines, it is also very small in comparison with the rate at which the CPU itself can work. Under some circumstances (when the CPU is required to perform minimal processing on large amounts of data), this seriously limits overall effective processing speed: the CPU is continually forced to wait for data to be transferred to or from memory. As CPU speed and memory size have increased much faster than the bandwidth between the two, the bottleneck has become more and more of a problem.
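The effect of the bottleneck can be estimated with a simplified model in the spirit of the roofline model: the CPU cannot run faster than its own peak rate, nor faster than memory can supply operands. The sketch below assumes hypothetical figures (a peak of 1e11 operations per second and 2e10 bytes per second of CPU-memory bandwidth) and ignores caches, so it only illustrates the trend described above; the function name is also hypothetical.

```python
# Simple bound on achievable speed when every operand must come from memory.
# The hardware figures below are hypothetical, for illustration only.

def attainable_ops_per_s(peak_ops_per_s, bandwidth_bytes_per_s, ops_per_byte):
    """The CPU can go no faster than its own peak, nor faster than memory
    can deliver operands (bandwidth x operations performed per byte)."""
    return min(peak_ops_per_s, bandwidth_bytes_per_s * ops_per_byte)

PEAK = 100e9       # hypothetical: 1e11 operations per second
BANDWIDTH = 20e9   # hypothetical: 2e10 bytes per second between CPU and memory

# Minimal processing: summing 8-byte numbers, 1 addition per 8 bytes loaded.
light = attainable_ops_per_s(PEAK, BANDWIDTH, 1 / 8)
# Heavy processing: 50 operations on every 8-byte value loaded.
heavy = attainable_ops_per_s(PEAK, BANDWIDTH, 50 / 8)

print(f"light processing: {light / PEAK:.1%} of peak")  # 2.5% of peak
print(f"heavy processing: {heavy / PEAK:.1%} of peak")  # 100.0% of peak
```

In the light case the CPU spends most of its time waiting for memory, which is exactly the situation the bottleneck paragraph describes.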

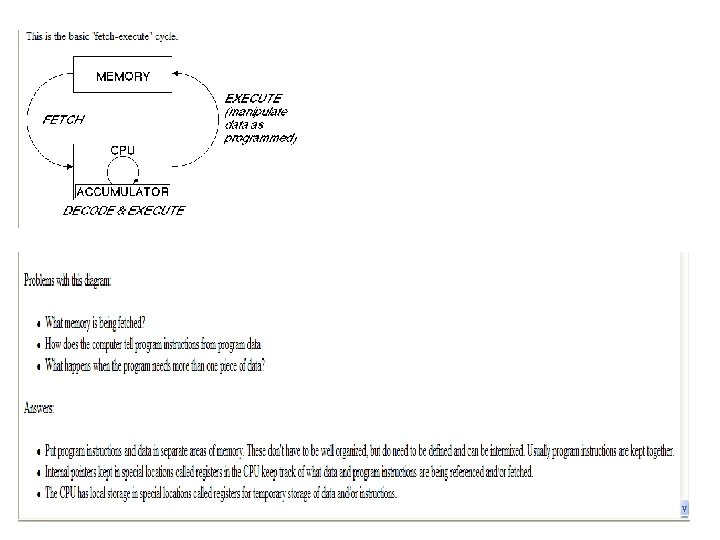

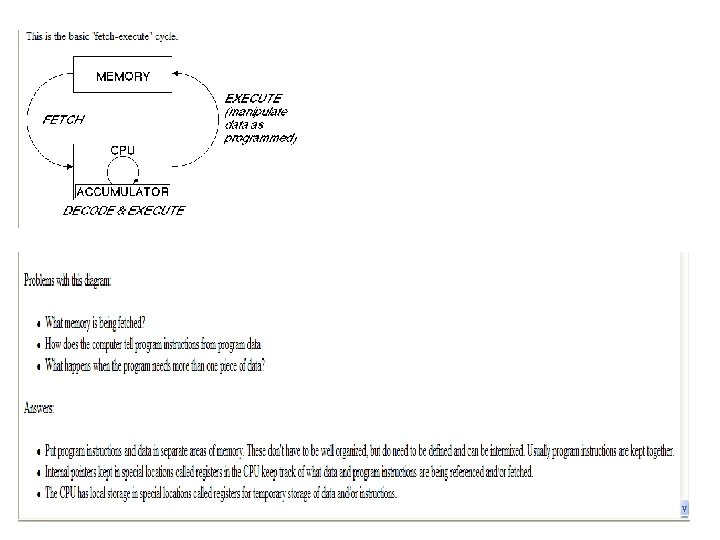

Basic computer operation and organization
From an engineering viewpoint, a computer manipulates coded data and responds to events occurring in the external world. A computer that keeps its program in memory alongside its data is called a stored-program, or von Neumann, machine. Memory stores both program instructions and data (this is the core of the von Neumann architecture). Program instructions are coded data that tell the computer to do something, e.g. add two numbers together. Data is simply information used by the program, e.g. the two numbers to be added. A central processing unit (CPU) fetches instructions and/or data from memory, decodes the instructions, and performs a sequence of programmed tasks. (Something has to interpret the contents of memory and determine what represents instructions and what represents data; this is the CPU's purpose.) In this basic model, nothing happens simultaneously or instantaneously: every operation takes time, and operations occur one after another. The important operations are:
- fetching instruction(s) from memory
- decoding the instruction(s)
- performing the indicated operations

Registers: purpose and use
A register is a sequential circuit with n + 1 inputs (not counting the clock) and n outputs. Each output has a corresponding input. The first n inputs are called $x_0$ through $x_{n-1}$ and the last input is called ld (for load). The n outputs are called $y_0$ through $y_{n-1}$. When the ld input is 0, the outputs are unaffected by any clock transition. When the ld input is 1, the x inputs are stored in the register at the next clock transition, so the y outputs become copies of the values the x inputs had just before that transition.
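The register's behaviour can be modelled in Python at the level of its inputs and outputs, assuming that one call to the hypothetical `clock` method stands for one clock transition and that the register starts cleared. This is a behavioural sketch, not a gate-level circuit, and it ignores timing details.

```python
class Register:
    """Behavioural model of an n-bit register with inputs x0..x(n-1) and ld."""

    def __init__(self, n):
        self.n = n
        self.y = [0] * n  # outputs y0..y(n-1); starting cleared is an assumption

    def clock(self, x, ld):
        """Apply one clock transition; return a copy of the outputs afterwards."""
        if len(x) != self.n:
            raise ValueError(f"expected {self.n} input bits, got {len(x)}")
        if ld == 1:
            # Load: outputs become copies of the x values present before the edge.
            self.y = list(x)
        # ld == 0: the transition leaves the outputs unchanged.
        return list(self.y)

r = Register(4)
r.clock([1, 0, 1, 1], ld=1)  # ld = 1: store the inputs
r.clock([0, 0, 0, 0], ld=0)  # ld = 0: new inputs are ignored
print(r.y)  # [1, 0, 1, 1]
```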

Parallel processors
Parallel processing is the ability of a computer system to undertake more than one task simultaneously.
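One common way to use parallel processors is to split a job into independent tasks and hand them to several workers at once. The Python sketch below (the function names are hypothetical) sums a list by giving each worker one slice through the standard `concurrent.futures` module. Note that with `ThreadPoolExecutor` in CPython, the global interpreter lock means pure-Python work is interleaved rather than truly simultaneous; for CPU-bound work, `ProcessPoolExecutor`, which offers the same `map` interface, is the usual way to use several processors.

```python
from concurrent.futures import ThreadPoolExecutor

def chunk_sum(chunk):
    """One independent task: sum one slice of the data."""
    return sum(chunk)

def parallel_sum(data, workers=4):
    """Split `data` into at most `workers` slices and sum them concurrently."""
    if workers < 1:
        raise ValueError("workers must be at least 1")
    size = max(1, -(-len(data) // workers))  # ceiling division, never 0
    chunks = [data[i:i + size] for i in range(0, len(data), size)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partial_sums = list(pool.map(chunk_sum, chunks))  # results in input order
    return sum(partial_sums)  # combine the partial results

print(parallel_sum(list(range(1, 101))))  # 5050
```

The final combining step runs on one worker only; how much of a job can be split into independent tasks limits how much parallel processors can help.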