Today's Topics: Learning Without a Teacher, K-Means Clustering

- Slides: 15

Today’s Topics: Learning Without a Teacher

- K-Means Clustering
- Hierarchical Clustering
- Expectation-Maximization (using NB)
- Auto-Association ANNs (formerly heavily used in Deep Neural Networks)
- Read Section 20.3.1 of Russell & Norvig plus the "Standard Algorithm" section of the Wikipedia article on K-Means Clustering

12/8/16 cs 540 - Fall 2015 (Shavlik©), Lecture 25, Week 14

No Teacher: Unsupervised ML

- Data abounds but labels are hard to get. Recall where labels can come from:
  - Experts (sometimes that is us)
  - Time-will-tell can produce training labels (eg, patients who responded well to drug X)
  - “The crowd” (eg, Amazon Turk, hobbyists annotating sky images) can label
- What might we do with unlabeled data?
  - It tells us where future data will lie in feature space
  - We might want algorithms to group/cluster data

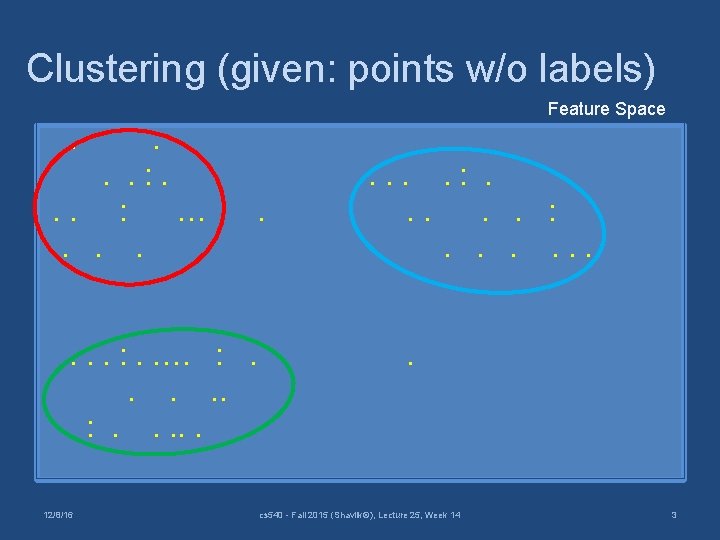

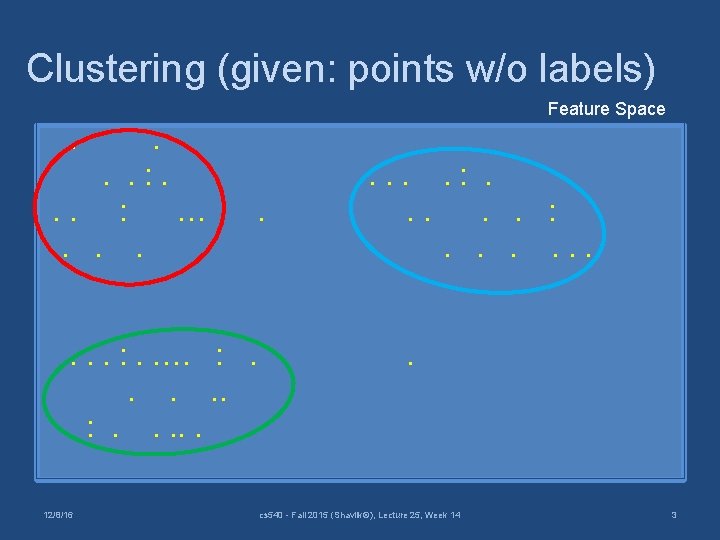

Clustering (given: points w/o labels)

[Figure: unlabeled points scattered in Feature Space]

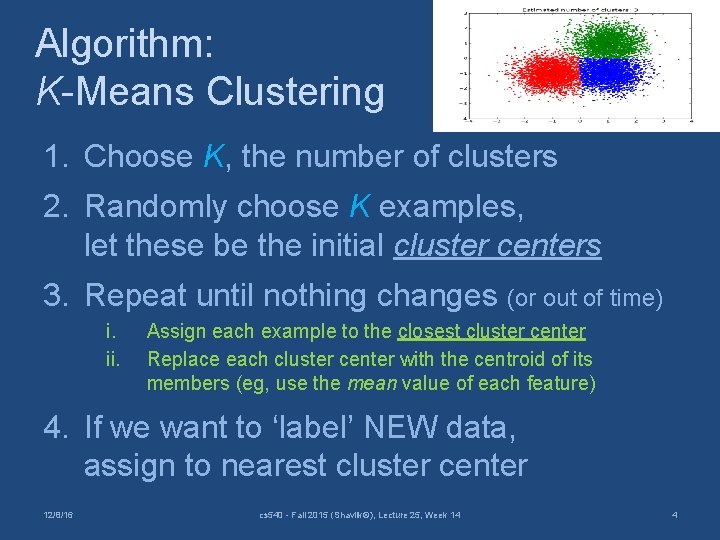

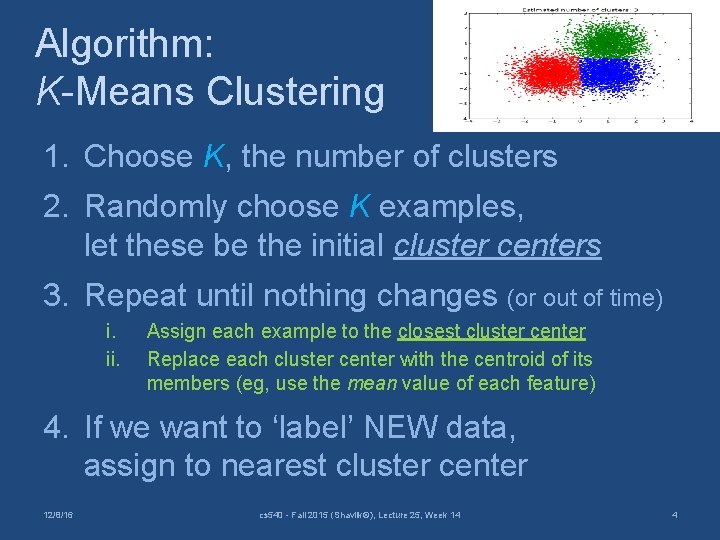

Algorithm: K-Means Clustering

1. Choose K, the number of clusters
2. Randomly choose K examples, let these be the initial cluster centers
3. Repeat until nothing changes (or out of time)
   1. Assign each example to the closest cluster center
   2. Replace each cluster center with the centroid of its members (eg, use the mean value of each feature)
4. If we want to ‘label’ NEW data, assign to nearest cluster center
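The K-Means steps above can be sketched in a few lines of Python. This is a minimal illustration, not the course's reference code: the function names (`k_means`, `nearest_center`), the use of squared Euclidean distance, the fixed `seed`, and the rule that an empty cluster keeps its old center are all assumptions made here.

```python
import random

def squared_distance(p, q):
    """Squared Euclidean distance between two feature vectors."""
    return sum((a - b) ** 2 for a, b in zip(p, q))

def nearest_center(point, centers):
    """Index of the cluster center closest to `point` (step 3.1 and step 4)."""
    return min(range(len(centers)), key=lambda k: squared_distance(point, centers[k]))

def k_means(points, k, max_iters=100, seed=0):
    rng = random.Random(seed)
    # Step 2: randomly choose K examples as the initial cluster centers.
    centers = [list(p) for p in rng.sample(points, k)]
    assignment = None
    for _ in range(max_iters):  # Step 3: repeat until nothing changes (or out of time).
        new_assignment = [nearest_center(p, centers) for p in points]
        if new_assignment == assignment:
            break
        assignment = new_assignment
        for c in range(k):
            members = [p for p, a in zip(points, assignment) if a == c]
            if members:  # assumption: an empty cluster keeps its previous center
                centers[c] = [sum(col) / len(members) for col in zip(*members)]
    return centers, assignment

# Two well-separated groups of points in a 2-D feature space.
points = [(0.0, 0.0), (1.0, 1.0), (2.0, 2.0), (10.0, 10.0), (11.0, 11.0), (12.0, 12.0)]
centers, assignment = k_means(points, k=2)

# Step 4: 'label' a NEW example by its nearest cluster center.
new_label = nearest_center((9.0, 9.5), centers)
```

Which cluster gets index 0 depends on the random initialization, so only the grouping is meaningful, not the cluster numbers themselves.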

Some On-Line Visualizations

- Very short video (13 seconds): https://www.youtube.com/watch?v=gSt4_kcZPxE
- Short video (140 seconds): https://www.youtube.com/watch?v=BVFG7fd1H30

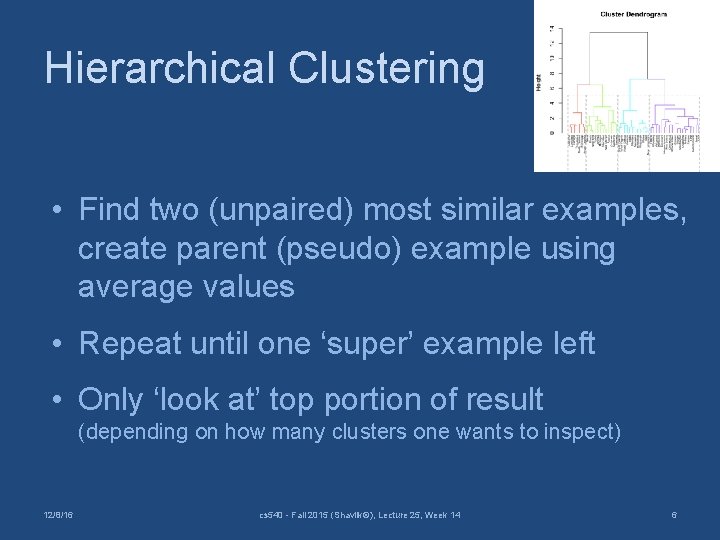

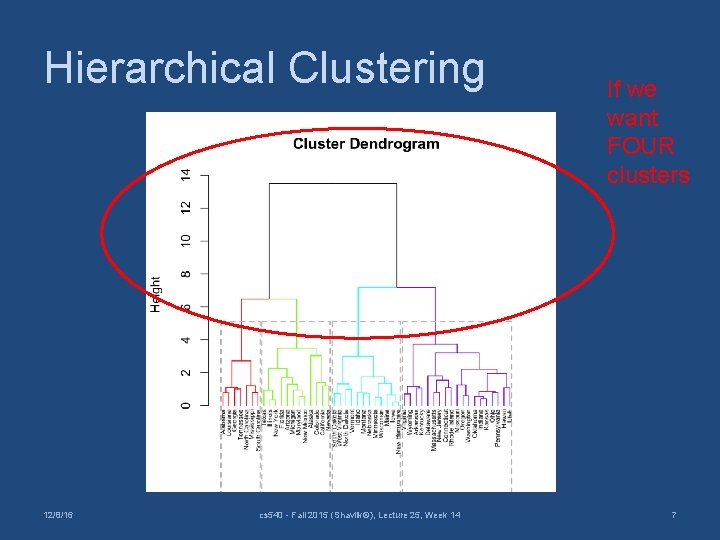

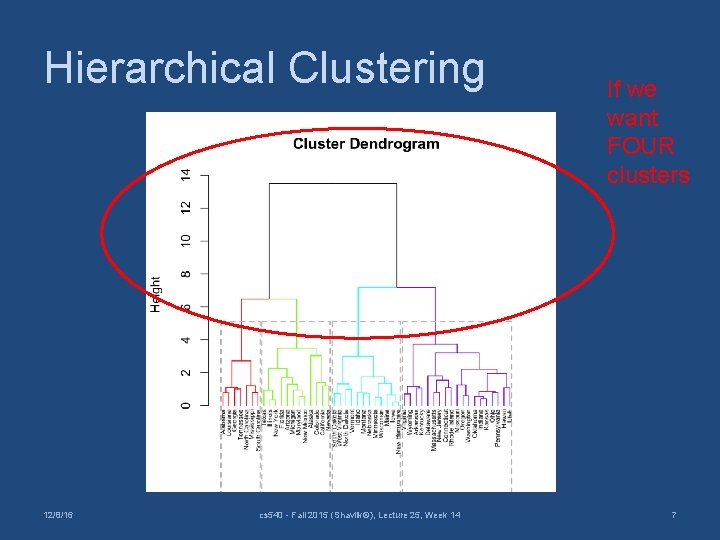

Hierarchical Clustering

- Find the two (unpaired) most similar examples, create a parent (pseudo) example using average values
- Repeat until one ‘super’ example is left
- Only ‘look at’ the top portion of the result (depending on how many clusters one wants to inspect)
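A small Python sketch of this bottom-up procedure follows. It rests on several assumptions not stated on the slide: similarity is measured by squared Euclidean distance, the parent is the plain (unweighted) average of its two children, and "looking at the top portion" is implemented by stopping the merging once `num_clusters` nodes remain. The function name `agglomerate` is hypothetical.

```python
from itertools import combinations

def squared_distance(p, q):
    return sum((a - b) ** 2 for a, b in zip(p, q))

def agglomerate(points, num_clusters=1):
    """Merge the two most similar unpaired nodes until `num_clusters` remain.

    Returns, for each remaining node, the sorted indices of the original
    examples beneath it.
    """
    # Each active (unpaired) node is (feature vector, indices of member examples).
    active = [(list(p), [i]) for i, p in enumerate(points)]
    while len(active) > num_clusters:
        # Find the two most similar unpaired nodes.
        i, j = min(
            combinations(range(len(active)), 2),
            key=lambda ij: squared_distance(active[ij[0]][0], active[ij[1]][0]),
        )
        (vec_i, mem_i), (vec_j, mem_j) = active[i], active[j]
        # Create the parent pseudo-example from the average feature values.
        parent = ([(a + b) / 2 for a, b in zip(vec_i, vec_j)], mem_i + mem_j)
        active = [n for idx, n in enumerate(active) if idx not in (i, j)] + [parent]
    return [sorted(members) for _, members in active]

points = [(0, 0), (0, 1), (5, 5), (5, 6), (20, 20), (40, 0)]
four_clusters = agglomerate(points, num_clusters=4)
one_super_example = agglomerate(points, num_clusters=1)
```

Stopping early gives the same groups as building the full tree and cutting it near the top, since the merges happen in the same order either way.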

Hierarchical Clustering (if we want FOUR clusters)

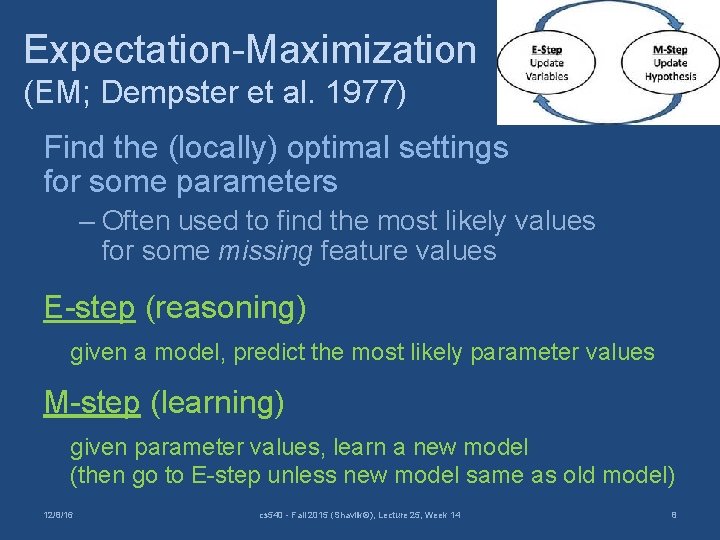

Expectation-Maximization (EM; Dempster et al. 1977)

Find the (locally) optimal settings for some parameters

- Often used to find the most likely values for some missing feature values
- E-step (reasoning): given a model, predict the most likely parameter values
- M-step (learning): given parameter values, learn a new model (then go to E-step unless the new model is the same as the old model)

EM: One Concrete Usage

Assume our missing parameter is the LABEL for all our examples (ie, unsupervised learning)

1. Initially guess labels for each example
2. Train Naïve Bayes using these now labeled examples
3. Use the Naïve Bayes model to predict the labels for all the (originally) unlabeled ex’s
4. If any labels changed, go to Step 2
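The four steps above can be sketched in Python for binary features and two categories. This is only an illustration under stated assumptions: the names `train_naive_bayes`, `predict`, and `em_with_nb` are hypothetical, labels are hard (each example gets exactly one label per round), and smoothing adds two pseudo-examples per category (one with every feature on, one with every feature off).

```python
def train_naive_bayes(examples, labels):
    """Step 2: estimate p(label) and p(feature=1 | label) from labeled examples."""
    model = {}
    n_features = len(examples[0])
    for label in (True, False):
        members = [x for x, y in zip(examples, labels) if y == label]
        # Assumption: 2 pseudo-examples per category (4 in total) for smoothing.
        prior = (len(members) + 2) / (len(examples) + 4)
        cond = [(sum(x[f] for x in members) + 1) / (len(members) + 2)
                for f in range(n_features)]
        model[label] = (prior, cond)
    return model

def predict(model, x):
    """Step 3: choose the label with the larger (unnormalized) NB score."""
    def score(label):
        prior, cond = model[label]
        s = prior
        for value, p in zip(x, cond):
            s *= p if value else (1 - p)
        return s
    return max((True, False), key=score)

def em_with_nb(examples, initial_labels, max_rounds=50):
    labels = list(initial_labels)           # Step 1: initial guess
    for _ in range(max_rounds):
        model = train_naive_bayes(examples, labels)
        new_labels = [predict(model, x) for x in examples]
        if new_labels == labels:            # Step 4: stop when no label changes
            break
        labels = new_labels
    return labels, model

# Tiny data set: each tuple is one example's (A, B, C) feature values.
examples = [(1, 1, 0), (0, 1, 0), (1, 0, 1), (1, 0, 0)]
labels, model = em_with_nb(examples, [True, False, True, True])
```

The `max_rounds` cap is a safeguard added here; the slide's loop simply repeats until the labels stop changing.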

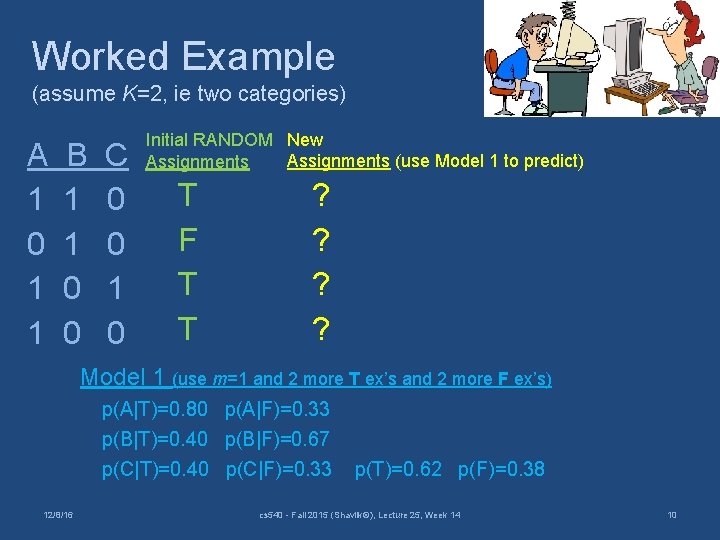

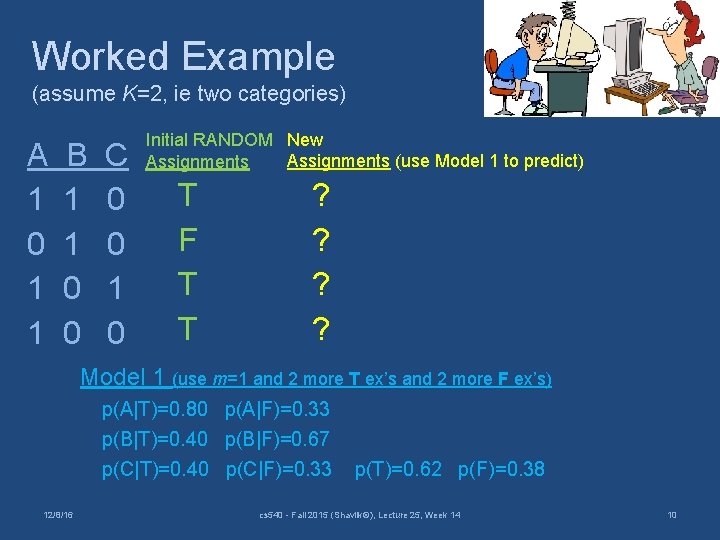

Worked Example (assume K=2, ie two categories)

| A | B | C | Initial RANDOM Assignments |
|---|---|---|---|
| 1 | 1 | 0 | T |
| 0 | 1 | 0 | F |
| 1 | 0 | 1 | T |
| 1 | 0 | 0 | T |

New Assignments (use Model 1 to predict): ? ?

Model 1 (use m=1 and 2 more T ex’s and 2 more F ex’s)

- p(A|T)=0.80, p(A|F)=0.33
- p(B|T)=0.40, p(B|F)=0.67
- p(C|T)=0.40, p(C|F)=0.33
- p(T)=0.62, p(F)=0.38
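One reading of the smoothing note that reproduces the Model 1 numbers (an assumption, since the slide does not spell it out) is that each category receives two pseudo-examples per feature, one with the feature on and one with it off. With three examples initially labeled T and one labeled F, the estimates work out as:

$$
\begin{aligned}
p(A \mid T) &= \frac{3 + 1}{3 + 2} = 0.80, & p(A \mid F) &= \frac{0 + 1}{1 + 2} \approx 0.33, \\
p(B \mid T) &= \frac{1 + 1}{3 + 2} = 0.40, & p(B \mid F) &= \frac{1 + 1}{1 + 2} \approx 0.67, \\
p(C \mid T) &= \frac{1 + 1}{3 + 2} = 0.40, & p(C \mid F) &= \frac{0 + 1}{1 + 2} \approx 0.33, \\
p(T) &= \frac{3 + 2}{4 + 4} = 0.625, & p(F) &= \frac{1 + 2}{4 + 4} = 0.375 .
\end{aligned}
$$

The priors 0.625 and 0.375 appear on the slide as 0.62 and 0.38.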

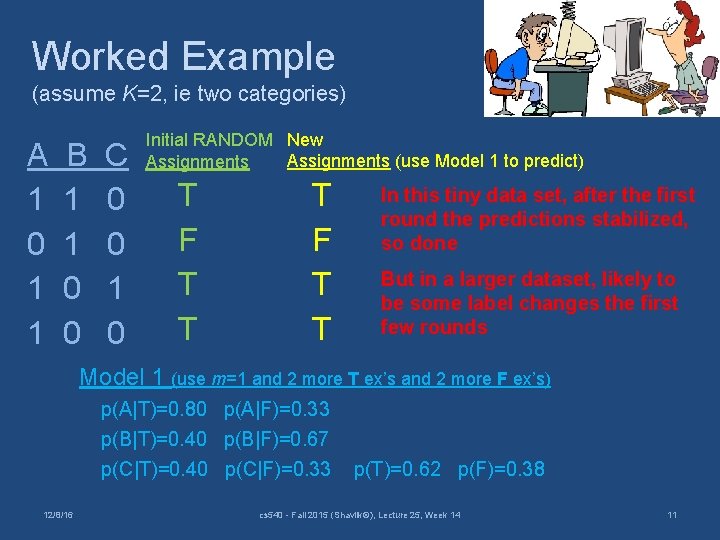

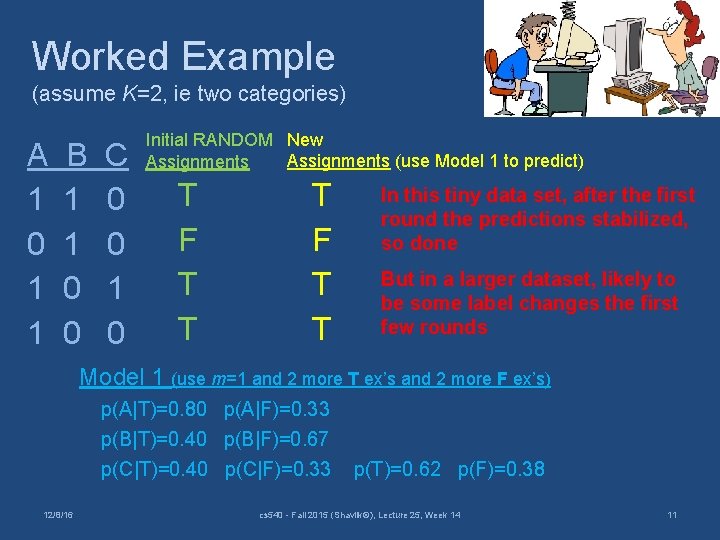

Worked Example (assume K=2, ie two categories)

| A | B | C | Initial RANDOM Assignments | New Assignments (use Model 1 to predict) |
|---|---|---|---|---|
| 1 | 1 | 0 | T | T |
| 0 | 1 | 0 | F | F |
| 1 | 0 | 1 | T | T |
| 1 | 0 | 0 | T | T |

In this tiny data set, the predictions stabilized after the first round, so we are done. But in a larger dataset, there are likely to be some label changes in the first few rounds.

Model 1 (use m=1 and 2 more T ex’s and 2 more F ex’s)

- p(A|T)=0.80, p(A|F)=0.33
- p(B|T)=0.40, p(B|F)=0.67
- p(C|T)=0.40, p(C|F)=0.33
- p(T)=0.62, p(F)=0.38
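The new assignments can be checked by hand with the usual Naïve Bayes score, the prior times one factor per feature, using $p(X \mid \cdot)$ when the feature is 1 and $1 - p(X \mid \cdot)$ when it is 0. The sketch below uses the unrounded Model 1 values (5/8, 4/5, 1/3, and so on), which is an assumption about how the slide's rounded numbers were obtained:

$$
\begin{aligned}
(1,1,0):\quad & \tfrac{5}{8}\cdot\tfrac{4}{5}\cdot\tfrac{2}{5}\cdot\tfrac{3}{5} = 0.120 \;(T) & > \quad & \tfrac{3}{8}\cdot\tfrac{1}{3}\cdot\tfrac{2}{3}\cdot\tfrac{2}{3} \approx 0.056 \;(F) & \Rightarrow T \\
(0,1,0):\quad & \tfrac{5}{8}\cdot\tfrac{1}{5}\cdot\tfrac{2}{5}\cdot\tfrac{3}{5} = 0.030 \;(T) & < \quad & \tfrac{3}{8}\cdot\tfrac{2}{3}\cdot\tfrac{2}{3}\cdot\tfrac{2}{3} \approx 0.111 \;(F) & \Rightarrow F \\
(1,0,1):\quad & \tfrac{5}{8}\cdot\tfrac{4}{5}\cdot\tfrac{3}{5}\cdot\tfrac{2}{5} = 0.120 \;(T) & > \quad & \tfrac{3}{8}\cdot\tfrac{1}{3}\cdot\tfrac{1}{3}\cdot\tfrac{1}{3} \approx 0.014 \;(F) & \Rightarrow T \\
(1,0,0):\quad & \tfrac{5}{8}\cdot\tfrac{4}{5}\cdot\tfrac{3}{5}\cdot\tfrac{3}{5} = 0.180 \;(T) & > \quad & \tfrac{3}{8}\cdot\tfrac{1}{3}\cdot\tfrac{1}{3}\cdot\tfrac{2}{3} \approx 0.028 \;(F) & \Rightarrow T
\end{aligned}
$$

Each row lists an example's (A, B, C) values; the predicted labels T, F, T, T match the initial assignments, which is why the loop stops after one round.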

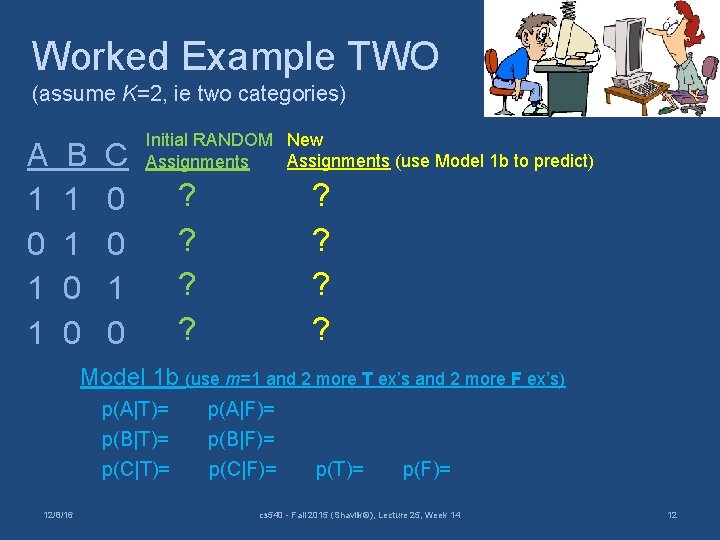

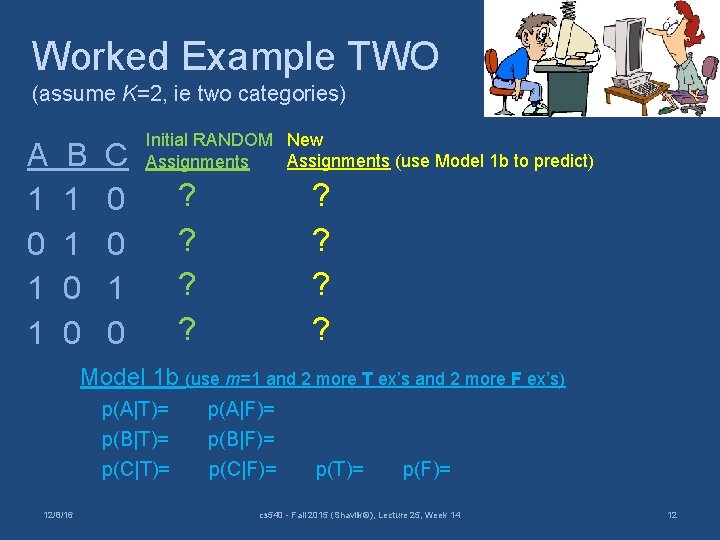

Worked Example TWO (assume K=2, ie two categories)

| A | B | C |
|---|---|---|
| 1 | 1 | 0 |
| 0 | 1 | 0 |
| 1 | 0 | 1 |
| 1 | 0 | 0 |

Initial RANDOM Assignments: ? ? ? ?

New Assignments (use Model 1b to predict):

Model 1b (use m=1 and 2 more T ex’s and 2 more F ex’s)

- p(A|T)= , p(A|F)=
- p(B|T)= , p(B|F)=
- p(C|T)= , p(C|F)=
- p(T)= , p(F)=

Some Notes on EM via NB

- Could have BOTH labeled and unlabeled data, called semi-supervised ML
- Could use the same idea to fill in missing feature values (a common use of EM): use that feature as the category to predict using the other features (might do this separately for POS and NEG examples)
- More mathematical treatments of EM:
  - https://en.wikipedia.org/wiki/Expectation%E2%80%93maximization_algorithm
  - http://www.cs.cmu.edu/~awm/15781/assignments/EM.pdf

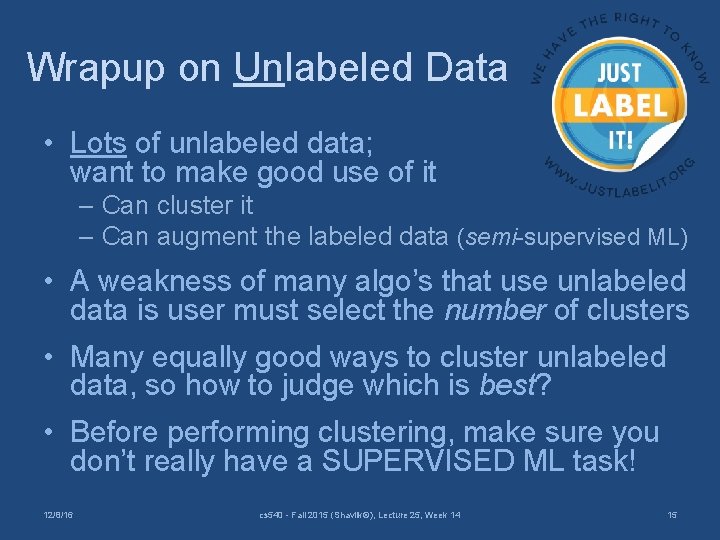

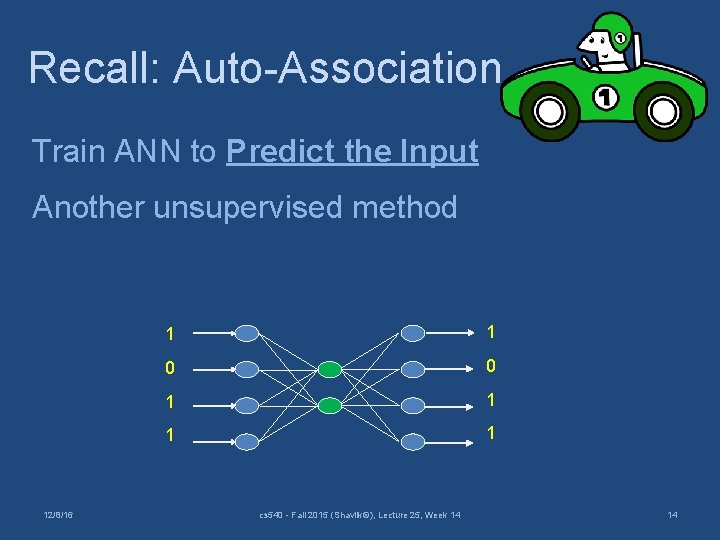

Recall: Auto-Association

- Train an ANN to predict the input
- Another unsupervised method

[Figure: ANN whose target output is its own input; bit values shown: 1 1 0 0 1 1]
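As a reminder of what "predict the input" means in practice, here is a minimal pure-Python sketch of an auto-associative network trained with backpropagation. Everything specific in it is an assumption chosen for illustration: one hidden layer of two sigmoid units, squared-error loss, per-example weight updates, the learning rate, and the four one-hot training patterns. The names `train_autoassociator` and `reconstruction_error` are hypothetical.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def forward(x, W1, b1, W2, b2):
    """Return hidden activations and output activations for input x."""
    h = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b) for row, b in zip(W1, b1)]
    y = [sigmoid(sum(w * hj for w, hj in zip(row, h)) + b) for row, b in zip(W2, b2)]
    return h, y

def train_autoassociator(data, n_hidden=2, epochs=2000, lr=0.5, seed=0):
    rng = random.Random(seed)
    n = len(data[0])
    W1 = [[rng.uniform(-0.5, 0.5) for _ in range(n)] for _ in range(n_hidden)]
    b1 = [0.0] * n_hidden
    W2 = [[rng.uniform(-0.5, 0.5) for _ in range(n_hidden)] for _ in range(n)]
    b2 = [0.0] * n
    for _ in range(epochs):
        for x in data:
            h, y = forward(x, W1, b1, W2, b2)
            # The target is the input itself, so no labels are needed.
            d_out = [(yo - xo) * yo * (1 - yo) for yo, xo in zip(y, x)]
            d_hid = [hj * (1 - hj) * sum(d_out[o] * W2[o][j] for o in range(n))
                     for j, hj in enumerate(h)]
            for o in range(n):
                for j in range(n_hidden):
                    W2[o][j] -= lr * d_out[o] * h[j]
                b2[o] -= lr * d_out[o]
            for j in range(n_hidden):
                for i in range(n):
                    W1[j][i] -= lr * d_hid[j] * x[i]
                b1[j] -= lr * d_hid[j]
    return W1, b1, W2, b2

def reconstruction_error(data, params):
    """Total squared difference between each input and the network's output."""
    return sum((yo - xo) ** 2
               for x in data
               for yo, xo in zip(forward(x, *params)[1], x))

data = [(1, 0, 0, 0), (0, 1, 0, 0), (0, 0, 1, 0), (0, 0, 0, 1)]
error_before = reconstruction_error(data, train_autoassociator(data, epochs=0))
error_after = reconstruction_error(data, train_autoassociator(data))
```

Because the hidden layer is narrower than the input, the network has to find a compressed code for the examples, which is what makes the learned hidden representation useful.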

Wrapup on Unlabeled Data

- Lots of unlabeled data; want to make good use of it
  - Can cluster it
  - Can augment the labeled data (semi-supervised ML)
- A weakness of many algorithms that use unlabeled data is that the user must select the number of clusters
- Many equally good ways to cluster unlabeled data, so how to judge which is best?
- Before performing clustering, make sure you don’t really have a SUPERVISED ML task!