TMVA, Andreas Höcker (CERN), CERN meeting, Oct 6

- Slides: 18

TMVA: Toolkit for Multivariate Data Analysis

Andreas Höcker (CERN), CERN meeting, Oct 6, 2006

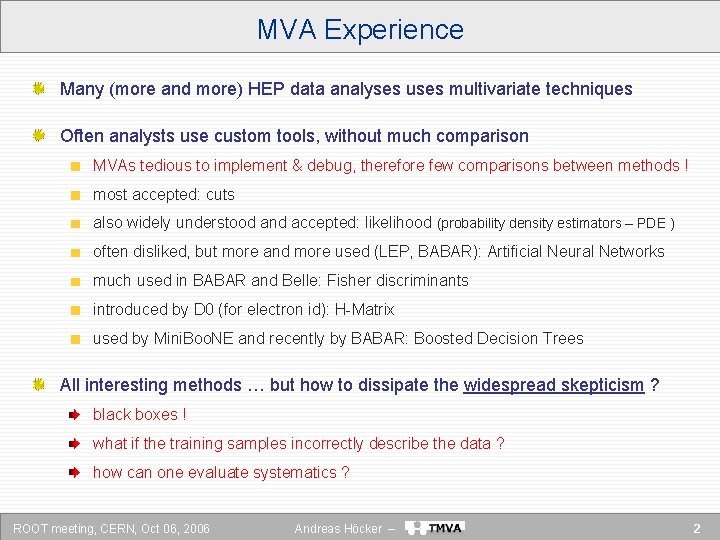

MVA Experience

- Many (and more and more) HEP data analyses use multivariate techniques.
- Analysts often use custom tools, without much comparison between them.
- MVAs are tedious to implement and debug, so few comparisons between methods are made!
  - most accepted: cuts
  - also widely understood and accepted: likelihood (probability density estimators, PDE)
  - often disliked, but more and more used (LEP, BABAR): Artificial Neural Networks
  - much used in BABAR and Belle: Fisher discriminants
  - introduced by D0 (for electron ID): H-Matrix
  - used by MiniBooNE and recently by BABAR: Boosted Decision Trees
- All are interesting methods … but how can the widespread skepticism be dispelled?
  - black boxes!
  - what if the training samples describe the data incorrectly?
  - how can one evaluate systematics?

ROOT meeting, CERN, Oct 06, 2006 Andreas Höcker – 2

What is TMVA

- The Toolkit for Multivariate Analysis (TMVA) provides a ROOT-integrated environment for the parallel processing and evaluation of MVA techniques that discriminate signal from background samples.
- Note that TMVA does not seek to promote a particular method!
- TMVA presently includes (ranked by complexity):
  - Rectangular cut optimisation
  - Likelihood estimator (PDE approach)
  - Multi-dimensional likelihood estimator (PDE, range-search approach)
  - Fisher discriminant
  - H-Matrix approach (χ² estimator)
  - Multilayer Perceptron Artificial Neural Network (three different implementations)
  - Boosted Decision Trees
  - RuleFit
  - upcoming: Support Vector Machines, "Committee" methods
- Common preprocessing of the input data: decorrelation, principal component analysis
- The TMVA analysis provides training, testing and evaluation of the MVAs.
- Each MVA method provides a ranking of the input variables.
- The MVAs produce weight files that are read by a reader class for the actual MVA analysis.

TMVA Technicalities

- TMVA is a SourceForge (SF) package, to accommodate world-wide access.
  - The code can be downloaded as a tar file, or via anonymous or developer CVS access.
  - home page: http://tmva.sourceforge.net/
  - SF project page: http://sourceforge.net/projects/tmva
  - view CVS: http://tmva.cvs.sourceforge.net/tmva/TMVA/
  - mailing lists: http://sourceforge.net/mail/?group_id=152074
  - ROOT::TMVA class index: http://root.cern.ch/root/htmldoc/TMVA_Index.html
- TMVA is written in C++ and is built upon ROOT.
  - Since ROOT 5.11/03 it is integrated in and distributed with ROOT.
- TMVA is modular:
  - the training, testing and evaluation factory iterates over all registered methods
  - each method has specific options that can be conveniently set by the user
  - ROOT scripts are provided for the most important performance assessments
- We enthusiastically (!) welcome new users and developers: at present there are 18 SF-registered TMVA developers! (http://sourceforge.net/project/memberlist.php?group_id=152074)
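The modular design described above, a factory that iterates over all registered methods, each configured through its own option string, can be sketched as follows. This is a hypothetical Python illustration of the pattern only: the class `Factory`, the methods `book_method` and `train_and_evaluate`, the `"Var=<index>"` option format and the toy method `train_mean_cut` are assumptions, not the TMVA C++ API.

```python
from typing import Callable, Dict, List, Tuple

Event = List[float]
Classifier = Callable[[Event], float]
# Hypothetical trainer signature: (option string, signal, background) -> classifier.
Trainer = Callable[[str, List[Event], List[Event]], Classifier]


class Factory:
    """Toy factory: books methods, then trains and evaluates each one in turn."""

    def __init__(self) -> None:
        self._methods: List[Tuple[str, Trainer, str]] = []

    def book_method(self, name: str, trainer: Trainer, options: str = "") -> None:
        # Each method keeps its own user-settable option string.
        self._methods.append((name, trainer, options))

    def train_and_evaluate(self, sig_train: List[Event], bkg_train: List[Event],
                           sig_test: List[Event], bkg_test: List[Event]) -> Dict[str, float]:
        results: Dict[str, float] = {}
        for name, trainer, options in self._methods:
            classify = trainer(options, sig_train, bkg_train)
            # Figure of merit: fraction of test events classified correctly,
            # taking score > 0.5 as "signal".
            correct = sum(classify(e) > 0.5 for e in sig_test)
            correct += sum(classify(e) <= 0.5 for e in bkg_test)
            results[name] = correct / (len(sig_test) + len(bkg_test))
        return results


def train_mean_cut(options: str, sig: List[Event], bkg: List[Event]) -> Classifier:
    """Hypothetical one-variable method: cut halfway between the class means."""
    var = int(options.split("=")[1]) if options.startswith("Var=") else 0
    mean_s = sum(e[var] for e in sig) / len(sig)
    mean_b = sum(e[var] for e in bkg) / len(bkg)
    mid = 0.5 * (mean_s + mean_b)
    sign = 1.0 if mean_s > mean_b else -1.0
    return lambda e: 1.0 if sign * (e[var] - mid) > 0 else 0.0


factory = Factory()
factory.book_method("CutVar0", train_mean_cut, "Var=0")
factory.book_method("CutVar1", train_mean_cut, "Var=1")
sig = [[1.0, 0.0], [2.0, 0.1], [1.5, -0.1]]
bkg = [[-1.0, 0.05], [-2.0, 0.0], [-1.5, 0.1]]
print(factory.train_and_evaluate(sig, bkg, sig, bkg))
```

Keeping the per-method options as opaque strings lets the factory loop stay identical no matter how many methods are booked.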

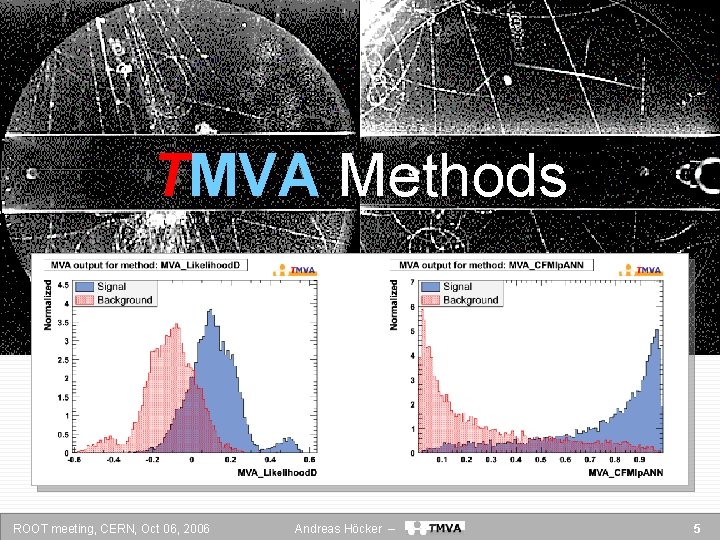

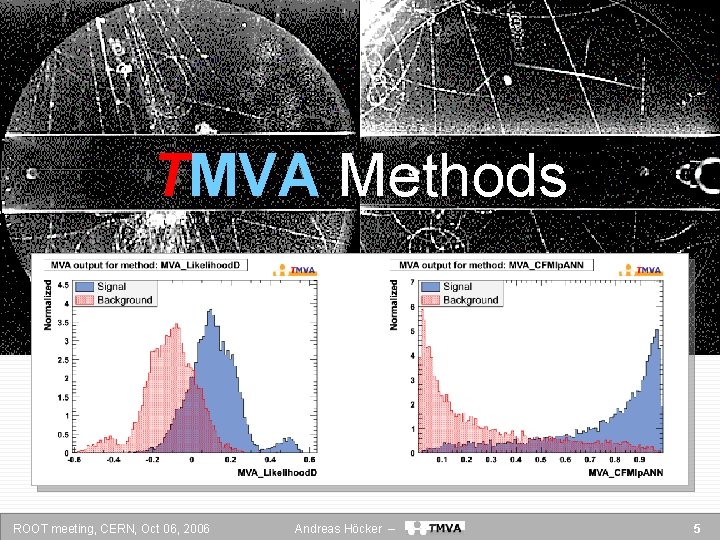

TMVA Methods

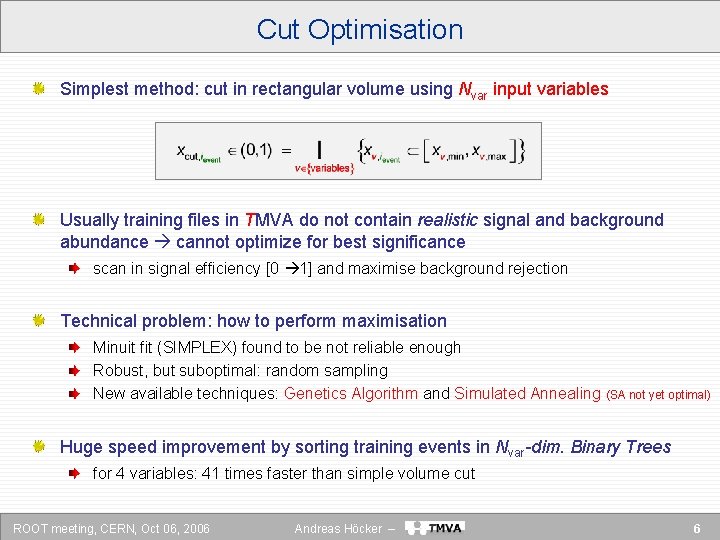

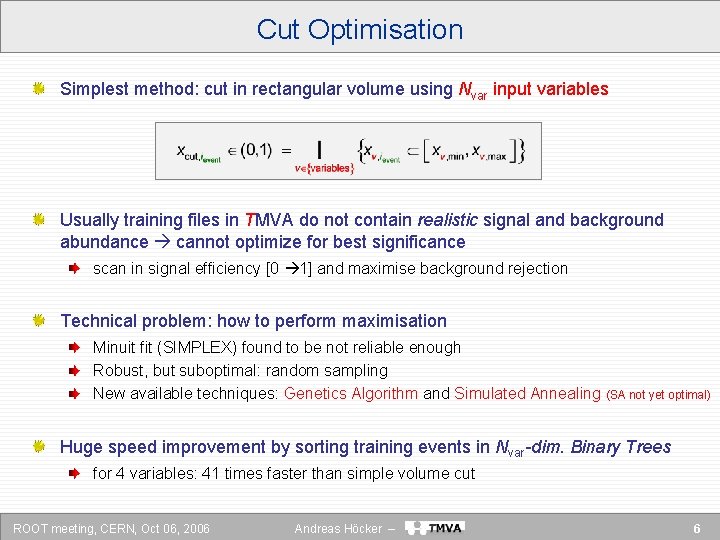

Cut Optimisation

- Simplest method: cut in a rectangular volume using Nvar input variables.
- The training files in TMVA usually do not contain realistic signal and background abundances, so one cannot optimise for best significance.
  - Instead: scan the signal efficiency in [0, 1] and maximise the background rejection.
- Technical problem: how to perform the maximisation
  - Minuit fit (SIMPLEX) found to be not reliable enough
  - robust, but suboptimal: random sampling
  - newly available techniques: Genetic Algorithm and Simulated Annealing (SA not yet optimal)
- Huge speed improvement by sorting the training events in Nvar-dimensional binary trees
  - for 4 variables: 41 times faster than a simple volume cut
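The random-sampling strategy can be illustrated with a short sketch: draw random rectangular cuts and, for each bin of signal efficiency, keep the cut with the highest background rejection. This is a minimal brute-force Python illustration under assumed inputs (events as lists of floats, one lower/upper cut per variable sampled within the signal range, ten efficiency bins); it uses neither binary trees, Minuit, nor the genetic algorithm, and the function names are hypothetical.

```python
import random
from typing import List, Tuple

Event = List[float]
Cut = List[Tuple[float, float]]  # (lower, upper) bound for each variable


def passes(event: Event, cut: Cut) -> bool:
    # An event passes if every variable lies inside its [lower, upper] window.
    return all(lo <= x <= hi for x, (lo, hi) in zip(event, cut))


def random_cut_scan(sig: List[Event], bkg: List[Event], n_trials: int = 1000,
                    n_bins: int = 10, seed: int = 1) -> List[Tuple[float, float]]:
    """Per signal-efficiency bin, return the best (eff_S, background rejection) found."""
    rng = random.Random(seed)
    n_var = len(sig[0])
    # Sampling range for each variable, taken from the signal sample.
    ranges = [(min(e[k] for e in sig), max(e[k] for e in sig)) for k in range(n_var)]
    best = [(0.0, -1.0)] * n_bins  # rejection -1.0 marks a bin not reached yet
    for _ in range(n_trials):
        cut = [tuple(sorted((rng.uniform(lo, hi), rng.uniform(lo, hi)))) for lo, hi in ranges]
        eff_s = sum(passes(e, cut) for e in sig) / len(sig)
        rej_b = 1.0 - sum(passes(e, cut) for e in bkg) / len(bkg)
        i = min(int(eff_s * n_bins), n_bins - 1)
        if rej_b > best[i][1]:
            best[i] = (eff_s, rej_b)
    return best


data_rng = random.Random(0)
sig = [[data_rng.gauss(1.0, 1.0) for _ in range(2)] for _ in range(500)]
bkg = [[data_rng.gauss(-1.0, 1.0) for _ in range(2)] for _ in range(500)]
for eff_s, rej_b in random_cut_scan(sig, bkg):
    if rej_b >= 0.0:  # skip efficiency bins that no sampled cut reached
        print(f"eff_S = {eff_s:.2f}   background rejection = {rej_b:.2f}")
```

Random sampling never gets stuck in a local optimum, which is why it is robust, but it only approaches the best cut as the number of trials grows.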

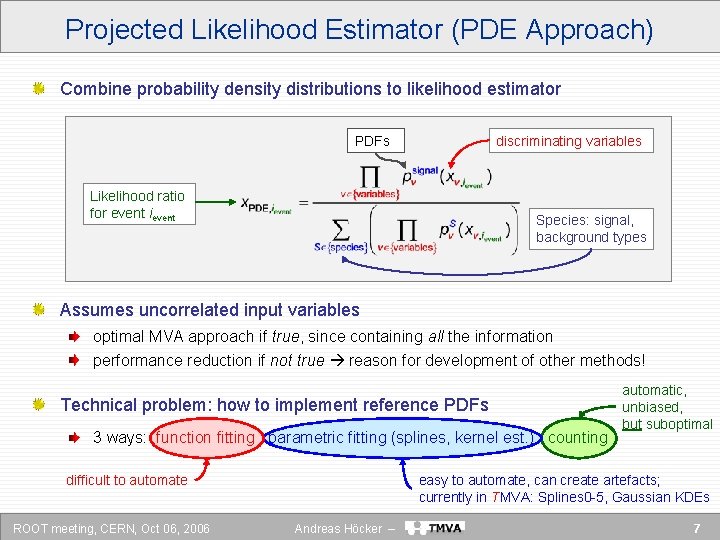

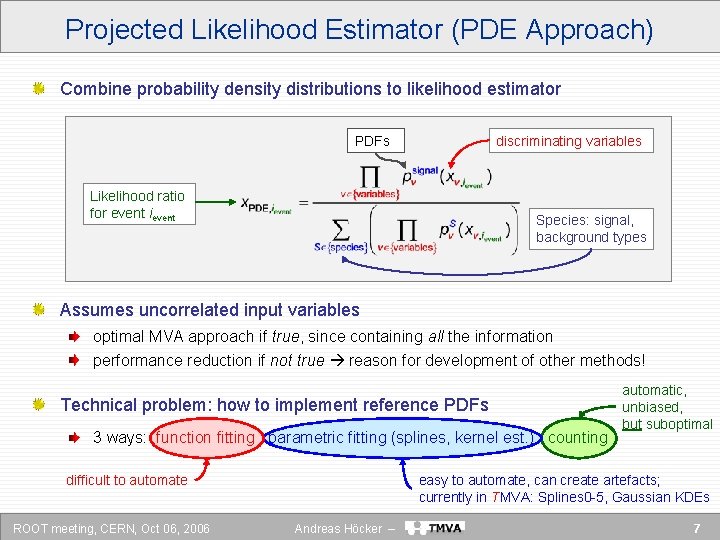

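Projected Likelihood Estimator (PDE Approach)

Combine the probability density distributions (PDFs) of the discriminating variables into a likelihood estimator. The likelihood ratio for event $i_{\rm event}$ is

$$ y_{\mathcal{L}}(i_{\rm event}) = \frac{\prod_{k \in \{\text{variables}\}} p^{\rm signal}_{k}\left(x_k(i_{\rm event})\right)}{\sum_{U \in \{\text{species}\}} \prod_{k \in \{\text{variables}\}} p^{U}_{k}\left(x_k(i_{\rm event})\right)} $$

where $p^{U}_{k}$ is the PDF of discriminating variable $k$ for species $U$ (signal and background types).

- Assumes uncorrelated input variables:
  - if true, this is the optimal MVA approach, since it contains all the information
  - if not true, the performance is reduced, which is the reason for the development of other methods!
- Technical problem: how to implement the reference PDFs. Three ways:
  - function fitting: difficult to automate
  - parametric fitting (splines, kernel estimators): easy to automate, but can create artefacts; currently in TMVA: Splines 0 to 5, Gaussian KDEs
  - counting: automatic and unbiased, but suboptimal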
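A minimal sketch of the projected likelihood ratio for two species, assuming the reference PDFs are built by the simplest method, counting (normalised histograms with fixed binning). The class `HistPDF`, the 20-bin range, and the floor of 0.5 counts per bin that avoids zero densities are illustrative choices, not TMVA defaults.

```python
import random
from typing import List

Event = List[float]


class HistPDF:
    """Normalised histogram used as a 1D reference PDF (the 'counting' approach)."""

    def __init__(self, values: List[float], lo: float, hi: float, n_bins: int = 20) -> None:
        self.lo, self.hi, self.n_bins = lo, hi, n_bins
        counts = [0] * n_bins
        for v in values:
            counts[self._bin(v)] += 1
        self.width = (hi - lo) / n_bins
        # Floor of 0.5 counts per bin so that empty bins do not give a zero density.
        floored = [max(c, 0.5) for c in counts]
        total = sum(floored)
        self.density = [c / (total * self.width) for c in floored]

    def _bin(self, v: float) -> int:
        i = int((v - self.lo) / (self.hi - self.lo) * self.n_bins)
        return min(max(i, 0), self.n_bins - 1)  # under/overflow go to the edge bins

    def __call__(self, v: float) -> float:
        return self.density[self._bin(v)]


def train_pdfs(events: List[Event], lo: float, hi: float) -> List[HistPDF]:
    # One reference PDF per input variable (projection onto each axis).
    return [HistPDF([e[k] for e in events], lo, hi) for k in range(len(events[0]))]


def likelihood_ratio(event: Event, pdfs_s: List[HistPDF], pdfs_b: List[HistPDF]) -> float:
    """y_L = L_S / (L_S + L_B), each L the product of the per-variable PDFs."""
    l_s = l_b = 1.0
    for x, p_s, p_b in zip(event, pdfs_s, pdfs_b):
        l_s *= p_s(x)
        l_b *= p_b(x)
    return l_s / (l_s + l_b)


rng = random.Random(0)
sig = [[rng.gauss(1.0, 1.0), rng.gauss(1.0, 1.0)] for _ in range(2000)]
bkg = [[rng.gauss(-1.0, 1.0), rng.gauss(-1.0, 1.0)] for _ in range(2000)]
pdfs_s, pdfs_b = train_pdfs(sig, -5.0, 5.0), train_pdfs(bkg, -5.0, 5.0)
print(likelihood_ratio([1.0, 1.0], pdfs_s, pdfs_b))    # signal-like event
print(likelihood_ratio([-1.0, -1.0], pdfs_s, pdfs_b))  # background-like event
```

Because the product treats each variable independently, correlations between the inputs are ignored, which is exactly the limitation noted on the slide.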

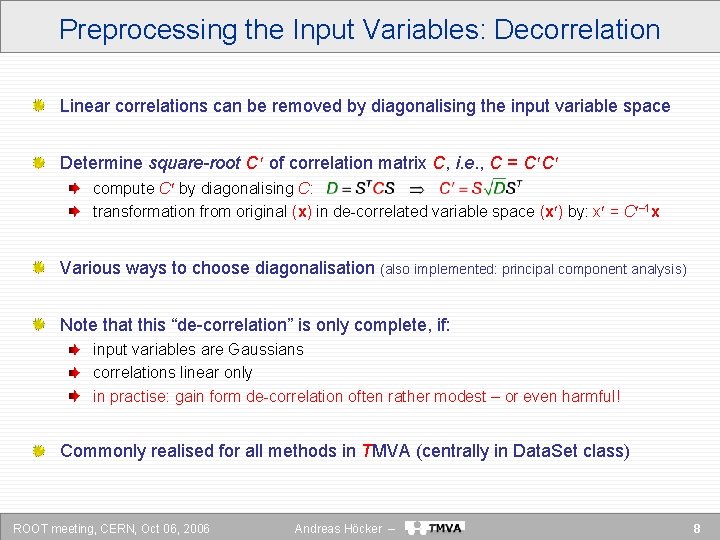

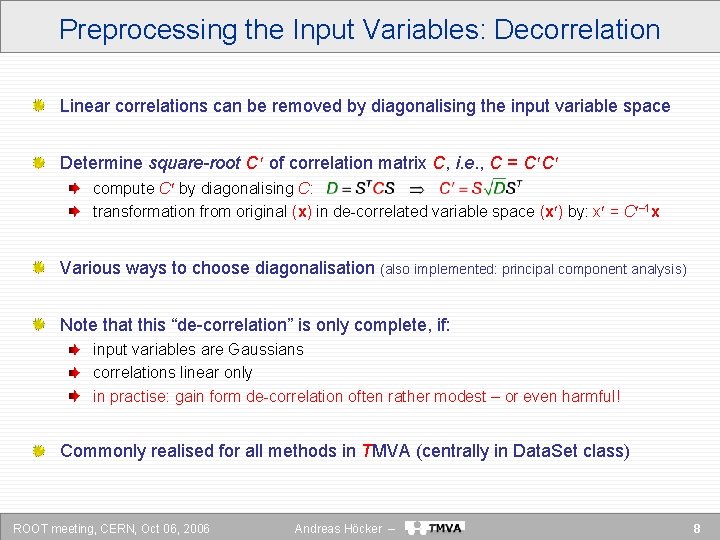

Preprocessing the Input Variables: Decorrelation

- Linear correlations can be removed by diagonalising the input-variable space.
- Determine the square root $C'$ of the correlation matrix $C$, i.e. $C = C'C'$.
- Compute $C'$ by diagonalising $C$: $D = S^{T} C S \;\Rightarrow\; C' = S\sqrt{D}\,S^{T}$.
- Transformation from the original variable space ($x$) into the de-correlated variable space ($x'$): $x' = C'^{-1} x$.
- There are various ways to choose the diagonalisation (also implemented: principal component analysis).
- Note that this "de-correlation" is only complete if:
  - the input variables are Gaussian
  - the correlations are linear only
- In practice, the gain from de-correlation is often rather modest, or it can even be harmful!
- Commonly realised for all methods in TMVA (centrally in the DataSet class).
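For two input variables the square root of the matrix can be written in closed form, which makes the procedure easy to follow. The sketch below, a Python illustration restricted to two variables (TMVA itself handles Nvar variables), estimates the sample covariance matrix C (equal to the correlation matrix for standardised variables), diagonalises it with a rotation S, forms C′⁻¹ = S D^(−1/2) Sᵀ, and applies x′ = C′⁻¹ x. The function names are hypothetical.

```python
import math
import random
from typing import List, Tuple

Point = Tuple[float, float]
Matrix2 = Tuple[Tuple[float, float], Tuple[float, float]]


def covariance(points: List[Point]) -> Matrix2:
    """Sample covariance matrix of 2D points (normalised by N)."""
    n = len(points)
    mx = sum(p[0] for p in points) / n
    my = sum(p[1] for p in points) / n
    cxx = sum((p[0] - mx) ** 2 for p in points) / n
    cyy = sum((p[1] - my) ** 2 for p in points) / n
    cxy = sum((p[0] - mx) * (p[1] - my) for p in points) / n
    return ((cxx, cxy), (cxy, cyy))


def inverse_sqrt(c: Matrix2) -> Matrix2:
    """Return C'^{-1} = S D^{-1/2} S^T for a symmetric, positive-definite 2x2 matrix C."""
    a, b, d = c[0][0], c[0][1], c[1][1]
    theta = 0.5 * math.atan2(2.0 * b, a - d)  # rotation angle that diagonalises C
    cs, sn = math.cos(theta), math.sin(theta)
    # Eigenvalues: diagonal of D = S^T C S, with S = [[cs, -sn], [sn, cs]].
    l1 = a * cs * cs + 2.0 * b * sn * cs + d * sn * sn
    l2 = a * sn * sn - 2.0 * b * sn * cs + d * cs * cs
    r1, r2 = 1.0 / math.sqrt(l1), 1.0 / math.sqrt(l2)
    # S diag(r1, r2) S^T, written out element by element.
    return ((r1 * cs * cs + r2 * sn * sn, (r1 - r2) * cs * sn),
            ((r1 - r2) * cs * sn, r1 * sn * sn + r2 * cs * cs))


def decorrelate(points: List[Point]) -> List[Point]:
    """Apply x' = C'^{-1} x, with C estimated from the same points."""
    m = inverse_sqrt(covariance(points))
    return [(m[0][0] * x + m[0][1] * y, m[1][0] * x + m[1][1] * y) for x, y in points]


rng = random.Random(0)
points = []
for _ in range(1000):
    u, v = rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)
    points.append((u, 0.8 * u + 0.6 * v))  # true correlation coefficient 0.8
print(covariance(points))               # strongly correlated
print(covariance(decorrelate(points)))  # identity matrix up to rounding
```

Using the symmetric square root (rather than, say, a Cholesky factor) keeps the transformed axes as close as possible to the original ones.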

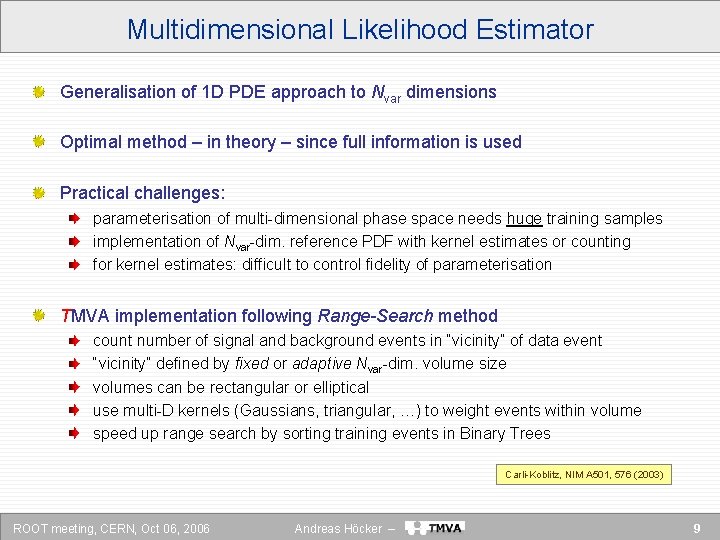

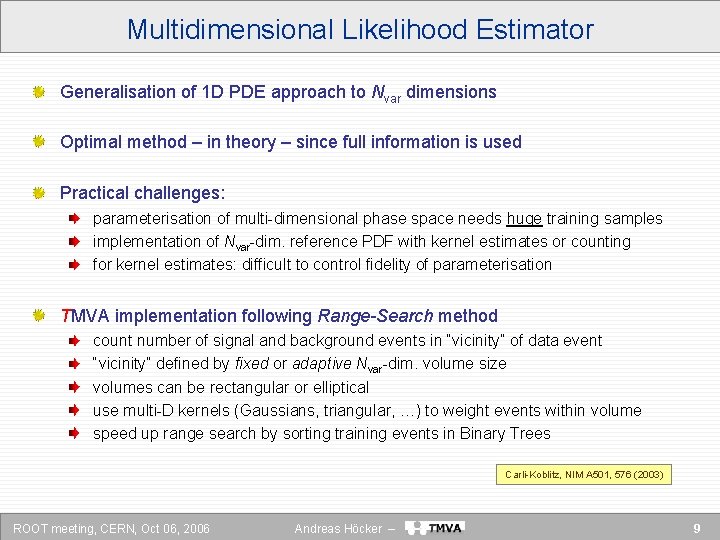

Multidimensional Likelihood Estimator

- Generalisation of the 1D PDE approach to Nvar dimensions
- Optimal method, in theory, since the full information is used
- Practical challenges:
  - the parameterisation of the multi-dimensional phase space needs huge training samples
  - implementation of the Nvar-dimensional reference PDF with kernel estimates or counting
  - for kernel estimates: it is difficult to control the fidelity of the parameterisation
- TMVA implementation following the Range-Search method (Carli-Koblitz, NIM A 501, 576 (2003)):
  - count the number of signal and background events in the "vicinity" of the data event
  - the "vicinity" is defined by a fixed or adaptive Nvar-dimensional volume size
  - the volumes can be rectangular or elliptical
  - use multi-dimensional kernels (Gaussian, triangular, …) to weight the events within the volume
  - speed up the range search by sorting the training events in binary trees
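The range-search idea can be sketched with a fixed rectangular volume and no kernel weighting: count the signal and background training events inside a box centred on the data event and compare the two densities. In this brute-force Python sketch the loop over all training events replaces the binary-tree search, and the box half-width, the normalisation to the sample sizes, and the neutral value 0.5 for an empty box are illustrative assumptions.

```python
from typing import List

Event = List[float]


def count_in_box(event: Event, sample: List[Event], half_width: float) -> int:
    # Number of training events inside the rectangular volume around `event`.
    return sum(all(abs(x - y) <= half_width for x, y in zip(event, t)) for t in sample)


def pde_rs(event: Event, sig: List[Event], bkg: List[Event], half_width: float = 0.5) -> float:
    """Range-search estimate n_S/N_S / (n_S/N_S + n_B/N_B) for one data event."""
    d_s = count_in_box(event, sig, half_width) / len(sig)
    d_b = count_in_box(event, bkg, half_width) / len(bkg)
    if d_s + d_b == 0.0:
        return 0.5  # empty volume: no information, return a neutral value
    return d_s / (d_s + d_b)


sig = [[1.0, 1.0], [1.2, 0.9]]
bkg = [[-1.0, -1.0], [-0.9, -1.2], [-1.1, -0.8], [0.9, 1.0]]
print(pde_rs([1.0, 1.0], sig, bkg))  # 2/2 signal and 1/4 background inside: 0.8
```

An adaptive volume would instead grow the box until a target number of training events falls inside it, which keeps the statistical precision roughly constant across phase space.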

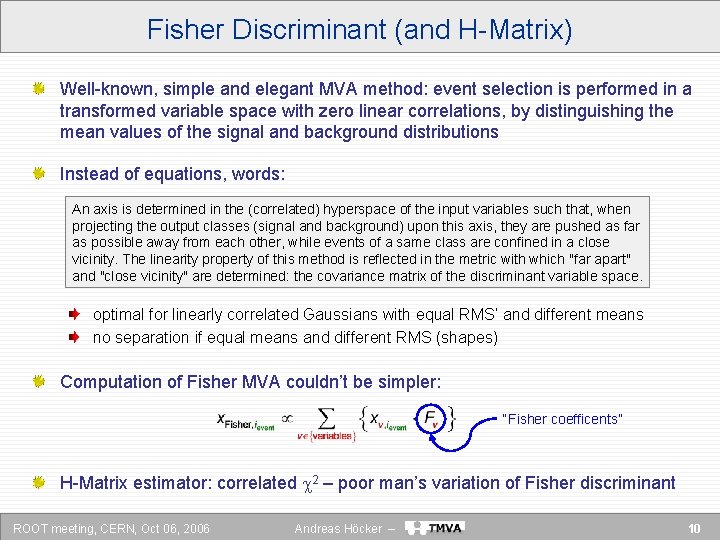

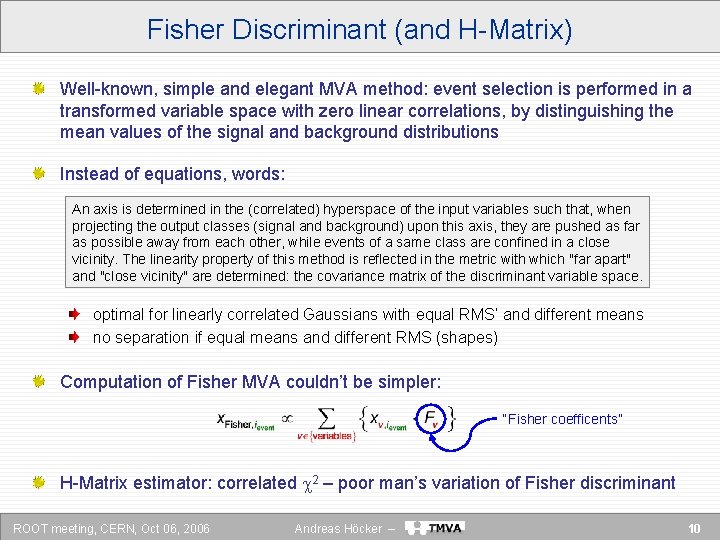

Fisher Discriminant (and H-Matrix)

- Well-known, simple and elegant MVA method: event selection is performed in a transformed variable space with zero linear correlations, by distinguishing the mean values of the signal and background distributions.
- Instead of equations, words: an axis is determined in the (correlated) hyperspace of the input variables such that, when the output classes (signal and background) are projected onto this axis, they are pushed as far apart as possible, while events of the same class are confined to a close vicinity. The linearity of this method is reflected in the metric with which "far apart" and "close vicinity" are determined: the covariance matrix of the discriminating-variable space.
- Optimal for linearly correlated Gaussians with equal RMS and different means
- No separation if the means are equal and the RMS (shapes) differ
- Computation of the Fisher MVA couldn't be simpler, using the "Fisher coefficients" $F_k$:

$$ y_{\rm Fi}(i_{\rm event}) = F_0 + \sum_{k=1}^{N_{\rm var}} F_k\, x_k(i_{\rm event}), \qquad F_k = \frac{\sqrt{N_S N_B}}{N_S + N_B} \sum_{l=1}^{N_{\rm var}} W^{-1}_{kl} \left(\bar{x}_{S,l} - \bar{x}_{B,l}\right) $$

  where $\bar{x}_{S,l}$ ($\bar{x}_{B,l}$) is the signal (background) mean of variable $l$, $N_S$ ($N_B$) is the number of signal (background) events, and $W$ is the sum of the signal and background covariance matrices.
- H-Matrix estimator: correlated χ², a poor man's variation of the Fisher discriminant
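A two-variable Python sketch of the Fisher coefficients, assuming W = C_S + C_B (sum of the signal and background sample covariance matrices) and keeping the normalisation factor √(N_S N_B)/(N_S + N_B). The offset F0 is an illustrative choice that puts the midpoint between the two class means at zero; the helper names and the toy data are hypothetical, and this is not TMVA's implementation.

```python
import math
import random
from typing import List, Tuple

Point = Tuple[float, float]


def mean(points: List[Point]) -> Point:
    n = len(points)
    return (sum(p[0] for p in points) / n, sum(p[1] for p in points) / n)


def cov(points: List[Point]) -> Tuple[float, float, float]:
    """Return (c_xx, c_xy, c_yy) of a sample of 2D points."""
    mx, my = mean(points)
    n = len(points)
    cxx = sum((p[0] - mx) ** 2 for p in points) / n
    cxy = sum((p[0] - mx) * (p[1] - my) for p in points) / n
    cyy = sum((p[1] - my) ** 2 for p in points) / n
    return cxx, cxy, cyy


def fisher_coefficients(sig: List[Point], bkg: List[Point]) -> Tuple[float, float, float]:
    """Return (F0, F1, F2) with F_k = norm * sum_l W^{-1}_kl (mean_S,l - mean_B,l)."""
    ms, mb = mean(sig), mean(bkg)
    s, b = cov(sig), cov(bkg)
    wxx, wxy, wyy = s[0] + b[0], s[1] + b[1], s[2] + b[2]  # W = C_S + C_B
    det = wxx * wyy - wxy * wxy
    dx, dy = ms[0] - mb[0], ms[1] - mb[1]
    norm = math.sqrt(len(sig) * len(bkg)) / (len(sig) + len(bkg))
    # Explicit 2x2 inverse: W^{-1} = [[wyy, -wxy], [-wxy, wxx]] / det.
    f1 = norm * (wyy * dx - wxy * dy) / det
    f2 = norm * (-wxy * dx + wxx * dy) / det
    # Illustrative offset: the midpoint between the class means maps to zero.
    f0 = -(f1 * (ms[0] + mb[0]) + f2 * (ms[1] + mb[1])) / 2
    return f0, f1, f2


def fisher(event: Point, coeffs: Tuple[float, float, float]) -> float:
    f0, f1, f2 = coeffs
    return f0 + f1 * event[0] + f2 * event[1]


def toy(mu: float, rng: random.Random, n: int = 1000) -> List[Point]:
    # Linearly correlated Gaussian pair with both means shifted by mu.
    pts = []
    for _ in range(n):
        u, v = rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)
        pts.append((mu + u, mu + 0.8 * u + 0.6 * v))
    return pts


rng = random.Random(1)
sig, bkg = toy(0.5, rng), toy(-0.5, rng)
coeffs = fisher_coefficients(sig, bkg)
print("F0, F1, F2 =", coeffs)
```

Since W is positive definite, the Fisher value at the signal mean is always larger than at the background mean, whatever the correlations.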

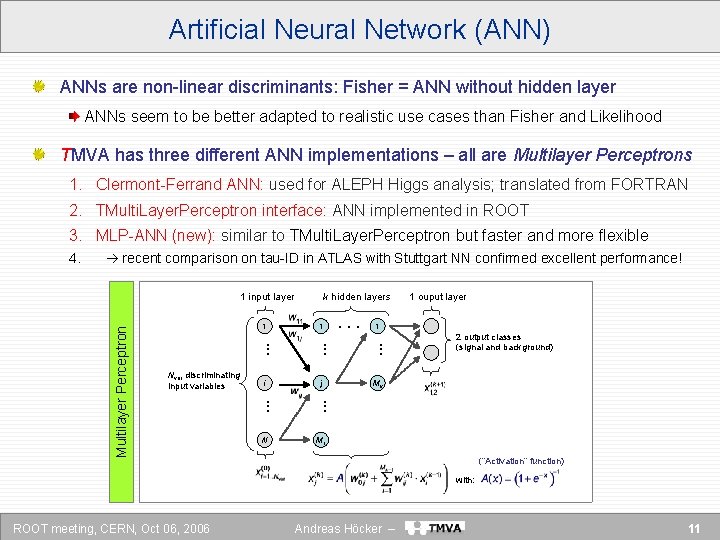

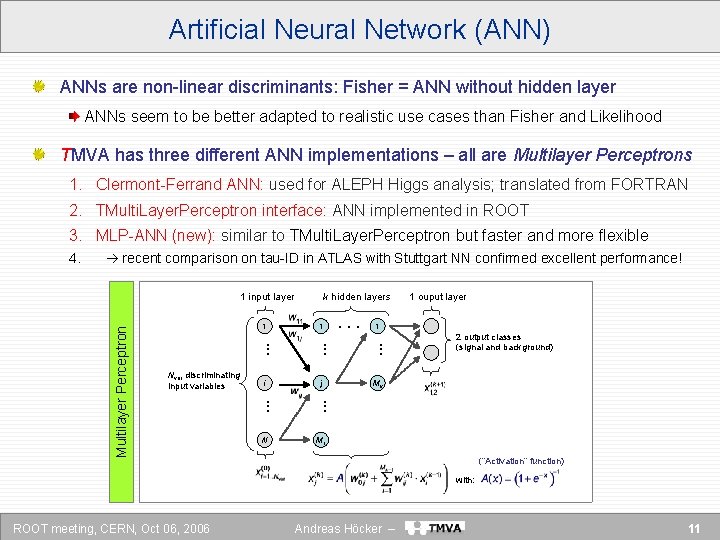

Artificial Neural Network (ANN)

- ANNs are non-linear discriminants: Fisher = ANN without a hidden layer.
- ANNs seem to be better adapted to realistic use cases than Fisher and Likelihood.
- TMVA has three different ANN implementations, all of them Multilayer Perceptrons:
  1. Clermont-Ferrand ANN: used for the ALEPH Higgs analysis; translated from FORTRAN
  2. TMultiLayerPerceptron interface: the ANN implemented in ROOT
  3. MLP-ANN (new): similar to TMultiLayerPerceptron, but faster and more flexible
- A recent comparison on tau-ID in ATLAS with the Stuttgart NN confirmed excellent performance!

(Figure: Multilayer Perceptron with 1 input layer holding the Nvar discriminating input variables, k hidden layers, and 1 output layer for the 2 output classes (signal and background); each neuron applies an "activation" function.)
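A forward pass through a small Multilayer Perceptron can be sketched as below, assuming a sigmoid activation in the hidden neurons, a linear output neuron, and hand-picked, purely illustrative weights (in practice the weights come from training). With an empty list of hidden layers the network reduces to F0 + Σ F_k x_k, which is the sense in which Fisher is an ANN without a hidden layer.

```python
import math
from typing import List

# A layer is a list of neurons; each neuron is [bias, w_1, ..., w_n].
Layer = List[List[float]]


def sigmoid(t: float) -> float:
    return 1.0 / (1.0 + math.exp(-t))


def weighted_sum(neuron: List[float], inputs: List[float]) -> float:
    return neuron[0] + sum(w * x for w, x in zip(neuron[1:], inputs))


def mlp_output(x: List[float], hidden: List[Layer], output: List[float]) -> float:
    """Propagate x through the hidden layers (sigmoid), then a linear output neuron."""
    y = x
    for layer in hidden:
        y = [sigmoid(weighted_sum(neuron, y)) for neuron in layer]
    return weighted_sum(output, y)


# Illustrative weights: 2 inputs -> 3 hidden neurons -> 1 output.
hidden = [[[0.0, 1.0, 1.0], [0.5, -1.0, 2.0], [-0.5, 2.0, -1.0]]]
output = [-1.0, 1.0, 0.5, 0.5]
print(mlp_output([1.0, 0.5], hidden, output))

# Without hidden layers the network is just F0 + F1*x1 + F2*x2 (Fisher-like).
print(mlp_output([1.0, 0.5], [], [0.25, 1.0, -2.0]))  # 0.25 + 1.0 - 1.0 = 0.25
```

The non-linear activation in the hidden layer is what lets the network bend the decision boundary, which a linear discriminant cannot do.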

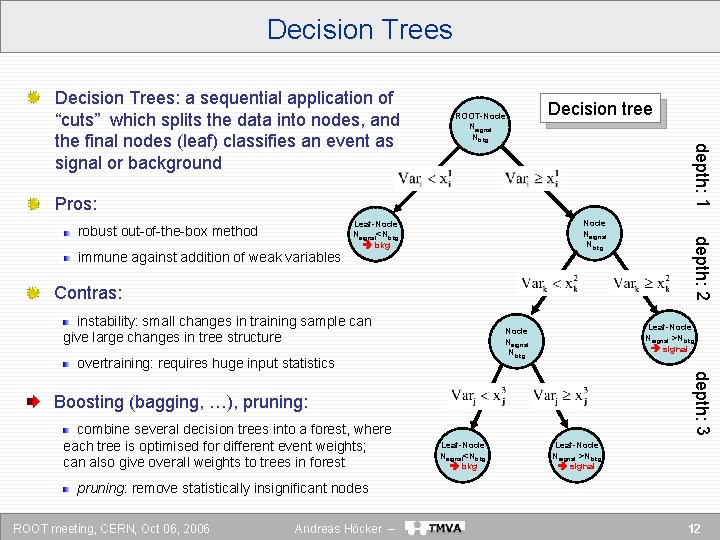

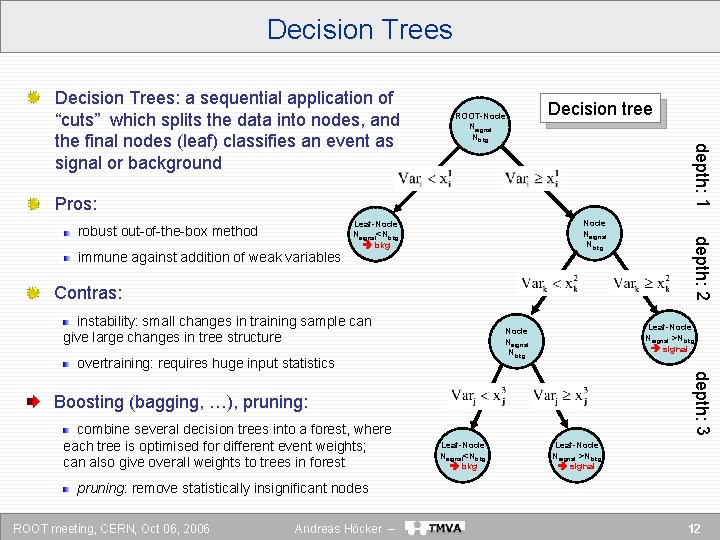

Decision Trees

- Decision trees: a sequential application of "cuts" that splits the data into nodes; the final nodes (leaves) classify an event as signal or background.
- Pros: immune against the addition of weak variables
- Cons:
  - instability: small changes in the training sample can give large changes in the tree structure
  - overtraining: requires huge input statistics
- Boosting (bagging, …), pruning:
  - boosting: combine several decision trees into a forest, where each tree is optimised for different event weights; overall weights can also be given to the trees in the forest
  - a robust out-of-the-box method
  - pruning: remove statistically insignificant nodes

(Figure: example decision tree, from the root node (depth 1) through intermediate nodes (depths 2 and 3) to the leaf nodes, each node labelled with Nsignal and Nbkg; leaf nodes with Nsignal > Nbkg are classified as signal, those with Nsignal < Nbkg as background.)
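Boosting can be illustrated with depth-1 trees (decision stumps) and the AdaBoost reweighting scheme: events misclassified by one tree get larger weights for the next tree, and each tree receives an overall weight in the forest. This Python sketch assumes one input variable, labels +1 (signal) and −1 (background), and the textbook AdaBoost formulas; it is an illustration, not TMVA's BDT implementation, which grows deeper trees and adds pruning.

```python
import math
from typing import List, Tuple

Stump = Tuple[float, int]  # (cut, polarity): predict polarity if x > cut, else -polarity


def stump_predict(stump: Stump, x: float) -> int:
    cut, polarity = stump
    return polarity if x > cut else -polarity


def best_stump(xs: List[float], ys: List[int], w: List[float]) -> Tuple[Stump, float]:
    """Scan all cut positions and both polarities; return the lowest weighted error."""
    best: Tuple[Stump, float] = ((0.0, 1), float("inf"))
    for cut in sorted(set(xs)):
        for polarity in (1, -1):
            err = sum(wi for xi, yi, wi in zip(xs, ys, w)
                      if stump_predict((cut, polarity), xi) != yi)
            if err < best[1]:
                best = ((cut, polarity), err)
    return best


def adaboost(xs: List[float], ys: List[int], n_trees: int = 5) -> List[Tuple[Stump, float]]:
    w = [1.0 / len(xs)] * len(xs)
    forest: List[Tuple[Stump, float]] = []
    for _ in range(n_trees):
        stump, err = best_stump(xs, ys, w)
        err = min(max(err, 1e-10), 1 - 1e-10)  # avoid log(0) for a perfect stump
        alpha = 0.5 * math.log((1 - err) / err)  # overall weight of this tree
        forest.append((stump, alpha))
        # Increase the weights of misclassified events, decrease the others.
        w = [wi * math.exp(-alpha * yi * stump_predict(stump, xi))
             for xi, yi, wi in zip(xs, ys, w)]
        total = sum(w)
        w = [wi / total for wi in w]  # renormalise to unit sum
    return forest


def bdt_score(forest: List[Tuple[Stump, float]], x: float) -> float:
    # Weighted vote of all trees: positive means signal-like.
    return sum(alpha * stump_predict(stump, x) for stump, alpha in forest)


xs = [0.1, 0.4, 0.35, 0.8, 0.9, 0.6, 0.2, 0.75]
ys = [-1, -1, -1, 1, 1, 1, -1, 1]
forest = adaboost(xs, ys, n_trees=3)
print([1 if bdt_score(forest, x) > 0 else -1 for x in xs] == ys)
```

Averaging many reweighted trees is what tames the instability of a single tree noted above.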

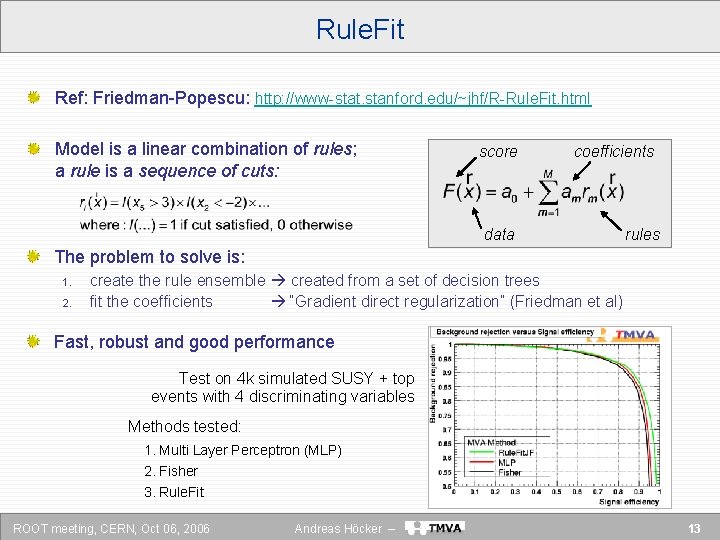

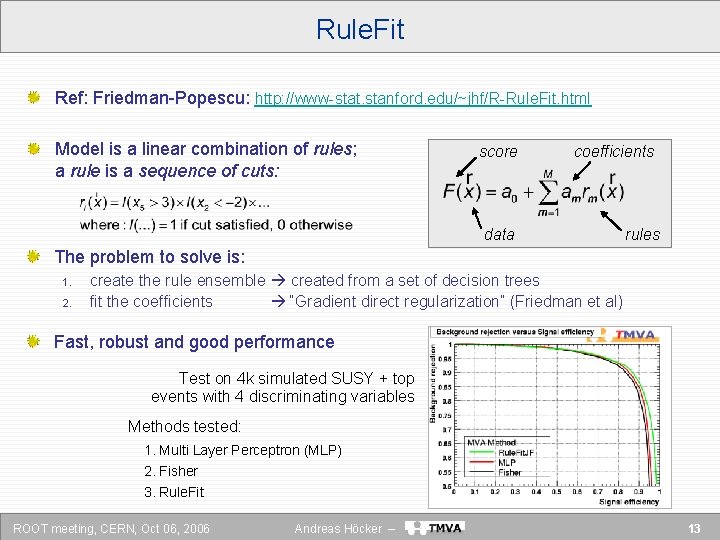

RuleFit

Ref: Friedman-Popescu: http://www-stat.stanford.edu/~jhf/R-RuleFit.html

- The model is a linear combination of rules, where a rule is a sequence of cuts:

$$ y_{\rm RF}(\mathbf{x}) = a_0 + \sum_{m=1}^{M_R} a_m\, r_m(\mathbf{x}) $$

  with the score $y_{\rm RF}$, the coefficients $a_m$, the data $\mathbf{x}$ and the rules $r_m$ ($r_m(\mathbf{x}) = 1$ if all cuts of rule $m$ are satisfied, 0 otherwise).
- The problem to solve is:
  1. create the rule ensemble from a set of decision trees
  2. fit the coefficients with "gradient directed regularization" (Friedman et al.)
- Fast, robust, and good performance
- Test on 4k simulated SUSY + top events with 4 discriminating variables; methods tested: 1. Multilayer Perceptron (MLP), 2. Fisher, 3. RuleFit
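Evaluating a RuleFit model y = a_0 + Σ_m a_m r_m(x) is straightforward once the rules and coefficients are known; each rule is a conjunction of cuts that returns 1 when all cuts are satisfied and 0 otherwise. The rules and coefficients in this Python sketch are hypothetical, hand-written values; generating the rules from a tree ensemble and fitting the coefficients by gradient directed regularization are not shown.

```python
from typing import List, Optional, Tuple

# A cut is (variable index, lower bound, upper bound); None means "unbounded".
Cut = Tuple[int, Optional[float], Optional[float]]
Rule = List[Cut]


def rule_value(rule: Rule, x: List[float]) -> int:
    """r_m(x): 1 if every cut in the rule is satisfied (lo <= x_k < hi), else 0."""
    for k, lo, hi in rule:
        if lo is not None and x[k] < lo:
            return 0
        if hi is not None and x[k] >= hi:
            return 0
    return 1


def rulefit_score(x: List[float], a0: float, rules: List[Rule], coeffs: List[float]) -> float:
    # Linear combination of rules: a0 + sum_m a_m * r_m(x).
    return a0 + sum(a * rule_value(r, x) for r, a in zip(rules, coeffs))


# Hypothetical rules, e.g. as read off the nodes of small decision trees.
rules: List[Rule] = [
    [(0, 1.0, None)],                  # x0 >= 1.0
    [(0, None, 1.0), (1, 0.5, None)],  # x0 < 1.0 and x1 >= 0.5
    [(1, None, -0.5)],                 # x1 < -0.5
]
coeffs = [0.75, 0.25, -0.5]
print(rulefit_score([1.5, 0.0], -0.25, rules, coeffs))  # -0.25 + 0.75 = 0.5
print(rulefit_score([0.0, 1.0], -0.25, rules, coeffs))  # -0.25 + 0.25 = 0.0
```

Because each term is a readable set of cuts with a coefficient, the model stays interpretable, which speaks to the "black box" concern raised earlier.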

TMVA – an example

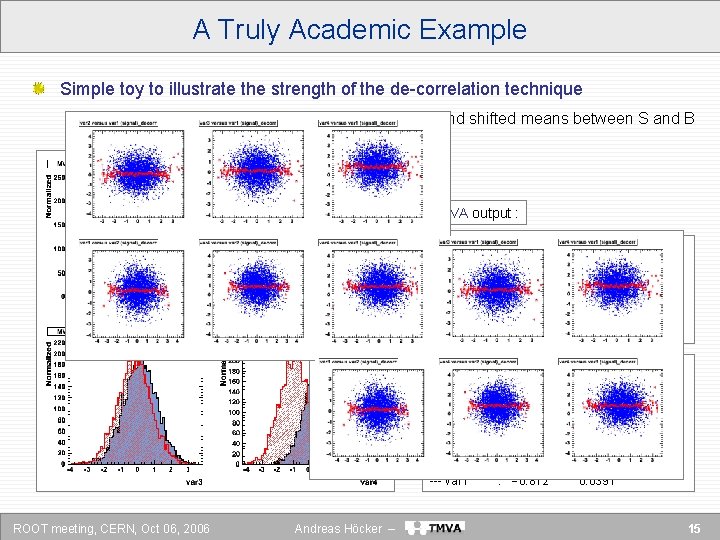

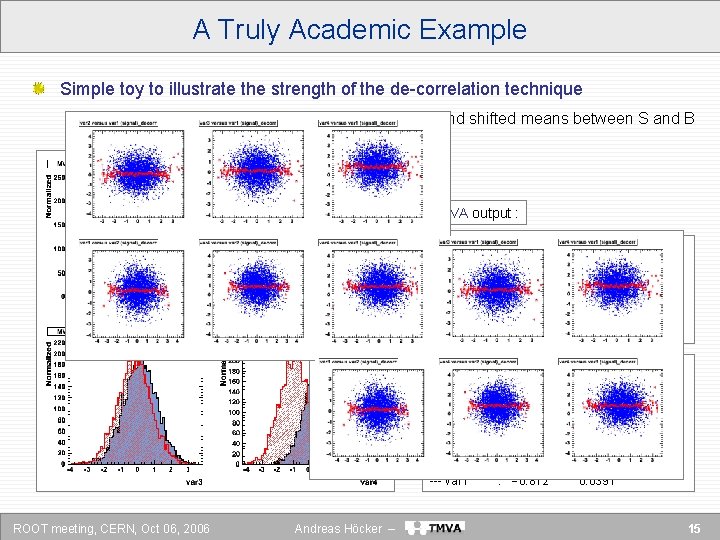

A Truly Academic Example

- Simple toy to illustrate the strength of the de-correlation technique
- 4 linearly correlated Gaussians, with equal RMS and shifted means between S and B

TMVA output (TMVA_Factory: correlation matrix, signal):

| | var 1 | var 2 | var 3 | var 4 |
|---|---|---|---|---|
| var 1 | +1.000 | +0.336 | +0.393 | +0.447 |
| var 2 | +0.336 | +1.000 | +0.613 | +0.668 |
| var 3 | +0.393 | +0.613 | +1.000 | +0.907 |
| var 4 | +0.447 | +0.668 | +0.907 | +1.000 |

TMVA output (TMVA_MethodFisher: ranked output, top variable is best ranked):

| Variable | Coefficient | Discr. power |
|---|---|---|
| var 4 | +8.077 | 0.3888 |
| var 3 | 3.417 | 0.2629 |
| var 2 | 0.982 | 0.1394 |
| var 1 | 0.812 | 0.0391 |
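A correlation matrix like the one printed by the factory can be computed from a sample in a few lines. This Python sketch generates a hypothetical toy of four positively correlated Gaussian variables, built by summing shared independent components (an assumption; it does not reproduce the toy above), and computes the Pearson correlation coefficients.

```python
import math
import random
from typing import List


def correlation_matrix(events: List[List[float]]) -> List[List[float]]:
    """Pearson correlation coefficients between all pairs of variables."""
    n, n_var = len(events), len(events[0])
    means = [sum(e[k] for e in events) / n for k in range(n_var)]
    cov = [[sum((e[i] - means[i]) * (e[j] - means[j]) for e in events) / n
            for j in range(n_var)] for i in range(n_var)]
    return [[cov[i][j] / math.sqrt(cov[i][i] * cov[j][j]) for j in range(n_var)]
            for i in range(n_var)]


rng = random.Random(42)
events = []
for _ in range(5000):
    z = [rng.gauss(0.0, 1.0) for _ in range(4)]
    # Each variable adds one new independent component to the previous one.
    events.append([z[0], z[0] + z[1], z[0] + z[1] + z[2], z[0] + z[1] + z[2] + z[3]])

for row in correlation_matrix(events):
    print(" ".join(f"{c:+.3f}" for c in row))
```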

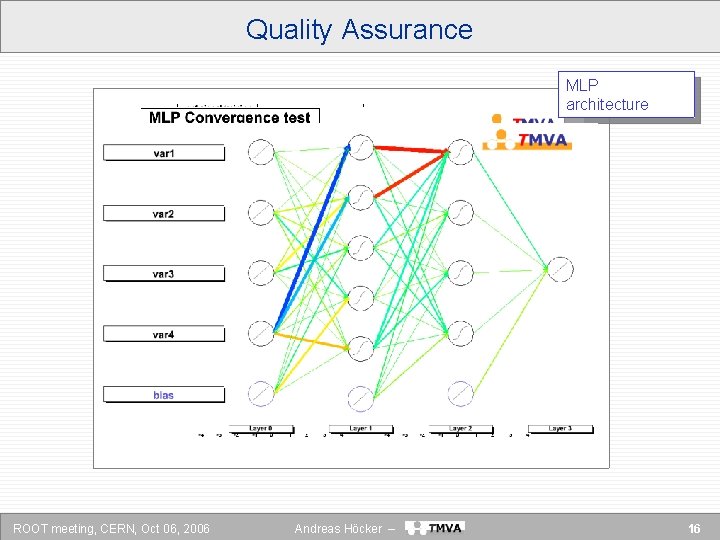

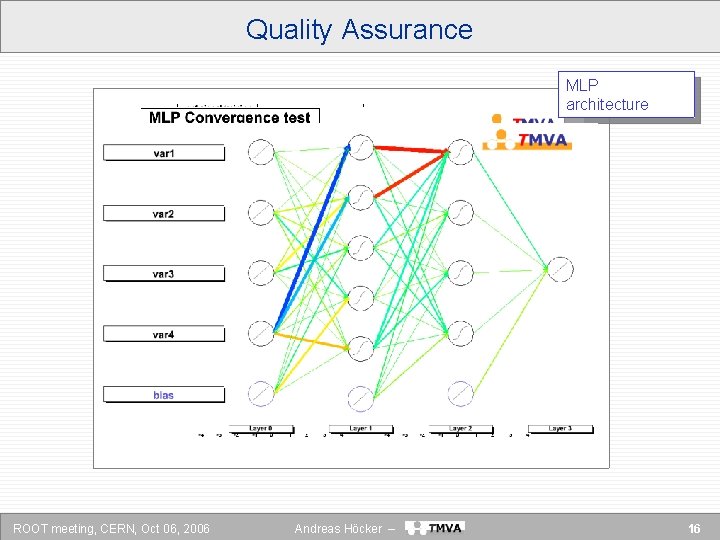

Quality Assurance

(Figures: Likelihood reference PDFs; MLP convergence and architecture.)

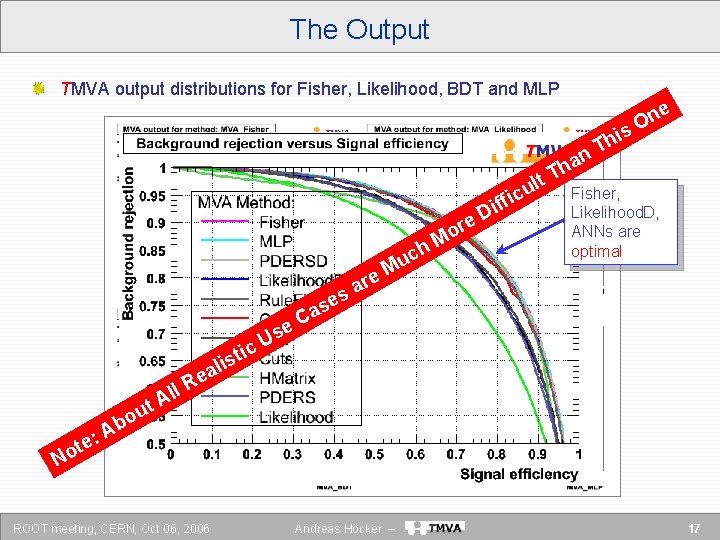

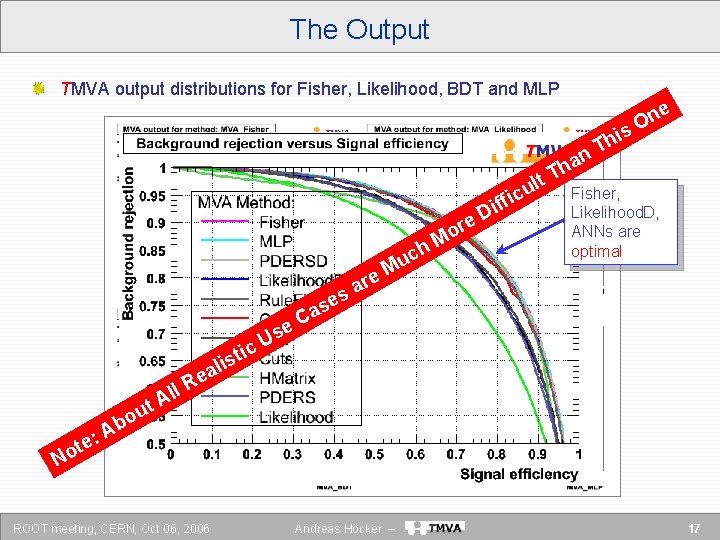

The Output

TMVA output distributions for Fisher, Likelihood, BDT and MLP.

(Figure annotations: "Fisher, LikelihoodD (likelihood with decorrelated inputs), ANNs are optimal"; "Note: about all realistic use cases are much more difficult than this one".)
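Signal efficiency and background rejection can be read off the MVA output distributions by scanning a cut on the output value. This is a minimal Python sketch, assuming the per-event MVA outputs are already available as plain lists (the numbers are made up for illustration) and that a larger output means more signal-like.

```python
from typing import List, Tuple


def efficiency_curve(out_sig: List[float],
                     out_bkg: List[float]) -> List[Tuple[float, float, float]]:
    """For each candidate cut c, return (c, signal efficiency, background rejection) for output > c."""
    curve = []
    for c in sorted(set(out_sig) | set(out_bkg)):
        eff_s = sum(y > c for y in out_sig) / len(out_sig)
        rej_b = 1.0 - sum(y > c for y in out_bkg) / len(out_bkg)
        curve.append((c, eff_s, rej_b))
    return curve


out_sig = [0.9, 0.8, 0.75, 0.6, 0.4]
out_bkg = [0.1, 0.2, 0.3, 0.45, 0.7]
for c, eff_s, rej_b in efficiency_curve(out_sig, out_bkg):
    print(f"cut > {c:.2f}: eff_S = {eff_s:.2f}, bkg rejection = {rej_b:.2f}")
```

Tightening the cut can only lower the signal efficiency and raise the background rejection, so the resulting curve is monotonic and methods can be compared on it directly.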

Concluding Remarks

- TMVA is still a young project!
- TMVA is open source: we want to gather as much development help as possible (from all areas, including real experts from statistics faculties; there are none so far!) to make it a reference tool in HEP.
- New MVA methods are important, but the emphasis lies on the consolidation of the currently implemented methods:
  - implement fully independent, phase-space-dependent MVAs
  - implement the use of event weights throughout all methods (missing: Cuts, Fisher, H-Matrix, RuleFit)
  - improve the kernel estimators
  - improve the decorrelation techniques
- We expect a performance gain from combining MVAs in Committees.
- Although the number of users is rising, we still need more real-life experience!
- Need to write a manual!