
Timing Model of a Superscalar Out-of-Order Processor in the HAsim Framework
Murali Vijayaraghavan

What is HAsim?
- A framework for writing software-like timing models and running them on FPGAs
- Software timing models are inherently sequential, and hence slow
- Parallelism is achieved by implementing the timing model on FPGAs

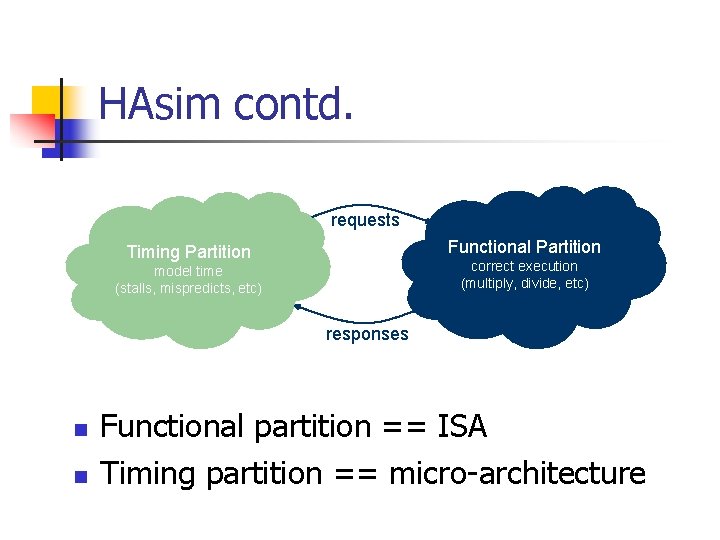

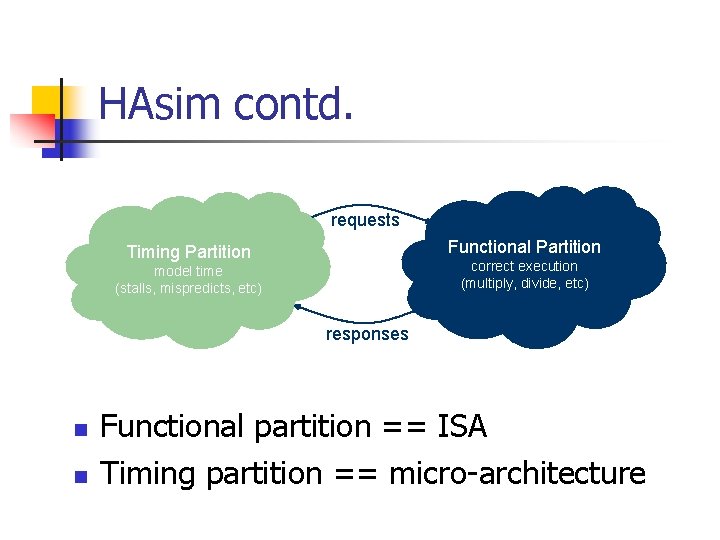

HAsim (contd.)

Figure: requests and responses pass between the Functional Partition, which handles correct execution (multiply, divide, etc.), and the Timing Partition, which models time (stalls, mispredicts, etc.).
- Functional partition == ISA
- Timing partition == micro-architecture

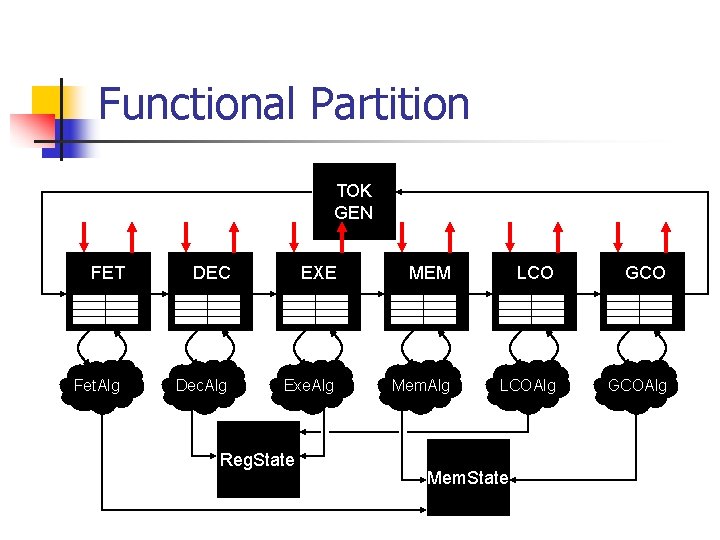

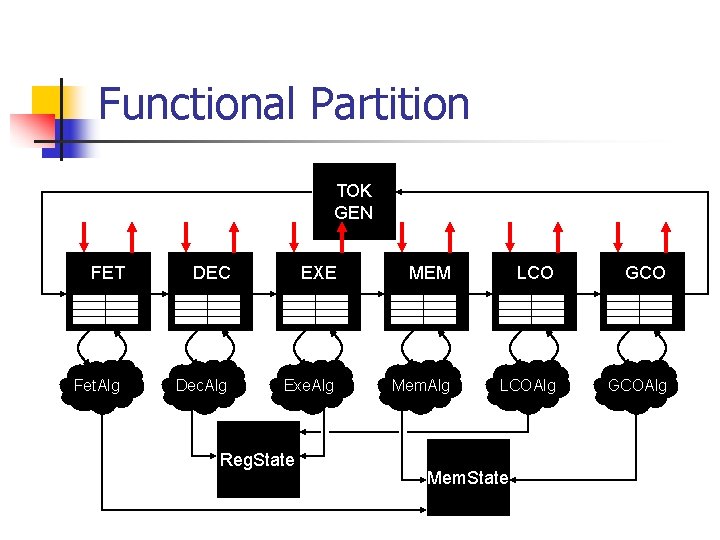
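
The split can be illustrated with a toy Python sketch. The toy ISA, the `FunctionalPartition` and `TimingPartition` classes and the `LATENCY` table are hypothetical, not HAsim's interface. The timing side sends one request per instruction and charges model cycles from its own latency table; the functional side only computes correct results and knows nothing about time.

```python
from dataclasses import dataclass, field

# Hypothetical toy ISA: each instruction is (opcode, dest, src1, src2).
# The functional partition computes correct results; it has no notion of time.
@dataclass
class FunctionalPartition:
    regs: list = field(default_factory=lambda: [0] * 8)

    def execute(self, inst):
        op, rd, rs, rt = inst
        if op == "add":
            self.regs[rd] = self.regs[rs] + self.regs[rt]
        elif op == "mul":
            self.regs[rd] = self.regs[rs] * self.regs[rt]
        elif op == "li":  # load immediate: the src1 slot holds the constant
            self.regs[rd] = rs
        return self.regs[rd]

# Hypothetical latency table: only the timing partition decides how long things take.
LATENCY = {"li": 1, "add": 1, "mul": 4}

class TimingPartition:
    """Single-issue, in-order toy timing model that requests work from the
    functional partition and charges model cycles for it."""

    def __init__(self, functional):
        self.functional = functional
        self.model_cycles = 0

    def run(self, program):
        for inst in program:
            self.functional.execute(inst)           # request -> response
            self.model_cycles += LATENCY[inst[0]]   # time is modelled only here
        return self.model_cycles
```

Changing `LATENCY` changes the cycle count but never the register values, which is the point of keeping the two partitions separate.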

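Functional Partition

Figure (functional partition). Operation stages: TOK GEN (token generation), FET (fetch), DEC (decode), EXE (execute), MEM (memory), LCO (local commit), GCO (global commit). Per-stage algorithms: Fet. Alg, Dec. Alg, Exe. Alg, Mem. Alg, LCO Alg, GCO Alg. State: Reg. State, Mem. State.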
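
A toy Python sketch of the stage ordering in the figure, assuming every token must visit the stages strictly in the order listed. `Stage`, `advance` and the dictionaries standing in for Reg. State and Mem. State are hypothetical names; the per-stage algorithms themselves are omitted.

```python
from enum import IntEnum

class Stage(IntEnum):
    TOK_GEN = 0
    FET = 1
    DEC = 2
    EXE = 3
    MEM = 4
    LCO = 5
    GCO = 6

class FunctionalPartition:
    """Toy functional partition that enforces the per-token stage order."""

    def __init__(self):
        self.next_token = 0
        self.stage_of = {}   # live token -> last stage it completed
        self.reg_state = {}  # stands in for Reg. State (unused in this sketch)
        self.mem_state = {}  # stands in for Mem. State (unused in this sketch)

    def tok_gen(self):
        """TOK GEN: allocate a fresh token for a new instruction."""
        tok = self.next_token
        self.next_token += 1
        self.stage_of[tok] = Stage.TOK_GEN
        return tok

    def advance(self, tok, stage):
        """Run `stage` for `tok`. The stage's algorithm is omitted; only the
        ordering is checked. GCO retires the token."""
        expected = Stage(self.stage_of[tok] + 1)
        if stage != expected:
            raise ValueError(f"token {tok}: expected {expected.name}, got {stage.name}")
        if stage == Stage.GCO:
            del self.stage_of[tok]
        else:
            self.stage_of[tok] = stage
```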

Model cycle vs. FPGA cycle
- The functional simulator can take any number of FPGA cycles for an operation
- So there must be an explicit mechanism to track the ticks of the processor being modelled
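
A minimal sketch of keeping the two clocks apart, assuming a hypothetical `fpga_latency` function that returns the (variable) FPGA cost of one functional-partition operation. The model-cycle counter advances once per modelled tick, regardless of how many FPGA cycles that tick consumed.

```python
import random

def simulate(num_model_cycles, ops_per_cycle, fpga_latency, seed=0):
    """Count FPGA cycles and model cycles separately.

    fpga_latency(rng) -> FPGA cycles taken by one functional-partition
    operation (a hypothetical, possibly variable cost). A model cycle
    only ends once all of its operations have completed."""
    rng = random.Random(seed)
    fpga_cycles = 0
    model_cycles = 0
    for _ in range(num_model_cycles):
        for _ in range(ops_per_cycle):
            fpga_cycles += fpga_latency(rng)
        model_cycles += 1  # explicit tick of the modelled processor
    return model_cycles, fpga_cycles
```

For example, `simulate(7, 4, lambda rng: 3)` returns `(7, 84)`: seven model cycles, but 84 FPGA cycles.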

APorts: monitoring ticks
- The modules in the timing partition are connected to each other using APorts
- A clock tick conceptually begins when a module has read from every input APort and ends when it has written to every output APort
- But the tick is localized to each port
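
A minimal Python sketch of this tick rule, loosely modelled on the A-Port idea. Assumptions: each port is a FIFO pre-filled with `latency` empty messages (`NO_MESSAGE`), and a module's behaviour returns exactly one message per output port. `APort`, `Module` and `try_tick` are hypothetical names, not HAsim's interfaces.

```python
from collections import deque

NO_MESSAGE = None  # hypothetical placeholder: "nothing sent this model cycle"

class APort:
    """FIFO port with a fixed model latency (in model cycles). Pre-filled with
    `latency` empty messages, so a value written in model cycle t is read in
    model cycle t + latency."""

    def __init__(self, latency=1):
        self.fifo = deque([NO_MESSAGE] * latency)

    def can_read(self):
        return len(self.fifo) > 0

    def write(self, msg):
        self.fifo.append(msg)

    def read(self):
        return self.fifo.popleft()

class Module:
    """Timing-partition module: a tick begins once every input port has a
    message and ends after the module writes to every output port."""

    def __init__(self, name, inputs, outputs, behaviour):
        self.name = name
        self.inputs = inputs
        self.outputs = outputs
        self.behaviour = behaviour  # function(list of inputs) -> list of outputs
        self.ticks = 0              # this module's own model clock

    def try_tick(self):
        if not all(p.can_read() for p in self.inputs):
            return False            # cannot start this model cycle yet
        msgs = [p.read() for p in self.inputs]
        for port, out in zip(self.outputs, self.behaviour(msgs)):
            port.write(out)
        self.ticks += 1
        return True
```

Each module checks only its own ports and keeps its own `ticks` counter, so no global clock is needed; with a latency-2 port between a producer and a consumer, the consumer reads `NO_MESSAGE` for its first two ticks and then the producer's values in order.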

MIPS R10K specs
- 64-bit processor
- Out-of-order execution
- Superscalar
  - Fetch width: 4
  - Commit width: 4
  - 2 ALUs
  - 1 load/store unit
  - 1 FPU
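
These parameters can be captured as a configuration, and the unit counts used to model structural hazards. The sketch below assumes a hypothetical three-way instruction classification (`int`, `mem`, `fp`) and oldest-first selection among ready instructions; neither is specified on the slide.

```python
from collections import Counter
from dataclasses import dataclass

@dataclass(frozen=True)
class CoreConfig:
    """R10K-style parameters from the slide."""
    fetch_width: int = 4
    commit_width: int = 4
    alus: int = 2
    load_store_units: int = 1
    fpus: int = 1

# Hypothetical mapping from instruction class to the CoreConfig field that
# counts the units able to execute it.
UNIT_FOR = {"int": "alus", "mem": "load_store_units", "fp": "fpus"}

def select_for_execution(ready, cfg):
    """Given ready (name, class) pairs oldest first, return the names that can
    start this model cycle without using more units of a kind than exist."""
    used = Counter()
    chosen = []
    for name, inst_class in ready:
        unit = UNIT_FOR[inst_class]
        if used[unit] < getattr(cfg, unit):
            used[unit] += 1
            chosen.append(name)
    return chosen
```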

Timing model design
- The functional partition operates on only one instruction at a time
- But the timing model time-multiplexes multiple operations, so it can operate on more than one instruction per model cycle

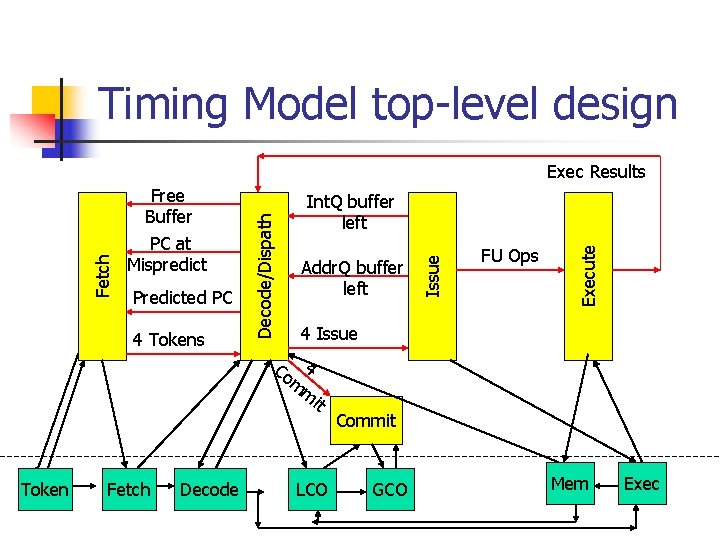

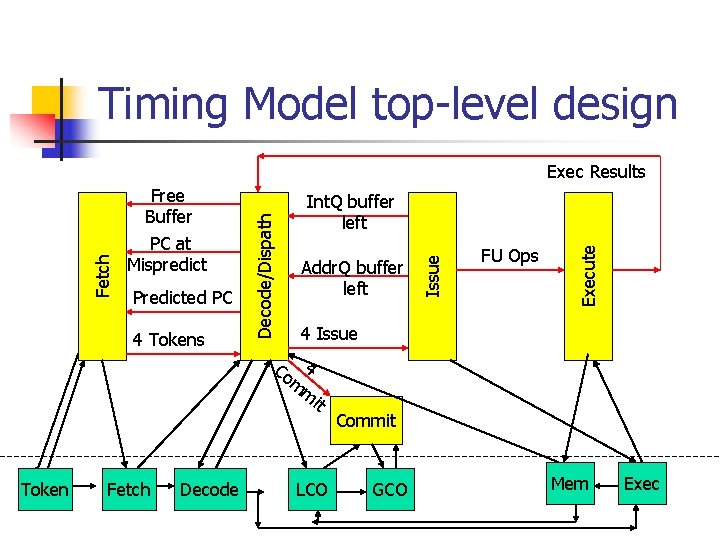
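
A sketch of that time-multiplexing for a 4-wide fetch, assuming a hypothetical functional partition that serves one fetch per call at a fixed cost of 3 FPGA cycles (`OneAtATimeFunctional` and `fetch_model_cycle` are illustrative names). Four sequential requests make up a single model cycle.

```python
class OneAtATimeFunctional:
    """Stand-in functional partition: serves one fetch request per call and
    charges a hypothetical fixed number of FPGA cycles for it."""
    FPGA_CYCLES_PER_REQUEST = 3

    def __init__(self):
        self.fpga_cycles = 0

    def fetch(self, pc):
        self.fpga_cycles += self.FPGA_CYCLES_PER_REQUEST
        return f"inst@{pc:#x}"

def fetch_model_cycle(functional, pc, width=4):
    """One model cycle of a `width`-wide fetch. The timing model issues
    `width` sequential requests, so the modelled processor sees `width`
    instructions in one model cycle, while the FPGA spends several cycles."""
    bundle = []
    for i in range(width):
        bundle.append(functional.fetch(pc + 4 * i))
    return bundle, pc + 4 * width
```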

Timing Model top-level design

Figure (top-level block diagram). Timing modules: Fetch, Decode/Dispatch, Issue, Exec, Mem Exec, Commit. Other labels: Token, Decode, Execute, FU Ops, Exec Results, LCO, GCO, Predicted PC, PC at Mispredict, Int. Q buffer left, Addr. Q buffer left, Free Buffer, 4 Tokens, 4 Issue, 4 Commit.

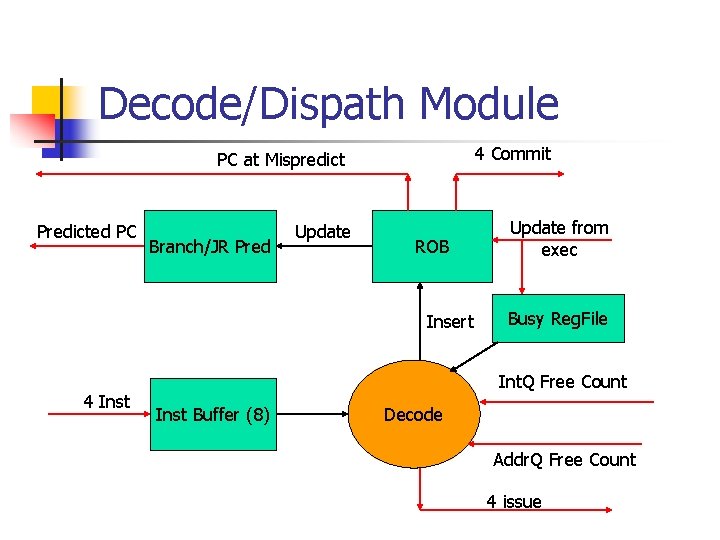

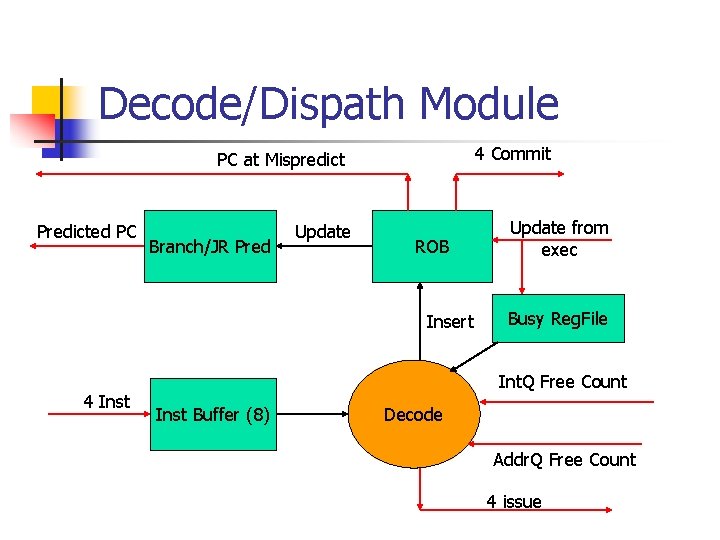

Decode/Dispatch Module

Figure (module internals). Blocks: Inst Buffer (8 entries), Decode, Busy Reg. File, Branch/JR Pred, ROB Insert. Other labels: 4 Inst, 4 issue, 4 Commit, Int. Q Free Count, Addr. Q Free Count, Update from exec, Update, Predicted PC, PC at Mispredict.

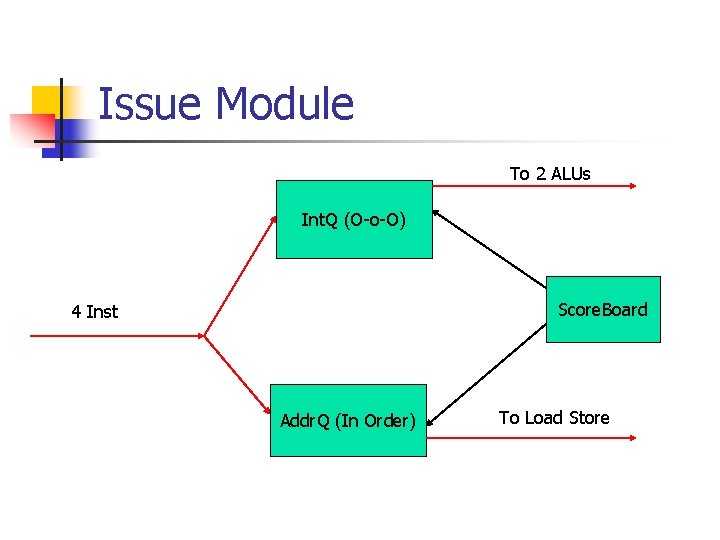

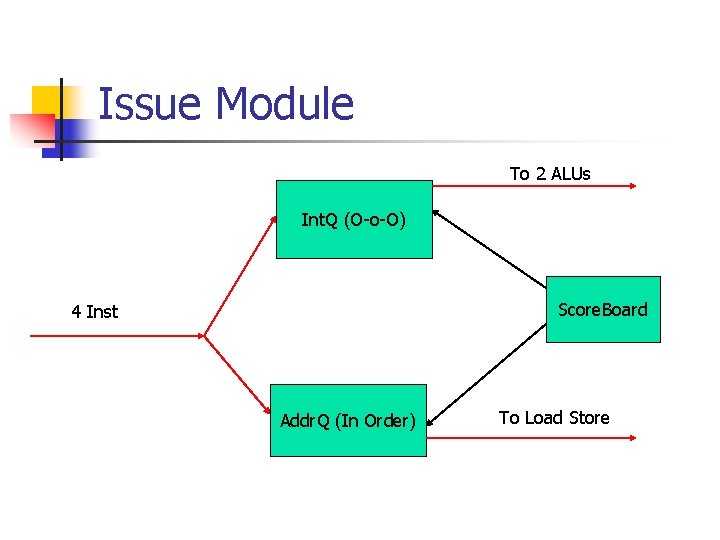
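
A sketch of one model cycle of dispatch, under assumptions not stated on the slide: dispatch is in program order and stops at the first instruction whose queue is full; memory ops (the hypothetical `MEM_OPS` set) go to the Addr. Q and everything else, including JR/JALR, to the Int. Q; and a Python set stands in for the Busy Reg. File. The 8-entry buffer and the two free counts come from the figure; all function names are hypothetical.

```python
from collections import deque

INST_BUFFER_ENTRIES = 8    # "Inst Buffer (8)" in the figure
MEM_OPS = {"lw", "sw"}     # hypothetical: opcodes routed to the Addr. Q

def accept_from_fetch(inst_buffer, insts):
    """Append as many fetched instructions as fit; return how many fit."""
    room = INST_BUFFER_ENTRIES - len(inst_buffer)
    taken = insts[:room]
    inst_buffer.extend(taken)
    return len(taken)

def dispatch(inst_buffer, busy, int_q_free, addr_q_free, width=4):
    """One model cycle of in-order dispatch of up to `width` instructions.
    Instructions are (opcode, dest) pairs, dest None if nothing is written.
    Stops at the first instruction whose queue has no free entry.
    Returns a list of (opcode, dest, queue) tuples."""
    sent = []
    while inst_buffer and len(sent) < width:
        op, dest = inst_buffer[0]
        if op in MEM_OPS:
            if addr_q_free == 0:
                break
            addr_q_free -= 1
            queue = "addr"
        else:               # ALU ops, and JR/JALR in this model
            if int_q_free == 0:
                break
            int_q_free -= 1
            queue = "int"
        inst_buffer.popleft()
        if dest is not None:
            busy.add(dest)  # Busy Reg. File; clearing on exec updates not shown
        sent.append((op, dest, queue))
    return sent
```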

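Issue Module

Figure (module internals). 4 Inst enter the module. The Int. Q (out-of-order) feeds the 2 ALUs; the Addr. Q (in-order) feeds the Load/Store unit; a Scoreboard sits alongside the queues.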

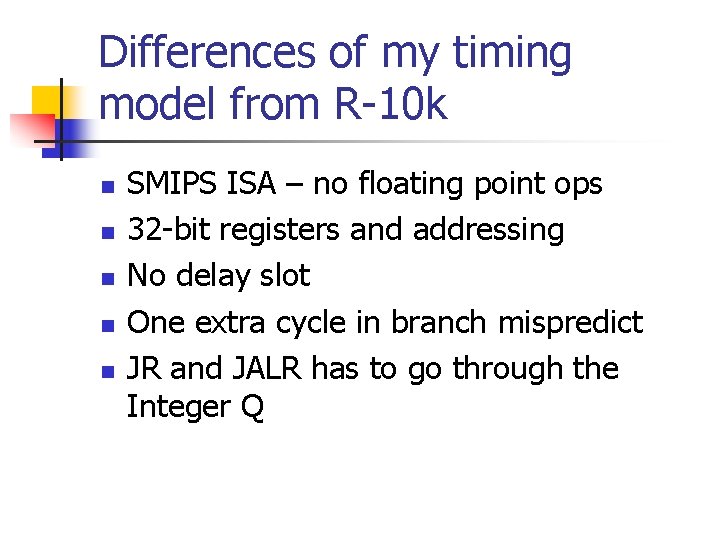

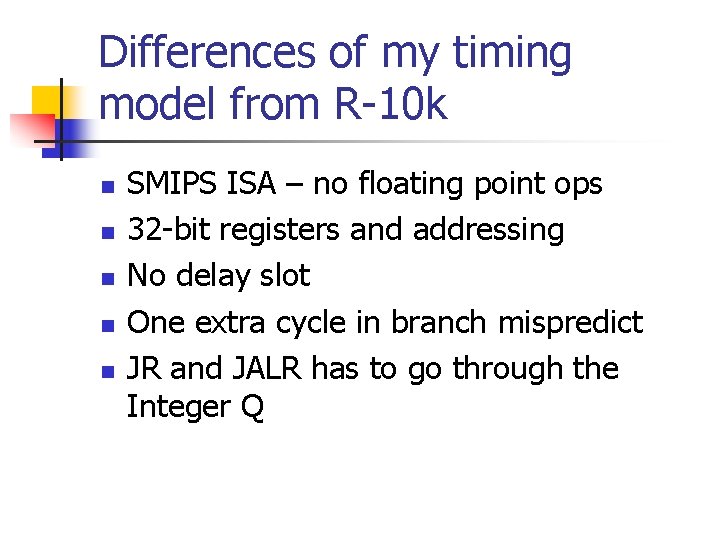
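
A sketch of the two issue policies in the figure, with a set of busy registers standing in for the scoreboard. The oldest-first choice among ready Int. Q entries and the `(name, srcs)` entry format are assumptions; the 2-ALU limit, the out-of-order Int. Q and the in-order Addr. Q come from the slide.

```python
def issue(int_q, addr_q, busy, alus=2):
    """One model cycle of issue.

    int_q:  list of (name, srcs) in age order; any ready entry may issue
            (out of order), up to `alus` per cycle.
    addr_q: list of (name, srcs); only the head may issue (in order) to the
            single load/store unit.
    busy:   set of registers still waiting for a result (the scoreboard).
    Issued entries are removed from their queues. Returns (to_alus, to_lsu)."""
    def ready(srcs):
        return not any(r in busy for r in srcs)

    to_alus = []
    for entry in list(int_q):           # oldest first among ready entries
        if len(to_alus) == alus:
            break
        if ready(entry[1]):
            to_alus.append(entry[0])
            int_q.remove(entry)

    to_lsu = []
    if addr_q and ready(addr_q[0][1]):  # in order: only the head may go
        to_lsu.append(addr_q.pop(0)[0])
    return to_alus, to_lsu
```

When the head of the Addr. Q is waiting on a busy register, a store behind it cannot issue even if its own operands are ready; that is the cost of keeping the Addr. Q in order.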

Differences between my timing model and the R10K
- SMIPS ISA: no floating-point ops
- 32-bit registers and addressing
- No delay slot
- One extra cycle on a branch mispredict
- JR and JALR have to go through the integer queue

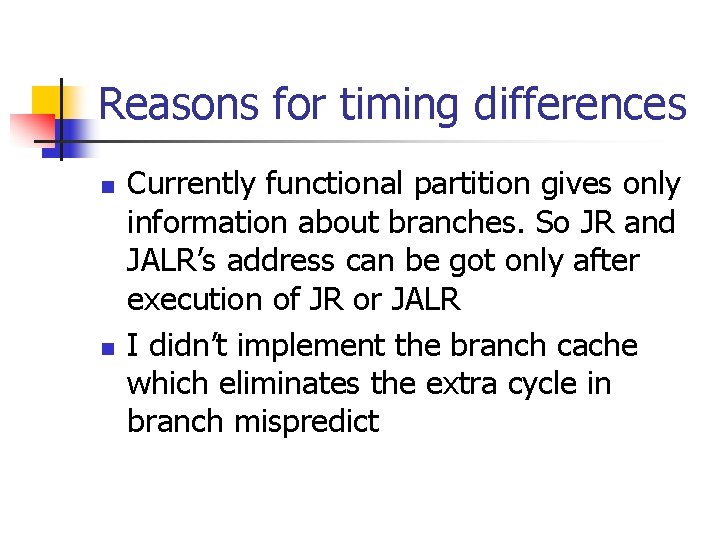

Reasons for timing differences
- Currently the functional partition gives information only about branches, so the target address of a JR or JALR can be obtained only after that JR or JALR executes
- I didn't implement the branch cache, which eliminates the extra cycle on a branch mispredict

Simulation results
- Simulated the SMIPSv2 ADDIU test case
- It took 239 FPGA cycles to simulate 7 model cycles
- This number needs investigation: the expected bottleneck is the instruction queue, which takes 21 FPGA cycles per model cycle, i.e. 7 × 21 = 147 FPGA cycles
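
The arithmetic behind the concern, using only the figures above and assuming the 21-cycle instruction-queue cost were the only per-model-cycle cost:

```latex
\begin{aligned}
\text{observed FPGA cycles per model cycle} &= \tfrac{239}{7} \approx 34.1 \\
\text{expected from the instruction queue alone} &= 7 \times 21 = 147 \\
\text{unexplained FPGA cycles} &= 239 - 147 = 92
\end{aligned}
```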

Miscellaneous
- Lines of code for the timing model: ~1300
- Compared with ~1200 for the simple SMIPS processor in Lab 2, excluding caches