Time-critical condition data handling in the CMS experiment

- Slides: 28

Time-critical condition data handling in the CMS experiment during the first data-taking period

CHEP 2010 (Software Engineering, Data Stores, and Databases), Tuesday 19 October, Taipei

Salvatore Di Guida (CERN), Francesca Cavallari (INFN Roma), Giacomo Govi (Fermilab), Vincenzo Innocente (CERN), Giuseppe Antonio Pierro (University and INFN Bari), on behalf of the CMS experiment

Outline

- Condition data
- Condition Database design
- Condition Database population
  - PopCon application
  - Offline dropbox
- Condition Database real-time monitoring
  - PopCon web-based monitoring
  - Advanced web technologies

10/19/10 Salvatore di Guida

Condition DB during data taking

- The whole infrastructure was intensively developed and tested during the 2008 cosmic-ray data taking.
- It was deployed for collision data taking in November 2009.
- It is now running smoothly for collision data taking at 7 TeV: the data are stored by our tools and accessed by reconstruction applications.
  - We also provide 24/7 coverage by experts.

Data types in condition DB

- Proper reconstruction of collision events makes use of "secondary data" accessed during the execution of reconstruction applications:
  - these data are labeled condition data; they do not come from collision events.
- These data must be stored so that reconstruction applications can access them:
  - CMS stores them in an Oracle RDBMS.

What are condition data?

- Construction data;
- Equipment management data: history of all items installed at CMS:
  - detector parts,
  - off-detector electronics;
- Configuration data: needed to bring the detector into running mode;
- Detector condition data: describe the state of the detector:
  - data quality indicators (bad channels…),
  - sub-detector settings (pedestals…);
- Calibration data: needed to calculate the physics quantities from raw data;
- Alignment data: needed to retrieve the exact position of each sub-detector inside CMS.

All these data (except construction data) can be grouped by a version and by the time range (interval of validity, IOV) in which they are valid.

Condition DB structure (I)

- Time-dependent condition data are stored together with their interval of validity (IOV):
  - an IOV is the contiguous (in time) set of collision events for which a given set of non-event data must be used in the reconstruction of those events.
- IOV sequences are labeled by tags (IOV tags):
  - an IOV tag is a human-readable string describing the version of the set of non-event data associated with the IOV sequence.
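To make the IOV lookup concrete, here is a minimal Python sketch, assuming IOVs are expressed as run ranges: each IOV is stored by its first valid run (called `since` here, a name chosen for this sketch) and stays valid until the next one begins. The class `IOVSequence`, the tag `EcalPedestals_v1` and the payload strings are hypothetical; real payloads are C++ objects stored in Oracle.

```python
from bisect import bisect_right
from dataclasses import dataclass, field


@dataclass
class IOVSequence:
    """Hypothetical in-memory model of one IOV sequence labelled by a tag.

    Each IOV is identified by its first valid run ("since") and stays valid
    until the next IOV starts, so the sequence is kept sorted by since.
    """
    tag: str
    sinces: list = field(default_factory=list)    # first run of each IOV
    payloads: list = field(default_factory=list)  # payload valid from that run

    def payload_for_run(self, run: int):
        """Return the payload whose IOV contains `run`."""
        # Index of the last IOV whose since <= run.
        i = bisect_right(self.sinces, run) - 1
        if i < 0:
            raise LookupError(f"run {run} precedes the first IOV of tag {self.tag!r}")
        return self.payloads[i]


seq = IOVSequence("EcalPedestals_v1", sinces=[1, 120000, 135000],
                  payloads=["peds_A", "peds_B", "peds_C"])
print(seq.payload_for_run(130000))
```

Because IOVs are contiguous, storing only the start of each interval is enough: the end is implied by the next start, and a binary search finds the right payload.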

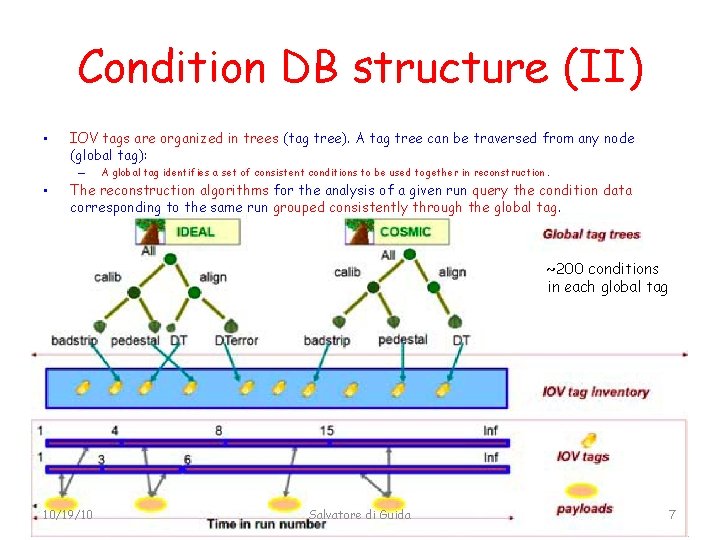

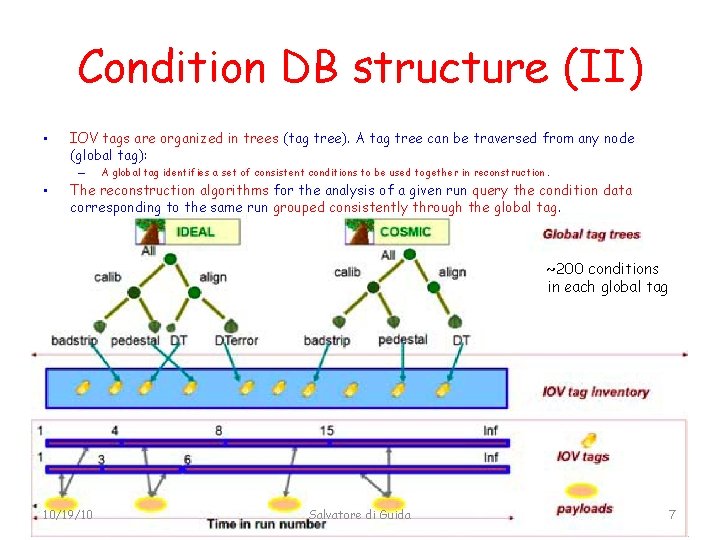

Condition DB structure (II)

- IOV tags are organized in trees (tag trees). A tag tree can be traversed starting from any node (a global tag):
  - a global tag identifies a set of consistent conditions to be used together in reconstruction.

The reconstruction algorithms for the analysis of a given run query the condition data corresponding to that run, grouped consistently through the global tag. Each global tag contains ~200 conditions.
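A minimal sketch of how a global tag groups conditions: here the tag tree is flattened into a dictionary mapping each condition record to one IOV tag, and resolving a run means looking up the payload valid for that run in every tag. All record names, tag names and the global tag name are hypothetical, and the flattening is a simplification of the tree described above.

```python
from bisect import bisect_right

# Hypothetical IOV tags: each maps to (since_run, payload) pairs sorted by since.
TAGS = {
    "EcalPedestals_v1": [(1, "peds_A"), (120000, "peds_B")],
    "TrackerAlignment_v3": [(1, "align_A"), (125000, "align_B")],
}

# Hypothetical global tag: one IOV tag per condition record.
GLOBAL_TAG = {
    "name": "GR_EXAMPLE_V1",
    "records": {
        "EcalPedestalsRcd": "EcalPedestals_v1",
        "TrackerAlignmentRcd": "TrackerAlignment_v3",
    },
}


def conditions_for_run(global_tag, tags, run):
    """Resolve every record of a global tag to the payload valid for `run`."""
    resolved = {}
    for record, tag in global_tag["records"].items():
        iovs = tags[tag]
        sinces = [since for since, _ in iovs]
        i = bisect_right(sinces, run) - 1  # last IOV starting at or before run
        if i < 0:
            raise LookupError(f"no IOV of {tag} covers run {run}")
        resolved[record] = iovs[i][1]
    return resolved


print(conditions_for_run(GLOBAL_TAG, TAGS, 130000))
```

The point of the design is consistency: every record is resolved through the same global tag for the same run, so reconstruction never mixes conditions from incompatible versions.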

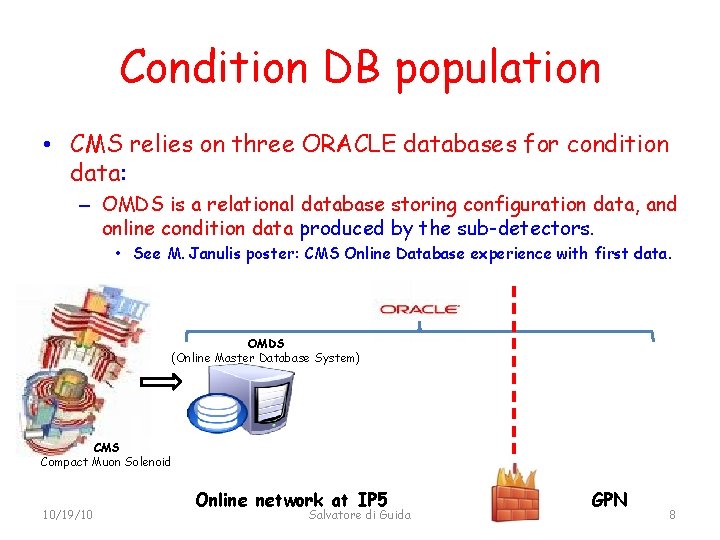

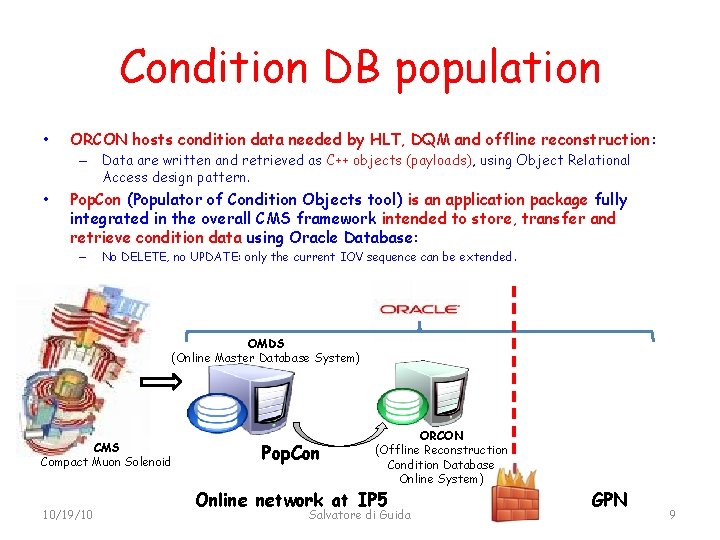

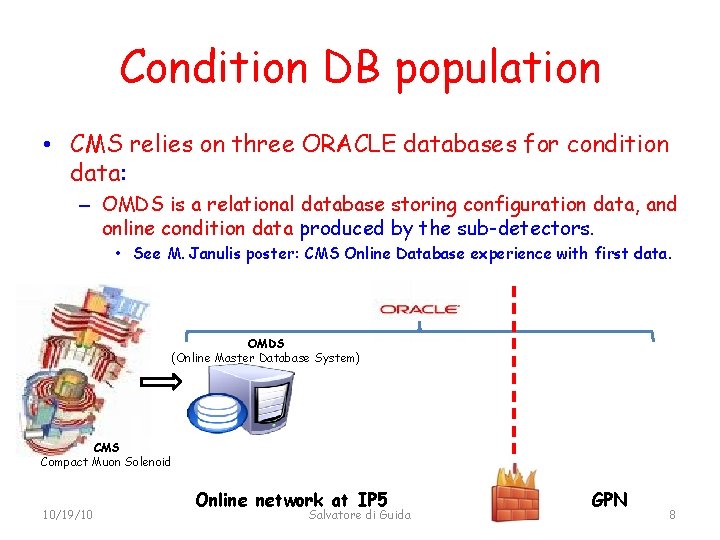

Condition DB population

- CMS relies on three Oracle databases for condition data:
  - OMDS (Online Master Database System) is a relational database storing configuration data and online condition data produced by the sub-detectors.
    - See M. Janulis poster: CMS Online Database experience with first data.

[Diagram: OMDS inside the CMS online network at IP5, separate from the CERN General Purpose Network (GPN).]

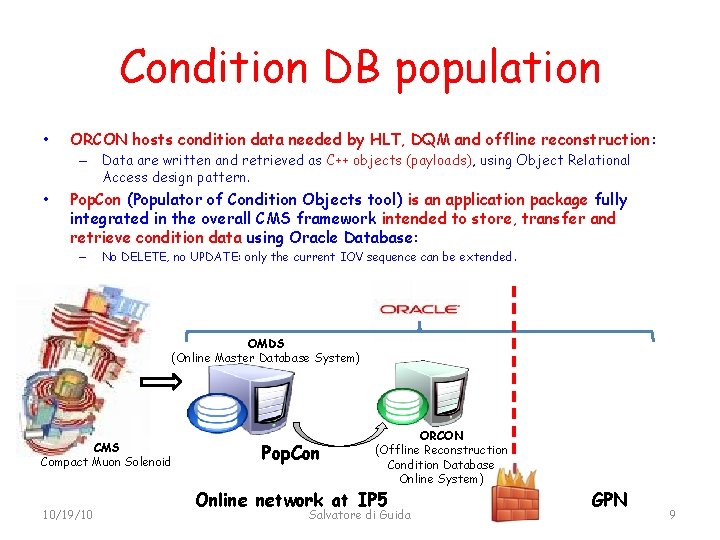

Condition DB population

- ORCON (Offline Reconstruction Condition Database Online System) hosts the condition data needed by HLT, DQM and offline reconstruction:
  - data are written and retrieved as C++ objects (payloads), using the Object Relational Access design pattern.
- PopCon (Populator of Condition Objects tool) is an application package fully integrated in the overall CMS framework, intended to store, transfer and retrieve condition data using the Oracle database:
  - no DELETE, no UPDATE: only the current IOV sequence can be extended.

[Diagram: PopCon transfers data from OMDS to ORCON inside the CMS online network at IP5; the GPN is shown outside.]
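The write rule stated above (no DELETE, no UPDATE, only extension of the current IOV sequence) can be illustrated with a minimal Python class. `AppendOnlyIOVSequence`, the tag name and the run numbers are hypothetical; in the real system the rule is enforced by PopCon against Oracle, not by an in-memory object.

```python
class AppendOnlyIOVSequence:
    """Hypothetical append-only IOV sequence.

    There are deliberately no delete or update methods: the only allowed
    write is appending a new IOV that starts after the last one.
    """

    def __init__(self, tag):
        self.tag = tag
        self._iovs = []  # (since, payload) pairs with strictly increasing since

    def append(self, since, payload):
        """Extend the sequence; reject anything that would rewrite history."""
        if self._iovs and since <= self._iovs[-1][0]:
            raise ValueError(
                f"tag {self.tag!r}: since={since} must be greater than "
                f"the last since={self._iovs[-1][0]}"
            )
        self._iovs.append((since, payload))

    def iovs(self):
        # Return a copy so callers cannot modify stored IOVs in place.
        return list(self._iovs)


seq = AppendOnlyIOVSequence("L1TriggerMenu_example")
seq.append(1, "menu_v1")
seq.append(133000, "menu_v2")
try:
    seq.append(132000, "menu_late")  # would reopen a closed interval
except ValueError as err:
    print(err)
```

Refusing non-increasing `since` values means an IOV already used for reconstruction can never change, which keeps past results reproducible.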

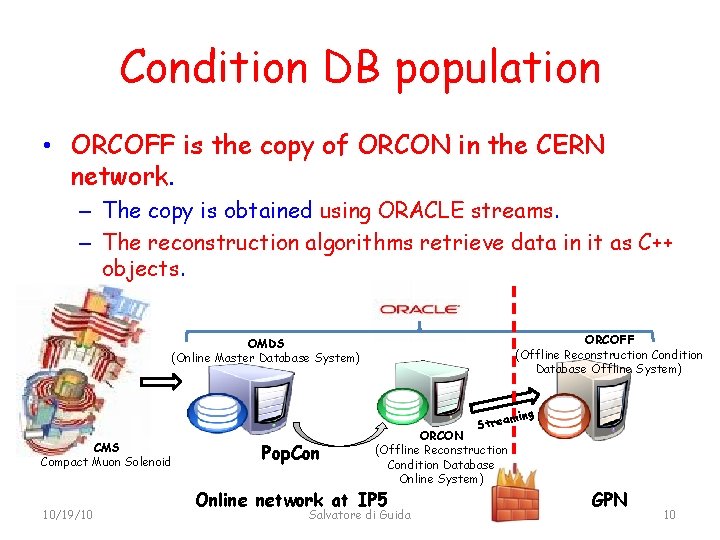

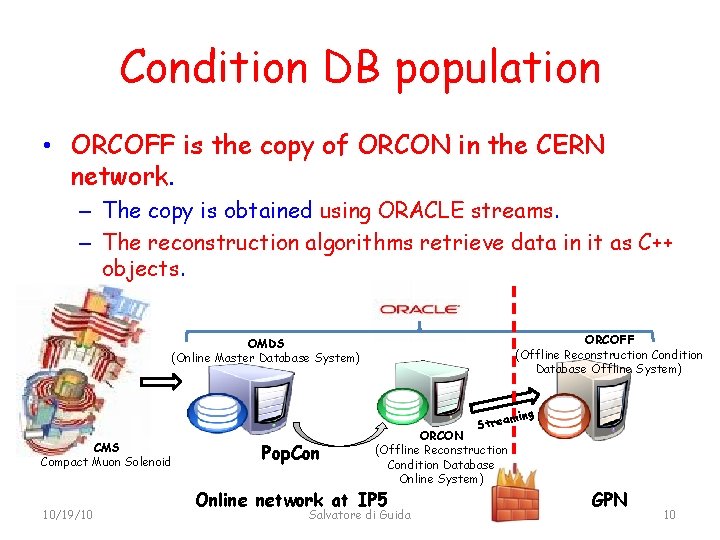

Condition DB population

- ORCOFF (Offline Reconstruction Condition Database Offline System) is the copy of ORCON in the CERN network:
  - the copy is obtained using Oracle Streams;
  - the reconstruction algorithms retrieve data from it as C++ objects.

[Diagram: OMDS → PopCon → ORCON in the online network at IP5; ORCON is streamed to ORCOFF in the GPN.]

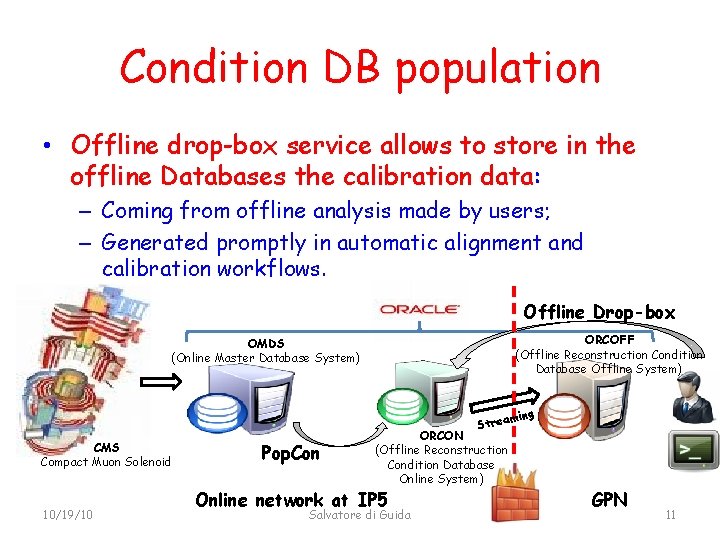

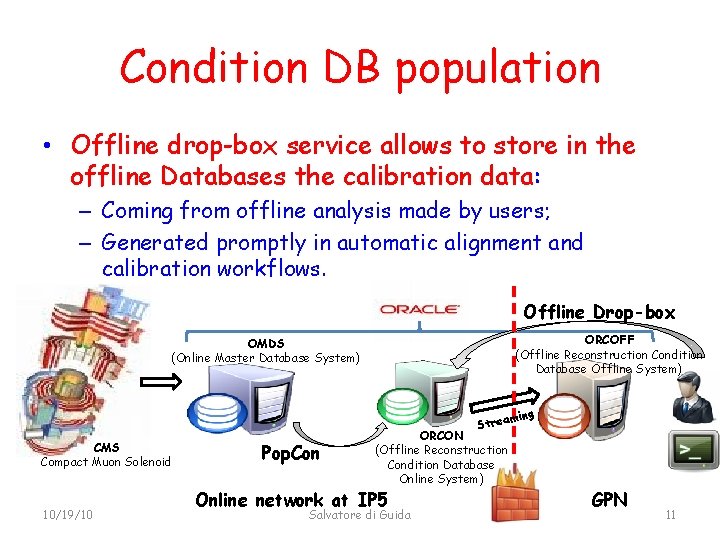

Condition DB population

- The offline drop-box service allows calibration data to be stored in the offline databases when they are:
  - coming from offline analysis made by users;
  - generated promptly in automatic alignment and calibration workflows.

[Diagram: OMDS → PopCon → ORCON (online network at IP5), streamed to ORCOFF (GPN), with the offline drop-box as an additional input.]

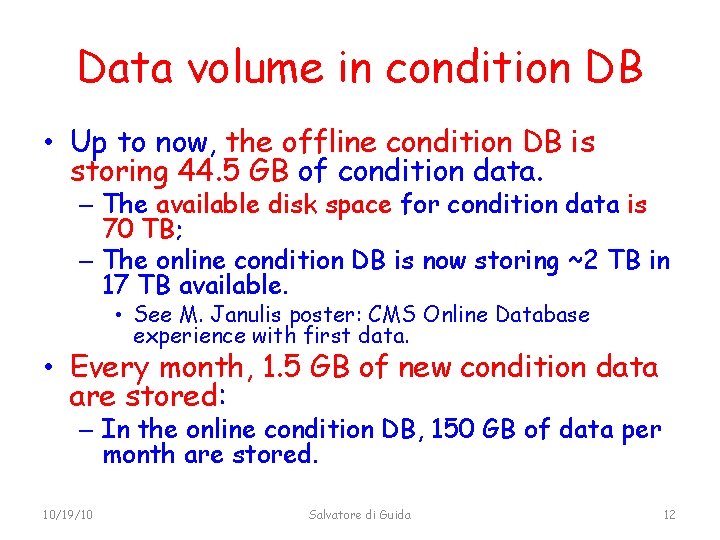

Data volume in condition DB

- Up to now, the offline condition DB stores 44.5 GB of condition data:
  - the available disk space for condition data is 70 TB;
  - the online condition DB now stores ~2 TB out of 17 TB available.
    - See M. Janulis poster: CMS Online Database experience with first data.
- Every month, 1.5 GB of new condition data are stored:
  - in the online condition DB, 150 GB of data per month are stored.

Time-critical conditions

- L1 Trigger: after run configuration, some important parameters must be stored:
  - global trigger menu and masks;
  - hardware configuration parameters for muon triggers, calorimetric triggers and global trigger:
    - conversion of ADC values to ET and pT,
    - channel masks,
    - alignment constants,
    - parameters controlling firmware algorithms.
- These parameters are needed by:
  - the High Level Trigger (HLT), in order to choose the correct paths;
  - the online Data Quality Monitor (DQM), in order to run the L1 emulator:
    - the emulated output can then be compared meaningfully with real data.

These configurations must therefore be stored reliably and quickly, since they are crucial for starting data taking correctly.

Time-critical conditions

- Run Summary: as soon as the run starts, some important parameters must be made available:
  - FEDs (Front-End Drivers) involved in the run;
  - magnet current in the solenoid, used to calculate the magnetic field.
- These parameters are needed by:
  - HLT, to find good particle candidates;
  - online DQM, to know which modules are included in the data taking;
  - reconstruction algorithms, to fit particle tracks correctly.

These basic detector conditions must be stored consistently and retrieved efficiently in order to obtain meaningful data.

Central population of condition databases

- Centralized account in the online network:
  - deploy a set of automatic jobs for each sub-detector;
  - populate ORCON accounts;
  - monitor all transactions towards them.

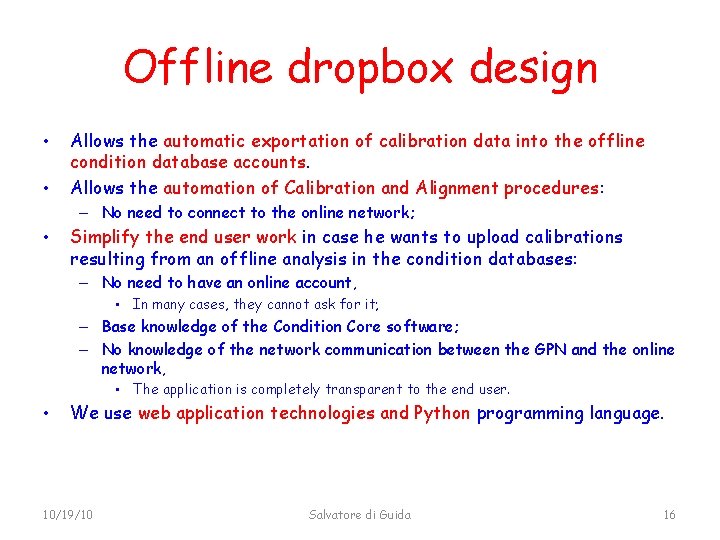

Offline dropbox design

- Allows the automatic export of calibration data into the offline condition database accounts.
- Allows the automation of calibration and alignment procedures:
  - no need to connect to the online network.
- Simplifies the work of end users who want to upload calibrations resulting from an offline analysis to the condition databases:
  - no need for an online account,
    - in many cases, they cannot obtain one;
  - only basic knowledge of the Condition Core software is required;
  - no knowledge of the network communication between the GPN and the online network is required,
    - the application is completely transparent to the end user.
- We use web application technologies and the Python programming language.

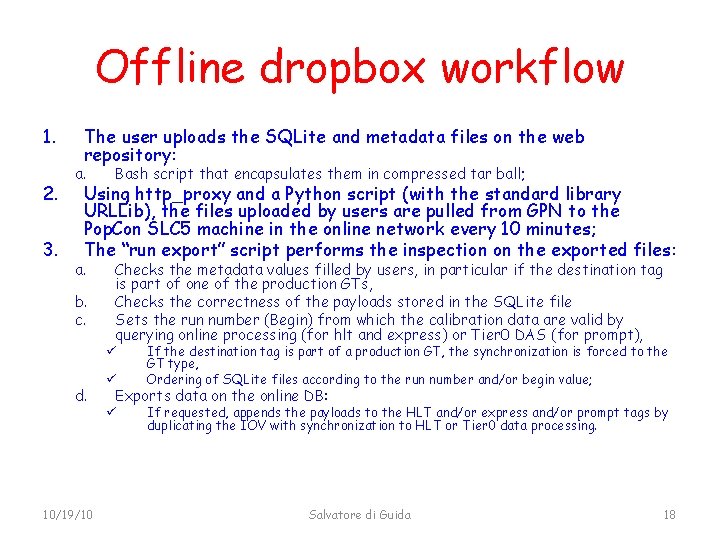

Offline dropbox workflow

[Diagram: offline dropbox workflow. Components: web repository, HTTP proxy, backend (crontab runs every 10 minutes), ORCON, ORCOFF (via Oracle Streaming) and Tier 0 DAS, spanning the offline and online networks. Arrow labels: secure copy (SCP); set the run number from which the calibration data are valid.]

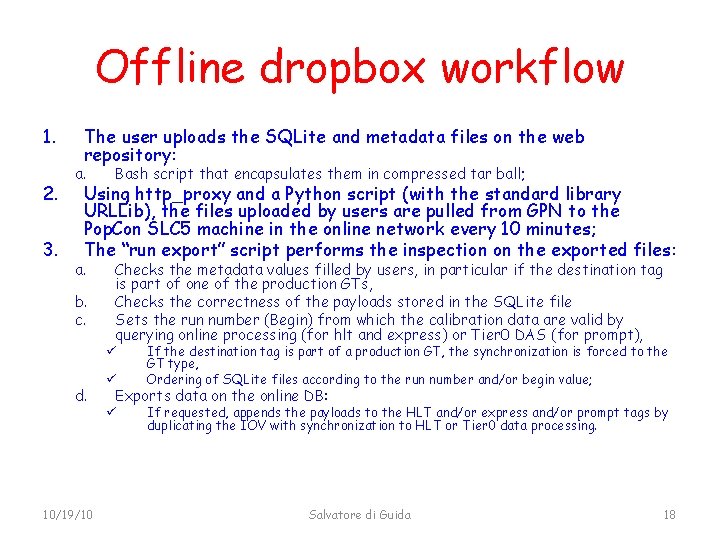

Offline dropbox workflow

1. The user uploads the SQLite and metadata files to the web repository:
   - a Bash script encapsulates them in a compressed tarball.
2. Using the HTTP proxy and a Python script (with the standard library urllib), the files uploaded by users are pulled from the GPN to the PopCon SLC5 machine in the online network every 10 minutes.
3. The "run export" script inspects the exported files:
   - checks the metadata values filled in by users, in particular whether the destination tag is part of one of the production GTs:
     - if the destination tag is part of a production GT, the synchronization is forced to the GT type;
   - checks the correctness of the payloads stored in the SQLite file;
   - sets the run number (begin) from which the calibration data are valid, by querying online processing (for HLT and express) or Tier 0 DAS (for prompt);
   - orders the SQLite files according to the run number and/or begin value;
   - exports the data to the online DB:
     - if requested, appends the payloads to the HLT and/or express and/or prompt tags by duplicating the IOV with synchronization to HLT or Tier 0 data processing.
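Two of the "run export" checks can be sketched in Python. This is a minimal illustration under stated assumptions, not the CMS implementation: the metadata keys (`file`, `destination_tag`, `since`, `sync`), the tag names and the `PRODUCTION_GT_TAGS` table are hypothetical, and the payload-correctness check and the run-number query to online processing or Tier 0 DAS are omitted. It shows the rule that a destination tag in a production GT forces the synchronization to that GT type, and the ordering of uploads by begin value.

```python
# Hypothetical destination tags that belong to production global tags,
# mapped to the GT type that then drives the synchronization.
PRODUCTION_GT_TAGS = {
    "BeamSpotObjects_hlt": "hlt",
    "BeamSpotObjects_express": "express",
    "SiStripBadChannel_prompt": "prompt",
}

# Hypothetical metadata keys every upload must provide.
REQUIRED_KEYS = ("file", "destination_tag", "since")


def inspect_upload(metadata):
    """Validate one upload's metadata and apply the GT synchronization rule.

    Returns a new dict; the caller's metadata is left untouched.
    """
    missing = [key for key in REQUIRED_KEYS if key not in metadata]
    if missing:
        raise ValueError(f"{metadata.get('file', '?')}: missing metadata {missing}")
    checked = dict(metadata)
    gt_type = PRODUCTION_GT_TAGS.get(checked["destination_tag"])
    if gt_type is not None:
        # The destination tag is in a production GT: force the sync to its type.
        checked["sync"] = gt_type
    return checked


def order_uploads(uploads):
    """Inspect every upload, then order them by their begin (since) value."""
    return sorted((inspect_upload(u) for u in uploads), key=lambda u: u["since"])


uploads = [
    {"file": "b.db", "destination_tag": "BeamSpotObjects_express",
     "since": 140100, "sync": "offline"},
    {"file": "a.db", "destination_tag": "MyPrivateTag",
     "since": 140050, "sync": "offline"},
]
for upload in order_uploads(uploads):
    print(upload["file"], upload["sync"])
```

Ordering before export matters because IOV sequences can only be extended: appending a later begin value first would make the earlier upload impossible to store.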

Offline dropbox workflow

- Such a complex infrastructure was needed to meet the requirements of the online system administrators:
  - transferring data from the GPN to the online network is not envisaged in the online network design;
  - strict security policy of the online network:
    - files cannot be copied from the GPN to the online network; they must be pulled into the online cluster from the offline network.
- To maintain this tool, we need to monitor many different parts of the infrastructure:
  - maintained by us:
    - web service,
    - cron jobs on the online machine;
  - not maintained by us:
    - HTTP proxy,
    - T0 Data Service, HLT data processing,
    - Oracle Streams.
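The pull-only policy can be sketched with Python 3's standard `urllib.request`: the online machine opens the connection through the HTTP proxy and fetches the uploaded file, so nothing is ever pushed into the online network. Both URLs below are hypothetical placeholders, and the function names are illustrative only.

```python
import os
import urllib.request

# Hypothetical endpoints: neither URL comes from the original slides.
PROXY_URL = "http://proxy.example.cern.ch:3128"
REPOSITORY_URL = "https://dropbox.example.cern.ch/uploads/"


def build_pull_opener(proxy_url):
    """Return an opener whose HTTP and HTTPS requests go through the proxy."""
    proxy = urllib.request.ProxyHandler({"http": proxy_url, "https": proxy_url})
    return urllib.request.build_opener(proxy)


def pull_file(opener, name, dest_dir):
    """Download one uploaded file from the web repository into dest_dir.

    The online machine initiates the request (pull); nothing is pushed
    into the online network from the GPN.
    """
    url = REPOSITORY_URL + name
    with opener.open(url, timeout=60) as response:
        data = response.read()
    path = os.path.join(dest_dir, os.path.basename(name))
    with open(path, "wb") as out:
        out.write(data)
    return path
```

A cron entry such as `*/10 * * * * python3 /path/to/pull_dropbox.py` (script name hypothetical) would reproduce the 10-minute polling described in the workflow.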

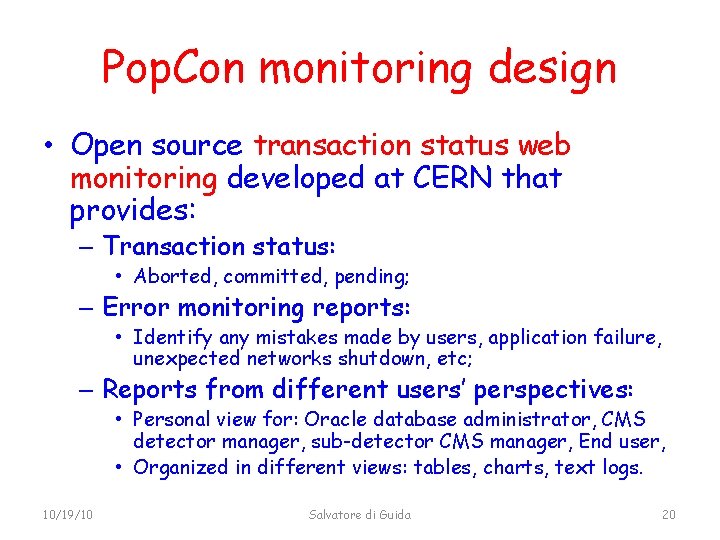

PopCon monitoring design

- An open-source, web-based transaction status monitoring tool developed at CERN that provides:
  - transaction status:
    - aborted, committed, pending;
  - error monitoring reports:
    - identify mistakes made by users, application failures, unexpected network shutdowns, etc.;
  - reports from different users' perspectives:
    - personal views for: Oracle database administrator, CMS detector manager, CMS sub-detector manager, end user,
    - organized in different views: tables, charts, text logs.
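A sketch of how per-perspective reports might be derived from transaction records: the same rows are aggregated per account (a sub-detector manager's view) and over all accounts (a central manager's view). The row format and account names are hypothetical; the three statuses are the ones listed above.

```python
from collections import Counter, defaultdict

# Hypothetical transaction log rows: (account, status).
TRANSACTIONS = [
    ("CMS_COND_ECAL", "committed"),
    ("CMS_COND_ECAL", "aborted"),
    ("CMS_COND_HCAL", "committed"),
    ("CMS_COND_HCAL", "pending"),
    ("CMS_COND_HCAL", "committed"),
]

STATUSES = ("committed", "aborted", "pending")


def status_by_account(rows):
    """Sub-detector manager view: count of each status per account."""
    view = defaultdict(Counter)
    for account, status in rows:
        if status not in STATUSES:
            raise ValueError(f"unknown status {status!r}")
        view[account][status] += 1
    return view


def overall_status(rows):
    """Central manager view: count of each status over all accounts."""
    return Counter(status for _, status in rows)


for account, counts in sorted(status_by_account(TRANSACTIONS).items()):
    print(account, dict(counts))
print("all accounts", dict(overall_status(TRANSACTIONS)))
```

Keeping one raw log and deriving each view from it means the different user perspectives can never disagree about the underlying data.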

PopCon monitoring motivation

- We could use an existing web monitoring tool for this purpose, but we need to fulfill the challenging requirements of the CMS experiment:
  - use of CMSSW standards:
    - a generic CMSSW component, so that developers and end users feel comfortable building and using new packages in CMSSW;
  - monitoring of a heterogeneous software environment:
    - Oracle DBs, the CMSSW framework and other open-source packages;
  - open-source product;
  - CERN participation in Oracle Technology Beta Programs:
    - we need a flexible architecture to handle unexpected errors;
  - maximized performance:
    - stress test of the CMSSW infrastructure,
    - avoiding bottlenecks due to huge data access (history and current data).

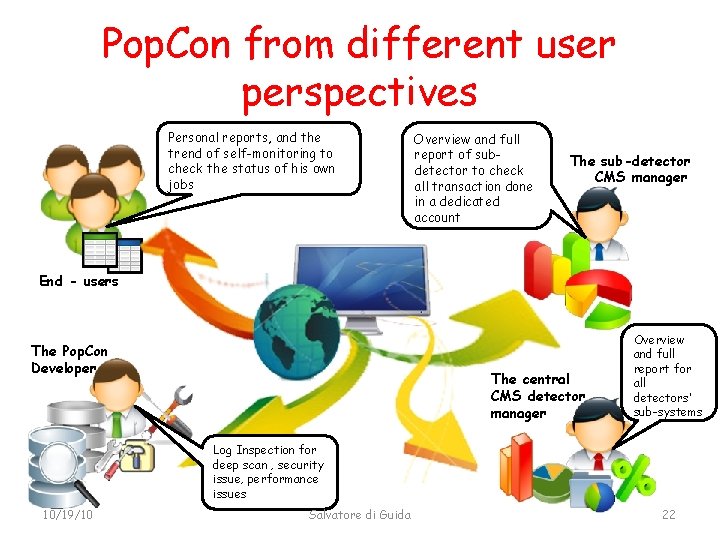

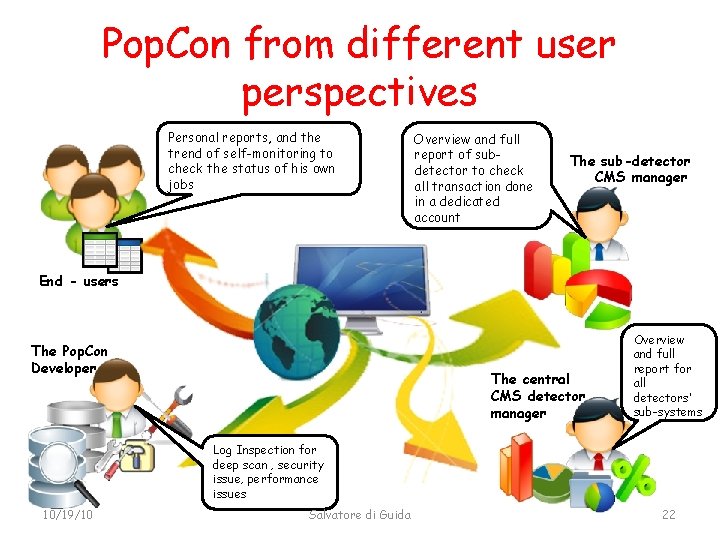

PopCon from different user perspectives

- End users: personal reports, and a self-monitoring trend to check the status of their own jobs.
- The CMS sub-detector manager: overview and full report of the sub-detector, to check all transactions done in a dedicated account.
- The central CMS detector manager: overview and full report for all detector sub-systems.
- The PopCon developer: log inspection for deep scans, security issues and performance issues.

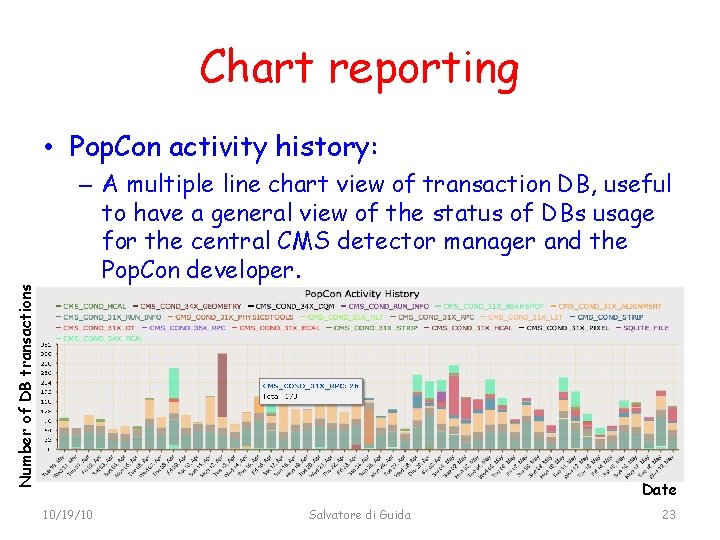

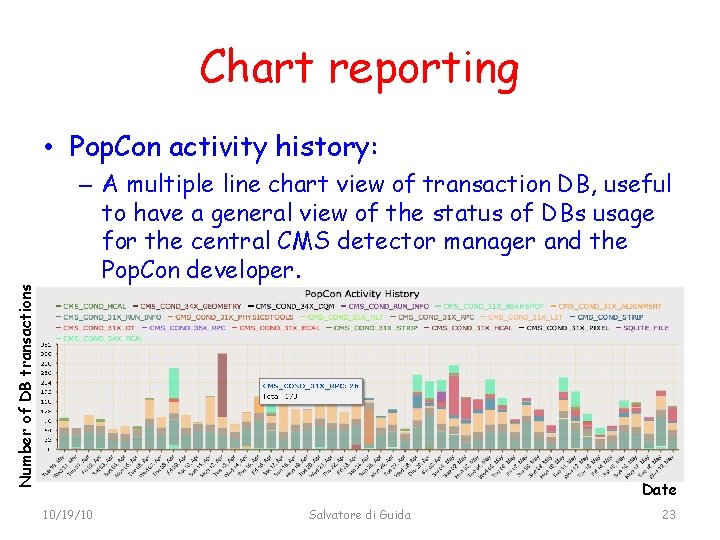

Chart reporting

- PopCon activity history:
  - a multiple-line chart of DB transactions, giving the central CMS detector manager and the PopCon developer a general view of database usage.

[Chart: number of DB transactions vs. date.]
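The activity-history chart needs one time series per line. A minimal sketch, assuming each transaction row carries an ISO timestamp and an account name (both hypothetical), counts transactions per account per day. Days without transactions are simply absent and would need filling with zeros before plotting.

```python
from collections import Counter
from datetime import datetime

# Hypothetical rows: (ISO timestamp, account) for each DB transaction.
ROWS = [
    ("2010-10-18T09:15:00", "CMS_COND_ECAL"),
    ("2010-10-18T17:40:00", "CMS_COND_ECAL"),
    ("2010-10-18T11:05:00", "CMS_COND_HCAL"),
    ("2010-10-19T08:30:00", "CMS_COND_ECAL"),
]


def daily_series(rows):
    """Return {account: [(date, n_transactions), ...]} sorted by date.

    Each account becomes one line of the multiple-line chart.
    """
    counts = Counter(
        (account, datetime.fromisoformat(ts).date()) for ts, account in rows
    )
    series = {}
    for (account, day), n in sorted(counts.items()):
        series.setdefault(account, []).append((day, n))
    return series


for account, points in daily_series(ROWS).items():
    print(account, [(day.isoformat(), n) for day, n in points])
```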

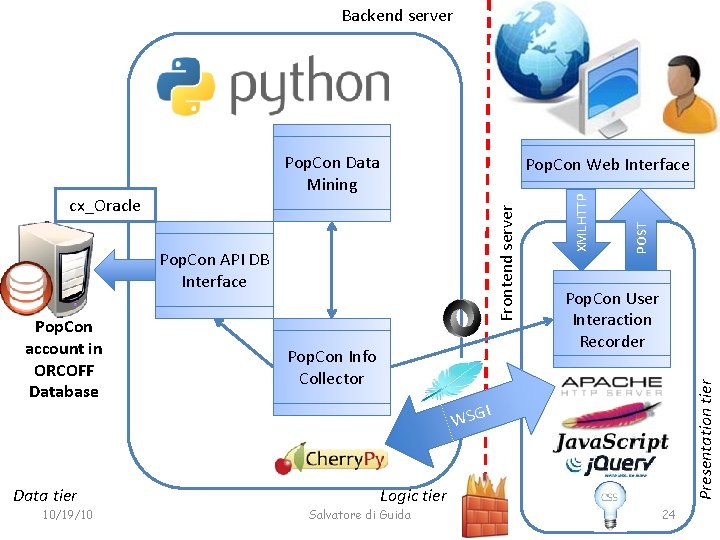

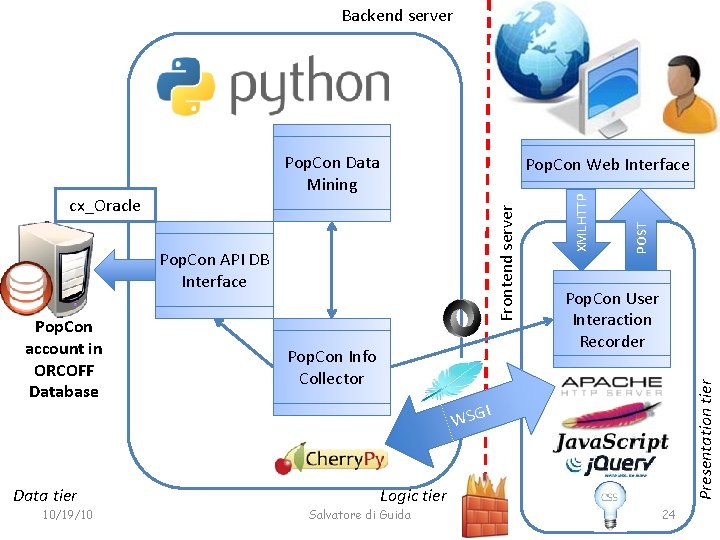

[Diagram: PopCon monitoring architecture, split into data, logic and presentation tiers across a backend server and a frontend server. Components: PopCon account in the ORCOFF database (accessed through cx_Oracle), DB interface, PopCon API, PopCon Data Mining, PopCon Info Collector, PopCon User Interaction Recorder, WSGI, and the PopCon Web Interface, communicating via XMLHTTP and POST.]

[Diagram: the same PopCon monitoring architecture (data, logic and presentation tiers).]

Using WebSocket, the monitoring system is faster! See A. Pierro presentation: Fast access to CMS detector condition data employing HTML5 technologies.
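The three-tier design maps naturally onto a small WSGI application. The sketch below is a hedged illustration, not the PopCon code: the `/transactions` route, the JSON format and the in-memory `TRANSACTIONS` list are assumptions, and the list stands in for the rows the data tier would read from the PopCon account in ORCOFF via cx_Oracle. Only the standard library is used, so the example runs without an Oracle client.

```python
import json

# In-memory stand-in for rows the data tier would read from the PopCon
# account in ORCOFF (through cx_Oracle in the architecture described above).
TRANSACTIONS = [
    {"account": "CMS_COND_ECAL", "status": "committed"},
    {"account": "CMS_COND_HCAL", "status": "aborted"},
]


def application(environ, start_response):
    """Logic tier as a WSGI callable: answers requests for /transactions with JSON."""
    if environ.get("PATH_INFO") != "/transactions":
        start_response("404 Not Found", [("Content-Type", "text/plain")])
        return [b"not found"]
    body = json.dumps(TRANSACTIONS).encode("utf-8")
    start_response("200 OK", [
        ("Content-Type", "application/json"),
        ("Content-Length", str(len(body))),
    ])
    return [body]
```

To try it locally, `wsgiref.simple_server.make_server("localhost", 8000, application).serve_forever()` serves the app; a browser-side XMLHttpRequest could then poll `/transactions` and render the tables and charts in the presentation tier.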

Conclusions

- The CMS offline condition DB is a complex infrastructure designed to store condition data reliably and efficiently, and to retrieve consistently the condition data needed for trigger, DQM and offline reconstruction.
- We are storing ~45 GB of condition data:
  - ~40 times less than the online condition DB.
- The data increase rate is 1.5 GB/month:
  - ~100 times less than the online condition DB.
- The offline DB stores only a subset of the online conditions, and uses data compression.
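The ratios quoted in the conclusions can be checked against the figures on the data-volume slide. A short worked calculation, assuming decimal units (1 TB = 1000 GB):

```python
# Figures quoted on the "Data volume in condition DB" slide.
offline_size_gb = 44.5           # stored in the offline condition DB
online_size_gb = 2 * 1000        # ~2 TB in the online DB, decimal units assumed
offline_rate_gb_per_month = 1.5  # new offline condition data per month
online_rate_gb_per_month = 150   # new online condition data per month

size_ratio = online_size_gb / offline_size_gb
rate_ratio = online_rate_gb_per_month / offline_rate_gb_per_month

print(f"size ratio ~{size_ratio:.0f}, rate ratio ~{rate_ratio:.0f}")
```

The size ratio comes out at about 45, which the slide rounds down to ~40; the growth-rate ratio is exactly 100.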

Conclusions

- PopCon allows online data to be retrieved and stored in the offline DB very efficiently:
  - especially for time-critical data (e.g. trigger and run information);
  - it can be automated for frequently changing conditions.
- The offline dropbox allows calibrations coming from offline analysis to be stored safely and reliably.

Conclusions

- The PopCon web-based monitoring allows the status of the infrastructure to be checked and hardware and software problems to be spotted very quickly:
  - even faster using new HTML5 technologies.
- The condition DB infrastructure has been running smoothly since November 2009:
  - for both 900 GeV and 7 TeV collision data taking.