Tight Coupling between ASR and MT in Speech-to-Speech Translation

Arthur Chan
Prepared for Advanced Machine Translation Seminar

This Seminar

- Introduction (4 slides)

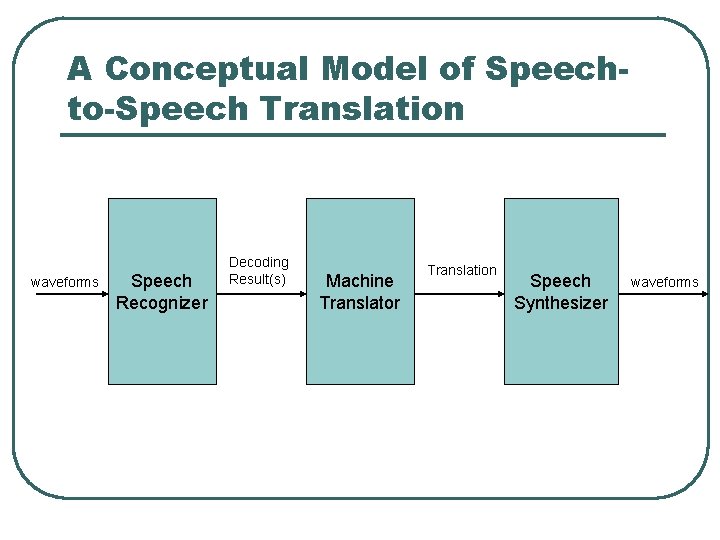

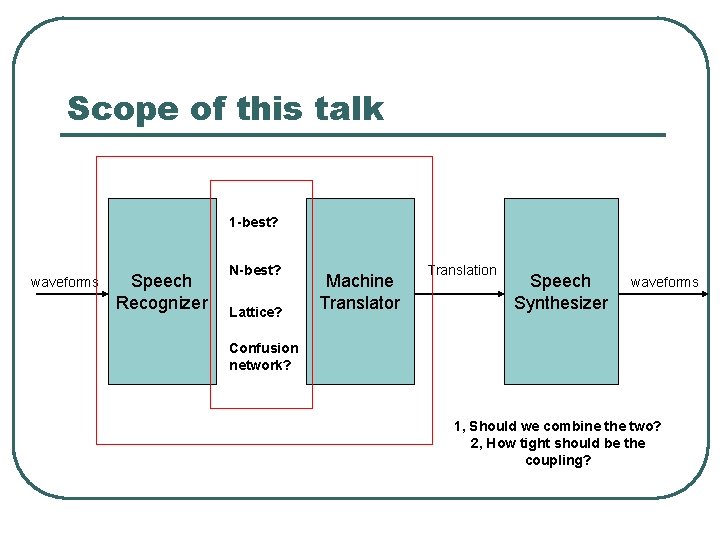

A Conceptual Model of Speech-to-Speech Translation

waveforms → Speech Recognizer → decoding result(s) → Machine Translator → translation → Speech Synthesizer → waveforms

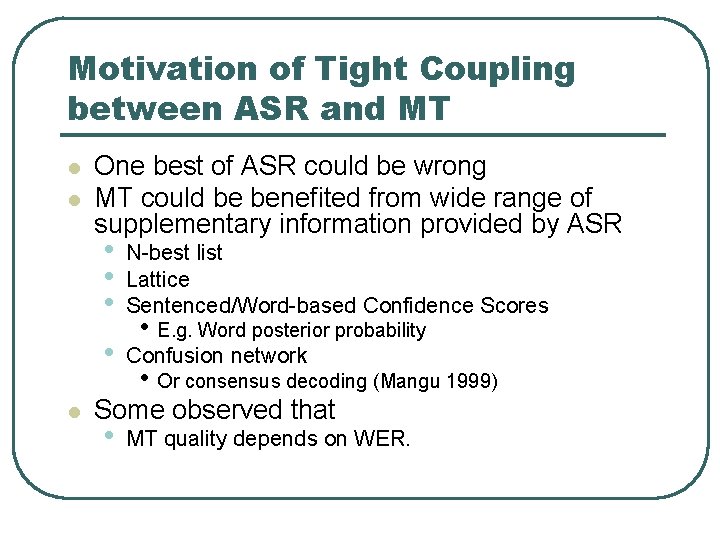

Motivation of Tight Coupling between ASR and MT

- The one-best ASR output could be wrong.
- MT could benefit from a wide range of supplementary information provided by ASR:
  - N-best list
  - Lattice
  - Sentence/word-based confidence scores
    - E.g. word posterior probability
  - Confusion network
    - Or consensus decoding (Mangu 1999)
- Some observed that MT quality depends on WER.

Scope of this talk

waveforms → Speech Recognizer → (1-best? N-best? Lattice? Confusion network?) → Machine Translator → translation → Speech Synthesizer → waveforms

1. Should we combine the two?
2. How tight should the coupling be?

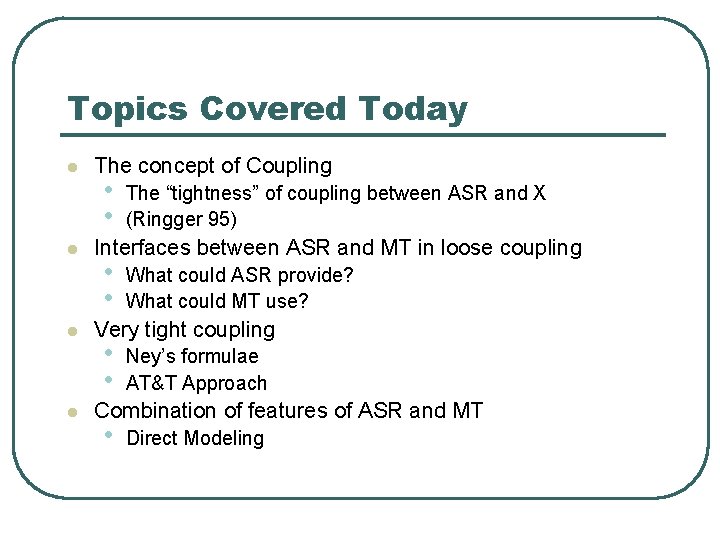

Topics Covered Today

- The concept of coupling
  - The “tightness” of coupling between ASR and X (Ringger 95)
- Interfaces between ASR and MT in loose coupling
  - What could ASR provide?
  - What could MT use?
- Very tight coupling
  - Ney’s formulae
  - AT&T approach
  - Combination of features of ASR and MT
    - Direct modeling

The Concept of Coupling

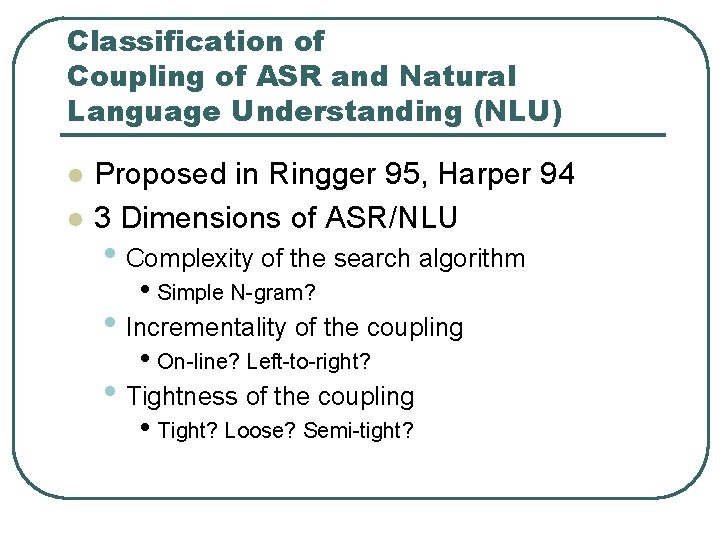

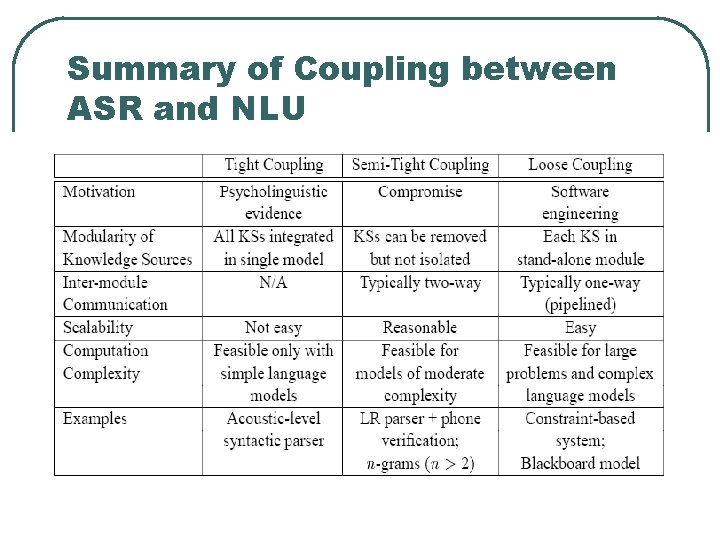

Classification of Coupling of ASR and Natural Language Understanding (NLU)

- Proposed in Ringger 95, Harper 94
- 3 dimensions of ASR/NLU coupling
  - Complexity of the search algorithm
    - Simple N-gram?
  - Incrementality of the coupling
    - On-line? Left-to-right?
  - Tightness of the coupling
    - Tight? Loose? Semi-tight?

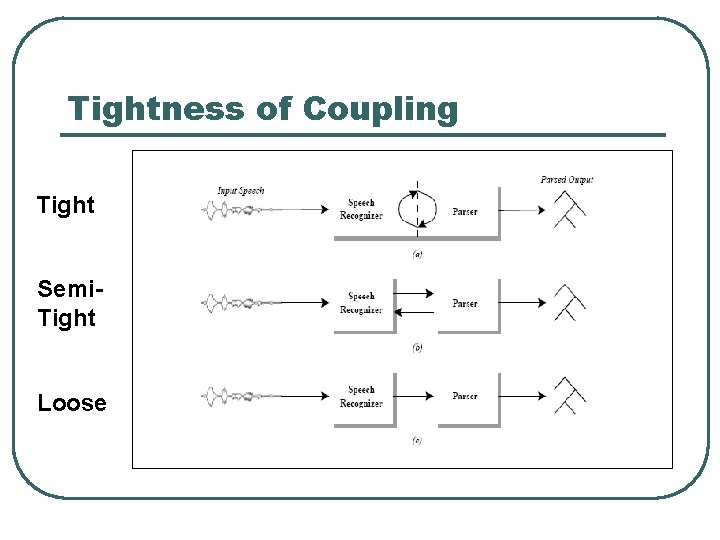

Tightness of Coupling

Tight, Semi-tight, Loose

Summary of Coupling between ASR and NLU

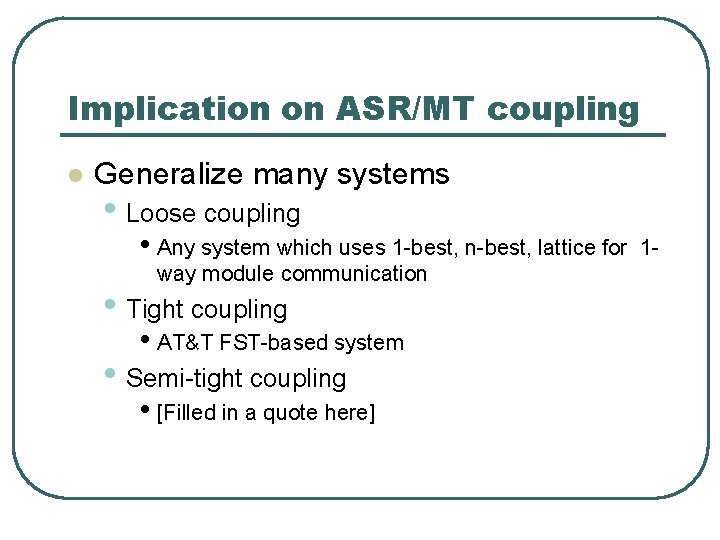

Implications for ASR/MT coupling

- Generalizes to many systems
  - Loose coupling
    - Any system which uses 1-best, n-best, or lattice output for 1-way module communication
  - Tight coupling
    - AT&T FST-based system
  - Semi-tight coupling
    - [Filled in a quote here]
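As a rough illustration of loose coupling over an n-best interface, the sketch below lets the MT side pick among ASR hypotheses by adding a weighted ASR log-score to a weighted MT log-score. The slides do not say how scores should be combined, so the log-linear combination, the function names (`pick_translation`, `toy_translate`), the weights, and all scores and translations are assumptions made up for this example.

```python
def pick_translation(asr_nbest, translate, asr_weight=1.0, mt_weight=1.0):
    """Return (combined_score, source, target) for the best-scoring hypothesis.

    asr_nbest: list of (asr_log_score, source_sentence) pairs from the recognizer.
    translate: callable mapping a source sentence to (target_sentence, mt_log_score).
    """
    best = None
    for asr_log_score, source in asr_nbest:
        target, mt_log_score = translate(source)
        # Assumed log-linear combination of the two module scores.
        combined = asr_weight * asr_log_score + mt_weight * mt_log_score
        if best is None or combined > best[0]:
            best = (combined, source, target)
    return best


# Hypothetical stand-in for an MT system: a lookup table with made-up scores.
TOY_MT = {
    "recognize speech": ("reconnaître la parole", -0.5),
    "wreck a nice beach": ("détruire une belle plage", -3.0),
}


def toy_translate(source):
    return TOY_MT[source]


# Made-up ASR 2-best list; the ASR 1-best is the wrong hypothesis.
asr_nbest = [(-1.0, "wreck a nice beach"), (-1.2, "recognize speech")]
combined, source, target = pick_translation(asr_nbest, toy_translate)
```

In this toy setup the ASR 1-best is not the hypothesis finally translated, which is the kind of case the n-best interface is meant to recover.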

Interfaces in Loose Coupling

Perspectives

- What output could an ASR generate?
  - Not all of it is used today, but it could mean opportunities in the future.
- What algorithms could MT use given certain inputs?
  - On-line algorithms are a focus.

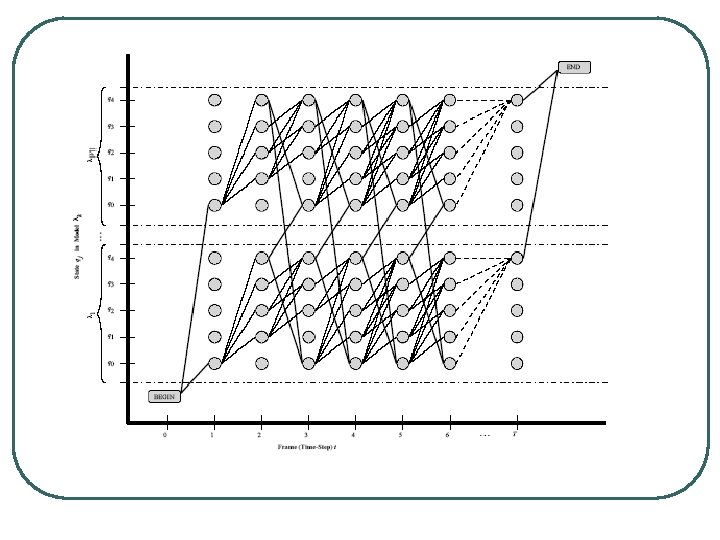

Decoding of HMM-based ASR

- Decoding of HMM-based ASR
  - Searching for the best path in a huge HMM-state lattice.
- 1-best ASR result
  - The best path one could find from backtracking.
- State lattice (next page)

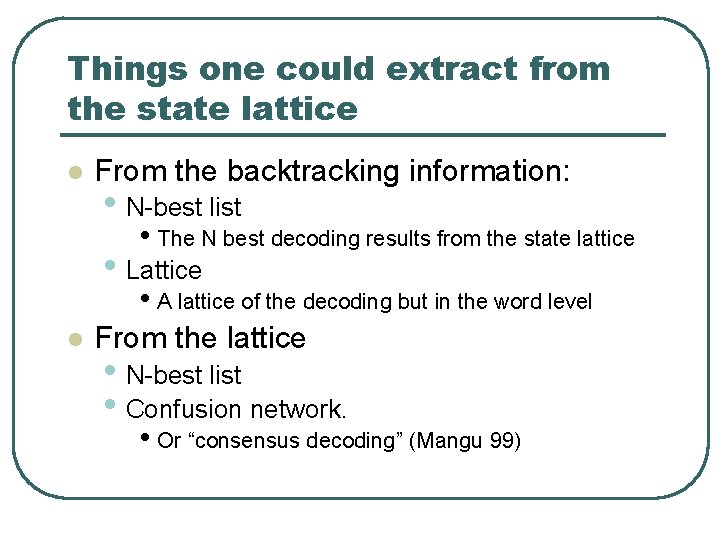
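The best-path search with backtracking described above can be sketched with a textbook Viterbi pass over a tiny HMM. This is only a toy: a real ASR decoder searches a far larger state lattice with pruning, and the two-state model, its probabilities, and the names `viterbi` and `to_log` are assumptions chosen for illustration.

```python
import math


def to_log(table):
    """Convert a (possibly nested) dict of probabilities to log-probabilities."""
    return {k: to_log(v) if isinstance(v, dict) else math.log(v)
            for k, v in table.items()}


def viterbi(observations, states, log_start, log_trans, log_emit):
    """Return (best_log_prob, best_state_path) for the observation sequence."""
    # trellis[t][s]: best log-probability of any path ending in state s at time t.
    trellis = [{s: log_start[s] + log_emit[s][observations[0]] for s in states}]
    backptr = [{}]
    for t in range(1, len(observations)):
        trellis.append({})
        backptr.append({})
        for s in states:
            prev, score = max(
                ((p, trellis[t - 1][p] + log_trans[p][s]) for p in states),
                key=lambda item: item[1],
            )
            trellis[t][s] = score + log_emit[s][observations[t]]
            backptr[t][s] = prev  # remember where the best path came from
    # Backtracking: start from the best final state and follow the pointers.
    last = max(states, key=lambda s: trellis[-1][s])
    path = [last]
    for t in range(len(observations) - 1, 0, -1):
        path.append(backptr[t][path[-1]])
    path.reverse()
    return trellis[-1][last], path


# Toy two-state HMM with made-up probabilities.
states = ["Healthy", "Fever"]
log_start = to_log({"Healthy": 0.6, "Fever": 0.4})
log_trans = to_log({"Healthy": {"Healthy": 0.7, "Fever": 0.3},
                    "Fever": {"Healthy": 0.4, "Fever": 0.6}})
log_emit = to_log({"Healthy": {"normal": 0.5, "cold": 0.4, "dizzy": 0.1},
                   "Fever": {"normal": 0.1, "cold": 0.3, "dizzy": 0.6}})

best_log_prob, best_path = viterbi(["normal", "cold", "dizzy"], states,
                                   log_start, log_trans, log_emit)
```

The `backptr` table is the "backtracking information" from which the 1-best result is read off; keeping more than one predecessor per state is what makes N-best lists and word lattices recoverable.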

Things one could extract from the state lattice

- From the backtracking information:
  - N-best list
    - The N best decoding results from the state lattice
  - Lattice
    - A lattice of the decoding, but at the word level
- From the lattice:
  - N-best list
  - Confusion network
    - Or “consensus decoding” (Mangu 99)

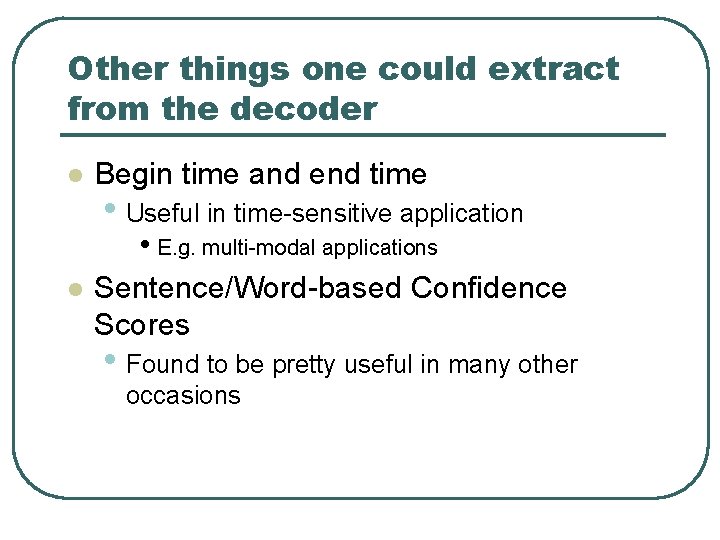
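To make "N-best list from the lattice" concrete, here is a minimal sketch that enumerates the N cheapest paths through a small word lattice with a best-first search. The lattice representation (a dict from node to outgoing `(next_node, word, cost)` edges, with costs as negative log-probabilities), the toy lattice itself, and the function name `n_best` are assumptions for this example, not the format of any particular decoder.

```python
import heapq


def n_best(lattice, start, final, n):
    """Return up to n (cost, words) pairs, cheapest first.

    Costs must be non-negative (e.g. negative log-probabilities), so that a
    partial path never costs more than any of its completions.
    """
    # Heap entries: (cost so far, tie-breaker, current node, words so far).
    heap = [(0.0, 0, start, ())]
    counter = 1
    results = []
    while heap and len(results) < n:
        cost, _, node, words = heapq.heappop(heap)
        if node == final:
            results.append((cost, list(words)))
            continue
        for next_node, word, edge_cost in lattice.get(node, []):
            heapq.heappush(
                heap, (cost + edge_cost, counter, next_node, words + (word,))
            )
            counter += 1
    return results


# Toy word lattice with made-up costs; node 0 is the start, node 4 the end.
toy_lattice = {
    0: [(1, "recognize", 0.5), (2, "wreck", 0.7)],
    2: [(3, "a", 0.1)],
    3: [(1, "nice", 0.3)],
    1: [(4, "speech", 0.2), (4, "beach", 0.9)],
}

three_best = n_best(toy_lattice, start=0, final=4, n=3)
```

This brute-force style of enumeration is fine for a toy lattice; it keeps every partial path on the heap, so it would not scale to real lattices without pruning or a more careful algorithm.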

Other things one could extract from the decoder

- Begin time and end time
  - Useful in time-sensitive applications
  - E.g. multi-modal applications
- Sentence/word-based confidence scores
  - Found to be quite useful in many other settings
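One simple way to obtain a word-level confidence score is to approximate a word posterior from an N-best list: normalize the hypothesis scores into posteriors, then sum the posteriors of the hypotheses that contain the word. This is a deliberately crude sketch that ignores word position and timing (lattice-based posteriors and consensus decoding handle those); the function name `word_posteriors` and the example scores are assumptions.

```python
import math
from collections import defaultdict


def word_posteriors(nbest):
    """Approximate word posteriors from a list of (log_score, words) pairs."""
    # Normalize hypothesis scores into posteriors (softmax over log-scores).
    max_log = max(score for score, _ in nbest)
    weights = [math.exp(score - max_log) for score, _ in nbest]
    total = sum(weights)
    posteriors = defaultdict(float)
    for weight, (_, words) in zip(weights, nbest):
        # Count each word once per hypothesis, regardless of position.
        for word in set(words):
            posteriors[word] += weight / total
    return dict(posteriors)


# Made-up 3-best list with hypothesis probabilities 0.5, 0.3 and 0.2.
nbest = [
    (math.log(0.5), ["recognize", "speech"]),
    (math.log(0.3), ["wreck", "a", "nice", "speech"]),
    (math.log(0.2), ["recognize", "beach"]),
]
confidence = word_posteriors(nbest)
```

Here "speech" appears in hypotheses carrying most of the probability mass and so gets a high score, while "beach" gets a low one; an MT module could use such scores to discount unreliable words.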

Experimental Results

How does MT use the output?

- What decoding algorithms are used?

Tight Coupling
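The outline lists Ney's formulae under very tight coupling, but the slides do not reproduce them. As a reminder, the formulation usually attributed to Ney (1999) is sketched below; the notation is an assumption of this note: $x$ is the acoustic observation sequence, $f$ a source-language word sequence, and $e$ a target-language word sequence.

```latex
\begin{align}
\hat{e} &= \arg\max_{e} \Pr(e \mid x)
         = \arg\max_{e} \Pr(e)\,\Pr(x \mid e) \\
\Pr(x \mid e) &= \sum_{f} \Pr(f \mid e)\,\Pr(x \mid f, e)
         \approx \sum_{f} \Pr(f \mid e)\,\Pr(x \mid f) \\
\hat{e} &\approx \arg\max_{e} \Pr(e)\,\max_{f} \Pr(f \mid e)\,\Pr(x \mid f)
\end{align}
```

The second line assumes the acoustics depend on the target sentence only through the source words; the third replaces the sum over $f$ with a maximum. The point for coupling is that the source word sequence $f$ is a hidden variable of a single search, not a fixed 1-best string handed from ASR to MT.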

Literature

- Eric K. Ringger, “A Robust Loose Coupling for Speech Recognition and Natural Language Understanding”, Technical Report 592, Computer Science Department, University of Rochester, 1995
- [The AT&T paper]
