Tier 3 and Tier 3 monitoring, 17.05.2012

- Slides: 38

Tier 3 and Tier 3 monitoring. 17.05.2012. Ivan Kadochnikov, LIT JINR

Overview: WLCG structure; Tier 3; T3mon concept; monitoring tools; T3mon implementation.

WLCG structure: goals of WLCG; hierarchical approach; production and analysis; argument for Tier 3.

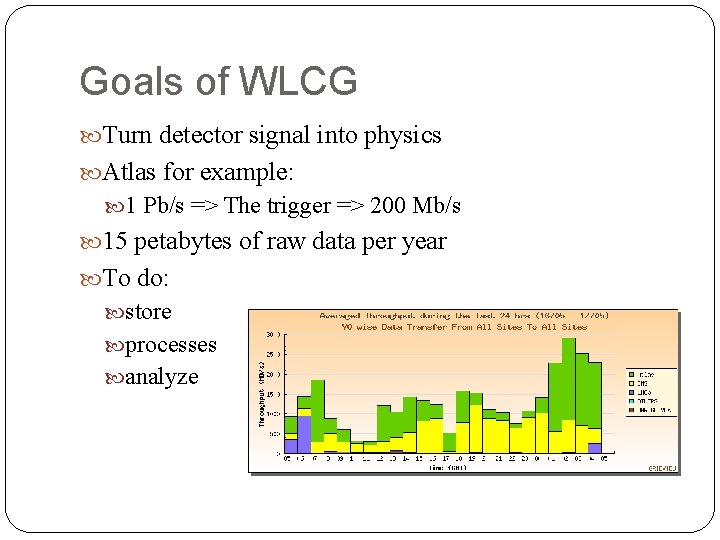

Goals of WLCG: turn the detector signal into physics. ATLAS, for example: about 1 PB/s from the detector is reduced by the trigger to 200 MB/s; 15 petabytes of raw data per year. To do: store, process, analyze.

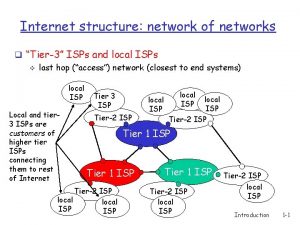

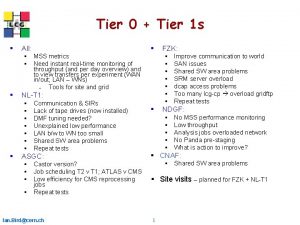

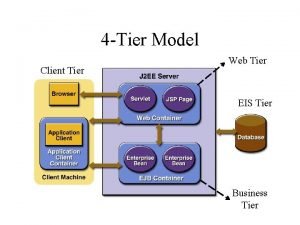

Hierarchical approach
- Tier 0: the CERN computer centre; safe-keeping of the first copy of raw data; first-pass reconstruction.
- Tier 1: 11 centres around the world; safe-keeping of shares of raw, reconstructed, reprocessed and simulated data; reprocessing.
- Tier 2: about 140 sites; production and reconstruction of simulated events; analysis.

Production and analysis: data selection algorithms improve and calibration data change, so all data gathered since the LHC start-up are reprocessed several times a year.

Argument for Tier 3: Analysis on Tier 2 is inconvenient. Institutions have local computing resources. Local access and resources dedicated to analysis improve user response time dramatically.

Tier 3: What is Tier 3? Types of Tier 3. Compare and contrast with Tier 2. Current status. Need for monitoring.

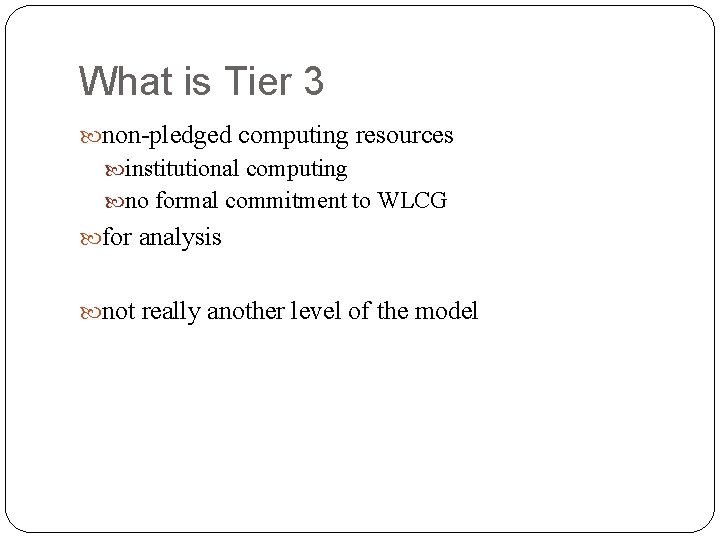

What is Tier 3? Non-pledged computing resources: institutional computing with no formal commitment to WLCG, used for analysis. Not really another level of the model.

Types of Tier 3 sites: Tier 3 with Tier 2 functionality; collocated with Tier 2; national analysis facilities; non-grid Tier 3s.

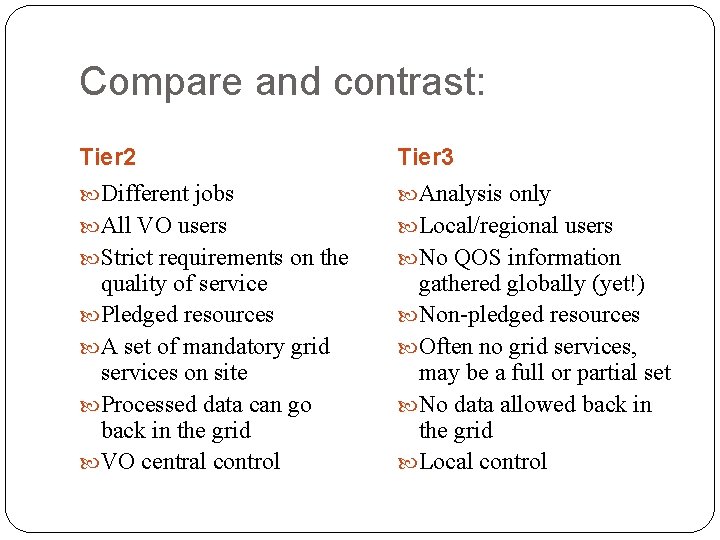

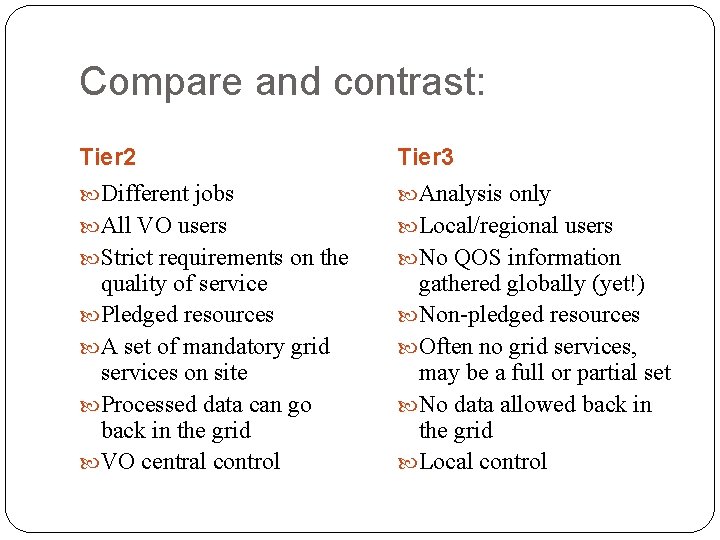

Compare and contrast: Tier 2 and Tier 3

| Tier 2 | Tier 3 |
|---|---|
| Different job types | Analysis only |
| All VO users | Local/regional users |
| Strict quality-of-service requirements | No QoS information gathered globally (yet!) |
| Pledged resources | Non-pledged resources |
| A set of mandatory grid services on site | Often no grid services; may have a full or partial set |
| Processed data can go back into the grid | No data allowed back into the grid |
| Central VO control | Local control |

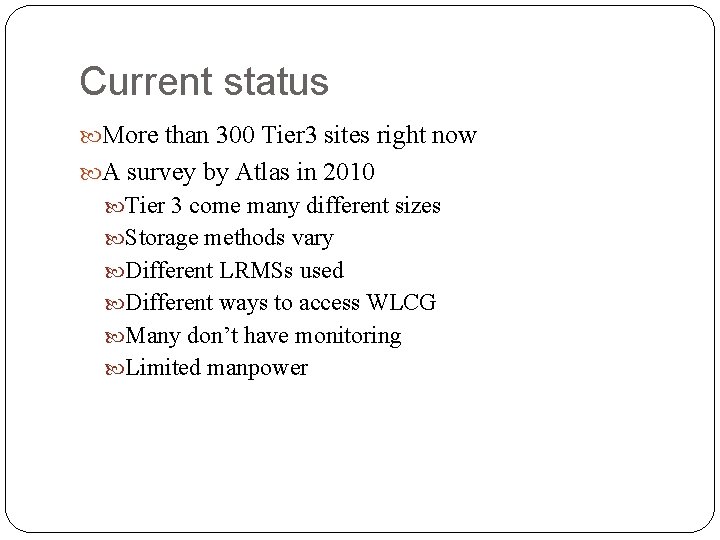

Current status: more than 300 Tier 3 sites at present. A 2010 ATLAS survey: Tier 3 sites come in many different sizes; storage methods vary; different LRMSs are used; there are different ways to access WLCG; many sites have no monitoring; manpower is limited.

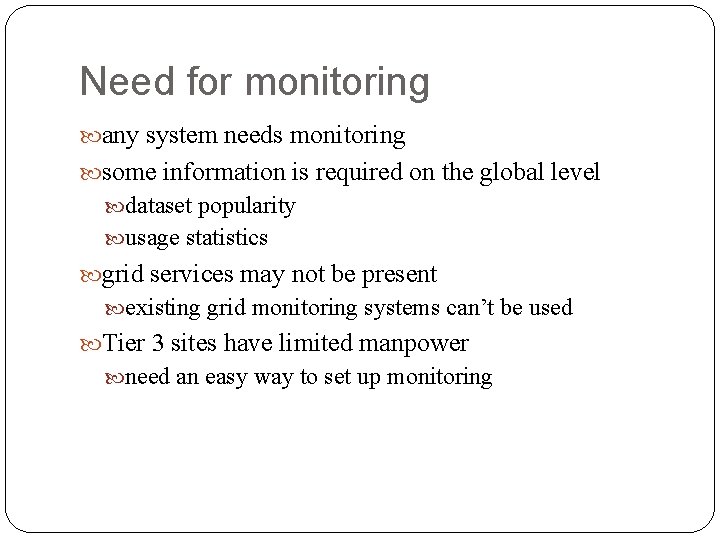

Need for monitoring: Any system needs monitoring. Some information is required at the global level, such as dataset popularity and usage statistics. Grid services may not be present, so existing grid monitoring systems can't be used. Tier 3 sites have limited manpower and need an easy way to set up monitoring.

T3mon concept: users and requirements; what to monitor; structure; local monitoring; global monitoring.

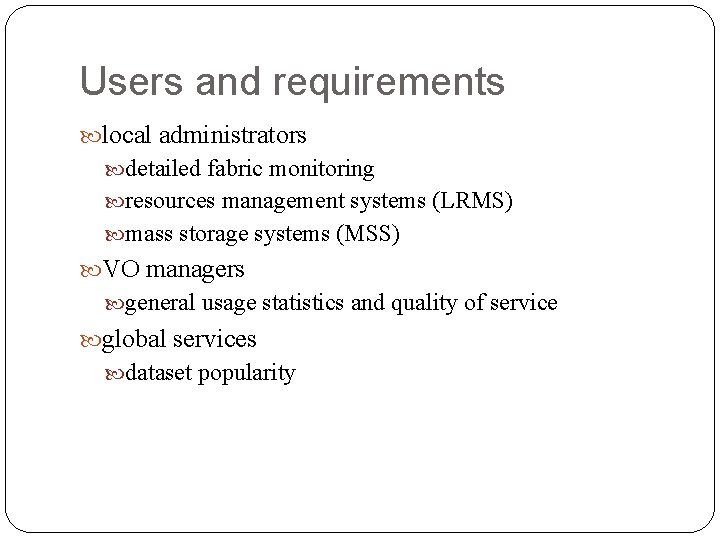

Users and requirements: Local administrators need detailed monitoring of the fabric, the local resource management systems (LRMS) and the mass storage systems (MSS). VO managers need general usage statistics and quality-of-service information. Global services need dataset popularity.

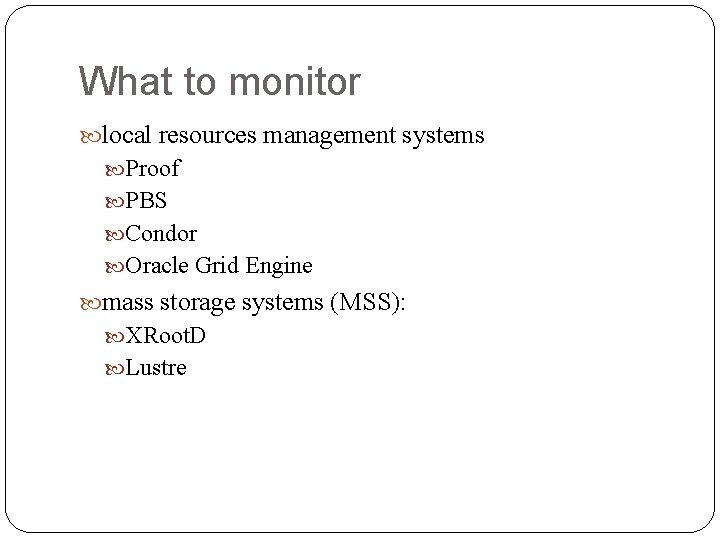

What to monitor: local resource management systems (LRMS): PROOF, PBS, Condor, Oracle Grid Engine (OGE); mass storage systems (MSS): XRootD, Lustre.

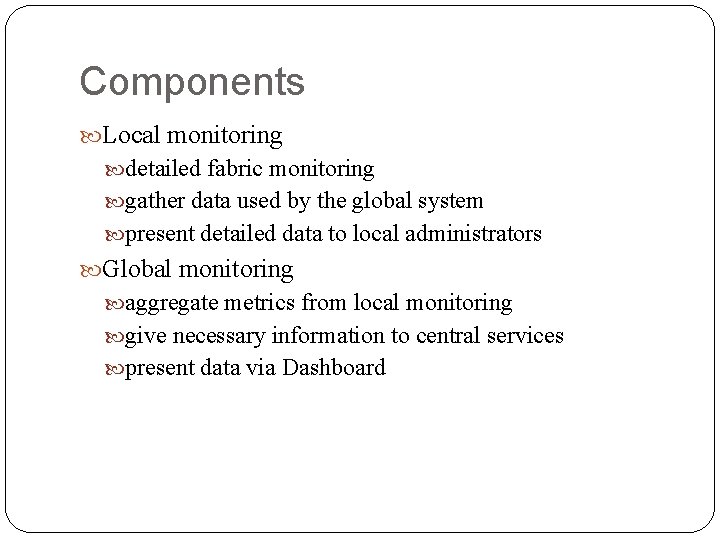

Components. Local monitoring: detailed fabric monitoring; gathers the data used by the global system; presents detailed data to local administrators. Global monitoring: aggregates metrics from local monitoring; gives the necessary information to central services; presents data via the Dashboard.
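A minimal sketch of the local/global split, assuming metrics travel as plain name/value dictionaries (the function and metric names are hypothetical, not T3mon's). Local monitoring keeps per-node detail; only site-level totals go upward, where they are combined across sites.

```python
from collections import defaultdict


def summarize_site(per_node):
    """Collapse detailed per-node metrics (local view) into site-level totals."""
    totals = defaultdict(float)
    for node_metrics in per_node.values():
        for name, value in node_metrics.items():
            totals[name] += value
    return dict(totals)


def aggregate_global(site_summaries):
    """Combine site summaries into global totals and count the reporting sites."""
    result = {"sites_reporting": len(site_summaries)}
    for summary in site_summaries.values():
        for name, value in summary.items():
            result[name] = result.get(name, 0.0) + value
    return result


if __name__ == "__main__":
    site_a = summarize_site({"wn1": {"jobs_running": 2}, "wn2": {"jobs_running": 3}})
    print(aggregate_global({"site_a": site_a, "site_b": {"jobs_running": 1.0}}))
```

Summing only makes sense for additive metrics such as job counts; averages such as load would need weighting instead.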

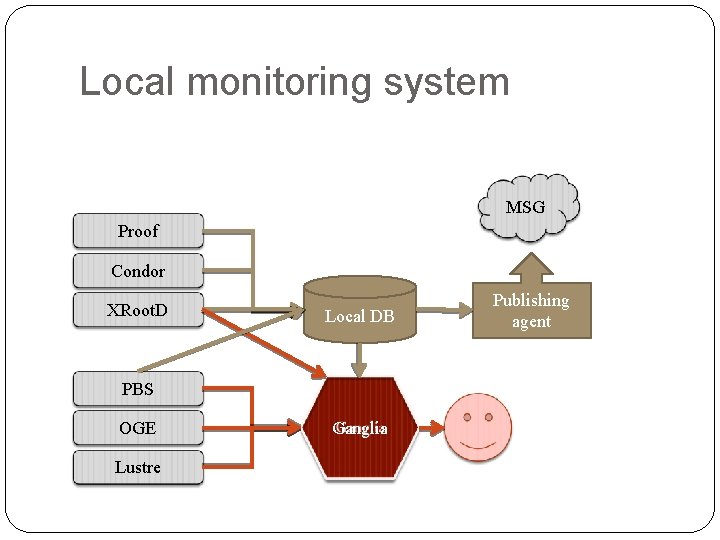

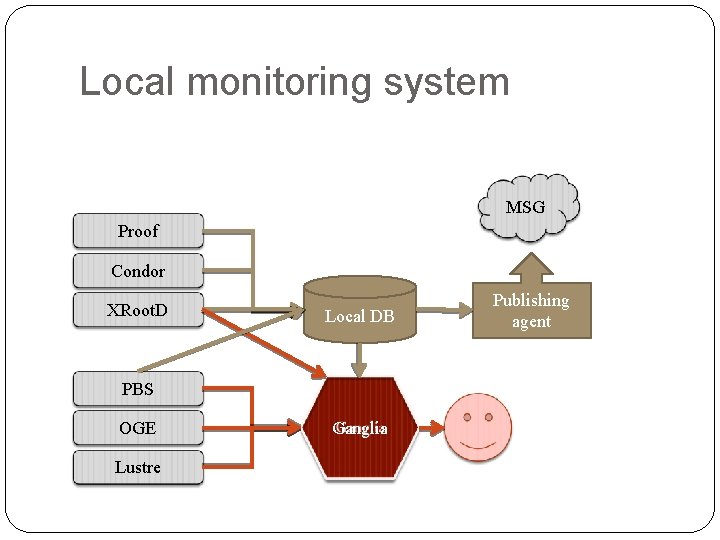

Local monitoring system (diagram). Components shown: PROOF, PBS, Condor, OGE, XRootD, Lustre, Ganglia, a local DB, a publishing agent and MSG.
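The diagram suggests one plug-in per subsystem feeding both Ganglia and the publishing agent. A hypothetical Python interface for that fan-out could look like this; `MonitoringPlugin`, `StaticPlugin`, `run_once` and the sink callables are illustrative names, not the project's actual API.

```python
import sys
from abc import ABC, abstractmethod


class MonitoringPlugin(ABC):
    """Hypothetical interface: one plug-in per monitored subsystem (PBS, Lustre, ...)."""

    name = "base"

    @abstractmethod
    def collect(self):
        """Return a dict mapping metric name to value."""


class StaticPlugin(MonitoringPlugin):
    """Trivial plug-in returning fixed values, handy for testing the pipeline."""

    def __init__(self, name, values):
        self.name = name
        self.values = values

    def collect(self):
        return dict(self.values)


def run_once(plugins, sinks):
    """Collect from every plug-in and hand each metric to every sink.

    A sink is any callable taking (metric_name, value), e.g. a wrapper
    around gmetric or around the agent that publishes to MSG.
    """
    published = {}
    for plugin in plugins:
        try:
            metrics = plugin.collect()
        except Exception as exc:  # a broken subsystem must not stop the others
            print(f"{plugin.name}: collection failed: {exc}", file=sys.stderr)
            continue
        for name, value in metrics.items():
            full_name = f"{plugin.name}_{name}"
            published[full_name] = value
            for sink in sinks:
                sink(full_name, value)
    return published
```

Catching exceptions per plug-in keeps one misconfigured subsystem from silencing the rest of the site.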

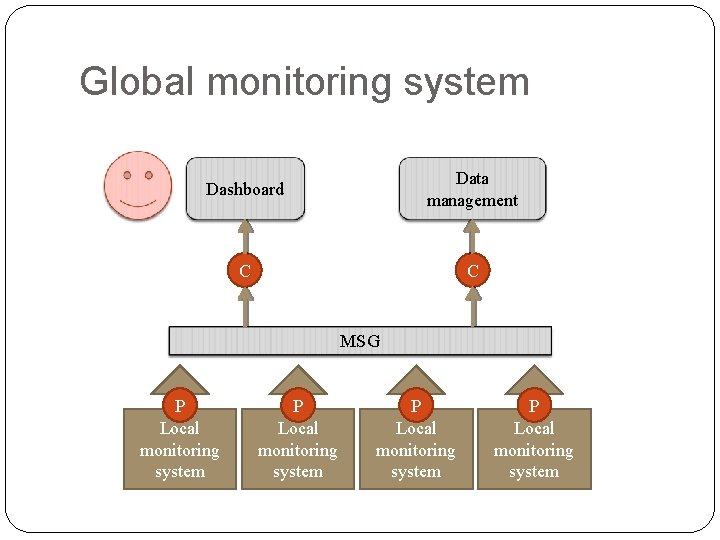

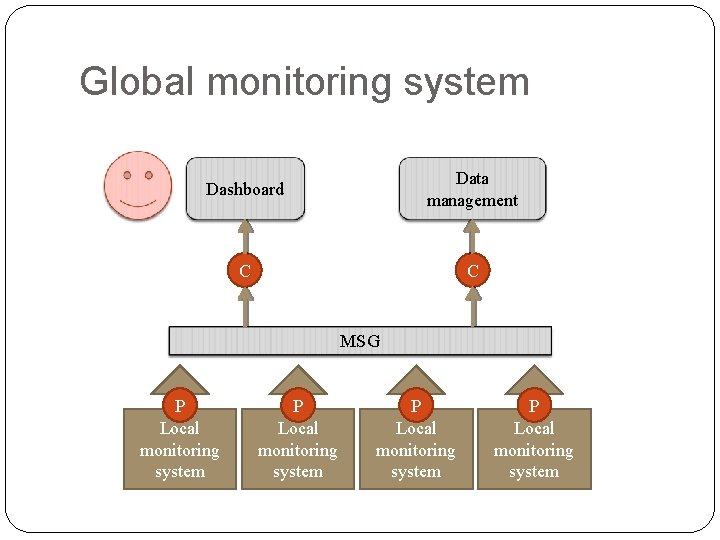

Global monitoring system (diagram). Components shown: the local monitoring system, MSG, the Dashboard and data management.

Tools: Ganglia (data flow, plug-in system); Dashboard; MSG (ActiveMQ).

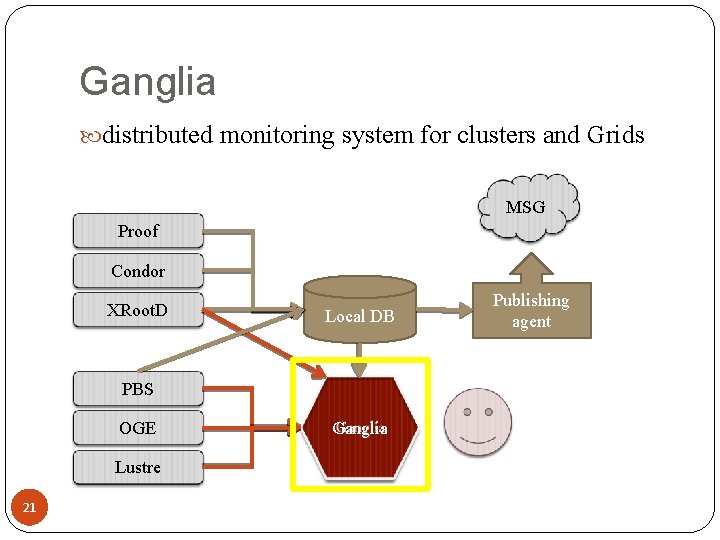

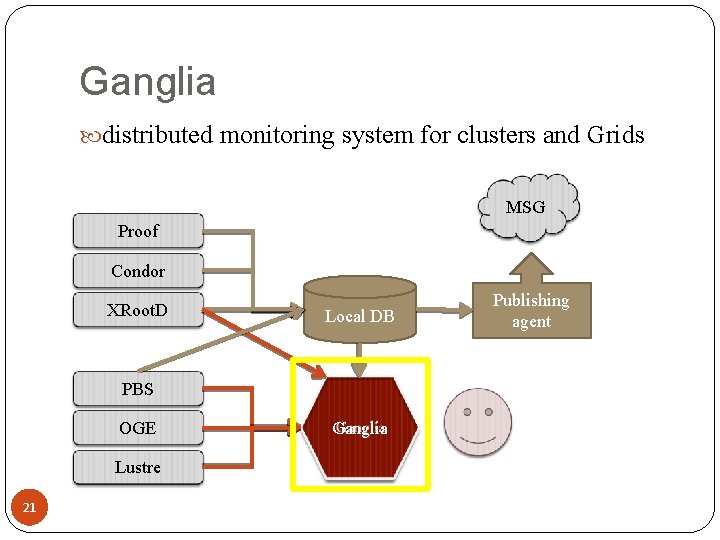

Ganglia: a distributed monitoring system for clusters and grids.

Why Ganglia? It makes fabric monitoring easy to set up, it is a popular choice among Tier 3 sites, and it has extension modules for LRMS and MSS monitoring.

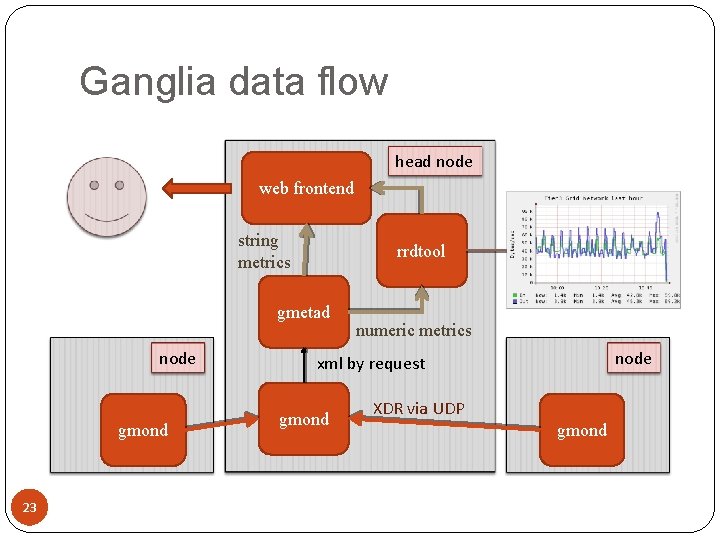

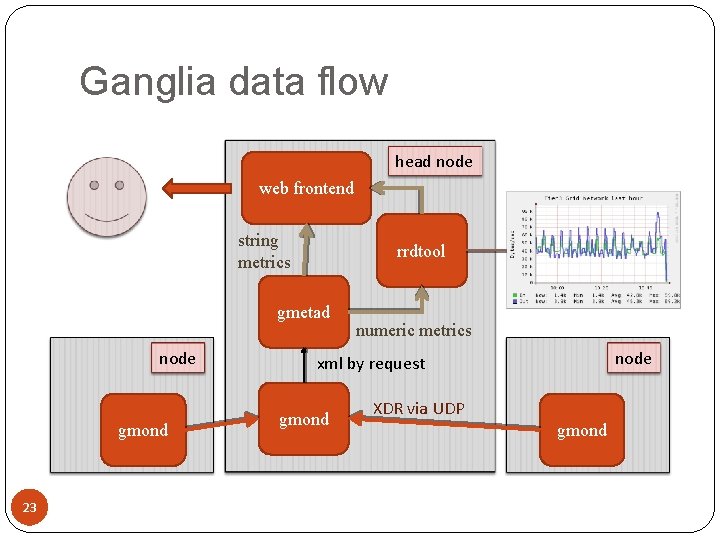

Ganglia data flow (diagram): on the nodes, gmond daemons exchange metrics as XDR over UDP; on the head node, gmetad requests XML from gmond, stores numeric metrics with rrdtool, and passes string metrics on to the web frontend.
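To see what gmetad receives, one can read gmond's XML dump directly: gmond serves the full cluster state as XML to any client that connects to its TCP accept channel (port 8649 unless reconfigured). The parser below is a sketch that keeps only host, metric name and value; the host name used when calling it would be a placeholder for your own head node.

```python
import socket
import xml.etree.ElementTree as ET


def fetch_gmond_xml(host="localhost", port=8649, timeout=5.0):
    """Read the raw XML dump gmond sends to any client connecting to its TCP port."""
    chunks = []
    with socket.create_connection((host, port), timeout=timeout) as sock:
        while True:
            data = sock.recv(65536)
            if not data:  # gmond closes the connection after the dump
                break
            chunks.append(data)
    return b"".join(chunks)  # bytes, so the declared XML encoding is honoured


def parse_gmond_xml(xml_text):
    """Return {host: {metric: value}}; non-string metrics become floats."""
    result = {}
    root = ET.fromstring(xml_text)
    for host in root.iter("HOST"):
        metrics = {}
        for metric in host.iter("METRIC"):
            value = metric.get("VAL")
            if metric.get("TYPE") != "string":
                value = float(value)
            metrics[metric.get("NAME")] = value
        result[host.get("NAME")] = metrics
    return result
```

For example, `parse_gmond_xml(fetch_gmond_xml("headnode"))` returns a nested dictionary keyed by host and then by metric name.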

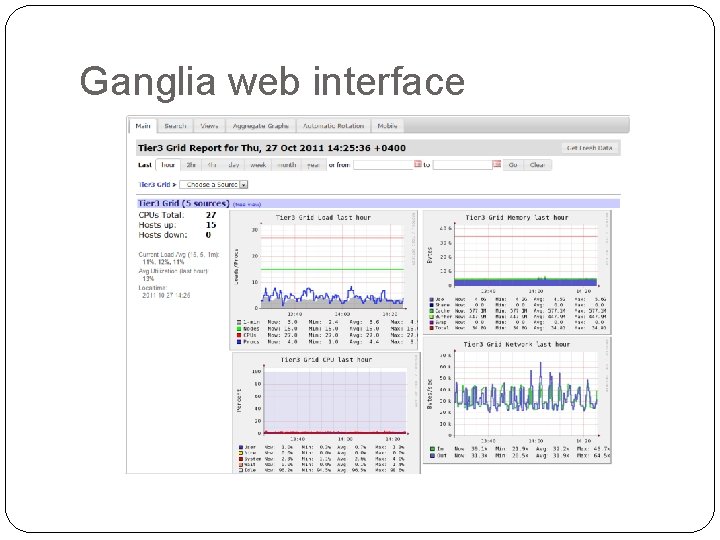

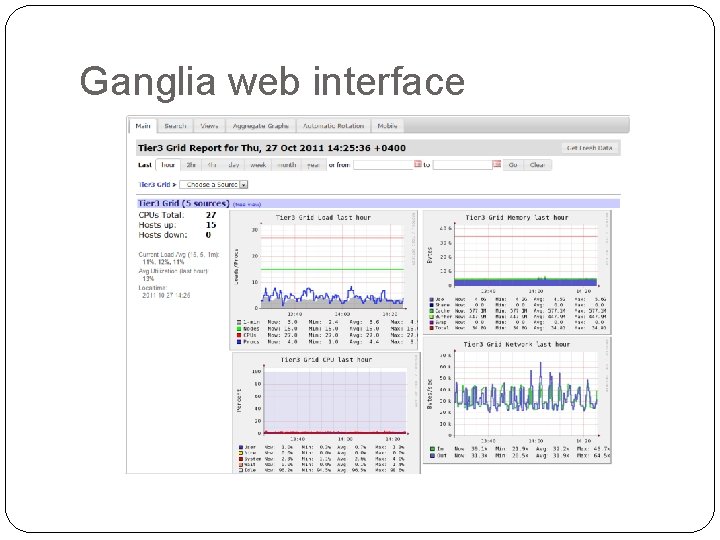

Ganglia web interface

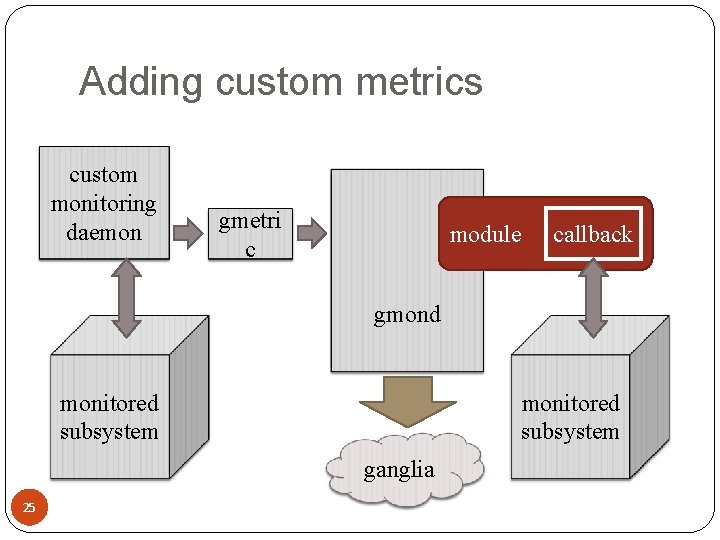

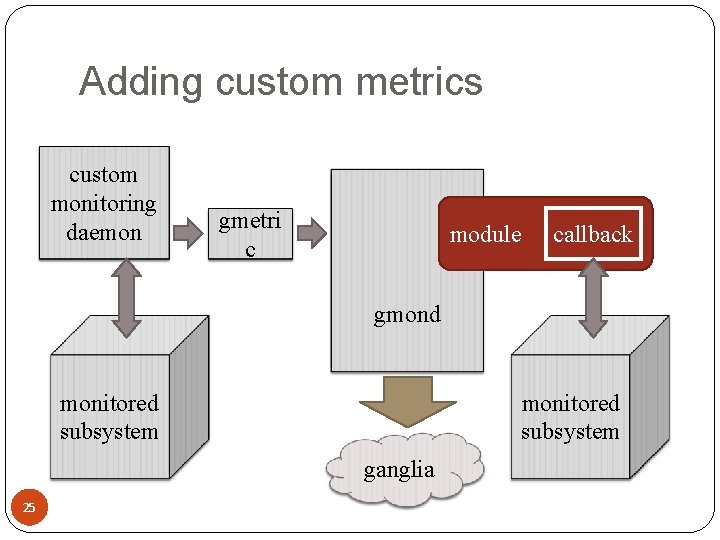

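Adding custom metrics (diagram): either a custom monitoring daemon reads the monitored subsystem and injects values with gmetric, or a gmond module exposes a callback that gmond calls; in both cases the metrics end up in Ganglia.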
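The gmond-module route can be sketched with gmond's Python module support (modpython): gmond calls `metric_init(params)` once to obtain metric descriptors, invokes each descriptor's `call_back` with the metric name at every collection, and calls `metric_cleanup()` on shutdown. The metric below (a count of files in a spool directory) and its `spool_dir` parameter are hypothetical stand-ins for a real subsystem probe.

```python
import os

# Hypothetical directory standing in for a real monitored subsystem.
SPOOL_DIR = "/var/spool/example"


def spool_files(name):
    """Callback gmond invokes with the metric name; returns the current value."""
    try:
        return len(os.listdir(SPOOL_DIR))
    except OSError:  # directory missing or unreadable: report zero
        return 0


def metric_init(params):
    """Called once by gmond; params come from the module's .pyconf file."""
    global SPOOL_DIR
    SPOOL_DIR = params.get("spool_dir", SPOOL_DIR)
    return [{
        "name": "example_spool_files",
        "call_back": spool_files,
        "time_max": 90,
        "value_type": "uint",
        "units": "files",
        "slope": "both",
        "format": "%u",
        "description": "Files waiting in the example spool directory",
        "groups": "example",
    }]


def metric_cleanup():
    """Called by gmond on shutdown; nothing to release here."""


if __name__ == "__main__":
    # Stand-alone check without gmond.
    for descriptor in metric_init({"spool_dir": "/tmp"}):
        print(descriptor["name"], descriptor["call_back"](descriptor["name"]))
```

The daemon route does the same from outside gmond, for example `gmetric --name example_spool_files --value 3 --type uint32 --units files`.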

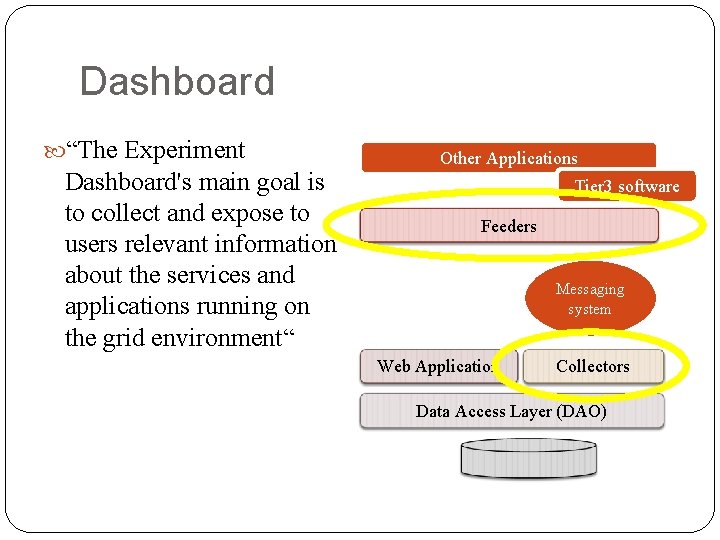

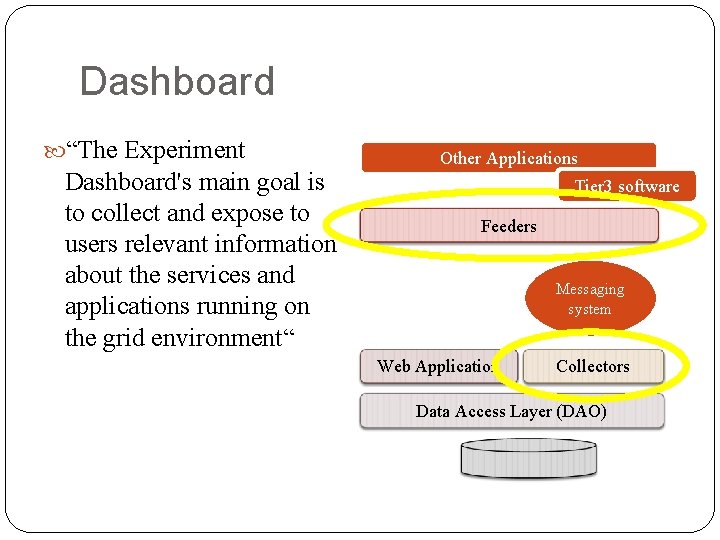

Dashboard: “The Experiment Dashboard's main goal is to collect and expose to users relevant information about the services and applications running on the grid environment.” Architecture (diagram): feeders (Tier 3 software, other applications), the messaging system, collectors, the Data Access Layer (DAO) and the web application.

MSG: the WLCG Messaging System for Grids. “Aims to help the integration and consolidation of the various grid monitoring systems used in WLCG.” Based on the ActiveMQ open-source message broker.

T3mon implementation: project structure; subsystem modules (PROOF, PBS, Condor, Lustre and XRootD monitoring modules); testing infrastructure.

Project structure: written in Python; SVN repository provided by CERN; RPM repository with a separate package for each monitoring module. Each module handles one software system to be monitored on a Tier 3 site. One configuration file serves all modules.
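The slides do not say which format the shared configuration file uses. Assuming an INI-style file with one section per module, Python's standard `configparser` is enough; the section names, keys and paths below are illustrative only.

```python
import configparser

# Illustrative layout: one section per monitoring module, shared defaults on top.
SAMPLE_CONFIG = """
[DEFAULT]
msg_destination = /topic/t3mon.demo

[pbs]
enabled = yes
log_dir = /var/spool/torque/server_priv/accounting

[lustre]
enabled = no
proc_root = /proc/fs/lustre
"""


def enabled_modules(text):
    """Return {module: settings} for every section with enabled = yes."""
    parser = configparser.ConfigParser()
    parser.read_string(text)
    return {
        name: dict(parser[name])
        for name in parser.sections()
        if parser.getboolean(name, "enabled", fallback=False)
    }


if __name__ == "__main__":
    for module, settings in enabled_modules(SAMPLE_CONFIG).items():
        print(module, settings["log_dir"], settings["msg_destination"])
```

Values in `[DEFAULT]` are inherited by every module section, which suits settings shared by all modules, while each package reads only its own section.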

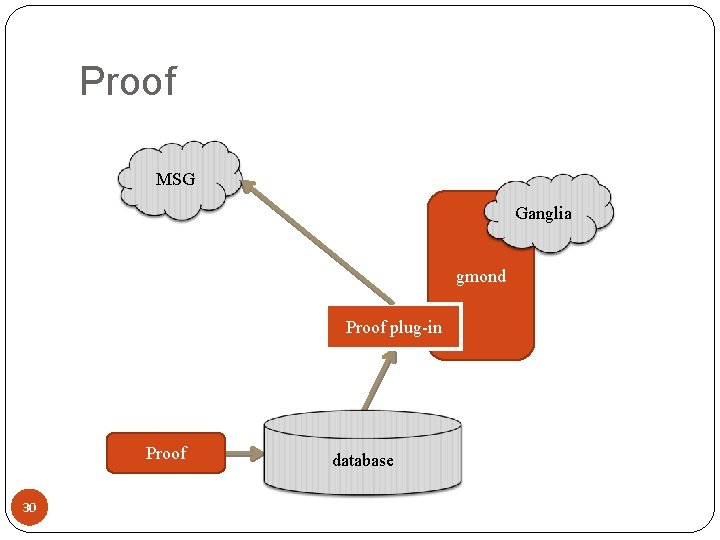

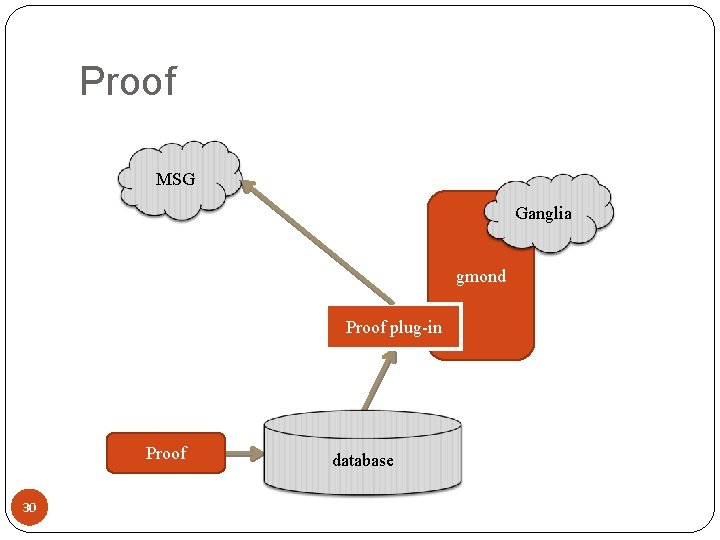

PROOF module (diagram): the PROOF plug-in reads the PROOF database and sends metrics to Ganglia (gmond) and MSG.

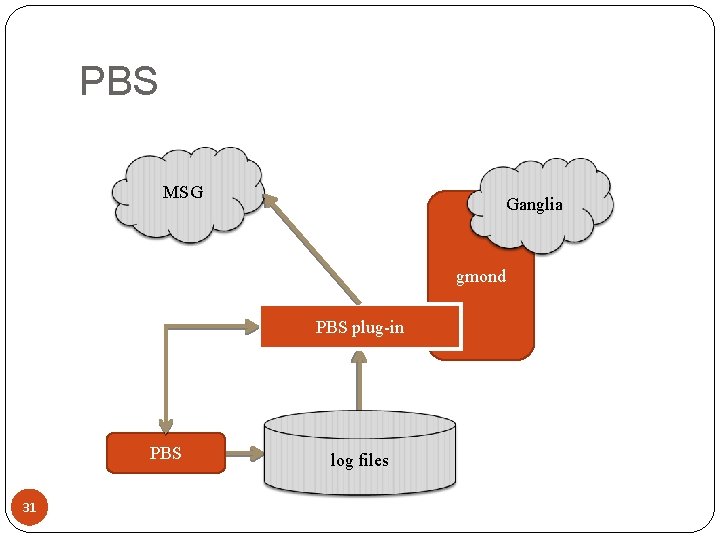

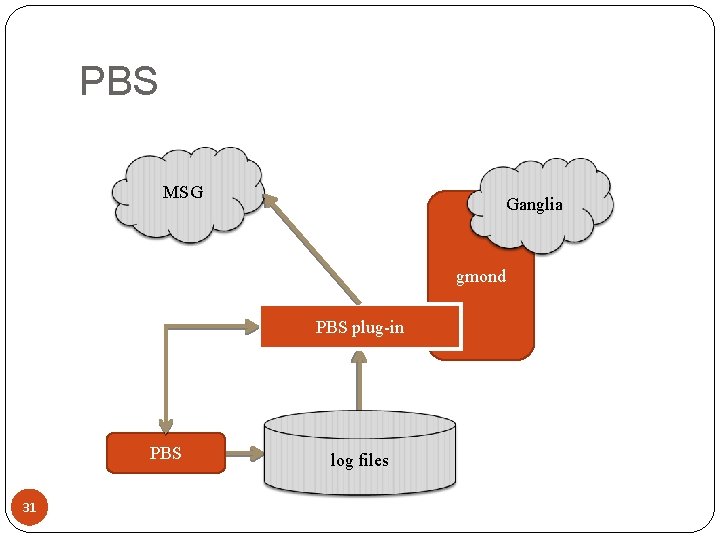

PBS module (diagram): the PBS plug-in parses PBS log files and sends metrics to Ganglia (gmond) and MSG.
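The slide only says the PBS plug-in reads log files. Assuming these are Torque/PBS accounting logs, each record is one line of the form `date time;record type;job id;key=value ...`, where type `Q` marks a queued job, `S` a started one and `E` an ended one. A sketch that tallies record types and per-user wall time of ended jobs:

```python
from collections import Counter


def parse_record(line):
    """Split 'date time;type;job_id;key=value ...' into its parts."""
    stamp, rtype, job_id, message = line.rstrip("\n").split(";", 3)
    attrs = dict(item.split("=", 1) for item in message.split() if "=" in item)
    return {"time": stamp, "type": rtype, "job_id": job_id, "attrs": attrs}


def walltime_seconds(hms):
    """Convert an HH:MM:SS string (hours may exceed 24) to seconds."""
    hours, minutes, seconds = (int(part) for part in hms.split(":"))
    return hours * 3600 + minutes * 60 + seconds


def summarize(lines):
    """Count record types and sum the wall time of ended (E) jobs per user."""
    types = Counter()
    walltime = Counter()
    for line in lines:
        if line.count(";") < 3:  # skip blank or malformed lines
            continue
        record = parse_record(line)
        types[record["type"]] += 1
        if record["type"] == "E":
            user = record["attrs"].get("user", "unknown")
            used = record["attrs"].get("resources_used.walltime", "00:00:00")
            walltime[user] += walltime_seconds(used)
    return types, walltime
```

Splitting the message on whitespace is a simplification; attribute values that contain spaces would need a more careful parser.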

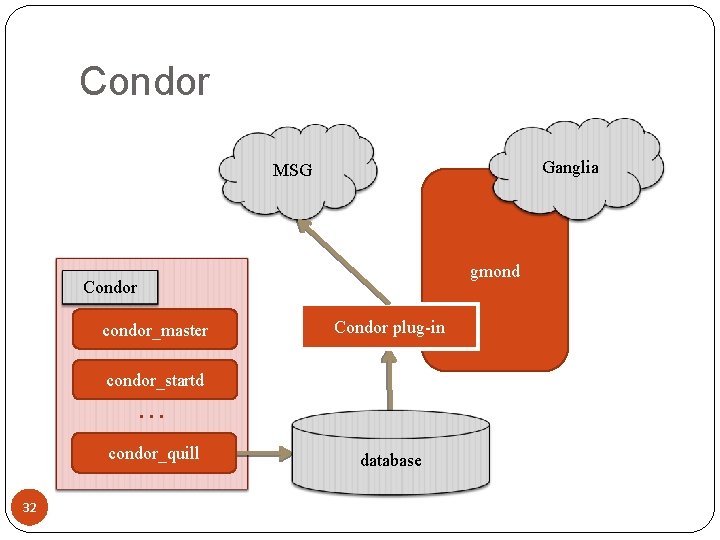

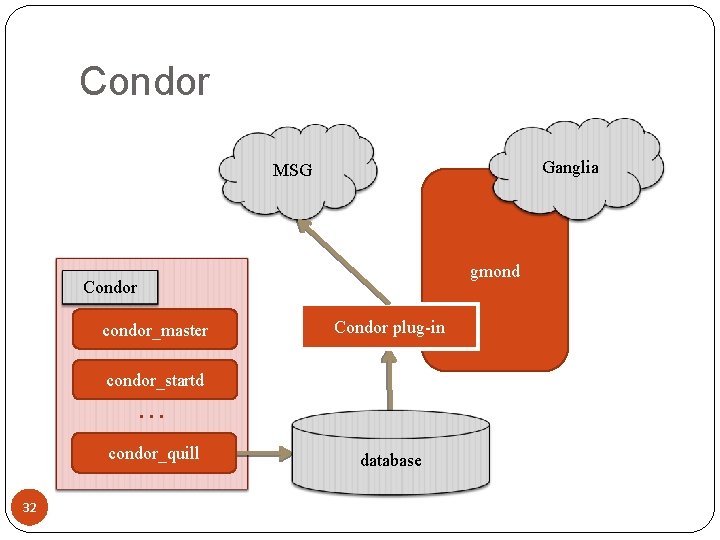

Condor module (diagram): the Condor daemons (condor_master, condor_startd, …, condor_quill) and the Quill database; the Condor plug-in reads the database and sends metrics to Ganglia (gmond) and MSG.
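Whatever the source (the Quill database in the diagram or, for example, the output of `condor_q -format "%d\n" JobStatus`), Condor (now HTCondor) reports a job's state as the integer `JobStatus` attribute. A sketch of turning those codes into per-state counts suitable for Ganglia metrics; the state names used as keys are our own labels.

```python
# Numeric JobStatus codes used by Condor/HTCondor; the names are our own labels.
JOB_STATUS = {
    1: "idle",
    2: "running",
    3: "removed",
    4: "completed",
    5: "held",
    6: "transferring_output",
    7: "suspended",
}


def count_job_states(statuses):
    """Turn an iterable of JobStatus codes (ints or numeric strings) into counts."""
    counts = {name: 0 for name in JOB_STATUS.values()}
    for status in statuses:
        name = JOB_STATUS.get(int(status), "unknown")
        counts[name] = counts.get(name, 0) + 1
    return counts


if __name__ == "__main__":
    # e.g. lines captured from condor_q output, one code per line
    sample_output = "1\n2\n2\n5\n"
    print(count_job_states(sample_output.split()))
```

Zero-filling every known state keeps the set of published metrics stable even when no job is in a given state.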

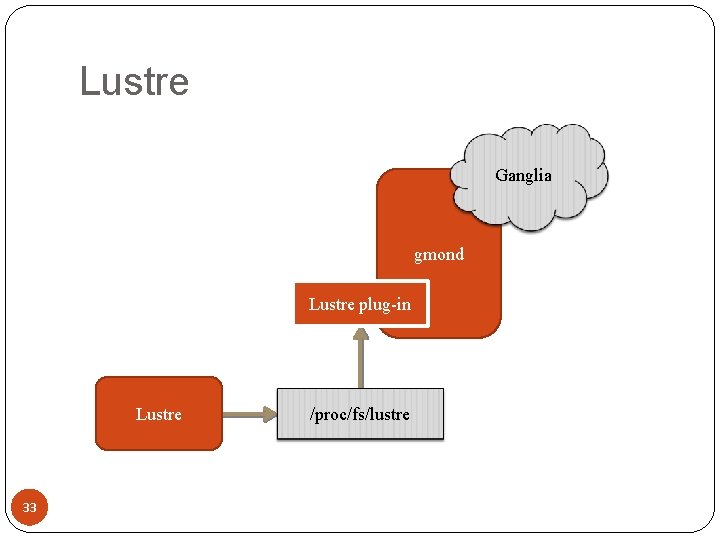

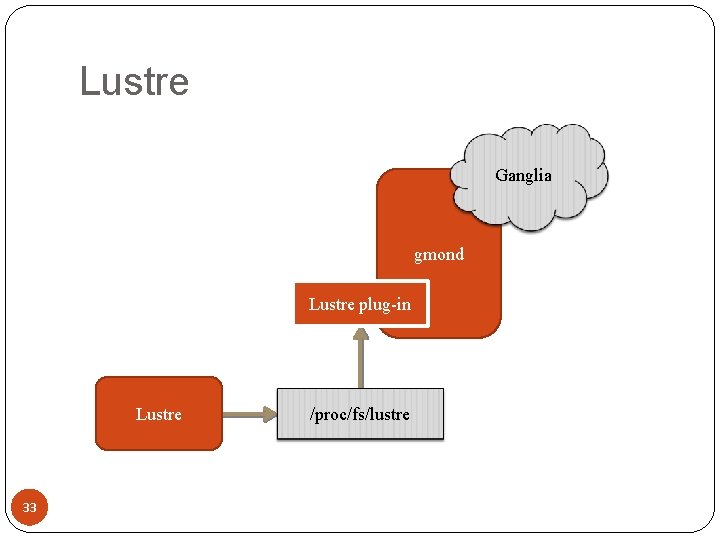

Lustre module (diagram): the Lustre plug-in reads statistics from /proc/fs/lustre and sends metrics to Ganglia (gmond).
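Lustre exposes its counters as text files under /proc/fs/lustre (for example the `stats` files of client mounts), with lines such as `read_bytes 25 samples [bytes] 0 1048576 4194304` giving the count, the unit and, where applicable, minimum, maximum and sum. Which files the T3mon plug-in reads is not stated, and the layout varies between Lustre versions and server roles; this is a sketch of parsing one such file.

```python
def parse_lustre_stats(text):
    """Parse 'name count samples [unit] [min max sum ...]' lines into dicts."""
    stats = {}
    for line in text.splitlines():
        parts = line.split()
        if len(parts) >= 4 and parts[2] == "samples":
            entry = {"count": int(parts[1]), "unit": parts[3].strip("[]")}
            if len(parts) >= 7:
                entry.update(min=int(parts[4]), max=int(parts[5]), sum=int(parts[6]))
            stats[parts[0]] = entry
    return stats  # lines such as snapshot_time are skipped


def rate(previous, current, interval_s):
    """Per-second rate of a cumulative counter between two readings."""
    return (current - previous) / interval_s
```

Because these are cumulative counters, a plug-in would typically report the difference between two readings divided by the interval, as `rate` does, rather than the raw totals.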

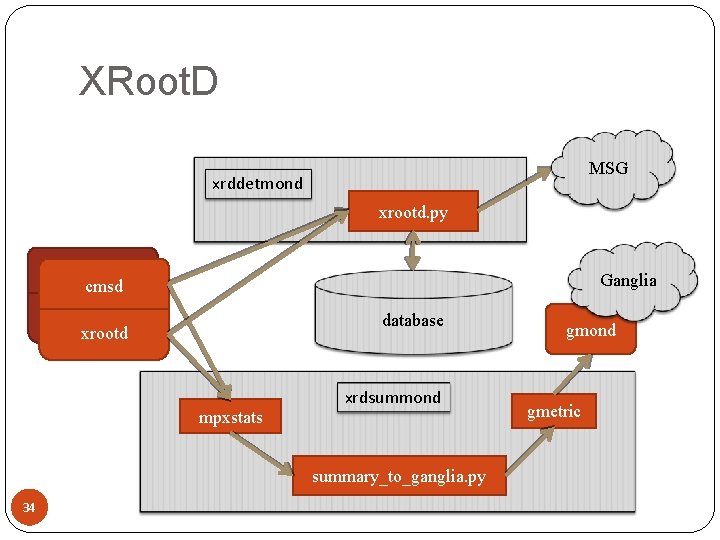

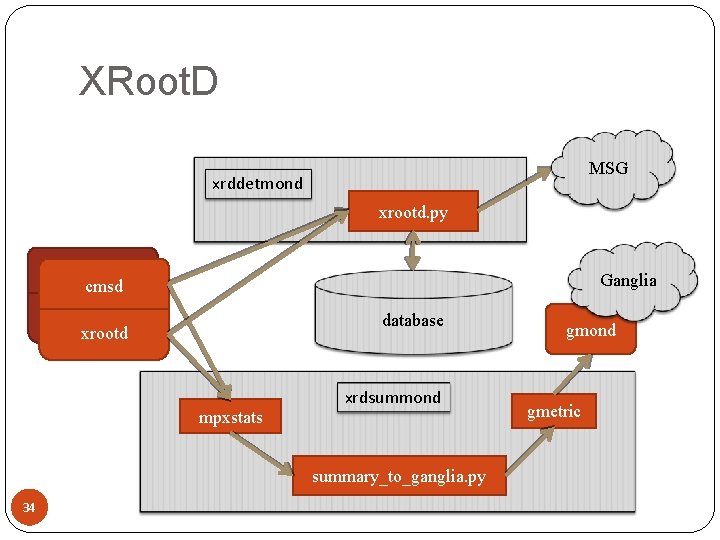

XRootD module (diagram). Components shown: the xrootd and cmsd servers; mpxstats; xrdsummond and summary_to_ganglia.py, feeding Ganglia through gmetric and gmond; xrddetmond, a database and xrootd.py, feeding MSG.
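The summary path consumes XRootD's summary monitoring stream, which arrives as small XML documents (a `<statistics>` element containing `<stats id="...">` sections). The sketch below flattens one such document into dotted metric names that could then be passed to gmetric; the `xrootd.` prefix and any sample values are illustrative, and this is not the project's summary_to_ganglia.py.

```python
import xml.etree.ElementTree as ET


def flatten_summary(xml_text, prefix="xrootd"):
    """Flatten one <statistics> document into {'prefix.id.tag...': float}."""
    root = ET.fromstring(xml_text)
    metrics = {}

    def walk(element, name):
        children = list(element)
        if not children:  # leaf: keep it only if it holds a number
            try:
                metrics[name] = float((element.text or "").strip())
            except ValueError:
                pass
            return
        for child in children:
            walk(child, f"{name}.{child.tag}")

    for stats in root.findall("stats"):  # top-level sections only
        walk(stats, f"{prefix}.{stats.get('id', 'unknown')}")
    return metrics
```

Walking the tree generically, instead of hard-coding field names, means new sections in the summary stream show up as new metrics without code changes.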

Testing infrastructure
Goals:
- document installing Ganglia on a cluster;
- document configuring Tier 3 subsystems for monitoring;
- test modules in a minimal cluster environment.
Clusters:
- PBS: 3 nodes (1 head node, 2 worker nodes)
- PROOF: 3 nodes (1 head node, 2 worker nodes)
- Condor: 3 nodes (1 head node, 1 worker node, 1 client)
- OGE: 3 nodes (1 head node, 2 worker nodes)
- Lustre: 3 nodes (1 MDS, 1 OSS, 1 client)
- XRootD: 3 nodes (1 manager, 2 servers)
- XRootD II: 3 nodes (1 manager, 2 servers)
Plus a development machine and an installation testing machine.
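As a quick check of the cluster list: seven test clusters of three nodes each, plus the development and installation testing machines, give 7 × 3 + 2 = 23 nodes. The same tally in Python (the dictionary layout is just one way to record the roles):

```python
# Node roles per test cluster, as listed on the testing-infrastructure slide.
CLUSTERS = {
    "PBS": {"head node": 1, "worker node": 2},
    "PROOF": {"head node": 1, "worker node": 2},
    "Condor": {"head node": 1, "worker node": 1, "client": 1},
    "OGE": {"head node": 1, "worker node": 2},
    "Lustre": {"MDS": 1, "OSS": 1, "client": 1},
    "XRootD": {"manager": 1, "server": 2},
    "XRootD II": {"manager": 1, "server": 2},
}
EXTRA_MACHINES = ["development machine", "installation testing machine"]


def total_nodes(clusters, extra):
    """Sum nodes over all clusters and add the stand-alone machines."""
    return sum(sum(roles.values()) for roles in clusters.values()) + len(extra)


if __name__ == "__main__":
    print(total_nodes(CLUSTERS, EXTRA_MACHINES))  # prints 23
```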

Virtual testing infrastructure: 23 nodes in total on only 2 physical servers running virtualization software (OpenVZ and Xen). Nodes can be deployed and reconfigured quickly as required; performance is not a deciding factor.

Results and plans: The project is nearing completion. Most modules are done; the PROOF and XRootD modules are already being tested on real clusters. Next steps: message consumers; OGE monitoring; testing and support; a data transfer monitoring project.

Thank you!

Tier 1 tier 2 tier 3 vocabulary

Tier 1 tier 2 tier 3 vocabulary Tiered vocabulary pyramid

Tiered vocabulary pyramid N tier architecture advantages and disadvantages

N tier architecture advantages and disadvantages Picme2.0

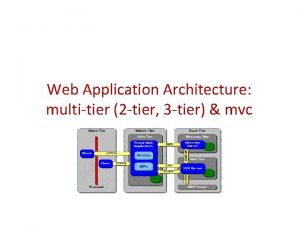

Picme2.0 3 tier web app

3 tier web app Three tier system of health infrastructure

Three tier system of health infrastructure Tier 2 advanced power strip

Tier 2 advanced power strip Maria tier 0

Maria tier 0 Tier 1 interventions examples

Tier 1 interventions examples Tier net

Tier net Tier 1 status

Tier 1 status Six tier health system in ethiopia

Six tier health system in ethiopia Lsw leader standard work

Lsw leader standard work Tier 3 isp

Tier 3 isp Nsw health standard precautions

Nsw health standard precautions Two tier data warehouse architecture

Two tier data warehouse architecture Gocc cpcs salary table 2021

Gocc cpcs salary table 2021 Bay row tier

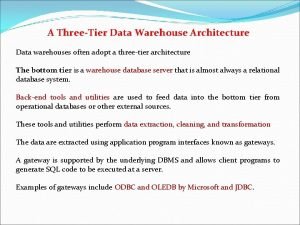

Bay row tier Three tier data warehouse

Three tier data warehouse Service tier

Service tier Muscles cut in episiotomy

Muscles cut in episiotomy Fro.comtier

Fro.comtier Modules of tier net

Modules of tier net Tier bari

Tier bari Tier 1 metrics

Tier 1 metrics K-trider level 1

K-trider level 1 Example of rhythm

Example of rhythm Nba sar tier ii

Nba sar tier ii Handowo dipo

Handowo dipo Middle tier acquisition documentation requirements

Middle tier acquisition documentation requirements Louisiana tier ii reporting

Louisiana tier ii reporting Tier.net training manual

Tier.net training manual Application layer

Application layer Tier net

Tier net Zenia chopra

Zenia chopra Two pillars of wot

Two pillars of wot Modern siem

Modern siem Telekommunikációs hálózatok elte

Telekommunikációs hálózatok elte Tier 1 isp

Tier 1 isp