Thumbs up? Sentiment Classification using Machine Learning Techniques

Thumbs up? Sentiment Classification using Machine Learning Techniques
Zoe Grippo, Alaz Tanyeri, Leon Zhan

Introduction
● Large body of prior work on topical categorization
  ○ The paper instead categorizes by sentiment: positive or negative
● Data set: movie reviews from IMDb
● 3 machine learning methods: they outperform human-selected lexical baselines
  ○ Topic classification remains more accurate than sentiment classification
● Issue: topics are often identifiable by keywords alone, while sentiment can be expressed more subtly
  ○ "How could anyone sit through this movie?"
  ○ Missing long-term context

Previous Work
● Classifying documents according to their source or source style
● Determining the genre of texts; identifying features that indicate subjectivity
● Lexical approaches in which humans choose the dictionary entries
● Similar work: unsupervised learning based on the mutual information between document phrases and the words “excellent” and “poor”
● Groundbreaking: uses supervised machine learning methods that require no prior knowledge

Movie Review Data: Reviews From IMDb
● IMDb database: large online collection of movie reviews
  ○ Only reviews with numerical scores; limit of 20 reviews per user
  ○ Ratings are extracted, and reviews are converted to positive, negative, or neutral based on star ratings out of five
● Movie reviews are reported to be the most difficult of several domains for sentiment classification
● Corpus: 752 negative reviews and 1301 positive reviews, from 144 reviewers in total

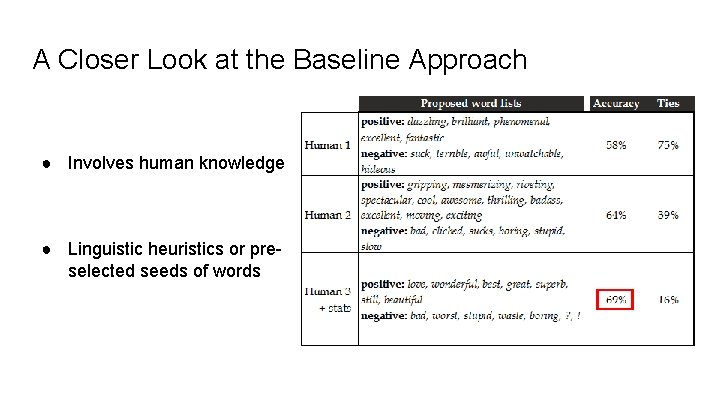

A Closer Look at the Baseline Approach
● Involves human knowledge
● Linguistic heuristics or preselected sets of seed words

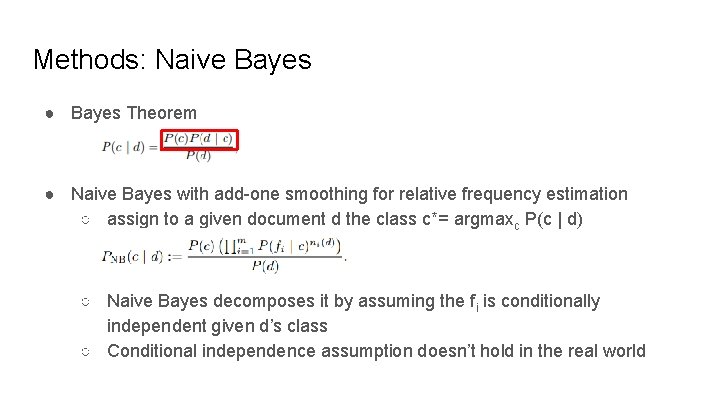
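
A minimal sketch of such a word-list baseline: count how many tokens fall in a hand-picked positive list versus a hand-picked negative list and pick the larger. The word lists, `tokenize`, and `baseline_classify` are assumptions made for illustration; they are not the lists or code behind the slides.

```python
import re

# Illustrative seed lists, assumed for this sketch (not taken from the slides).
POSITIVE_WORDS = {"excellent", "brilliant", "fantastic", "love"}
NEGATIVE_WORDS = {"terrible", "awful", "bad", "boring"}


def tokenize(text):
    """Lowercase the text and split it into simple word tokens."""
    return re.findall(r"[a-z']+", text.lower())


def baseline_classify(text):
    """Label a review by comparing counts of positive and negative seed words."""
    tokens = tokenize(text)
    positive_hits = sum(tok in POSITIVE_WORDS for tok in tokens)
    negative_hits = sum(tok in NEGATIVE_WORDS for tok in tokens)
    if positive_hits > negative_hits:
        return "positive"
    if negative_hits > positive_hits:
        return "negative"
    return "tie"  # no seed word present, or equal counts


print(baseline_classify("A brilliant, excellent film"))
print(baseline_classify("How could anyone sit through this movie?"))
```

The second call contains no seed word at all, which is the kind of subtly expressed sentiment a keyword list cannot pick up.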

Methods: Naive Bayes
● Bayes' theorem
● Naive Bayes with add-one smoothing for relative-frequency estimation
  ○ Assign to a given document d the class c* = argmax_c P(c | d)
  ○ Naive Bayes decomposes P(d | c) by assuming the features f_i are conditionally independent given d's class
  ○ The conditional independence assumption does not hold in the real world

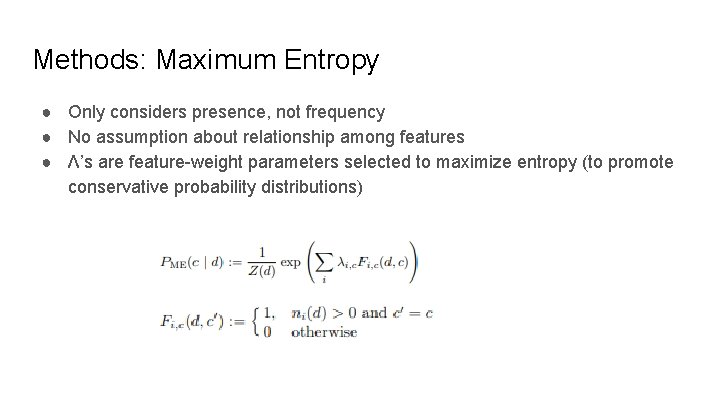
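
The decision rule c* = argmax_c P(c | d) with add-one smoothing can be sketched as follows. This is a toy multinomial Naive Bayes written for illustration, not the implementation used in the paper; the function names and the two training documents are assumptions.

```python
import math
from collections import Counter, defaultdict


def train_naive_bayes(labeled_docs):
    """labeled_docs: iterable of (tokens, label) pairs."""
    doc_counts = Counter()                  # number of documents per class
    feature_counts = defaultdict(Counter)   # token counts per class
    vocab = set()
    for tokens, label in labeled_docs:
        doc_counts[label] += 1
        feature_counts[label].update(tokens)
        vocab.update(tokens)
    total_docs = sum(doc_counts.values())
    log_prior = {c: math.log(n / total_docs) for c, n in doc_counts.items()}
    return log_prior, feature_counts, vocab


def classify_naive_bayes(tokens, model):
    """Return argmax_c of log P(c) + sum_i log P(f_i | c)."""
    log_prior, feature_counts, vocab = model
    scores = {}
    for c in log_prior:
        # Add-one smoothing: (count + 1) / (total tokens in class + |V|)
        denom = sum(feature_counts[c].values()) + len(vocab)
        score = log_prior[c]
        for tok in tokens:
            if tok in vocab:  # tokens never seen in training are skipped
                score += math.log((feature_counts[c][tok] + 1) / denom)
        scores[c] = score
    return max(scores, key=scores.get)


# Toy training data, assumed for illustration only.
toy_docs = [("great fun great".split(), "pos"), ("dull boring".split(), "neg")]
toy_model = train_naive_bayes(toy_docs)
print(classify_naive_bayes(["great"], toy_model))
```

Working in log space avoids underflow when many small probabilities are multiplied, which matters for documents as long as full movie reviews.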

Methods: Maximum Entropy
● Only considers presence, not frequency
● No assumption about the relationships among features
● The λ's are feature-weight parameters, set to maximize the entropy of the induced distribution subject to constraints from the training data (this favors conservative, near-uniform probability distributions)
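
As a rough illustration, a maximum entropy classifier scores each class with an exponential of summed feature weights and then normalizes over the classes. The sketch below assumes presence-only features and hand-set weights; `maxent_probabilities` and the example weights are hypothetical, and no training procedure is shown.

```python
import math


def maxent_probabilities(tokens, weights, classes):
    """P(c | d) proportional to exp(sum of weights[(feature, c)] over features present in d)."""
    present = set(tokens)  # presence only: repeated tokens count once
    scores = {
        c: sum(weights.get((feat, c), 0.0) for feat in present)
        for c in classes
    }
    top = max(scores.values())  # subtract the max for numerical stability
    exp_scores = {c: math.exp(s - top) for c, s in scores.items()}
    normalizer = sum(exp_scores.values())
    return {c: v / normalizer for c, v in exp_scores.items()}


# Hypothetical weights, chosen by hand for illustration.
example_weights = {("love", "pos"): 1.0, ("love", "neg"): -1.0}
print(maxent_probabilities(["love", "love", "plot"], example_weights, ["pos", "neg"]))
```

Features with no weight contribute nothing, so a document containing none of the weighted features gets a uniform distribution over the classes.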

Methods: Support Vector Machines
● Construct a separating hyperplane between document vector representations
● Large-margin rather than probabilistic classifiers, unlike Naive Bayes and Maximum Entropy

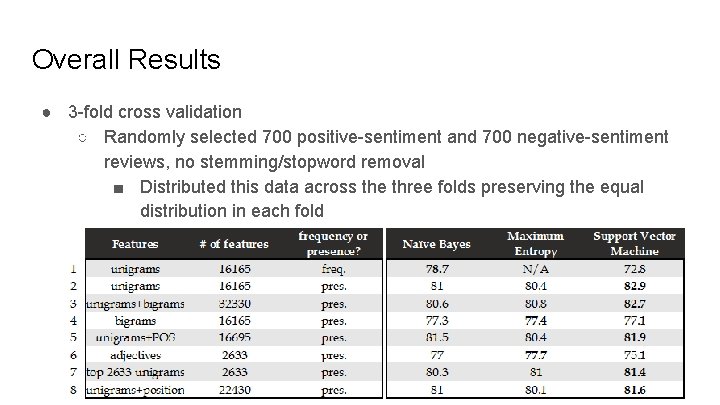
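
To make the hyperplane and margin concrete, the sketch below classifies a document vector by the sign of w·x + b and computes the geometric margin of a given hyperplane on a labeled set. It does not train an SVM; the weight vector, bias, and data points are made up for illustration.

```python
import math


def decision_value(w, b, x):
    """Signed score w.x + b; its sign gives the side of the hyperplane."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def predict(w, b, x):
    """Return +1 or -1 depending on the side of the hyperplane."""
    return 1 if decision_value(w, b, x) >= 0 else -1


def geometric_margin(w, b, labeled_points):
    """Smallest signed distance from any labeled point to the hyperplane."""
    norm = math.sqrt(sum(wi * wi for wi in w))
    return min(y * decision_value(w, b, x) / norm for x, y in labeled_points)


# Hypothetical two-feature document vectors with labels +1 / -1.
points = [([1, 0], 1), ([0, 1], -1)]
w, b = [1, -1], 0
print([predict(w, b, x) for x, _ in points])
print(geometric_margin(w, b, points))
```

An SVM trainer searches for the w and b that make this margin as large as possible, which is what distinguishes it from the two probabilistic models.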

Overall Results
● 3-fold cross-validation
  ○ Randomly selected 700 positive-sentiment and 700 negative-sentiment reviews; no stemming or stopword removal
    ■ The data was distributed across the three folds, preserving the equal class distribution in each fold

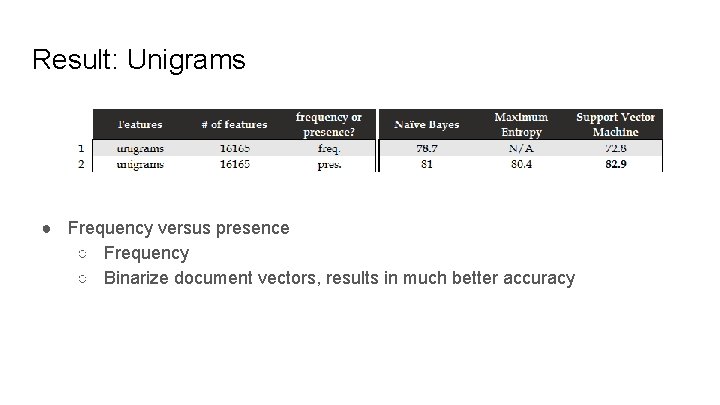
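
One way to build class-balanced folds is to shuffle each class separately and deal its documents round-robin into the folds. This is a sketch under that assumption; `stratified_folds`, the seed, and the integer stand-ins for reviews are hypothetical. Because 700 is not divisible by 3, the folds here differ in size by one document per class, which may not match how the original split was done.

```python
import random


def stratified_folds(pos_docs, neg_docs, k=3, seed=0):
    """Split two classes into k folds with equal class counts in every fold."""
    rng = random.Random(seed)
    pos, neg = list(pos_docs), list(neg_docs)
    rng.shuffle(pos)
    rng.shuffle(neg)
    folds = [[] for _ in range(k)]
    for i, doc in enumerate(pos):
        folds[i % k].append((doc, "pos"))
    for i, doc in enumerate(neg):
        folds[i % k].append((doc, "neg"))
    return folds


# Integers stand in for the 700 positive and 700 negative reviews.
folds = stratified_folds(range(700), range(700))
for fold in folds:
    n_pos = sum(1 for _, label in fold if label == "pos")
    print(len(fold), n_pos, len(fold) - n_pos)
```

In each round of cross-validation, one fold is held out for testing and the other two are used for training.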

Result: Unigrams
● Frequency versus presence
  ○ Frequency: features are word counts
  ○ Presence: binarize the document vectors, which results in much better accuracy

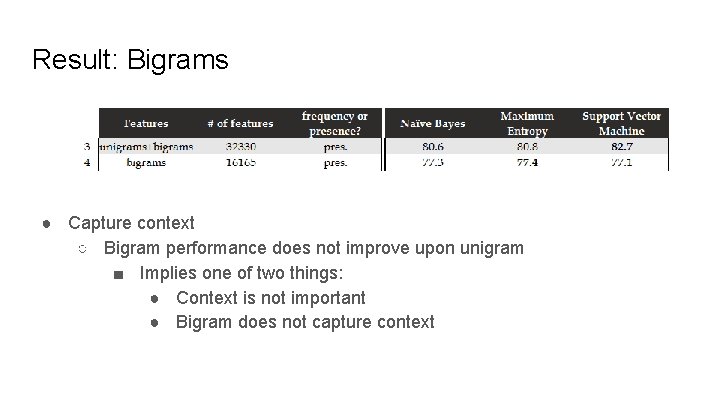
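
The difference between the two encodings is small in code. A sketch, assuming a fixed vocabulary order; the function names and the three-word vocabulary are illustrative only.

```python
from collections import Counter


def frequency_vector(tokens, vocab):
    """One entry per vocabulary word: how many times it occurs."""
    counts = Counter(tokens)
    return [counts[word] for word in vocab]


def presence_vector(tokens, vocab):
    """One entry per vocabulary word: 1 if it occurs at all, else 0."""
    present = set(tokens)
    return [1 if word in present else 0 for word in vocab]


vocab = ["good", "bad", "plot"]
tokens = "good good plot".split()
print(frequency_vector(tokens, vocab))  # [2, 0, 1]
print(presence_vector(tokens, vocab))   # [1, 0, 1]
```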

Result: Bigrams
● Intended to capture context
  ○ Bigram performance does not improve upon unigram performance
    ■ Implies one of two things:
      ● Context is not important
      ● Bigrams do not capture the relevant context

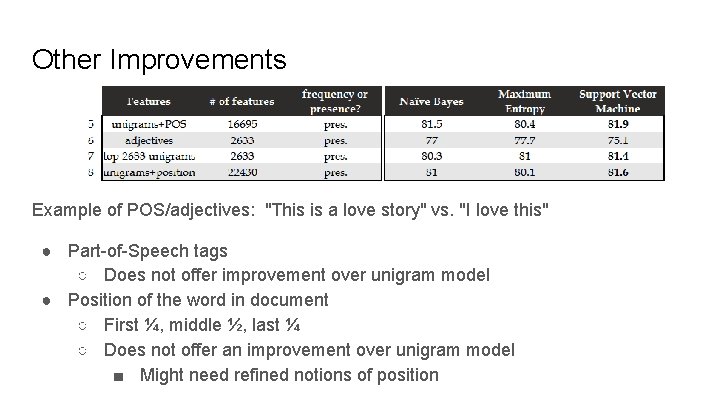
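
For reference, bigram features are simply pairs of adjacent tokens. A minimal sketch; the `bigrams` helper and the example phrase are assumptions for illustration.

```python
def bigrams(tokens):
    """Return the list of adjacent token pairs."""
    return list(zip(tokens, tokens[1:]))


print(bigrams("not very good".split()))
# [('not', 'very'), ('very', 'good')]
```

A pair such as ('not', 'very') keeps the negation next to the word that follows it, but anything further than one word away is still lost, which is one possible reading of the result on the slide.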

Other Improvements
Example of POS/adjectives: "This is a love story" vs. "I love this"
● Part-of-speech tags
  ○ Do not offer an improvement over the unigram model
● Position of the word in the document
  ○ First ¼, middle ½, last ¼
  ○ Does not offer an improvement over the unigram model
    ■ Might need more refined notions of position

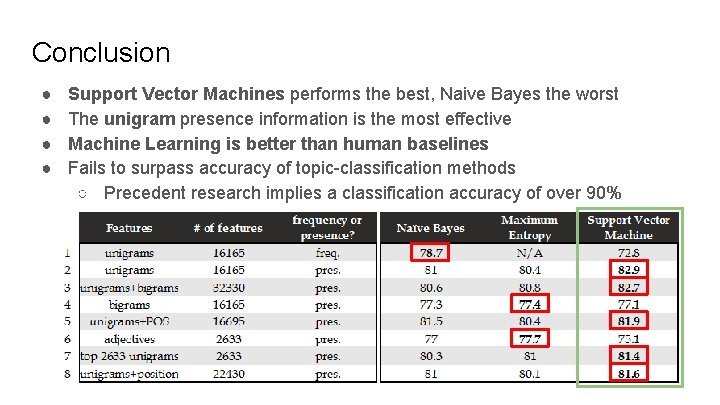
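
The position feature can be sketched by tagging each token with the region of the document it falls in. The `position_tagged` helper and the `_first` / `_middle` / `_last` suffixes are assumptions for illustration; the slides do not specify how the tags were encoded.

```python
def position_tagged(tokens):
    """Append a region tag to each token: first quarter, middle half, or last quarter."""
    n = len(tokens)
    tagged = []
    for i, tok in enumerate(tokens):
        fraction = i / n  # relative position of this token in the document
        if fraction < 0.25:
            region = "first"
        elif fraction < 0.75:
            region = "middle"
        else:
            region = "last"
        tagged.append(f"{tok}_{region}")
    return tagged


print(position_tagged("a b c d e f g h".split()))
```

With this encoding the same word in the opening and closing of a review becomes two distinct features, so a classifier can weight them differently.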

Conclusion
● Support Vector Machines perform the best, Naive Bayes the worst
● Unigram presence information is the most effective
● Machine learning is better than the human baselines
● Fails to surpass the accuracy of topic-classification methods
  ○ Prior research reports topic-classification accuracies of over 90%

Limitations
● “Thwarted expectations” phenomenon
  “This film should be brilliant. It sounds like a great plot, the actors are first grade, and the supporting cast is good as well, and Stallone is attempting to deliver a good performance. However, it can’t hold up”
  or
  “I hate the Spice Girls. ... [3 things the author hates about them] ... Why I saw this movie is a really, really long story, but I did, and one would think I’d despise every minute of it. But ... Okay, I’m really ashamed of it, but I enjoyed it. I mean, I admit it’s a really awful movie ... the ninth floor of hell ... The plot is such a mess that it’s terrible. But I loved it.”
● Further improvements:
  ○ A way of determining the focus of each sentence

- Slides: 14