Thread Contracts for Safe Parallelism

Thread Contracts for Safe Parallelism

Rajesh Karmani, P Madhusudan, Brandon Moore
University of Illinois at Urbana-Champaign
PPoPP 2011
NSF

What’s the problem?

- Data races in parallel programs are just plain wrong.
  - For example, C++ gives no semantics for programs with races!
- But there is currently no mechanism for building large data-race-free programs without significantly changing the programming style.

Proposal of this paper:

- An annotation mechanism for data-parallel programs that helps build large data-race-free programs
  - Annotation augments existing code
  - Annotation ⇒ race-freedom (automatically checked)

Data-race and language semantics

- Data race: “simultaneous” accesses to a memory location by two different threads, where one of them is a write.
- Memory models for programming languages
  - Specify what exactly will be ensured for a read instruction in the program
  - Java memory model [Manson et al., POPL ’05]
    - A complex, buggy model for programs with races
  - C++ (new version) [Boehm, Adve, PLDI ’08]
    - No semantics for programs with races!

Consensus: the Data-Race-Free (DRF) guarantee. The memory model assures that data-race-free programs are sequentially consistent.

ACCORD (Annotations for Concurrent Co-ORDination)

- Lightweight annotations that help develop parallel software
  - Formally express the coordination strategy that the programmer has in mind
  - Check the strategy (for data-race-freedom) and its implementation
- Annotations have been successful in avoiding memory errors in sequential programs
  - Eiffel, JML, Microsoft Code Contracts, Cofoja
- NOT a type system to express memory regions or program synchronization

In this work…

- Annotations for data-race freedom in
  - data-parallel programs that access arrays, vectors, matrices
  - with fork-join synchronization and locks
  - typical for OpenMP applications
- Annotations express the sharing strategy

![Illustration: Parallel Matrix Multiplication](https://slidetodoc.com/presentation_image_h2/15372e3fc65c4d0d4bb7df71b6d118e9/image-6.jpg)

Illustration: Parallel Matrix Multiplication

    void mm (int[m, n] A, int[n, p] B) {
      for (int i := 0; i < m; i := i + 1)
        for (int j := 0; j < n; j := j + 1)
          foreach (int k := 0; k < p; k := k + 1) {
            C[i, k] := C[i, k] + (A[i, j] * B[j, k]);
          }
    }

The foreach loop:

- semantically creates p threads, one for each iteration of the loop
- has an implicit barrier at the end of the loop

![Illustration: Parallel Matrix Multiplication (ACCORD)](https://slidetodoc.com/presentation_image_h2/15372e3fc65c4d0d4bb7df71b6d118e9/image-7.jpg)

Illustration: Parallel Matrix Multiplication (ACCORD)

    void mm (int[m, n] A, int[n, p] B) {
      for (int i := 0; i < m; i := i + 1)
        for (int j := 0; j < n; j := j + 1)
          foreach (int k := 0; k < p; k := k + 1)
            reads A[i, j], B[j, k], C[i, k]    // annotation
            writes C[i, k]
          {
            C[i, k] := C[i, k] + (A[i, j] * B[j, k]);
          }
    }

- The annotation specifies the read and write sets for the kth thread, for each k.
- The sets can be an over-approximation.
- The annotation uses the current values of the program variables (i, j) and the thread id (k).

![Fully Parallel Matrix Multiplication](https://slidetodoc.com/presentation_image_h2/15372e3fc65c4d0d4bb7df71b6d118e9/image-8.jpg)

Fully Parallel Matrix Multiplication

    void mm (int[m, n] A, int[n, p] B) {
      foreach (int i := 0; i < m; i := i + 1)
        reads A[i, $x], B[$x, $y], C[i, $y]
        writes C[i, $y]
        where 0 <= $x and $x < n and 0 <= $y and $y < p
      {
        for (int j := 0; j < n; j := j + 1)
          foreach (int k := 0; k < p; k := k + 1)
            reads A[i, j], B[j, k], C[i, k]
            writes C[i, k]
          {
            C[i, k] := C[i, k] + (A[i, j] * B[j, k]);
          }
      }
    }

- Auxiliary variables ($x, $y) are quantified over the specified ranges.
- The where clause holds arithmetic and logical constraints.

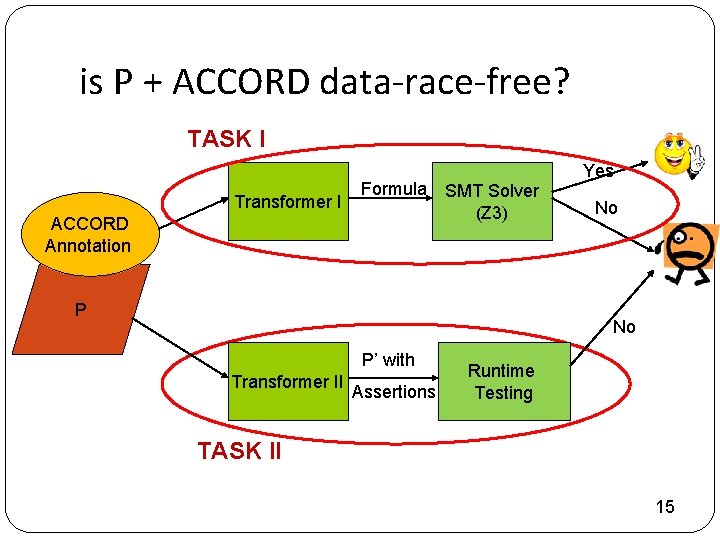

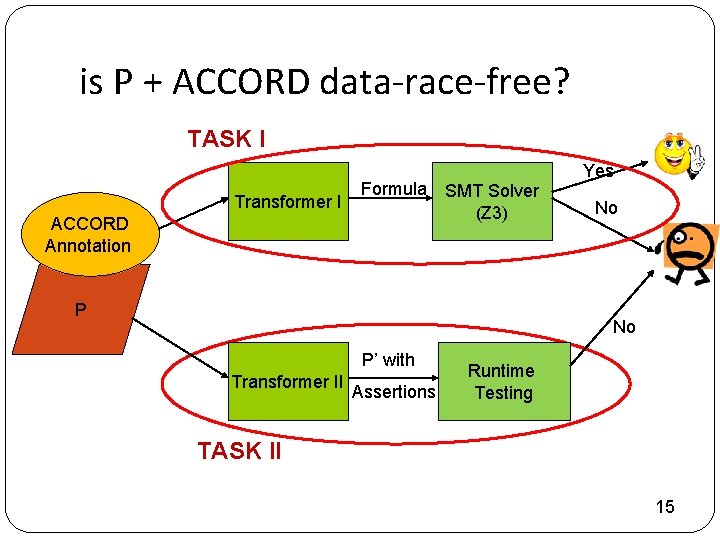

Checking data-race-freedom

Given a program with an ACCORD annotation, the program has no data races iff:

- I. The ACCORD annotations imply race-freedom.
  - Check the strategy, independent of the program.
- II. The program satisfies the ACCORD annotations.
  - Check the program implementation against the strategy.

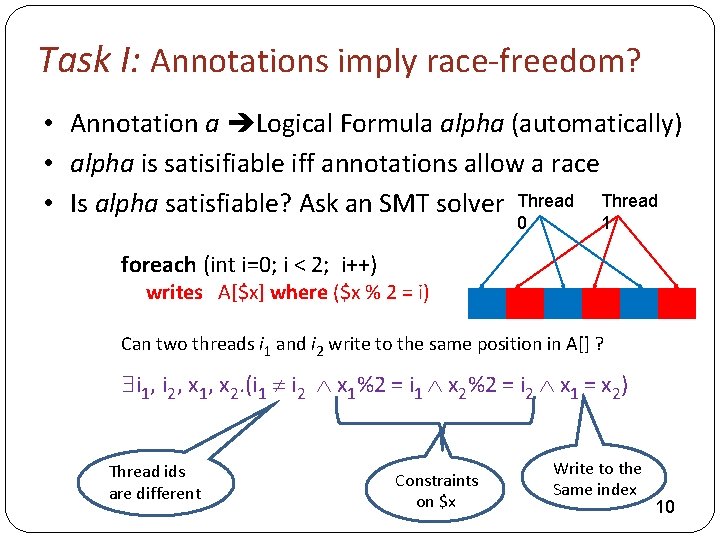

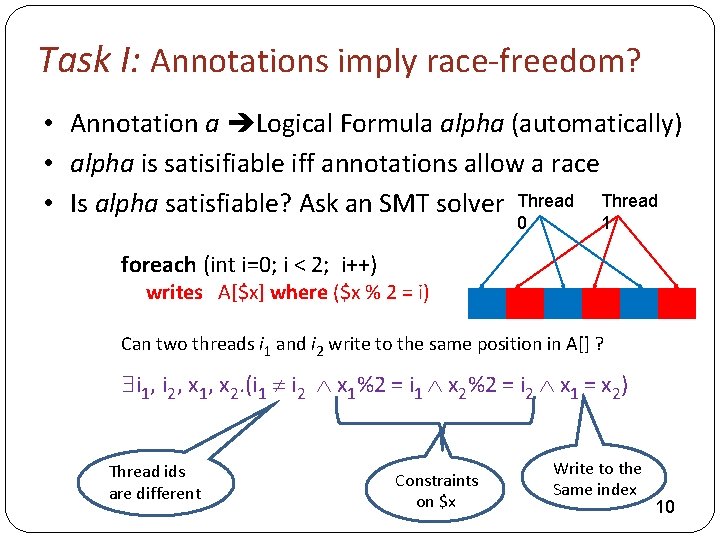

Task I: Annotations imply race-freedom?

- Annotation ⇒ logical formula α (generated automatically)
- α is satisfiable iff the annotations allow a race
- Is α satisfiable? Ask an SMT solver.

Example with two threads (thread 0 and thread 1):

    foreach (int i = 0; i < 2; i++)
      writes A[$x] where ($x % 2 = i)

Can two threads i1 and i2 write to the same position in A[]?

$$\exists i_1, i_2, x_1, x_2.\ \left( i_1 \neq i_2 \;\land\; x_1 \bmod 2 = i_1 \;\land\; x_2 \bmod 2 = i_2 \;\land\; x_1 = x_2 \right)$$

The conjuncts state, in order, that the thread ids are different, that the constraints on `$x` hold for each thread, and that both threads write to the same index.
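The satisfiability question above can be tried out on a small, bounded domain. The following is only an illustrative sketch: it enumerates candidate witnesses by brute force instead of calling an SMT solver, so a `None` result means "no race within the bound", not a proof for all integers. The names `find_race`, `parity_split`, and `unconstrained` are hypothetical and not part of ACCORD.

```python
from itertools import product

def find_race(num_threads, index_bound, may_write):
    """Search for (i1, i2, x): two distinct threads that may both write index x.

    may_write(i, x) encodes the where clause of the annotation for thread i.
    Returns the first witness found, or None if none exists below index_bound.
    """
    for i1, i2 in product(range(num_threads), repeat=2):
        if i1 == i2:          # thread ids must be different
            continue
        for x in range(index_bound):
            if may_write(i1, x) and may_write(i2, x):   # same index for both
                return (i1, i2, x)
    return None

# Annotation from the slide: thread i writes A[$x] where $x % 2 = i.
def parity_split(i, x):
    return x % 2 == i

# Hypothetical weaker annotation with no constraint on $x.
def unconstrained(i, x):
    return True

print(find_race(2, 16, parity_split))    # None: no witness in the bound
print(find_race(2, 16, unconstrained))   # (0, 1, 0): both threads may write A[0]
```

A real checker would hand the formula to a solver so that the answer holds for unbounded indices; the enumeration here only shows what a satisfying assignment looks like.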

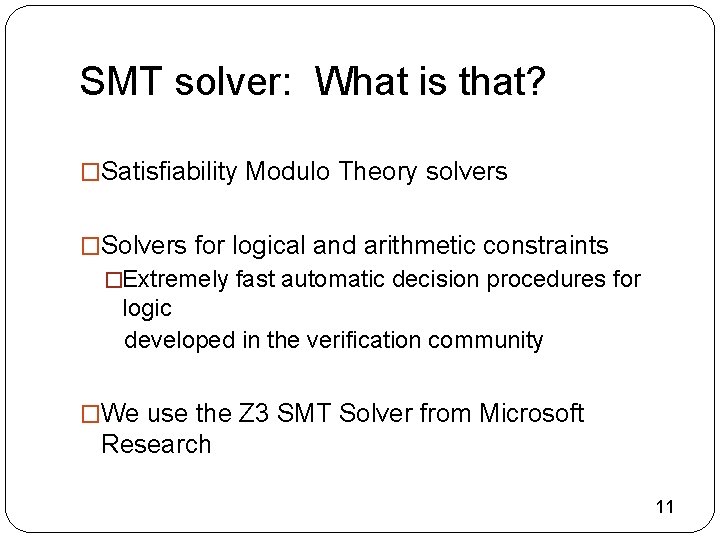

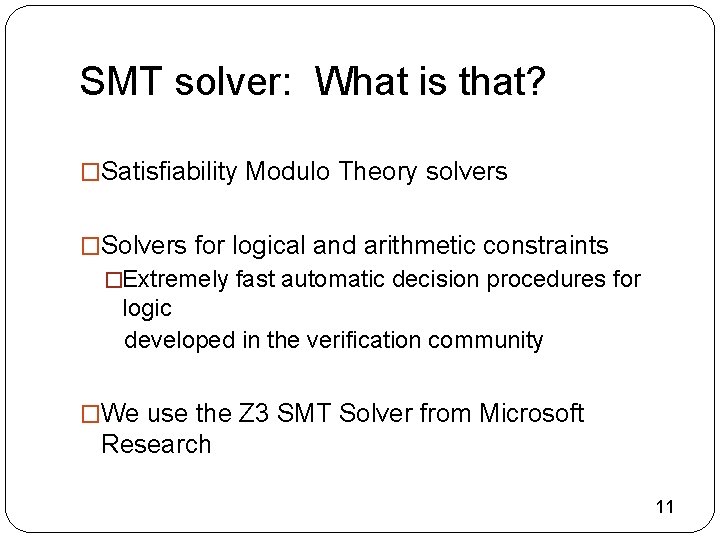

SMT solver: What is that?

- Satisfiability Modulo Theories solvers
- Solvers for logical and arithmetic constraints
- Extremely fast automatic decision procedures for logic, developed in the verification community
- We use the Z3 SMT solver from Microsoft Research

![Buggy Parallel Matrix Multiplication](https://slidetodoc.com/presentation_image_h2/15372e3fc65c4d0d4bb7df71b6d118e9/image-12.jpg)

Buggy Parallel Matrix Multiplication

    void mm (int[m, n] A, int[n, p] B) {
      foreach (int i := 0; i < m; i := i + 1)
        reads A[i, $x], B[$x, $y], C[i, $y]
        writes C[i, $y]
        where 0 <= $x and $x < n and 0 <= $y and $y < p
      foreach (int j := 0; j < n; j := j + 1)
        reads A[i, j], B[j, $y], C[i, $y]
        writes C[i, $y]
        where 0 <= $y and $y < p
      foreach (int k := 0; k < p; k := k + 1)
        reads A[i, j], B[j, k], C[i, k]
        writes C[i, k]
      {
        C[i, k] := C[i, k] + (A[i, j] * B[j, k]);
      }
    }

Race formula for two threads j1 and j2 of the foreach over j:

$$\exists j_1, j_2.\ \left( j_1 \neq j_2 \;\land\; 0 \le y \;\land\; y < p \;\land\; i = i \;\land\; y = y \right)$$

Satisfiable; hence the annotation does not imply race-freedom.
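To see concretely why the annotation on the `foreach` over `j` admits a race while the one on the `foreach` over `i` does not, the declared write sets can be enumerated for small dimensions. This is a hedged sketch with hypothetical helper names; it compares sets by brute force rather than by solving a formula.

```python
def write_set_over_i(i, p):
    """Write set declared for thread i of the outer foreach: C[i, $y], 0 <= $y < p."""
    return {(i, y) for y in range(p)}

def write_set_over_j(i, j, p):
    """Write set declared for thread j of the buggy foreach: C[i, $y], 0 <= $y < p.

    The thread id j does not appear in the set, which is the root of the race.
    """
    return {(i, y) for y in range(p)}

def overlapping_pairs(sets_by_thread):
    """Return all pairs of distinct thread ids whose write sets intersect."""
    ids = sorted(sets_by_thread)
    return [(a, b)
            for pos, a in enumerate(ids)
            for b in ids[pos + 1:]
            if sets_by_thread[a] & sets_by_thread[b]]

m, n, p = 2, 3, 2   # small illustrative dimensions
outer = {i: write_set_over_i(i, p) for i in range(m)}
middle = {j: write_set_over_j(0, j, p) for j in range(n)}   # fixed i = 0

print(overlapping_pairs(outer))    # []
print(overlapping_pairs(middle))   # [(0, 1), (0, 2), (1, 2)]
```

Each thread of the outer loop owns a distinct row of `C`, whereas every thread of the loop over `j` declares the same row, so any two of them conflict.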

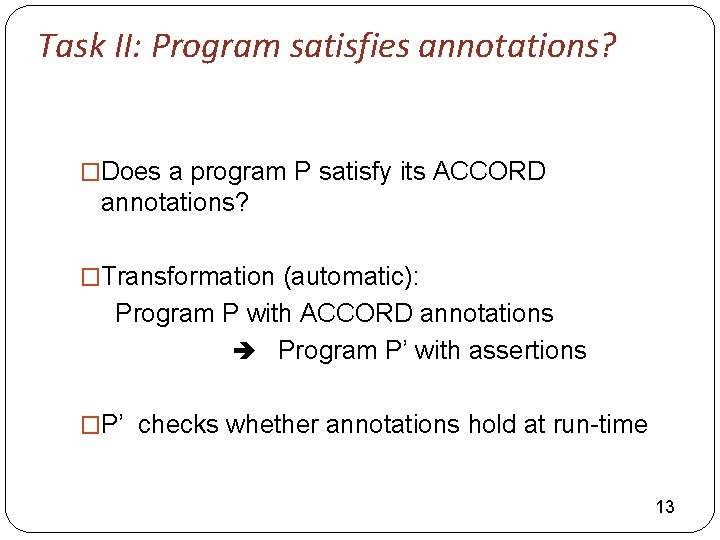

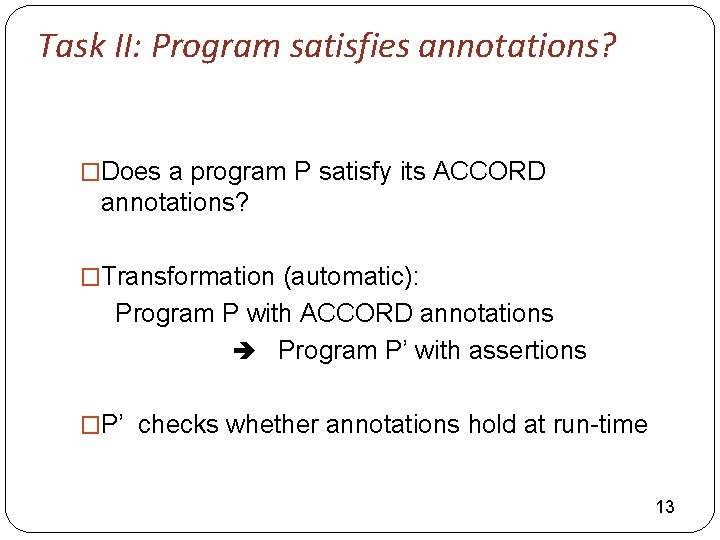

Task II: Program satisfies annotations?

- Does a program P satisfy its ACCORD annotations?
- Transformation (automatic): program P with ACCORD annotations → program P’ with assertions
- P’ checks whether the annotations hold at run time

![Task II: Program satisfies annotations?](https://slidetodoc.com/presentation_image_h2/15372e3fc65c4d0d4bb7df71b6d118e9/image-14.jpg)

Task II: Program satisfies annotations?

    void mm (int[m, n] A, int[n, p] B) {
      foreach (int i := 0; i < m; i := i + 1)
        // reads A[i, $x], B[$x, $y], C[i, $y] writes C[i, $y]
        // where 0 <= $x and $x < n and 0 <= $y and $y < p
      {
        n’ := n; p’ := p;                                        // memoize variables
        for (int j := 0; j < n; j := j + 1)
          for (int k := 0; k < p; k := k + 1) {
            // annotation converted to assertions
            assert (i = i and 0 <= j and j < n’);                // A[i, $x]
            assert (0 <= j and j < n’ and 0 <= k and k < p’);    // B[$x, $y]
            assert (i = i and 0 <= k and k < p’);                // C[i, $y]
            C[i, k] := C[i, k] + (A[i, j] * B[j, k]);
          }
      }
    }
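The transformation to assertions can be imitated by hand in an ordinary language. The sketch below is an assumption-laden illustration, not the ACCORD transformer: it runs the outer loop sequentially, memoizes `n` and `p` on loop entry, and asserts before each access that the index lies in the set declared for thread `i`. The `row_for_write` parameter is a hypothetical hook used only to inject a bug.

```python
def allowed(array, row, col, i, n0, p0):
    """Membership test for the sets declared on the foreach over i."""
    if array == "A":                      # A[i, $x]
        return row == i and 0 <= col < n0
    if array == "B":                      # B[$x, $y]
        return 0 <= row < n0 and 0 <= col < p0
    if array == "C":                      # C[i, $y]
        return row == i and 0 <= col < p0
    return False

def mm_checked(A, B, row_for_write=lambda i: i):
    m, n, p = len(A), len(B), len(B[0])
    C = [[0] * p for _ in range(m)]
    for i in range(m):                    # stands in for the foreach over i
        n0, p0 = n, p                     # memoized values of n and p
        for j in range(n):
            for k in range(p):
                r = row_for_write(i)      # row of C this thread writes
                assert allowed("A", i, j, i, n0, p0)
                assert allowed("B", j, k, i, n0, p0)
                assert allowed("C", r, k, i, n0, p0), "write outside declared set"
                C[r][k] += A[i][j] * B[j][k]
    return C

print(mm_checked([[1, 2], [3, 4]], [[5, 6], [7, 8]]))   # [[19, 22], [43, 50]]
```

Passing `row_for_write=lambda i: 0` makes thread 1 write row 0 of `C`, and the third assertion fails at run time, which is the kind of violation testing of P’ is meant to expose.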

Is P + ACCORD data-race-free?

[Figure: tool flow with two tasks]

- Task I: ACCORD annotation → Transformer I → formula → SMT solver (Z3) → Yes / No
- Task II: P → Transformer II → P’ with assertions → runtime testing → No

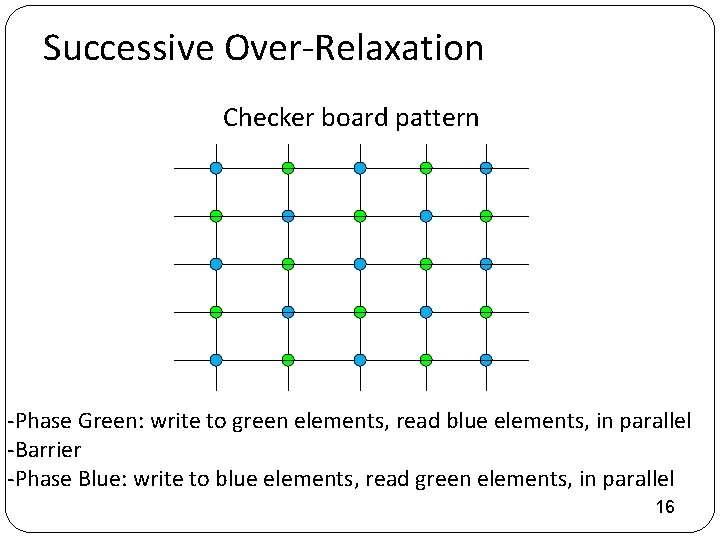

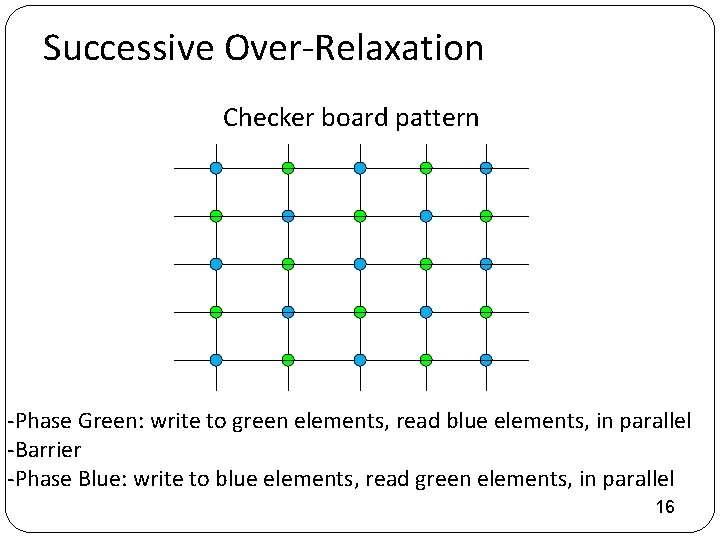

Successive Over-Relaxation

Checkerboard pattern:

- Phase Green: write to green elements, read blue elements, in parallel
- Barrier
- Phase Blue: write to blue elements, read green elements, in parallel

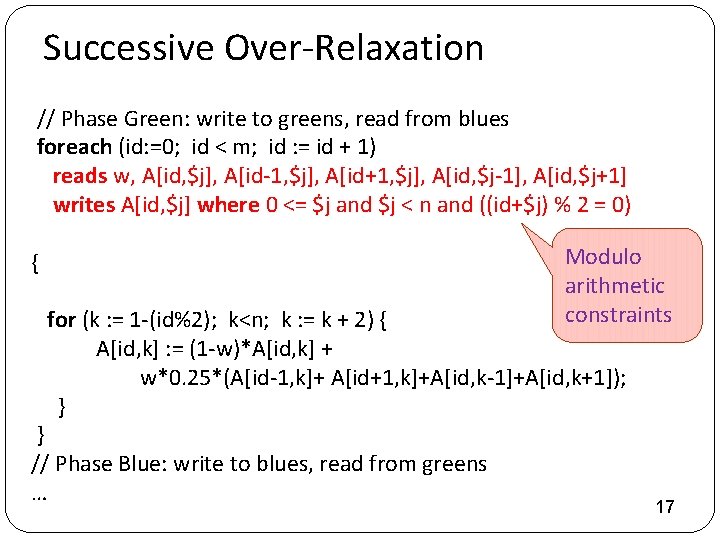

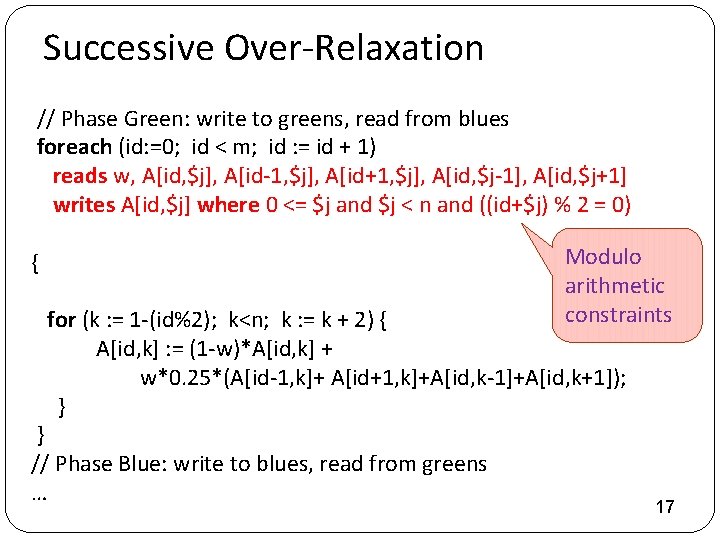

Successive Over-Relaxation

    // Phase Green: write to greens, read from blues
    foreach (id := 0; id < m; id := id + 1)
      reads w, A[id, $j], A[id-1, $j], A[id+1, $j], A[id, $j-1], A[id, $j+1]
      writes A[id, $j]
      where 0 <= $j and $j < n and ((id + $j) % 2 = 0)    // modulo arithmetic constraints
    {
      for (k := 1 - (id % 2); k < n; k := k + 2) {
        A[id, k] := (1 - w) * A[id, k]
                    + w * 0.25 * (A[id-1, k] + A[id+1, k] + A[id, k-1] + A[id, k+1]);
      }
    }
    // Phase Blue: write to blues, read from greens
    …
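The role of the modulo constraint in the annotation can be checked on a small grid. This sketch, with hypothetical helper names, builds the declared read and write sets of each thread and reports pairs of distinct threads where one thread's write set meets the other's read or write set. It looks only at the annotation, not at the loop body, and ignores the read-only scalar `w`.

```python
def green(id_, j):
    """Parity constraint from the where clause: (id + $j) % 2 = 0."""
    return (id_ + j) % 2 == 0

def always(id_, j):
    """Hypothetical annotation with the parity constraint dropped."""
    return True

def write_set(id_, n, pred):
    return {(id_, j) for j in range(n) if pred(id_, j)}

def read_set(id_, n, pred):
    cells = set()
    for j in range(n):
        if pred(id_, j):
            cells |= {(id_, j), (id_ - 1, j), (id_ + 1, j),
                      (id_, j - 1), (id_, j + 1)}
    return cells

def conflicts(m, n, pred):
    """Pairs (a, b), a != b, where thread a writes a cell thread b reads or writes."""
    found = []
    for a in range(m):
        wa = write_set(a, n, pred)
        for b in range(m):
            if a != b and wa & (write_set(b, n, pred) | read_set(b, n, pred)):
                found.append((a, b))
    return found

print(conflicts(4, 4, green))        # []
print(len(conflicts(4, 4, always)))  # non-zero: neighbouring rows conflict
```

With the parity constraint, a thread only reads cells in neighbouring rows whose parity differs from what those rows' threads write, so no pair conflicts; without it, adjacent rows read each other's written cells.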

![Montecarlo](https://slidetodoc.com/presentation_image_h2/15372e3fc65c4d0d4bb7df71b6d118e9/image-18.jpg)

Montecarlo

    void mc (int[nTasks] tasks) {
      int slice := (nTasks + nThreads - 1) / nThreads;
      foreach (int i := 0; i < nThreads; i := i + 1)
        reads next under lock gl, tasks[$k]
        writes next, results[$j] under lock gl
        where (i * slice) <= $k and $k < ((i + 1) * slice)
              and 0 <= $j and $j < nTasks
      {
        int ilow := i * slice;
        int iupper := (i + 1) * slice;
        if (i = nThreads - 1) iupper := nTasks;
        for (int run := ilow; run < iupper; run := run + 1) {
          int result := simulate(tasks[run]);
          synchronized (gl) {
            next := next + 1;
            results[next] := result;
          }
        }
      }
    }

- Annotations can express accesses protected by a lock.
- Threads read and write a global location by first acquiring a lock.
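The locking discipline described here, where each thread reads only its own slice of `tasks` and touches the shared `next` and `results` only while holding `gl`, can be sketched with ordinary threads. This is an illustration under assumptions, not the benchmark code: `next` is assumed to start at -1 so the first result lands at index 0, the upper bound is clamped with `min` (a safeguard added in this sketch), and `simulate` is supplied by the caller.

```python
import threading

def mc(tasks, n_threads, simulate):
    n_tasks = len(tasks)
    slice_ = (n_tasks + n_threads - 1) // n_threads   # ceiling division
    results = [None] * n_tasks
    state = {"next": -1}          # assumption: first write goes to results[0]
    gl = threading.Lock()         # global lock guarding next and results

    def worker(i):
        ilow = i * slice_
        iupper = n_tasks if i == n_threads - 1 else (i + 1) * slice_
        iupper = min(iupper, n_tasks)      # clamp added in this sketch
        for run in range(ilow, iupper):
            result = simulate(tasks[run])  # unsynchronized read of own slice
            with gl:                       # shared accesses only under the lock
                state["next"] += 1
                results[state["next"]] = result

    threads = [threading.Thread(target=worker, args=(i,)) for i in range(n_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()                  # plays the role of the implicit barrier
    return results

squares = mc(list(range(10)), 4, lambda t: t * t)
print(sorted(squares))
```

The order of `results` depends on scheduling, which is why the usage line sorts before printing; the set of values does not.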

![Quicksort](https://slidetodoc.com/presentation_image_h2/15372e3fc65c4d0d4bb7df71b6d118e9/image-19.jpg)

Quicksort

    void qsort (int[n] A, int i, int j)
      reads i, j
      writes A[$k] where (i <= $k and $k < j)
    {
      if (j - i < 2) return;
      int pivot := A[i];                            // first element
      int p_index := partition(A, pivot, i, j);
      // swap 1st element and element at p_index
      par requires p_index >= i and p_index < j
      {
        thread
          writes A[$k] where (i <= $k and $k < p_index)
        { qsort(A, i, p_index); }
        with
        thread
          writes A[$k] where (p_index < $k and $k < j)
        { qsort(A, p_index + 1, j); }
      }
    }
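The two `thread` annotations split the parent's write range around `p_index`. A small exhaustive check, written here with hypothetical helper names, confirms for all small values that the child ranges are disjoint and stay inside the parent's declared range whenever the `requires` clause holds.

```python
def child_ranges(i, j, p_index):
    """Write ranges declared for the two threads of the par block."""
    assert i <= p_index < j              # the requires clause
    left = range(i, p_index)             # i <= $k < p_index
    right = range(p_index + 1, j)        # p_index < $k < j
    return left, right

def contract_holds(i, j, p_index):
    left, right = child_ranges(i, j, p_index)
    parent = set(range(i, j))            # i <= $k < j
    disjoint = set(left).isdisjoint(right)
    contained = set(left) <= parent and set(right) <= parent
    return disjoint and contained

# Exhaustive check over a small bound; a solver would cover all integers.
ok = all(contract_holds(i, j, q)
         for i in range(6)
         for j in range(i + 1, 7)
         for q in range(i, j))
print(ok)   # True
```

The element at `p_index` belongs to neither child range, which is what keeps the two recursive calls from touching a common cell.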

![Sparse Matrix Vector Multiplication](https://slidetodoc.com/presentation_image_h2/15372e3fc65c4d0d4bb7df71b6d118e9/image-20.jpg)

Sparse Matrix Vector Multiplication

sparsematmult-jgf

    writes SparseMatmult.yt[row[$j]]
      where $j >= low[t-id] and $j <= high[t-id]
    requires forall $i1, $i2, $x, $y.
      ($i1 != $i2 and low[$i1] <= $x and $x <= high[$i1]
                  and low[$i2] <= $y and $y <= high[$i2]
       implies row[$x] != row[$y])

[Figure: example contents of the Low, High, and Row arrays per thread, and the resulting accesses to SparseMatmult.yt]
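The `requires` clause says that no two distinct threads may reach the same entry of `yt` through `row`. For concrete arrays this can be tested directly. The function below is a hedged sketch with a hypothetical name, and the sample arrays are invented for illustration rather than taken from the slide's figure.

```python
def requires_holds(low, high, row):
    """Check: for distinct threads, row[x] != row[y] for x, y in their ranges.

    Thread t owns indices low[t] .. high[t] inclusive, as in the annotation.
    """
    owner = {}                               # row value -> thread that reaches it
    for t in range(len(low)):
        for x in range(low[t], high[t] + 1):
            r = row[x]
            if r in owner and owner[r] != t:
                return False                 # two threads would write yt[r]
            owner[r] = t
    return True

# Invented example: two threads, six non-zeros.
low, high = [0, 3], [2, 5]
print(requires_holds(low, high, [0, 0, 1, 2, 2, 3]))   # True
print(requires_holds(low, high, [0, 0, 1, 1, 2, 3]))   # False: row 1 is shared
```

In the second call, index 2 belongs to thread 0 and index 3 to thread 1, yet both map to row 1, so both threads would write `yt[1]`.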

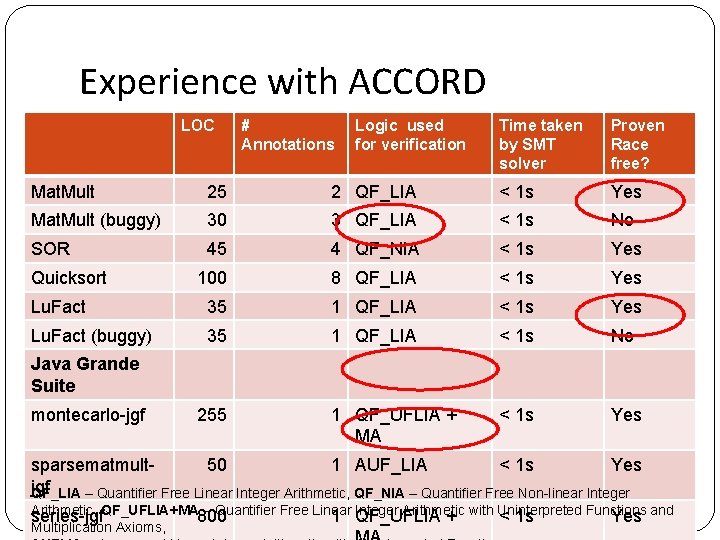

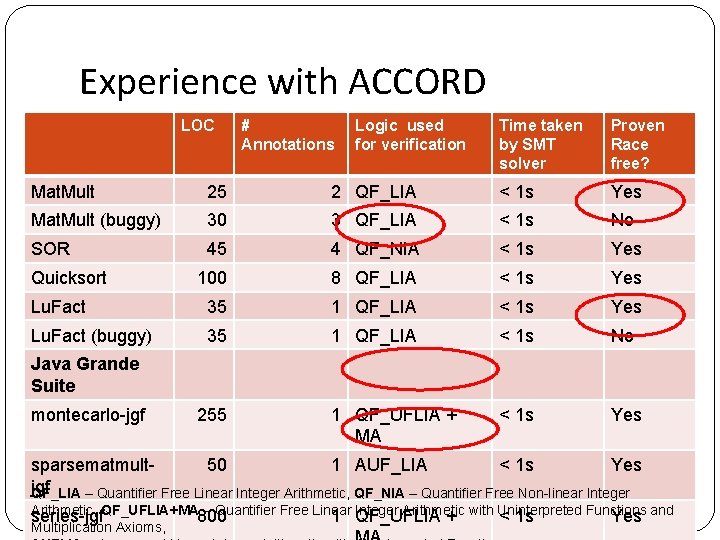

Experience with ACCORD

| Benchmark | LOC | # Annotations | Logic used for verification | Time taken by SMT solver | Proven race-free? |
|---|---|---|---|---|---|
| MatMult | 25 | 2 | QF_LIA | < 1 s | Yes |
| MatMult (buggy) | 30 | 3 | QF_LIA | < 1 s | No |
| SOR | 45 | 4 | QF_NIA | < 1 s | Yes |
| Quicksort | 100 | 8 | QF_LIA | < 1 s | Yes |
| LuFact | 35 | 1 | QF_LIA | < 1 s | Yes |
| LuFact (buggy) | 35 | 1 | QF_LIA | < 1 s | No |
| montecarlo-jgf | 255 | 1 | QF_UFLIA + MA | < 1 s | Yes |
| sparsematmult-jgf | 50 | 1 | AUF_LIA | < 1 s | Yes |
| series-jgf | 800 | 1 | QF_UFLIA + MA | < 1 s | Yes |

The montecarlo-jgf, sparsematmult-jgf, and series-jgf benchmarks are from the Java Grande Suite.

QF_LIA: Quantifier Free Linear Integer Arithmetic. QF_NIA: Quantifier Free Non-linear Integer Arithmetic. QF_UFLIA+MA: Quantifier Free Linear Integer Arithmetic with Uninterpreted Functions and Multiplication Axioms.

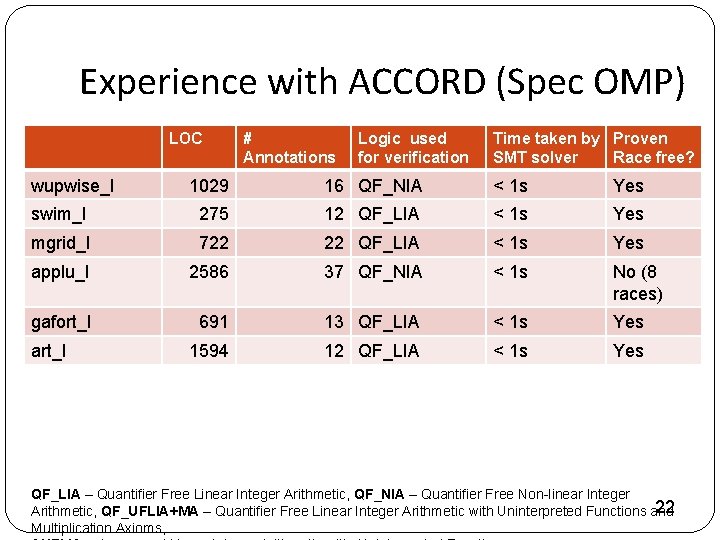

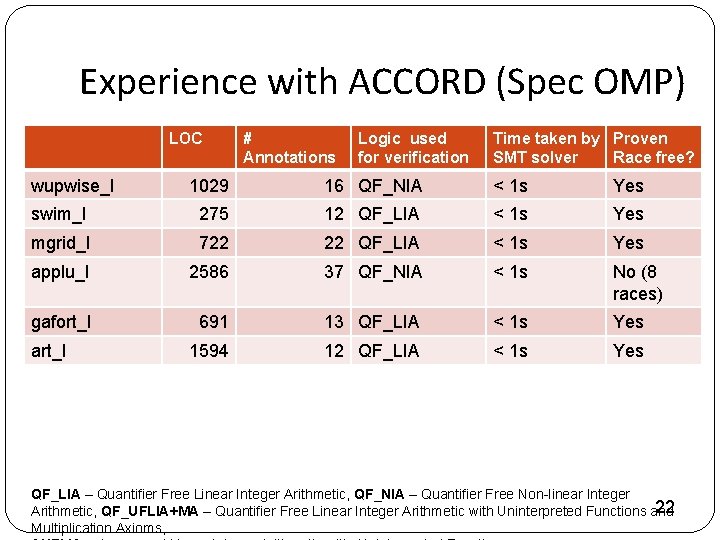

Experience with ACCORD (SPEC OMP)

| Benchmark | LOC | # Annotations | Logic used for verification | Time taken by SMT solver | Proven race-free? |
|---|---|---|---|---|---|
| wupwise_l | 1029 | 16 | QF_NIA | < 1 s | Yes |
| swim_l | 275 | 12 | QF_LIA | < 1 s | Yes |
| mgrid_l | 722 | 22 | QF_LIA | < 1 s | Yes |
| applu_l | 2586 | 37 | QF_NIA | < 1 s | No (8 races) |
| gafort_l | 691 | 13 | QF_LIA | < 1 s | Yes |
| art_l | 1594 | 12 | QF_LIA | < 1 s | Yes |

QF_LIA: Quantifier Free Linear Integer Arithmetic. QF_NIA: Quantifier Free Non-linear Integer Arithmetic. QF_UFLIA+MA: Quantifier Free Linear Integer Arithmetic with Uninterpreted Functions and Multiplication Axioms.

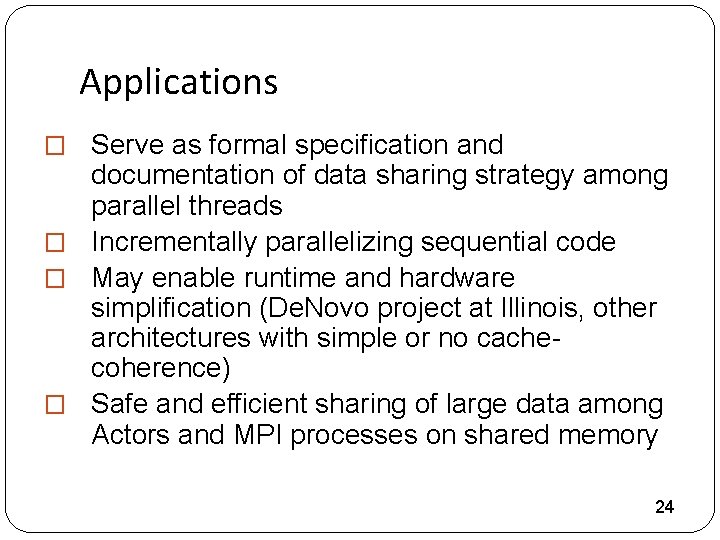

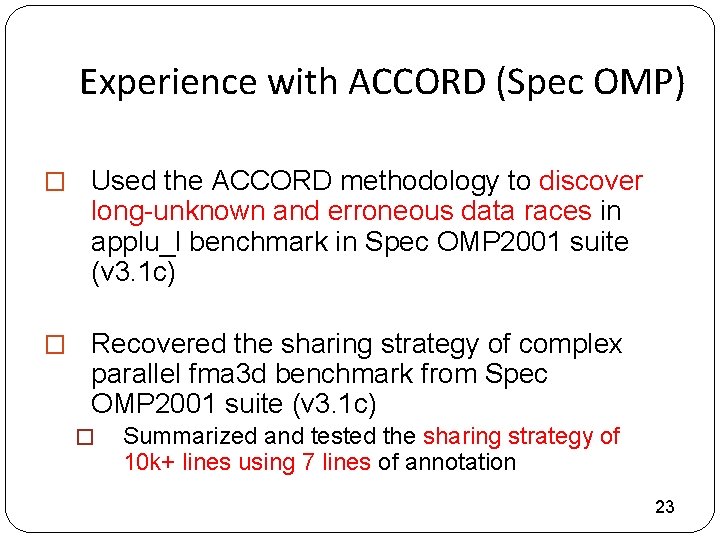

Experience with ACCORD (SPEC OMP)

- Used the ACCORD methodology to discover long-unknown, erroneous data races in the applu_l benchmark of the SPEC OMP 2001 suite (v3.1c)
- Recovered the sharing strategy of the complex parallel fma3d benchmark from the SPEC OMP 2001 suite (v3.1c)
- Summarized and tested the sharing strategy of 10k+ lines using 7 lines of annotation

Applications

- Serve as formal specification and documentation of the data-sharing strategy among parallel threads
- Incrementally parallelizing sequential code
- May enable runtime and hardware simplification (DeNovo project at Illinois, other architectures with simple or no cache coherence)
- Safe and efficient sharing of large data among Actors and MPI processes on shared memory

Take home message

“Thread Contracts for Safe Parallelism,” Rajesh Karmani, P Madhusudan, Brandon Moore.

- ACCORD: a lightweight, formal annotation language that allows programmers to document the sharing strategy among threads
- Reduces the task of checking race-freedom to two simpler problems: constraint satisfaction and program testing
- Does NOT change the style of programming
- Only fork-join parallelism on arrays/matrices for now
- Future work:
  - Combine static regions of dynamic heaps (see work on DPJ) and dynamic regions (ACCORD)
  - Support aliasing and richer synchronization idioms
  - Help in efficiently testing parallel programs