Thesis Defense Semantic Image Representation for Visual Recognition

- Slides: 66

Thesis Defense: Semantic Image Representation for Visual Recognition
Nikhil Rasiwasia, Nuno Vasconcelos
Statistical Visual Computing Laboratory (SVCL), University of California, San Diego

• I'll pause for a few moments so that you all can finish reading this. © Bill Watterson

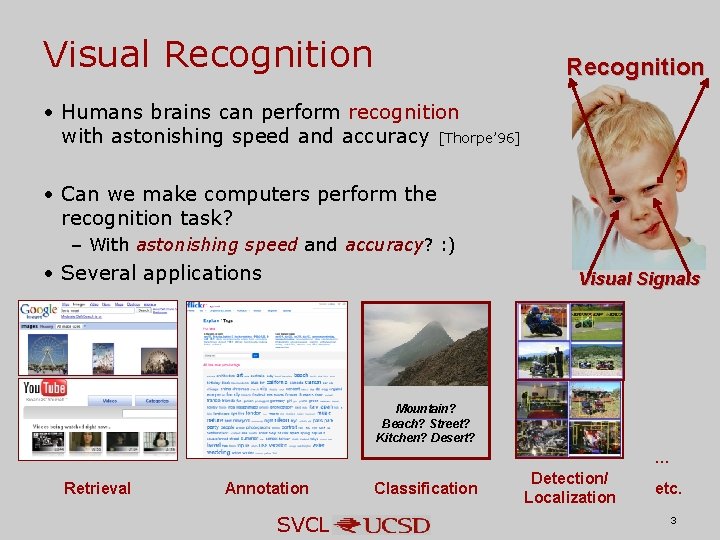

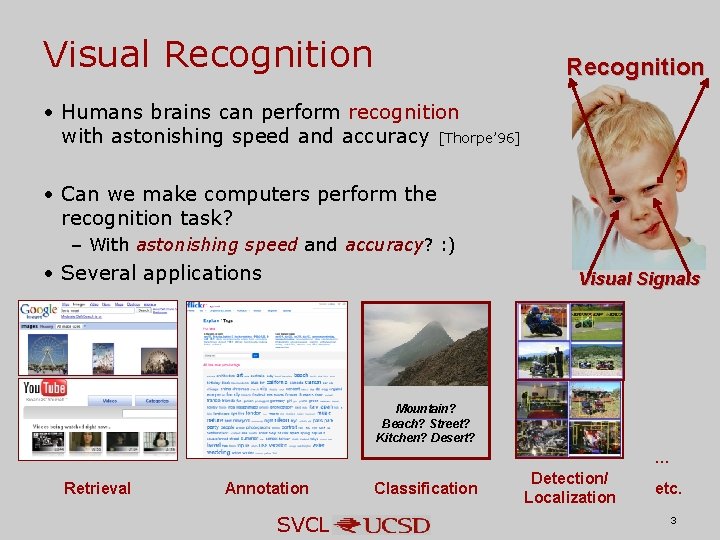

Visual Recognition
• Human brains can perform recognition with astonishing speed and accuracy [Thorpe '96]
• Can we make computers perform the recognition task?
  – With astonishing speed and accuracy? :)
• Several applications: retrieval, annotation, classification, detection/localization, etc.
[Figure: visual signals with candidate labels: Mountain? Beach? Street? Kitchen? Desert? …]

Why?
• Internet in Numbers
  – 5,000,000 – Photos hosted by Flickr (Sept 2010).
  – 3000+ – Photos uploaded per minute to Flickr.
  – 3,000,000 – Photos uploaded per month to Facebook.
  – 20,000 – Videos uploaded to Facebook per month.
  – 2,000,000 – Videos watched per day on YouTube.
  – 35 – Hours of video uploaded to YouTube every minute.
  – Source: http://www.cbsnews.com/8301-501465_162-20028418-501465.html
• Several other sources of visual content
  – Printed media, surveillance, medical imaging, movies, robots, other automated machines, etc.
• …manual processing of the visual content is prohibitive.

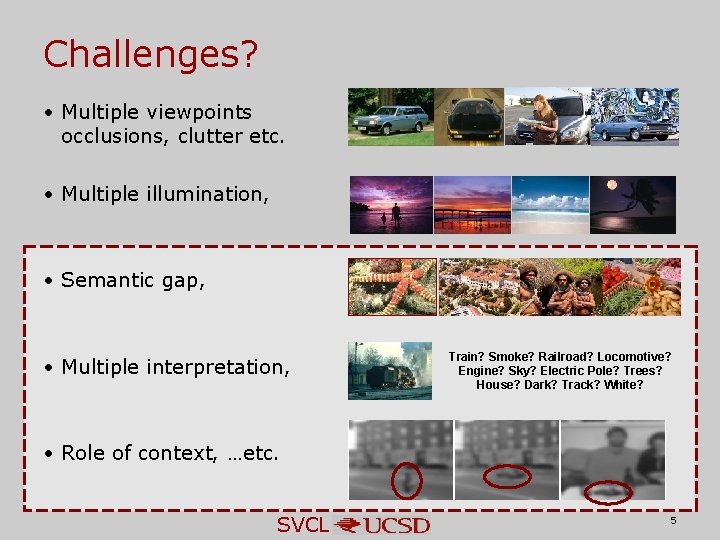

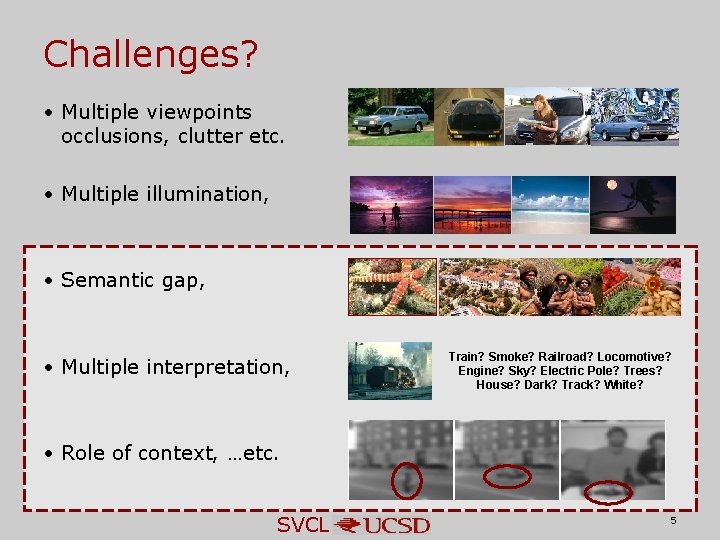

Challenges?
• Multiple viewpoints, occlusions, clutter, etc.
• Multiple illumination
• Semantic gap
• Multiple interpretation: Train? Smoke? Railroad? Locomotive? Engine? Sky? Electric Pole? Trees? House? Dark? Track? White?
• Role of context
• …etc.

![Outline Semantic Image Representation Appearance Based Image Representation Semantic Multinomial Contribution Outline. • Semantic Image Representation – Appearance Based Image Representation – Semantic Multinomial [Contribution]](https://slidetodoc.com/presentation_image_h2/9dd030d71b6c1f13a2a8af341c632151/image-6.jpg)

Outline
• Semantic Image Representation
  – Appearance Based Image Representation
  – Semantic Multinomial [Contribution]
• Benefits for Visual Recognition
  – Abstraction: Bridging the Semantic Gap (QBSE) [Contribution]
  – Sensory Integration: Cross-modal Retrieval [Contribution]
  – Context: Holistic Context Models [Contribution]
• Connections to the literature
  – Topic Models: Latent Dirichlet Allocation
  – Text vs Images
  – Importance of Supervision: Topic-supervised Latent Dirichlet Allocation (ts-LDA) [Contribution]

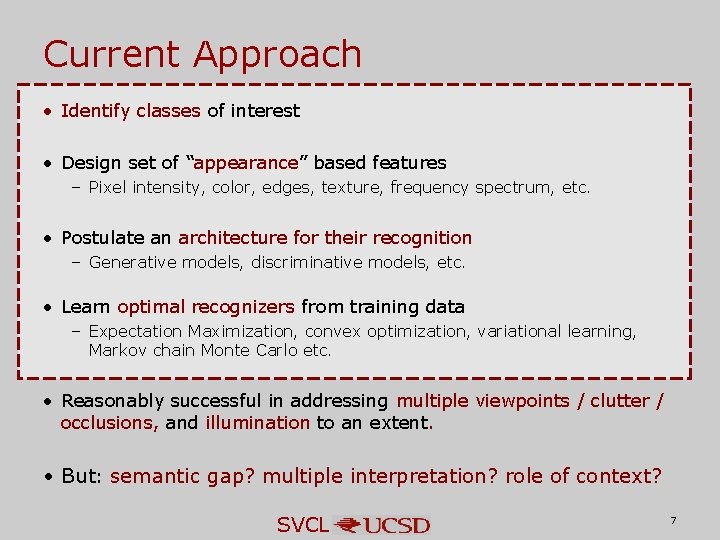

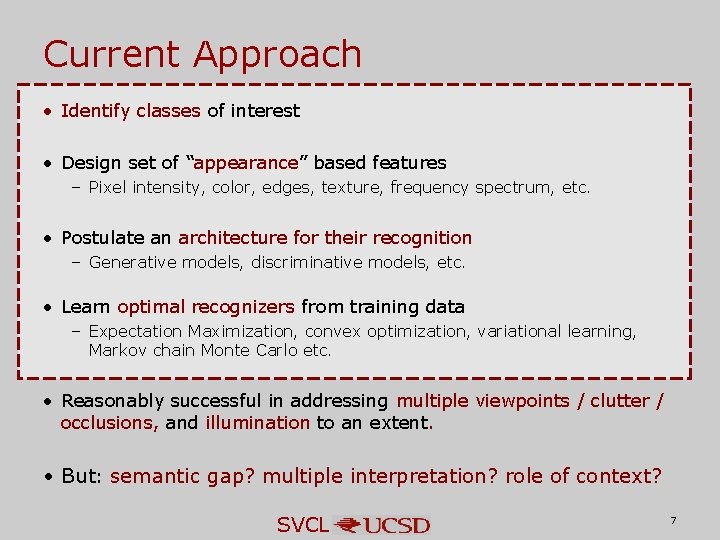

Current Approach
• Identify classes of interest
• Design a set of "appearance" based features
  – Pixel intensity, color, edges, texture, frequency spectrum, etc.
• Postulate an architecture for their recognition
  – Generative models, discriminative models, etc.
• Learn optimal recognizers from training data
  – Expectation Maximization, convex optimization, variational learning, Markov chain Monte Carlo, etc.
• Reasonably successful in addressing multiple viewpoints, clutter, occlusions, and, to an extent, illumination.
• But: semantic gap? multiple interpretation? role of context?

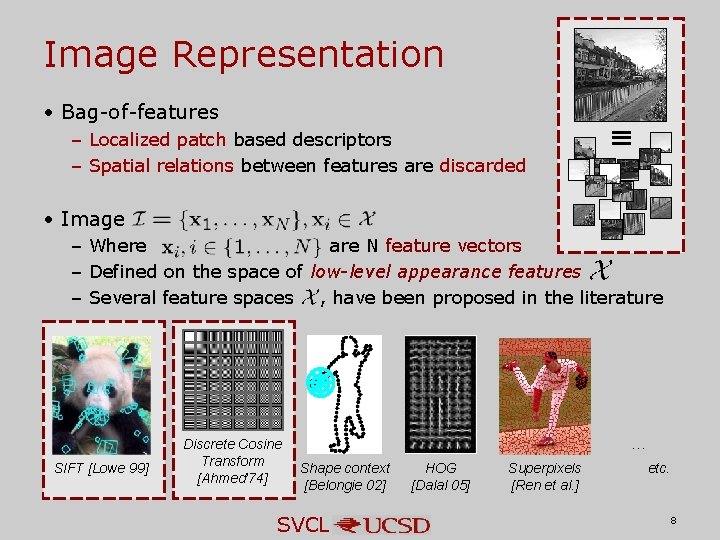

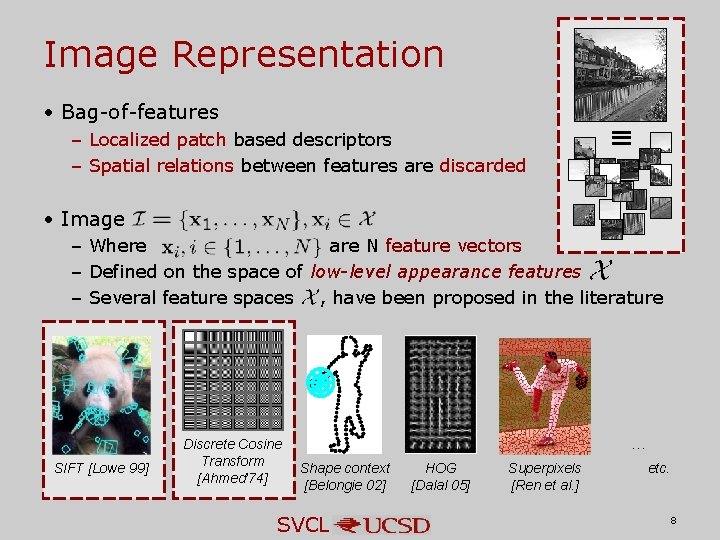

Image Representation
• Bag-of-features
  – Localized patch based descriptors
  – Spatial relations between features are discarded
• Image $\mathcal{I} = \{x_1, \ldots, x_N\}$
  – Where $x_n \in \mathcal{X}$ are N feature vectors
  – Defined on the space $\mathcal{X}$ of low-level appearance features
  – Several feature spaces $\mathcal{X}$ have been proposed in the literature: SIFT [Lowe 99], Discrete Cosine Transform [Ahmed '74], Shape context [Belongie 02], HOG [Dalal 05], Superpixels [Ren et al.], etc.

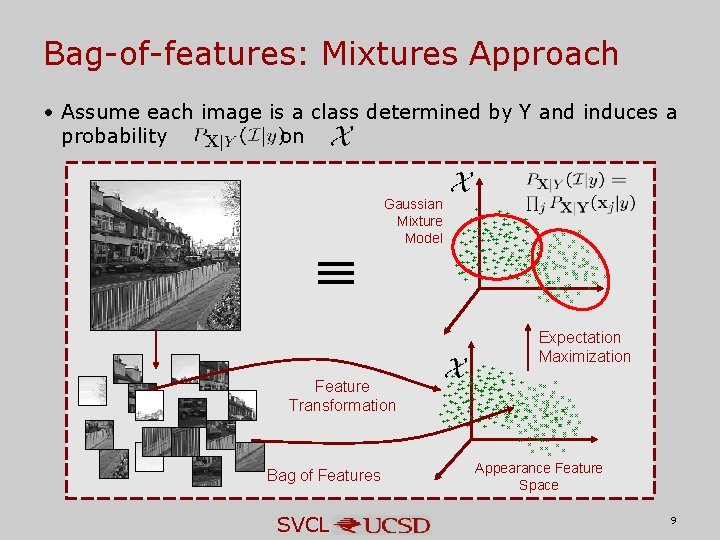

Bag-of-features: Mixtures Approach
• Assume each image belongs to a class, determined by Y, which induces a probability density on the appearance feature space
[Figure: bag of features → feature transformation → appearance feature space, where a Gaussian mixture model is fit by expectation maximization.]

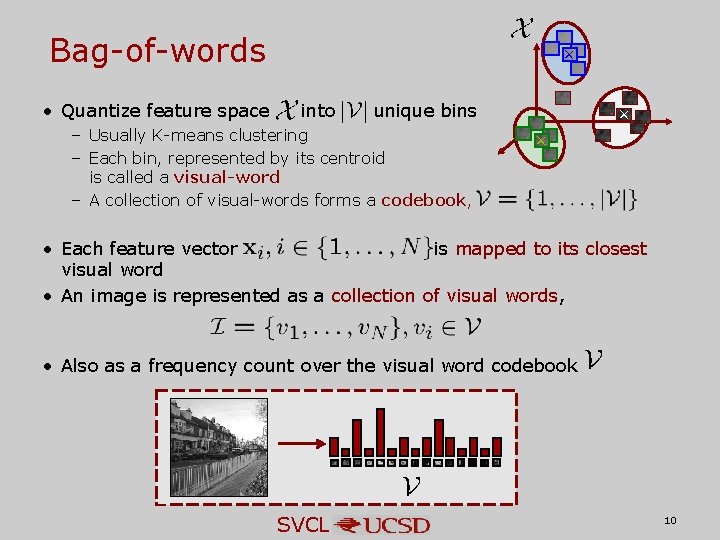

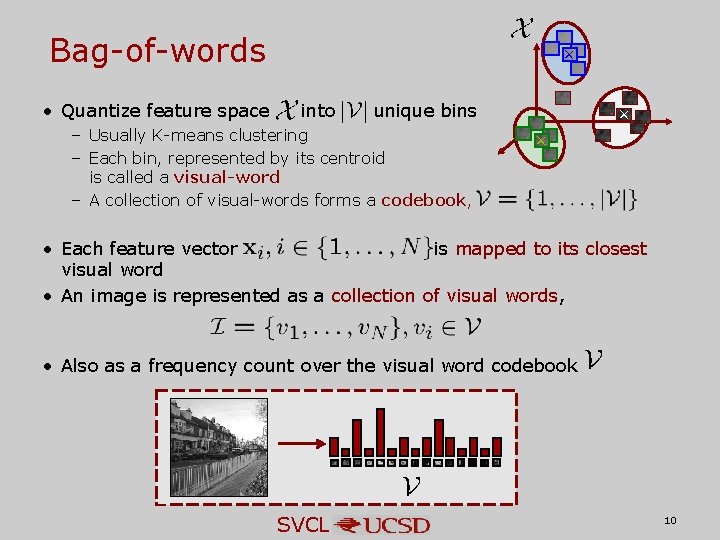

Bag-of-words
• Quantize the feature space into unique bins
  – Usually K-means clustering
  – Each bin, represented by its centroid, is called a visual word
  – A collection of visual words forms a codebook
• Each feature vector is mapped to its closest visual word
• An image is represented as a collection of visual words
• Also as a frequency count over the visual word codebook
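The quantization steps on this slide can be sketched in a few lines of NumPy. This is only an illustration under stated assumptions: the function names (`kmeans`, `assign`, `bow_histogram`), the plain Lloyd iterations, and the toy descriptor sizes are hypothetical and are not taken from the thesis.

```python
import numpy as np

def assign(features, codebook):
    """Map every feature vector to the index of its closest visual word."""
    d2 = ((features[:, None, :] - codebook[None, :, :]) ** 2).sum(axis=2)
    return d2.argmin(axis=1)

def kmeans(features, k, iters=20, seed=0):
    """Plain Lloyd's k-means; returns a (k, d) array of centroids (the codebook)."""
    features = np.asarray(features, dtype=float)
    rng = np.random.default_rng(seed)
    # Initialize centroids with k distinct feature vectors (fancy indexing copies).
    centroids = features[rng.choice(len(features), size=k, replace=False)]
    for _ in range(iters):
        labels = assign(features, centroids)
        for j in range(k):
            members = features[labels == j]
            if len(members):  # keep the old centroid if a bin is empty
                centroids[j] = members.mean(axis=0)
    return centroids

def bow_histogram(features, codebook):
    """Frequency count over the visual-word codebook for one image."""
    return np.bincount(assign(features, codebook), minlength=len(codebook))
```

In use, the codebook would be learned once from descriptors pooled over the training images, and `bow_histogram` would then be called on the descriptors of each image separately.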

E.g., Image Retrieval
[Figure: a query image and its top matches.]

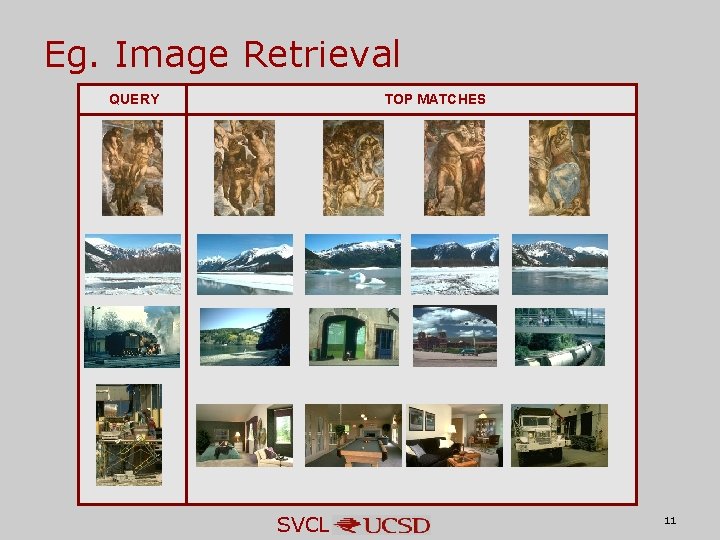

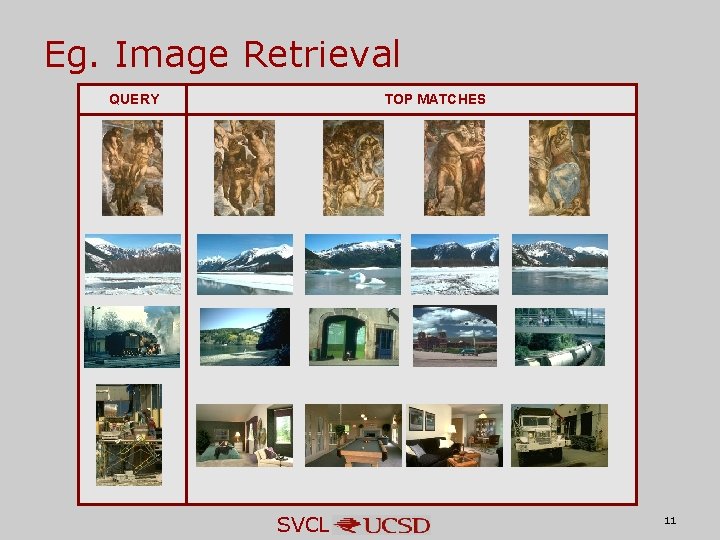

Pause for a moment – The Human Perspective
• What is this? [Figure: example street scene]
  – An image of: buildings, street, cars, sky, flowers, city scene, …
• Some concepts are more prominent than others.
• From the 'Street' class!

An Image – An Intuition
• Human understanding of images suggests that they are "visual representations" of certain "meaningful" semantic concepts.
• There can be several concepts represented by an image: {buildings, street, sky, clouds, tree, cars, people, window, footpath, flowers, poles, wires, tires, …}
• But it is practically impossible to list all possible concepts represented.
• So, define a 'vocabulary' of concepts.
  – Vocabulary: Bedroom, Suburb, Kitchen, Living room, Coast, Forest, Street, Highway, Tall building, Inside city, Office, Mountain, Store, Open country, Industrial
• Assign weights to the concepts based on their prominence in the image.

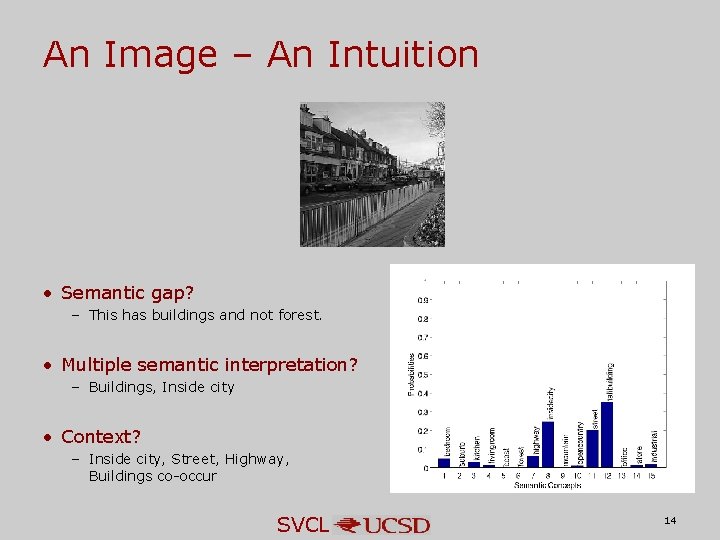

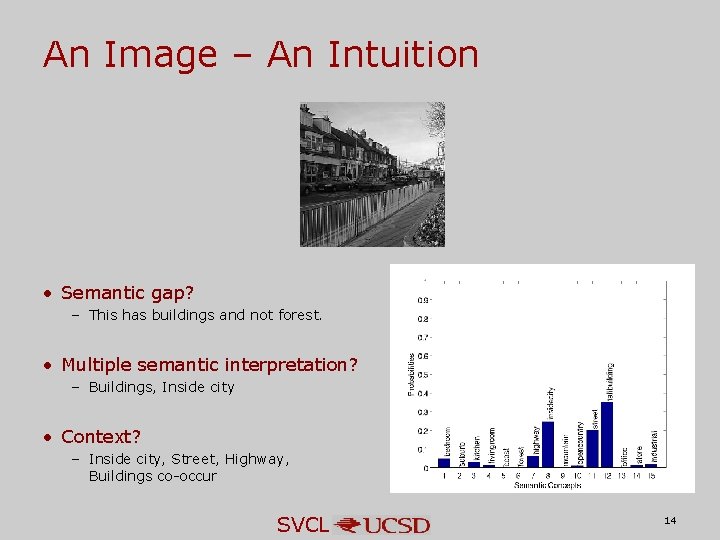

An Image – An Intuition
• Semantic gap?
  – This has buildings and not forest.
• Multiple semantic interpretation?
  – Buildings, Inside city
• Context?
  – Inside city, Street, Highway, Buildings co-occur

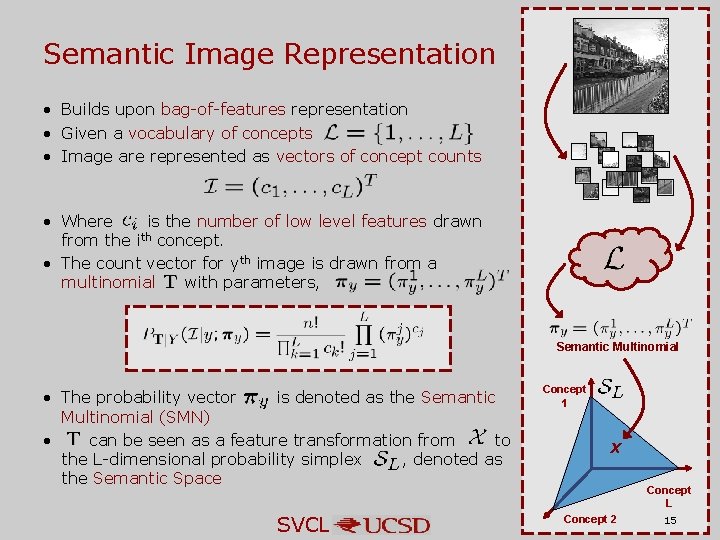

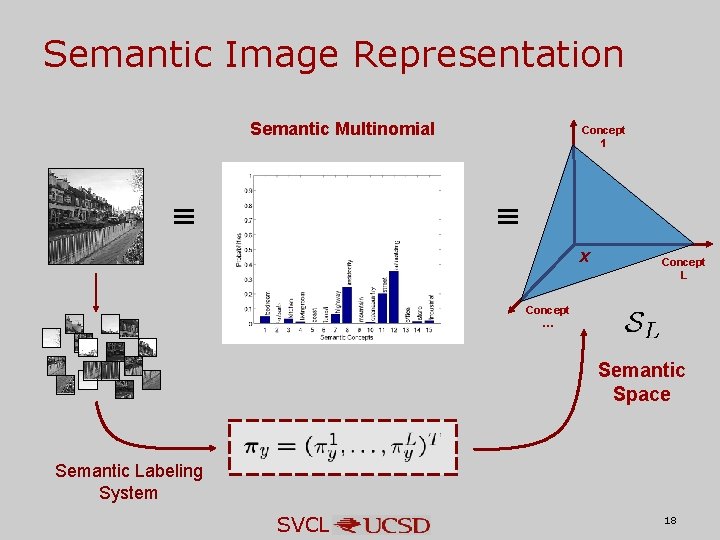

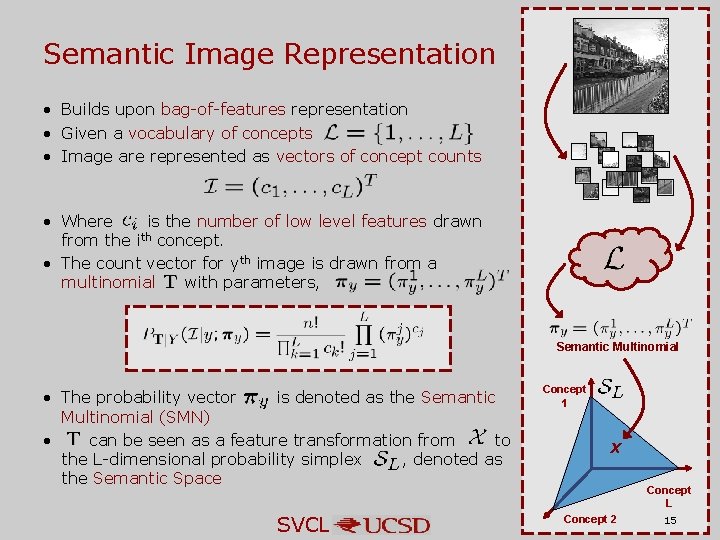

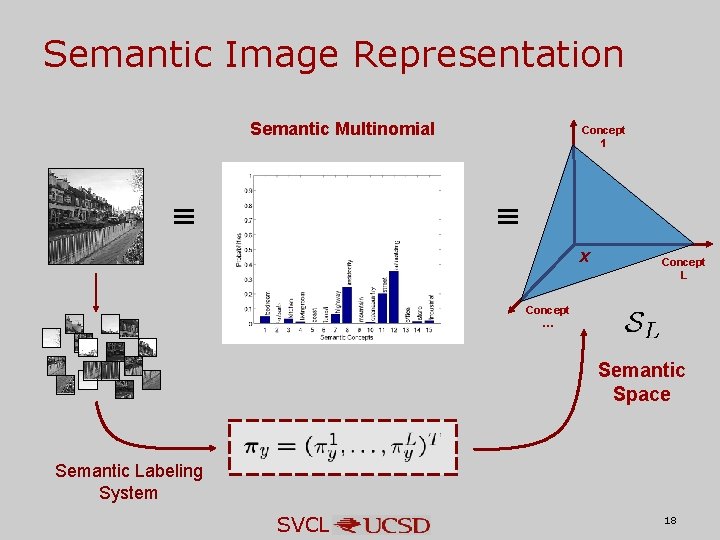

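Semantic Image Representation
• Builds upon the bag-of-features representation
• Given a vocabulary of concepts $\mathcal{L} = \{w_1, \ldots, w_L\}$
• Images are represented as vectors of concept counts $\mathbf{c} = (c_1, \ldots, c_L)^T$
• Where $c_i$ is the number of low-level features drawn from the ith concept.
• The count vector for the yth image is drawn from a multinomial with parameters $\boldsymbol{\pi}^y = (\pi^y_1, \ldots, \pi^y_L)^T$
• The probability vector $\boldsymbol{\pi}^y$ is denoted as the Semantic Multinomial (SMN)
• The mapping $\Pi: \mathcal{X} \to \mathcal{S}_L$ can be seen as a feature transformation from the appearance feature space $\mathcal{X}$ to the L-dimensional probability simplex $\mathcal{S}_L$, denoted as the Semantic Space
[Figure: an SMN shown as a point x in the semantic space with axes Concept 1, Concept 2, …, Concept L.]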

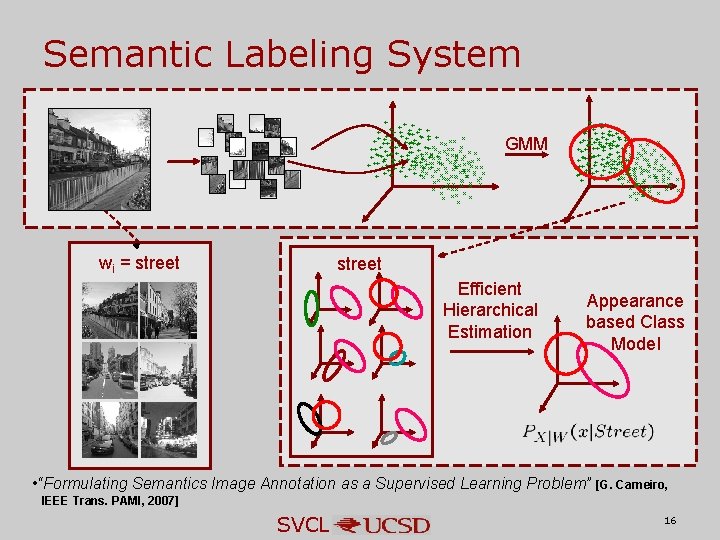

Semantic Labeling System
[Figure: bags of features from training images labeled with the concept w_i = street are modeled by a Gaussian mixture model (GMM), learned with efficient hierarchical estimation, giving the appearance-based class model for "street".]
• "Formulating Semantic Image Annotation as a Supervised Learning Problem" [G. Carneiro, IEEE Trans. PAMI, 2007]

Semantic Labeling System
[Figure: an image is scored under the appearance-based concept models (Bedroom, Forest, Inside city, Street, …, Tall building, …); the likelihoods under the various models are converted into posterior probabilities over the concepts.]
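The step from likelihoods to posterior probabilities pictured here is Bayes' rule over the concept vocabulary. A minimal sketch, assuming the per-concept image log-likelihoods are already available and that the concept prior is uniform unless given (both the function name `concept_posteriors` and the uniform default are assumptions of this sketch, not details from the slides):

```python
import numpy as np

def concept_posteriors(log_likelihoods, log_prior=None):
    """Posterior P(concept | image) from per-concept log-likelihoods via Bayes' rule."""
    ll = np.asarray(log_likelihoods, dtype=float)
    if log_prior is None:
        # Assumed uniform prior over the L concepts.
        log_prior = np.full_like(ll, -np.log(len(ll)))
    joint = ll + np.asarray(log_prior, dtype=float)
    joint = joint - joint.max()   # subtract the max for numerical stability
    post = np.exp(joint)
    return post / post.sum()
```

Working in the log domain matters in practice because the likelihood of a whole bag of features is a product of many small densities and underflows quickly.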

Semantic Image Representation
[Figure: image → semantic labeling system → semantic multinomial, shown as a point x in the semantic space with axes Concept 1, …, Concept L.]

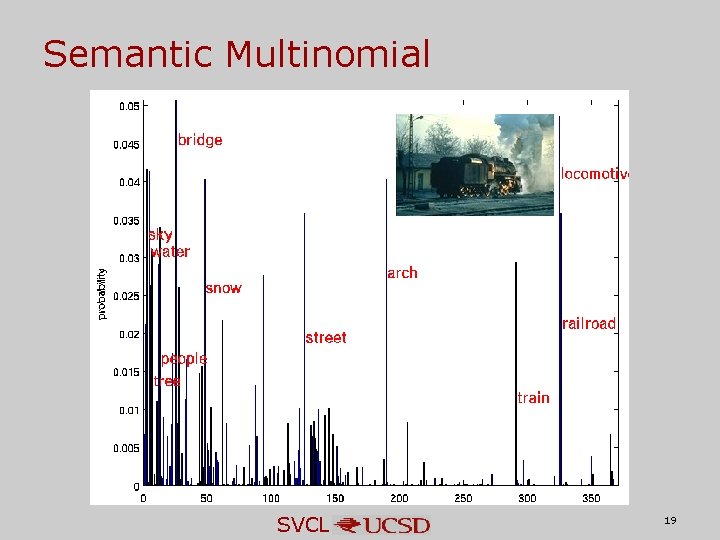

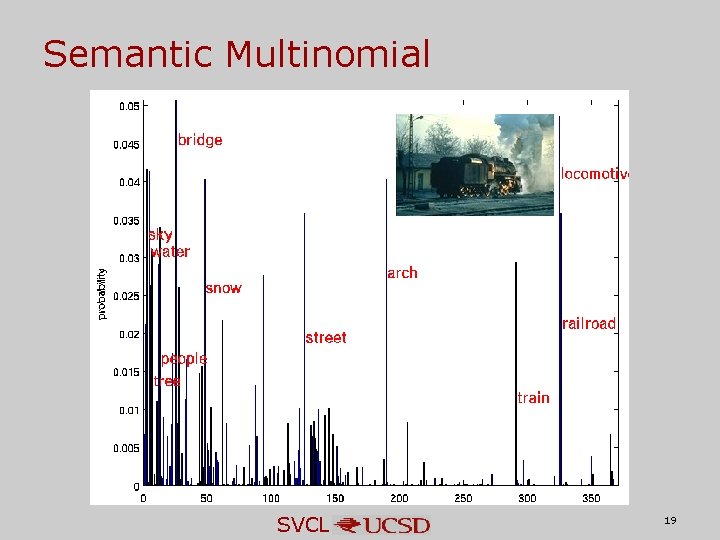

Semantic Multinomial

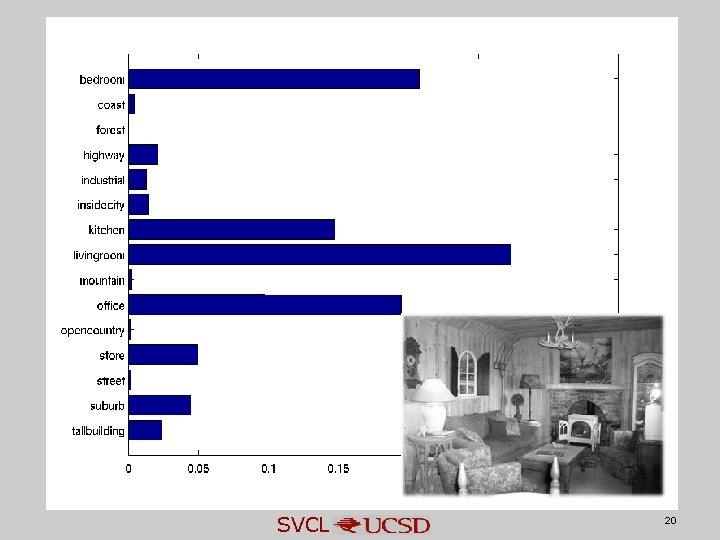

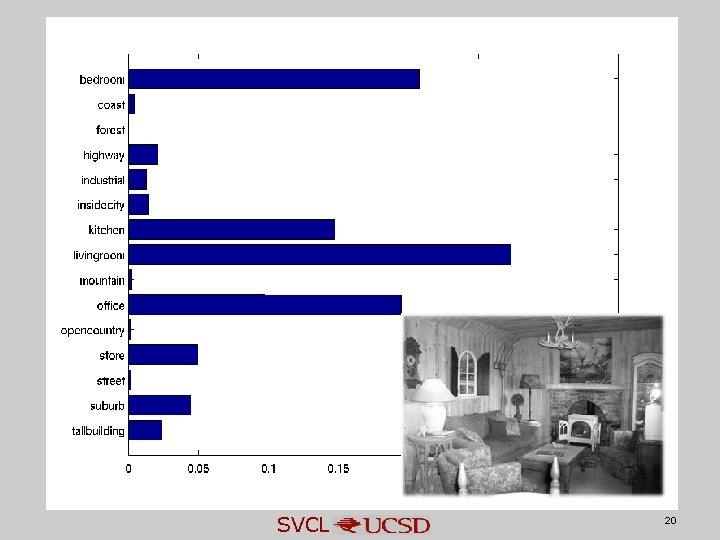

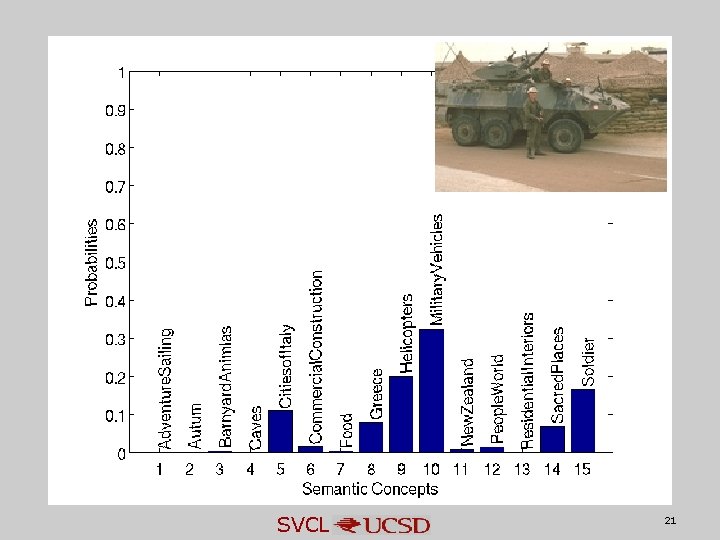

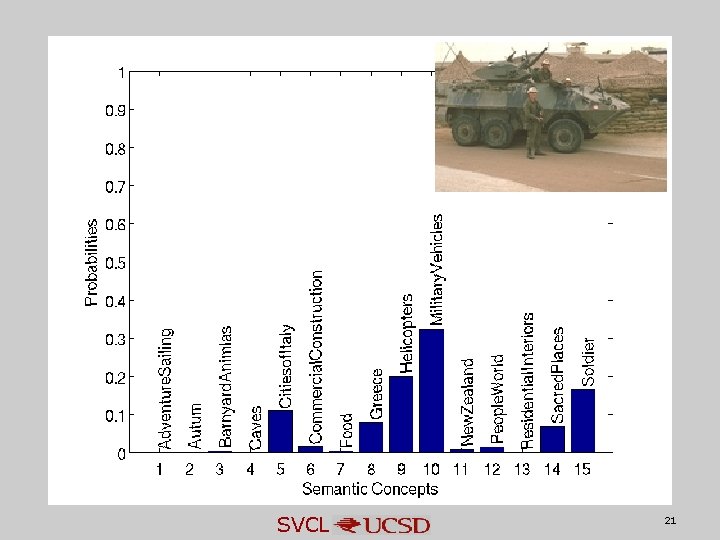

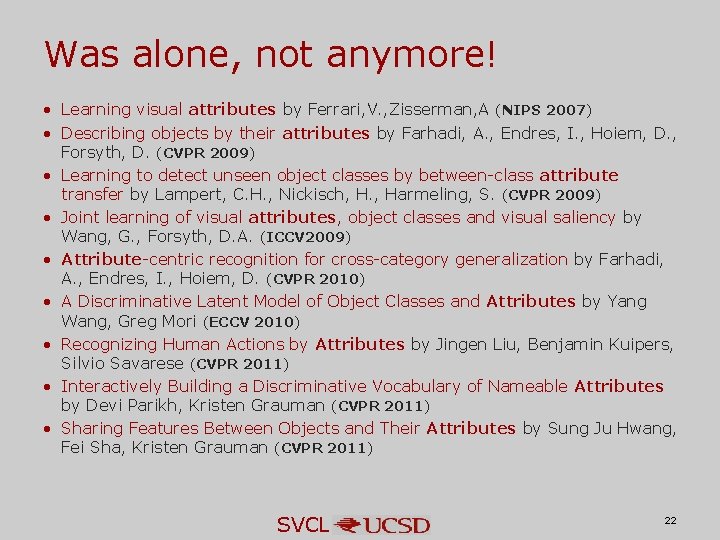

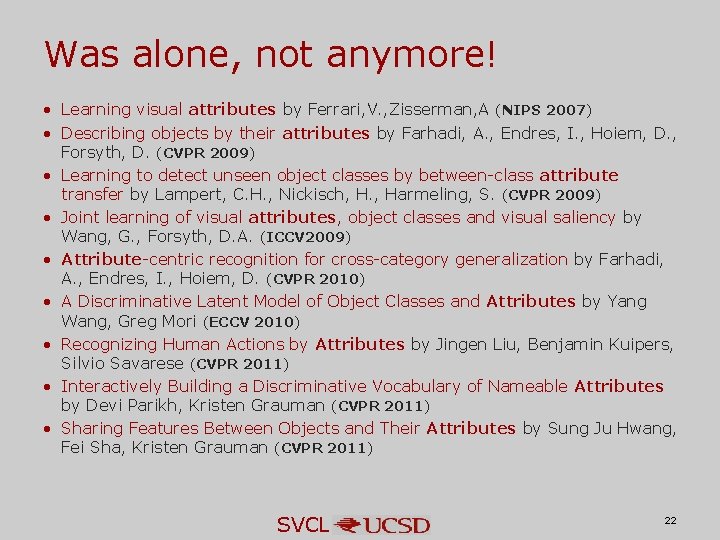

Was alone, not anymore!
• Learning visual attributes, by Ferrari, V., Zisserman, A. (NIPS 2007)
• Describing objects by their attributes, by Farhadi, A., Endres, I., Hoiem, D., Forsyth, D. (CVPR 2009)
• Learning to detect unseen object classes by between-class attribute transfer, by Lampert, C. H., Nickisch, H., Harmeling, S. (CVPR 2009)
• Joint learning of visual attributes, object classes and visual saliency, by Wang, G., Forsyth, D. A. (ICCV 2009)
• Attribute-centric recognition for cross-category generalization, by Farhadi, A., Endres, I., Hoiem, D. (CVPR 2010)
• A Discriminative Latent Model of Object Classes and Attributes, by Yang Wang, Greg Mori (ECCV 2010)
• Recognizing Human Actions by Attributes, by Jingen Liu, Benjamin Kuipers, Silvio Savarese (CVPR 2011)
• Interactively Building a Discriminative Vocabulary of Nameable Attributes, by Devi Parikh, Kristen Grauman (CVPR 2011)
• Sharing Features Between Objects and Their Attributes, by Sung Ju Hwang, Fei Sha, Kristen Grauman (CVPR 2011)

![Outline Semantic Image Representation Appearance Based Image Representation Semantic Multinomial Contribution Outline. • Semantic Image Representation – Appearance Based Image Representation – Semantic Multinomial [Contribution]](https://slidetodoc.com/presentation_image_h2/9dd030d71b6c1f13a2a8af341c632151/image-23.jpg)

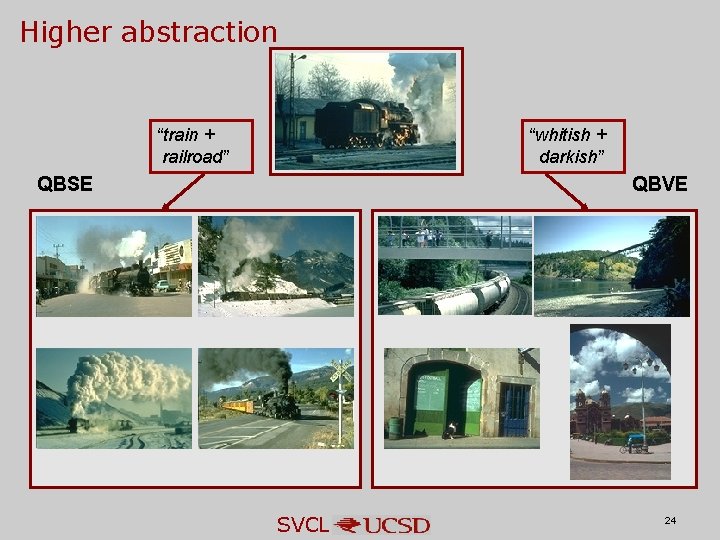

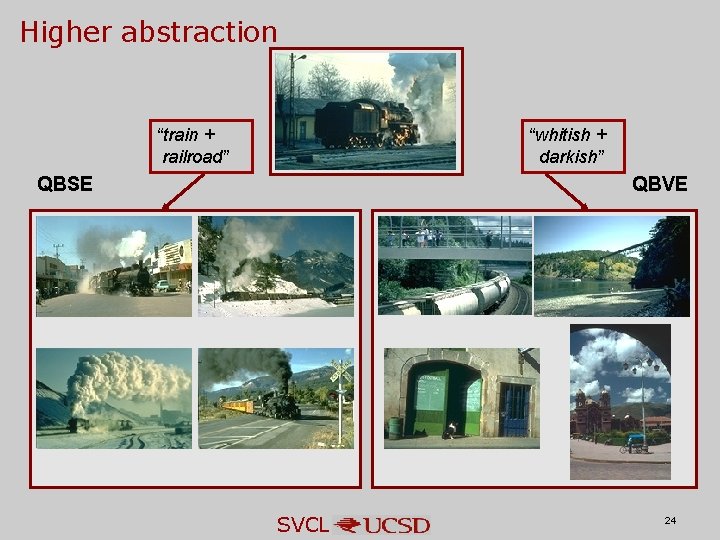

Higher abstraction
• QBSE (query by semantic example): matches on "train + railroad"
• QBVE (query by visual example): matches on "whitish + darkish"

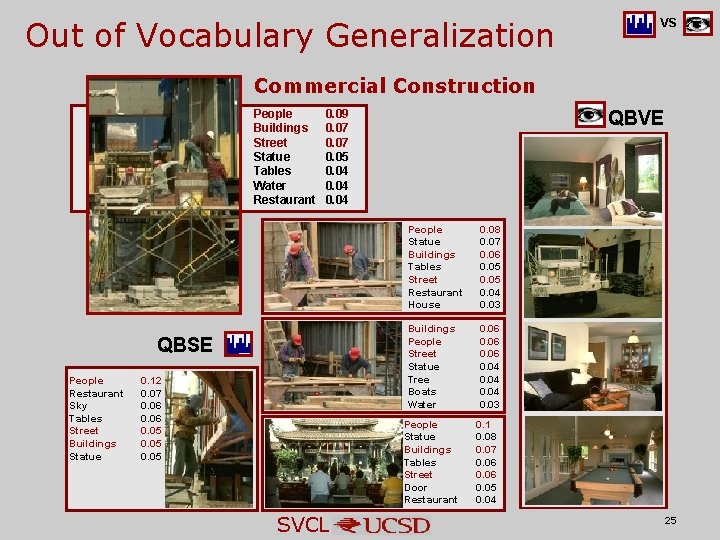

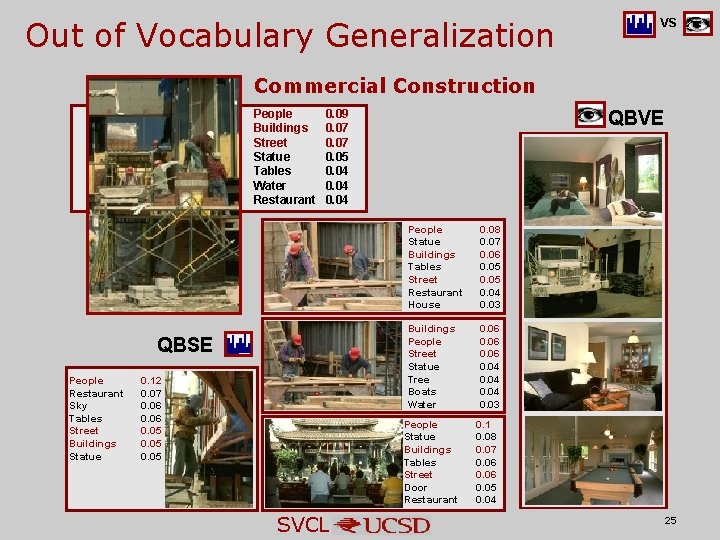

Out of Vocabulary Generalization
[Figure: QBSE vs. QBVE retrieval results for a query from the class "Commercial Construction". Each image is shown with its most probable concepts (People, Buildings, Street, Statue, Tables, Water, Restaurant, Sky, House, Tree, Boats, Door) and their probabilities, which range from about 0.03 to 0.12.]

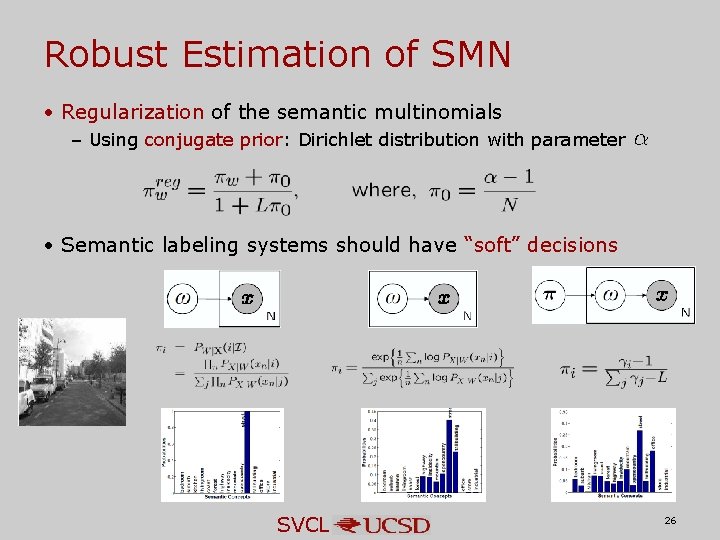

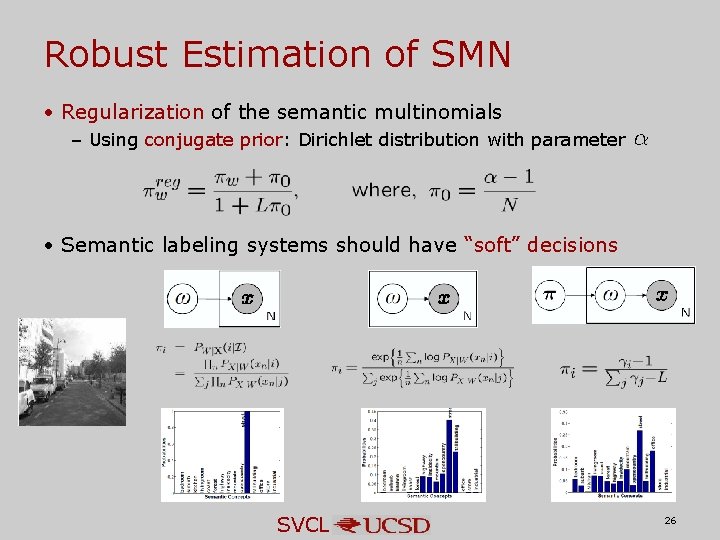

Robust Estimation of SMN
• Regularization of the semantic multinomials
  – Using the conjugate prior: a Dirichlet distribution with parameter $\alpha$
• Semantic labeling systems should make "soft" decisions
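One common way to regularize a multinomial with its conjugate Dirichlet prior is the MAP estimate $\hat\pi_i = (c_i + \alpha - 1) / \sum_j (c_j + \alpha - 1)$. The sketch below assumes a symmetric prior with a single scalar parameter `alpha >= 1`; the exact estimator used in the thesis is not stated on the slide, so treat this as an illustration of the idea rather than the author's formula.

```python
import numpy as np

def regularized_smn(counts, alpha=2.0):
    """MAP estimate of a multinomial under an assumed symmetric Dirichlet(alpha) prior."""
    if alpha < 1.0:
        raise ValueError("this sketch assumes alpha >= 1")
    counts = np.asarray(counts, dtype=float)
    smoothed = counts + alpha - 1.0   # the prior acts like alpha - 1 pseudo-counts per concept
    return smoothed / smoothed.sum()
```

With `alpha = 1` the prior is flat and the estimate reduces to the maximum-likelihood frequencies; larger values pull the SMN toward uniform, which avoids hard zeros for concepts that received no features.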

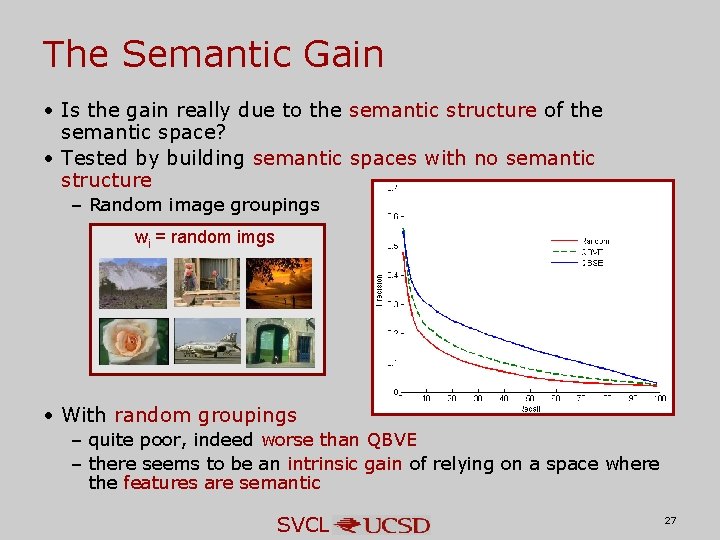

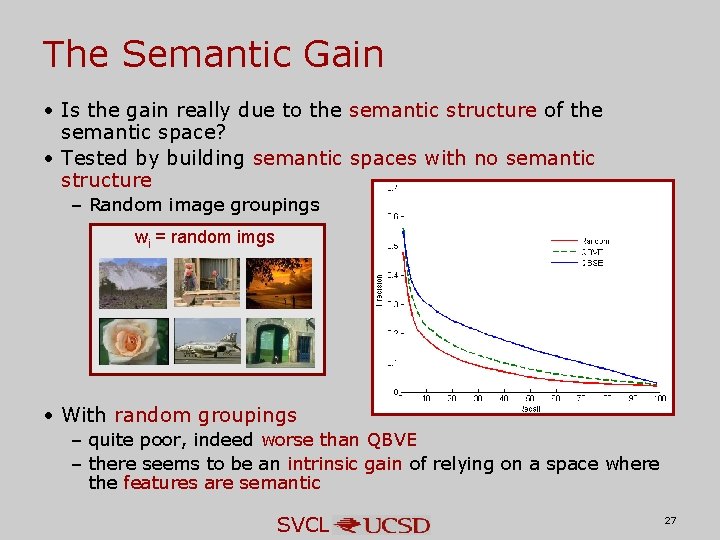

The Semantic Gain
• Is the gain really due to the semantic structure of the semantic space?
• Tested by building semantic spaces with no semantic structure
  – Random image groupings: each concept w_i is a set of random images
• With random groupings
  – Performance is quite poor, indeed worse than QBVE
  – There seems to be an intrinsic gain in relying on a space where the features are semantic

![Outline Semantic Image Representation Appearance Based Image Representation Semantic Multinomial Contribution Outline. • Semantic Image Representation – Appearance Based Image Representation – Semantic Multinomial [Contribution]](https://slidetodoc.com/presentation_image_h2/9dd030d71b6c1f13a2a8af341c632151/image-28.jpg)

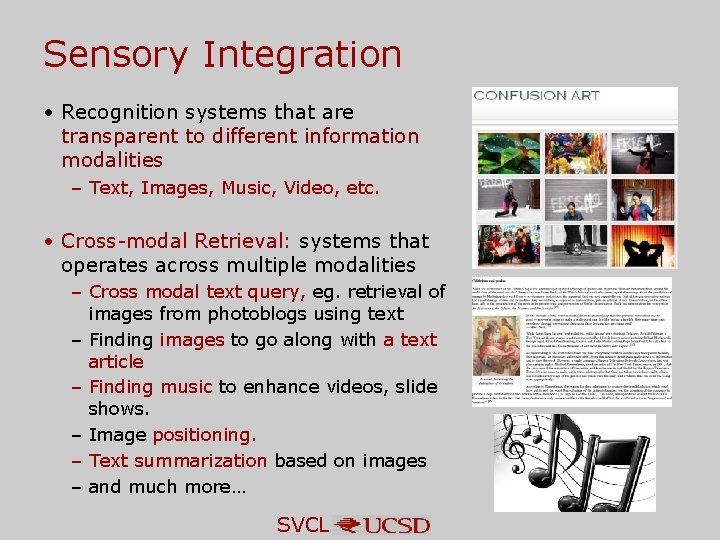

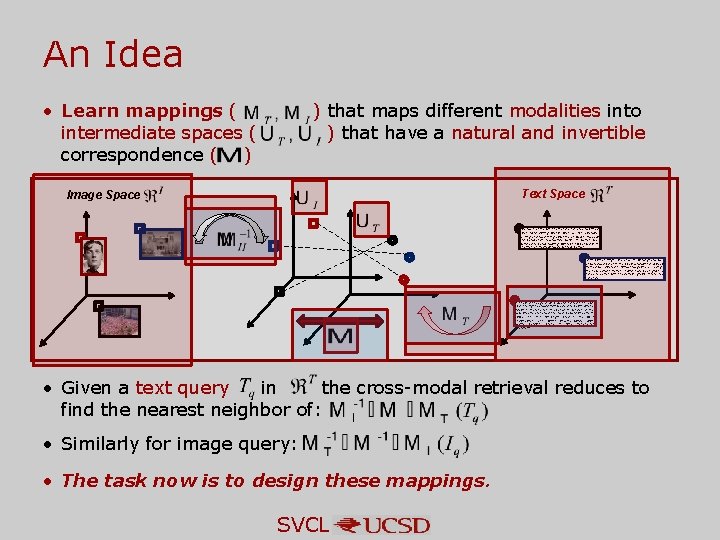

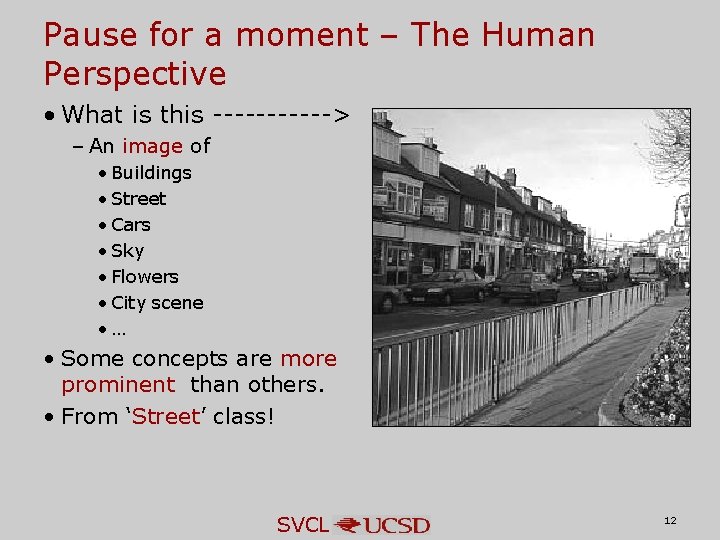

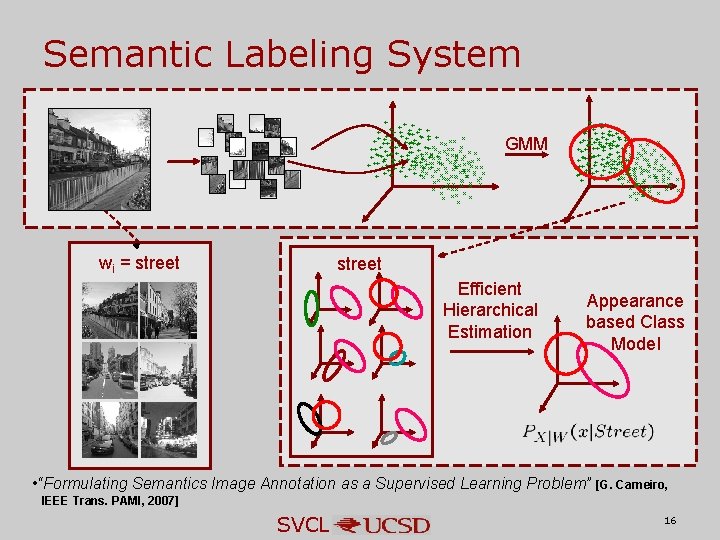

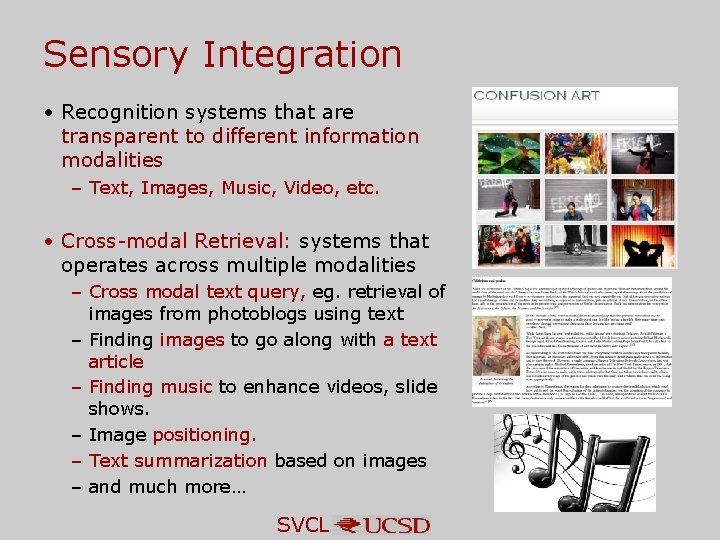

Sensory Integration
• Recognition systems that are transparent to different information modalities
  – Text, images, music, video, etc.
• Cross-modal retrieval: systems that operate across multiple modalities
  – Cross-modal text query, e.g., retrieval of images from photoblogs using text
  – Finding images to go along with a text article
  – Finding music to enhance videos and slide shows
  – Image positioning
  – Text summarization based on images
  – and much more…

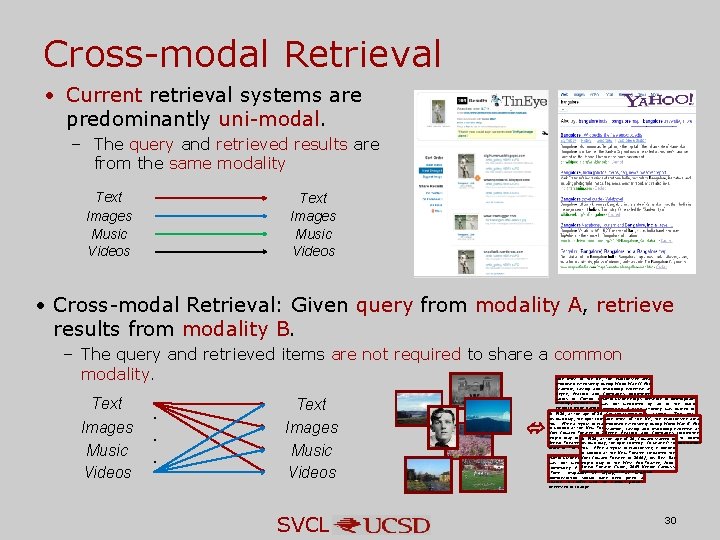

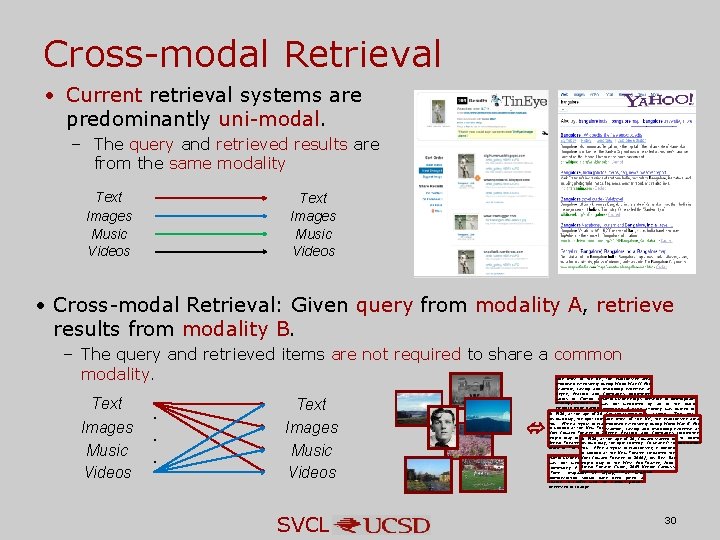

Cross-modal Retrieval
• Current retrieval systems are predominantly uni-modal.
  – The query and retrieved results are from the same modality: text → text, images → images, music → music, videos → videos
• Cross-modal retrieval: given a query from modality A, retrieve results from modality B.
  – The query and retrieved items are not required to share a common modality.
[Figure: example items from different modalities: images alongside Wikipedia text excerpts on Manchester during World War II, Martin Luther King's presence in Birmingham, and Noël Coward's play ''I'll Leave It to You''.]

The problem
• No natural correspondence between representations of different modalities.
• For example, we use a bag-of-words representation for both images and text
  – Images: vectors over visual textures ($\mathcal{R}^I$)
  – Text: vectors of word counts ($\mathcal{R}^T$)
• How do we compute similarity? An intermediate space.
[Figure: points in the image space and in the text space with no known correspondence ("? ?"). The text space is illustrated with Wikipedia excerpts (Manchester during World War II, Martin Luther King in Birmingham, Noël Coward's ''I'll Leave It to You'', the population of Turkey) and example words such as Sky, Bomb, Terrorist, India, Success, Weather, Prime, President, Navy, Army, Sun, Books, Music, Food, Poverty, Iran, America.]

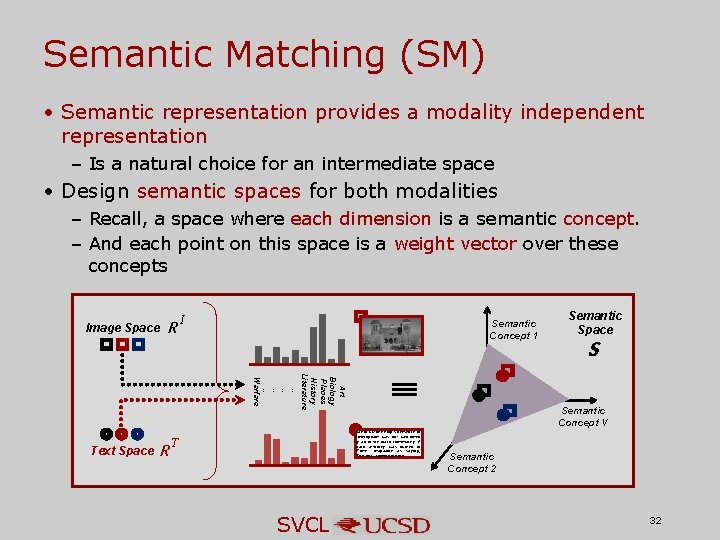

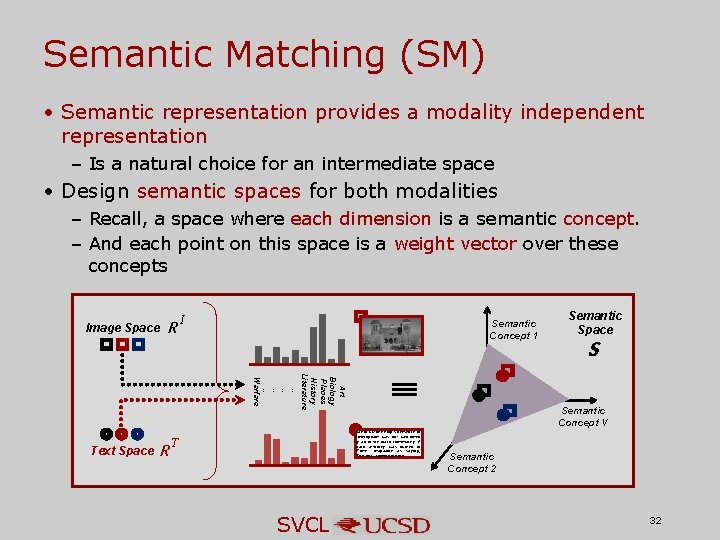

Semantic Matching (SM)
• Semantic representation provides a modality independent representation
  – Is a natural choice for an intermediate space
• Design semantic spaces for both modalities
  – Recall: a space where each dimension is a semantic concept
  – And each point in this space is a weight vector over these concepts
[Figure: the image space $\mathcal{R}^I$ and the text space $\mathcal{R}^T$ are both mapped to a semantic space $\mathcal{S}$ with axes Semantic Concept 1, Semantic Concept 2, …, Semantic Concept V; example concepts are Art, Biology, Places, History, Literature, …, Warfare.]

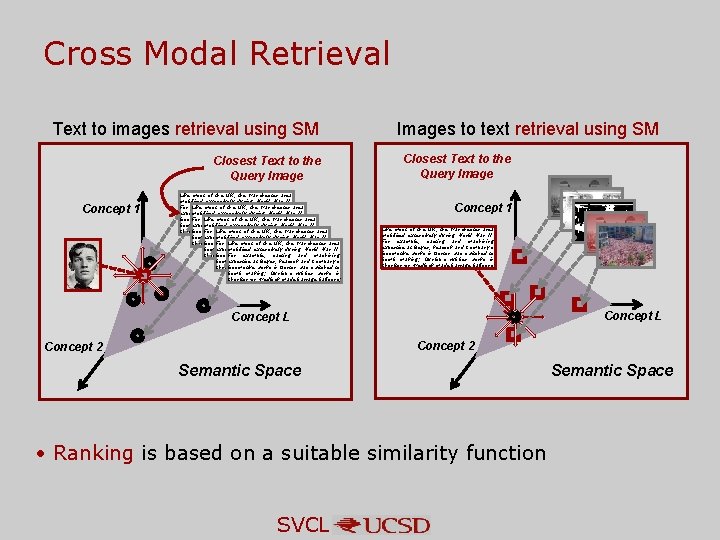

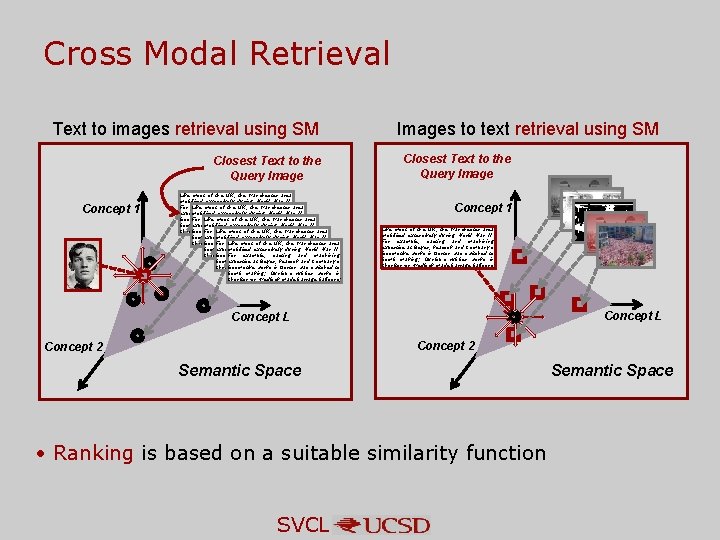

Cross Modal Retrieval
• Text-to-image retrieval using SM: the query text and the database images are mapped to the semantic space, and the closest images are returned
• Image-to-text retrieval using SM: the closest text to the query image is returned
• Ranking is based on a suitable similarity function
[Figure: query and database items as points in the semantic space with axes Concept 1, Concept 2, …, Concept L; the example text is a Wikipedia excerpt on Manchester during World War II.]
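Ranking in the semantic space can be sketched as follows. The slide only says "a suitable similarity function"; the cosine (normalized correlation) similarity used here is an assumption made for illustration, and `rank_by_similarity` is a hypothetical helper name.

```python
import numpy as np

def rank_by_similarity(query_smn, database_smns):
    """Rank database items (e.g., images) by cosine similarity to a query SMN (e.g., from text)."""
    q = np.asarray(query_smn, dtype=float)
    db = np.asarray(database_smns, dtype=float)
    sims = db @ q / (np.linalg.norm(db, axis=1) * np.linalg.norm(q))
    order = np.argsort(-sims)   # indices of database items, most similar first
    return order, sims
```

Because both modalities are represented as weight vectors over the same concepts, the query and the database rows can come from different modalities without any change to the ranking code.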

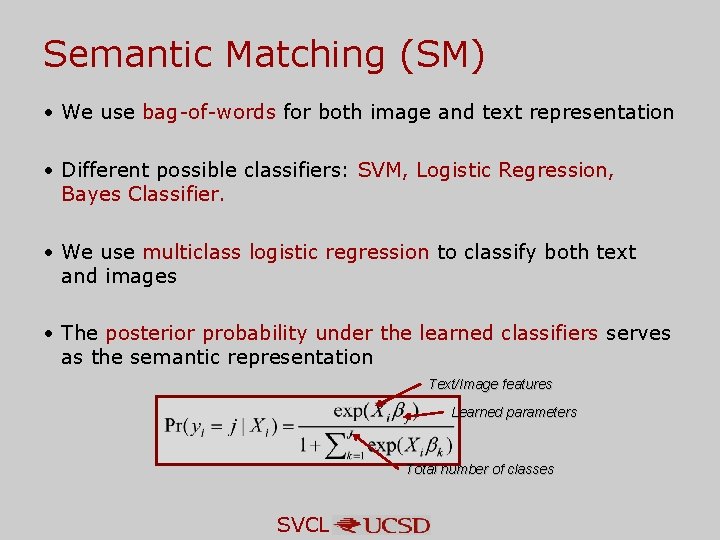

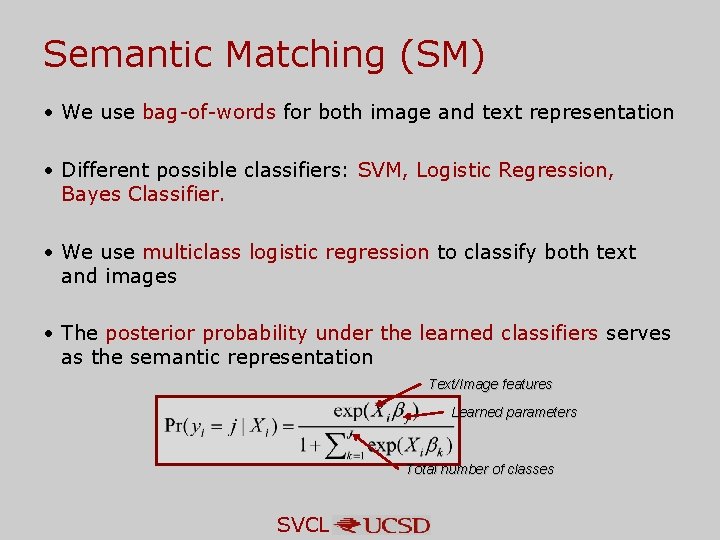

Semantic Matching (SM)
• We use bag-of-words for both image and text representation
• Different possible classifiers: SVM, logistic regression, Bayes classifier
• We use multiclass logistic regression to classify both text and images
• The posterior probability under the learned classifiers serves as the semantic representation:
  $P(w = j \mid x) = \dfrac{\exp(\beta_j^T x)}{\sum_{k=1}^{V} \exp(\beta_k^T x)}$
  where $x$ are the text/image features, $\beta_j$ the learned parameters, and $V$ the total number of classes

![Evaluation Dataset Wikipedia Featured Articles Novel Around 850 out of obscurity rose Evaluation • Dataset? – Wikipedia Featured Articles [Novel] Around 850, out of obscurity rose](https://slidetodoc.com/presentation_image_h2/9dd030d71b6c1f13a2a8af341c632151/image-35.jpg)

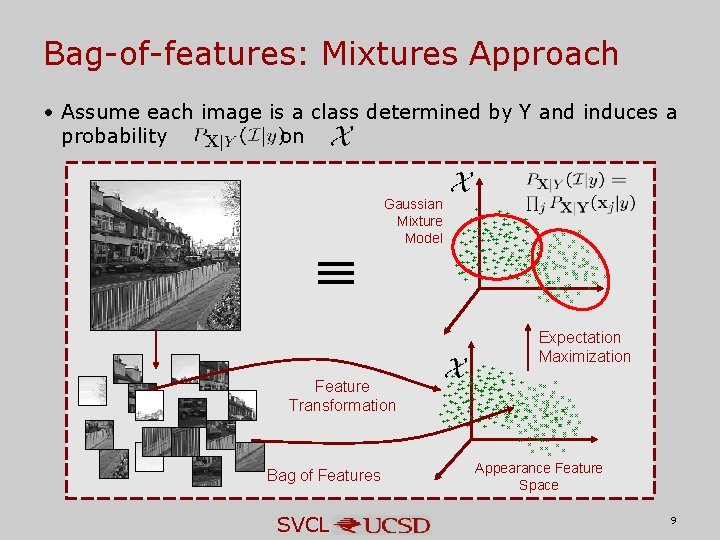

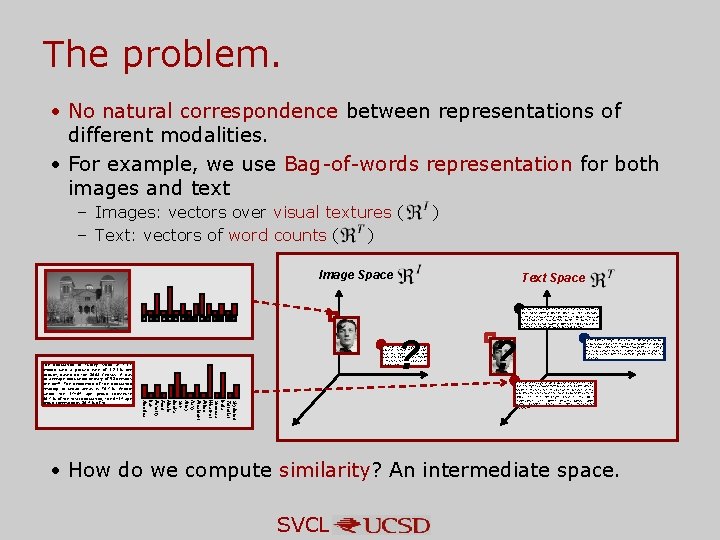

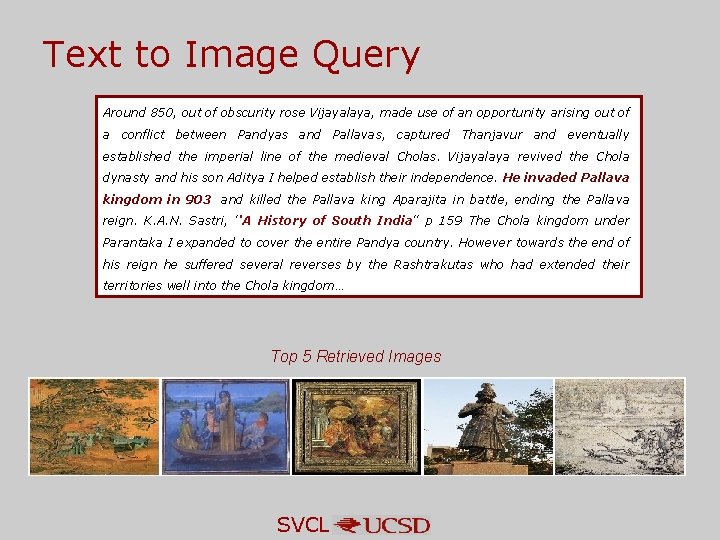

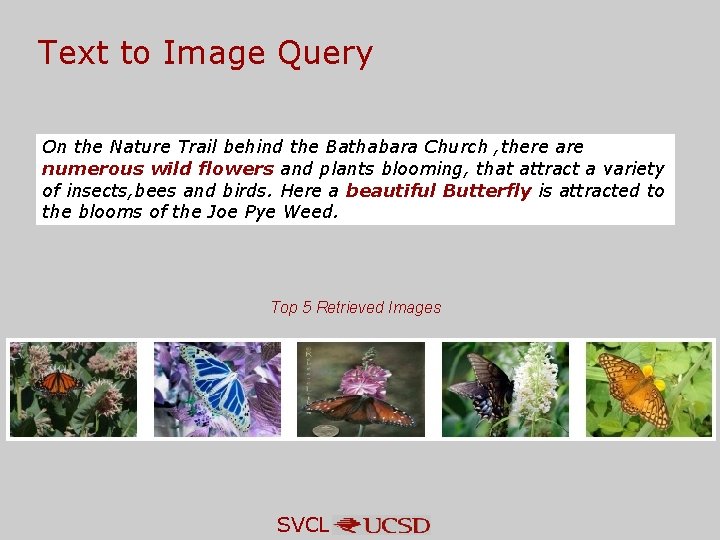

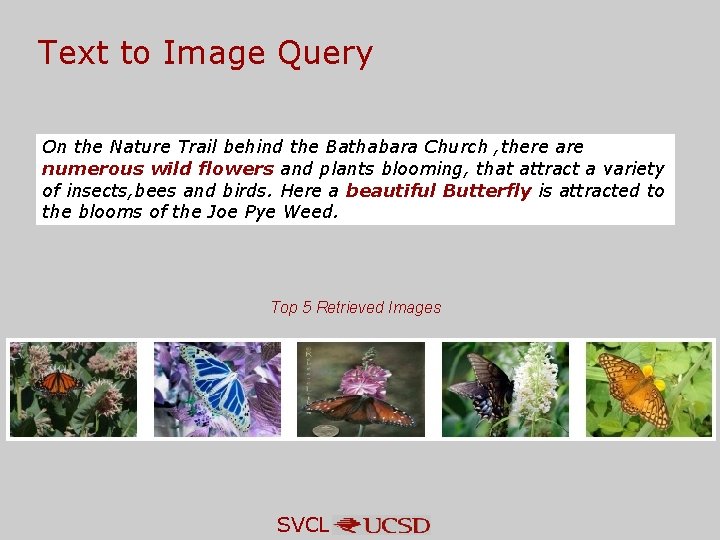

Evaluation
• Dataset?
  – Wikipedia Featured Articles [Novel]
    Example text: "Around 850, out of obscurity rose Vijayalaya, made use of an opportunity arising out of a conflict between Pandyas and Pallavas, captured Thanjavur and eventually established the imperial line of the medieval Cholas. Vijayalaya revived the Chola dynasty and his son Aditya I helped establish their independence. He invaded Pallava kingdom in 903 and killed the Pallava king Aparajita in battle, ending the Pallava reign. K. A. N. Sastri, ''A History of South India''…"
    Source: http://en.wikipedia.org/wiki/History_of_Tamil_Nadu#Cholas
  – TVGraz [Khan et al. '09]
    Example text: "On the Nature Trail behind the Bathabara Church, there are numerous wild flowers and plants blooming, that attract a variety of insects, bees and birds. Here a beautiful Butterfly is attracted to the blooms of the Joe Pye Weed."
    Source: www2.journalnow.com/ugc/snap/community-events/beautifulbutterfly/1528/
  – Both datasets have 10 classes and about 3000 image-text pairs.

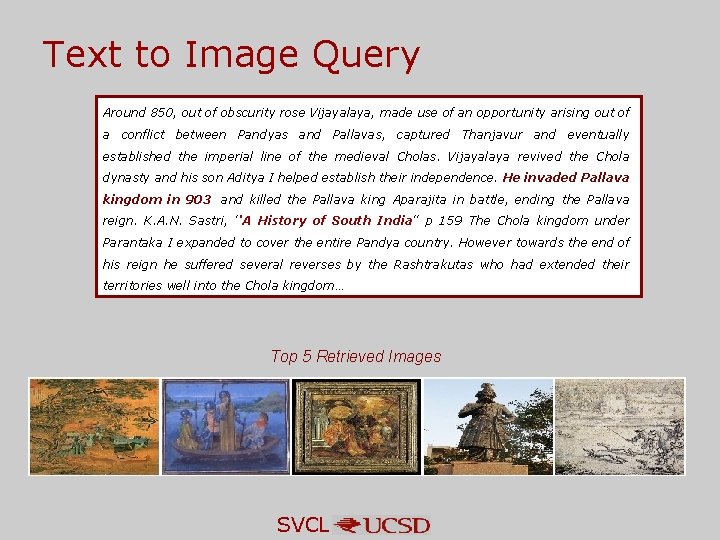

Text to Image Query Around 850, out of obscurity rose Vijayalaya, made use of an opportunity arising out of a conflict between Pandyas and Pallavas, captured Thanjavur and eventually established the imperial line of the medieval Cholas. Vijayalaya revived the Chola dynasty and his son Aditya I helped establish their independence. He invaded Pallava kingdom in 903 and killed the Pallava king Aparajita in battle, ending the Pallava reign. K. A. N. Sastri, ''A History of South India'' p 159 The Chola kingdom under Parantaka I expanded to cover the entire Pandya country. However towards the end of his reign he suffered several reverses by the Rashtrakutas who had extended their territories well into the Chola kingdom… Top 5 Retrieved Images SVCL

Text to Image Query On the Nature Trail behind the Bathabara Church , there are numerous wild flowers and plants blooming, that attract a variety of insects, bees and birds. Here a beautiful Butterfly is attracted to the blooms of the Joe Pye Weed. Top 5 Retrieved Images SVCL

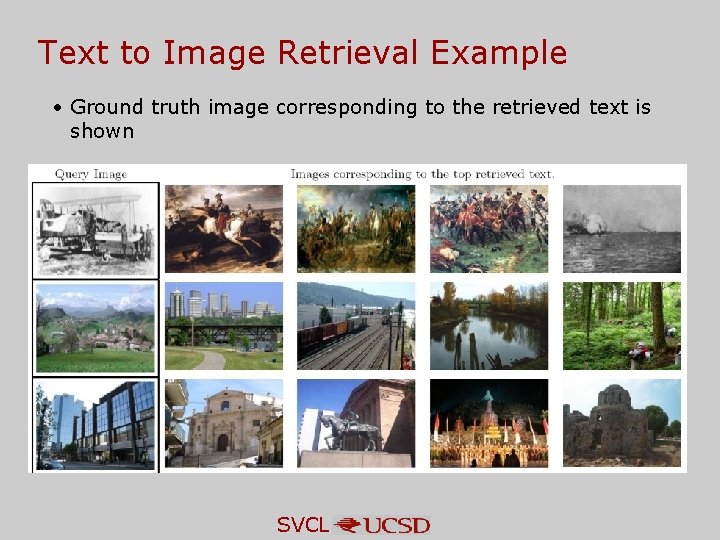

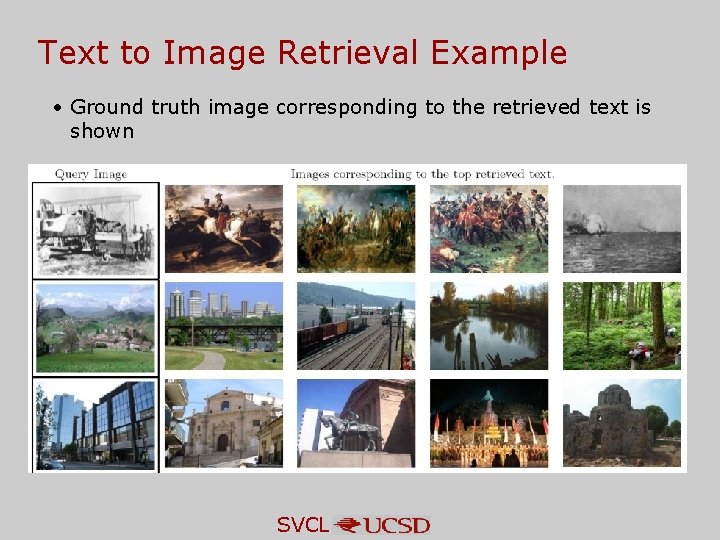

Text to Image Retrieval Example • Ground truth image corresponding to the retrieved text is shown SVCL

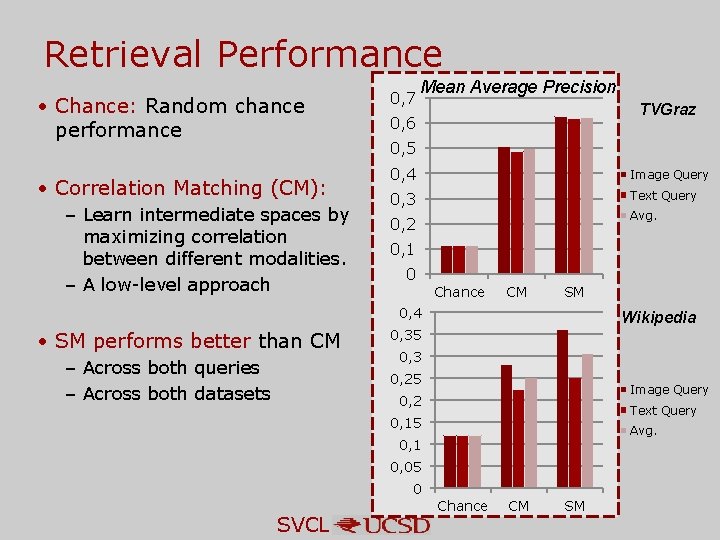

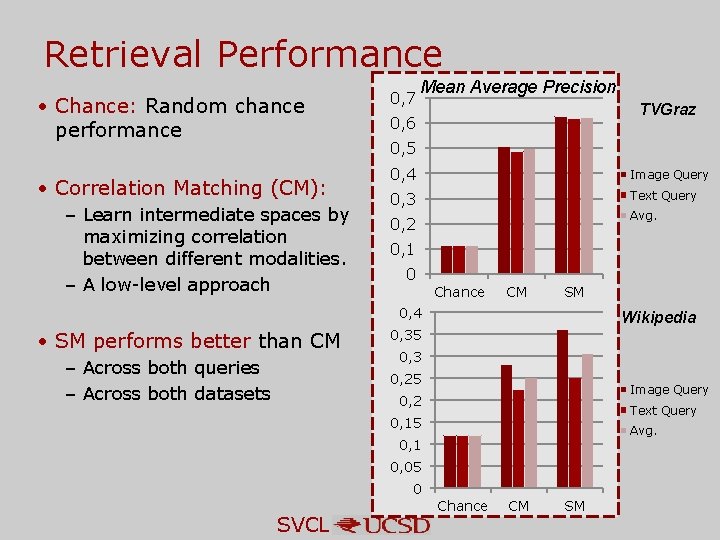

Retrieval Performance
• Chance: random chance performance
• Correlation Matching (CM):
  – Learn intermediate spaces by maximizing correlation between different modalities.
  – A low-level approach
• SM performs better than CM
  – Across both queries
  – Across both datasets
[Figure: bar charts of mean average precision for Chance, CM, and SM with image queries, text queries, and their average; one chart for TVGraz (axis from 0 to 0.7) and one for Wikipedia (axis from 0 to 0.4).]
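For reference, mean average precision, the measure plotted on this slide, can be computed as below. This is a generic sketch of the standard definition, assuming each ranked list contains all relevant items for its query; the function names are illustrative.

```python
def average_precision(ranked_relevance):
    """Average precision of one ranked list of 0/1 relevance flags (best match first)."""
    hits, total = 0, 0.0
    for rank, relevant in enumerate(ranked_relevance, start=1):
        if relevant:
            hits += 1
            total += hits / rank   # precision at the rank of each relevant item
    return total / hits if hits else 0.0

def mean_average_precision(all_rankings):
    """Mean of the per-query average precisions."""
    return sum(average_precision(r) for r in all_rankings) / len(all_rankings)
```

In the cross-modal setting a retrieved item would typically count as relevant when it belongs to the same class as the query, which is how class labels on image-text pairs can be turned into the 0/1 flags used here.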

![Outline Semantic Image Representation Appearance Based Image Representation Semantic Multinomial Contribution Outline. • Semantic Image Representation – Appearance Based Image Representation – Semantic Multinomial [Contribution]](https://slidetodoc.com/presentation_image_h2/9dd030d71b6c1f13a2a8af341c632151/image-40.jpg)

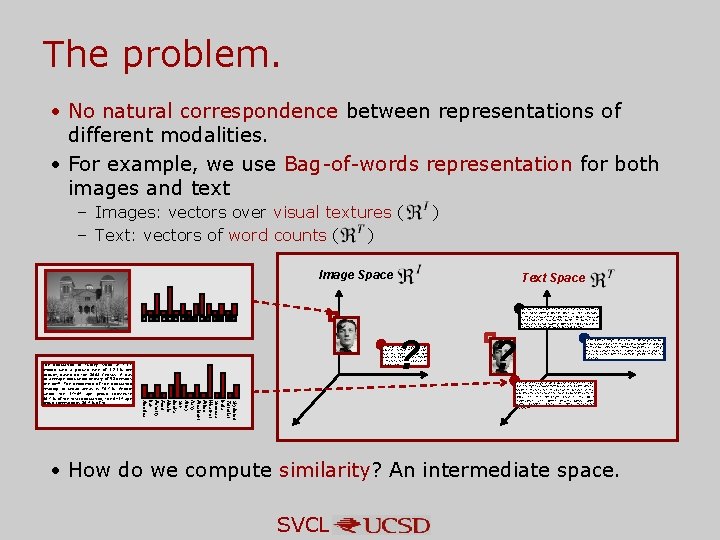

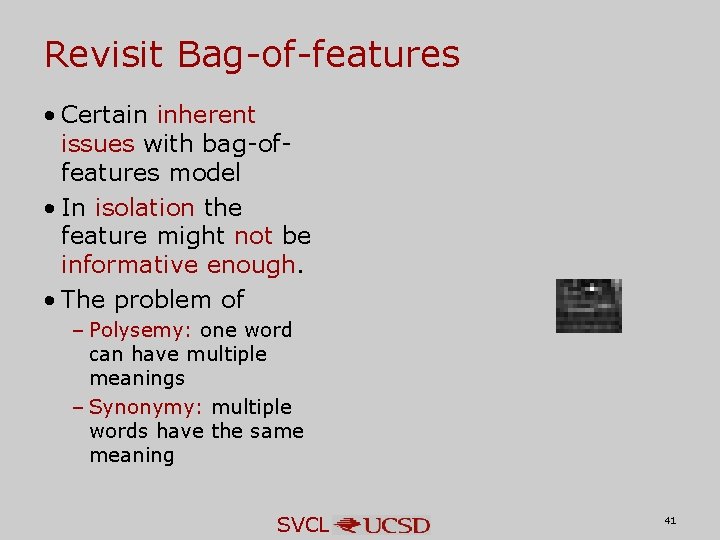

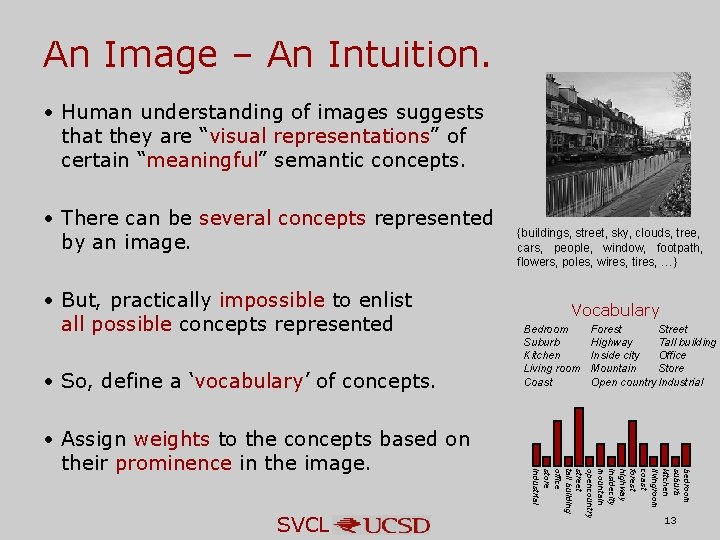

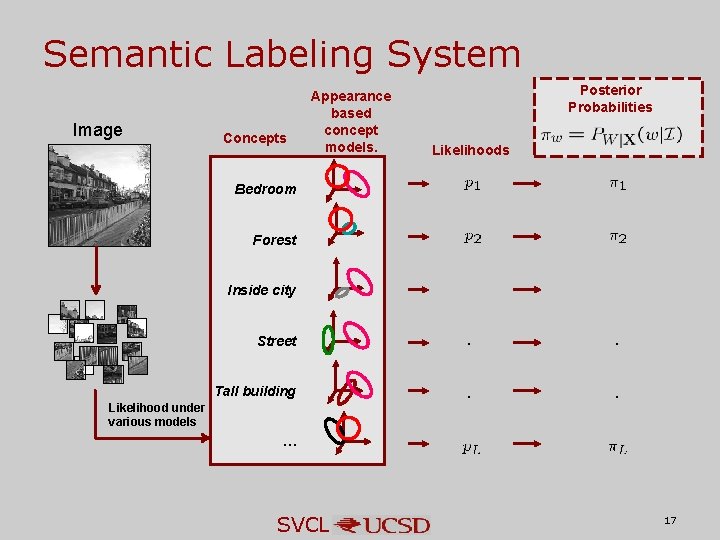

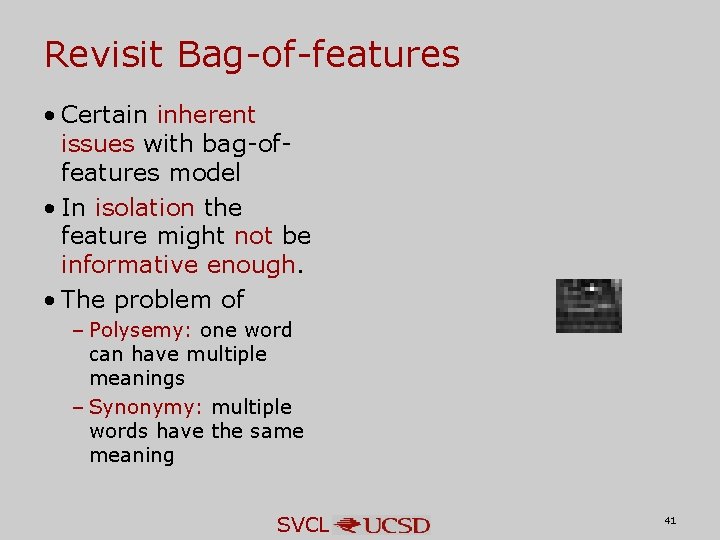

Revisit Bag-of-features
• There are certain inherent issues with the bag-of-features model
• In isolation, a feature might not be informative enough.
• The problem of
  – Polysemy: one word can have multiple meanings
  – Synonymy: multiple words have the same meaning

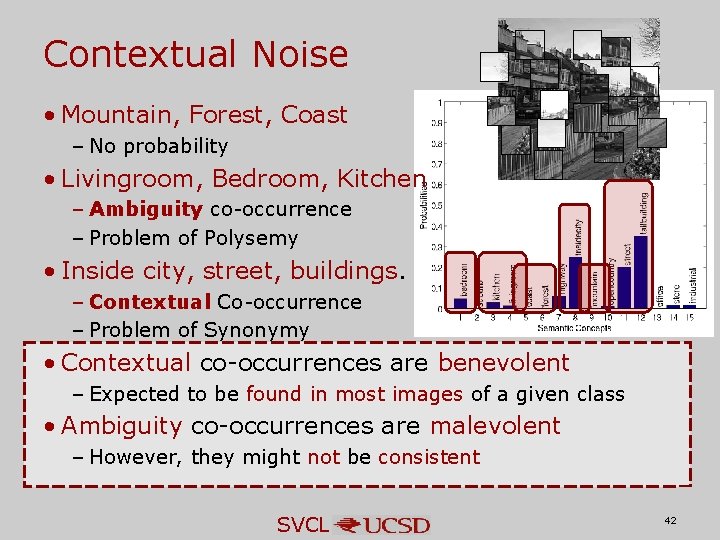

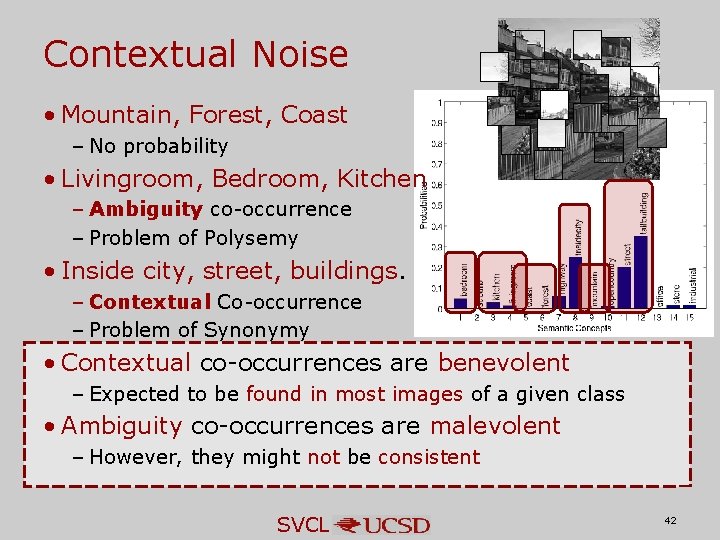

Contextual Noise
• Mountain, Forest, Coast
  – No probability
• Livingroom, Bedroom, Kitchen
  – Ambiguity co-occurrence
  – Problem of polysemy
• Inside city, Street, Buildings
  – Contextual co-occurrence
  – Problem of synonymy
• Contextual co-occurrences are benevolent
  – Expected to be found in most images of a given class
• Ambiguity co-occurrences are malevolent
  – However, they might not be consistent

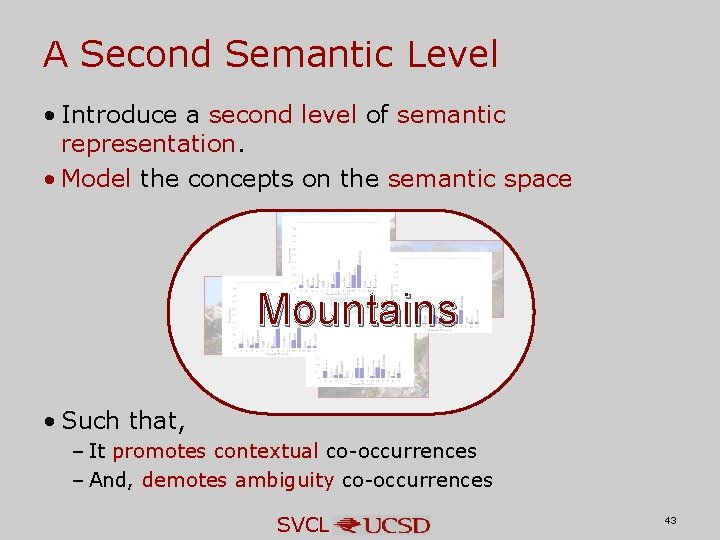

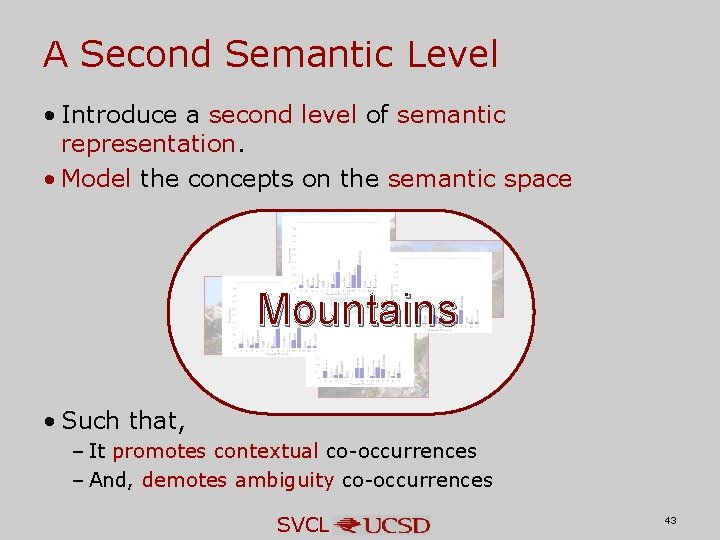

A Second Semantic Level
• Introduce a second level of semantic representation.
• Model the concepts (e.g., Mountains) on the semantic space
• Such that
  – It promotes contextual co-occurrences
  – And demotes ambiguity co-occurrences

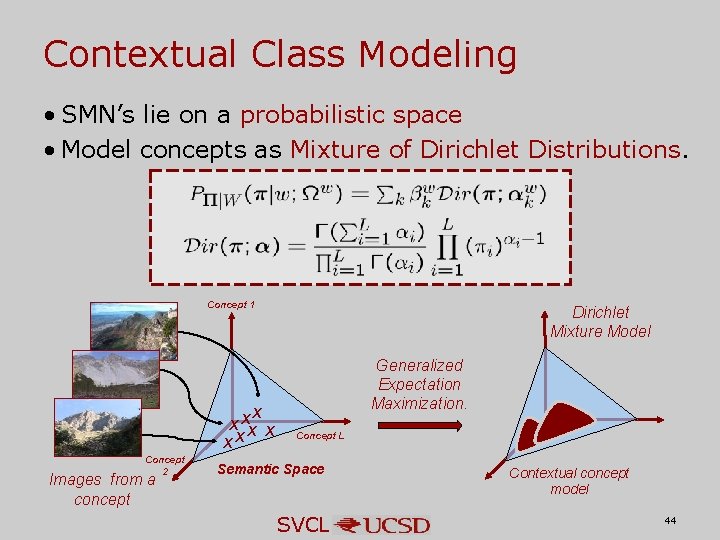

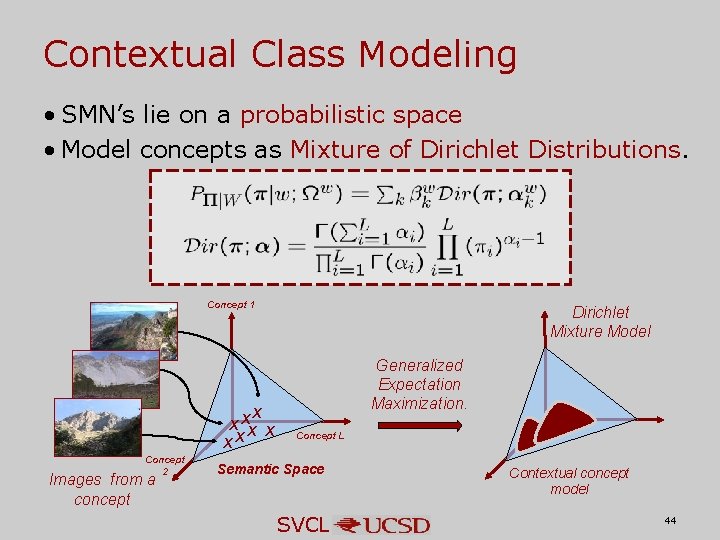

Contextual Class Modeling
• SMNs lie on a probabilistic space
• Model concepts as mixtures of Dirichlet distributions
[Figure: the SMNs of the images from a concept, shown as points x in the semantic space (axes Concept 1, Concept 2, …, Concept L), are fit with a Dirichlet mixture model by generalized expectation maximization, giving the contextual concept model.]
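To make the Dirichlet mixture concrete, the sketch below evaluates the log-likelihood of one SMN under such a mixture, which is the quantity a contextual concept model would assign to an image. It covers evaluation only; the generalized EM fitting mentioned on the slide is not shown, and the function names and parameter layout are assumptions of this sketch.

```python
import math

def dirichlet_logpdf(pi, alpha):
    """Log-density of a Dirichlet(alpha) at the probability vector pi (all entries > 0)."""
    log_norm = math.lgamma(sum(alpha)) - sum(math.lgamma(a) for a in alpha)
    return log_norm + sum((a - 1.0) * math.log(p) for a, p in zip(alpha, pi))

def dirichlet_mixture_loglik(pi, weights, alphas):
    """Log-likelihood of pi under a mixture of Dirichlets (log-sum-exp over components)."""
    logs = [math.log(w) + dirichlet_logpdf(pi, a) for w, a in zip(weights, alphas)]
    m = max(logs)
    return m + math.log(sum(math.exp(v - m) for v in logs))
```

Note that the density requires strictly positive SMN entries, which is one practical reason to regularize the SMNs before modeling them at this second level.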

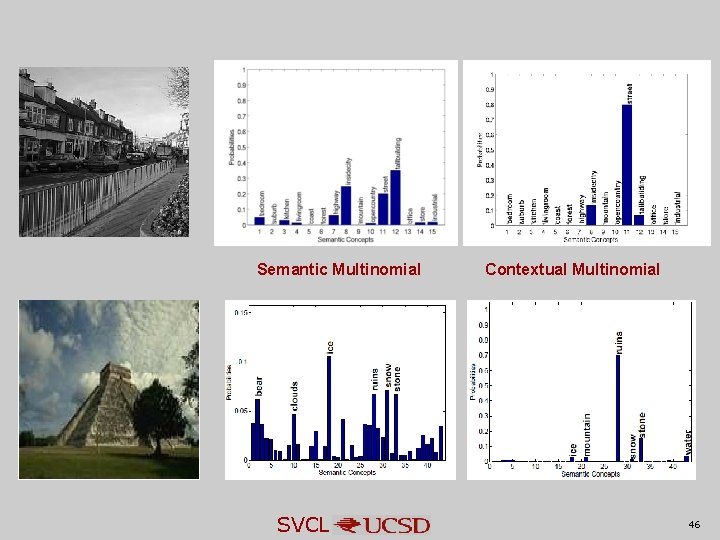

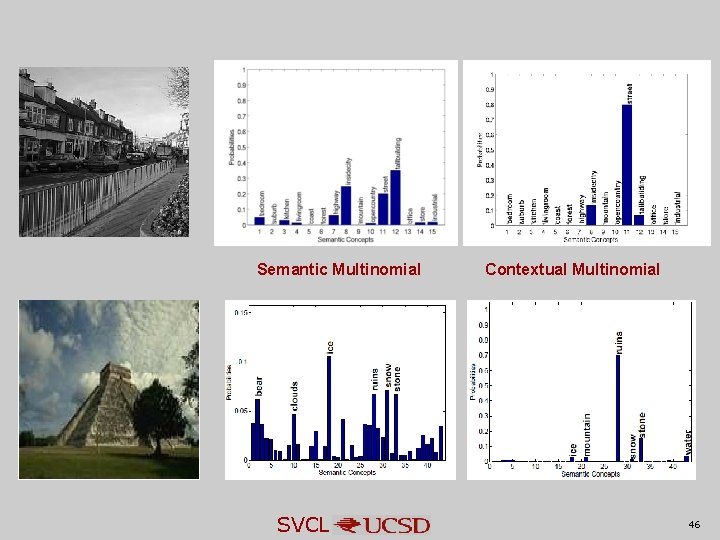

Generating the contextual representation
• Learning the visual class models [Carneiro '05]: concept training images (e.g., w_i = mountain) → bag of features in the visual feature space → Gaussian mixture model, learned by efficient hierarchical estimation
• Learning the contextual class models: bag of features → semantic multinomials in the semantic space → Dirichlet mixture model, the contextual model of the semantic concept
• At test time: training / query image → bag of features → visual concept models → semantic multinomial → contextual concept models → contextual multinomial, a point in the contextual space

[Figure: the semantic multinomial and the contextual multinomial of an example image, side by side.]

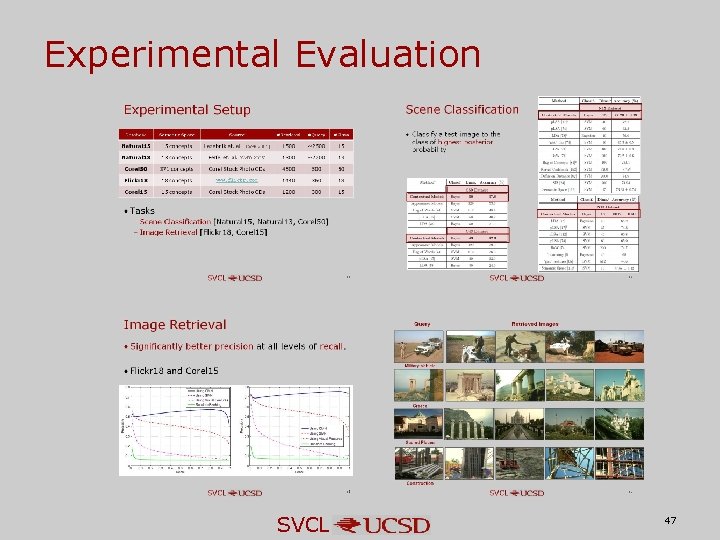

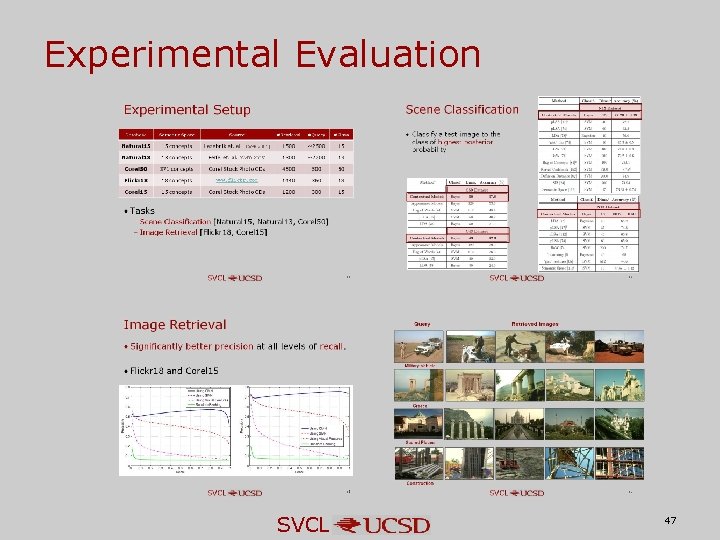

Experimental Evaluation

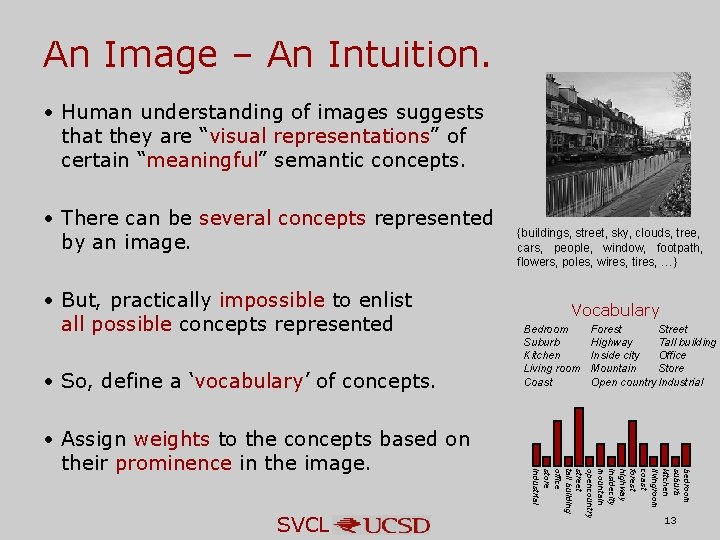

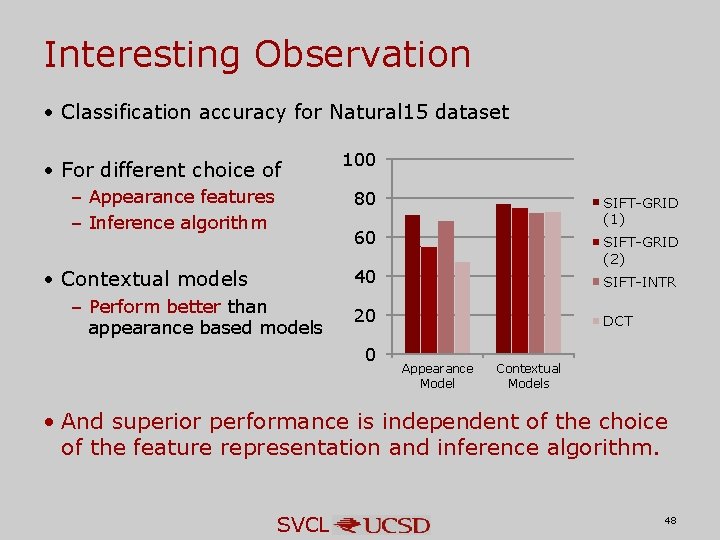

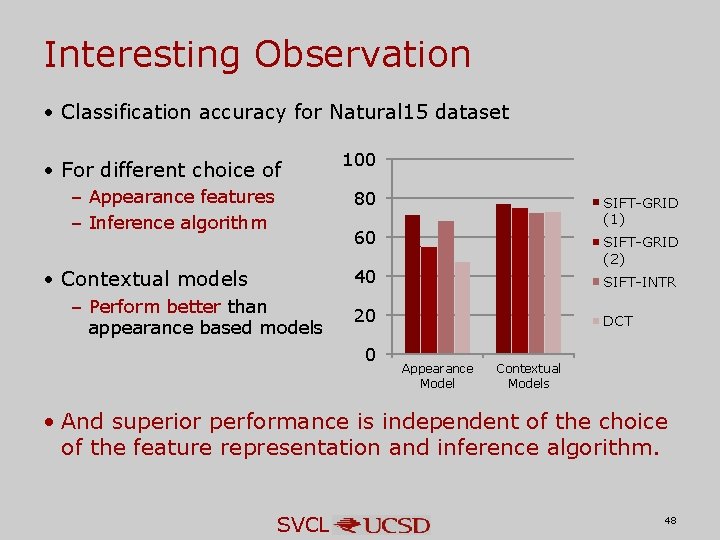

Interesting Observation
• Classification accuracy for the Natural 15 dataset
• For different choices of
  – Appearance features
  – Inference algorithm
• Contextual models
  – Perform better than appearance based models
• And the superior performance is independent of the choice of the feature representation and inference algorithm.
[Figure: bar chart of classification accuracy (0 to 100) for the appearance model vs. contextual models, with features SIFT-GRID (1), SIFT-GRID (2), SIFT-INTR, and DCT.]

![Outline Semantic Image Representation Appearance Based Image Representation Semantic Multinomial Contribution Outline. • Semantic Image Representation – Appearance Based Image Representation – Semantic Multinomial [Contribution]](https://slidetodoc.com/presentation_image_h2/9dd030d71b6c1f13a2a8af341c632151/image-49.jpg)

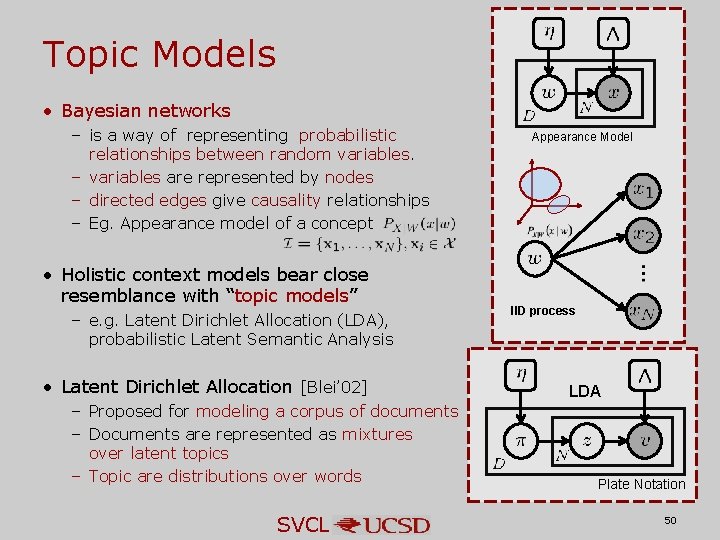

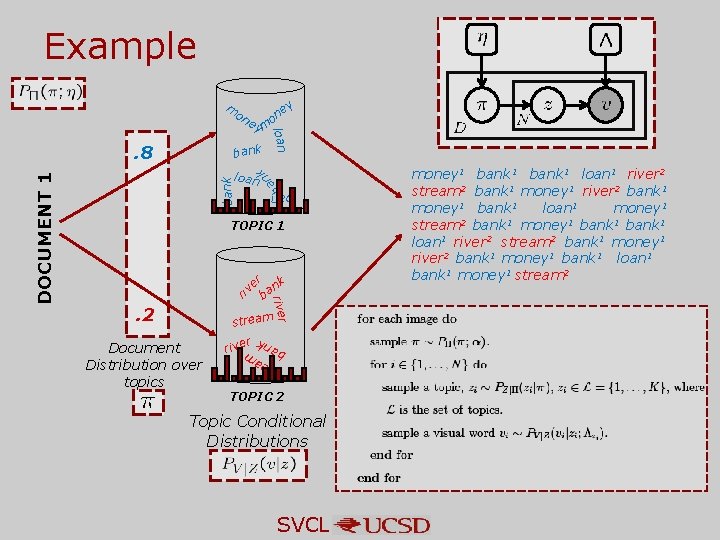

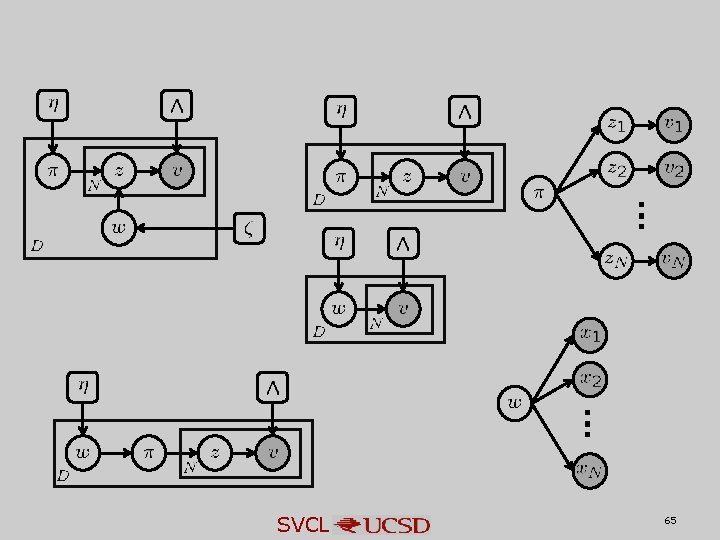

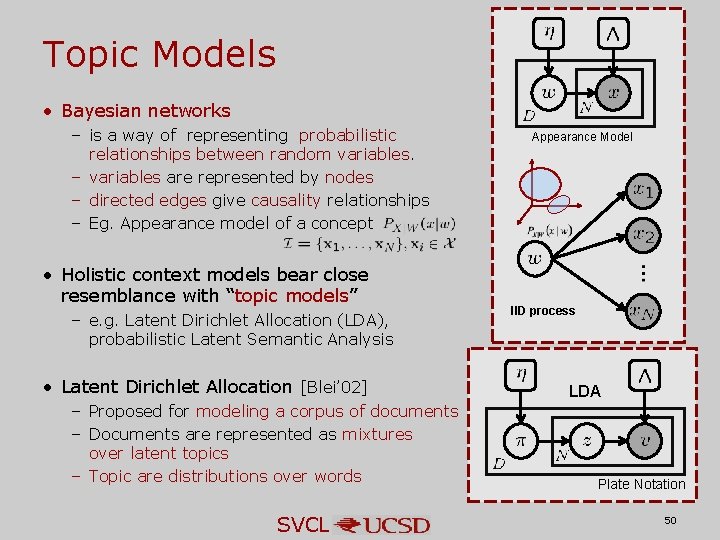

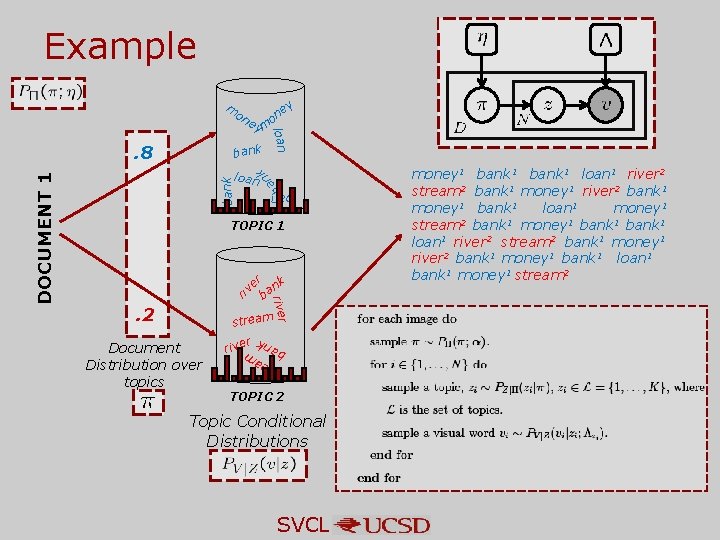

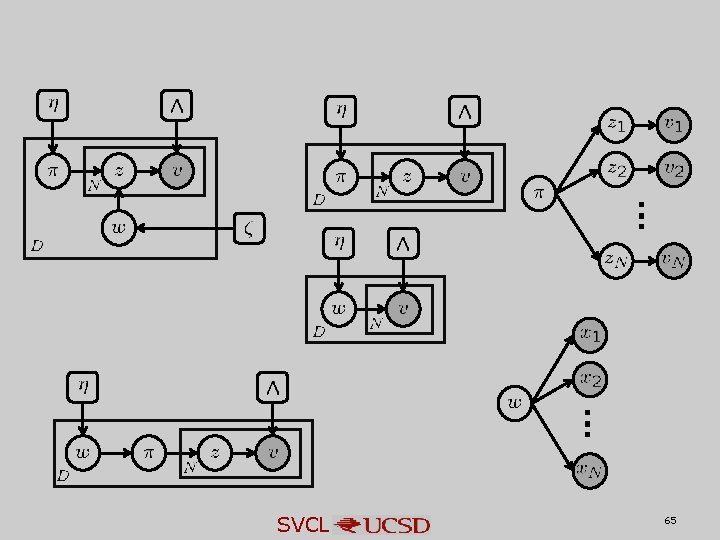

Topic Models
• Bayesian networks
  – A way of representing probabilistic relationships between random variables
  – Variables are represented by nodes
  – Directed edges give causality relationships
  – E.g., the appearance model of a concept (an IID process)
• Holistic context models bear close resemblance to "topic models"
  – E.g., Latent Dirichlet Allocation (LDA), probabilistic Latent Semantic Analysis
• Latent Dirichlet Allocation [Blei '02]
  – Proposed for modeling a corpus of documents
  – Documents are represented as mixtures over latent topics
  – Topics are distributions over words
[Figure: plate notation for the appearance model and for LDA.]

Example
[Figure: LDA generative example. The topic conditional distributions are Topic 1, over the words money, loan, bank, and Topic 2, over the words river, stream, bank. Document 1 has a distribution over topics of 0.8 for Topic 1 and 0.2 for Topic 2. Each generated word is tagged with the topic it was drawn from, e.g., money¹ bank¹ loan¹ river² stream² bank¹ money¹ river² bank¹ money¹ bank¹ loan¹ money¹ stream² bank¹ money¹.]
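The generative process in this example can be sketched as: for every word, draw a topic from the document's distribution over topics, then draw a word from that topic's conditional distribution. The vocabulary and the 0.8/0.2 topic weights follow the slide; the uniform word probabilities inside each topic are an assumption, since the slide does not give them. In full LDA the topic weights would themselves be drawn from a Dirichlet prior rather than fixed.

```python
import numpy as np

vocab = ["money", "loan", "bank", "river", "stream"]
# Topic conditional distributions over the vocabulary (uniform weights are assumed).
topics = np.array([
    [1 / 3, 1 / 3, 1 / 3, 0.0, 0.0],   # Topic 1: money, loan, bank
    [0.0, 0.0, 1 / 3, 1 / 3, 1 / 3],   # Topic 2: bank, river, stream
])

def generate_document(n_words, topic_mix, rng):
    """Sample (word, topic) pairs: topic from the document mixture, word from the topic."""
    words = []
    for _ in range(n_words):
        z = rng.choice(len(topics), p=topic_mix)
        w = rng.choice(len(vocab), p=topics[z])
        words.append((vocab[w], int(z) + 1))   # topics are numbered 1 and 2 as on the slide
    return words

rng = np.random.default_rng(0)
doc = generate_document(16, topic_mix=[0.8, 0.2], rng=rng)
```

The word "bank" can be emitted by either topic, which is exactly the polysemy that the topic tag resolves in the slide's example.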

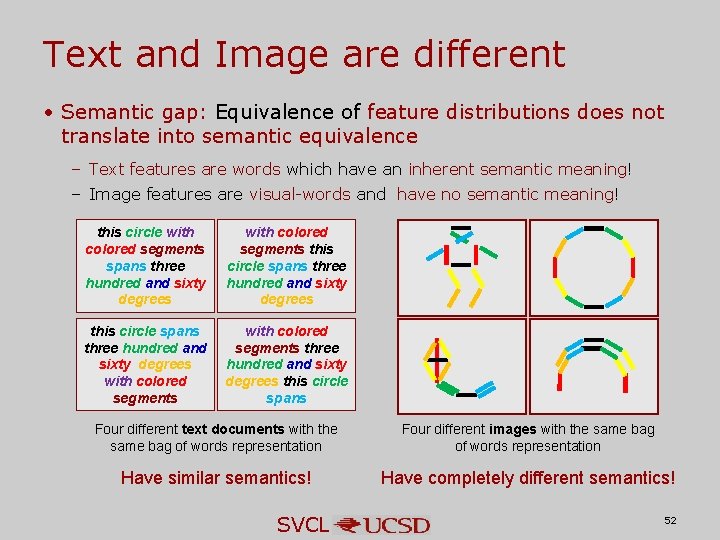

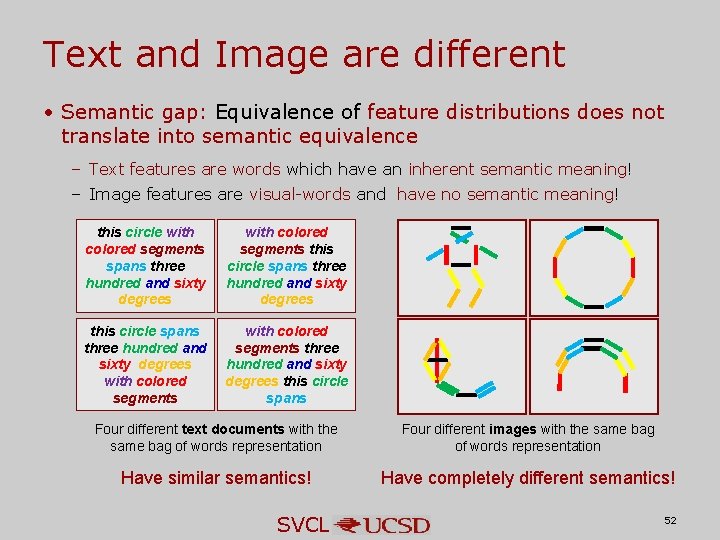

Text and Image are different
• Semantic gap: equivalence of feature distributions does not translate into semantic equivalence
  – Text features are words, which have an inherent semantic meaning!
  – Image features are visual words and have no semantic meaning!
• Four different text documents with the same bag-of-words representation (reorderings of "this circle with colored segments spans three hundred and sixty degrees"): they have similar semantics!
• Four different images with the same bag-of-words representation: they have completely different semantics!
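The text half of this argument is easy to check: reordering the words of a sentence leaves its bag-of-words representation unchanged. The three sentences below are illustrative reorderings built from the phrase on the slide, not the exact four documents shown there.

```python
from collections import Counter

docs = [
    "this circle with colored segments spans three hundred and sixty degrees",
    "with colored segments this circle spans three hundred and sixty degrees",
    "three hundred and sixty degrees this circle spans with colored segments",
]

# A bag of words keeps only how often each word occurs, not where it occurs.
bags = [Counter(d.split()) for d in docs]
all_same = all(b == bags[0] for b in bags)
```

For text, the shared bag still carries the meaning because each word is itself semantic; the slide's point is that the same invariance for visual words says nothing about what an image depicts.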

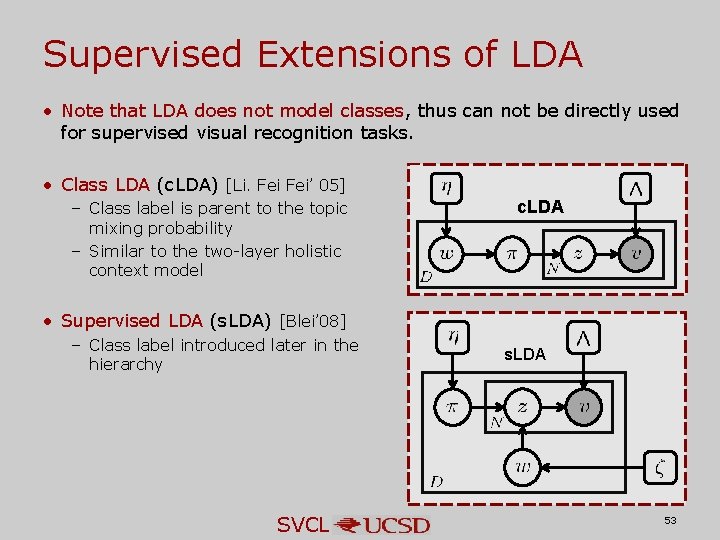

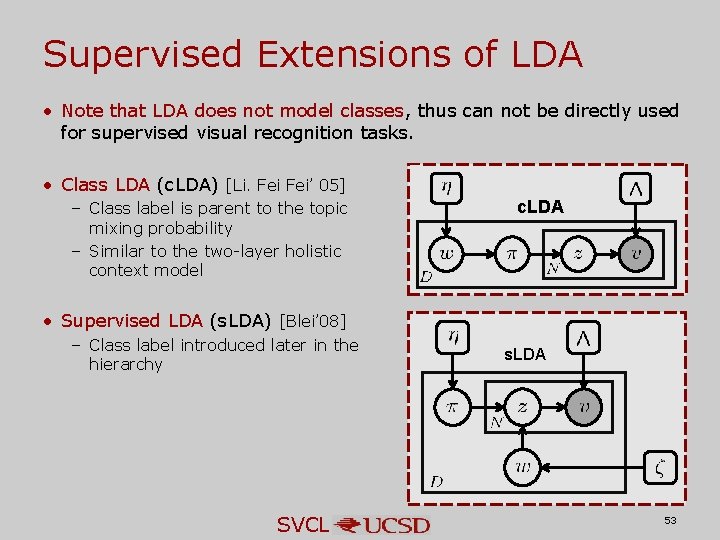

Supervised Extensions of LDA
• Note that LDA does not model classes, and thus cannot be used directly for supervised visual recognition tasks.
• Class LDA (cLDA) [Fei-Fei '05]
  – The class label is the parent of the topic mixing probability
  – Similar to the two-layer holistic context model
• Supervised LDA (sLDA) [Blei '08]
  – The class label is introduced later in the hierarchy
[Figure: plate notation for cLDA and sLDA.]

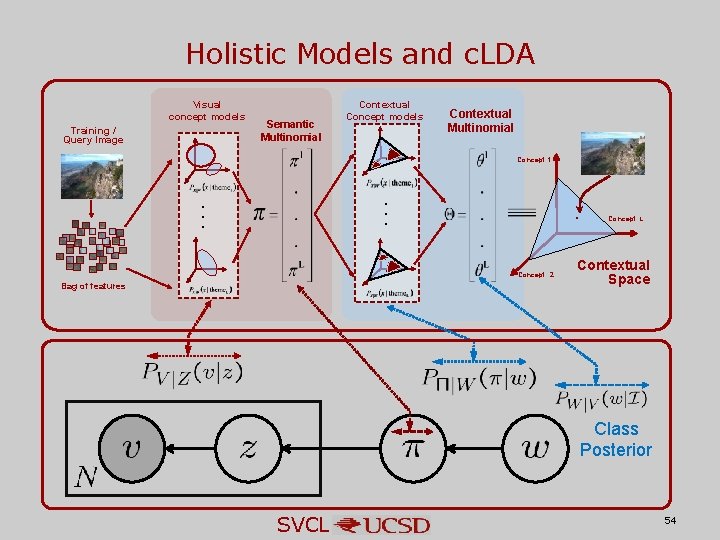

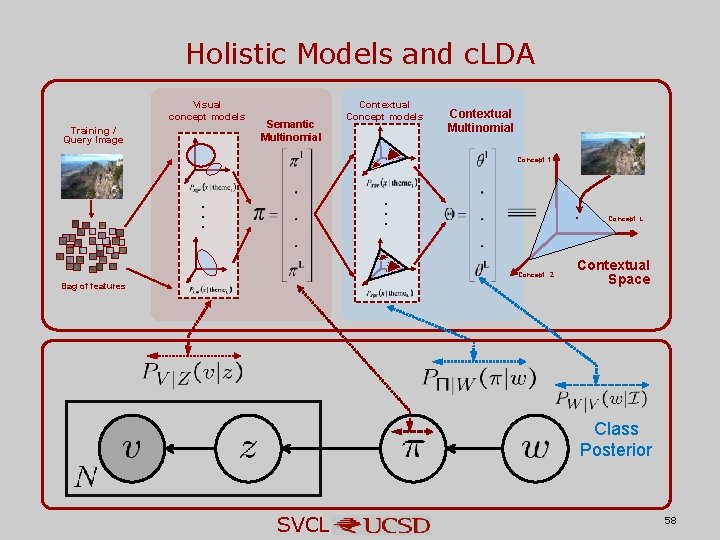

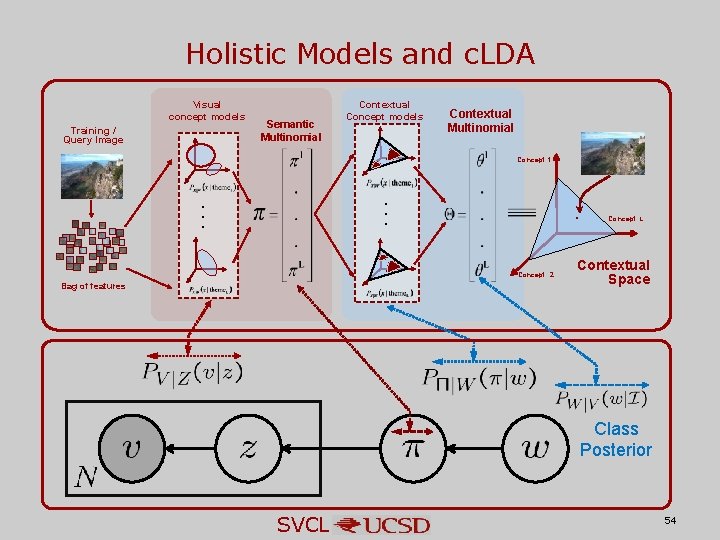

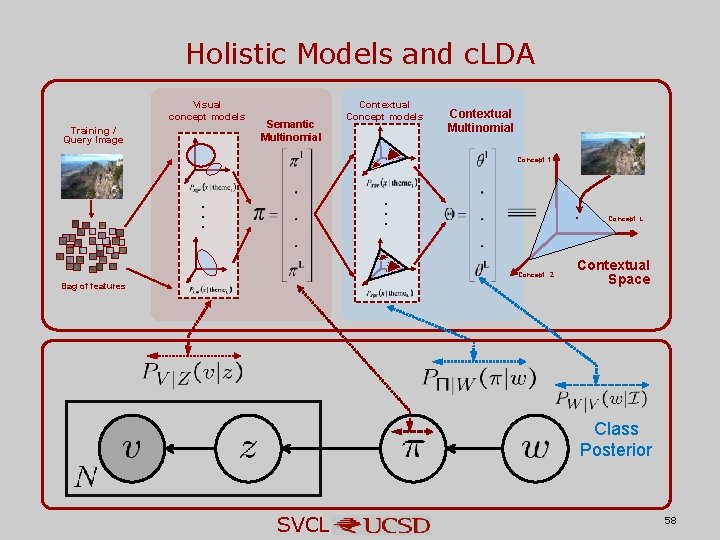

Holistic Models and cLDA
[Figure: training / query image → bag of features → visual concept models → semantic multinomial → contextual concept models → contextual multinomial (a point x in the contextual space with axes Concept 1, Concept 2, …, Concept L) → class posterior.]

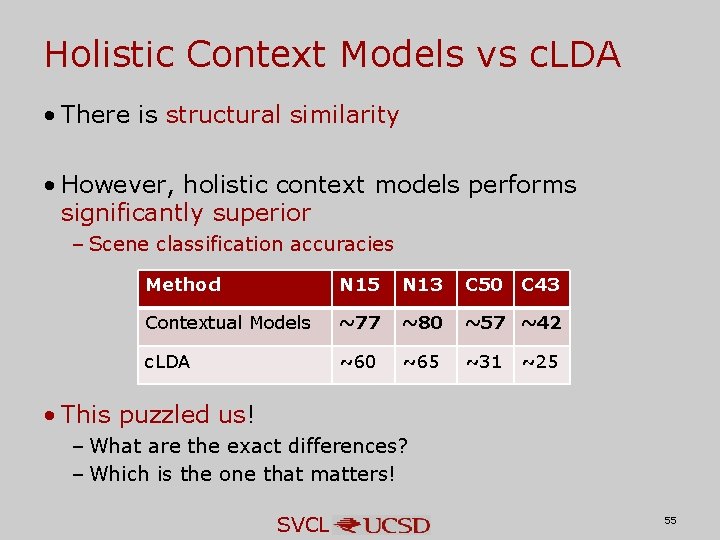

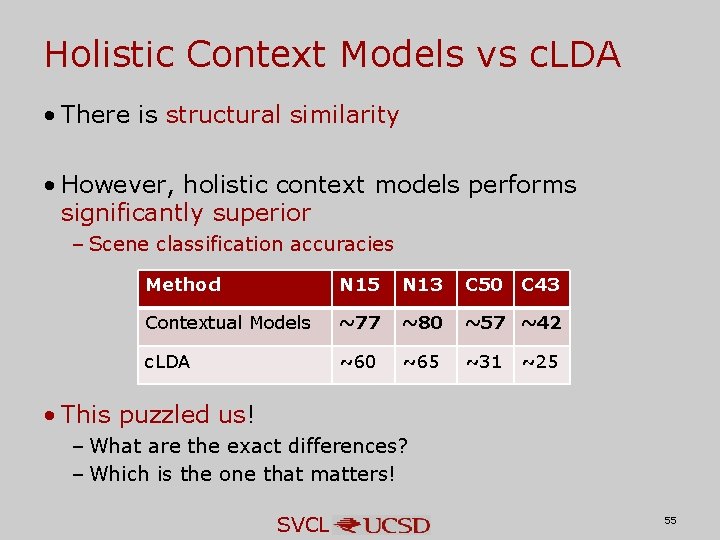

Holistic Context Models vs cLDA
• There is structural similarity
• However, holistic context models perform significantly better
  – Scene classification accuracies

| Method | N15 | N13 | C50 | C43 |
|---|---|---|---|---|
| Contextual Models | ~77 | ~80 | ~57 | ~42 |
| cLDA | ~60 | ~65 | ~31 | ~25 |

• This puzzled us!
  – What are the exact differences?
  – Which is the one that matters?

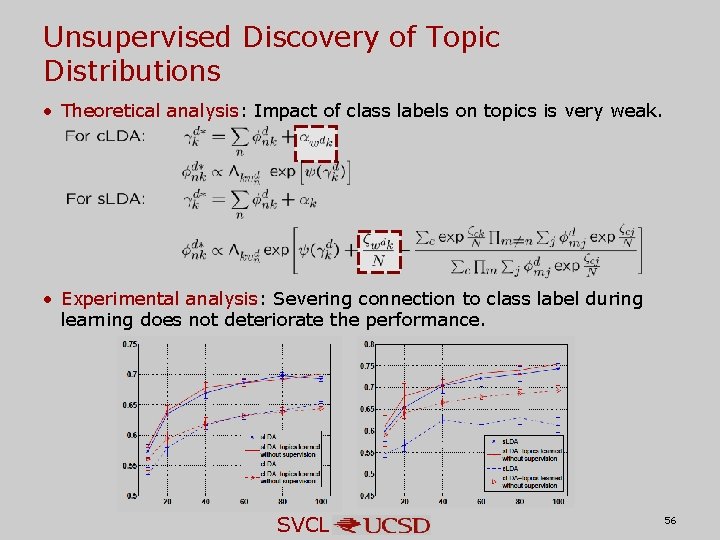

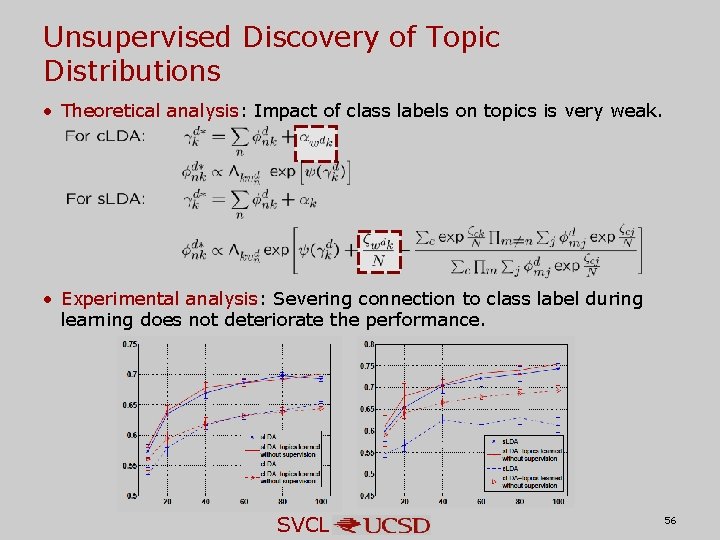

Unsupervised Discovery of Topic Distributions
• Theoretical analysis: the impact of class labels on the topics is very weak.
• Experimental analysis: severing the connection to the class label during learning does not degrade performance.

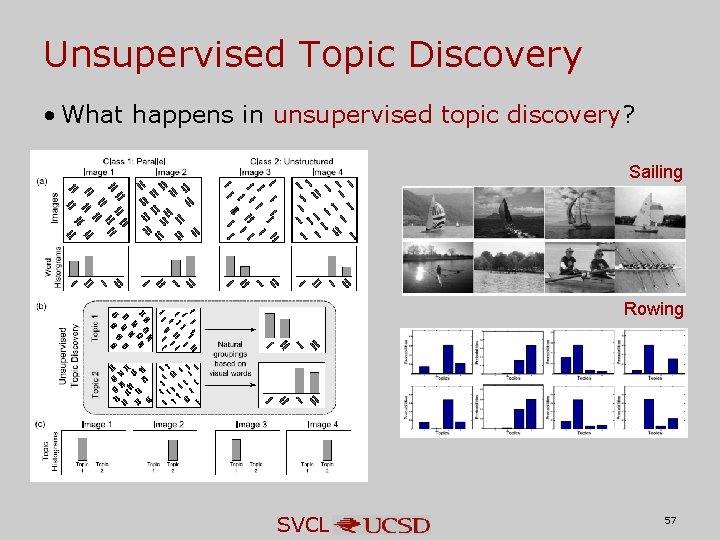

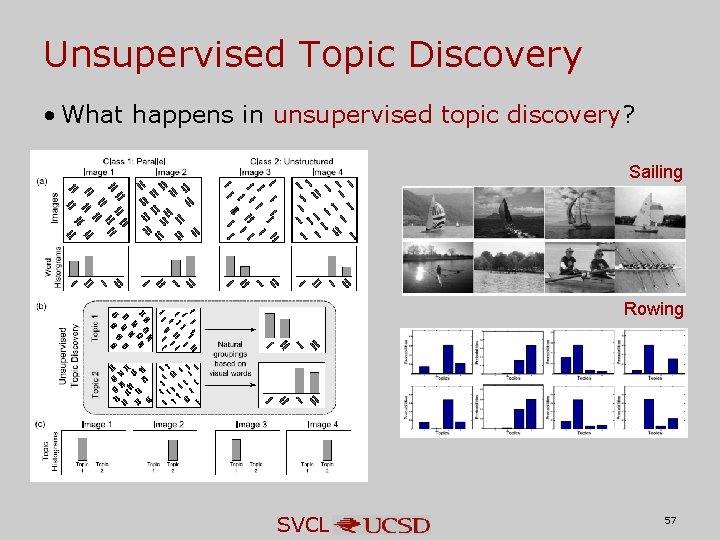

Unsupervised Topic Discovery
• What happens in unsupervised topic discovery?
• Figure: example classes Sailing and Rowing

Holistic Models and cLDA
• Figure labels: Training / Query Image; Bag of features (x); Visual concept models (Concept 1, Concept 2, ..., Concept L); Semantic Multinomial; Contextual Concept models; Contextual Multinomial; Contextual Space; Class Posterior

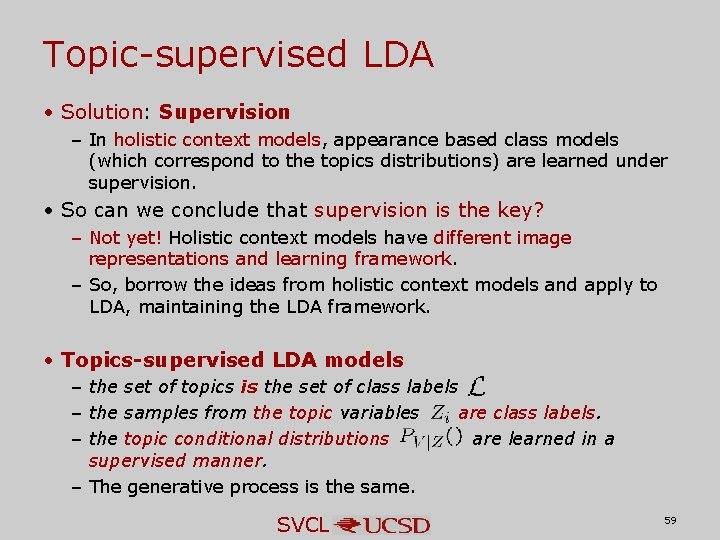

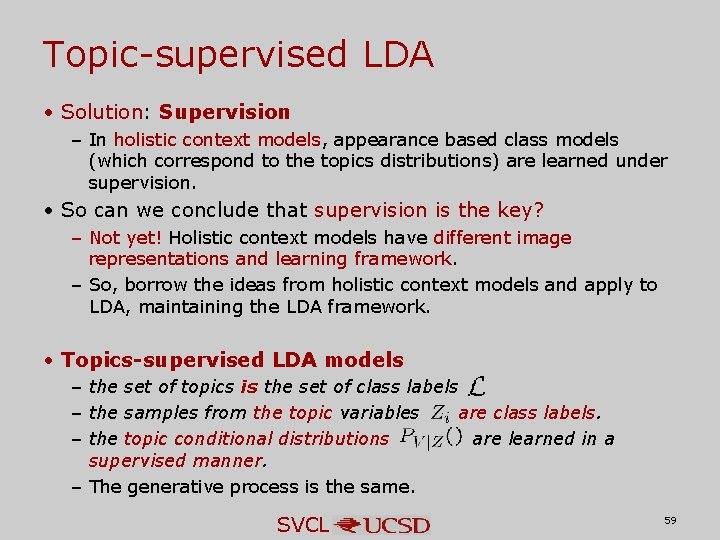

Topic-supervised LDA
• Solution: supervision
  – In holistic context models, the appearance-based class models (which correspond to the topic distributions) are learned under supervision.
• So can we conclude that supervision is the key?
  – Not yet! Holistic context models use a different image representation and learning framework.
  – So, borrow the ideas from holistic context models and apply them to LDA, maintaining the LDA framework.
• Topic-supervised LDA models
  – The set of topics is the set of class labels.
  – The samples from the topic variables are class labels.
  – The topic conditional distributions are learned in a supervised manner.
  – The generative process is the same.
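The supervised learning of topic conditional distributions can be illustrated with a small sketch. Since each topic is a class label, one simple estimate pools the visual words of all training images with that label and normalizes the counts. This is a minimal sketch under assumptions: the function name, the toy data, and the additive smoothing are hypothetical and are not the estimator used in the thesis.

```python
from collections import Counter

def learn_topic_distributions(images, labels, vocab_size, smoothing=1.0):
    """Estimate one visual-word distribution per class label (topic = class)."""
    counts = {}
    for words, label in zip(images, labels):
        # Pool the visual words of every image that carries this label.
        counts.setdefault(label, Counter()).update(words)
    topics = {}
    for label, c in counts.items():
        # Additive smoothing (an assumption) keeps unseen words at nonzero probability.
        total = sum(c.values()) + smoothing * vocab_size
        topics[label] = [(c[w] + smoothing) / total for w in range(vocab_size)]
    return topics

# Toy data: each image is a list of visual-word indices from a 5-word codebook.
images = [[0, 0, 1, 2], [0, 1, 1], [3, 4, 4], [2, 3, 4, 4]]
labels = ["sailing", "sailing", "rowing", "rowing"]
topics = learn_topic_distributions(images, labels, vocab_size=5)
print([round(p, 3) for p in topics["sailing"]])
print([round(p, 3) for p in topics["rowing"]])
```

Each topic is tied to one class by construction, so no topic discovery step is needed. The rest of the LDA generative process is left unchanged.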

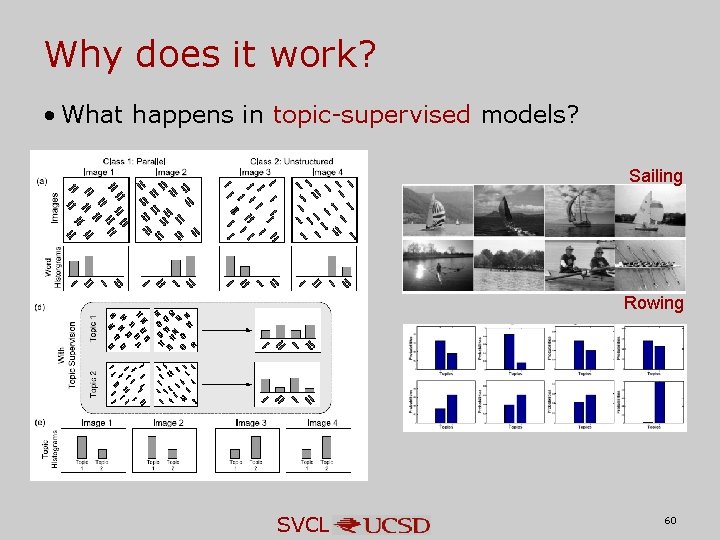

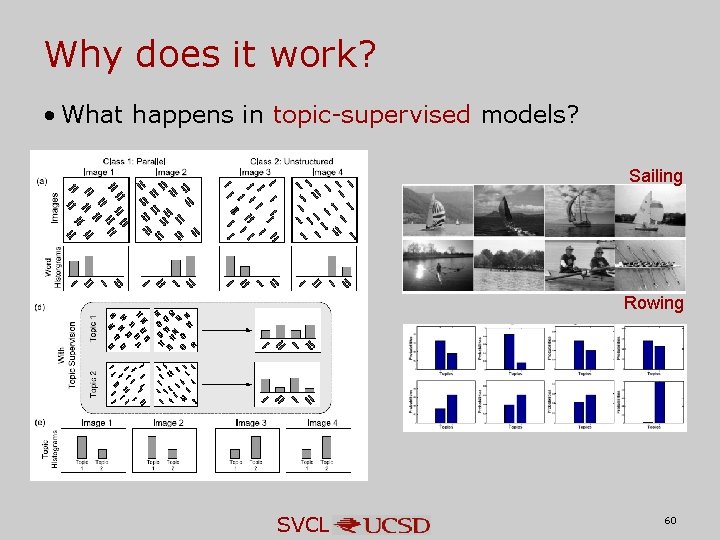

Why does it work?
• What happens in topic-supervised models?
• Figure: example classes Sailing and Rowing

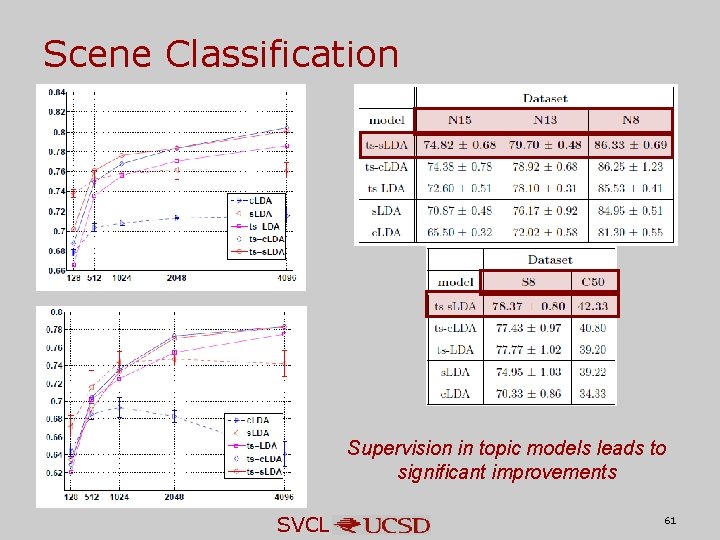

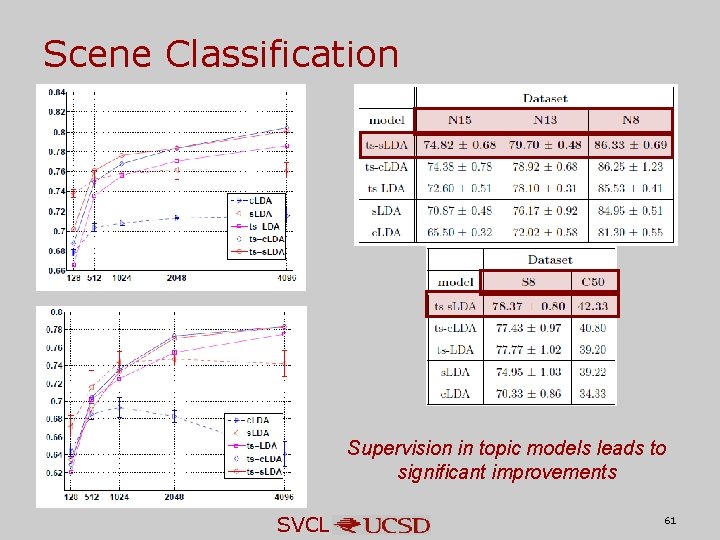

Scene Classification
• Supervision in topic models leads to significant improvements

In conclusion
• Low-level representation
  – Improving low-level classifiers is not the complete answer
  – Postpone hard decisions
  – Data processing theorem
• Semantic representation
  – Provides a higher level of abstraction
  – Bridges the semantic gap
  – Is a universal representation and bridges the ‘modality gap’
  – Accounts for contextual relationships between concepts
• Text and images are different
  – Techniques from text might not directly apply to images.
  – LDA and its variants, as proposed, are not successful for supervised visual recognition tasks.
• Importance of supervision
  – Supervision is the key to building high-performance recognition systems.

Acknowledgements
• Ph.D. advisor: Nuno Vasconcelos
• Doctoral Committee:
  – Prof. Serge J. Belongie, Kenneth Kreutz-Delgado, David Kriegman, Truong Nguyen
• Colleagues and Collaborators
  – Antoni Chan, Dashan Gao, Hamed Masnadi-Shirazi, Sunhyoung Han, Vijay Mahadevan, Jose Maria Costa Pereira, Mandar Dixit, Mohammad Saberian, Kritika Muralidharan and Weixin Li
  – Emanuele Coviello, Gabe Doyle, Gert Lanckriet, Roger Levy, Pedro Moreno
• Friends from San Diego, most of whom are no longer in San Diego
• My parents and my family

Questions? © Bill Watterson


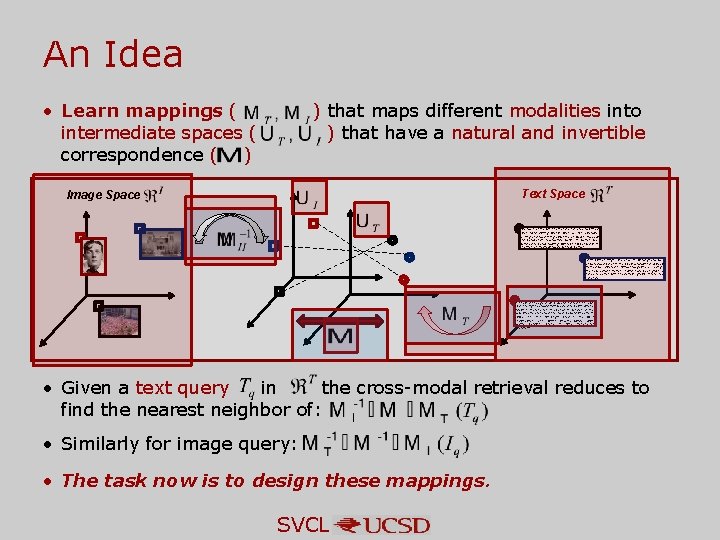

An Idea
• Learn mappings that map different modalities into intermediate spaces that have a natural and invertible correspondence
• Text Space (example documents)
  – Like most of the UK, the Manchester area mobilised extensively during World War II. For example, casting and machining expertise at Beyer, Peacock and Company's locomotive works in Gorton was switched to bomb making; Dunlop's rubber works in Chorlton-on-Medlock made barrage balloons;
  – Martin Luther King's presence in Birmingham was not welcomed by all in the black community. A black attorney was quoted in ''Time'' magazine as saying, "The new administration should have been given a chance to confer with the various groups interested in change. …
  – In 1920, at the age of 20, Coward starred in his own play, the light comedy ''I'll Leave It to You''. After a tryout in Manchester, it opened in London at the New Theatre (renamed the Noël Coward Theatre in 2006), his first full-length play in the West End. Thaxter, John. British Theatre Guide, 2009 Neville Cardus's praise in ''The Manchester Guardian''
• Image Space (example images)
• Given a text query, cross-modal retrieval reduces to finding the nearest neighbor of: [equation]
• Similarly for an image query: [equation]
• The task now is to design these mappings.
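The retrieval step can be sketched once some mappings are available. Everything in the code below is a placeholder chosen for illustration: `map_text`, `map_image` and `correspondence` are hypothetical stand-ins (the slide leaves their design open), the feature vectors are toy values, and performing the nearest neighbor search in the intermediate image space is an assumption.

```python
import math

# Hypothetical stand-ins for the mappings; designing the real ones is the open task.
def map_text(text_features):
    """Text space -> intermediate text space (placeholder: identity)."""
    return list(text_features)

def map_image(image_features):
    """Image space -> intermediate image space (placeholder: rescale to [0, 1])."""
    return [v / 255.0 for v in image_features]

def correspondence(text_point):
    """Intermediate text space -> intermediate image space (placeholder: identity)."""
    return list(text_point)

def retrieve_image(text_query, image_database):
    """Return the index of the image whose mapped point is closest to the mapped query."""
    target = correspondence(map_text(text_query))
    mapped = [map_image(img) for img in image_database]
    distances = [math.dist(target, m) for m in mapped]  # Euclidean distance
    return distances.index(min(distances))

image_database = [[255, 0], [0, 255]]  # toy image features
best = retrieve_image([0.9, 0.1], image_database)
print(best)
```

Retrieval from an image query would follow the same pattern in the opposite direction, using the inverse of the correspondence.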