
The Use of Hidden Markov Model in Natural Arabic Language Processing: A Survey
DIMA SULEIMAN, ARAFAT AWAJAN AND WAEL AL ETAIWI
The 8th International Conference on Emerging Ubiquitous Systems and Pervasive Networks (EUSPN 2017)

ABOUT THE PRESENTER
• Dima Suleiman
• Education:
  • Ph.D. Computer Science, Deep Learning, NLP (2015-)
  • MSc. Computer Science (2002-2004)
  • BSc. Computer Science (1998-2002)
• Contact:
  • Dima.suleiman@ju.edu.jo
  • Dimah_1999@yahoo.com
PSUT © 2017

AGENDA
Ø Introduction
Ø Hidden Markov Model
Ø The Chain Rule
Ø Arabic Language Features
Ø Contribution
Ø Applications
   Ø Morphological Analysis
   Ø Part of Speech Tagging (PoST)
   Ø Text Classification
Ø Conclusion

INTRODUCTION
• Natural Language Processing (NLP) applications that use a statistical approach have increased in recent years.
• One of the most important machine learning models used to process natural language is the Hidden Markov Model (HMM)*.
• A Markov model is a probabilistic model that can serve as a sequence classifier, for example for sequences of letters or words.
* Lussier E., Markov Models and Hidden Markov Models: A Brief Tutorial, International Computer Science Institute, 1998.

INTRODUCTION
• A Hidden Markov Model contains a set of states and transitions. The transition from one state to another is determined by a certain input.
• Each transition carries a value, or weight, given by a probability distribution.
• Therefore, if an input causes a transition from state x to state y, the overall weight of the path is multiplied by w, the transition probability between state x and state y. Equivalently, log w is added when working in log space.

INTRODUCTION
• HMMs are based on conditional probability.
• Conditional probability is the probability that an event X occurs given that an event Y has occurred. It is written P(X|Y) and, provided that P(Y) > 0, it is equal to P(X ∩ Y) / P(Y).
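The definition above can be sketched with relative frequencies. This is a minimal illustration that assumes a hypothetical list of (previous tag, current tag) observations. The data and the function name `conditional_probability` are illustrative and do not come from the survey.

```python
from fractions import Fraction

def conditional_probability(pairs, event, given):
    """Estimate P(X = event | Y = given) = P(X ∩ Y) / P(Y) from (y, x) observations."""
    total = len(pairs)
    # P(X ∩ Y): fraction of observations where Y = given and X = event
    joint = Fraction(sum(1 for y, x in pairs if y == given and x == event), total)
    # P(Y): fraction of observations where Y = given
    marginal = Fraction(sum(1 for y, _ in pairs if y == given), total)
    if marginal == 0:
        raise ValueError("P(Y) = 0, so P(X|Y) is undefined")
    return joint / marginal

# Hypothetical (previous tag, current tag) observations
pairs = [("Noun", "Verb"), ("Noun", "Noun"), ("Verb", "Adj"), ("Noun", "Verb")]
print(conditional_probability(pairs, "Verb", "Noun"))  # 2/3
```

Exact fractions are used here so that the ratio P(X ∩ Y) / P(Y) is easy to check by hand. In this toy data, 2 of the 4 pairs are (Noun, Verb) and 3 of the 4 start with Noun, so the result is (2/4) / (3/4) = 2/3.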

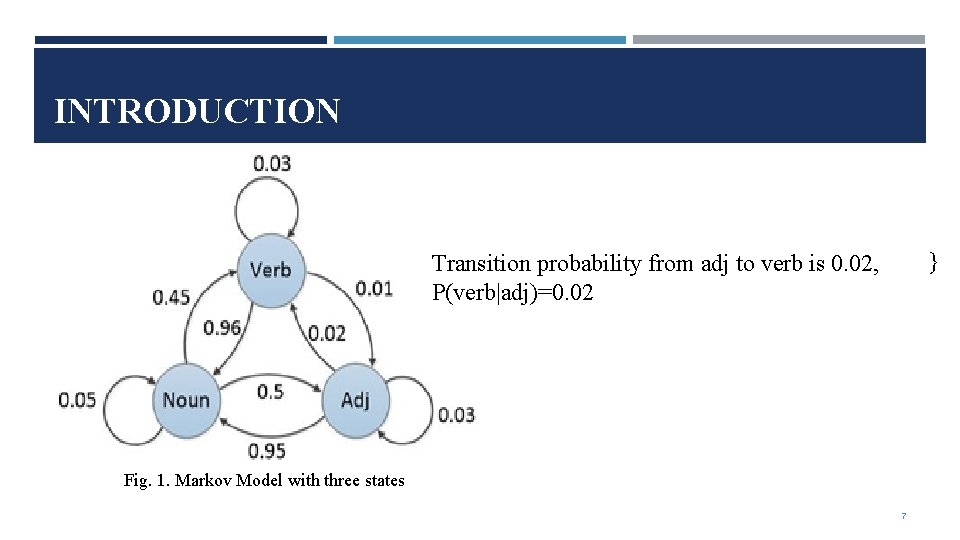

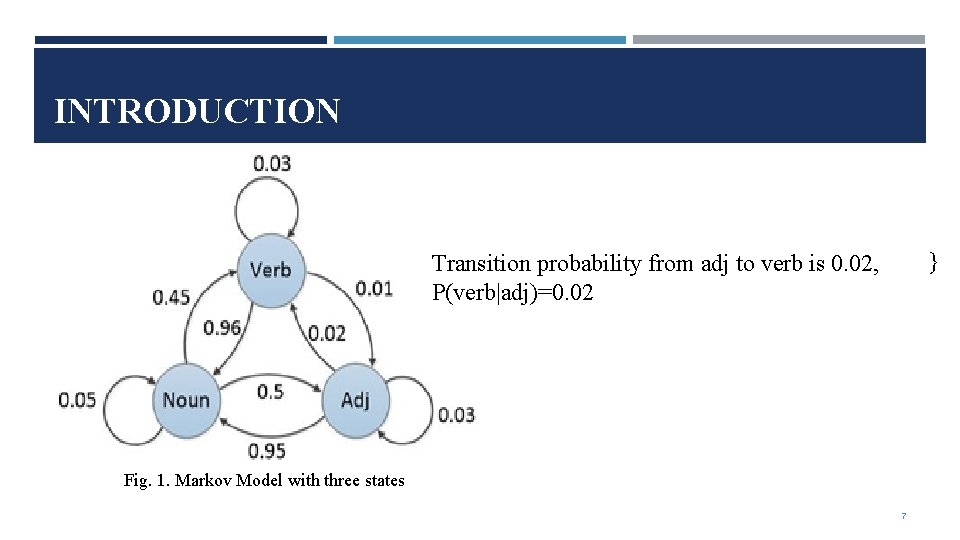

INTRODUCTION
• Assume that we have three states, {Noun, Verb, Adj}, as shown in Fig. 1.
• The transition probability from state verb to state adj is 0.01: P(adj|verb) = 0.01.
• The transition probability from state adj to state verb is 0.02: P(verb|adj) = 0.02.
Fig. 1. Markov Model with three states
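The transition weights in Fig. 1 can be combined along a path of states. This sketch assumes that only the two transitions stated on the slide are known. Every other transition is treated as probability 0, which is an assumption made purely for illustration. The function names are hypothetical. The log-space version shows that adding log weights gives the same result as multiplying the probabilities.

```python
import math

# Transition probabilities P(next | current) from Fig. 1.
# Only the two values given on the slide are included; any other transition
# is treated as probability 0 here (illustrative assumption).
TRANSITIONS = {
    ("Verb", "Adj"): 0.01,  # P(adj | verb)
    ("Adj", "Verb"): 0.02,  # P(verb | adj)
}

def path_probability(states):
    """Probability of following `states`, given the first state: product of transitions."""
    prob = 1.0
    for current, nxt in zip(states, states[1:]):
        prob *= TRANSITIONS.get((current, nxt), 0.0)
    return prob

def path_log_probability(states):
    """The same quantity in log space: log weights are added instead of multiplied."""
    total = 0.0
    for current, nxt in zip(states, states[1:]):
        p = TRANSITIONS.get((current, nxt), 0.0)
        if p == 0.0:
            return float("-inf")
        total += math.log(p)
    return total

print(path_probability(["Verb", "Adj", "Verb"]))  # 0.01 * 0.02, approximately 0.0002
```

Working in log space is a common design choice for long sequences, because the product of many small probabilities quickly underflows to 0.0 in floating point.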

INTRODUCTION
• Chain rule: a chain is a sequence of words or letters, such as $w_1 w_2 w_3 \ldots w_n$.
• The probability of the sequence $w_1 w_2 w_3 \ldots w_n$ is given by the chain rule of probability:
$$P(w_1, w_2, \ldots, w_n) = P(w_1)\, P(w_2 \mid w_1)\, P(w_3 \mid w_1, w_2) \cdots P(w_n \mid w_1, w_2, \ldots, w_{n-1})$$
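To see the chain rule at work, this sketch estimates each factor by relative frequency from a hypothetical four-sentence corpus. Each factor is P(w_k | w_1 … w_{k-1}) = c(w_1 … w_k) / c(w_1 … w_{k-1}). The product of the factors therefore telescopes to the relative frequency of the whole sentence. The corpus and the names are illustrative only.

```python
from collections import Counter
from fractions import Fraction

# Hypothetical toy corpus of tokenized sentences (not from the survey)
CORPUS = [
    ("the", "boy", "reads"),
    ("the", "boy", "writes"),
    ("the", "girl", "reads"),
    ("a", "boy", "reads"),
]

# Count every sentence prefix, including the empty prefix (= number of sentences)
PREFIX_COUNTS = Counter(s[:k] for s in CORPUS for k in range(len(s) + 1))

def chain_rule_probability(sentence):
    """P(w_1..w_n) = P(w_1) * P(w_2 | w_1) * ... * P(w_n | w_1..w_{n-1})."""
    prob = Fraction(1)
    for k in range(1, len(sentence) + 1):
        history = tuple(sentence[: k - 1])
        if PREFIX_COUNTS[history] == 0:
            return Fraction(0)  # unseen history: the sentence never occurs
        prob *= Fraction(PREFIX_COUNTS[tuple(sentence[:k])], PREFIX_COUNTS[history])
    return prob

print(chain_rule_probability(("the", "boy", "reads")))  # 1/4
```

For "the boy reads" the factors are P(the) = 3/4, P(boy | the) = 2/3 and P(reads | the, boy) = 1/2. Their product is 1/4, which is exactly 1 of the 4 sentences. The long histories in the last factors are what the Markov approximation replaces.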

INTRODUCTION
• Under the Markov assumption used by HMMs, the probability of a word is computed without considering all the previous words from the beginning of the sentence. Only a fixed number of preceding words is used.
• Bigram (first-order Markov model): the probability of word N depends only on word N-1*.
• Trigram (second-order Markov model): the probability of word N depends only on words N-1 and N-2**.
* Jurafsky D., Martin J. H., Speech and Language Processing, draft of November 7, 2016.
** Al-Anzi F., AbuZeina D., A Survey of Markov Chain Models in Linguistics Applications, DOI: 10.5121/csit.2016.61305.
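The bigram and trigram approximations can be sketched with maximum-likelihood counts. This example assumes a hypothetical three-sentence corpus in which <s> and </s> mark the sentence boundaries. The names `bigram_prob`, `trigram_prob` and `sentence_prob_bigram` are illustrative.

```python
from collections import Counter

# Hypothetical toy corpus; <s> and </s> mark sentence boundaries
SENTENCES = [
    ["<s>", "the", "boy", "reads", "</s>"],
    ["<s>", "the", "boy", "writes", "</s>"],
    ["<s>", "the", "girl", "reads", "</s>"],
]

def ngram_counts(sentences, n):
    """Count all n-grams (as tuples) in the corpus."""
    return Counter(tuple(s[i:i + n]) for s in sentences for i in range(len(s) - n + 1))

UNIGRAMS = ngram_counts(SENTENCES, 1)
BIGRAMS = ngram_counts(SENTENCES, 2)
TRIGRAMS = ngram_counts(SENTENCES, 3)

def bigram_prob(prev, word):
    """First order: P(w_N | w_{N-1}) = c(w_{N-1} w_N) / c(w_{N-1})."""
    history = UNIGRAMS[(prev,)]
    return BIGRAMS[(prev, word)] / history if history else 0.0

def trigram_prob(prev2, prev1, word):
    """Second order: P(w_N | w_{N-2} w_{N-1}) = c(w_{N-2} w_{N-1} w_N) / c(w_{N-2} w_{N-1})."""
    history = BIGRAMS[(prev2, prev1)]
    return TRIGRAMS[(prev2, prev1, word)] / history if history else 0.0

def sentence_prob_bigram(sentence):
    """Approximate the chain rule by conditioning each word on its predecessor only."""
    prob = 1.0
    for prev, word in zip(sentence, sentence[1:]):
        prob *= bigram_prob(prev, word)
    return prob

print(bigram_prob("boy", "reads"))          # 0.5
print(trigram_prob("the", "boy", "reads"))  # 0.5
```

Unseen n-grams receive probability 0 in this sketch. Practical systems add smoothing, which is left out here to keep the counts visible.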

INTRODUCTION
ARABIC LANGUAGE FEATURES:
• Arabic is a morphologically rich language. Its morphology can be divided into concatenative and templatic.
• Concatenative morphology combines a stem with affixes and clitics.
• There are three types of affixes: prefixes, circumfixes, and suffixes.
  • Prefixes: zero to four characters that may precede the stem.
  • Suffixes: zero to three characters that may follow the stem.
  • Circumfixes: combine a prefix and a suffix.

INTRODUCTION
ARABIC LANGUAGE FEATURES:
• There are two types of clitics: proclitics and enclitics.
  • Proclitics occur at the beginning of the word.
  • Enclitics occur at the end of the word.
• The general structure of concatenative morphemes is as follows, where square brackets [] mark optional morphemes:
  [Proclitic(s) + [Prefix(es)]] + stem + [Suffix(es) + [Enclitic]]
• For example, the morphemes of the word "وسيكتبونها", which means "and they will write it", read in transliteration as "wa-saya-ktub-uwna-hA"*.
*Habash, N., Soudi, A., and Buckwalter, T. 2007. On Arabic transliteration. In Arabic Computational Morphology. Springer. 15–22.
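The template [Proclitic(s) + [Prefix(es)]] + stem + [Suffix(es) + [Enclitic]] can be illustrated with a greedy stripper that works on transliterated text. This is a toy sketch under strong assumptions. The affix inventories are hypothetical and deliberately tiny, and a real morphological analyzer would need full lists and a lexicon check to choose between competing splits. Applied to "wasayaktubuwnahA", it separates "saya" further into the future marker "sa" and the imperfect prefix "ya".

```python
# Hypothetical, deliberately tiny affix inventories (Buckwalter-style
# transliteration); real analyzers need complete lists and lexicon checks.
PROCLITICS = ("wa", "fa", "sa")   # conjunctions, future marker
PREFIXES = ("ya", "ta", "na")     # imperfect verb prefixes
SUFFIXES = ("uwna", "Ani", "uw")  # verb endings
ENCLITICS = ("hA", "hum", "ka")   # object pronouns
MIN_STEM = 2                      # never strip the remainder below this length

def strip_front(word, options):
    """Remove the first matching affix from the start of `word`, if any."""
    for opt in options:
        if word.startswith(opt) and len(word) - len(opt) >= MIN_STEM:
            return opt, word[len(opt):]
    return None, word

def strip_back(word, options):
    """Remove the first matching affix from the end of `word`, if any."""
    for opt in options:
        if word.endswith(opt) and len(word) - len(opt) >= MIN_STEM:
            return opt, word[: -len(opt)]
    return None, word

def segment(word):
    """Greedy split into [Proclitic(s) + [Prefix]] + stem + [Suffix + [Enclitic]]."""
    proclitics = []
    while True:  # several proclitics may be stacked
        clitic, rest = strip_front(word, PROCLITICS)
        if clitic is None:
            break
        proclitics.append(clitic)
        word = rest
    prefix, word = strip_front(word, PREFIXES)
    enclitic, word = strip_back(word, ENCLITICS)  # outermost layer at the end first
    suffix, word = strip_back(word, SUFFIXES)
    return {"proclitics": proclitics, "prefix": prefix, "stem": word,
            "suffix": suffix, "enclitic": enclitic}

print(segment("wasayaktubuwnahA"))
```

Greedy stripping like this over-segments easily. For example, a stem that happens to begin with "sa" loses those letters. This is one reason statistical models such as HMMs are used to choose among the possible segmentations.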

INTRODUCTION
ARABIC LANGUAGE FEATURES:
• Most Arabic words are generated using templatic morphology, also called the root-and-pattern (derivational) scheme.
• A word is derived by applying a morphological pattern to a root or stem. The morphological patterns (balances) are already listed and predefined.
• Accordingly, tens of words (surface forms) can be generated from one root. Three-letter roots are the most common root length, and there are around 5,000 of them*.
*Beesley, R. 1996. Arabic finite-state morphological analysis and generation. In Proceedings of the 16th International Conference on Computational Linguistics (COLING'96). 89–94.
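Root-and-pattern derivation can be sketched as slot filling. This sketch assumes a simplified notation in which the digits 1, 2 and 3 in a pattern stand for the first, second and third consonants of a triliteral root. The notation and the function name `apply_pattern` are illustrative, not a standard API. The example uses the root k-t-b ("writing") in Buckwalter-style transliteration.

```python
def apply_pattern(root, pattern):
    """Fill the slots 1, 2, 3 of a pattern with the consonants of a triliteral root."""
    if len(root) != 3:
        raise ValueError("this sketch only handles three-letter roots")
    return "".join(root[int(ch) - 1] if ch in "123" else ch for ch in pattern)

# One root, several patterns (simplified transliteration)
for pattern, gloss in [("1a2a3a", "he wrote"), ("1A2i3", "writer"),
                       ("ma12uw3", "written"), ("ma12a3", "office")]:
    print(apply_pattern("ktb", pattern), "-", gloss)
# kataba - he wrote
# kAtib - writer
# maktuwb - written
# maktab - office
```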

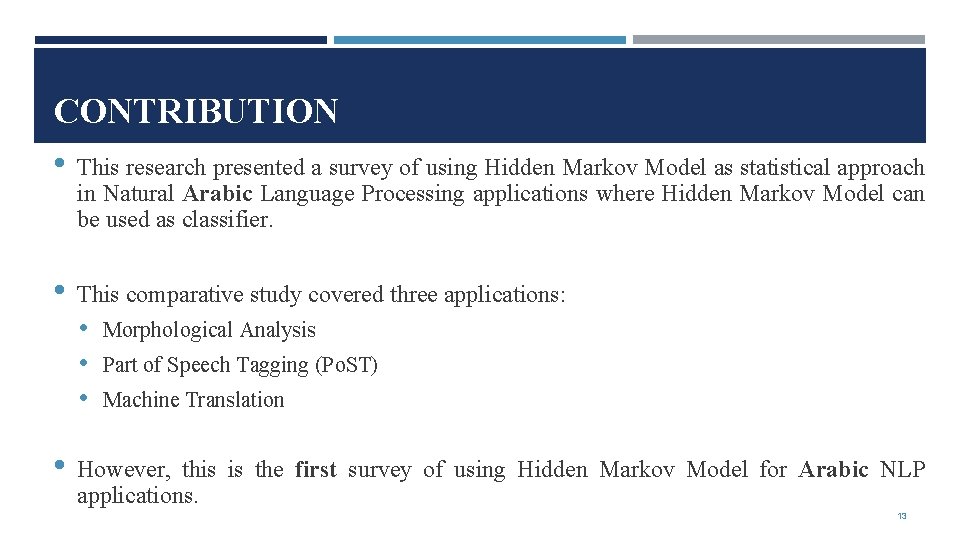

CONTRIBUTION
• This research presents a survey of the use of the Hidden Markov Model as a statistical approach in Arabic Natural Language Processing applications, where the HMM can be used as a classifier.
• This comparative study covers three applications:
  • Morphological Analysis
  • Part of Speech Tagging (PoST)
  • Text Classification
• This is the first survey of the use of Hidden Markov Models in Arabic NLP applications.

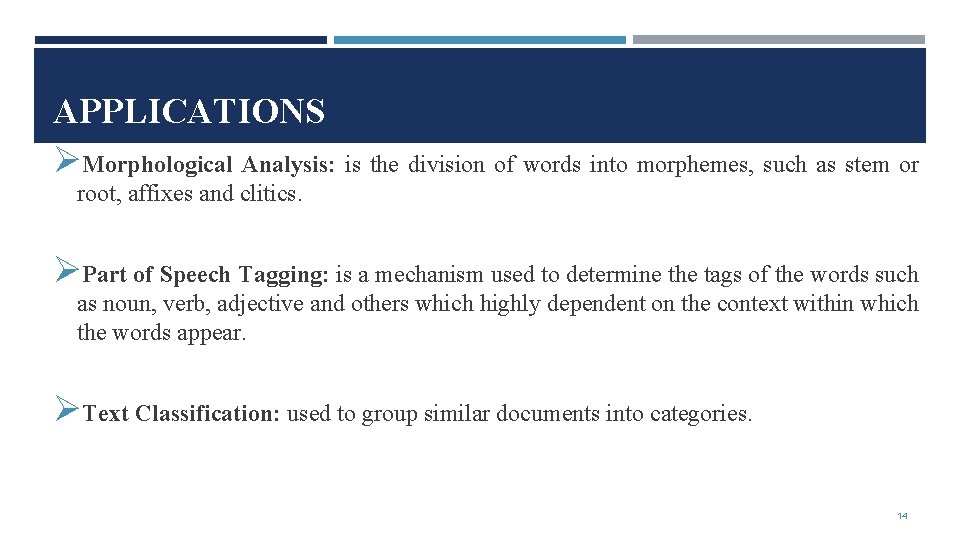

APPLICATIONS
Ø Morphological Analysis: the division of words into morphemes, such as the stem or root, affixes, and clitics.
Ø Part of Speech Tagging: a mechanism for assigning each word a tag such as noun, verb, or adjective. The correct tag depends heavily on the context in which the word appears.
Ø Text Classification: assigning documents to categories so that similar documents are grouped together.
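For part of speech tagging, an HMM treats the tags as hidden states and the words as observations. The most probable tag sequence is usually found with the Viterbi algorithm, which Table 3 lists for Albared et al., 2010. This sketch assumes a first-order (bigram) HMM with three tags and hand-picked, hypothetical probabilities over three transliterated words. It is not the model of any surveyed paper.

```python
import math

STATES = ("Noun", "Verb", "Adj")
# Hypothetical HMM parameters (each distribution sums to 1)
START = {"Noun": 0.4, "Verb": 0.5, "Adj": 0.1}
TRANS = {  # P(next tag | current tag)
    "Noun": {"Noun": 0.3, "Verb": 0.2, "Adj": 0.5},
    "Verb": {"Noun": 0.7, "Verb": 0.1, "Adj": 0.2},
    "Adj": {"Noun": 0.4, "Verb": 0.3, "Adj": 0.3},
}
EMIT = {  # P(word | tag)
    "Noun": {"kataba": 0.05, "Alwaladu": 0.6, "Alkabiyru": 0.35},
    "Verb": {"kataba": 0.9, "Alwaladu": 0.05, "Alkabiyru": 0.05},
    "Adj": {"kataba": 0.05, "Alwaladu": 0.15, "Alkabiyru": 0.8},
}

def viterbi(words, states, start, trans, emit):
    """Return the most probable tag sequence for `words` under a first-order HMM."""
    def log(p):
        return math.log(p) if p > 0 else float("-inf")

    # best[t][s]: log-probability of the best path that ends in tag s at position t
    best = [{s: log(start[s]) + log(emit[s].get(words[0], 0.0)) for s in states}]
    back = [{}]
    for t in range(1, len(words)):
        best.append({})
        back.append({})
        for s in states:
            prev, score = max(
                ((r, best[t - 1][r] + log(trans[r][s])) for r in states),
                key=lambda item: item[1],
            )
            best[t][s] = score + log(emit[s].get(words[t], 0.0))
            back[t][s] = prev  # remember the best predecessor of s
    # Trace back from the best final tag
    path = [max(states, key=lambda s: best[-1][s])]
    for t in range(len(words) - 1, 0, -1):
        path.append(back[t][path[-1]])
    return list(reversed(path))

# "kataba Alwaladu Alkabiyru" = "the big boy wrote"
print(viterbi(["kataba", "Alwaladu", "Alkabiyru"], STATES, START, TRANS, EMIT))
# ['Verb', 'Noun', 'Adj']
```

In a real tagger, START, TRANS and EMIT would be estimated from a tagged corpus, using bigram counts for TRANS or trigram counts for a second-order model.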

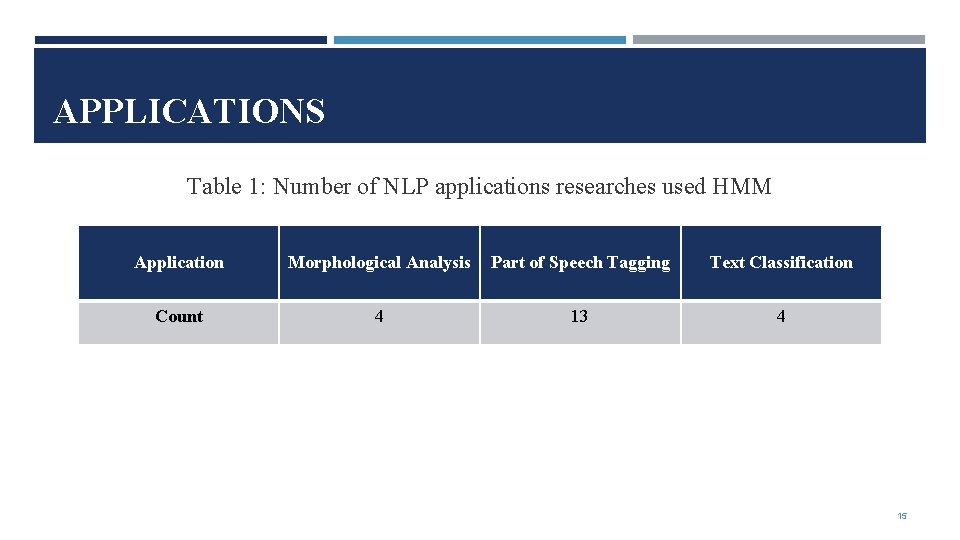

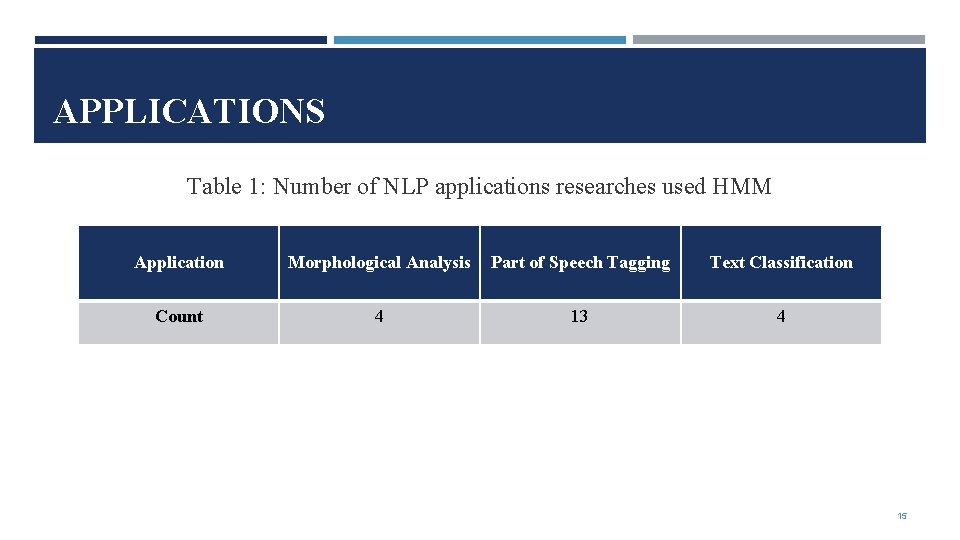

APPLICATIONS
Table 1: Number of studies using HMM per NLP application

| Application | Count |
|---|---|
| Morphological Analysis | 4 |
| Part of Speech Tagging | 13 |
| Text Classification | 4 |

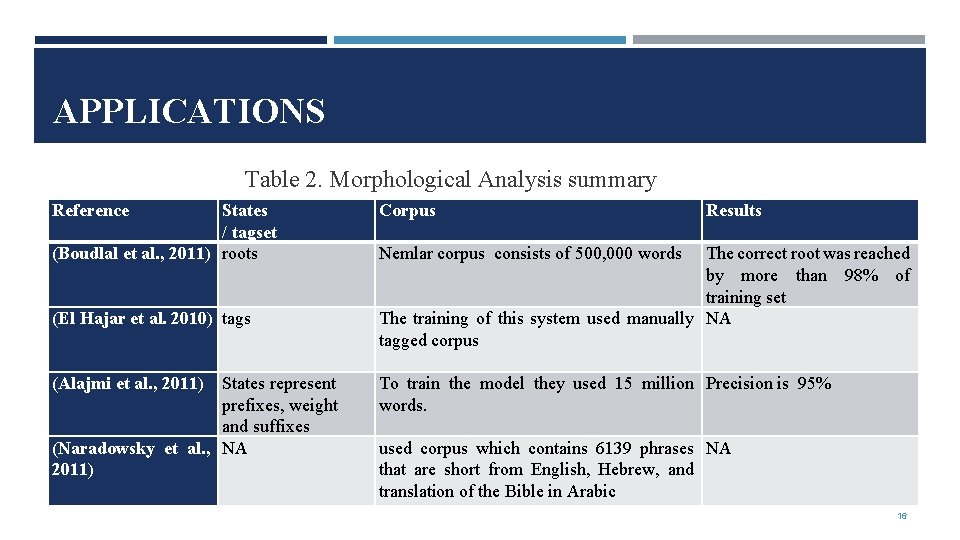

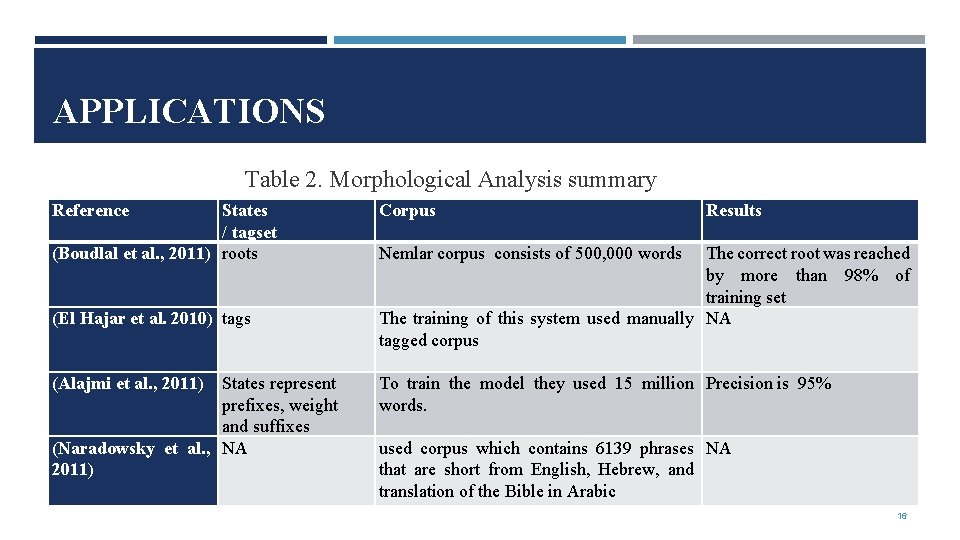

APPLICATIONS
Table 2. Morphological Analysis summary

| Reference | States / tagset | Corpus | Results |
|---|---|---|---|
| (Boudlal et al., 2011) | Roots | Nemlar corpus of 500,000 words | The correct root was reached for more than 98% of the training set |
| (El Hajar et al., 2010) | Tags | Manually tagged corpus used for training | NA |
| (Alajmi et al., 2011) | States represent prefixes, weights (patterns) and suffixes | 15 million words used to train the model | Precision 95% |
| (Naradowsky et al., 2011) | NA | Corpus of 6,139 short phrases from English, Hebrew, and the Arabic translation of the Bible | NA |

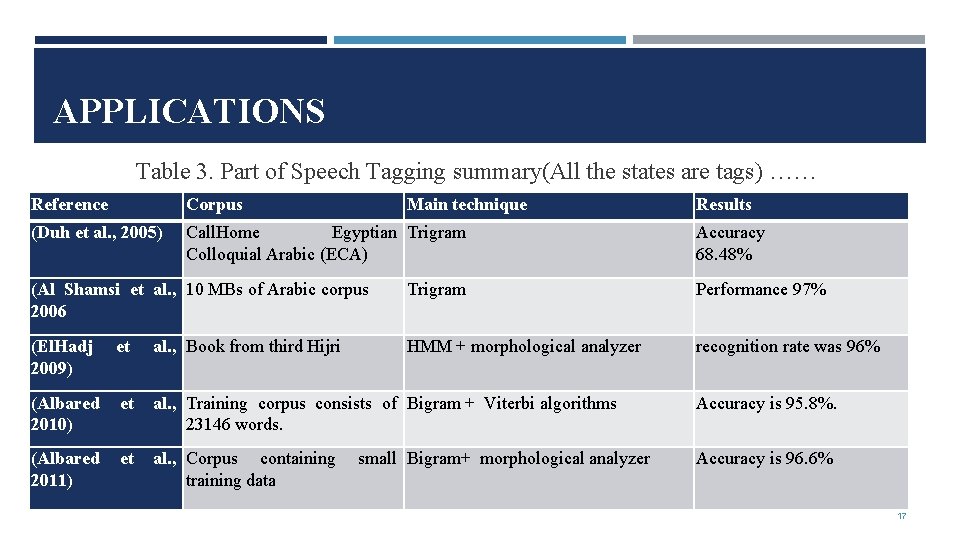

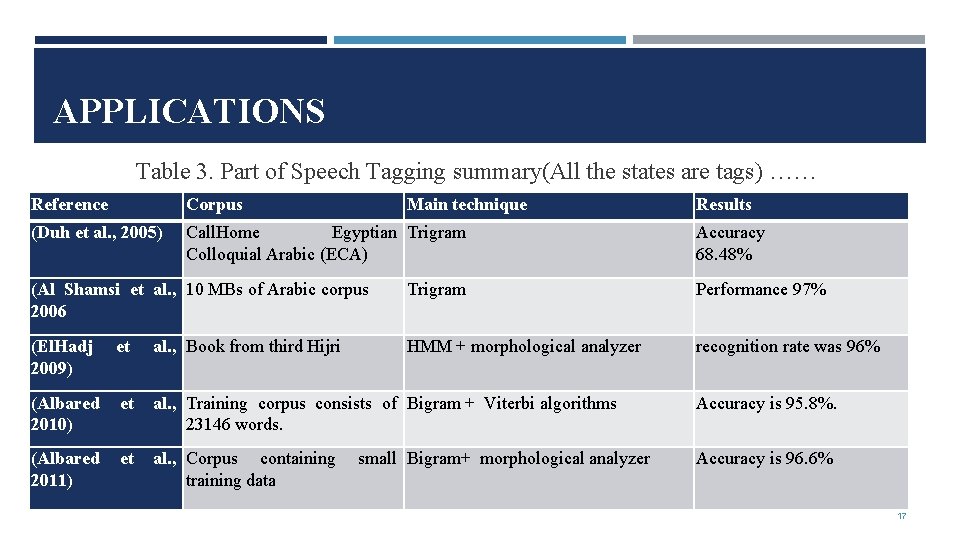

APPLICATIONS
Table 3. Part of Speech Tagging summary (all the states are tags)

| Reference | Corpus | Main technique | Results |
|---|---|---|---|
| (Duh et al., 2005) | CallHome Egyptian Colloquial Arabic (ECA) | Trigram | Accuracy 68.48% |
| (Al Shamsi et al., 2006) | 10 MB Arabic corpus | Trigram | Performance 97% |
| (El Hadj et al., 2009) | Book from the third Hijri century | HMM + morphological analyzer | Recognition rate 96% |
| (Albared et al., 2010) | Training corpus of 23,146 words | Bigram + Viterbi algorithm | Accuracy 95.8% |
| (Albared et al., 2011) | Small corpus containing training data | Bigram + morphological analyzer | Accuracy 96.6% |

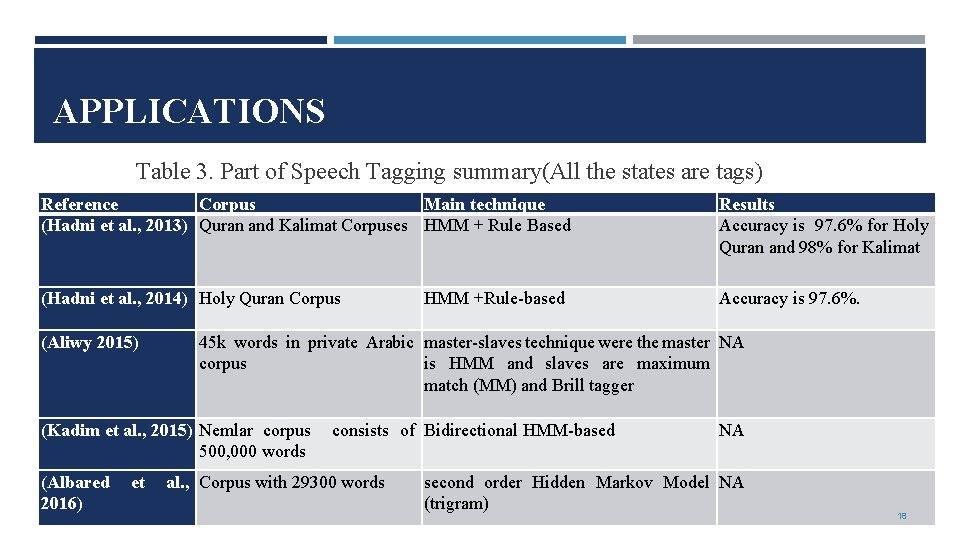

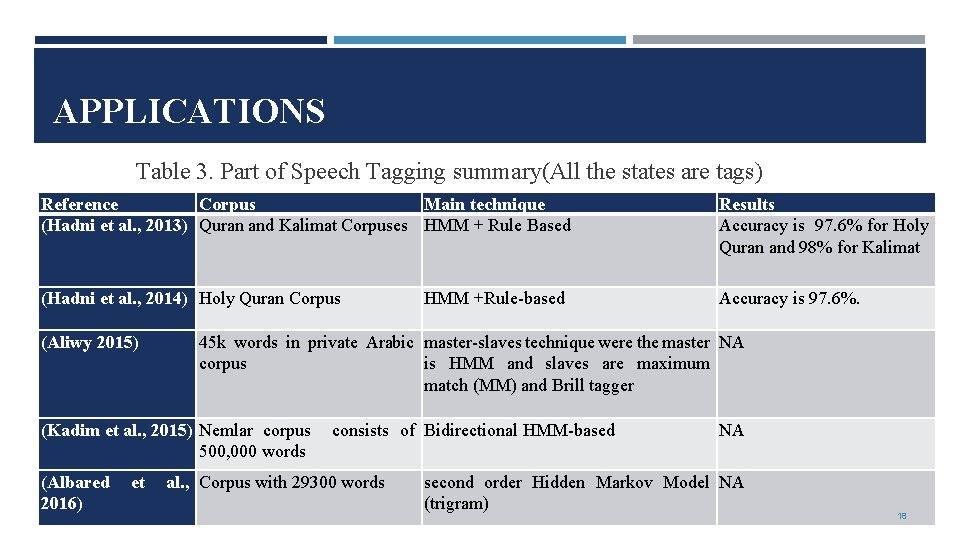

APPLICATIONS
Table 3 (continued). Part of Speech Tagging summary (all the states are tags)

| Reference | Corpus | Main technique | Results |
|---|---|---|---|
| (Hadni et al., 2013) | Holy Quran and Kalimat corpora | HMM + rule-based | Accuracy 97.6% for the Holy Quran and 98% for Kalimat |
| (Hadni et al., 2014) | Holy Quran corpus | | Accuracy 97.6% |
| (Aliwy, 2015) | 45k words in a private Arabic corpus | Master-slaves technique, where the master is an HMM and the slaves are maximum match (MM) and the Brill tagger | NA |
| (Kadim et al., 2015) | Nemlar corpus of 500,000 words | HMM + rule-based | NA |
| (Albared et al., 2016) | Corpus of 29,300 words | Bidirectional HMM-based second-order Hidden Markov Model (trigram) | NA |

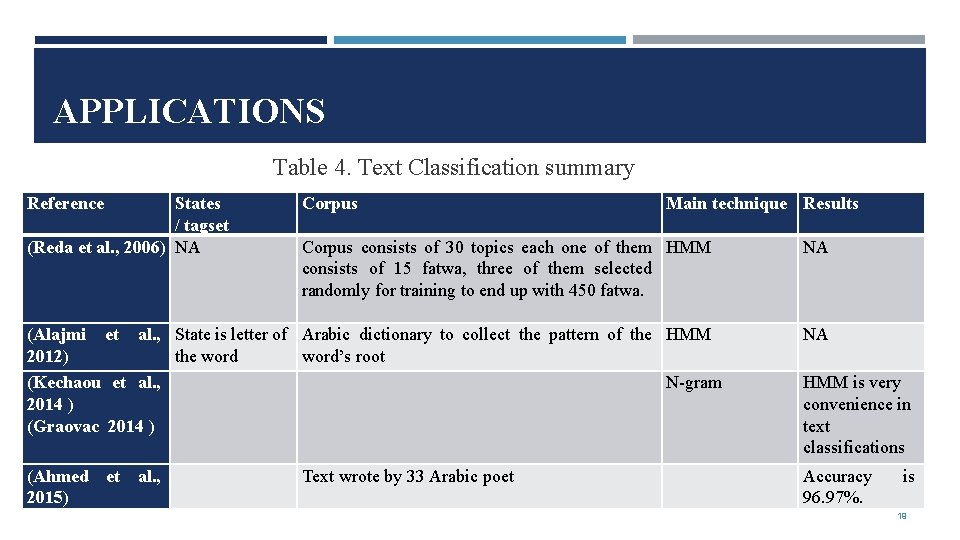

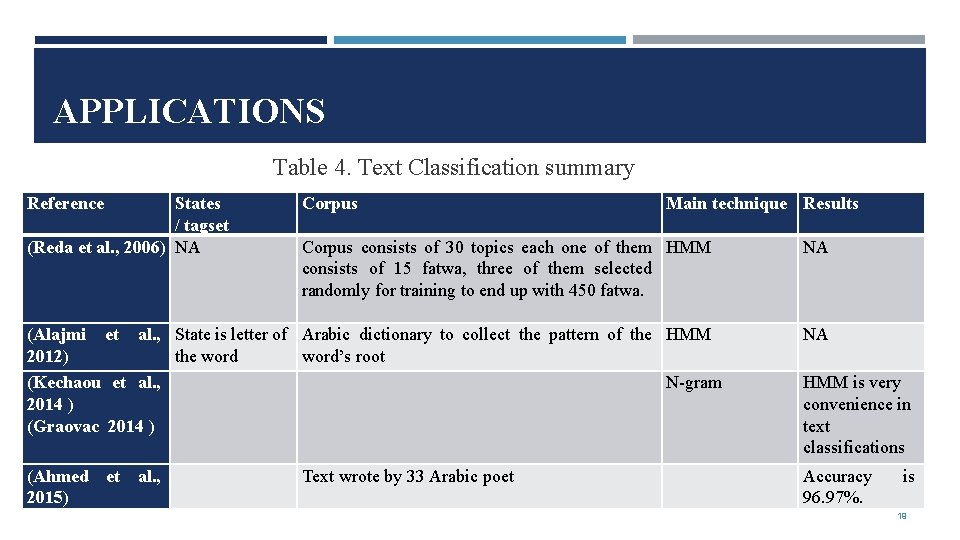

APPLICATIONS
Table 4. Text Classification summary

| Reference | States / tagset | Corpus | Main technique | Results |
|---|---|---|---|---|
| (Reda et al., 2006) | NA | 30 topics of 15 fatwas each (450 fatwas in total); three fatwas per topic were selected randomly for training | HMM | NA |
| (Alajmi et al., 2012) | Each state is a letter of the word's root | Arabic dictionary used to collect the patterns | HMM | HMM is very convenient for text classification |
| (Kechaou et al., 2014) | | | N-gram | |
| (Graovac, 2014) | NA | | | |
| (Ahmed et al., 2015) | | Text written by 33 Arabic poets | | Accuracy 96.97% |
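One common way to use HMMs for text classification is to train one HMM per category and assign a document to the category whose model gives it the highest likelihood. That likelihood is computed with the forward algorithm. This is a sketch of that general idea only, and the surveyed systems may work differently. The two categories, the two-state models and all the probabilities are hypothetical.

```python
def forward_likelihood(symbols, model):
    """P(observations | model), summed over all hidden-state paths (forward algorithm)."""
    states, start, trans, emit = model["states"], model["start"], model["trans"], model["emit"]
    alpha = {s: start[s] * emit[s].get(symbols[0], 0.0) for s in states}
    for sym in symbols[1:]:
        # alpha[s]: probability of the prefix seen so far, ending in state s
        alpha = {
            s: sum(alpha[r] * trans[r][s] for r in states) * emit[s].get(sym, 0.0)
            for s in states
        }
    return sum(alpha.values())

def classify(symbols, models):
    """Pick the category whose HMM assigns the observation sequence the highest likelihood."""
    return max(models, key=lambda label: forward_likelihood(symbols, models[label]))

def make_model(emit):
    """Hypothetical two-state HMM sharing start/transition parameters; only emissions differ."""
    return {"states": ("S1", "S2"),
            "start": {"S1": 0.5, "S2": 0.5},
            "trans": {"S1": {"S1": 0.7, "S2": 0.3}, "S2": {"S1": 0.3, "S2": 0.7}},
            "emit": emit}

MODELS = {
    "sports": make_model({"S1": {"match": 0.5, "goal": 0.4, "price": 0.1},
                          "S2": {"match": 0.3, "goal": 0.5, "market": 0.2}}),
    "economy": make_model({"S1": {"market": 0.5, "price": 0.4, "goal": 0.1},
                           "S2": {"market": 0.3, "price": 0.5, "match": 0.2}}),
}

print(classify(["match", "goal", "goal"], MODELS))  # sports
print(classify(["market", "price"], MODELS))        # economy
```

For real documents, the forward probabilities should be scaled or computed in log space to avoid floating-point underflow.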

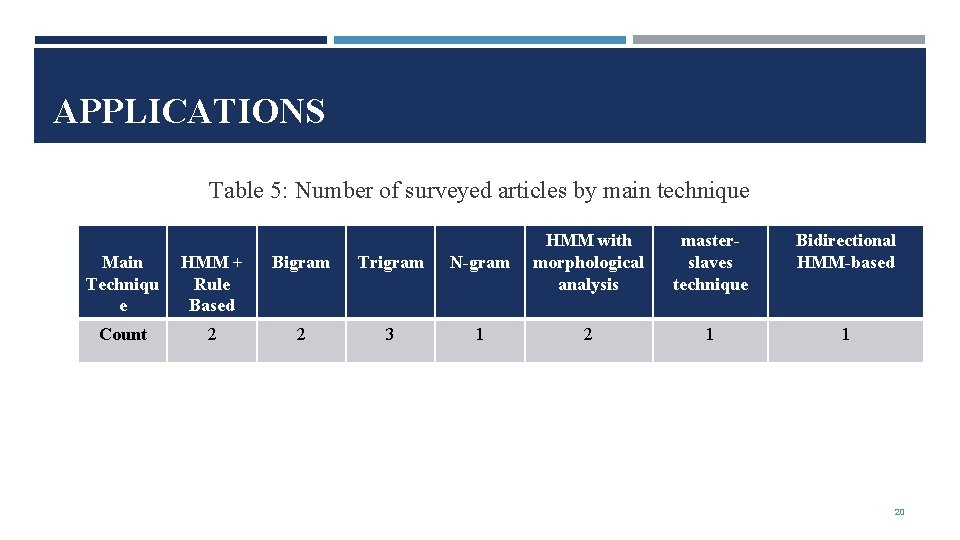

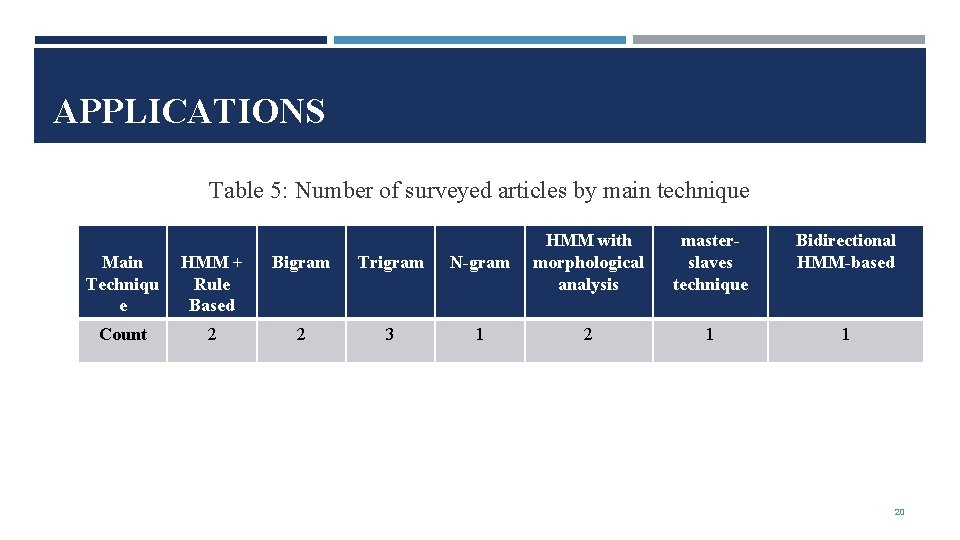

APPLICATIONS
Table 5: Number of surveyed articles by main technique

| Main technique | Count |
|---|---|
| HMM + Rule Based | 2 |
| Bigram | 2 |
| Trigram | 3 |
| N-gram | 1 |
| HMM with morphological analysis | 2 |
| Master-slaves technique | 1 |
| Bidirectional HMM-based | 1 |

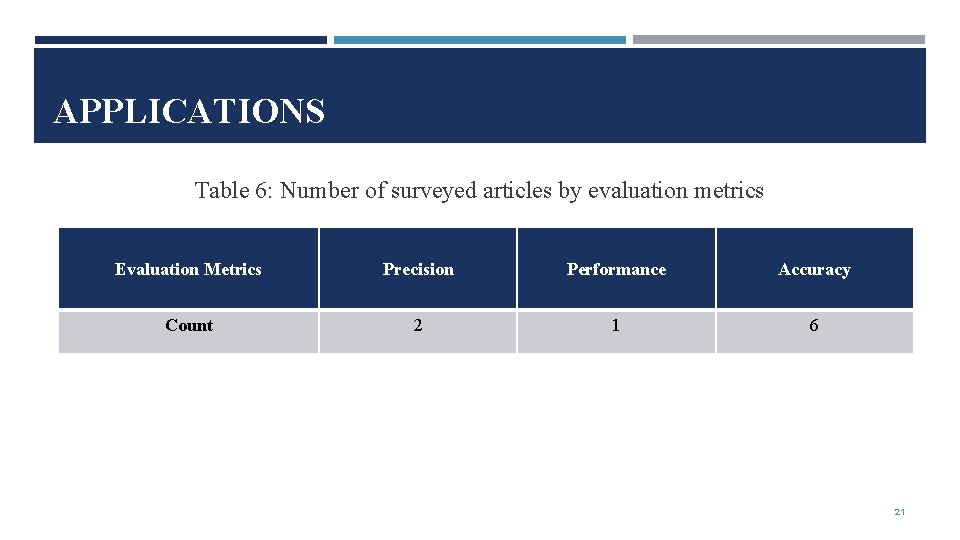

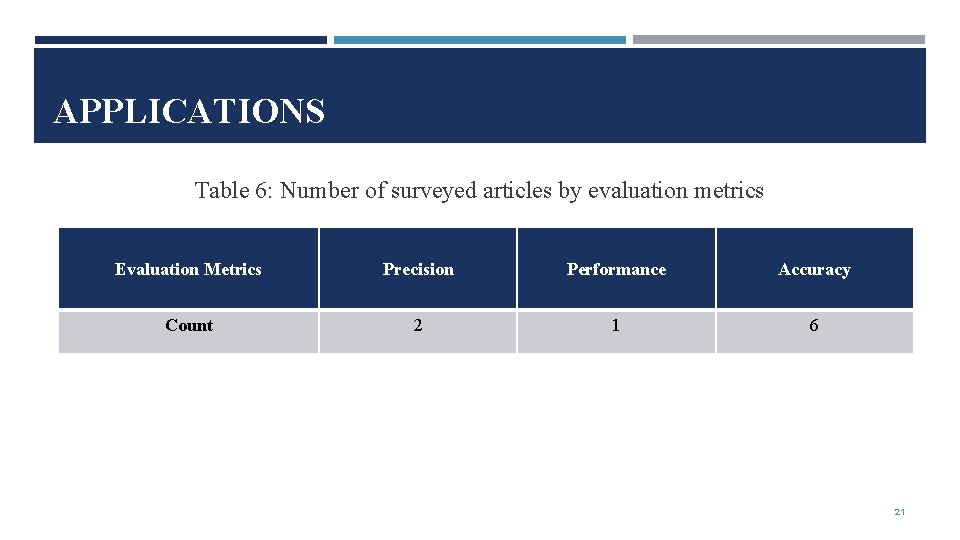

APPLICATIONS
Table 6: Number of surveyed articles by evaluation metric

| Evaluation metric | Count |
|---|---|
| Precision | 2 |
| Performance | 1 |
| Accuracy | 6 |
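The two most frequent metrics in Table 6 can be sketched for a tagging task. Accuracy is the fraction of tokens tagged correctly. Precision for a given tag is the fraction of tokens predicted with that tag that really carry it. This sketch assumes token-level evaluation and hypothetical gold and predicted tag sequences. Returning 0.0 when a tag is never predicted is a convention chosen here, not one taken from the survey.

```python
def accuracy(gold, predicted):
    """Fraction of tokens whose predicted tag equals the gold tag."""
    if len(gold) != len(predicted) or not gold:
        raise ValueError("need two non-empty sequences of equal length")
    return sum(g == p for g, p in zip(gold, predicted)) / len(gold)

def precision(gold, predicted, tag):
    """Of the tokens predicted as `tag`, the fraction whose gold tag is `tag`."""
    predicted_as_tag = [g for g, p in zip(gold, predicted) if p == tag]
    if not predicted_as_tag:
        return 0.0  # convention for a tag that was never predicted (assumption)
    return sum(g == tag for g in predicted_as_tag) / len(predicted_as_tag)

# Hypothetical gold and predicted tags for a four-word sentence
gold = ["Verb", "Noun", "Adj", "Noun"]
predicted = ["Verb", "Noun", "Noun", "Noun"]
print(accuracy(gold, predicted))           # 0.75
print(precision(gold, predicted, "Noun"))  # 2 of 3 "Noun" predictions are correct
```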

CONCLUSION
• In this research, a comparative study was made of different applications that use HMM to process Arabic text.
• The study concluded that HMM can be used in different layers of NLP. Its most significant effect was in morphological analysis and part of speech tagging, which most NLP applications use in their pre-processing phase.
• On the other hand, only a limited number of high-level NLP applications, such as text classification, use HMM.

Thank You