The Theory-Implementation-Theory Iterative Cycle Through the Eye of an AIBO

Goals

- Provide a practical demonstration of the synthetic phenomenology approach.
  - synthetic: artificially (re-)created
  - phenomenology: the study of experience
  - synthetic phenomenology = machine consciousness?
- Implement and test a particular model of change recognition / change blindness.
- Provide a practical demonstration of the value of a tight theory-implemented model-theory iterative cycle.

Assumptions

- Experience is more than visual experience, and visual experience is more than current visual input.
- Expectations based on past experience drive present experience.
- Experience is more complicated than “real” vs. “illusion”. There are different ways of being “real”: consider “centre of gravity”.
- Using a robot in place of a human subject has major advantages and major disadvantages.
- An AIBO is not a dog, and a dog is not a human.

Synthetic Phenomenology

- Methods of specifying the contents of experience that go beyond the capacity of language (or concepts) to express:
  - visual change recognition / change blindness
  - concepts and conceptualized experience
- A representational approach, but perhaps representational only from the point of view of the observer, not of the agent or agent model.
- Caveats:
  - We need to get the building blocks right.
  - The content of visual experience ≠ photograph(s).
  - We probably need a model of the experiencing agent.

Change Blindness

- We want not just to specify the content of (visual) experience but also to say something about what is missing from that content.
- “Perhaps you have had the following experience: you are searching for an open seat in a crowded movie theater. After scanning for several minutes, you eventually spot one and sit down. The next day, your friends ask you why you ignored them at theater. They were waving at you, and you looked right at them but did not see them.” (Simons and Chabris, “Gorillas in Our Midst”)
- Is (visual) experience therefore a Grand Illusion?

An Algorithm

1) An experiencing subject has an expectation-as-visual-experience that, if she were to look at L, she would see X if and only if:
   - a) L is located within her current field of view, or
   - b) she has previously focused her attention on (foveated to) L.
2) If the scene changes at L from X to Y, L is located anywhere within the current visual field, and the change is sufficiently localized (i.e., not so widespread as to count as global), then a change flag is raised to indicate that a local change has been detected.
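Steps 1 and 2 can be sketched in Python as follows. This is a minimal sketch under stated assumptions, not the implementation used with the AIBO: the scene is a hypothetical grid of `(row, col)` cells mapped to content labels, the current visual field is approximated by the cells the camera currently images, the subject's expected X at L is simply the last content it saw there, and a change counts as global (raising no local flags) when it touches more than a hypothetical fraction `max_local_fraction` of the visual field. The class and method names are illustrative only.

```python
from dataclasses import dataclass, field
from typing import Dict, Hashable, List, Optional, Set, Tuple

# Hypothetical representation: a location L is a (row, col) cell of the scene.
Location = Tuple[int, int]


@dataclass
class Subject:
    # Assumption: the current visual field is approximated by the cells the
    # camera currently images (its field of view).
    field_of_view: Set[Location]
    foveated: Set[Location] = field(default_factory=set)
    # Last content seen at each location, i.e. the X the subject expects there.
    memory: Dict[Location, Hashable] = field(default_factory=dict)
    # Hypothetical parameter: changes touching more than this fraction of the
    # visual field count as global rather than local.
    max_local_fraction: float = 0.25

    def has_expectation(self, loc: Location) -> bool:
        """Step 1: an expectation about L exists iff (a) or (b) holds."""
        return loc in self.field_of_view or loc in self.foveated

    def expected_content(self, loc: Location) -> Optional[Hashable]:
        """The X the subject expects to see at L, or None if it has no expectation."""
        return self.memory.get(loc) if self.has_expectation(loc) else None

    def foveate(self, loc: Location) -> None:
        """Record that attention has been focused on L (condition 1b)."""
        self.foveated.add(loc)

    def detect_changes(self, scene: Dict[Location, Hashable]) -> List[Location]:
        """Step 2: return the locations at which a local change flag is raised."""
        changed = sorted(
            loc
            for loc in self.field_of_view
            if loc in self.memory and loc in scene and scene[loc] != self.memory[loc]
        )
        # Whatever is currently in view becomes the new expectation.
        for loc in self.field_of_view:
            if loc in scene:
                self.memory[loc] = scene[loc]
        if len(changed) > self.max_local_fraction * len(self.field_of_view):
            return []  # too widespread: treated as global, no local flags
        return changed


if __name__ == "__main__":
    fov = {(0, c) for c in range(8)}
    s = Subject(field_of_view=fov)
    scene = {loc: "wall" for loc in fov}
    print(s.detect_changes(scene))  # [] (first look: nothing to compare against)
    scene[(0, 3)] = "cup"
    print(s.detect_changes(scene))  # [(0, 3)]
    print(s.detect_changes({loc: "dark" for loc in fov}))  # [] (global change)
```

With an eight-cell field of view, a single altered cell raises one flag, while a change to every cell (as a lighting change might produce) is treated as global and raises none.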

An Algorithm (continued)

3) What happens next depends on the number of change flags raised:
   - a) If a single change flag is raised at location L, foveal attention is drawn to L.
   - b) Otherwise, if several change flags have been raised in addition to the one at L, but their total number is less than some threshold n, foveal attention is drawn to the location of one of those flags according to some measure of salience.
   - c) Otherwise (the number of change flags reaches or exceeds the threshold n), all of the flags are reset and the change is treated as a global change or ignored.
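Step 3 can likewise be sketched as a small Python function. This is a hedged illustration, not the authors' code: the names `select_fixation` and `salience` are hypothetical, the slides leave the salience measure unspecified (so it is passed in as a function), and resetting the flags is modelled by clearing the list in place.

```python
from typing import Callable, List, Optional, Tuple

Location = Tuple[int, int]  # hypothetical (row, col) location of a change flag


def select_fixation(
    flags: List[Location],
    n: int,
    salience: Callable[[Location], float],
) -> Optional[Location]:
    """Step 3: choose where foveal attention goes, given the raised change flags.

    Returns the location to foveate, or None if no flag is raised or the
    flags are reset because the change is treated as global.
    """
    if not flags:
        return None
    if len(flags) == 1:
        return flags[0]  # (a) a single flag draws attention to its location
    if len(flags) < n:
        return max(flags, key=salience)  # (b) attend to the most salient flag
    flags.clear()  # (c) n or more flags: reset them all; treat as global
    return None


if __name__ == "__main__":
    # Made-up salience measure: flags nearer column 4 are more salient.
    def near_centre(loc: Location) -> float:
        return -abs(loc[1] - 4)

    print(select_fixation([(0, 1), (0, 4), (0, 7)], n=5, salience=near_centre))  # (0, 4)
    many = [(0, c) for c in range(6)]
    print(select_fixation(many, n=5, salience=near_centre), many)  # None []
```

The salience measure here is invented for the example; any other measure (size of change, distance from the current fixation point, and so on) could be substituted without changing the structure of step 3.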

Theory into Practice

- Advantages:
  - How do you know what is in the subject's current visual field?
  - Changes to the model can be implemented very quickly.
  - No ethical limits on what you can do to a robot?
  - There's no need to assume that the robot model is actually experiencing anything, and ethically it's just as well if it's not!
- Disadvantages:
  - One is limited by what can be expressed within the particular model and on the particular hardware!
  - Erm... how does one interpret the results?

The AIBO in Action

- AIBO has only one “eye”.
- The “eye” can only be redirected by turning the entire head.
- There is no equivalent to an optic nerve, so no blind spot!
- There is no equivalent to rods and cones! Some such distinction is needed if the AIBO's visual field is not to be equivalent to its field of view, since the two are not equivalent in any animal that has recognizable eyes.
- AIBO is a mass-produced product for a commercial market.
- The toolkit we're using to interact with the AIBO is beta software.
- Camera images are something like projections on an elliptical plane.
- The AIBO never quite looks where you tell it to, or where it thinks it's looking.

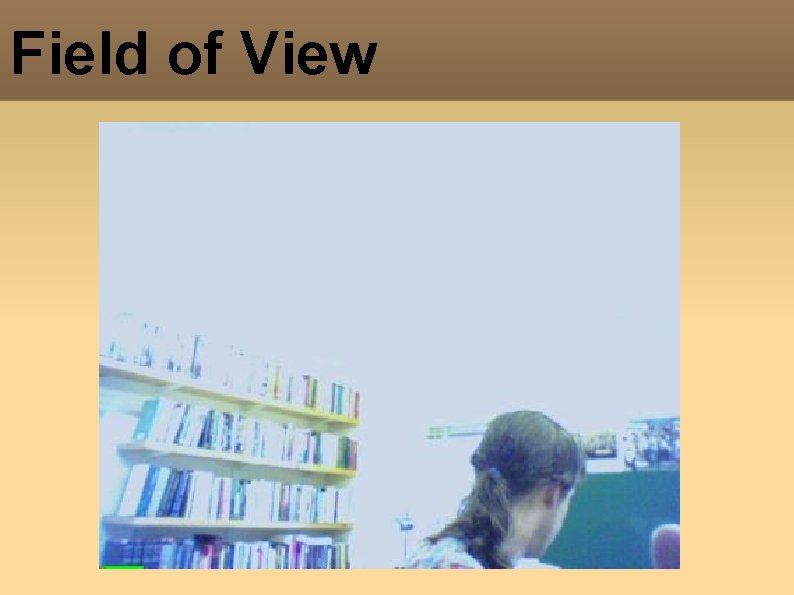

Field of View

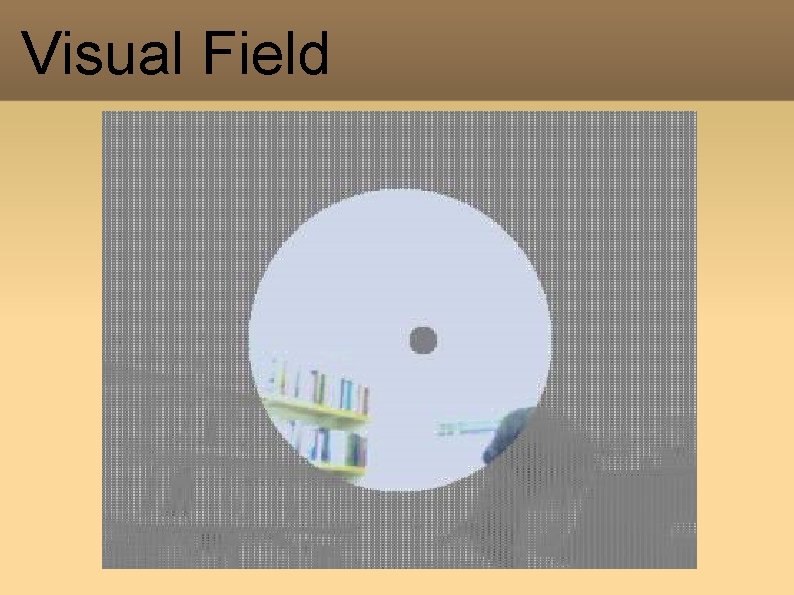

Visual Field

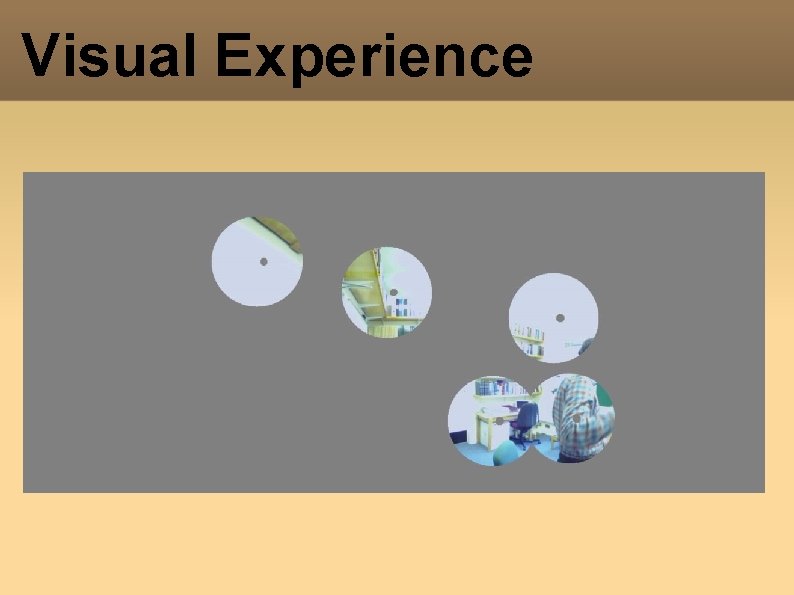

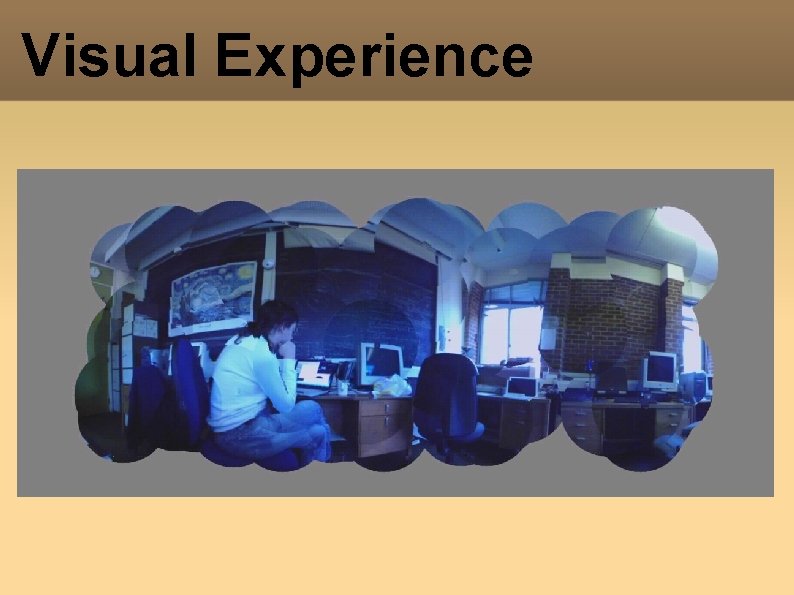

Visual Experience

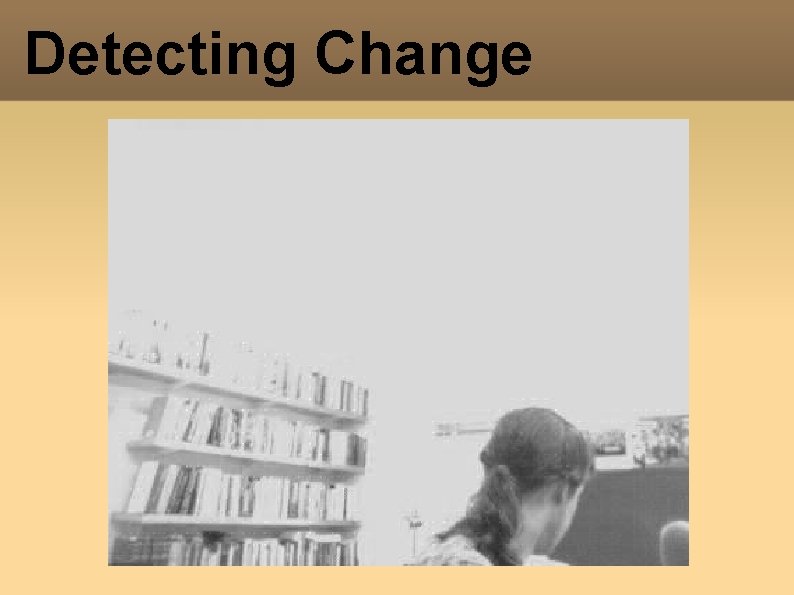

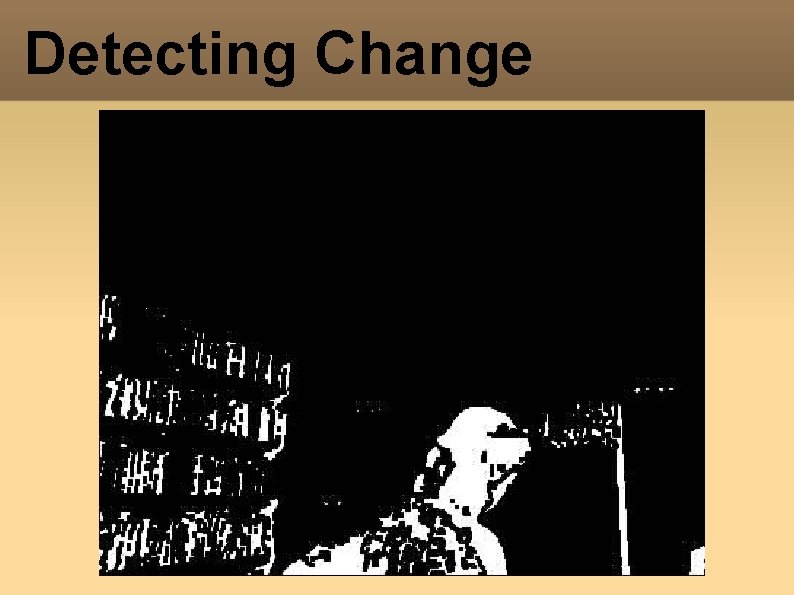

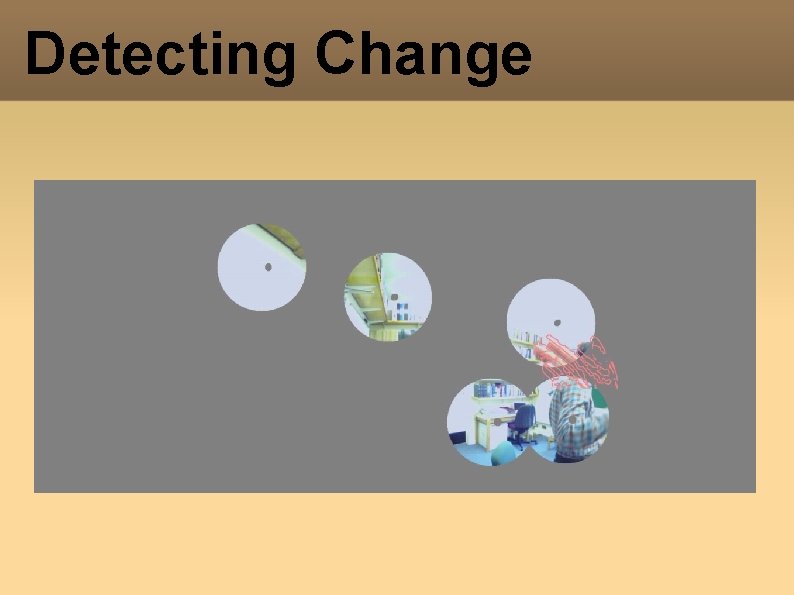

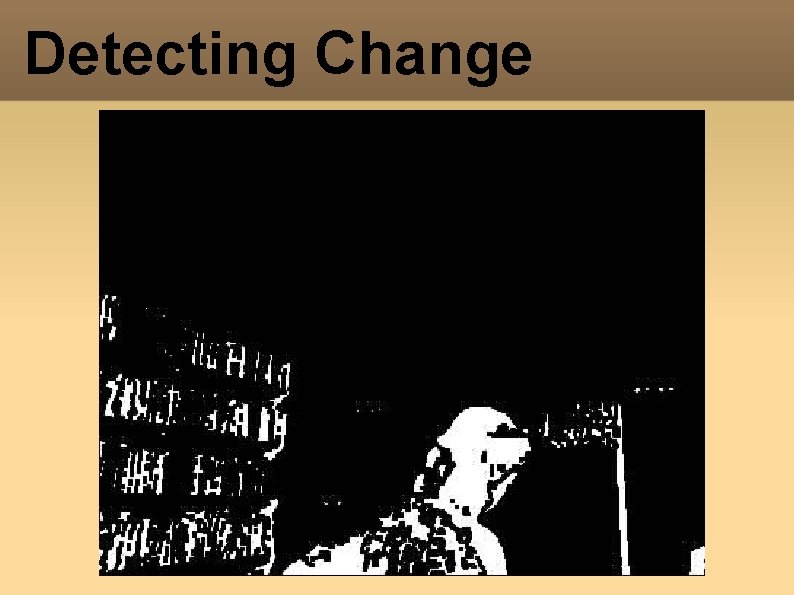

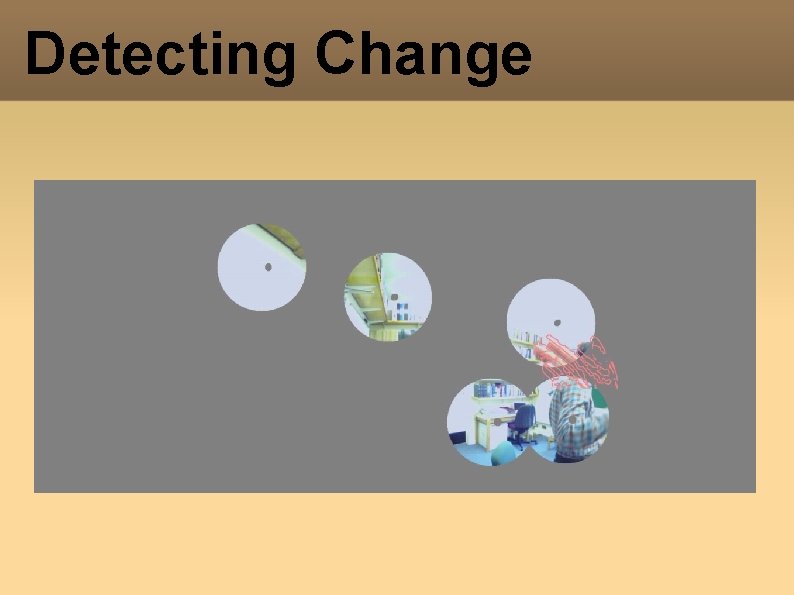

Detecting Change

Where Next?

- Multiple change flags.
- What about objects?
- The model is embedded in its environment in only a very weak sense.
- What happens when the AIBO starts moving around?
- What happens when the AIBO starts interacting with its environment?

Application to Other Domains

- Synthetic phenomenology:
  - Specifying the content of concepts.
  - Avoiding Grelling's Paradox.
  - Building external models of conceptual domains.
- Theory – implemented model – theory approach:
  - An established tradition of “hands-on” philosophy.
  - Making implicit assumptions explicit (and testable).
  - Pursuing a project like CYC without, hopefully, some of its pitfalls!

Conclusions

- Non-linguistic means of specifying the contents of experience are urgently needed.
- The Grand Illusion argument may be persuasive, but it is not conclusive. (Illusions may be illusory, too!)
- Work like this is prone to the pitfalls of hidden assumptions and unintended ambiguities.
- If synthetic phenomenology has merit, then it also has applications far beyond change blindness.