The temporal resolution of binding brightness and loudness

Vision Sciences Society Annual Meeting 2012. Daniel Mann, Charles Chubb. Department of Cognitive Sciences, University of California, Irvine.

Introduction

In what ways can observers combine dynamic visual and auditory information (brightness and loudness)? We use a selective attention task to extract the attention filters that observers can achieve for various brightness/loudness judgments, and we test the temporal limit of multimodal binding derived by Fujisaki & Nishida (2010).

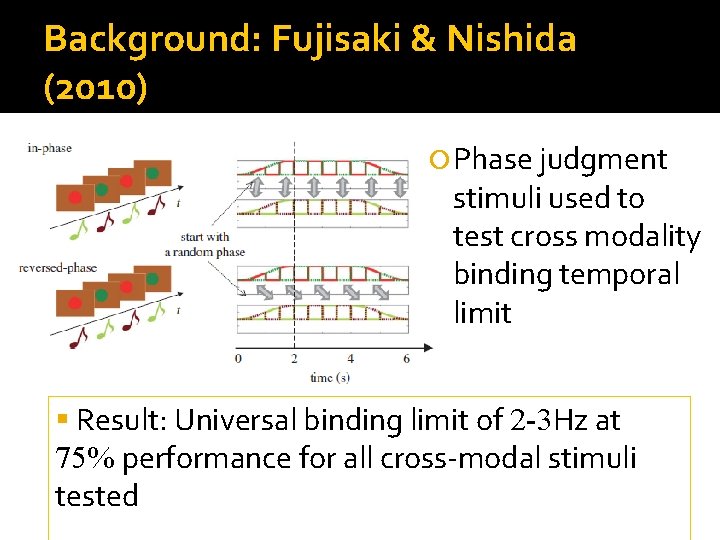

Background: Fujisaki & Nishida (2010)

Phase judgment stimuli were used to test the temporal limit of cross-modal binding. Result: a universal binding limit of 2-3 Hz at 75% performance for all cross-modal stimuli tested.

Methods: Stimuli

Each stimulus was a rapid, randomly ordered stream of 18 gray disks (83 ms per disk), each accompanied by a simultaneous burst of auditory white noise. Three levels of disk brightness and three levels of noise loudness were combined to produce 9 different types of audiovisual pairings (tokens).

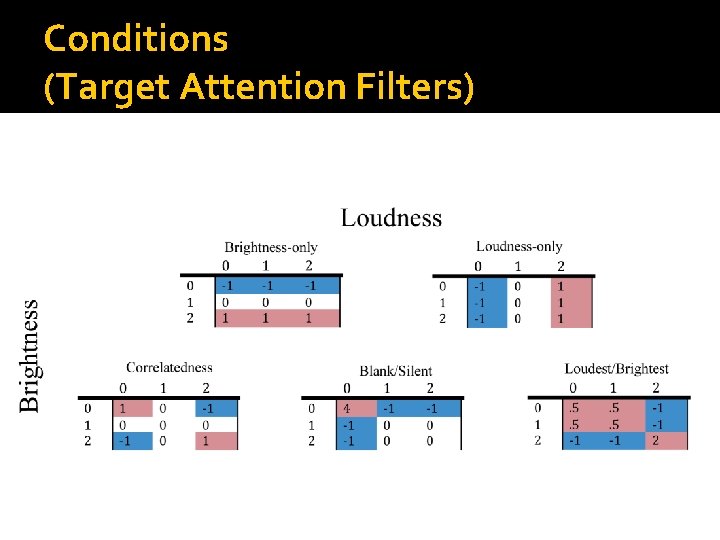
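The stimulus description can be sketched in a few lines of Python. This is only an illustration: the slides do not state how tokens were sampled, so the sketch assumes each of the 18 tokens is drawn independently and uniformly from the 9 token types, and the level indices 0, 1, 2 stand in for the actual brightness and loudness values, which are not given. The names `TOKENS`, `make_stream`, and `stream_duration_ms` are hypothetical.

```python
import random

# Indices for the three brightness and three loudness levels.
# The physical values are not given in the slides; 0/1/2 are placeholders.
BRIGHTNESS_LEVELS = (0, 1, 2)
LOUDNESS_LEVELS = (0, 1, 2)

# The 9 audiovisual token types: every (brightness, loudness) pairing.
TOKENS = [(b, l) for b in BRIGHTNESS_LEVELS for l in LOUDNESS_LEVELS]

N_TOKENS_PER_STREAM = 18   # disks per stimulus (from the slides)
TOKEN_DURATION_MS = 83     # duration of each disk/noise burst (from the slides)


def make_stream(rng):
    """Return one random stream of tokens.

    ASSUMPTION: independent, uniform sampling of token types.
    """
    return [rng.choice(TOKENS) for _ in range(N_TOKENS_PER_STREAM)]


def stream_duration_ms():
    """Total stimulus duration implied by the stated timing."""
    return N_TOKENS_PER_STREAM * TOKEN_DURATION_MS


if __name__ == "__main__":
    stream = make_stream(random.Random(0))
    print(len(stream), "tokens,", stream_duration_ms(), "ms")
```

With the stated timing, one stream lasts 18 × 83 = 1494 ms, which is a presentation rate of about 12 tokens per second.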

Conditions (Target Attention Filters)

Sample Trials

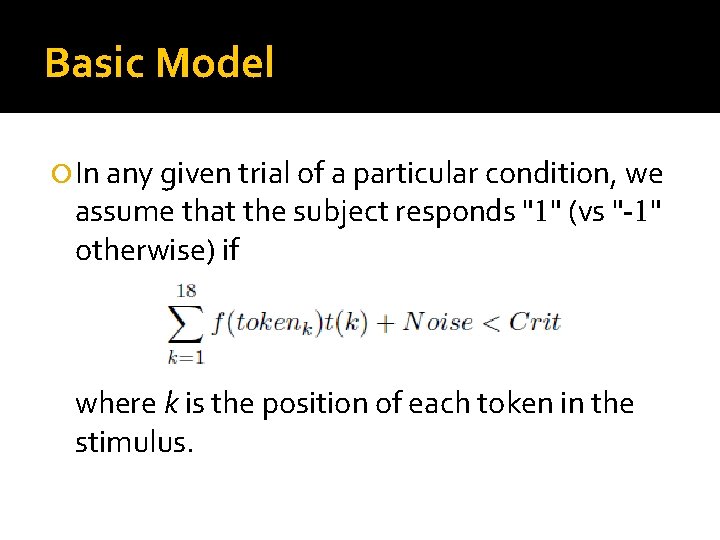

Basic Model

In any given trial of a particular condition, we assume that the subject responds "1" (and "-1" otherwise) if the decision rule given as an equation on the slide is satisfied, where k is the position of each token in the stimulus.

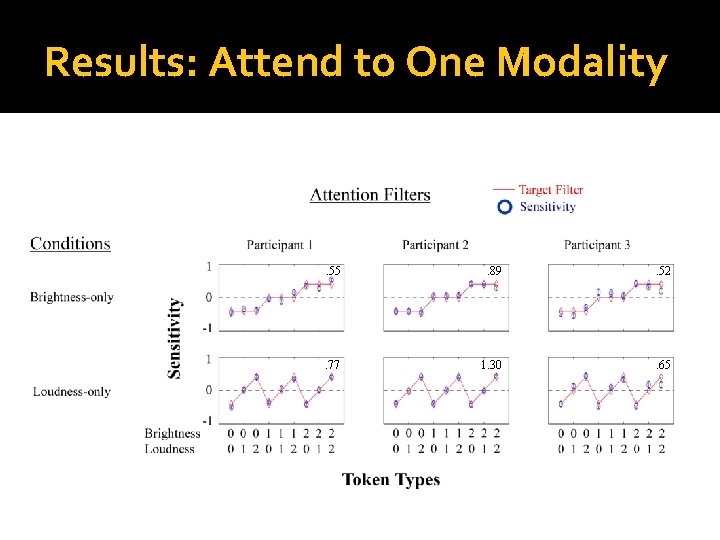
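The decision rule itself survives only as an image, so the following is a hedged sketch of one common form of such a model, not the authors' equation. It assumes the subject sums an attention-filter weight over the tokens at every position k, adds Gaussian decision noise, and responds "1" when the total exceeds a criterion. The functions `brightness_filter` and `respond`, and the parameters `noise_sd` and `criterion`, are hypothetical names introduced for illustration.

```python
import random


def brightness_filter(token):
    """Example attention filter: weight a token by its centered brightness.

    ASSUMPTION: tokens are (brightness, loudness) index pairs in {0, 1, 2};
    subtracting 1 centers the levels at -1, 0, +1.
    """
    brightness, _loudness = token
    return float(brightness - 1)


def respond(stream, attention_filter, noise_sd=1.0, criterion=0.0, rng=None):
    """Return 1 or -1 for one trial.

    ASSUMED decision rule: sum of filter weights over all token
    positions k, plus Gaussian noise, compared against a criterion.
    """
    rng = rng or random.Random()
    evidence = sum(attention_filter(token) for token in stream)
    evidence += rng.gauss(0.0, noise_sd)
    return 1 if evidence > criterion else -1
```

Under this reading, fitting the model to a subject's responses amounts to estimating one weight per token type, which is what an "attention filter" over the 9 tokens would be.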

Results: Attend to One Modality

Values annotated in the figure: 0.55, 0.89, 0.52, 0.77, 1.30, 0.65

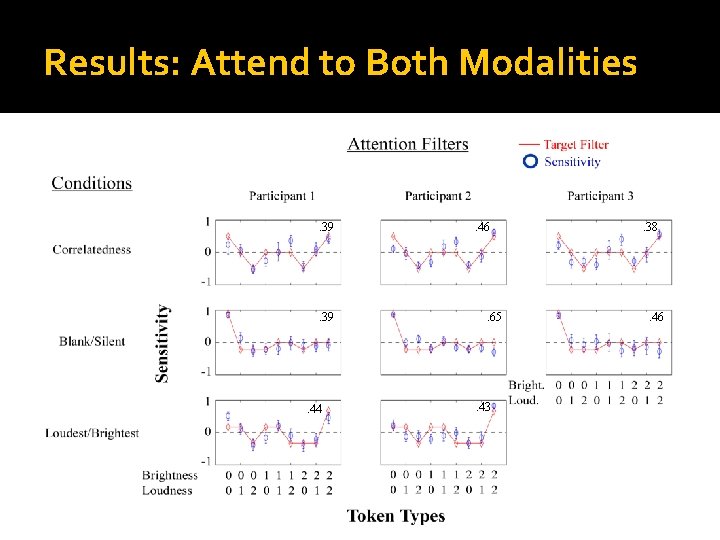

Results: Attend to Both Modalities

Values annotated in the figure: 0.39, 0.44, 0.46, 0.65, 0.43, 0.38, 0.46

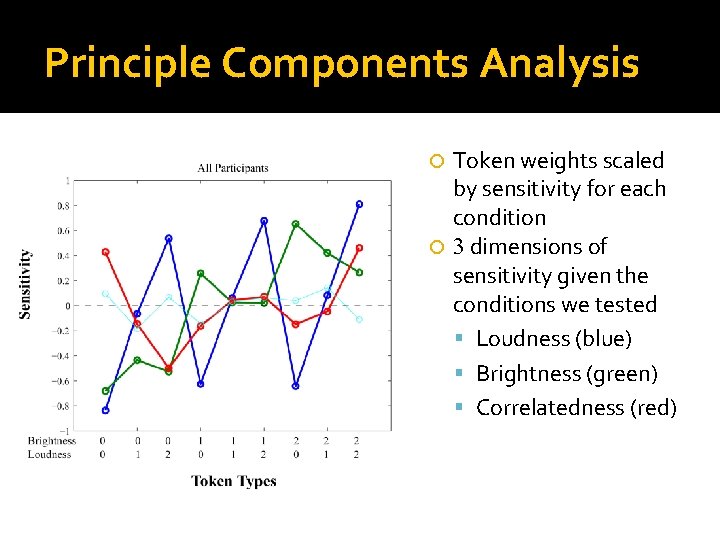

Principal Components Analysis

Token weights were scaled by sensitivity for each condition. The conditions we tested yielded 3 dimensions of sensitivity: loudness (blue), brightness (green), and correlatedness (red).

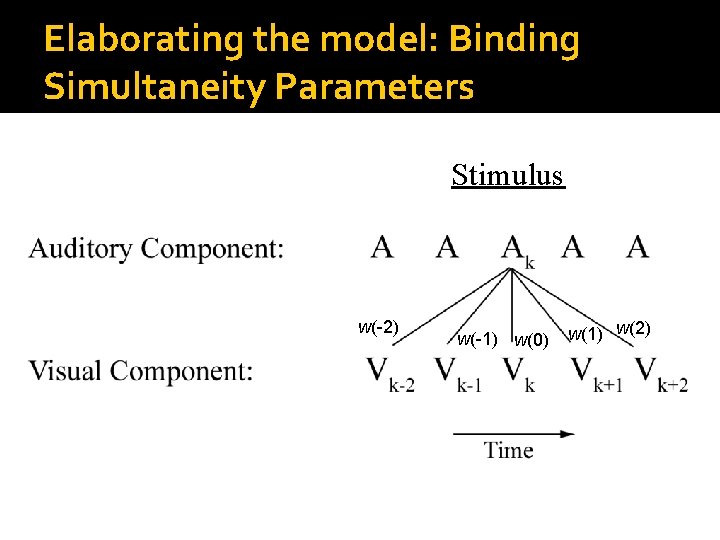

Elaborating the model: Binding Simultaneity Parameters

Figure labels: Stimulus; w(-2), w(-1), w(0), w(1), w(2)

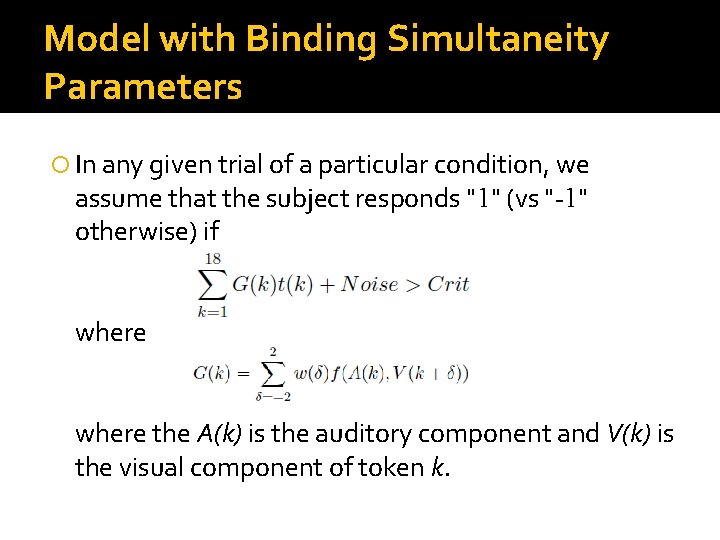

Model with Binding Simultaneity Parameters

In any given trial of a particular condition, we assume that the subject responds "1" (and "-1" otherwise) if the decision rule given as an equation on the slide is satisfied, where A(k) is the auditory component and V(k) is the visual component of token k.

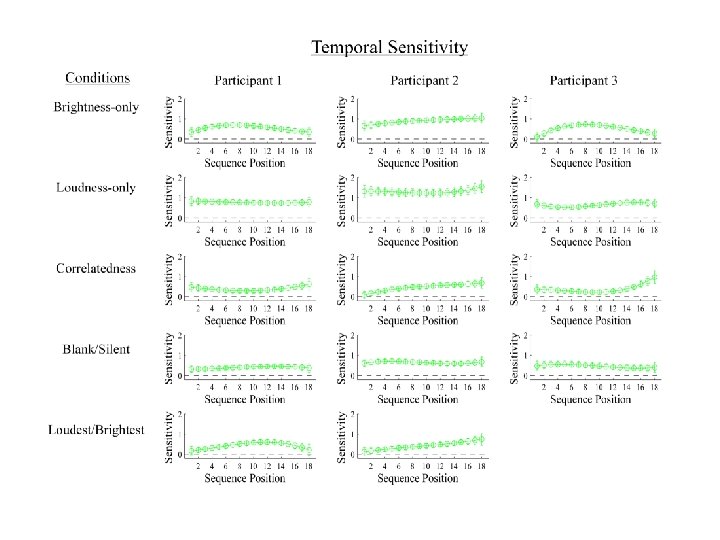
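One way to read the simultaneity parameters w(-2) through w(2) is as weights on pairings of the auditory component at position k with the visual component at position k + j, for lags j from -2 to 2. The sketch below illustrates that reading only; the product A(k)·V(k+j) used as the pairing term, the centered level coding, and the name `lagged_binding_evidence` are all assumptions, since the actual equation is not preserved in the text.

```python
def lagged_binding_evidence(audio, visual, lag_weights):
    """Sum lag-weighted audiovisual pairings over a stream.

    audio, visual: equal-length lists of centered levels (-1, 0, +1),
        standing in for A(k) and V(k).
    lag_weights: dict mapping lag j to weight w(j), e.g. j in -2..2.

    ASSUMPTION: the pairing term is the product A(k) * V(k + j);
    pairings that fall off either end of the stream are skipped.
    """
    n = len(audio)
    total = 0.0
    for k in range(n):
        for lag, weight in lag_weights.items():
            if 0 <= k + lag < n:
                total += weight * audio[k] * visual[k + lag]
    return total


if __name__ == "__main__":
    a = [1, -1, 0, 1]
    v = [1, -1, 0, 1]
    # All weight on lag 0: perfectly simultaneous binding.
    print(lagged_binding_evidence(a, v, {0: 1.0}))
    # Weight spread over neighboring lags: temporally blurred binding.
    print(lagged_binding_evidence(a, v, {-1: 0.25, 0: 0.5, 1: 0.25}))
```

In this reading, a weight profile concentrated at w(0) corresponds to precise temporal binding, while a profile spread across lags corresponds to coarser temporal resolution.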

Results: Temporal Resolution

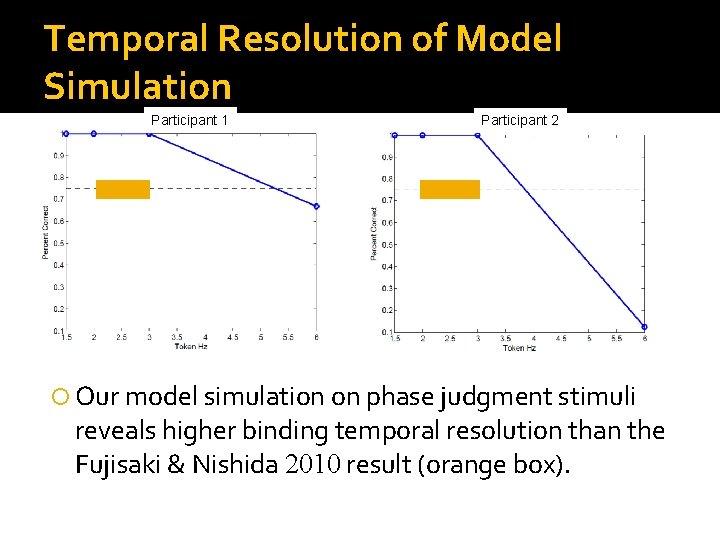

Temporal Resolution of Model Simulation

Panels: Participant 1, Participant 2

Our model simulation on phase judgment stimuli reveals higher binding temporal resolution than the Fujisaki & Nishida (2010) result (orange box).

Conclusions

Observers can achieve a range of different audiovisual attention filters. There were 3 dimensions of attention sensitivity given our tasks. Dynamic random stimuli allowed multimodal binding at higher temporal acuity (3.8-5.25 Hz) than phase judgment stimuli (2-3 Hz). This suggests that auditory and visual information from randomly varying stimuli can be bound more precisely in time than information from strictly oscillating stimuli.

Acknowledgments

The Chubb-Wright Lab at UC Irvine. This work was funded by NSF Award BCS 0843897. Thanks for listening!

Supplemental slides

- Slides: 18