The Scale Vector-Thread Processor


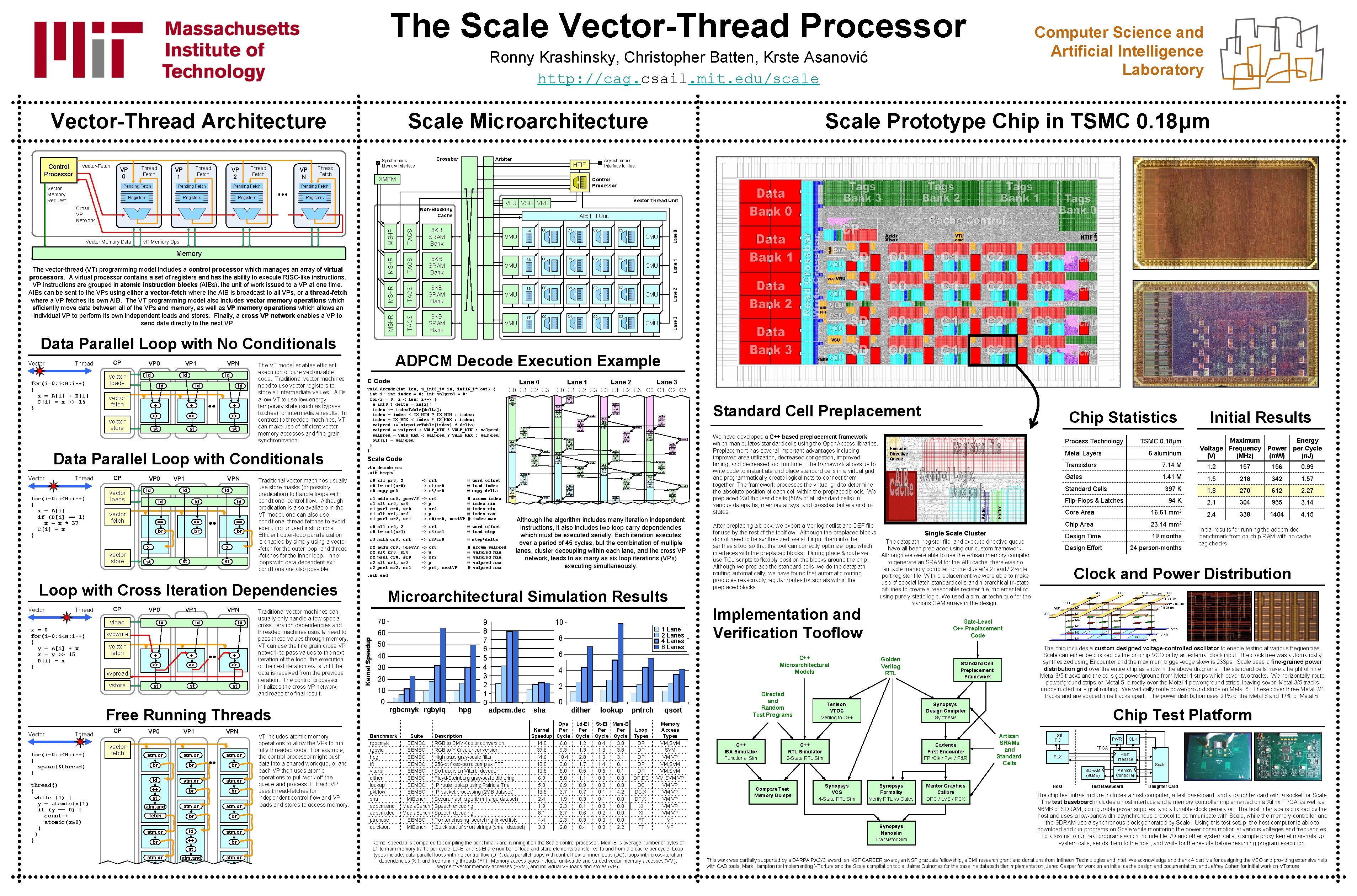

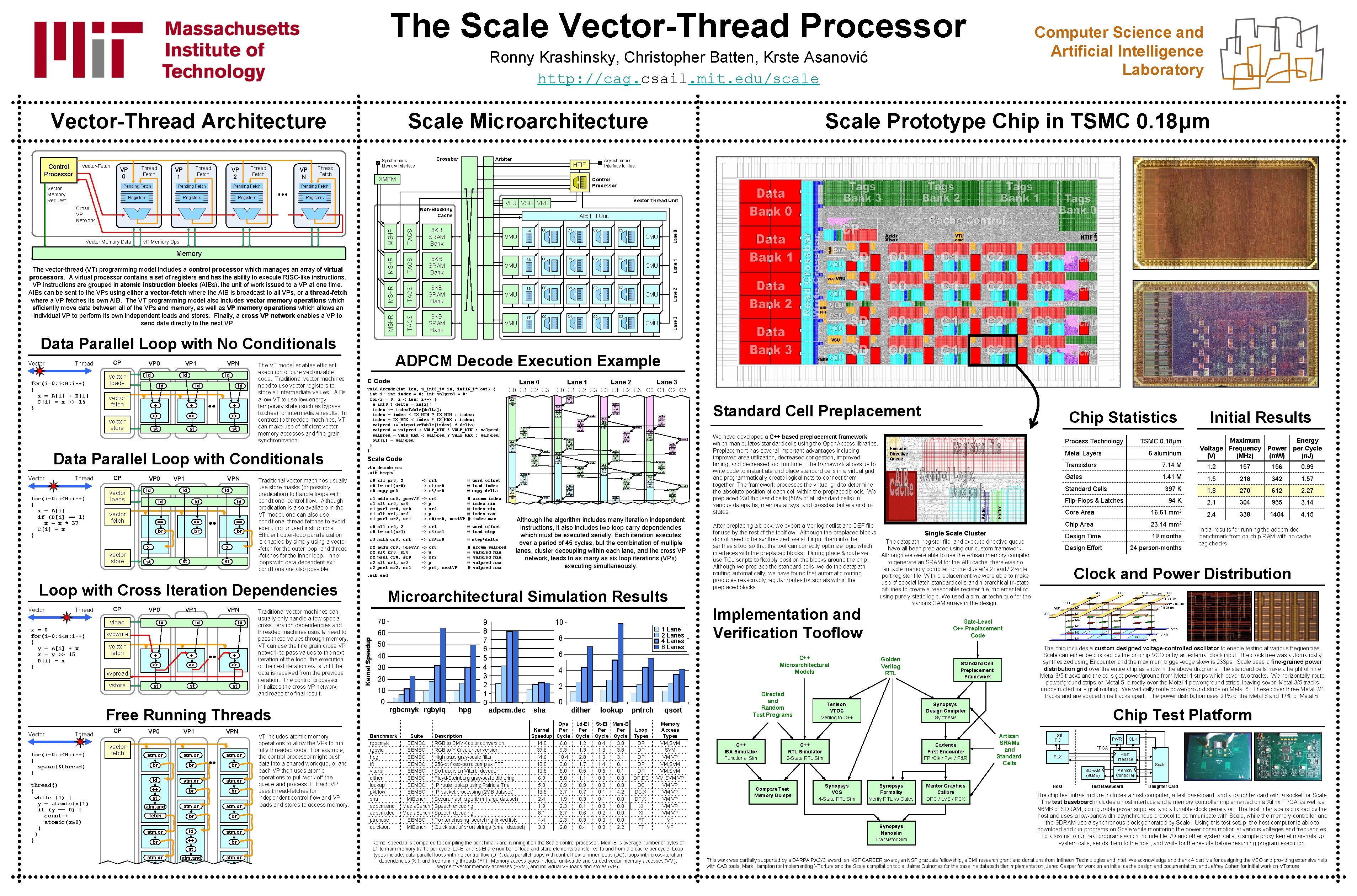

Ronny Krashinsky, Christopher Batten, Krste Asanović

Computer Science and Artificial Intelligence Laboratory

http://cag.csail.mit.edu/scale

Vector-Thread Architecture

The vector-thread (VT) programming model includes a control processor (CP) which manages an array of virtual processors (VPs). A virtual processor contains a set of registers and can execute RISC-like instructions. VP instructions are grouped into atomic instruction blocks (AIBs), the unit of work issued to a VP at one time. AIBs can be sent to the VPs using either a vector-fetch, where the AIB is broadcast to all VPs, or a thread-fetch, where a VP fetches its own AIB. The VT programming model also includes vector memory operations, which efficiently move data between all of the VPs and memory, as well as VP memory operations, which allow an individual VP to perform its own independent loads and stores. Finally, a cross-VP network enables a VP to send data directly to the next VP.

[Figure labels: Thread Fetch, Pending Fetch, Registers, XMEM, Control Processor, Cross VP Network]

Data Parallel Loop with No Conditionals

    for (i = 0; i < N; i++) {
      x = A[i] + B[i];
      C[i] = x >> 15;
    }

[Figure: the CP issues vector loads, a vector fetch, and a vector store; each of VP0 through VPN executes ld, ld, +, >>, st.]

The VT model enables efficient execution of purely vectorizable code. Traditional vector machines need to use vector registers to store all intermediate values. AIBs allow VT to use low-energy temporary state (such as bypass latches) for intermediate results. In contrast to threaded machines, VT can make use of efficient vector memory accesses and fine-grained synchronization.

Data Parallel Loop with Conditionals

    for (i = 0; i < N; i++) {
      x = A[i];
      if (B[i] == 1)
        x = x * 37;
      C[i] = x;
    }

[Figure: the CP issues vector loads and a vector fetch; each VP executes the compare (==) and branch (br), some VPs then thread-fetch the multiply, and a vector store writes the results.]

Traditional vector machines usually use store masks (or possibly predication) to handle loops with conditional control flow. Although predication is also available in the VT model, one can also use conditional thread-fetches to avoid executing unused instructions. Efficient outer-loop parallelization is enabled by simply using a vector-fetch for the outer loop and thread-fetches for the inner loop. Inner loops with data-dependent exit conditions are also possible.

Loop with Cross Iteration Dependencies

    x = 0;
    for (i = 0; i < N; i++) {
      y = A[i] + x;
      x = y >> 15;
      B[i] = x;
    }

[Figure: the CP issues a vload, an xvpwrite, a vector fetch, an xvpread, and a vstore; each of VP0 through VPN executes ld, +, >>, st.]

Traditional vector machines can usually handle only a few special cross-iteration dependencies, and threaded machines usually need to pass these values through memory. VT can use the fine-grained cross-VP network to pass values to the next iteration of the loop; the execution of the next iteration waits until the data is received from the previous iteration. The control processor initializes the cross-VP network and reads the final result.

Free Running Threads

    for (i = 0; i < N; i++) {
      spawn(&thread);
    }

    thread() {
      while (1) {
        y = atomic(x|1);
        if (y == 0) {
          count++;
          atomic(x&0);
        }
      }
    }

[Figure: the CP issues a vector fetch; VP0 through VPN then run independently, thread-fetching AIBs that contain atomic or, branch, load, increment, store, and atomic and operations.]

VT includes atomic memory operations to allow the VPs to run fully threaded code. For example, the control processor might push data into a shared work queue, and each VP then uses atomic operations to pull work off the queue and process it. Each VP uses thread-fetches for independent control flow and VP loads and stores to access memory.

[Figure: block diagram. Labels: Vector Thread Unit with clusters C0, C1, C2, C3 and SD; AIB Fill Unit; VLU, VSU, VRU; VMU; CMU; VP Memory Ops; Vector Memory Data; Non-Blocking Cache with 8 KB SRAM banks, tags, and MSHRs; Memory.]

ADPCM Decode Execution Example

C Code

    void decode(int len, u_int8_t* in, int16_t* out) {
      int i;
      int index = 0;
      int valpred = 0;
      for (i = 0; i < len; i++) {
        u_int8_t delta = in[i];
        index += indexTable[delta];
        index = index < IX_MIN ? IX_MIN : index;
        index = IX_MAX < index ? IX_MAX : index;
        valpred += stepsizeTable[index] * delta;
        valpred = valpred < VALP_MIN ? VALP_MIN : valpred;
        valpred = VALP_MAX < valpred ? VALP_MAX : valpred;
        out[i] = valpred;
      }
    }

Scale Code

    vtu_decode_ex:
    .aib begin
    c0  sll   pr0, 2        -> cr1              # word offset
    c0  lw    cr1(sr0)      -> c1/cr0           # load index
    c0  copy  pr0           -> c3/cr0           # copy delta
    c1  addu  cr0, prevVP   -> cr0              # accum index
    c1  slt   cr0, sr0      -> p                # index min
    c1  psel  cr0, sr0      -> sr2
    c1  slt   sr1, sr2      -> p                # index max
    c1  psel  sr2, sr1      -> c0/cr0, nextVP
    c0  sll   cr0, 2        -> cr1              # word offset
    c0  lw    cr1(sr1)      -> c3/cr1           # load step
    c3  mulh  cr0, cr1      -> c2/cr0           # step*delta
    c2  addu  cr0, prevVP   -> cr0              # accum valpred
    c2  slt   cr0, sr0      -> p                # valpred min
    c2  psel  cr0, sr0      -> sr2
    c2  slt   sr1, sr2      -> p                # valpred max
    c2  psel  sr2, sr1      -> pr0, nextVP
    .aib end

[Figure: execution of the AIB over time on Lanes 0 to 3, showing the sll, lw, copy, mul, addu, slt, and psel instructions issued on clusters C0 to C3 of each lane.]

Although the algorithm includes many iteration-independent instructions, it also includes two loop-carried dependencies which must be executed serially. Each iteration executes over a period of 45 cycles, but the combination of multiple lanes, cluster decoupling within each lane, and the cross-VP network leads to as many as six loop iterations (VPs) executing simultaneously.
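The loop with cross-iteration dependencies can be modeled in plain C to make the hand-off between neighboring VPs explicit. The following is a minimal sequential sketch, not Scale code: the function names `vp_iteration` and `run_cross_vp_loop` are hypothetical, the `uint32_t` element type is an assumption (the poster does not give types), and the ordinary `for` loop only stands in for the hardware's overlapped execution of VPs.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Work done by one virtual processor (one loop iteration).
 * x_prev models the value received from the previous VP;
 * the return value models the value sent on to the next VP. */
static uint32_t vp_iteration(uint32_t a, uint32_t x_prev, uint32_t *b)
{
    uint32_t y = a + x_prev;   /* y = A[i] + x  */
    uint32_t x = y >> 15;      /* x = y >> 15   */
    *b = x;                    /* B[i] = x      */
    return x;
}

/* Hypothetical driver standing in for the control processor: it seeds the
 * cross-VP chain with x_init, runs the iterations in order, and returns
 * the final value of x that leaves the last VP. */
uint32_t run_cross_vp_loop(const uint32_t *A, uint32_t *B, size_t n,
                           uint32_t x_init)
{
    assert(A != NULL && B != NULL);
    uint32_t x = x_init;
    for (size_t i = 0; i < n; i++) {
        x = vp_iteration(A[i], x, &B[i]);
    }
    return x;
}
```

Each call to `vp_iteration` depends only on its own element of `A` and on the single value handed over from its predecessor, which is the property that lets the next iteration start as soon as that one value arrives.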
Microarchitectural Simulation Results

[Figure: kernel speedup per benchmark (rgbcmyk, rgbyiq, hpg, adpcm.dec, sha, dither, lookup, pntrch, qsort) for 1, 2, 4, and 8 lanes.]

| Benchmark | Suite | Description | Ld-El per cycle | St-El, Mem-B per cycle | Loop types |
| --- | --- | --- | --- | --- | --- |
| rgbcmyk | EEMBC | RGB to CMYK color conversion | 1.2 | 0.4, 3.0 | DP |
| rgbyiq | EEMBC | RGB to YIQ color conversion | 1.3 | 1.3, 3.8 | DP |
| hpg | EEMBC | High pass gray-scale filter | 2.8 | 1.0, 3.1 | DP |
| fft | EEMBC | 256-pt fixed-point complex FFT | 1.7 | 1.4, 0.1 | DP |
| viterbi | EEMBC | Soft decision Viterbi decoder | 0.5 | 0.5, 0.1 | DP |
| dither | EEMBC | Floyd-Steinberg gray-scale dithering | 1.1 | 0.3 | DP, DC |
| lookup | EEMBC | IP route lookup using Patricia Trie | 0.9 | 0.0 | DC |
| pktflow | EEMBC | IP packet processing (2 MB dataset) | 0.7 | 0.1, 4.2 | DC, XI |
| sha | MiBench | Secure hash algorithm (large dataset) | 0.3 | 0.1, 0.0 | DP, XI |
| adpcm.enc | MediaBench | Speech encoding | 0.1 | 0.0 | XI |
| adpcm.dec | MediaBench | Speech decoding | 0.6 | 0.2, 0.0 | XI |
| ptrchase | EEMBC | Pointer chasing, searching linked lists | 0.3 | 0.0 | FT |
| quicksort | MiBench | Quick sort of short strings (small dataset) | 0.4 | 0.3, 2.2 | FT |

Rows with a single value under "St-El, Mem-B per cycle" have one of the two entries missing in the source. The Kernel Speedup and Ops Per Cycle columns survive only as an unaligned sequence: 14.8, 6.8, 39.8, 9.3, 44.6, 10.4, 18.8, 3.8, 10.5, 5.0, 6.9, 5.0, 5.8, 6.9, 13.5, 3.7, 2.4, 1.9, 2.3, 8.1, 6.7, 4.4, 2.3, 3.0, 2.0. The Memory Access Types column likewise survives only as: VM, SVM VM, VP VM, SVM, VP VM, VP VP VP.

Kernel speedup is measured relative to compiling the benchmark and running it on the Scale control processor. Mem-B is the average number of bytes of L1 to main memory traffic per cycle. Ld-El and St-El are the numbers of load and store elements transferred to and from the cache per cycle. Loop types include: data parallel loops with no control flow (DP), data parallel loops with control flow or inner loops (DC), loops with cross-iteration dependencies (XI), and free running threads (FT). Memory access types include: unit-stride and strided vector memory accesses (VM), segment vector memory accesses (SVM), and individual VP loads and stores (VP).

Standard Cell Preplacement

We have developed a C++ based preplacement framework which manipulates standard cells using the OpenAccess libraries. Preplacement has several important advantages, including improved area utilization, decreased congestion, improved timing, and decreased tool run time. The framework allows us to write code to instantiate and place standard cells in a virtual grid and programmatically create logical nets to connect them together. The framework processes the virtual grid to determine the absolute position of each cell within the preplaced block. We preplaced 230 thousand cells (58% of all standard cells) in various datapaths, memory arrays, and crossbar buffers and tristates.

After preplacing a block, we export a Verilog netlist and DEF file for use by the rest of the toolflow. Although the preplaced blocks do not need to be synthesized, we still input them into the synthesis tool so that the tool can correctly optimize logic which interfaces with the preplaced blocks. During place & route we use TCL scripts to flexibly position the blocks around the chip. Although we preplace the standard cells, we do the datapath routing automatically; we have found that automatic routing produces reasonably regular routes for signals within the preplaced blocks.

Single Scale Cluster

[Figure: layout of a single Scale cluster. Labels: Shifter, Adder, Execute Directive Queue.]

The datapath, register file, and execute directive queue have all been preplaced using our custom framework. Although we were able to use the Artisan memory compiler to generate an SRAM for the AIB cache, there was no suitable memory compiler for the cluster's 2 read / 2 write port register file. With preplacement we were able to make use of special latch standard cells and hierarchical tri-state bit-lines to create a reasonable register file implementation using purely static logic. We used a similar technique for the various CAM arrays in the design.

Chip Statistics

| Statistic | Value |
| --- | --- |
| Process Technology | TSMC 0.18μm |
| Metal Layers | 6 aluminum |
| Transistors | 7.14 M |
| Gates | 1.41 M |
| Standard Cells | 397 K |
| Flip-Flops & Latches | 94 K |
| Core Area | 16.61 mm² |
| Chip Area | 23.14 mm² |
| Design Time | 19 months |
| Design Effort | 24 person-months |

Initial Results

| Voltage (V) | Maximum Frequency (MHz) | Power (mW) | Energy per Cycle (nJ) |
| --- | --- | --- | --- |
| 1.2 | 157 | 156 | 0.99 |
| 1.5 | 218 | 342 | 1.57 |
| 1.8 | 270 | 612 | 2.27 |
| 2.1 | 304 | 955 | 3.14 |
| 2.4 | 338 | 1404 | 4.15 |

Initial results for running the adpcm.dec benchmark from on-chip RAM with no cache tag checks.

Clock and Power Distribution

The chip includes a custom-designed voltage-controlled oscillator (VCO) to enable testing at various frequencies. Scale can be clocked either by the on-chip VCO or by an external clock input. The clock tree was automatically synthesized using Encounter, and the maximum trigger-edge skew is 233 ps. Scale uses a fine-grained power distribution grid over the entire chip, as shown in the above diagrams. The standard cells have a height of nine Metal 3/5 tracks, and the cells get power/ground from Metal 1 strips which cover two tracks. We horizontally route power/ground strips on Metal 5, directly over the Metal 1 power/ground strips, leaving seven Metal 3/5 tracks unobstructed for signal routing. We vertically route power/ground strips on Metal 6. These cover three Metal 2/4 tracks and are spaced nine tracks apart. The power distribution uses 21% of Metal 6 and 17% of Metal 5.

Implementation and Verification Toolflow

[Figure: toolflow diagram. Labels: C++ ISA Simulator (Functional Sim); C++ Microarchitectural Models; Golden Verilog RTL; Tenison VTOC (Verilog to C++); C++ RTL Simulator (2-State RTL Sim); Synopsys VCS (4-State RTL Sim); Synopsys Design Compiler (Synthesis); C++ Preplacement Code; Standard Cell Preplacement Framework; Artisan SRAMs and Standard Cells; Cadence First Encounter (FP / Clk / Pwr / P&R); Synopsys Formality (Verify RTL vs Gates); Synopsys Nanosim (Transistor Sim); Mentor Graphics Calibre (DRC / LVS / RCX); Gate-Level; Directed and Random Test Programs; Compare Test Memory Dumps.]

[Figure: Scale Prototype Chip in TSMC 0.18μm. Labels: Lane 0, Lane 1, Lane 2, Lane 3, Control Processor, Crossbar, Arbiter, Vector Memory Request, HTIF (Asynchronous Interface to Host), Synchronous Memory Interface.]

[Figure: Scale Microarchitecture. Labels: Control Processor, Vector-Fetch, Thread Fetch, VP 0, VP 1, VP 2, VP N.]

Chip Test Platform

[Figure: test platform. Labels: Host PC, PLX, Test Baseboard, FPGA (Host Interface, Memory Controller), SDRAM (96 MB), PWR, CLK, Daughter Card, Scale.]

The chip test infrastructure includes a host computer, a test baseboard, and a daughter card with a socket for Scale. The test baseboard includes a host interface and a memory controller implemented on a Xilinx FPGA, as well as 96 MB of SDRAM, configurable power supplies, and a tunable clock generator. The host interface is clocked by the host and uses a low-bandwidth asynchronous protocol to communicate with Scale, while the memory controller and the SDRAM use a synchronous clock generated by Scale. Using this test setup, the host computer is able to download and run programs on Scale while monitoring the power consumption at various voltages and frequencies. To allow us to run real programs which include file I/O and other system calls, a simple proxy kernel marshals system calls, sends them to the host, and waits for the results before resuming program execution.

This work was partially supported by a DARPA PAC/C award, an NSF CAREER award, an NSF graduate fellowship, a CMI research grant, and donations from Infineon Technologies and Intel. We acknowledge and thank Albert Ma for designing the VCO and providing extensive help with CAD tools, Mark Hampton for implementing VTorture and the Scale compilation tools, Jaime Quinonez for the baseline datapath tiler implementation, Jared Casper for work on an initial cache design and documentation, and Jeffrey Cohen for initial work on VTorture.