The ROOT System: Status & Roadmap, NEC'2009 Varna

- Slides: 34

The ROOT System: Status & Roadmap
NEC'2009, Varna, 8 September 2009
René Brun/CERN, Global Overview of ROOT system

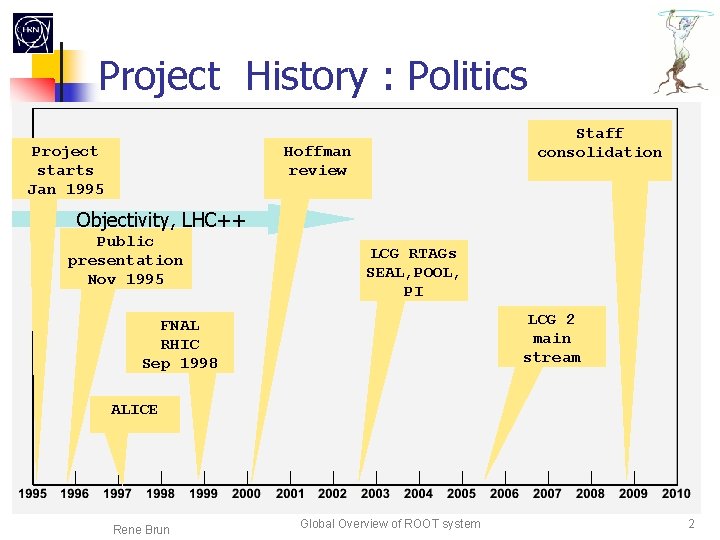

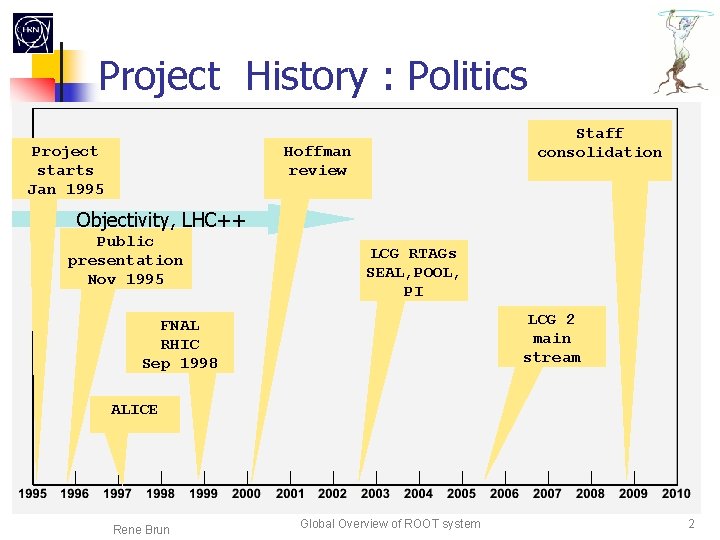

Project History: Politics
Timeline labels (figure): project starts Jan 1995; staff consolidation; Hoffmann review; Objectivity, LHC++; public presentation Nov 1995; LCG RTAGs; SEAL, POOL, PI; LCG 2 main stream; FNAL, RHIC Sep 1998; ALICE.

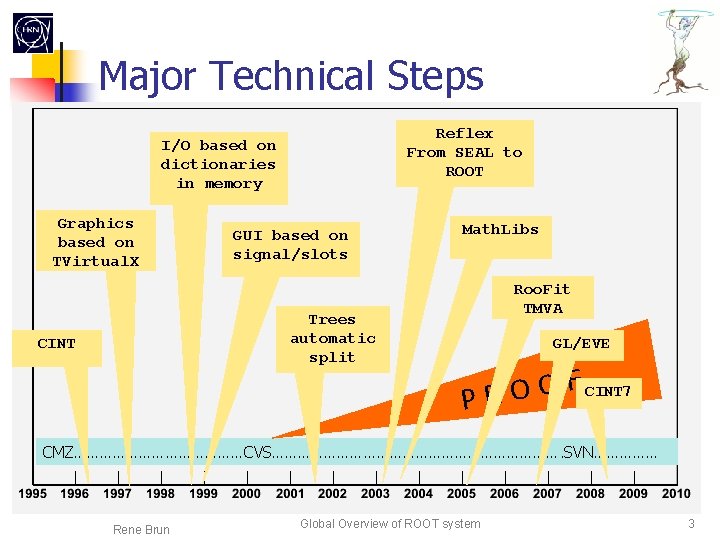

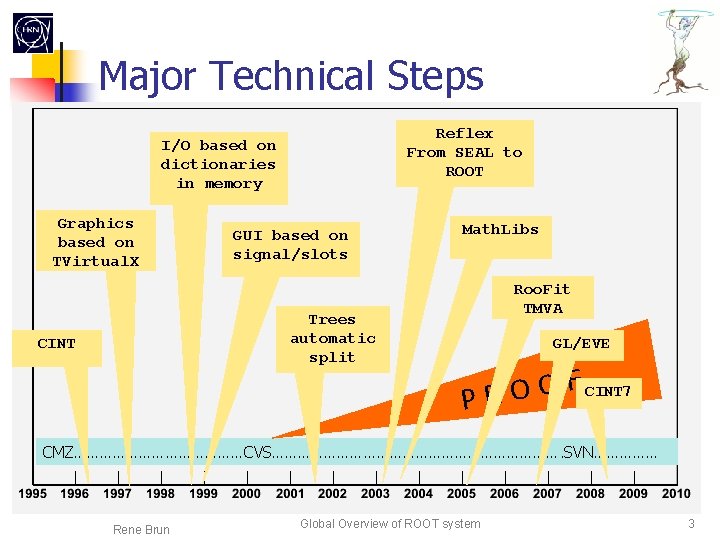

Major Technical Steps
Timeline labels (figure): Reflex; from SEAL to ROOT; I/O based on dictionaries in memory; graphics based on TVirtualX; GUI based on signals/slots; Trees automatic split; CINT; MathLibs; RooFit; TMVA; GL/EVE; CINT 7; PROOF. Version control: CMZ, then CVS, then SVN.

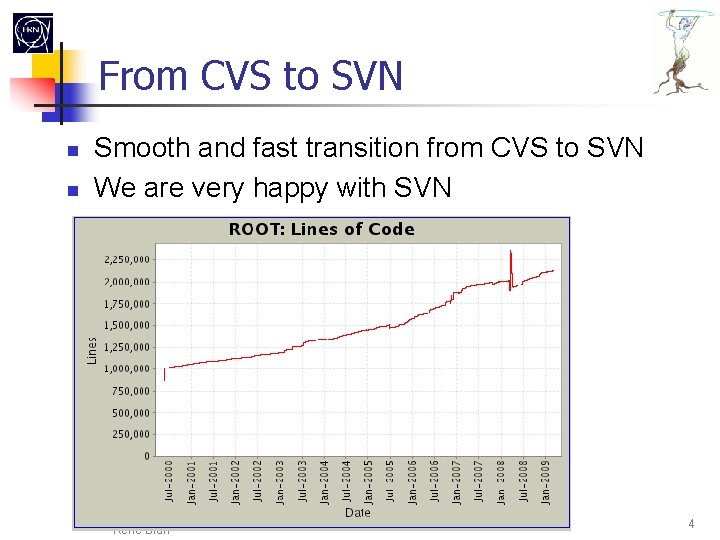

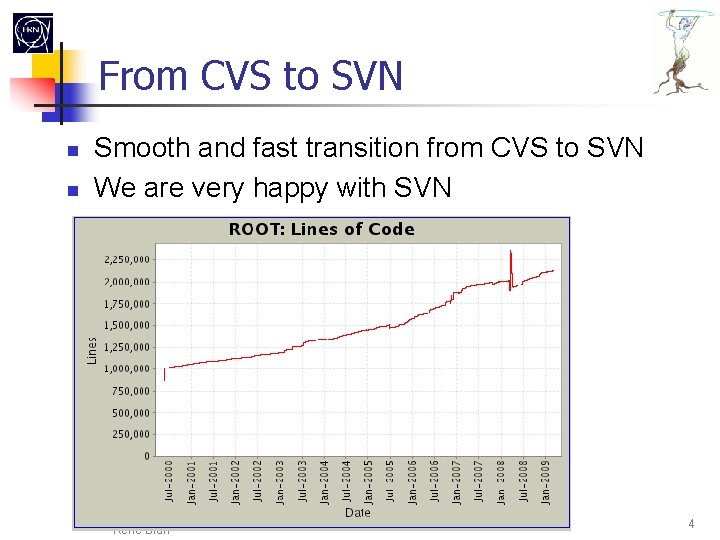

From CVS to SVN
The transition from CVS to SVN was smooth and fast, and we are very happy with SVN.

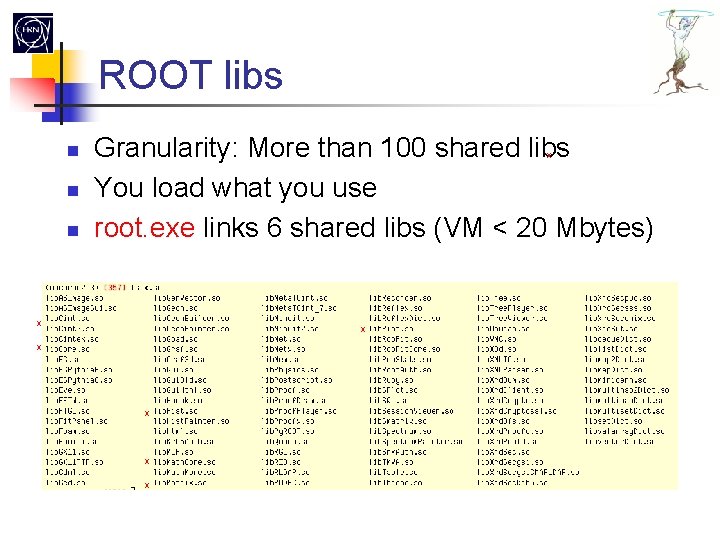

ROOT libs
Granularity: more than 100 shared libs, and you load only what you use. root.exe links 6 shared libs (VM < 20 MB).

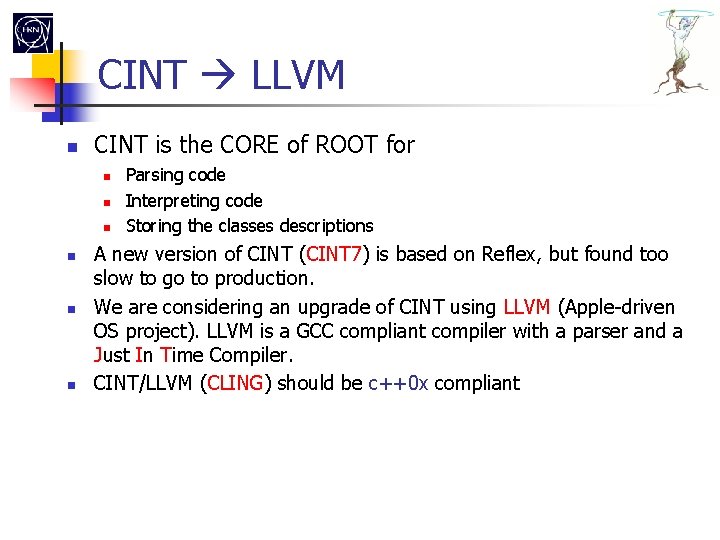

CINT and LLVM
CINT is the core of ROOT for:
- parsing code
- interpreting code
- storing the class descriptions

A new version of CINT (CINT 7) is based on Reflex, but was found too slow to go into production. We are considering an upgrade of CINT using LLVM (an Apple-driven open-source project). LLVM is a GCC-compliant compiler with a parser and a Just-In-Time compiler. CINT/LLVM (CLING) should be C++0x compliant.

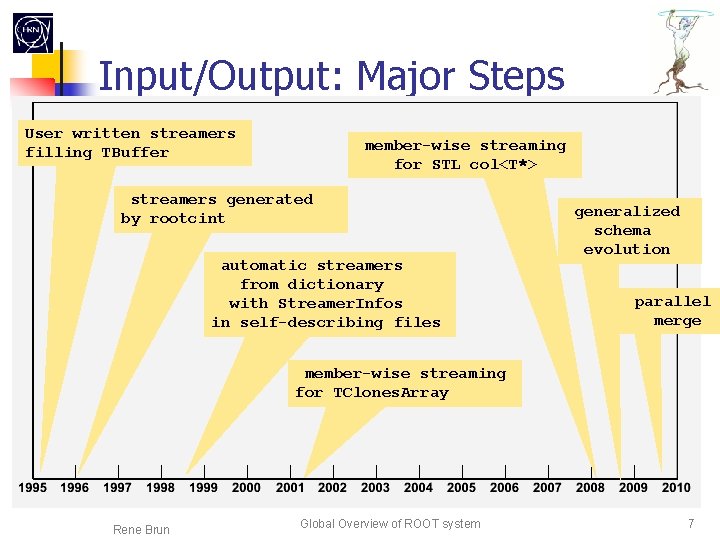

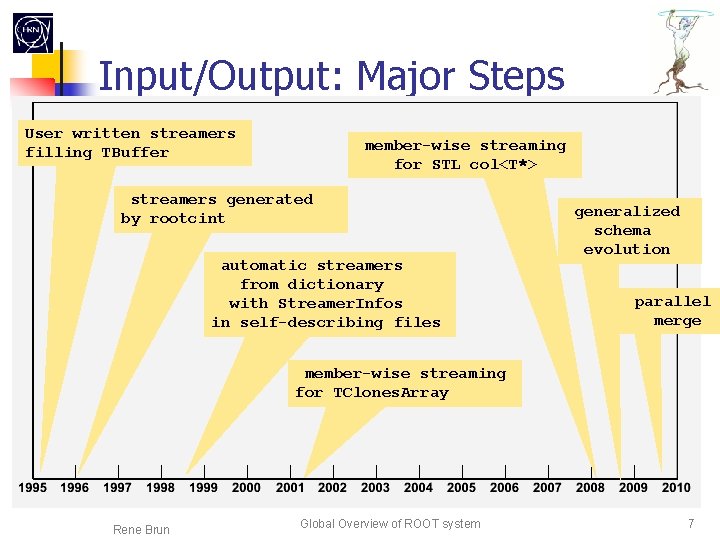

Input/Output: Major Steps
- user-written streamers filling TBuffer
- member-wise streaming for STL col<T*>
- streamers generated by rootcint
- automatic streamers from the dictionary, with StreamerInfos in self-describing files
- generalized schema evolution
- parallel merge
- member-wise streaming for TClonesArray

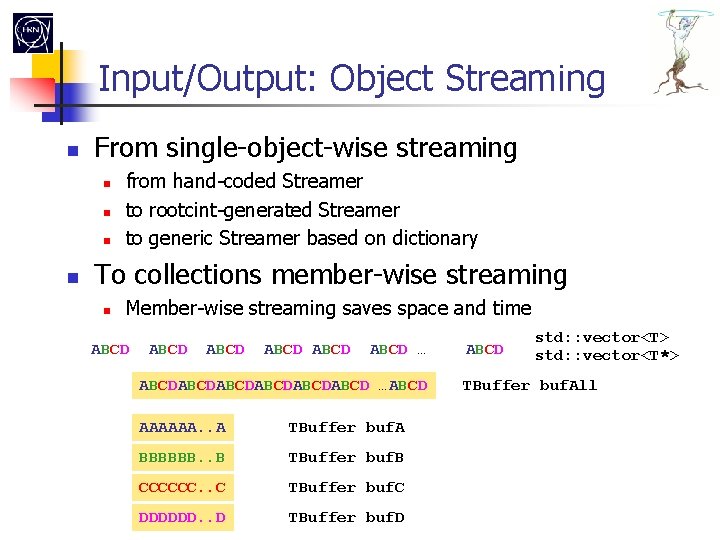

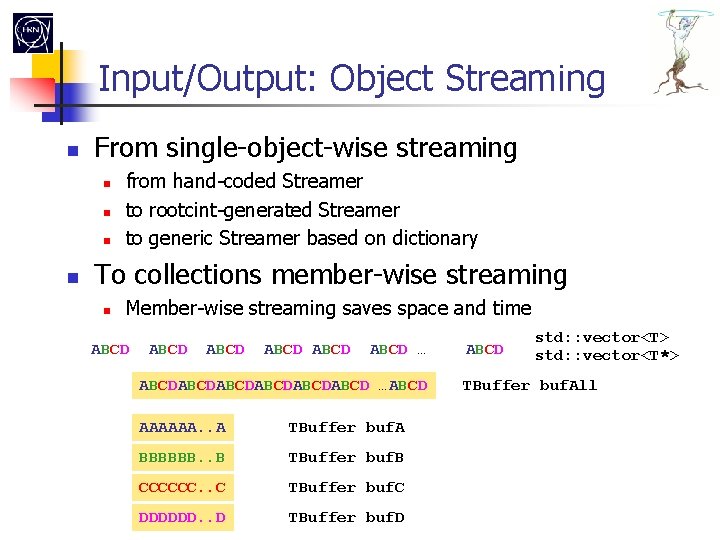

Input/Output: Object Streaming
From single-object-wise streaming (from a hand-coded Streamer, to a rootcint-generated Streamer, to a generic Streamer based on the dictionary) to member-wise streaming for collections. Member-wise streaming saves space and time.
Figure: for a collection (std::vector<T> or std::vector<T*>) of objects with members A, B, C, D, object-wise streaming writes ABCD ABCD … ABCD into a single TBuffer bufAll, while member-wise streaming writes AAA…A into TBuffer bufA, BBB…B into bufB, CCC…C into bufC and DDD…D into bufD.
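A minimal, ROOT-free sketch of the two layouts in the figure, assuming a hypothetical class `Hit` whose four members stand in for A, B, C, D, and a plain byte vector standing in for `TBuffer`. It is not ROOT's streamer code; it only shows where the bytes end up. The raw byte count is the same in both layouts; the savings presumably come from what the grouping enables (like values compressing better, and a reader touching only the members it needs).

```cpp
#include <cassert>
#include <cstdint>
#include <cstring>
#include <vector>

// Hypothetical user class with four data members A, B, C, D.
struct Hit {
    std::int32_t a;
    float b;
    std::int32_t c;
    float d;
};

using Buffer = std::vector<unsigned char>;  // toy stand-in for a TBuffer

// Append the raw bytes of one value to a buffer.
template <typename T>
void putValue(Buffer& buf, const T& value) {
    const std::size_t old = buf.size();
    buf.resize(old + sizeof(T));
    std::memcpy(buf.data() + old, &value, sizeof(T));
}

// Read back the index-th value from a buffer that holds only values of type T.
template <typename T>
T getValue(const Buffer& buf, std::size_t index) {
    T value;
    std::memcpy(&value, buf.data() + index * sizeof(T), sizeof(T));
    return value;
}

// Object-wise: A B C D, A B C D, ... all written into one buffer.
Buffer streamObjectWise(const std::vector<Hit>& hits) {
    Buffer all;
    for (const Hit& h : hits) {
        putValue(all, h.a);
        putValue(all, h.b);
        putValue(all, h.c);
        putValue(all, h.d);
    }
    return all;
}

// Member-wise: all A values into buffer 0, all B values into buffer 1, etc.
std::vector<Buffer> streamMemberWise(const std::vector<Hit>& hits) {
    std::vector<Buffer> perMember(4);
    for (const Hit& h : hits) {
        putValue(perMember[0], h.a);
        putValue(perMember[1], h.b);
        putValue(perMember[2], h.c);
        putValue(perMember[3], h.d);
    }
    return perMember;
}
```

With member-wise buffers, reading only member C for all objects means reading one contiguous buffer instead of skipping through every ABCD record.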

I/O and Trees
- from branches of basic types created by hand
- to branches automatically generated from very complex objects
- to branches automatically generated for complex polymorphic objects
- support for object weak-references across branches (TRef) with load on demand
- Tree Friends
- TEntryList
- automatic branch buffer size optimisation (version 5.26)
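The idea behind a weak reference with load on demand can be sketched in plain C++. This is not the TRef implementation: `ObjectTable`, `ToyRef` and the loader callback are hypothetical, with the loader standing in for reading the branch that holds the referenced object. The reference stores only an identifier and never owns the object; the first dereference triggers the load, and later ones reuse the object already in memory.

```cpp
#include <cassert>
#include <functional>
#include <map>
#include <memory>
#include <utility>

// Hypothetical object type referenced across branches.
struct Track {
    int id;
    double pt;
};

// Objects already in memory, plus a loader that "reads" a missing one.
class ObjectTable {
public:
    explicit ObjectTable(std::function<Track(int)> loader) : loader_(std::move(loader)) {}

    // Returns the object with this unique ID, loading it on first use only.
    Track* find(int uid) {
        auto it = objects_.find(uid);
        if (it == objects_.end()) {
            ++loads;  // count how often we had to go back to the "file"
            it = objects_.emplace(uid, std::make_unique<Track>(loader_(uid))).first;
        }
        return it->second.get();
    }

    int loads = 0;

private:
    std::function<Track(int)> loader_;
    std::map<int, std::unique_ptr<Track>> objects_;
};

// Weak reference: holds only the unique ID, never owns the object.
struct ToyRef {
    int uid;
    Track* get(ObjectTable& table) const { return table.find(uid); }
};
```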

2-D Graphics
New functions are added at each new release, and there are always new requests for new styles and coordinate systems. Output formats: ps, pdf, svg, gif, jpg, png, C, root, etc. Move to GL?

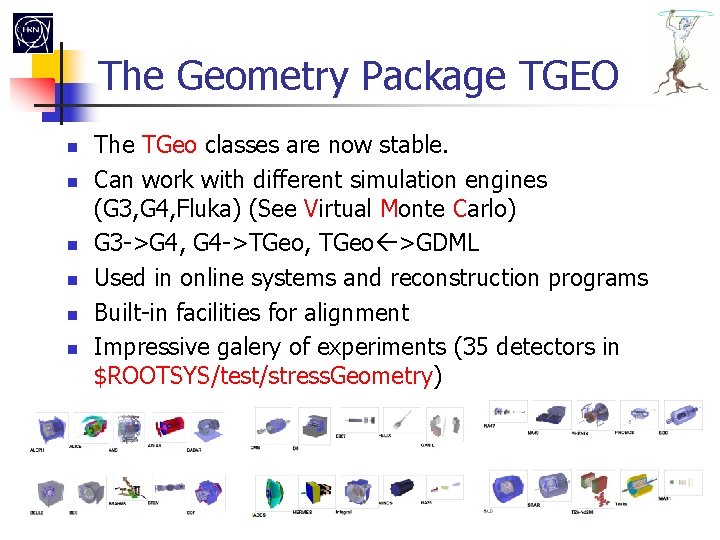

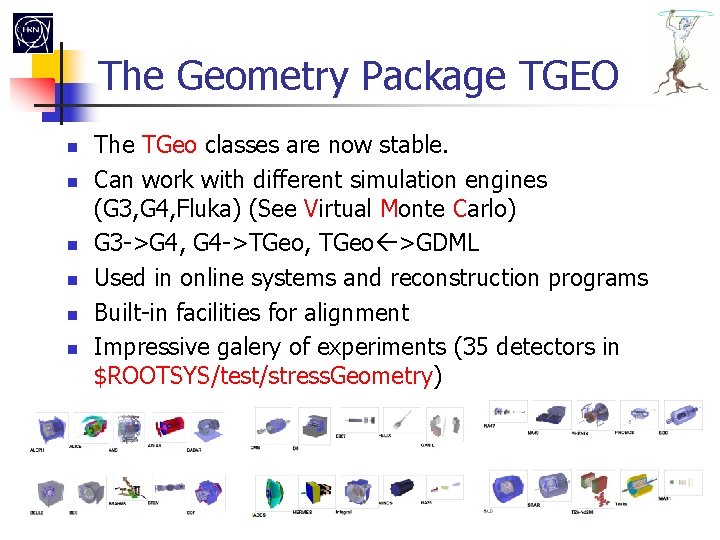

The Geometry Package TGeo
The TGeo classes are now stable. They can work with different simulation engines (G3, G4, Fluka; see Virtual Monte Carlo). Conversions: G3 -> G4, G4 -> TGeo, TGeo -> GDML. TGeo is used in online systems and reconstruction programs, and has built-in facilities for alignment. Impressive gallery of experiments (35 detectors in $ROOTSYS/test/stressGeometry).

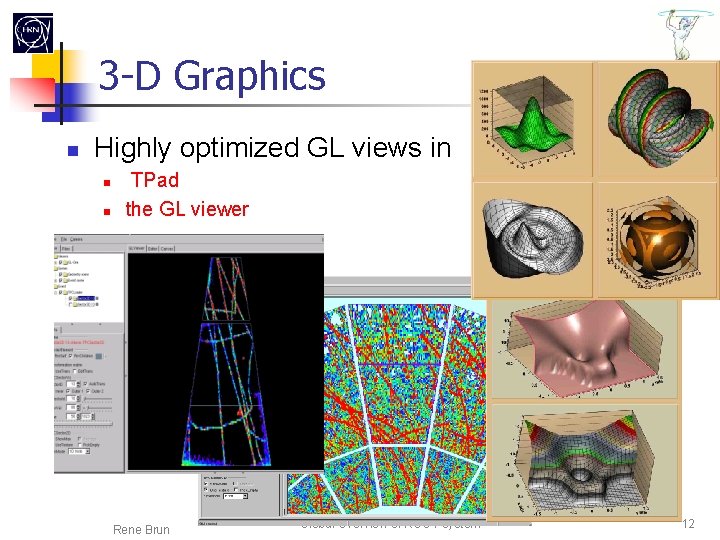

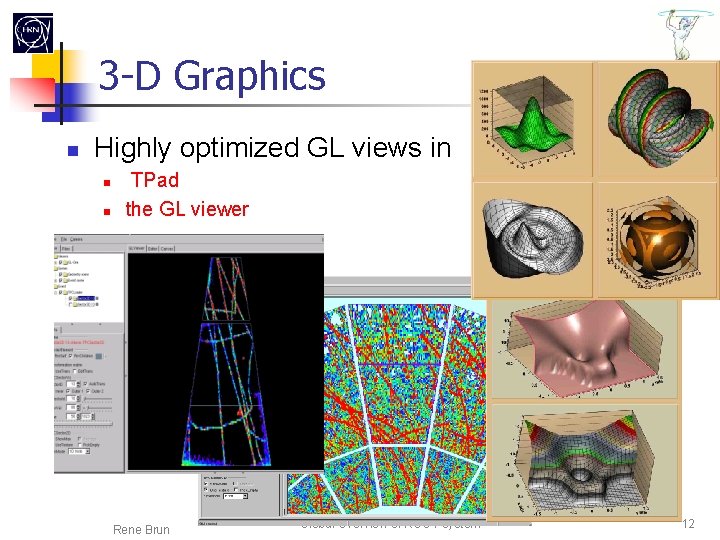

3-D Graphics
Highly optimized GL views in TPad; the GL viewer.

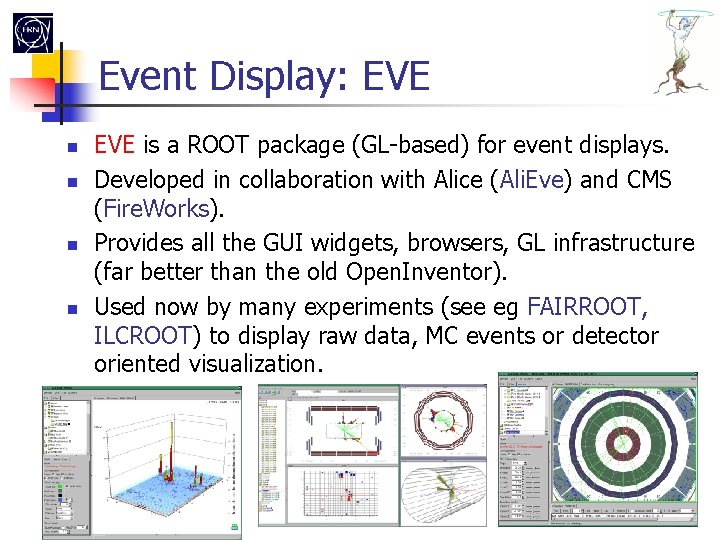

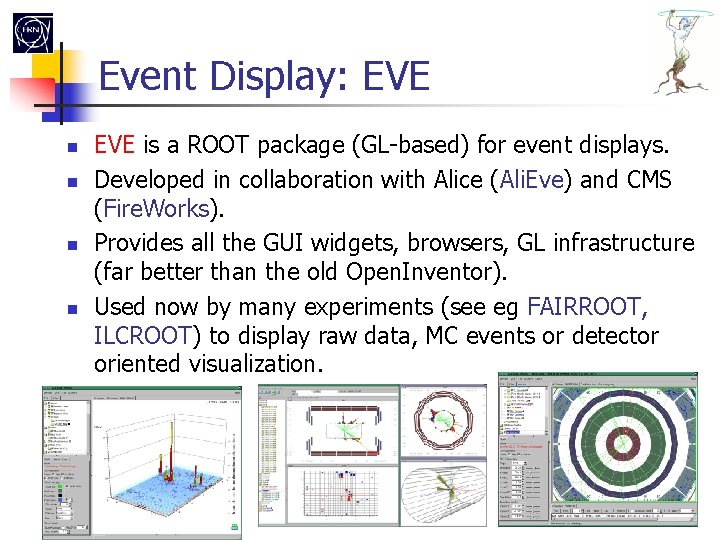

Event Display: EVE
EVE is a ROOT package (GL-based) for event displays, developed in collaboration with ALICE (AliEve) and CMS (Fireworks). It provides all the GUI widgets, browsers and GL infrastructure (far better than the old OpenInventor). It is now used by many experiments (see e.g. FAIRROOT, ILCROOT) to display raw data, MC events or detector-oriented visualization.

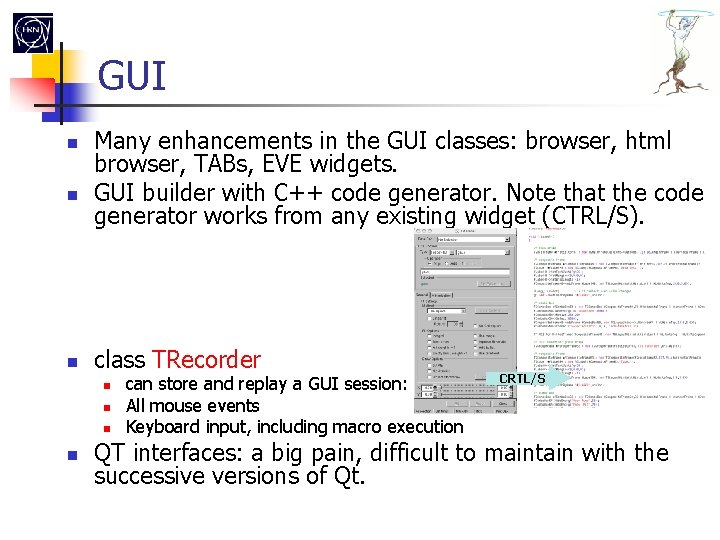

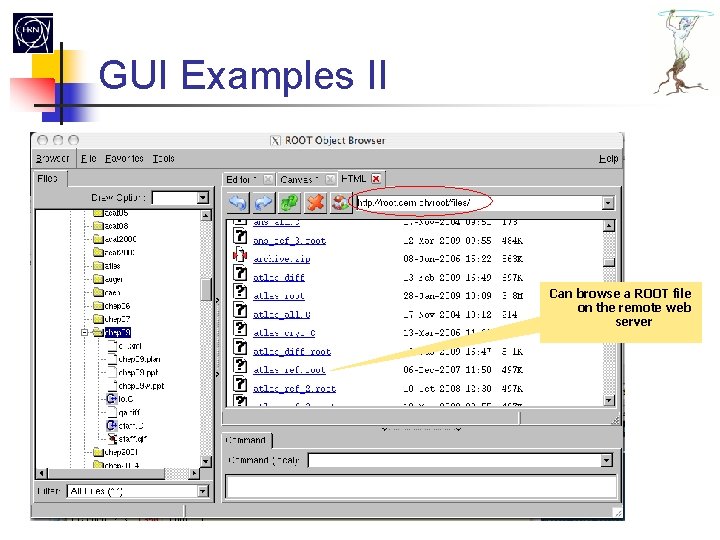

GUI
- Many enhancements in the GUI classes: browser, HTML browser, tabs, EVE widgets.
- GUI builder with a C++ code generator. Note that the code generator works from any existing widget (CTRL/S).
- Class TRecorder can store and replay a GUI session: all mouse events, and keyboard input, including macro execution.
- Qt interfaces: a big pain, difficult to maintain with the successive versions of Qt.
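A record-and-replay session can be modelled as a time-stamped event log. The sketch below is not TRecorder's interface; `ToyRecorder`, `GuiEvent` and the event labels are hypothetical. Replay hands the original delay between events to a `wait` callback instead of sleeping, so the timing policy (real time or fast-forward) stays with the caller.

```cpp
#include <cassert>
#include <functional>
#include <string>
#include <utility>
#include <vector>

// One recorded input event (hypothetical format).
struct GuiEvent {
    long timeMs;         // time since the recording started
    std::string kind;    // e.g. "key" or "mouse-click" (illustrative labels)
    std::string detail;  // e.g. the typed text or the widget name
};

class ToyRecorder {
public:
    void record(long timeMs, std::string kind, std::string detail) {
        log_.push_back({timeMs, std::move(kind), std::move(detail)});
    }

    // Replays events in recorded order; `wait` receives the delay to honour
    // before each event so that the original timing can be reproduced.
    void replay(const std::function<void(long)>& wait,
                const std::function<void(const GuiEvent&)>& dispatch) const {
        long previous = 0;
        for (const GuiEvent& e : log_) {
            wait(e.timeMs - previous);
            dispatch(e);
            previous = e.timeMs;
        }
    }

private:
    std::vector<GuiEvent> log_;
};
```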

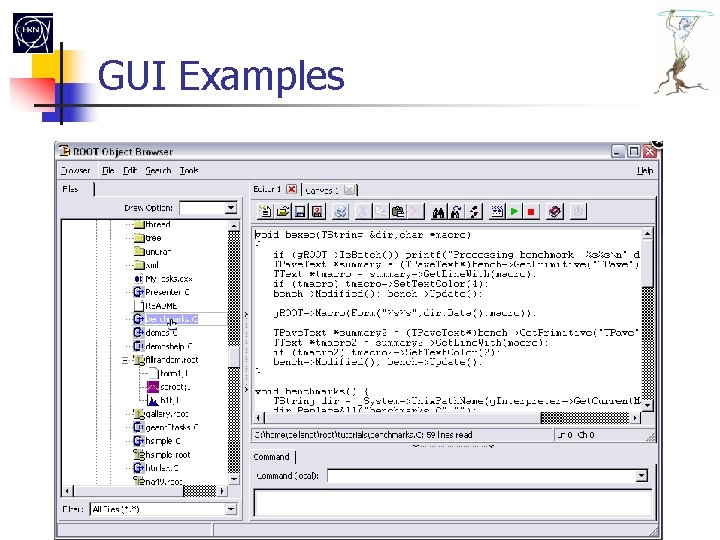

GUI Examples

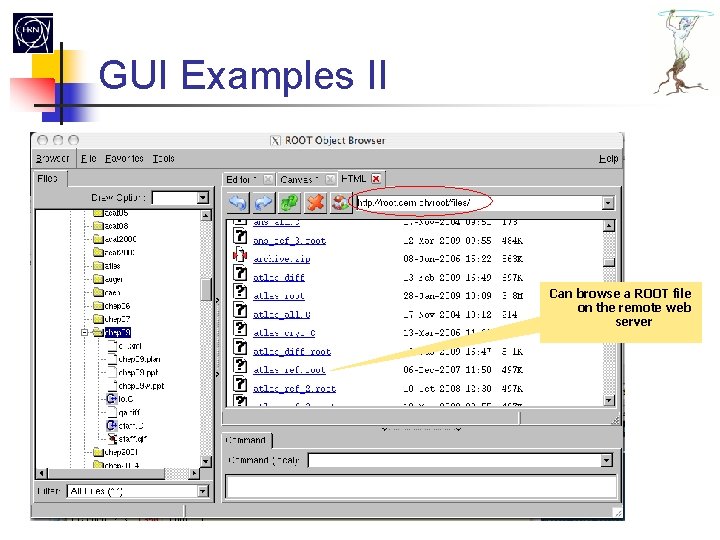

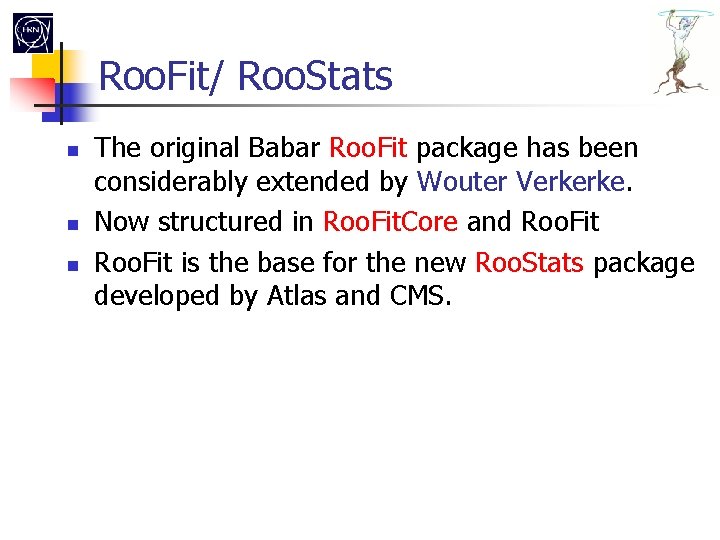

GUI Examples II
The browser can browse a ROOT file on a remote web server.

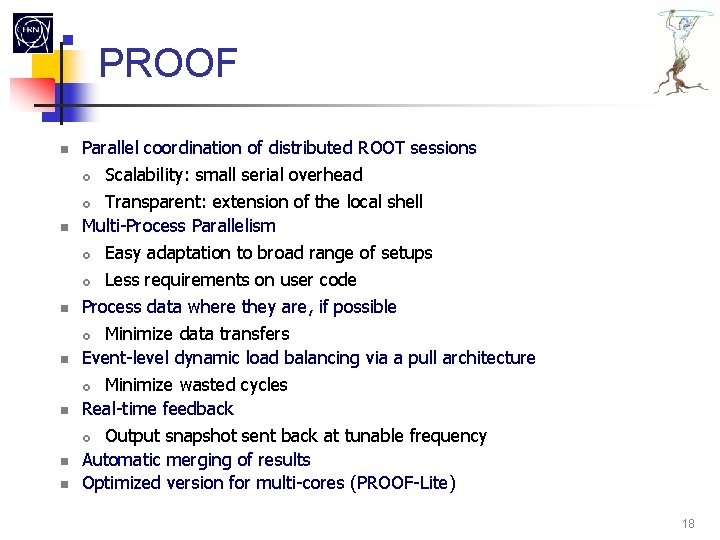

RooFit/RooStats
The original BaBar RooFit package has been considerably extended by Wouter Verkerke. It is now structured in RooFitCore and RooFit, and RooFit is the base for the new RooStats package developed by ATLAS and CMS.

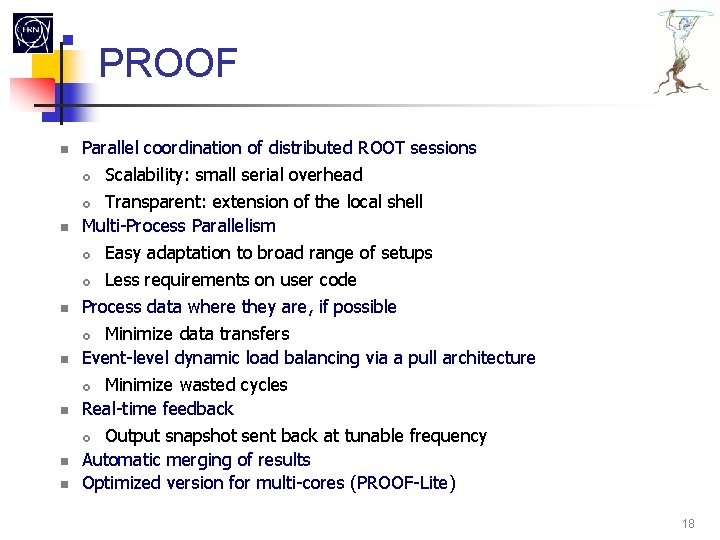

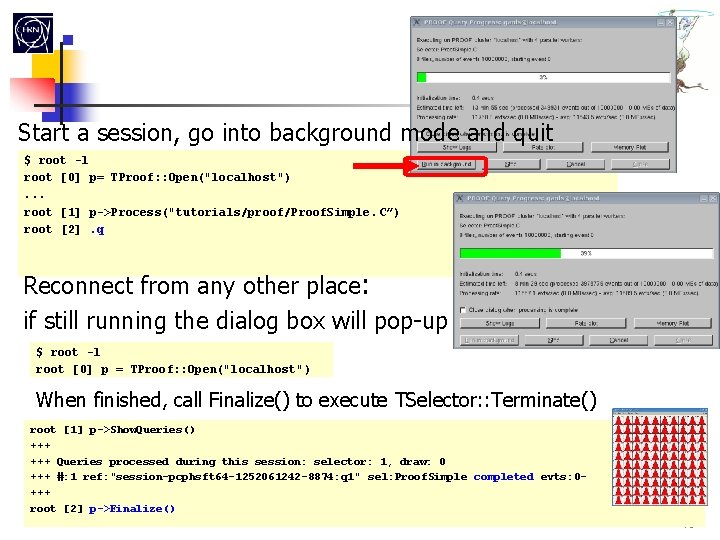

PROOF – Parallel ROOT Facility
PROOF: parallel coordination of distributed ROOT sessions
- Scalability: small serial overhead
- Transparent: extension of the local shell
- Multi-process parallelism: easy adaptation to a broad range of setups; fewer requirements on user code
- Process data where they are, if possible: minimize data transfers
- Event-level dynamic load balancing via a pull architecture: minimize wasted cycles
- Real-time feedback: output snapshot sent back at tunable frequency
- Automatic merging of results
- Optimized version for multi-cores (PROOF-Lite)
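Why a pull architecture minimizes wasted cycles can be shown with a small deterministic simulation. This is a sketch under simplifying assumptions, not PROOF's packetizer: each hypothetical worker has a fixed cost per event, and whichever worker becomes idle first pulls the next fixed-size packet. With static, equal shares, the slowest worker sets the end time.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <functional>
#include <queue>
#include <utility>
#include <vector>

// Static split: each worker gets an equal share of the events up front,
// so the job ends when the slowest worker finishes its share.
double staticMakespan(std::size_t nEvents, const std::vector<double>& costPerEvent) {
    assert(!costPerEvent.empty());
    const std::size_t n = costPerEvent.size();
    double end = 0.0;
    for (std::size_t w = 0; w < n; ++w) {
        const std::size_t share = nEvents / n + (w < nEvents % n ? 1 : 0);
        end = std::max(end, share * costPerEvent[w]);
    }
    return end;
}

// Pull model: the worker that becomes idle first asks the master for the
// next packet of `packetSize` events, until all events are handed out.
double pullMakespan(std::size_t nEvents, std::size_t packetSize,
                    const std::vector<double>& costPerEvent) {
    assert(!costPerEvent.empty() && packetSize > 0);
    using Slot = std::pair<double, std::size_t>;  // (time the worker is free, worker id)
    std::priority_queue<Slot, std::vector<Slot>, std::greater<Slot>> idle;
    for (std::size_t w = 0; w < costPerEvent.size(); ++w) idle.push({0.0, w});

    double end = 0.0;
    for (std::size_t next = 0; next < nEvents; next += packetSize) {
        const Slot slot = idle.top();  // earliest-idle worker pulls the next packet
        idle.pop();
        const std::size_t packet = std::min(packetSize, nEvents - next);
        const double done = slot.first + packet * costPerEvent[slot.second];
        end = std::max(end, done);
        idle.push({done, slot.second});
    }
    return end;
}
```

With per-event costs {1, 1, 1, 4} and 1000 events, the static split ends at 1000 time units because the slow worker still owns 250 events, while the pull scheme finishes far earlier, not much above the ideal 1000 / 3.25 ≈ 308 units.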

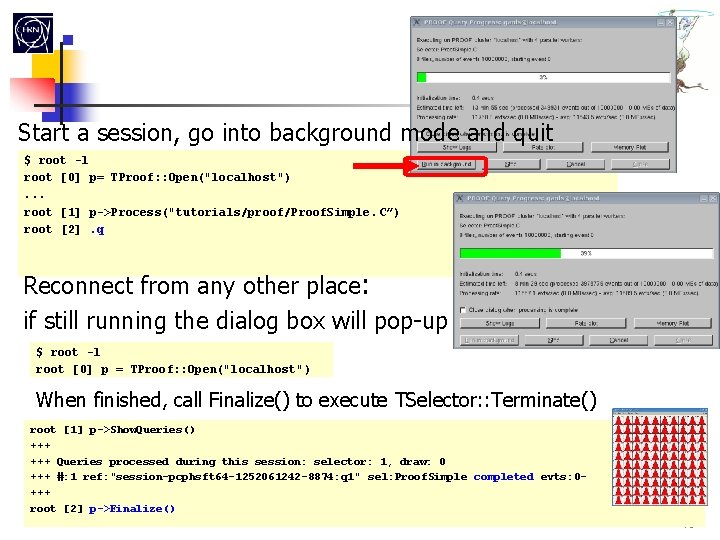

Interactive-batch
Start a session, go into background mode and quit:

```
$ root -l
root [0] p = TProof::Open("localhost")
...
root [1] p->Process("tutorials/proof/ProofSimple.C")
root [2] .q
```

Reconnect from any other place; if the query is still running, the dialog box will pop up:

```
$ root -l
root [0] p = TProof::Open("localhost")
```

When finished, call Finalize() to execute TSelector::Terminate():

```
root [1] p->ShowQueries()
+++ Queries processed during this session: selector: 1, draw: 0
+++ #:1 ref:"session-pcphsft64-1252061242-8874:q1" sel:ProofSimple completed evts:0
+++
root [2] p->Finalize()
```

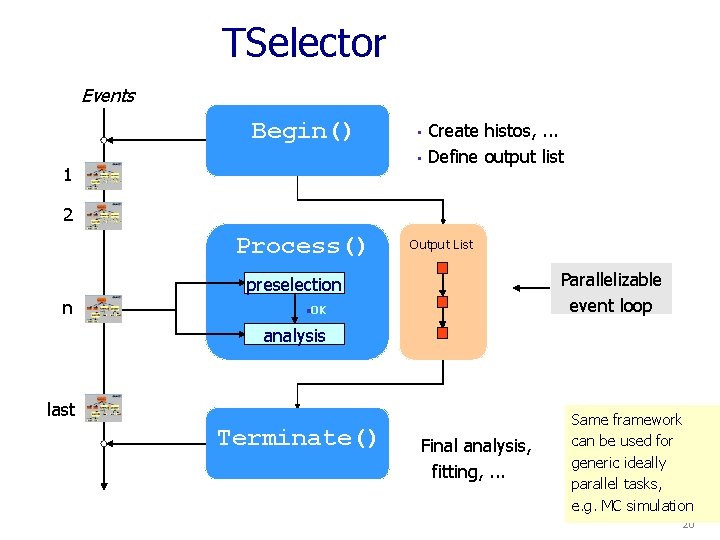

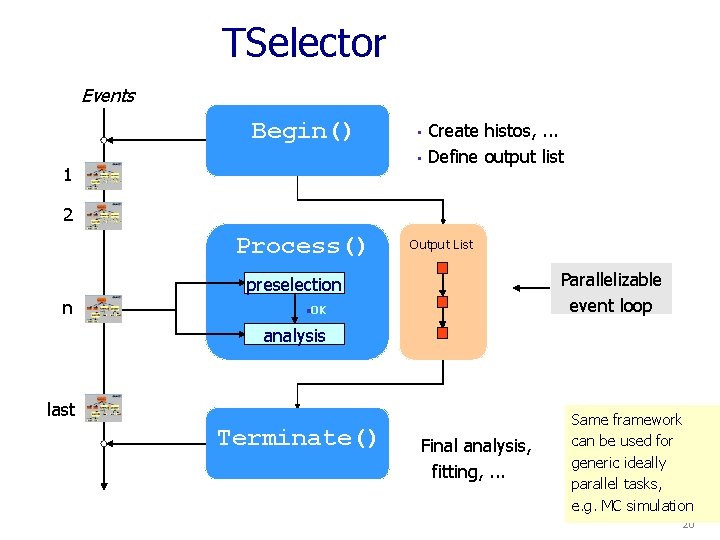

Event level TSelector framework
- Begin(): create histograms, ...; define the output list.
- Process(): called for each event (1, 2, …, n, last); a parallelizable event loop with preselection and, if the event is OK, analysis; results go to the output list.
- Terminate(): final analysis, fitting, ...

The same framework can be used for generic, ideally parallel tasks, e.g. MC simulation.
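The Begin/Process/Terminate split is what makes the event loop parallelizable: Process() only sees one event and the selector's own outputs, so several copies can run on disjoint events and their outputs can be merged before Terminate(). A ROOT-free sketch, assuming a hypothetical `ToySelector` whose only output is a 10-bin histogram stored in a vector and whose event data is derived from the entry number. The real TSelector interface has further methods, such as SlaveBegin(), which this sketch omits.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical, ROOT-free imitation of the Begin/Process/Terminate cycle.
struct ToySelector {
    std::vector<long> histo;  // stands in for a histogram in the output list
    double mean = 0.0;        // "final analysis" result, filled in Terminate()

    // Begin(): book the outputs.
    void Begin() { histo.assign(10, 0); }

    // Process(): called once per event; touches only this event and the outputs.
    // The event content is faked as a value derived from the entry number.
    bool Process(long long entry) {
        const long value = static_cast<long>(entry % 37);
        if (value < 5) return false;                       // preselection failed
        histo[static_cast<std::size_t>(value % 10)] += 1;  // "fill some histograms"
        return true;
    }

    // Stands in for the automatic merging of output lists before Terminate().
    void Merge(const ToySelector& other) {
        for (std::size_t i = 0; i < histo.size(); ++i) histo[i] += other.histo[i];
    }

    // Terminate(): final analysis on the merged outputs (here: mean bin index).
    void Terminate() {
        long n = 0;
        long sum = 0;
        for (std::size_t i = 0; i < histo.size(); ++i) {
            n += histo[i];
            sum += static_cast<long>(i) * histo[i];
        }
        mean = n > 0 ? static_cast<double>(sum) / static_cast<double>(n) : 0.0;
    }
};

// Splits the entries over `nWorkers` independent selectors (run sequentially
// here), merges their outputs and calls Terminate() once on the result.
ToySelector runParallel(long long nEntries, int nWorkers) {
    std::vector<ToySelector> workers(static_cast<std::size_t>(nWorkers));
    for (ToySelector& w : workers) w.Begin();
    for (long long e = 0; e < nEntries; ++e)
        workers[static_cast<std::size_t>(e % nWorkers)].Process(e);
    ToySelector result = workers[0];
    for (std::size_t i = 1; i < workers.size(); ++i) result.Merge(workers[i]);
    result.Terminate();
    return result;
}
```

Running with 1 or 4 workers gives identical merged histograms, which is the property the framework relies on.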

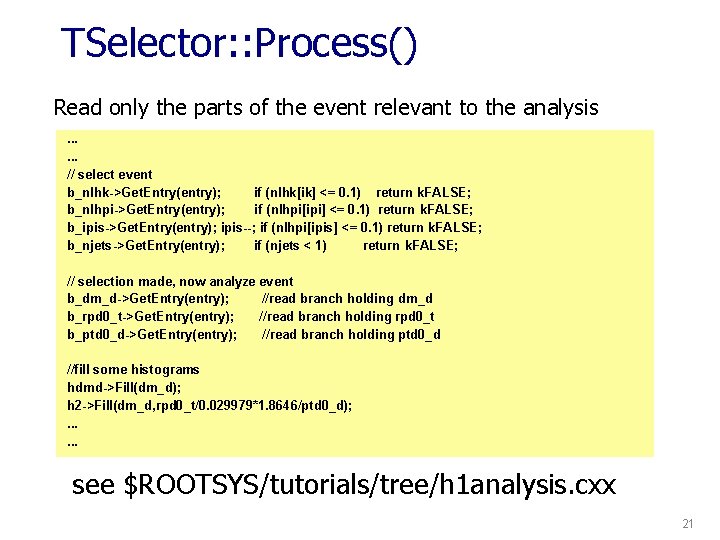

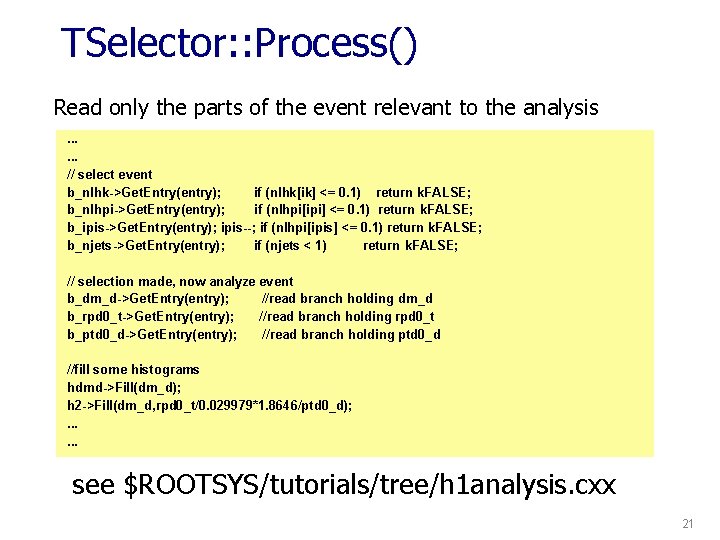

TSelector::Process()
Read only the parts of the event relevant to the analysis:

```cpp
...
// select event
b_nlhk->GetEntry(entry);   if (nlhk[ik] <= 0.1) return kFALSE;
b_nlhpi->GetEntry(entry);  if (nlhpi[ipi] <= 0.1) return kFALSE;
b_ipis->GetEntry(entry);   ipis--; if (nlhpi[ipis] <= 0.1) return kFALSE;
b_njets->GetEntry(entry);  if (njets < 1) return kFALSE;
// selection made, now analyze event
b_dm_d->GetEntry(entry);   //read branch holding dm_d
b_rpd0_t->GetEntry(entry); //read branch holding rpd0_t
b_ptd0_d->GetEntry(entry); //read branch holding ptd0_d
//fill some histograms
hdmd->Fill(dm_d);
h2->Fill(dm_d, rpd0_t/0.029979*1.8646/ptd0_d);
...
```

See $ROOTSYS/tutorials/tree/h1analysis.cxx.
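The benefit of reading the selection branches first can be made concrete with a toy column store. `ToyBranch` and its read counter are hypothetical stand-ins for a TTree branch, not ROOT classes; the point is only that, as in the Process() fragment above, the analysis branches are read for accepted events only.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical stand-in for a TTree branch: one value per entry, plus a
// counter of how many entries were actually read from it.
struct ToyBranch {
    std::vector<double> data;
    std::size_t reads = 0;
    double GetEntry(std::size_t entry) {
        ++reads;
        return data[entry];
    }
};

// Reads the selection branch for every entry, but the two analysis
// branches only for entries that pass the cut.
double analyse(ToyBranch& cut, ToyBranch& x, ToyBranch& y, double threshold) {
    double sum = 0.0;
    for (std::size_t entry = 0; entry < cut.data.size(); ++entry) {
        if (cut.GetEntry(entry) <= threshold) continue;  // reject before touching x, y
        sum += x.GetEntry(entry) * y.GetEntry(entry);
    }
    return sum;
}
```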

TSelector performance, from “Profiling Post-Grid analysis”, A. Shibata, Erice, ACAT 2008.

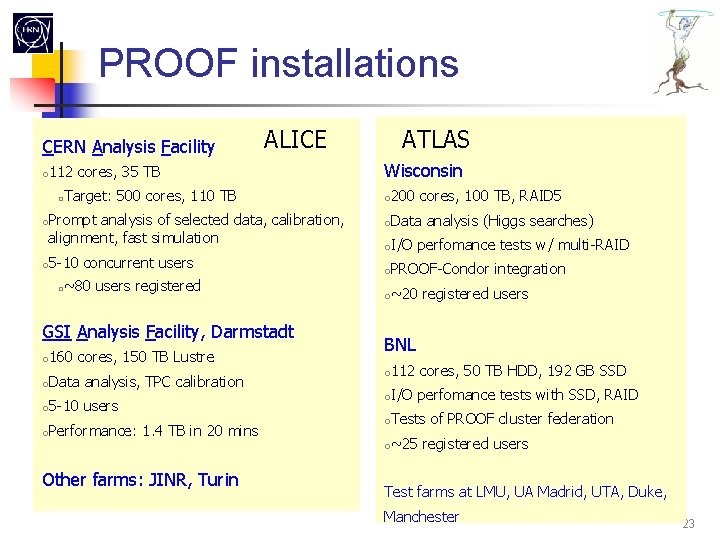

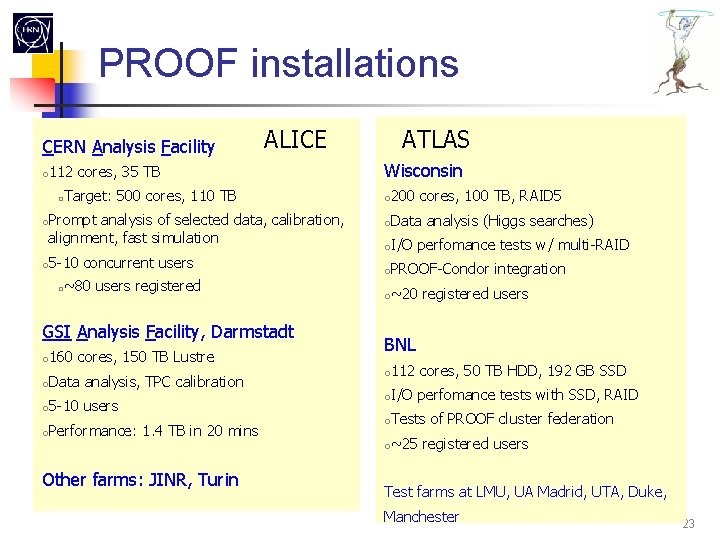

PROOF installations
- ALICE, CERN Analysis Facility: 112 cores, 35 TB (target: 500 cores, 110 TB); prompt analysis of selected data, calibration, alignment, fast simulation; 5-10 concurrent users; ~80 registered users.
- ALICE, GSI Analysis Facility, Darmstadt: 160 cores, 150 TB Lustre; data analysis, TPC calibration.
- ATLAS, Wisconsin: 200 cores, 100 TB, RAID5; data analysis (Higgs searches); I/O performance tests with multi-RAID; PROOF-Condor integration; ~20 registered users.
- ATLAS, BNL: 112 cores, 50 TB HDD, 192 GB SSD; 5-10 users; I/O performance tests with SSD, RAID (performance: 1.4 TB in 20 min); tests of PROOF cluster federation; ~25 registered users.
- Other farms: JINR, Turin.
- Test farms at LMU, UA Madrid, UTA, Duke, Manchester.

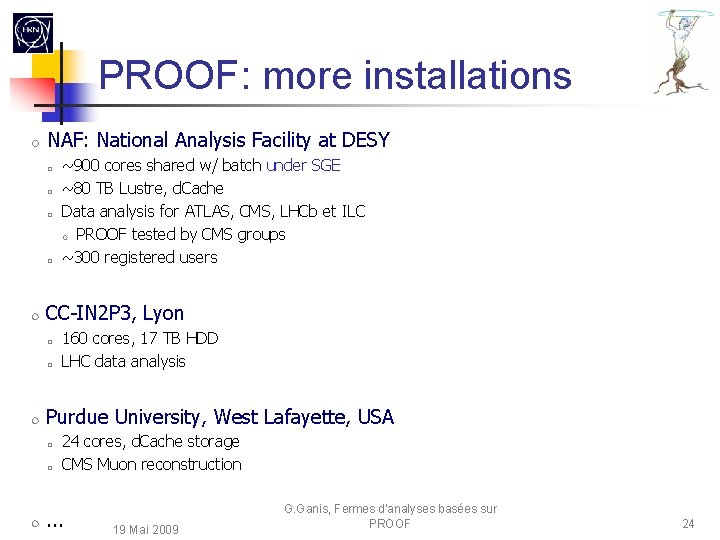

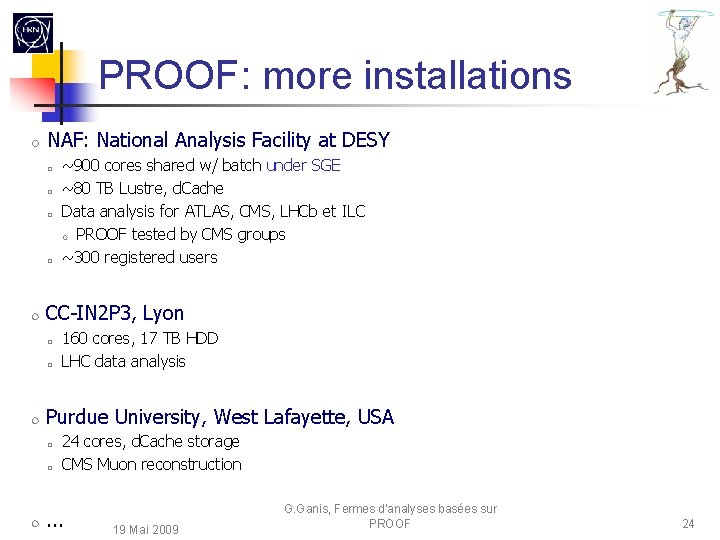

PROOF: more installations
- NAF, National Analysis Facility at DESY: ~900 cores shared with batch under SGE; ~80 TB Lustre, dCache; data analysis for ATLAS, CMS, LHCb and ILC; PROOF tested by CMS groups; ~300 registered users.
- CC-IN2P3, Lyon: 160 cores, 17 TB HDD; LHC data analysis.
- Purdue University, West Lafayette, USA: 24 cores, dCache storage; CMS muon reconstruction.
- ...

(From G. Ganis, “PROOF-based analysis farms”, 19 May 2009.)

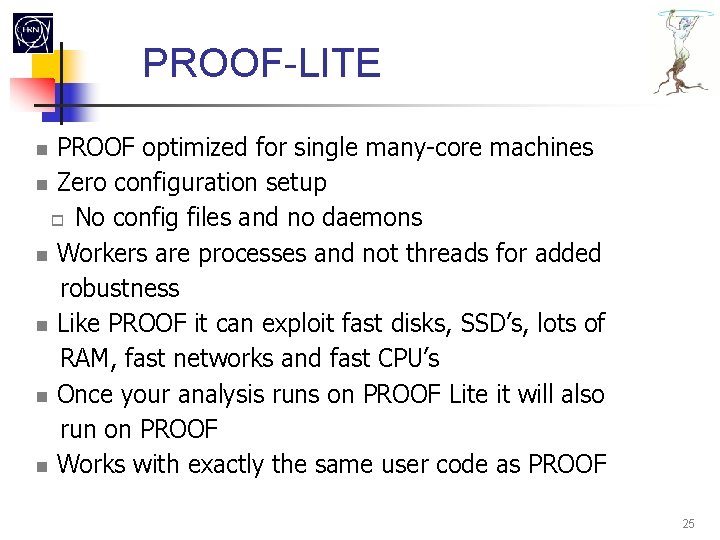

PROOF-Lite
- PROOF optimized for single many-core machines
- Zero-configuration setup: no config files and no daemons
- Workers are processes, not threads, for added robustness
- Like PROOF, it can exploit fast disks, SSDs, lots of RAM, fast networks and fast CPUs
- Once your analysis runs on PROOF-Lite, it will also run on PROOF
- Works with exactly the same user code as PROOF

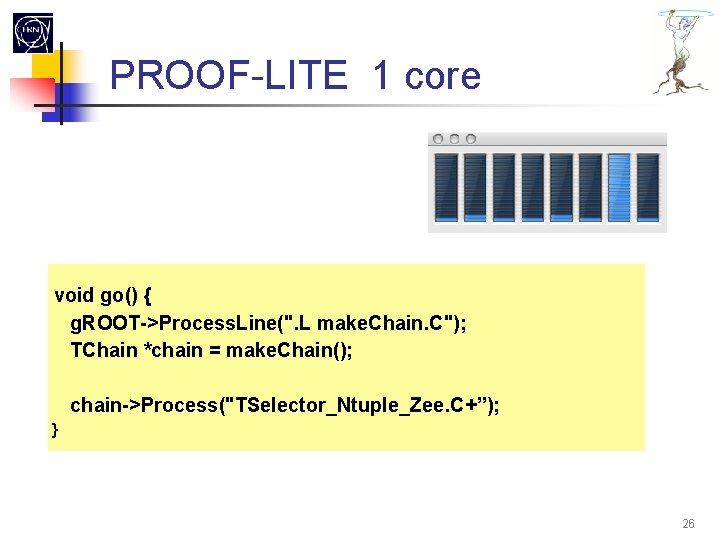

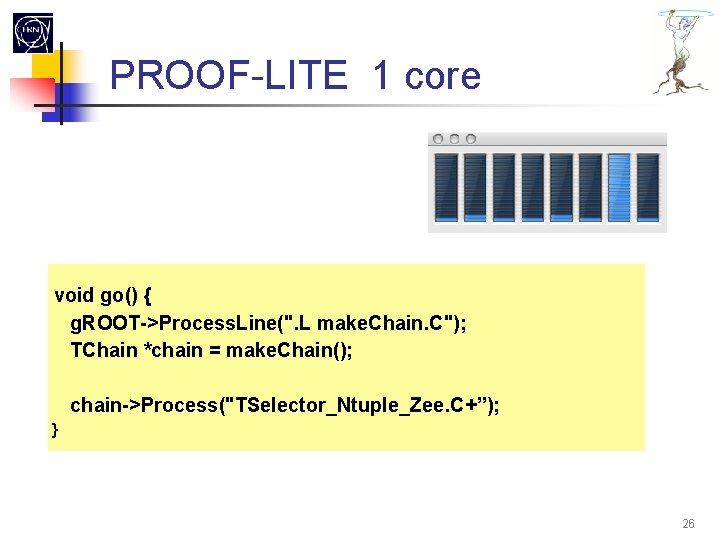

PROOF-Lite: 1 core

```cpp
void go() {
   gROOT->ProcessLine(".L makeChain.C");
   TChain *chain = makeChain();
   chain->Process("TSelector_Ntuple_Zee.C+");
}
```

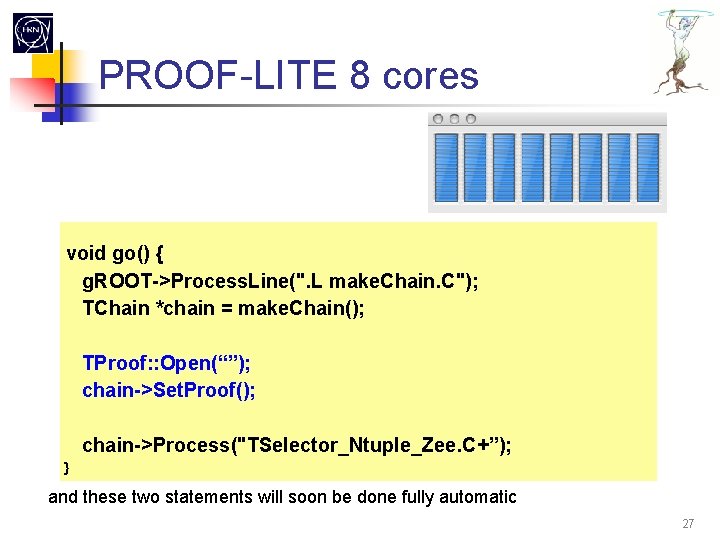

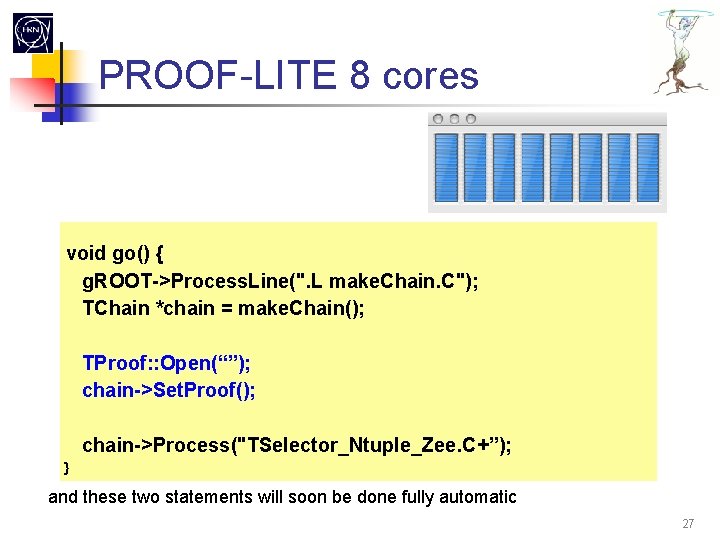

PROOF-Lite: 8 cores

```cpp
void go() {
   gROOT->ProcessLine(".L makeChain.C");
   TChain *chain = makeChain();
   TProof::Open("");
   chain->SetProof();
   chain->Process("TSelector_Ntuple_Zee.C+");
}
```

The two added statements, TProof::Open("") and chain->SetProof(), will soon be done fully automatically.

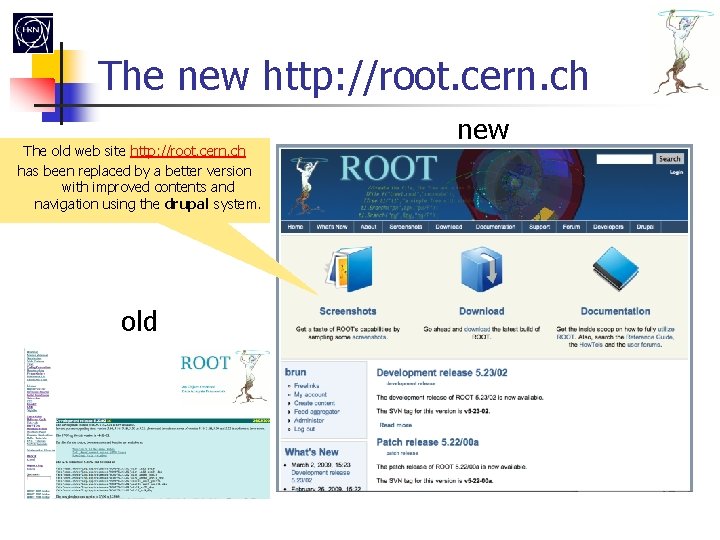

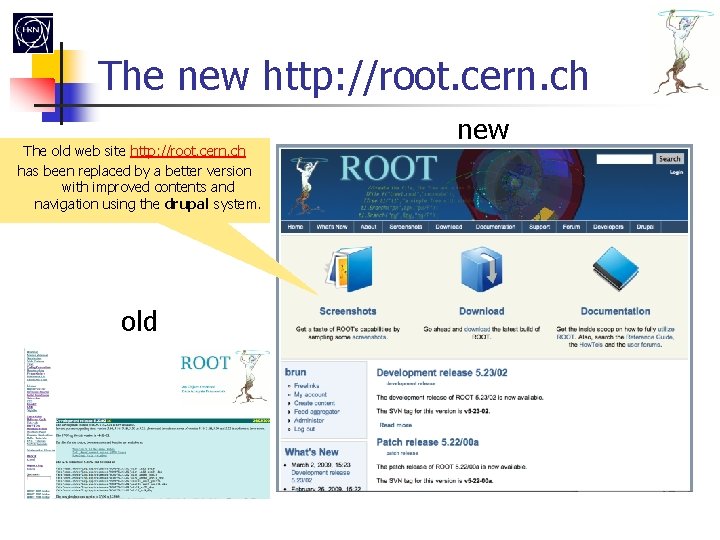

The new http://root.cern.ch
The old web site http://root.cern.ch has been replaced by a better version with improved contents and navigation, using the Drupal system (screenshots: old and new).

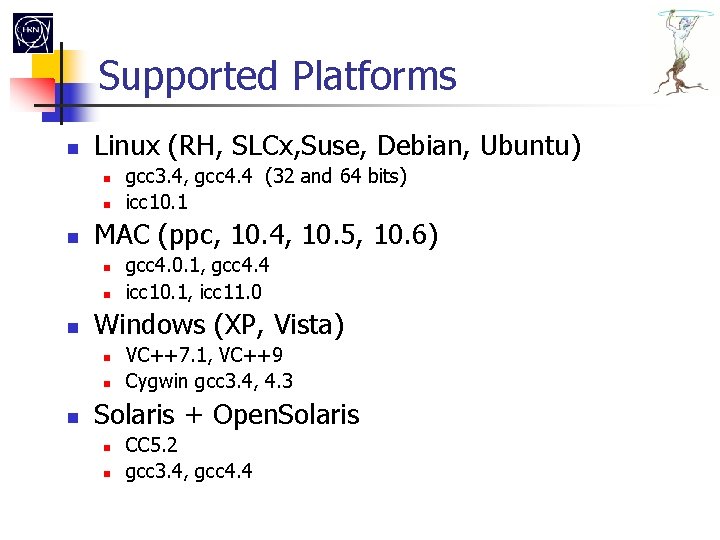

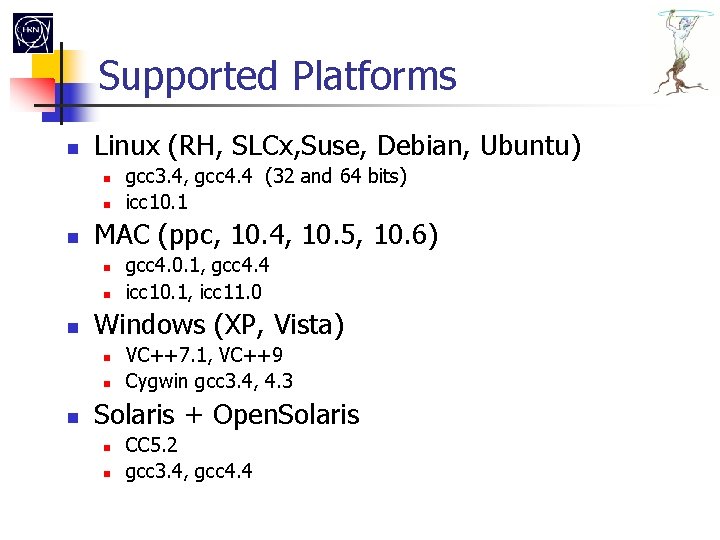

Supported Platforms
- Operating systems: Linux (RH, SLCx, SuSE, Debian, Ubuntu); Mac (ppc, 10.4, 10.5, 10.6); Windows (XP, Vista); Cygwin; Solaris + OpenSolaris.
- Compilers: gcc 3.4, 4.0.1, 4.3, 4.4 (32 and 64 bits); icc 10.1, 11.0; VC++ 7.1 and VC++ 9 (Windows); CC 5.2 (Solaris).

Robustness & QA
An impressive test suite, roottest, runs in the nightly builds (several hundred tests). We are working on a GUI test suite based on the Event Recorder.

ROOT developers: more stability

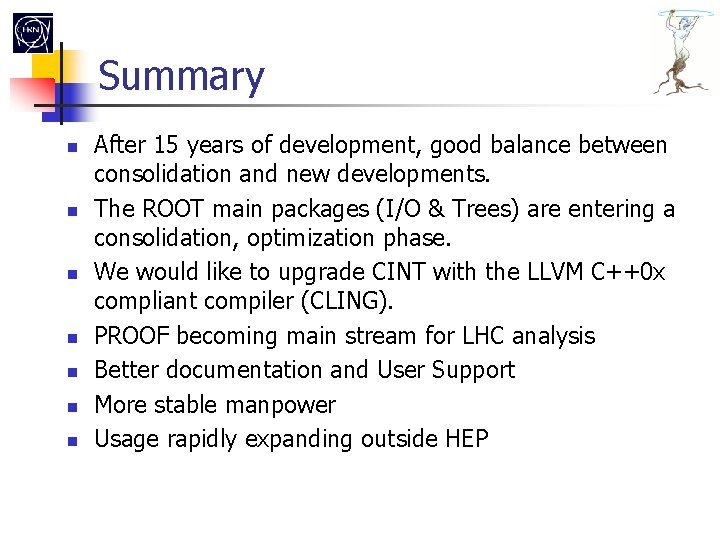

Summary
- After 15 years of development, a good balance between consolidation and new developments.
- The ROOT main packages (I/O & Trees) are entering a consolidation and optimization phase.
- We would like to upgrade CINT with the LLVM C++0x-compliant compiler (CLING).
- PROOF is becoming mainstream for LHC analysis.
- Better documentation and user support.
- More stable manpower.
- Usage rapidly expanding outside HEP.

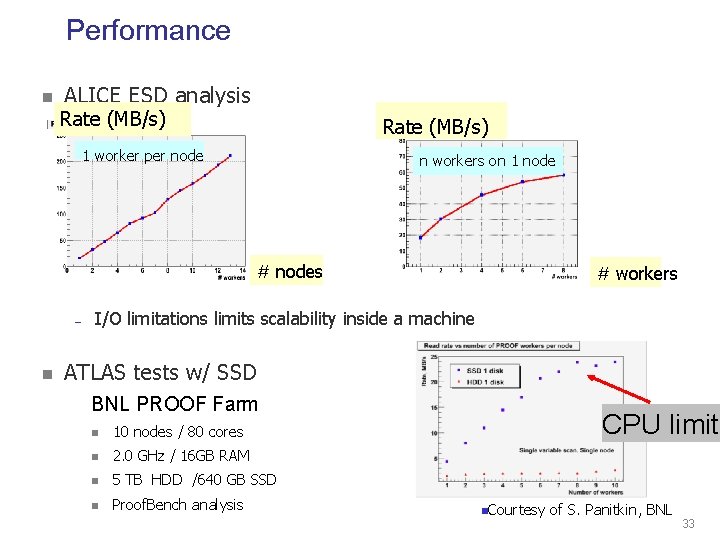

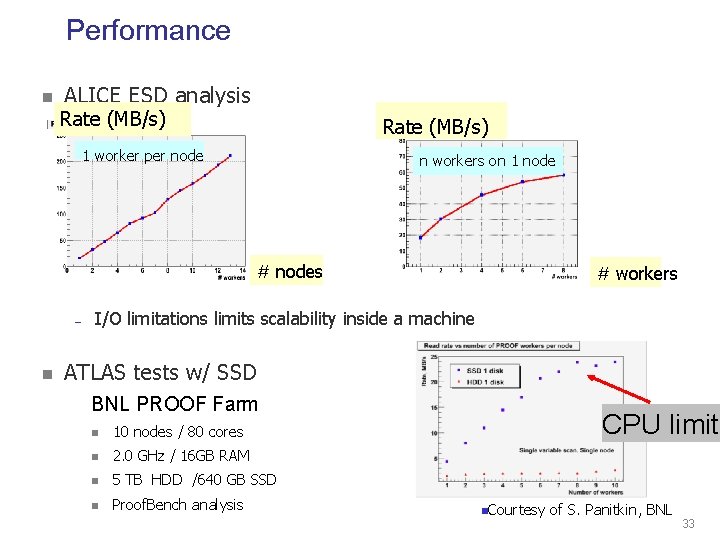

Performance
- ALICE ESD analysis (figure: rate in MB/s versus number of nodes and workers, for 1 worker per node and for n workers on 1 node): I/O limitations limit scalability inside a machine.
- ATLAS tests with SSD on the BNL PROOF farm (10 nodes / 80 cores, 2.0 GHz, 16 GB RAM, 5 TB HDD / 640 GB SSD), ProofBench analysis: CPU limited.

Courtesy of S. Panitkin, BNL.

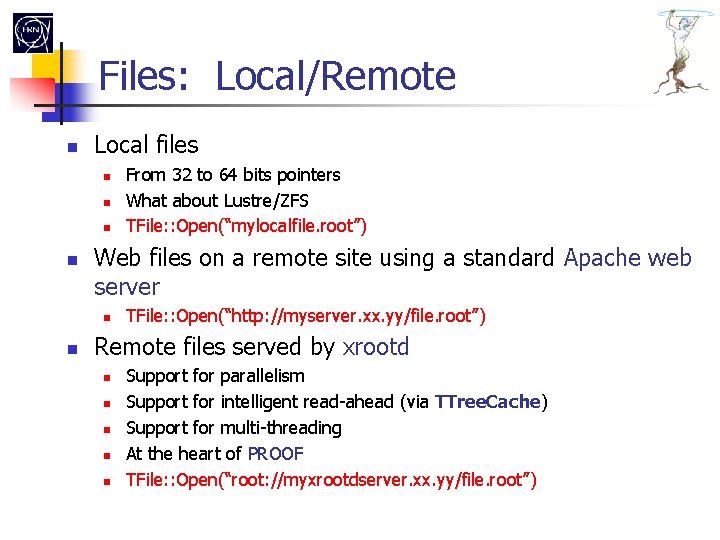

Files: Local/Remote
- Local files: `TFile::Open("mylocalfile.root")`
- Web files on a remote site using a standard Apache web server: `TFile::Open("http://myserver.xx.yy/file.root")`
- Remote files served by xrootd: `TFile::Open("root://myxrootdserver.xx.yy/file.root")`
  - support for parallelism
  - support for intelligent read-ahead (via TTreeCache)
  - support for multi-threading
  - at the heart of PROOF
- From 32- to 64-bit pointers
- What about Lustre/ZFS?
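`TFile::Open` chooses how to access a file from the form of its name. The sketch below only illustrates that kind of dispatch on the URL scheme; `FileAccess` and `accessFor` are hypothetical, and ROOT's actual plugin-based mechanism and its full list of supported schemes are not reproduced here.

```cpp
#include <cassert>
#include <string>

// Hypothetical access methods matching the three cases listed above.
enum class FileAccess { Local, Web, Xrootd, Unknown };

// Picks an access method from the URL scheme; a name without a
// "scheme://" prefix is treated as a local file.
FileAccess accessFor(const std::string& url) {
    const std::string::size_type pos = url.find("://");
    if (pos == std::string::npos) return FileAccess::Local;
    const std::string scheme = url.substr(0, pos);
    if (scheme == "file") return FileAccess::Local;
    if (scheme == "http") return FileAccess::Web;
    if (scheme == "root") return FileAccess::Xrootd;
    return FileAccess::Unknown;
}
```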