The Role of Uncertainty Quantification in Machine Learning

- Slides: 15

David J. Stracuzzi, April 17, 2020, djstrac@sandia.gov

Joint work with: Max Chen, Michael Darling, Todd Jones, Matt Peterson, and Charlie Vollmer

SAND2018-12825 PE

Sandia National Laboratories is a multimission laboratory managed and operated by National Technology & Engineering Solutions of Sandia, LLC, a wholly owned subsidiary of Honeywell International Inc., for the U.S. Department of Energy’s National Nuclear Security Administration under contract DE-NA0003525.

2 Uncertainty in Machine Learning

Background:

- Lots of data
- Potentially many sources of varying quality
- Targets may be rare or hard to detect
- Many choices of how to model
- Limited training examples
- Training and deployed environments may differ

Core Questions:

- How do we know that our data analyses are complete, accurate, and informative?
- How do we know when to trust our analytic results? Under what conditions?

3 What Questions Do We Need to Address?

1. Data sufficiency: Is there enough information in the data to identify stable boundaries?
2. Reliability:
   - a. How do we distinguish robust models from those that are sensitive to small changes in the data?
   - b. What is the relationship between a new example and the examples used to construct the model?
3. Performance diagnosis:
   - a. Under what conditions will a model perform well?
   - b. What specific steps might address a model’s weaknesses?
4. Decision making:
   - a. Given the cost of errors associated with a particular application and a learned model’s performance, where should we place the decision boundary?
   - b. How might we use uncertainty to guide or support analysts?
5. Multi-source data contributions: Which data should be included in an analysis, and how important is it relative to other data sources?

We will look at first attempts at answering these questions.

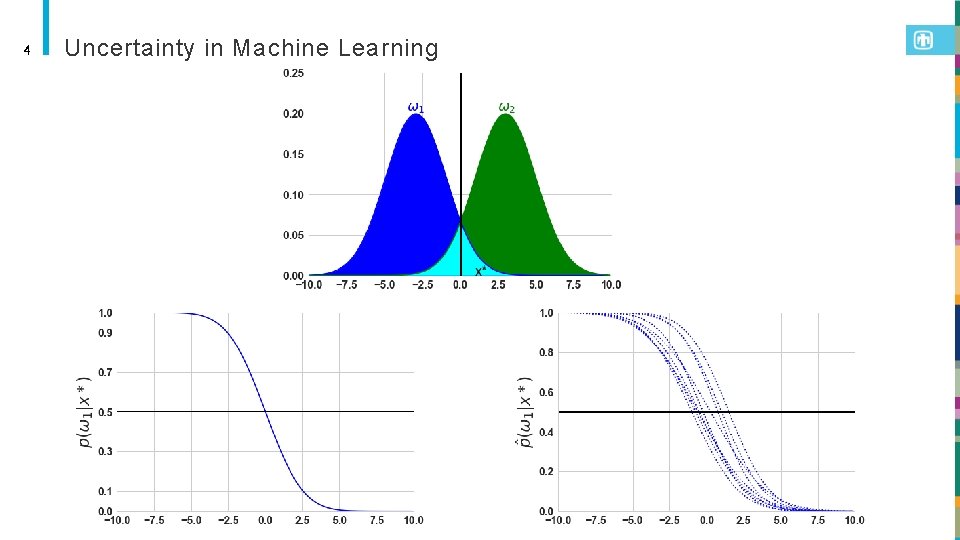

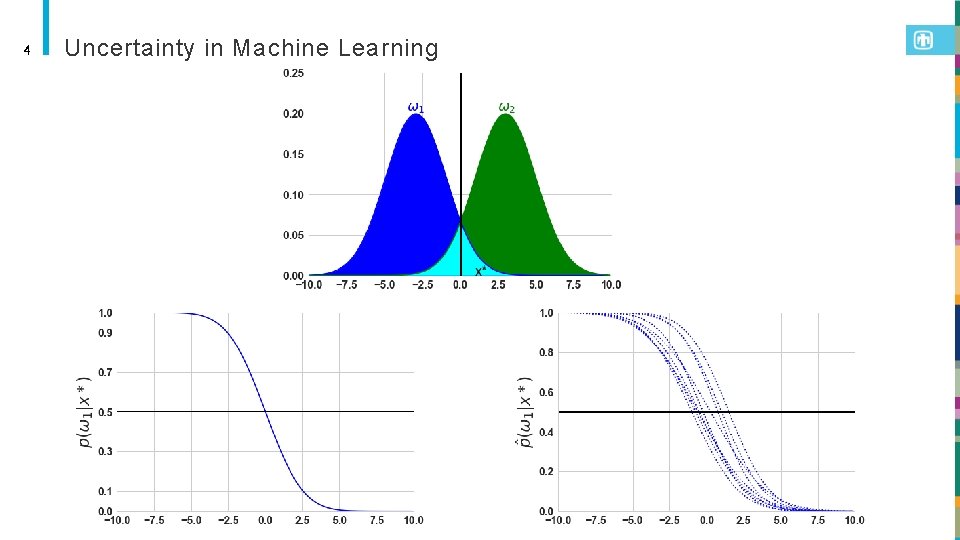

4 Uncertainty in Machine Learning

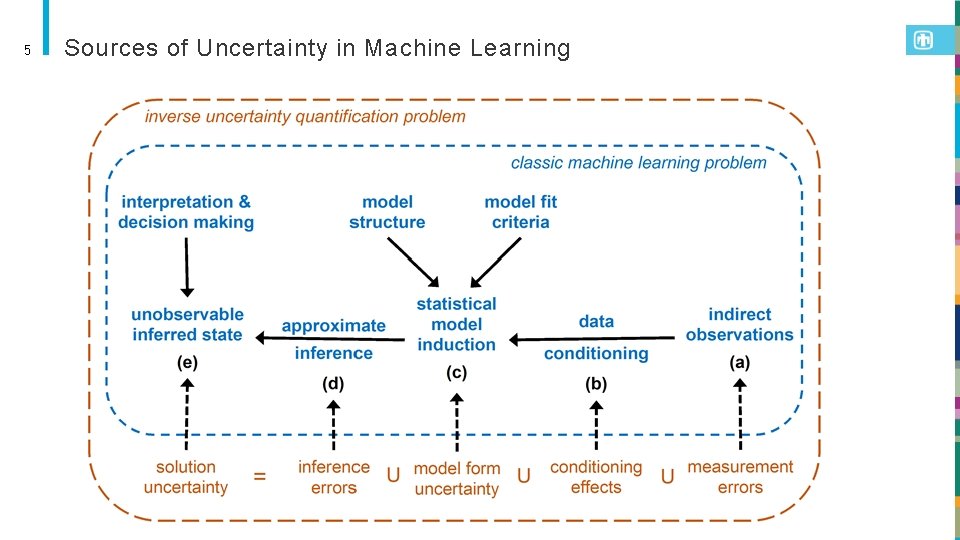

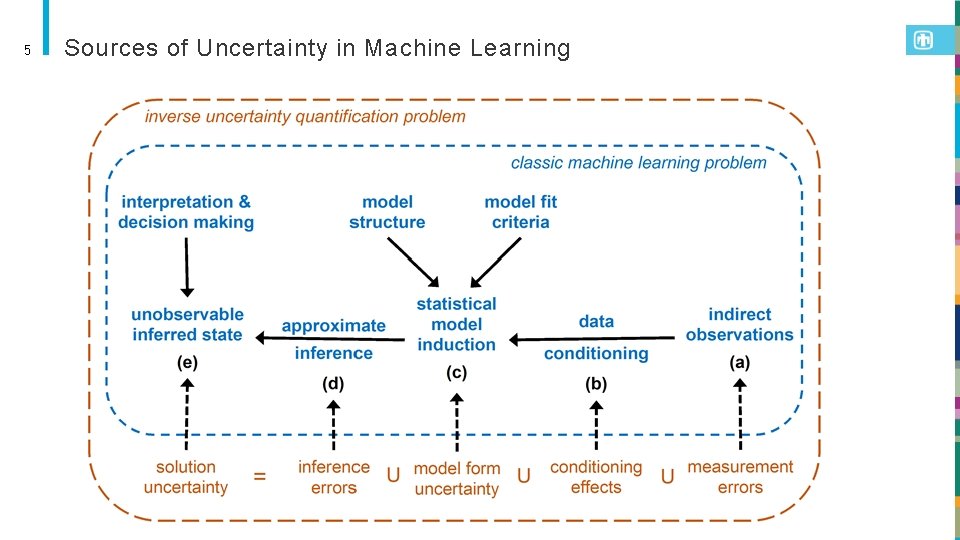

5 Sources of Uncertainty in Machine Learning

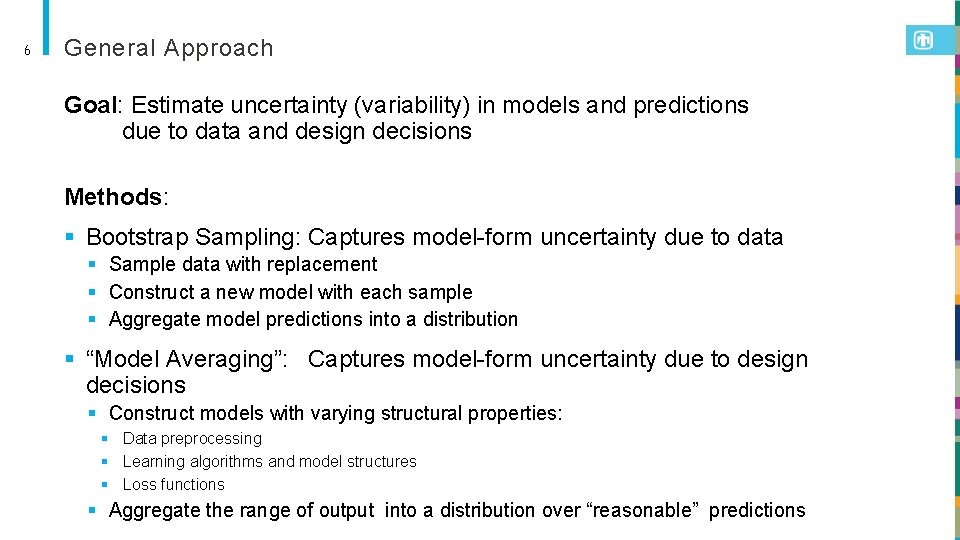

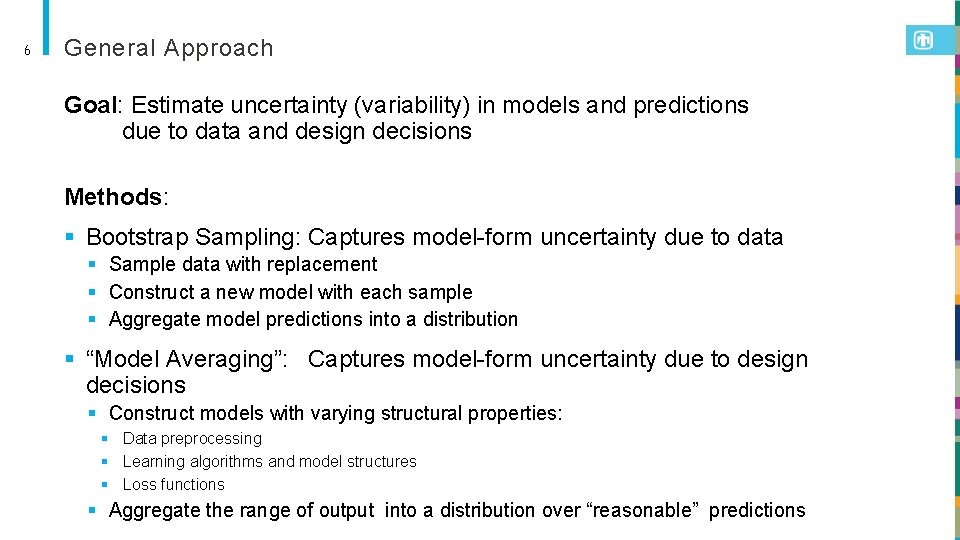

6 General Approach

Goal: Estimate uncertainty (variability) in models and predictions due to data and design decisions

Methods:

- Bootstrap Sampling: Captures model-form uncertainty due to data
  - Sample data with replacement
  - Construct a new model with each sample
  - Aggregate model predictions into a distribution
- “Model Averaging”: Captures model-form uncertainty due to design decisions
  - Construct models with varying structural properties:
    - Data preprocessing
    - Learning algorithms and model structures
    - Loss functions
  - Aggregate the range of output into a distribution over “reasonable” predictions
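The bootstrap steps listed on this slide can be sketched in a few lines of standard-library Python. This is a minimal illustration under stated assumptions, not the authors' implementation: the model is a one-dimensional least-squares line, the data are synthetic, and the names `fit_line`, `bootstrap_predictions`, and `percentile_interval` are hypothetical.

```python
import random
import statistics


def fit_line(xs, ys):
    """Ordinary least-squares fit of y = a + b*x; returns (a, b)."""
    mean_x = statistics.fmean(xs)
    mean_y = statistics.fmean(ys)
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0.0:  # degenerate resample: every x is identical
        return mean_y, 0.0
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope


def bootstrap_predictions(xs, ys, x_new, n_models=500, seed=0):
    """Fit one model per bootstrap resample and collect its prediction at x_new."""
    rng = random.Random(seed)
    n = len(xs)
    preds = []
    for _ in range(n_models):
        idx = [rng.randrange(n) for _ in range(n)]  # sample with replacement
        a, b = fit_line([xs[i] for i in idx], [ys[i] for i in idx])
        preds.append(a + b * x_new)
    return preds


def percentile_interval(values, lower=2.5, upper=97.5):
    """Empirical percentile interval of a list of values."""
    ordered = sorted(values)

    def pick(p):
        return ordered[min(len(ordered) - 1, int(p / 100.0 * len(ordered)))]

    return pick(lower), pick(upper)


# Synthetic data (an assumption for illustration): y = 2x + 1 plus Gaussian noise.
data_rng = random.Random(42)
xs = [i / 10.0 for i in range(50)]
ys = [2.0 * x + 1.0 + data_rng.gauss(0.0, 0.5) for x in xs]

preds = bootstrap_predictions(xs, ys, x_new=2.5)
low, high = percentile_interval(preds)
print(f"mean prediction: {statistics.fmean(preds):.3f}")
print(f"95% percentile interval: [{low:.3f}, {high:.3f}]")
```

The width of the interval reflects how much the fitted model changes when the training data change, which is the data-driven part of model-form uncertainty described above.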

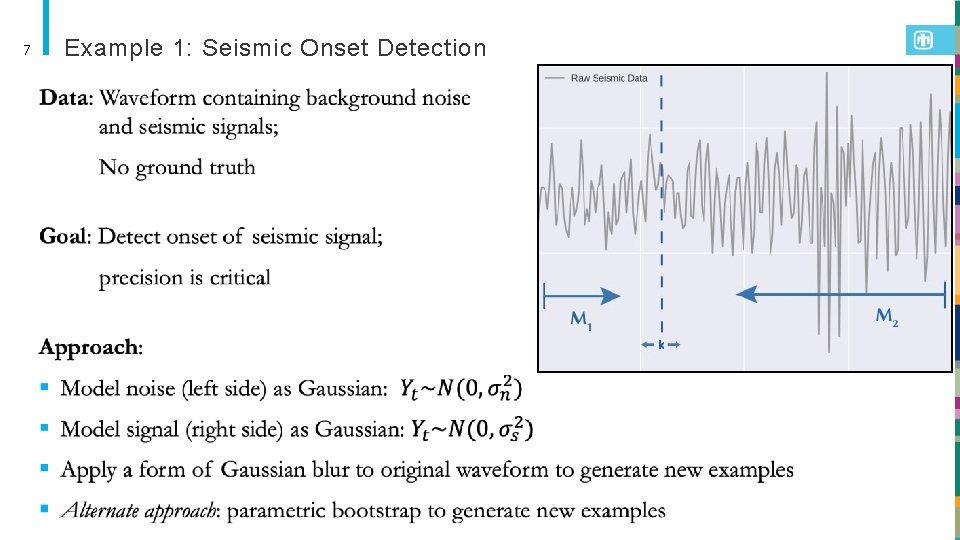

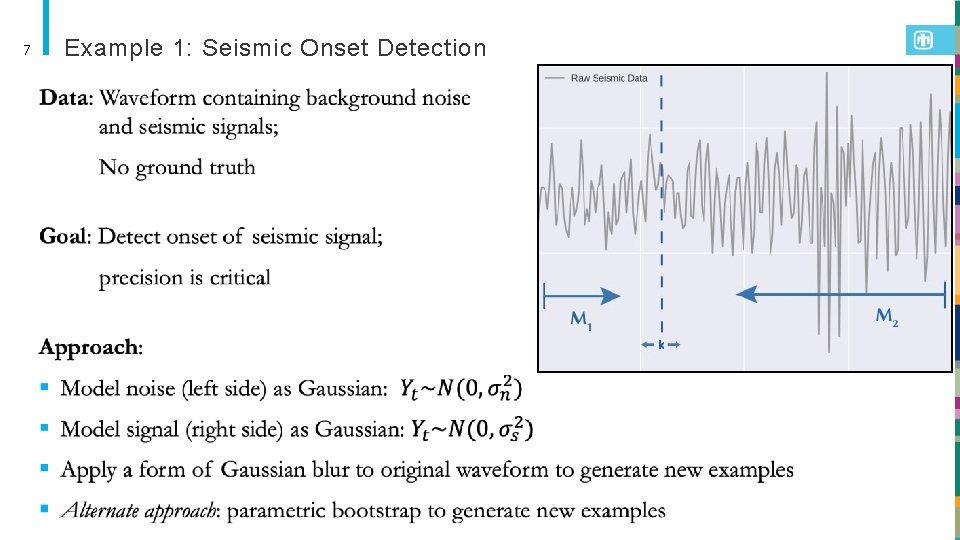
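The “model averaging” idea from the General Approach slide can be sketched in the same spirit: build models that differ in preprocessing, model structure, and loss, then look at the spread of their predictions. The specific choices below (a median filter, an ordinary least-squares line, a Theil-Sen line, and a k-nearest-neighbour mean) are assumptions chosen for illustration; the slides do not say which variations the authors used.

```python
import random
import statistics


def median_filter(ys, window=3):
    """Preprocessing choice: running median that suppresses isolated outliers."""
    half = window // 2
    return [statistics.median(ys[max(0, i - half): i + half + 1])
            for i in range(len(ys))]


def ols_line(xs, ys, x_new):
    """Linear model under squared loss (ordinary least squares)."""
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    sxx = sum((x - mx) ** 2 for x in xs)
    slope = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sxx
    return my + slope * (x_new - mx)


def theil_sen_line(xs, ys, x_new):
    """Robust linear model: median of pairwise slopes (Theil-Sen estimator)."""
    n = len(xs)
    slopes = [(ys[j] - ys[i]) / (xs[j] - xs[i])
              for i in range(n) for j in range(i + 1, n) if xs[j] != xs[i]]
    slope = statistics.median(slopes)
    intercept = statistics.median([y - slope * x for x, y in zip(xs, ys)])
    return intercept + slope * x_new


def knn_mean(xs, ys, x_new, k=5):
    """Non-parametric model: mean target of the k nearest training points."""
    nearest = sorted(zip(xs, ys), key=lambda p: abs(p[0] - x_new))[:k]
    return statistics.fmean([y for _, y in nearest])


def ensemble_predictions(xs, ys, x_new):
    """One prediction per (preprocessing, model) combination."""
    preprocessors = {"raw": list, "median_filter": median_filter}
    models = {"ols": ols_line, "theil_sen": theil_sen_line, "knn": knn_mean}
    results = {}
    for p_name, prep in preprocessors.items():
        ys_p = prep(ys)
        for m_name, model in models.items():
            results[(p_name, m_name)] = model(xs, ys_p, x_new)
    return results


# Synthetic data (an assumption): y = 2x + 1 plus noise, with two injected outliers.
rng = random.Random(3)
xs = [i / 4.0 for i in range(40)]
ys = [2.0 * x + 1.0 + rng.gauss(0.0, 1.0) for x in xs]
ys[5] += 15.0
ys[30] -= 12.0

results = ensemble_predictions(xs, ys, x_new=5.0)
for (p_name, m_name), pred in results.items():
    print(f"{p_name:>13} + {m_name:<9} -> {pred:.3f}")
spread = max(results.values()) - min(results.values())
print(f"range of 'reasonable' predictions: {spread:.3f}")
```

A wide range here would suggest that the prediction depends heavily on design decisions rather than on the data alone.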

7 Example 1: Seismic Onset Detection

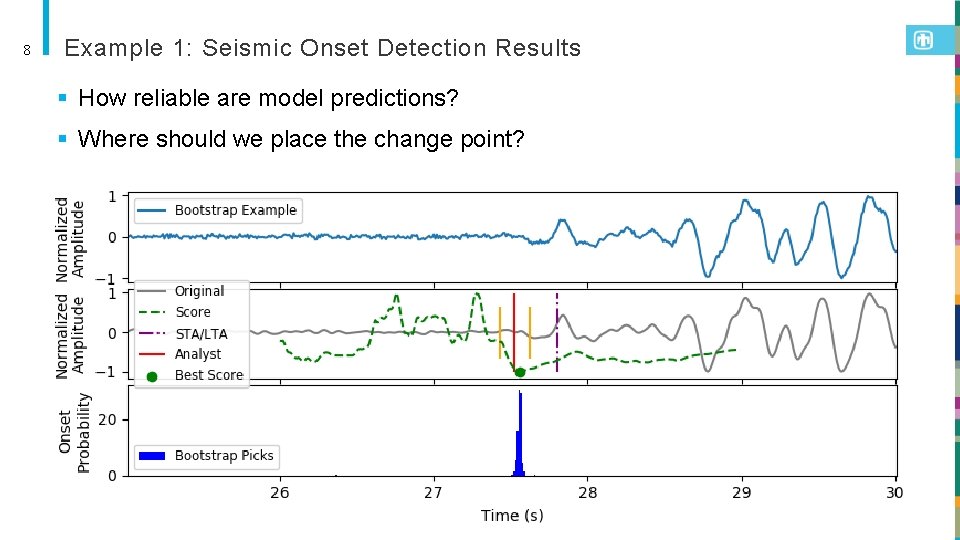

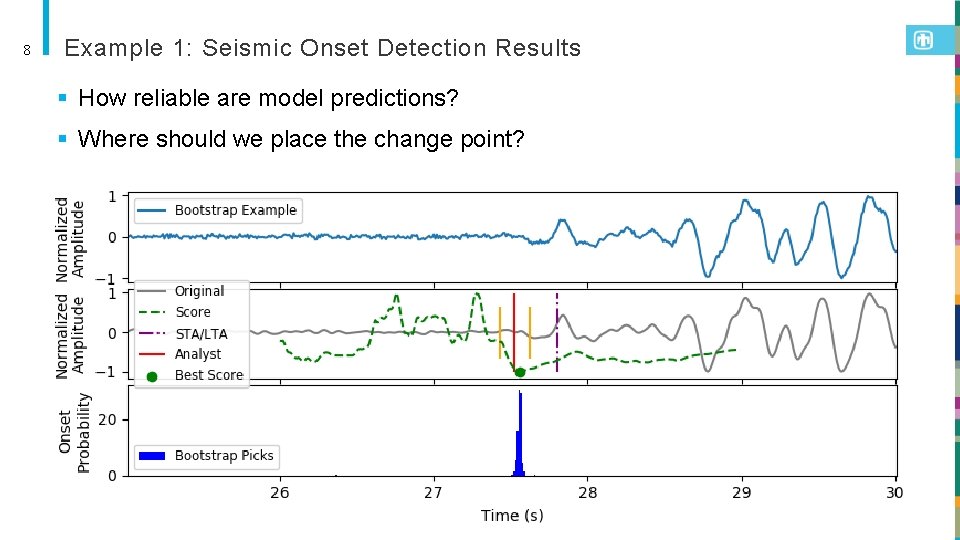

8 Example 1: Seismic Onset Detection Results

- How reliable are model predictions?
- Where should we place the change point?

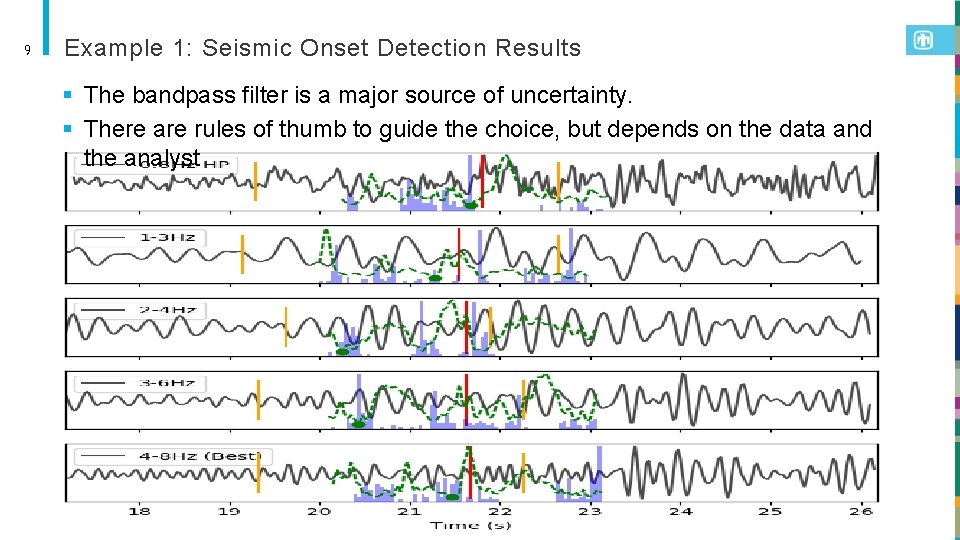

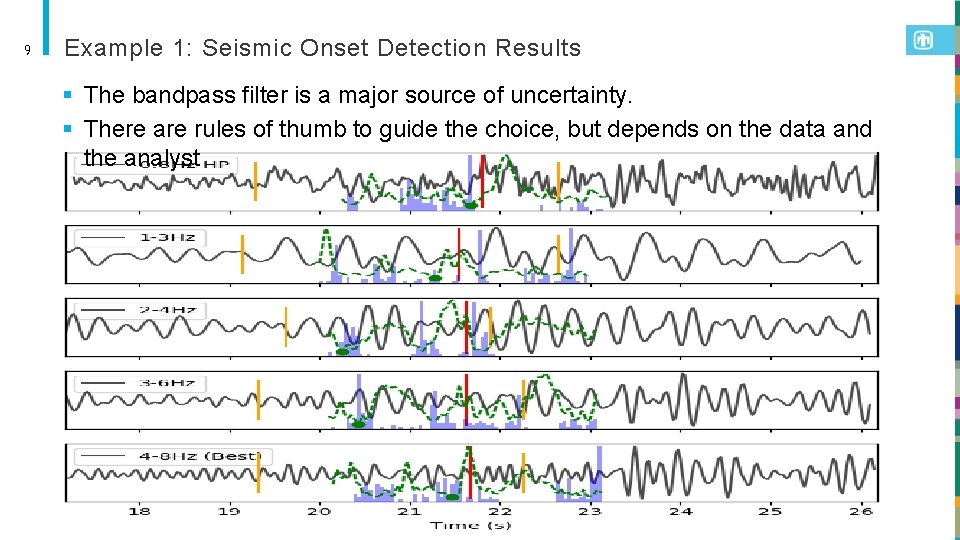

9 Example 1: Seismic Onset Detection Results

- The bandpass filter is a major source of uncertainty.
- There are rules of thumb to guide the choice of filter, but the choice depends on the data and the analyst.
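The sensitivity of an onset pick to a preprocessing choice can be illustrated with a toy experiment. Everything below is an assumption for illustration: a moving average over signal energy stands in for the bandpass filter, a least-squares two-segment change-point search stands in for the onset detector, and the trace is synthetic. None of it is the authors' seismic pipeline.

```python
import random
import statistics


def moving_average(values, window):
    """Centered moving average; the window shrinks near the edges."""
    half = window // 2
    out = []
    for i in range(len(values)):
        seg = values[max(0, i - half): i + half + 1]
        out.append(sum(seg) / len(seg))
    return out


def two_segment_change_point(values):
    """Index k minimizing the squared error of fitting one constant to
    values[:k] and another constant to values[k:]."""
    n = len(values)
    total = sum(values)
    total_sq = sum(v * v for v in values)
    best_k, best_cost = None, float("inf")
    left_sum = left_sq = 0.0
    for k in range(1, n):
        v = values[k - 1]
        left_sum += v
        left_sq += v * v
        right_sum = total - left_sum
        right_sq = total_sq - left_sq
        cost = (left_sq - left_sum ** 2 / k) + (right_sq - right_sum ** 2 / (n - k))
        if cost < best_cost:
            best_k, best_cost = k, cost
    return best_k


# Synthetic trace (an assumption): background noise, then a stronger signal.
rng = random.Random(7)
TRUE_ONSET = 300
trace = ([rng.gauss(0.0, 1.0) for _ in range(TRUE_ONSET)]
         + [rng.gauss(0.0, 5.0) for _ in range(200)])
energy = [v * v for v in trace]

# Each smoothing window plays the role of one analyst's filter choice.
windows = [1, 5, 11, 21, 41]
picks = {w: two_segment_change_point(moving_average(energy, w)) for w in windows}

for w, k in picks.items():
    print(f"window={w:>2}: onset pick at sample {k}")
pick_values = sorted(picks.values())
print(f"median pick: {statistics.median(pick_values)}")
print(f"pick range: {pick_values[0]} to {pick_values[-1]}")
```

The range of picks across windows gives a rough sense of how much uncertainty the filtering decision alone contributes to the estimated onset.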

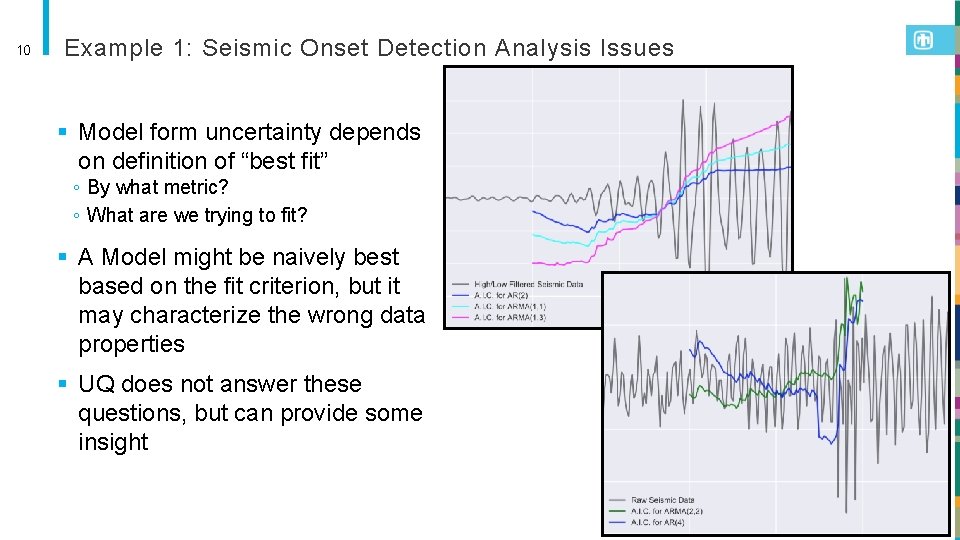

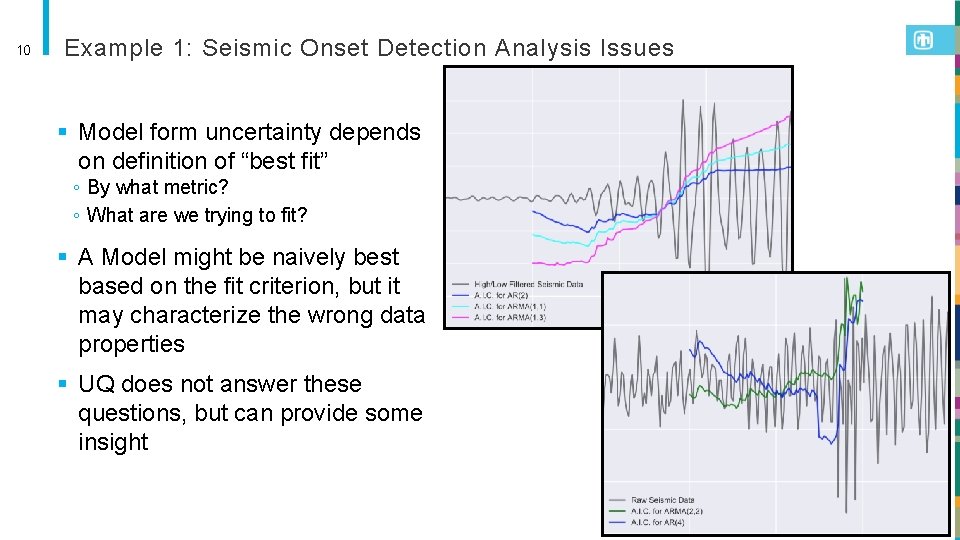

10 Example 1: Seismic Onset Detection Analysis Issues

- Model-form uncertainty depends on the definition of “best fit”
  - By what metric?
  - What are we trying to fit?
- A model might be naively best based on the fit criterion, yet characterize the wrong data properties
- UQ does not answer these questions, but can provide some insight

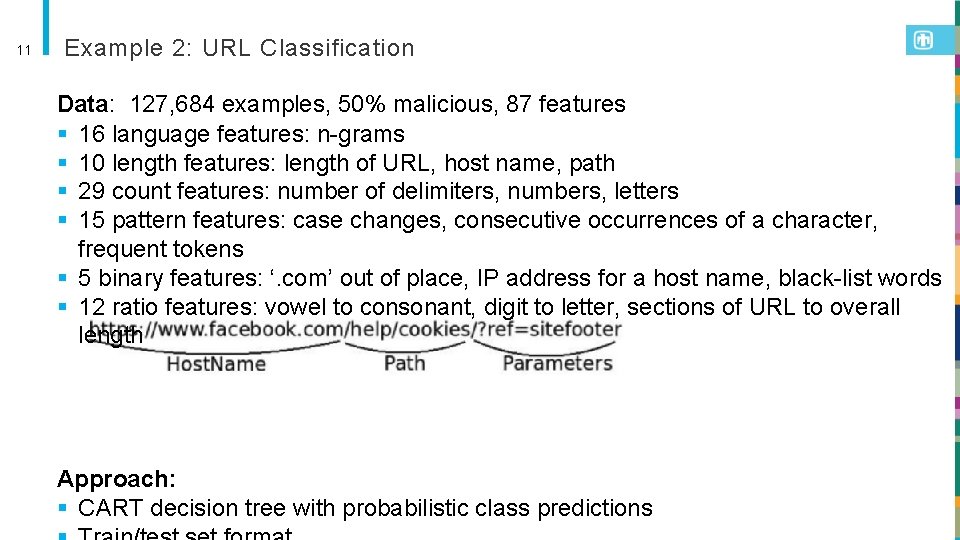

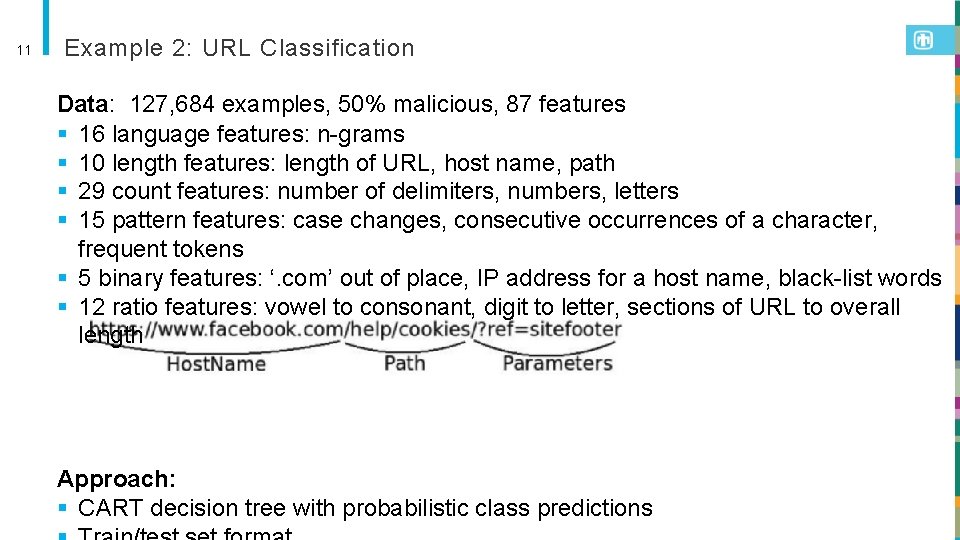

11 Example 2: URL Classification

Data: 127,684 examples, 50% malicious, 87 features

- 16 language features: n-grams
- 10 length features: length of URL, host name, path
- 29 count features: number of delimiters, numbers, letters
- 15 pattern features: case changes, consecutive occurrences of a character, frequent tokens
- 5 binary features: ‘.com’ out of place, IP address for a host name, black-list words
- 12 ratio features: vowel to consonant, digit to letter, sections of URL to overall length

Approach:

- CART decision tree with probabilistic class predictions
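A handful of the feature types named on this slide can be computed with the Python standard library. The sketch below is illustrative only: the function name `url_features`, the delimiter set, and the exact definition of “‘.com’ out of place” are assumptions, since the slides do not define the 87 features precisely.

```python
import re
from urllib.parse import urlsplit

VOWELS = set("aeiou")
DELIMITERS = set("./?=&-_:")  # assumed delimiter set, not taken from the slides


def safe_ratio(numerator, denominator):
    """Ratio that returns 0.0 instead of dividing by zero."""
    return numerator / denominator if denominator else 0.0


def url_features(url):
    """Compute a few length, count, ratio, and binary features for one URL."""
    parts = urlsplit(url)
    host = parts.hostname or ""
    letters = [c for c in url.lower() if c.isalpha()]
    digits = sum(c.isdigit() for c in url)
    vowels = sum(c in VOWELS for c in letters)
    consonants = len(letters) - vowels
    # Assumed definition: '.com' appears in the path or query, or inside the
    # host name somewhere other than its end.
    after_host = (parts.path + "?" + parts.query).lower()
    dotcom_out_of_place = ".com" in after_host or (
        ".com" in host and not host.endswith(".com"))
    return {
        "url_length": len(url),
        "host_length": len(host),
        "path_length": len(parts.path),
        "delimiter_count": sum(c in DELIMITERS for c in url),
        "digit_count": digits,
        "letter_count": len(letters),
        "vowel_consonant_ratio": safe_ratio(vowels, consonants),
        "digit_letter_ratio": safe_ratio(digits, len(letters)),
        "host_is_ip": bool(re.fullmatch(r"\d{1,3}(\.\d{1,3}){3}", host)),
        "dotcom_out_of_place": dotcom_out_of_place,
    }


# Example URLs are made up for illustration.
suspicious = url_features("http://192.168.0.1/login.com/update?id=42")
benign = url_features("https://www.example.com/index.html")
for name, value in suspicious.items():
    print(f"{name}: {value}")
```

Feature dictionaries like these could then be fed to any classifier that produces class probabilities, such as the decision tree mentioned above.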

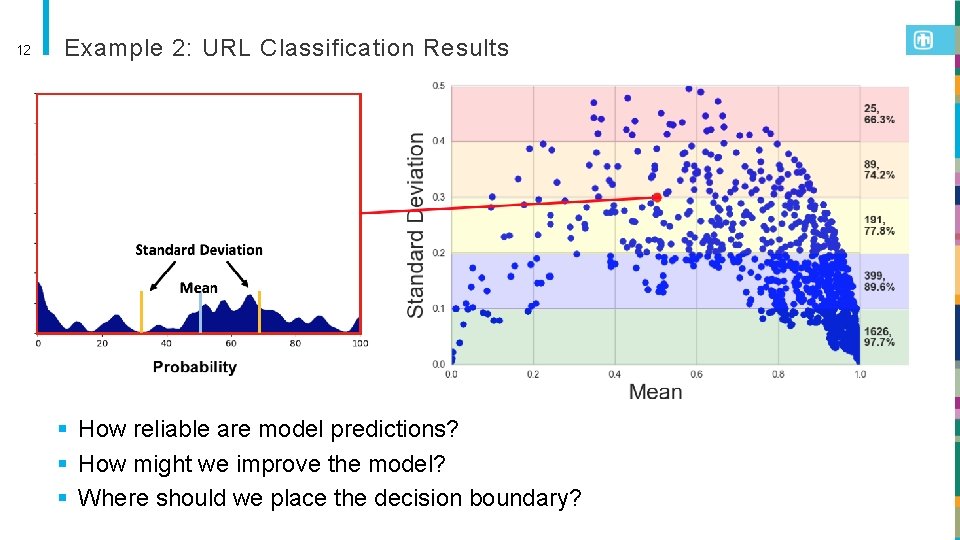

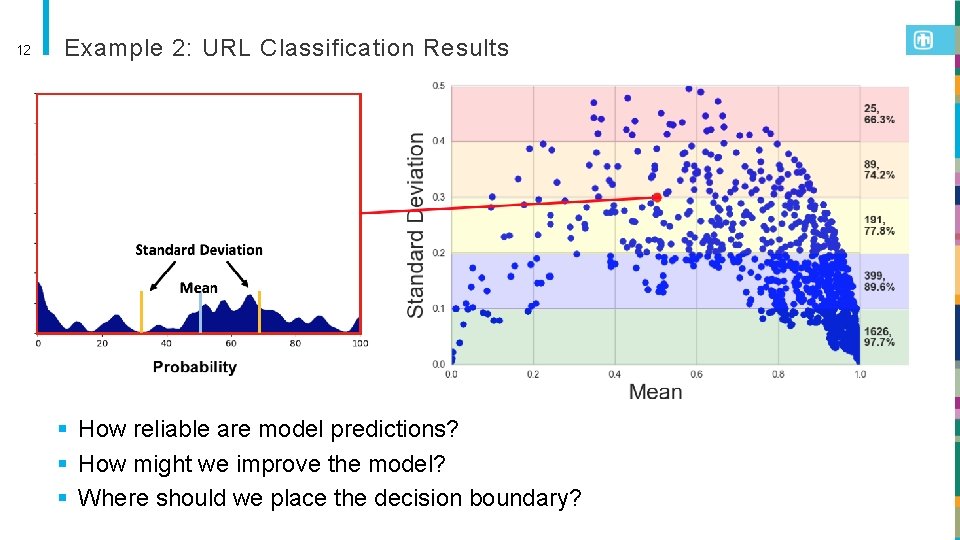

12 Example 2: URL Classification Results

- How reliable are model predictions?
- How might we improve the model?
- Where should we place the decision boundary?
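One way to approach the decision-boundary question is to pick the probability threshold that minimizes the average cost of errors on labeled data. The sketch below assumes hypothetical costs for false positives and false negatives and uses synthetic predicted probabilities; it is not the analysis behind the slides.

```python
import random


def expected_cost(probs, labels, threshold, cost_fp, cost_fn):
    """Average cost per example when predicting positive for p >= threshold."""
    cost = 0.0
    for p, y in zip(probs, labels):
        predicted_positive = p >= threshold
        if predicted_positive and y == 0:
            cost += cost_fp  # benign example flagged as malicious
        elif not predicted_positive and y == 1:
            cost += cost_fn  # malicious example missed
    return cost / len(labels)


def best_threshold(probs, labels, cost_fp, cost_fn, grid=None):
    """Grid search for the lowest-cost threshold (smallest one wins ties)."""
    grid = grid or [i / 100 for i in range(1, 100)]
    return min(grid, key=lambda t: expected_cost(probs, labels, t, cost_fp, cost_fn))


# Synthetic classifier outputs (an assumption): positives tend to score high,
# negatives tend to score low, with overlap between the two groups.
rng = random.Random(1)
labels = [1] * 200 + [0] * 200
probs = ([rng.betavariate(4, 2) for _ in range(200)]
         + [rng.betavariate(2, 4) for _ in range(200)])

t_equal = best_threshold(probs, labels, cost_fp=1.0, cost_fn=1.0)
t_costly_miss = best_threshold(probs, labels, cost_fp=1.0, cost_fn=10.0)
print(f"threshold with equal costs: {t_equal:.2f}")
print(f"threshold when a miss costs 10x: {t_costly_miss:.2f}")
```

When missed detections are more expensive than false alarms, the selected threshold moves down (or stays put), so more examples are flagged. Repeating the search over bootstrap resamples would show how stable that boundary is.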

13 Future Work

- Capture all sources of uncertainty
- Uncertainty propagation from one task to the next
- Incorporate Bayesian methods
- Develop ground truth benchmarks to evaluate uncertainty estimation
- Develop the relationship between prediction uncertainty and the decisions that need to be made
- Incorporate human expertise into uncertainty analyses
- Expand on notion of uncertainty as alternate interpretations of data

14 Summary

Uncertainty analysis does not “build a better model” or tell us whether a given prediction is correct. It indicates how well a given model captures the data and how strongly we should rely on it for decision making.

- Explicitly evaluates data and model sufficiency in the absence of ground truth;
- Adds statistical rigor to existing reliance on model accuracy relative to known examples;
- Considers multiple interpretations of the data;
- Provides information about the model and possible improvements;
- Provides information about decision making and trustworthiness.

15 Uncertainty Quotes

- “We demand rigidly defined areas of doubt and uncertainty!” – Douglas Adams, The Hitchhiker's Guide to the Galaxy
- “It ain’t what you don’t know that gets you into trouble. It’s what you know for sure that just ain’t so.” – Uncertain Origin
- “Uncertainty is an uncomfortable position. But certainty is an absurd one.” – Voltaire
- “Ignorance more frequently begets confidence than does knowledge…” – Charles Darwin
- “As far as the laws of mathematics refer to reality, they are not certain; and as far as they are certain, they do not refer to reality.” – Albert Einstein