The Role of Metadata in Machine Learning for TAR

- Slides: 12

The Role of Metadata in Machine Learning for TAR

- Amanda Jones (ajones@h5.com)
- Marzieh Bazrafshan (mbazrafshan@h5.com)
- Fernando Delgado (fdelgado@h5.com)
- Tania Lihatsh (tlihatsh@h5.com)
- Tami Schuyler (tschuyler@h5.com)

Metadata Use in TAR – Lack of Consensus

- It is generally agreed across the industry that metadata is a critical component of ESI for eDiscovery.
  - Some view incorporating metadata into machine learning algorithm development for TAR as a matter of course.
  - Others view it as atypical of, if not incompatible with, machine learning approaches to document classification.

Metadata in TAR – Goals of the Study

- If metadata provides information that is vital for manual document review in eDiscovery, why would it be any less valuable for TAR?
- Goals of the current study:
  1. Establish the potential benefit of incorporating metadata into TAR algorithm development processes.
  2. Establish the potential benefit of leveraging widely inclusive sets of metadata, as opposed to limited, pre-determined sets.
  3. Establish the potential benefit of integrating metadata using techniques that preserve the added layer of information associated with metadata values.

Metadata in TAR – Data & Methods

- 3 distinct data sets:
  - 1 drawn from Topic 301 of the TREC 2010 Interactive Task
  - 2 proprietary business data sets
  - Random sample of 4,500 individually labeled documents for each
  - Each sample split into a 3,000-document Control Set and a 1,500-document Training Set
- All machine learning models were developed using an open source Support Vector Machine (SVM) implementation.
- Performance metric: Area Under the Receiver Operating Characteristic Curve (AUROC)
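As a rough illustration of the performance metric, AUROC can be computed from Control Set labels and model scores with the rank-sum (Mann-Whitney) identity. This is a minimal sketch, not the study's evaluation code: the function name `auroc` and the toy inputs are assumptions, and the slides do not say which tooling was used to compute the metric.

```python
def auroc(labels, scores):
    """Area under the ROC curve via the rank-sum (Mann-Whitney U) identity.

    labels: 0/1 values (1 = responsive), hypothetical Control Set coding.
    scores: model scores, where a higher score means "more likely responsive".
    """
    pairs = sorted(zip(scores, labels))
    n = len(pairs)
    n_pos = sum(label for _, label in pairs)
    n_neg = n - n_pos
    if n_pos == 0 or n_neg == 0:
        raise ValueError("AUROC needs at least one positive and one negative label")

    # Sum the 1-based ranks of the positives, giving tied scores their average rank.
    rank_sum_pos = 0.0
    i = 0
    while i < n:
        j = i
        while j < n and pairs[j][0] == pairs[i][0]:
            j += 1
        avg_rank = (i + 1 + j) / 2.0  # mean of ranks i+1 .. j
        rank_sum_pos += avg_rank * sum(label for _, label in pairs[i:j])
        i = j

    u_statistic = rank_sum_pos - n_pos * (n_pos + 1) / 2.0
    return u_statistic / (n_pos * n_neg)


# Toy example: 3 of the 4 (positive, negative) pairs are ranked correctly.
print(auroc([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8]))
```

An AUROC of 0.5 corresponds to random ranking and 1.0 to a ranking that places every responsive document above every non-responsive one.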

Metadata in TAR – Metadata Choices

- Metadata availability varied across data sets.
- Fields were chosen opportunistically, based on availability and amenability to feature transformation.
  - Fields that were populated for fewer than 5% of the documents were omitted.
  - Continuous metadata values were transformed into categorical values.
    - For example, date values were collapsed into simple Month-Year values; file size values were assigned to categories ranging from very small to very large.
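The field selection and value transformations described above might look something like the following sketch. Everything specific here is an assumption made for illustration: the helper names, the `YYYY-MM` output format, and in particular the byte thresholds in `SIZE_BINS`, which the slides do not give.

```python
from datetime import date


def populated_fields(docs, min_fraction=0.05):
    """Return the fields populated for at least min_fraction of the documents."""
    counts = {}
    for doc in docs:
        for field, value in doc.items():
            if value not in (None, ""):
                counts[field] = counts.get(field, 0) + 1
    return {f for f, c in counts.items() if c / len(docs) >= min_fraction}


def month_year(d):
    """Collapse a full date into a Month-Year category, e.g. '2001-03'."""
    return d.strftime("%Y-%m")


# Hypothetical upper bounds in bytes; the study's actual cut-offs are not stated.
SIZE_BINS = [
    (10_000, "very_small"),
    (100_000, "small"),
    (1_000_000, "medium"),
    (10_000_000, "large"),
]


def size_category(n_bytes):
    """Map a continuous file size onto a small set of categorical labels."""
    for upper_bound, name in SIZE_BINS:
        if n_bytes < upper_bound:
            return name
    return "very_large"


print(month_year(date(2001, 3, 15)), size_category(250_000))
```

Binning of this kind lets a text-oriented classifier treat each date or size range as a single token rather than as an unbounded set of distinct numeric strings.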

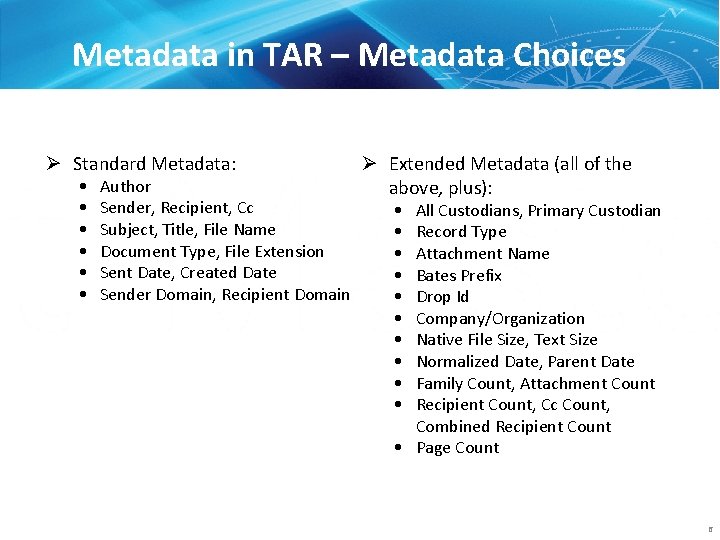

Metadata in TAR – Metadata Choices

- Standard Metadata:
  - Author
  - Sender, Recipient, Cc
  - Subject, Title, File Name
  - Document Type, File Extension
  - Sent Date, Created Date
  - Sender Domain, Recipient Domain
- Extended Metadata (all of the above, plus):
  - All Custodians, Primary Custodian
  - Record Type
  - Attachment Name
  - Bates Prefix
  - Drop Id
  - Company/Organization
  - Native File Size, Text Size
  - Normalized Date, Parent Date
  - Family Count, Attachment Count
  - Recipient Count, Cc Count, Combined Recipient Count
  - Page Count

Metadata in TAR – Incremental Testing

- Hypothesis 1: Incorporating metadata into the machine learning process will lead to improved model performance.
  - Text from Standard Metadata was added to the body text of documents.
    - There was a general trend of improvement across the three data sets. The improvement was highly significant for Data Set 3.
- Hypothesis 2: Incorporating the text from Extended Metadata will lead to superior results compared to incorporating Standard Metadata alone.
  - Text from Extended Metadata was added to the body text of documents and compared to models based on the addition of Standard Metadata.
    - There was a general trend of improvement across the three data sets. The improvement was highly significant for Data Sets 1 and 3.
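The "metadata added to body text" condition can be pictured as simple concatenation before feature extraction. The sketch below is an assumption about what that step could look like; the function name, the newline separator, and the sample values are hypothetical, and only the field names come from the Standard Metadata list.

```python
def append_plain_metadata(body_text, metadata, fields):
    """Append the values of the chosen metadata fields to the body text.

    Field names are discarded, so the classifier sees the values as ordinary words.
    """
    parts = [body_text]
    for field in fields:
        value = metadata.get(field)
        if value:  # skip fields that are missing or empty for this document
            parts.append(str(value))
    return "\n".join(parts)


doc_metadata = {"Author": "J. Smith", "Subject": "Q3 forecast"}  # hypothetical values
print(append_plain_metadata("Please review.", doc_metadata, ["Author", "Subject", "Title"]))
```

Under this representation a name appearing in the Author field is indistinguishable from the same name appearing in the body of the document.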

Metadata in TAR – Incremental Testing

- Hypothesis 3: Using metadata values in ways that preserve both attribute and value information will result in superior performance.
  - Prefixed metadata: Extended Metadata values, prefixed to indicate their field of origin, were added to the body text and compared to models with Extended Metadata added as plain text.
    - Improvements varied across the three data sets but were significant for Data Set 2.
  - Dual modeling: prefixed metadata values and simple body text were modeled independently, and the scores from the two models were multiplied to arrive at a final score. Dual models were compared to single models with prefixed Extended Metadata.
    - There was a general trend of improvement across the three data sets. The improvement was highly significant for Data Sets 2 and 3.
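A minimal sketch of the two techniques under Hypothesis 3, with loud caveats: the `FIELD__value` token format, the tokenization, and both function names are assumptions, and the slides do not say how the two models' scores were scaled before multiplication. The product below assumes both scores already lie in [0, 1]; raw SVM margins, which can be negative, would need to be mapped onto such a scale first.

```python
import re


def tagged_metadata_tokens(metadata):
    """Turn metadata into tokens that keep both the field (attribute) and the value."""
    tokens = []
    for field, value in metadata.items():
        prefix = re.sub(r"\W+", "", field).upper()  # "File Extension" -> "FILEEXTENSION"
        for word in re.findall(r"\w+", str(value).lower()):
            tokens.append(f"{prefix}__{word}")
    return tokens


def dual_model_score(text_score, metadata_score):
    """Combine the scores of two independently trained models by multiplication."""
    return text_score * metadata_score


print(tagged_metadata_tokens({"Author": "J. Smith", "File Extension": "DOCX"}))
print(dual_model_score(0.5, 0.5))
```

With prefixed tokens, "smith" as an author and "smith" in the body text become distinct features, which is the added layer of information the hypothesis refers to.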

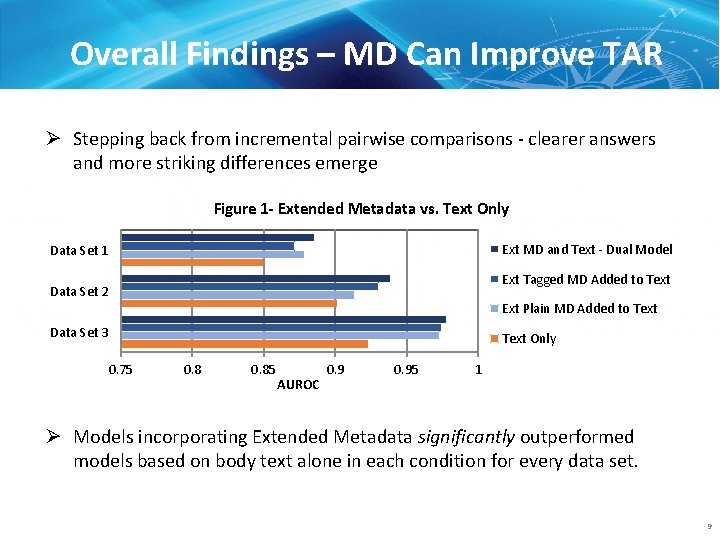

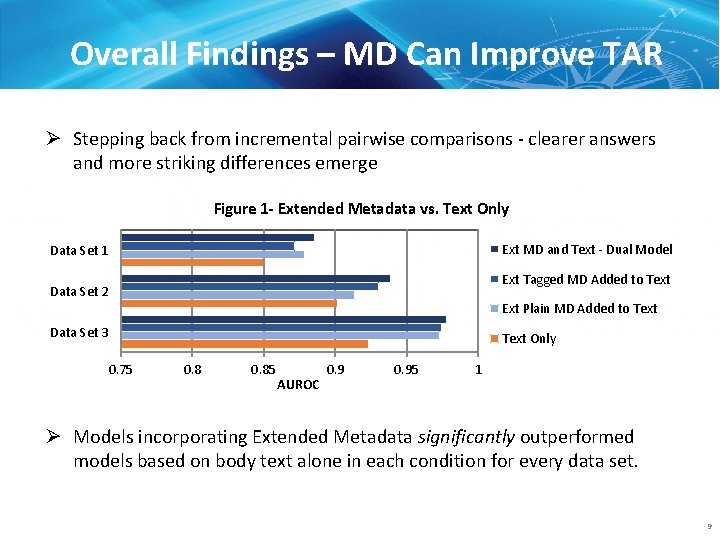

Overall Findings – MD Can Improve TAR

- Stepping back from the incremental pairwise comparisons, clearer answers and more striking differences emerge.

[Figure 1: Extended Metadata vs. Text Only. AUROC (axis from 0.75 to 1) for Data Sets 1, 2, and 3 under four conditions: Ext MD and Text - Dual Model; Ext Tagged MD Added to Text; Ext Plain MD Added to Text; Text Only.]

- Models incorporating Extended Metadata significantly outperformed models based on body text alone in each condition for every data set.

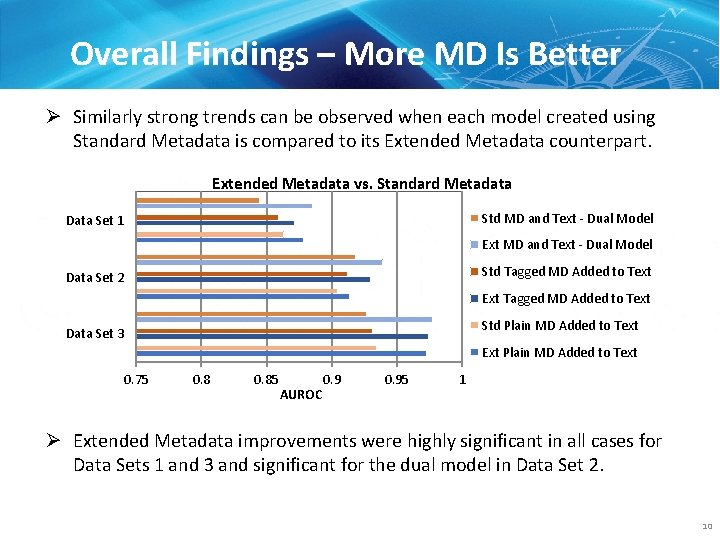

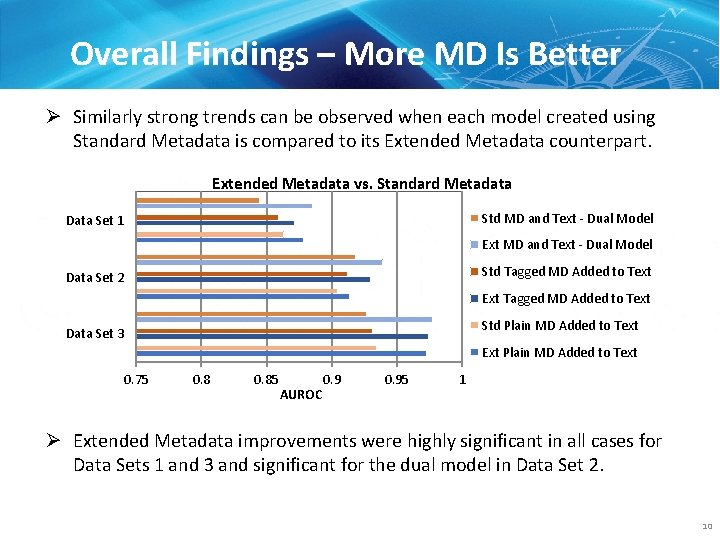

Overall Findings – More MD Is Better

- Similarly strong trends can be observed when each model created using Standard Metadata is compared to its Extended Metadata counterpart.

[Figure: Extended Metadata vs. Standard Metadata. AUROC (axis from 0.75 to 1) for Data Sets 1, 2, and 3 under six conditions: Std MD and Text - Dual Model; Ext MD and Text - Dual Model; Std Tagged MD Added to Text; Ext Tagged MD Added to Text; Std Plain MD Added to Text; Ext Plain MD Added to Text.]

- Extended Metadata improvements were highly significant in all cases for Data Sets 1 and 3 and significant for the dual model in Data Set 2.

Metadata in TAR – Conclusions

- Incorporating metadata as an integral component of machine learning processes for TAR in eDiscovery will benefit the community of practice.
  - Neglecting this resource is, at best, a missed opportunity. In an information retrieval effort, why leave information on the table?
- To realize the full potential of using metadata in machine learning for TAR, practitioners should not rely solely on a limited, intuitive set of metadata.
  - Examining the contributions of specific metadata fields at a more granular level could be very worthwhile.
    - Is "all available" always the best choice?
- There are still more questions than answers when it comes to the use of metadata in modeling for TAR.
  - More effective algorithms?
  - Better techniques for capturing the full metadata contribution?

Questions?