The Role of Advanced Technology Systems in the ASC Platform Strategy

- Slides: 24

The Role of Advanced Technology Systems in the ASC Platform Strategy

Douglas Doerfler, Distinguished Member of Technical Staff

Sandia National Laboratories, Scalable Computer Architectures Department

SAND2014-3174C, Unlimited Release

Sandia National Laboratories is a multi-program laboratory managed and operated by Sandia Corporation, a wholly owned subsidiary of Lockheed Martin Corporation, for the U.S. Department of Energy’s National Nuclear Security Administration under contract DE-AC04-94AL85000.

Topics

- ASC ATS Computing Strategy
- Partnerships & the Joint Procurement Process
- Trinity Project Status
- Advanced Architecture Test Bed Project

ASC computing strategy

- Approach: Two classes of systems
  - Advanced Technology: First-of-a-kind systems that identify and foster technical capabilities and features that are beneficial to ASC applications
  - Commodity Technology: Robust, cost-effective systems to meet the day-to-day simulation workload needs of the program
- Investment Principles
  - Maintain continuity of production
  - Ensure that the needs of the current and future stockpile are met
  - Balance investments in system cost-performance types with computational requirements
  - Partner with industry to introduce new high-end technology constrained by life-cycle costs
  - Acquire right-sized platforms to meet the mission needs

Advanced Technology Systems

- Leadership-class platforms
- Pursue promising new technology paths with industry partners
- These systems are to meet unique mission needs and to help prepare the program for future system designs
- Includes Non-Recurring Engineering (NRE) funding to enable delivery of leading-edge platforms
- Trinity (ATS-1) will be deployed by ACES (New Mexico Alliance for Computing at Extreme Scale, i.e. Los Alamos & Sandia)
- ATS-2 will be deployed by LLNL

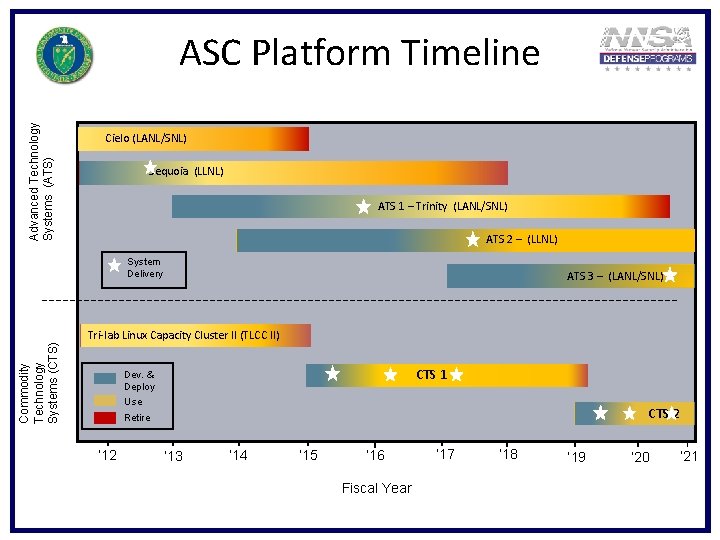

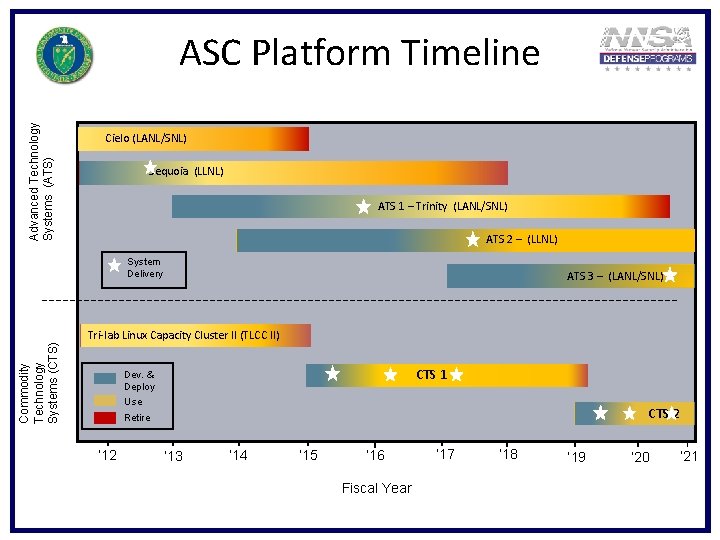

ASC Platform Timeline (figure), fiscal years ’12 to ’21

- Advanced Technology Systems (ATS): Cielo (LANL/SNL), Sequoia (LLNL), ATS 1 Trinity (LANL/SNL), ATS 2 (LLNL), ATS 3 (LANL/SNL)
- Commodity Technology Systems (CTS): Tri-lab Linux Capacity Cluster II (TLCC II), CTS 1, CTS 2
- Legend: System Delivery; Dev. & Deploy; Use; Retire

The ACES partnership since 2008

- SNL/LANL MOU signed March 2008 to integrate and leverage capabilities
- Commitment to the shared development and use of HPC to meet NW mission needs
- Major efforts are executed by project teams chartered by and accountable to the ACES co-directors
  - Cielo delivered ca. 2011
  - Trinity delivery in 2015 is now our dominant focus
- Both Laboratories are fully committed to delivering a successful platform as it’s essential to the Laboratories

NNSA/ASC and SC/ASCR are partnering on RFPs

- Trinity/NERSC-8: ACES & LBL
- CORAL: LLNL, ORNL and ANL
- Strengthen the alliance between NNSA and SC on the road to exascale
- Show vendors a more united path on the road to exascale
- Shared technical expertise between labs
- Should gain cost benefit
- Saves vendors money/time responding to a single RFP, single set of technical requirements
- Outside perspective reduces risk and avoids tunnel vision by one lab
- More leverage with vendors by sharing information between labs
- Benefits in production, shared bug reports, quarterly meetings
- Less likely to be a one-off system with multiple sites participating

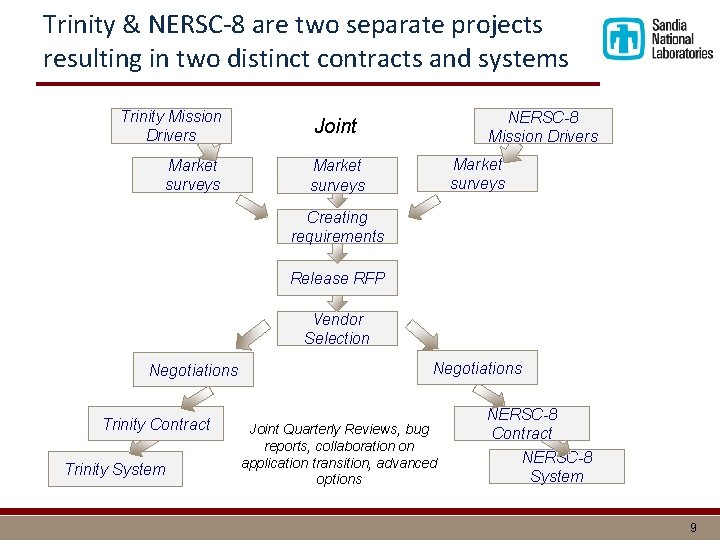

Why are NNSA/ASC and SC/ASCR collaborating?

- The April 2011 MOU between SC & NNSA for coordinating Exascale activities was the impetus for ASC and ASCR to work together on the proposed Exascale Computing Initiative (ECI).
- While ECI is yet to be realized, ASC & ASCR program directors made strategic decisions to co-fund and collaborate on:
  - Technology R&D Investments: FastForward and DesignForward
  - System Acquisitions: Trinity/NERSC-8 and CORAL
- Great leveraging opportunities to share precious resources (budget & technical expertise) to achieve each program’s mission goals, while working out some cultural/bureaucratic differences.
- The Trinity/NERSC-8 collaboration will proceed with joint RFP and selection, separate system awards, attendance at each other’s project reviews, and collective problem solving.

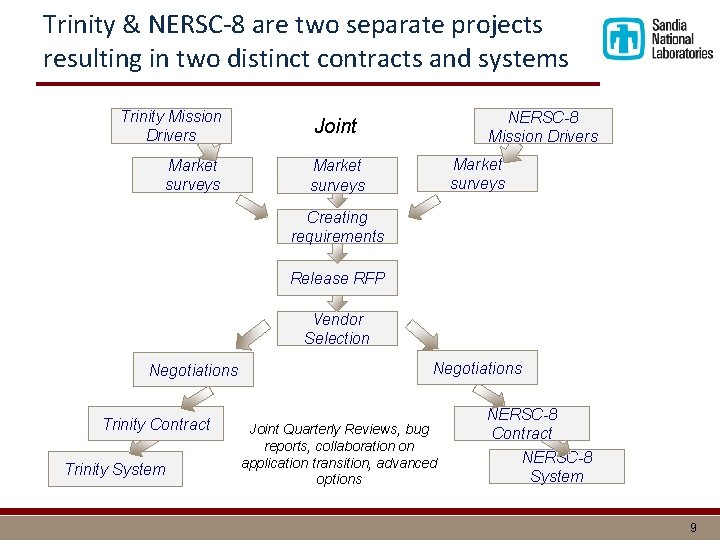

Trinity & NERSC-8 are two separate projects resulting in two distinct contracts and systems

Process flow (figure):

- Inputs: Trinity mission drivers and NERSC-8 mission drivers
- Joint: market surveys, creating requirements, release RFP, vendor selection
- Separate: negotiations leading to the Trinity contract and Trinity system; negotiations leading to the NERSC-8 contract and NERSC-8 system
- Joint: quarterly reviews, bug reports, collaboration on application transition, advanced options

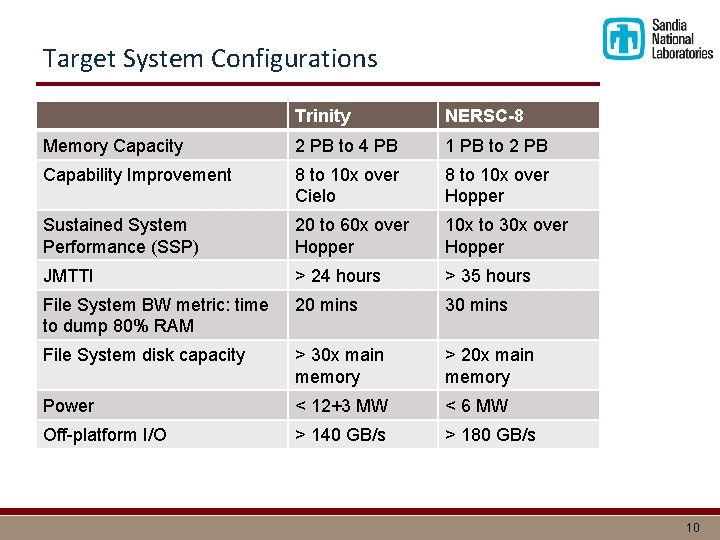

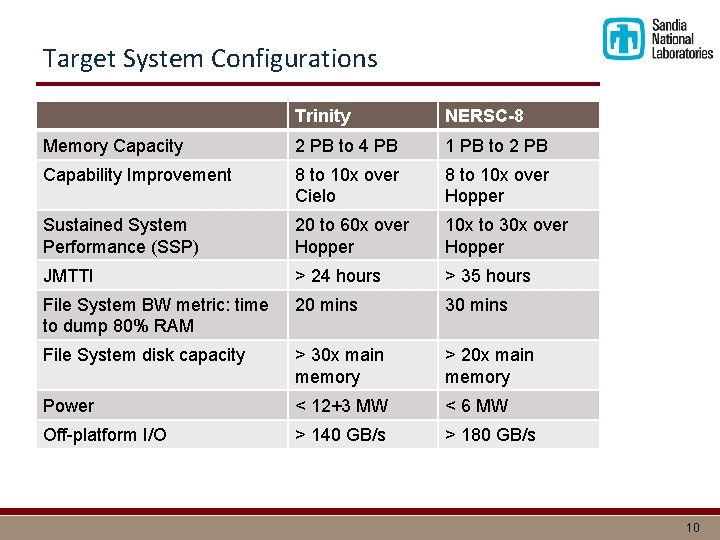

Target System Configurations

| Metric | Trinity | NERSC-8 |
| --- | --- | --- |
| Memory Capacity | 2 PB to 4 PB | 1 PB to 2 PB |
| Capability Improvement | 8 to 10x over Cielo | 8 to 10x over Hopper |
| Sustained System Performance (SSP) | 20 to 60x over Hopper | 10 to 30x over Hopper |
| JMTTI | > 24 hours | > 35 hours |
| File System BW metric: time to dump 80% RAM | 20 mins | 30 mins |
| File System disk capacity | > 30x main memory | > 20x main memory |
| Power | < 12+3 MW | < 6 MW |
| Off-platform I/O | > 140 GB/s | > 180 GB/s |
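The file system bandwidth metric in the target configurations implies a sustained write rate once a memory size is fixed. The sketch below works through that arithmetic. It assumes decimal units (1 PB = 10^15 bytes) and that exactly 80% of memory is written in exactly the target time; the slide states neither, and the function name is illustrative.

```python
PB = 10**15  # assumption: decimal petabyte
TB = 10**12  # assumption: decimal terabyte


def implied_dump_bandwidth(memory_bytes, dump_minutes, fraction=0.8):
    """Bytes per second needed to write `fraction` of memory in `dump_minutes`."""
    return fraction * memory_bytes / (dump_minutes * 60)


# Memory ranges and dump-time targets taken from the slide's table.
targets = {
    "Trinity": {"memory_pb": (2, 4), "dump_minutes": 20},
    "NERSC-8": {"memory_pb": (1, 2), "dump_minutes": 30},
}

for name, t in targets.items():
    low, high = (
        implied_dump_bandwidth(m * PB, t["dump_minutes"]) / TB
        for m in t["memory_pb"]
    )
    print(f"{name}: {low:.2f} to {high:.2f} TB/s")
```

Under these assumptions the Trinity target works out to roughly 1.3 to 2.7 TB/s and the NERSC-8 target to roughly 0.4 to 0.9 TB/s.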

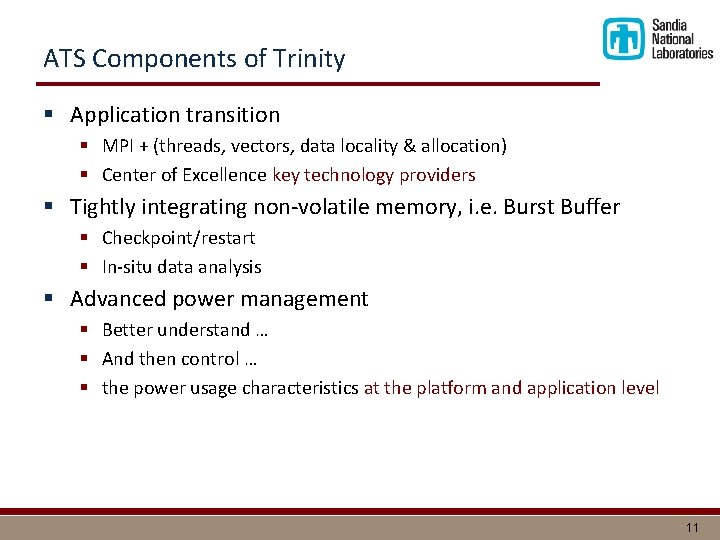

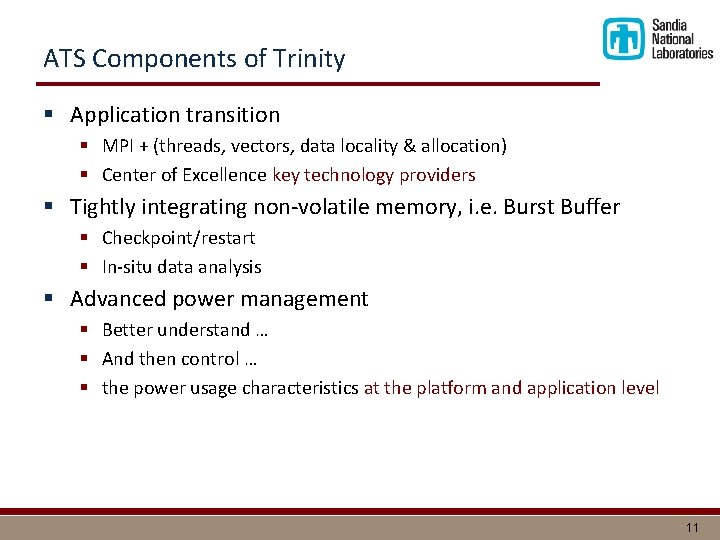

ATS Components of Trinity

- Application transition
  - MPI + (threads, vectors, data locality & allocation)
  - Center of Excellence key technology providers
- Tightly integrating non-volatile memory, i.e. Burst Buffer
  - Checkpoint/restart
  - In-situ data analysis
- Advanced power management
  - Better understand, and then control, the power usage characteristics at the platform and application level

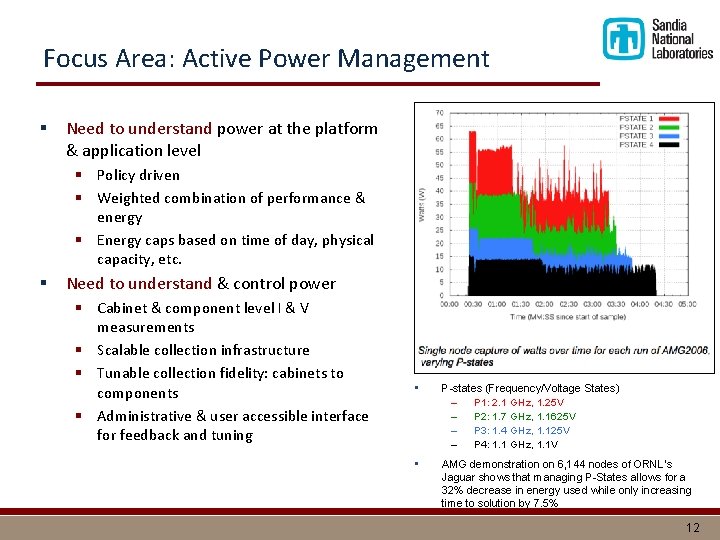

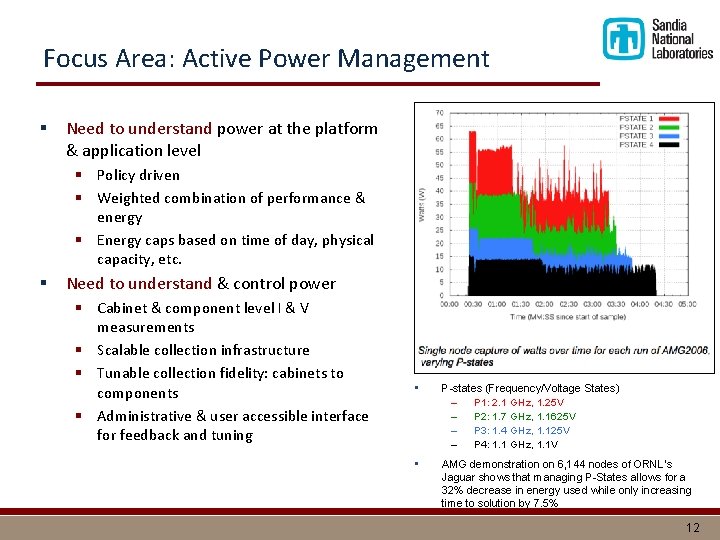

Focus Area: Active Power Management

- Need to understand power at the platform & application level
- Policy driven
  - Weighted combination of performance & energy
  - Energy caps based on time of day, physical capacity, etc.
- Need to understand & control power
  - Cabinet & component level I & V measurements
  - Scalable collection infrastructure
  - Tunable collection fidelity: cabinets to components
  - Administrative & user accessible interface for feedback and tuning
- P-states (Frequency/Voltage States)
  - P1: 2.1 GHz, 1.25 V
  - P2: 1.7 GHz, 1.1625 V
  - P3: 1.4 GHz, 1.125 V
  - P4: 1.1 GHz, 1.1 V
- AMG demonstration on 6,144 nodes of ORNL’s Jaguar shows that managing P-states allows for a 32% decrease in energy used while only increasing time to solution by 7.5%
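The AMG result can be restated as derived figures that are easier to compare against a power or energy policy. The sketch below computes the implied average power ratio and an energy-delay product relative to the baseline run. Only the 32% and 7.5% figures come from the slide. The function name is illustrative, and energy-delay product is just one possible way to combine performance and energy, not a metric the slide names.

```python
def tradeoff_metrics(energy_change, time_change):
    """Derive relative metrics from fractional changes versus a baseline run.

    energy_change: e.g. -0.32 for a 32% decrease in energy used
    time_change:   e.g. +0.075 for a 7.5% increase in time to solution
    """
    energy_ratio = 1.0 + energy_change
    time_ratio = 1.0 + time_change
    return {
        "energy_ratio": energy_ratio,
        "time_ratio": time_ratio,
        # Average power = energy / time, so the ratios divide.
        "avg_power_ratio": energy_ratio / time_ratio,
        # Energy-delay product (assumed metric): energy multiplied by time.
        "energy_delay_ratio": energy_ratio * time_ratio,
    }


metrics = tradeoff_metrics(energy_change=-0.32, time_change=0.075)
for key, value in metrics.items():
    print(f"{key}: {value:.3f}")
```

With the slide's numbers, average power comes out about 37% below the baseline and the energy-delay product about 27% below it.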

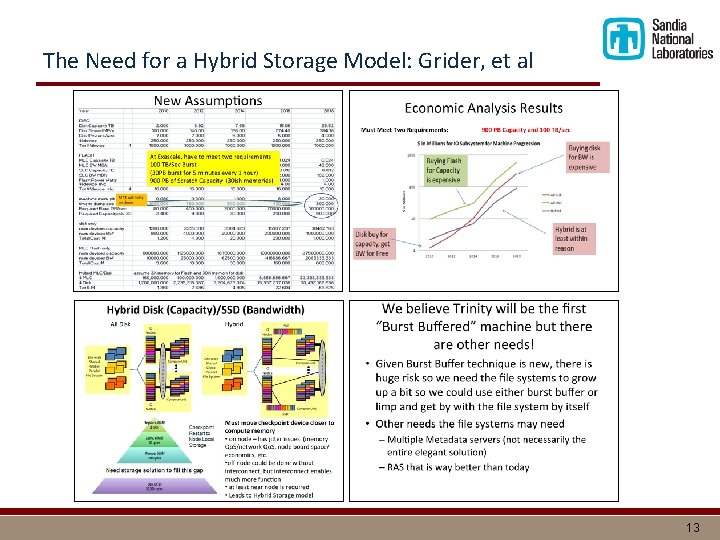

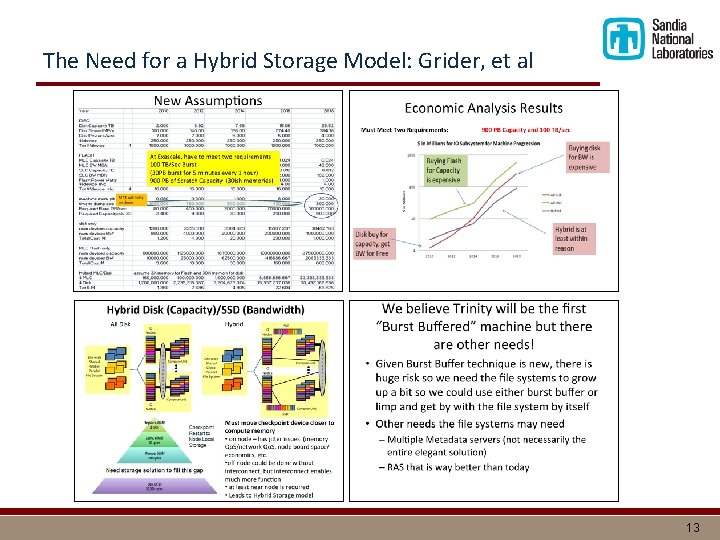

The Need for a Hybrid Storage Model: Grider et al.

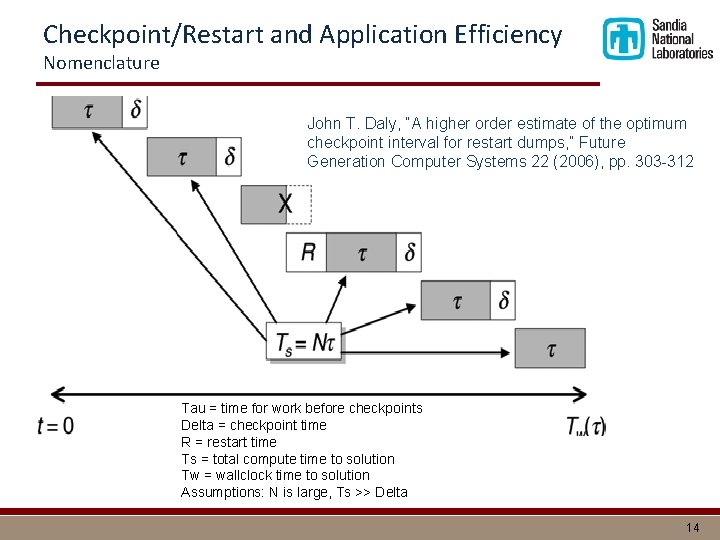

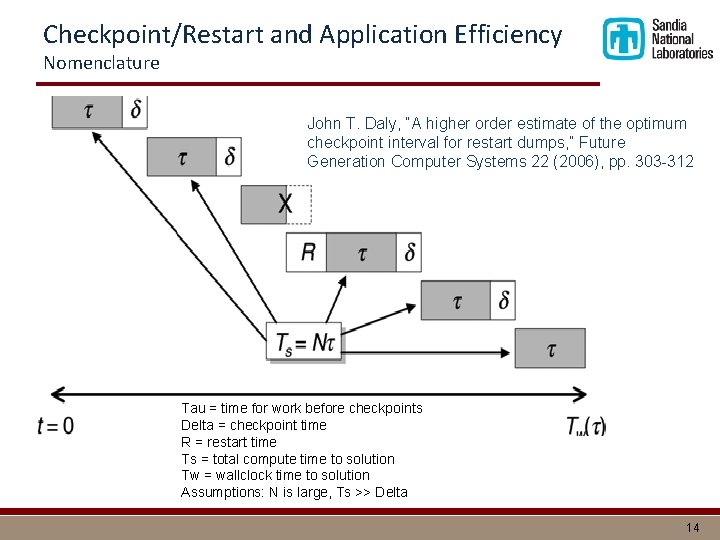

Checkpoint/Restart and Application Efficiency

Nomenclature, from John T. Daly, “A higher order estimate of the optimum checkpoint interval for restart dumps,” Future Generation Computer Systems 22 (2006), pp. 303-312:

- Tau = time for work before checkpoints
- Delta = checkpoint time
- R = restart time
- Ts = total compute time to solution
- Tw = wallclock time to solution

Assumptions: N is large, Ts >> Delta
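For reference, the estimate from the cited Daly paper can be written in this nomenclature, with $M$ denoting the mean time to interrupt (JMTTI in this deck). The formulas below are restated from the paper as it is commonly quoted, not taken from the slide; the paper itself gives the derivation and the conditions under which they hold.

```latex
% Wallclock time as a function of the checkpoint interval \tau (valid for \delta \ll T_s)
T_w(\tau) = M \, e^{R/M} \left( e^{(\tau + \delta)/M} - 1 \right) \frac{T_s}{\tau}

% Higher-order estimate of the optimum checkpoint interval
\tau_{\mathrm{opt}} =
\begin{cases}
\sqrt{2 \delta M} \left[ 1 + \dfrac{1}{3} \left( \dfrac{\delta}{2M} \right)^{1/2}
  + \dfrac{1}{9} \left( \dfrac{\delta}{2M} \right) \right] - \delta, & \delta < 2M \\[2ex]
M, & \delta \ge 2M
\end{cases}
```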

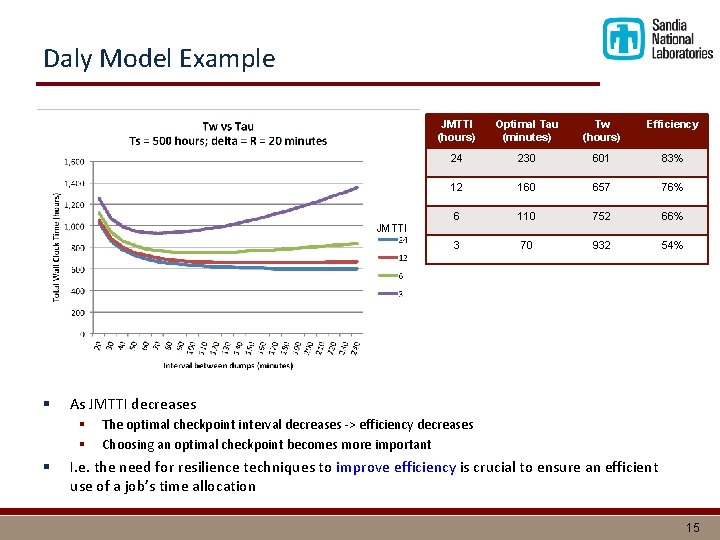

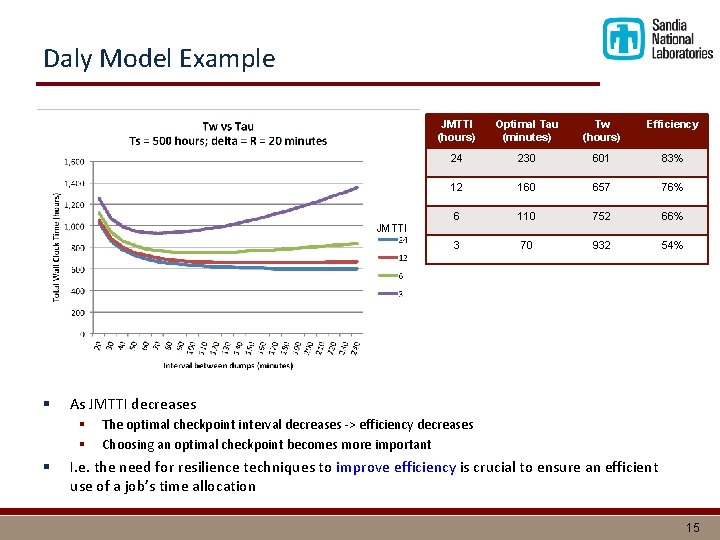

Daly Model Example

| JMTTI (hours) | Optimal Tau (minutes) | Tw (hours) | Efficiency |
| --- | --- | --- | --- |
| 24 | 230 | 601 | 83% |
| 12 | 160 | 657 | 76% |
| 6 | 110 | 752 | 66% |
| 3 | 70 | 932 | 54% |

As JMTTI decreases:

- The optimal checkpoint interval decreases, so efficiency decreases
- Choosing an optimal checkpoint interval becomes more important
- I.e. the need for resilience techniques to improve efficiency is crucial to ensure an efficient use of a job’s time allocation
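A minimal sketch of how numbers like these can be produced from the Daly model, using the formulas from the cited paper as commonly quoted. The slide does not give the inputs behind its example. The values below (a 20 minute checkpoint, a 20 minute restart, and 500 hours of compute) are assumptions, chosen because 20 minutes is Trinity's dump-time target and because they appear to reproduce the slide's Tw column to within rounding. All function and constant names are illustrative.

```python
import math


def daly_optimal_interval(delta, mtti):
    """Higher-order estimate of the optimum compute time between checkpoints.

    delta and mtti must be in the same time units; the result is in those units.
    """
    if delta >= 2.0 * mtti:
        return mtti
    x = delta / (2.0 * mtti)
    return math.sqrt(2.0 * delta * mtti) * (1.0 + math.sqrt(x) / 3.0 + x / 9.0) - delta


def daly_wallclock(ts, tau, delta, restart, mtti):
    """Expected wallclock time to complete `ts` of work with interval `tau`."""
    return mtti * math.exp(restart / mtti) * (math.exp((tau + delta) / mtti) - 1.0) * ts / tau


# Assumed inputs (not stated on the slide), all in minutes.
DELTA_MIN = 20.0      # checkpoint time
RESTART_MIN = 20.0    # restart time
TS_MIN = 500.0 * 60   # total compute time to solution

for jmtti_hours in (24, 12, 6, 3):
    mtti = jmtti_hours * 60.0
    tau = daly_optimal_interval(DELTA_MIN, mtti)
    tw = daly_wallclock(TS_MIN, tau, DELTA_MIN, RESTART_MIN, mtti)
    print(
        f"JMTTI {jmtti_hours:>2} h: tau = {tau:6.1f} min, "
        f"Tw = {tw / 60:6.1f} h, efficiency = {TS_MIN / tw:.1%}"
    )
```

The computed intervals are not rounded, so they differ slightly from the slide's Tau column, which appears to be rounded to the nearest 10 minutes.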

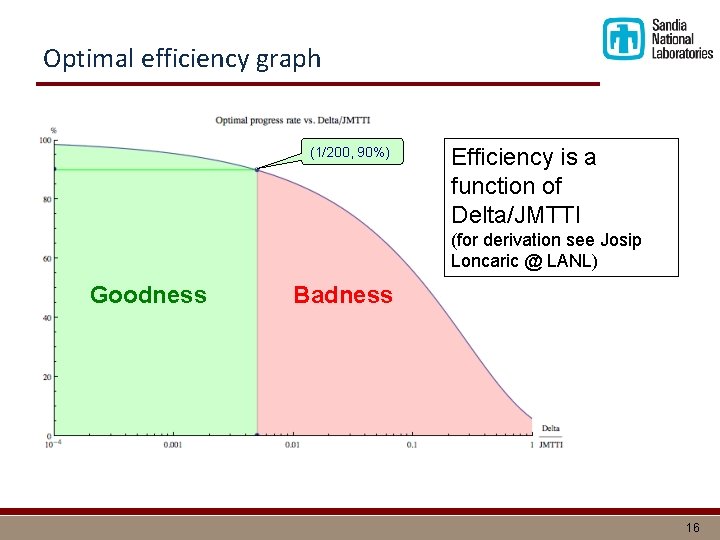

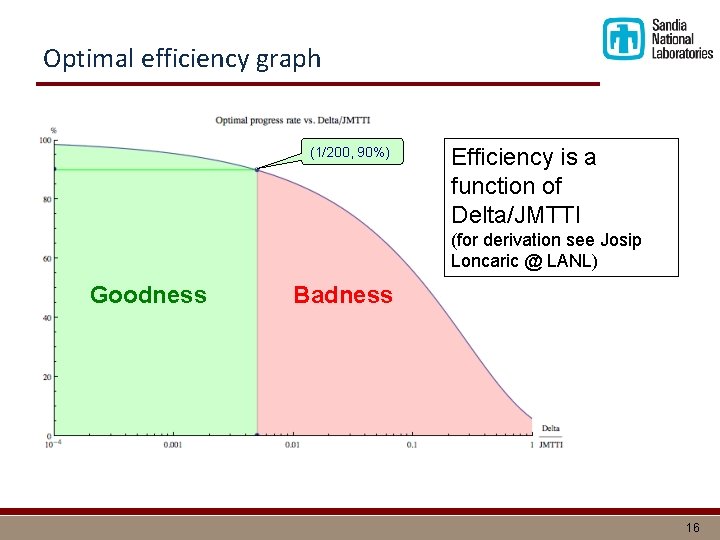

Optimal efficiency graph (figure): efficiency as a function of Delta/JMTTI (for derivation see Josip Loncaric @ LANL). Marked point: (1/200, 90%). Axis annotations: Goodness, Badness.
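A first-order argument shows why efficiency depends only on the ratio Delta/JMTTI and why the point (1/200, 90%) is plausible. This is the classic first-order approximation, which ignores restart time and higher-order terms; it is not the derivation by Loncaric that the slide refers to. Here $M$ stands for JMTTI.

```latex
% Fraction of time lost: checkpoint cost per interval plus expected rework per failure
\text{overhead}(\tau) \approx \frac{\delta}{\tau} + \frac{\tau}{2M}

% Minimizing over \tau
\tau_{\mathrm{opt}} \approx \sqrt{2 \delta M}
\quad\Longrightarrow\quad
\text{overhead}(\tau_{\mathrm{opt}}) \approx \sqrt{\frac{2\delta}{M}}

% Efficiency depends only on \delta / M
\text{efficiency} \approx 1 - \sqrt{\frac{2\delta}{M}},
\qquad
\frac{\delta}{M} = \frac{1}{200}
\;\Longrightarrow\;
\text{efficiency} \approx 1 - \sqrt{0.01} = 0.90
```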

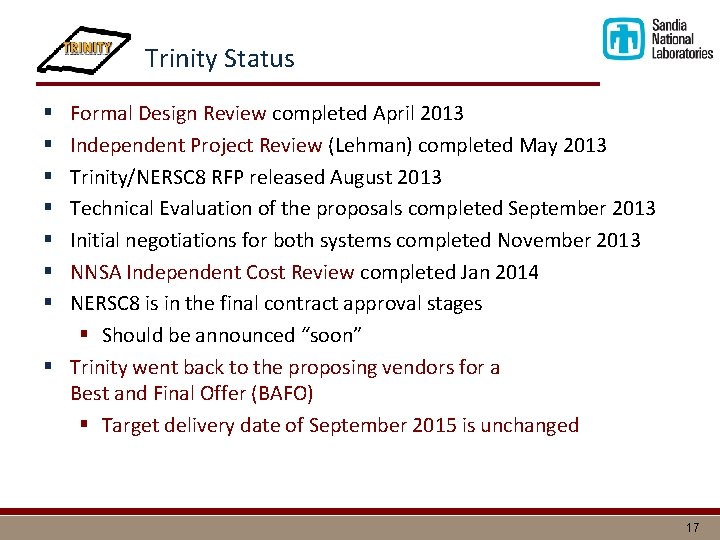

Trinity Status

- Formal Design Review completed April 2013
- Independent Project Review (Lehman) completed May 2013
- Trinity/NERSC-8 RFP released August 2013
- Technical Evaluation of the proposals completed September 2013
- Initial negotiations for both systems completed November 2013
- NNSA Independent Cost Review completed Jan 2014
- NERSC-8 is in the final contract approval stages
  - Should be announced “soon”
- Trinity went back to the proposing vendors for a Best and Final Offer (BAFO)
  - Target delivery date of September 2015 is unchanged

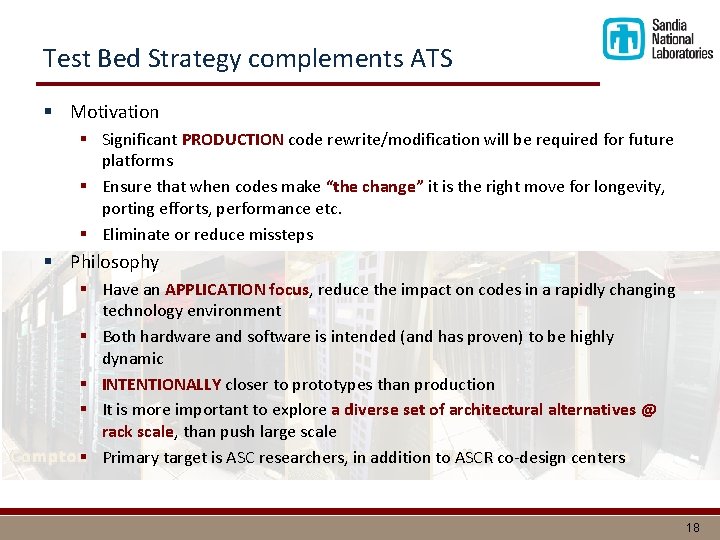

Test Bed Strategy complements ATS

- Motivation
  - Significant PRODUCTION code rewrite/modification will be required for future platforms
  - Ensure that when codes make “the change” it is the right move for longevity, porting efforts, performance etc.
  - Eliminate or reduce missteps
- Philosophy
  - Have an APPLICATION focus, reduce the impact on codes in a rapidly changing technology environment
  - Both hardware and software are intended (and have proven) to be highly dynamic
  - INTENTIONALLY closer to prototypes than production
  - It is more important to explore a diverse set of architectural alternatives at rack scale than to push large scale
- Primary target is ASC researchers, in addition to ASCR co-design centers

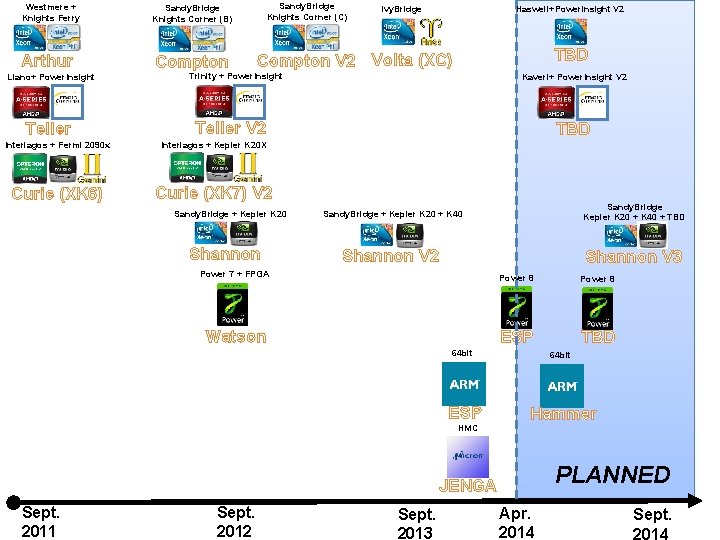

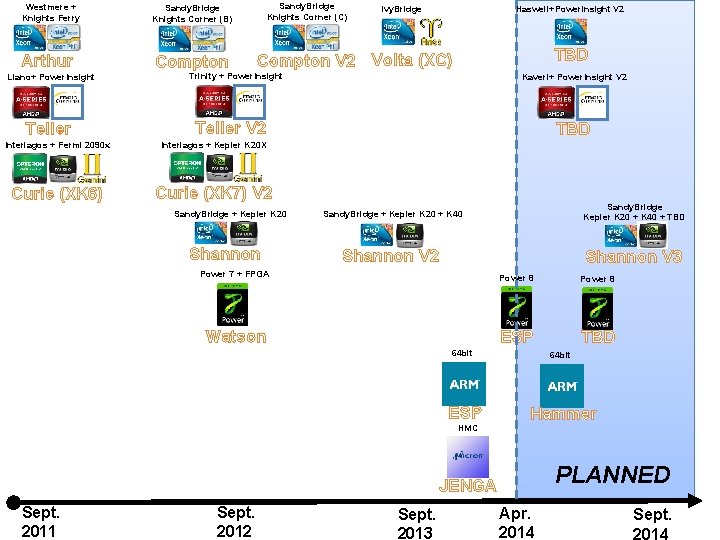

Test bed timeline (figure): Sept. 2011, Sept. 2012, Sept. 2013, Apr. 2014, Sept. 2014 (planned).

Labels in the figure, in extraction order: Westmere + Knights Ferry; Arthur; Llano + PowerInsight; Teller; Sandy Bridge, Knights Corner (B); Sandy Bridge, Knights Corner (C); Compton; V2; Haswell + PowerInsight V2; Ivy Bridge; TBD; Volta (XC); Trinity + PowerInsight; Kaveri + PowerInsight V2; Teller V2; Interlagos + Fermi 2090X; Interlagos + Kepler K20X; Curie (XK6); Curie (XK7); V2; Sandy Bridge + Kepler K20; Shannon; TBD; Sandy Bridge + Kepler K20 + K40; Sandy Bridge, Kepler K20 + K40 + TBD; Shannon V2; Shannon V3; Power7 + FPGA; Watson; Power8 ESP; TBD; 64-bit ESP; 64-bit; Hammer; HMC; JENGA.

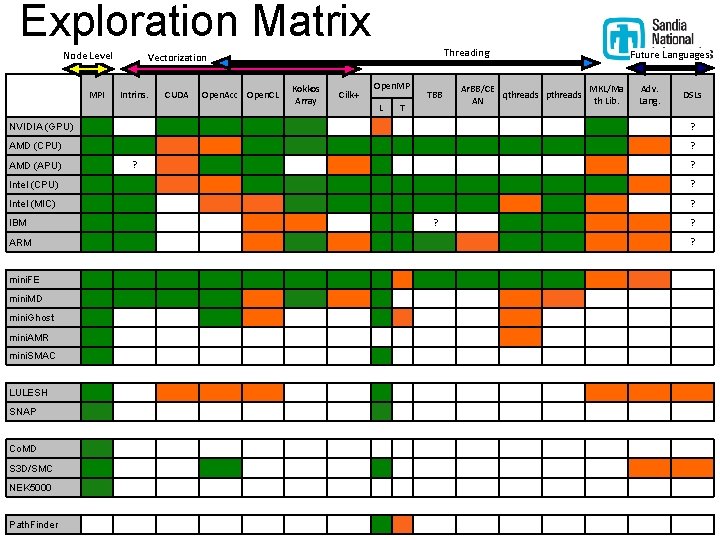

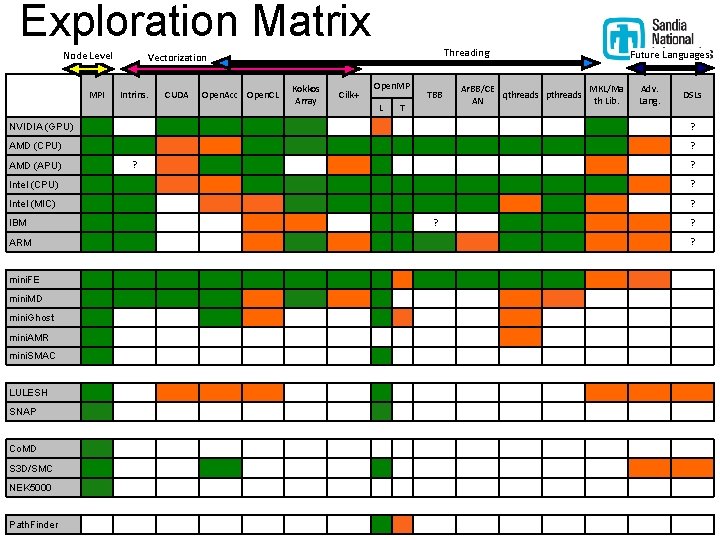

Exploration Matrix (figure): programming models versus platforms and mini-applications.

- Programming models: node-level MPI, threading, vectorization, intrinsics, CUDA, OpenACC, OpenCL, Kokkos Array, Cilk+, OpenMP, TBB, ArBB/CEAN, MKL/Math Lib., qthreads, pthreads, future languages (advanced languages, DSLs)
- Platforms: NVIDIA (GPU), AMD (CPU), AMD (APU), Intel (CPU), Intel (MIC), IBM, ARM
- Mini-applications and codes: miniFE, miniMD, miniGhost, miniAMR, miniSMAC, LULESH, SNAP, CoMD, S3D/SMC, NEK5000, PathFinder

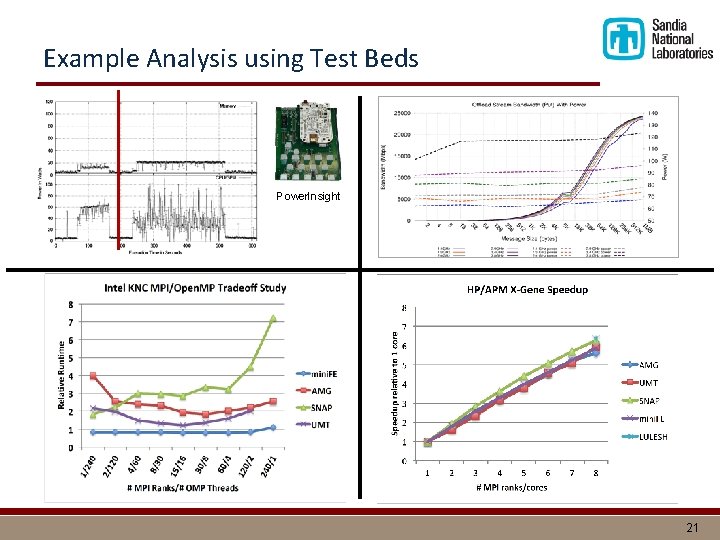

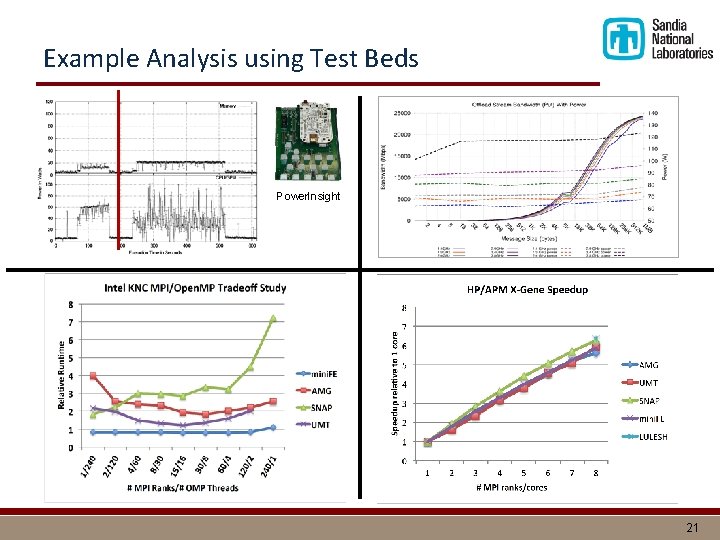

Example Analysis using Test Beds: PowerInsight

Thank You

Questions?

Thank you to the following for content:

- Trinity & NERSC-8 Project Teams
- Thuc Hoang, ASC HQ
- Manuel Vigil, LANL
- Jim Laros, SNL
- Josip Loncaric, LANL
- Simon Hammond, SNL
- and a cast of many others …

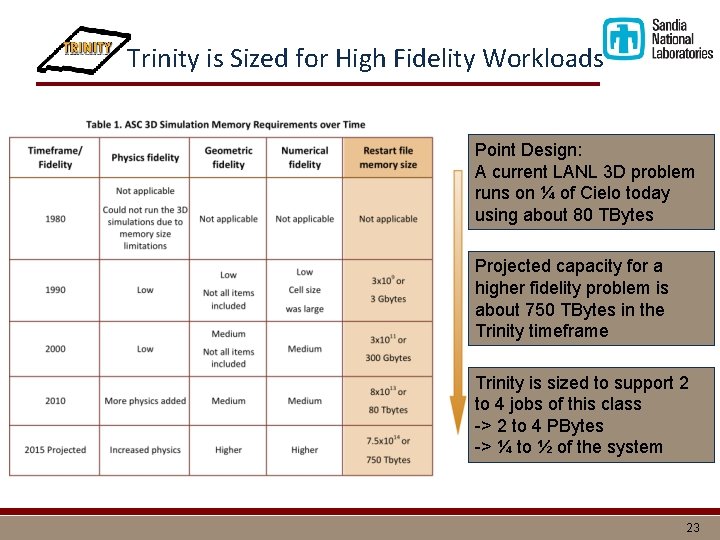

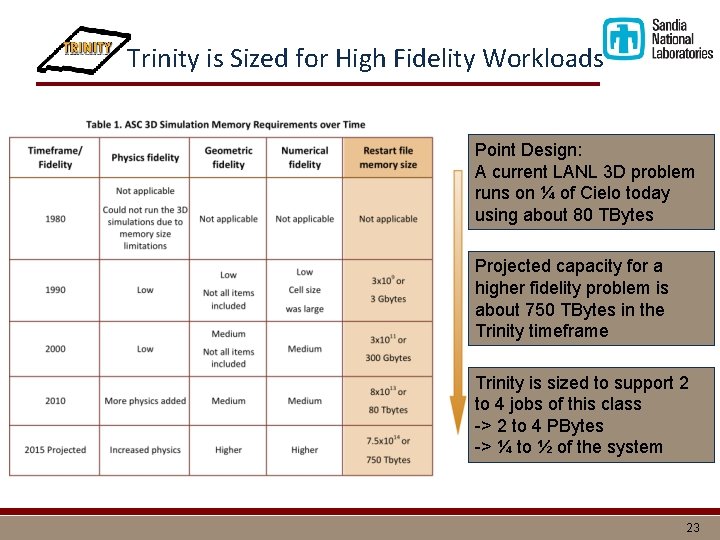

Trinity is Sized for High Fidelity Workloads

- Point design: a current LANL 3D problem runs on ¼ of Cielo today using about 80 TBytes
- Projected capacity for a higher fidelity problem is about 750 TBytes in the Trinity timeframe
- Trinity is sized to support 2 to 4 jobs of this class -> 2 to 4 PBytes -> ¼ to ½ of the system

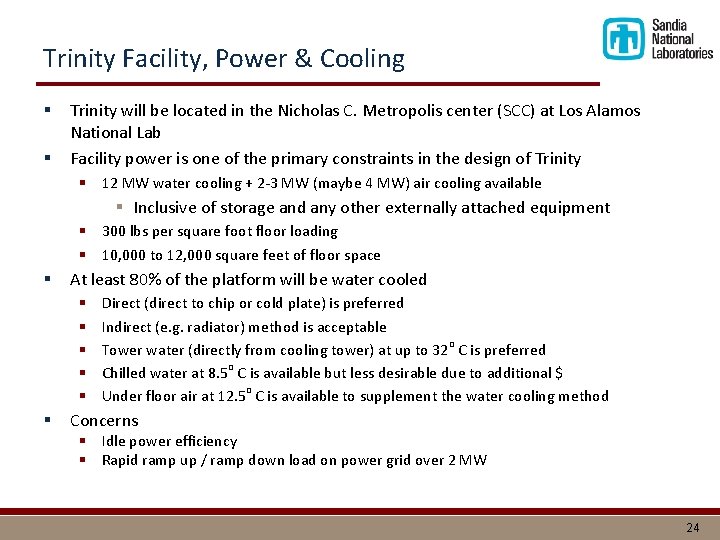

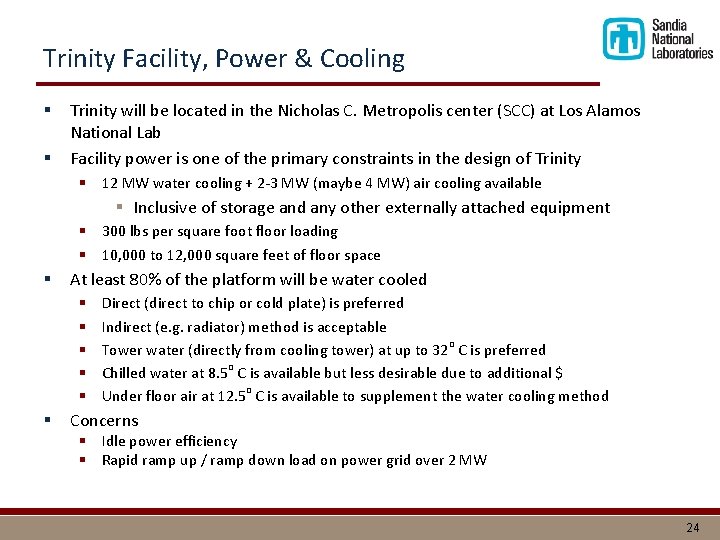

Trinity Facility, Power & Cooling

- Trinity will be located in the Nicholas C. Metropolis Center (SCC) at Los Alamos National Lab
- Facility power is one of the primary constraints in the design of Trinity
  - 12 MW water cooling + 2-3 MW (maybe 4 MW) air cooling available
  - Inclusive of storage and any other externally attached equipment
- 300 lbs per square foot floor loading
- 10,000 to 12,000 square feet of floor space
- At least 80% of the platform will be water cooled
  - Direct (direct to chip or cold plate) is preferred
  - Indirect (e.g. radiator) method is acceptable
  - Tower water (directly from cooling tower) at up to 32 °C is preferred
  - Chilled water at 8.5 °C is available but less desirable due to additional cost
  - Under floor air at 12.5 °C is available to supplement the water cooling method
- Concerns
  - Idle power efficiency
  - Rapid ramp up / ramp down load on power grid over 2 MW