
The Revised SPDG Program Measures: An Overview

Jennifer Coffey, Ph.D., SPDG Program Lead, August 30, 2011

Performance Measures

- Performance Measurement 1: Projects use evidence-based professional development practices to support the attainment of identified competencies.
- Performance Measurement 2: Participants in SPDG professional development demonstrate improvement in implementation of SPDG-supported practices over time.

- Performance Measurement 3: Projects use SPDG professional development funds to provide follow-up activities designed to sustain the use of SPDG-supported practices. (Efficiency Measure)
- Performance Measurement 4: Highly qualified special education teachers that have participated in SPDG-supported special education teacher retention activities remain as special education teachers two years after their initial participation in these activities.

Continuation Reporting

- 2007 grantees will not be using the new program measures.
- Everyone else will have 1 year for practice:
  - Grantees will use the revised measures this year for their APR.
  - This continuation report will be a pilot:
    - OSEP will learn from this round of reports and make changes as appropriate.
    - Your feedback will be appreciated.
  - You may continue to report on the old program measures, if you like.

Change is hard! This pace is much easier:

The Year in Review & Looking Forward

In review:

- Opportunities for OSEP and the Office of Planning, Evaluation, and Policy Development to hear from you
  - 4+ calls for you to give feedback
- A small group to discuss revising the Program Measures and creating the methodology

Thank You

- To all who joined the large group discussions
- To the small working group members: Patti Noonan, Jim Frasier, Susan Williamson, Nikki Sandve, Li Walter, Ed Caffarella, Jon Dyson, Julie Morrison

Opportunities to Learn and Share

- Monthly webinars ("Directors' Calls")
  - Professional Development Series
- Evaluator Community of Practice
- Resource Library
- "Regional Meetings"
- Project Directors' Conference
- PLCs

Looking Forward

- Directors' Calls focused on the specific measures and the overall "how-to"
  - Please provide feedback about the information and assistance you need
- Written guidance and tools to assist you
- A continuation reporting guidance webinar that will focus on the program measures
- We will be learning from you about necessary flexibility (feedback loop)

Performance Measure #1

- Projects use evidence-based professional development practices to support the attainment of identified competencies.

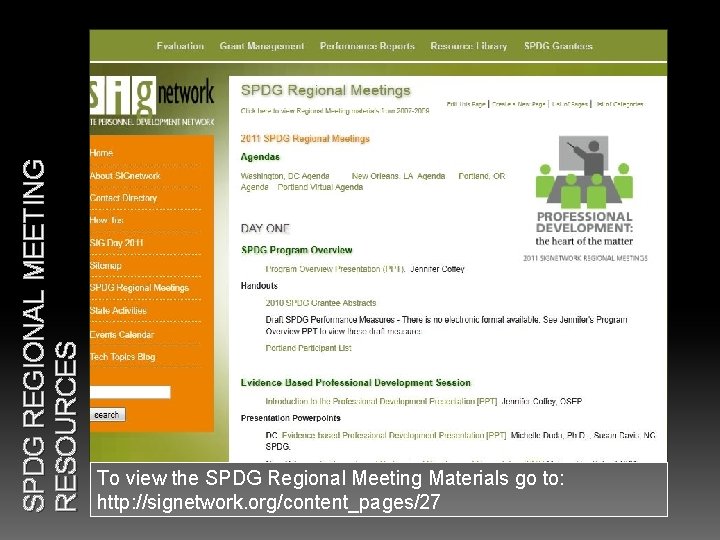

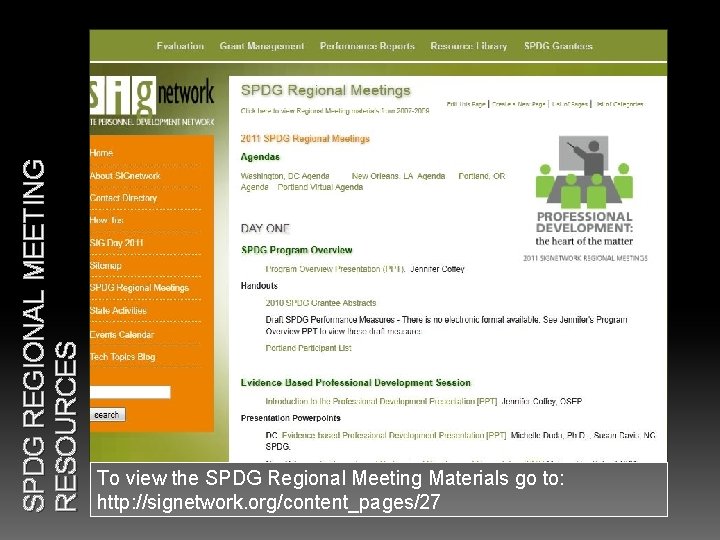

SPDG REGIONAL MEETING RESOURCES

To view the SPDG Regional Meeting materials, go to: http://signetwork.org/content_pages/27

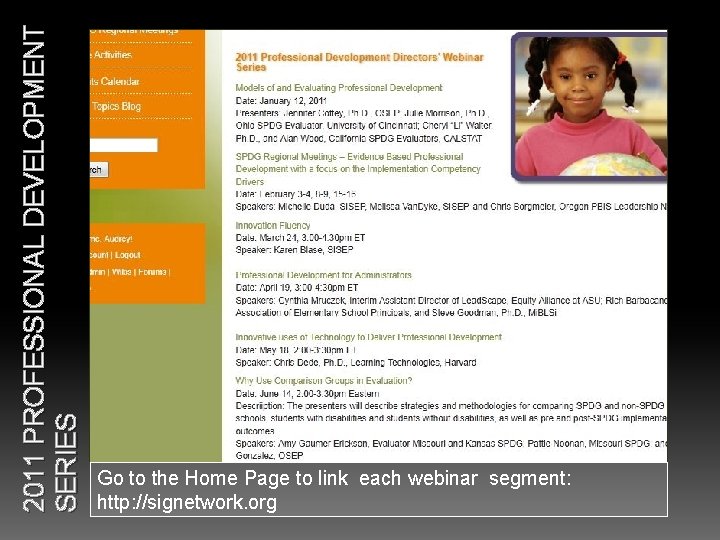

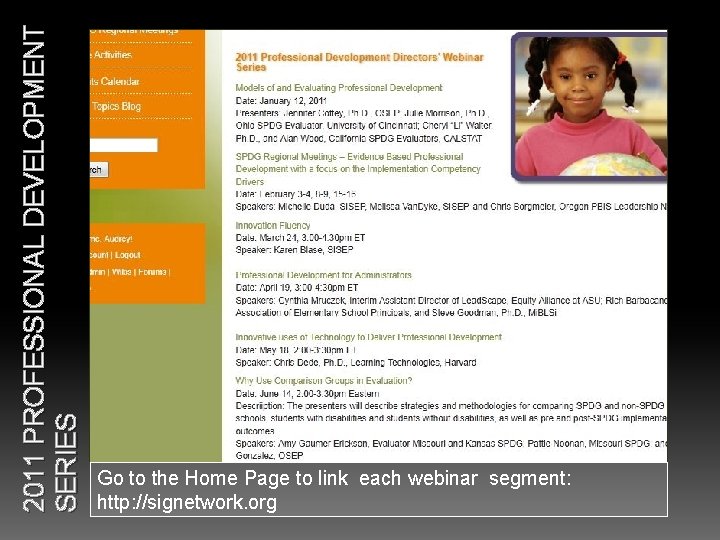

2011 PROFESSIONAL DEVELOPMENT SERIES

Go to the home page for links to each webinar segment: http://signetwork.org

Evidence-based Professional Development

- Models of and Evaluating Professional Development
  - Date: January 12, 3:00-4:30 pm ET
  - Speakers: Julie Morrison, Alan Wood, & Li Walter (SPDG evaluators)
- SPDG Regional Meetings
  - Topic: Evidence-based Professional Development

Evidence-based PD

- Innovation Fluency
  - Date: March 24, 3:00-4:30 pm ET
  - Speaker: Karen Blase, SISEP
- Professional Development for Administrators
  - Date: April 19, 3:00-4:30 pm ET
  - Speakers: Elaine Mulligan, NIUSI Leadscape; Rich Barbacane, National Association of Elementary School Principals
- Using Technology for Professional Development
  - Date: May 18, 2:00-3:30 pm ET
  - Speaker: Chris Dede, Ph.D., Learning Technologies at Harvard's Graduate School of Education

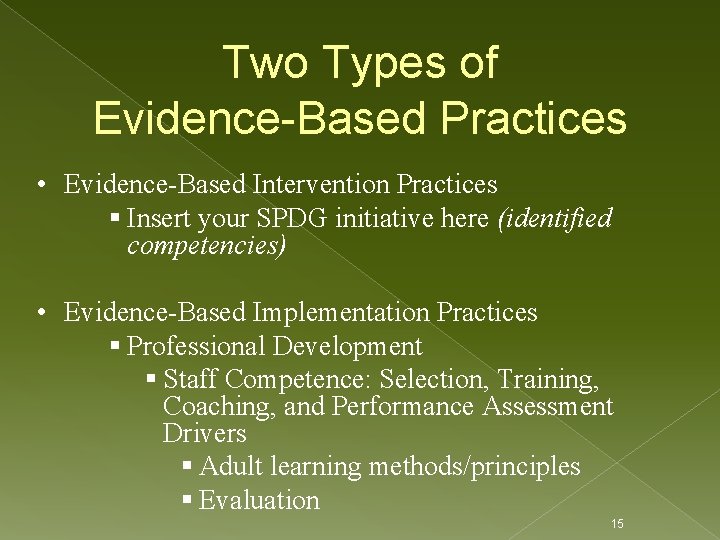

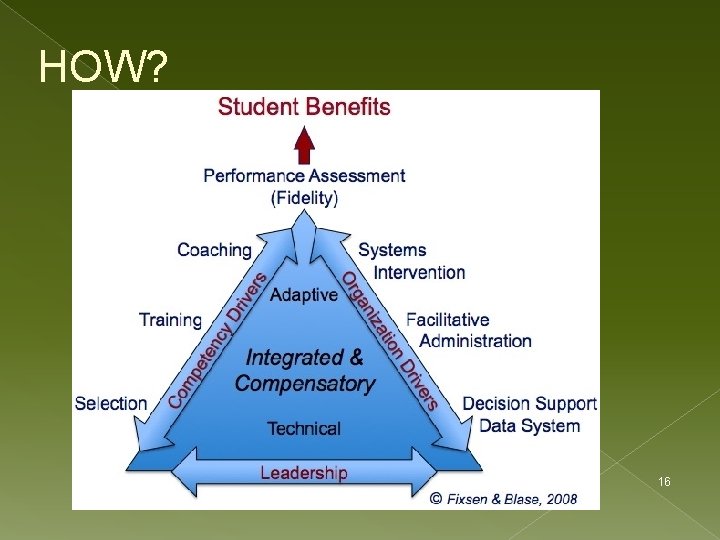

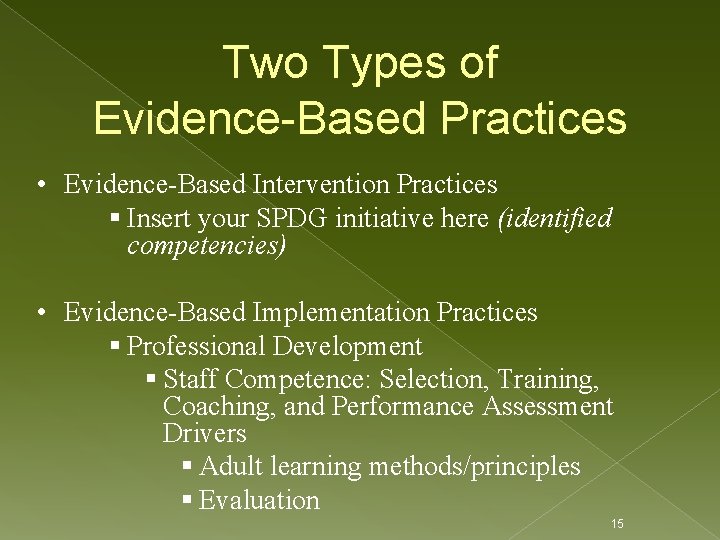

Two Types of Evidence-Based Practices

- Evidence-Based Intervention Practices
  - Insert your SPDG initiative here (identified competencies)
- Evidence-Based Implementation Practices
  - Professional Development
  - Staff Competence: Selection, Training, Coaching, and Performance Assessment Drivers
  - Adult learning methods/principles
  - Evaluation

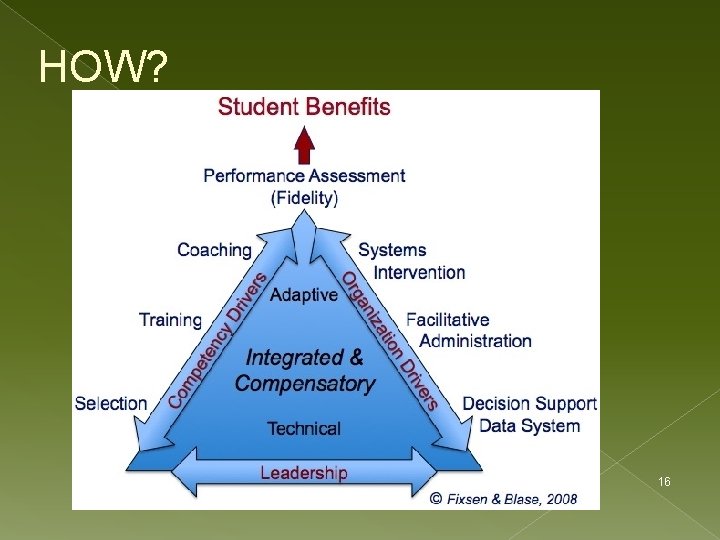

HOW?

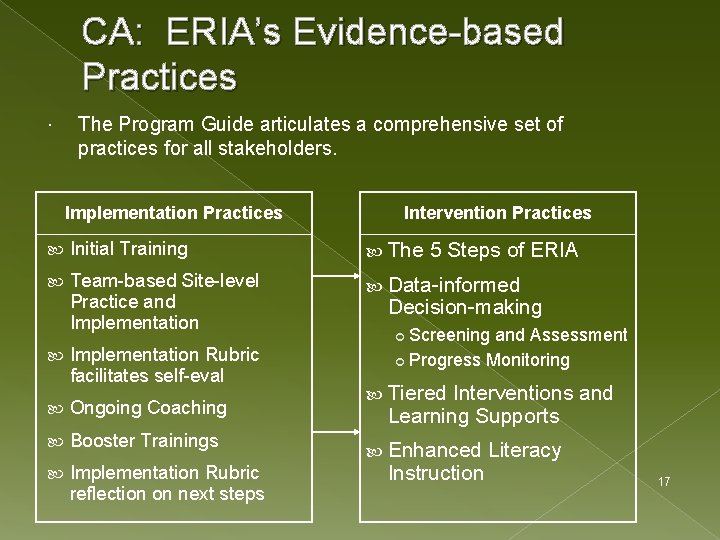

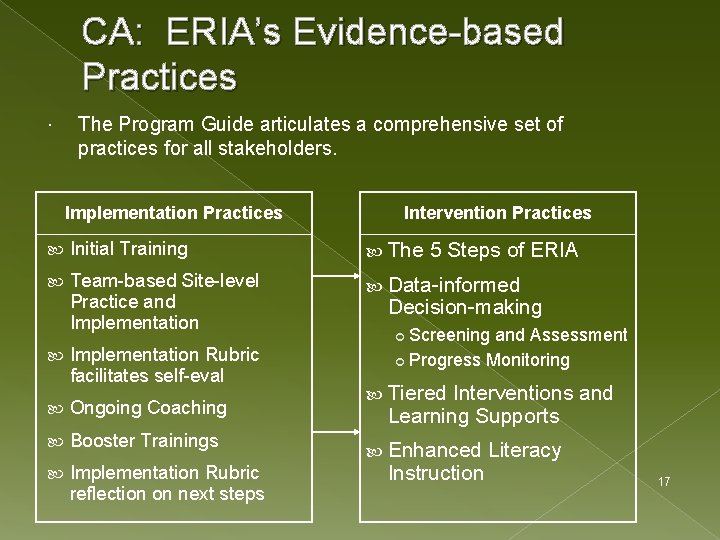

CA: ERIA's Evidence-based Practices

The Program Guide articulates a comprehensive set of practices for all stakeholders.

Implementation Practices:

- Initial Training
- Team-based Site-level Practice and Implementation
- Implementation Rubric facilitates self-evaluation
- Ongoing Coaching
- Booster Trainings
- Implementation Rubric reflection on next steps

Intervention Practices (The 5 Steps of ERIA):

- Data-informed Decision-making
- Screening and Assessment
- Progress Monitoring
- Tiered Interventions and Learning Supports
- Enhanced Literacy Instruction

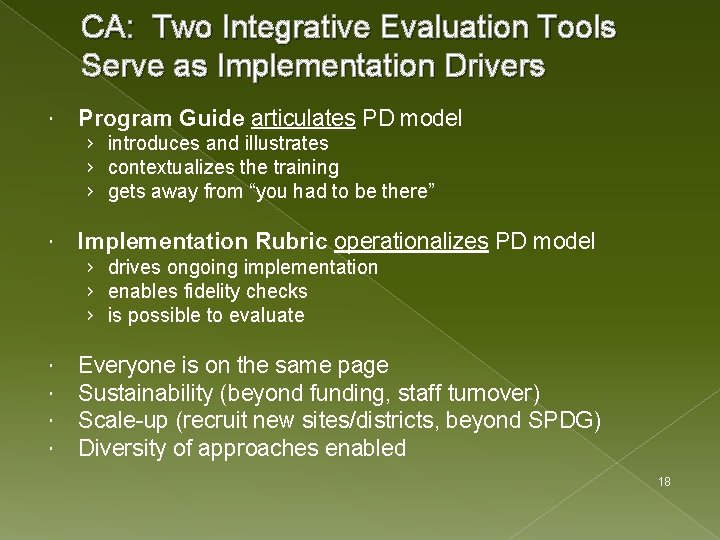

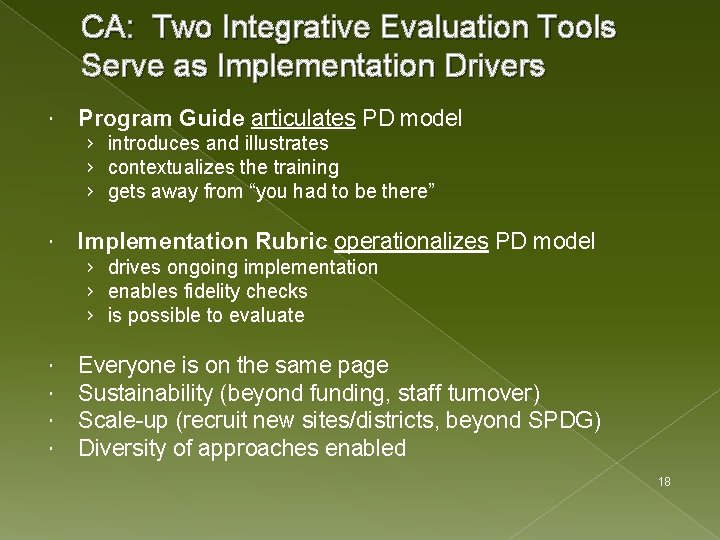

CA: Two Integrative Evaluation Tools Serve as Implementation Drivers

- Program Guide articulates PD model
  - introduces and illustrates
  - contextualizes the training
  - gets away from "you had to be there"
- Implementation Rubric operationalizes PD model
  - drives ongoing implementation
  - enables fidelity checks
  - is possible to evaluate
- Everyone is on the same page
- Sustainability (beyond funding, staff turnover)
- Scale-up (recruit new sites/districts, beyond SPDG)
- Diversity of approaches enabled

HOW?

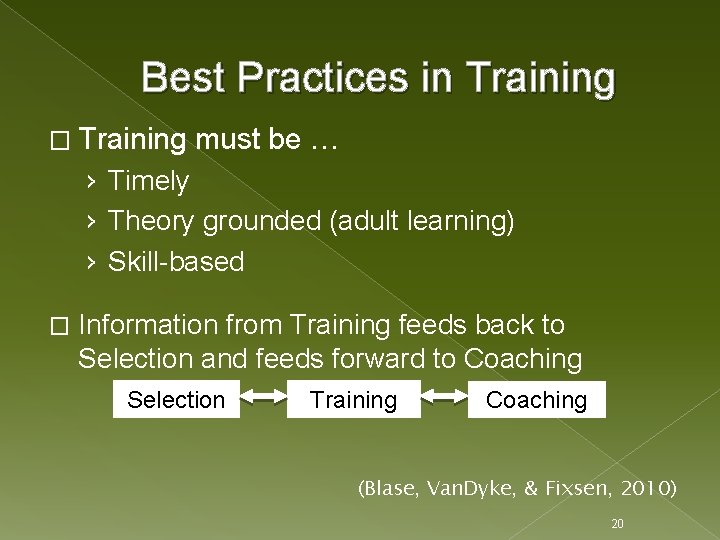

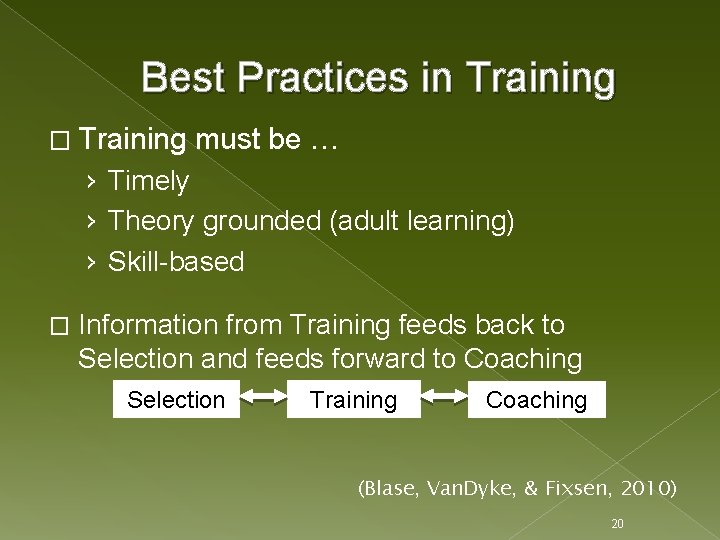

Best Practices in Training

- Training must be:
  - Timely
  - Theory grounded (adult learning)
  - Skill-based
- Information from Training feeds back to Selection and feeds forward to Coaching.

Diagram: Selection, Training, Coaching (Blase, VanDyke, & Fixsen, 2010)

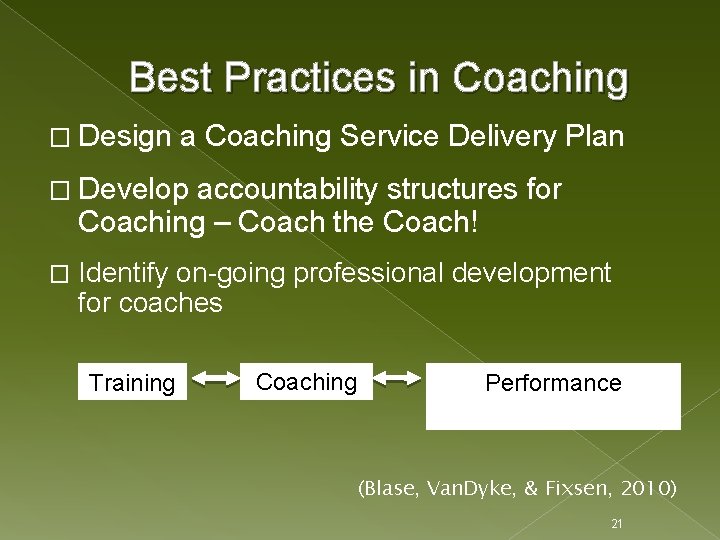

Best Practices in Coaching

- Design a Coaching Service Delivery Plan
- Develop accountability structures for Coaching: Coach the Coach!
- Identify ongoing professional development for coaches

Diagram: Training, Coaching, Performance Assessment (Blase, VanDyke, & Fixsen, 2010)

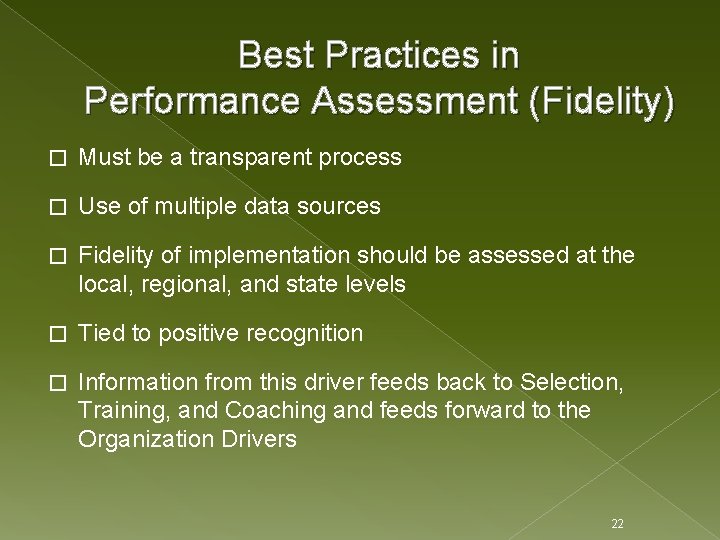

Best Practices in Performance Assessment (Fidelity)

- Must be a transparent process
- Use of multiple data sources
- Fidelity of implementation should be assessed at the local, regional, and state levels
- Tied to positive recognition
- Information from this driver feeds back to Selection, Training, and Coaching and feeds forward to the Organization Drivers

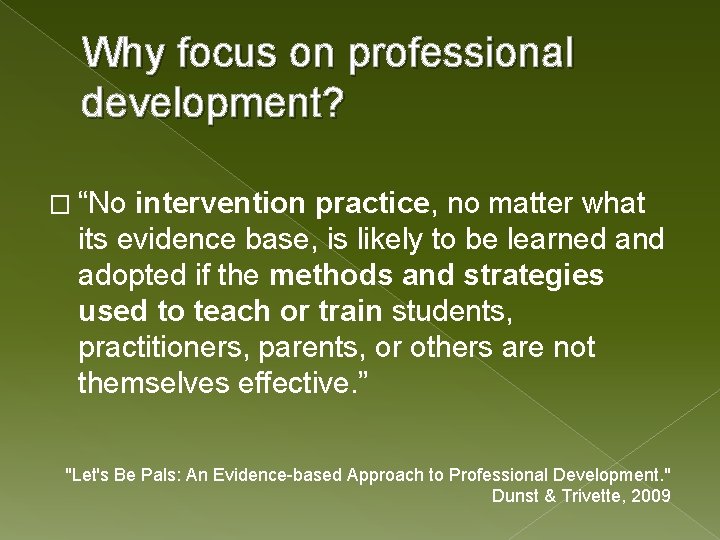

Why focus on professional development?

- "No intervention practice, no matter what its evidence base, is likely to be learned and adopted if the methods and strategies used to teach or train students, practitioners, parents, or others are not themselves effective." (Dunst & Trivette, 2009, "Let's Be Pals: An Evidence-based Approach to Professional Development")

Using Research Findings to Inform Practical Approaches to Evidence-Based Practices

Carl J. Dunst, Ph.D., and Carol M. Trivette, Ph.D., Orelena Hawks Puckett Institute, Asheville and Morganton, North Carolina

Presentation prepared for a webinar with the Knowledge Transfer Group, U.S. Department of Health and Human Services, Children's Bureau Division of Research and Innovation, September 22, 2009

- "Adult learning refers to a collection of theories, methods, and approaches for describing the characteristics of and conditions under which the process of learning is optimized."

Six Characteristics Identified in How People Learn (note a) Were Used to Code and Evaluate the Adult Learning Methods

| Category | Characteristic | Description |
|---|---|---|
| Planning | Introduce | Engage the learner in a preview of the material, knowledge or practice that is the focus of instruction or training |
| Planning | Illustrate | Demonstrate or illustrate the use or applicability of the material, knowledge or practice for the learner |
| Application | Practice | Engage the learner in the use of the material, knowledge or practice |
| Application | Evaluate | Engage the learner in a process of evaluating the consequence or outcome of the application of the material, knowledge or practice |
| Deep Understanding | Reflection | Engage the learner in self-assessment of his or her acquisition of knowledge and skills as a basis for identifying "next steps" in the learning process |
| Deep Understanding | Mastery | Engage the learner in a process of assessing his or her experience in the context of some conceptual or practical model or framework, or some external set of standards or criteria |

Note a: Donovan, M., et al. (Eds.) (1999). How people learn. Washington, DC: National Academy Press.

Additional Translational Synthesis Findings

- The smaller the number of persons participating in a training (<20), the larger the effect sizes for the study outcomes.
- The more hours of training over an extended number of sessions, the better the study outcomes.
- The practices are similarly effective when used in different settings with different types of learners.

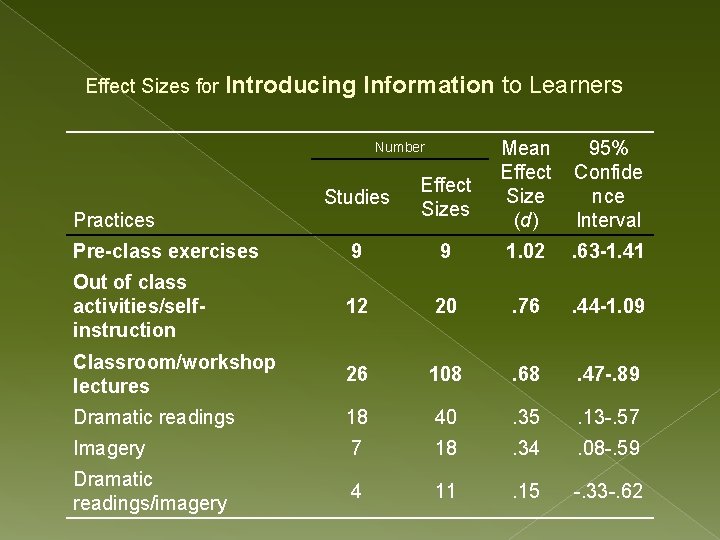

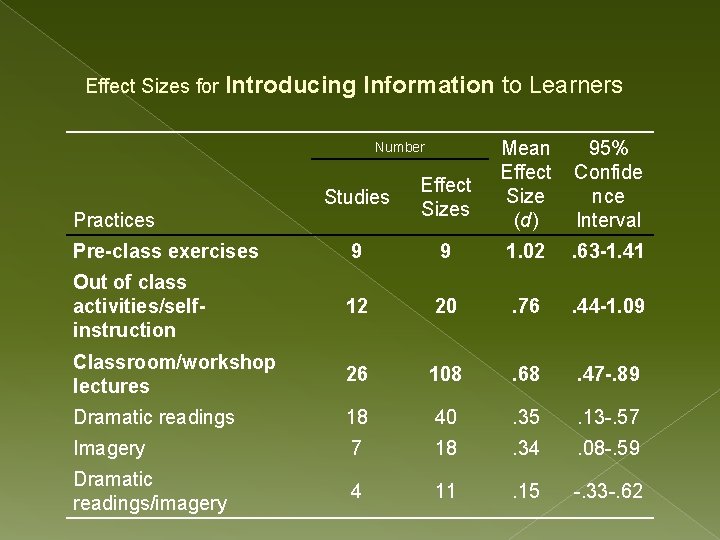

Effect Sizes for Introducing Information to Learners

| Practice | Number of Studies | Number of Effect Sizes | Mean Effect Size (d) | 95% Confidence Interval |
|---|---|---|---|---|
| Pre-class exercises | 9 | 9 | 1.02 | 0.63 to 1.41 |
| Out of class activities/self-instruction | 12 | 20 | 0.76 | 0.44 to 1.09 |
| Classroom/workshop lectures | 26 | 108 | 0.68 | 0.47 to 0.89 |
| Dramatic readings | 18 | 40 | 0.35 | 0.13 to 0.57 |
| Imagery | 7 | 18 | 0.34 | 0.08 to 0.59 |
| Dramatic readings/imagery | 4 | 11 | 0.15 | -0.33 to 0.62 |
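
To make a table like this easier to scan, the sketch below transcribes the "Introducing Information to Learners" rows into Python, ranks the practices by mean effect size, and flags whether each 95% confidence interval excludes zero. The `EffectSize` type and the function names are illustrative, not part of the Dunst and Trivette materials. The standard-error helper assumes a symmetric normal-theory interval (d ± 1.96·SE), which the slide does not state.

```python
from typing import List, NamedTuple, Tuple


class EffectSize(NamedTuple):
    practice: str
    studies: int
    effect_sizes: int
    d: float        # mean effect size
    ci_low: float   # lower bound of the 95% confidence interval
    ci_high: float  # upper bound of the 95% confidence interval


# Rows transcribed from the "Introducing Information to Learners" table.
INTRODUCE: List[EffectSize] = [
    EffectSize("Pre-class exercises", 9, 9, 1.02, 0.63, 1.41),
    EffectSize("Out of class activities/self-instruction", 12, 20, 0.76, 0.44, 1.09),
    EffectSize("Classroom/workshop lectures", 26, 108, 0.68, 0.47, 0.89),
    EffectSize("Dramatic readings", 18, 40, 0.35, 0.13, 0.57),
    EffectSize("Imagery", 7, 18, 0.34, 0.08, 0.59),
    EffectSize("Dramatic readings/imagery", 4, 11, 0.15, -0.33, 0.62),
]


def excludes_zero(row: EffectSize) -> bool:
    """True when the whole 95% CI lies on one side of zero."""
    return row.ci_low > 0 or row.ci_high < 0


def approx_standard_error(row: EffectSize) -> float:
    """Approximate SE, ASSUMING a symmetric interval of the form d +/- 1.96 * SE."""
    return (row.ci_high - row.ci_low) / (2 * 1.96)


def summarize(rows: List[EffectSize]) -> List[Tuple[str, float, bool]]:
    """Rank practices by mean d (largest first) and flag CIs that exclude zero."""
    ranked = sorted(rows, key=lambda r: r.d, reverse=True)
    return [(r.practice, r.d, excludes_zero(r)) for r in ranked]


if __name__ == "__main__":
    for practice, d, clear_of_zero in summarize(INTRODUCE):
        note = "CI excludes 0" if clear_of_zero else "CI includes 0"
        print(f"{d:5.2f}  {note:<14}  {practice}")
```

Run as written, only the dramatic readings/imagery row has an interval that includes zero (-0.33 to 0.62); the other five intervals lie entirely above zero.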

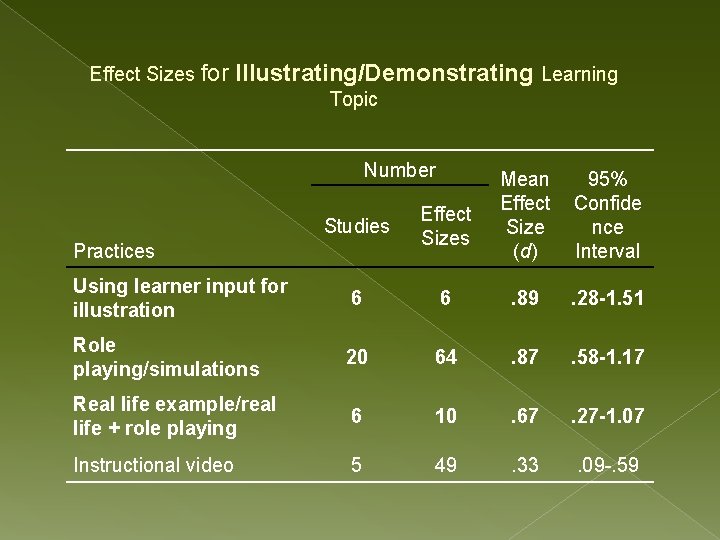

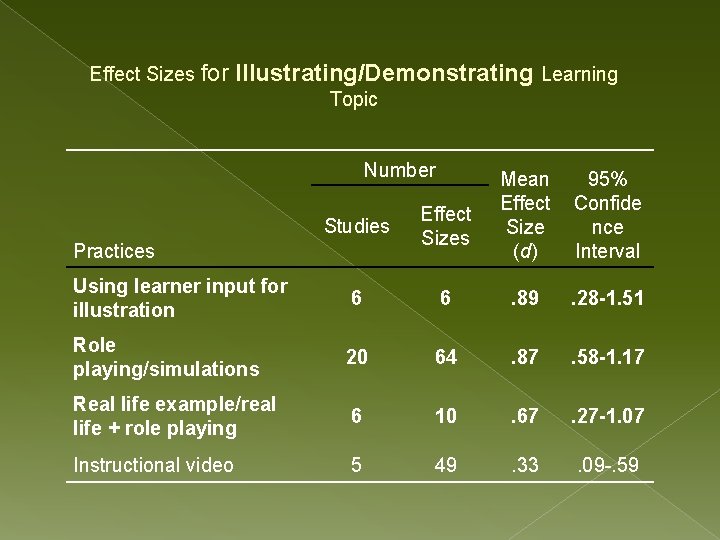

Effect Sizes for Illustrating/Demonstrating Learning Topic

| Practice | Number of Studies | Number of Effect Sizes | Mean Effect Size (d) | 95% Confidence Interval |
|---|---|---|---|---|
| Using learner input for illustration | 6 | 6 | 0.89 | 0.28 to 1.51 |
| Role playing/simulations | 20 | 64 | 0.87 | 0.58 to 1.17 |
| Real life example/real life + role playing | 6 | 10 | 0.67 | 0.27 to 1.07 |
| Instructional video | 5 | 49 | 0.33 | 0.09 to 0.59 |

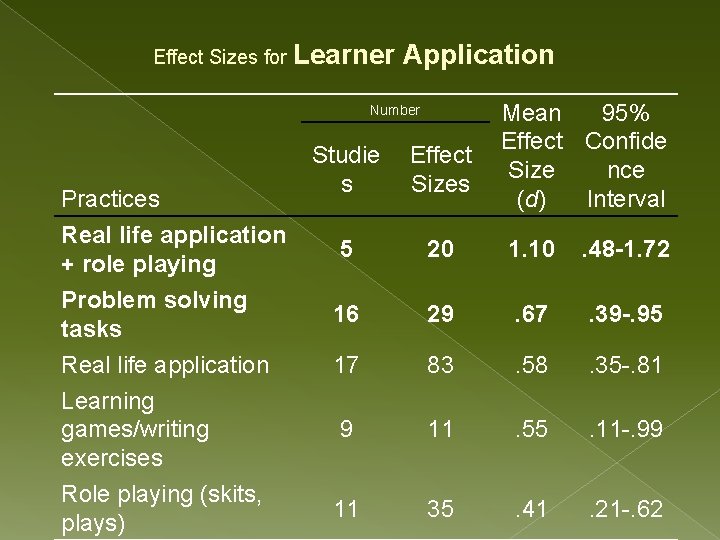

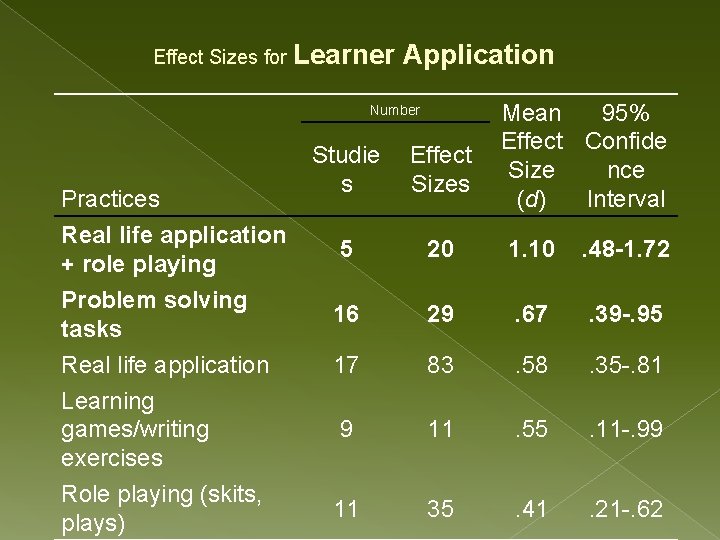

Effect Sizes for Learner Application

| Practice | Number of Studies | Number of Effect Sizes | Mean Effect Size (d) | 95% Confidence Interval |
|---|---|---|---|---|
| Real life application + role playing | 5 | 20 | 1.10 | 0.48 to 1.72 |
| Problem solving tasks | 16 | 29 | 0.67 | 0.39 to 0.95 |
| Real life application | 17 | 83 | 0.58 | 0.35 to 0.81 |
| Learning games/writing exercises | 9 | 11 | 0.55 | 0.11 to 0.99 |
| Role playing (skits, plays) | 11 | 35 | 0.41 | 0.21 to 0.62 |

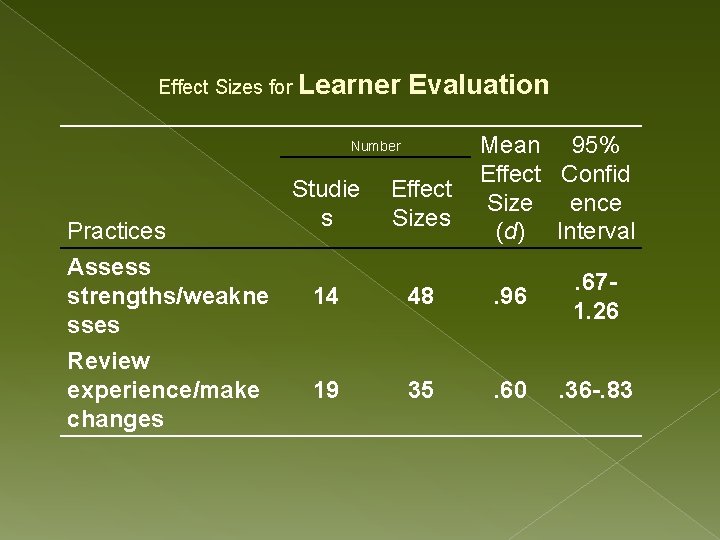

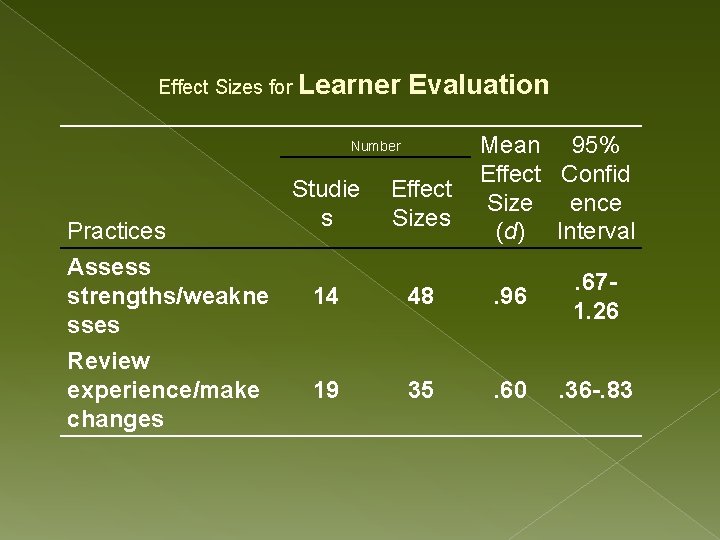

Effect Sizes for Learner Evaluation

| Practice | Number of Studies | Number of Effect Sizes | Mean Effect Size (d) | 95% Confidence Interval |
|---|---|---|---|---|
| Assess strengths/weaknesses | 14 | 48 | 0.96 | 0.67 to 1.26 |
| Review experience/make changes | 19 | 35 | 0.60 | 0.36 to 0.83 |

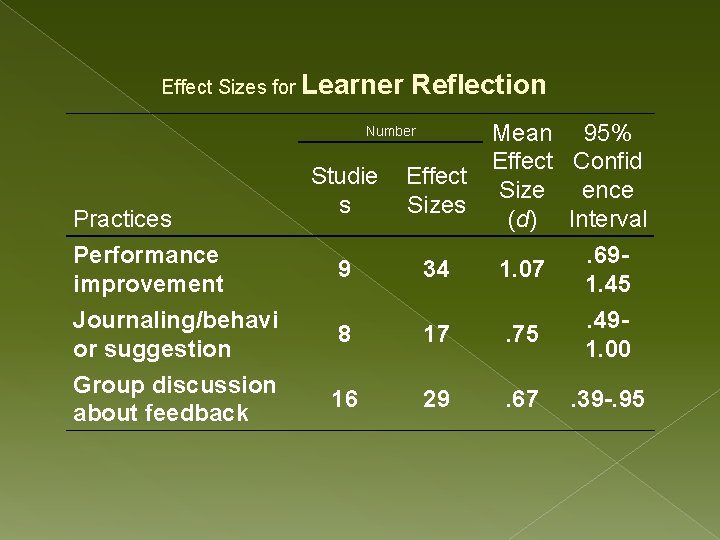

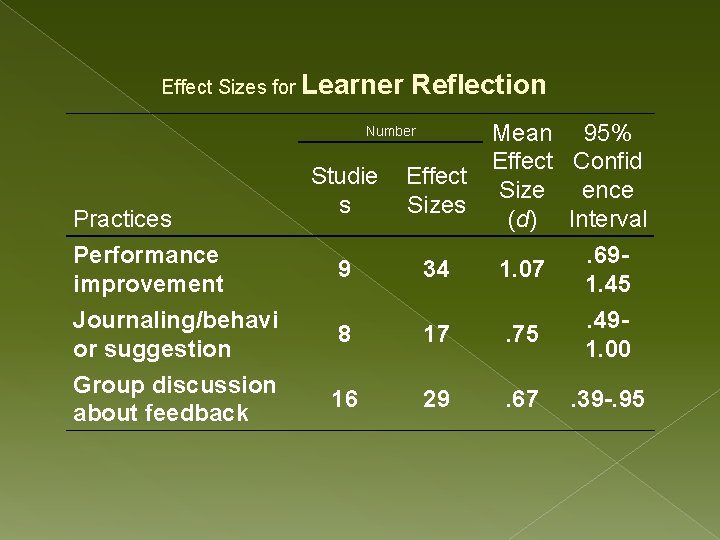

Effect Sizes for Learner Reflection

| Practice | Number of Studies | Number of Effect Sizes | Mean Effect Size (d) | 95% Confidence Interval |
|---|---|---|---|---|
| Performance improvement | 9 | 34 | 1.07 | 0.69 to 1.45 |
| Journaling/behavior suggestion | 8 | 17 | 0.75 | 0.49 to 1.00 |
| Group discussion about feedback | 16 | 29 | 0.67 | 0.39 to 0.95 |

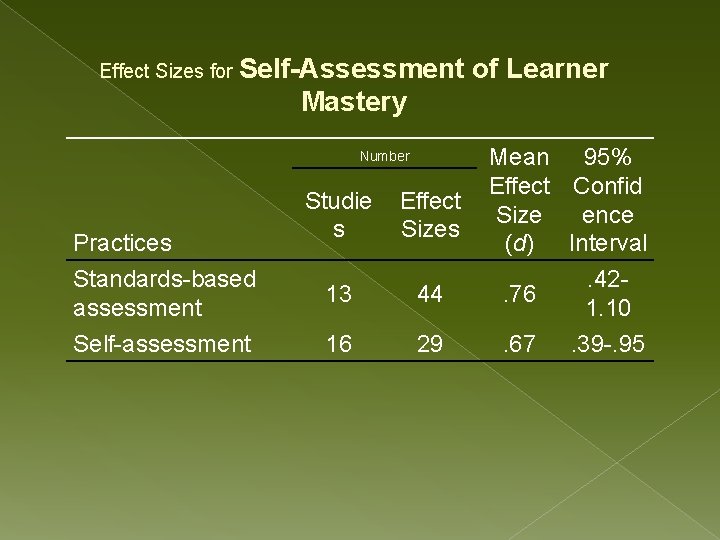

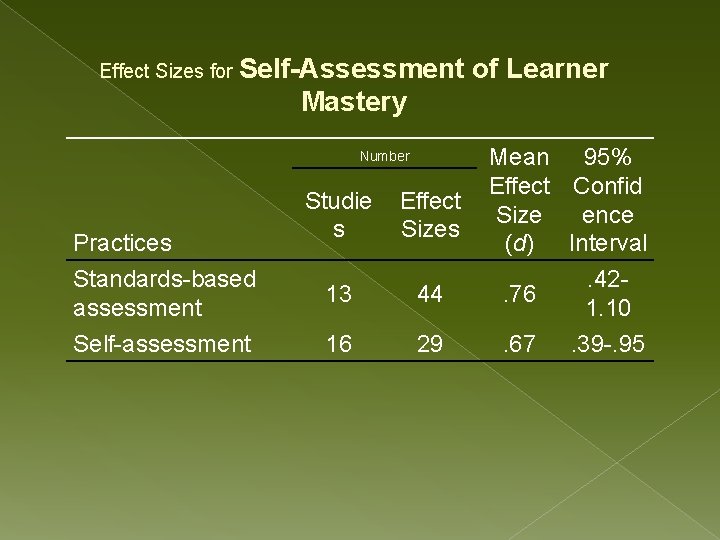

Effect Sizes for Self-Assessment of Learner Mastery

| Practice | Number of Studies | Number of Effect Sizes | Mean Effect Size (d) | 95% Confidence Interval |
|---|---|---|---|---|
| Standards-based assessment | 13 | 44 | 0.76 | 0.42 to 1.10 |
| Self-assessment | 16 | 29 | 0.67 | 0.39 to 0.95 |

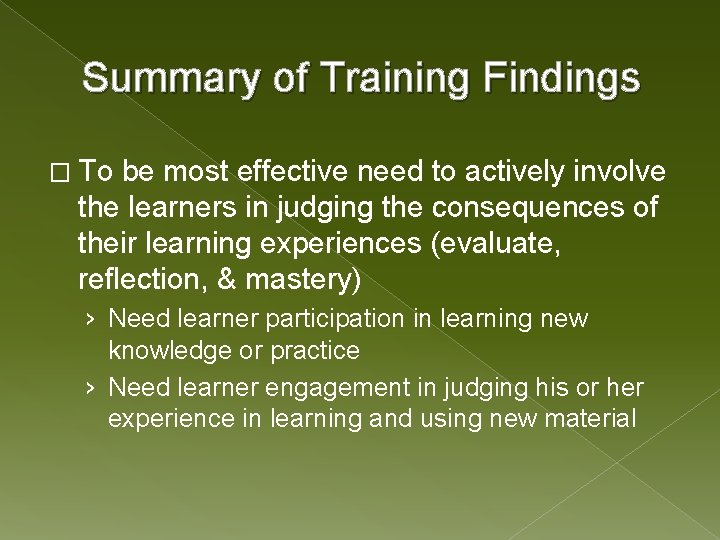

Summary of Training Findings

- To be most effective, training needs to actively involve the learners in judging the consequences of their learning experiences (evaluate, reflection, and mastery)
  - Need learner participation in learning new knowledge or practice
  - Need learner engagement in judging his or her experience in learning and using new material

Innovation Fluency

- Definition: Innovation Fluency refers to the degree to which we know the innovation with respect to:
  - Evidence
  - Program and Practice Features
  - Implementation Requirements

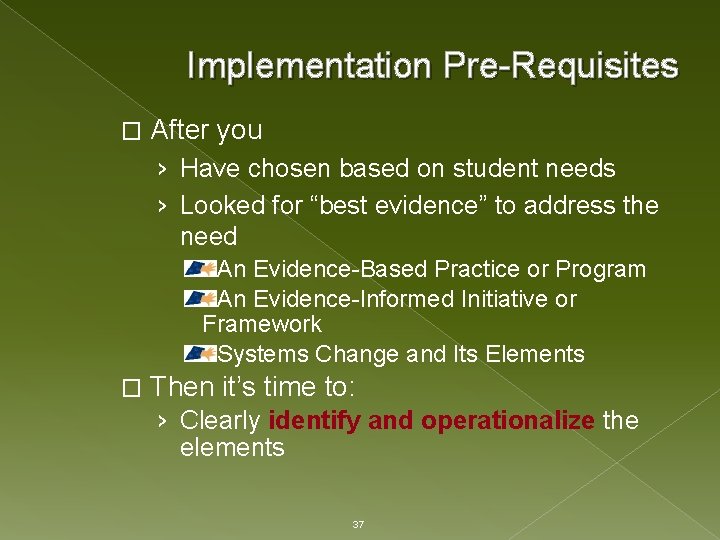


Implementation Pre-Requisites

- After you:
  - Have chosen based on student needs
  - Have looked for "best evidence" to address the need
  - An Evidence-Based Practice or Program / An Evidence-Informed Initiative or Framework / Systems Change and Its Elements
- Then it's time to:
  - Clearly identify and operationalize the elements

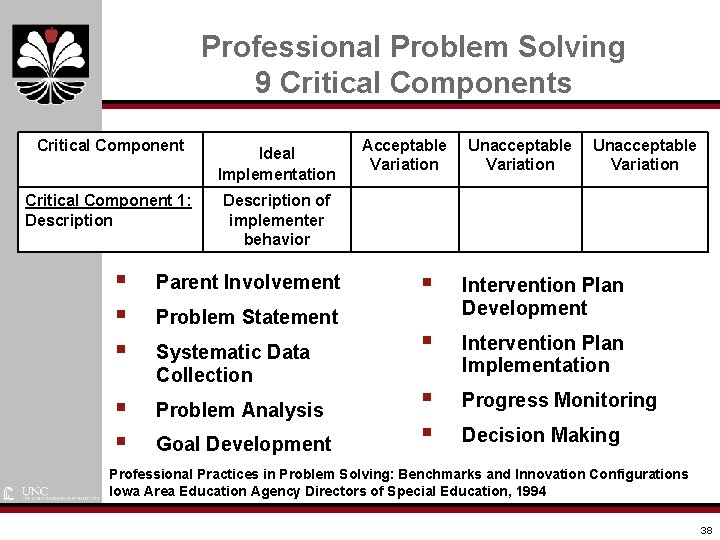

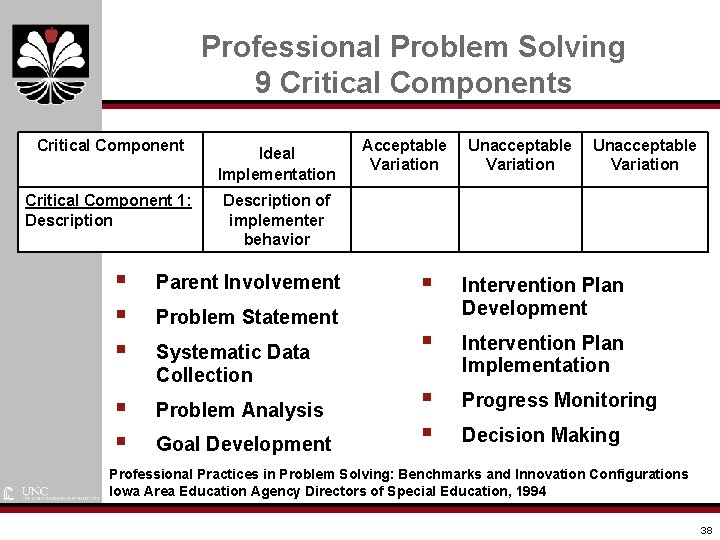

Professional Problem Solving: 9 Critical Components

- Parent Involvement
- Problem Statement
- Systematic Data Collection
- Problem Analysis
- Goal Development
- Intervention Plan Development
- Intervention Plan Implementation
- Progress Monitoring
- Decision Making

| Critical Component | Ideal Implementation | Acceptable Variation | Unacceptable Variation |
|---|---|---|---|
| Critical Component 1: Description of implementer behavior | | | |

Source: Professional Practices in Problem Solving: Benchmarks and Innovation Configurations, Iowa Area Education Agency Directors of Special Education, 1994

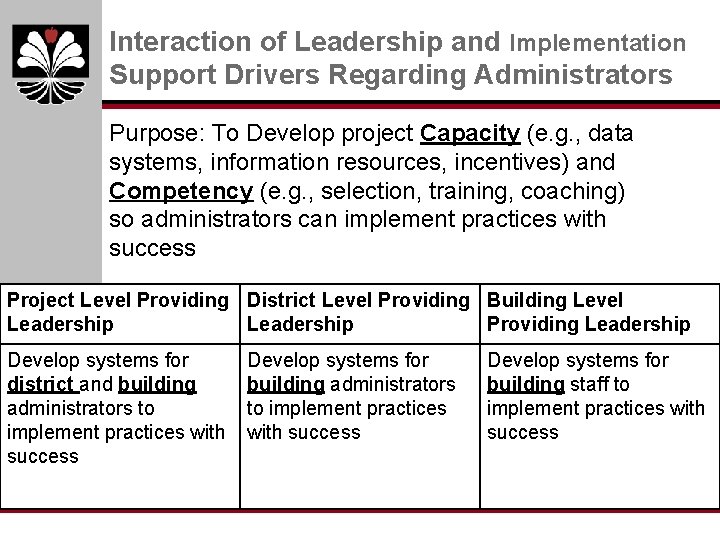

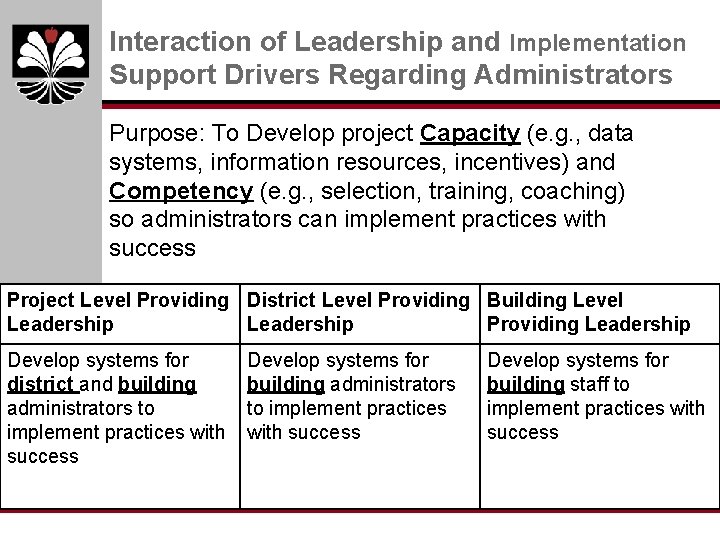

Interaction of Leadership and Implementation Support Drivers Regarding Administrators

Purpose: To develop project capacity (e.g., data systems, information resources, incentives) and competency (e.g., selection, training, coaching) so administrators can implement practices with success.

- Project Level, District Level, and Building Level, each providing leadership
- Develop systems for district and building administrators to implement practices with success
- Develop systems for building staff to implement practices with success

MiBLSi Statewide Structure of Support

Levels of support (state to student):

- Michigan Department of Education/MiBLSi Leadership
- Regional Technical Assistance
- ISD Leadership Team
- LEA District Leadership Team
- Building Leadership Team
- Building Staff
- Students

Who is supported?

- Across the state
- Multiple district/building teams
- Multiple schools within an intermediate district
- Multiple schools within a local district
- All building staff
- All students

How is support provided?

- Provides guidance, visibility, funding, political support for MiBLSi
- Provides coaching for District Teams and technical assistance for Building Teams
- Provides guidance, visibility, funding, political support
- Provides guidance and manages implementation
- Provides effective practices to support students
- Improved behavior and reading

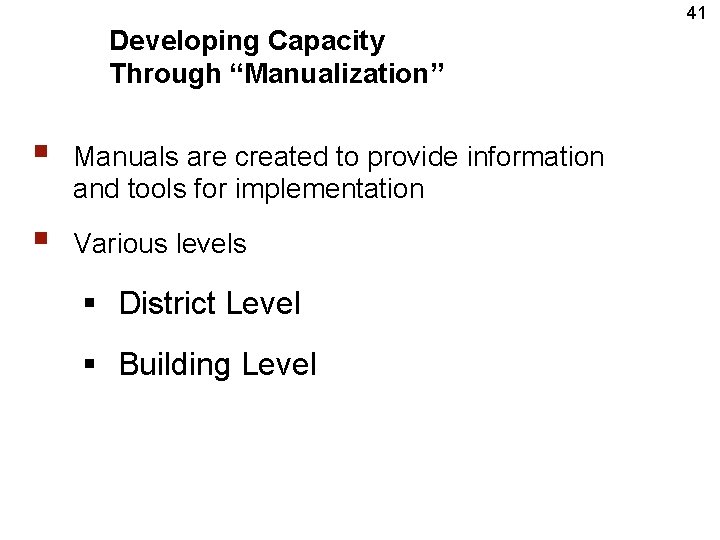

Developing Capacity Through "Manualization"

- Manuals are created to provide information and tools for implementation
- Various levels:
  - District Level
  - Building Level

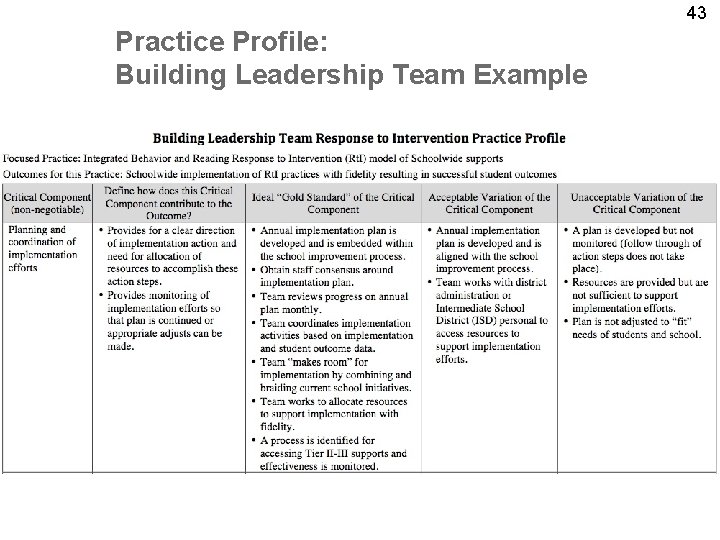

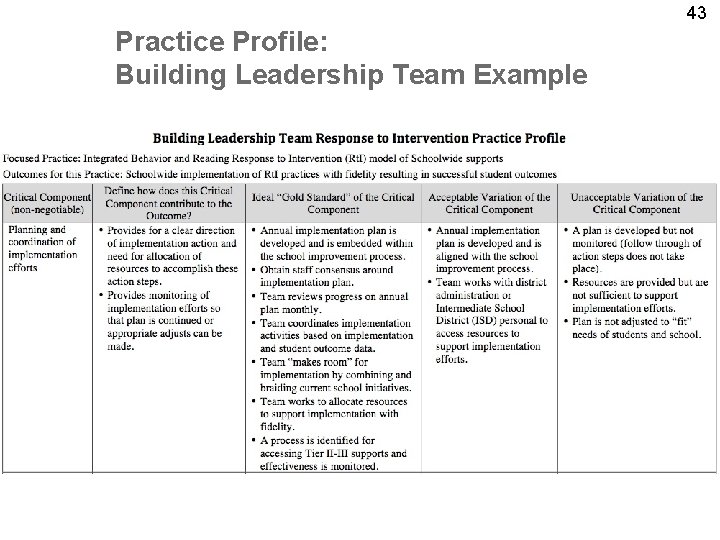

Developing Capacity Through "Practice Profiles" (Implementation Guides)

- Implementation Guides have been developed for:
  - Positive Behavioral Interventions and Supports at the Building Level
  - Reading Supports at the Building Level
  - Building Leadership Team
  - District Leadership Team
- Quick Guides have been developed for:
  - Principals
  - Coaches

Practice Profile: Building Leadership Team Example

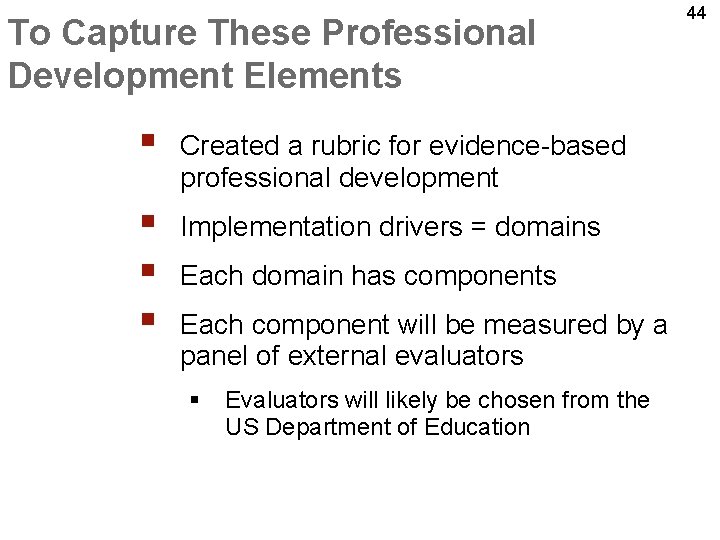

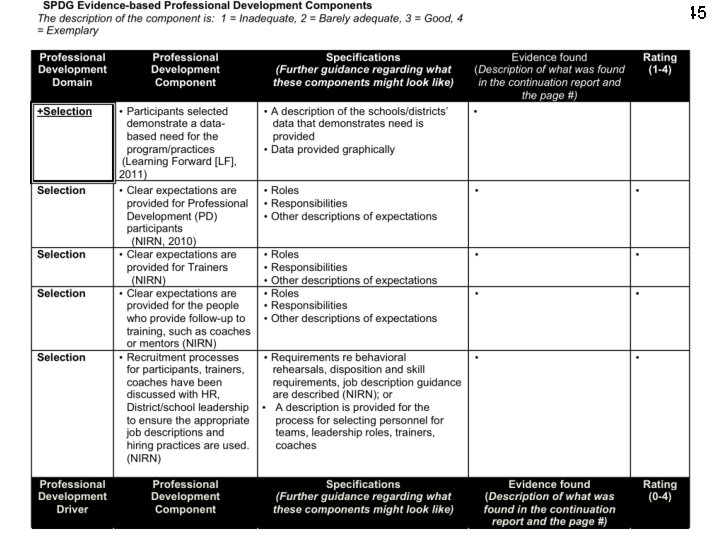

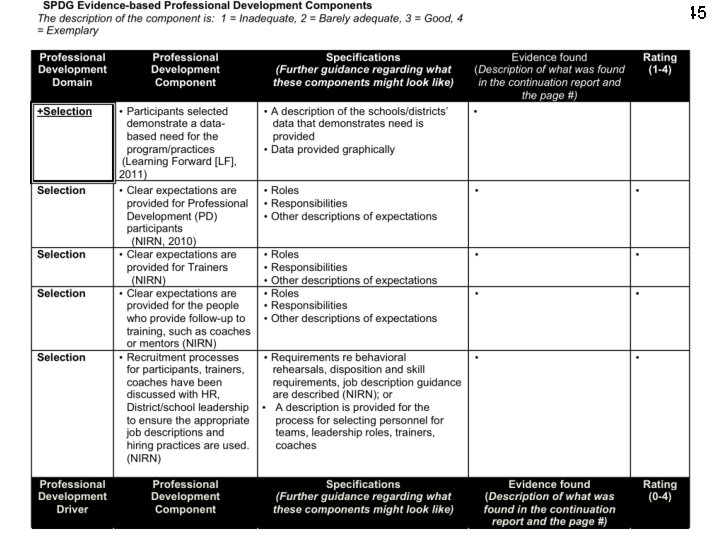

To Capture These Professional Development Elements

- Created a rubric for evidence-based professional development
  - Implementation drivers = domains
  - Each domain has components
  - Each component will be measured by a panel of external evaluators
    - Evaluators will likely be chosen from the US Department of Education


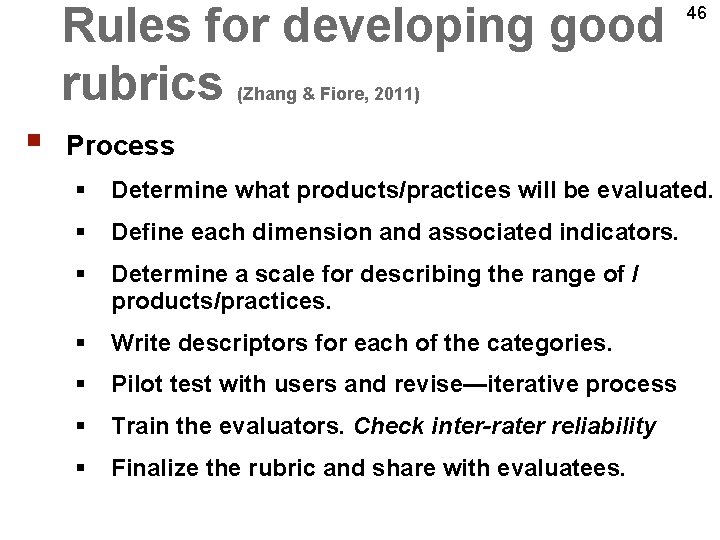

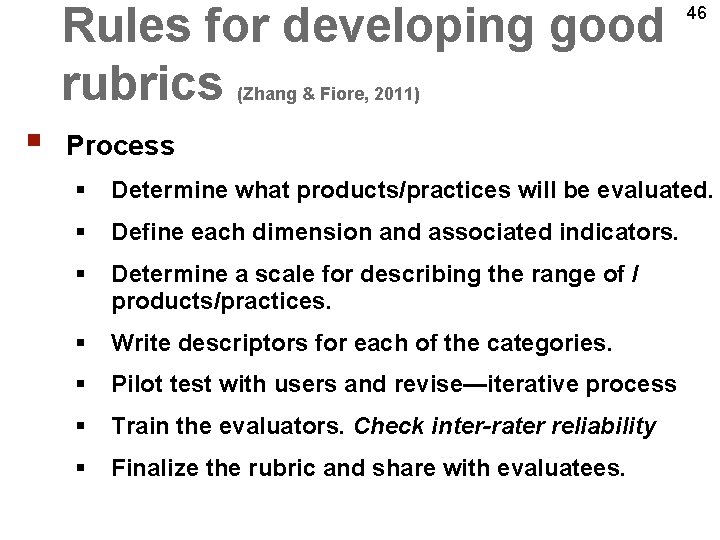

Rules for Developing Good Rubrics (Zhang & Fiore, 2011)

Process:

1. Determine what products/practices will be evaluated.
2. Define each dimension and associated indicators.
3. Determine a scale for describing the range of products/practices.
4. Write descriptors for each of the categories.
5. Pilot test with users and revise (an iterative process).
6. Train the evaluators and check inter-rater reliability.
7. Finalize the rubric and share it with evaluatees.
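
The process above says to check inter-rater reliability but does not name a statistic. One common choice for two evaluators scoring the same components on the 1-4 scale is Cohen's kappa, which corrects raw agreement for the agreement expected by chance. The sketch below assumes exactly two raters; the function names and the ratings in the example are hypothetical.

```python
from collections import Counter
from typing import Sequence


def percent_agreement(rater_a: Sequence[int], rater_b: Sequence[int]) -> float:
    """Share of items on which the two raters gave the same score."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("Both raters must score the same non-empty set of items.")
    return sum(a == b for a, b in zip(rater_a, rater_b)) / len(rater_a)


def cohens_kappa(rater_a: Sequence[int], rater_b: Sequence[int],
                 categories: Sequence[int] = (1, 2, 3, 4)) -> float:
    """Cohen's kappa: observed agreement corrected for chance agreement."""
    observed = percent_agreement(rater_a, rater_b)
    n = len(rater_a)
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    # Chance agreement: product of each rater's marginal share, summed over categories.
    expected = sum((counts_a[c] / n) * (counts_b[c] / n) for c in categories)
    if expected == 1.0:
        return 1.0  # both raters used one identical category for every item
    return (observed - expected) / (1 - expected)


if __name__ == "__main__":
    # Hypothetical 1-4 ratings of six rubric components by two evaluators.
    evaluator_1 = [4, 3, 3, 2, 4, 1]
    evaluator_2 = [4, 3, 2, 2, 4, 1]
    print(f"agreement = {percent_agreement(evaluator_1, evaluator_2):.2f}")
    print(f"kappa     = {cohens_kappa(evaluator_1, evaluator_2):.2f}")
```

For these hypothetical ratings, the evaluators agree on 5 of 6 components, chance agreement is 0.25, and kappa is (5/6 - 0.25) / (1 - 0.25), about 0.78.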

Use of the Rubric

- 4 domains, each with 6 components:
  - Selection
  - Training
  - Coaching
  - Performance Assessment
- Components drawn from the National Implementation Research Network, Learning Forward (NSDC), and Guskey
- Each component of the domains will be rated from 1 to 4

Component Themes

- Assigning responsibility for major professional development functions (e.g., measuring fidelity and outcomes; monitoring coaching quality)
- Expectations stated for all roles and responsibilities (e.g., PD participants, trainers, coaches, school and district administrators)
- Data for each stage of PD (e.g., selection, training, implementation, coaching, outcomes)

PD Needed for SPDG Project Personnel

- Adult learning principles for coaches
- How to create a professional development plan
- How to describe the elements of the professional development plan
- More on adult learning principles for training

What Initiatives Will You Report On? (from project feedback)

1. "If a SPDG has 1 Goal/Initiative, they report on the performance measure for it. If a SPDG has two Goals/Initiatives, they report on one. If they have three, they report on two. If they have four, they report on two. The pattern would be that SPDGs would report on half of their Goals/Initiatives if they have an even number of them and would report on 2 of 3, 3 of 5, 4 of 7, etc. for odd numbers. This is a simple method which could easily be applied."

2. "An alternative would be to have SPDGs report on the measures for any Goals/Initiatives which involve PD which includes workshops/conferences designed not to just impart knowledge (Awareness Level of Systems Change Theory) but to implement an evidence-based practice (e.g., SWPBIS, Reading Strategies, Math Strategies, etc.)..."

3. "I think having the OSEP Project Officers assigned to the various states negotiate with their respective state SPDG directors which of their SPDG initiatives are appropriate for this measure. This could be done each year immediately after the annual project report is submitted to OSEP by the SPDG Directors via a phone call and email exchanges. If the negotiation could be completed in the early summer, individual meetings (if necessary) could be conducted at the July OSEP Project Director's Conference in July. This type of negotiation could provide OSEP Project Officers with information necessary for making informed decisions about each state SPDG award for the upcoming year."

We will do…

- A combination of the three ideas:
  - We will only have you report on those initiatives that lead to implementation (of the practice/program you are providing training on).
  - If you have 1 or 2 of these initiatives, you will report on all of them. If you have 3, you will report on 2. If you have 4, you will report on 2 (report on 3 if you have 5, and so on).
  - This is all per discussion with your Project Officer.
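
The counting rule above can be written as a small function. This is a sketch assuming that "and so on" continues the pattern as half the initiatives, rounded up (6 -> 3, 7 -> 4). The function name is hypothetical, and it only gives a count: which initiatives to report on is still settled with your Project Officer.

```python
def initiatives_to_report(implementation_initiatives: int) -> int:
    """How many implementation-focused initiatives a project reports on.

    Slide rule: 1 or 2 -> all of them; 3 -> 2; 4 -> 2; 5 -> 3.
    ASSUMPTION: "and so on" continues as half the count, rounded up.
    """
    n = implementation_initiatives
    if n < 0:
        raise ValueError("The number of initiatives cannot be negative.")
    if n <= 2:
        return n
    return (n + 1) // 2  # integer ceiling of n / 2


if __name__ == "__main__":
    for count in range(1, 8):
        print(count, "->", initiatives_to_report(count))
```

In count alone, this differs from the first project suggestion (half, rounded up, for every count) only when a project has exactly 2 implementation-focused initiatives: the adopted rule reports on both rather than one.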

Setting Benchmarks

- "Perhaps an annual target could be that at least 60% of all practices receive at least a score of 3 or 4 in the first year of a five-year SPDG funding cycle; at least 70% of all practices receive at least a score of 3 or 4 in the second year of funding; at least 80% of all practices receive at least a score of 3 or 4 in the third year of funding; at least 90% of all practices receive at least a score of 3 or 4 in the fourth and fifth year of funding."

We will do…

- This basic idea:
  - 1st year of funding: baseline
  - 2nd year: 50% of components will have a score of 3 or 4
  - 3rd year: 70% of components will have a score of 3 or 4
  - 4th year: 80% of components will have a score of 3 or 4
  - 5th year: 80% of components will have a score of 3 or 4 (maintenance year)
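
As a worked illustration, the sketch below scores a rubric with the 4 domains and 6 components per domain described in "Use of the Rubric" (each component rated 1-4), computes the share of components rated 3 or 4, and checks it against the yearly targets above. The domain ratings are invented for the example, and treating a target as "share at or above the percentage" is an assumption about how the benchmark will be applied.

```python
from typing import Dict, List, Optional

# Yearly targets from the slide: share of rubric components scored 3 or 4.
# Year 1 of funding is the baseline year, so it has no target.
TARGETS: Dict[int, Optional[float]] = {1: None, 2: 0.50, 3: 0.70, 4: 0.80, 5: 0.80}


def share_rated_3_or_4(ratings: Dict[str, List[int]]) -> float:
    """Fraction of all component ratings (1-4 scale) that are a 3 or a 4."""
    scores = [score for domain in ratings.values() for score in domain]
    if not scores:
        raise ValueError("No ratings supplied.")
    if any(score not in (1, 2, 3, 4) for score in scores):
        raise ValueError("Each component must be rated 1, 2, 3, or 4.")
    return sum(score >= 3 for score in scores) / len(scores)


def meets_target(ratings: Dict[str, List[int]], funding_year: int) -> Optional[bool]:
    """None in the baseline year; otherwise whether that year's target is met."""
    target = TARGETS[funding_year]
    if target is None:
        return None
    return share_rated_3_or_4(ratings) >= target


if __name__ == "__main__":
    # Hypothetical panel ratings: 4 domains x 6 components each.
    example = {
        "Selection": [3, 4, 2, 3, 3, 1],
        "Training": [4, 4, 3, 3, 2, 3],
        "Coaching": [2, 3, 3, 2, 1, 3],
        "Performance Assessment": [3, 2, 2, 4, 3, 3],
    }
    print(f"share rated 3 or 4: {share_rated_3_or_4(example):.0%}")
    for year in TARGETS:
        print(year, meets_target(example, year))
```

With these hypothetical ratings, 16 of 24 components score 3 or 4 (about 67%), which meets the year 2 target of 50% but not the year 3 target of 70%.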

Other feedback

- Have projects fill out a worksheet with descriptions of the elements of their professional development system
  - We will do this and have the panel of evaluators work from this worksheet and any supporting documents the project provides
- Provide exemplars
  - We will create practice profiles for each component to demonstrate what would receive a 4, 3, 2, or 1 rating

Ideas for Guidance

- "It would be helpful to have a few rows [of the rubric] completed as an example with rating scores provided."
  - We will do this
- Other ideas