The Real World Evaluation Approach to Impact Evaluation

- Slides: 27

With reference to the chapter in the Country-led monitoring and evaluation systems book

Michael Bamberger and Jim Rugh

Note: more information is available at www.RealWorldEvaluation.org

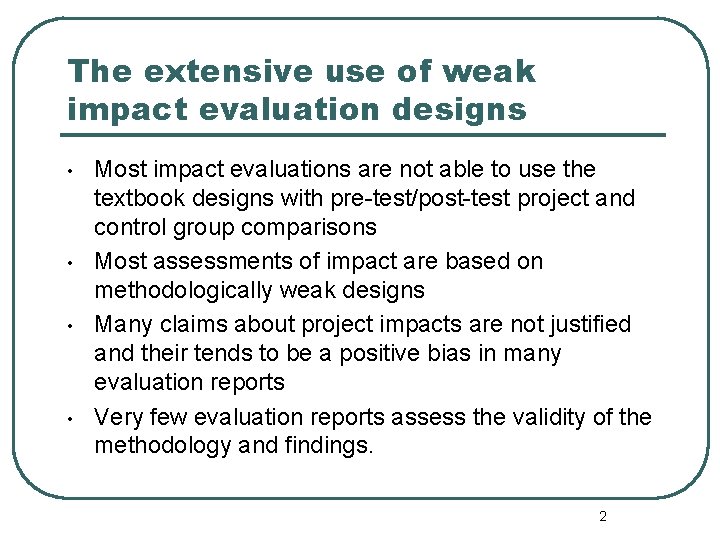

The extensive use of weak impact evaluation designs

- Most impact evaluations are not able to use the textbook designs with pre-test/post-test project and control group comparisons.
- Most assessments of impact are based on methodologically weak designs.
- Many claims about project impacts are not justified, and there tends to be a positive bias in many evaluation reports.
- Very few evaluation reports assess the validity of the methodology and findings.

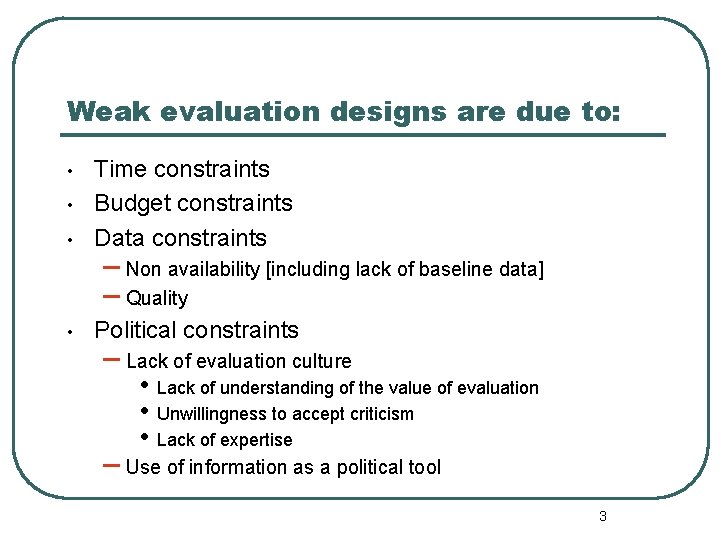

Weak evaluation designs are due to:

- Time constraints
- Budget constraints
- Data constraints
  - Non-availability (including lack of baseline data)
  - Quality
- Political constraints
  - Lack of evaluation culture
    - Lack of understanding of the value of evaluation
    - Unwillingness to accept criticism
    - Lack of expertise
  - Use of information as a political tool

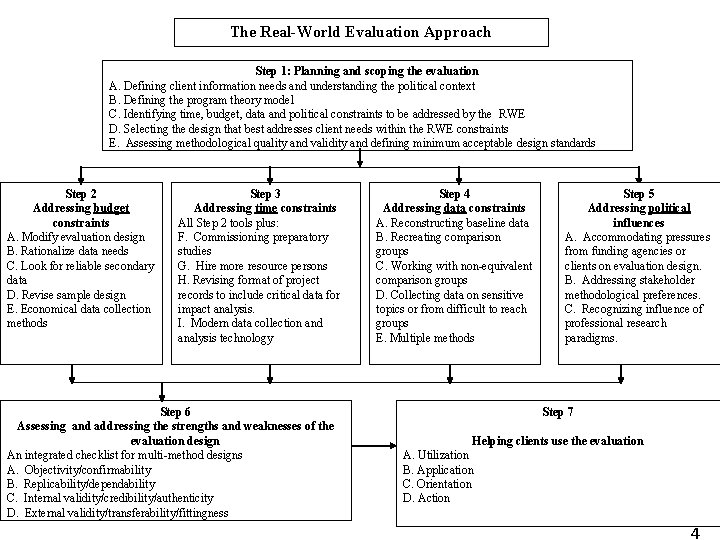

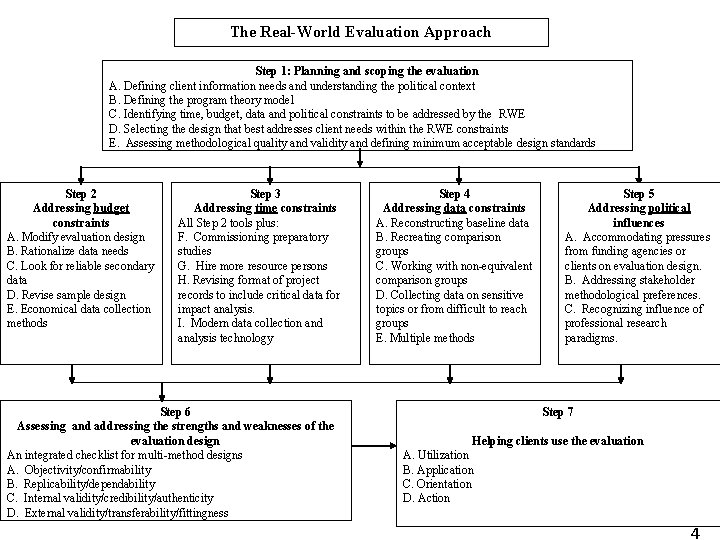

The Real-World Evaluation Approach

Step 1: Planning and scoping the evaluation
- A. Defining client information needs and understanding the political context
- B. Defining the program theory model
- C. Identifying time, budget, data and political constraints to be addressed by the RWE
- D. Selecting the design that best addresses client needs within the RWE constraints
- E. Assessing methodological quality and validity and defining minimum acceptable design standards

Step 2: Addressing budget constraints
- A. Modify evaluation design
- B. Rationalize data needs
- C. Look for reliable secondary data
- D. Revise sample design
- E. Economical data collection methods

Step 3: Addressing time constraints (all Step 2 tools plus:)
- F. Commissioning preparatory studies
- G. Hire more resource persons
- H. Revising format of project records to include critical data for impact analysis
- I. Modern data collection and analysis technology

Step 4: Addressing data constraints
- A. Reconstructing baseline data
- B. Recreating comparison groups
- C. Working with non-equivalent comparison groups
- D. Collecting data on sensitive topics or from difficult to reach groups
- E. Multiple methods

Step 5: Addressing political influences
- A. Accommodating pressures from funding agencies or clients on evaluation design
- B. Addressing stakeholder methodological preferences
- C. Recognizing influence of professional research paradigms

Step 6: Assessing and addressing the strengths and weaknesses of the evaluation design (an integrated checklist for multi-method designs)
- A. Objectivity/confirmability
- B. Replicability/dependability
- C. Internal validity/credibility/authenticity
- D. External validity/transferability/fittingness

Step 7: Helping clients use the evaluation
- A. Utilization
- B. Application
- C. Orientation
- D. Action

How RWE contributes to country-led Monitoring and Evaluation

- Increasing the uptake of evidence into policy making
  - Involving stakeholders in the design, implementation, analysis and dissemination
  - Using program theory to:
    - base the evaluation on stakeholder understanding of the program and its objectives
    - ensure the evaluation focuses on key issues
  - Presenting findings:
    - when they are needed
    - using the clients’ preferred communication style

- The quality challenge: matching technical rigor and policy relevance
  - Adapting the evaluation design to the level of rigor required by decision makers
  - Use of the “Threats to validity checklist” at several points in the evaluation cycle
  - Defining minimum acceptable levels of methodological rigor
  - Avoiding positive bias in the evaluation design and presentation of findings
    - How to present negative findings

- Adapting country-led evaluation to real-world constraints
  - Adapting the system to real-world budget, time and data constraints
  - Ensuring evaluations produce useful and actionable information
  - Adapting the M&E system to national political, administrative and evaluation cultures
  - Focus on institutionalization of M&E systems, not just ad hoc evaluations
  - Evaluation capacity development
  - Focus on quality assurance

The RealWorld Evaluation Approach

An integrated approach to ensure acceptable standards of methodological rigor while operating under real-world budget, time, data and political constraints. See the summary chapter and workshop presentations at www.RealWorldEvaluation.org for more details.

Reality Check – Real-World Challenges to Evaluation

- All too often, project designers do not think evaluatively; the evaluation is not designed until the end.
- There was no baseline, at least not one with data comparable to the evaluation.
- There was/can be no control/comparison group.
- Limited time and resources for evaluation.
- Clients have prior expectations for what the evaluation findings will say.
- Many stakeholders do not understand evaluation, distrust the process, or even see it as a threat (dislike of being judged).

Determining appropriate (and feasible) evaluation design

Based on an understanding of client information needs, the required level of rigor, and what is possible given the constraints, the evaluator and client need to determine what evaluation design is required and possible under the circumstances.

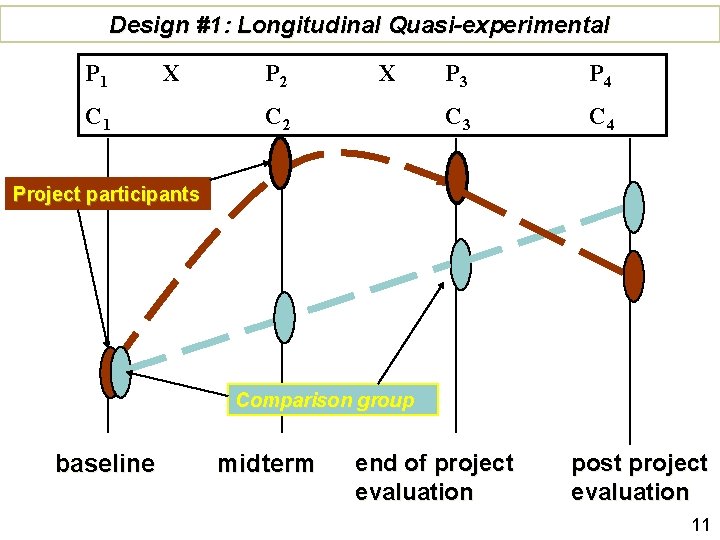

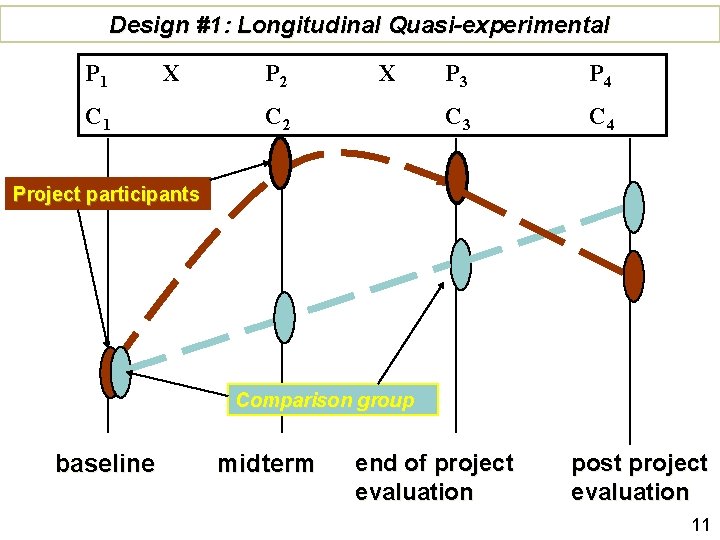

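Design #1: Longitudinal Quasi-experimental

| | Baseline | | Midterm | | End of project evaluation | Post project evaluation |
|---|---|---|---|---|---|---|
| Project participants | P1 | X | P2 | X | P3 | P4 |
| Comparison group | C1 | | C2 | | C3 | C4 |

X marks the project intervention.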

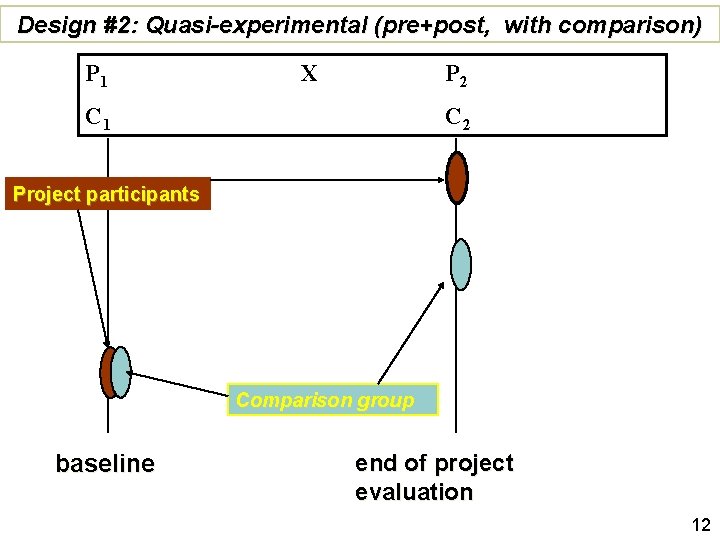

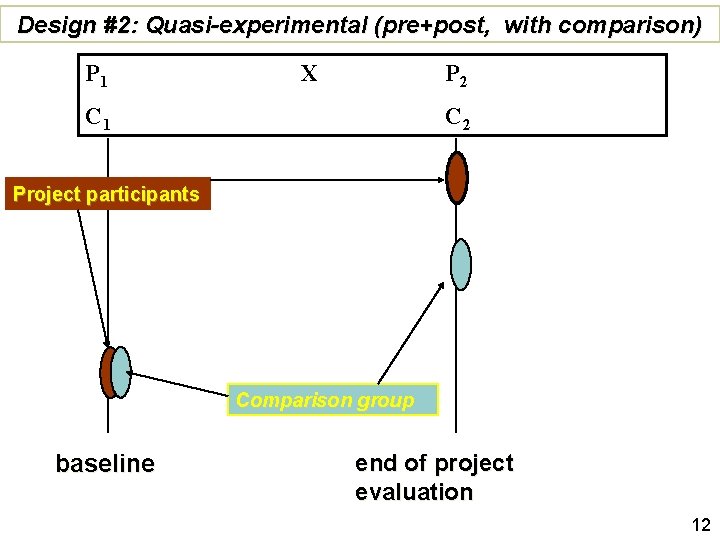

Design #2: Quasi-experimental (pre+post, with comparison)

| | Baseline | | End of project evaluation |
|---|---|---|---|
| Project participants | P1 | X | P2 |
| Comparison group | C1 | | C2 |
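With baseline and end-of-project observations for both groups, one common way to summarize Design #2 is a difference-in-differences estimate: the change among project participants minus the change in the comparison group, (P2 - P1) - (C2 - C1). The slides do not prescribe an estimator; this minimal sketch assumes each P and C is a group mean of a single outcome indicator, and the function name and sample values are hypothetical.

```python
def diff_in_diff(p1: float, p2: float, c1: float, c2: float) -> float:
    """Difference-in-differences estimate for a pre+post design with comparison group.

    p1, p2: mean outcome for project participants at baseline and end of project.
    c1, c2: mean outcome for the comparison group at the same two points.
    """
    project_change = p2 - p1     # change observed among participants
    comparison_change = c2 - c1  # change that occurred without the project
    return project_change - comparison_change


if __name__ == "__main__":
    # Hypothetical group means, e.g. average monthly household income.
    estimate = diff_in_diff(p1=100, p2=160, c1=100, c2=120)
    print(f"Estimated impact: {estimate:.1f}")  # prints: Estimated impact: 40.0
```

The estimate is only credible if both groups would have changed by the same amount in the absence of the project (the parallel-trends assumption); without the C1 and C2 terms, as in Design #6, the whole observed change would be attributed to the project.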

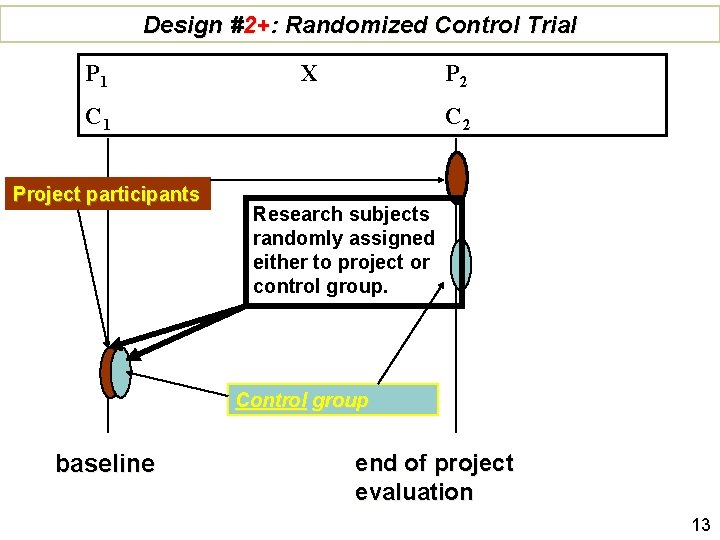

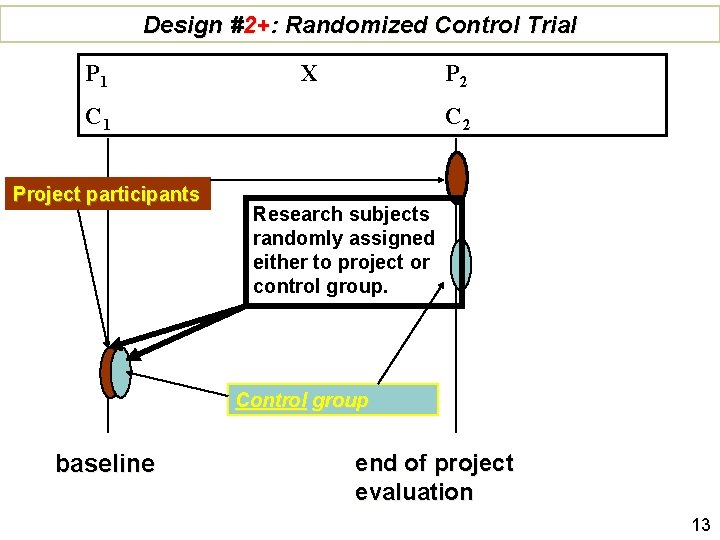

Design #2+: Randomized Control Trial

Research subjects are randomly assigned either to the project or the control group.

| | Baseline | | End of project evaluation |
|---|---|---|---|
| Project participants | P1 | X | P2 |
| Control group | C1 | | C2 |
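Design #2+ differs from Design #2 in how the groups are formed: subjects are randomly assigned to the project or the control group. A minimal sketch of that assignment step, assuming a simple 50/50 split of a list of subject IDs; the function name, the ID format and the seed are hypothetical.

```python
import random


def randomly_assign(subject_ids, seed=None):
    """Randomly split research subjects into a project group and a control group.

    The subjects are shuffled; the first half (rounded down) becomes the project
    group and the remainder becomes the control group.
    """
    rng = random.Random(seed)     # seeded generator so the assignment can be reproduced
    shuffled = list(subject_ids)  # copy so the caller's sequence is left untouched
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return shuffled[:half], shuffled[half:]


if __name__ == "__main__":
    households = [f"HH-{i:03d}" for i in range(1, 11)]  # hypothetical household IDs
    project, control = randomly_assign(households, seed=42)
    print("Project:", project)
    print("Control:", control)
```

Recording the seed lets others audit and reproduce the assignment. Real trials often use stratified or cluster randomization instead of this simple split.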

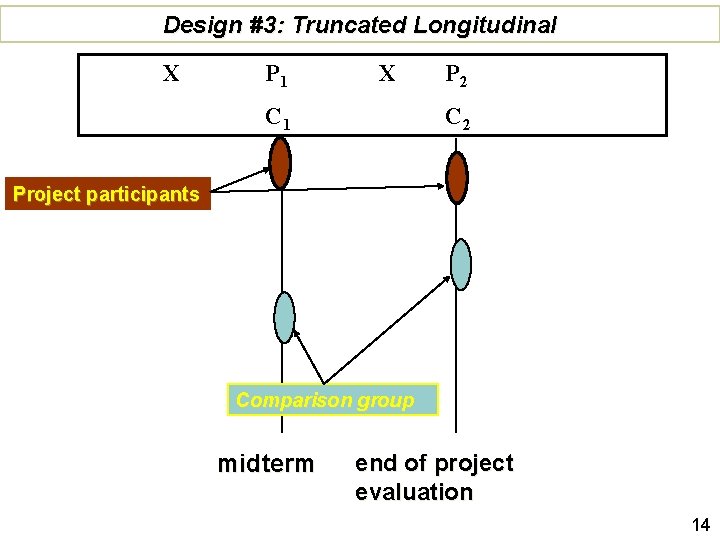

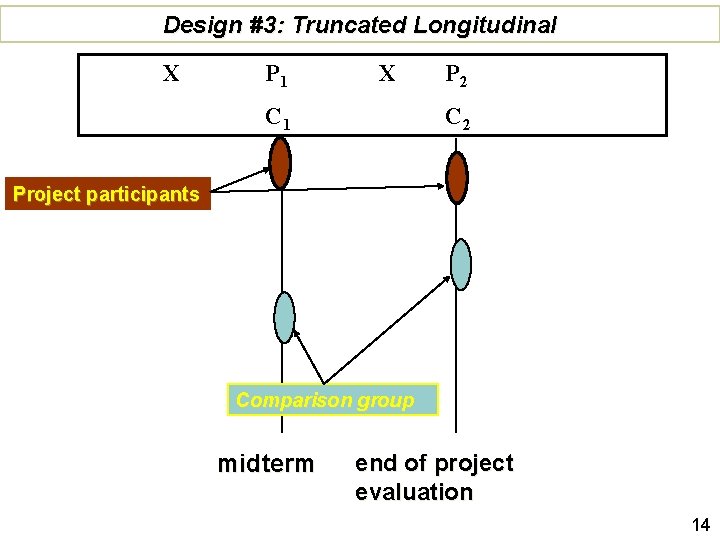

Design #3: Truncated Longitudinal

| | | Midterm | | End of project evaluation |
|---|---|---|---|---|
| Project participants | X | P1 | X | P2 |
| Comparison group | | C1 | | C2 |

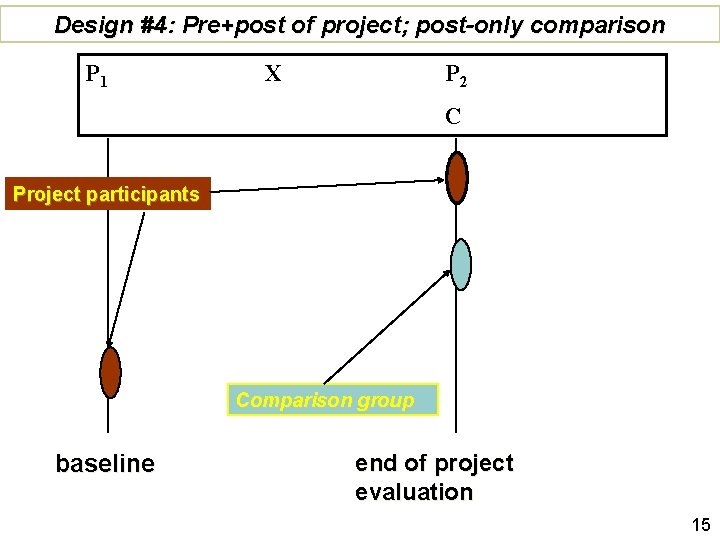

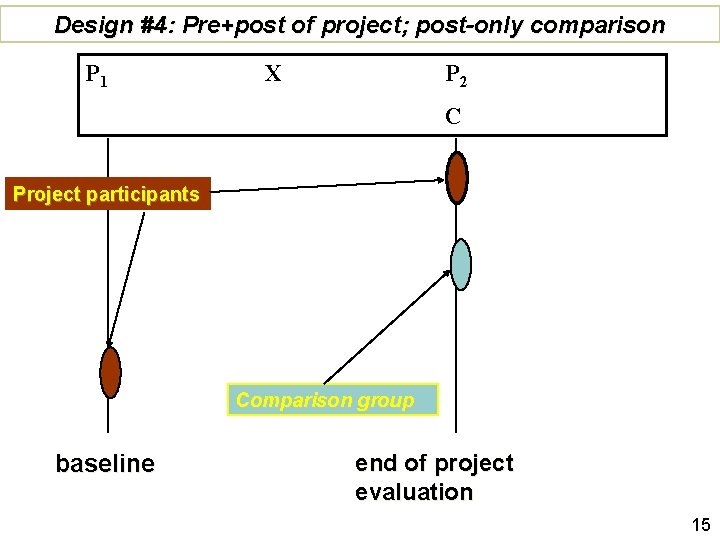

Design #4: Pre+post of project; post-only comparison

| | Baseline | | End of project evaluation |
|---|---|---|---|
| Project participants | P1 | X | P2 |
| Comparison group | | | C |

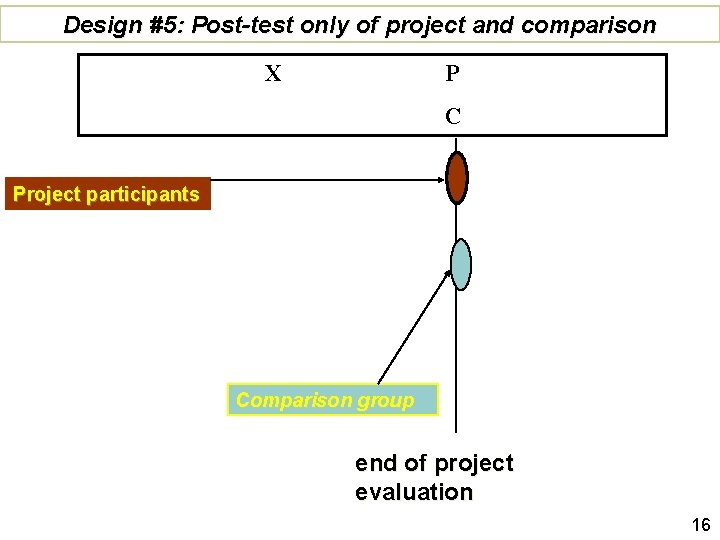

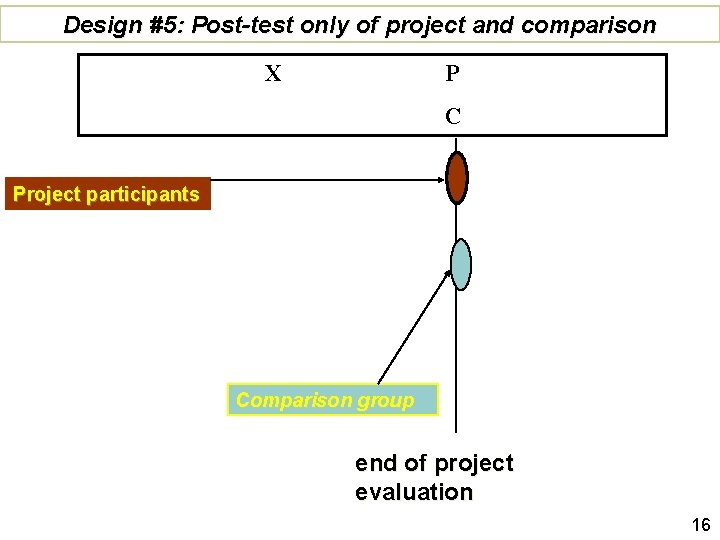

Design #5: Post-test only of project and comparison

| | | End of project evaluation |
|---|---|---|
| Project participants | X | P |
| Comparison group | | C |

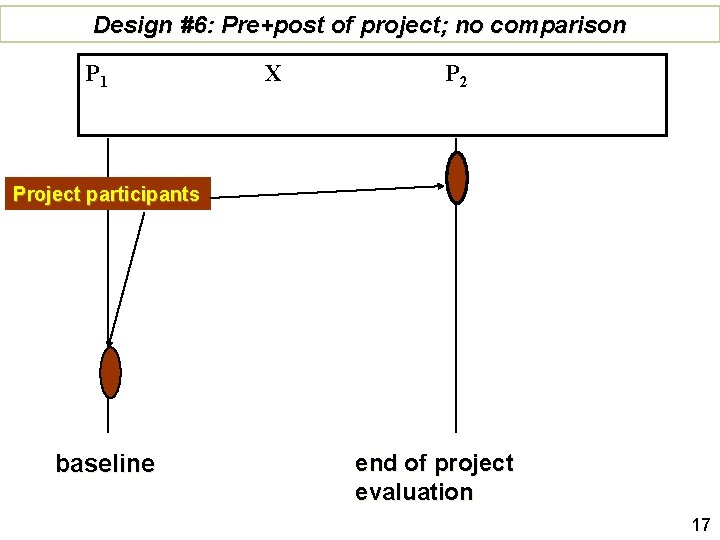

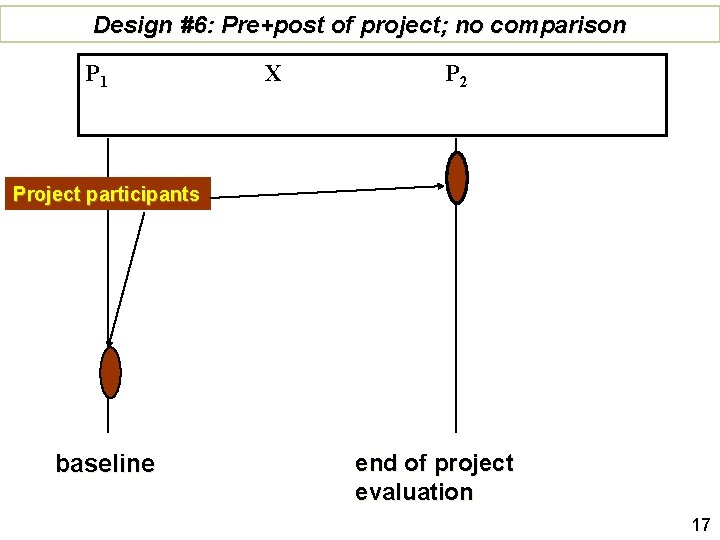

Design #6: Pre+post of project; no comparison

| | Baseline | | End of project evaluation |
|---|---|---|---|
| Project participants | P1 | X | P2 |

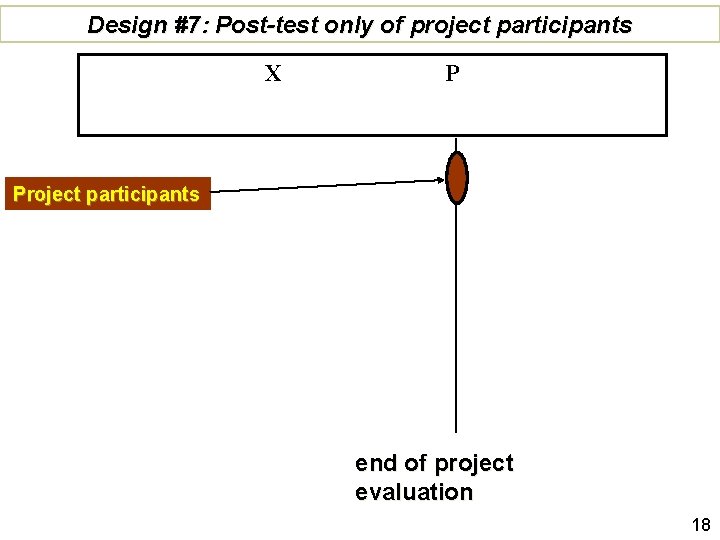

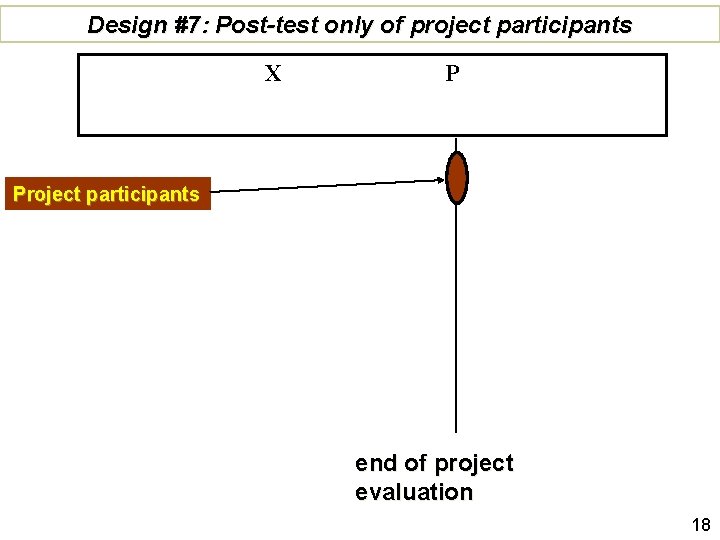

Design #7: Post-test only of project participants

| | | End of project evaluation |
|---|---|---|
| Project participants | X | P |
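The seven designs differ mainly in which observation points exist for each group. As a rough planning aid, the sketch below checks a set of available observation points against each design in turn and returns the first one whose data requirements are met. It assumes, as an interpretation rather than a rule stated in the slides, that the design numbering approximates a ranking from stronger to weaker designs. It leaves out Design #2+ because randomization depends on how the groups were formed, not on which data exist. The labels such as `P_baseline` and the function name are hypothetical.

```python
# Observation points required by each design ("P" = project participants,
# "C" = comparison group). Labels are hypothetical; dict order (kept by
# Python 3.7+) follows the design numbering used in the slides.
DESIGN_REQUIREMENTS = {
    "Design #1: Longitudinal quasi-experimental": {
        "P_baseline", "C_baseline", "P_midterm", "C_midterm",
        "P_end", "C_end", "P_post", "C_post",
    },
    "Design #2: Pre+post, with comparison": {"P_baseline", "C_baseline", "P_end", "C_end"},
    "Design #3: Truncated longitudinal": {"P_midterm", "C_midterm", "P_end", "C_end"},
    "Design #4: Pre+post of project; post-only comparison": {"P_baseline", "P_end", "C_end"},
    "Design #5: Post-test only of project and comparison": {"P_end", "C_end"},
    "Design #6: Pre+post of project; no comparison": {"P_baseline", "P_end"},
    "Design #7: Post-test only of project participants": {"P_end"},
}


def strongest_feasible_design(available):
    """Return the first design (in slide order) whose observation points are all available."""
    available = set(available)
    for name, required in DESIGN_REQUIREMENTS.items():
        if required <= available:  # every required observation point is present
            return name
    return None  # not even an end-of-project observation of participants


if __name__ == "__main__":
    # No comparison-group baseline, but a comparison group surveyed at the end.
    print(strongest_feasible_design({"P_baseline", "P_end", "C_end"}))
    # prints: Design #4: Pre+post of project; post-only comparison
```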

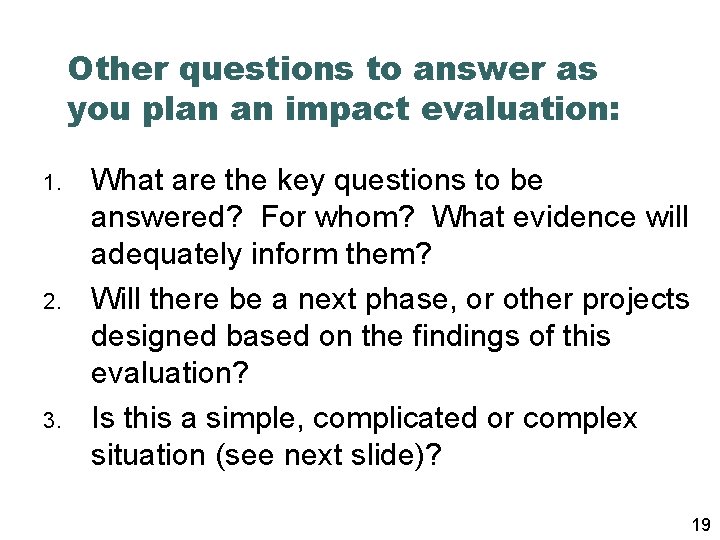

Other questions to answer as you plan an impact evaluation:

1. What are the key questions to be answered? For whom? What evidence will adequately inform them?
2. Will there be a next phase, or other projects designed based on the findings of this evaluation?
3. Is this a simple, complicated or complex situation (see next slide)?

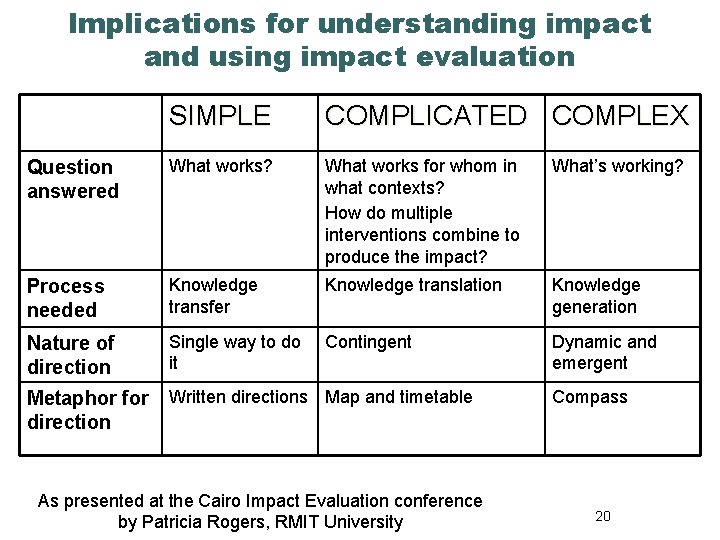

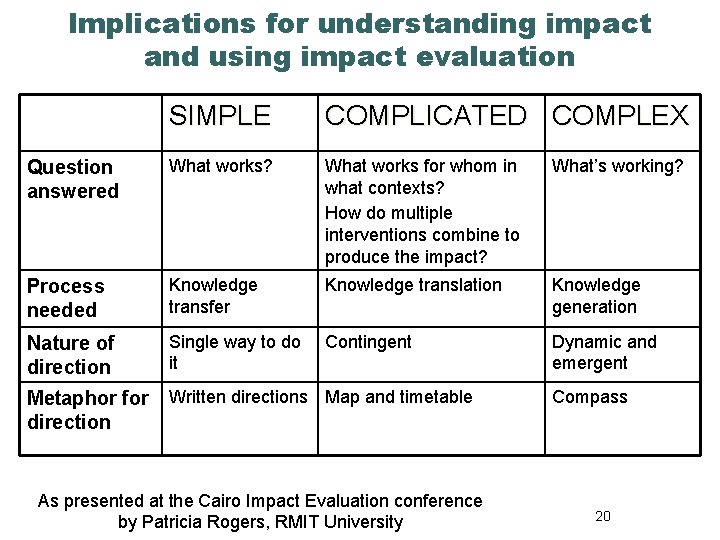

Implications for understanding impact and using impact evaluation

| | Simple | Complicated | Complex |
|---|---|---|---|
| Question answered | What works? | What works for whom in what contexts? How do multiple interventions combine to produce the impact? | What’s working? |
| Process needed | Knowledge transfer | Knowledge translation | Knowledge generation |
| Nature of direction | Single way to do it | Contingent | Dynamic and emergent |
| Metaphor for direction | Written directions | Map and timetable | Compass |

As presented at the Cairo Impact Evaluation conference by Patricia Rogers, RMIT University.

Other questions to answer as you plan an impact evaluation:

1. Will focusing on one quantifiable indicator adequately represent “impact”?
2. Is it feasible to expect there to be a clear, linear cause-effect chain attributable to one unique intervention? Or will we have to account for multiple plausible contributions by various agencies and actors to “higher-level” impact?
3. Would one data collection method suffice, or should there be a combination of multiple methods used?

Ways to reconstruct baseline conditions

- A. Secondary data
- B. Project records
- C. Recall
- D. Key informants
- E. PRA (participatory rural appraisal) and other participatory techniques, such as timelines and critical incidents, to help establish the chronology of important changes in the community

Assessing the utility of potential secondary data

- Reference period
- Population coverage
- Inclusion of required indicators
- Completeness
- Accuracy
- Free from bias
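The criteria above can be recorded as a simple screening checklist for each candidate data source. A minimal sketch, assuming each criterion is judged as a plain yes/no (in practice these are graded judgements); the class and field names are hypothetical.

```python
from dataclasses import dataclass, fields


@dataclass
class SecondaryDataAssessment:
    """One yes/no judgement per criterion for a candidate secondary data source."""
    reference_period: bool     # covers the period the baseline must describe
    population_coverage: bool  # covers the project and comparison populations
    required_indicators: bool  # includes the indicators the evaluation needs
    completeness: bool
    accuracy: bool
    free_from_bias: bool

    def shortcomings(self):
        """Return the names of the criteria this data source fails."""
        return [f.name for f in fields(self) if not getattr(self, f.name)]


if __name__ == "__main__":
    # Hypothetical example: a national survey that does not cover the project area.
    survey = SecondaryDataAssessment(True, False, True, True, True, True)
    print(survey.shortcomings())  # prints: ['population_coverage']
```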

Ways to reconstruct comparison groups

- Judgmental matching of communities.
- When project services are introduced in phases, beneficiaries entering in later phases can be used as a “pipeline” control group.
- Internal controls, when different subjects receive different combinations and levels of services.
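The pipeline approach can be illustrated with a phased rollout schedule: at the time of an evaluation, beneficiaries who have already entered the project form the treated group, and those scheduled for later phases form the comparison group. A minimal sketch, assuming a mapping from hypothetical beneficiary IDs to the phase in which each starts receiving services; the function name is hypothetical.

```python
def pipeline_groups(entry_phase, evaluation_phase):
    """Split beneficiaries into a treated group and a "pipeline" comparison group.

    entry_phase: dict mapping each beneficiary ID to the project phase (1, 2, 3, ...)
    in which that beneficiary starts receiving services.
    evaluation_phase: the phase during which the evaluation takes place.
    """
    # Already receiving services at the time of the evaluation.
    treated = sorted(b for b, phase in entry_phase.items() if phase <= evaluation_phase)
    # Scheduled for later phases, so not yet exposed to the project.
    pipeline = sorted(b for b, phase in entry_phase.items() if phase > evaluation_phase)
    return treated, pipeline


if __name__ == "__main__":
    schedule = {"Village A": 1, "Village B": 2, "Village C": 1, "Village D": 3}
    treated, pipeline = pipeline_groups(schedule, evaluation_phase=1)
    print(treated)   # prints: ['Village A', 'Village C']
    print(pipeline)  # prints: ['Village B', 'Village D']
```

The comparison is only as good as the similarity between early and late entrants; if phasing was based on need or readiness, the pipeline group is a non-equivalent comparison group.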

Importance of validity

Evaluations provide recommendations for future decisions and action. If the findings and interpretation are not valid:

- Programs which do not work may continue or even be expanded
- Good programs may be discontinued
- Priority target groups may not have access or benefit

RWE quality control goals

- The evaluator must achieve the greatest possible methodological rigor within the limitations of a given context.
- Standards must be appropriate for different types of evaluation.
- The evaluator must identify and control for methodological weaknesses in the evaluation design.
- The evaluation report must identify methodological weaknesses and how these affect generalization to broader populations.

Main RWE messages

1. Evaluators must be prepared for real-world evaluation challenges.
2. There is considerable experience to draw on.
3. A toolkit of rapid and economical “RealWorld” evaluation techniques is available (see www.RealWorldEvaluation.org).
4. Never use time and budget constraints as an excuse for sloppy evaluation methodology.
5. A “threats to validity” checklist helps keep you honest by identifying potential weaknesses in your evaluation design and analysis.