The RAJA Programming Approach: Toward Architecture Portability for Large Multiphysics Applications
Rich Hornung
Salishan Conference on High-Speed Computing, April 21-24, 2014
LLNL-PRES-653032
This work was performed under the auspices of the U.S. Department of Energy by Lawrence Livermore National Laboratory under contract DE-AC52-07NA27344. Lawrence Livermore National Security, LLC

Hardware trends in the “Extreme-scale Era” will disrupt S/W maintainability & architecture portability
§ Managing increased hardware complexity and diversity…
• Multi-level memory
  – High-bandwidth (e.g., stacked) memory on-package
  – High-capacity (e.g., NVRAM) main memory
  – Deeper cache hierarchies (user managed?)
• Heterogeneous processor hierarchy, changing core count configurations
  – Latency-optimized (e.g., fat cores)
  – Throughput-optimized (e.g., GPUs, MIC)
• Increased importance of vectorization / SIMD
  – 2 – 8 wide (double precision) on many architectures and growing, 32 wide on GPUs
• As # cores/chip increases, cache coherence across full chip may not exist
§ …requires pervasive, disruptive, architecture-specific software changes
• Data-specific changes
  – Data structure transformations (e.g., Struct of Array vs. Array of Struct)
  – Need to insert directives and intrinsics (e.g., restrict and align annotations) on individual loops
• Algorithm-specific changes
  – Loop inversion, strip mining, loop fusion, etc.
  – Which loops and which directives (e.g., OpenMP, OpenACC) may be architecture-specific

Applications must enable implementation flexibility without excessive developer disruption
§ Architecture/performance portability (our definition): map application memory and functional requirements to memory systems and functional units on a range of architectures, while maintaining a consistent programming style and control of all aspects of execution
§ The problem is acute for large multi-physics codes
• O(10^5) – O(10^6) lines of code; O(10K) loops
• Mini-apps do not capture the scale of code changes needed
§ Manageable portable performance requires separating platform-specific data management and execution concerns from numerical algorithms in our applications… with code changes that are intuitive to developers

“RAJA” is a potential path forward for our multiphysics applications at LLNL
§ We need algorithms & programming styles that:
• Can express various forms of parallelism
• Enable high performance portably
• We can explore in our codes incrementally
§ There is no clear “best choice” for a future programming model (PM) or language.
§ RAJA is based on standard C++ (which we rely on already)
• It supports constructs & extends concepts used heavily in LLNL codes
• It can be added to codes incrementally & used selectively
• It allows various PMs “under the covers” – it does not wed a code to a particular technology
• It is lightweight and offers developers customizable implementation choices

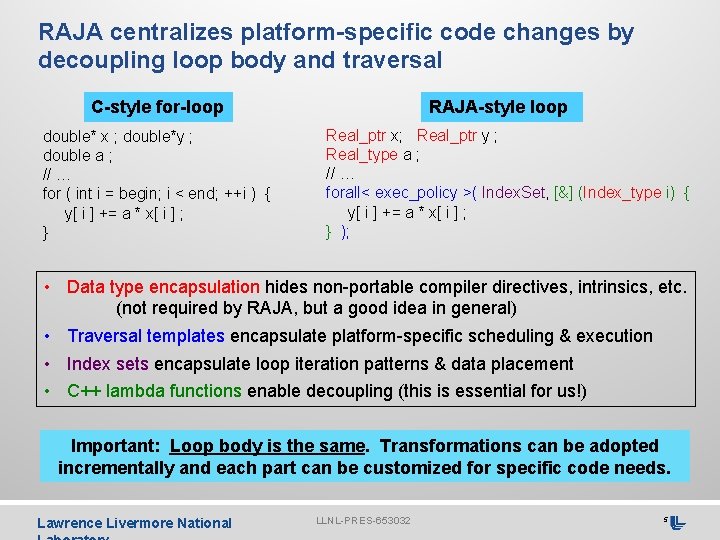

RAJA centralizes platform-specific code changes by decoupling loop body and traversal

C-style for-loop:

    double* x; double* y;
    double a;
    // …
    for (int i = begin; i < end; ++i) {
      y[i] += a * x[i];
    }

RAJA-style loop:

    Real_ptr x; Real_ptr y;
    Real_type a;
    // …
    forall<exec_policy>(IndexSet, [&] (Index_type i) {
      y[i] += a * x[i];
    });

• Data type encapsulation hides non-portable compiler directives, intrinsics, etc. (not required by RAJA, but a good idea in general)
• Traversal templates encapsulate platform-specific scheduling & execution
• Index sets encapsulate loop iteration patterns & data placement
• C++ lambda functions enable decoupling (this is essential for us!)

Important: The loop body is the same in both versions. Transformations can be adopted incrementally and each part can be customized for specific code needs.
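The decoupling described on this slide can be illustrated without the RAJA library itself. The following is a minimal sketch in plain C++11; the names `seq_exec`, `reverse_exec`, `RangeSegment`, `forall_impl`, and `daxpy` are hypothetical stand-ins chosen for this example and are not RAJA's actual API. It shows one loop body being run by two different traversal implementations selected through a policy type.

```cpp
#include <cassert>
#include <vector>

// Hypothetical policy tags (assumed names, not RAJA's real policies).
struct seq_exec {};      // plain forward traversal
struct reverse_exec {};  // stand-in for "some other platform-specific schedule"

// Hypothetical index set: a contiguous range [begin, end).
struct RangeSegment {
  int begin;
  int end;
};

// Traversal implementations: one overload per policy.
template <typename LoopBody>
void forall_impl(seq_exec, const RangeSegment& seg, LoopBody body) {
  for (int i = seg.begin; i < seg.end; ++i) body(i);
}

template <typename LoopBody>
void forall_impl(reverse_exec, const RangeSegment& seg, LoopBody body) {
  for (int i = seg.end - 1; i >= seg.begin; --i) body(i);
}

// Front end with the slide's call shape: forall<exec_policy>(index_set, body).
template <typename ExecPolicy, typename LoopBody>
void forall(const RangeSegment& seg, LoopBody body) {
  forall_impl(ExecPolicy{}, seg, body);
}

// y += a * x, written once and run under whichever policy is selected.
template <typename ExecPolicy>
std::vector<double> daxpy(double a, const std::vector<double>& x,
                          std::vector<double> y) {
  assert(x.size() == y.size());
  RangeSegment all{0, static_cast<int>(x.size())};
  forall<ExecPolicy>(all, [&](int i) { y[i] += a * x[i]; });
  return y;
}
```

Calling `daxpy<seq_exec>(2.0, x, y)` and `daxpy<reverse_exec>(2.0, x, y)` gives the same result because the iterations are independent; only the policy type in the call changes, not the lambda.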

RAJA index sets enable tuning operations for segments of loop iteration space

It is common to define arrays of indices to process; e.g., nodes, elements with a given material, etc.

    int elems[] = {0, 1, 2, 3, 4, 5, 6, 7, 14, 27, 36, 40, 41, 42, 43, 44, 45, 46, 47, 87, 117};

Create a “Hybrid” index set containing work segments:

    HybridISet segments = createHybridISet(elems, nelems);

Segments in this example: 0…7 (range), 14, 27, 36 (unstructured), 40…47 (range), 87, 117 (unstructured).

The traversal method dispatches segments according to the execution policy:

    forall<exec_policy>(segments, loop_body);

Range segment:

    for (int i = begin; i < end; ++i) {
      loop_body(i);
    }

Unstructured segment:

    for (int i = 0; i < seg_len; ++i) {
      loop_body(segment[i]);
    }

Segments can be tailored to architecture features (e.g., SIMD hardware units):
• createHybridISet() methods coordinate runtime & compile-time optimizations
• Platform-specific header files contain tailored traversal definitions
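How an index array might be split into range and unstructured segments can be sketched as follows. This is an illustration only: the `Segment` layout, the `min_range_len` parameter, and the rule "runs of at least `min_range_len` consecutive indices become ranges" are assumptions made for this example; the slide does not say how `createHybridISet` decides.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical segment: either a contiguous range or an explicit index list.
struct Segment {
  bool is_range = false;     // true: [begin, end); false: use indices
  int begin = 0;
  int end = 0;
  std::vector<int> indices;  // only used when is_range is false
};

using HybridISet = std::vector<Segment>;

// Split a sorted index array into segments. Runs of consecutive indices with
// at least min_range_len entries become range segments; all other indices are
// gathered into unstructured (list) segments.
HybridISet createHybridISet(const std::vector<int>& elems, int min_range_len) {
  assert(min_range_len >= 2);
  HybridISet iset;
  std::size_t i = 0;
  while (i < elems.size()) {
    std::size_t j = i + 1;  // find the end of the consecutive run starting at i
    while (j < elems.size() && elems[j] == elems[j - 1] + 1) ++j;
    if (j - i >= static_cast<std::size_t>(min_range_len)) {
      Segment s;
      s.is_range = true;
      s.begin = elems[i];
      s.end = elems[j - 1] + 1;
      iset.push_back(s);
    } else {
      // Extend the previous list segment if there is one, else start a new one.
      if (iset.empty() || iset.back().is_range) iset.push_back(Segment());
      for (std::size_t k = i; k < j; ++k) iset.back().indices.push_back(elems[k]);
    }
    i = j;
  }
  return iset;
}

// Sequential traversal: each segment type gets its own loop form.
template <typename LoopBody>
void forall(const HybridISet& iset, LoopBody body) {
  for (const Segment& s : iset) {
    if (s.is_range) {
      for (int i = s.begin; i < s.end; ++i) body(i);  // stride-1, no indirection
    } else {
      for (int idx : s.indices) body(idx);            // indirection through a list
    }
  }
}
```

With the `elems` array from the slide and `min_range_len = 4`, this produces the four segments listed above, and the traversal visits the indices in their original order.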

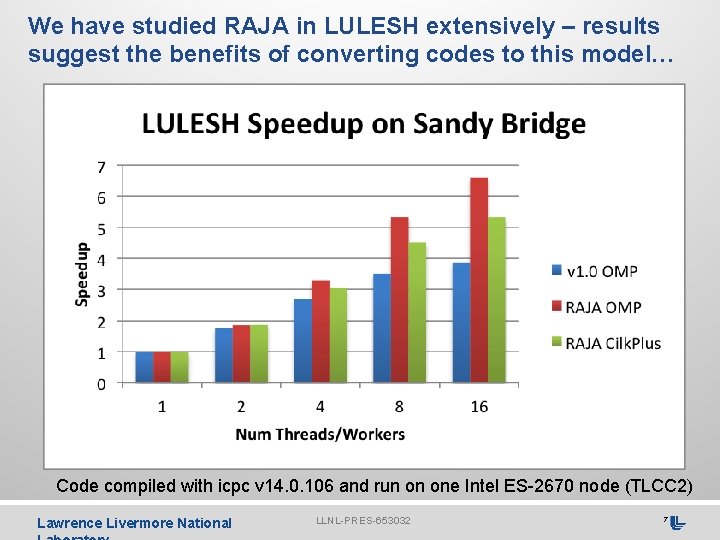

We have studied RAJA in LULESH extensively – results suggest the benefits of converting codes to this model…
Code compiled with icpc v14.0.106 and run on one Intel E5-2670 node (TLCC2)

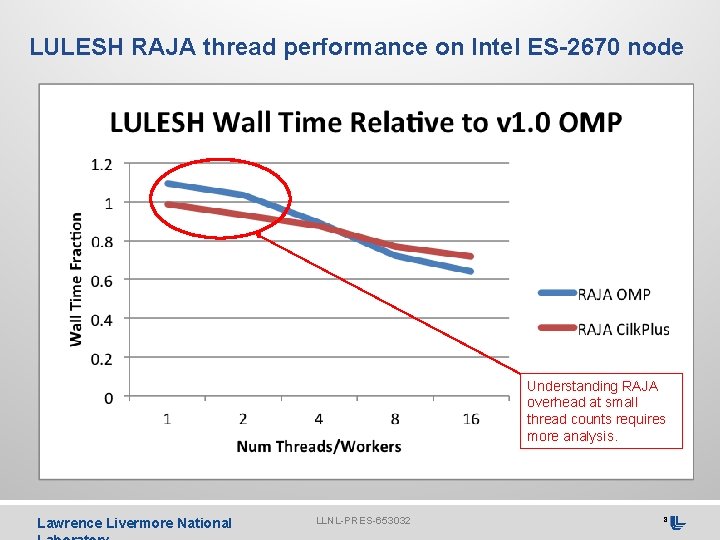

LULESH RAJA thread performance on Intel E5-2670 node
Understanding RAJA overhead at small thread counts requires more analysis.

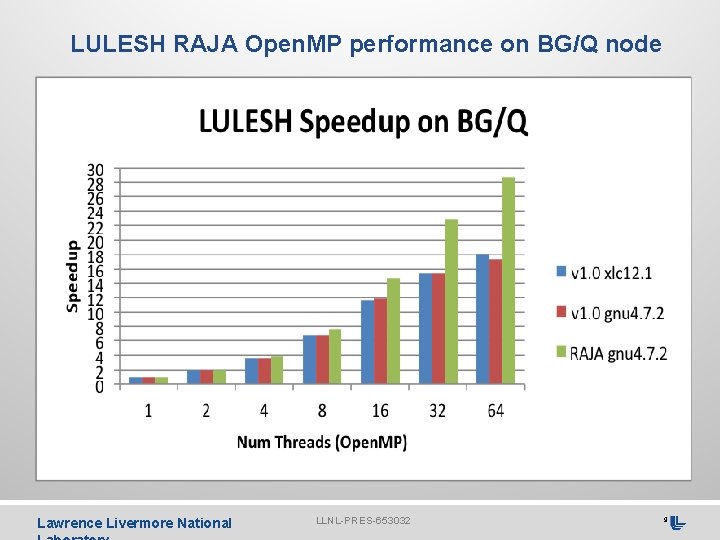

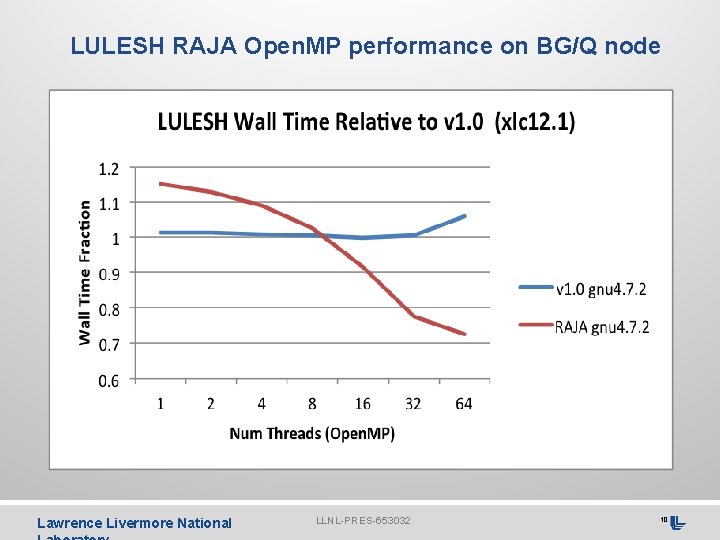

LULESH RAJA OpenMP performance on BG/Q node

LULESH RAJA OpenMP performance on BG/Q node

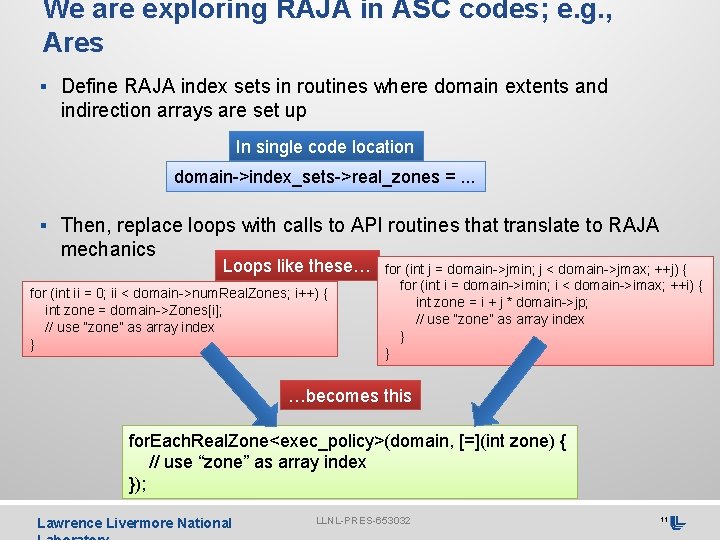

We are exploring RAJA in ASC codes; e.g., Ares
§ Define RAJA index sets in routines where domain extents and indirection arrays are set up (in a single code location):

    domain->index_sets->real_zones = ...

§ Then, replace loops with calls to API routines that translate to RAJA mechanics. Loops like these…

    for (int i = 0; i < domain->numRealZones; i++) {
      int zone = domain->Zones[i];
      // use "zone" as array index
    }

    for (int j = domain->jmin; j < domain->jmax; ++j) {
      for (int i = domain->imin; i < domain->imax; ++i) {
        int zone = i + j * domain->jp;
        // use "zone" as array index
      }
    }

…become this:

    forEachRealZone<exec_policy>(domain, [=](int zone) {
      // use "zone" as array index
    });
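A wrapper of this kind might look roughly like the sketch below. The `Domain` struct here is a heavily reduced, hypothetical stand-in (the real Ares data structures are not shown in the slides), `makeStructuredZones` is an invented helper, and only a sequential traversal is implemented.

```cpp
#include <cassert>
#include <vector>

struct seq_exec {};  // hypothetical policy tag

// Hypothetical, minimal stand-in for a domain: just the zone index set.
struct Domain {
  std::vector<int> real_zones;  // set up once, in a single code location
};

// Build the index set for a structured block using zone = i + j * jp,
// the same index arithmetic as the nested loop on the slide.
std::vector<int> makeStructuredZones(int imin, int imax, int jmin, int jmax,
                                     int jp) {
  assert(imin <= imax && jmin <= jmax);
  std::vector<int> zones;
  for (int j = jmin; j < jmax; ++j)
    for (int i = imin; i < imax; ++i) zones.push_back(i + j * jp);
  return zones;
}

// API routine that hides how zones are enumerated and executed.
template <typename ExecPolicy, typename LoopBody>
void forEachRealZone(const Domain* domain, LoopBody body) {
  // A fuller version would dispatch on ExecPolicy; this sketch always
  // traverses sequentially.
  for (int zone : domain->real_zones) body(zone);
}
```

Both loop forms from the slide (indirection array and nested i/j loops) reduce to the same call, since the difference is absorbed when the index set is built.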

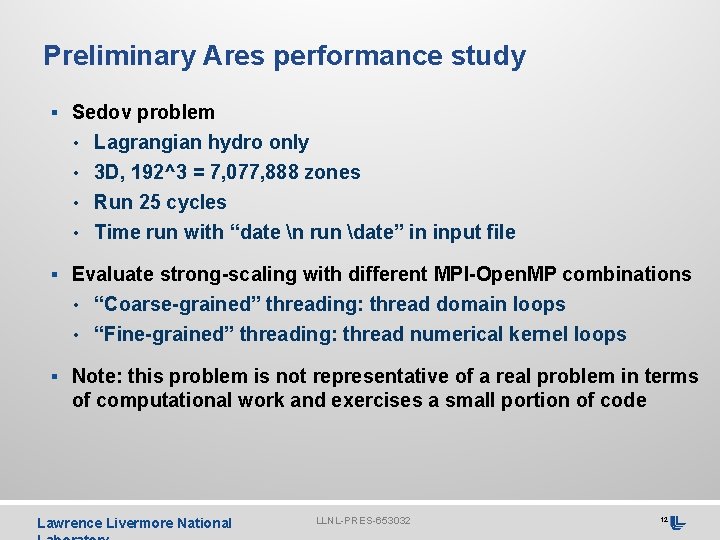

Preliminary Ares performance study
§ Sedov problem
• Lagrangian hydro only
• 3D, 192^3 = 7,077,888 zones
• Run 25 cycles
• Time run with “date n run date” in input file
§ Evaluate strong scaling with different MPI-OpenMP combinations
• “Coarse-grained” threading: thread domain loops
• “Fine-grained” threading: thread numerical kernel loops
§ Note: this problem is not representative of a real problem in terms of computational work, and it exercises only a small portion of the code

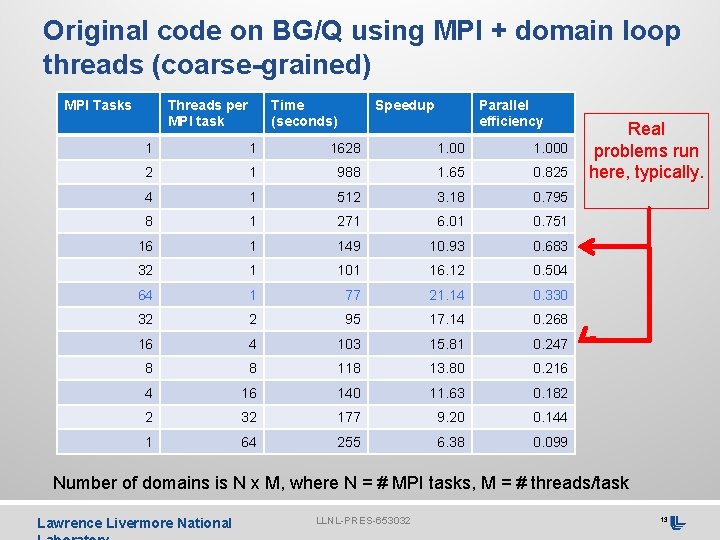

Original code on BG/Q using MPI + domain loop threads (coarse-grained)

| MPI tasks | Threads per MPI task | Time (seconds) | Speedup | Parallel efficiency |
|---|---|---|---|---|
| 1 | 1 | 1628 | | 1.000 |
| 2 | 1 | 988 | 1.65 | 0.825 |
| 4 | 1 | 512 | 3.18 | 0.795 |
| 8 | 1 | 271 | 6.01 | 0.751 |
| 16 | 1 | 149 | 10.93 | 0.683 |
| 32 | 1 | 101 | 16.12 | 0.504 |
| 64 | 1 | 77 | 21.14 | 0.330 |
| 32 | 2 | 95 | 17.14 | 0.268 |
| 16 | 4 | 103 | 15.81 | 0.247 |
| 8 | 8 | 118 | 13.80 | 0.216 |
| 4 | 16 | 140 | 11.63 | 0.182 |
| 2 | 32 | 177 | 9.20 | 0.144 |
| 1 | 64 | 255 | 6.38 | 0.099 |

Slide annotation: “Real problems run here, typically.”
Number of domains is N x M, where N = # MPI tasks, M = # threads/task
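The speedup and efficiency columns are consistent with the usual definitions taken relative to the 1-task, 1-thread run. The symbols T, S, and E below are notation introduced here for illustration; the slide does not define them explicitly.

```latex
\[
  S(N, M) = \frac{T(1, 1)}{T(N, M)}, \qquad
  E(N, M) = \frac{S(N, M)}{N \cdot M}
\]
% Worked example: 64 MPI tasks, 1 thread per task, T = 77 s
\[
  S(64, 1) = \frac{1628}{77} \approx 21.14, \qquad
  E(64, 1) = \frac{21.14}{64} \approx 0.330
\]
```

The same arithmetic applied to the 32 x 2 row gives 1628 / 95 ≈ 17.14 and 17.14 / 64 ≈ 0.268, matching the table.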

Fine-grained loop threading via RAJA shows speedup over original code using only MPI
[Figure: speedup (RAJA vs. original) versus MPI tasks (1, 2, 4, 8, 16, 32, 64); vertical axis from 0 to 7]
RAJA version: N MPI tasks x M threads, N x M = 64
Original version: N MPI tasks
In either case, N domains

Performance of RAJA version w/ fine-grained threading is comparable to original w/ coarse-grained threading
[Figure: speedup (RAJA vs. original) versus MPI tasks (1, 2, 4, 8, 16, 32, 64); vertical axis from 0 to 1.2]
RAJA version: N MPI tasks x M threads, N domains
Original version: N MPI tasks x M threads, N x M domains
In either case, N x M = 64

What have we learned about RAJA usage in Ares?
§ Basic integration is straightforward
§ Hard work is localized
• Setting up & manipulating index sets
• Defining platform-specific execution policies for loop classes
§ Converting loops is easy, but tedious
• Replace loop header with call to iteration template
• Identify loop type (i.e., execution pattern)
• Determine whether loop can and should be parallelized
  – Are other changes needed; e.g., variable scope, thread safety?
  – What is the appropriate execution policy? Platform-specific?
§ Encapsulation of looping constructs also benefits software consistency, readability, and maintainability

What have we learned about RAJA performance in Ares?
§ RAJA version is sometimes faster, sometimes slower.
• We have converted only 421 Lagrange hydro loops (327 DPstream, 83 DPwork, 11 Seq). Threading too aggressive?
• Other code transformations can expose additional parallelism opportunities and enable compiler optimizations (e.g., SIMD).
§ We need to overcome the RAJA serial performance hit.
• Compiler optimization for template/lambda constructs?
• Need better inlining?
§ Once RAJA is in place, exploration of data layout and execution choices to improve performance is straightforward and centralized (we have done some of this already)

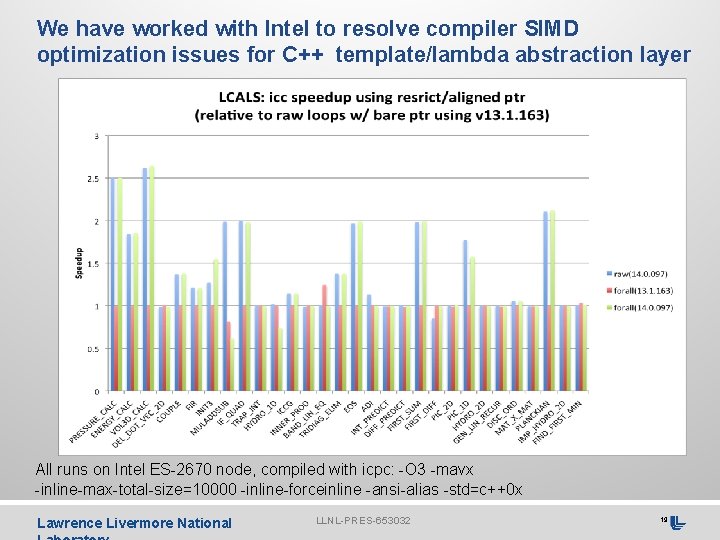

We can’t do this with s/w engineering alone – we need help from compilers too
§ We have identified specific compiler deficiencies, developed concrete recommendations and evidence of feasibility, and engaged compiler teams
“A Case for Improved C++ Compiler Support to Enable Performance Portability in Large Physics Simulation Codes”, Rich Hornung and Jeff Keasler, LLNL-TR-653681. (https://codesign.llnl.gov/codesign-papers-presentations.php)
§ We also created LCALS to study & monitor the issues
• A suite of loops implemented with various s/w constructs (Livermore Loops modernized and expanded – this time in C++)
• Very useful for dialogue with compiler vendors
  – Generate test cases for vendors showing optimization/support issues
  – Try vendor solutions and report findings
  – Introduce & motivate encapsulation concepts not on vendors’ radar
  – Track version-to-version compiler performance
• Available at https://codesign.llnl.gov

We have worked with Intel to resolve compiler SIMD optimization issues for the C++ template/lambda abstraction layer
All runs on Intel E5-2670 node, compiled with icpc: -O3 -mavx -inline-max-total-size=10000 -inline-forceinline -ansi-alias -std=c++0x

Illustrative RAJA use cases

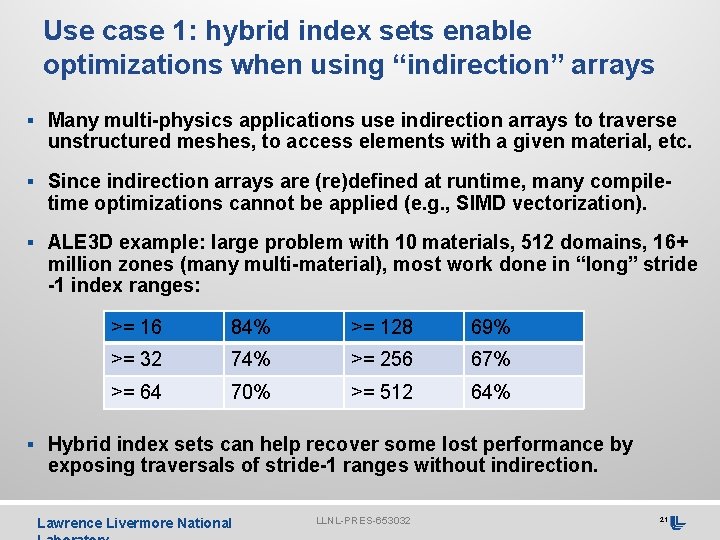

Use case 1: hybrid index sets enable optimizations when using “indirection” arrays
§ Many multi-physics applications use indirection arrays to traverse unstructured meshes, to access elements with a given material, etc.
§ Since indirection arrays are (re)defined at runtime, many compile-time optimizations cannot be applied (e.g., SIMD vectorization).
§ ALE3D example: large problem with 10 materials, 512 domains, 16+ million zones (many multi-material). Most work is done in “long” stride-1 index ranges:

| Range length | Share |
|---|---|
| >= 16 | 84% |
| >= 32 | 74% |
| >= 64 | 70% |
| >= 128 | 69% |
| >= 256 | 67% |
| >= 512 | 64% |

§ Hybrid index sets can help recover some lost performance by exposing traversals of stride-1 ranges without indirection.
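One plausible way to measure a statistic like the one tabulated above is to scan a sorted indirection array and count how many entries fall in runs of consecutive indices of at least a given length. The slide does not say how the ALE3D numbers were gathered, so the function below (`strideOneFraction`, a name invented here) is only a sketch of the idea.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Fraction of entries of a sorted indirection array that sit in runs of
// consecutive (stride-1) indices at least min_len long.
double strideOneFraction(const std::vector<int>& indices, int min_len) {
  assert(min_len >= 1);
  if (indices.empty()) return 0.0;
  std::size_t covered = 0;
  std::size_t i = 0;
  while (i < indices.size()) {
    std::size_t j = i + 1;  // advance j to the end of the run starting at i
    while (j < indices.size() && indices[j] == indices[j - 1] + 1) ++j;
    if (j - i >= static_cast<std::size_t>(min_len)) covered += j - i;
    i = j;
  }
  return static_cast<double>(covered) / static_cast<double>(indices.size());
}
```

A high fraction for a given `min_len` suggests that most of the work could be issued as range segments, where the compiler sees a plain stride-1 loop.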

Use case 2: encapsulate fine-grain fault recovery

    template <typename LB>
    void forall(int begin, int end, LB loop_body) {
      bool done = false;
      while (!done) {
        try {
          done = true;
          for (int i = begin; i < end; ++i) loop_body(i);
        } catch (Transient_fault) {
          cache_invalidate();
          done = false;
        }
      }
    }

• No impact on source code & recovery cost is commensurate with scope of fault.
• It requires: idempotence, O/S can signal processor faults, try/catch can process O/S signals, etc.
• These requirements should not be showstoppers!
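The idempotence requirement can be made concrete with a small simulation. In the sketch below, `Transient_fault` is an ordinary C++ exception type and `cache_invalidate` is a counting stub, both assumptions made for the demo; a real system would need the O/S and runtime support listed above. The loop body uses assignment rather than accumulation so that re-running the whole range after a fault gives the same answer.

```cpp
#include <cassert>
#include <vector>

struct Transient_fault {};   // stand-in exception type for a detected fault
static int g_invalidations = 0;
void cache_invalidate() { ++g_invalidations; }  // stub: only counts calls

// Retry the whole range until it completes without a fault.
template <typename LB>
void forall(int begin, int end, LB loop_body) {
  assert(begin <= end);
  bool done = false;
  while (!done) {
    try {
      done = true;
      for (int i = begin; i < end; ++i) loop_body(i);
    } catch (const Transient_fault&) {
      cache_invalidate();
      done = false;
    }
  }
}

// Computes y[i] = a * x[i] and injects one simulated fault at index fault_at.
std::vector<double> scaleWithOneFault(double a, const std::vector<double>& x,
                                      int fault_at) {
  std::vector<double> y(x.size(), 0.0);
  bool fault_pending = true;
  forall(0, static_cast<int>(x.size()), [&](int i) {
    if (fault_pending && i == fault_at) {
      fault_pending = false;
      throw Transient_fault{};
    }
    y[i] = a * x[i];  // idempotent: repeating an iteration does not change y
  });
  return y;
}
```

Had the body been `y[i] += a * x[i]`, the iterations completed before the fault would be applied twice on the retry, which is why idempotence appears in the requirements list.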

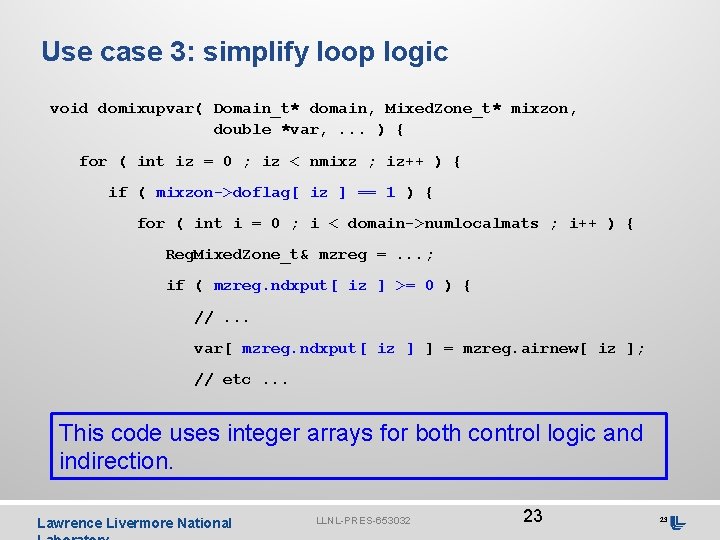

Use case 3: simplify loop logic

    void domixupvar( Domain_t* domain, MixedZone_t* mixzon, double *var, ... )
    {
      for ( int iz = 0 ; iz < nmixz ; iz++ ) {
        if ( mixzon->doflag[ iz ] == 1 ) {
          for ( int i = 0 ; i < domain->numlocalmats ; i++ ) {
            RegMixedZone_t& mzreg = ... ;
            if ( mzreg.ndxput[ iz ] >= 0 ) {
              // ...
              var[ mzreg.ndxput[ iz ] ] = mzreg.airnew[ iz ];
              // etc. ...

This code uses integer arrays for both control logic and indirection.

Use case 3: simplify loop logic

    void domixupvar( Domain_t* domain, MixedZone_t* mixzon, double *var, ... )
    {
      for (int i = 0; i < domain->numlocalmats; i++) {
        int ir = domain->localmats[i];
        RAJA::forall<exec_policy>(*mixzon->reg[ir].ndxput_is, [&] (int iz) {
          // ...
          var[ mzreg.ndxput[ iz ] ] = mzreg.airnew[ iz ];
          // etc. ...

Encoding conditional logic in RAJA index sets simplifies code and removes two levels of nesting (good for developers and compilers!):
• Serial speedup: 1.6x on Intel Sandybridge, 1.9x on IBM BG/Q (g++)
• Aside: compiling the original code w/ g++ 4.7.2 (needed for lambdas) gives 1.99x speedup over XLC. So, going from original w/ XLC to RAJA w/ g++ yields a 3.78x total performance increase.
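The idea of moving the two `if` tests into an index set can be sketched as follows. The function `buildNdxputIndexSet` and the flat `std::vector` arguments are assumptions made for this example; in the slide the index set lives in `mixzon->reg[ir].ndxput_is` and its construction is not shown.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Collect the iz values that pass both tests of the original nested loop:
// doflag[iz] == 1 and ndxput[iz] >= 0. Built once, when the arrays are set up.
std::vector<int> buildNdxputIndexSet(const std::vector<int>& doflag,
                                     const std::vector<int>& ndxput) {
  assert(doflag.size() == ndxput.size());
  std::vector<int> iset;
  for (std::size_t iz = 0; iz < doflag.size(); ++iz) {
    if (doflag[iz] == 1 && ndxput[iz] >= 0) {
      iset.push_back(static_cast<int>(iz));
    }
  }
  return iset;
}

// Sequential traversal over an explicit index list.
template <typename LoopBody>
void forall(const std::vector<int>& iset, LoopBody body) {
  for (int iz : iset) body(iz);
}
```

The loop body then contains only the assignment, for example `forall(iset, [&](int iz) { var[ndxput[iz]] = airnew[iz]; });`, with no branching left inside the traversal.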

Use case 4: reorder loop iterations to enable parallelism
§ A common operation in staggered-mesh codes sums values to nodes from surrounding zones; i.e., nodal_val[ node ] += zonal_val[ zone ]
§ Index set segments can be used to define independent groups of computation (colors)
§ Option A (~8x speedup w/ 16 threads):
• Iterate over groups sequentially (group 1 completes, then group 2, etc.)
• Operations within a group execute in parallel
§ Option B (~17% speedup over option A):
• Zones in a group (row) processed sequentially
• Iterate over rows of each color in parallel
[Figure: zone grids for Option A (zones labeled with colors 1 to 4) and Option B (rows of zones labeled by color)]
§ Note: No source code change needed to switch between iteration / parallel execution patterns.
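Option A can be sketched for a small structured 2D mesh. The four-color rule `(i % 2) + 2 * (j % 2)` and the function names are assumptions chosen for this illustration; the slides do not specify how colors are assigned. The sketch runs sequentially and marks the loop that a threaded execution policy could parallelize.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Group the zones of an nx-by-ny structured mesh into 4 colors so that no two
// zones of the same color share a node.
std::vector<std::vector<int>> colorZones(int nx, int ny) {
  assert(nx > 0 && ny > 0);
  std::vector<std::vector<int>> colors(4);
  for (int j = 0; j < ny; ++j)
    for (int i = 0; i < nx; ++i)
      colors[(i % 2) + 2 * (j % 2)].push_back(i + j * nx);
  return colors;
}

// Sum each zone's value to its four corner nodes, one color group at a time.
std::vector<double> sumZonesToNodes(int nx, int ny,
                                    const std::vector<double>& zonal_val) {
  assert(zonal_val.size() == static_cast<std::size_t>(nx) * ny);
  const int nnx = nx + 1;  // nodes per mesh row
  std::vector<double> nodal_val(static_cast<std::size_t>(nnx) * (ny + 1), 0.0);
  const std::vector<std::vector<int>> colors = colorZones(nx, ny);
  for (const std::vector<int>& group : colors) {  // groups run one after another
    // Zones within one group write to disjoint nodes, so this inner loop is
    // the one that could safely execute in parallel.
    for (int zone : group) {
      const int i = zone % nx;
      const int j = zone / nx;
      const int n0 = i + j * nnx;  // lower-left corner node of the zone
      nodal_val[n0] += zonal_val[zone];
      nodal_val[n0 + 1] += zonal_val[zone];
      nodal_val[n0 + nnx] += zonal_val[zone];
      nodal_val[n0 + nnx + 1] += zonal_val[zone];
    }
  }
  return nodal_val;
}
```

Because same-colored zones are at least two cells apart in i or j, their corner nodes never coincide, which is what removes the write conflict on `nodal_val`.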

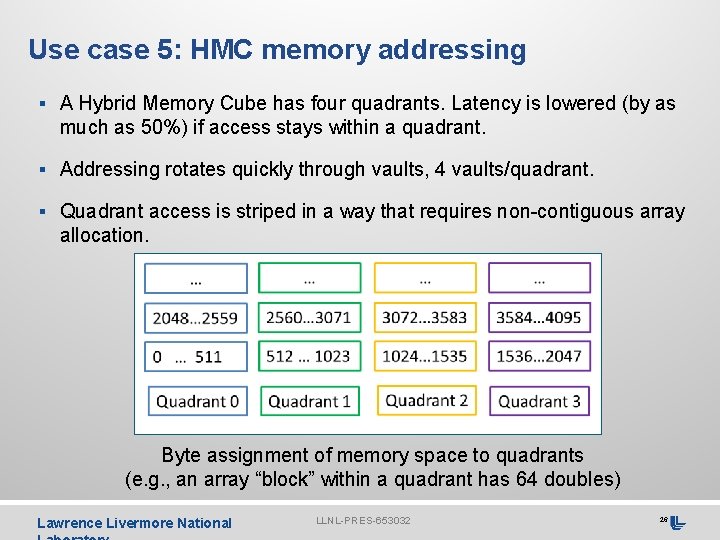

Use case 5: HMC memory addressing
§ A Hybrid Memory Cube has four quadrants. Latency is lowered (by as much as 50%) if access stays within a quadrant.
§ Addressing rotates quickly through vaults, 4 vaults/quadrant.
§ Quadrant access is striped in a way that requires non-contiguous array allocation.
[Figure caption: Byte assignment of memory space to quadrants (e.g., an array “block” within a quadrant has 64 doubles)]

Use case 5: HMC memory addressing
§ A specialized traversal template can be written for the HMC to keep memory access within a quadrant; e.g.,

    #define QUADRANT_SIZE 64
    #define QUADRANT_MASK 63
    #define QUADRANT_STRIDE (QUADRANT_SIZE * 4)

    template <typename LOOP_BODY>
    void forall(int begin, int end, LOOP_BODY loop_body) {
      int beginQuad = (begin / QUADRANT_SIZE);
      int endQuad = ((end - 1) / QUADRANT_SIZE);
      int beginOffset = (beginQuad * QUADRANT_STRIDE) + (begin & QUADRANT_MASK);
      int endOffset = (endQuad * QUADRANT_STRIDE) + ((end - 1) & QUADRANT_MASK) + 1;
      do {
        /* do at most QUADRANT_SIZE iterations */
        for (int ii = beginOffset; ii < endOffset; ++ii) {
          loop_body(ii);
        }
        beginOffset += QUADRANT_STRIDE;
        endOffset += QUADRANT_STRIDE;
      } while (beginQuad++ != endQuad);
    }
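The same idea can be sketched with each pass clamped explicitly to one 64-element block, which makes the "at most QUADRANT_SIZE iterations" intent visible for ranges that span several blocks. This version assumes that logical block k starts at offset k * QUADRANT_STRIDE, a mapping inferred from the macros above rather than stated in the slides, and `offsetsVisited` is a helper invented for the demo.

```cpp
#include <cassert>
#include <vector>

constexpr int QUADRANT_SIZE = 64;                   // doubles per block
constexpr int QUADRANT_MASK = QUADRANT_SIZE - 1;
constexpr int QUADRANT_STRIDE = QUADRANT_SIZE * 4;  // four quadrants

// Visit logical indices [begin, end) and pass the assumed striped offset of
// each one to loop_body, one block at a time.
template <typename LOOP_BODY>
void forall(int begin, int end, LOOP_BODY loop_body) {
  assert(0 <= begin && begin <= end);
  if (begin == end) return;
  const int beginQuad = begin / QUADRANT_SIZE;
  const int endQuad = (end - 1) / QUADRANT_SIZE;
  for (int quad = beginQuad; quad <= endQuad; ++quad) {
    // Only the first and last blocks can be partial.
    const int first = (quad == beginQuad) ? (begin & QUADRANT_MASK) : 0;
    const int last = (quad == endQuad) ? ((end - 1) & QUADRANT_MASK) + 1
                                       : QUADRANT_SIZE;
    const int base = quad * QUADRANT_STRIDE;
    for (int ii = base + first; ii < base + last; ++ii) loop_body(ii);
  }
}

// Helper for inspection: record the offsets a traversal touches.
std::vector<int> offsetsVisited(int begin, int end) {
  std::vector<int> out;
  forall(begin, end, [&](int ii) { out.push_back(ii); });
  return out;
}
```

For example, the logical range [60, 70) touches offsets 60 to 63 in the first block and 256 to 261 in the second, so every access lands in the 64-double block assigned to the same quadrant under the assumed mapping.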

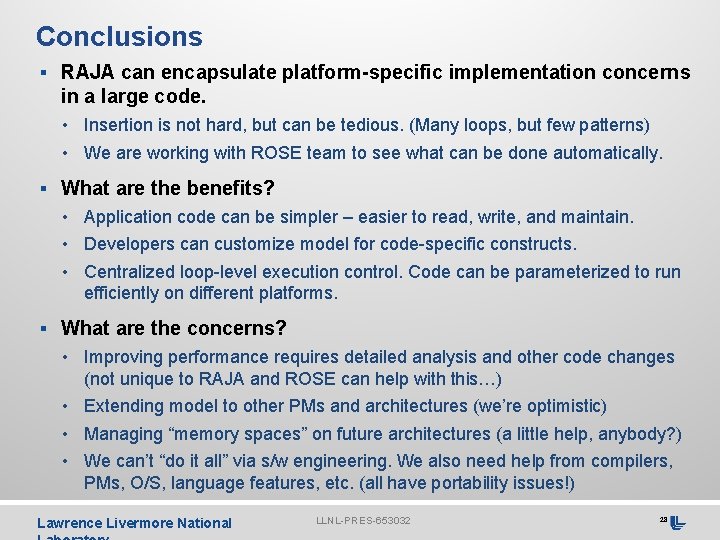

Conclusions
§ RAJA can encapsulate platform-specific implementation concerns in a large code.
• Insertion is not hard, but can be tedious. (Many loops, but few patterns)
• We are working with the ROSE team to see what can be done automatically.
§ What are the benefits?
• Application code can be simpler – easier to read, write, and maintain.
• Developers can customize the model for code-specific constructs.
• Centralized loop-level execution control. Code can be parameterized to run efficiently on different platforms.
§ What are the concerns?
• Improving performance requires detailed analysis and other code changes (not unique to RAJA, and ROSE can help with this…)
• Extending the model to other PMs and architectures (we’re optimistic)
• Managing “memory spaces” on future architectures (a little help, anybody?)
• We can’t “do it all” via s/w engineering. We also need help from compilers, PMs, O/S, language features, etc. (all have portability issues!)

Acknowledgements
§ Jeff Keasler, my collaborator on RAJA development
§ Esteban Pauli, “guinea pig” for trying out RAJA in Ares

The end.
