The PTX GPU Assembly Simulator and Interpreter

N. M. Stiffler, Zheming Jin, Ibrahim Savran

Parallel Thread Execution – PTX • Pseudo-assembly language used in NVIDIA’s CUDA programming environment

Compute Unified Device Architecture – CUDA
• Parallel architecture developed by NVIDIA
• Used in NVIDIA’s GPUs
• Using CUDA, the latest NVIDIA GPUs effectively become open architectures like CPUs.
▫ Perk: GPUs have a parallel architecture whose cores together can run thousands of threads concurrently
▫ If a given application is parallelizable, the GPU can offer large performance benefits.

Visual

Accessing the GPU
• A subset of C with NVIDIA extensions
▫ Why a subset of C? Device code is recursion-free and function-pointer-free

Tying It Together

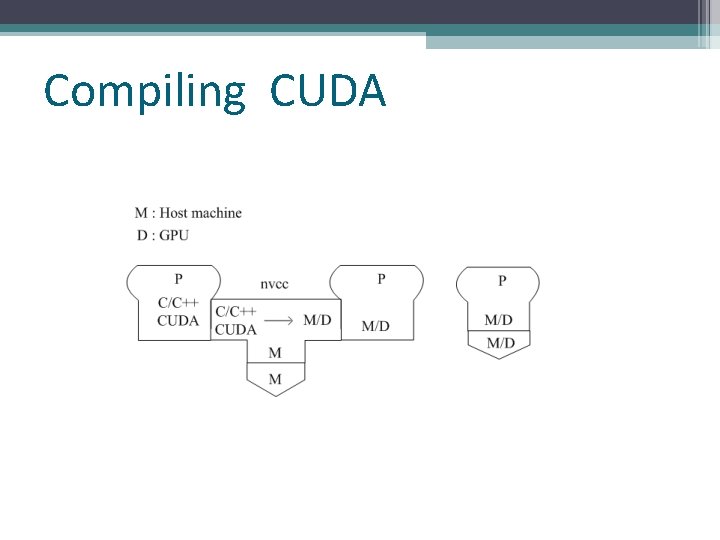

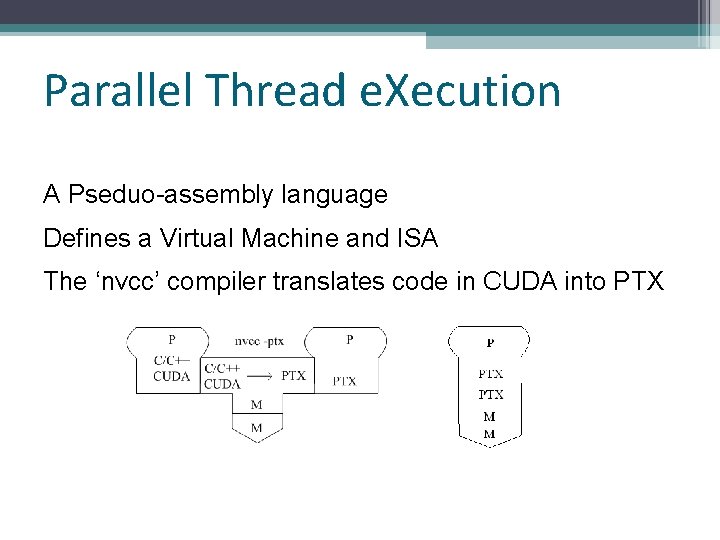

NVCC
• The 'nvcc' compiler (NVIDIA's CUDA compiler driver) translates code written in CUDA into PTX
• The GPU driver contains a compiler that translates the PTX into machine code that can run on the processing cores.

CSCE 513 Course Presentation Zheming Jin

Compiling CUDA

Parallel Thread eXecution
• A pseudo-assembly language
• Defines a virtual machine and ISA
• The 'nvcc' compiler translates code in CUDA into PTX

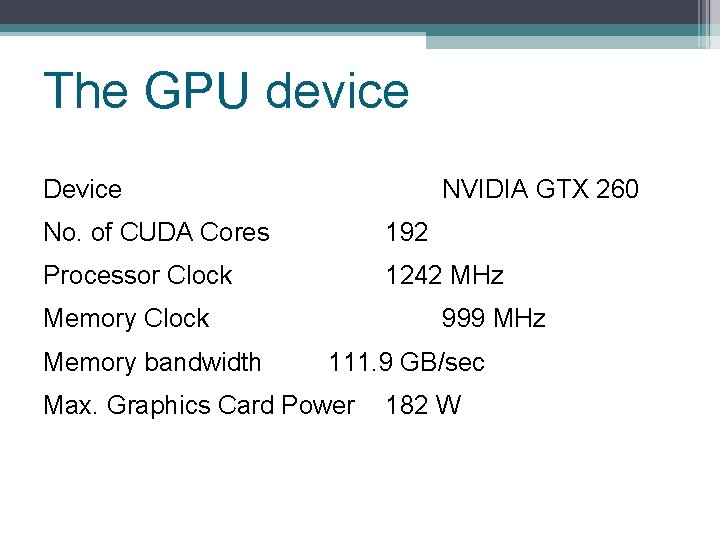

The GPU device
• Device: NVIDIA GTX 260
• No. of CUDA cores: 192
• Processor clock: 1242 MHz
• Memory clock: 999 MHz
• Memory bandwidth: 111.9 GB/sec
• Max. graphics card power: 182 W

Ocelot Project (cont.)
• Aims to compile CUDA programs so that they can be run on architectures other than NVIDIA GPUs
• Allows architectures other than NVIDIA GPUs to leverage the parallelism in PTX
• The project is freely available on Google Code.

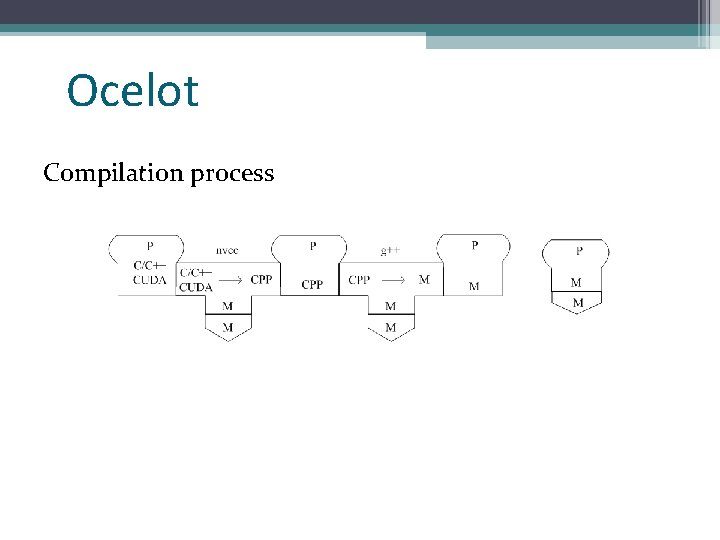

Ocelot Compilation process

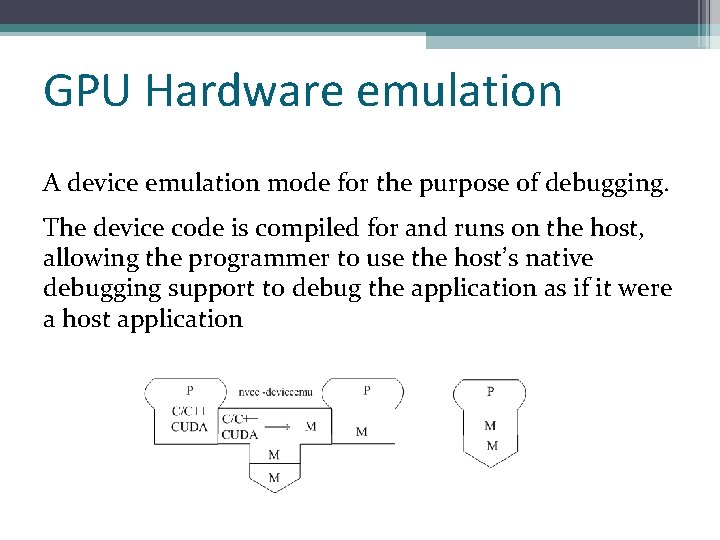

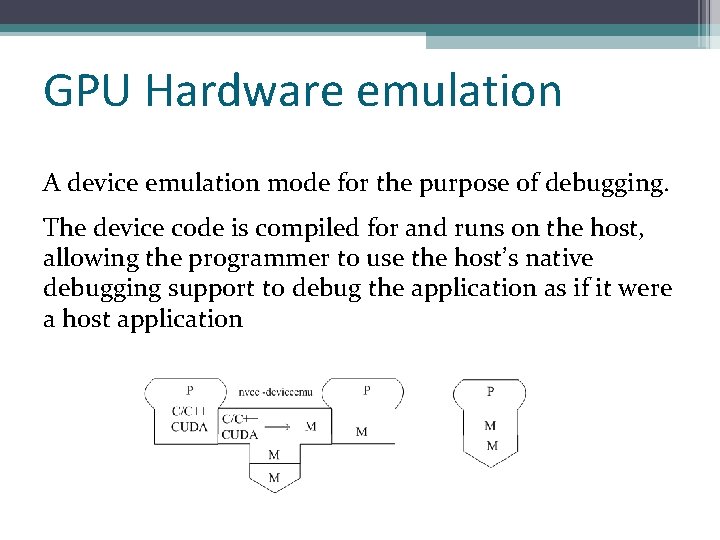

GPU Hardware emulation A device emulation mode for the purpose of debugging. The device code is compiled for and runs on the host, allowing the programmer to use the host’s native debugging support to debug the application as if it were a host application
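The idea of running device code on the host can be sketched in a few lines. This is only an illustration of the principle, not how NVIDIA's emulation mode is implemented: the kernel body becomes an ordinary host function and the "launch" is a sequential loop over thread indices, so any host debugger can step through it. The names `add_two_kernel` and `launch_emulated` are hypothetical.

```python
from types import SimpleNamespace


def add_two_kernel(thread_idx, a):
    """Body of one device thread: each thread updates one array element."""
    i = thread_idx.x
    a[i] = a[i] + 2.0


def launch_emulated(kernel, num_threads, *args):
    """Emulate a one-block launch by running the kernel once per thread index.

    Threads run one after another on the host, so breakpoints and prints
    behave exactly as in any host program.
    """
    for tid in range(num_threads):
        kernel(SimpleNamespace(x=tid), *args)


data = [0.0, 1.0, 2.0, 3.0]
launch_emulated(add_two_kernel, len(data), data)
print(data)  # [2.0, 3.0, 4.0, 5.0]
```

A sequential loop is enough here because the threads never communicate; a kernel that uses barriers would need real host threads or some other way to interleave the thread bodies.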

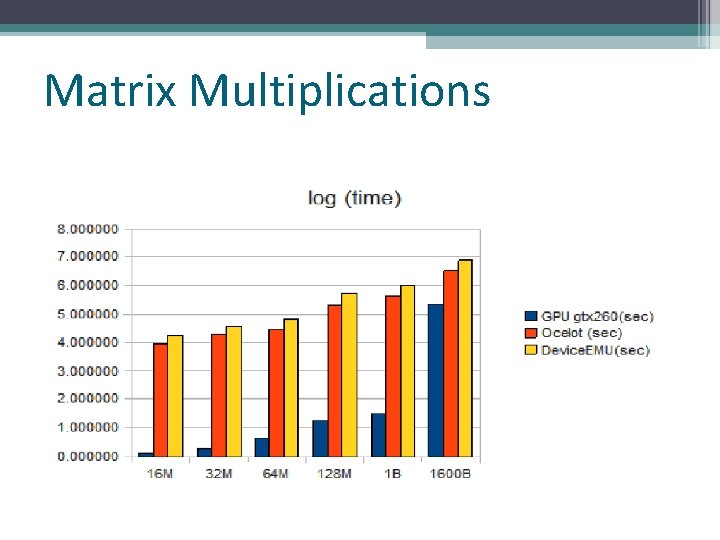

Matrix Multiplications

Ibrahim Savran CUDA PTX & an Interpreter

Outline
• Some Notes About CUDA Infrastructure
• A CUDA Example and PTX
• PTX Overview
  – The goals of PTX
  – PTX Instructions (some)
• Case Study: PTX Simulator
• Interpretation Process Steps
• Demo Time
• Q/A Section
• References

Caveats
• There’s a lot not detailed in the manuals
  – Bit-level formats of instructions
  – Handling of divergent thread paths
  – What kinds of variables are stored where

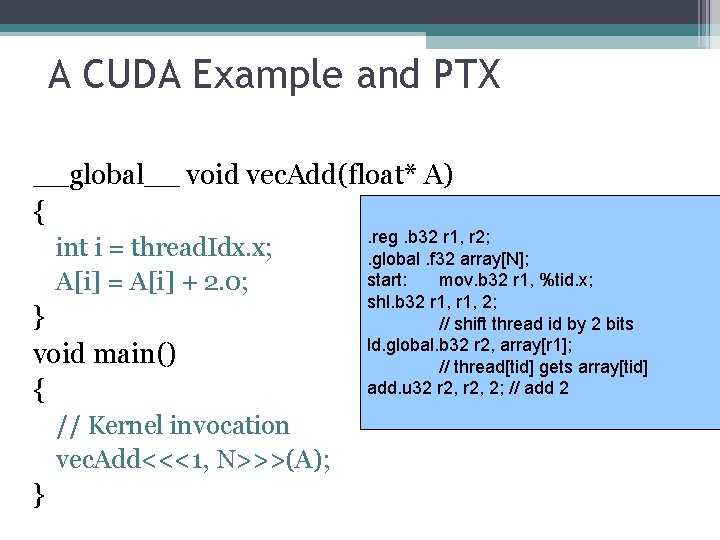

A CUDA Example and PTX

CUDA source:

    __global__ void vecAdd(float* A)
    {
        int i = threadIdx.x;
        A[i] = A[i] + 2.0f;
    }

    int main()
    {
        // Kernel invocation: one block of N threads
        vecAdd<<<1, N>>>(A);
    }

PTX:

    .reg .b32 r1, r2;
    .global .f32 array[N];

    start:
        mov.b32       r1, %tid.x;
        shl.b32       r1, r1, 2;       // shift thread id by 2 bits (byte offset of a 4-byte float)
        ld.global.b32 r2, array[r1];   // thread[tid] gets array[tid]
        add.f32       r2, r2, 2.0;     // add 2
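To make the per-thread meaning of the PTX fragment concrete, here is a minimal interpreter sketch for just these instructions. Several things are assumptions for illustration and are not from the slide: the instructions are written in three-operand form, global memory is modelled as a flat byte array with the float array at address 0, the load uses a plain `[r1]` address, and a final `st.global.f32` is added so the result becomes visible in memory.

```python
import struct

# PTX-like program, one instruction per line (illustrative, not nvcc output).
PROGRAM = """
mov.b32 r1, %tid.x
shl.b32 r1, r1, 2
ld.global.f32 r2, [r1]
add.f32 r2, r2, 2.0
st.global.f32 [r1], r2
"""


def run_thread(program, tid, memory):
    """Interpret the program for one thread; registers are private to it."""
    regs = {}

    def value(operand):
        # Special register, then named register, then numeric literal.
        if operand == "%tid.x":
            return tid
        if operand in regs:
            return regs[operand]
        return float(operand) if "." in operand else int(operand)

    for line in program.strip().splitlines():
        opcode, _, rest = line.strip().partition(" ")
        ops = [o.strip() for o in rest.split(",")]
        name = opcode.split(".")[0]
        if name == "mov":
            regs[ops[0]] = value(ops[1])
        elif name == "shl":
            regs[ops[0]] = value(ops[1]) << value(ops[2])
        elif name == "add":
            regs[ops[0]] = value(ops[1]) + value(ops[2])
        elif name == "ld":
            addr = value(ops[1].strip("[]"))
            regs[ops[0]] = struct.unpack_from("<f", memory, addr)[0]
        elif name == "st":
            addr = value(ops[0].strip("[]"))
            struct.pack_into("<f", memory, addr, value(ops[1]))
        else:
            raise ValueError(f"unsupported opcode: {opcode}")


n = 4
memory = bytearray(struct.pack(f"<{n}f", 0.0, 1.0, 2.0, 3.0))
for tid in range(n):  # one interpreter pass per thread
    run_thread(PROGRAM, tid, memory)
result = list(struct.unpack(f"<{n}f", memory))
print(result)  # [2.0, 3.0, 4.0, 5.0]
```

The shift by 2 turns the thread id into a byte offset, which is why thread `tid` touches exactly the four bytes of element `tid`.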

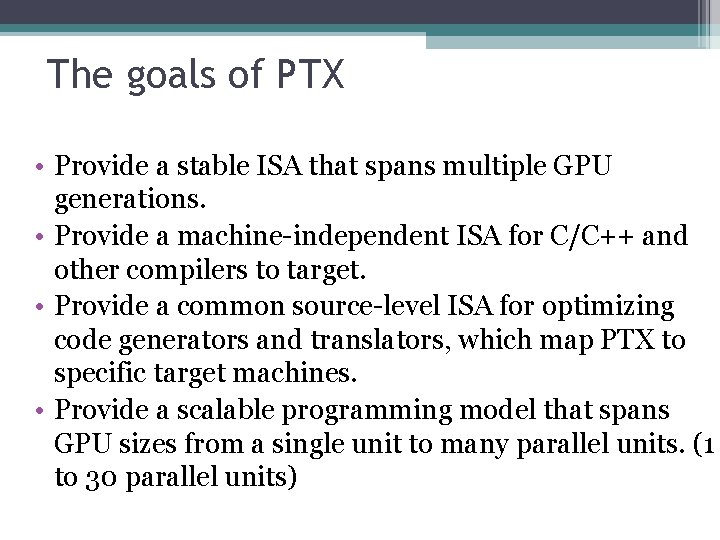

The goals of PTX
• Provide a stable ISA that spans multiple GPU generations.
• Provide a machine-independent ISA for C/C++ and other compilers to target.
• Provide a common source-level ISA for optimizing code generators and translators, which map PTX to specific target machines.
• Provide a scalable programming model that spans GPU sizes from a single unit to many parallel units (1 to 30 parallel units).

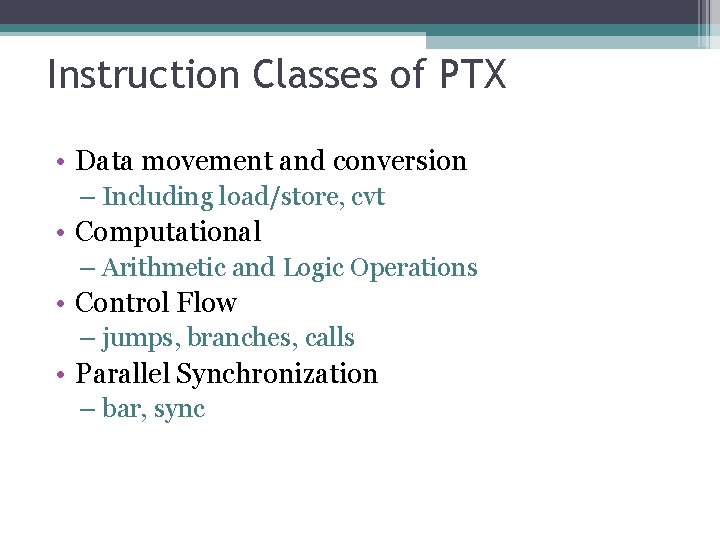

Instruction Classes of PTX
• Data movement and conversion – including load/store (ld, st) and cvt
• Computational – arithmetic and logic operations
• Control flow – jumps, branches, calls
• Parallel synchronization – barriers, e.g. bar.sync

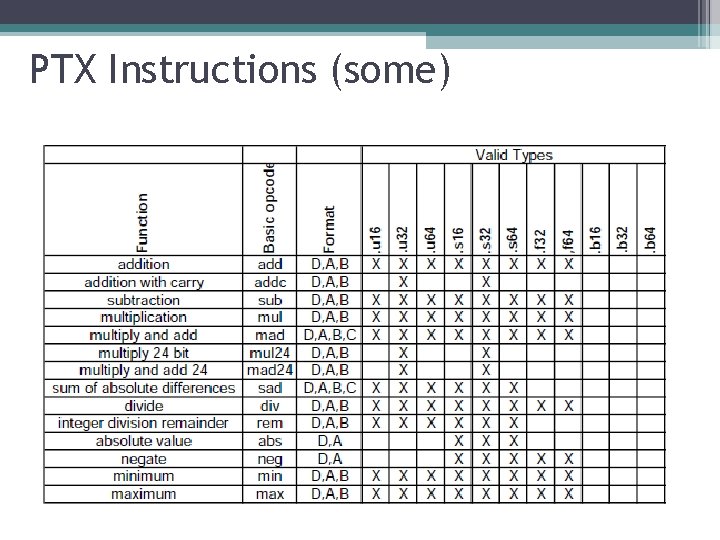

PTX Instructions (some)

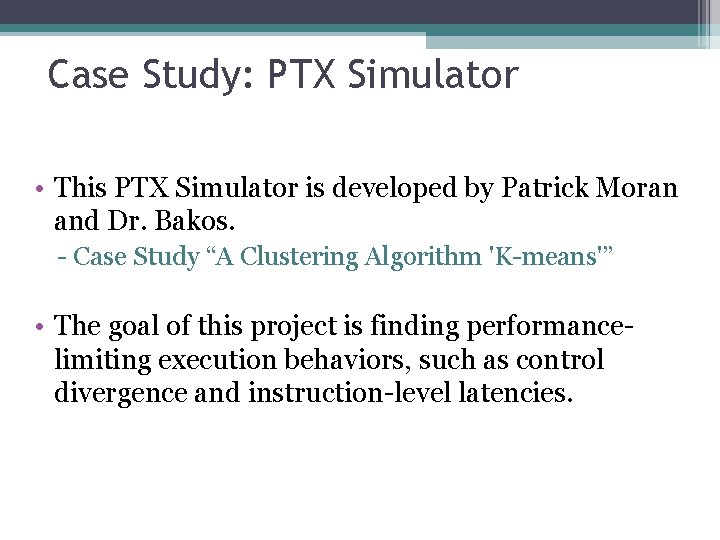

Case Study: PTX Simulator
• This PTX simulator was developed by Patrick Moran and Dr. Bakos.
  – Case study: a clustering algorithm, K-means
• The goal of the project is to find performance-limiting execution behaviors, such as control divergence and instruction-level latencies.
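As a rough illustration of what "control divergence" means, the sketch below flags warps whose threads disagree on a branch condition. It is not taken from the Moran and Bakos simulator; the function name and the input format (one boolean per thread) are assumptions, and the warp size of 32 is the usual value on NVIDIA GPUs.

```python
WARP_SIZE = 32  # threads that execute a branch together on NVIDIA GPUs


def divergent_warps(predicates, warp_size=WARP_SIZE):
    """Return the indices of warps whose threads disagree on a branch.

    predicates[t] is True if thread t takes the branch. A warp diverges
    when some, but not all, of its threads take it, so both paths must
    be executed one after the other.
    """
    diverged = []
    for start in range(0, len(predicates), warp_size):
        warp = predicates[start:start + warp_size]
        if any(warp) and not all(warp):
            diverged.append(start // warp_size)
    return diverged


# Warp 0 branches on "tid is even" (threads disagree);
# warp 1 branches on "tid < 64" (all threads agree).
preds = [tid % 2 == 0 for tid in range(32)] + [tid < 64 for tid in range(32, 64)]
print(divergent_warps(preds))  # [0]
```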

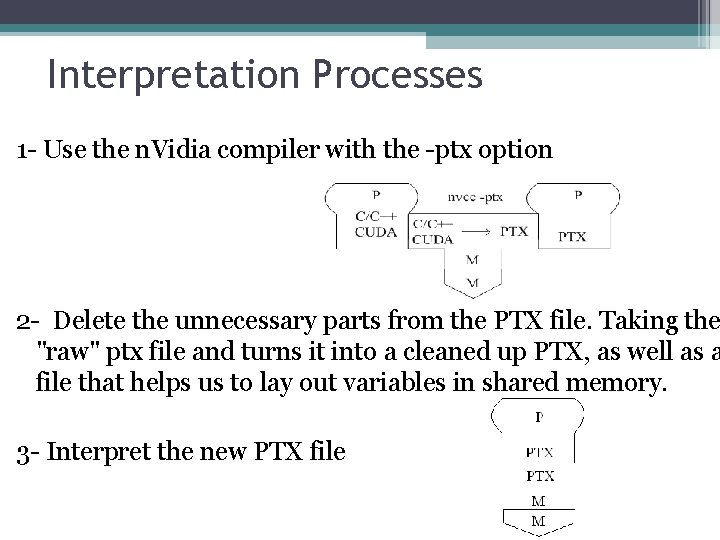

Interpretation Process
1. Compile the CUDA source with the NVIDIA compiler (nvcc) using the -ptx option.
2. Delete the unnecessary parts from the PTX file. This step takes the "raw" PTX file and turns it into a cleaned-up PTX file, plus a second file that helps us lay out variables in shared memory.
3. Interpret the new PTX file.
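Step 2 can be sketched as a small text filter. The slides do not say which parts count as unnecessary or what the layout file looks like, so everything specific here is an assumption: comments, blank lines and the debug directives `.file` and `.loc` are dropped, and `.shared` declarations are moved out of the PTX into a name-to-byte-offset table. The sample input is hand-written, not real nvcc output.

```python
import re

# Matches e.g. ".shared .f32 tile[16];" or ".shared .u32 count;"
SHARED_DECL = re.compile(
    r"\.shared\s+(?:\.align\s+\d+\s+)?\.([bsuf])(\d+)\s+(\w+)(?:\[(\d+)\])?\s*;"
)


def clean_ptx(raw):
    """Return (cleaned_lines, shared_layout) for a raw PTX string."""
    cleaned, layout, offset = [], {}, 0
    for line in raw.splitlines():
        line = line.split("//", 1)[0].strip()  # drop comments
        if not line or line.startswith((".file", ".loc")):
            continue  # drop blank lines and debug directives
        decl = SHARED_DECL.match(line)
        if decl:
            # element size in bytes times element count (1 for scalars)
            size = int(decl.group(2)) // 8 * int(decl.group(4) or 1)
            layout[decl.group(3)] = offset
            offset += size
            continue
        cleaned.append(line)
    return cleaned, layout


RAW = """
    .version 1.4
    .file 1 "kernel.cu"
    .shared .f32 tile[16];
    .shared .u32 count;
    // per-thread work starts here
    .loc 1 7 0
    mov.u32 %r1, %tid.x;   // thread index
    bar.sync 0;
"""

cleaned, layout = clean_ptx(RAW)
print(cleaned)  # ['.version 1.4', 'mov.u32 %r1, %tid.x;', 'bar.sync 0;']
print(layout)   # {'tile': 0, 'count': 64}
```

Packing shared variables back to back keeps the sketch short; a real layout would also have to respect alignment requirements.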

Demo Time

Q/A Session ?

References
• NVIDIA Corporation, NVIDIA CUDA™ Programming Guide, Version 2.3.
• Introduction to CUDA Programming
• Ibrahim Savran’s notes
• Course textbook
• P. A. Moran, J. D. Bakos, “A PTX Simulator for Performance Tuning CUDA Code”
