The Presidency Department of Performance Monitoring and Evaluation

- Slides: 28

Update on national evaluation system and call for evaluations

Dr Ian Goldman, Head of Evaluation and Research

Presentation to Mineral Resources Portfolio Committee, 19 June 2013

Outline of Presentation

- Reminder of evaluation system
- Update on evaluations and the system
  - Example of findings from ECD
  - Issues emerging
- Status of evaluations underway and recommended
- Implications for portfolio committees

Key messages

- Evaluations provide a very important tool for portfolio committees to get an in-depth look at how policies and programmes are performing, and how they need to change.
- It is important for improving performance that committees do use this information, so that departments are accountable: not to punish them, but to ensure they are problem-solving and improving the effectiveness and impact of their work, and not wasting public funds.
- Where Portfolio Committees have concerns about existing or new policies or programmes, they can ask departments to undertake rigorous independent evaluations, either historical ones or effective diagnostic evaluations prior to a new programme or policy.
- DPME will ensure committees are informed of all evaluations being undertaken and will report regularly to the Chairs.

1. Reminder on evaluation system

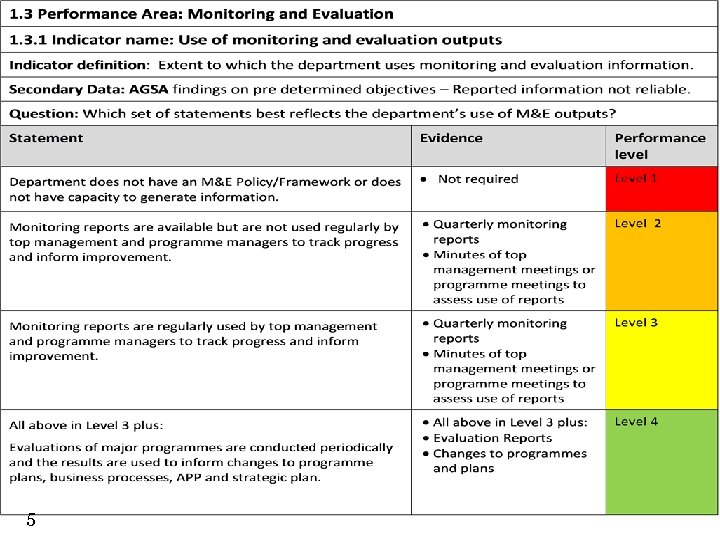

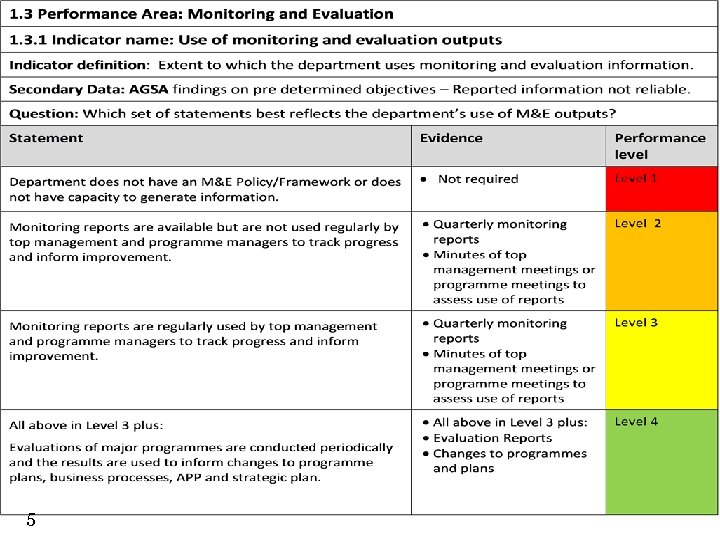


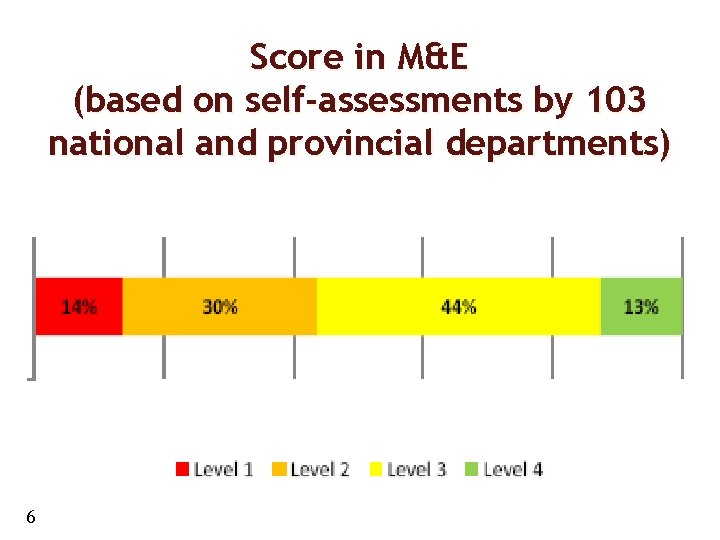

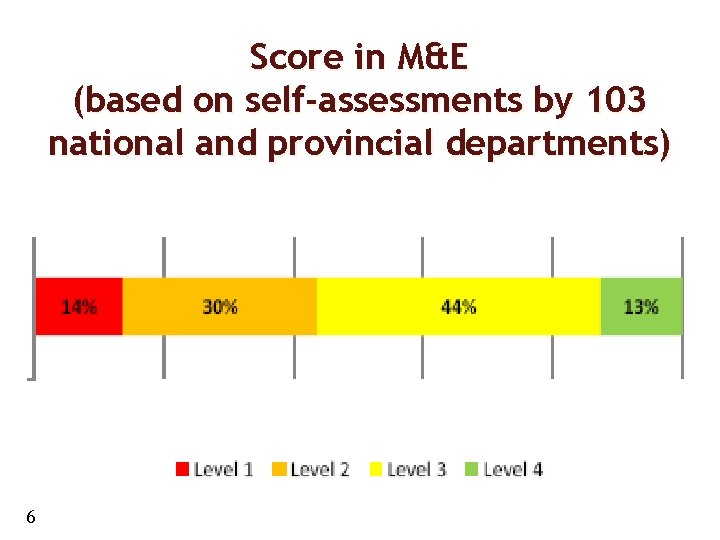

Score in M&E (based on self-assessments by 103 national and provincial departments)

Problem

- Evaluation is applied sporadically and is not informing planning, policy-making and budgeting sufficiently, so we are missing the opportunity to improve Government’s effectiveness, efficiency, impact and sustainability.

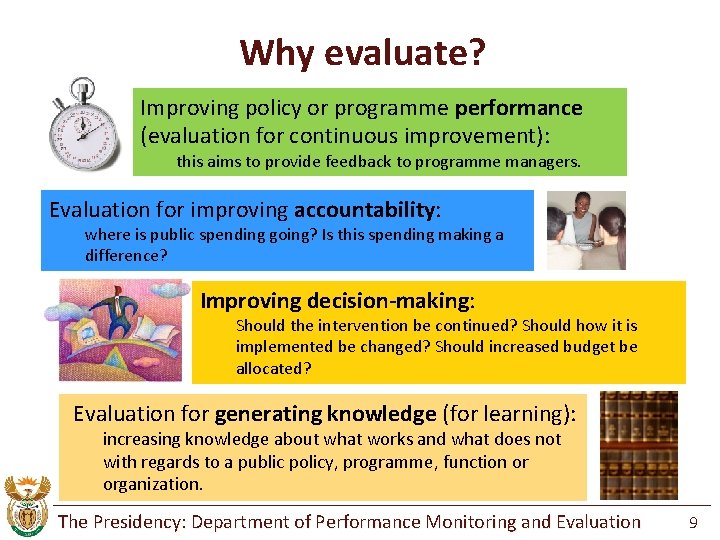

Why evaluate?

- Improving policy or programme performance (evaluation for continuous improvement): this aims to provide feedback to programme managers.
- Evaluation for improving accountability: where is public spending going? Is this spending making a difference?
- Improving decision-making: should the intervention be continued? Should how it is implemented be changed? Should increased budget be allocated?
- Evaluation for generating knowledge (for learning): increasing knowledge about what works and what does not with regard to a public policy, programme, function or organization.

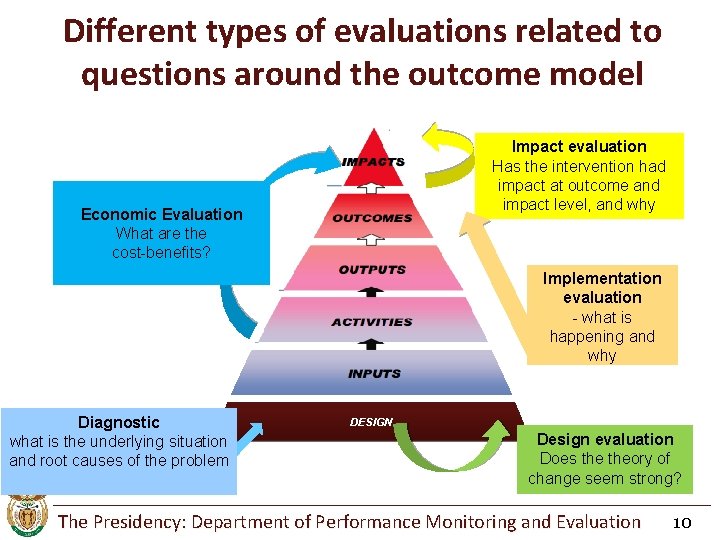

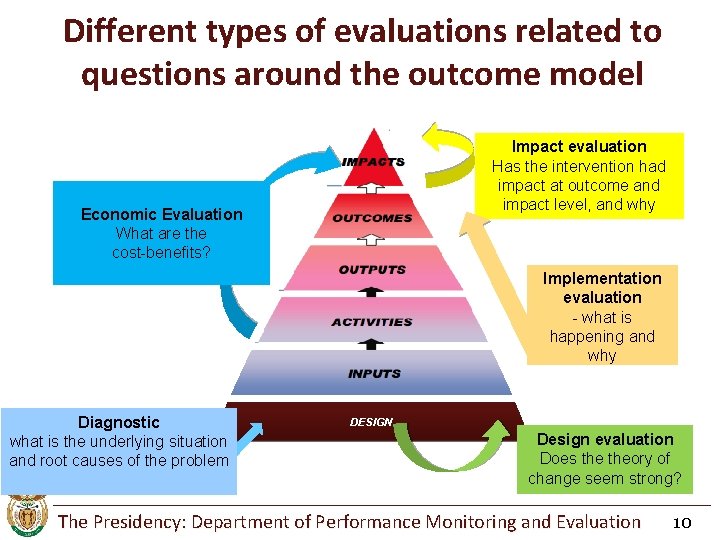

Different types of evaluations related to questions around the outcome model

- Impact evaluation: has the intervention had impact at outcome and impact level, and why?
- Economic evaluation: what are the cost-benefits?
- Implementation evaluation: what is happening and why?
- Diagnostic: what is the underlying situation and what are the root causes of the problem?
- Design evaluation: does the theory of change seem strong?

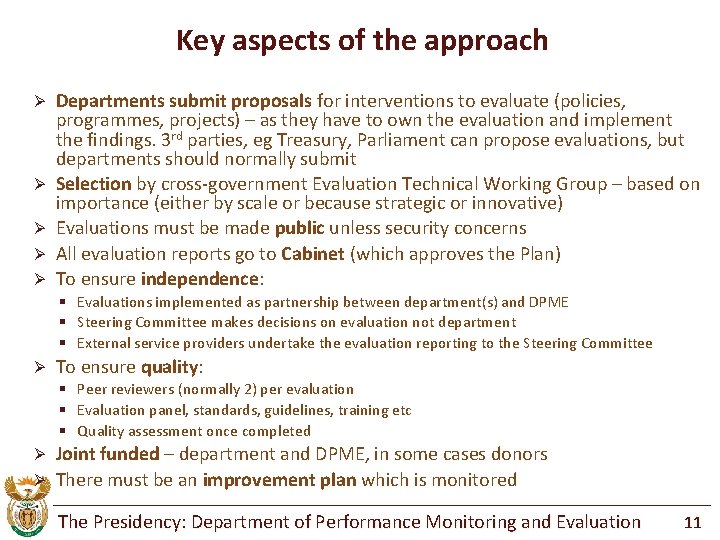

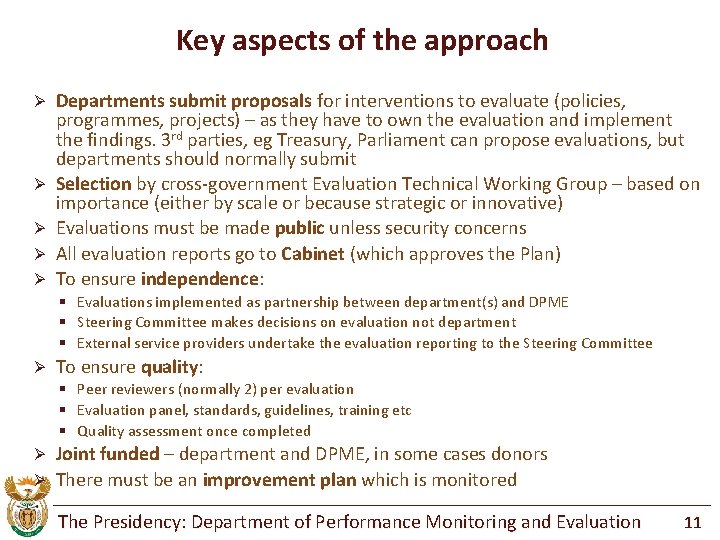

Key aspects of the approach

- Departments submit proposals for interventions to evaluate (policies, programmes, projects), as they have to own the evaluation and implement the findings.
- Third parties, e.g. Treasury or Parliament, can propose evaluations, but departments should normally submit.
- Selection by cross-government Evaluation Technical Working Group, based on importance (either by scale or because strategic or innovative).
- Evaluations must be made public unless there are security concerns.
- All evaluation reports go to Cabinet (which approves the Plan).
- To ensure independence:
  - Evaluations implemented as partnership between department(s) and DPME
  - Steering Committee, not the department, makes decisions on the evaluation
  - External service providers undertake the evaluation, reporting to the Steering Committee
- To ensure quality:
  - Peer reviewers (normally 2) per evaluation
  - Evaluation panel, standards, guidelines, training etc.
  - Quality assessment once completed
- Jointly funded by department and DPME, in some cases donors.
- There must be an improvement plan, which is monitored.

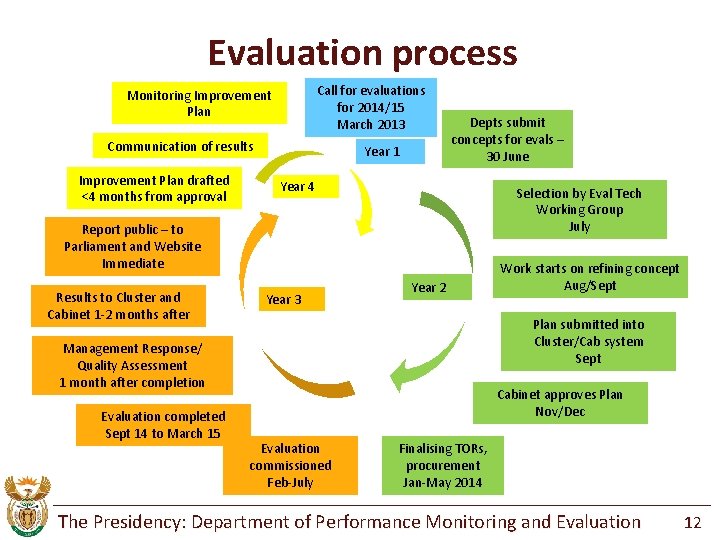

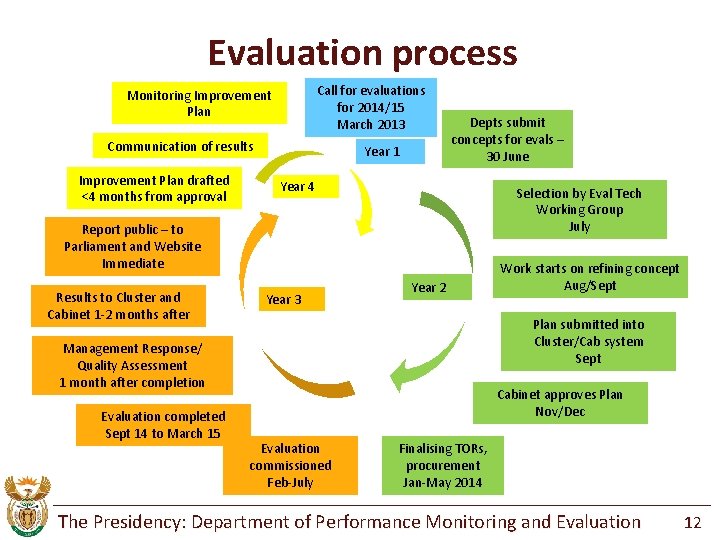

Evaluation process (cycle diagram, labelled Year 1 to Year 4)

- Call for evaluations for 2014/15: March 2013
- Departments submit concepts for evaluations: 30 June
- Selection by Evaluation Technical Working Group: July
- Work starts on refining concept: Aug/Sept
- Plan submitted into Cluster/Cabinet system: Sept
- Cabinet approves Plan: Nov/Dec
- Finalising TORs, procurement: Jan-May 2014
- Evaluation commissioned: Feb-July
- Evaluation completed: Sept 14 to March 15
- Management response/quality assessment: 1 month after completion
- Results to Cluster and Cabinet: 1-2 months after
- Report public (to Parliament and website): immediate
- Improvement Plan drafted: <4 months from approval
- Communication of results
- Monitoring of Improvement Plan

2. Update on evaluations and the system

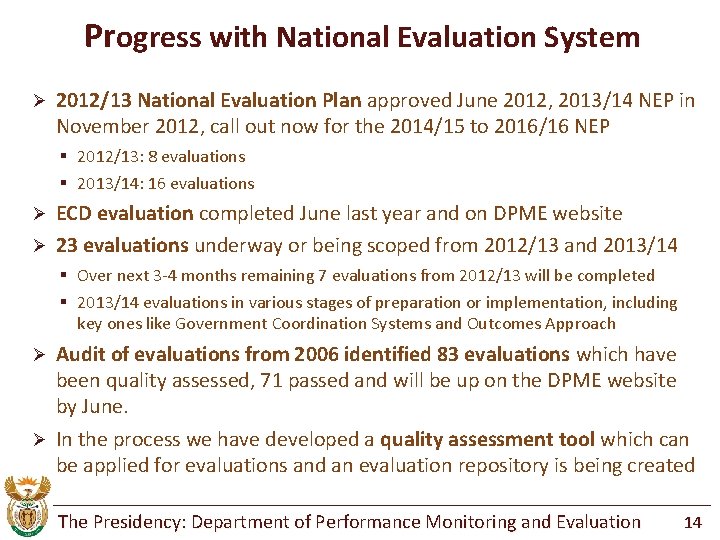

Progress with National Evaluation System

- 2012/13 National Evaluation Plan (NEP) approved June 2012, 2013/14 NEP in November 2012; call out now for the 2014/15 to 2016/17 NEP
  - 2012/13: 8 evaluations
  - 2013/14: 16 evaluations
- ECD evaluation completed June last year and on DPME website
- 23 evaluations underway or being scoped from 2012/13 and 2013/14
  - Over the next 3-4 months the remaining 7 evaluations from 2012/13 will be completed
  - 2013/14 evaluations in various stages of preparation or implementation, including key ones like Government Coordination Systems and Outcomes Approach
- Audit of evaluations from 2006 identified 83 evaluations which have been quality assessed; 71 passed and will be up on the DPME website by June.
- In the process we have developed a quality assessment tool which can be applied for evaluations, and an evaluation repository is being created

Progress (2)

- More than 10 guidelines and templates, ranging from TORs to Improvement Plans
- Standards for evaluations and competences drafted and out for consultation, and standards have guided the quality assessment tool
- 2 courses developed, over 200 government staff trained
- Evaluation panel developed with 42 organisations, which simplifies procurement
- Gauteng and Western Cape provinces have developed provincial evaluation plans (PEPs). DPME is working with other provinces who wish to develop PEPs, starting with Free State
- 1 department (dti) has developed a departmental evaluation plan

3. What are we finding?

Findings on Early Childhood Development (ECD)

- Report on DPME website
- Need to focus on children from conception, not from birth, which requires changes in Children’s Act
- Very small numbers of the youngest children (0-2 years old) are in formal early child care and education (ECCE) centres. That, plus the emphasis on pregnancy, means greater involvement of Health
- Poorer children still don’t have sufficient access. Need to prioritise.
- Current provision privileges children who can access centre-based services and whose families can afford fees, rather than home- and community-based provision
- Need to widen set of services available
- Inter-sectoral coordination mechanism for providing ECD and associated services needs to be strengthened
- Improvement Plan being implemented

Next evaluations to be public

- Grade R
- Business Process Outsourcing
- Both are coming up with significant findings which have major implications for how the programmes are designed

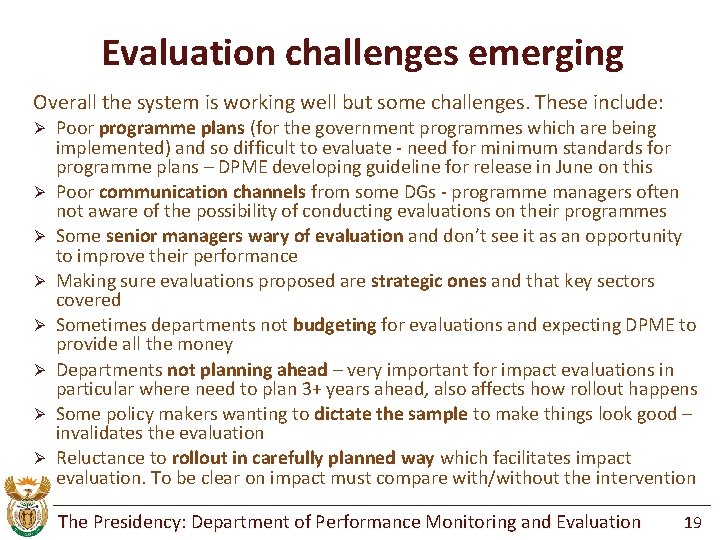

Evaluation challenges emerging

Overall the system is working well, but there are some challenges. These include:

- Poor programme plans (for the government programmes which are being implemented), which makes them difficult to evaluate. There is a need for minimum standards for programme plans; DPME is developing a guideline on this for release in June
- Poor communication channels from some DGs: programme managers are often not aware of the possibility of conducting evaluations on their programmes
- Some senior managers are wary of evaluation and don’t see it as an opportunity to improve their performance
- Making sure evaluations proposed are strategic ones and that key sectors are covered
- Sometimes departments are not budgeting for evaluations and expect DPME to provide all the money
- Departments not planning ahead, which is very important for impact evaluations in particular, where there is a need to plan 3+ years ahead; this also affects how rollout happens
- Some policy makers want to dictate the sample to make things look good, which invalidates the evaluation
- Reluctance to roll out in a carefully planned way which facilitates impact evaluation. To be clear on impact, one must compare with/without the intervention

4. Status with evaluations

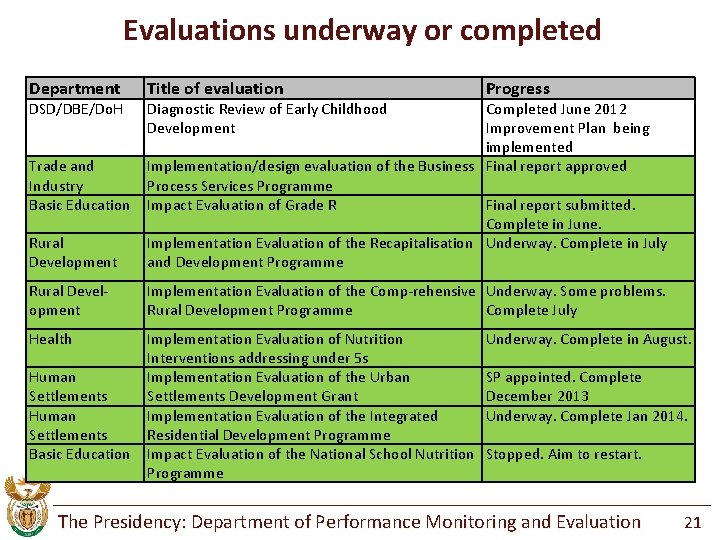

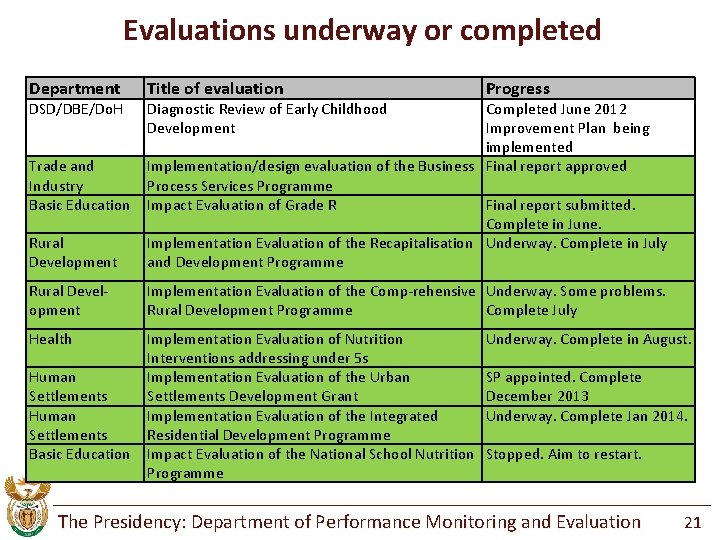

Evaluations underway or completed

| Department | Title of evaluation | Progress |
| --- | --- | --- |
| DSD/DBE/DoH | Diagnostic Review of Early Childhood Development | Completed June 2012. Improvement Plan being implemented |
| Trade and Industry | Implementation/design evaluation of the Business Process Services Programme | Final report approved |
| Basic Education | Impact Evaluation of Grade R | Final report submitted. Complete in June. |
| Rural Development | Implementation Evaluation of the Recapitalisation and Development Programme | Underway. Complete in July |
| Rural Development | Implementation Evaluation of the Comprehensive Rural Development Programme | Underway. Some problems. Complete July |
| Health | Implementation Evaluation of Nutrition Interventions addressing under 5s | Underway. Complete in August. |
| Human Settlements | Implementation Evaluation of the Urban Settlements Development Grant | SP appointed. Complete December 2013 |
| Human Settlements | Implementation Evaluation of the Integrated Residential Development Programme | Underway. Complete Jan 2014. |
| Basic Education | Impact Evaluation of the National School Nutrition Programme | Stopped. Aim to restart. |

Evaluations for 2013/14 (in various stages of preparation or implementation)

1. Evaluation of Export Marketing Investment Assistance incentive programme (DTI).
2. Evaluation of Support Programme for Industrial Innovation (DTI).
3. Impact evaluation of Technology and Human Resources for Industry programme (DTI).
4. Evaluation of Military Veterans Economic Empowerment Programme (Military Veterans).
5. Impact evaluation on Tax Compliance Cost of Small Businesses (SARS).
6. Impact evaluation of the Comprehensive Agriculture Support Programme (DAFF).
7. Evaluation of MAFISA (brought forward from 2014/15).
8. Evaluation of the Socio-Economic Impact of Restitution programme (DRDLR).

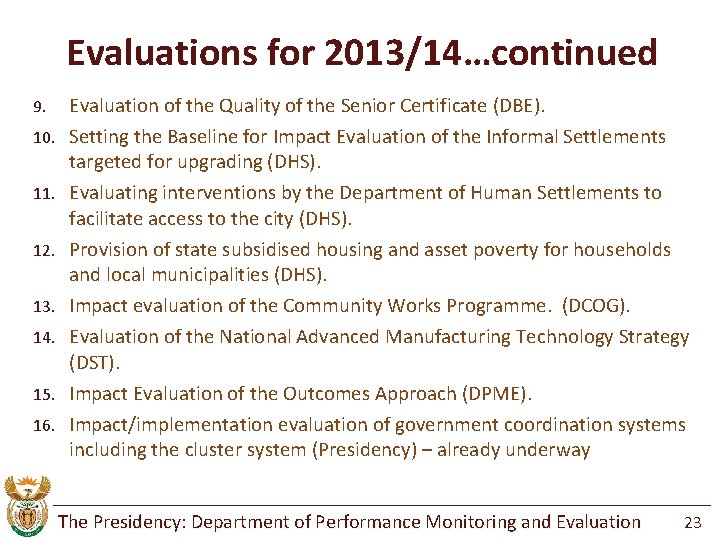

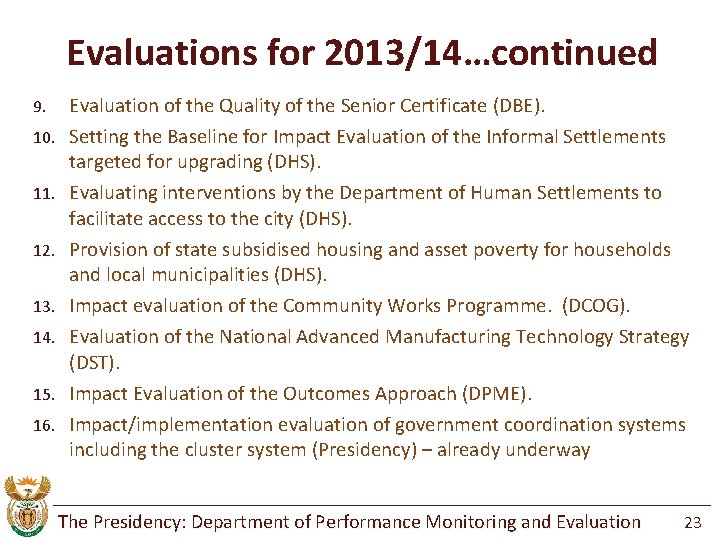

Evaluations for 2013/14…continued

9. Evaluation of the Quality of the Senior Certificate (DBE).
10. Setting the Baseline for Impact Evaluation of the Informal Settlements targeted for upgrading (DHS).
11. Evaluating interventions by the Department of Human Settlements to facilitate access to the city (DHS).
12. Provision of state subsidised housing and asset poverty for households and local municipalities (DHS).
13. Impact evaluation of the Community Works Programme (DCOG).
14. Evaluation of the National Advanced Manufacturing Technology Strategy (DST).
15. Impact Evaluation of the Outcomes Approach (DPME).
16. Impact/implementation evaluation of government coordination systems including the cluster system (Presidency), already underway

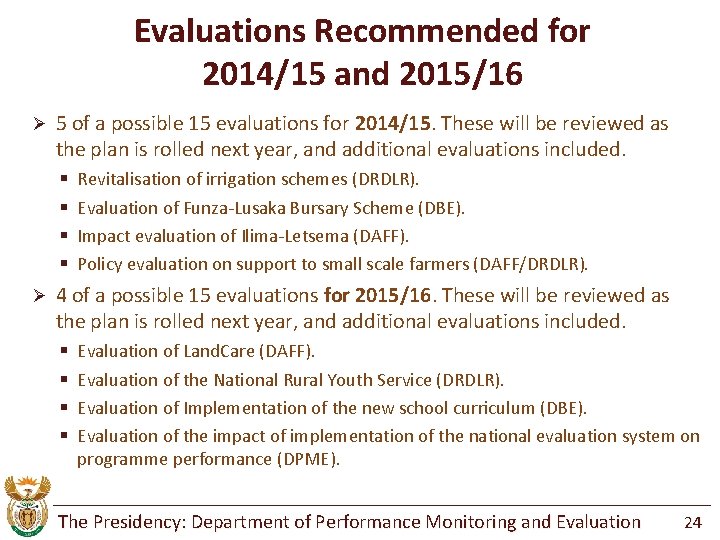

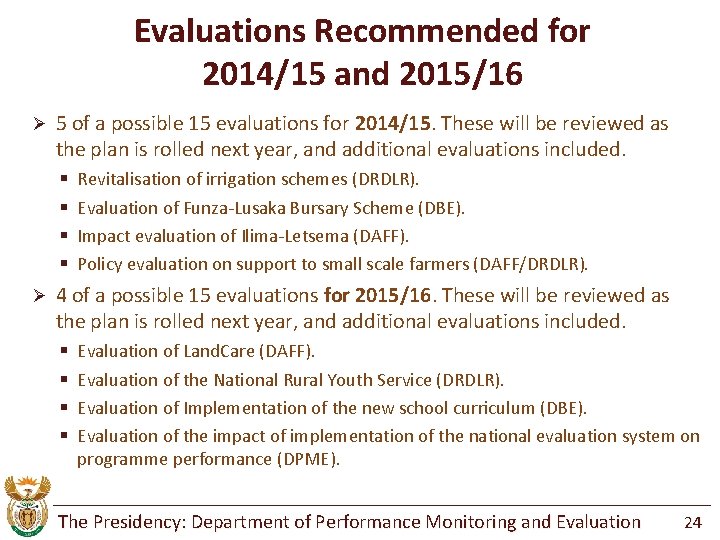

Evaluations Recommended for 2014/15 and 2015/16

- 5 of a possible 15 evaluations for 2014/15. These will be reviewed as the plan is rolled next year, and additional evaluations included.
  - Revitalisation of irrigation schemes (DRDLR).
  - Evaluation of Funza Lushaka Bursary Scheme (DBE).
  - Impact evaluation of Ilima-Letsema (DAFF).
  - Policy evaluation on support to small scale farmers (DAFF/DRDLR).
- 4 of a possible 15 evaluations for 2015/16. These will be reviewed as the plan is rolled next year, and additional evaluations included.
  - Evaluation of LandCare (DAFF).
  - Evaluation of the National Rural Youth Service (DRDLR).
  - Evaluation of Implementation of the new school curriculum (DBE).
  - Evaluation of the impact of implementation of the national evaluation system on programme performance (DPME).

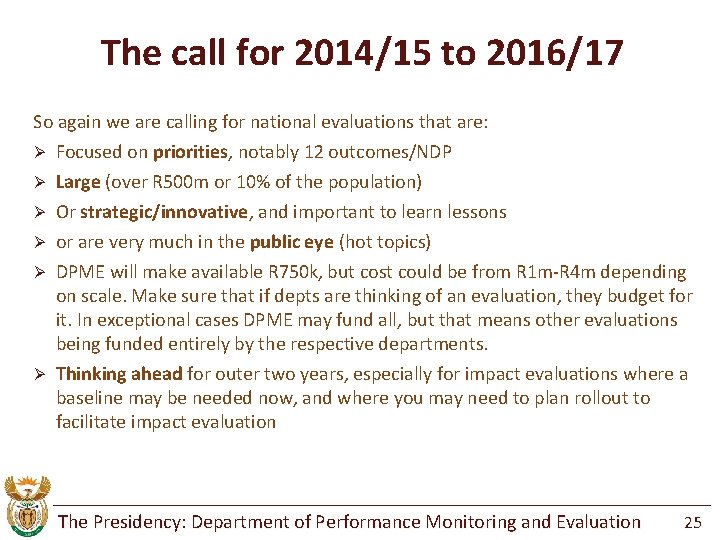

The call for 2014/15 to 2016/17

So again we are calling for national evaluations that are:

- Focused on priorities, notably the 12 outcomes/NDP
- Large (over R500 m or 10% of the population)
- Or strategic/innovative, and important to learn lessons from, or very much in the public eye (hot topics)

DPME will make available R750 k, but the cost could be from R1 m to R4 m depending on scale. Make sure that if departments are thinking of an evaluation, they budget for it. In exceptional cases DPME may fund all, but that means other evaluations being funded entirely by the respective departments.

Think ahead for the outer two years, especially for impact evaluations where a baseline may be needed now, and where you may need to plan rollout to facilitate impact evaluation.
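As a rough illustration of the criteria and funding arithmetic in this call, the sketch below encodes one possible reading: a proposal qualifies if it is focused on priorities and is either large or strategic. The thresholds (R500 m, 10%, R750 k) come from the slide; the `Proposal` record, its field names and the two helper functions are hypothetical and are not part of any DPME system.

```python
from dataclasses import dataclass

# Figures quoted in the call; all names below are illustrative assumptions.
LARGE_BUDGET_RAND = 500_000_000    # "over R500 m"
LARGE_POPULATION_SHARE = 0.10      # "10% of the population"
DPME_CONTRIBUTION_RAND = 750_000   # "DPME will make available R750 k"


@dataclass
class Proposal:
    """Hypothetical record describing a proposed evaluation."""
    name: str
    programme_budget_rand: float
    population_share: float          # fraction of the population covered, 0 to 1
    is_priority: bool                # linked to the 12 outcomes / NDP
    is_strategic_or_hot_topic: bool  # strategic, innovative or in the public eye
    evaluation_cost_rand: float


def meets_call(p: Proposal) -> bool:
    """One reading of the call: priority focus plus either scale or strategic importance."""
    is_large = (
        p.programme_budget_rand > LARGE_BUDGET_RAND
        or p.population_share > LARGE_POPULATION_SHARE
    )
    return p.is_priority and (is_large or p.is_strategic_or_hot_topic)


def department_share(p: Proposal) -> float:
    """Amount the department would budget if DPME pays only its standard contribution."""
    return max(0.0, p.evaluation_cost_rand - DPME_CONTRIBUTION_RAND)
```

Under these assumptions, an evaluation costing R2 m would leave the department to budget R1.25 m after the R750 k DPME contribution.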

5. Implications for Portfolio Committees

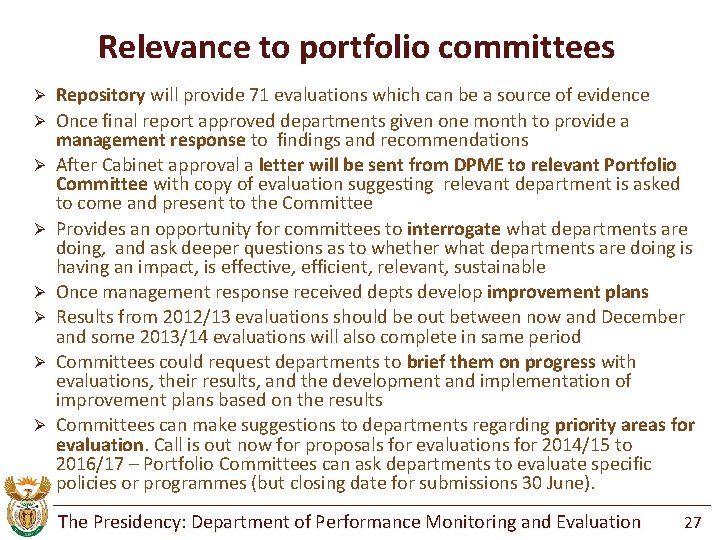

Relevance to portfolio committees

- Repository will provide 71 evaluations which can be a source of evidence
- Once the final report is approved, departments are given one month to provide a management response to findings and recommendations
- After Cabinet approval, a letter will be sent from DPME to the relevant Portfolio Committee with a copy of the evaluation, suggesting that the relevant department is asked to come and present to the Committee
- Provides an opportunity for committees to interrogate what departments are doing, and ask deeper questions as to whether what departments are doing is having an impact, is effective, efficient, relevant, sustainable
- Once the management response is received, departments develop improvement plans
- Results from 2012/13 evaluations should be out between now and December, and some 2013/14 evaluations will also complete in the same period
- Committees could request departments to brief them on progress with evaluations, their results, and the development and implementation of improvement plans based on the results
- Committees can make suggestions to departments regarding priority areas for evaluation. Call is out now for proposals for evaluations for 2014/15 to 2016/17. Portfolio Committees can ask departments to evaluate specific policies or programmes (but closing date for submissions is 30 June).

Conclusions (1)

- Interest is growing: more departments getting involved, more provinces, and more types of evaluation
- Development of design evaluation will potentially have a very big impact, and will build capacity in departments to undertake them
- The story is travelling and SA is now being quoted around the world
- A challenge may emerge now as the evaluation reports start being finalised and the focus shifts to improvement plans: close monitoring of the development and implementation of improvement plans is needed to ensure that evaluations add value
- Parliament could play a key oversight role in this regard: committees could request departments to present the evaluation results to them, to present improvement plans to them, and to present progress reports against the improvement plans to them

Conclusions (2)

- Evaluations provide a very important tool for portfolio committees to get an in-depth look at how policies and programmes are performing, and how they need to change.
- It is important for improving performance that committees do use this information, so that departments are accountable: not to punish them, but to ensure they are problem-solving and improving the effectiveness and impact of their work, and not wasting public funds.
- Where Portfolio Committees have concerns about existing or new policies or programmes, they can ask departments to undertake rigorous independent evaluations, either historical ones or effective diagnostic evaluations prior to a new programme or policy (closing date for 2014/15 is 30 June).
- DPME will ensure committees are informed of all evaluations being undertaken and will report regularly to the Chairs.