The Performance of Spin Lock Alternatives for Shared-Memory Multiprocessors

- Slides: 22

The Performance of Spin Lock Alternatives for Shared-Memory Multiprocessors, by Thomas E. Anderson. Presented by David Woodard.

Introduction

- Shared Memory Multiprocessors
  - Need to protect shared data structures (critical sections)
  - Often share resources, including ports to memory (bus, network, etc.)
  - Challenge: efficiently implement scalable, low-latency mechanisms to protect shared data

Introduction

- Spin Locks
  - One approach to protecting shared data on multiprocessors
  - Efficient on some systems, but greatly degrades performance on others

Multiprocessor Architecture

- Paper focuses on two dimensions for design
  - Interconnect type (bus/multistage network)
  - Cache coherence strategy
- Six Proposed Models
  - Bus: no cache coherence
  - Bus: snoopy write-through invalidation cache coherence
  - Bus: snoopy write-back invalidation cache coherence
  - Bus: snoopy distributed-write cache coherence
  - Multistage network: no cache coherence
  - Multistage network: invalidation-based cache coherence

Mutual Exclusion and Atomic Operations

- Most processors support atomic read-modify-write operations
- Test and Set
  - Load the (old) value of the lock
  - Store TRUE in the lock
- If the loaded value is false, continue; otherwise keep trying until the lock is free (spin lock)
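
The test-and-set loop described on this slide can be sketched with C11 atomics. This is a minimal illustration, not code from the paper: the names `tas_lock`, `tas_test_and_set`, `tas_acquire`, `tas_release`, and `tas_demo` are hypothetical, and `atomic_exchange` stands in for whatever test-and-set instruction a given machine provides.

```c
#include <assert.h>
#include <stdatomic.h>
#include <stdbool.h>

/* Hypothetical lock type: false = free, true = held. */
typedef struct { atomic_bool held; } tas_lock;

/* One test-and-set: atomically store TRUE and return the old value. */
static bool tas_test_and_set(tas_lock *l) {
    return atomic_exchange_explicit(&l->held, true, memory_order_acquire);
}

/* Spin until the loaded (old) value is false, i.e. the lock was free. */
static void tas_acquire(tas_lock *l) {
    while (tas_test_and_set(l)) {
        /* every retry is another atomic operation on the shared location */
    }
}

static void tas_release(tas_lock *l) {
    atomic_store_explicit(&l->held, false, memory_order_release);
}

/* Single-threaded walk-through of the state changes; returns 1 on success. */
static int tas_demo(void) {
    tas_lock l;
    atomic_init(&l.held, false);

    tas_acquire(&l);                           /* lock was free: no spinning */
    bool old_while_held = tas_test_and_set(&l);
    tas_release(&l);
    bool old_after_release = tas_test_and_set(&l);
    tas_release(&l);

    assert(old_while_held);      /* old value TRUE: lock was already held */
    assert(!old_after_release);  /* old value FALSE: lock had been freed */
    return 1;
}
```

Note that every pass through the loop in `tas_acquire` issues a full atomic operation on the shared location, whether or not the lock is free.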

Test and Set in a Spin Lock

- Advantages
  - Quickly gain access to the lock when available
  - Works well on systems with few processors or low contention
- Disadvantages
  - Slows down other processors (including the processor holding the lock!)
  - Shared resources are also used to carry out the test-and-set instructions
  - More complex algorithms that reduce the burden on resources increase the latency of acquiring the lock

Spin on Read

- Intended for processors with per-CPU coherent caches
  - Each CPU can spin testing the value of the lock in its own cache
  - If free, then send a test-and-set (TSL) transaction
- Problem
  - The nature of cache coherence protocols slows the process down
  - More pronounced in systems with invalidation-based policies
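
A rough sketch of spin on read in C11 follows. The names (`ttas_lock`, `ttas_acquire`, `ttas_release`, `ttas_demo`) are hypothetical and the memory orderings are one reasonable choice, not something the slides specify.

```c
#include <assert.h>
#include <stdatomic.h>
#include <stdbool.h>

/* Hypothetical lock type: false = free, true = held. */
typedef struct { atomic_bool held; } ttas_lock;

static void ttas_acquire(ttas_lock *l) {
    for (;;) {
        /* Spin on read: plain loads can be served from the local cache. */
        while (atomic_load_explicit(&l->held, memory_order_relaxed)) {
        }
        /* Lock looked free: only now issue the test-and-set transaction. */
        if (!atomic_exchange_explicit(&l->held, true, memory_order_acquire)) {
            return;
        }
        /* Another CPU won the race; go back to reading. */
    }
}

static void ttas_release(ttas_lock *l) {
    atomic_store_explicit(&l->held, false, memory_order_release);
}

/* Single-threaded check of the lock state; returns 1 on success. */
static int ttas_demo(void) {
    ttas_lock l;
    atomic_init(&l.held, false);

    ttas_acquire(&l);
    bool held_inside = atomic_load(&l.held);
    ttas_release(&l);
    bool held_after = atomic_load(&l.held);

    assert(held_inside && !held_after);
    return held_inside && !held_after;
}
```

The inner `while` loop generates no bus traffic while the cached copy stays valid; the cost appears at release time, when every waiter's copy is invalidated at once.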

Why Quiescence is Slow for Spin on Read

- When the lock is released its value is modified, hence all cached copies of it are invalidated
- Subsequent reads on all processors miss in cache, hence generating bus contention
- Many see the lock free at the same time because there is a delay in satisfying the cache miss of the one that will eventually succeed in getting the lock next
- Many attempt to set it using TSL
- Each attempt generates contention and invalidates all copies
- All but one attempt fails, causing the CPU to revert to reading
- The first read misses in the cache!
- By the time all this is over, the critical section has completed and the lock has been freed again!

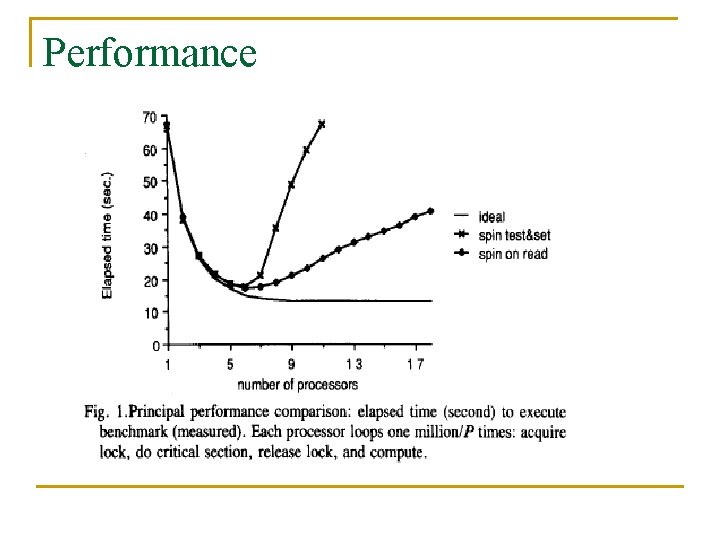

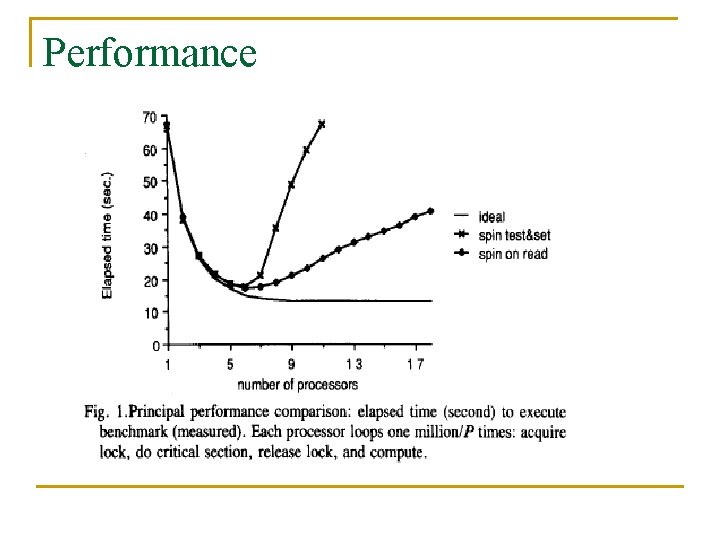

Performance

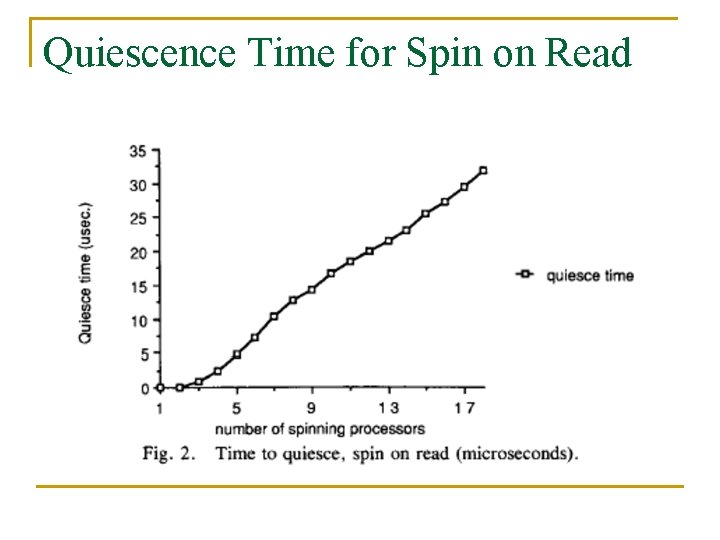

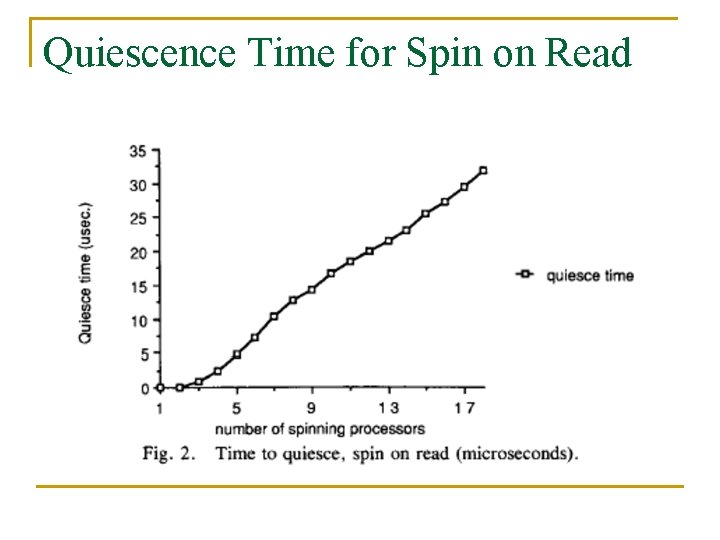

Quiescence Time for Spin on Read

Proposed Software Solutions

- Based on CSMA (Carrier Sense Multiple Access)
  - Basic idea: adjust the length of time between attempts to access the shared resource
  - Set the delay dynamically or statically?
- When to delay?
  - After Spin on Read returns true, delay before setting
  - After every memory access
    - Better on models where Spin on Read generates contention

Proposed Software Solutions

- Delay on attempted set
  - Reduces the number of TSLs, thereby reducing contention
  - Works well when the delay is short and there is little contention OR when the delay is long and there is a lot of contention
- Delay on every memory access
  - Works well on systems without per-CPU caches
    - Reduces the number of memory accesses, thereby reducing the number of read instructions

Length of Delay - Static

- Advantages
  - Each processor is given its own “slot”; this makes it easy to assign priority to CPUs
  - Few empty slots = good latency; few crowded slots = little contention
- Disadvantages
  - Doesn’t adjust to environments prone to bursts
  - Processors with the same delay that conflict will always conflict

Length of Delay - Dynamic

- Advantages
  - Adjusts to evolving environments; increases delay time after each conflict (up to a ceiling)
- Disadvantages
  - What criteria determine the amount of backoff?
  - Long critical sections could keep increasing delay in some CPUs
    - Bound maximum delay: what if the bound is too high? Too low?
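
One way to picture a dynamic delay is the sketch below, which backs off after each failed test-and-set. Several things here are assumptions rather than details from the slides: the doubling policy, the bounds `BACKOFF_MIN` and `BACKOFF_MAX` (counted in empty loop iterations), the placement of the delay after a failed set, and all function names.

```c
#include <assert.h>
#include <stdatomic.h>
#include <stdbool.h>

/* Assumed bounds, measured in empty loop iterations. */
enum { BACKOFF_MIN = 1, BACKOFF_MAX = 1024 };

typedef struct { atomic_bool held; } backoff_lock;

/* Assumed policy: double the delay after a conflict, capped at the ceiling. */
static unsigned backoff_next(unsigned delay, unsigned ceiling) {
    return (delay > ceiling / 2) ? ceiling : delay * 2;
}

/* Busy-wait locally without touching shared memory. */
static void backoff_pause(unsigned iterations) {
    for (volatile unsigned i = 0; i < iterations; i++) {
    }
}

/* Acquires the lock; returns the number of failed set attempts (conflicts). */
static unsigned backoff_acquire(backoff_lock *l) {
    unsigned delay = BACKOFF_MIN;
    unsigned conflicts = 0;
    for (;;) {
        /* Spin on read until the lock looks free. */
        while (atomic_load_explicit(&l->held, memory_order_relaxed)) {
        }
        if (!atomic_exchange_explicit(&l->held, true, memory_order_acquire)) {
            return conflicts;
        }
        /* Conflict: wait, then lengthen the delay for the next conflict. */
        conflicts++;
        backoff_pause(delay);
        delay = backoff_next(delay, BACKOFF_MAX);
    }
}

static void backoff_release(backoff_lock *l) {
    atomic_store_explicit(&l->held, false, memory_order_release);
}

/* Uncontended acquire in a single thread: expect zero conflicts. */
static unsigned backoff_demo(void) {
    backoff_lock l;
    atomic_init(&l.held, false);
    unsigned conflicts = backoff_acquire(&l);
    backoff_release(&l);
    return conflicts;
}
```

The ceiling in `backoff_next` is the bound the slide asks about: set too high, a waiter may sleep long past the release; set too low, contention returns under load.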

Proposed Software Solution: Queuing

- Flag Based Approach
  - As a CPU waits, add it to the queue
  - Waiting CPUs spin on the flag of the processor ahead of them in the queue (different for each CPU)
    - No bus or cache contention
  - Queue insertion and deletion require locks
    - Not useful for small critical sections (such as queue operations!)

Proposed Software Solution: Queuing

- Counter Based Approach
  - Each CPU does an atomic read and increment to acquire a unique sequence number
  - When a processor releases a lock it signals the processor with the next successive sequence number
    - Sets a flag in a different cache block unique to the waiting processor
    - Processor spinning on its own flag sees the change and continues (incurs invalidation and read miss cycles)
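
The counter-based approach can be sketched as an array of per-waiter flags plus a shared counter. This is an illustrative sketch under stated assumptions, not the paper's code: `NSLOTS` is assumed to be at least the number of competing CPUs (and a power of two, so the counter can wrap safely), `CACHE_LINE` is an assumed cache block size, and all names are hypothetical.

```c
#include <assert.h>
#include <stdatomic.h>
#include <stdbool.h>

enum { NSLOTS = 8 };       /* assumed: >= number of CPUs, power of two */
enum { CACHE_LINE = 64 };  /* assumed cache block size in bytes */

/* Each flag is aligned so that it sits in its own cache block. */
typedef struct {
    _Alignas(CACHE_LINE) atomic_bool has_lock;
} queue_slot;

typedef struct {
    queue_slot slots[NSLOTS];
    atomic_uint next_ticket;
} queue_lock;

static void queue_init(queue_lock *q) {
    for (int i = 0; i < NSLOTS; i++) {
        /* Slot 0 starts out owning the lock; all others must wait. */
        atomic_init(&q->slots[i].has_lock, i == 0);
    }
    atomic_init(&q->next_ticket, 0);
}

/* Returns the caller's slot index, which must be passed to queue_release. */
static unsigned queue_acquire(queue_lock *q) {
    /* Atomic read-and-increment hands out a unique sequence number. */
    unsigned me = atomic_fetch_add_explicit(&q->next_ticket, 1,
                                            memory_order_relaxed) % NSLOTS;
    /* Spin only on our own flag, in our own cache block. */
    while (!atomic_load_explicit(&q->slots[me].has_lock, memory_order_acquire)) {
    }
    /* Zero our flag so the slot can be reused after the counter wraps. */
    atomic_store_explicit(&q->slots[me].has_lock, false, memory_order_relaxed);
    return me;
}

static void queue_release(queue_lock *q, unsigned me) {
    /* Signal the processor holding the next sequence number. */
    atomic_store_explicit(&q->slots[(me + 1) % NSLOTS].has_lock, true,
                          memory_order_release);
}

/* Two back-to-back acquisitions in one thread take slots 0 and 1. */
static int queue_demo(void) {
    queue_lock q;
    queue_init(&q);
    unsigned a = queue_acquire(&q);
    queue_release(&q, a);
    unsigned b = queue_acquire(&q);
    queue_release(&q, b);
    assert(a == 0 && b == 1);
    return (int)(a * 10 + b);
}
```

Compared with a plain test-and-set, the uncontended path here does more work (increment the counter, check a flag, zero it, set the next flag), which is the latency cost this approach trades for a contention-free hand-off.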

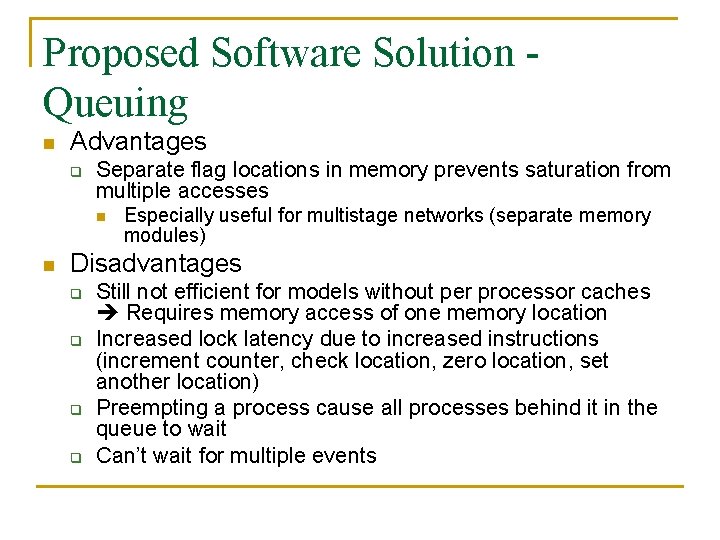

Proposed Software Solution: Queuing

- Advantages
  - Separate flag locations in memory prevent saturation from multiple accesses
    - Especially useful for multistage networks (separate memory modules)
- Disadvantages
  - Still not efficient for models without per-processor caches
    - Requires memory access of one memory location
  - Increased lock latency due to increased instructions (increment counter, check location, zero location, set another location)
  - Preempting a process causes all processes behind it in the queue to wait
  - Can’t wait for multiple events

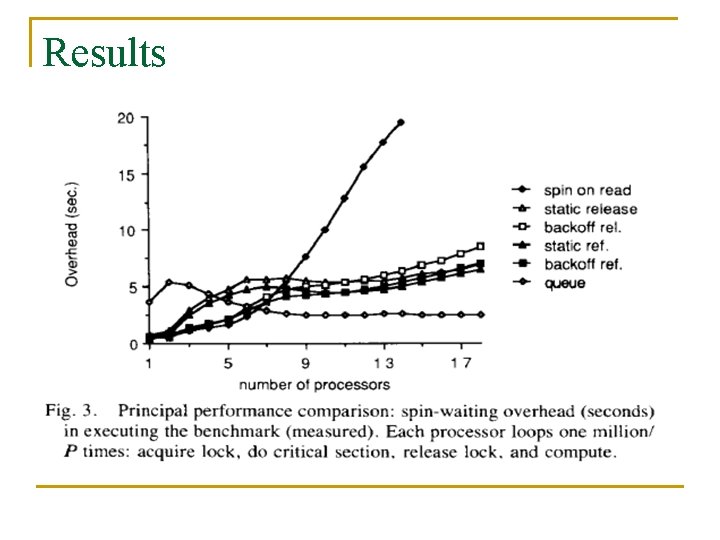

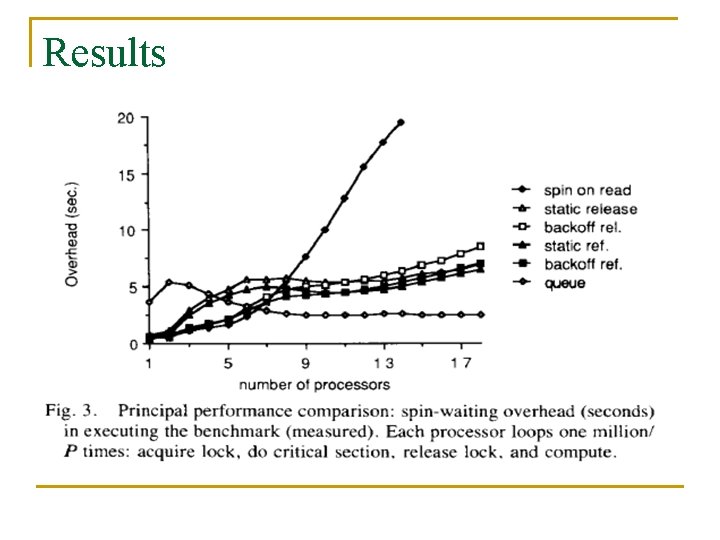

Results

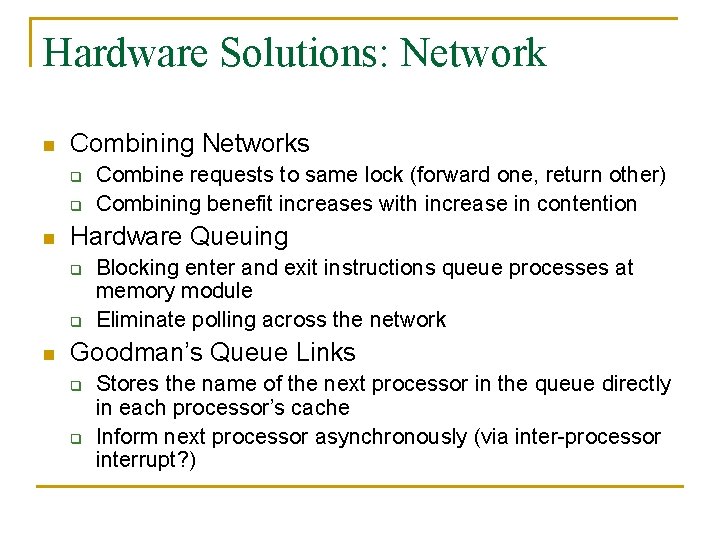

Hardware Solutions: Network

- Combining Networks
  - Combine requests to same lock (forward one, return other)
  - Combining benefit increases with increase in contention
- Hardware Queuing
  - Blocking enter and exit instructions queue processes at memory module
  - Eliminate polling across the network
- Goodman’s Queue Links
  - Stores the name of the next processor in the queue directly in each processor’s cache
  - Inform next processor asynchronously (via inter-processor interrupt?)

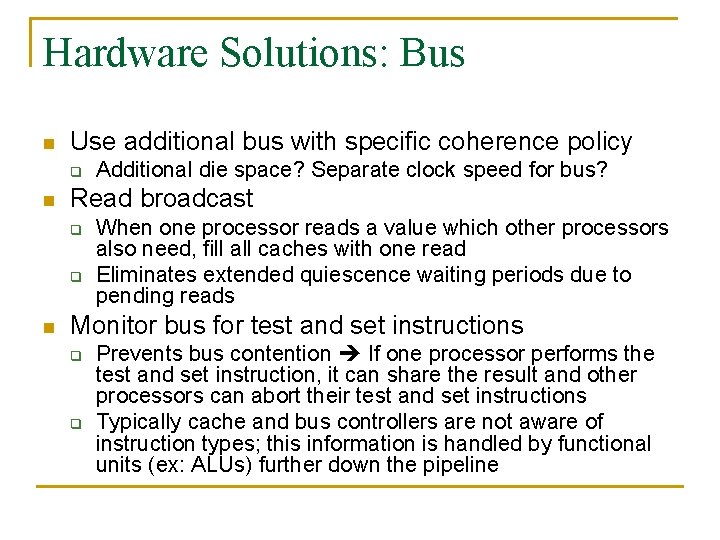

Hardware Solutions: Bus

- Use additional bus with specific coherence policy
  - Additional die space? Separate clock speed for bus?
- Read broadcast
  - When one processor reads a value which other processors also need, fill all caches with one read
  - Eliminates extended quiescence waiting periods due to pending reads
- Monitor bus for test and set instructions
  - Prevents bus contention
  - If one processor performs the test and set instruction, it can share the result and other processors can abort their test and set instructions
  - Typically cache and bus controllers are not aware of instruction types; this information is handled by functional units (e.g., ALUs) further down the pipeline

Conclusions

- Traditional spin lock approaches are not effective for large numbers of processors
- When contention is low, models borrowed from CSMA work well
  - Delay slots
- When contention is high, queuing methods work well
  - Trades lock latency for more efficient/parallelized lock hand-off
- Hardware approaches are very promising, but require additional logic
  - Additional cost in die size and money to manufacture

Resources

- Dr. Jonathan Walpole: http://web.cecs.pdx.edu/~walpole/class/cs533/winter2008/home.html
- Emma Kuo: http://web.cecs.pdx.edu/~walpole/class/cs533/winter2007/slides/42.pdf