The Open Science Grid

- Slides: 45


The Vision

Practical support for end-to-end community systems in a heterogeneous global environment, to transform compute- and data-intensive science through a national cyberinfrastructure that includes organizations from the smallest to the largest.

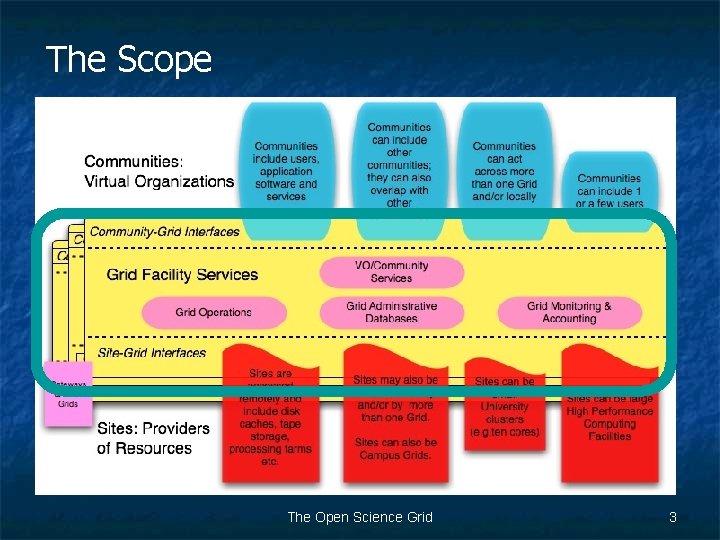

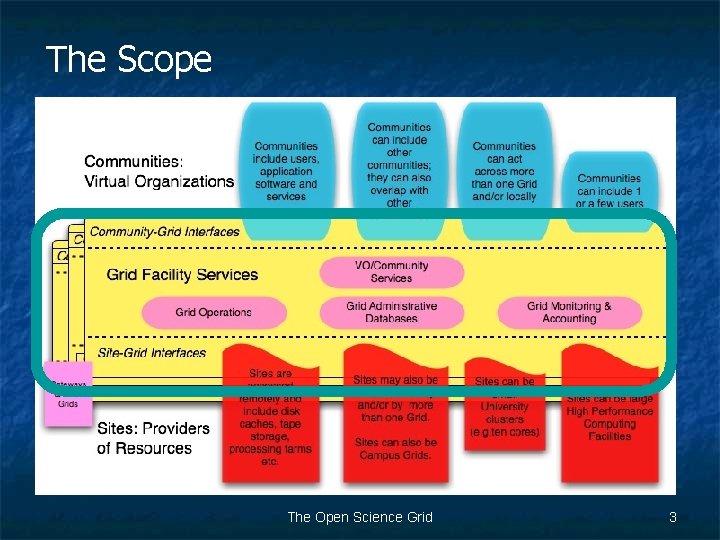

The Scope

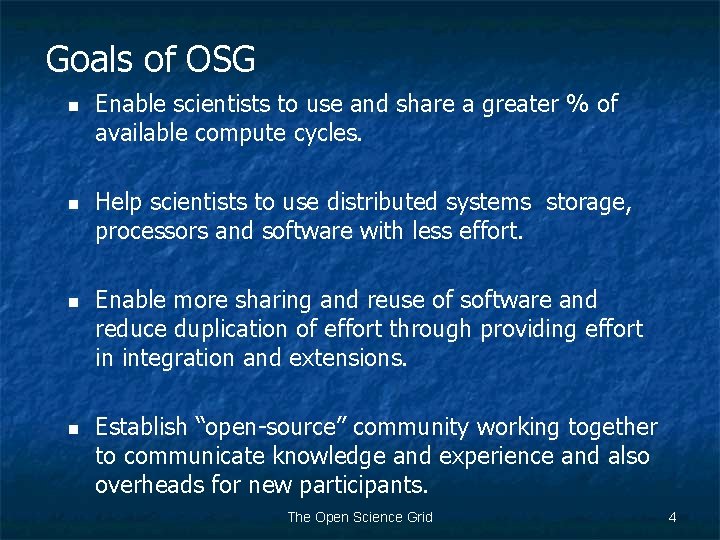

Goals of OSG

- Enable scientists to use and share a greater percentage of available compute cycles.
- Help scientists use distributed systems (storage, processors and software) with less effort.
- Enable more sharing and reuse of software, and reduce duplication of effort, by providing effort in integration and extensions.
- Establish an “open-source” community working together to communicate knowledge and experience, and to lower the overheads for new participants.

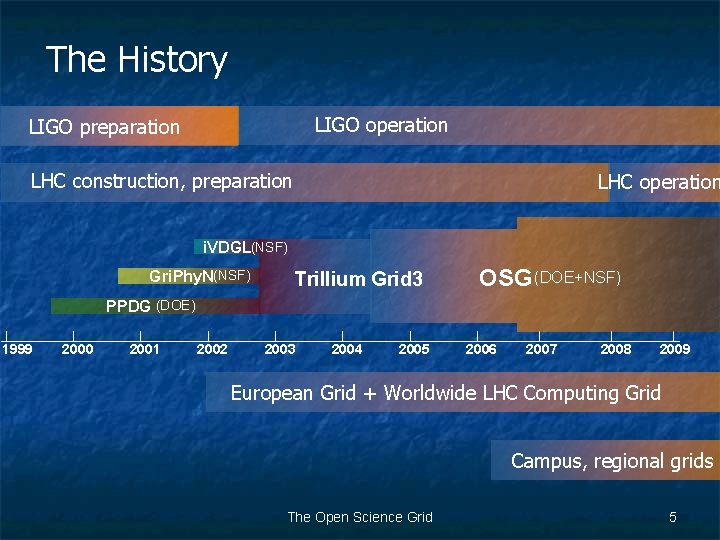

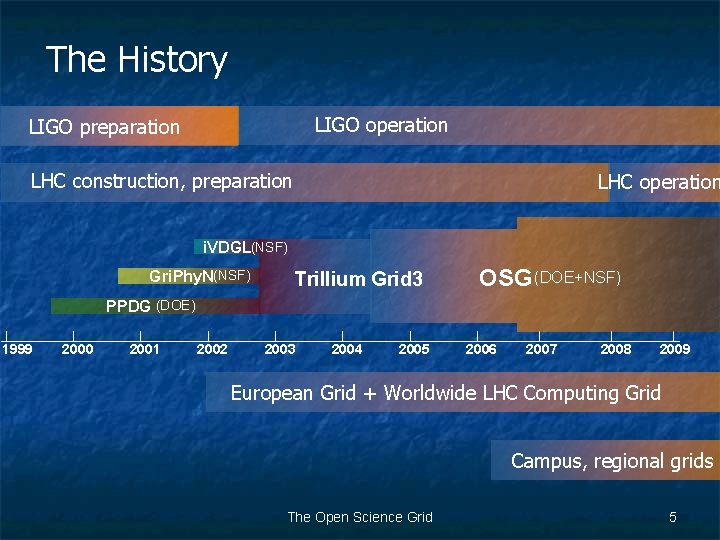

The History

Timeline figure (1999 to 2009): PPDG (DOE), GriPhyN (NSF), iVDGL (NSF), Trillium, Grid3 and OSG (DOE+NSF), shown alongside LIGO preparation and operation, LHC construction, preparation and operation, the European Grid + Worldwide LHC Computing Grid, and campus and regional grids.

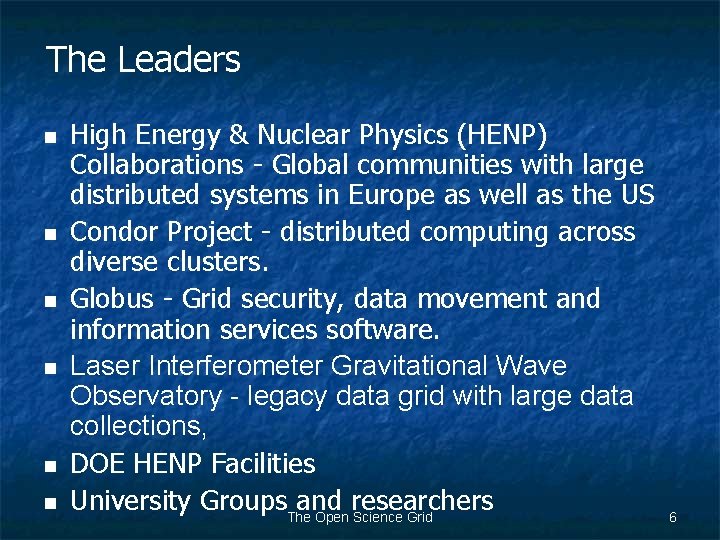

The Leaders

- High Energy & Nuclear Physics (HENP) collaborations: global communities with large distributed systems in Europe as well as the US.
- Condor Project: distributed computing across diverse clusters.
- Globus: Grid security, data movement and information services software.
- Laser Interferometer Gravitational Wave Observatory (LIGO): legacy data grid with large data collections.
- DOE HENP facilities.
- University groups and researchers.

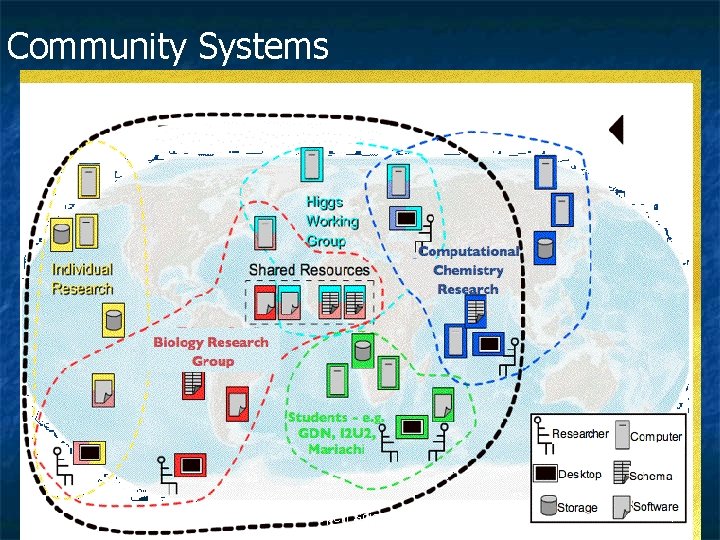

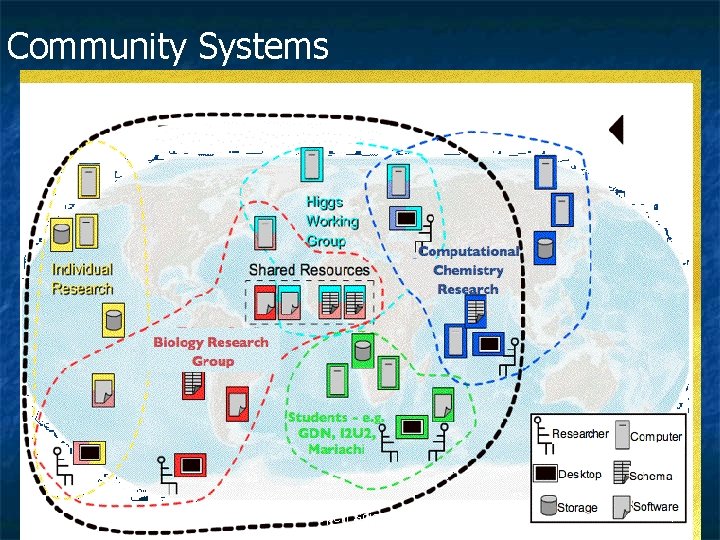

Community Systems

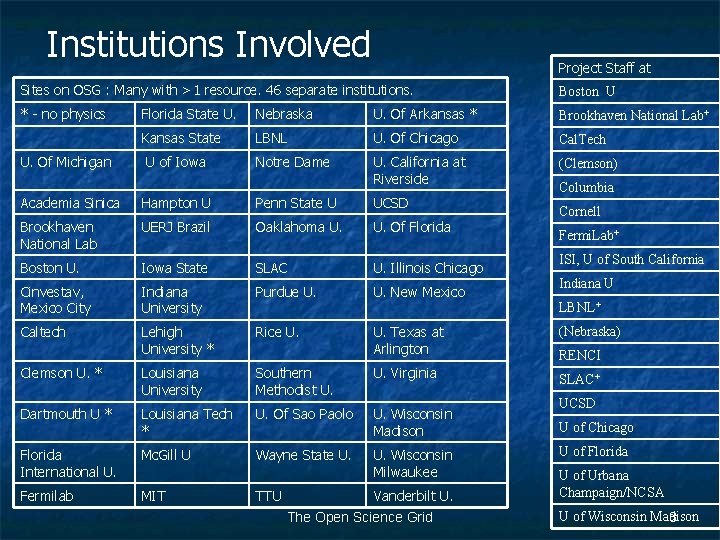

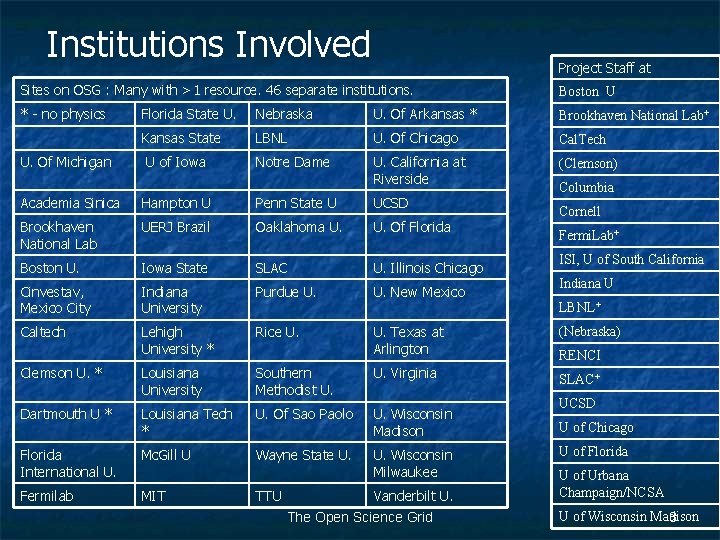

Institutions Involved

Project staff at, and sites on, OSG: many sites have more than one resource; 46 separate institutions. (* = no physics.)

Boston U *; Florida State U.; Nebraska; U. of Arkansas *; Brookhaven National Lab+; Kansas State; LBNL; U. of Chicago; Caltech; U. of Iowa; Notre Dame; U. California at Riverside; (Clemson); Academia Sinica; Hampton U.; Penn State U.; UCSD; Brookhaven National Lab; UERJ Brazil; Oklahoma U.; U. of Florida; Boston U.; Iowa State; SLAC; U. Illinois Chicago; ISI, U. of Southern California; Cinvestav, Mexico City; Indiana University; Purdue U.; New Mexico; Indiana U.; Caltech; Lehigh University *; Rice U.; Clemson U. *; Louisiana University; Southern Methodist U.; Virginia; Dartmouth U. *; Louisiana Tech *; U. of Sao Paulo; U. Wisconsin Madison; Florida International U.; McGill U.; Wayne State U.; Wisconsin Milwaukee; Fermilab; MIT; U. of Michigan; Columbia; Cornell; Fermilab+; LBNL+; TTU; U. Texas at Arlington; Vanderbilt U.; (Nebraska); RENCI; SLAC+; UCSD; U. of Chicago; U. of Florida; U. of Illinois Urbana-Champaign/NCSA; U. of Wisconsin Madison.

Monitoring the Sites

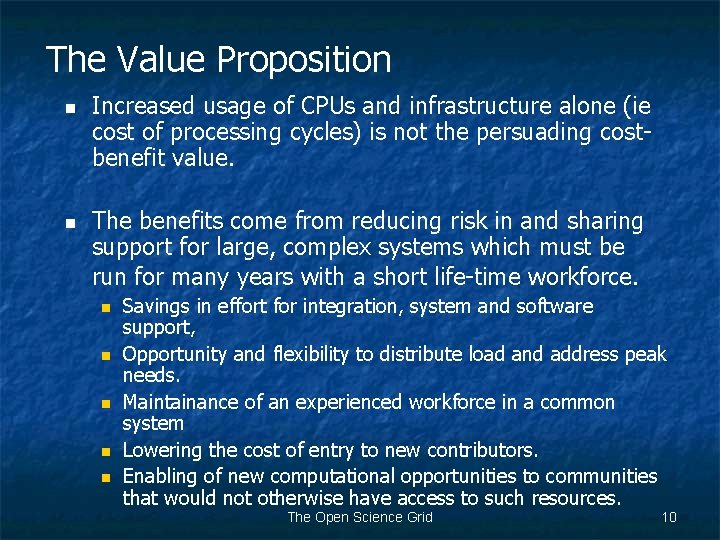

The Value Proposition

- Increased usage of CPUs and infrastructure alone (i.e. the cost of processing cycles) is not the persuasive cost-benefit argument.
- The benefits come from reducing risk in, and sharing support for, large, complex systems that must be run for many years with a short-lifetime workforce:
  - savings in effort for integration, system and software support;
  - opportunity and flexibility to distribute load and address peak needs;
  - maintenance of an experienced workforce in a common system;
  - lowering the cost of entry for new contributors;
  - enabling new computational opportunities for communities that would not otherwise have access to such resources.

OSG Does

- Release, deploy and support software.
- Integrate and test new software at the system level.
- Support operations and Grid-wide services.
- Provide security operations and policy.
- Troubleshoot end-to-end user and system problems.
- Engage and help new communities.
- Extend capability and scale.

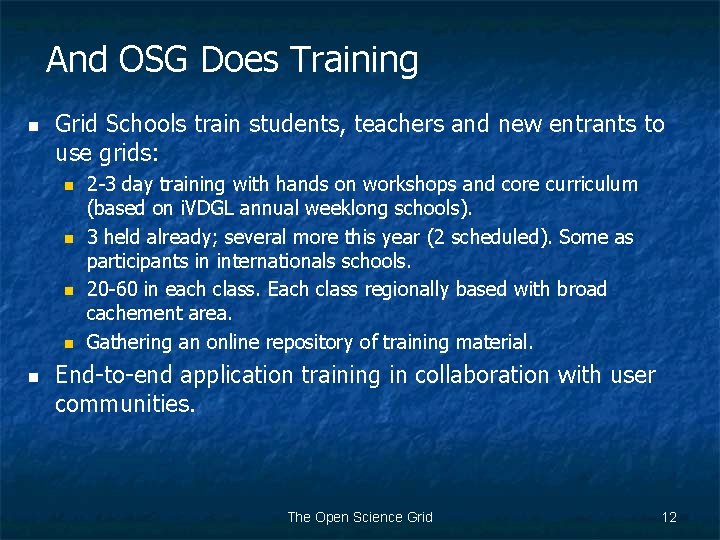

And OSG Does Training

- Grid Schools train students, teachers and new entrants to use grids:
  - 2-3 day training with hands-on workshops and a core curriculum (based on the iVDGL annual week-long schools);
  - 3 held already and several more this year (2 scheduled), some as participants in international schools;
  - 20-60 people in each class; each class is regionally based with a broad catchment area.
- Gathering an online repository of training material.
- End-to-end application training in collaboration with user communities.

Participants in a recent Open Science Grid workshop held in Argentina. Image courtesy of Carolina Leon Carri, University of Buenos Aires.

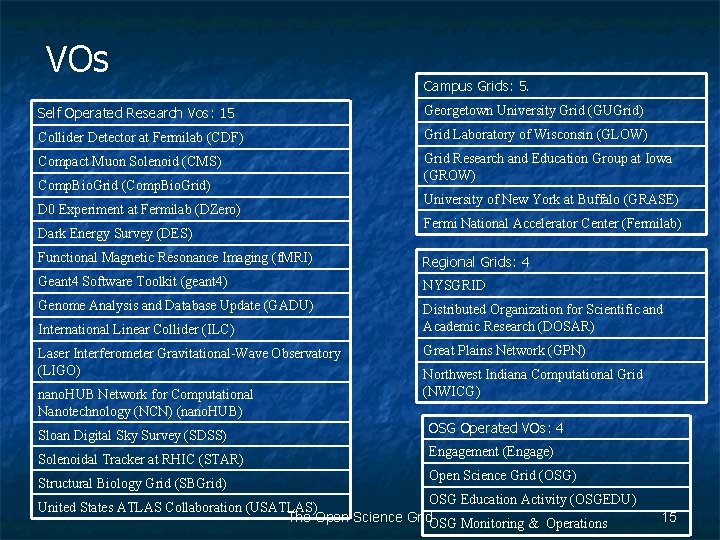

Virtual Organizations

- A Virtual Organization (VO) is a collection of people (VO members).
- A VO has responsibilities to manage its members and the services it runs on their behalf.
- A VO may own resources and be prepared to share their use.

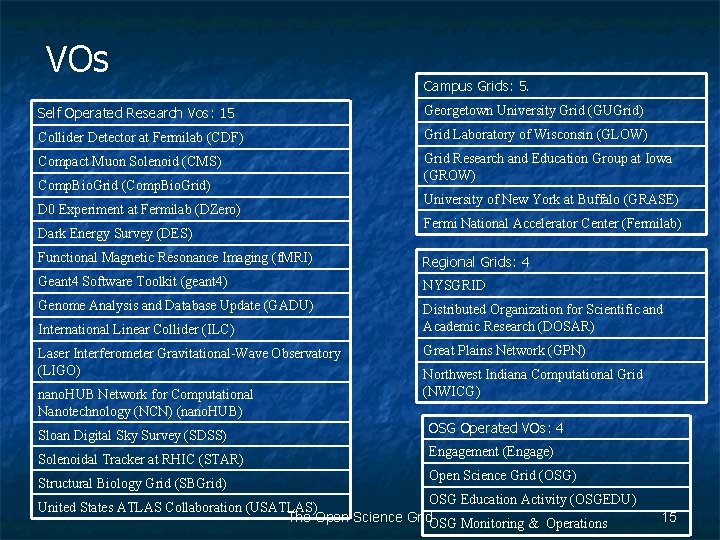

VOs

- Campus Grids (5): Georgetown University Grid (GUGrid); Grid Laboratory of Wisconsin (GLOW); Grid Research and Education Group at Iowa (GROW); University of New York at Buffalo (GRASE); Fermi National Accelerator Laboratory (Fermilab).
- Self-Operated Research VOs (15): Collider Detector at Fermilab (CDF); Compact Muon Solenoid (CMS); Computational Biology Grid (CompBioGrid); D0 Experiment at Fermilab (DZero); Dark Energy Survey (DES); Functional Magnetic Resonance Imaging (fMRI); Geant4 Software Toolkit (geant4); Genome Analysis and Database Update (GADU); International Linear Collider (ILC); Laser Interferometer Gravitational-Wave Observatory (LIGO); nanoHUB Network for Computational Nanotechnology (NCN) (nanoHUB); Sloan Digital Sky Survey (SDSS); Solenoidal Tracker at RHIC (STAR); Structural Biology Grid (SBGrid); United States ATLAS Collaboration (USATLAS).
- Regional Grids (4): NYSGRID; Distributed Organization for Scientific and Academic Research (DOSAR); Great Plains Network (GPN); Northwest Indiana Computational Grid (NWICG).
- OSG-Operated VOs (4): Engagement (Engage); Open Science Grid (OSG); OSG Education Activity (OSGEDU); OSG Monitoring & Operations.

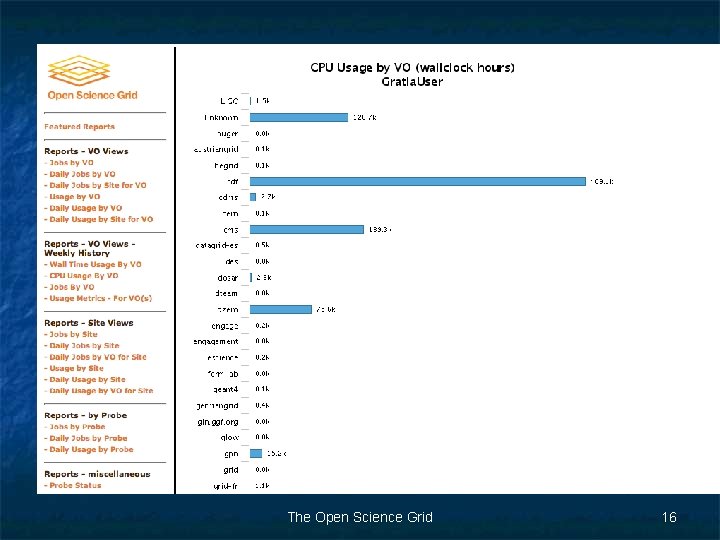

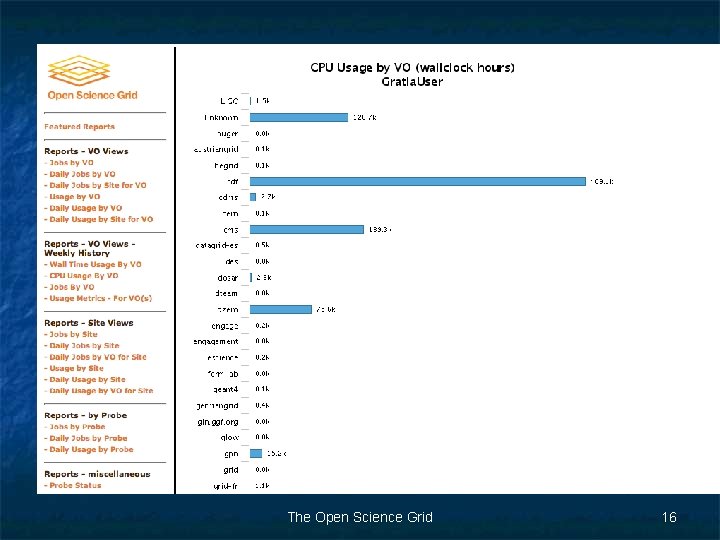


Sites

- A Site is a collection of commonly administered computing and/or storage resources and services.
- Resources can be owned by, and shared among, VOs.

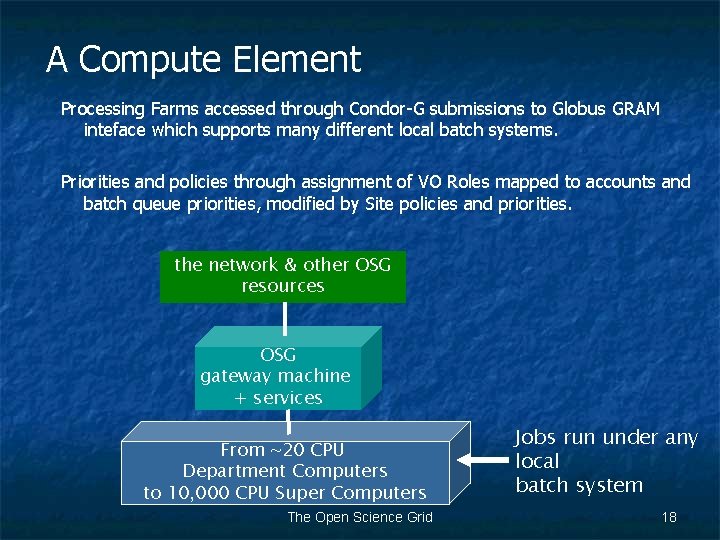

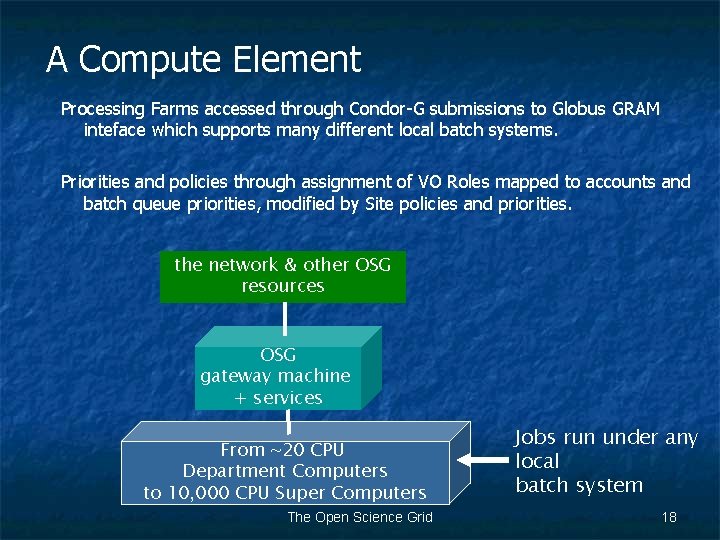

A Compute Element

- Processing farms are accessed through Condor-G submissions to the Globus GRAM interface, which supports many different local batch systems.
- Priorities and policies are set by assigning VO roles that map to accounts and batch-queue priorities, modified by site policies and priorities.

Diagram: the network and other OSG resources connect to an OSG gateway machine plus services; behind it, jobs run under any local batch system, on anything from ~20-CPU department computers to 10,000-CPU supercomputers.
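The role-to-priority mapping on a compute element can be illustrated with a small lookup. This is a minimal sketch, not OSG's actual mapping service: the VO names, roles, local account names and priority numbers are all hypothetical, and the fallback from an unknown role to the VO's default mapping is an assumed policy.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical mapping table: (VO, role) -> (local account, base batch priority).
# A role of None is the VO's default entry. All values are illustrative only.
ROLE_MAP = {
    ("cms", "production"): ("cmsprod", 100),
    ("cms", None): ("cmsuser", 50),
    ("dzero", None): ("d0user", 50),
}

# Hypothetical site policy: a per-VO adjustment applied on top of the base priority.
SITE_ADJUSTMENT = {"cms": 10, "dzero": -20}


@dataclass
class Mapping:
    account: str
    priority: int


def map_request(vo: str, role: Optional[str]) -> Mapping:
    """Map a VO role to a local account and batch-queue priority,
    then modify the priority according to site policy."""
    # Unknown roles fall back to the VO's default entry (an assumed policy).
    key = (vo, role) if (vo, role) in ROLE_MAP else (vo, None)
    if key not in ROLE_MAP:
        raise PermissionError(f"site does not support VO {vo!r}")
    account, base = ROLE_MAP[key]
    return Mapping(account, base + SITE_ADJUSTMENT.get(vo, 0))
```

For example, `map_request("cms", "production")` yields the `cmsprod` account with priority 110, while a VO with no entry at all is refused. Keeping the site adjustment separate from the VO mapping mirrors the slide's point that site policy modifies, rather than replaces, the VO's own priorities.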

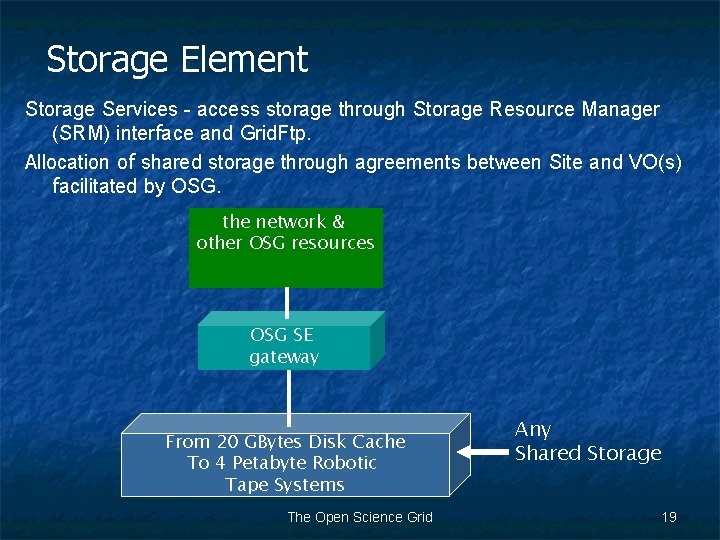

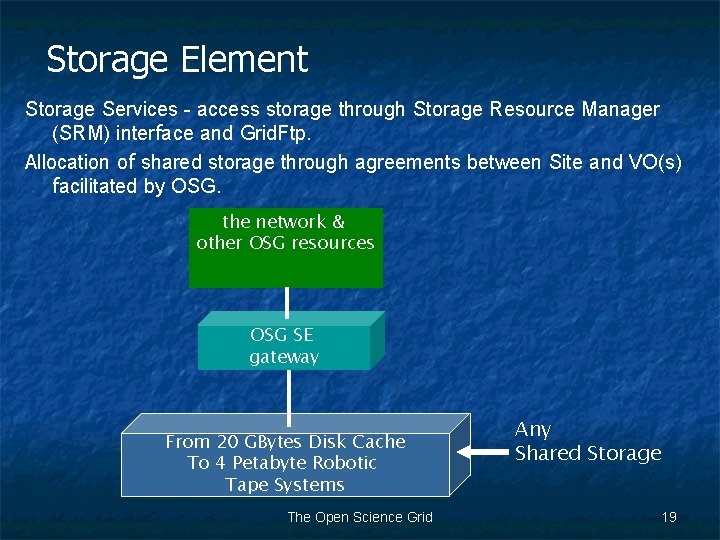

Storage Element

- Storage services: storage is accessed through the Storage Resource Manager (SRM) interface and GridFTP.
- Shared storage is allocated through agreements between the Site and VO(s), facilitated by OSG.

Diagram: the network and other OSG resources connect to an OSG SE gateway in front of any shared storage, from a 20 GByte disk cache to 4-petabyte robotic tape systems.
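Allocating shared storage through Site-VO agreements amounts to quota bookkeeping. The class below is a hypothetical sketch of that idea; it is not the SRM interface. It assumes the site refuses agreements that promise more space than it has, and that writes beyond a VO's agreed quota are rejected.

```python
from collections import defaultdict


class SharedStorage:
    """Hypothetical site storage pool in which each VO's allocation comes
    from an agreement between the site and that VO (sizes in GB)."""

    def __init__(self, capacity_gb: float):
        self.capacity_gb = capacity_gb
        self.agreements = {}           # VO name -> agreed quota in GB
        self.used = defaultdict(float)  # VO name -> GB currently written

    def add_agreement(self, vo: str, quota_gb: float) -> None:
        # Space already promised to other VOs (a VO's old quota is replaced).
        committed = sum(self.agreements.values()) - self.agreements.get(vo, 0.0)
        if committed + quota_gb > self.capacity_gb:
            raise ValueError("agreement exceeds site capacity")
        self.agreements[vo] = quota_gb

    def write(self, vo: str, size_gb: float) -> bool:
        """Accept a write only if the VO has an agreement with room left."""
        quota = self.agreements.get(vo)
        if quota is None or self.used[vo] + size_gb > quota:
            return False
        self.used[vo] += size_gb
        return True
```

Because writes are checked against the agreement rather than the raw free space, one VO filling its share cannot crowd out another VO's committed space.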

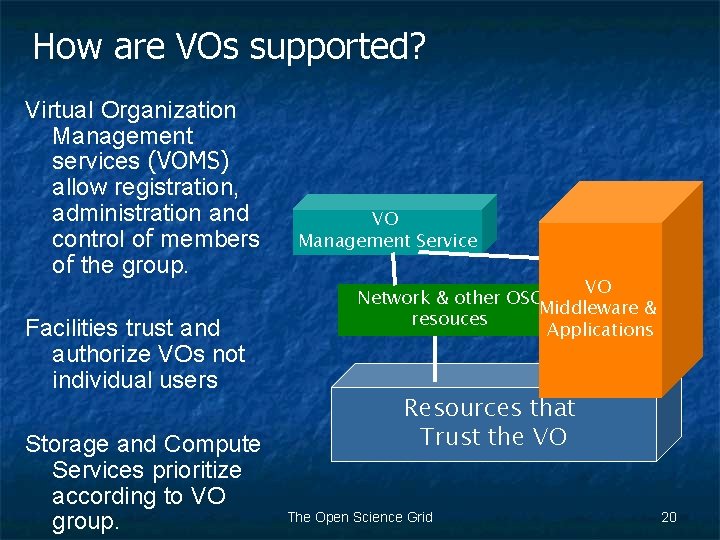

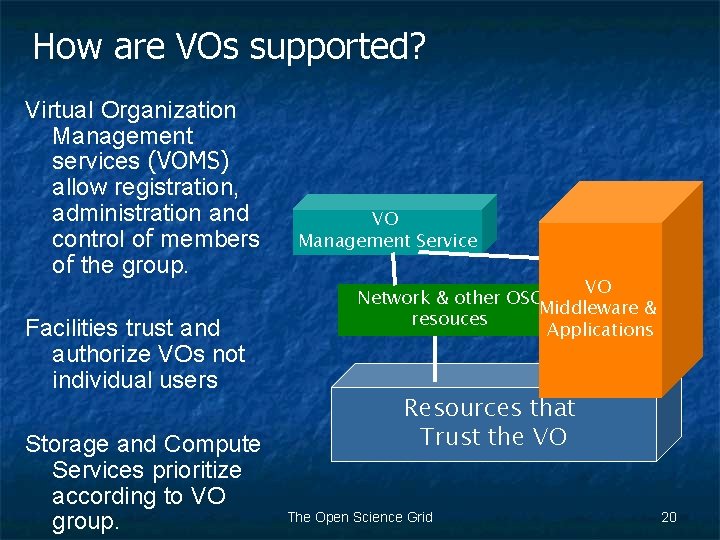

How are VOs supported?

- The Virtual Organization Membership Service (VOMS) allows registration, administration and control of the members of the group.
- Facilities trust and authorize VOs, not individual users.
- Storage and compute services prioritize according to VO group.

Diagram: a VO Management Service; the VO's middleware and applications; the network and other OSG resources; resources that trust the VO.
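Authorizing VOs rather than individual users can be sketched as a decision made only on the VO attribute a job presents. This sketch assumes attributes follow the common VOMS FQAN layout (`/vo/subgroup/Role=.../Capability=...`). The parser is hand-written for illustration and is not a VOMS library call, and the trusted-VO list is hypothetical.

```python
from typing import List, NamedTuple, Optional


class Fqan(NamedTuple):
    vo: str               # first path component, e.g. "cms"
    group: str            # full group path, e.g. "/cms/uscms"
    role: Optional[str]   # None when the role is absent or "NULL"


def parse_fqan(fqan: str) -> Fqan:
    """Parse an attribute shaped like /vo[/subgroup...][/Role=r][/Capability=c]."""
    groups: List[str] = []
    role: Optional[str] = None
    for part in (p for p in fqan.split("/") if p):
        if part.startswith("Role="):
            value = part[len("Role="):]
            role = None if value == "NULL" else value
        elif part.startswith("Capability="):
            continue  # capabilities are ignored in this sketch
        else:
            groups.append(part)
    if not groups:
        raise ValueError(f"no VO found in attribute {fqan!r}")
    return Fqan(vo=groups[0], group="/" + "/".join(groups), role=role)


# Hypothetical site configuration: the site lists the VOs it trusts,
# never individual users.
TRUSTED_VOS = {"cms", "dzero", "ligo"}


def authorize(fqans: List[str]) -> Optional[Fqan]:
    """Return the first attribute whose VO the site trusts, else None.
    The user's personal identity plays no part in this decision."""
    for attr in fqans:
        parsed = parse_fqan(attr)
        if parsed.vo in TRUSTED_VOS:
            return parsed
    return None
```

The returned group and role are what a site would then feed into its priority mapping, so that "storage and compute services prioritize according to VO group".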

Running Jobs

- Condor-G client.
- Pre-WS or WS GRAM as the site gateway.
- Priority through VO role and policy, modified by site policy.
- Pilot jobs submitted through the regular gateway can then bring down multiple user jobs until the batch slot's resources are used up.
- gLExec, modelled on Apache suEXEC, allows jobs to run under the user's identity.
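The pilot-job pattern (one batch slot, many user jobs pulled in until the slot's resources are used up) can be sketched as a loop over the VO's queue. This is a hypothetical illustration: the `est_hours` runtime estimate and the FIFO "stop at the first job that does not fit" rule are assumptions, and actually launching payloads (for example under gLExec) is left out.

```python
from collections import deque
from dataclasses import dataclass
from typing import List


@dataclass
class UserJob:
    name: str
    est_hours: float  # hypothetical: the user's own runtime estimate


def run_pilot(queue: deque, slot_hours: float) -> List[str]:
    """A pilot occupies one batch slot and keeps pulling user jobs from the
    VO's queue while the remaining slot time can fit the next job."""
    remaining = slot_hours
    ran: List[str] = []
    while queue and queue[0].est_hours <= remaining:
        job = queue.popleft()
        # A real pilot would start the payload here, possibly under the
        # user's own identity; this sketch only records the job name.
        remaining -= job.est_hours
        ran.append(job.name)
    return ran
```

With a queue of jobs estimated at 2, 3, 4 and 1 hours and an 8-hour slot, the pilot runs the first two and leaves the rest for the next pilot. A smarter pilot could skip ahead to smaller jobs; strict FIFO is used here only to keep the sketch short.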

Data and Storage

- GridFTP data transfer.
- Storage Resource Manager (SRM) to manage shared and common storage.
- Environment variables on the site let VOs know where to put and leave files.
- dCache: large-scale, high-I/O disk caching system for large sites.
- DRM: NFS-based disk management system for small sites.
- Open questions: NFS v4? GPFS?
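A job can discover where to put and leave files by reading the site's environment. The variable names below (`OSG_DATA` for a shared, persistent area and `OSG_WN_TMP` for worker-node scratch) are assumptions for this sketch; check the actual names a given site publishes. The per-VO subdirectory layout is also hypothetical.

```python
import os
from typing import Dict, Mapping, Optional


def site_paths(vo: str, env: Mapping[str, str] = os.environ) -> Dict[str, Optional[str]]:
    """Resolve where a job should put files from site-provided environment
    variables. Variable names and the per-VO layout are assumptions."""
    data = env.get("OSG_DATA")
    scratch = env.get("OSG_WN_TMP") or env.get("TMPDIR", "/tmp")
    return {
        # Shared area that outlives the job, organized per VO (None if the
        # site does not advertise one).
        "shared": os.path.join(data, vo) if data else None,
        # Per-job scratch space on the worker node.
        "scratch": scratch,
    }
```

Passing the environment as a parameter keeps the function testable; in a real job the default `os.environ` is used.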


Resource Management

- Many resources are owned by, or statically allocated to, one user community.
  - The institutions that own resources typically have ongoing relationships with (a few) particular user communities (VOs).
- The remainder of an organization's available resources can be “used by everyone or anyone else”.
  - Organizations can decide against supporting particular VOs.
  - OSG staff are responsible for monitoring and, if needed, managing this usage.
- Our challenge is to maximize good (successful) output from the whole system.
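The split between owned and opportunistic use can be illustrated with a toy slot allocator. This is a hypothetical policy, not OSG's scheduler: owners first receive up to their static share (never more than they ask for), and the leftover slots go to any VO the site has not excluded, largest demand first.

```python
from typing import Dict, Set


def allocate(total_slots: int, owned: Dict[str, int],
             demand: Dict[str, int], excluded: Set[str]) -> Dict[str, int]:
    """Hypothetical site allocation: owning VOs get up to their static
    share; leftover slots go opportunistically to non-excluded VOs."""
    alloc: Dict[str, int] = {}
    # 1. Owners use their own share first, but no more than they request.
    for vo, share in owned.items():
        alloc[vo] = min(share, demand.get(vo, 0))
    free = total_slots - sum(alloc.values())
    # 2. The remainder can be "used by everyone or anyone else".
    for vo, want in sorted(demand.items(), key=lambda kv: -kv[1]):
        if vo in excluded or free <= 0:
            continue
        extra = min(want - alloc.get(vo, 0), free)
        if extra > 0:
            alloc[vo] = alloc.get(vo, 0) + extra
            free -= extra
    return alloc
```

For instance, with 100 slots, a CMS share of 60 but CMS demand of only 40, the 60 idle slots become available to others. A site that excludes LIGO hands 50 of them to D0 and leaves the rest idle.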

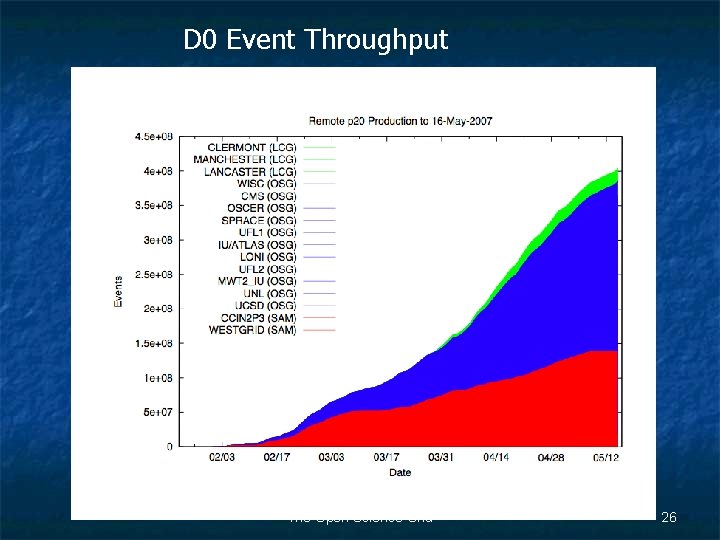

An Example of Opportunistic Use

- D0's own resources are committed to processing newly acquired data and analysing the processed datasets.
- In Nov '06, D0 asked to use 1,500-2,000 CPUs for 2-4 months to reprocess an existing dataset (~500 million events), for science results at the summer conferences in July '07.
- The Executive Board estimated that sufficient opportunistically available resources existed on OSG to meet the request; we also looked into the local storage and I/O needs.
- The Council members agreed to contribute resources to meet this request.
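A quick calculation gives the size of the D0 request in CPU-hours. Only the CPU counts, durations and event count come from the request. The 30-day month is an assumption, and the per-event figures are derived values: the maximum average CPU time per event the request could accommodate, not measured costs.

```python
HOURS_PER_MONTH = 30 * 24   # assumption: 30-day months
EVENTS = 500_000_000        # "~500 million events" from the request


def cpu_hours(cpus: int, months: float) -> float:
    """Total CPU-hours for `cpus` processors running for `months` months."""
    return cpus * months * HOURS_PER_MONTH


low = cpu_hours(1500, 2)    # 2,160,000 CPU-hours
high = cpu_hours(2000, 4)   # 5,760,000 CPU-hours

# Average CPU seconds available per event if all requested time went to the
# reprocessing (ignores failures, I/O waits and other overheads).
sec_per_event_low = low * 3600 / EVENTS    # about 15.6 s
sec_per_event_high = high * 3600 / EVENTS  # about 41.5 s
```

The request therefore spans roughly 2 to 6 million CPU-hours.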

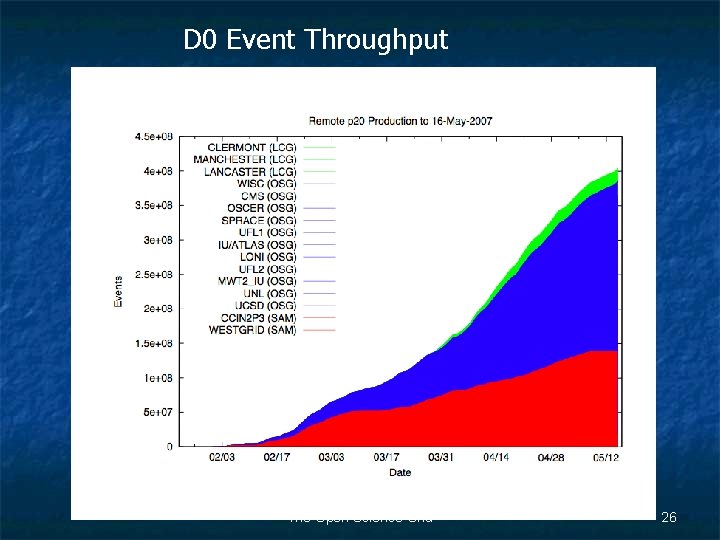

D0 Event Throughput

How did D0 Reprocessing Go?

- D0 had 2-3 months of smooth production running using >1,000 CPUs and met its goal by the end of May.
- To achieve this:
  - D0 testing of the integrated software system took until February;
  - OSG staff and D0 then worked closely together as a team to reach the needed throughput goals, facing and solving problems with:
    - sites: hardware, connectivity, software configurations;
    - application software: performance, error recovery;
    - scheduling of jobs to a changing mix of available resources.

D0 OSG CPU-Hours / Week

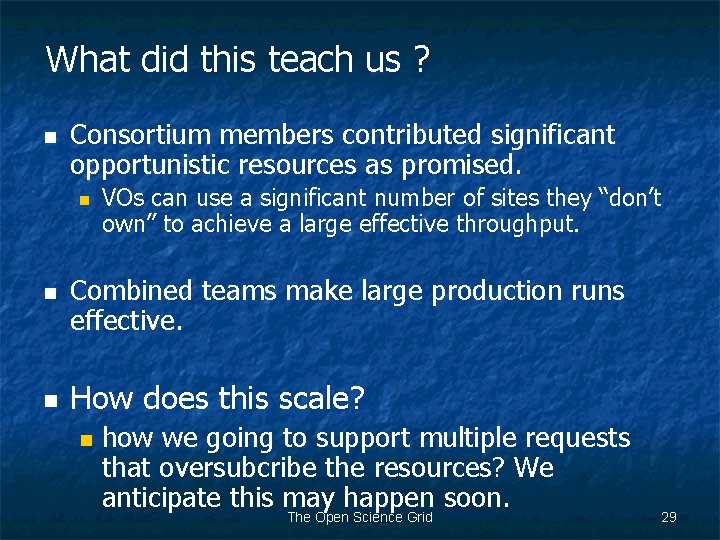

What did this teach us?

- Consortium members contributed significant opportunistic resources, as promised.
  - VOs can use a significant number of sites they “don't own” to achieve a large effective throughput.
  - Combined teams make large production runs effective.
- How does this scale?
  - How are we going to support multiple requests that oversubscribe the resources? We anticipate this may happen soon.

Use by non-Physics

- Rosetta@Kuhlman lab: in production across ~15 sites since April.
- Weather Research and Forecasting (WRF): MPI job running on 1 OSG site; more to come.
- CHARMM molecular dynamics simulation applied to the problem of water penetration in staphylococcal nuclease.
- Genome Analysis and Database Update system (GADU): portal across OSG & TeraGrid; runs BLAST.
- nanoHUB at Purdue: Biomoca and Nanowire production.

Rosetta: the user decided to submit jobs (3,000 jobs).

Scale needed in 2008/2009 (e.g. for a single experiment):

- 20-30 petabytes of tertiary automated tape storage at 12 centers worldwide, serving physics and other scientific collaborations.
- High availability (365 x 24 x 7) and high data access rates (1 GByte/sec), locally and remotely.
- Evolving and scaling smoothly to meet evolving requirements.
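To see why the 1 GByte/sec access rate matters at this scale, here is a back-of-the-envelope calculation of how long one sustained stream takes to read stored data. It assumes decimal units (1 PB = 10^15 bytes, 1 GByte/sec = 10^9 bytes/s). The 20 PB case is purely illustrative, since the archive is spread over 12 centers rather than read through one stream.

```python
SECONDS_PER_DAY = 24 * 3600


def days_to_stream(petabytes: float, gbytes_per_sec: float = 1.0) -> float:
    """Days needed to read `petabytes` of data at a sustained rate,
    assuming decimal units throughout."""
    seconds = petabytes * 10**15 / (gbytes_per_sec * 10**9)
    return seconds / SECONDS_PER_DAY


one_pb = days_to_stream(1)         # about 11.6 days
low_end = days_to_stream(20)       # about 231 days
```

Even at the target rate, touching a full petabyte takes well over a week, which is why access must be parallel across many sites and streams.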

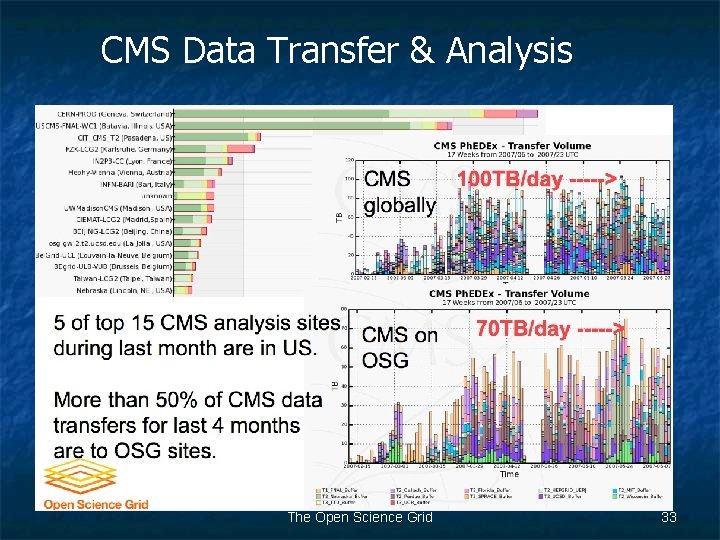

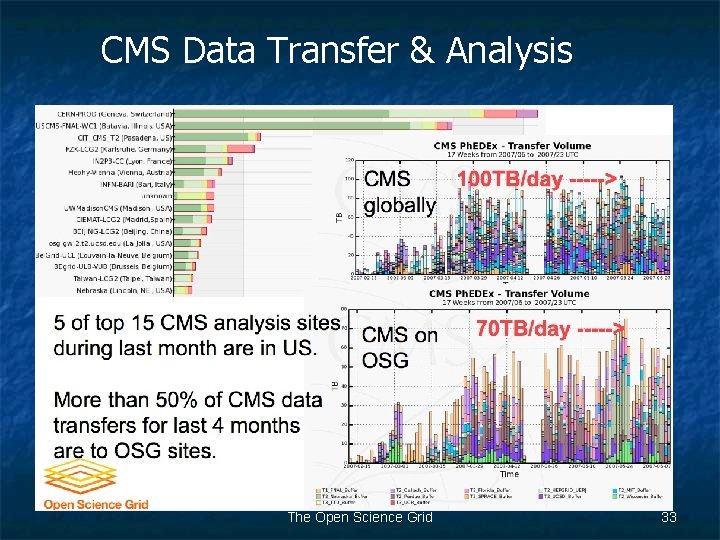

CMS Data Transfer & Analysis

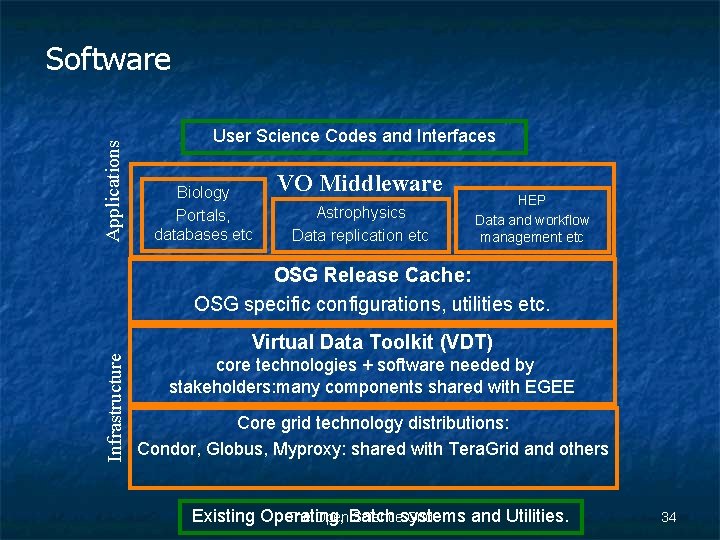

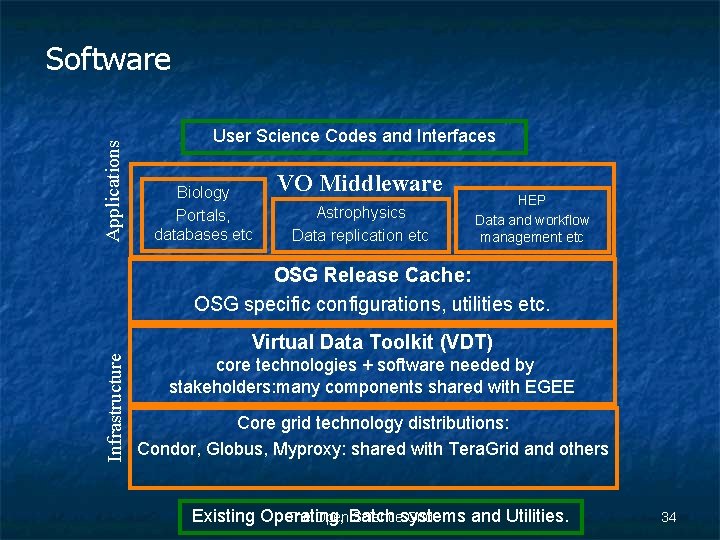

Software

- Applications: user science codes and interfaces.
- VO middleware:
  - Biology: portals, databases, etc.;
  - Astrophysics: data replication, etc.;
  - HEP: data and workflow management, etc.
- Infrastructure:
  - OSG Release Cache: OSG-specific configurations, utilities, etc.;
  - Virtual Data Toolkit (VDT): core technologies plus software needed by stakeholders; many components shared with EGEE;
  - Core grid technology distributions: Condor, Globus, MyProxy; shared with TeraGrid and others;
  - Existing operating systems, batch systems and utilities.

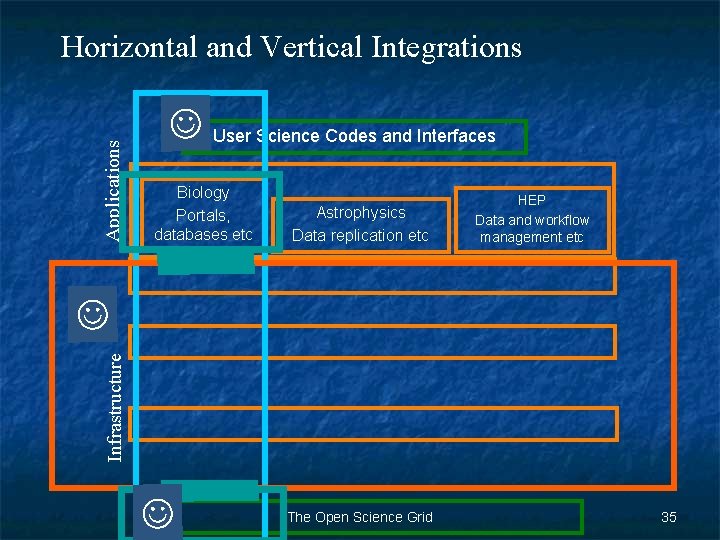

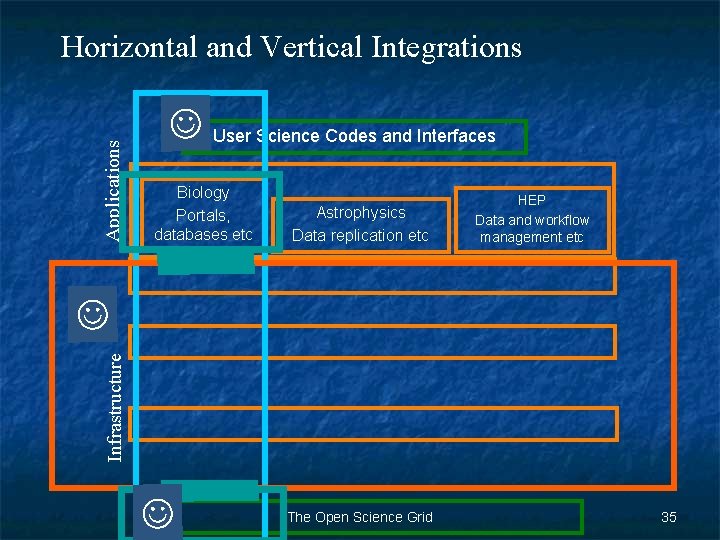

Horizontal and Vertical Integrations

Diagram layers: applications (user science codes and interfaces); Biology (portals, databases, etc.); Astrophysics (data replication, etc.); HEP (data and workflow management, etc.); infrastructure.

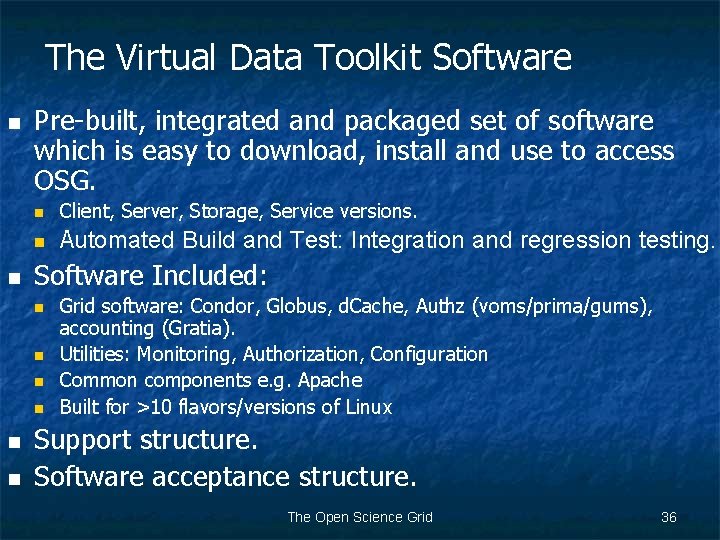

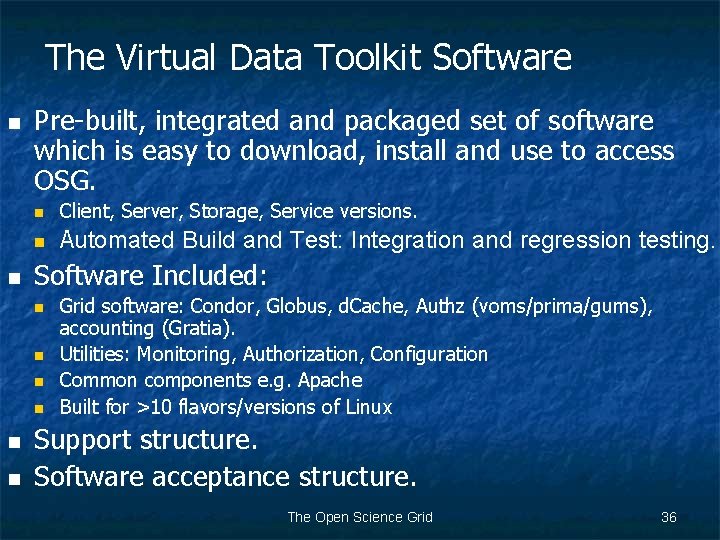

The Virtual Data Toolkit Software

- A pre-built, integrated and packaged set of software that is easy to download, install and use to access OSG.
  - Client, server, storage and service versions.
  - Automated build and test: integration and regression testing.
- Software included:
  - Grid software: Condor, Globus, dCache, authorization (VOMS/PRIMA/GUMS), accounting (Gratia);
  - Utilities: monitoring, authorization, configuration;
  - Common components, e.g. Apache.
- Built for >10 flavors/versions of Linux.
- Support structure.
- Software acceptance structure.

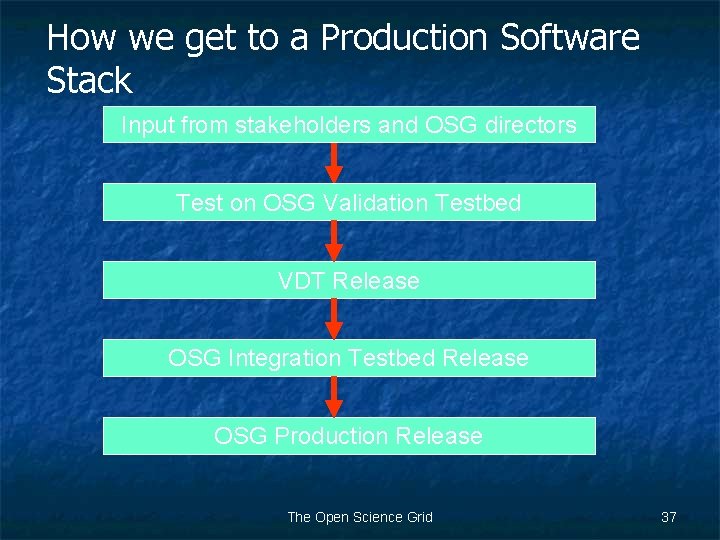

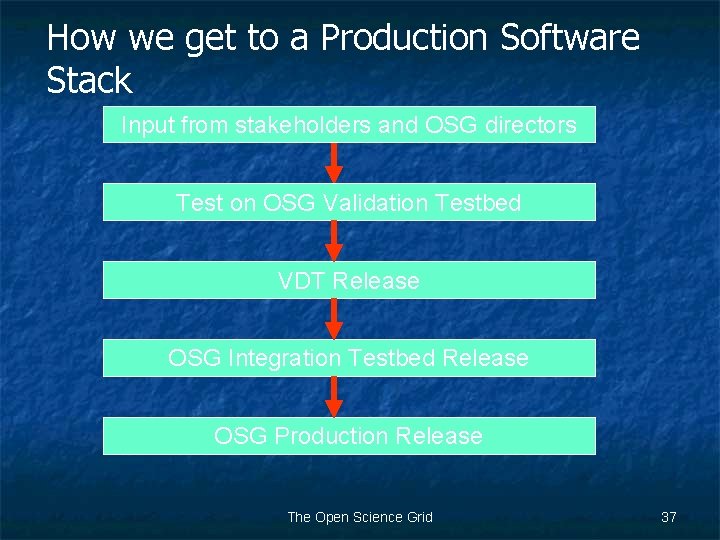

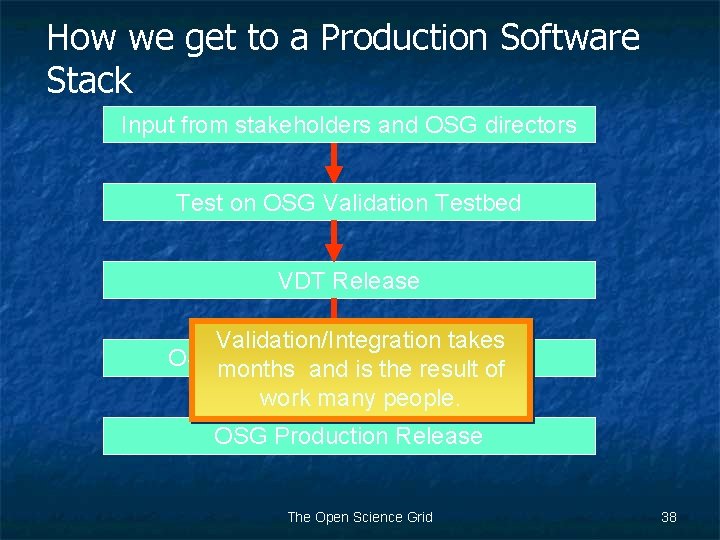

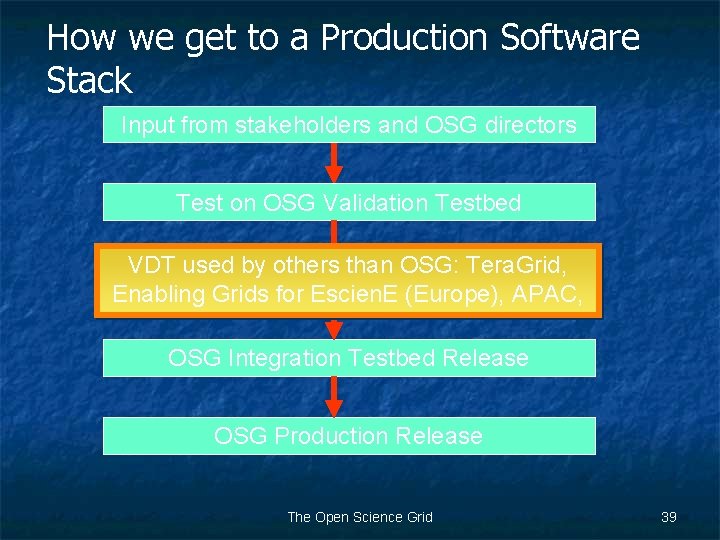

How we get to a Production Software Stack

Input from stakeholders and OSG directors → VDT Release → Test on OSG Validation Testbed → OSG Integration Testbed Release → OSG Production Release.

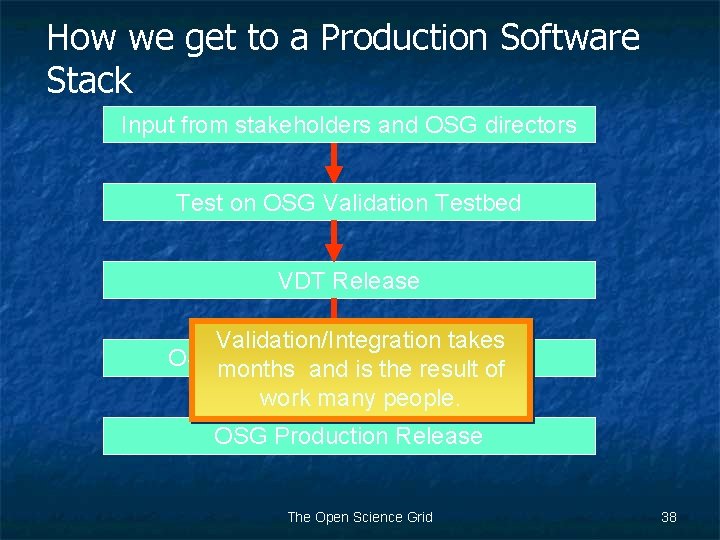

Validation and integration take months and are the result of the work of many people.

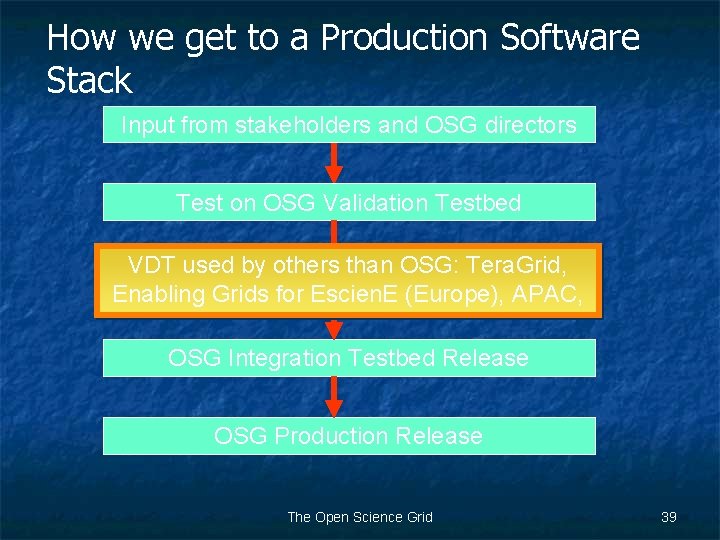

The VDT is also used by projects other than OSG: TeraGrid, Enabling Grids for E-sciencE (Europe) and APAC.
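The staged path from a VDT release to an OSG production release behaves like a simple state machine: a release moves forward one stage only after passing testing at its current stage. This is a hypothetical sketch. The stage order (VDT release, validation testbed, integration testbed, production) is an assumption about the pipeline, and the pass/fail flag stands in for months of real validation work.

```python
from enum import Enum
from typing import Iterable


class Stage(Enum):
    VDT_RELEASE = 1
    VALIDATION_TESTBED = 2
    INTEGRATION_TESTBED = 3
    PRODUCTION = 4


def promote(stage: Stage, tests_passed: bool) -> Stage:
    """Advance one stage only if testing passed at the current stage;
    otherwise stay put for fixes. Production is terminal."""
    if stage is Stage.PRODUCTION or not tests_passed:
        return stage
    return Stage(stage.value + 1)


def run_pipeline(results: Iterable[bool]) -> Stage:
    """Apply a sequence of test outcomes, starting from a fresh VDT release."""
    stage = Stage.VDT_RELEASE
    for ok in results:
        stage = promote(stage, ok)
    return stage
```

A failed round leaves the release where it is, so `run_pipeline([True, False, True, True])` still reaches production, one round later than an all-pass run.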


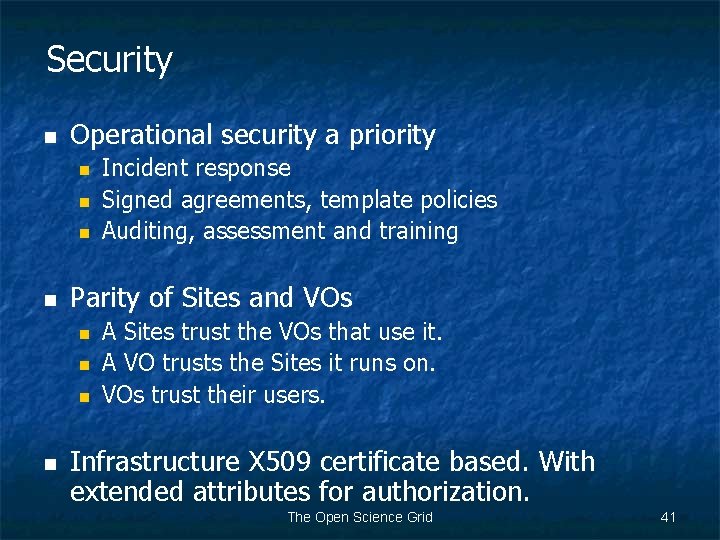

Security

- Operational security is a priority:
  - incident response;
  - signed agreements, template policies;
  - auditing, assessment and training.
- Parity of Sites and VOs:
  - a Site trusts the VOs that use it;
  - a VO trusts the Sites it runs on;
  - VOs trust their users.
- The infrastructure is X.509 certificate based, with extended attributes for authorization.

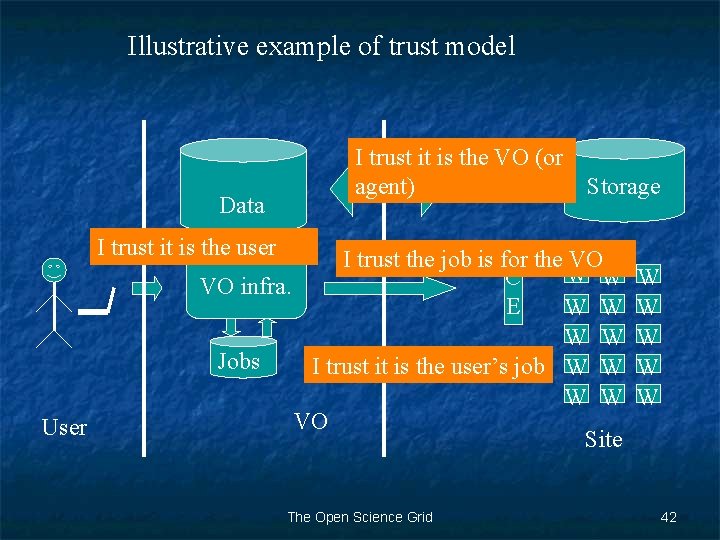

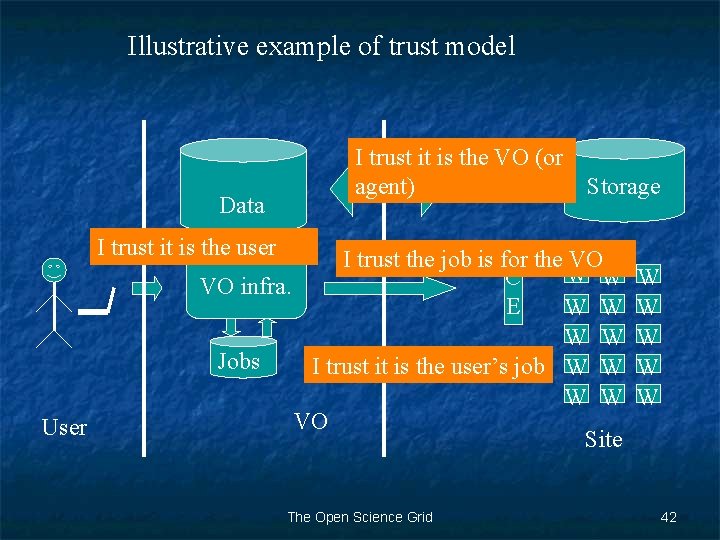

Illustrative Example of the Trust Model

Diagram: a user, the VO infrastructure, and a site's compute element with its worker nodes (W), annotated with trust statements:

- Data: “I trust it is the VO (or agent).”
- Storage: “I trust it is the user.”
- User: “I trust the job is for the VO.”
- Jobs: “I trust it is the user's job.”
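The chain "a Site trusts the VO, the VO trusts its users" can be sketched as a two-step check. This is a deliberate simplification: the in-memory membership table stands in for the signed VO attributes carried in a real proxy certificate. The DNs, VO names and tables are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Job:
    user_dn: str  # certificate subject of the submitting user
    vo: str       # VO asserted in the job's credential attributes


# Hypothetical state: each VO's membership list (maintained by the VO) and
# the set of VOs this site has agreed to trust.
VO_MEMBERS = {"dzero": {"/DC=org/DC=example/CN=Alice"}}
SITE_TRUSTS = {"dzero"}


def site_accepts(job: Job) -> bool:
    """Chain of trust: the site trusts the VO, and the VO vouches that the
    user is a member. The site keeps no per-user list of its own."""
    return job.vo in SITE_TRUSTS and job.user_dn in VO_MEMBERS.get(job.vo, set())
```

A member of a trusted VO is accepted, a non-member claiming that VO is refused, and a genuine user presenting a VO the site does not trust is also refused.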

Operations & Troubleshooting & Support

- A well-established Grid Operations Center at Indiana University.
- User support is distributed and includes osggeneral@opensciencegrid community support.
- A site coordinator supports a team of sites.
  - Accounting and site validation are required services of sites.
- Troubleshooting looks at targeted end-to-end problems.
  - Partnering with the LBNL troubleshooting work on auditing and forensics.

Campus Grids

- Sharing across compute clusters is a change and a challenge for many universities.
- OSG, TeraGrid, Internet2 and EDUCAUSE are working together on CI Days:
  - working with CIOs, faculty and IT organizations on a one-day meeting where we all come and talk about the needs, the ideas and, yes, the next steps.

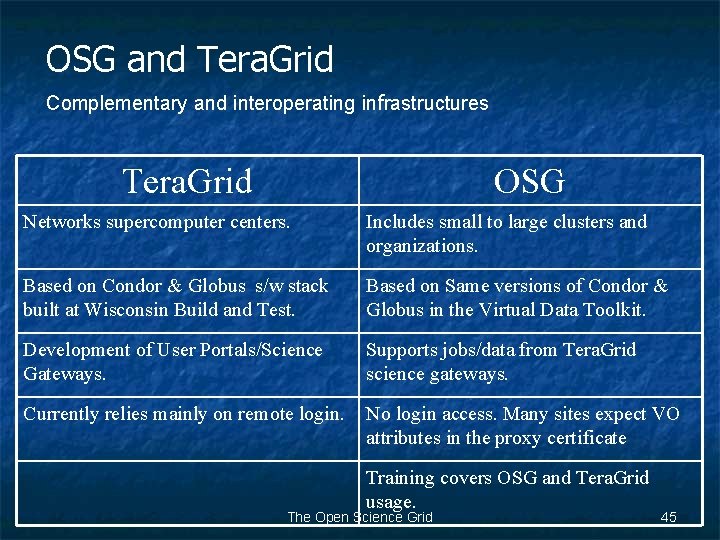

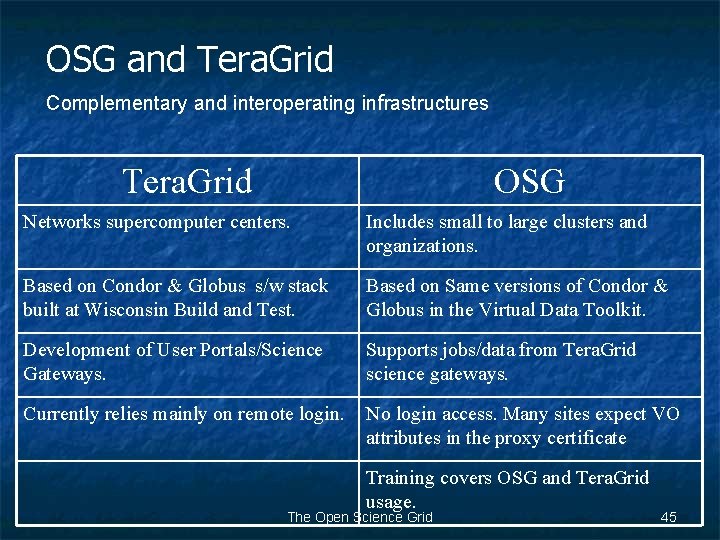

OSG and TeraGrid

Complementary and interoperating infrastructures.

| TeraGrid | OSG |
|---|---|
| Networks supercomputer centers. | Includes small to large clusters and organizations. |
| Based on the Condor & Globus software stack built at Wisconsin Build and Test. | Based on the same versions of Condor & Globus in the Virtual Data Toolkit. |
| Development of user portals/science gateways. | Supports jobs/data from TeraGrid science gateways. |
| Currently relies mainly on remote login. | No login access. Many sites expect VO attributes in the proxy certificate. |

Training covers OSG and TeraGrid usage.