The Memory Hierarchy Cache Main Memory and Virtual

- Slides: 40

The Memory Hierarchy: Cache, Main Memory, and Virtual Memory. Lecture for CPSC 5155. Edward Bosworth, Ph.D., Computer Science Department, Columbus State University.

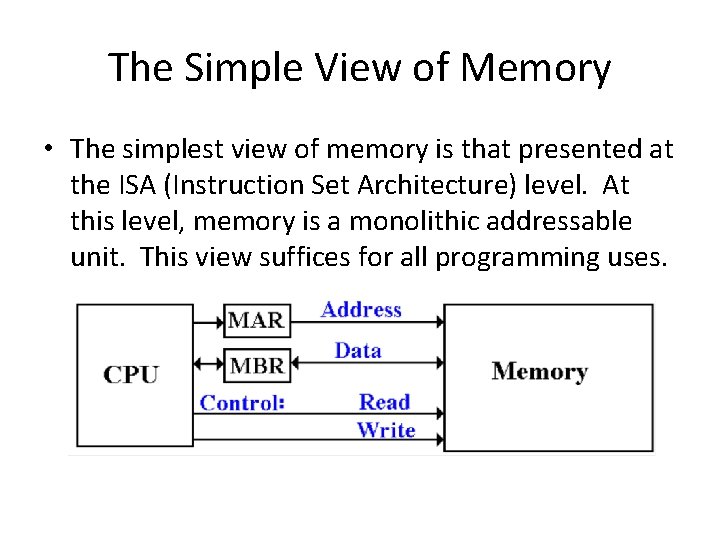

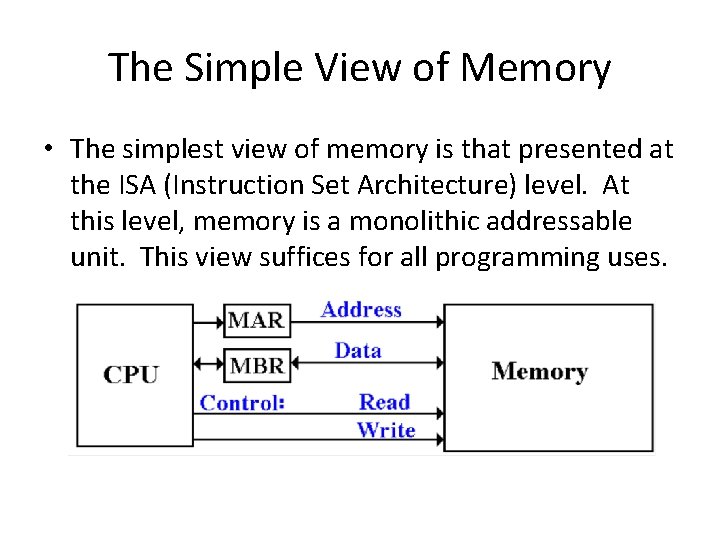

The Simple View of Memory

- The simplest view of memory is the one presented at the ISA (Instruction Set Architecture) level. At this level, memory is a monolithic addressable unit. This view suffices for all programming uses.

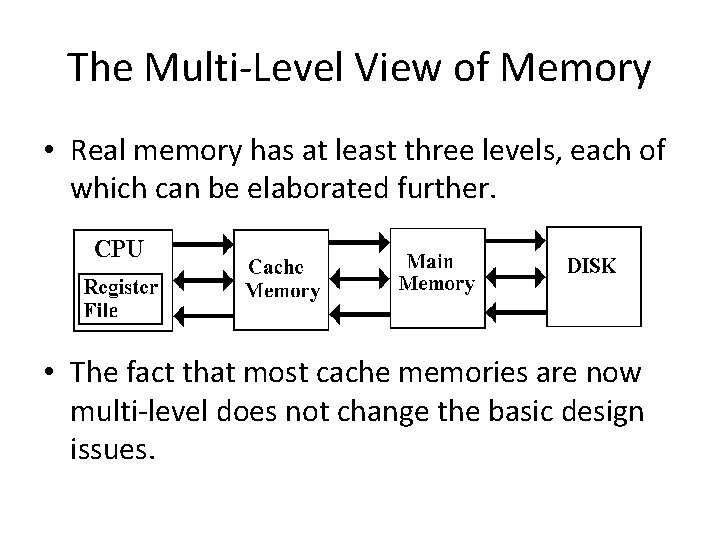

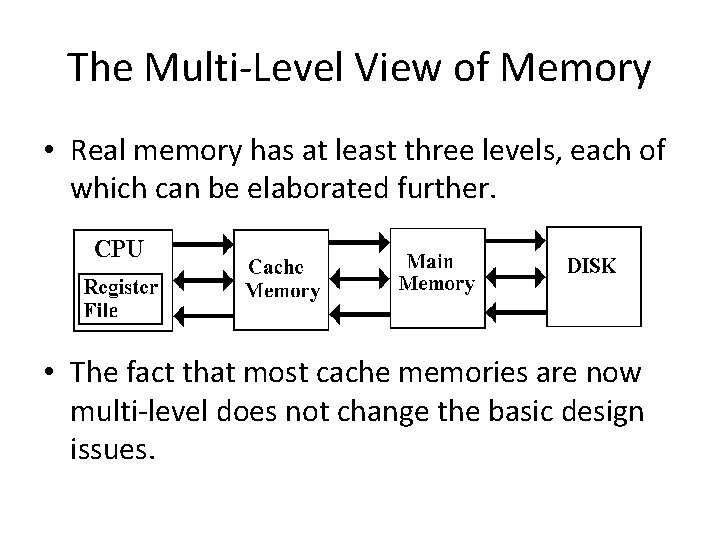

The Multi-Level View of Memory

- Real memory has at least three levels, each of which can be elaborated further.
- The fact that most cache memories are now multi-level does not change the basic design issues.

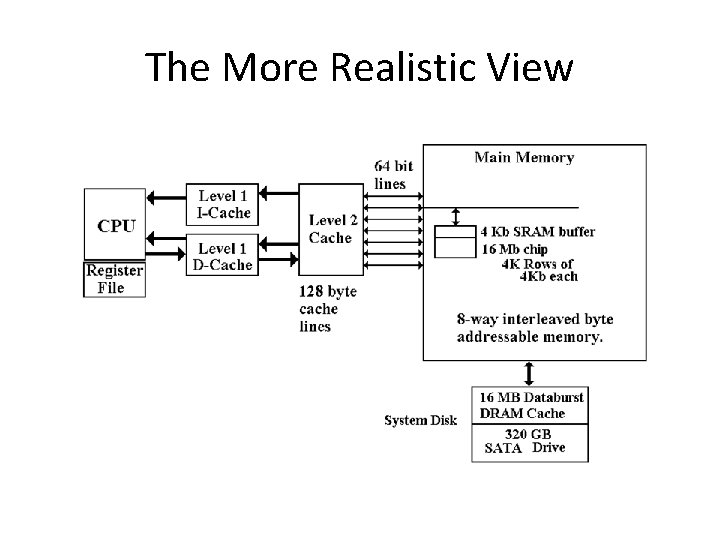

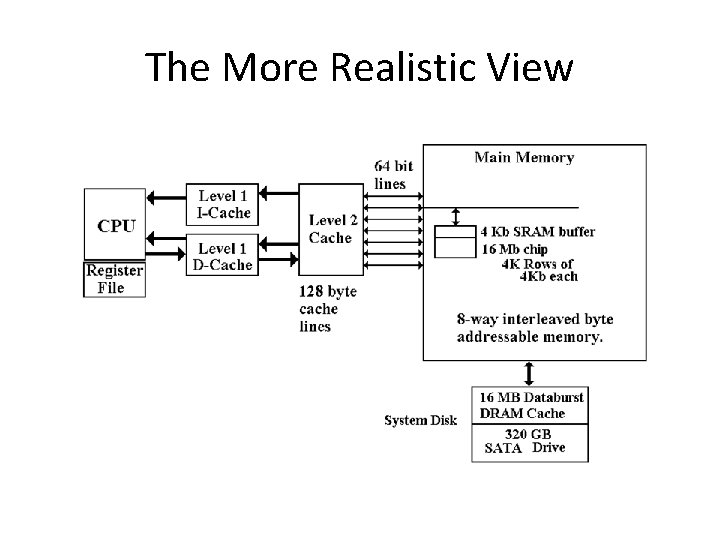

The More Realistic View

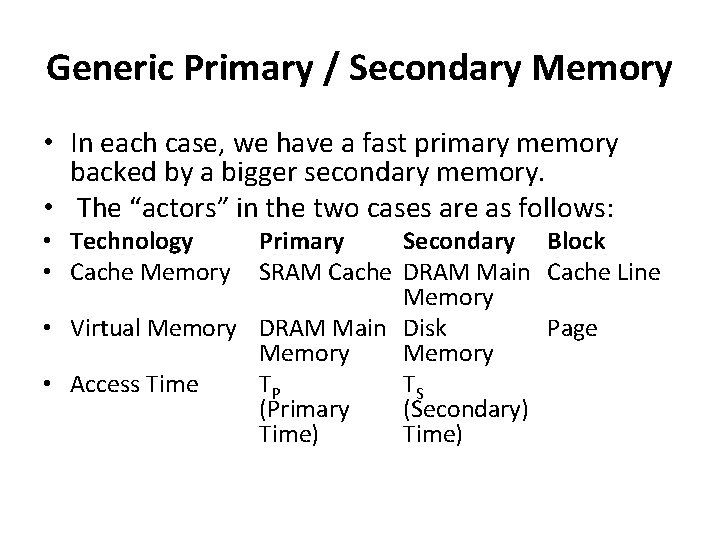

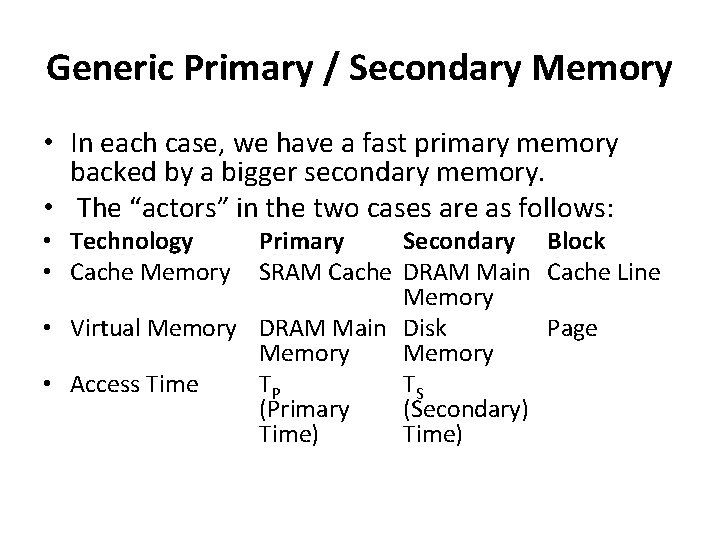

Generic Primary / Secondary Memory

- In each case, we have a fast primary memory backed by a bigger secondary memory.
- The “actors” in the two cases are as follows:

| Technology | Primary | Secondary | Block |
|---|---|---|---|
| Cache Memory | SRAM Cache | DRAM Main Memory | Cache Line |
| Virtual Memory | DRAM Main Memory | Disk | Page |
| Access Time | $T_P$ (Primary Time) | $T_S$ (Secondary Time) | |

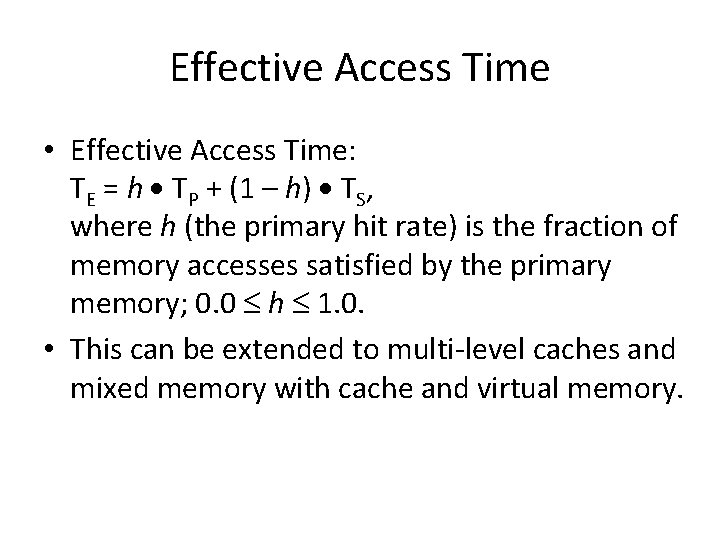

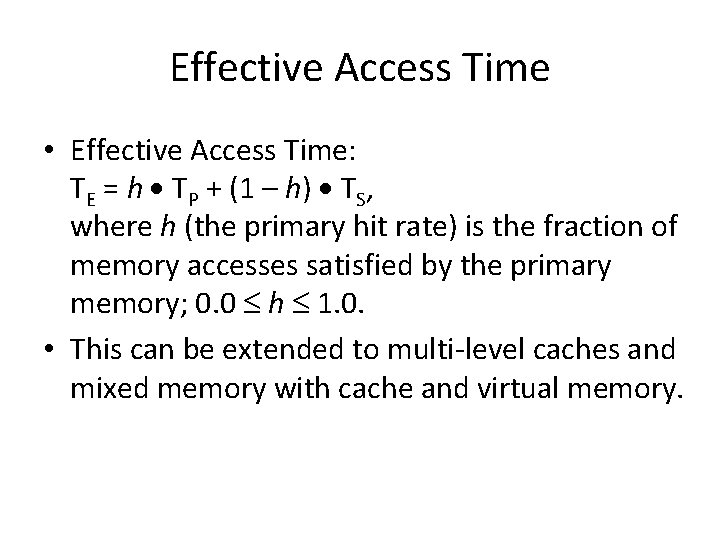

Effective Access Time

- Effective access time: $T_E = h \cdot T_P + (1 - h) \cdot T_S$, where $h$ (the primary hit rate) is the fraction of memory accesses satisfied by the primary memory; $0.0 \le h \le 1.0$.
- This can be extended to multi-level caches and mixed memory with cache and virtual memory.

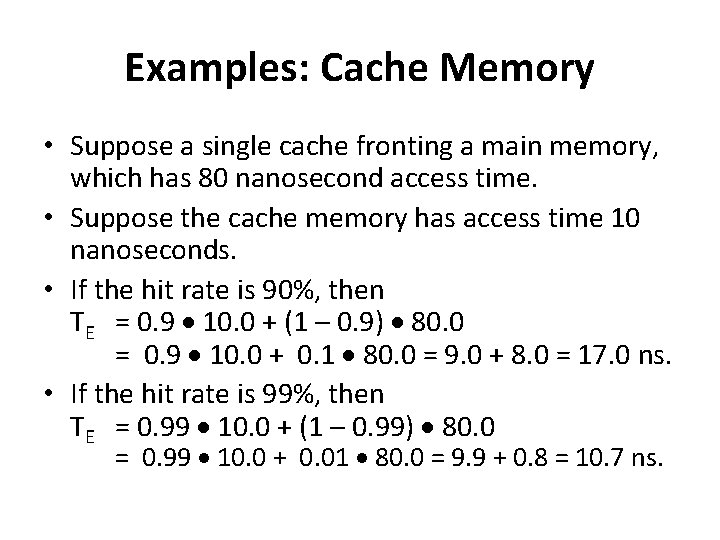

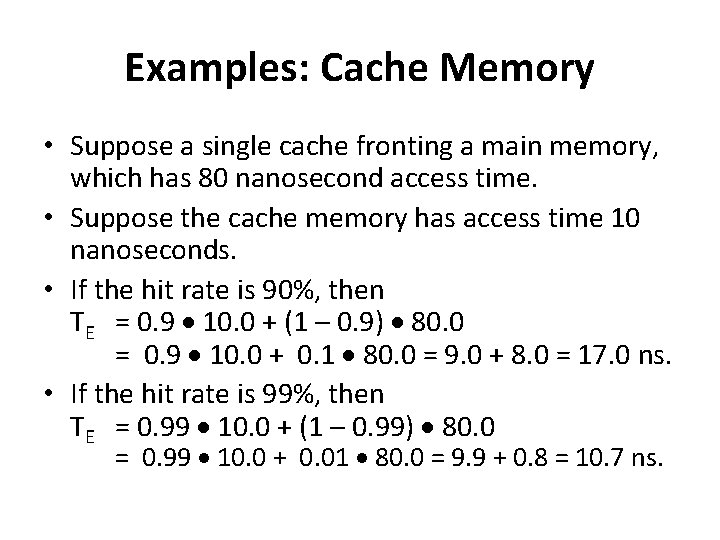

Examples: Cache Memory

- Suppose a single cache fronting a main memory that has an access time of 80 nanoseconds.
- Suppose the cache memory has an access time of 10 nanoseconds.
- If the hit rate is 90%, then $T_E = 0.9 \cdot 10.0 + (1 - 0.9) \cdot 80.0 = 0.9 \cdot 10.0 + 0.1 \cdot 80.0 = 9.0 + 8.0 = 17.0$ ns.
- If the hit rate is 99%, then $T_E = 0.99 \cdot 10.0 + (1 - 0.99) \cdot 80.0 = 0.99 \cdot 10.0 + 0.01 \cdot 80.0 = 9.9 + 0.8 = 10.7$ ns.
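The two worked examples can be checked with a few lines of Python. This is only a sketch of the effective access time formula; the function name `effective_access_time` and its parameter names are illustrative and do not come from the slides.

```python
def effective_access_time(hit_rate, t_primary, t_secondary):
    """Return T_E = h * T_P + (1 - h) * T_S (times in any consistent unit)."""
    if not 0.0 <= hit_rate <= 1.0:
        raise ValueError("hit rate must be between 0.0 and 1.0")
    return hit_rate * t_primary + (1.0 - hit_rate) * t_secondary


if __name__ == "__main__":
    # Cache at 10 ns in front of main memory at 80 ns, as in the slide.
    for h in (0.90, 0.99):
        t_e = effective_access_time(h, 10.0, 80.0)
        print(f"h = {h:.2f}: T_E = {t_e:.1f} ns")
```

Raising the hit rate from 90% to 99% brings the effective time close to the cache access time, which is the point of the example.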

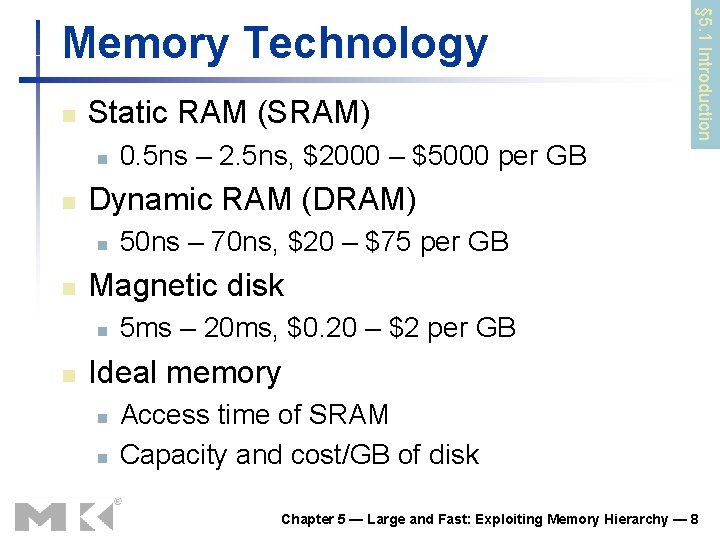

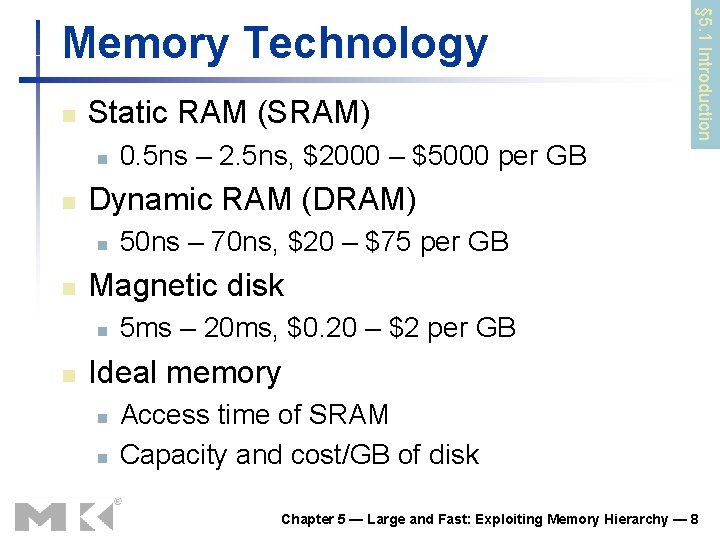

Memory Technology (§5.1 Introduction)

- Static RAM (SRAM): 0.5 ns – 2.5 ns, $2000 – $5000 per GB
- Dynamic RAM (DRAM): 50 ns – 70 ns, $20 – $75 per GB
- Magnetic disk: 5 ms – 20 ms, $0.20 – $2 per GB
- Ideal memory
  - Access time of SRAM
  - Capacity and cost/GB of disk

Source: Chapter 5, Large and Fast: Exploiting Memory Hierarchy

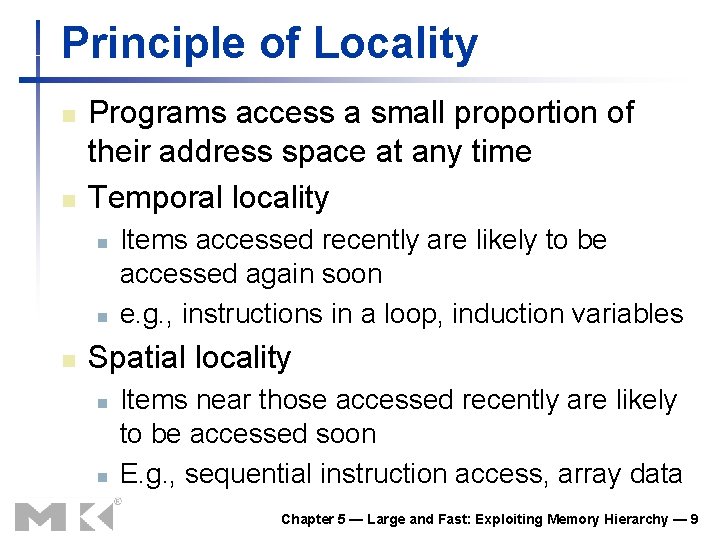

Principle of Locality

- Programs access a small proportion of their address space at any time
- Temporal locality
  - Items accessed recently are likely to be accessed again soon
  - e.g., instructions in a loop, induction variables
- Spatial locality
  - Items near those accessed recently are likely to be accessed soon
  - e.g., sequential instruction access, array data

Taking Advantage of Locality

- Memory hierarchy
- Store everything on disk
- Copy recently accessed (and nearby) items from disk to smaller DRAM memory
  - Main memory
- Copy more recently accessed (and nearby) items from DRAM to smaller SRAM memory
  - Cache memory attached to CPU

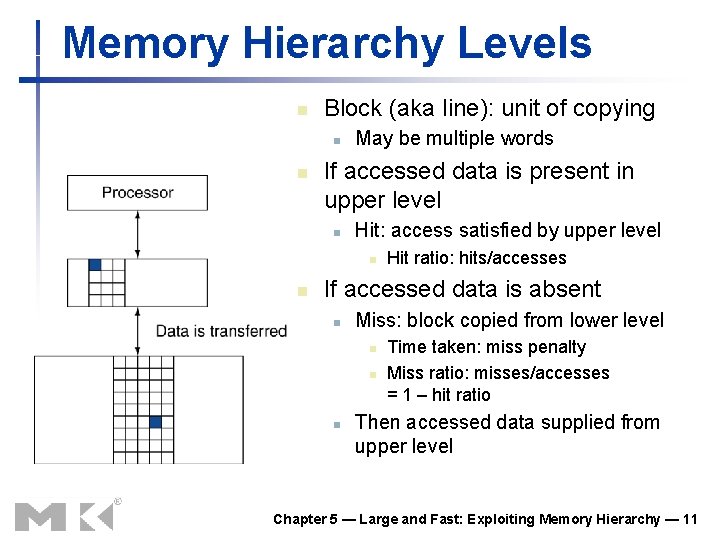

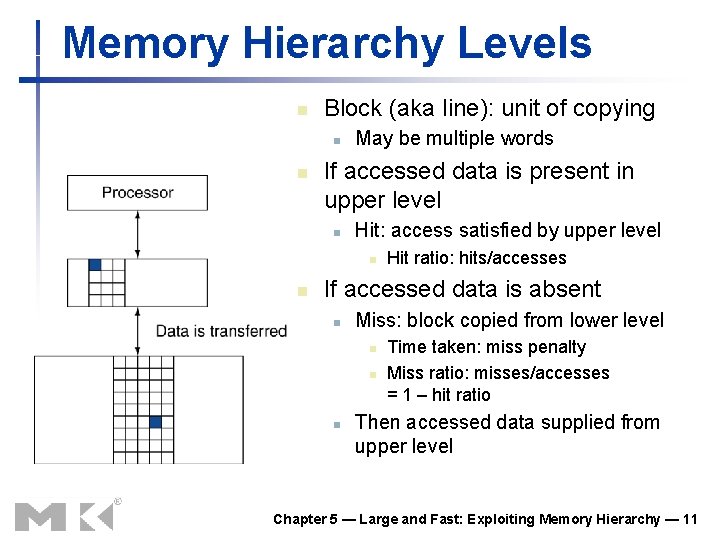

Memory Hierarchy Levels

- Block (aka line): unit of copying
  - May be multiple words
- If accessed data is present in upper level
  - Hit: access satisfied by upper level
  - Hit ratio: hits/accesses
- If accessed data is absent
  - Miss: block copied from lower level
    - Time taken: miss penalty
    - Miss ratio: misses/accesses = 1 – hit ratio
  - Then accessed data supplied from upper level

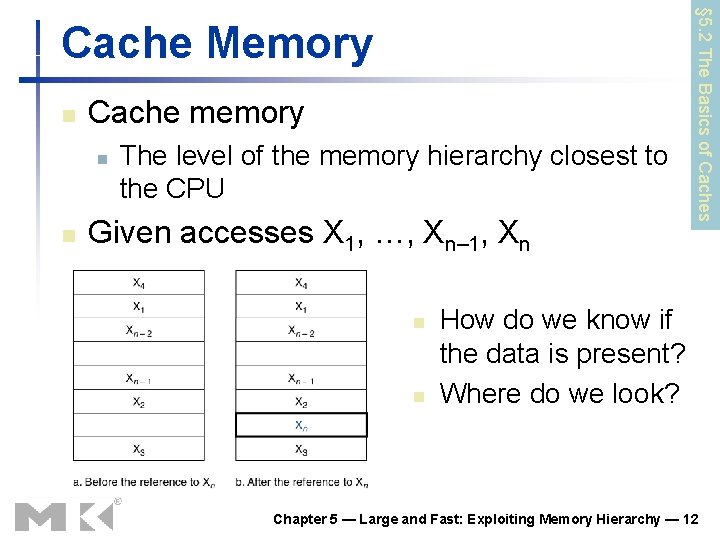

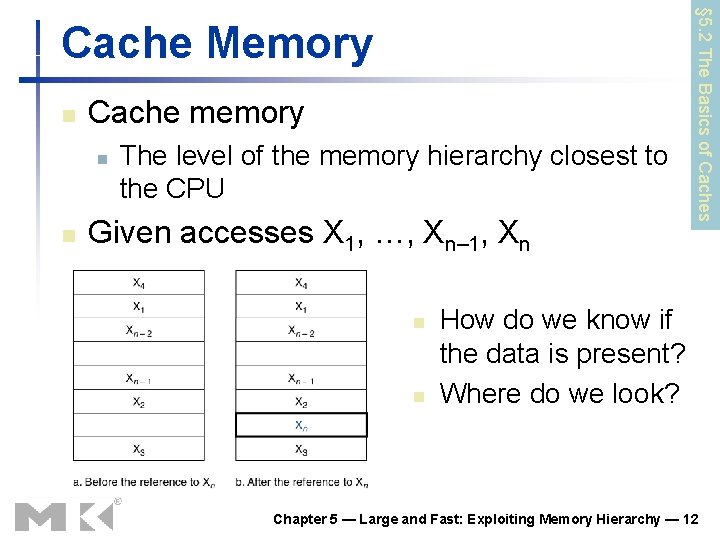

Cache Memory (§5.2 The Basics of Caches)

- Cache memory
  - The level of the memory hierarchy closest to the CPU
- Given accesses $X_1, \ldots, X_{n-1}, X_n$
  - How do we know if the data is present?
  - Where do we look?

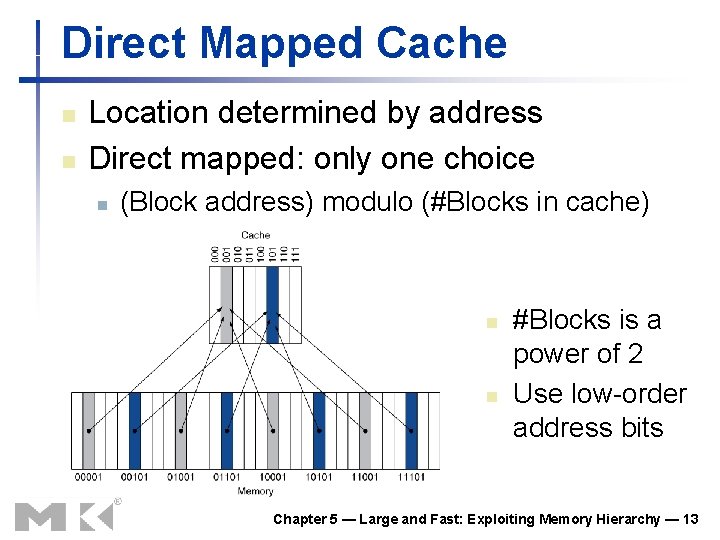

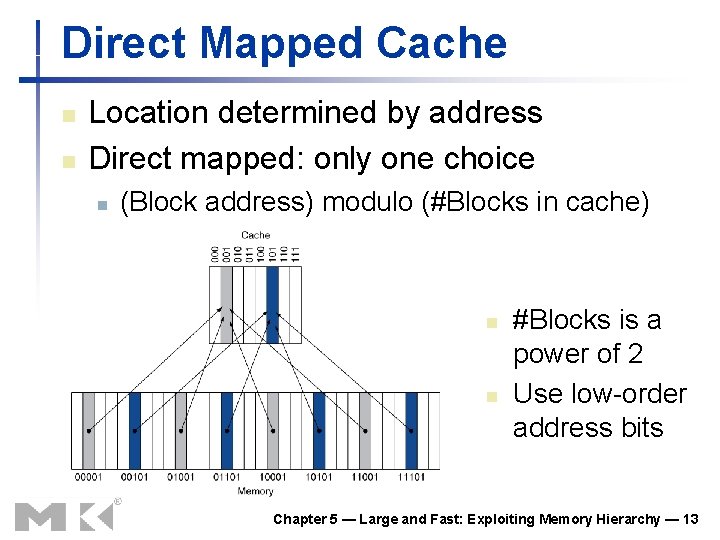

Direct Mapped Cache

- Location determined by address
- Direct mapped: only one choice
  - (Block address) modulo (#Blocks in cache)
- #Blocks is a power of 2
- Use low-order address bits
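A short sketch of why a power-of-2 block count lets the hardware use the low-order address bits directly: the modulo operation and a bit mask give the same index. The function name `cache_index` is illustrative, not taken from the slides.

```python
def cache_index(block_address, num_blocks):
    """Map a block address to its (only) slot in a direct-mapped cache."""
    if num_blocks <= 0 or num_blocks & (num_blocks - 1):
        raise ValueError("num_blocks must be a power of 2")
    index_by_modulo = block_address % num_blocks
    # For a power of 2, keeping the low-order bits is the same as the modulo.
    index_by_mask = block_address & (num_blocks - 1)
    assert index_by_modulo == index_by_mask
    return index_by_mask


if __name__ == "__main__":
    for block in (22, 26, 16, 3, 18):
        print(f"block {block:2d} ({block:05b}) -> index {cache_index(block, 8):03b}")
```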

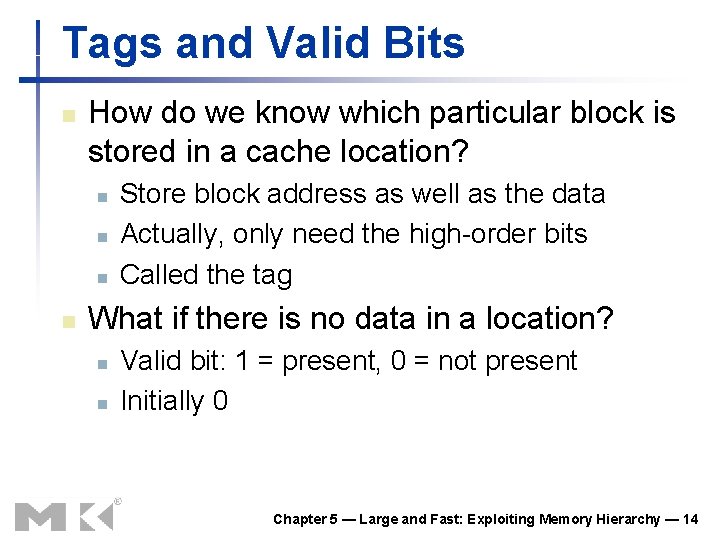

Tags and Valid Bits

- How do we know which particular block is stored in a cache location?
  - Store block address as well as the data
  - Actually, only need the high-order bits
  - Called the tag
- What if there is no data in a location?
  - Valid bit: 1 = present, 0 = not present
  - Initially 0

The Dirty Bit

- In some contexts, it is important to mark a block of primary memory whose data have been changed since being copied from the secondary memory.
- For historical reasons, this bit is called the “dirty bit”, denoted D.
- If D = 0, the block does not need to be written back to secondary memory before being replaced; if D = 1, it must be written back first. This is an efficiency consideration.
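The role of the dirty bit at replacement time can be sketched as follows. This is a minimal model under stated assumptions: the `CacheLine` class, the `write` and `replace` helpers, and the use of a Python dict as the secondary memory are all hypothetical names chosen for illustration.

```python
class CacheLine:
    """One block of primary memory with its valid (V) and dirty (D) bits."""

    def __init__(self):
        self.valid = False
        self.dirty = False
        self.block_address = None
        self.data = None


def write(line, value):
    """A CPU write changes the cached copy only and sets D = 1."""
    line.data = value
    line.dirty = True


def replace(line, new_block_address, secondary):
    """Load a new block; write the old one back only if it is dirty."""
    wrote_back = False
    if line.valid and line.dirty:
        secondary[line.block_address] = line.data
        wrote_back = True
    line.block_address = new_block_address
    line.data = secondary.get(new_block_address)
    line.valid = True
    line.dirty = False  # a freshly loaded block matches secondary memory
    return wrote_back


if __name__ == "__main__":
    secondary = {1: "a", 2: "b"}
    line = CacheLine()
    replace(line, 2, secondary)
    write(line, "B")
    print(replace(line, 1, secondary), secondary)
```

A clean block is simply overwritten, which saves one transfer to the slower memory.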

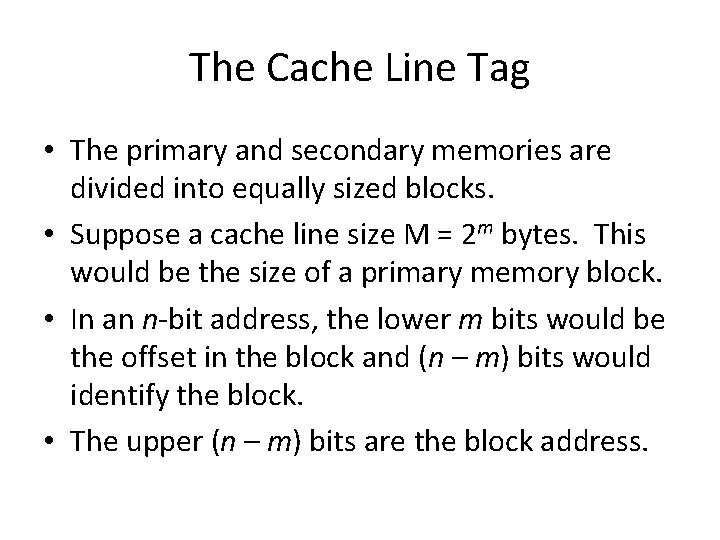

The Cache Line Tag

- The primary and secondary memories are divided into equally sized blocks.
- Suppose a cache line size of $M = 2^m$ bytes. This would be the size of a primary memory block.
- In an $n$-bit address, the lower $m$ bits would be the offset in the block and the upper $(n - m)$ bits would identify the block.
- The upper $(n - m)$ bits are the block address.

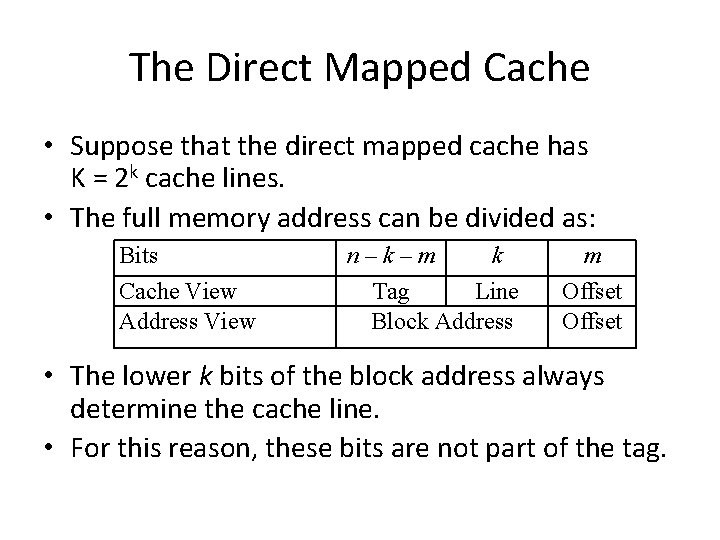

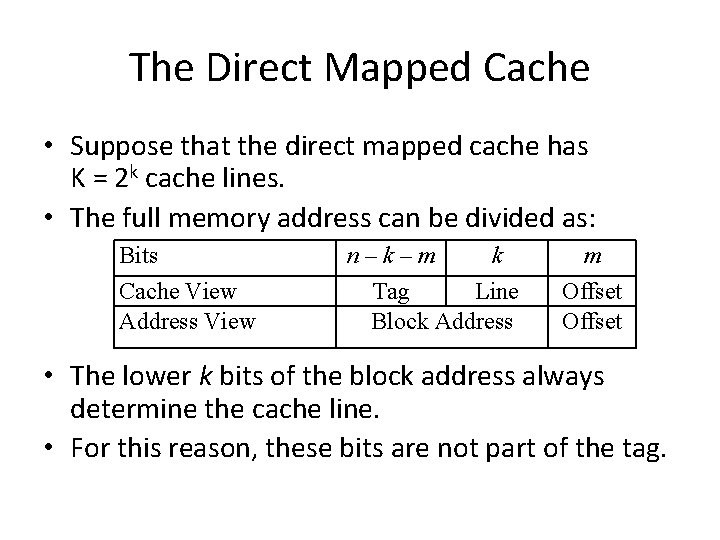

The Direct Mapped Cache

- Suppose that the direct mapped cache has $K = 2^k$ cache lines.
- The full memory address can be divided as:

| Bits | $n - k - m$ | $k$ | $m$ |
|---|---|---|---|
| Cache View | Tag | Line | Offset |
| Address View | Block Address | Block Address | Offset |

- In the address view, the block address spans the tag and line fields.
- The lower $k$ bits of the block address always determine the cache line.
- For this reason, these bits are not part of the tag.

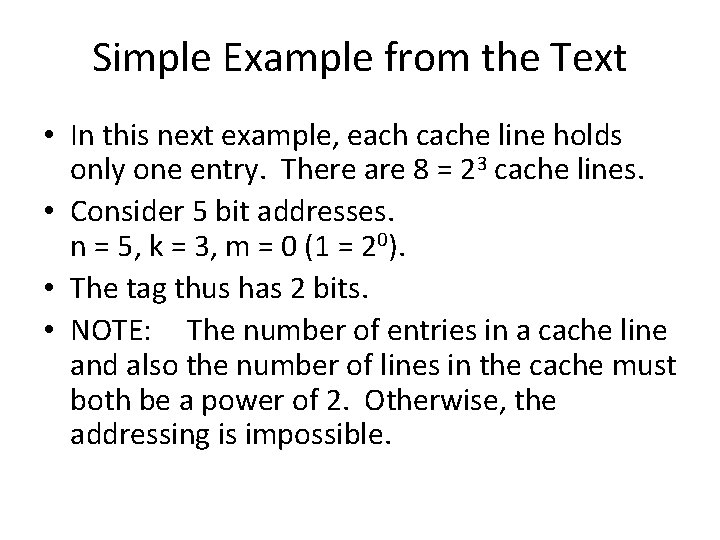

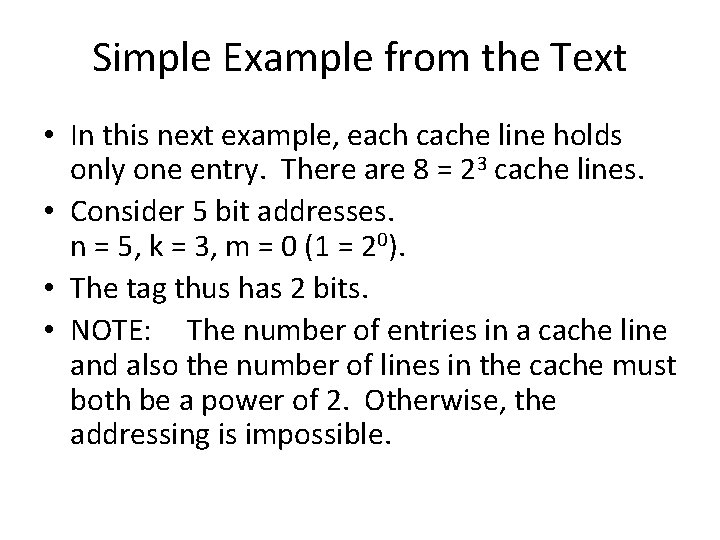

Simple Example from the Text

- In this next example, each cache line holds only one entry. There are $8 = 2^3$ cache lines.
- Consider 5-bit addresses: $n = 5$, $k = 3$, $m = 0$ (as $1 = 2^0$).
- The tag thus has 2 bits.
- NOTE: The number of entries in a cache line and the number of lines in the cache must both be powers of 2. Otherwise, the addressing is impossible.

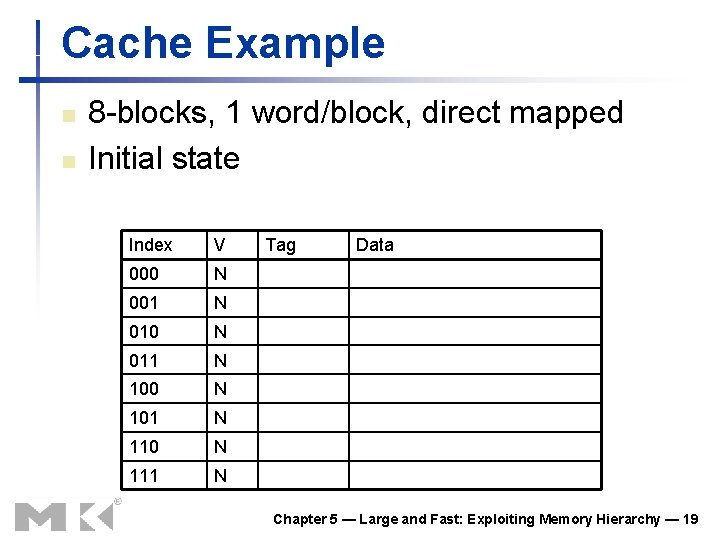

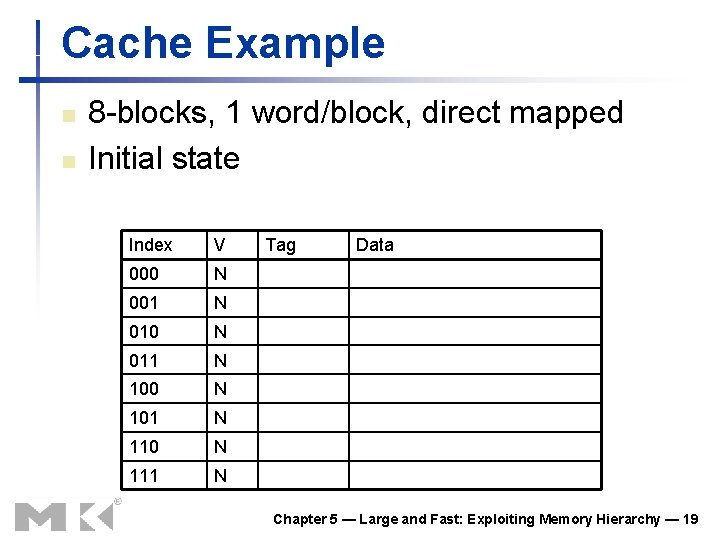

Cache Example

- 8 blocks, 1 word/block, direct mapped
- Initial state

| Index | V | Tag | Data |
|---|---|---|---|
| 000 | N | | |
| 001 | N | | |
| 010 | N | | |
| 011 | N | | |
| 100 | N | | |
| 101 | N | | |
| 110 | N | | |
| 111 | N | | |

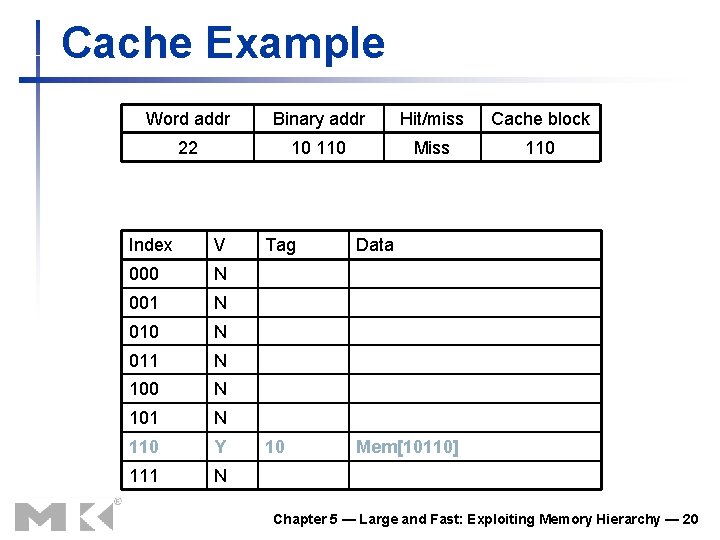

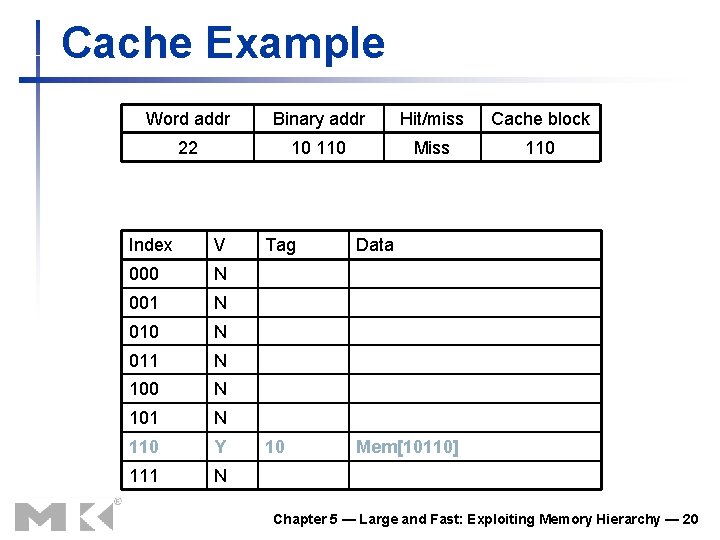

Cache Example

| Word addr | Binary addr | Hit/miss | Cache block |
|---|---|---|---|
| 22 | 10 110 | Miss | 110 |

| Index | V | Tag | Data |
|---|---|---|---|
| 000 | N | | |
| 001 | N | | |
| 010 | N | | |
| 011 | N | | |
| 100 | N | | |
| 101 | N | | |
| 110 | Y | 10 | Mem[10110] |
| 111 | N | | |

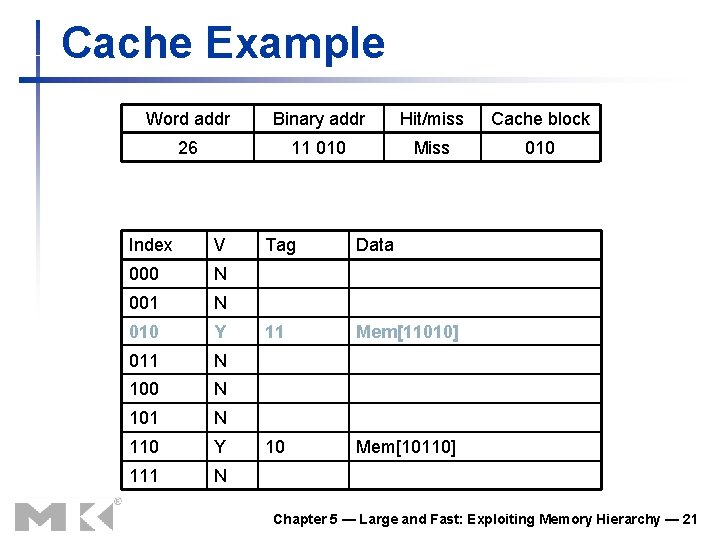

Cache Example

| Word addr | Binary addr | Hit/miss | Cache block |
|---|---|---|---|
| 26 | 11 010 | Miss | 010 |

| Index | V | Tag | Data |
|---|---|---|---|
| 000 | N | | |
| 001 | N | | |
| 010 | Y | 11 | Mem[11010] |
| 011 | N | | |
| 100 | N | | |
| 101 | N | | |
| 110 | Y | 10 | Mem[10110] |
| 111 | N | | |

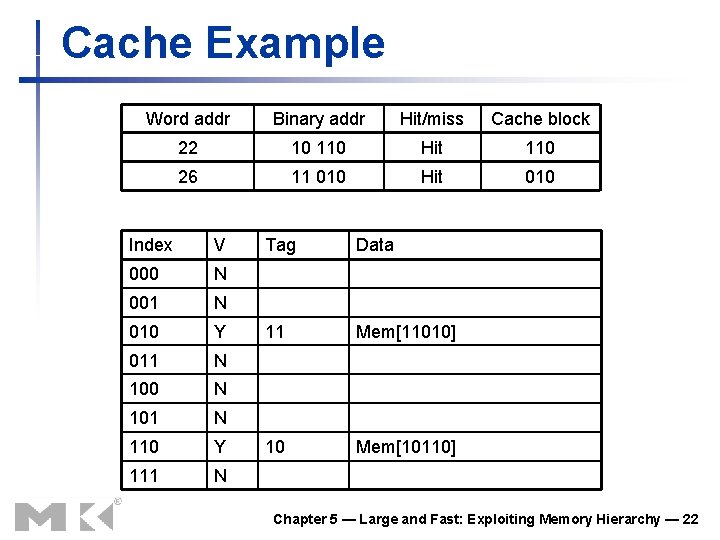

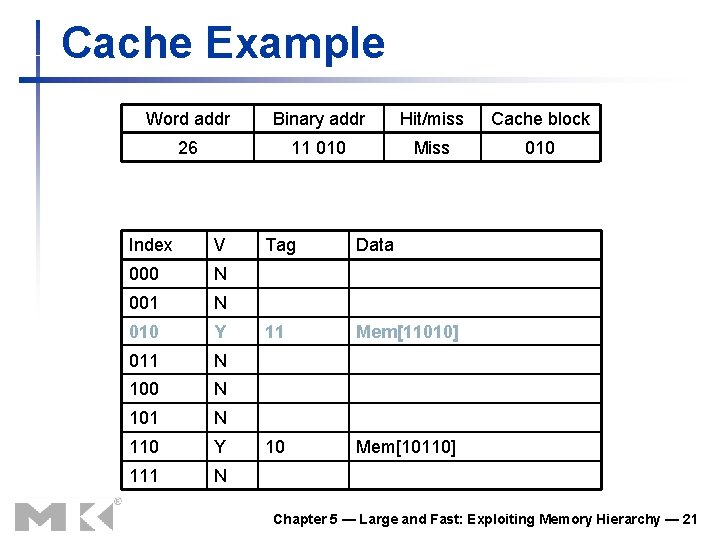

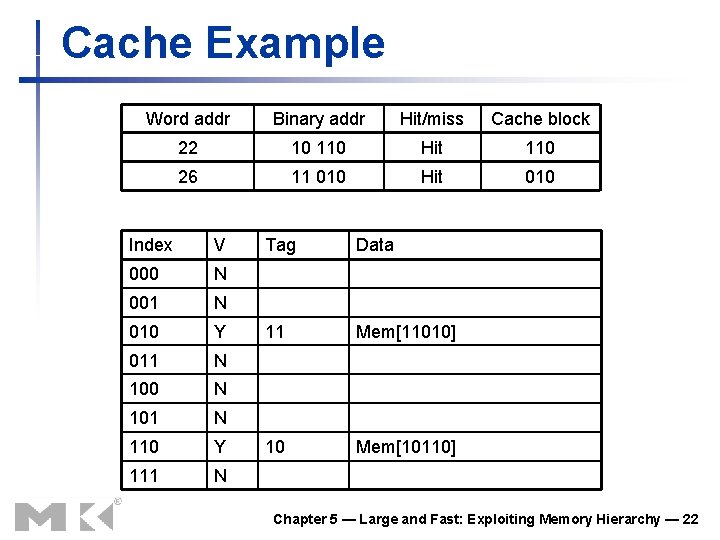

Cache Example

| Word addr | Binary addr | Hit/miss | Cache block |
|---|---|---|---|
| 22 | 10 110 | Hit | 110 |
| 26 | 11 010 | Hit | 010 |

| Index | V | Tag | Data |
|---|---|---|---|
| 000 | N | | |
| 001 | N | | |
| 010 | Y | 11 | Mem[11010] |
| 011 | N | | |
| 100 | N | | |
| 101 | N | | |
| 110 | Y | 10 | Mem[10110] |
| 111 | N | | |

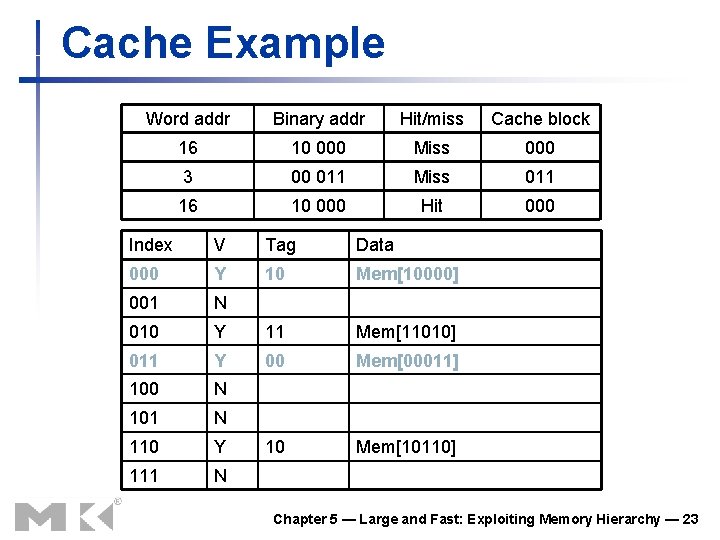

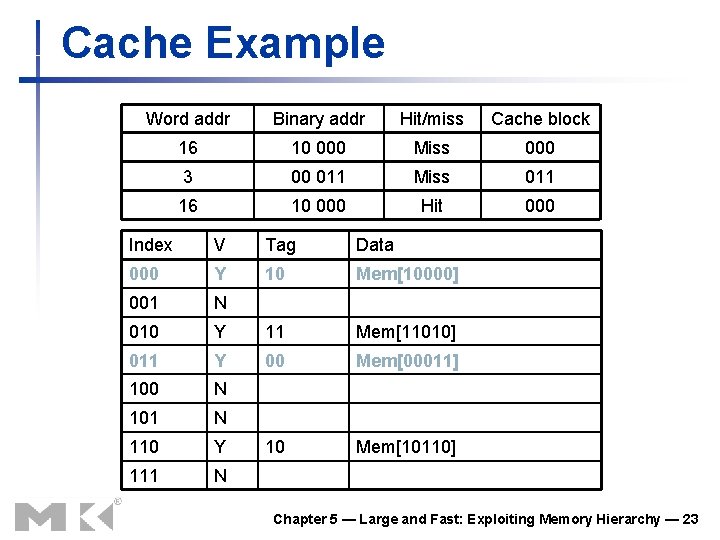

Cache Example

| Word addr | Binary addr | Hit/miss | Cache block |
|---|---|---|---|
| 16 | 10 000 | Miss | 000 |
| 3 | 00 011 | Miss | 011 |
| 16 | 10 000 | Hit | 000 |

| Index | V | Tag | Data |
|---|---|---|---|
| 000 | Y | 10 | Mem[10000] |
| 001 | N | | |
| 010 | Y | 11 | Mem[11010] |
| 011 | Y | 00 | Mem[00011] |
| 100 | N | | |
| 101 | N | | |
| 110 | Y | 10 | Mem[10110] |
| 111 | N | | |

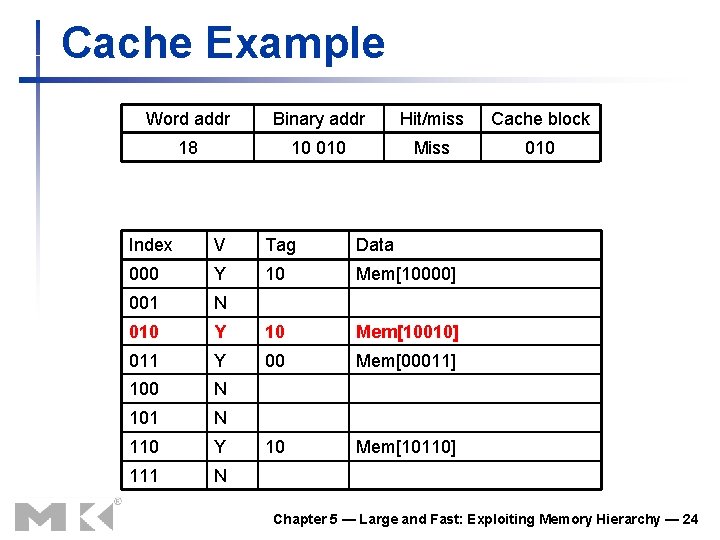

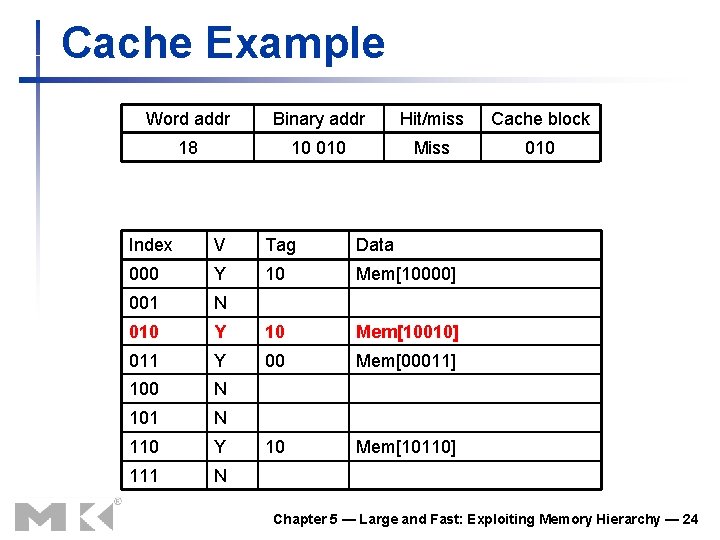

Cache Example

| Word addr | Binary addr | Hit/miss | Cache block |
|---|---|---|---|
| 18 | 10 010 | Miss | 010 |

| Index | V | Tag | Data |
|---|---|---|---|
| 000 | Y | 10 | Mem[10000] |
| 001 | N | | |
| 010 | Y | 10 | Mem[10010] |
| 011 | Y | 00 | Mem[00011] |
| 100 | N | | |
| 101 | N | | |
| 110 | Y | 10 | Mem[10110] |
| 111 | N | | |
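The whole access sequence of this example (22, 26, 22, 26, 16, 3, 16, 18) can be replayed with a small simulation. This is a sketch only: the function name `simulate_direct_mapped` is illustrative, and the cache stores just the tag per line, with `None` standing in for a valid bit of N.

```python
def simulate_direct_mapped(word_addresses, index_bits=3):
    """Replay word accesses on a direct-mapped cache with 1 word per block."""
    num_blocks = 1 << index_bits
    tags = [None] * num_blocks  # None models V = N; otherwise the stored tag
    results = []
    for address in word_addresses:
        index = address & (num_blocks - 1)  # low-order bits select the line
        tag = address >> index_bits         # remaining high-order bits
        if tags[index] == tag:
            results.append((address, index, "hit"))
        else:
            tags[index] = tag               # load the block, replacing any old one
            results.append((address, index, "miss"))
    return results, tags


if __name__ == "__main__":
    results, tags = simulate_direct_mapped([22, 26, 22, 26, 16, 3, 16, 18])
    for address, index, outcome in results:
        print(f"{address:2d} = {address:05b}  index {index:03b}  {outcome}")
    for index, tag in enumerate(tags):
        state = "N" if tag is None else f"Y tag {tag:02b}"
        print(f"{index:03b}  {state}")
```

The last access (18) misses even though line 010 is valid, because the stored tag 11 differs from the requested tag 10; the old block is replaced.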

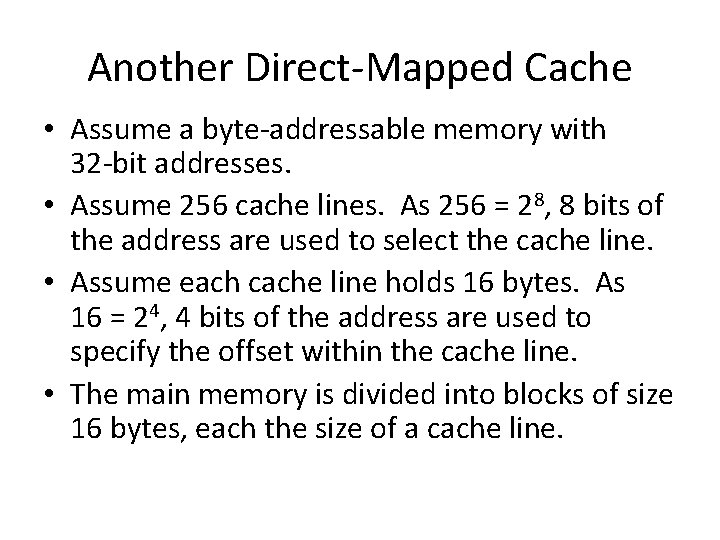

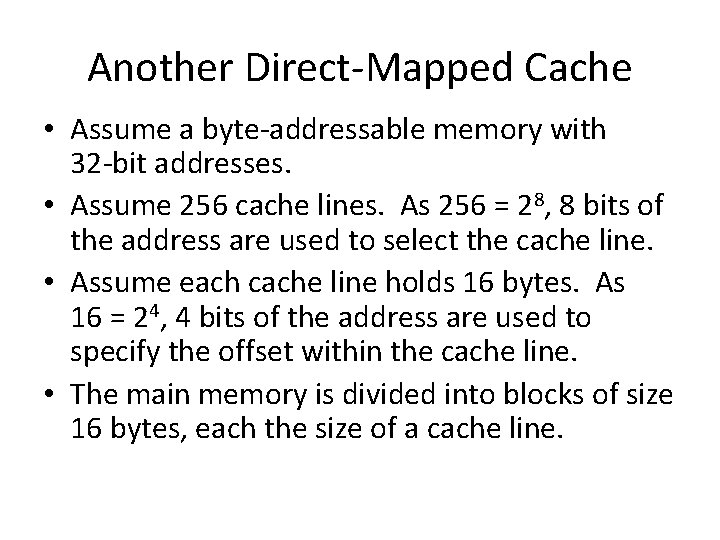

Another Direct-Mapped Cache

- Assume a byte-addressable memory with 32-bit addresses.
- Assume 256 cache lines. As $256 = 2^8$, 8 bits of the address are used to select the cache line.
- Assume each cache line holds 16 bytes. As $16 = 2^4$, 4 bits of the address are used to specify the offset within the cache line.
- The main memory is divided into blocks of 16 bytes, each the size of a cache line.

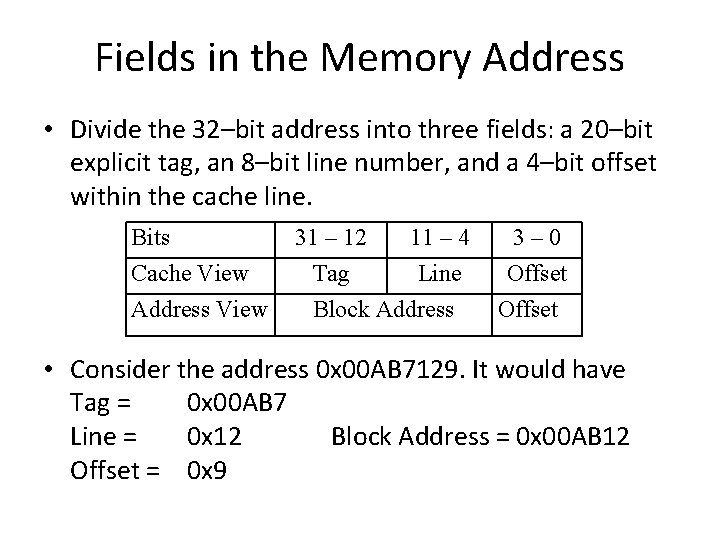

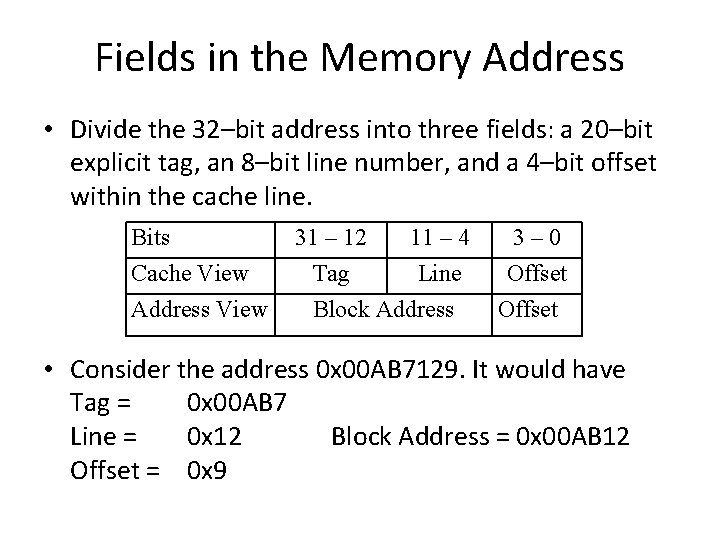

Fields in the Memory Address

- Divide the 32-bit address into three fields: a 20-bit explicit tag, an 8-bit line number, and a 4-bit offset within the cache line.

| Bits | 31 – 12 | 11 – 4 | 3 – 0 |
|---|---|---|---|
| Cache View | Tag | Line | Offset |
| Address View | Block Address | Block Address | Offset |

- Consider the address 0x00AB7129. It would have
  - Tag = 0x00AB7
  - Line = 0x12
  - Block Address = 0x00AB712
  - Offset = 0x9
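The field split can be reproduced with shifts and masks. The sketch below assumes the layout just described (8 line bits, 4 offset bits); the function name `split_address` is illustrative.

```python
def split_address(address, line_bits=8, offset_bits=4):
    """Split an address into (tag, line, offset, block_address)."""
    offset = address & ((1 << offset_bits) - 1)    # bits 3-0
    block_address = address >> offset_bits         # bits 31-4
    line = block_address & ((1 << line_bits) - 1)  # bits 11-4
    tag = block_address >> line_bits               # bits 31-12
    return tag, line, offset, block_address


if __name__ == "__main__":
    tag, line, offset, block = split_address(0x00AB7129)
    print(f"tag = 0x{tag:05X}, line = 0x{line:02X}, "
          f"block address = 0x{block:07X}, offset = 0x{offset:X}")
```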

Associative Caches

- In a direct-mapped cache, each memory block from the main memory can be mapped into exactly one location in the cache.
- Other cache organizations allow some flexibility in memory block placement.
- One option for flexible placement is called an associative cache, based on content addressable memory.

Associative Memory

- In associative memory, the contents of the memory are searched in one memory cycle.
- Consider an array of 256 entries, indexed from 0 to 255 (or 0x00 to 0xFF).
- Standard search strategies require either 128 tries on average (linear search of an unordered array) or about 8 tries (binary search of a sorted array).
- In content addressable memory, only one search is required.

Associative Search

- Associative memory would find the item in one search. Think of the control circuitry as “broadcasting” the data value to all memory cells at the same time. If one of the memory cells has the value, it raises a Boolean flag and the item is found.
- Some associative memories allow duplicate values and resolve multiple matches. Cache designs do not allow duplicate values.

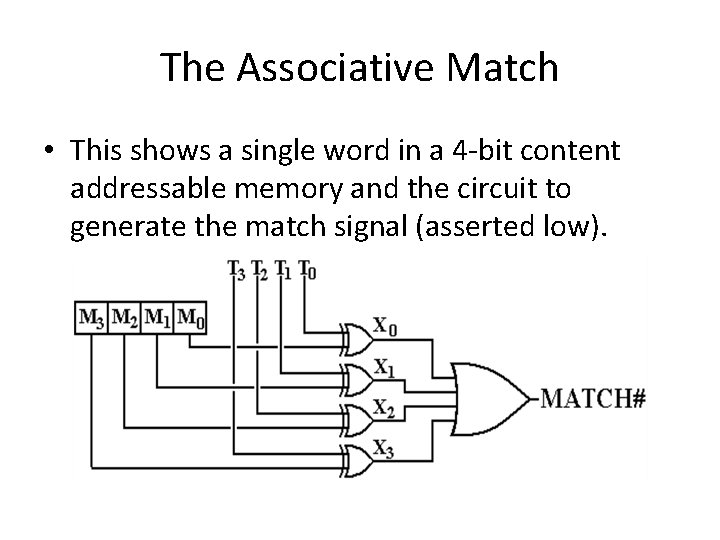

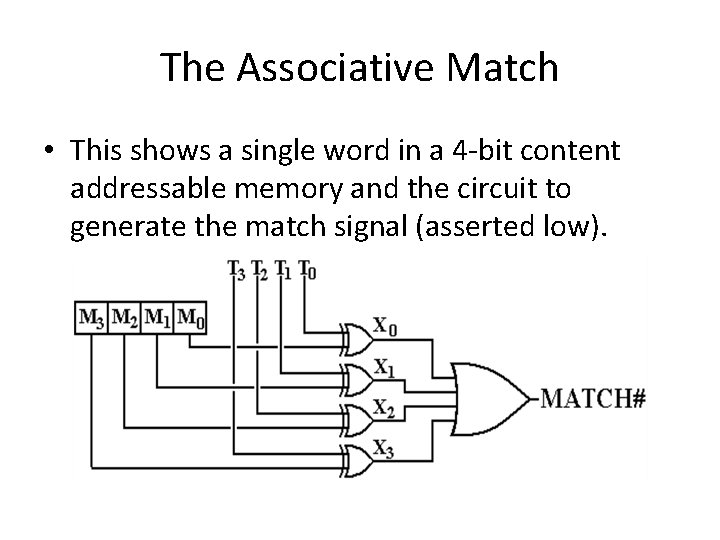

The Associative Match

- This shows a single word in a 4-bit content addressable memory and the circuit to generate the match signal (asserted low).

The Associative Cache

- Again, a 32-bit address with 16-byte cache lines (4 bits for the offset in the cache line).
- The number of cache lines is not important for address handling in associative caches.
- The address will divide as follows: 28 bits for the cache tag, and 4 bits for the offset in the cache line.
- The cache tags will be stored in associative memory connected to the cache.

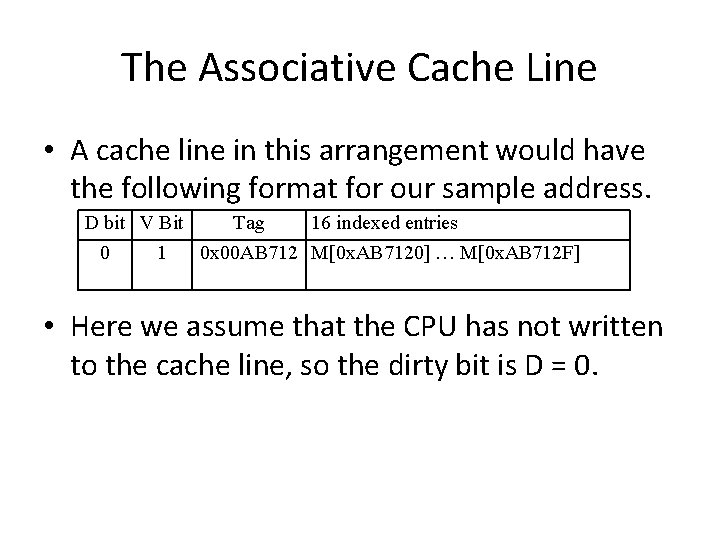

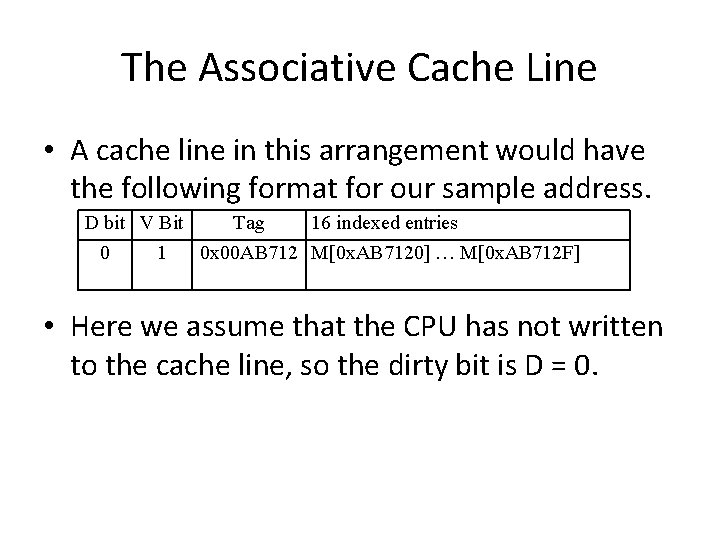

The Associative Cache Line

- A cache line in this arrangement would have the following format for our sample address.

| D bit | V bit | Tag | 16 indexed entries |
|---|---|---|---|
| 0 | 1 | 0x00AB712 | M[0xAB7120] … M[0xAB712F] |

- Here we assume that the CPU has not written to the cache line, so the dirty bit is D = 0.
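A lookup in such a cache can be sketched in software. As an assumption for illustration only, a Python dict keyed by the 28-bit tag stands in for the content addressable memory (real hardware compares all tags in parallel); the names `lookup` and `cache` and the string placeholders for memory contents are hypothetical.

```python
OFFSET_BITS = 4  # 16-byte cache lines


def lookup(cache, address):
    """Return the cached byte for address, or None on a miss."""
    tag = address >> OFFSET_BITS                 # upper 28 bits
    offset = address & ((1 << OFFSET_BITS) - 1)  # lower 4 bits
    line = cache.get(tag)                        # one "search" by content
    if line is None or not line["valid"]:
        return None
    return line["data"][offset]


# The sample line from the slide: D = 0, V = 1, tag 0x00AB712.
cache = {
    0x00AB712: {
        "dirty": False,
        "valid": True,
        "data": [f"M[0x{0xAB7120 + i:X}]" for i in range(16)],
    }
}

if __name__ == "__main__":
    print(lookup(cache, 0x00AB7129))
    print(lookup(cache, 0x00AB7139))
```

Note that no line number is extracted from the address: the tag alone identifies the block, wherever it sits in the cache.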

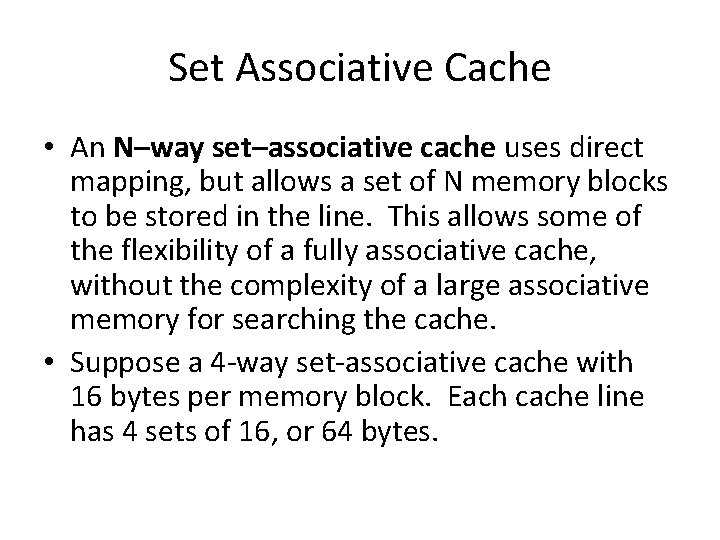

Set Associative Cache

- An N-way set-associative cache uses direct mapping, but allows a set of N memory blocks to be stored in the line. This allows some of the flexibility of a fully associative cache, without the complexity of a large associative memory for searching the cache.
- Suppose a 4-way set-associative cache with 16 bytes per memory block. Each cache line has 4 sets of 16, or 64 bytes.

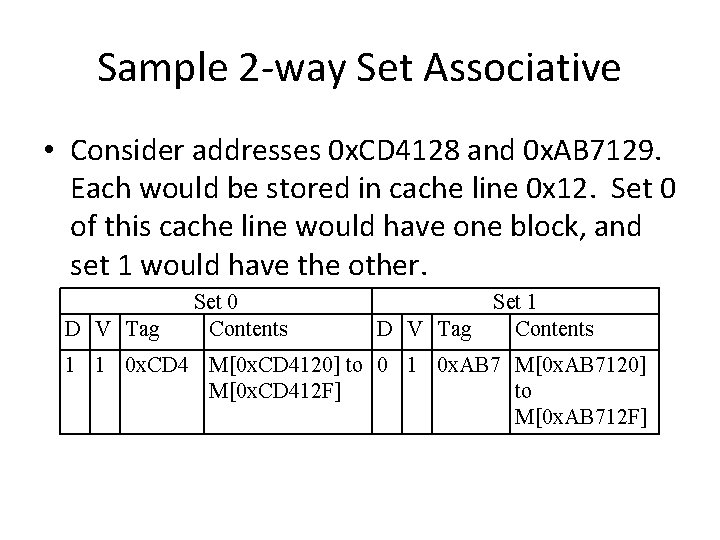

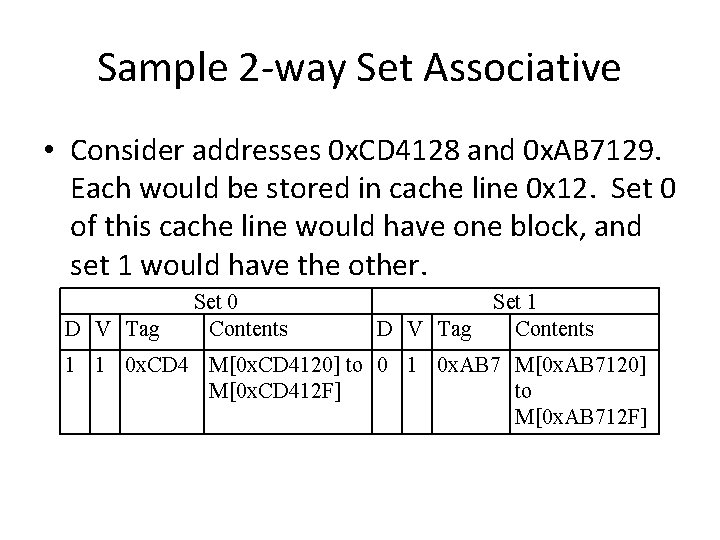

Sample 2-way Set Associative

- Consider addresses 0xCD4128 and 0xAB7129. Each would be stored in cache line 0x12. Set 0 of this cache line would have one block, and set 1 would have the other.

| | D | V | Tag | Contents |
|---|---|---|---|---|
| Set 0 | 1 | 1 | 0xCD4 | M[0xCD4120] to M[0xCD412F] |
| Set 1 | 0 | 1 | 0xAB7 | M[0xAB7120] to M[0xAB712F] |
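The placement of the two sample addresses can be sketched as follows, assuming the same 8 line bits and 4 offset bits as the direct-mapped example. The helper names `line_and_tag` and `place` are illustrative, and "set" is used in the slide's sense of one of the two slots within a cache line.

```python
LINE_BITS = 8    # 256 cache lines
OFFSET_BITS = 4  # 16-byte blocks
WAYS = 2         # two blocks may share one cache line


def line_and_tag(address):
    """Return (line number, tag) for an address."""
    block_address = address >> OFFSET_BITS
    line = block_address & ((1 << LINE_BITS) - 1)
    tag = block_address >> LINE_BITS
    return line, tag


def place(cache, address):
    """Store the block's tag in its line; return (line, slot used)."""
    line, tag = line_and_tag(address)
    slots = cache.setdefault(line, [])
    if tag not in slots:
        if len(slots) == WAYS:
            raise RuntimeError("line full; a replacement policy is needed here")
        slots.append(tag)
    return line, slots.index(tag)


cache = {}
for address in (0xCD4128, 0xAB7129):
    line, slot = place(cache, address)
    print(f"0x{address:06X} -> line 0x{line:02X}, set {slot}")
```

In a direct-mapped cache the second address would have evicted the first; here both blocks stay resident in line 0x12.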

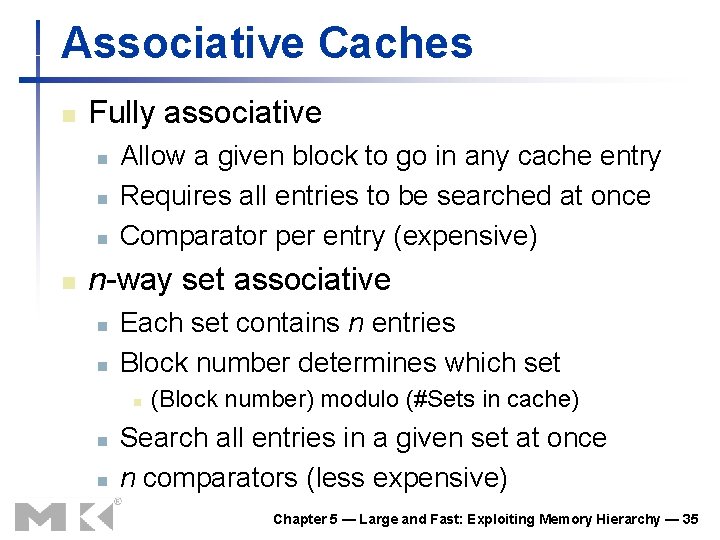

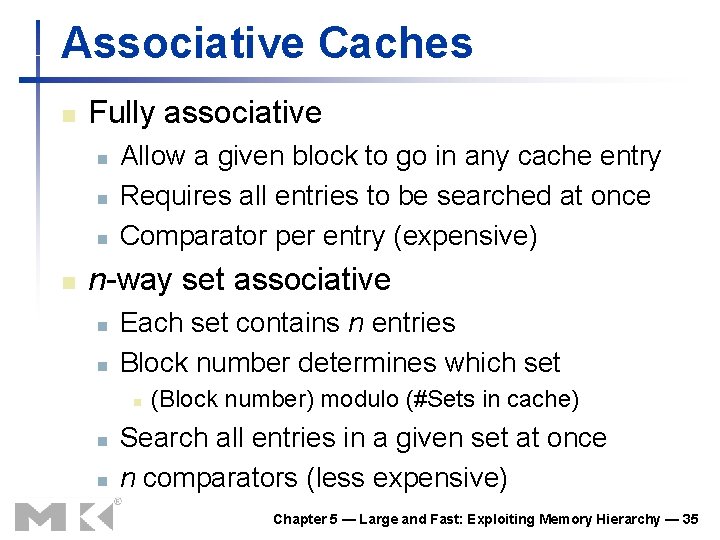

Associative Caches

- Fully associative
  - Allow a given block to go in any cache entry
  - Requires all entries to be searched at once
  - Comparator per entry (expensive)
- n-way set associative
  - Each set contains n entries
  - Block number determines which set
    - (Block number) modulo (#Sets in cache)
  - Search all entries in a given set at once
  - n comparators (less expensive)

Spectrum of Associativity

- For a cache with 8 entries

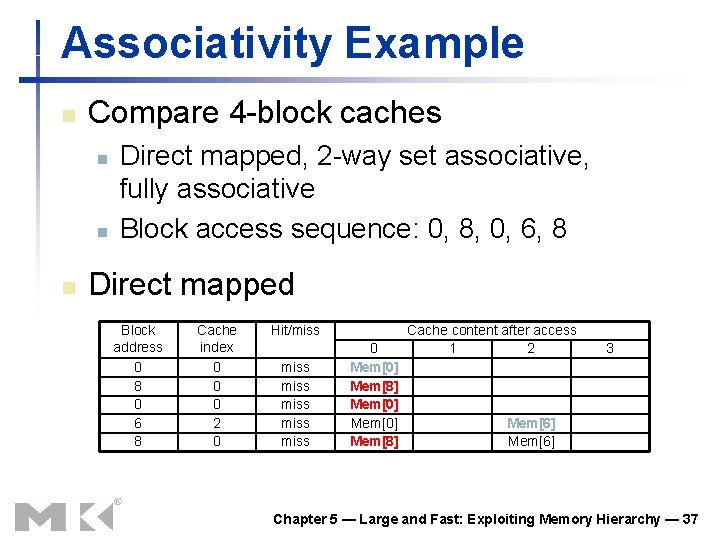

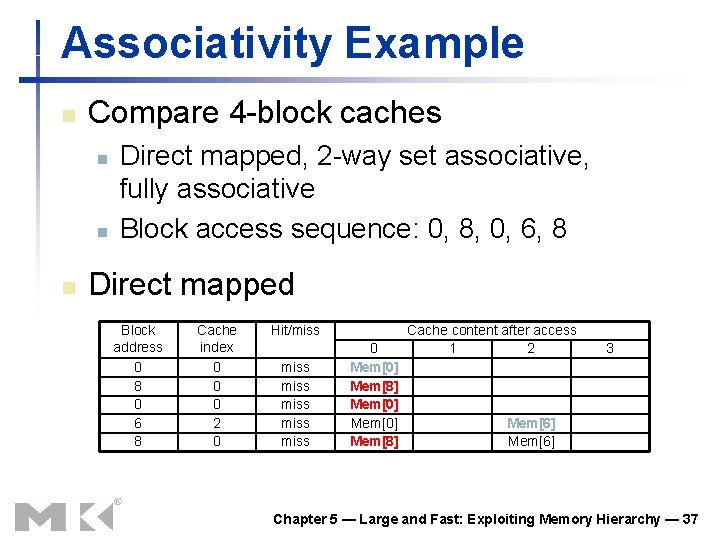

Associativity Example

- Compare 4-block caches
  - Direct mapped, 2-way set associative, fully associative
  - Block access sequence: 0, 8, 0, 6, 8
- Direct mapped

| Block address | Cache index | Hit/miss | Content of block 0 | Block 1 | Block 2 | Block 3 |
|---|---|---|---|---|---|---|
| 0 | 0 | miss | Mem[0] | | | |
| 8 | 0 | miss | Mem[8] | | | |
| 0 | 0 | miss | Mem[0] | | | |
| 6 | 2 | miss | Mem[0] | | Mem[6] | |
| 8 | 0 | miss | Mem[8] | | Mem[6] | |

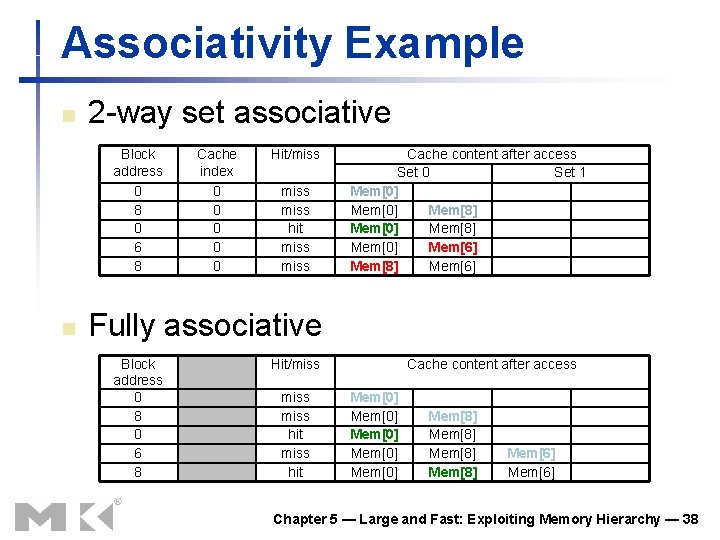

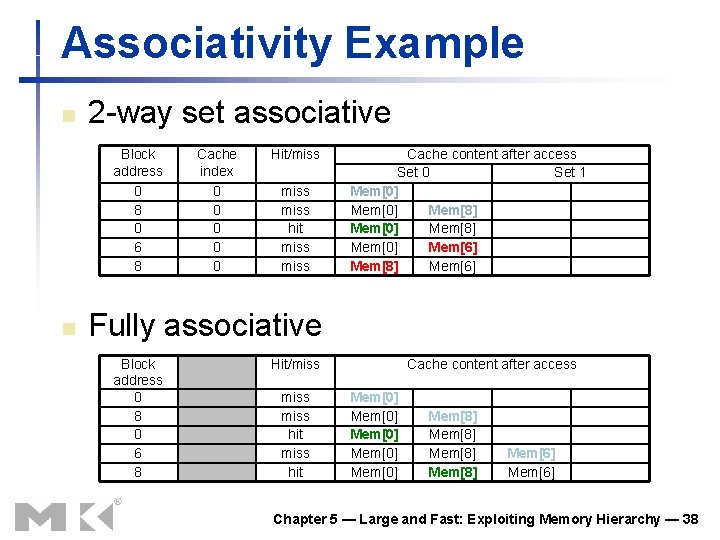

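Associativity Example

- 2-way set associative

| Block address | Cache index | Hit/miss | Set 0 content after access | Set 1 content after access |
|---|---|---|---|---|
| 0 | 0 | miss | Mem[0] | |
| 8 | 0 | miss | Mem[0], Mem[8] | |
| 0 | 0 | hit | Mem[0], Mem[8] | |
| 6 | 0 | miss | Mem[0], Mem[6] | |
| 8 | 0 | miss | Mem[8], Mem[6] | |

- Fully associative

| Block address | Hit/miss | Cache content after access |
|---|---|---|
| 0 | miss | Mem[0] |
| 8 | miss | Mem[0], Mem[8] |
| 0 | hit | Mem[0], Mem[8] |
| 6 | miss | Mem[0], Mem[8], Mem[6] |
| 8 | hit | Mem[0], Mem[8], Mem[6] |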
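The three organizations can be compared with one small simulation, since direct mapped is the 1-way case and fully associative is the case with a single set. This sketch assumes least-recently-used (LRU) replacement, which the slides do not name here but which is consistent with the contents shown; the function name `simulate` is illustrative.

```python
def simulate(block_addresses, num_blocks, ways):
    """Return the hit/miss outcome of each access (LRU replacement assumed)."""
    num_sets = num_blocks // ways
    # Each set is a list ordered from least to most recently used.
    sets = [[] for _ in range(num_sets)]
    outcomes = []
    for block in block_addresses:
        entries = sets[block % num_sets]  # (block number) modulo (#sets)
        if block in entries:
            entries.remove(block)
            outcomes.append("hit")
        else:
            if len(entries) == ways:
                entries.pop(0)            # evict the least recently used block
            outcomes.append("miss")
        entries.append(block)             # block is now the most recently used
    return outcomes


if __name__ == "__main__":
    sequence = [0, 8, 0, 6, 8]
    for name, ways in (("direct mapped", 1), ("2-way", 2), ("fully associative", 4)):
        outcomes = simulate(sequence, num_blocks=4, ways=ways)
        print(f"{name:18s} {outcomes}  misses = {outcomes.count('miss')}")
```

For this sequence the miss count falls from 5 (direct mapped) to 4 (2-way) to 3 (fully associative).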

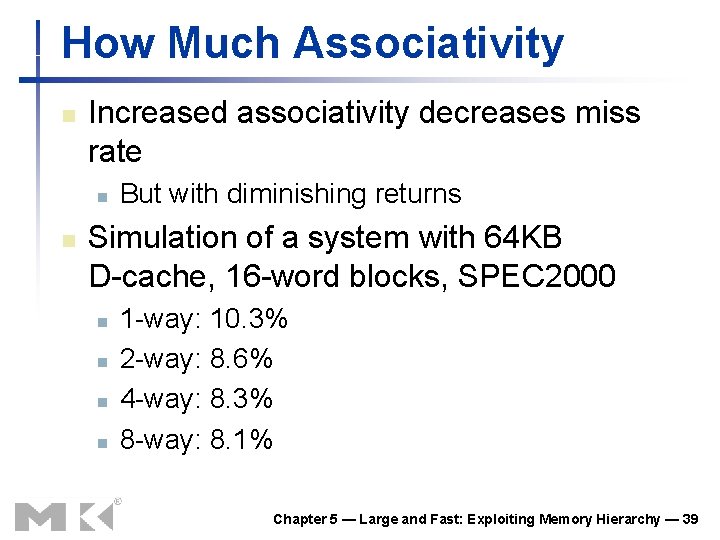

How Much Associativity

- Increased associativity decreases miss rate
  - But with diminishing returns
- Simulation of a system with 64 KB D-cache, 16-word blocks, SPEC2000
  - 1-way: 10.3%
  - 2-way: 8.6%
  - 4-way: 8.3%
  - 8-way: 8.1%

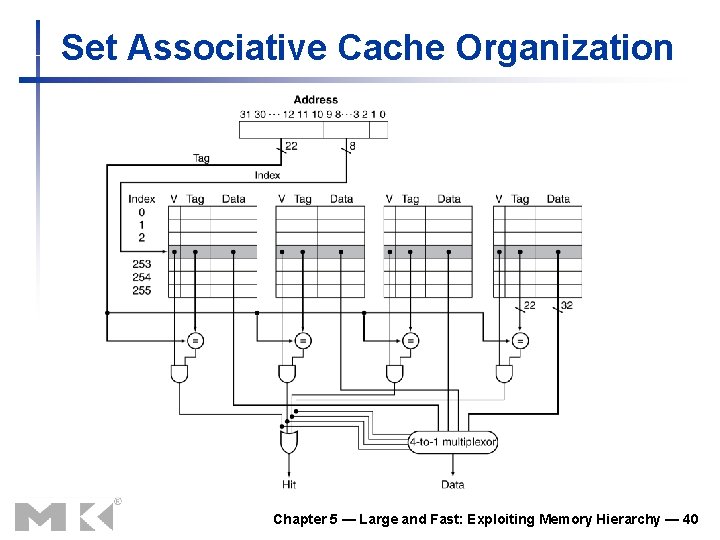

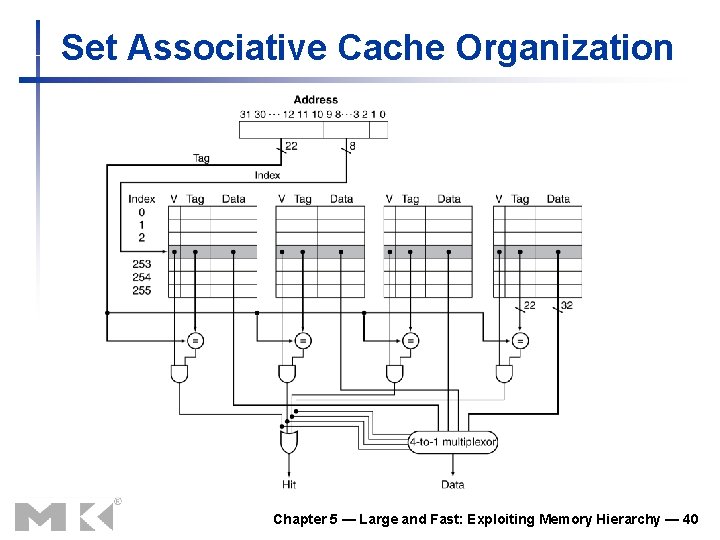

Set Associative Cache Organization