The LOCUS Distributed Operating System
Bruce Walker, Gerald Popek, Robert English, Charles Kline and Greg Thiel
University of California at Los Angeles, 1983
Presented by: Quan (Cary) Zhang

LOCUS is not just a paper…

History of Distributed Systems

Roughly speaking, we can divide the history of modern computing into the following eras:

- 1970s: Timesharing (1 computer with many users)
- 1980s: Personal computing (1 computer per user)
- 1990s: Parallel computing (many computers per user)

Andrew S. Tanenbaum (in the Amoeba project)

LOCUS Distributed Operating System

- Network transparency
  - Looks like one system, though each site runs a copy of the kernel
- Distributed file system with name transparency and replication
- Remote process creation and remote IPC
- Can span heterogeneous CPUs

Overview

- Distributed File System
  - Flexible and automatic replication
  - Nested transactions
- Remote Processes
- Recovery from conflicts
- Dynamic Reconfiguration

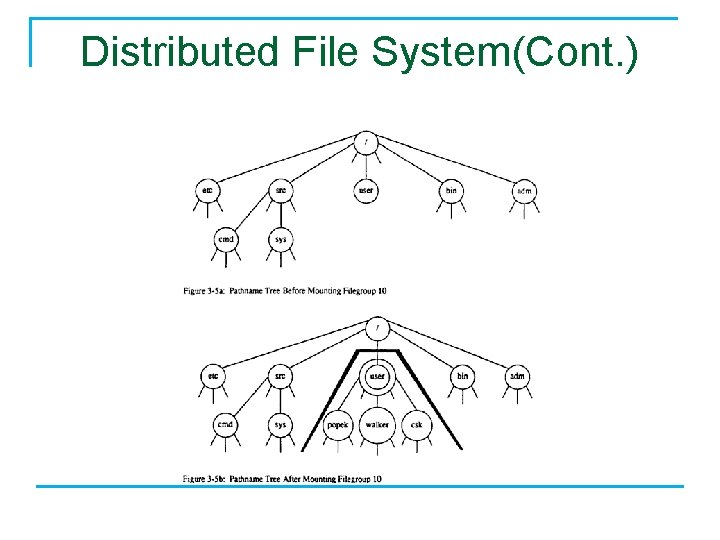

Distributed File System

- Naming similar to UNIX: a single directory tree
  - Transparent naming: a name does not map to a location
  - Transparent replication
  - Filegroups are glued into the directory tree

Distributed File System (cont.)

Distributed File System

- Replication
  - Availability, yet it complicates updates
    - Directory entries are stored "nearby" the sites that store the child files
  - Performance
    - High levels of the naming tree should be highly replicated, and vice versa
  - Essential for the environment set-up files (Q)

Mechanism Support for Replication

- Each physical container stores a subset of a filegroup's files
- Every copy of a file resolves to the same <filegroup number, inode>
- Version vectors handle the complicated update cases
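
To make the version vector bullet concrete, here is a minimal sketch of how two copies of a file could be compared. The function names and the dictionary representation are assumptions for illustration, not the LOCUS kernel's data structures.

```python
from typing import Dict

# Assumed representation: site name -> number of updates committed at that site.
VersionVector = Dict[str, int]


def record_update(vv: VersionVector, site: str) -> VersionVector:
    """Return a new vector with the counter of `site` advanced by one."""
    new = dict(vv)
    new[site] = new.get(site, 0) + 1
    return new


def compare(a: VersionVector, b: VersionVector) -> str:
    """Return 'equal', 'a_newer', 'b_newer', or 'conflict'."""
    sites = set(a) | set(b)
    a_ahead = any(a.get(s, 0) > b.get(s, 0) for s in sites)
    b_ahead = any(b.get(s, 0) > a.get(s, 0) for s in sites)
    if a_ahead and b_ahead:
        return "conflict"  # each copy saw updates the other did not
    if a_ahead:
        return "a_newer"
    if b_ahead:
        return "b_newer"
    return "equal"
```

If two partitions each update their own copy, neither vector dominates the other and `compare` reports a conflict, which is the case the recovery slides deal with.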

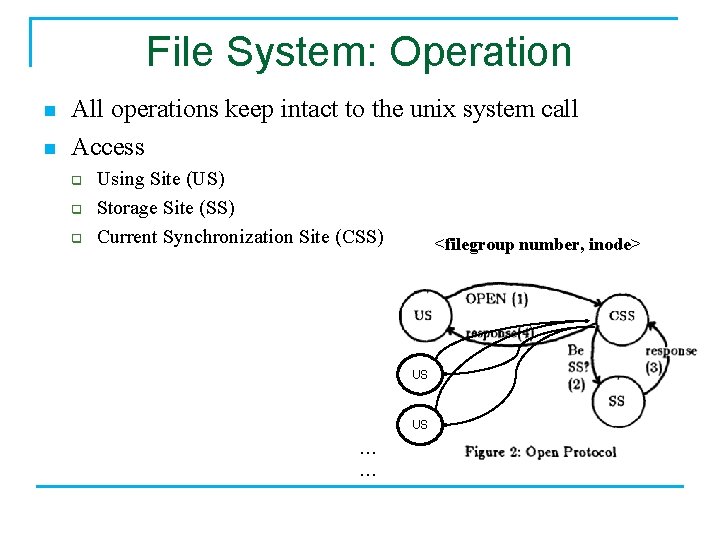

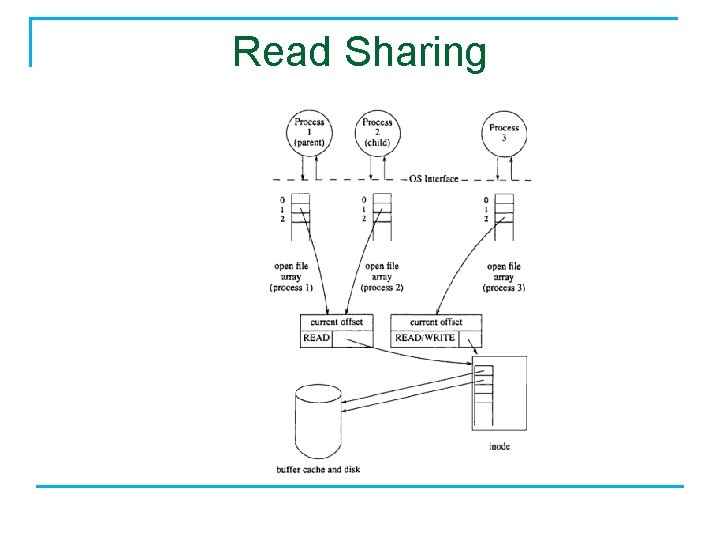

File System: Operation

- All operations keep the UNIX system call interface intact
- Access involves three logical sites
  - Using Site (US)
  - Storage Site (SS)
  - Current Synchronization Site (CSS)

(Diagram labels: <filegroup number, inode>, US, US, …)
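
The interplay of the three sites on an open can be sketched as follows. This is a simplified illustration under stated assumptions: the `CSS` class, its `copies` table, and the use of a single integer version per copy (instead of a version vector) are all hypothetical, and the "prefer the US itself when it holds a current copy" policy is an assumed simplification.

```python
from dataclasses import dataclass, field
from typing import Dict, Tuple

FileId = Tuple[int, int]  # <filegroup number, inode>


@dataclass
class CSS:
    # Hypothetical table: file id -> {storage site name: version held there}
    copies: Dict[FileId, Dict[str, int]] = field(default_factory=dict)

    def open(self, file_id: FileId, using_site: str) -> str:
        """Choose a storage site (SS) that holds the latest version."""
        versions = self.copies[file_id]
        latest = max(versions.values())
        candidates = sorted(s for s, v in versions.items() if v == latest)
        # Assumed policy: serve locally when the US stores a current copy.
        return using_site if using_site in candidates else candidates[0]
```

For example, with copies at `siteA` (version 3), `siteB` (version 3) and `siteC` (version 2), an open from `siteB` is served by `siteB`, while an open from `siteC` is directed to `siteA` because the local copy is stale.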

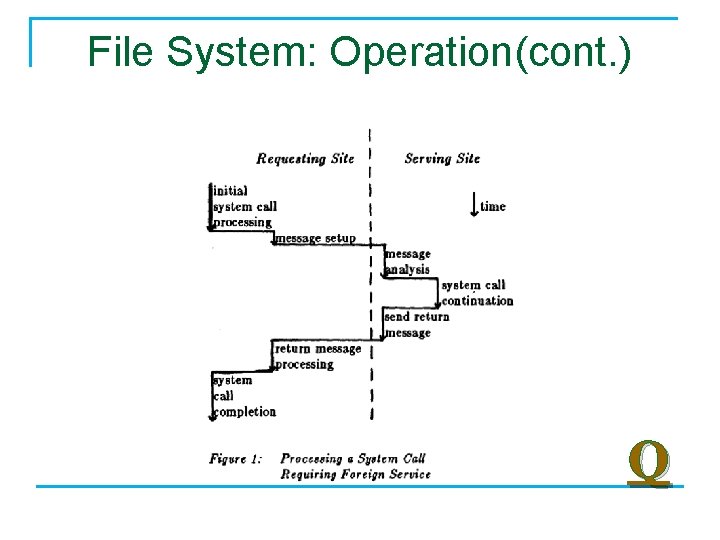

File System: Operation (cont.) (Q)

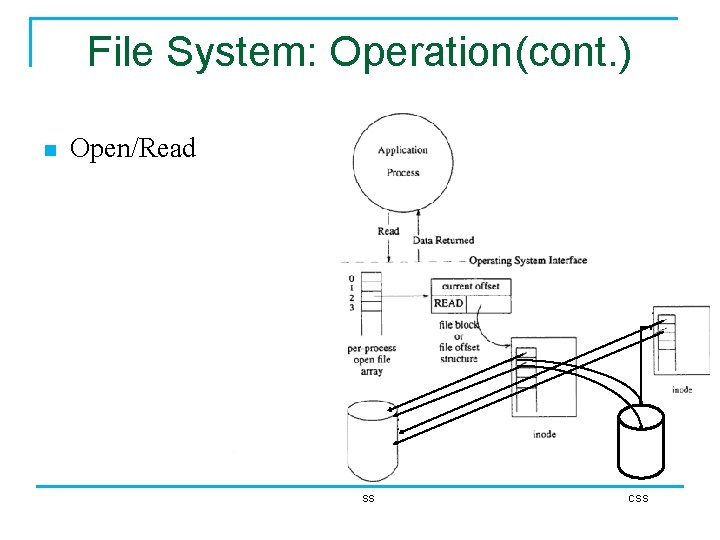

File System: Operation (cont.)

- Open/Read

(Diagram labels: SS, CSS)

Read Sharing

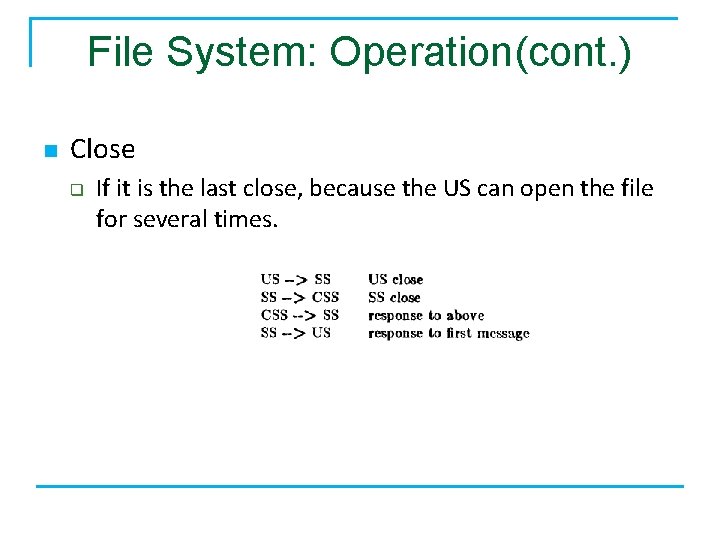

File System: Operation (cont.)

- Close
  - The close only needs to be propagated if it is the last close at the US, because the US can have the same file open several times.
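
The "last close" rule amounts to reference counting at the using site. A minimal sketch, assuming a hypothetical `UsingSite` class whose `sent` list stands in for messages that would go out over the network:

```python
from collections import Counter
from typing import List, Tuple

FileId = Tuple[int, int]  # <filegroup number, inode>


class UsingSite:
    def __init__(self) -> None:
        self.open_counts: Counter = Counter()       # file id -> local open count
        self.sent: List[Tuple[str, FileId]] = []    # stand-in for network messages

    def open(self, file_id: FileId) -> None:
        self.open_counts[file_id] += 1

    def close(self, file_id: FileId) -> None:
        if self.open_counts[file_id] == 0:
            raise ValueError("file is not open at this site")
        self.open_counts[file_id] -= 1
        if self.open_counts[file_id] == 0:
            # Only the last local close is reported to the storage site.
            self.sent.append(("close", file_id))
```

Earlier closes only touch local state; the remote sites hear about the file once no local opener remains.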

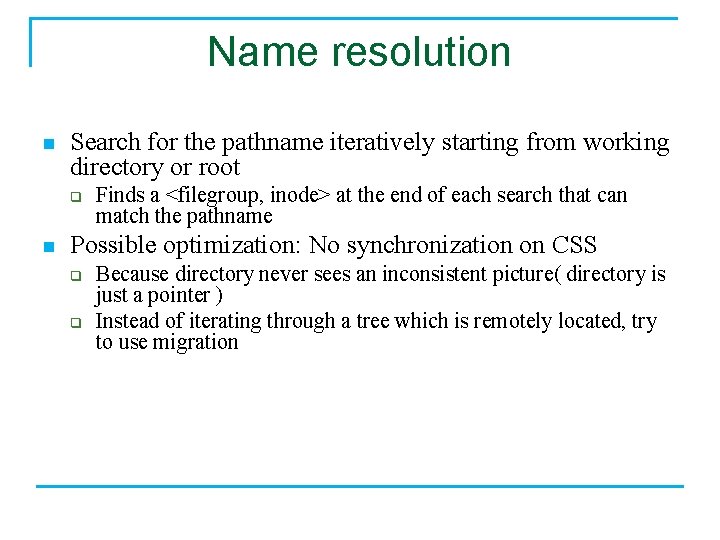

Name Resolution

- Search for the pathname iteratively, starting from the working directory or the root
  - Each search step ends with a <filegroup, inode> that matches the pathname component
- Possible optimization: no synchronization at the CSS
  - Because a directory never presents an inconsistent picture (a directory entry is just a pointer)
  - Instead of iterating through a remotely located tree, try to use migration
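
The iterative search can be sketched as a loop over path components, each step yielding a <filegroup, inode> pair. The `DIRECTORIES` table and its contents are made-up illustration data, and mount points are simplified to a directory entry that points straight into another filegroup.

```python
from typing import Dict, Tuple

FileId = Tuple[int, int]  # <filegroup number, inode>

# Hypothetical directory contents: directory file id -> {entry name: file id}
DIRECTORIES: Dict[FileId, Dict[str, FileId]] = {
    (1, 2): {"usr": (1, 10), "etc": (1, 11)},
    (1, 10): {"bin": (2, 2)},   # entry crosses into filegroup 2, invisibly to the caller
    (2, 2): {"ls": (2, 57)},
}
ROOT: FileId = (1, 2)


def resolve(path: str, cwd: FileId = ROOT) -> FileId:
    """Walk the path one component at a time and return the final file id."""
    current = ROOT if path.startswith("/") else cwd
    for component in path.split("/"):
        if component in ("", "."):
            continue
        entries = DIRECTORIES.get(current)
        if entries is None:
            raise NotADirectoryError(component)
        if component not in entries:
            raise FileNotFoundError(component)
        current = entries[component]
    return current
```

Nothing in the pathname says where a file is stored; the caller only ever sees the resulting <filegroup, inode> pair.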

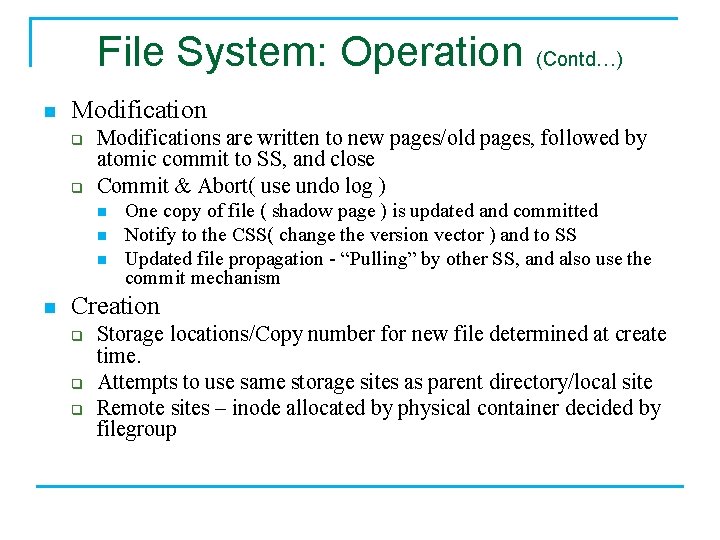

File System: Operation (cont.)

- Modification
  - Modifications are written to new pages/old pages, followed by an atomic commit at the SS, and close
  - Commit and abort (use undo log)
    - One copy of the file (shadow pages) is updated and committed
    - The CSS is notified (it changes the version vector), as are the other SSs
  - Updated file propagation: "pulling" by the other SSs, which also use the commit mechanism
- Creation
  - Storage locations and the number of copies for a new file are determined at create time
  - Attempts to use the same storage sites as the parent directory, or the local site
  - Remote sites: the inode is allocated from the physical container decided by the filegroup
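
The shadow-page idea behind commit and abort can be illustrated with a small in-memory model. This is a sketch under assumptions: the `ShadowFile` class and its fields are hypothetical, and real disk blocks are replaced by a dictionary.

```python
from typing import Dict, List


class ShadowFile:
    def __init__(self, pages: List[str]) -> None:
        self.blocks: Dict[int, str] = dict(enumerate(pages))  # block id -> data
        self.inode: List[int] = list(range(len(pages)))       # committed page table
        self.working: List[int] = list(self.inode)            # uncommitted page table
        self.next_block = len(pages)

    def write(self, page_no: int, data: str) -> None:
        block = self.working[page_no]
        if block == self.inode[page_no]:
            # Never overwrite a committed block: allocate a fresh shadow block.
            block = self.next_block
            self.next_block += 1
            self.working[page_no] = block
        self.blocks[block] = data

    def commit(self) -> None:
        old = set(self.inode)
        self.inode = list(self.working)      # the single atomic switch
        for block in old - set(self.inode):
            del self.blocks[block]           # free the replaced blocks

    def abort(self) -> None:
        for block in set(self.working) - set(self.inode):
            del self.blocks[block]           # discard the shadow blocks
        self.working = list(self.inode)

    def read_committed(self) -> List[str]:
        return [self.blocks[b] for b in self.inode]
```

Until `commit` replaces the page table, readers of the committed file see only the old pages, so an abort simply throws the shadow blocks away.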

File System: Operation (cont.)

- Machine-dependent files
  - Different versions of the same file (selected by process context)
- Remote devices and IPC pipes (distributed memory)

Remote Processes

- Supports remote fork and exec (a special module)
  - Copies the entire process memory to the destination machine: can be slow
- The run system call performs the equivalent of a local fork and a remote exec
- Shared data is protected using token passing (e.g. file descriptors, handles)
- Child and parent are notified upon failures
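
Token passing for shared data can be pictured with a file offset shared by a parent and a child on different sites. The sketch below is a single-process simulation with hypothetical names; only the site holding the token may use the offset, and the offset value travels with the token.

```python
class SharedOffset:
    """A file offset shared across sites and guarded by a single token."""

    def __init__(self, holder: str) -> None:
        self.holder = holder   # site that currently holds the token
        self.offset = 0
        self.transfers = 0     # how many times the token moved between sites

    def read(self, site: str, nbytes: int) -> int:
        """Advance the shared offset on behalf of `site`; return the start position."""
        if site != self.holder:
            # The requesting site must obtain the token (and the current offset) first.
            self.holder = site
            self.transfers += 1
        start = self.offset
        self.offset += nbytes
        return start
```

Consecutive reads from the same site need no token traffic; a transfer happens only when the other site touches the descriptor.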

Recovery

- "Partitioning will occur"
- Strict synchronization within a partition (independently/transaction)
- Merging:
  - Directories and mailboxes are easy
    - Issue: a file is both deleted and updated
    - Solution: propagate the changes or the delete, whichever is later
    - Name conflict: rename and email
  - Automatic: CSS
  - Else, pass to a filetype-specific recovery program
  - Else, notify the owner by mail message
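
The merge rules on this slide can be sketched as two small functions. Both are illustrations under assumptions: the `EntryState` record, the use of comparable timestamps across partitions, and the string results are hypothetical, and the delete-versus-update rule is the slide's "whichever is later" summary.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass
class EntryState:
    """One directory entry as seen in one partition (timestamps assumed comparable)."""
    updated_at: Optional[float] = None
    deleted_at: Optional[float] = None


def merge_entry(a: EntryState, b: EntryState) -> str:
    """Return 'keep' or 'delete': the later of update and delete wins."""
    updates = [t for t in (a.updated_at, b.updated_at) if t is not None]
    deletes = [t for t in (a.deleted_at, b.deleted_at) if t is not None]
    if not deletes:
        return "keep"
    if not updates:
        return "delete"
    return "keep" if max(updates) > max(deletes) else "delete"


def reconcile(file_type: str, recovery_programs: Dict[str, object]) -> str:
    """Pick who resolves a conflicting file, in the order given on the slide."""
    if file_type in ("directory", "mailbox"):
        return "automatic"
    if file_type in recovery_programs:
        return "filetype-specific program"
    return "mail owner"
```

The cascade only reaches the owner's mailbox when neither the system nor a registered recovery program knows how to merge the file type.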

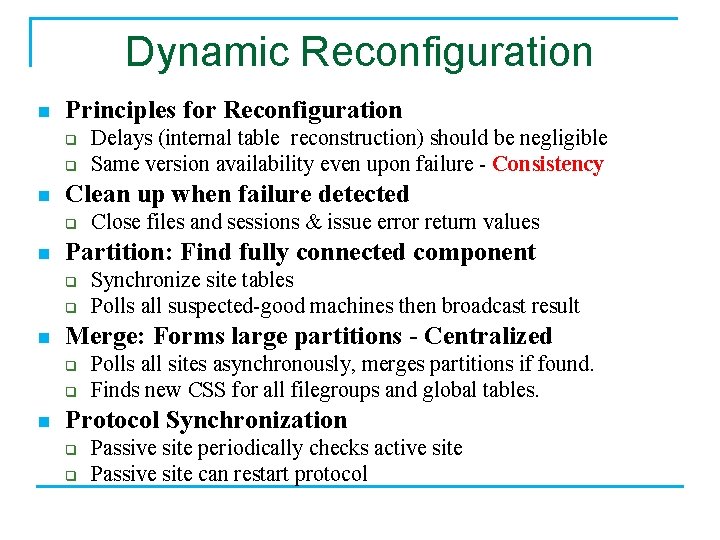

Dynamic Reconfiguration

- Principles for reconfiguration
  - Delays (internal table reconstruction) should be negligible
  - Same version availability even upon failure: consistency
- Clean up when a failure is detected
  - Close files and sessions and issue error return values
- Partition: find a fully connected component
  - Synchronize site tables
  - Polls all suspected-good machines, then broadcasts the result
- Merge: forms large partitions (centralized)
  - Polls all sites asynchronously and merges partitions if found
  - Finds a new CSS for all filegroups and global tables
- Protocol synchronization
  - Passive site periodically checks the active site
  - Passive site can restart the protocol
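
The partition step ("find a fully connected component") can be sketched as repeated intersection of site tables. This is a simplified, centralized illustration rather than the paper's message protocol; the `reachable` map is hypothetical input, and communication is assumed to be symmetric.

```python
from typing import Dict, Set


def find_partition(initiator: str, reachable: Dict[str, Set[str]]) -> Set[str]:
    """Poll suspected-good sites until every remaining member can reach all others."""
    partition = set(reachable[initiator]) | {initiator}
    polled = {initiator}
    while partition - polled:
        site = min(partition - polled)            # deterministic polling order
        polled.add(site)
        # Keep only the sites that the polled site can also reach.
        partition &= reachable[site] | {site}
    return partition
```

When the loop ends, every surviving site has been polled and has confirmed that it reaches all other survivors, so the result is fully connected; the initiator would then broadcast it to the members.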

Experiment

- Bla, bla, yet with no essential result

Conclusion

- Transparent distributed system behavior with high performance "is feasible"
- "Performance transparency"
  - Except remote fork/exec
- Not much experience with replicated storage
- The protocol for membership in a partition works with high performance

My Perspective on LOCUS

Not done:

- Parallel computing: there was no thread concept at the time
- Process/thread migration: load balancing
- Security: SSH
- Distributed file system vs. file system service in a distributed environment

Discussion

- Why not simply store the kernel, shells, etc. on "local storage space"?
  I think you are right: not every resource or file should be distributed across the network of computers, e.g. FITCI

- Can the RPC techniques we discussed earlier be implemented in this framework and help?
  Probably: that RPC paper appeared in 1984, whereas this paper was finished in 1983.

- The location transparency required/implemented in this work may lead to an imbalance in performance cost. Is this a problem? Can a centralized solution help?
  I think it is possible to add policies that achieve load balance, e.g., assigning the physical containers of each filegroup to sites accordingly. Yet there is a real challenge in obtaining information about the topology of the network.

- Compare and contrast "The Multikernel", which we discussed at the last Systems Research group meeting, with LOCUS. Could we say LOCUS is a very early stage of a multikernel approach?
  I think distributed systems such as Amoeba were already heading toward a multikernel design, so the multikernel concept is not new. Nonetheless, the multikernel we discussed last week can apply to a single machine with different execution units (ISAs).

- If the node responsible for a file is very busy and unable to handle network requests, then what happens to my request?
  I don't think this can happen, because the replication never stops.

- It seems that distributed filesystems don't tend to be very popular in the real world. Instead, networked organizations that need to make files available in multiple locations tend to concentrate all storage on one server (or bank of servers), using something like NFS. Why has distributed storage, like in this paper, not become more popular?
  Because of the scalability issue.

- As the number of nodes and partitioning events increases, I think that the paper's approach of manual conflict resolution won't scale!

- What do they mean by the 'guess' provided for the incore inode number? Is this sent by the US or the CSS, and what happens if the guess is incorrect? And why should guessing be necessary at all? Shouldn't the CSS know exactly which logical file it needs to access from the SS?

- Is it possible to store a single file over multiple nodes, not in a replicated form, but in a striped form? That would mean that block A of a file is on PC 1, while block B of the file is on PC 2.

- Slides: 32