
The Largest Compaq Cluster
Bringing Tera-Scale Computing to a Wide Audience at LLNL

Mark Seager, LLNL ASCI TeraScale Platforms PI

October 6, 1999

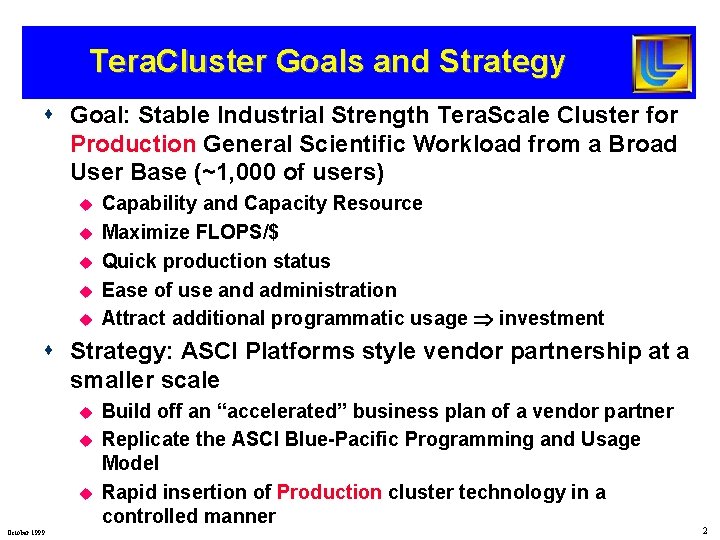

TeraCluster Goals and Strategy

- Goal: Stable, industrial-strength TeraScale cluster for a production general scientific workload from a broad user base (~1,000s of users)
  - Capability and capacity resource
  - Maximize FLOPS/$
  - Quick production status
  - Ease of use and administration
  - Attract additional programmatic usage investment
- Strategy: ASCI Platforms style vendor partnership at a smaller scale
  - Build off an “accelerated” business plan of a vendor partner
  - Replicate the ASCI Blue-Pacific programming and usage model
  - Rapid insertion of production cluster technology in a controlled manner

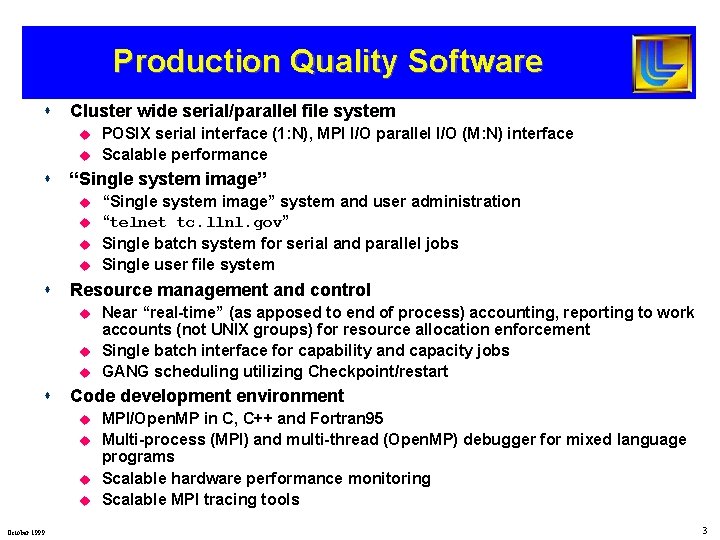

Production Quality Software

- Cluster-wide serial/parallel file system
  - POSIX serial interface (1:N), MPI I/O parallel I/O (M:N) interface
  - Scalable performance
- “Single system image”
  - “Single system image” system and user administration
  - “telnet tc.llnl.gov”
  - Single batch system for serial and parallel jobs
  - Single user file system
- Resource management and control
  - Near “real-time” (as opposed to end of process) accounting, reporting to work accounts (not UNIX groups) for resource allocation enforcement
  - Single batch interface for capability and capacity jobs
  - GANG scheduling utilizing checkpoint/restart
- Code development environment
  - MPI/OpenMP in C, C++ and Fortran 95
  - Multi-process (MPI) and multi-thread (OpenMP) debugger for mixed-language programs
  - Scalable hardware performance monitoring
  - Scalable MPI tracing tools

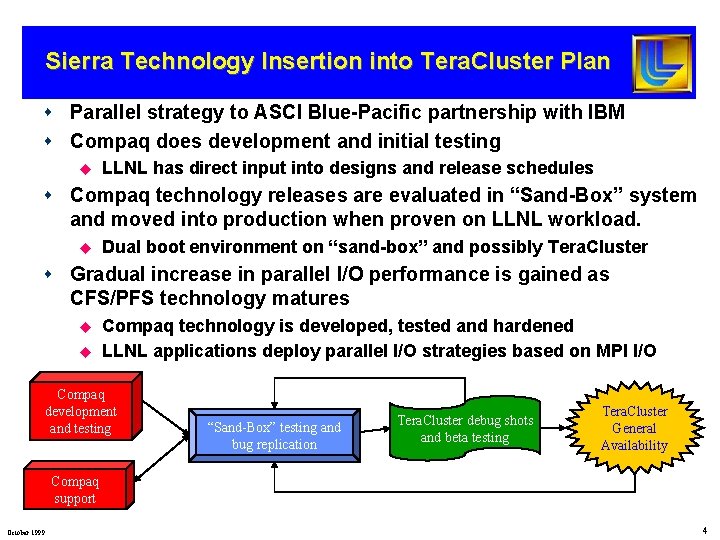

Sierra Technology Insertion into TeraCluster Plan

- Parallel strategy to ASCI Blue-Pacific partnership with IBM
- Compaq does development and initial testing
  - LLNL has direct input into designs and release schedules
- Compaq technology releases are evaluated in the “Sand-Box” system and moved into production when proven on the LLNL workload
  - Dual boot environment on “Sand-Box” and possibly TeraCluster
- Gradual increase in parallel I/O performance is gained as CFS/PFS technology matures
  - Compaq technology is developed, tested and hardened
  - LLNL applications deploy parallel I/O strategies based on MPI I/O

Diagram stages: Compaq development and testing; “Sand-Box” testing and bug replication; TeraCluster debug shots and beta testing; TeraCluster General Availability; Compaq support.

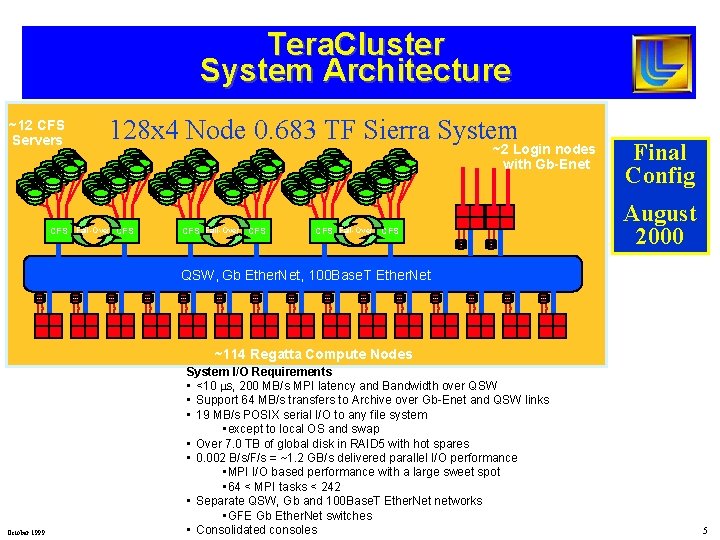

TeraCluster System Architecture

Diagram labels: 128 x 4 node, 0.683 TF Sierra system (final configuration August 2000); ~114 Regatta compute nodes; ~12 CFS servers with CFS fail-over; ~2 login nodes with Gb-Enet; QSW, Gb EtherNet and 100BaseT EtherNet.

System I/O Requirements

- <10 µs latency and 200 MB/s bandwidth for MPI over QSW
- Support 64 MB/s transfers to Archive over Gb-Enet and QSW links
- 19 MB/s POSIX serial I/O to any file system
  - except to local OS and swap
- Over 7.0 TB of global disk in RAID 5 with hot spares
- 0.002 B/s/F/s = ~1.2 GB/s delivered parallel I/O performance
- MPI I/O based performance with a large sweet spot
  - 64 < MPI tasks < 242
- Separate QSW, Gb and 100BaseT EtherNet networks
- GFE Gb EtherNet switches
- Consolidated consoles

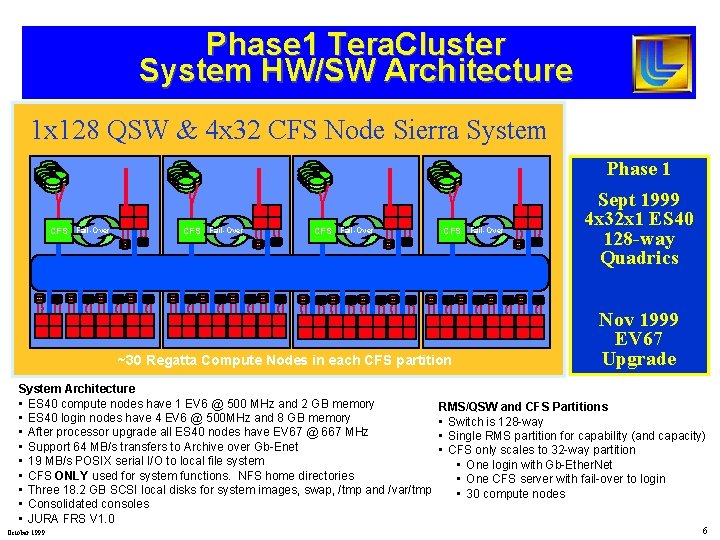

Phase 1 TeraCluster System HW/SW Architecture

Diagram labels: 1 x 128 QSW and 4 x 32 CFS node Sierra system, Phase 1; CFS fail-over; ~30 Regatta compute nodes in each CFS partition.

System Architecture

- ES40 compute nodes have 1 EV6 @ 500 MHz and 2 GB memory
- ES40 login nodes have 4 EV6 @ 500 MHz and 8 GB memory
- After processor upgrade, all ES40 nodes have EV67 @ 667 MHz
- Support 64 MB/s transfers to Archive over Gb-Enet
- 19 MB/s POSIX serial I/O to local file system
- CFS ONLY used for system functions; NFS home directories
- Three 18.2 GB SCSI local disks for system images, swap, /tmp and /var/tmp
- Consolidated consoles
- JURA FRS V1.0

Timeline labels: Sept 1999 (1 ES40 CFS server, 4 x 32 x 1, 128-way Quadrics); Nov 1999 (EV67 upgrade).

RMS/QSW and CFS Partitions

- Switch is 128-way
- Single RMS partition for capability (and capacity)
- CFS only scales to 32-way partition
- One login with Gb-EtherNet
- One CFS server with fail-over to login
- 30 compute nodes
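The Phase 1 partition figures can be checked with simple arithmetic. The sketch below assumes that the "one login, one CFS server, 30 compute nodes" list describes a single 32-way CFS partition, which the slide suggests but does not state outright. All names are illustrative.

```python
# Figures quoted on the slide.
SWITCH_PORTS = 128             # Quadrics switch is 128-way
CFS_PARTITION_SIZE = 32        # CFS only scales to a 32-way partition

# Assumed per-partition composition (one reading of the slide's list).
LOGIN_PER_PARTITION = 1
CFS_SERVERS_PER_PARTITION = 1
COMPUTE_PER_PARTITION = 30


def partition_summary():
    """Derive partition and node counts from the quoted figures."""
    partitions = SWITCH_PORTS // CFS_PARTITION_SIZE
    nodes_per_partition = (
        LOGIN_PER_PARTITION + CFS_SERVERS_PER_PARTITION + COMPUTE_PER_PARTITION
    )
    return {
        "cfs_partitions": partitions,
        "nodes_per_partition": nodes_per_partition,
        "total_compute_nodes": partitions * COMPUTE_PER_PARTITION,
        "total_nodes": partitions * nodes_per_partition,
    }


if __name__ == "__main__":
    for key, value in partition_summary().items():
        print(f"{key}: {value}")
```

Under that reading, the per-partition node count comes out equal to the 32-way CFS limit, and the four partitions together fill the 128-way switch.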

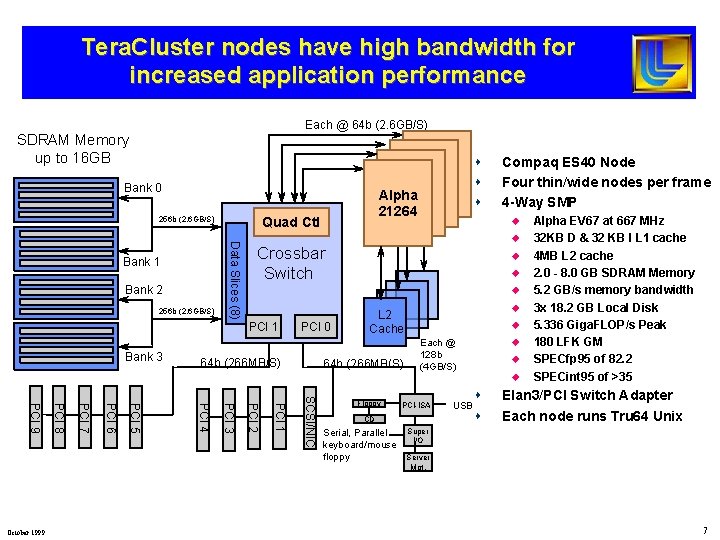

TeraCluster nodes have high bandwidth for increased application performance

Compaq ES40 Node

Diagram labels: Alpha 21264 processors with L2 cache; crossbar switch; quad control; data slices (8); SDRAM memory up to 16 GB in banks 0 to 3; PCI 0 and PCI 1 buses; PCI slots 1 to 9; SCSI/NIC; PCI-ISA bridge; Super I/O (floppy, CD, serial, parallel, keyboard/mouse, USB); server management. Bus annotations: 64 b (2.6 GB/s) each; 128 b (4 GB/s) each; 256 b (2.6 GB/s); 64 b PCI (266 MB/s).

- Four thin/wide nodes per frame
- 4-way SMP
- Alpha EV67 at 667 MHz
- 32 KB D and 32 KB I L1 cache
- 4 MB L2 cache
- 2.0 to 8.0 GB SDRAM memory
- 5.2 GB/s memory bandwidth
- 3 x 18.2 GB local disk
- 5.336 GigaFLOP/s peak
- 180 LFK GM
- SPECfp95 of 82.2
- SPECint95 of >35
- Elan3/PCI switch adapter
- Each node runs Tru64 Unix
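The peak figures quoted for the ES40 node can be cross-checked with a few lines of arithmetic. This sketch assumes two floating-point operations per cycle per EV67 processor; the slides do not state that value, but it reproduces the quoted 5.336 GigaFLOP/s. The 128-node count and the 0.683 TF total come from the system architecture slide. Constant and function names are illustrative.

```python
CLOCK_HZ = 667e6            # Alpha EV67 at 667 MHz (from the slide)
FLOPS_PER_CYCLE = 2         # ASSUMPTION: not stated on the slide
CPUS_PER_NODE = 4           # 4-way SMP (from the slide)
NODES = 128                 # 128 x 4 node system (from the architecture slide)
MEMORY_BW_BYTES = 5.2e9     # 5.2 GB/s memory bandwidth per node (from the slide)


def node_peak_flops():
    """Peak floating-point rate of one 4-way ES40 node, in FLOP/s."""
    return CLOCK_HZ * FLOPS_PER_CYCLE * CPUS_PER_NODE


def system_peak_flops():
    """Peak floating-point rate of the full 128-node system, in FLOP/s."""
    return node_peak_flops() * NODES


def bytes_per_flop():
    """Memory bandwidth available per peak FLOP on one node."""
    return MEMORY_BW_BYTES / node_peak_flops()


if __name__ == "__main__":
    print(f"node peak:   {node_peak_flops() / 1e9:.3f} GFLOP/s")
    print(f"system peak: {system_peak_flops() / 1e12:.3f} TFLOP/s")
    print(f"memory B/F:  {bytes_per_flop():.2f}")
```

With these inputs the node figure matches the quoted 5.336 GigaFLOP/s and the system figure rounds to the quoted 0.683 TF.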

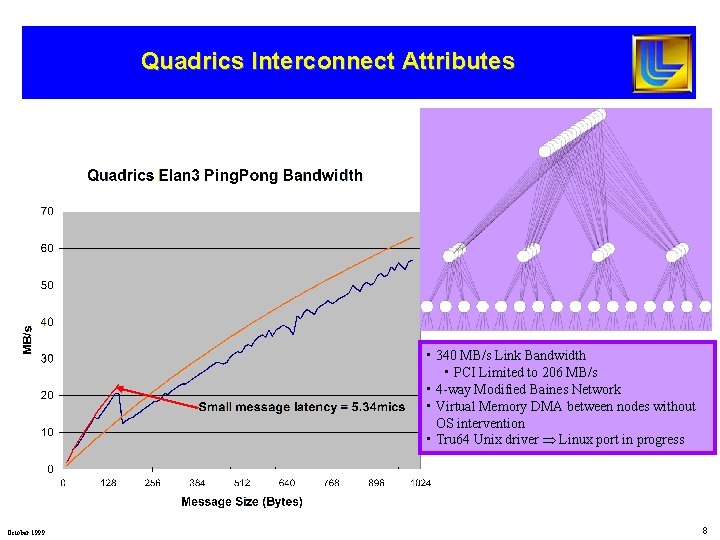

Quadrics Interconnect Attributes

- 340 MB/s link bandwidth
- PCI limited to 206 MB/s
- 4-way modified Baines network
- Virtual memory DMA between nodes without OS intervention
- Tru64 Unix driver; Linux port in progress

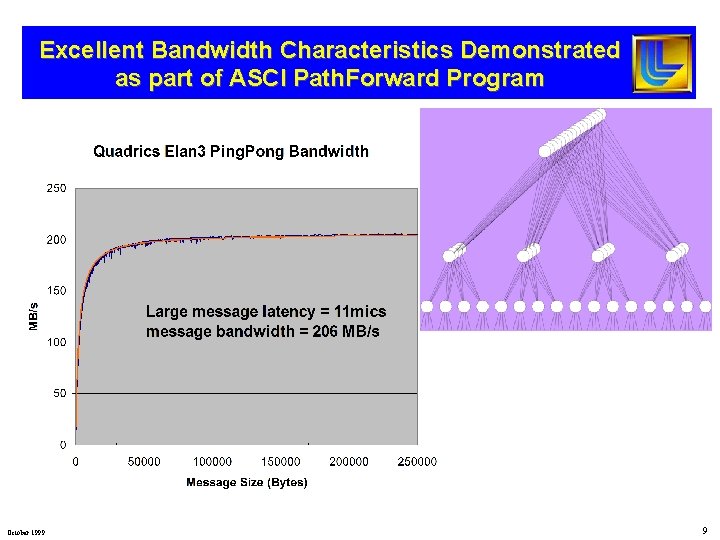

Excellent Bandwidth Characteristics

Demonstrated as part of the ASCI PathForward Program

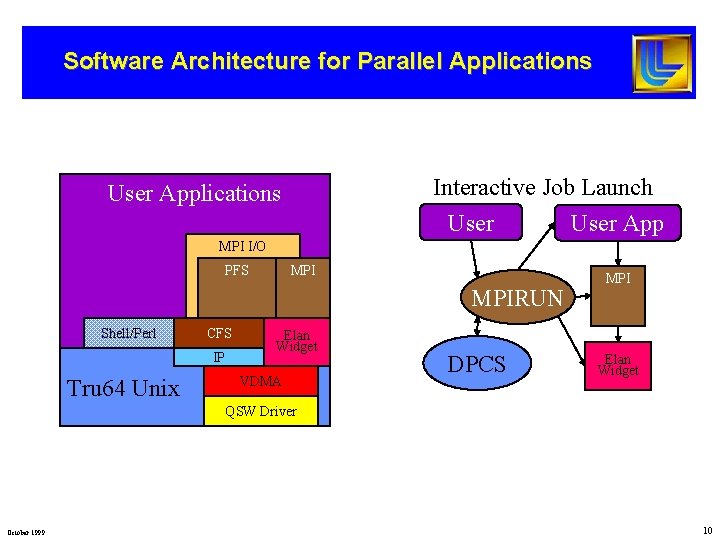

Software Architecture for Parallel Applications

Diagram labels: User Applications; Interactive Job Launch; MPI I/O; PFS; CFS; MPIRUN; Shell/Perl; DPCS; MPI; IP; Tru64 Unix; Elan Widget; VDMA; QSW Driver.

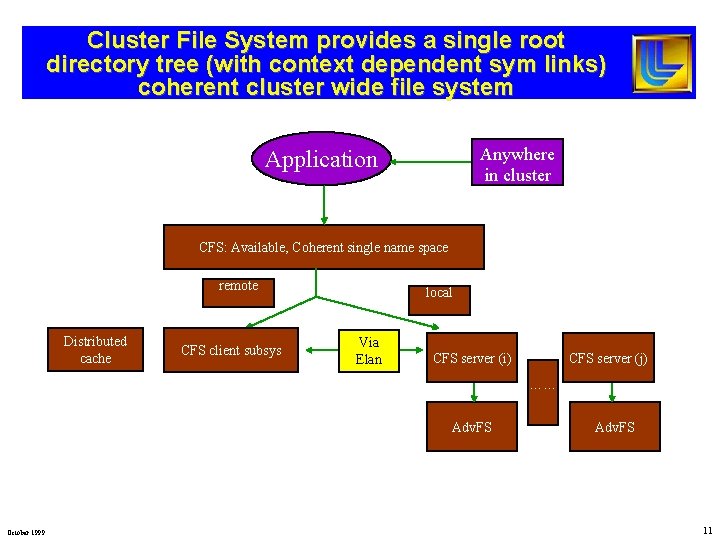

Cluster File System provides a coherent, cluster-wide file system with a single root directory tree (with context-dependent symlinks)

Diagram labels: application, anywhere in cluster; CFS: available, coherent single name space; CFS client subsystem with distributed cache; local and remote access, remote via Elan; CFS server (i), CFS server (j), ...; AdvFS under each server.

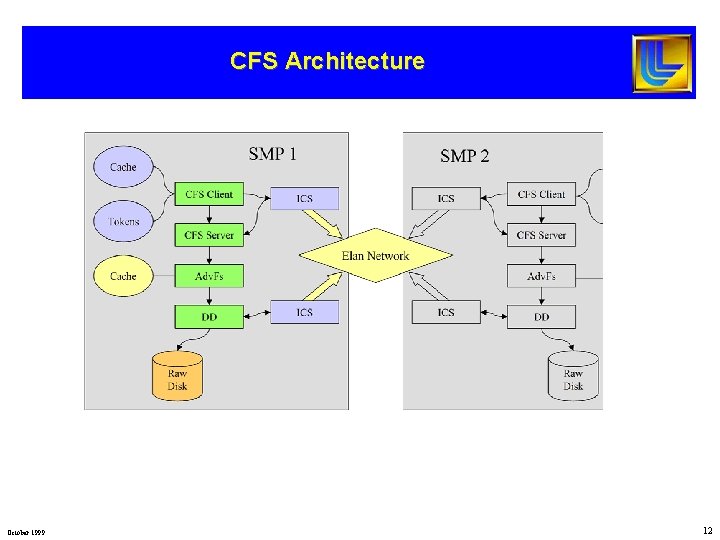

CFS Architecture

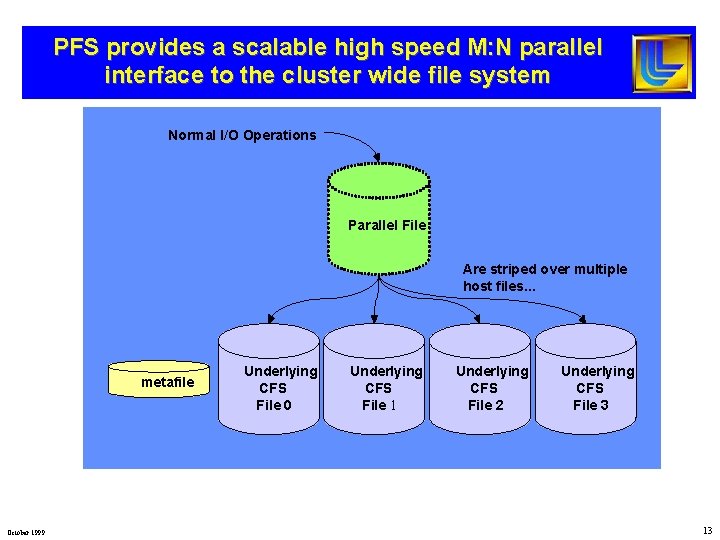

PFS provides a scalable, high-speed M:N parallel interface to the cluster-wide file system

Normal I/O operations on a parallel file are striped over multiple host files.

Diagram labels: parallel file; metafile; underlying CFS file 0, underlying CFS file 1, underlying CFS file 2, underlying CFS file 3.
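Striping a parallel file over several host files amounts to mapping each logical byte offset to a component file and an offset within it. The sketch below uses generic round-robin striping as an illustration. The slides do not describe the PFS layout algorithm or stripe size, so the function, its parameters and the 64 KiB stripe size in the usage lines are all assumptions.

```python
from typing import NamedTuple


class StripeLocation(NamedTuple):
    component: int   # index of the underlying file (0 .. num_components - 1)
    offset: int      # byte offset inside that underlying file


def locate(logical_offset: int, stripe_size: int, num_components: int) -> StripeLocation:
    """Map a logical offset in the parallel file to (component file, offset).

    Generic round-robin striping: stripe k of the logical file lives in
    component k % num_components, as that component's (k // num_components)-th
    stripe. This is NOT taken from the PFS implementation.
    """
    if logical_offset < 0 or stripe_size <= 0 or num_components <= 0:
        raise ValueError("offset must be >= 0; stripe size and component count must be > 0")
    stripe_index, within_stripe = divmod(logical_offset, stripe_size)
    component = stripe_index % num_components
    local_stripe = stripe_index // num_components
    return StripeLocation(component, local_stripe * stripe_size + within_stripe)


if __name__ == "__main__":
    STRIPE = 64 * 1024   # hypothetical stripe size
    FILES = 4            # underlying CFS files 0..3, as in the diagram
    for off in (0, STRIPE, 4 * STRIPE + 10):
        print(off, locate(off, STRIPE, FILES))
```

Consecutive stripes land on different underlying files, so a large sequential transfer is spread across all of them.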

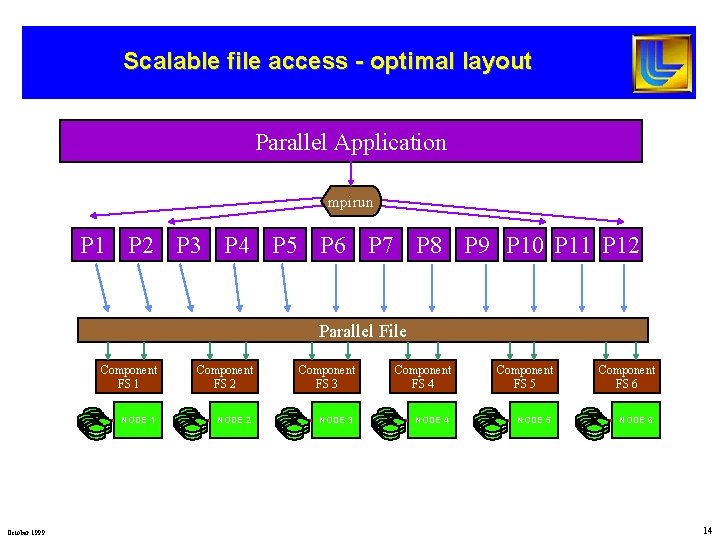

Scalable file access - optimal layout

Diagram labels: parallel application launched with mpirun, processes P1 to P12; parallel file; Component FS 1 to Component FS 6; NODE 1 to NODE 6.
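One way to read the diagram is that the 12 processes are spread evenly over the 6 component file systems. The sketch below checks that balance for a simple round-robin assignment. The assignment rule is an assumption for illustration, since the slide does not say how processes map to components, and the function names are hypothetical.

```python
from collections import Counter


def component_for_rank(rank: int, num_components: int) -> int:
    """ASSUMED rule: process `rank` targets component rank % num_components."""
    return rank % num_components


def load_per_component(num_ranks: int, num_components: int) -> Counter:
    """Count how many processes target each component file system."""
    return Counter(component_for_rank(r, num_components) for r in range(num_ranks))


if __name__ == "__main__":
    load = load_per_component(num_ranks=12, num_components=6)
    for component in sorted(load):
        print(f"component FS {component + 1}: {load[component]} processes")
```

With 12 processes and 6 components, every component serves the same number of processes, so none becomes a hot spot under this rule.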

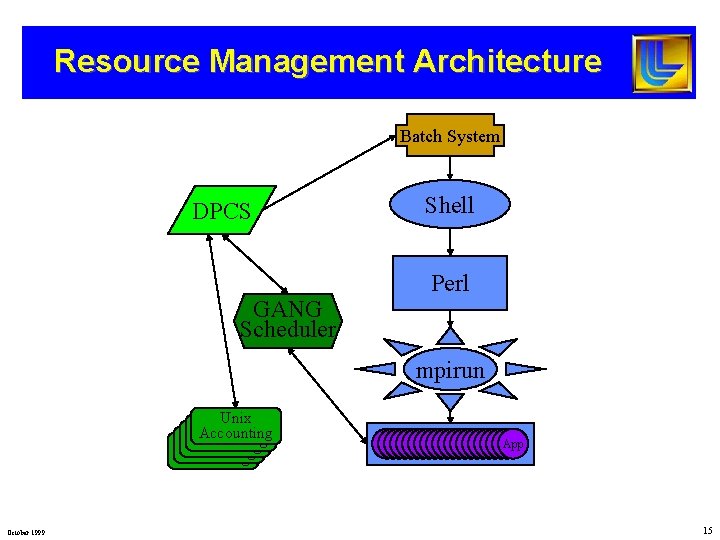

Resource Management Architecture

Diagram labels: Batch System; DPCS; GANG Scheduler; Shell; Perl; mpirun; Unix Accounting (two boxes); App (six boxes).

TeraCluster Delivered September 29, 1999

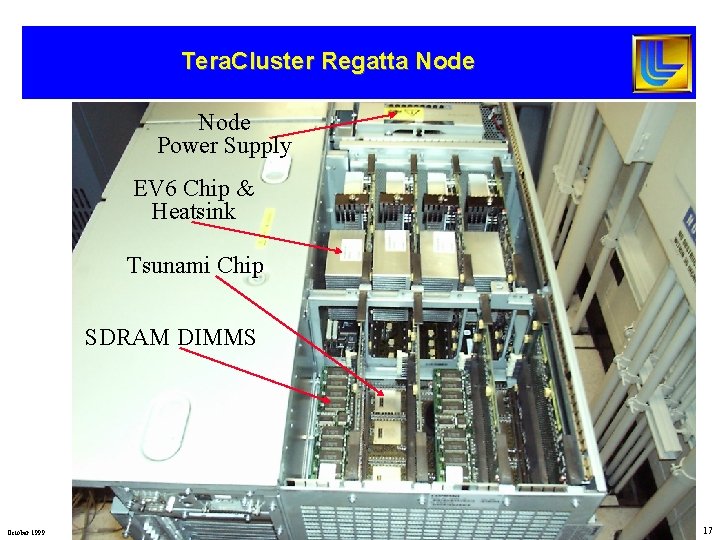

TeraCluster Regatta Node

Photo labels: Power Supply; EV6 Chip & Heatsink; Tsunami Chip; SDRAM DIMMs.

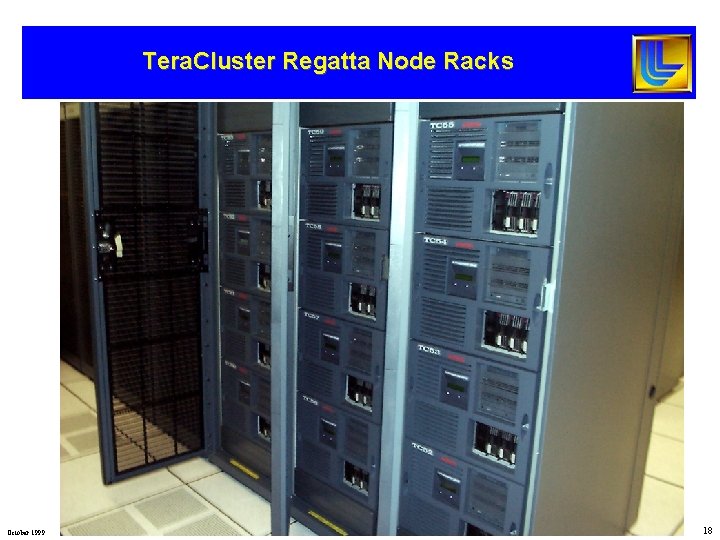

TeraCluster Regatta Node Racks

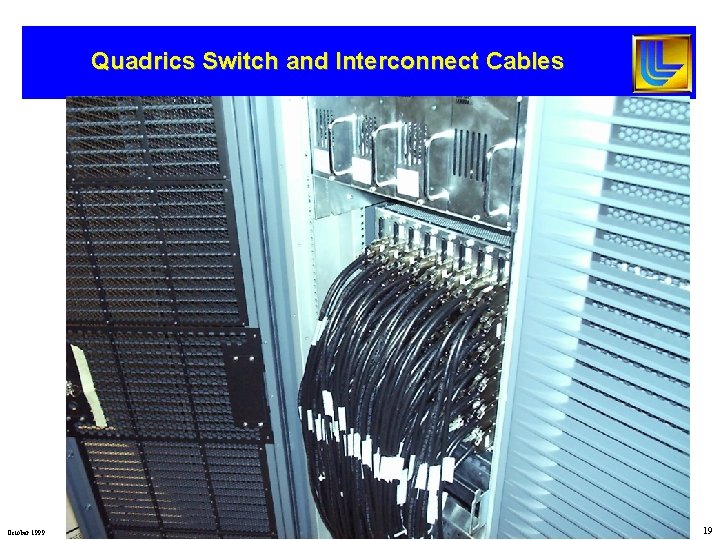

Quadrics Switch and Interconnect Cables

System Fast Ethernet Routers

System Console Concentrators

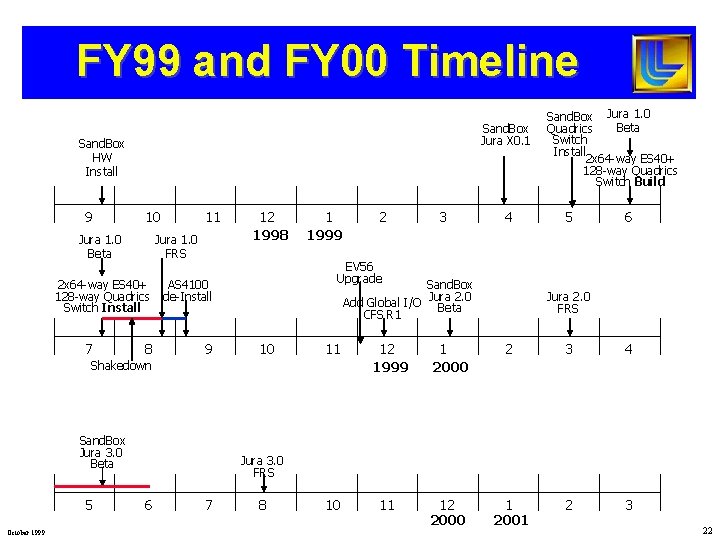

FY99 and FY00 Timeline

Timeline chart (monthly axis spanning 1998 to 2001). Milestone labels: SandBox HW Install; SandBox Jura X0.1; SandBox Jura 1.0 Beta; Quadrics Switch Install; Jura 1.0 Beta; Jura 1.0 FRS; 2 x 64-way ES40 + 128-way Quadrics Switch Build; 2 x 64-way ES40 + 128-way Quadrics Switch Install; Shakedown; EV56 Upgrade; AS4100 de-Install; SandBox Jura 2.0; Add Global I/O; Beta CFS R1; Jura 2.0 FRS; SandBox Jura 3.0 Beta; Jura 3.0 FRS.

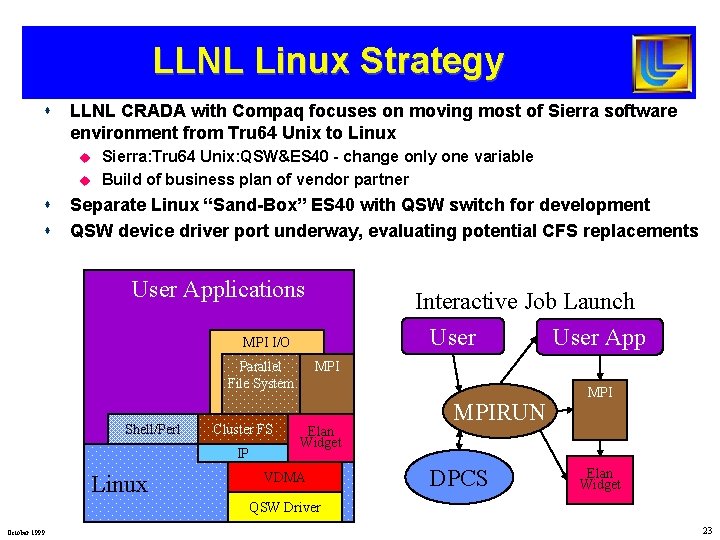

LLNL Linux Strategy

- LLNL CRADA with Compaq focuses on moving most of the Sierra software environment from Tru64 Unix to Linux
  - Sierra: Tru64 Unix: QSW & ES40 - change only one variable
  - Build off the business plan of the vendor partner
- Separate Linux “Sand-Box” ES40 with QSW switch for development
- QSW device driver port underway; evaluating potential CFS replacements

Diagram labels: User Applications; Interactive Job Launch; MPI I/O; Parallel File System; Cluster FS; MPIRUN; Shell/Perl; DPCS; MPI; IP; Linux; Elan Widget; VDMA; QSW Driver.

- Slides: 23