
- Slides: 33

The Informational Complexity of Interactive Machine Learning Steve Hanneke

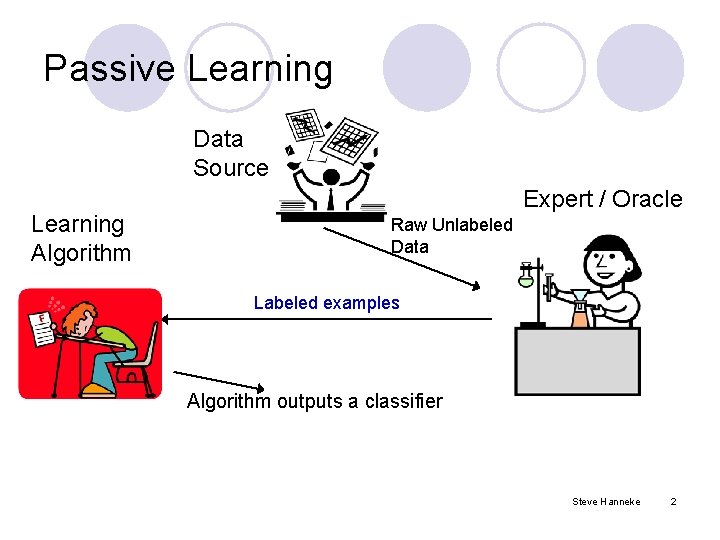

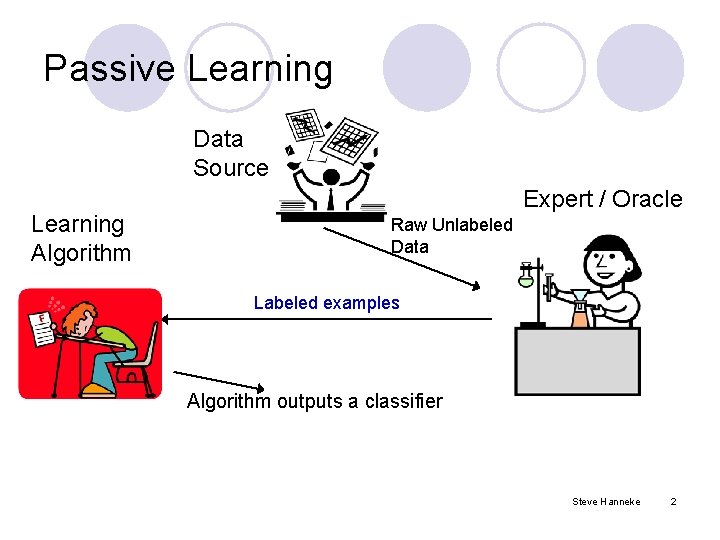

Passive Learning (diagram): Data Source → Raw Unlabeled Data → Expert / Oracle → Labeled examples → Learning Algorithm → Algorithm outputs a classifier.

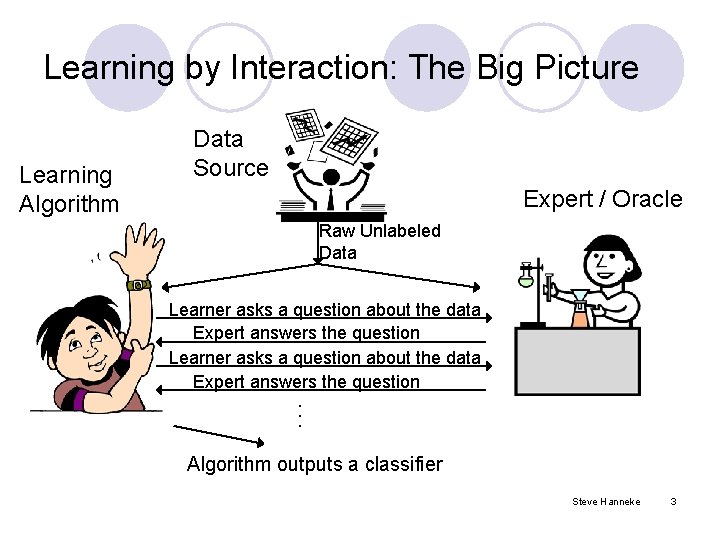

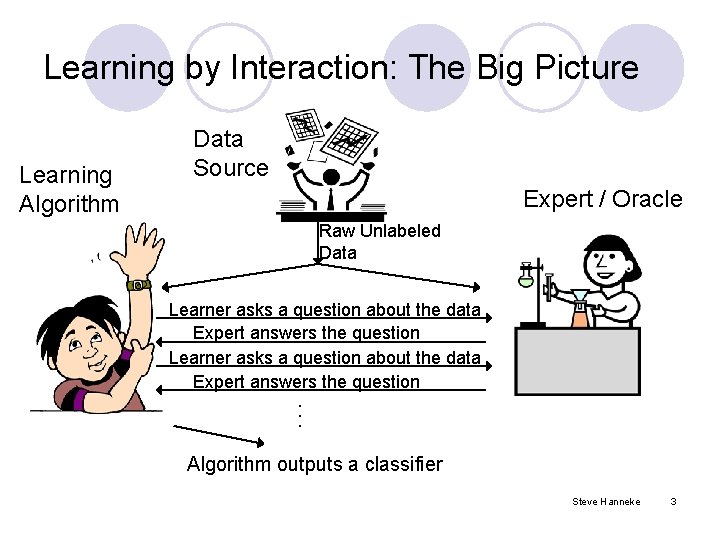

Learning by Interaction: The Big Picture (diagram): the Data Source provides Raw Unlabeled Data to the Learning Algorithm; the learner asks a question about the data; the Expert / Oracle answers the question; and so on, until the algorithm outputs a classifier.

Interactive Learning: A Manifesto

- Machine learning is a collaborative effort between human and machine.
- In passive learning, there is often a bottleneck on the human side (data annotation).
- Conclusion: passive algorithms are lazy collaborators.
- Interactive algorithms may require the human to expend effort only on providing relevant details, minimizing unnecessary redundancy.

The Value of Interaction

- But how much improvement can we expect for any particular learning problem?
- How much interaction is necessary and sufficient for learning?

Outline

- Active learning with label requests
  - Disagreement Coefficient (Hanneke, ICML 2007)
  - Teaching Dimension (Hanneke, COLT 2007)
- Class-conditional queries
- Arbitrary sample-based queries

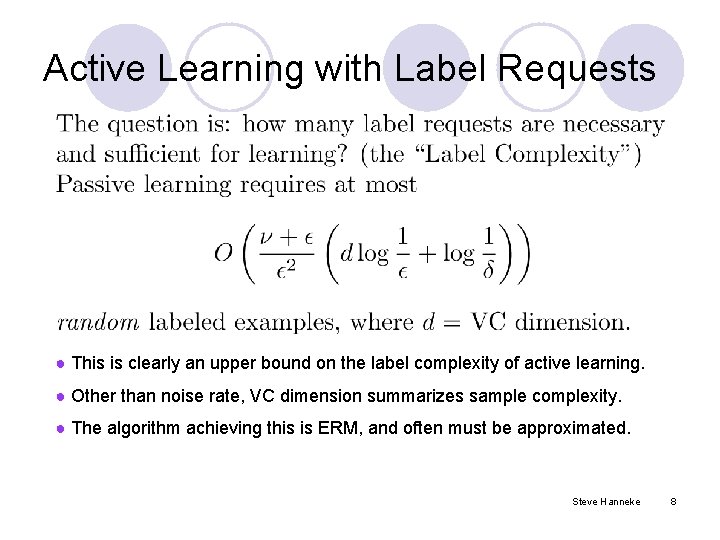

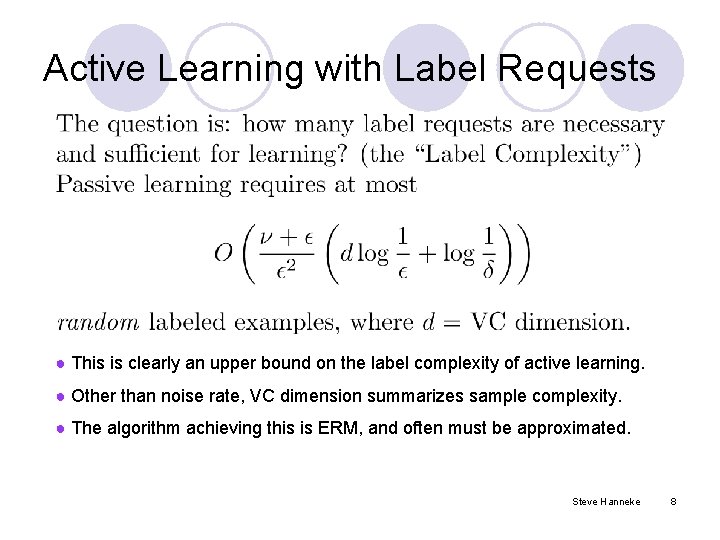

Active Learning with Label Requests

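Active Learning with Label Requests

- The passive sample complexity (the number of labeled examples ERM needs) is clearly an upper bound on the label complexity of active learning, since an active learner can always request every label.
- Other than the noise rate, the VC dimension summarizes the sample complexity.
- The algorithm achieving this bound is ERM (empirical risk minimization), and it often must be approximated.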
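For intuition about ERM, here is a minimal Python sketch for an assumed toy class, one-dimensional thresholds h_t(x) = 1 if x ≥ t else 0 (not a class from the slides). For this class exact ERM is easy: only the distinct sample values, plus +∞, need to be tried as thresholds. For richer classes, such as linear separators with noisy labels, minimizing empirical error can be computationally hard, which is one reason ERM is often approximated. The function name is hypothetical.

```python
import math


def erm_threshold(xs, ys):
    """Empirical risk minimization over thresholds h_t(x) = 1 if x >= t else 0.

    Returns (t, errors) for a threshold with the fewest training mistakes.
    Trying each distinct x value plus +inf covers every possible split.
    """
    candidates = sorted(set(xs)) + [math.inf]
    best_t, best_err = None, math.inf
    for t in candidates:
        # Count 0-1 mistakes of h_t on the labeled sample.
        err = sum(int(x >= t) != y for x, y in zip(xs, ys))
        if err < best_err:
            best_t, best_err = t, err
    return best_t, best_err


# A noisy sample: no threshold fits it perfectly.
xs = [0.1, 0.2, 0.3, 0.4, 0.5]
ys = [0, 0, 1, 0, 1]
print(erm_threshold(xs, ys))  # (0.3, 1)
```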


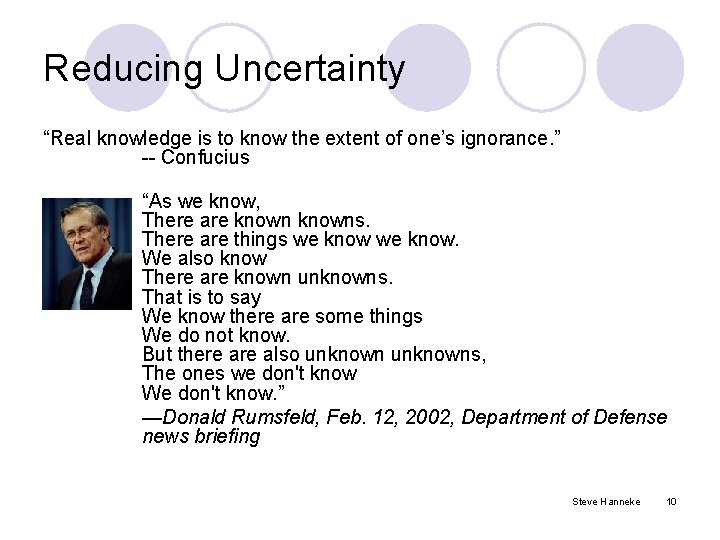

Reducing Uncertainty

“Real knowledge is to know the extent of one’s ignorance.” -- Confucius

“As we know, there are known knowns. There are things we know we know. We also know there are known unknowns. That is to say, we know there are some things we do not know. But there are also unknown unknowns, the ones we don't know we don't know.” -- Donald Rumsfeld, Feb. 12, 2002, Department of Defense news briefing

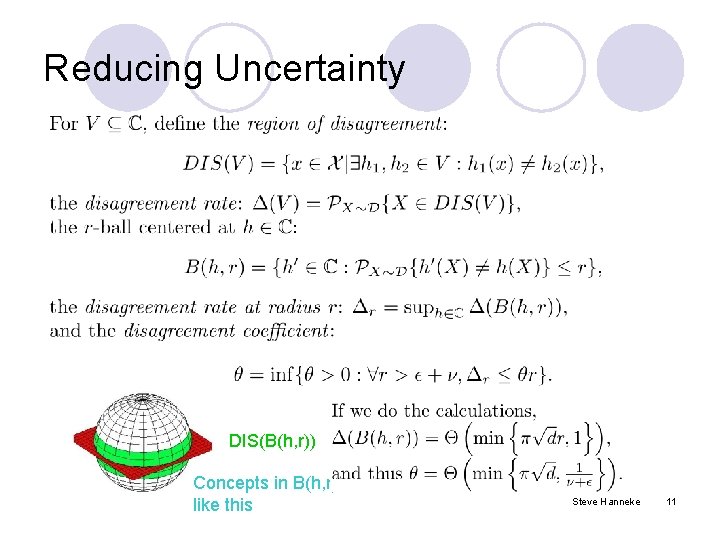

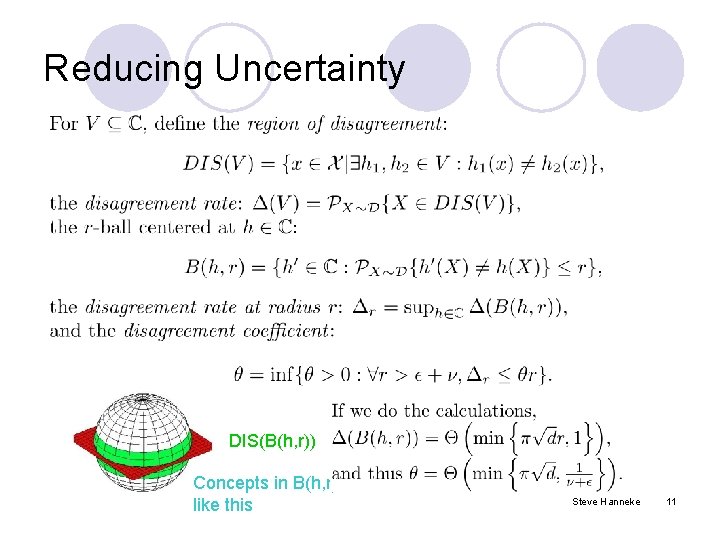

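Reducing Uncertainty (figure: a concept h and the region of disagreement DIS(B(h, r)); concepts in B(h, r) look like this). Here B(h, r) is the set of concepts whose probability of disagreeing with h is at most r, and DIS(V) is the set of points on which at least two concepts in V disagree.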
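The disagreement coefficient (Hanneke, ICML 2007) measures how fast the mass of DIS(B(h*, r)) shrinks with r; in its basic form, θ = sup over r > 0 of P(DIS(B(h*, r))) / r. Below is a minimal Python sketch that evaluates this ratio on a finite pool (taken as a uniform stand-in for D) and a finite list of radii, for an assumed toy class of integer thresholds h_t(x) = 1 if x ≥ t else 0. The helper names are illustrative, not from the talk.

```python
from fractions import Fraction


def dist(h1, h2, pool):
    """P(h1(x) != h2(x)) under the uniform distribution on pool."""
    return Fraction(sum(h1(x) != h2(x) for x in pool), len(pool))


def ball(h, r, concepts, pool):
    """B(h, r): concepts within disagreement distance r of h."""
    return [g for g in concepts if dist(h, g, pool) <= r]


def dis_mass(V, pool):
    """P(DIS(V)): mass of the points on which some two concepts in V disagree."""
    return Fraction(sum(len({g(x) for g in V}) > 1 for x in pool), len(pool))


def disagreement_coefficient(h_star, concepts, pool, radii):
    """Maximum over the given radii of P(DIS(B(h_star, r))) / r."""
    return max(dis_mass(ball(h_star, r, concepts, pool), pool) / r for r in radii)


N = 100
pool = list(range(N))
# Thresholds h_t for t = 0..N; t=t binds each threshold value in its own lambda.
thresholds = [lambda x, t=t: int(x >= t) for t in range(N + 1)]
radii = [Fraction(k, N) for k in range(1, N // 2 + 1)]
theta = disagreement_coefficient(thresholds[50], thresholds, pool, radii)
print(theta)  # 2
```

For a target threshold at 50, B(h*, r) holds the thresholds within rN of 50, and their disagreement region is an interval of mass 2r, so the ratio equals 2 at every radius tried.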

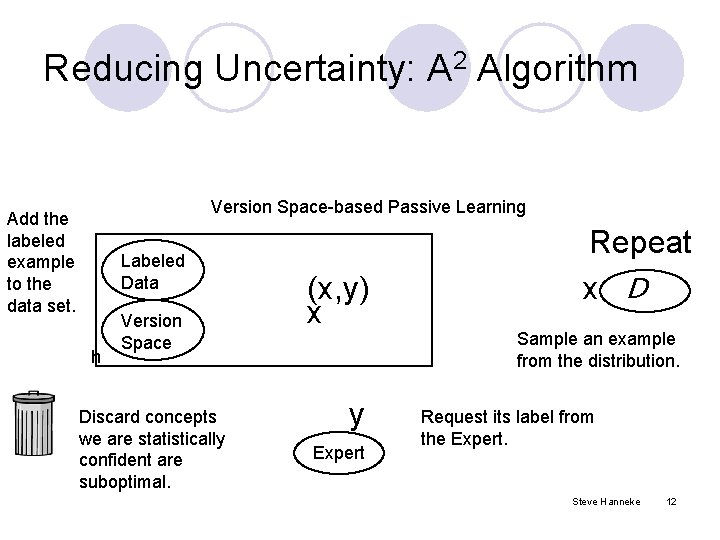

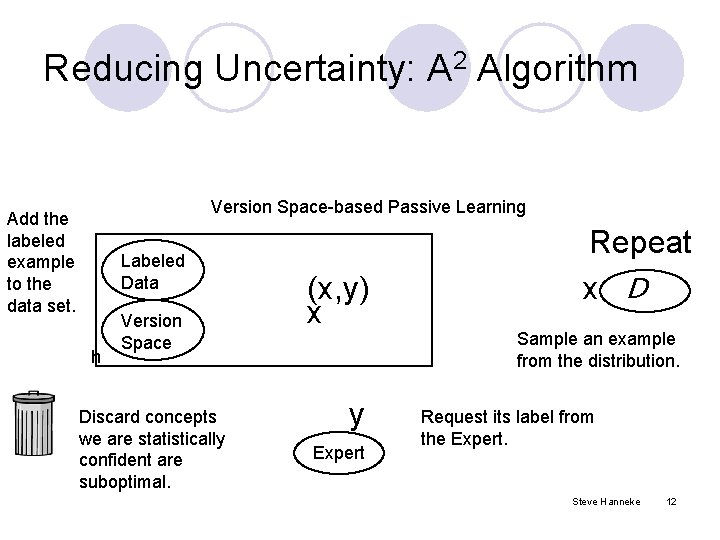

Reducing Uncertainty: A² Algorithm

Version Space-based Passive Learning (repeat):

1. Sample an example x from the distribution D.
2. Request its label y from the Expert.
3. Add the labeled example (x, y) to the data set.
4. Discard from the version space the concepts we are statistically confident are suboptimal.
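A minimal Python sketch of this loop, under an assumption the slide does not make: labels are noiseless, so "statistically confident that a concept is suboptimal" reduces to "the concept contradicts an observed label". The concept class (integer thresholds), the sampler, and the function name are hypothetical.

```python
import random


def passive_version_space(concepts, sample_x, expert, n):
    """Run n rounds of version-space-based passive learning (noiseless case).

    Every sampled point is sent to the expert, so n labels are requested.
    """
    V = list(concepts)  # version space: concepts not yet ruled out
    data = []           # labeled data set
    for _ in range(n):
        x = sample_x()                   # sample an example from D
        y = expert(x)                    # request its label from the expert
        data.append((x, y))              # add the labeled example to the data set
        V = [h for h in V if h(x) == y]  # discard concepts contradicted by (x, y)
    return V, len(data)


rng = random.Random(0)
thresholds = [lambda x, t=t: int(x >= t) for t in range(101)]
target = thresholds[37]
V, labels = passive_version_space(thresholds, lambda: rng.randrange(100), target, 200)
print(labels)  # 200
```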

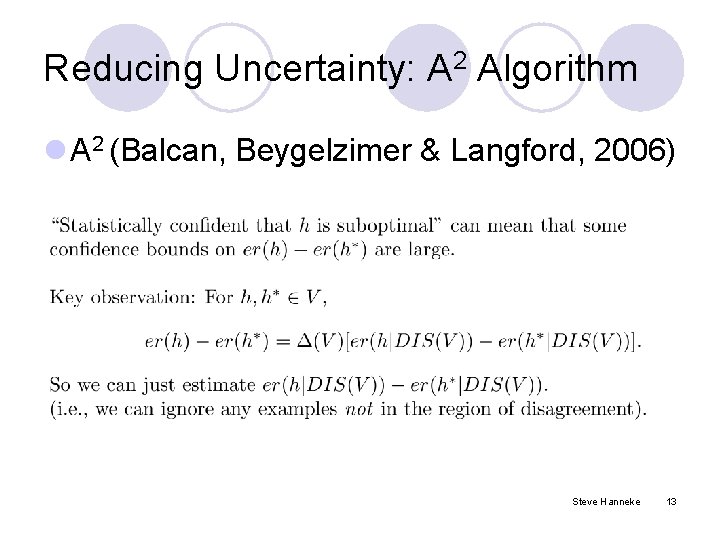

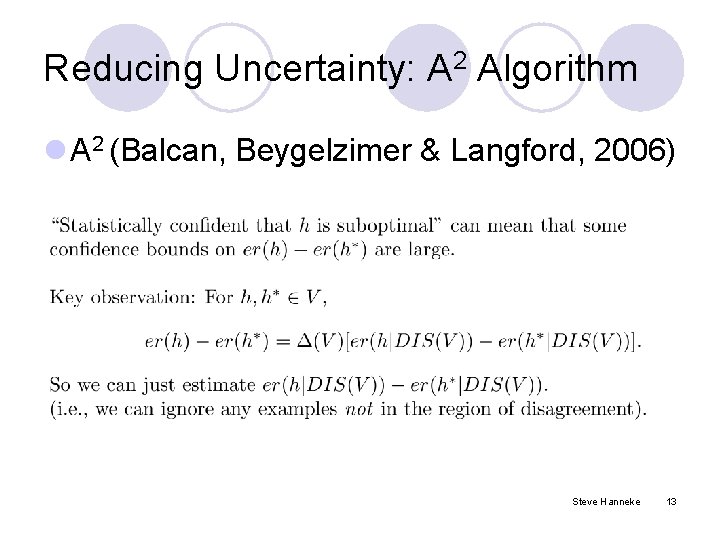

Reducing Uncertainty: A² Algorithm

- A² (Balcan, Beygelzimer & Langford, 2006)

![Reducing Uncertainty: A² Algorithm, A² (BBL 06), slightly oversimplified explanation](https://slidetodoc.com/presentation_image_h2/9fcf0d999db3adf09cedd24874c91a9c/image-14.jpg)

Reducing Uncertainty: A² Algorithm

A² [BBL 06] (slightly oversimplified explanation). Version Space-based Agnostic Active Learning (repeat):

1. Sample an example x from the distribution D.
2. If x is not in the region of disagreement, ignore it (move on to the next sample).
3. If x is in the region of disagreement, request its label y from the Expert.
4. Add the labeled example (x, y) to the data set.
5. Discard concepts we are statistically confident are suboptimal (with respect to the filtered distribution).
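The filtering step is what saves labels. The Python sketch below keeps a noiseless simplification, so it is closer to the earlier CAL algorithm of Cohn, Atlas & Ladner than to A² itself (A² handles noise with statistical confidence bounds): a sampled point on which every surviving concept agrees is skipped without a label request. The threshold class, sampler, and function name are hypothetical.

```python
import random


def active_version_space(concepts, sample_x, expert, n):
    """Disagreement-based active learning over n sampled points (noiseless case)."""
    V = list(concepts)
    labels_requested = 0
    for _ in range(n):
        x = sample_x()
        if len({h(x) for h in V}) == 1:
            # x is outside DIS(V): all surviving concepts agree, so skip it.
            continue
        y = expert(x)  # x is in DIS(V): request its label
        labels_requested += 1
        V = [h for h in V if h(x) == y]  # discard concepts contradicted by (x, y)
    return V, labels_requested


rng = random.Random(0)
thresholds = [lambda x, t=t: int(x >= t) for t in range(101)]
target = thresholds[37]
V, labels = active_version_space(thresholds, lambda: rng.randrange(100), target, 200)
print(labels)
```

Each requested label removes its point from DIS(V), and DIS(V) only shrinks, so on this 100-point domain at most 100 labels can ever be requested out of the 200 samples, and typically far fewer.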

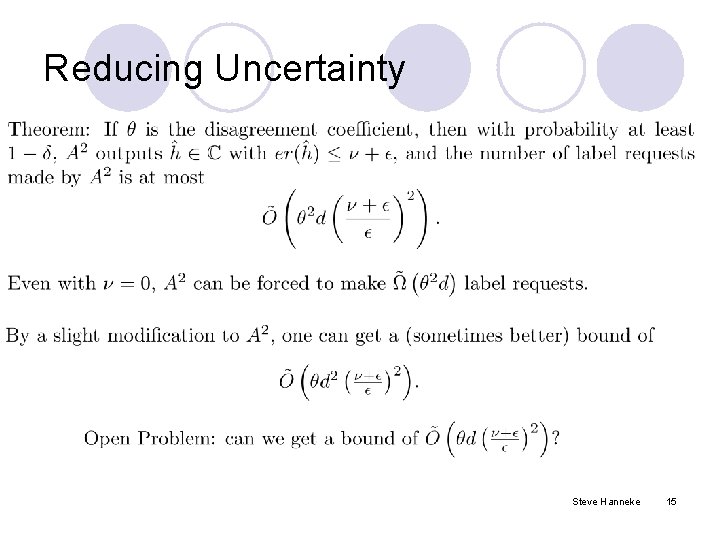

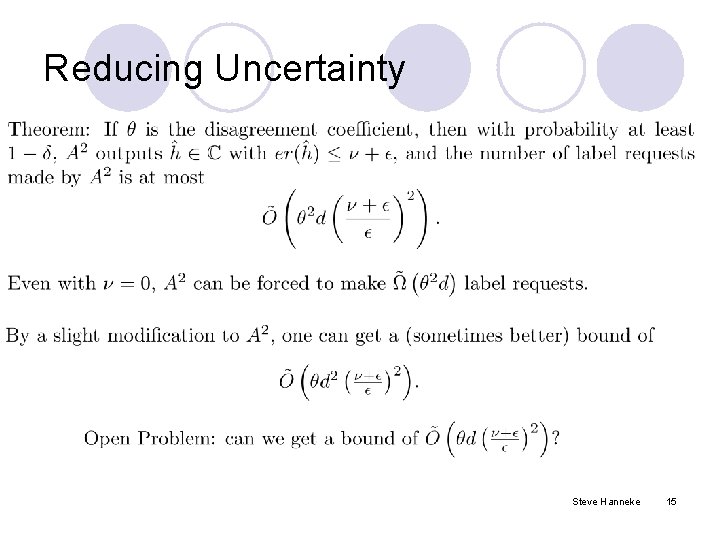

Reducing Uncertainty


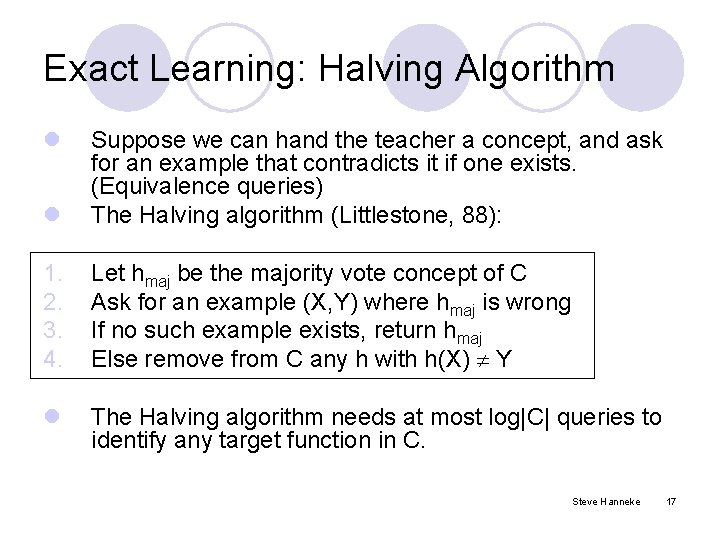

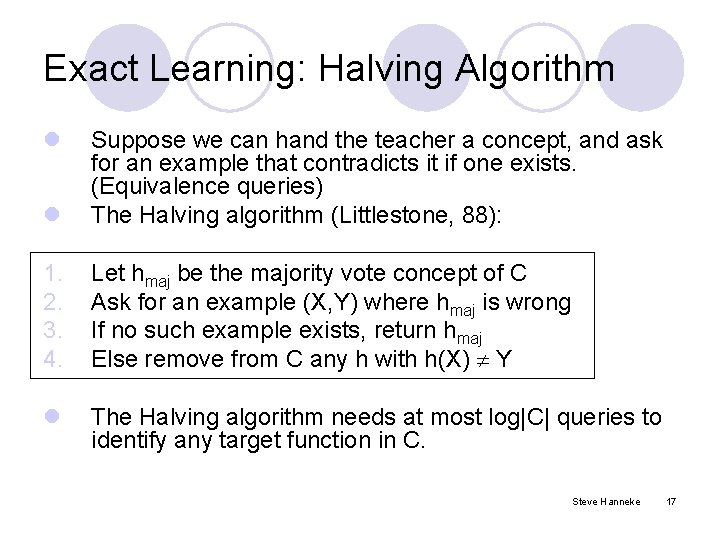

Exact Learning: Halving Algorithm

- Suppose we can hand the teacher a concept and ask for an example that contradicts it, if one exists (equivalence queries).
- The Halving algorithm (Littlestone, 88):
  1. Let hmaj be the majority-vote concept of C.
  2. Ask for an example (X, Y) where hmaj is wrong.
  3. If no such example exists, return hmaj.
  4. Else remove from C any h with h(X) ≠ Y, and go back to step 1.
- Each counterexample removes at least half of the remaining concepts, so the Halving algorithm receives at most log₂|C| counterexamples (at most log₂|C| + 1 equivalence queries, counting the final one) to identify any target function in C.
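A minimal Python sketch of the Halving algorithm as listed above. The equivalence-query oracle is simulated (an assumption for illustration) by scanning a finite domain for a point where hmaj and the target differ; the threshold class and function name are hypothetical.

```python
def halving(concepts, domain, target):
    """Halving algorithm with simulated equivalence queries.

    Returns (hypothesis, number_of_counterexamples_received).
    """
    C = list(concepts)
    counterexamples = 0
    while True:
        def h_maj(x, C=C):
            # Majority vote of the current class (ties predict 0).
            return int(2 * sum(h(x) for h in C) > len(C))

        # Equivalence query: a point where h_maj is wrong, or None.
        X = next((x for x in domain if h_maj(x) != target(x)), None)
        if X is None:
            return h_maj, counterexamples
        counterexamples += 1
        Y = target(X)
        # At least half of C voted h_maj(X) != Y, so C at least halves.
        C = [h for h in C if h(X) == Y]


domain = range(16)
thresholds = [lambda x, t=t: int(x >= t) for t in range(17)]
h, m = halving(thresholds, domain, thresholds[5])
print(m)
```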

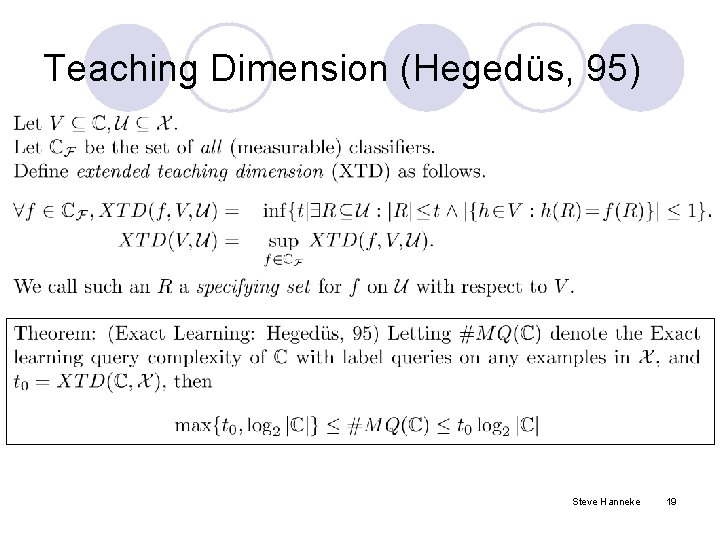

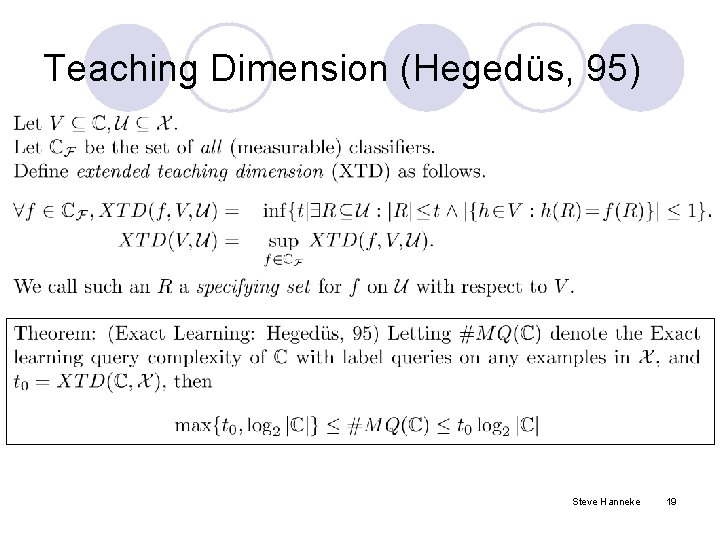

Exact Learning: Membership Queries

- Suppose, instead of equivalence queries, we can request the label of any example in X.
- We still want to run the Halving algorithm.
- How many label requests does it take to build an equivalence query?
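One way to build an equivalence query from label requests, sketched in Python under the assumption that C is a finite list of concepts that are distinct on a finite domain: find a smallest specifying set S for hmaj (a set on which at most one concept in C agrees with hmaj) and request the labels of S. Any disagreement is a counterexample; if there is none, the target is the unique concept in C that agrees with hmaj on S. The brute-force search is exponential and only meant for tiny examples; all names are hypothetical.

```python
from itertools import combinations


def min_specifying_set(f, C, domain):
    """Smallest S within domain such that at most one concept in C agrees with f on S."""
    for k in range(len(domain) + 1):
        for S in combinations(domain, k):
            agreeing = sum(all(h(x) == f(x) for x in S) for h in C)
            if agreeing <= 1:
                return S
    raise ValueError("concepts in C are not distinct on the domain")


def halving_with_label_requests(concepts, domain, label_request):
    """Halving algorithm whose equivalence queries are built from label requests.

    Returns (identified_concept, number_of_label_requests).
    """
    C = list(concepts)
    requests = 0
    while True:
        def h_maj(x, C=C):
            return int(2 * sum(h(x) for h in C) > len(C))

        S = min_specifying_set(h_maj, C, domain)
        counterexample = None
        for x in S:
            requests += 1
            y = label_request(x)
            if y != h_maj(x):
                counterexample = (x, y)
                break
        if counterexample is None:
            # The target agrees with h_maj on S, and at most one concept in C does.
            return next(h for h in C if all(h(x) == h_maj(x) for x in S)), requests
        X, Y = counterexample
        C = [h for h in C if h(X) == Y]  # the usual Halving update


domain = list(range(8))
thresholds = [lambda x, t=t: int(x >= t) for t in range(9)]
h, requests = halving_with_label_requests(thresholds, domain, thresholds[3])
print(requests)
```

Since a specifying set for any function with respect to a subclass of C is no larger than one with respect to C, each simulated equivalence query costs at most XTD(C) label requests, for a total of at most XTD(C)(log₂|C| + 1).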

Teaching Dimension (Hegedüs, 95)

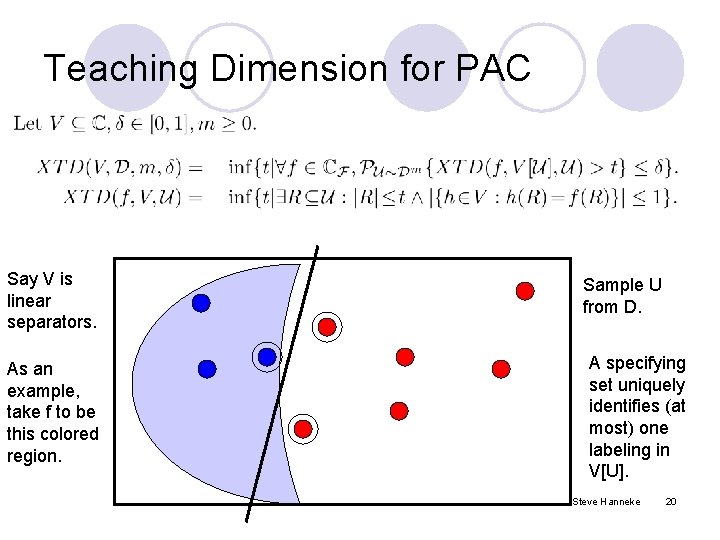

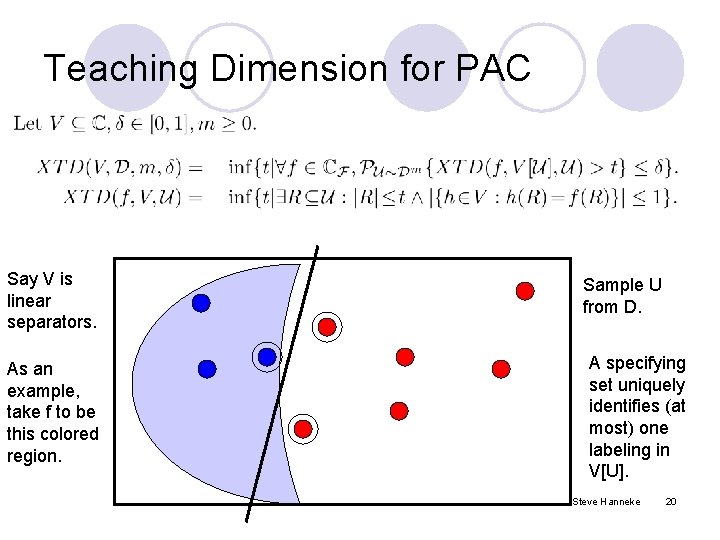

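Teaching Dimension for PAC

- Say V is the class of linear separators. As an example, take f to be the labeling shown by the colored region in the figure.
- Sample U from D.
- A specifying set is a subset of U on which at most one labeling in V[U] (the set of labelings of U realized by concepts in V) agrees with f, so it uniquely identifies (at most) one labeling in V[U].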
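A brute-force Python sketch of these notions for a tiny finite case (an illustration, not the talk's construction): V[U] is computed as the set of distinct labelings of U produced by V, and the extended teaching dimension of V[U] is the worst case, over all labelings f of U, of the size of a smallest specifying set for f. One-dimensional thresholds stand in for linear separators, and all names are hypothetical.

```python
from itertools import combinations, product


def project(concepts, U):
    """V[U]: the distinct labelings of the points in U realized by the concepts."""
    return {tuple(h(x) for x in U) for h in concepts}


def specifying_set_size(f, labelings):
    """Smallest |S| (S a set of indices into U) with at most one labeling agreeing with f on S."""
    n = len(f)
    for k in range(n + 1):
        for S in combinations(range(n), k):
            agreeing = sum(all(g[i] == f[i] for i in S) for g in labelings)
            if agreeing <= 1:
                return k
    return n  # not reached: distinct labelings differ somewhere on U


def xtd(labelings, n):
    """Extended teaching dimension of V[U]: worst case over all 2^n labelings f of U."""
    return max(specifying_set_size(f, labelings) for f in product((0, 1), repeat=n))


U = [0.5, 1.5, 2.5, 3.5]                            # a sample from D
V = [lambda x, t=t: int(x >= t) for t in range(5)]  # thresholds at 0..4
VU = project(V, U)
print(len(VU), xtd(VU, len(U)))  # 5 2
```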

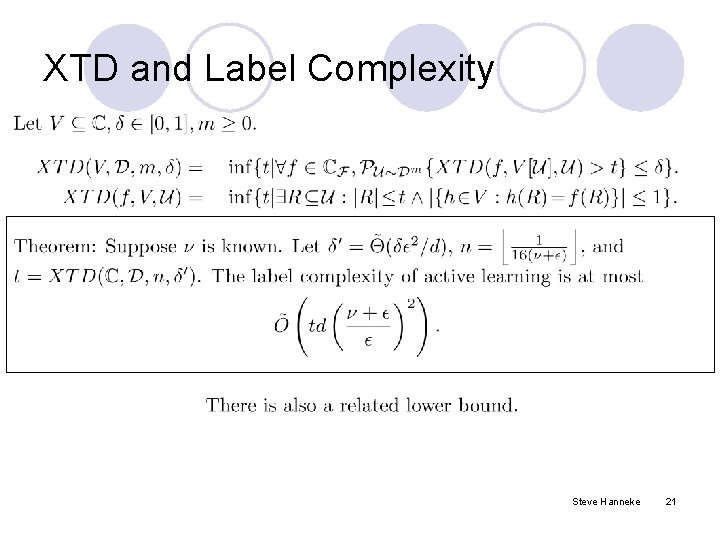

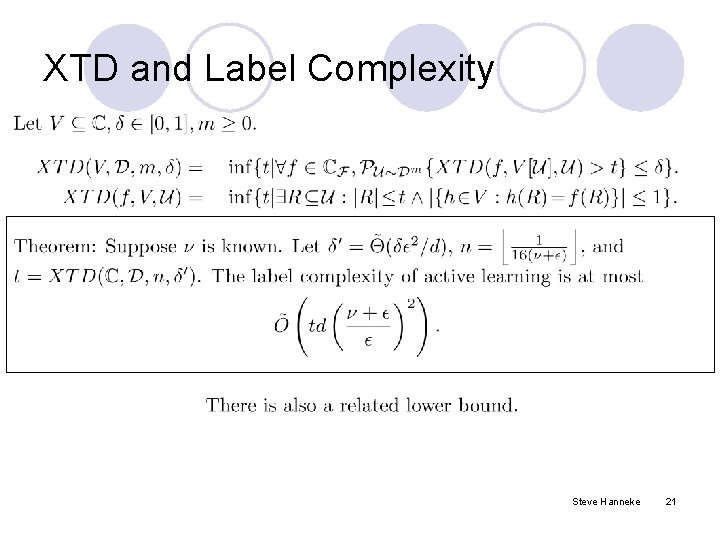

XTD and Label Complexity

XTD and Label Complexity

Conjecture: a bound of this form is valid even with no knowledge of the noise rate (i.e., for agnostic learning).


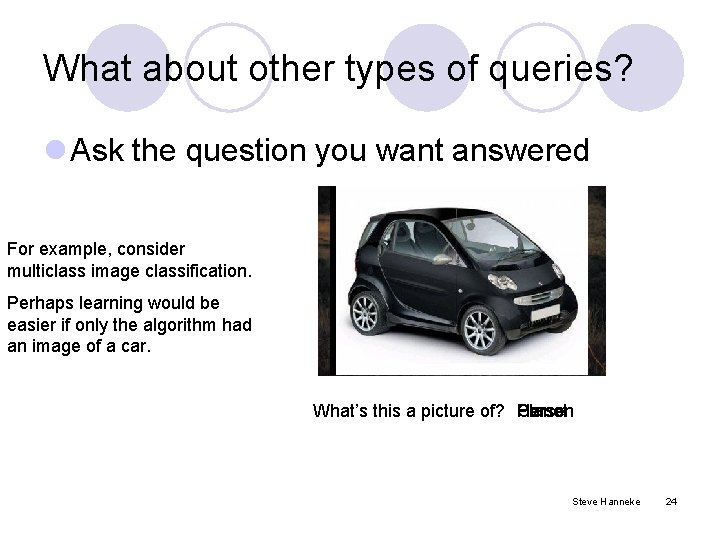

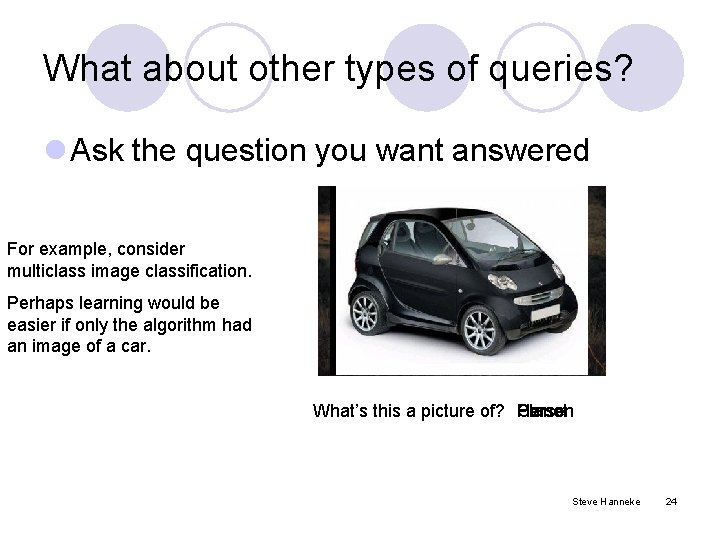

What about other types of queries?

- Ask the question you want answered.

For example, consider multiclass image classification. Perhaps learning would be easier if only the algorithm had an image of a car. (Figure: a standard label request, “What’s this a picture of?”, with the options Horse, Car, Person, Planet.)

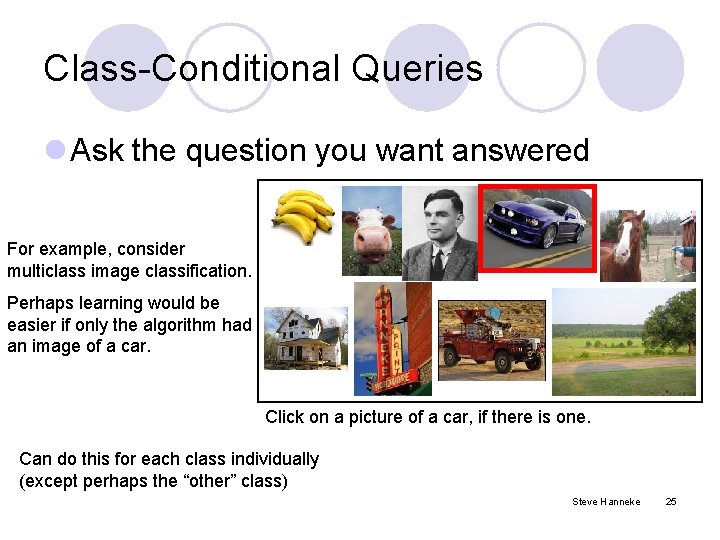

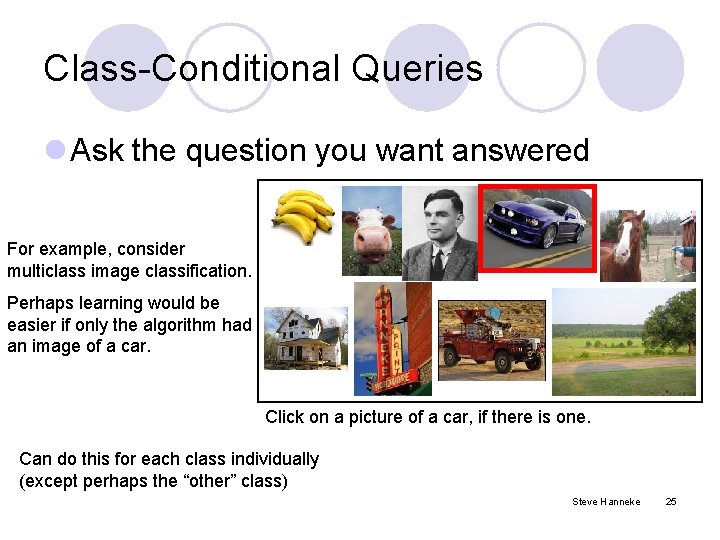

Class-Conditional Queries

- Ask the question you want answered.

For example, consider multiclass image classification. Perhaps learning would be easier if only the algorithm had an image of a car. (Figure: a class-conditional query, “Click on a picture of a car, if there is one.”) We can do this for each class individually (except perhaps the “other” class).

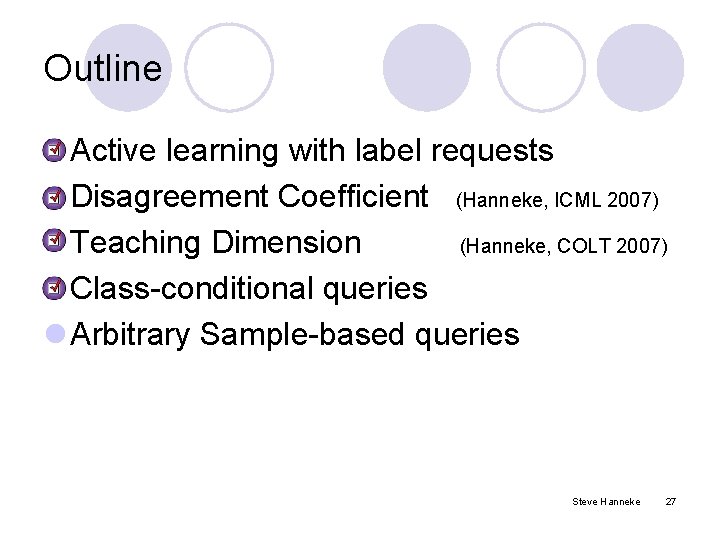

Class-Conditional Queries

- A concrete example: conjunctions (without noise).
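The slide's construction is not recoverable here, so the following is only an illustrative Python sketch of how class-conditional queries can be used for noiseless conjunctions over n Boolean features. It assumes a hypothetical query ccq(candidates, label) that returns some candidate whose true label is label, or None. Starting from the conjunction of all 2n literals (which labels everything negative), we repeatedly ask for a positive example among the points the current hypothesis labels negative and drop the literals it violates. The target's literals are never dropped, so the hypothesis can only err on positives, and at most n + 2 queries are used.

```python
from itertools import product


def conj(lits):
    """Conjunction classifier: 1 iff x[i] == v for every literal (i, v)."""
    return lambda x: int(all(x[i] == v for i, v in lits))


def learn_conjunction(pool, n, ccq):
    lits = {(i, v) for i in range(n) for v in (0, 1)}  # all 2n literals
    queries = 0
    while True:
        h = conj(frozenset(lits))
        # Ask for a positive example among the points h currently labels negative.
        queries += 1
        x = ccq([z for z in pool if not h(z)], 1)
        if x is None:
            return lits, queries  # h is now consistent with every point in the pool
        # Keep only the literals satisfied by the positive example x.
        lits = {(i, v) for (i, v) in lits if x[i] == v}


n = 4
pool = list(product((0, 1), repeat=n))
target_lits = {(0, 1), (2, 0)}  # hypothetical target: x0 AND NOT x2
target = conj(target_lits)


def ccq(candidates, label):
    """Simulated class-conditional query: some candidate with the given label, or None."""
    return next((z for z in candidates if target(z) == label), None)


lits, q = learn_conjunction(pool, n, ccq)
print(sorted(lits), q)  # [(0, 1), (2, 0)] 4
```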


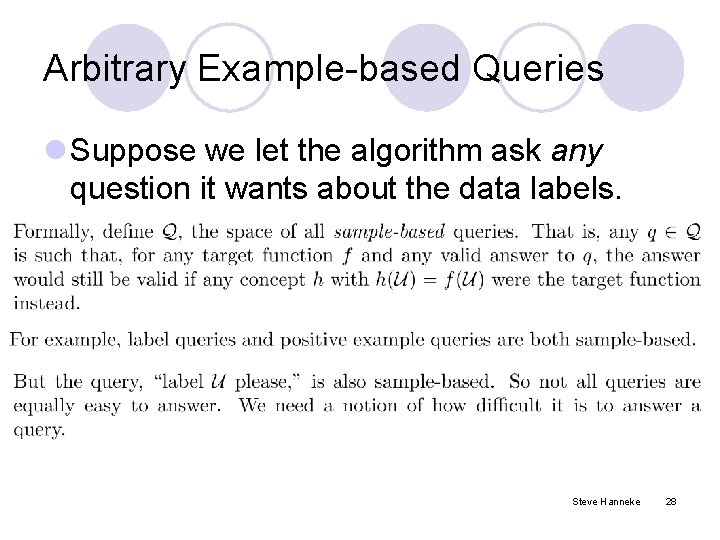

Arbitrary Example-based Queries

- Suppose we let the algorithm ask any question it wants about the data labels.

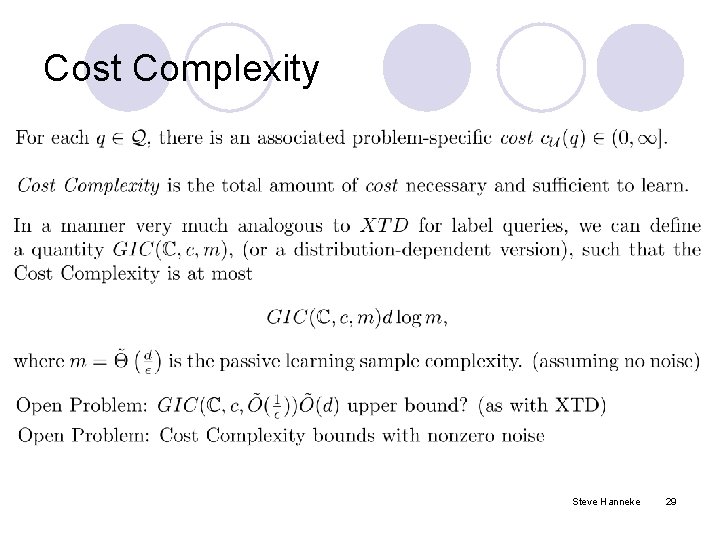

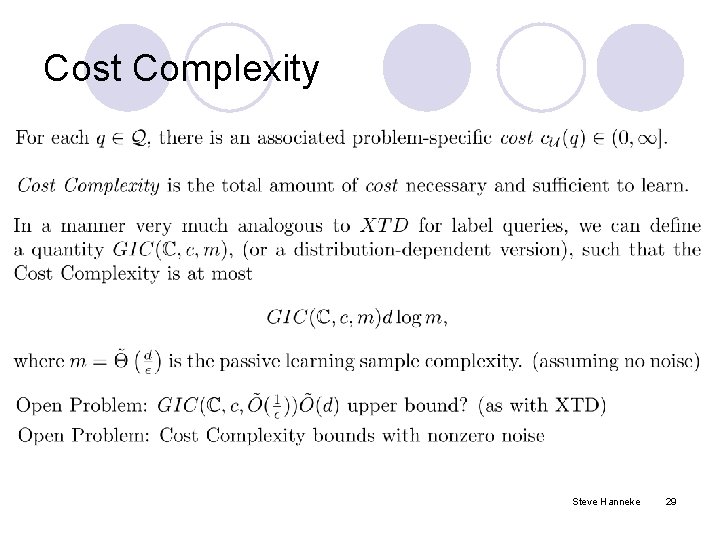

Cost Complexity

Questions? (cost = free)

Open Problems for Label Queries

- The value of having more unlabeled data (especially for agnostic learning)?
- An “optimal” agnostic active learning algorithm?

Open Problems

- Unknown cost functions: e.g., maybe examples near the separator are more expensive to label.
- Other types of queries: e.g., “give me a rule/explanation you used to decide the label of this example.”

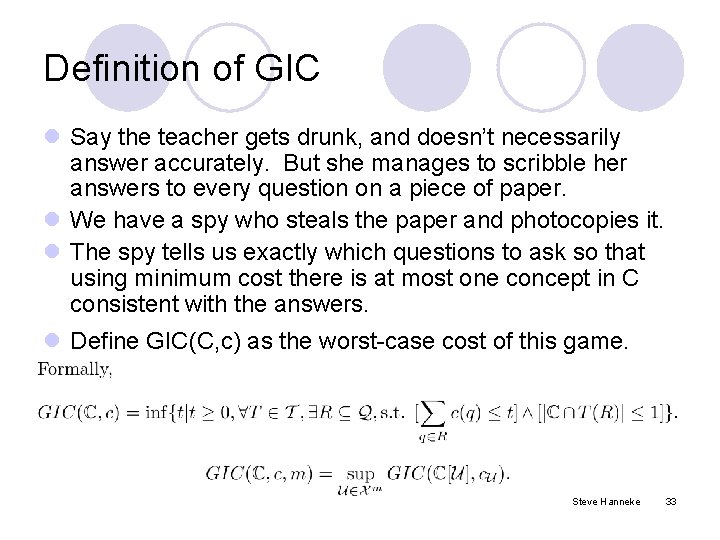

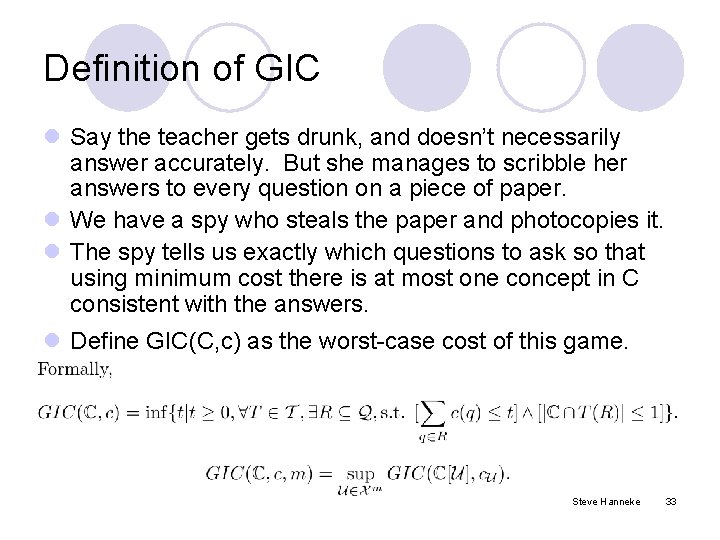

Definition of GIC

- Say the teacher gets drunk and doesn’t necessarily answer accurately, but she manages to scribble her answers to every question on a piece of paper.
- We have a spy who steals the paper and photocopies it.
- The spy tells us exactly which questions to ask so that, at minimum total cost, at most one concept in C is consistent with the answers.
- Define GIC(C, c), where c is the cost function on questions, as the worst-case cost of this game.
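A brute-force Python sketch of this game for tiny instances, under simplifying assumptions of ours: C and the set of questions are finite, each question maps a concept to the answer that concept would give, the drunk teacher's sheet may pick, independently for each question, any answer that some concept in C would give, and c is a list of per-question costs. The worst-case cost is then the maximum over sheets of the cheapest set of questions leaving at most one consistent concept. Names are hypothetical.

```python
from itertools import combinations, product


def gic(concepts, questions, cost):
    """Brute-force worst-case identification cost (simplified model, tiny inputs only)."""
    concepts = list(concepts)
    m = len(questions)
    # Possible answers to each question: those some concept in C would give.
    answer_sets = [list({q(h) for h in concepts}) for q in questions]
    worst = 0
    for sheet in product(*answer_sets):  # every possible answer sheet
        best = None
        for k in range(m + 1):
            for idx in combinations(range(m), k):
                # Number of concepts consistent with the sheet on the chosen questions.
                consistent = sum(
                    all(questions[i](h) == sheet[i] for i in idx) for h in concepts
                )
                if consistent <= 1:
                    c = sum(cost[i] for i in idx)
                    if best is None or c < best:
                        best = c
        worst = max(worst, best)  # assumes the questions can separate all concepts
    return worst


# Membership queries for thresholds t = 0..4 on points 0..3: question x asks "h(x)?"
concepts = range(5)
questions = [lambda t, x=x: int(x >= t) for x in range(4)]
print(gic(concepts, questions, [1, 1, 1, 1]))  # 2
print(gic(concepts, questions, [2, 2, 2, 2]))  # 4
```

With unit-cost membership queries, as in this example, each sheet is just a labeling of the points and the cheapest question set is a smallest specifying set, which ties this quantity closely to the extended teaching dimension.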