The INFN Grid Project Successful Grid Experiences at

- Slides: 36

The INFN Grid Project
Successful Grid Experiences at Catania
Roberto Barbera, University of Catania and INFN
Workshop CCR, Rimini, 08.05.2007
http://grid.infn.it

Outline
Enabling Grids for E-sciencE

• Grid @ Catania
– Network connection
– Catania in the Grid Infrastructures
– Production site (ALICE Tier-2)
– GILDA
– TriGrid VL
– PI2S2
• Management of the site resources
– Goals
– Configuration & Policies
– Monitoring
– Usage statistics
• Summary & Conclusions

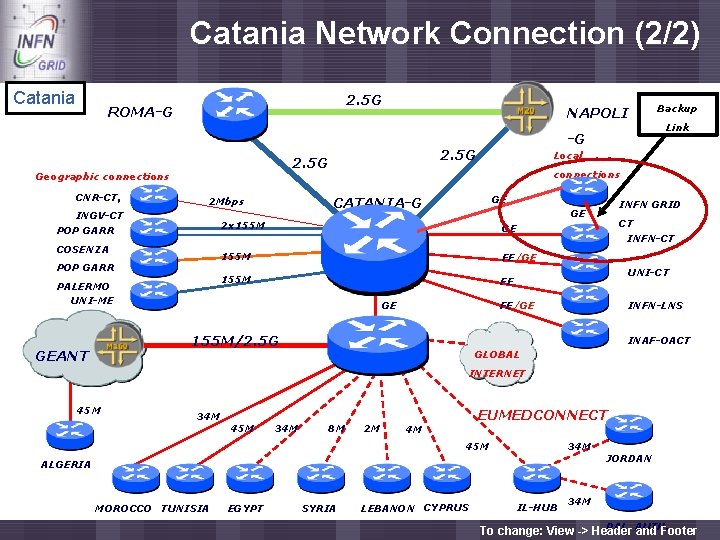

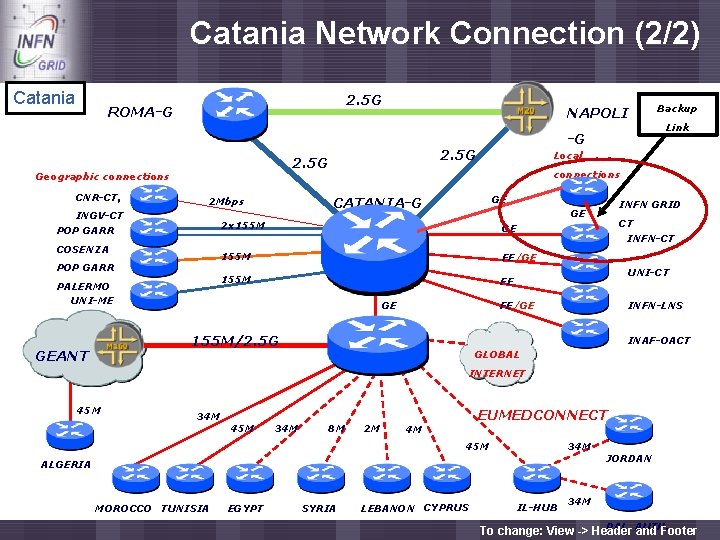

Catania Network Connection (2/2)

[Network diagram. Labels: GARR PoP connections for Catania (INFN-CT, INFN-LNS, UNI-CT, INAF-OACT, CNR-CT, INGV-CT; FE/GE local links); geographic and backup connections to ROMA, NAPOLI, PALERMO, COSENZA and UNI-ME (155 M to 2.5 G); INFN GRID; GEANT; global Internet; EUMEDCONNECT links (2 M to 45 M) to Algeria, Morocco, Tunisia, Egypt, Syria, Lebanon, Cyprus, IL-HUB, Jordan and the Palestinian Authority.]

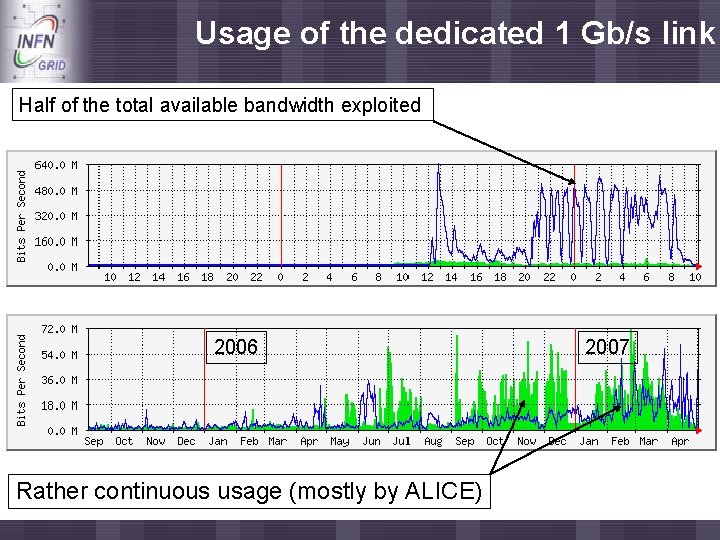

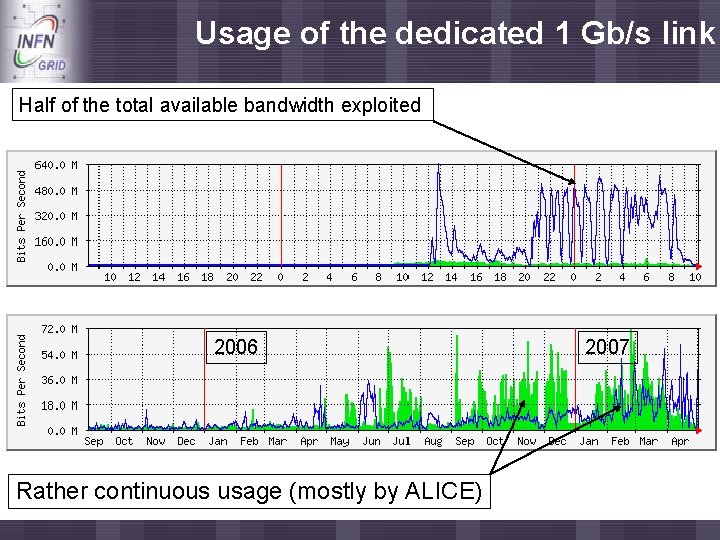

Usage of the dedicated 1 Gb/s link

• Half of the total available bandwidth exploited
• Rather continuous usage (mostly by ALICE)

[Plots: link usage in 2006 and 2007]

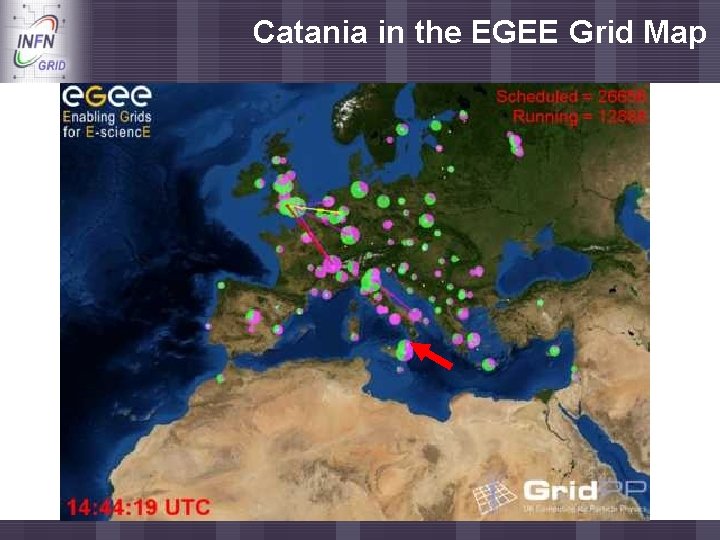

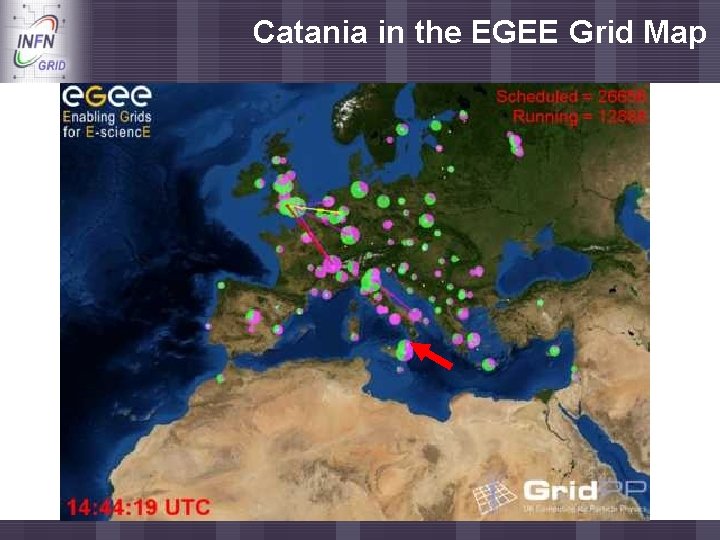

Catania in the EGEE Grid Map

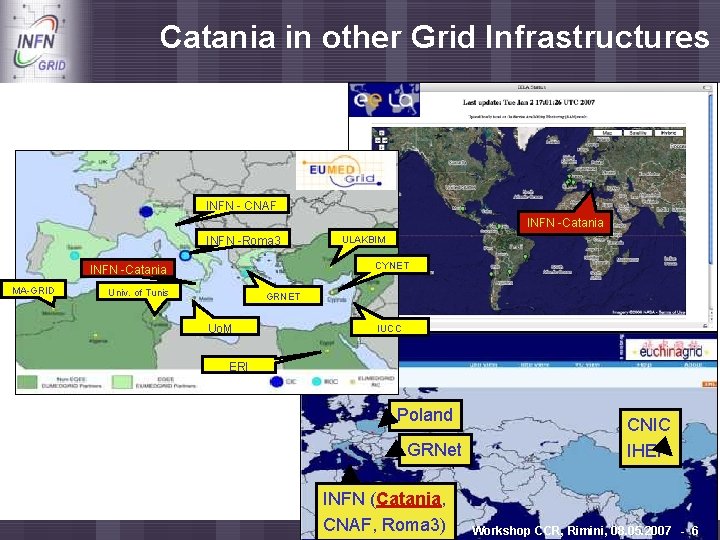

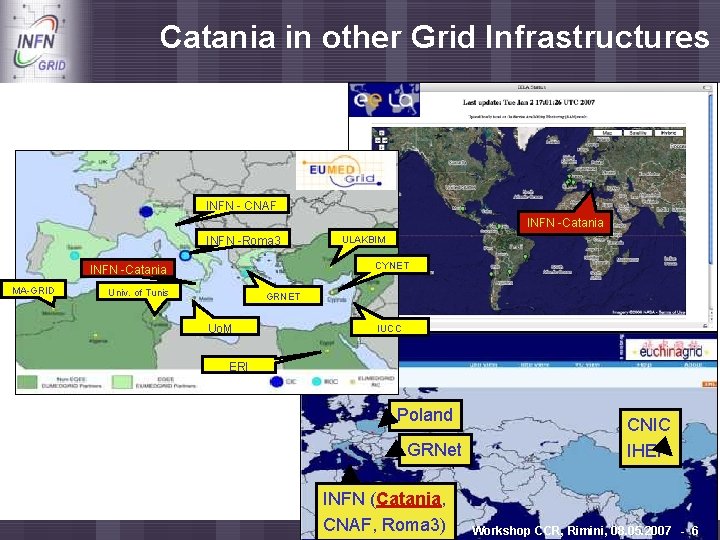

Catania in other Grid Infrastructures

[Maps of partner sites. Labels: INFN (Catania, CNAF, Roma 3), CYNET, MA-GRID, ULAKBIM, Univ. of Tunis, GRNET, UoM, IUCC, ERI, Poland, CNIC, IHEP.]

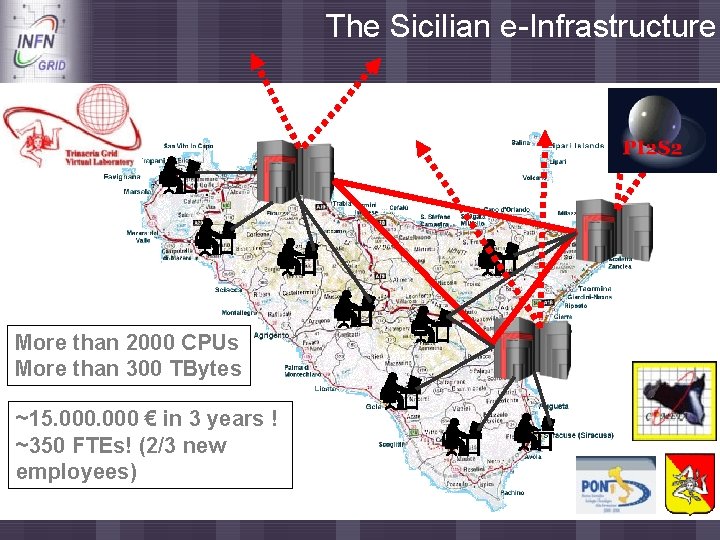

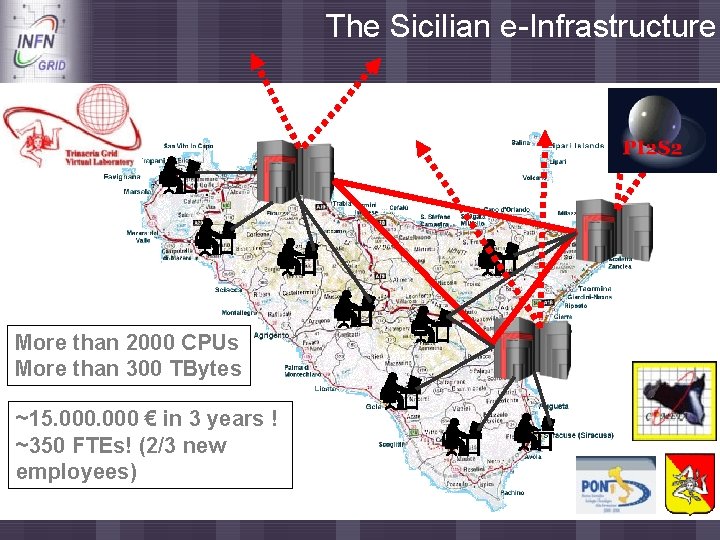

The Sicilian e-Infrastructure

• More than 2000 CPUs
• More than 300 TBytes
• ~15.000 € in 3 years!
• ~350 FTEs! (2/3 new employees)

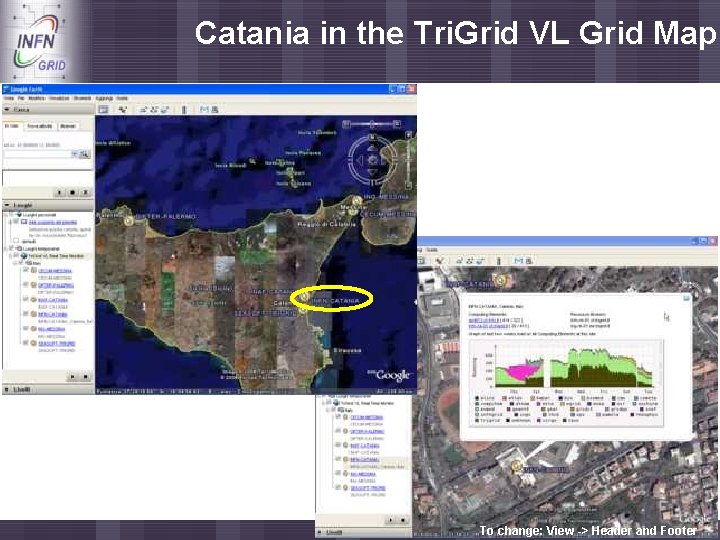

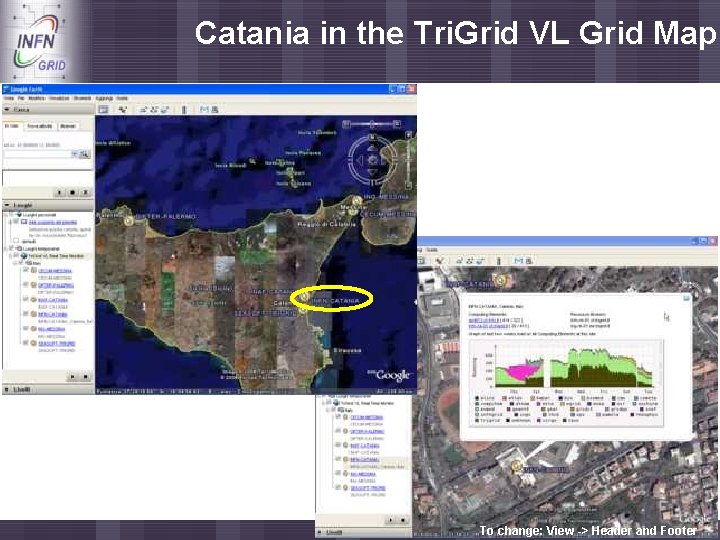

Catania in the TriGrid VL Grid Map

Catania in the GILDA t-Infrastructure

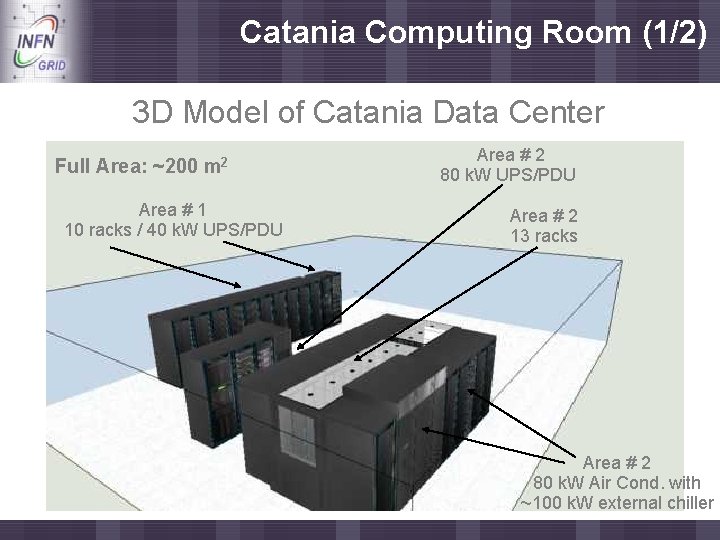

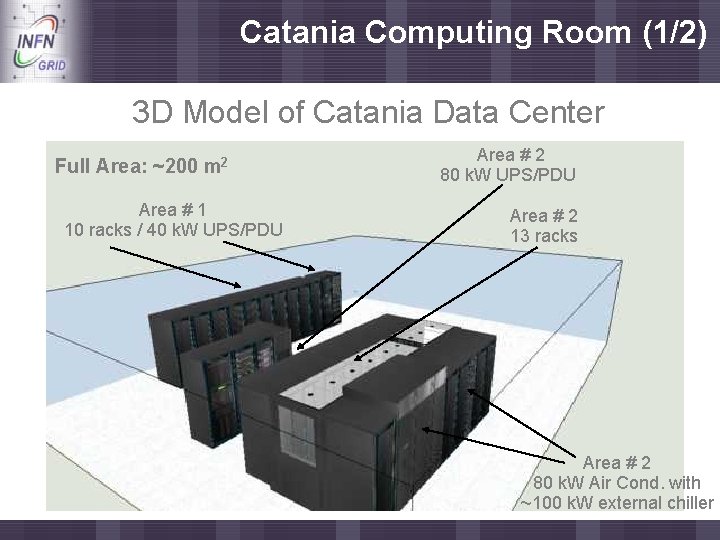

Catania Computing Room (1/2)

3D Model of Catania Data Center

• Full area: ~200 m²
• Area #1: 10 racks / 40 kW UPS/PDU
• Area #2: 13 racks
• Area #2: 80 kW UPS/PDU
• Area #2: 80 kW air conditioning with ~100 kW external chiller

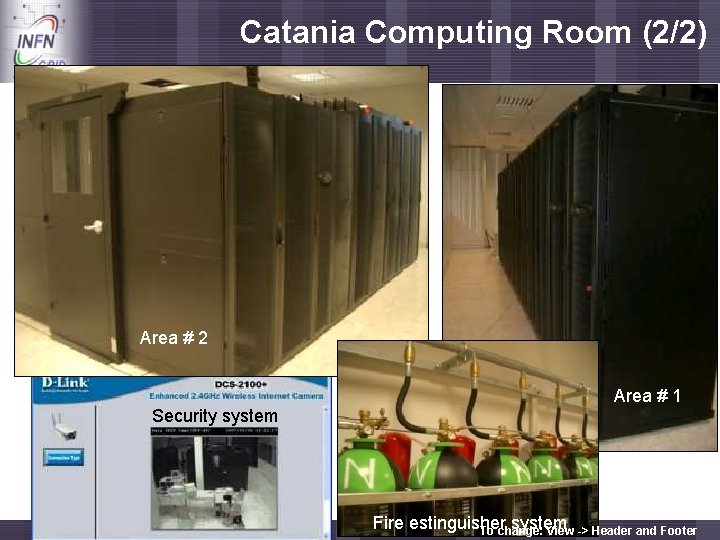

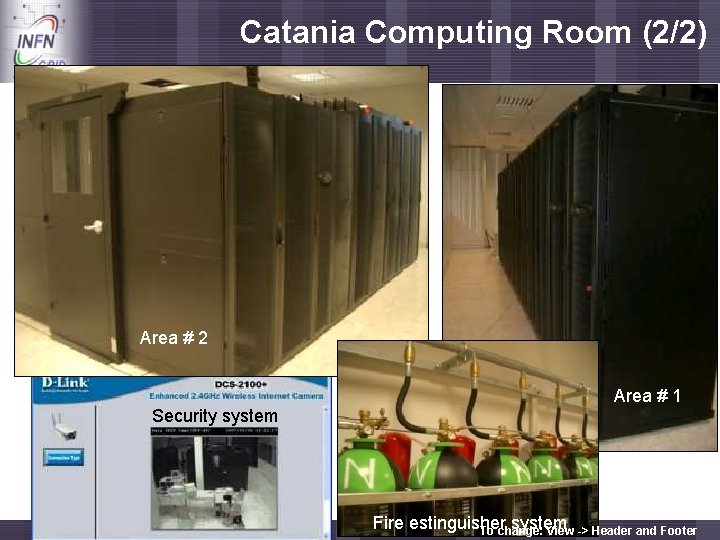

Catania Computing Room (2/2)

• Area #2: security system
• Area #1: fire extinguisher system

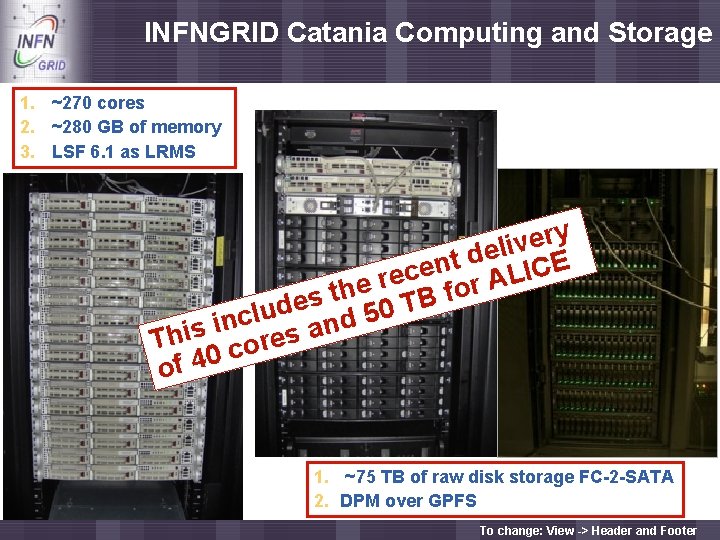

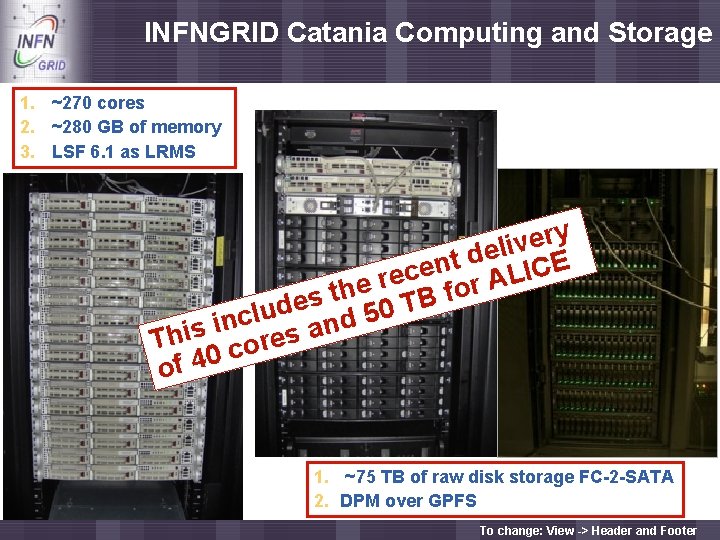

INFNGRID Catania Computing and Storage

Computing:
1. ~270 cores
2. ~280 GB of memory
3. LSF 6.1 as LRMS

Storage:
1. ~75 TB of raw disk storage (FC-2-SATA)
2. DPM over GPFS

This includes the recent delivery for ALICE of 40 cores and 50 TB.

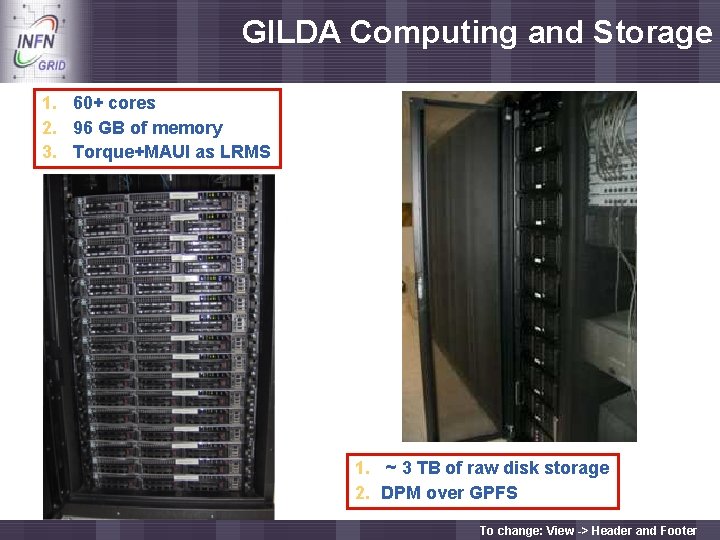

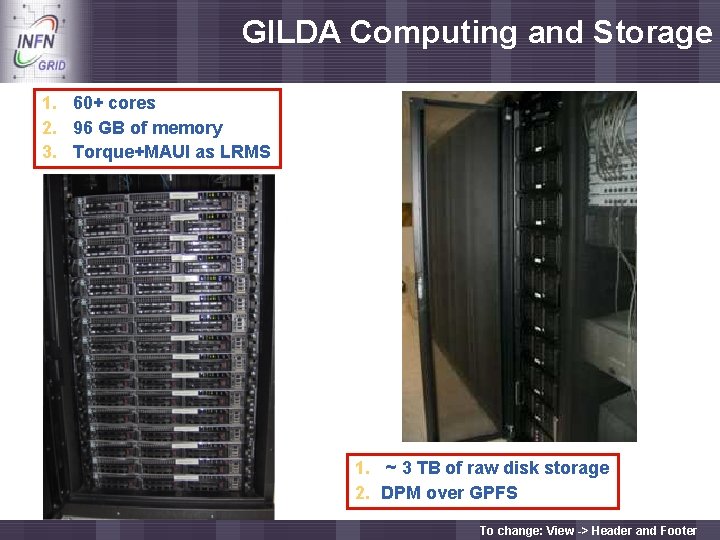

GILDA Computing and Storage

Computing:
1. 60+ cores
2. 96 GB of memory
3. Torque+MAUI as LRMS

Storage:
1. ~3 TB of raw disk storage
2. DPM over GPFS

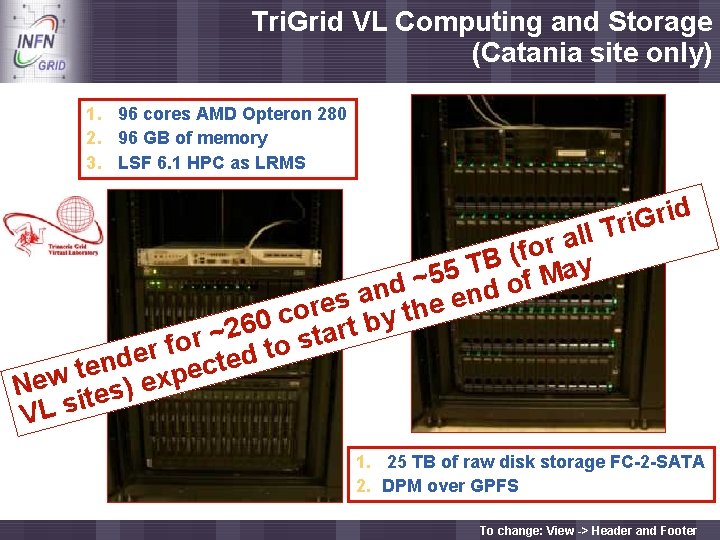

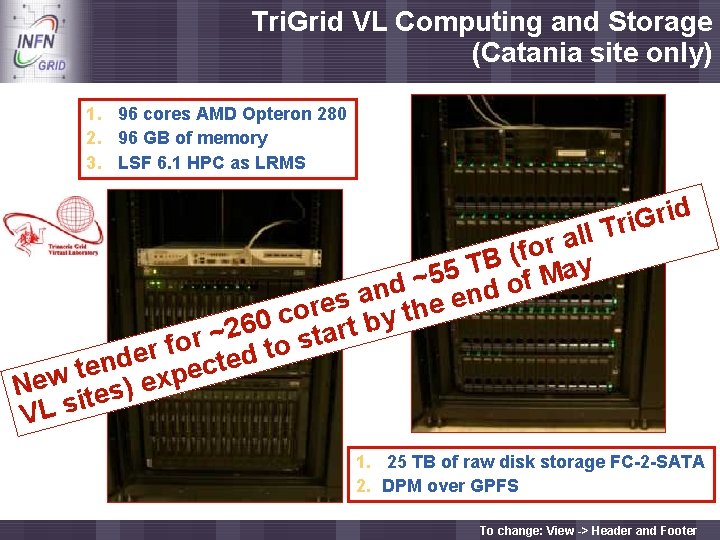

TriGrid VL Computing and Storage (Catania site only)

Computing:
1. 96 cores AMD Opteron 280
2. 96 GB of memory
3. LSF 6.1 HPC as LRMS

Storage:
1. 25 TB of raw disk storage (FC-2-SATA)
2. DPM over GPFS

New tender expected to start by the end of May for ~260 cores and ~55 TB (for all TriGrid VL sites).

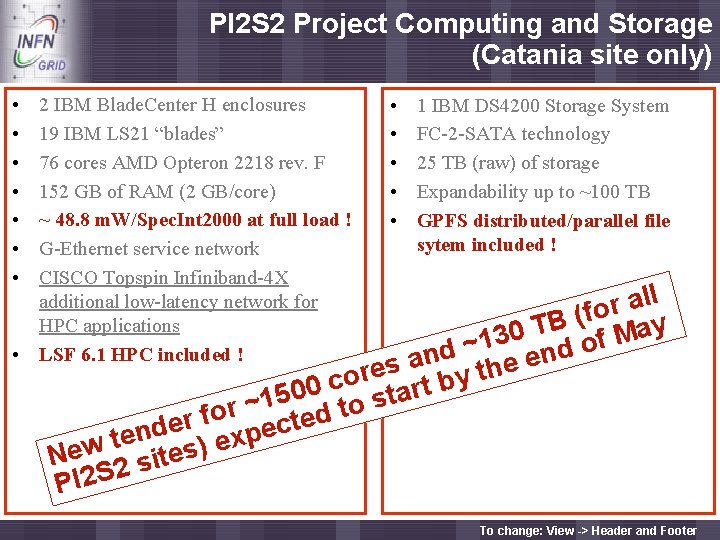

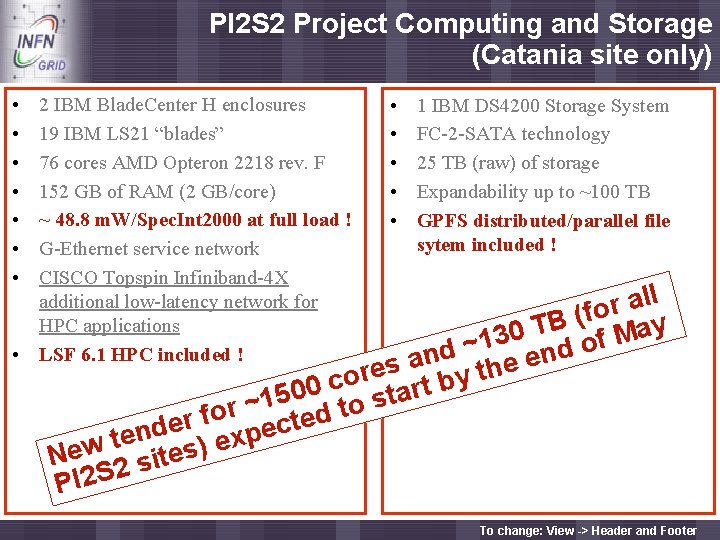

PI2S2 Project Computing and Storage (Catania site only)

Computing:
• 2 IBM BladeCenter H enclosures
• 19 IBM LS21 “blades”
• 76 cores AMD Opteron 2218 rev. F
• 152 GB of RAM (2 GB/core)
• ~48.8 mW/SpecInt2000 at full load!
• G-Ethernet service network
• CISCO Topspin Infiniband-4X additional low-latency network for HPC applications
• LSF 6.1 HPC included!

Storage:
• 1 IBM DS4200 Storage System
• FC-2-SATA technology
• 25 TB (raw) of storage
• Expandability up to ~100 TB
• GPFS distributed/parallel file system included!

New tender expected to start by the end of May for ~1500 cores and ~130 TB (for all PI2S2 sites).

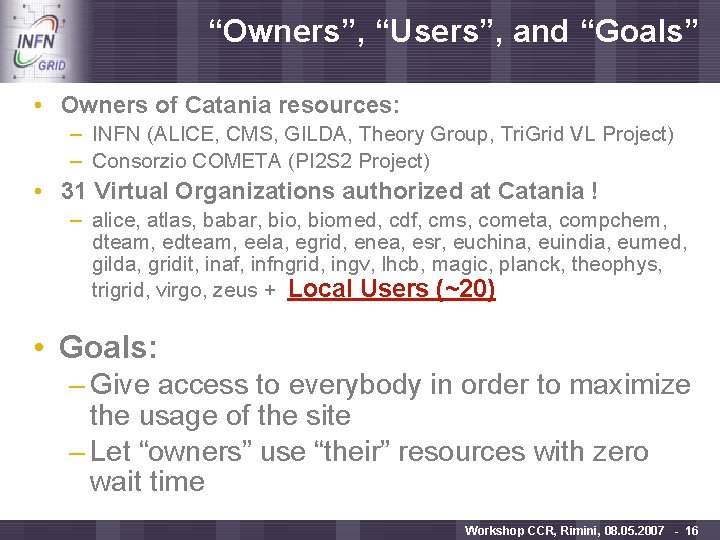

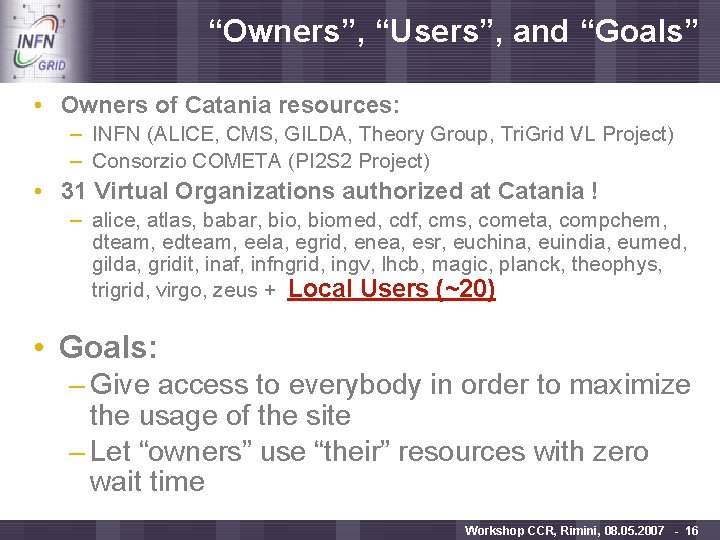

“Owners”, “Users”, and “Goals”

• Owners of Catania resources:
– INFN (ALICE, CMS, GILDA, Theory Group, TriGrid VL Project)
– Consorzio COMETA (PI2S2 Project)
• 31 Virtual Organizations authorized at Catania!
– alice, atlas, babar, biomed, cdf, cms, cometa, compchem, dteam, eela, egrid, enea, esr, euchina, euindia, eumed, gilda, gridit, inaf, infngrid, ingv, lhcb, magic, planck, theophys, trigrid, virgo, zeus + Local Users (~20)
• Goals:
– Give access to everybody in order to maximize the usage of the site
– Let “owners” use “their” resources with zero wait time

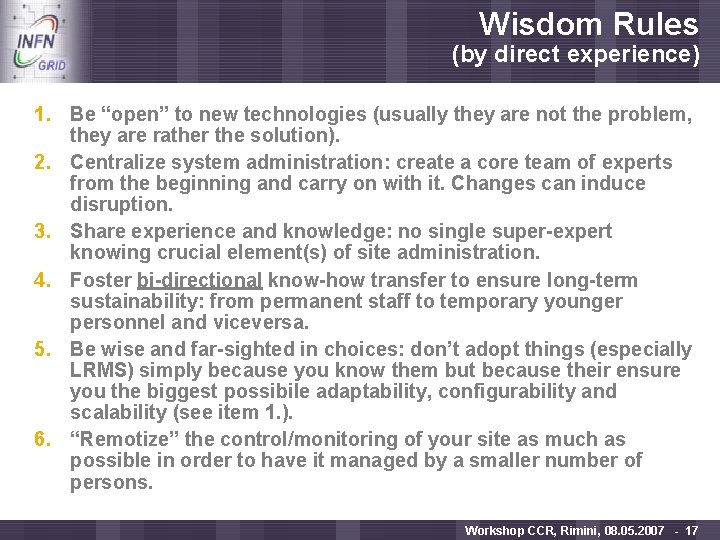

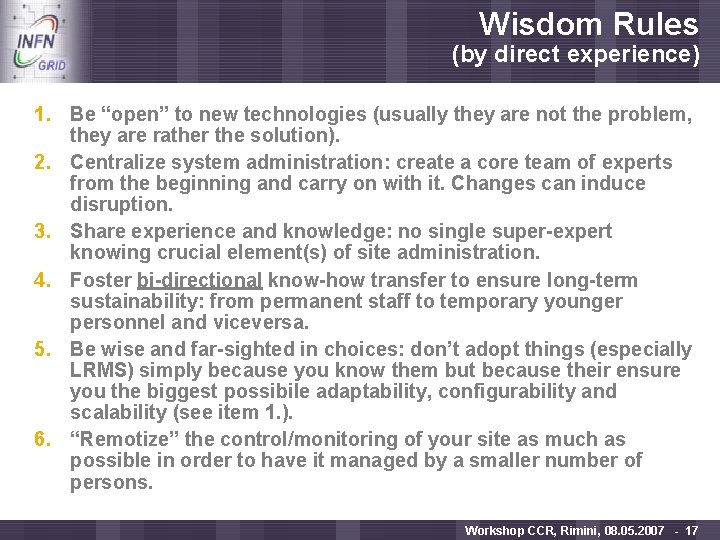

Wisdom Rules (by direct experience)

1. Be “open” to new technologies (usually they are not the problem, they are rather the solution).
2. Centralize system administration: create a core team of experts from the beginning and carry on with it. Changes can induce disruption.
3. Share experience and knowledge: no single super-expert should be the only one who knows crucial element(s) of site administration.
4. Foster bi-directional know-how transfer to ensure long-term sustainability: from permanent staff to temporary younger personnel and vice versa.
5. Be wise and far-sighted in choices: don’t adopt things (especially the LRMS) simply because you know them, but because they ensure the greatest possible adaptability, configurability and scalability (see item 1).
6. “Remotize” the control/monitoring of your site as much as possible so that it can be managed by a smaller number of people.

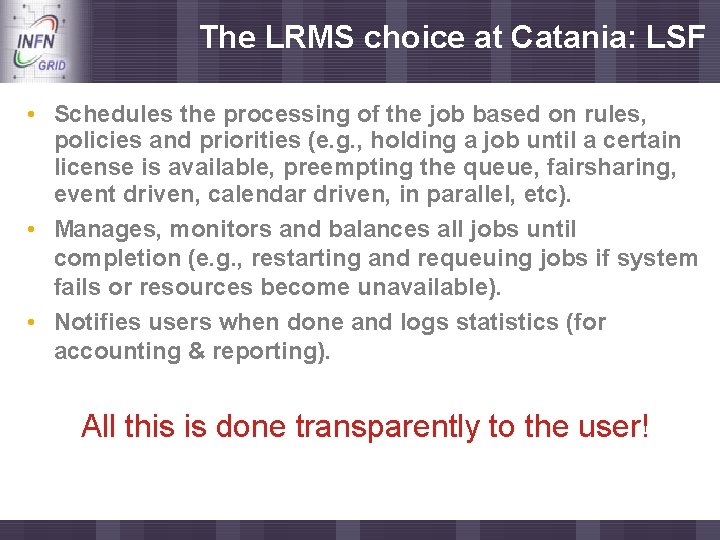

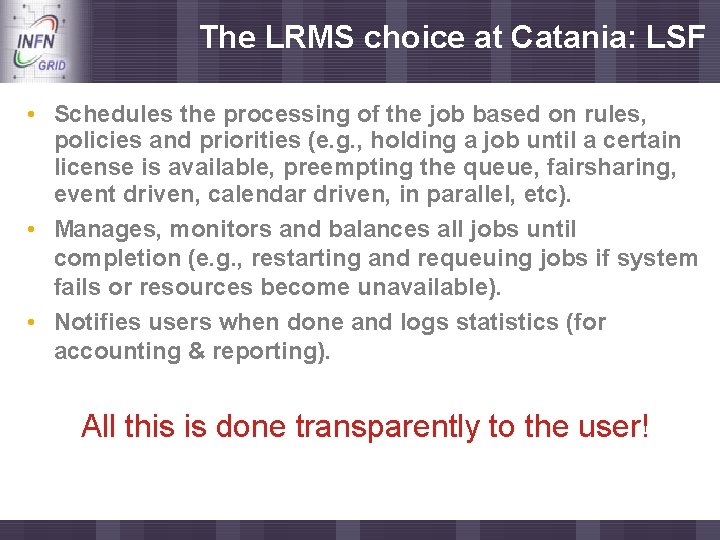

The LRMS choice at Catania: LSF

• Schedules the processing of the job based on rules, policies and priorities (e.g., holding a job until a certain license is available, preempting the queue, fairsharing, event driven, calendar driven, in parallel, etc.).
• Manages, monitors and balances all jobs until completion (e.g., restarting and requeuing jobs if the system fails or resources become unavailable).
• Notifies users when done and logs statistics (for accounting & reporting).

All this is done transparently to the user!

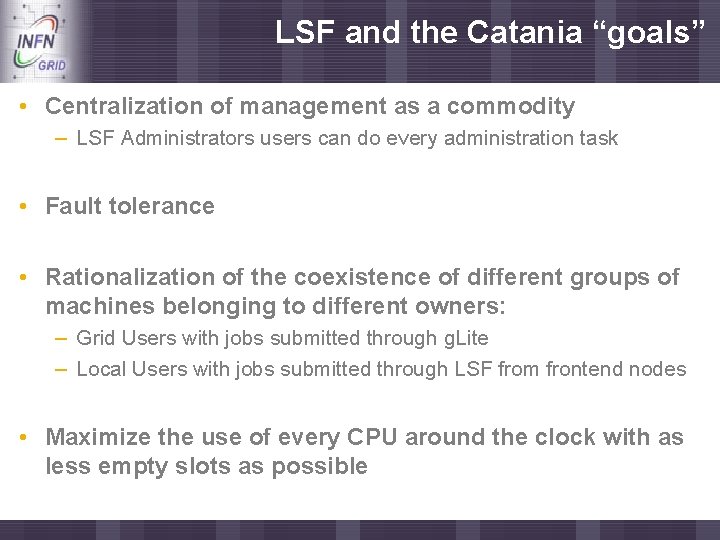

LSF and the Catania “goals”

• Centralization of management as a commodity
– LSF administrator users can perform every administration task
• Fault tolerance
• Rationalization of the coexistence of different groups of machines belonging to different owners:
– Grid users with jobs submitted through gLite
– Local users with jobs submitted through LSF from frontend nodes
• Maximize the use of every CPU around the clock, with as few empty slots as possible

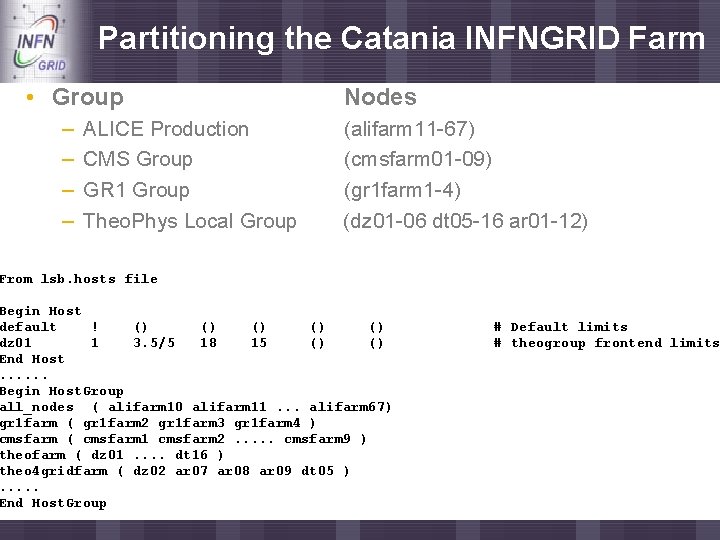

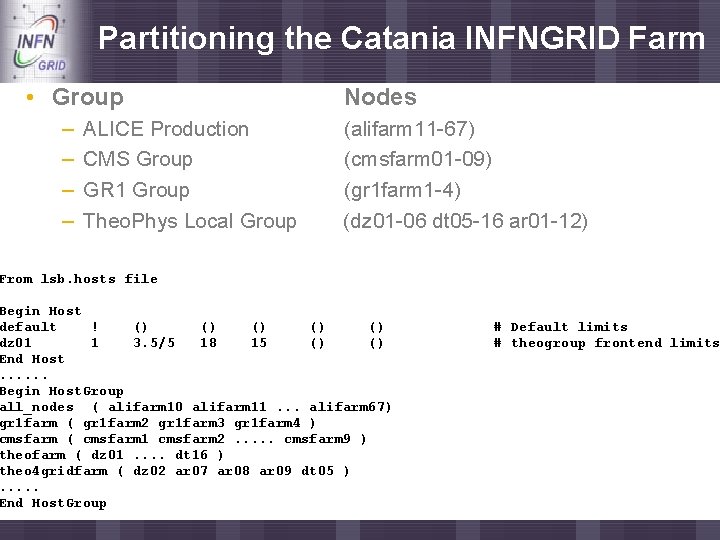

Partitioning the Catania INFNGRID Farm

| Group | Nodes |
|---|---|
| ALICE Production | alifarm11-67 |
| CMS Group | cmsfarm01-09 |
| GR1 Group | gr1farm1-4 |
| TheoPhys Local Group | dz01-06, dt05-16, ar01-12 |

From the lsb.hosts file:

    Begin Host
    default   !   ()      ()   ()              # Default limits
    dz01      1   3.5/5   18   15   ()   ()    # theogroup frontend limits
    End Host
    ...
    Begin HostGroup
    all_nodes      ( alifarm10 alifarm11 ... alifarm67 )
    gr1farm        ( gr1farm2 gr1farm3 gr1farm4 )
    cmsfarm        ( cmsfarm1 cmsfarm2 ... cmsfarm9 )
    theofarm       ( dz01 .. dt16 )
    theo4gridfarm  ( dz02 ar07 ar08 ar09 dt05 )
    ...
    End HostGroup
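To make the host-group partitioning concrete, here is a minimal Python sketch that reads `name ( host1 host2 ... )` lines from a HostGroup section and reports which groups a node belongs to. It is not an LSF tool: the parser and the function names `parse_host_groups` and `groups_of` are hypothetical, and only the two groups that the slide lists in full are used as input.

```python
import re

# Only the groups spelled out completely on the slide are reproduced here.
HOSTGROUP_TEXT = """
Begin HostGroup
gr1farm        ( gr1farm2 gr1farm3 gr1farm4 )
theo4gridfarm  ( dz02 ar07 ar08 ar09 dt05 )
End HostGroup
"""

def parse_host_groups(text):
    """Return {group_name: [hosts]} for simple 'name ( h1 h2 ... )' lines."""
    groups = {}
    inside = False
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop trailing comments
        if line == "Begin HostGroup":
            inside = True
        elif line == "End HostGroup":
            inside = False
        elif inside and line:
            match = re.fullmatch(r"(\S+)\s*\((.*)\)", line)
            if match:  # lines without parentheses are skipped
                groups[match.group(1)] = match.group(2).split()
    return groups

def groups_of(host, groups):
    """Return the sorted names of all groups that list the given host."""
    return sorted(name for name, members in groups.items() if host in members)

groups = parse_host_groups(HOSTGROUP_TEXT)
print(groups_of("dz02", groups))
```

A lookup like this is one way to check which “owner” a node is associated with before assigning it to a queue.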

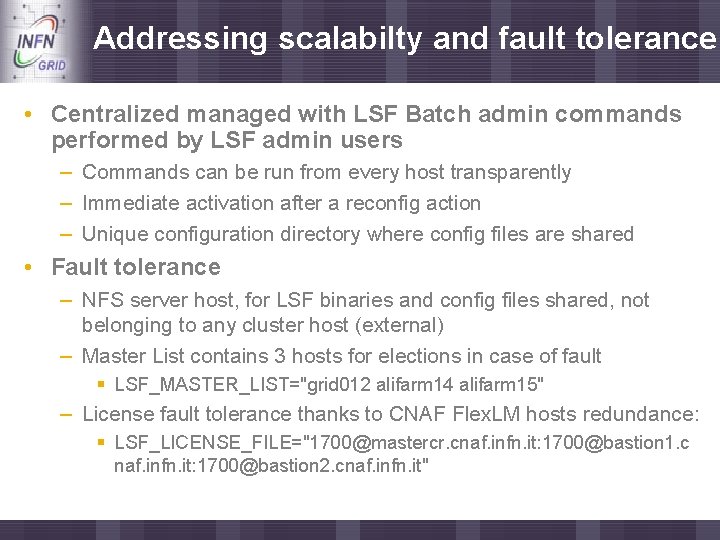

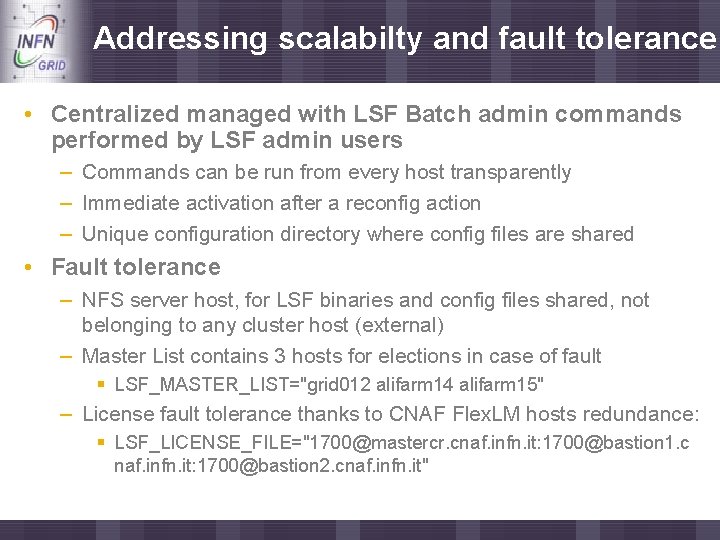

Addressing scalability and fault tolerance

• Centralized management with LSF Batch: admin commands are performed by LSF admin users
– Commands can be run from every host transparently
– Immediate activation after a reconfig action
– Unique configuration directory where config files are shared
• Fault tolerance
– The NFS server host that shares the LSF binaries and config files is external (it is not one of the cluster hosts)
– The master list contains 3 hosts for elections in case of fault:
    LSF_MASTER_LIST="grid012 alifarm14 alifarm15"
– License fault tolerance thanks to the redundancy of the CNAF FlexLM hosts:
    LSF_LICENSE_FILE="1700@mastercr.cnaf.infn.it:1700@bastion1.cnaf.infn.it:1700@bastion2.cnaf.infn.it"
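The failover idea behind the master list can be sketched in a few lines of Python. This is an illustration only, assuming that the first reachable host in the list takes the master role; the functions `elect_master` and `parse_license_servers` and the simulated failure are hypothetical, not part of LSF.

```python
def parse_master_list(value):
    """Split an LSF_MASTER_LIST-style string into an ordered list of hosts."""
    return value.split()

def elect_master(candidates, is_alive):
    """Return the first candidate that is alive, or None if all are down."""
    for host in candidates:
        if is_alive(host):
            return host
    return None

def parse_license_servers(value):
    """Split 'port@host:port@host' into a list of (port, host) tuples."""
    servers = []
    for entry in value.split(":"):
        port, host = entry.split("@", 1)
        servers.append((int(port), host))
    return servers

masters = parse_master_list("grid012 alifarm14 alifarm15")
down = {"grid012"}  # hypothetical failure of the first candidate
print(elect_master(masters, lambda host: host not in down))

licenses = parse_license_servers(
    "1700@mastercr.cnaf.infn.it:1700@bastion1.cnaf.infn.it:1700@bastion2.cnaf.infn.it"
)
print(len(licenses), "license servers configured")
```

With three candidates in each list, the cluster keeps a master and a license server as long as at least one host in each list is reachable.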

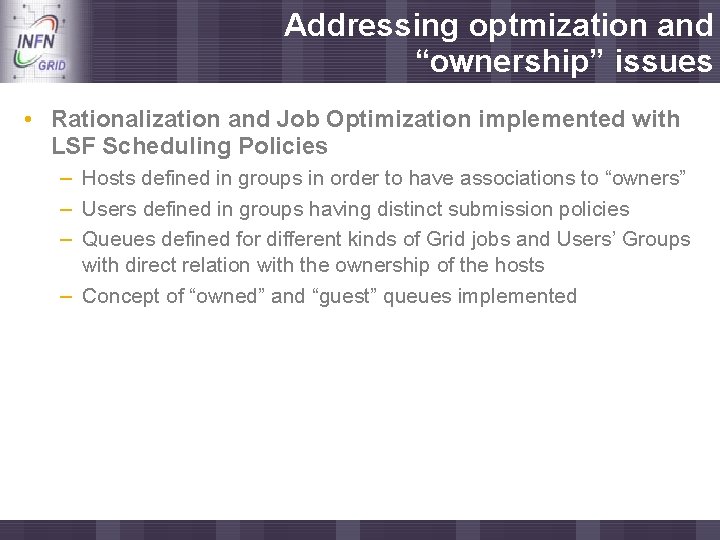

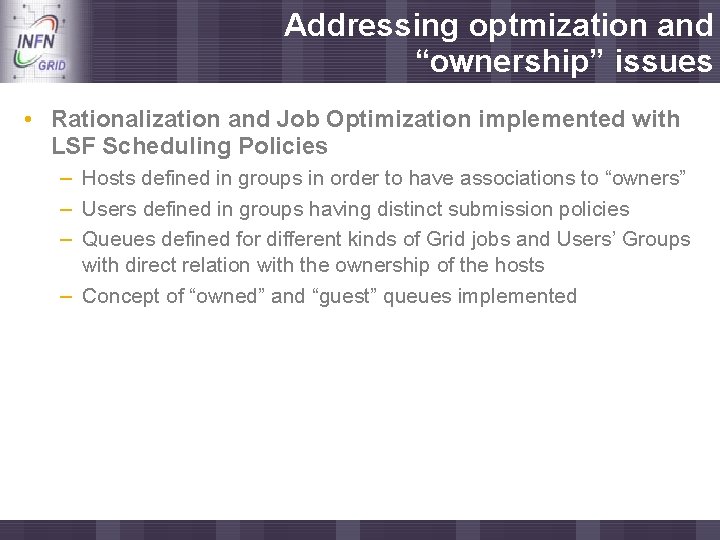

Addressing optimization and “ownership” issues

• Rationalization and job optimization implemented with LSF scheduling policies
– Hosts defined in groups in order to have associations to “owners”
– Users defined in groups having distinct submission policies
– Queues defined for different kinds of Grid jobs and users’ groups, directly related to the ownership of the hosts
– Concept of “owned” and “guest” queues implemented

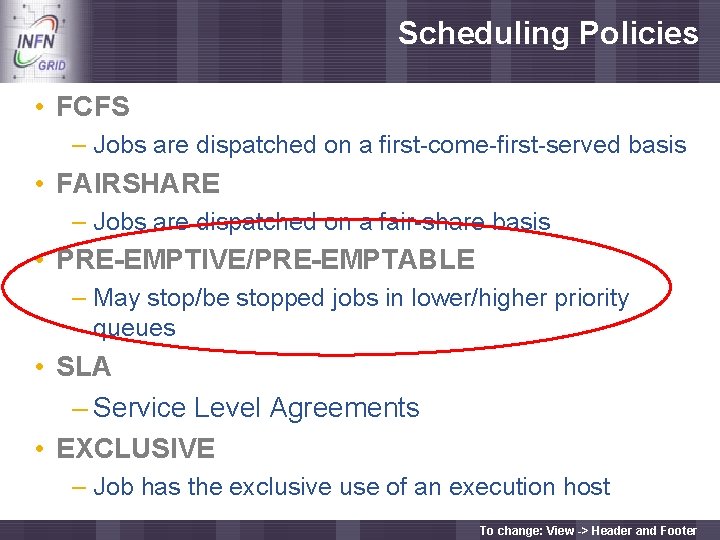

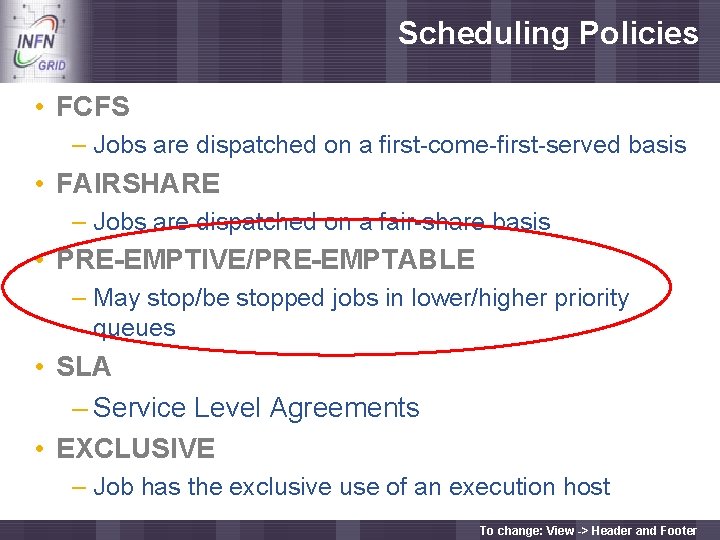

Scheduling Policies

• FCFS
– Jobs are dispatched on a first-come-first-served basis
• FAIRSHARE
– Jobs are dispatched on a fair-share basis
• PRE-EMPTIVE/PRE-EMPTABLE
– Jobs may stop jobs in lower priority queues, or be stopped by jobs in higher priority queues
• SLA
– Service Level Agreements
• EXCLUSIVE
– Job has the exclusive use of an execution host
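The difference between the first two policies can be shown with a toy Python sketch. It assumes a deliberately simplified fair-share rule (dispatch next from the user with the lowest ratio of dispatched jobs to share); LSF derives its fair-share priority from more inputs, so this only illustrates the principle. The job tuples, user names and shares are hypothetical.

```python
from collections import Counter

def fcfs_order(jobs):
    """jobs: list of (job_id, user, submit_time). Oldest submission first."""
    return [job[0] for job in sorted(jobs, key=lambda job: job[2])]

def fairshare_order(jobs, shares):
    """Repeatedly pick the job whose user has the lowest dispatched/share ratio.

    Ties are broken by submission time, so equal users fall back to FCFS.
    """
    dispatched = Counter()
    pending = sorted(jobs, key=lambda job: job[2])
    order = []
    while pending:
        best = min(pending, key=lambda job: (dispatched[job[1]] / shares[job[1]], job[2]))
        pending.remove(best)
        dispatched[best[1]] += 1
        order.append(best[0])
    return order

# Hypothetical workload: user "a" submits three jobs before user "b" submits one.
jobs = [(1, "a", 0), (2, "a", 1), (3, "a", 2), (4, "b", 3)]
print(fcfs_order(jobs))
print(fairshare_order(jobs, {"a": 1, "b": 1}))
```

Under FCFS the single job of user "b" waits behind all jobs of user "a"; under the simplified fair-share rule it moves ahead as soon as "a" has had one job dispatched.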

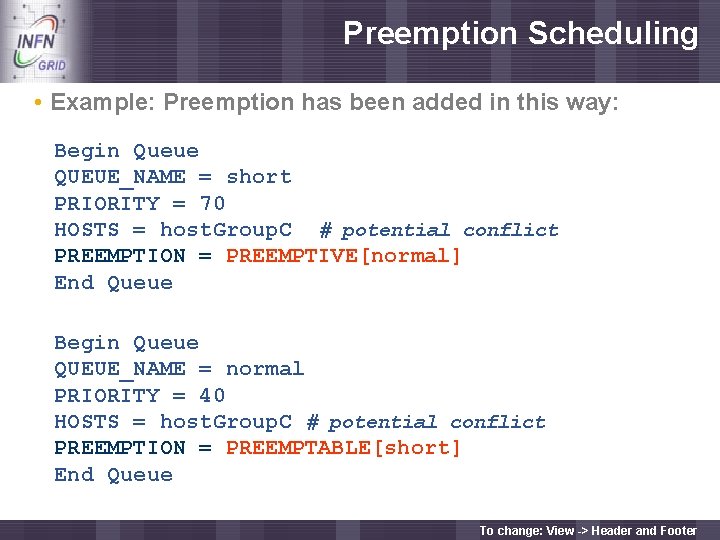

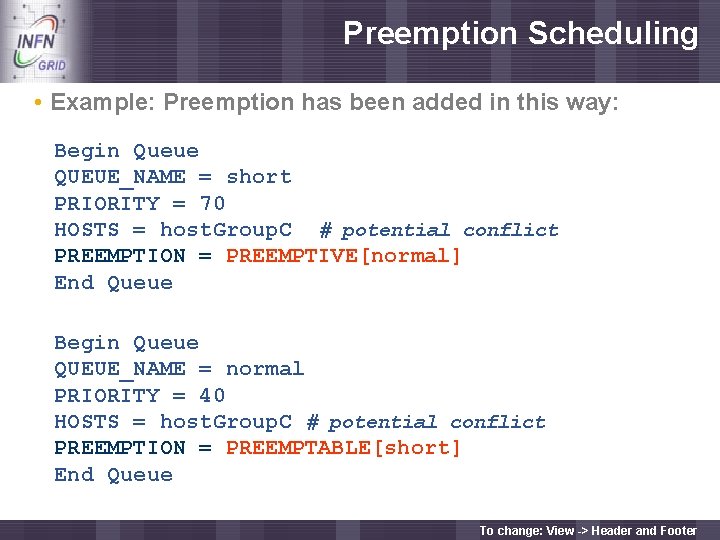

Preemption Scheduling

• Example: preemption has been added to the queue definitions in this way:

    Begin Queue
    QUEUE_NAME = short
    PRIORITY   = 70
    HOSTS      = hostGroupC          # potential conflict
    PREEMPTION = PREEMPTIVE[normal]
    End Queue

    Begin Queue
    QUEUE_NAME = normal
    PRIORITY   = 40
    HOSTS      = hostGroupC          # potential conflict
    PREEMPTION = PREEMPTABLE[short]
    End Queue

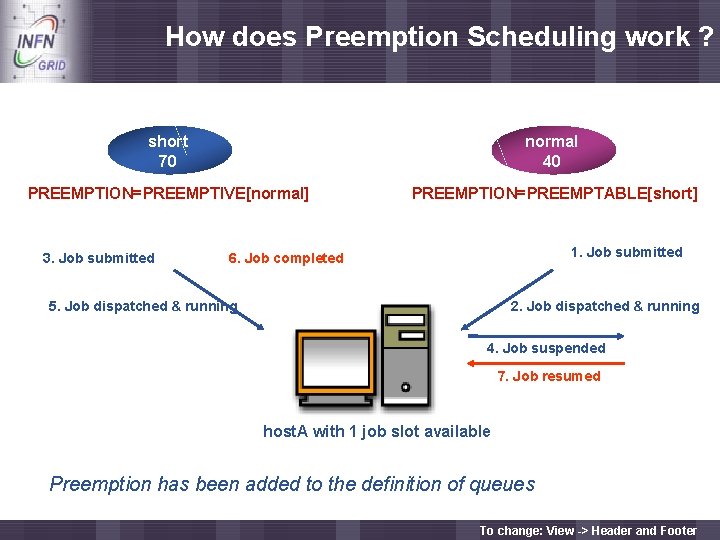

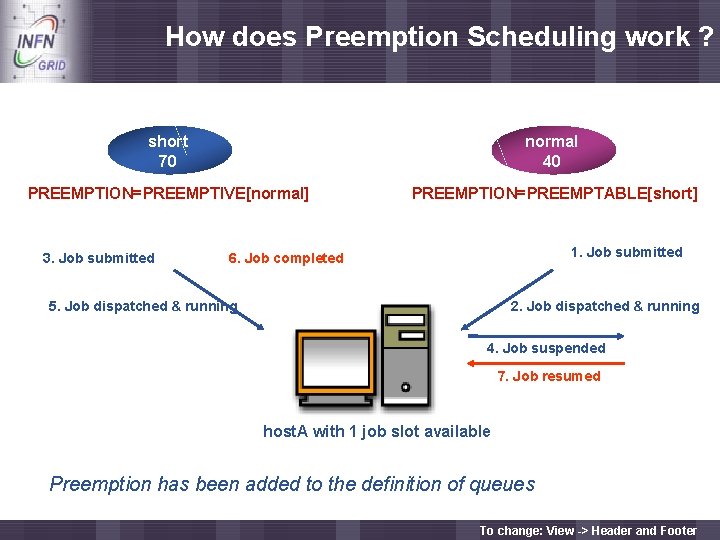

How does Preemption Scheduling work?

Two queues share hostA, which has 1 job slot available. Preemption has been added to the definition of the queues:

• short (priority 70): PREEMPTION=PREEMPTIVE[normal]
• normal (priority 40): PREEMPTION=PREEMPTABLE[short]

Sequence of events:

1. Job submitted (queue normal)
2. Job dispatched & running (queue normal)
3. Job submitted (queue short)
4. Job suspended (queue normal)
5. Job dispatched & running (queue short)
6. Job completed (queue short)
7. Job resumed (queue normal)
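The seven-step sequence on a host with one job slot can be replayed with a small Python simulation. This is a sketch under simplifying assumptions: a single slot, a suspended job resumed as soon as the slot frees up, and no re-dispatch of jobs that could not preempt. The function `simulate` and the job names are hypothetical.

```python
def simulate(events, preemptive):
    """Replay submit/finish events on a host with a single job slot.

    events:     list of ("submit", job, queue) or ("finish", job), in time order
    preemptive: {queue: set of queues whose jobs it may preempt}
    Returns a log of state changes as strings.
    """
    log = []
    running = None   # job currently holding the slot
    suspended = []   # stack of preempted jobs
    queue_of = {}

    for event in events:
        if event[0] == "submit":
            _, job, queue = event
            queue_of[job] = queue
            log.append(f"{job} submitted to {queue}")
            if running is None:
                running = job
                log.append(f"{job} dispatched and running")
            elif queue_of[running] in preemptive.get(queue, set()):
                suspended.append(running)
                log.append(f"{running} suspended")
                running = job
                log.append(f"{job} dispatched and running")
            else:
                # Simplification: jobs that cannot preempt are only logged.
                log.append(f"{job} pending")
        elif event[0] == "finish":
            _, job = event
            log.append(f"{job} completed")
            if job == running:
                running = suspended.pop() if suspended else None
                if running is not None:
                    log.append(f"{running} resumed")
    return log

events = [
    ("submit", "jobA", "normal"),
    ("submit", "jobB", "short"),
    ("finish", "jobB"),
]
for step, line in enumerate(simulate(events, {"short": {"normal"}}), start=1):
    print(step, line)
```

The log has seven entries, one for each numbered step on the slide.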

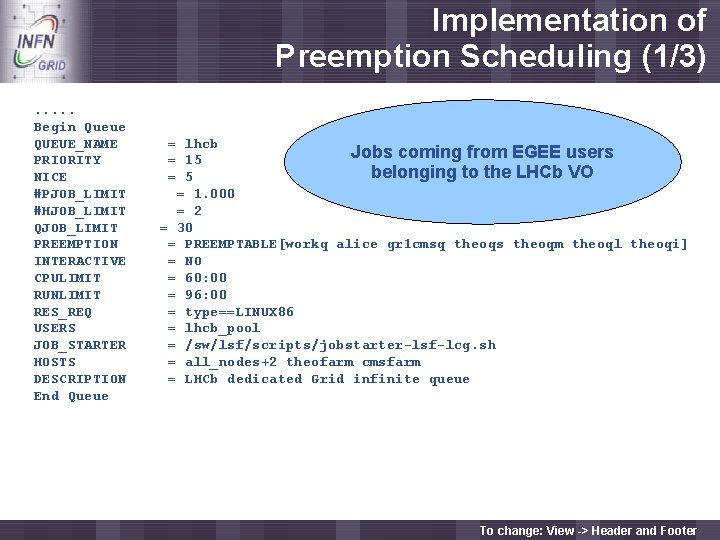

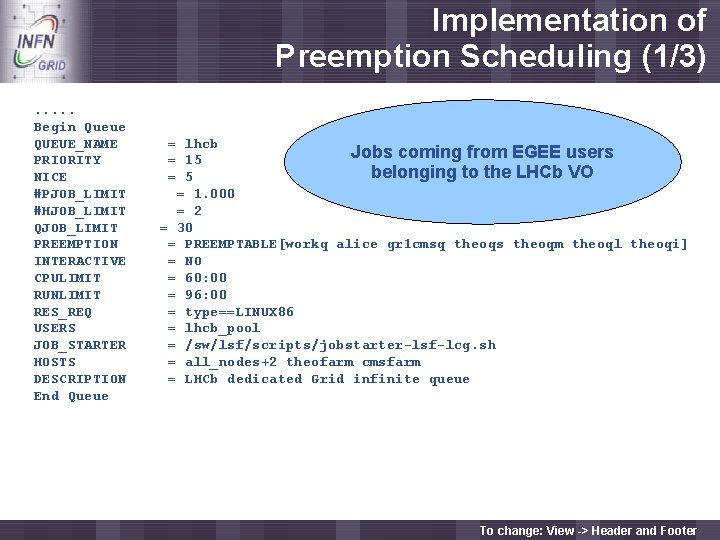

Implementation of Preemption Scheduling (1/3)

Jobs coming from EGEE users belonging to the LHCb VO:

    ...
    Begin Queue
    QUEUE_NAME   = lhcb
    PRIORITY     = 15
    NICE         = 5
    #PJOB_LIMIT  = 1.000
    #HJOB_LIMIT  = 2
    QJOB_LIMIT   = 30
    PREEMPTION   = PREEMPTABLE[workq alice gr1cmsq theoqs theoqm theoql theoqi]
    INTERACTIVE  = NO
    CPULIMIT     = 60:00
    RUNLIMIT     = 96:00
    RES_REQ      = type==LINUX86
    USERS        = lhcb_pool
    JOB_STARTER  = /sw/lsf/scripts/jobstarter-lsf-lcg.sh
    HOSTS        = all_nodes+2 theofarm cmsfarm
    DESCRIPTION  = LHCb dedicated Grid infinite queue
    End Queue

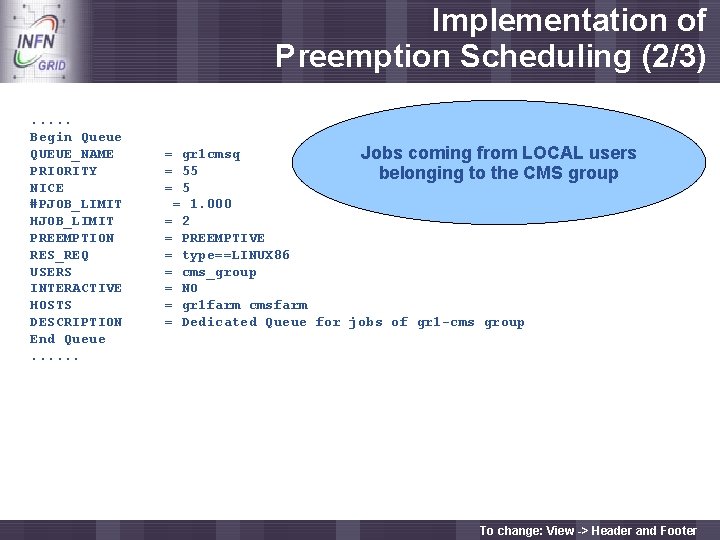

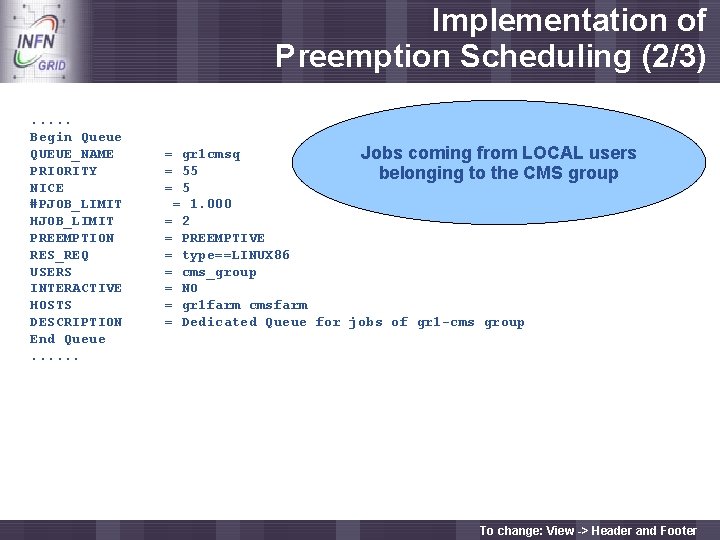

Implementation of Preemption Scheduling (2/3)

Jobs coming from LOCAL users belonging to the CMS group:

    ...
    Begin Queue
    QUEUE_NAME   = gr1cmsq
    PRIORITY     = 55
    NICE         = 5
    #PJOB_LIMIT  = 1.000
    HJOB_LIMIT   = 2
    PREEMPTION   = PREEMPTIVE
    RES_REQ      = type==LINUX86
    USERS        = cms_group
    INTERACTIVE  = NO
    HOSTS        = gr1farm cmsfarm
    DESCRIPTION  = Dedicated Queue for jobs of gr1-cms group
    End Queue
    ...

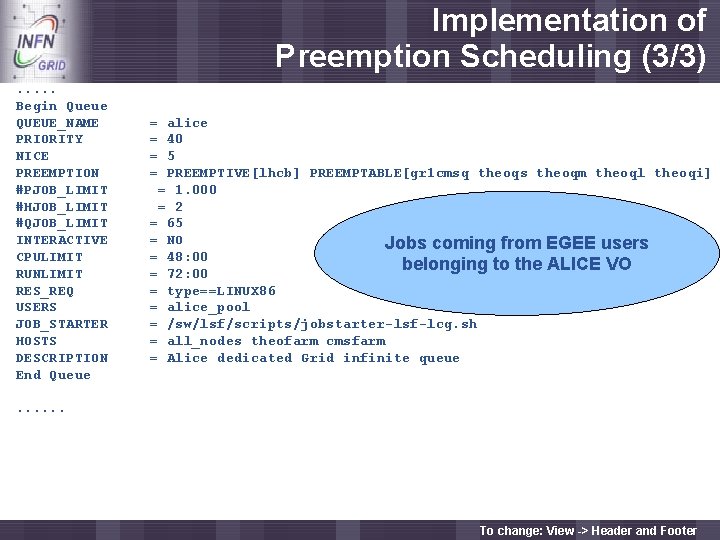

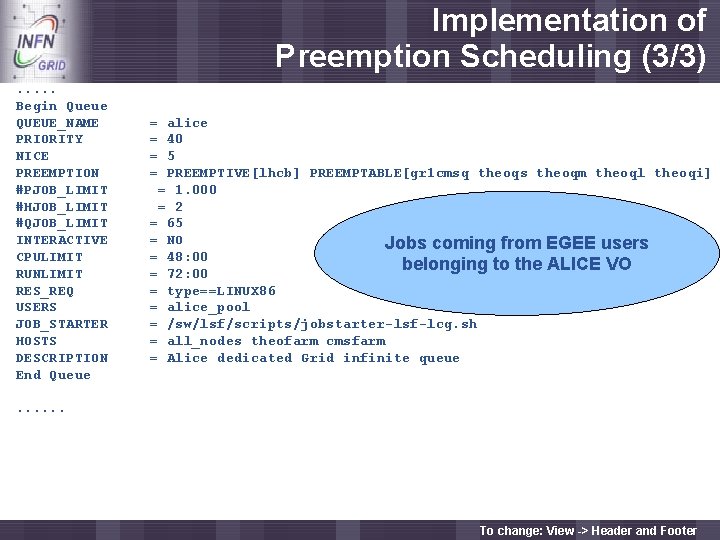

Implementation of Preemption Scheduling (3/3)

Jobs coming from EGEE users belonging to the ALICE VO:

    ...
    Begin Queue
    QUEUE_NAME   = alice
    PRIORITY     = 40
    NICE         = 5
    PREEMPTION   = PREEMPTIVE[lhcb] PREEMPTABLE[gr1cmsq theoqs theoqm theoql theoqi]
    #PJOB_LIMIT  = 1.000
    #HJOB_LIMIT  = 2
    #QJOB_LIMIT  = 65
    INTERACTIVE  = NO
    CPULIMIT     = 48:00
    RUNLIMIT     = 72:00
    RES_REQ      = type==LINUX86
    USERS        = alice_pool
    JOB_STARTER  = /sw/lsf/scripts/jobstarter-lsf-lcg.sh
    HOSTS        = all_nodes theofarm cmsfarm
    DESCRIPTION  = Alice dedicated Grid infinite queue
    End Queue
    ...
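The relation between the three queues can be checked with a short Python sketch that reads the PRIORITY and PREEMPTION values from the slides and answers "can queue X preempt queue Y?". The rule used here is an assumption about the semantics, not a statement of how LSF evaluates it: a higher-priority queue preempts a lower one if it is PREEMPTIVE for that queue (an empty list meaning any lower-priority queue), or if the lower queue declares itself PREEMPTABLE by it. The names `parse_preemption` and `can_preempt` are hypothetical.

```python
import re

# PRIORITY and PREEMPTION values as shown in the three queue definitions.
QUEUES = {
    "lhcb": {
        "priority": 15,
        "preemption": "PREEMPTABLE[workq alice gr1cmsq theoqs theoqm theoql theoqi]",
    },
    "alice": {
        "priority": 40,
        "preemption": "PREEMPTIVE[lhcb] PREEMPTABLE[gr1cmsq theoqs theoqm theoql theoqi]",
    },
    "gr1cmsq": {"priority": 55, "preemption": "PREEMPTIVE"},
}

def parse_preemption(value):
    """Return {'PREEMPTIVE': list or None, 'PREEMPTABLE': list or None}.

    None means the keyword is absent; an empty list means no explicit queue list.
    """
    result = {"PREEMPTIVE": None, "PREEMPTABLE": None}
    pattern = r"(PREEMPTIVE|PREEMPTABLE)(?:\[([^\]]*)\])?"
    for keyword, names in re.findall(pattern, value):
        result[keyword] = names.split()
    return result

def can_preempt(high, low, queues):
    """Assumed rule: see the lead-in paragraph for the simplifications made."""
    if queues[high]["priority"] <= queues[low]["priority"]:
        return False
    high_rule = parse_preemption(queues[high]["preemption"])["PREEMPTIVE"]
    low_rule = parse_preemption(queues[low]["preemption"])["PREEMPTABLE"]
    by_high = high_rule is not None and (not high_rule or low in high_rule)
    by_low = low_rule is not None and (not low_rule or high in low_rule)
    return by_high or by_low

for high in QUEUES:
    for low in QUEUES:
        if high != low and can_preempt(high, low, QUEUES):
            print(f"{high} can preempt {low}")
```

Under these assumptions the local CMS queue sits at the top, ALICE in the middle and LHCb at the bottom, which matches the “owned” versus “guest” queue concept.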

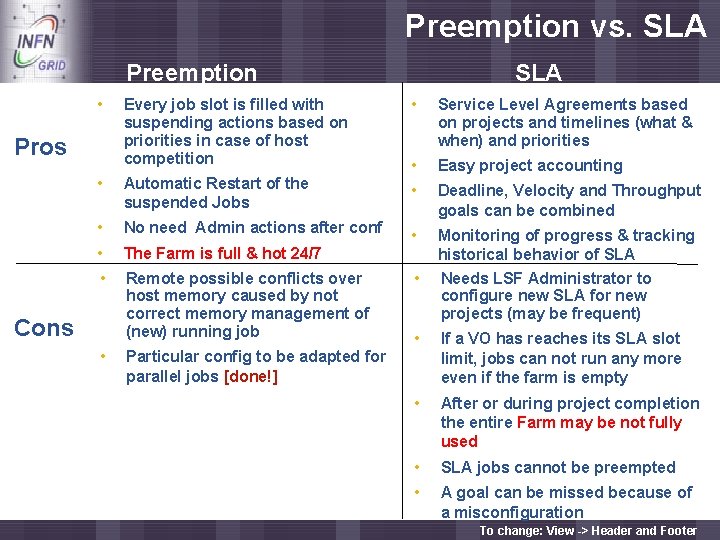

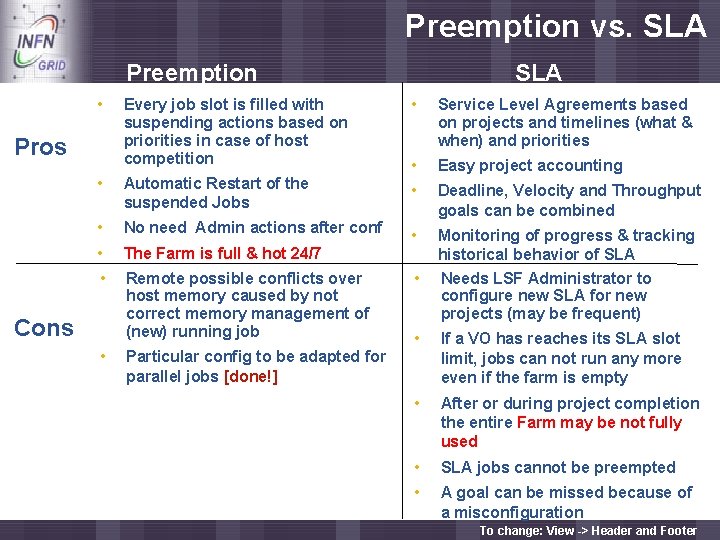

Preemption vs. SLA

Preemption
• Pros
– Every job slot is filled, with suspending actions based on priorities in case of host competition
– Automatic restart of the suspended jobs
– No need for admin actions after configuration
– The farm is full & hot 24/7
• Cons
– Remote possibility of conflicts over host memory caused by incorrect memory management of the (new) running job
– Particular config to be adapted for parallel jobs [done!]

SLA
• Pros
– Service Level Agreements based on projects and timelines (what & when) and priorities
– Easy project accounting
– Deadline, Velocity and Throughput goals can be combined
– Monitoring of progress & tracking historical behavior of SLA
• Cons
– Needs the LSF Administrator to configure a new SLA for new projects (may be frequent)
– If a VO reaches its SLA slot limit, its jobs cannot run any more even if the farm is empty
– After or during project completion the entire farm may not be fully used
– SLA jobs cannot be preempted
– A goal can be missed because of a misconfiguration
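The utilization argument in this comparison can be illustrated with a toy Python calculation. It assumes a fixed per-VO slot cap as a stand-in for an SLA slot limit; the function `allocate` and all numbers are hypothetical and are not measurements from the Catania farm.

```python
def allocate(total_slots, demand, slot_caps=None):
    """Grant slots to VOs in order and return (granted, idle_slots).

    demand:    {vo: number of jobs waiting}
    slot_caps: {vo: maximum slots}, or None for no per-VO limit
    """
    free = total_slots
    granted = {}
    for vo, wanted in demand.items():
        cap = wanted if slot_caps is None else slot_caps.get(vo, wanted)
        granted[vo] = min(wanted, cap, free)
        free -= granted[vo]
    return granted, free

# Hypothetical farm of 100 slots where only one VO has work to do.
demand = {"alice": 100}
print(allocate(100, demand, slot_caps={"alice": 30}))  # capped: slots stay idle
print(allocate(100, demand))                           # uncapped: farm is full
```

With a cap, idle slots remain even though jobs are waiting; without one, the farm fills up, and preemption is what lets the “owners” reclaim their hosts when their own jobs arrive.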

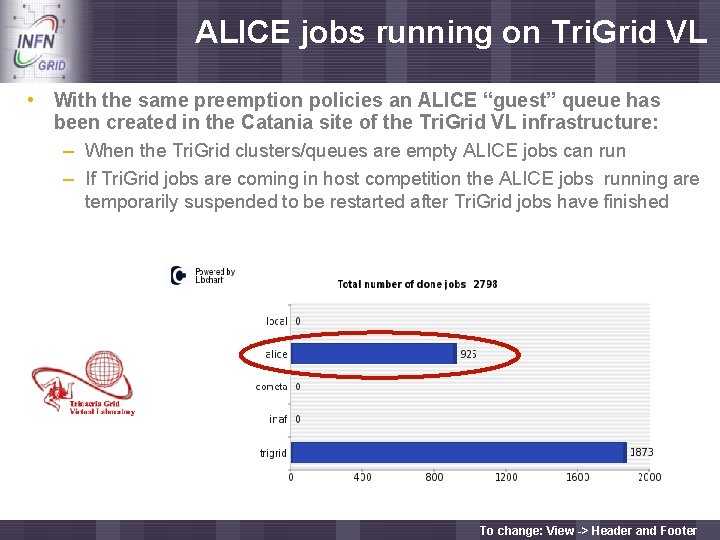

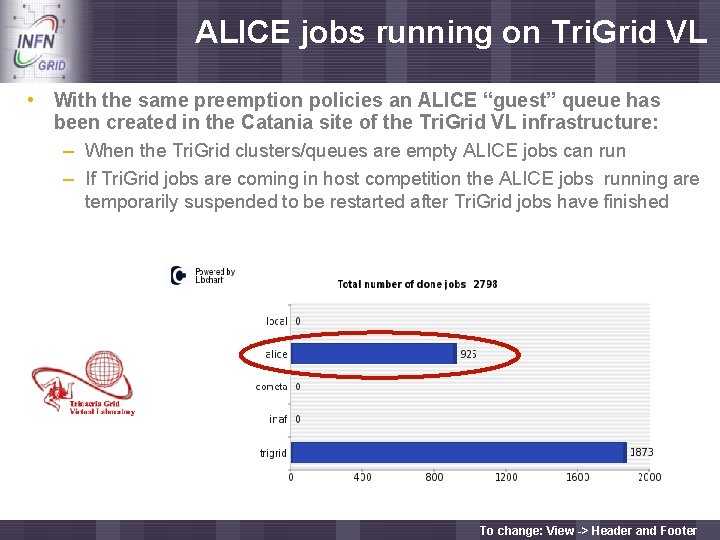

ALICE jobs running on TriGrid VL

• With the same preemption policies, an ALICE “guest” queue has been created at the Catania site of the TriGrid VL infrastructure:
– When the TriGrid clusters/queues are empty, ALICE jobs can run
– If incoming TriGrid jobs compete for the same hosts, the running ALICE jobs are temporarily suspended and resumed after the TriGrid jobs have finished

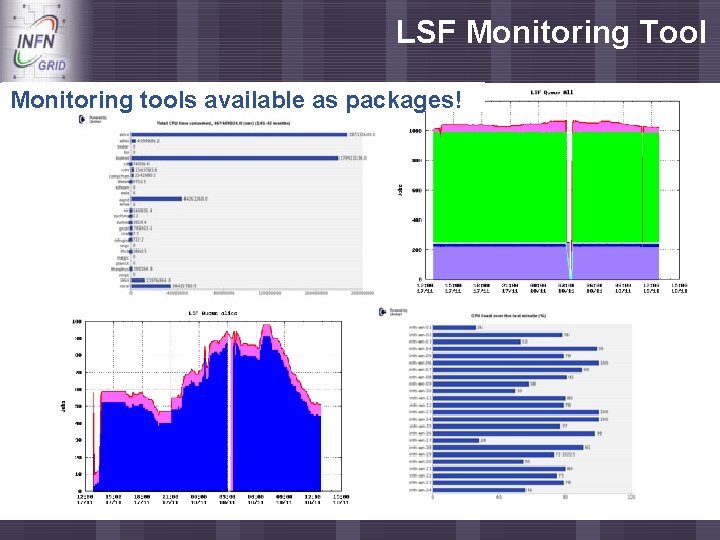

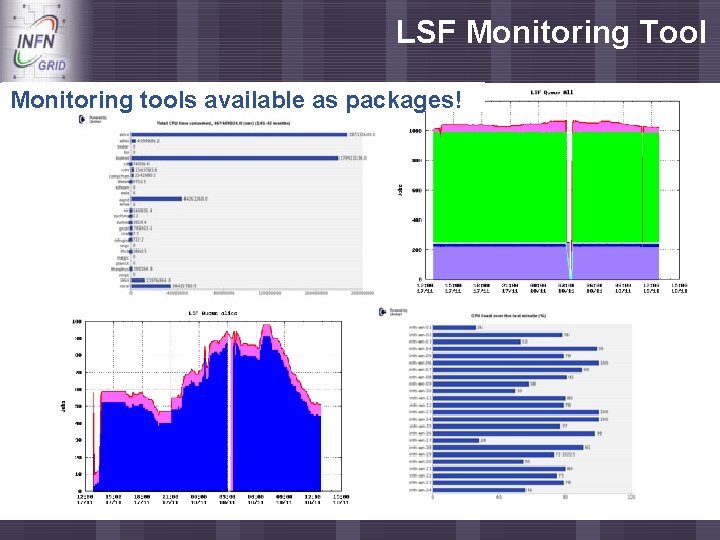

LSF Monitoring Tool

Monitoring tools available as packages!

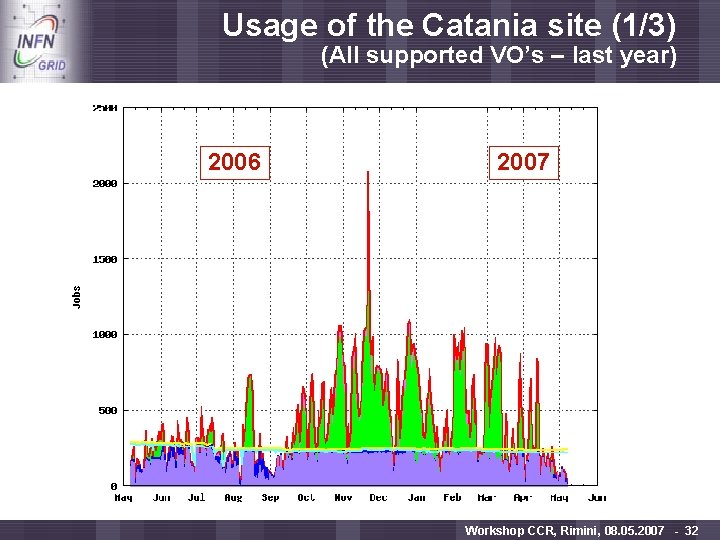

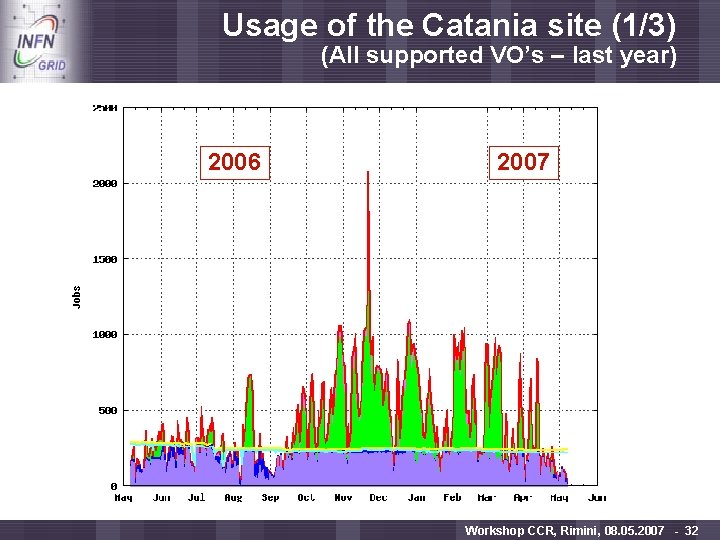

Usage of the Catania site (1/3)
(All supported VOs – last year)

[Plots: 2006 and 2007]

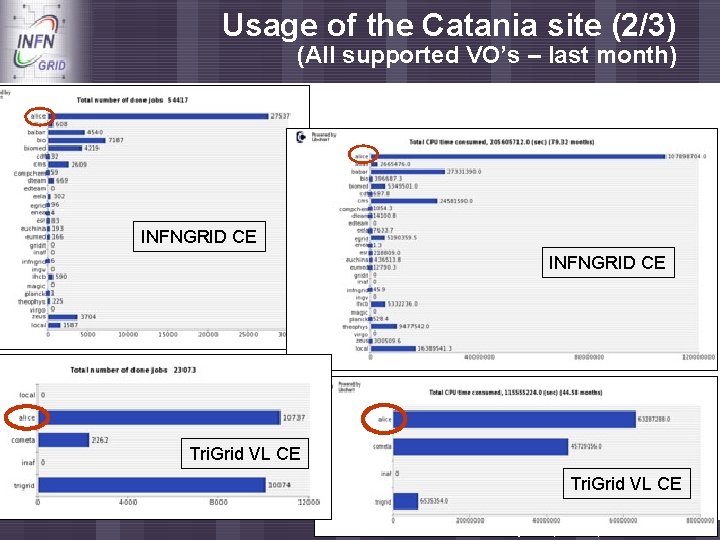

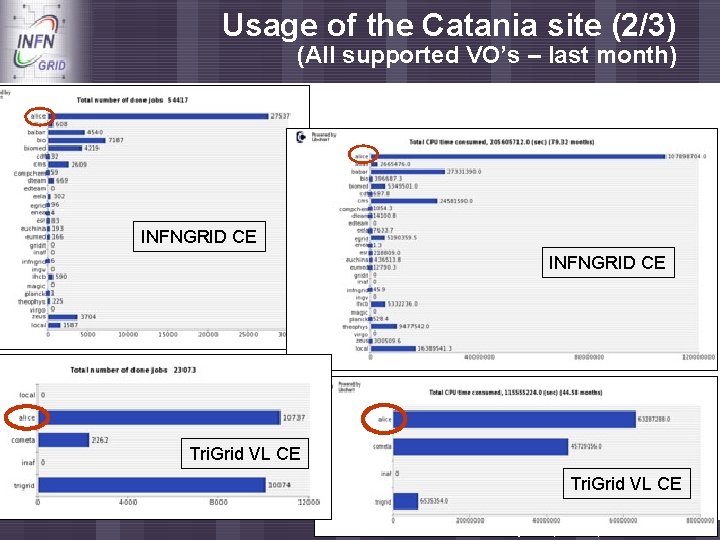

Usage of the Catania site (2/3)
(All supported VOs – last month)

[Plots: INFNGRID CE and TriGrid VL CE]

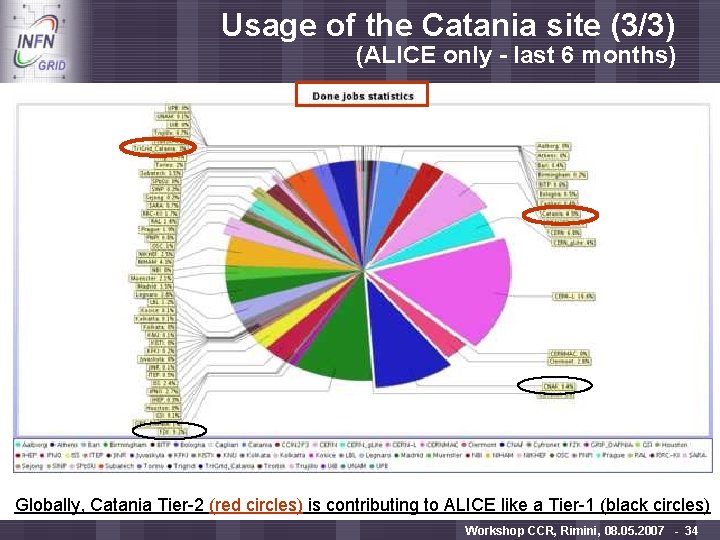

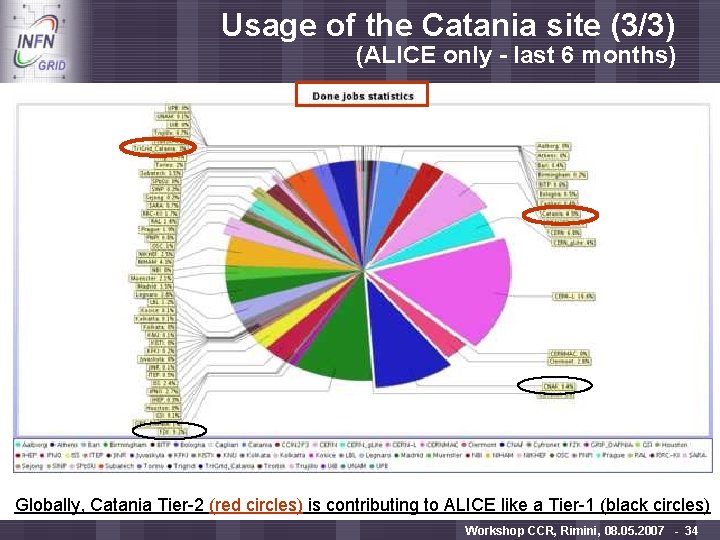

Usage of the Catania site (3/3)
(ALICE only – last 6 months)

Globally, the Catania Tier-2 (red circles) is contributing to ALICE like a Tier-1 (black circles).

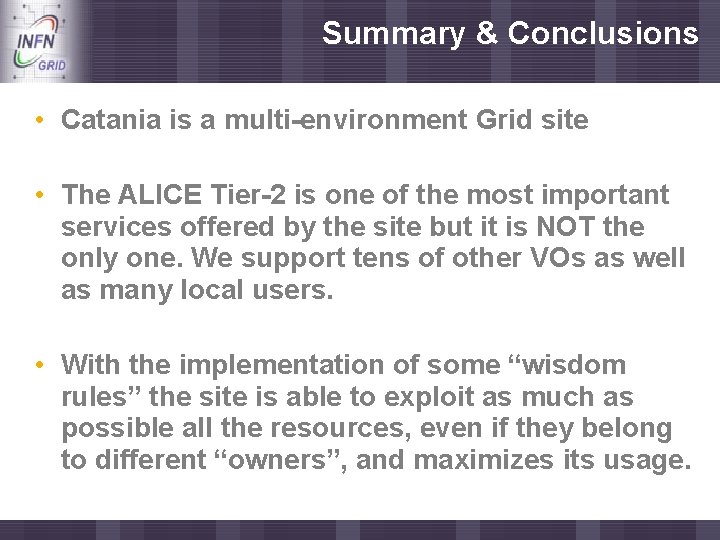

Summary & Conclusions

• Catania is a multi-environment Grid site.
• The ALICE Tier-2 is one of the most important services offered by the site, but it is NOT the only one. We support tens of other VOs as well as many local users.
• By applying a few “wisdom rules”, the site exploits all of its resources as much as possible, even though they belong to different “owners”, and maximizes their usage.

Questions…