The Imperative of Disciplined Parallelism: A Hardware Architect’s Perspective


- Slides: 54

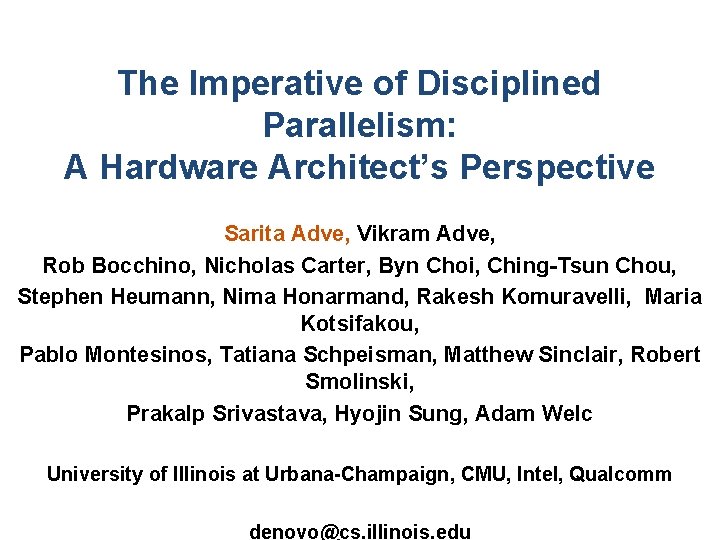

The Imperative of Disciplined Parallelism: A Hardware Architect’s Perspective

Sarita Adve, Vikram Adve, Rob Bocchino, Nicholas Carter, Byn Choi, Ching-Tsun Chou, Stephen Heumann, Nima Honarmand, Rakesh Komuravelli, Maria Kotsifakou, Pablo Montesinos, Tatiana Shpeisman, Matthew Sinclair, Robert Smolinski, Prakalp Srivastava, Hyojin Sung, Adam Welc

University of Illinois at Urbana-Champaign, CMU, Intel, Qualcomm

denovo@cs.illinois.edu

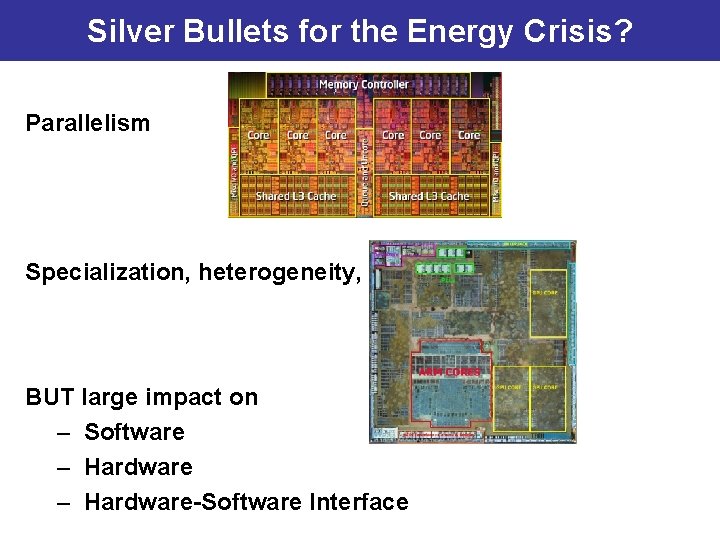

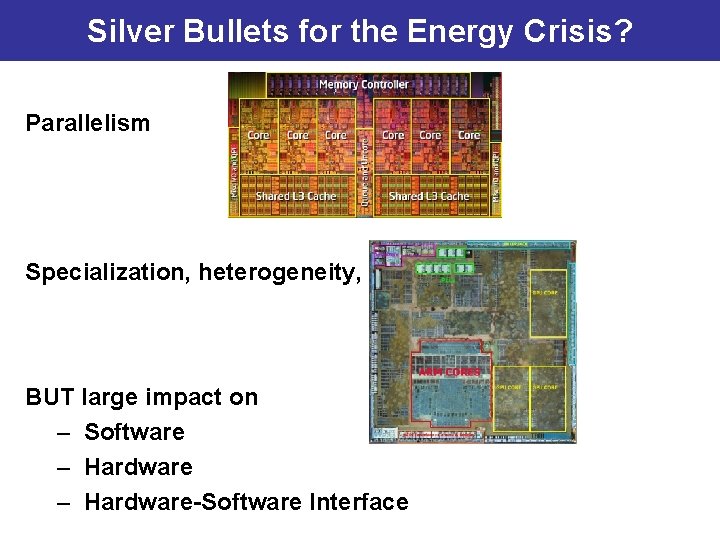

Silver Bullets for the Energy Crisis?

- Parallelism
- Specialization, heterogeneity, …
- BUT large impact on
  - Software
  - Hardware-Software Interface

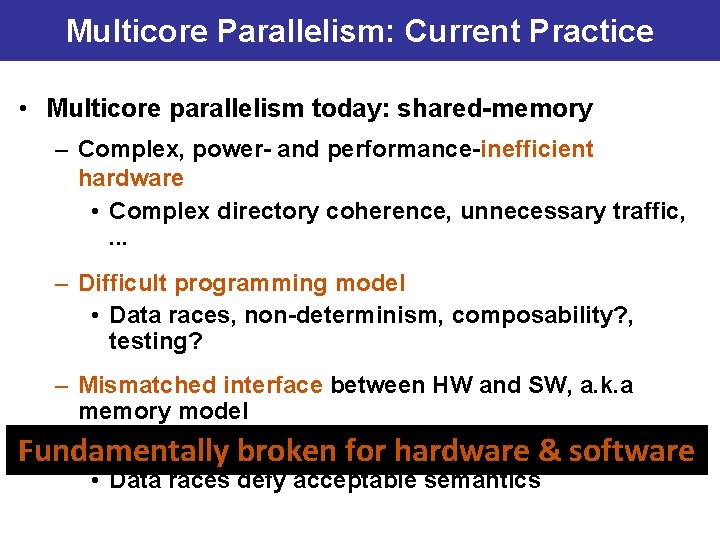

Multicore Parallelism: Current Practice

- Multicore parallelism today: shared-memory
  - Complex, power- and performance-inefficient hardware
    - Complex directory coherence, unnecessary traffic, ...
  - Difficult programming model
    - Data races, non-determinism, composability?, testing?
  - Mismatched interface between HW and SW, a.k.a. memory model
    - Can’t specify “what value can a read return”
    - Data races defy acceptable semantics
- Fundamentally broken for hardware & software

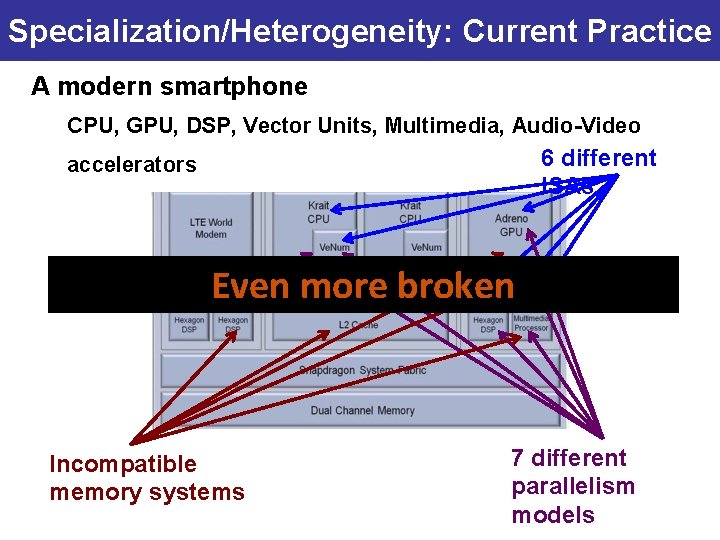

Specialization/Heterogeneity: Current Practice

- A modern smartphone: CPU, GPU, DSP, vector units, multimedia and audio-video accelerators
- 6 different ISAs
- 7 different parallelism models
- Incompatible memory systems
- Even more broken

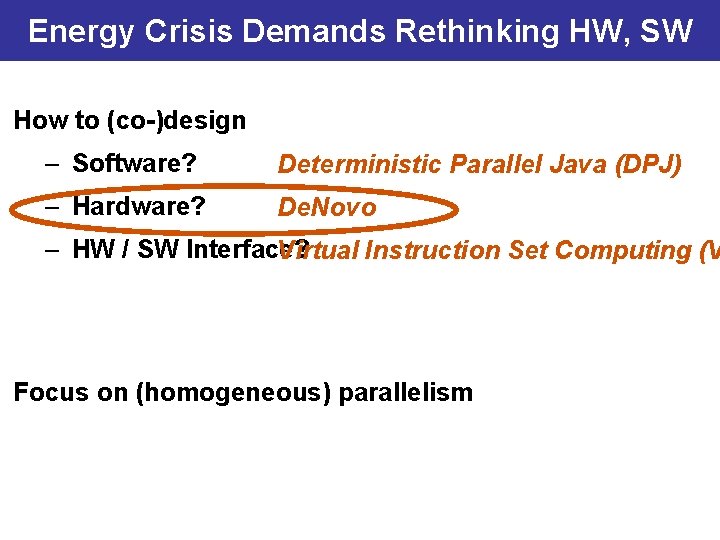

Energy Crisis Demands Rethinking HW, SW

- How to (co-)design
  - Software? Deterministic Parallel Java (DPJ)
  - Hardware? DeNovo
  - HW/SW Interface? Virtual Instruction Set Computing (V…)
- Focus on (homogeneous) parallelism

Banish shared memory?

Banish wild shared memory! Need disciplined shared memory!

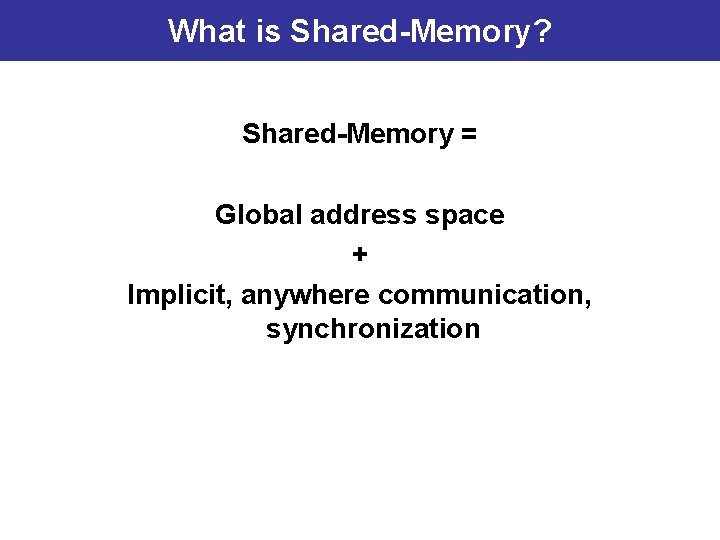

What is Shared-Memory? Shared-Memory = Global address space + Implicit, anywhere communication, synchronization


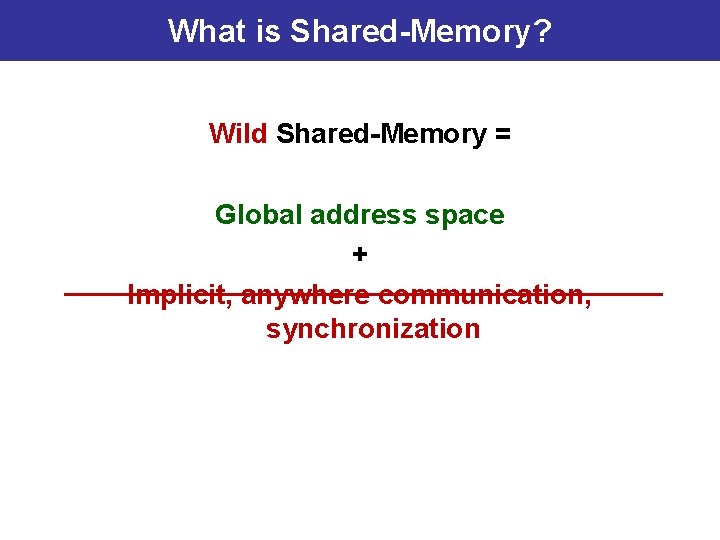

What is Shared-Memory? Wild Shared-Memory = Global address space + Implicit, anywhere communication, synchronization


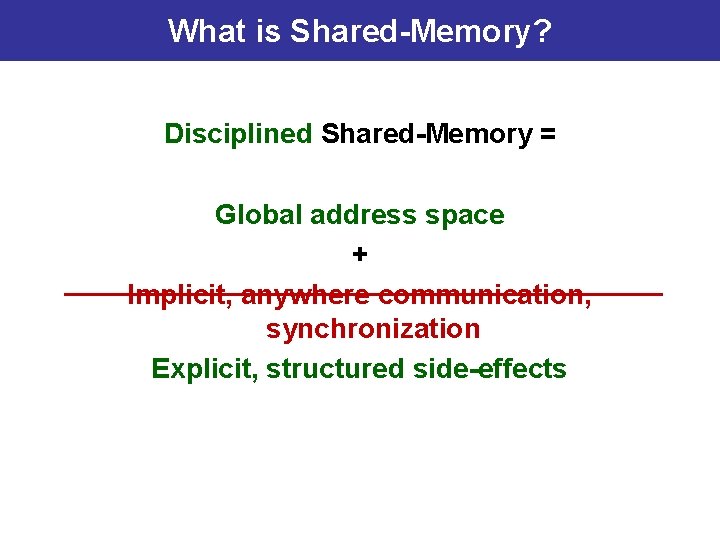

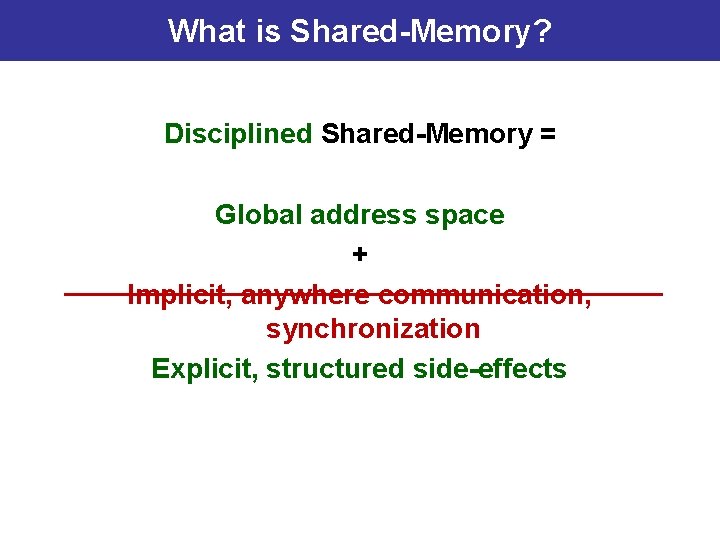

What is Shared-Memory? Disciplined Shared-Memory = Global address space + explicit, structured side-effects (in place of implicit, anywhere communication and synchronization)

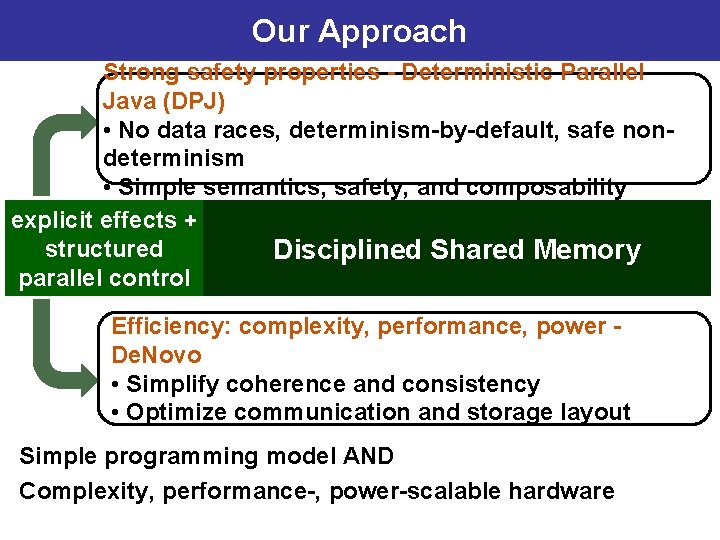

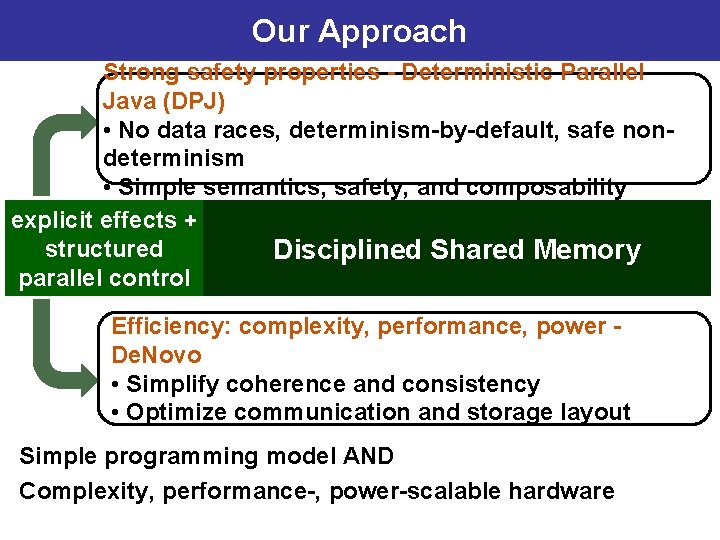

Our Approach

- Strong safety properties: Deterministic Parallel Java (DPJ)
  - No data races, determinism-by-default, safe non-determinism
  - Simple semantics, safety, and composability
- Disciplined Shared Memory: explicit effects + structured parallel control
- Efficiency (complexity, performance, power): DeNovo
  - Simplify coherence and consistency
  - Optimize communication and storage layout
- Simple programming model AND complexity-, performance-, power-scalable hardware

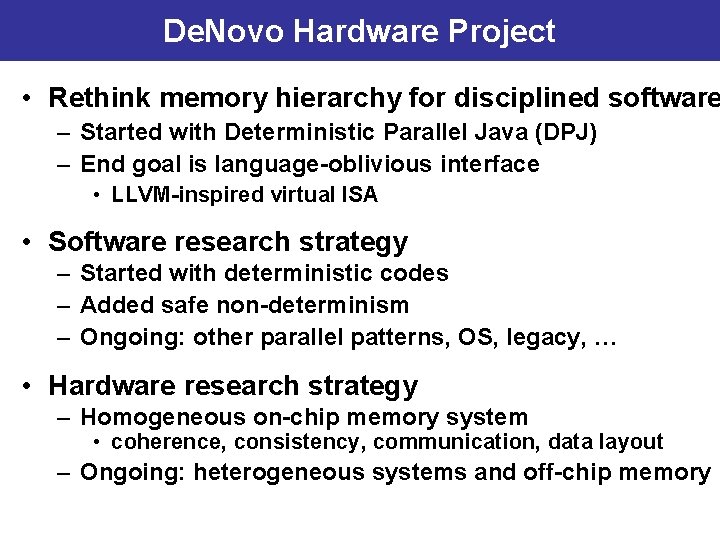

DeNovo Hardware Project

- Rethink memory hierarchy for disciplined software
  - Started with Deterministic Parallel Java (DPJ)
  - End goal is language-oblivious interface
    - LLVM-inspired virtual ISA
- Software research strategy
  - Started with deterministic codes
  - Added safe non-determinism [ASPLOS’13]
  - Ongoing: other parallel patterns, OS, legacy, …
- Hardware research strategy
  - Homogeneous on-chip memory system
    - Coherence, consistency, communication, data layout
  - Ongoing: heterogeneous systems and off-chip memory (similar ideas apply)

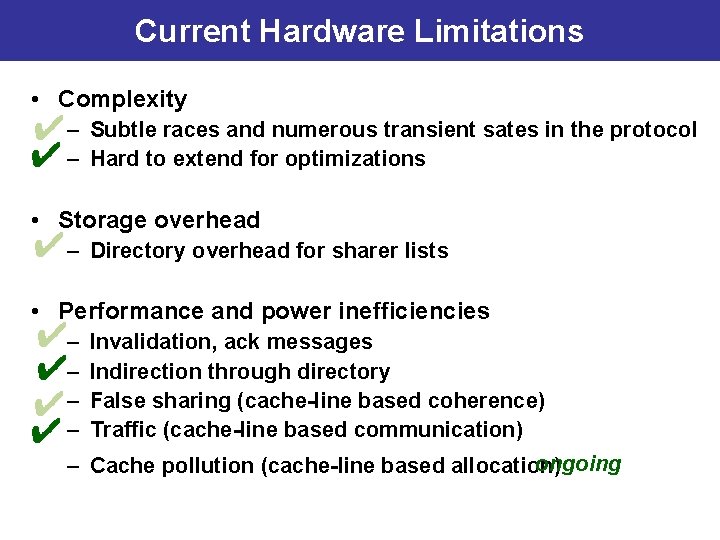

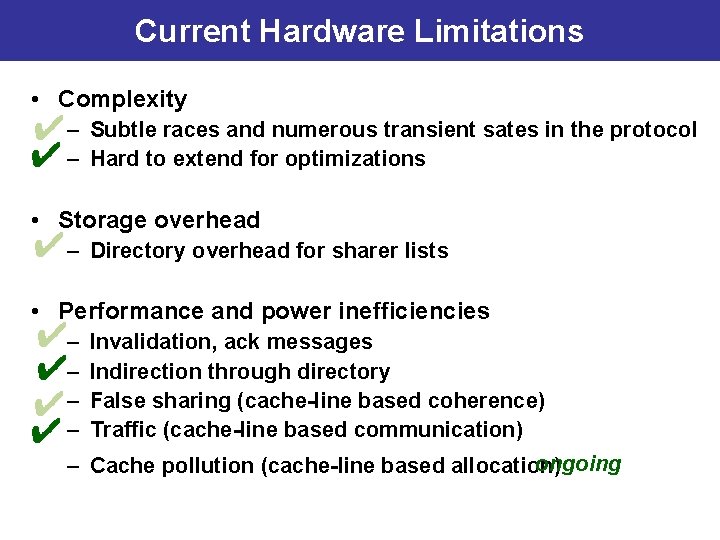

Current Hardware Limitations

- Complexity
  - Subtle races and numerous transient states in the protocol
  - Hard to verify and extend for optimizations
- Storage overhead
  - Directory overhead for sharer lists
- Performance and power inefficiencies
  - Invalidation, ack messages
  - Indirection through directory
  - False sharing (cache-line based coherence)
  - Bandwidth waste (cache-line based communication)
  - Cache pollution (cache-line based allocation)


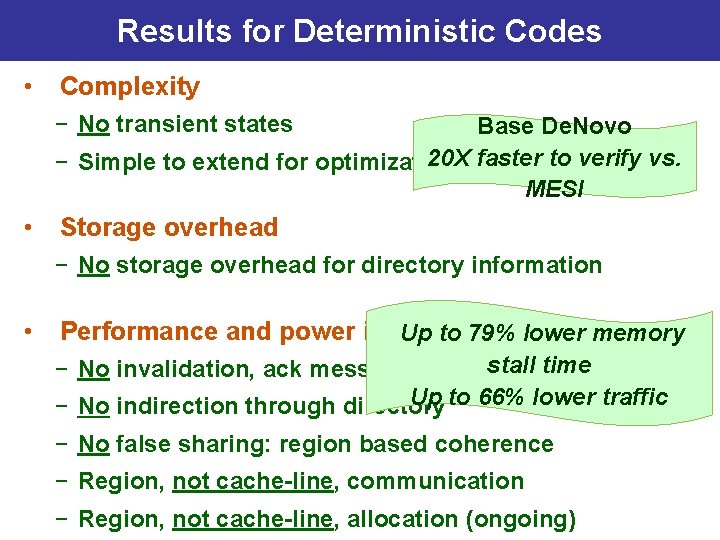

Results for Deterministic Codes

- Complexity (base DeNovo is 20X faster to verify vs. MESI)
  - No transient states
  - Simple to extend for optimizations
- Storage overhead
  - No storage overhead for directory information
- Performance and power inefficiencies (up to 79% lower memory stall time, up to 66% lower traffic)
  - No invalidation, ack messages
  - No indirection through directory
  - No false sharing: region-based coherence
  - Region, not cache-line, communication
  - Region, not cache-line, allocation (ongoing)

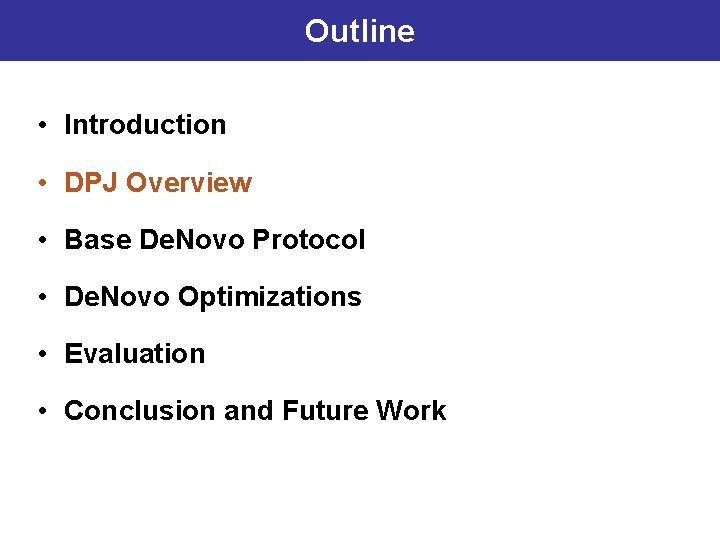

Outline

- Introduction
- DPJ Overview
- Base DeNovo Protocol
- DeNovo Optimizations
- Evaluation
- Conclusion and Future Work

![DPJ Overview](https://slidetodoc.com/presentation_image/13432a53b4b6c1cd5397e5e915b351d3/image-23.jpg)

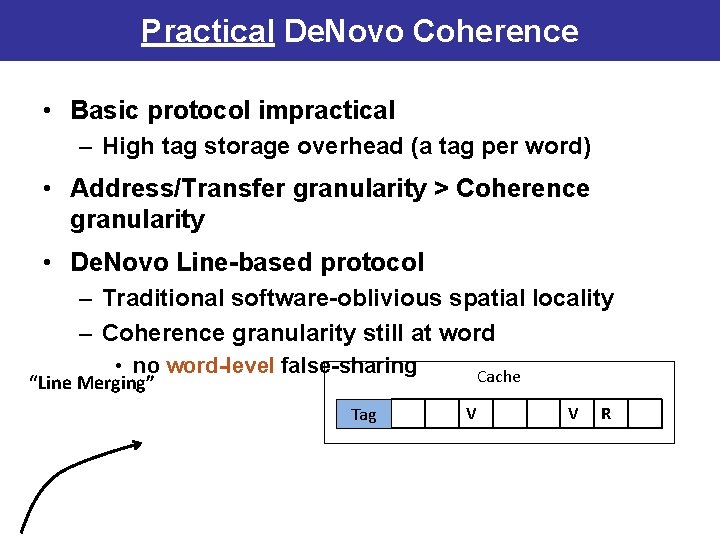

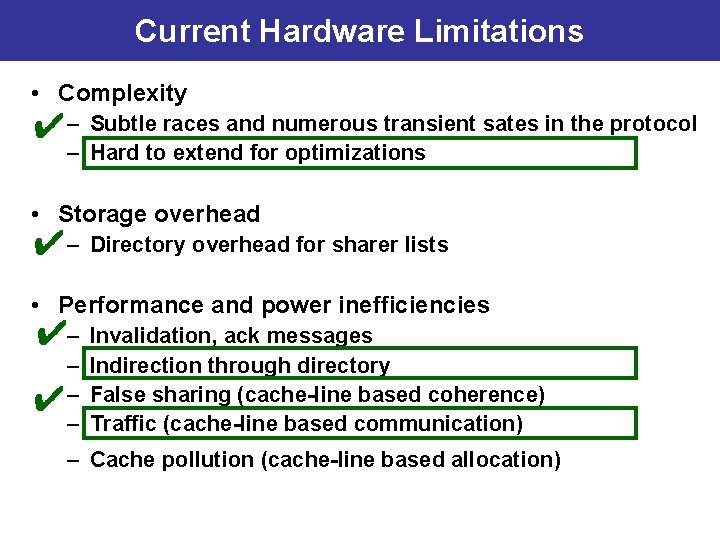

DPJ Overview

- Deterministic-by-default parallel language [OOPSLA’09]
  - Extension of sequential Java
  - Structured parallel control: nested fork-join
  - Novel region-based type and effect system
  - Speedups close to hand-written Java
  - Expressive enough for irregular, dynamic parallelism
- Supports
  - Disciplined non-determinism [POPL’11]
    - Explicit, data race-free, isolated
    - Non-deterministic and deterministic code co-exist safely (composable)
  - Unanalyzable effects using trusted frameworks [ECOOP’11]
  - Unstructured parallelism using tasks with effects [PPoPP’13]
- Focus here on deterministic codes

![DPJ Overview](https://slidetodoc.com/presentation_image/13432a53b4b6c1cd5397e5e915b351d3/image-24.jpg)

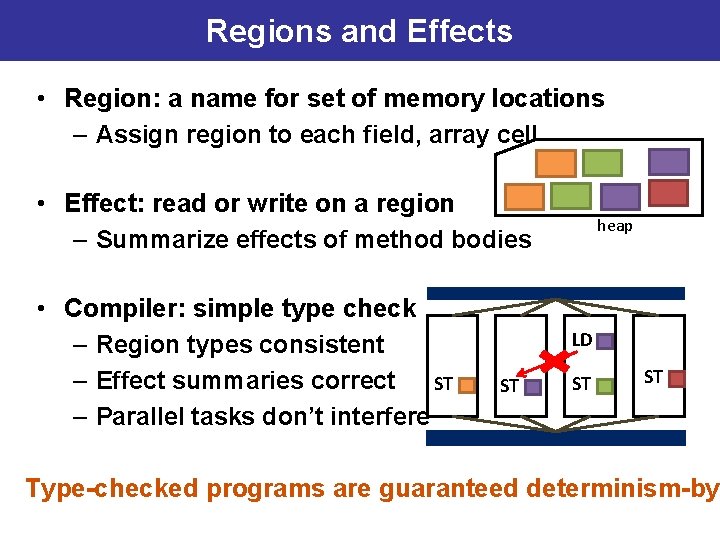

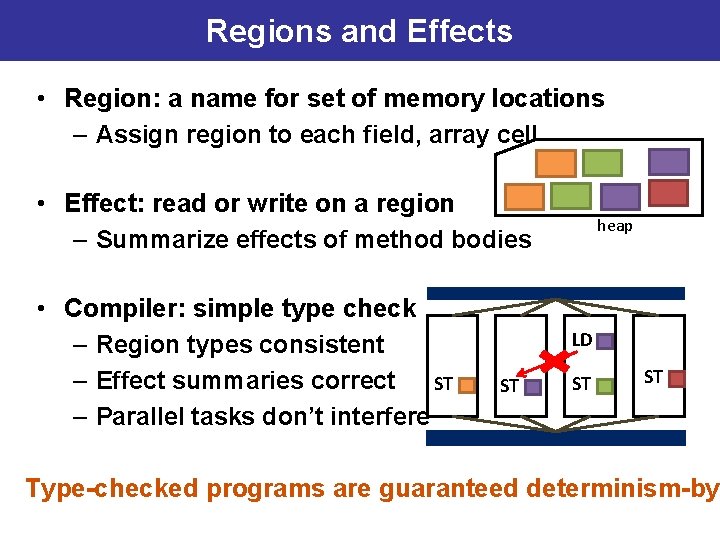

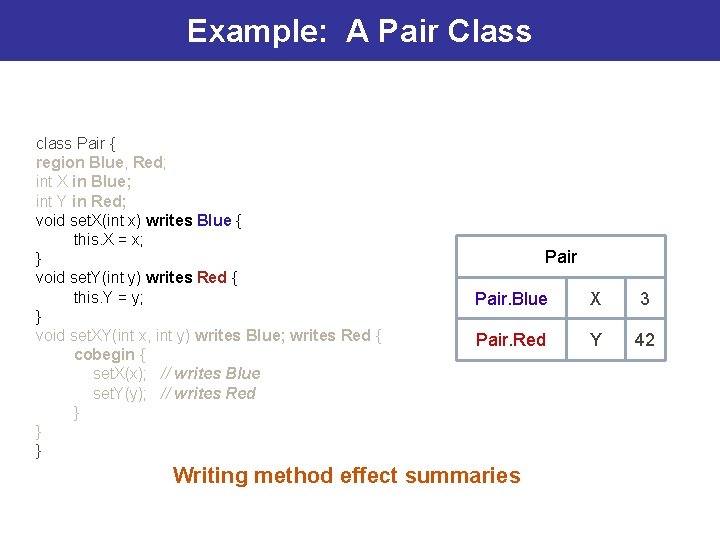

Regions and Effects

- Region: a name for a set of memory locations
  - Assign a region to each field, array cell
- Effect: a read or write on a region
  - Summarize effects of method bodies
- Compiler: simple type check
  - Region types consistent
  - Effect summaries correct
  - Parallel tasks don’t interfere
- Type-checked programs are guaranteed determinism-by-default

[Figure: loads (LD) and stores (ST) from parallel tasks to regions of the heap.]

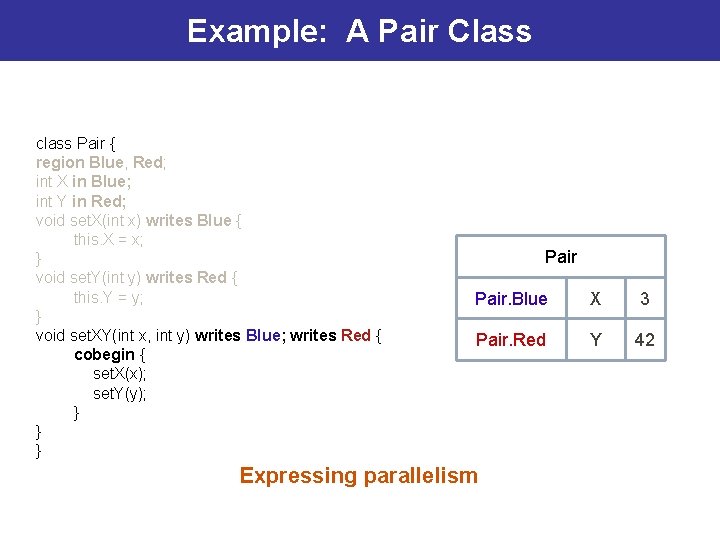

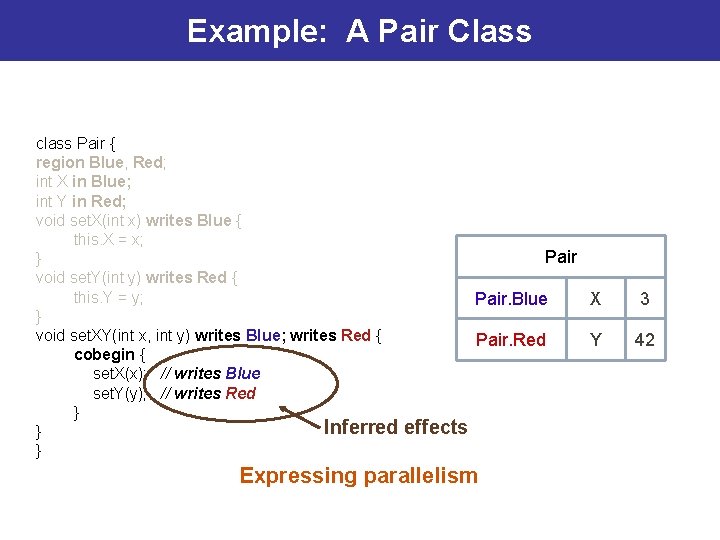

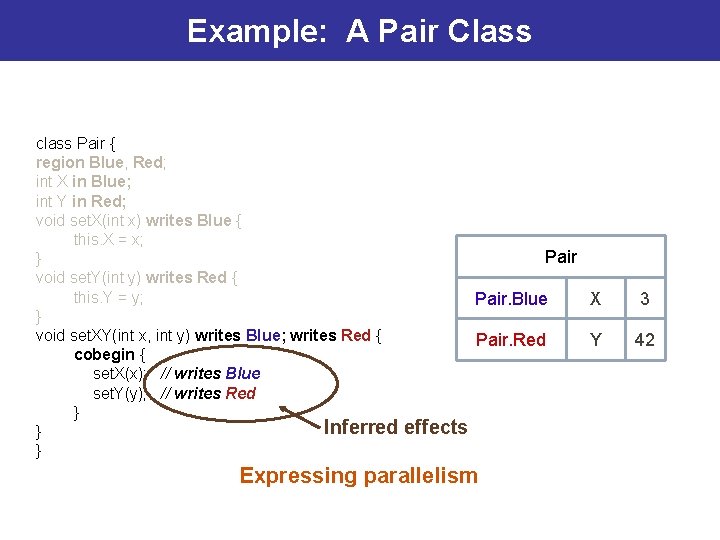

Example: A Pair Class

    class Pair {
        region Blue, Red;
        int X in Blue;
        int Y in Red;
        void setX(int x) writes Blue { this.X = x; }
        void setY(int y) writes Red { this.Y = y; }
        void setXY(int x, int y) writes Blue; writes Red {
            cobegin {
                setX(x); // writes Blue
                setY(y); // writes Red
            }
        }
    }

- Declaring and using region names: region names have static scope (one per class)
- Object diagram: field X (value 3) is in region Pair.Blue; field Y (value 42) is in region Pair.Red

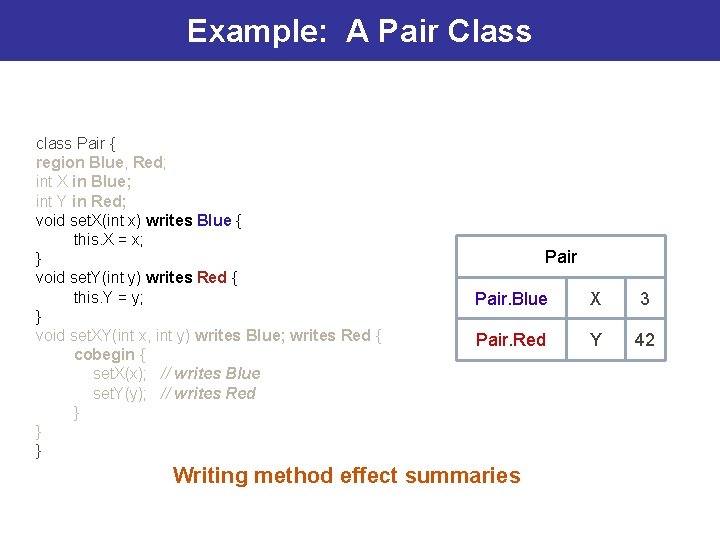

Further builds of the Pair example highlight, on the same class:

- Writing method effect summaries: the `writes` clauses on each method
- Expressing parallelism: the `cobegin` block
- Inferred effects: the per-call effects inside `cobegin`, shown as comments (`// writes Blue`, `// writes Red`)
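The "parallel tasks don’t interfere" check can be illustrated with a small Python sketch. This is not DPJ and not the DPJ compiler's algorithm; it only encodes the rule that two effects conflict when they name the same region and at least one of them is a write. The names `Effect`, `interferes`, and `cobegin_is_safe` are hypothetical, and regions are treated as flat strings, which is a simplifying assumption.

```python
from dataclasses import dataclass
from itertools import combinations


@dataclass(frozen=True)
class Effect:
    kind: str    # "reads" or "writes"
    region: str  # flat region name such as "Pair.Blue" (simplifying assumption)


def interferes(a: Effect, b: Effect) -> bool:
    """Two effects conflict if they share a region and at least one writes."""
    return a.region == b.region and "writes" in (a.kind, b.kind)


def cobegin_is_safe(*task_effects) -> bool:
    """True if no pair of distinct tasks has interfering effects."""
    for left, right in combinations(task_effects, 2):
        if any(interferes(a, b) for a in left for b in right):
            return False
    return True


# Effect summaries copied from the Pair example.
SET_X = [Effect("writes", "Pair.Blue")]
SET_Y = [Effect("writes", "Pair.Red")]

print(cobegin_is_safe(SET_X, SET_Y))  # True: disjoint regions
print(cobegin_is_safe(SET_X, SET_X))  # False: both tasks write Pair.Blue
```

Running `setX` and `setY` in parallel passes the check because their write effects name different regions; running `setX` twice in parallel would be rejected.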

Outline

- Introduction
- Background: DPJ
- Base DeNovo Protocol
- DeNovo Optimizations
- Evaluation
- Conclusion and Future Work

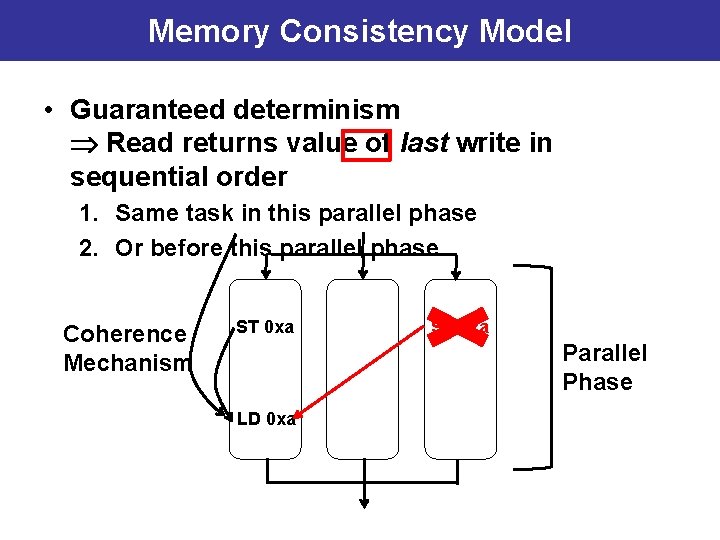

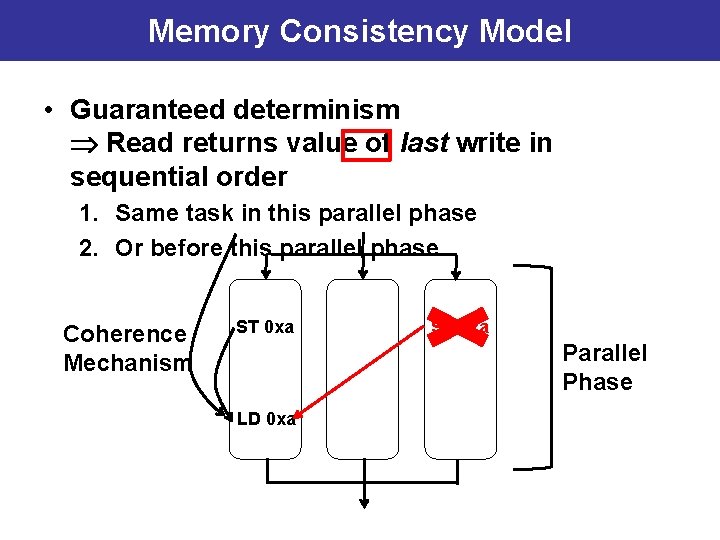

Memory Consistency Model

- Guaranteed determinism: a read returns the value of the last write in sequential order
  1. From the same task in this parallel phase
  2. Or from before this parallel phase

[Figure: a store `ST 0xa` before a parallel phase and a load `LD 0xa` inside it, linked by the coherence mechanism.]

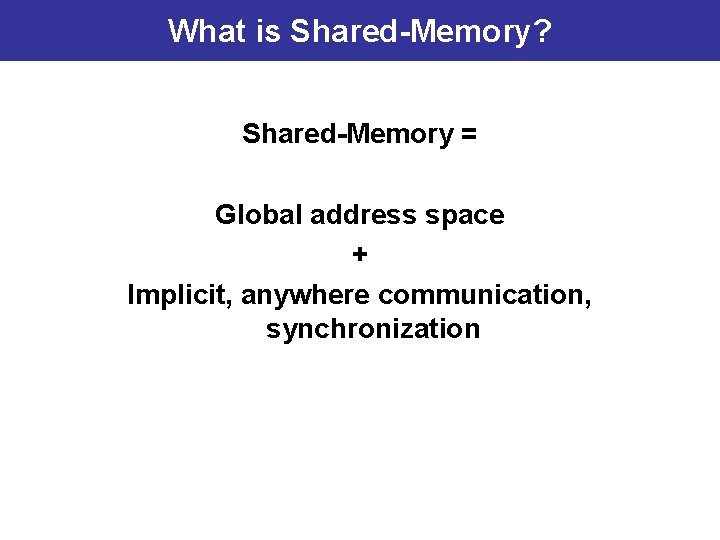

Cache Coherence

- Coherence enforcement
  1. Invalidate stale copies in caches
  2. Track one up-to-date copy
- Explicit effects
  - Compiler knows all regions written in this parallel phase
  - Cache can self-invalidate before next parallel phase
    - Invalidates data in writeable regions not accessed by itself
- Registration
  - Directory keeps track of one up-to-date copy
  - Writer updates before next parallel phase

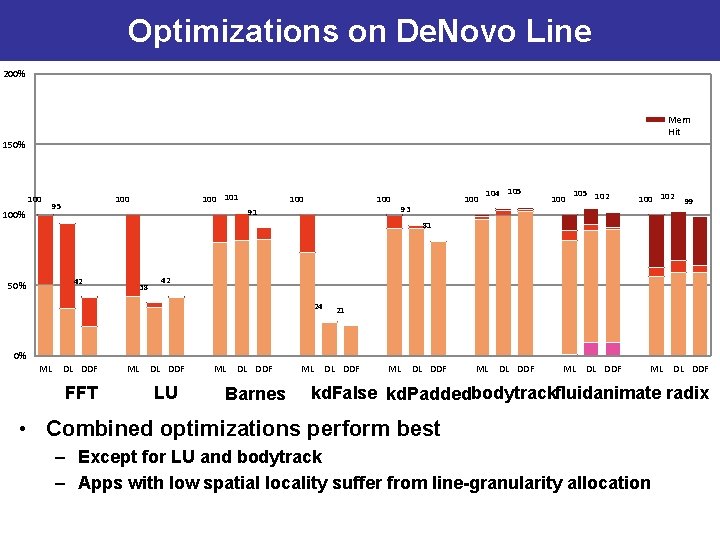

![Basic DeNovo Coherence](https://slidetodoc.com/presentation_image/13432a53b4b6c1cd5397e5e915b351d3/image-33.jpg)

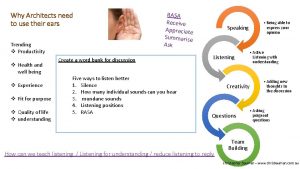

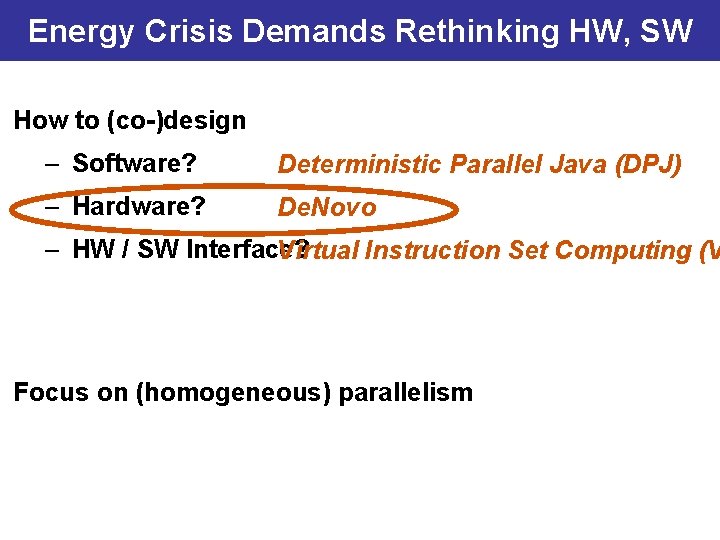

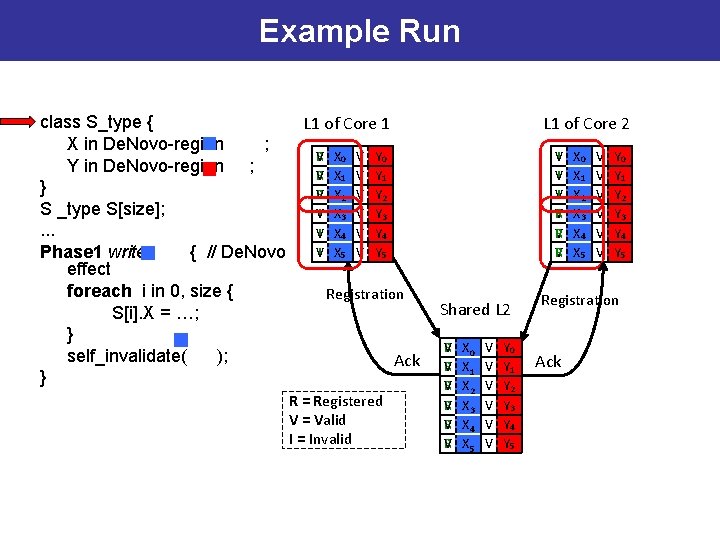

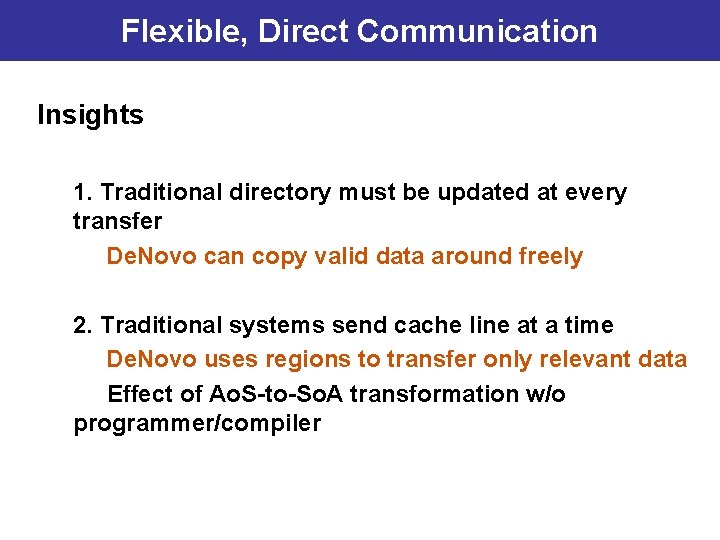

Basic DeNovo Coherence [PACT’11]

- Assume (for now): private L1, shared L2; single-word line
  - Data-race freedom at word granularity
- L2 data arrays double as directory (registry)
  - Keep valid data or registered core id, no space overhead
- L1/L2 states: Invalid, Valid, Registered
  - A read moves Invalid to Valid; a write moves Invalid or Valid to Registered
- Touched bit set only if read in the phase

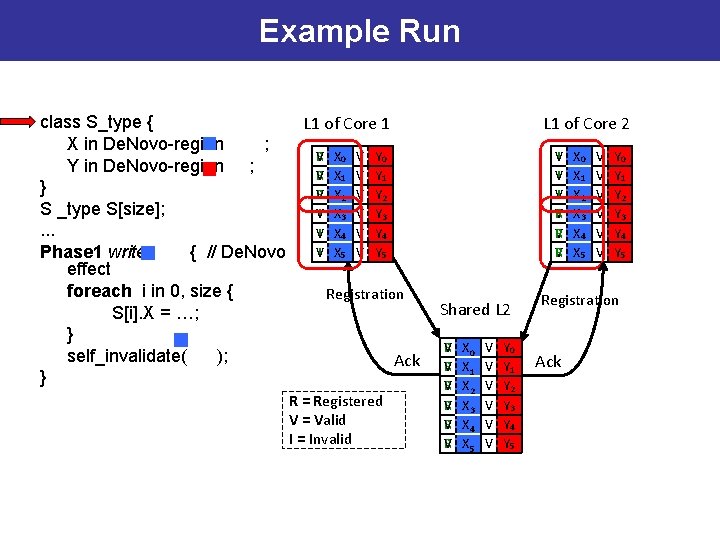

Example Run

    class S_type {
        X in DeNovo-region ;
        Y in DeNovo-region ;
    }
    S_type S[size];
    ...
    Phase1 writes { // DeNovo effect
        foreach i in 0, size {
            S[i].X = …;
        }
        self_invalidate( );
    }

R = Registered, V = Valid, I = Invalid

[Figure: cache states during the phase.]

- L1 of Core 1: X0 to X2 go V → R, X3 to X5 go V → I, Y0 to Y5 stay V
- L1 of Core 2: X0 to X2 go V → I, X3 to X5 go V → R, Y0 to Y5 stay V
- Shared L2: X0 to X5 go V → R, recording C1 for X0 to X2 and C2 for X3 to X5; Y0 to Y5 stay V
- Messages between each L1 and the L2: Registration, Ack
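The example run can be replayed with a small Python model. This is a minimal sketch under stated assumptions, not the DeNovo implementation: words are single-word lines, messages and acks are not modeled, cores are numbered 0 and 1 (Core 1 and Core 2 on the slide), and the class and method names (`System`, `store`, `load`, `self_invalidate`, `states`) are hypothetical.

```python
from enum import Enum


class State(Enum):
    INVALID = "I"
    VALID = "V"
    REGISTERED = "R"


class System:
    """Word-granularity sketch: private L1 per core, shared L2 as registry."""

    def __init__(self, num_cores, initial):
        # Each L2 entry is either ("data", value) or ("core", owner_id).
        self.l2 = {addr: ("data", value) for addr, value in initial.items()}
        # Each L1 entry is [state, value]; every word starts Valid.
        self.l1 = [{a: [State.VALID, v] for a, v in initial.items()}
                   for _ in range(num_cores)]
        self.touched = [set() for _ in range(num_cores)]

    def store(self, core, addr, value):
        """Write locally and register at the L2; no invalidations are sent."""
        self.l1[core][addr] = [State.REGISTERED, value]
        self.l2[addr] = ("core", core)

    def load(self, core, addr):
        state, value = self.l1[core][addr]
        if state is State.INVALID:
            kind, payload = self.l2[addr]
            # The L2 holds the data itself or the id of the registered core.
            value = payload if kind == "data" else self.l1[payload][addr][1]
            self.l1[core][addr] = [State.VALID, value]
        self.touched[core].add(addr)
        return value

    def self_invalidate(self, core, written_region):
        """End of phase: drop Valid words of the written region not read here."""
        for addr in written_region:
            entry = self.l1[core][addr]
            if entry[0] is State.VALID and addr not in self.touched[core]:
                entry[0] = State.INVALID
        self.touched[core].clear()

    def states(self, core, addrs):
        return "".join(self.l1[core][a][0].value for a in addrs)


X = [f"X{i}" for i in range(6)]
Y = [f"Y{i}" for i in range(6)]
machine = System(2, {a: 0 for a in X + Y})

for i in range(6):  # Phase 1: each core writes its half of the X fields
    machine.store(0 if i < 3 else 1, X[i], 10 + i)
for core in (0, 1):
    machine.self_invalidate(core, X)  # only the X region was written

print(machine.states(0, X), machine.states(1, X))  # RRRIII IIIRRR
print(machine.states(0, Y))                        # VVVVVV
print(machine.load(0, "X4"))                       # 14, fetched via the registry
```

The Y words are never invalidated because the phase's declared effect covers only the region holding X, which is the point of using effect information for self-invalidation.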

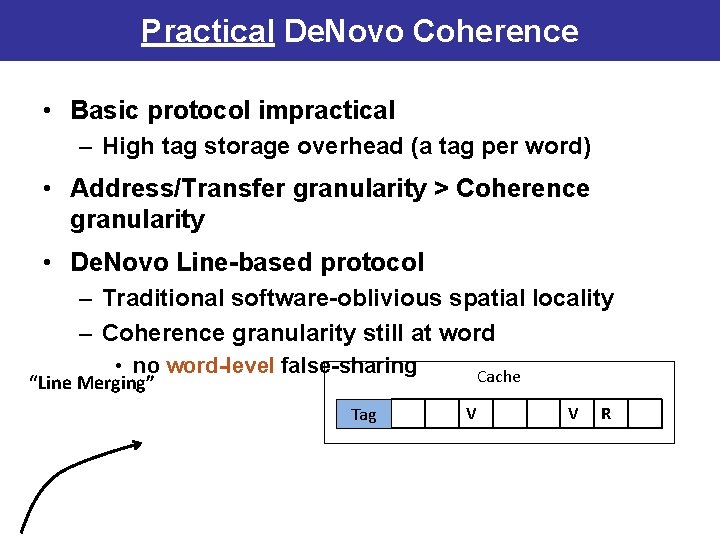

Practical DeNovo Coherence

- Basic protocol impractical
  - High tag storage overhead (a tag per word)
- Address/transfer granularity > coherence granularity
- DeNovo line-based protocol
  - Traditional software-oblivious spatial locality
  - Coherence granularity still at word
    - No word-level false sharing

[Figure: cache “line merging”: one tag per line, with a separate coherence state per word (for example V, V, R).]
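A line with one tag and per-word coherence state can be sketched in Python as follows. The merge rule shown (an incoming copy fills only locally Invalid words) is one plausible reading of “line merging” and is an assumption, as are the names `Line`, `write`, `merge`, and the 4-word line size.

```python
from dataclasses import dataclass, field

WORDS_PER_LINE = 4  # assumed line size for the sketch


@dataclass
class Line:
    tag: int
    state: list = field(default_factory=lambda: ["I"] * WORDS_PER_LINE)
    data: list = field(default_factory=lambda: [None] * WORDS_PER_LINE)

    def write(self, word, value):
        # Only the written word becomes Registered; neighbours are unaffected.
        self.state[word], self.data[word] = "R", value

    def merge(self, incoming):
        """Fill locally Invalid words from an incoming copy of the same line."""
        assert incoming.tag == self.tag
        for w in range(WORDS_PER_LINE):
            if self.state[w] == "I" and incoming.state[w] != "I":
                self.state[w], self.data[w] = "V", incoming.data[w]


core1 = Line(tag=0x40)
core2 = Line(tag=0x40)
core1.write(0, "a")   # two cores write different words of the same line
core2.write(3, "d")
core1.merge(core2)    # core1 gains word 3 without giving up its own word 0

print(core1.state)    # ['R', 'I', 'I', 'V']
print(core1.data)     # ['a', None, None, 'd']
```

Because state is tracked per word, the two writers never contend for ownership of the whole line, which is how word-level false sharing is avoided while the tag cost is paid once per line.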

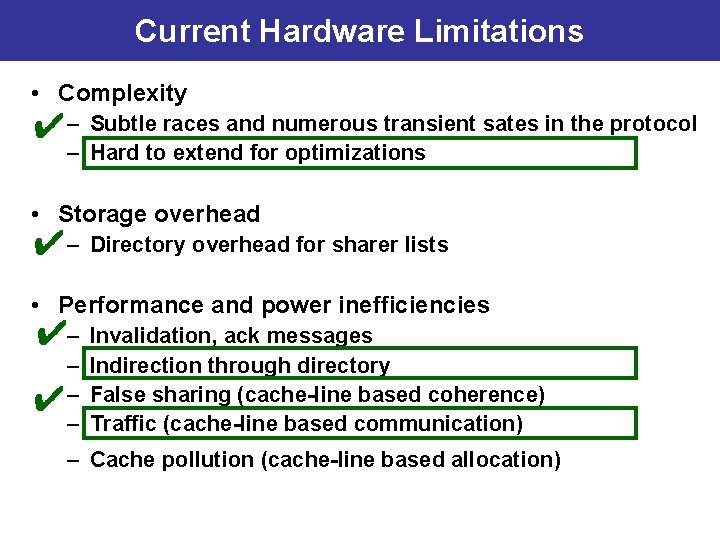

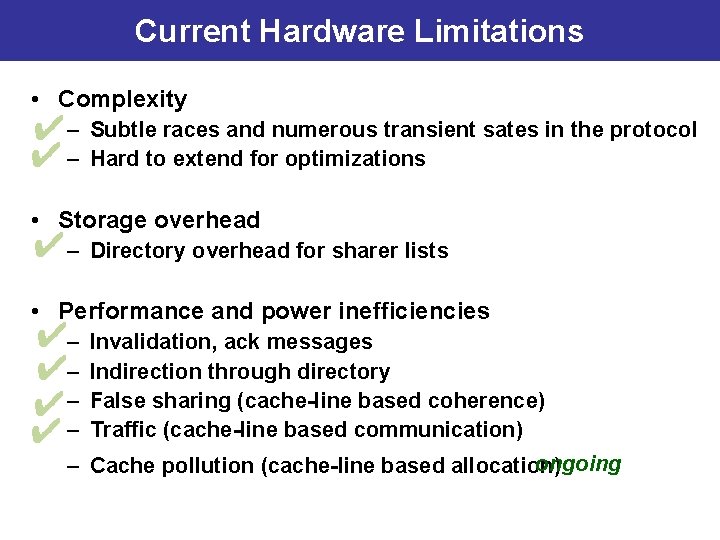

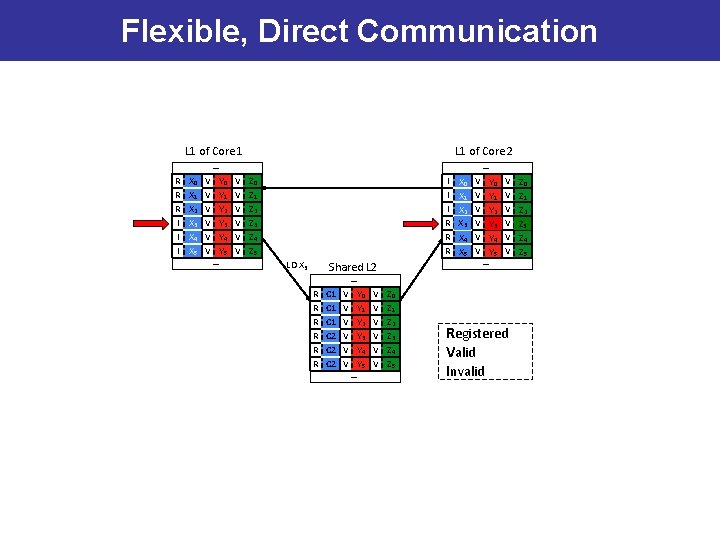

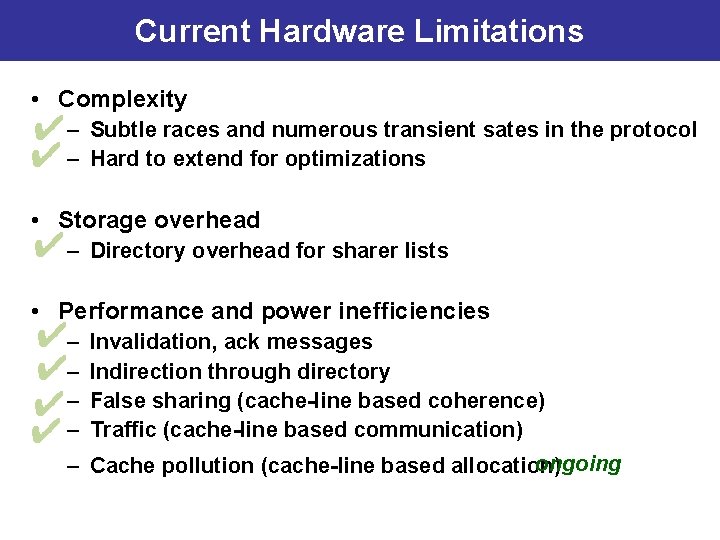

Current Hardware Limitations (✔ = addressed so far)

- Complexity
  - ✔ Subtle races and numerous transient states in the protocol
  - Hard to extend for optimizations
- Storage overhead
  - ✔ Directory overhead for sharer lists
- Performance and power inefficiencies
  - ✔ Invalidation, ack messages
  - ✔ Indirection through directory
  - False sharing (cache-line based coherence)
  - Traffic (cache-line based communication)
  - Cache pollution (cache-line based allocation)

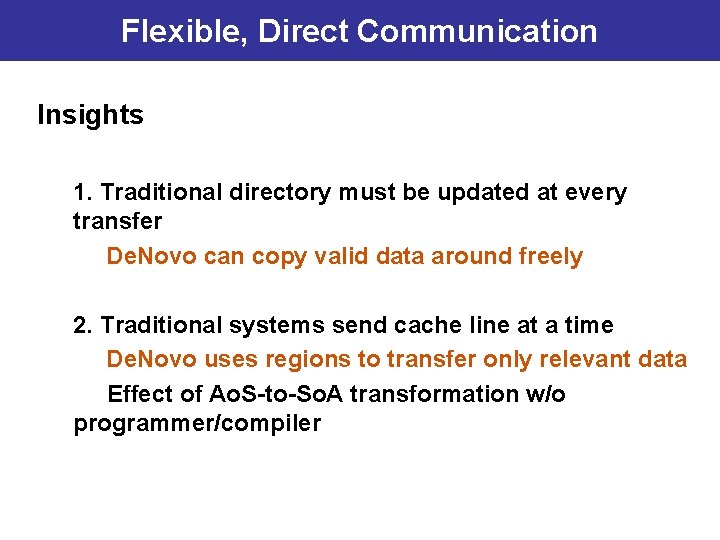

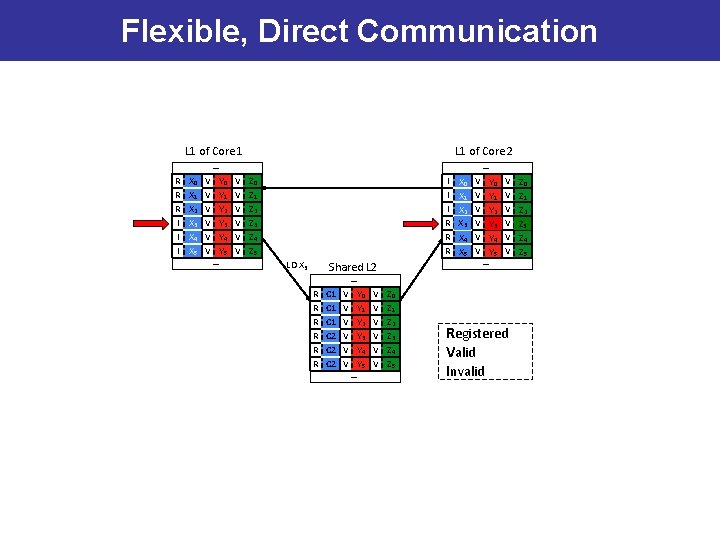

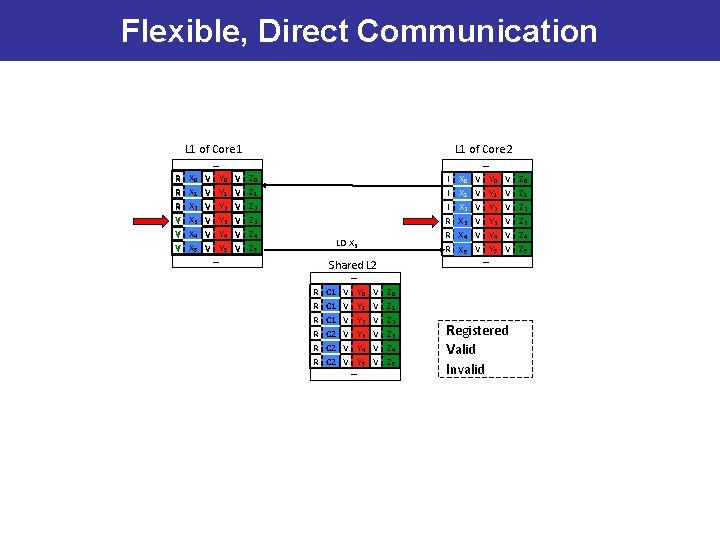

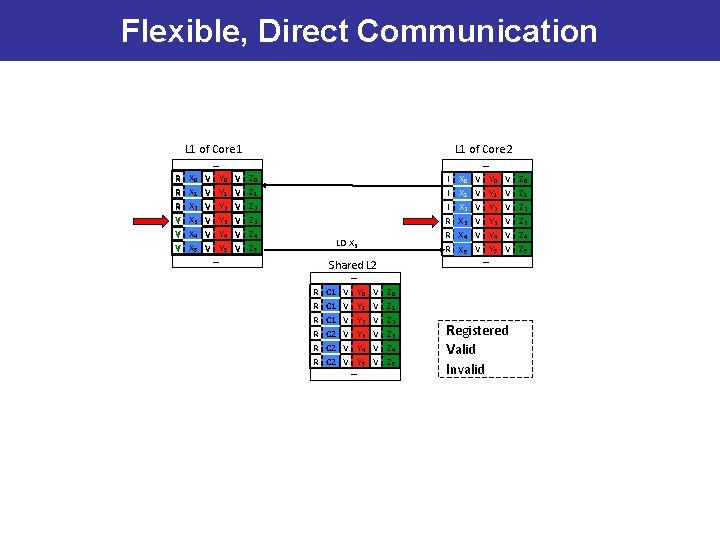

Flexible, Direct Communication

Insights

1. A traditional directory must be updated at every transfer; DeNovo can copy valid data around freely
2. Traditional systems send a cache line at a time; DeNovo uses regions to transfer only relevant data
   - Effect of an AoS-to-SoA transformation without programmer or compiler involvement

Flexible, Direct Communication

[Figure: L1 of Core 1, L1 of Core 2, and the shared L2, each holding words X0 to X5, Y0 to Y5, Z0 to Z5 (legend: Registered, Valid, Invalid). Core 1 holds X0 to X2 Registered and X3 to X5 Invalid; Core 2 holds X0 to X2 Invalid and X3 to X5 Registered; the L2 records C1 for X0 to X2 and C2 for X3 to X5; Y and Z words are Valid. On `LD X3`, the X3 to X5 entries in Core 1's L1 change from Invalid to Valid.]
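The difference between a line-sized reply and a region-sized reply can be made concrete with a short Python sketch. The array-of-structs layout, the 4-word line, and the helper names `line_response` and `region_response` are all assumptions made for illustration; they are not taken from the DeNovo design.

```python
FIELDS = ("X", "Y", "Z")
WORDS_PER_LINE = 4  # assumed
NUM_ELEMS = 6

# Array-of-structs layout in memory: X0 Y0 Z0 X1 Y1 Z1 ...
memory = [f"{f}{i}" for i in range(NUM_ELEMS) for f in FIELDS]


def line_response(word):
    """Conventional reply: the whole cache line that contains `word`."""
    start = (memory.index(word) // WORDS_PER_LINE) * WORDS_PER_LINE
    return memory[start:start + WORDS_PER_LINE]


def region_response(word, owner_elems, limit=WORDS_PER_LINE):
    """Region-style reply: same-field words of the elements the responder holds."""
    fld = word[0]  # single-letter field names are an assumption of this sketch
    return [f"{fld}{i}" for i in owner_elems][:limit]


print(line_response("X3"))               # ['Z2', 'X3', 'Y3', 'Z3']
print(region_response("X3", [3, 4, 5]))  # ['X3', 'X4', 'X5']
```

With this layout the line reply carries one X word out of four, while the region reply carries only X words, which is the array-of-structs to struct-of-arrays effect noted in the insights.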

Current Hardware Limitations (✔ = addressed)

- Complexity
  - ✔ Subtle races and numerous transient states in the protocol
  - ✔ Hard to extend for optimizations
- Storage overhead
  - ✔ Directory overhead for sharer lists
- Performance and power inefficiencies
  - ✔ Invalidation, ack messages
  - ✔ Indirection through directory
  - ✔ False sharing (cache-line based coherence)
  - ✔ Traffic (cache-line based communication)
  - Ongoing: cache pollution (cache-line based allocation)

Outline

- Introduction
- Background: DPJ
- Base DeNovo Protocol
- DeNovo Optimizations
- Evaluation
  - Complexity
  - Performance
- Conclusion and Future Work

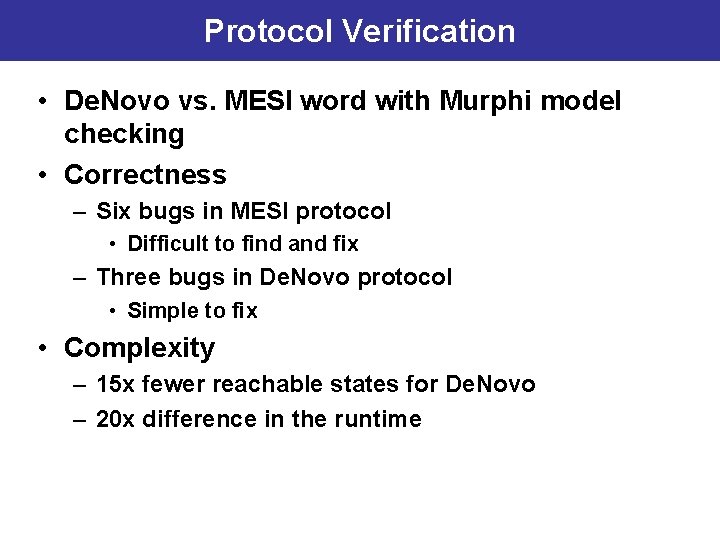

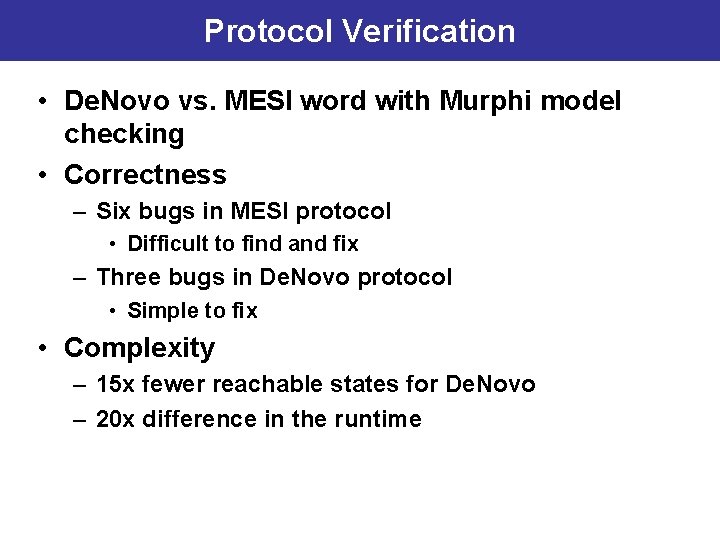

Protocol Verification

- DeNovo vs. MESI word protocols, with Murphi model checking
- Correctness
  - Six bugs in MESI protocol
    - Difficult to find and fix
  - Three bugs in DeNovo protocol
    - Simple to fix
- Complexity
  - 15x fewer reachable states for DeNovo
  - 20x difference in the runtime

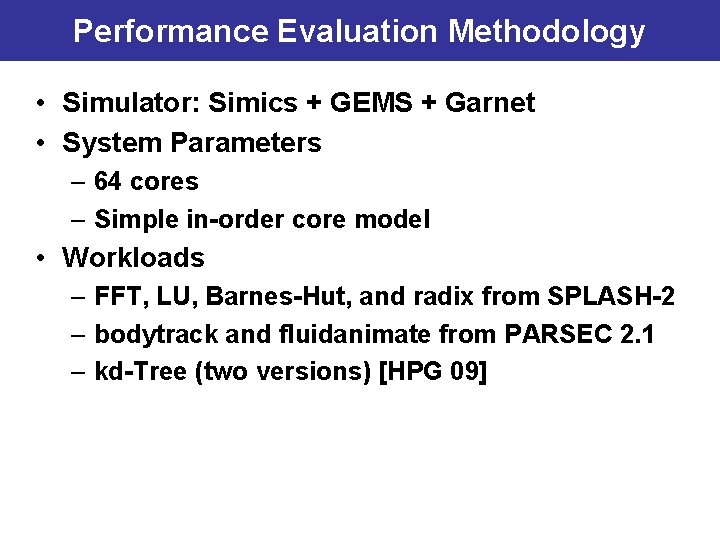

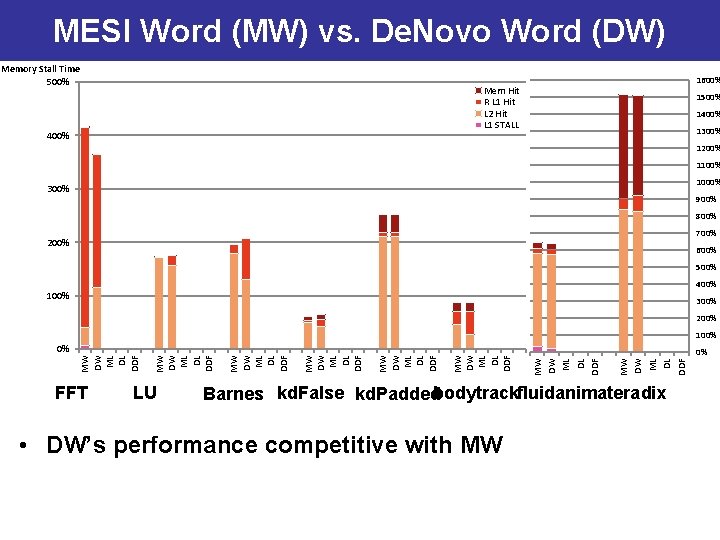

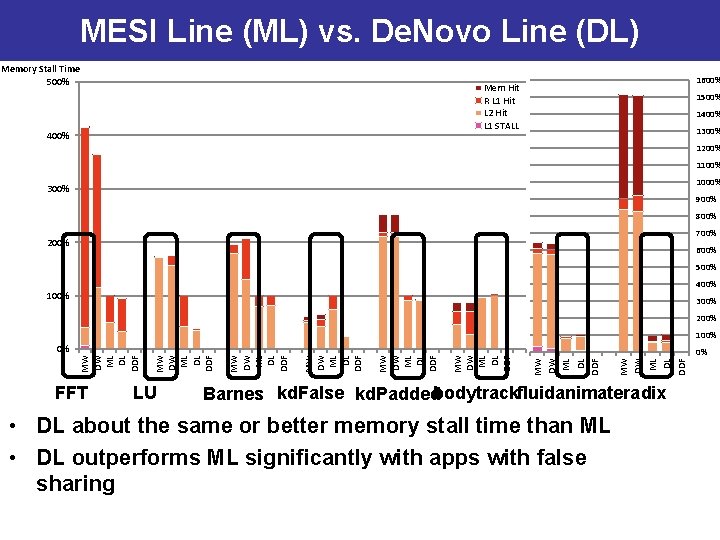

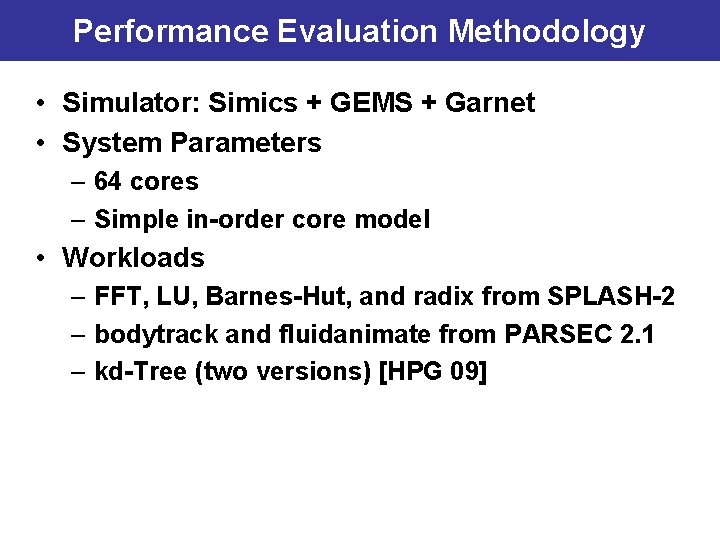

Performance Evaluation Methodology

- Simulator: Simics + GEMS + Garnet
- System parameters
  - 64 cores
  - Simple in-order core model
- Workloads
  - FFT, LU, Barnes-Hut, and radix from SPLASH-2
  - bodytrack and fluidanimate from PARSEC 2.1
  - kd-Tree (two versions) [HPG’09]

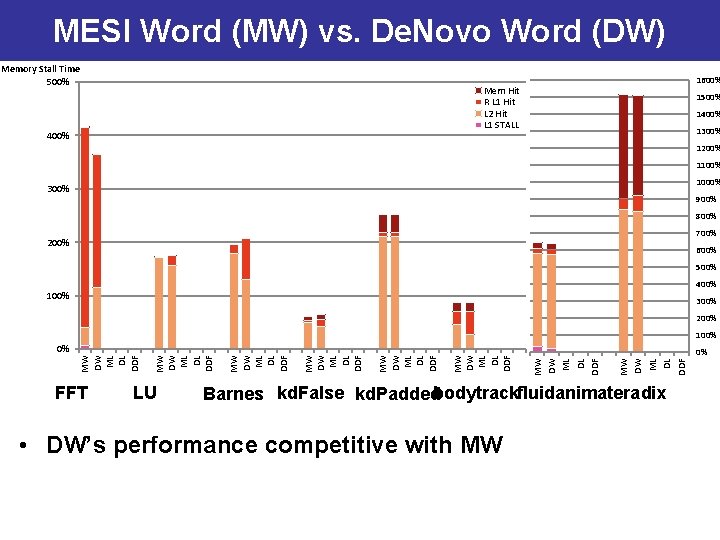

MESI Word (MW) vs. DeNovo Word (DW)

[Figure: memory stall time, normalized (%), broken down into Mem Hit, R L1 Hit, L2 Hit, and L1 STALL, for MW, DW, ML, DL, and DDF on FFT, LU, Barnes, kdFalse, kdPadded, bodytrack, fluidanimate, and radix.]

- DW’s performance is competitive with MW

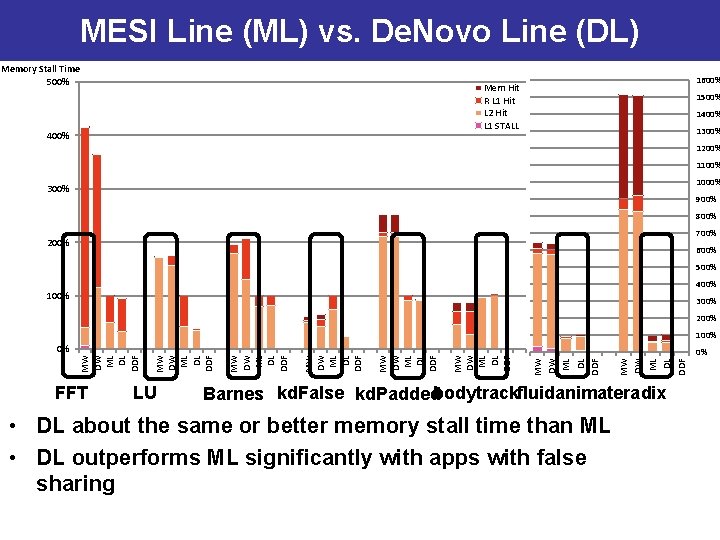

MESI Line (ML) vs. DeNovo Line (DL)

[Figure: memory stall time, normalized (%), broken down into Mem Hit, R L1 Hit, L2 Hit, and L1 STALL, for MW, DW, ML, DL, and DDF on FFT, LU, Barnes, kdFalse, kdPadded, bodytrack, fluidanimate, and radix.]

- DL has about the same or better memory stall time than ML
- DL outperforms ML significantly on apps with false sharing

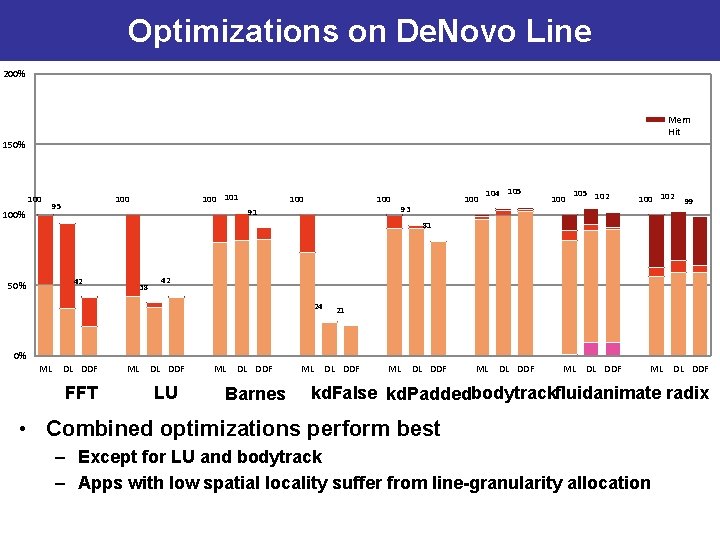

Optimizations on DeNovo Line

[Figure: normalized results (%) for ML, DL, and DDF on FFT, LU, Barnes, kdFalse, kdPadded, bodytrack, fluidanimate, and radix.]

- Combined optimizations perform best
  - Except for LU and bodytrack
  - Apps with low spatial locality suffer from line-granularity allocation

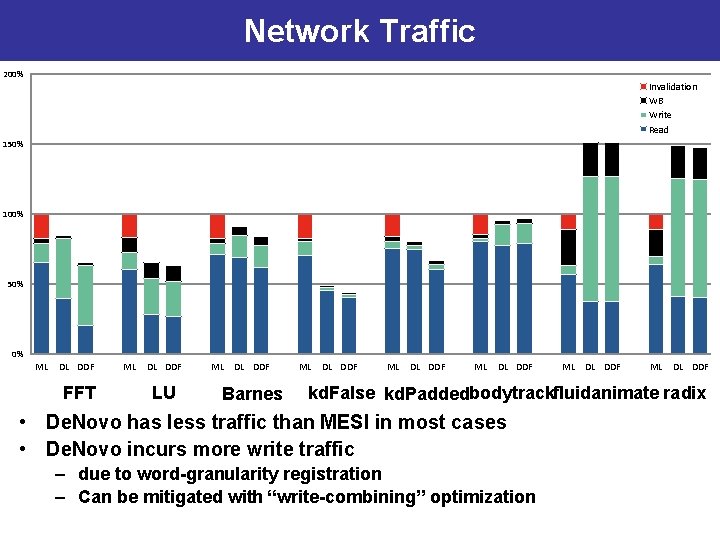

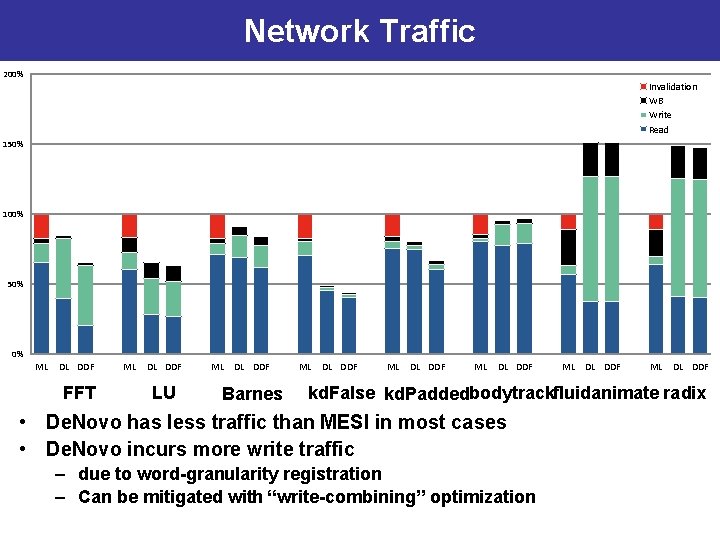

Network Traffic

[Figure: network traffic, normalized (%), broken down into Invalidation, WB, Write, and Read, for ML, DL, and DDF on FFT, LU, Barnes, kdFalse, kdPadded, bodytrack, fluidanimate, and radix.]

- DeNovo has less traffic than MESI in most cases
- DeNovo incurs more write traffic
  - Due to word-granularity registration
  - Can be mitigated with a “write-combining” optimization
- With write combining, DeNovo has less traffic than or comparable traffic to MESI; write combining is effective

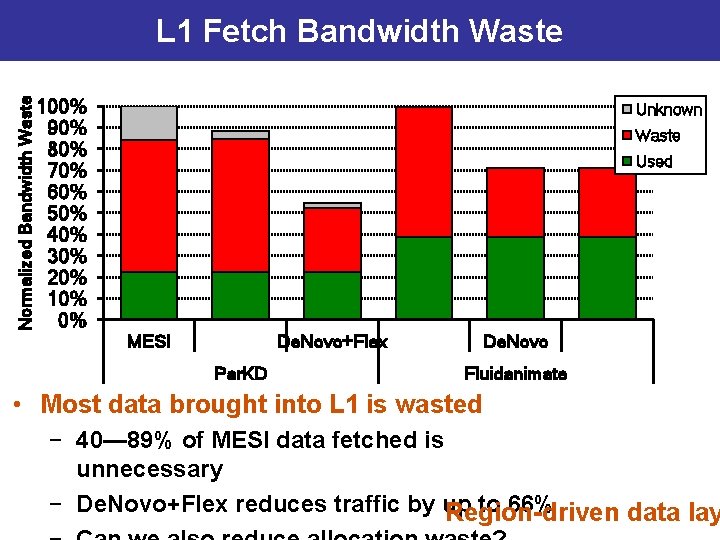

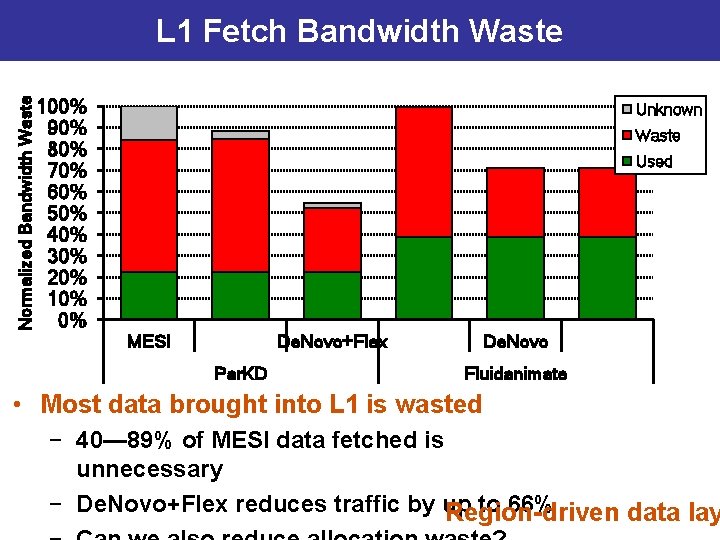

L1 Fetch Bandwidth Waste

[Figure: normalized L1 fetch bandwidth (0 to 100%), split into Unknown, Waste, and Used, for MESI, DeNovo, and DeNovo+Flex on ParKD and Fluidanimate.]

- Most data brought into L1 is wasted
  - 40 to 89% of MESI data fetched is unnecessary
  - DeNovo+Flex reduces traffic by up to 66%
- Region-driven data layout …


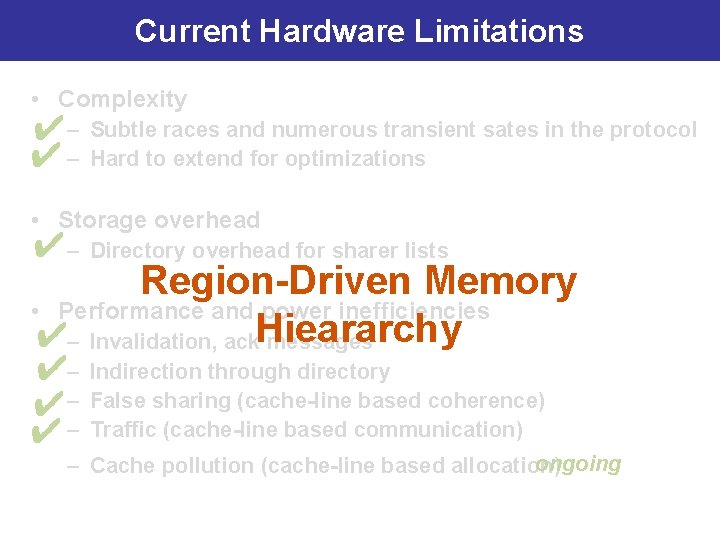

Current Hardware Limitations

The limitations checked off above are addressed by a Region-Driven Memory Hierarchy.

Conclusions and Future Work (1 of 3)

- Strong safety properties: Deterministic Parallel Java (DPJ)
  - No data races, determinism-by-default, safe non-determinism
  - Simple semantics, safety, and composability
- Disciplined Shared Memory: explicit effects + structured parallel control
- Efficiency (complexity, performance, power): DeNovo
  - Simplify coherence and consistency
  - Optimize communication and storage layout
- Simple programming model AND complexity-, performance-, power-scalable hardware

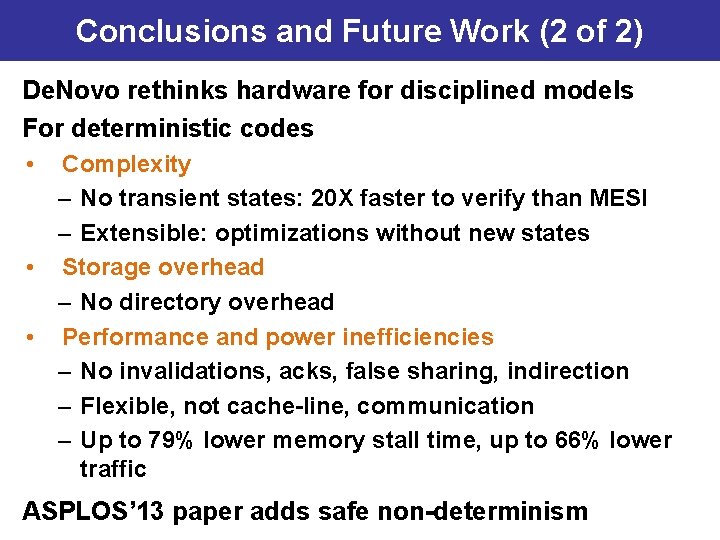

Conclusions and Future Work (2 of 3)

DeNovo rethinks hardware for disciplined models. For deterministic codes:

- Complexity
  - No transient states: 20X faster to verify than MESI
  - Extensible: optimizations without new states
- Storage overhead
  - No directory overhead
- Performance and power inefficiencies
  - No invalidations, acks, false sharing, indirection
  - Flexible, not cache-line, communication
  - Up to 79% lower memory stall time, up to 66% lower traffic

ASPLOS’13 paper adds safe non-determinism

Conclusions and Future Work (3 of 3)

- Broaden software supported
  - Pipeline parallelism, OS, legacy, …
- Region-driven memory hierarchy
  - Also apply to heterogeneous memory
    - Global address space
    - Region-driven coherence, communication, layout
- Hardware/Software Interface
  - Language-neutral virtual ISA
- Parallelism and specialization may solve the energy crisis, but
  - Require rethinking software, hardware, interface
  - The Disciplined Parallel Programming Imperative
