
The GRID and the Linux Farm at the RCF
HEPIX, Amsterdam, May 19-23, 2003
A. Chan, R. Hogue, C. Hollowell, O. Rind, J. Smith, T. Throwe, T. Wlodek, D. Yu
RHIC Computing Facility, Brookhaven National Laboratory

Outline
• Background
• Hardware
• Software
• Security
• GRID-like capabilities
• Near-term plans

Background
• Used for mass processing of RHIC data
• U.S. Tier 1 center for ATLAS
• Listed as the 3rd largest cluster at http://clusters.top500.org
• Currently staffed with 5 FTEs

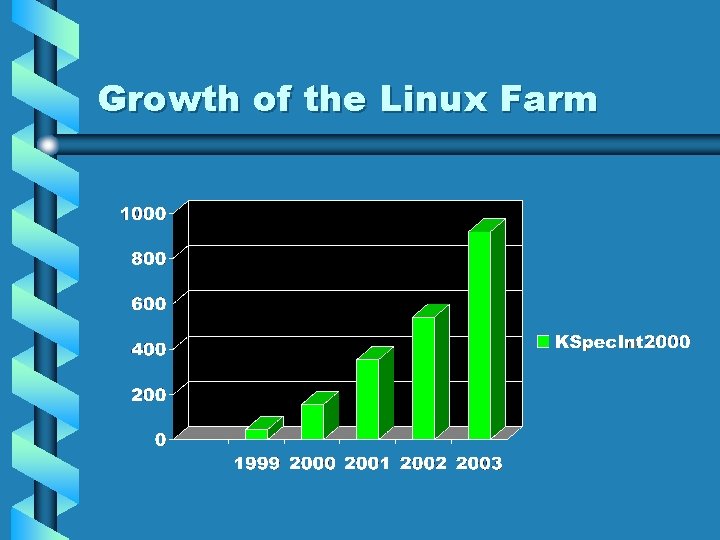

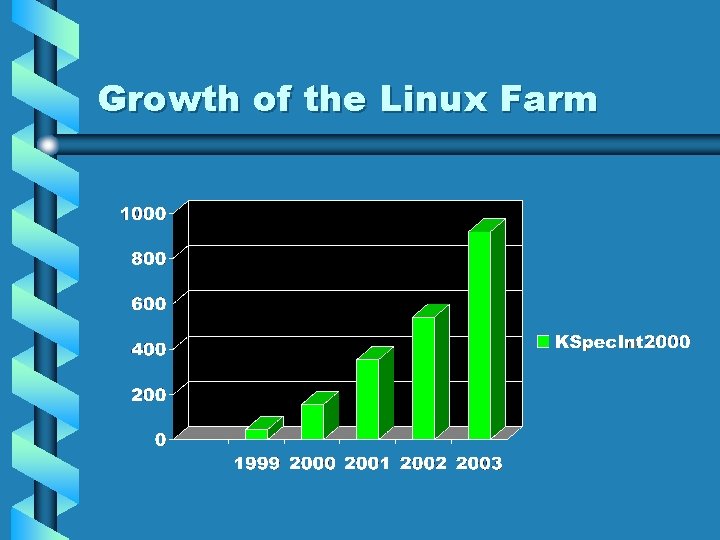

Growth of the Linux Farm

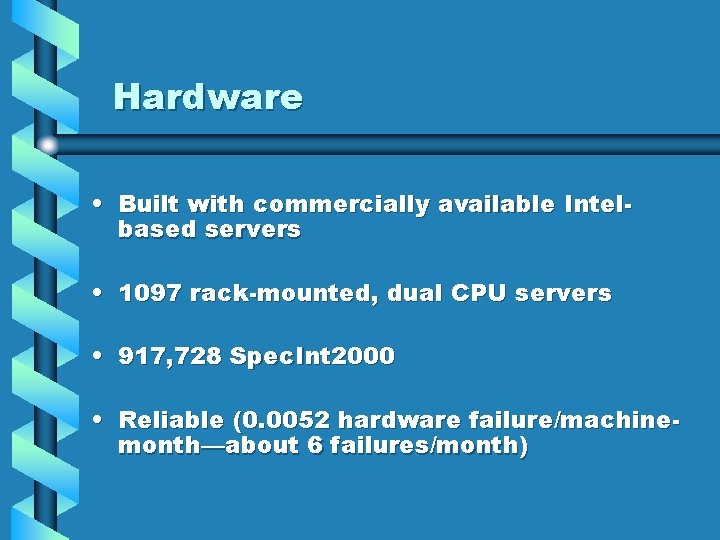

Hardware
• Built with commercially available Intel-based servers
• 1097 rack-mounted, dual-CPU servers
• 917,728 SPECint2000 in total
• Reliable: 0.0052 hardware failures per machine-month (about 6 failures per month across the farm)
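The reliability figure can be checked with simple arithmetic: multiplying the per-machine-month failure rate by the number of servers gives the expected farm-wide failures per month. The sketch below uses only the two numbers from this slide; the 30-day month in the second function is an assumption added for illustration.

```python
# Expected monthly hardware failures for the whole farm, using the figures
# quoted on the slide: 1097 servers and 0.0052 failures per machine-month.

def expected_failures_per_month(machines: int, rate_per_machine_month: float) -> float:
    """Expected failures per month = machines x failures per machine-month."""
    return machines * rate_per_machine_month


def mean_days_between_failures(machines: int, rate_per_machine_month: float,
                               days_per_month: float = 30.0) -> float:
    """Average gap between farm-wide failures; assumes a 30-day month."""
    return days_per_month / expected_failures_per_month(machines, rate_per_machine_month)


if __name__ == "__main__":
    monthly = expected_failures_per_month(1097, 0.0052)
    print(f"{monthly:.2f} failures/month")  # 5.70, i.e. about 6
    print(f"one failure every {mean_days_between_failures(1097, 0.0052):.1f} days")
```

At 1097 x 0.0052, roughly 5.7 failures per month, the "about 6" on the slide follows directly, which works out to one failed server about every five days.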

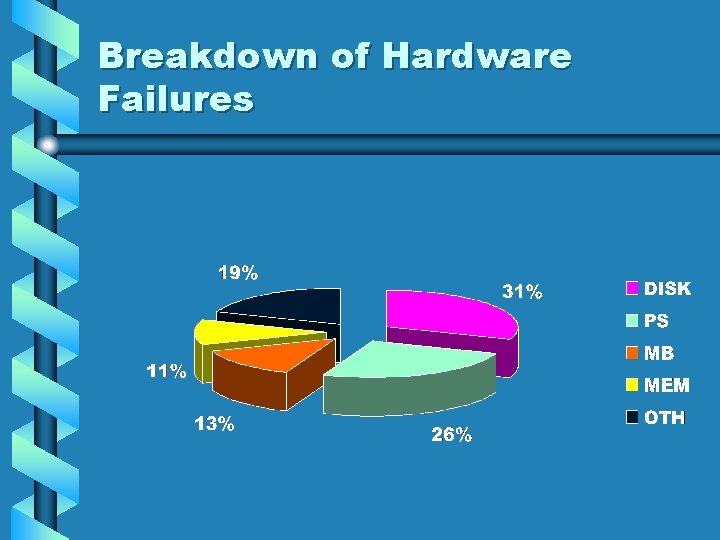

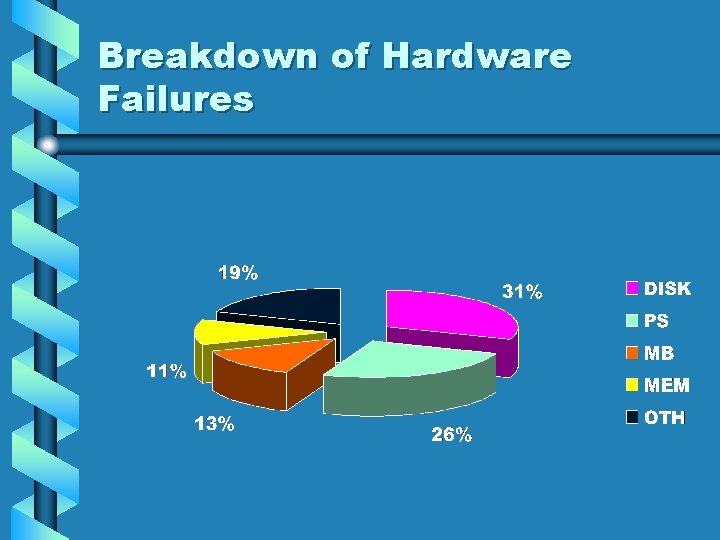

Breakdown of Hardware Failures

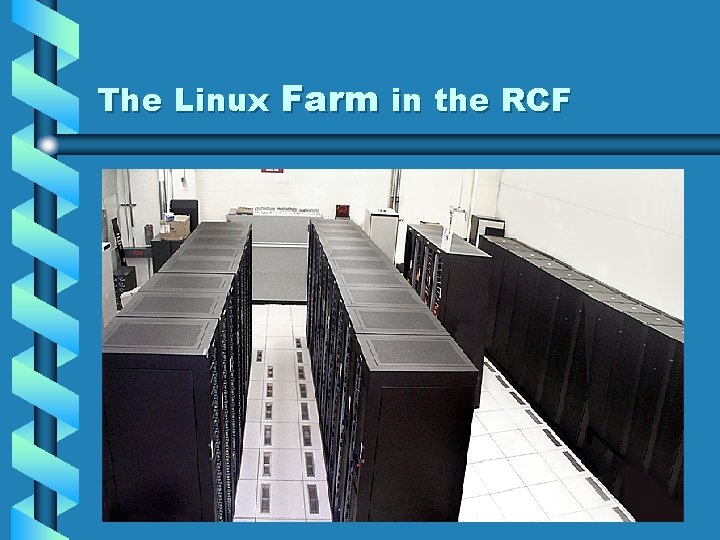

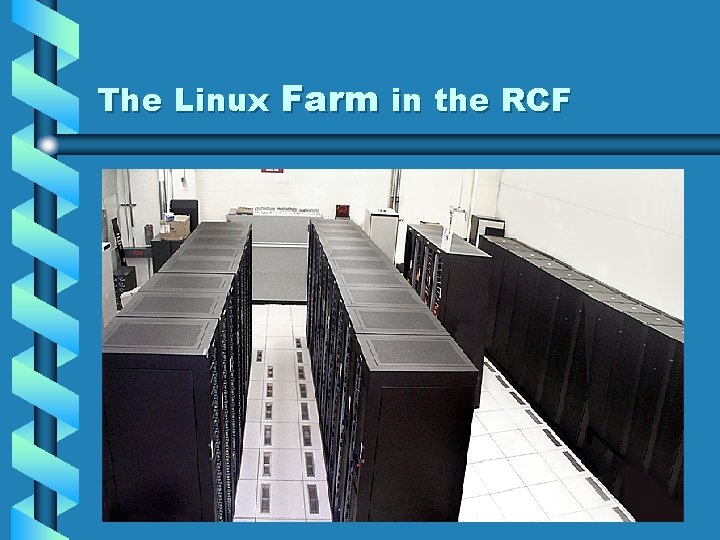

The Linux Farm in the RCF

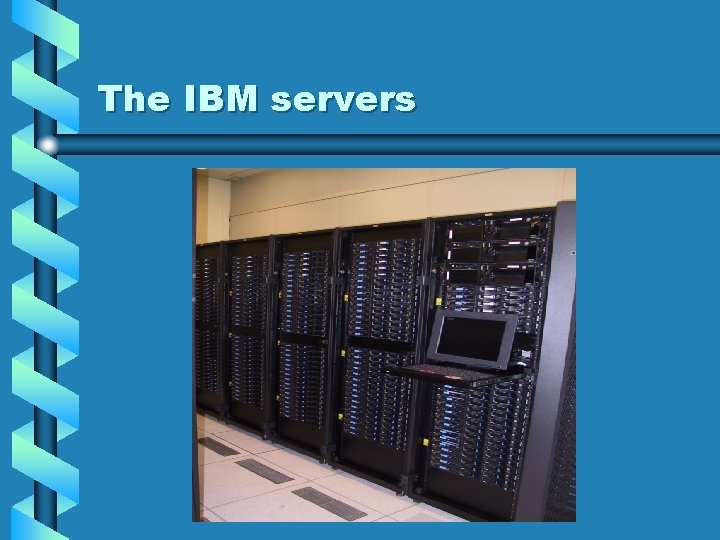

The IBM servers

The VA Linux servers

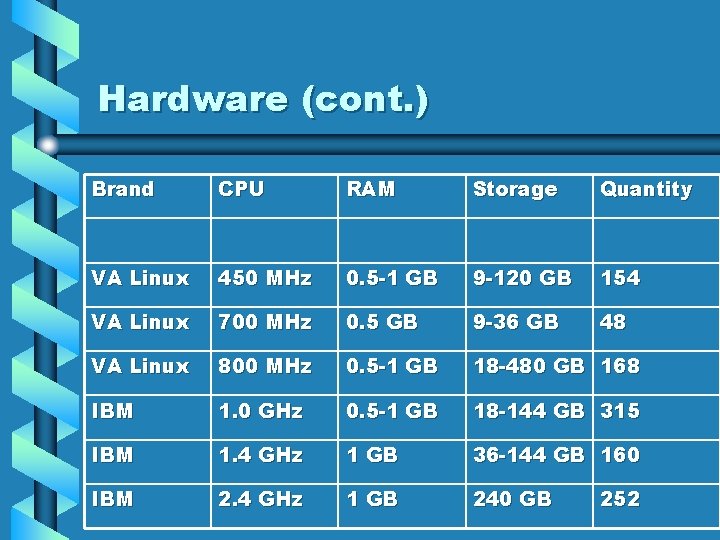

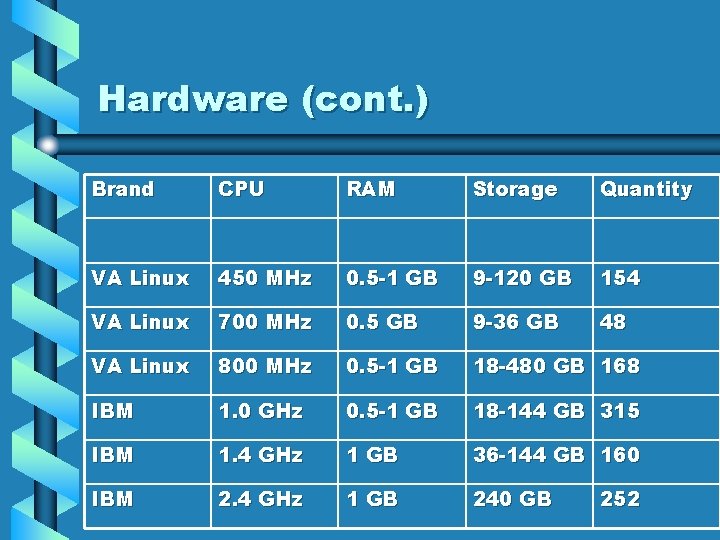

Hardware (cont.)

| Brand | CPU | RAM | Storage | Quantity |
|---|---|---|---|---|
| VA Linux | 450 MHz | 0.5-1 GB | 9-120 GB | 154 |
| VA Linux | 700 MHz | 0.5 GB | 9-36 GB | 48 |
| VA Linux | 800 MHz | 0.5-1 GB | 18-480 GB | 168 |
| IBM | 1.0 GHz | 0.5-1 GB | 18-144 GB | 315 |
| IBM | 1.4 GHz | 1 GB | 36-144 GB | 160 |
| IBM | 2.4 GHz | 1 GB | 240 GB | 252 |
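As a consistency check, the per-model quantities in the hardware table should add up to the 1097 servers quoted earlier. This minimal sketch tallies the table rows by brand; the two-CPUs-per-server constant comes from the "dual CPU" description of the farm.

```python
# Server inventory transcribed from the hardware table: (brand, CPU clock, quantity).
INVENTORY = [
    ("VA Linux", "450 MHz", 154),
    ("VA Linux", "700 MHz", 48),
    ("VA Linux", "800 MHz", 168),
    ("IBM", "1.0 GHz", 315),
    ("IBM", "1.4 GHz", 160),
    ("IBM", "2.4 GHz", 252),
]

CPUS_PER_SERVER = 2  # every server in the farm is dual-CPU


def total_servers(inventory) -> int:
    """Sum the quantity column."""
    return sum(qty for _brand, _clock, qty in inventory)


def totals_by_brand(inventory) -> dict:
    """Group the quantity column by vendor."""
    totals = {}
    for brand, _clock, qty in inventory:
        totals[brand] = totals.get(brand, 0) + qty
    return totals


if __name__ == "__main__":
    print(totals_by_brand(INVENTORY))  # {'VA Linux': 370, 'IBM': 727}
    print(total_servers(INVENTORY), "servers,",
          total_servers(INVENTORY) * CPUS_PER_SERVER, "CPUs")  # 1097 servers, 2194 CPUs
```

The table therefore accounts for all 1097 servers (370 VA Linux, 727 IBM), or 2194 CPUs.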

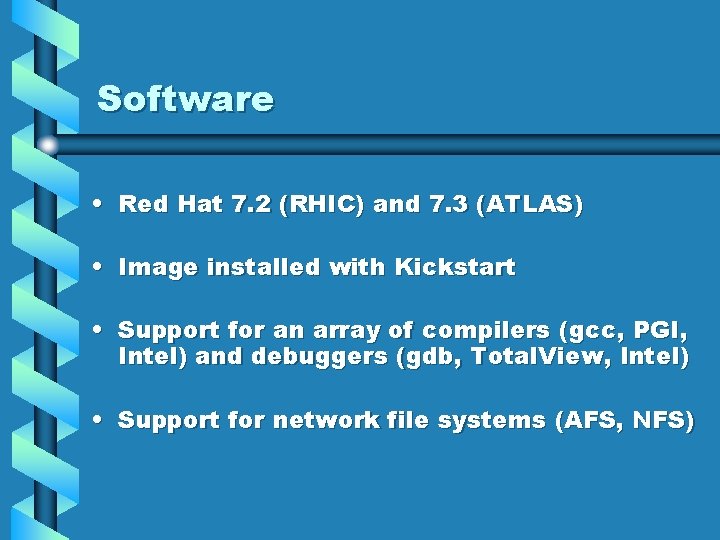

Software
• Red Hat 7.2 (RHIC) and 7.3 (ATLAS)
• Image installed with Kickstart
• Support for an array of compilers (gcc, PGI, Intel) and debuggers (gdb, TotalView, Intel)
• Support for network file systems (AFS, NFS)

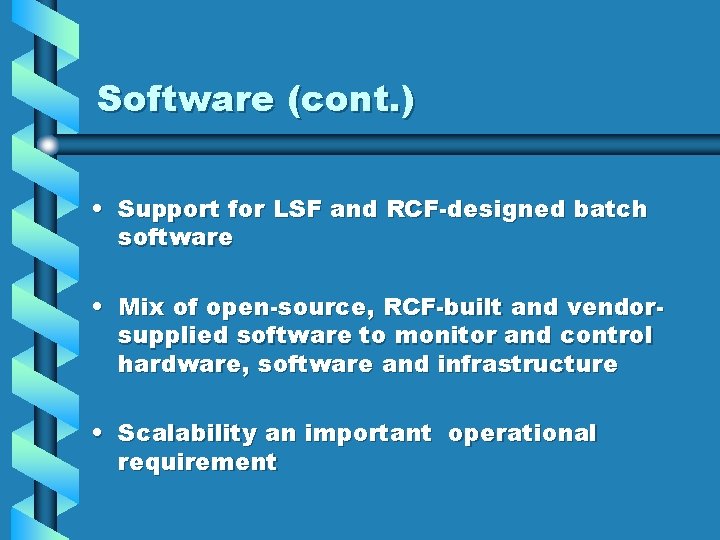

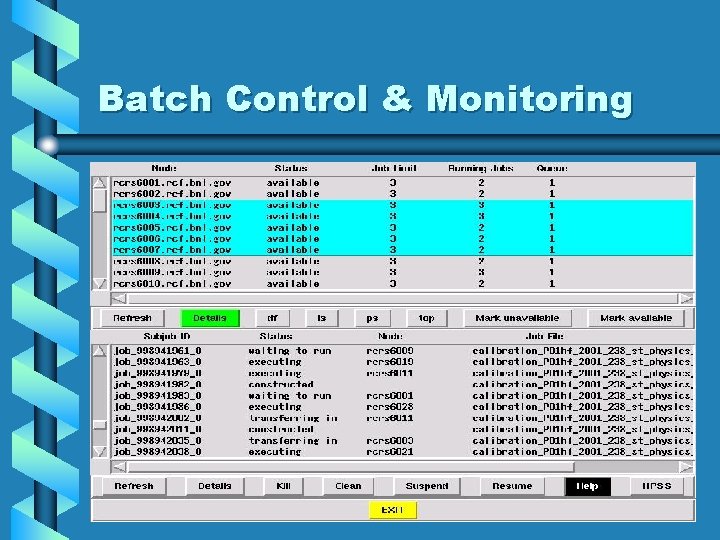

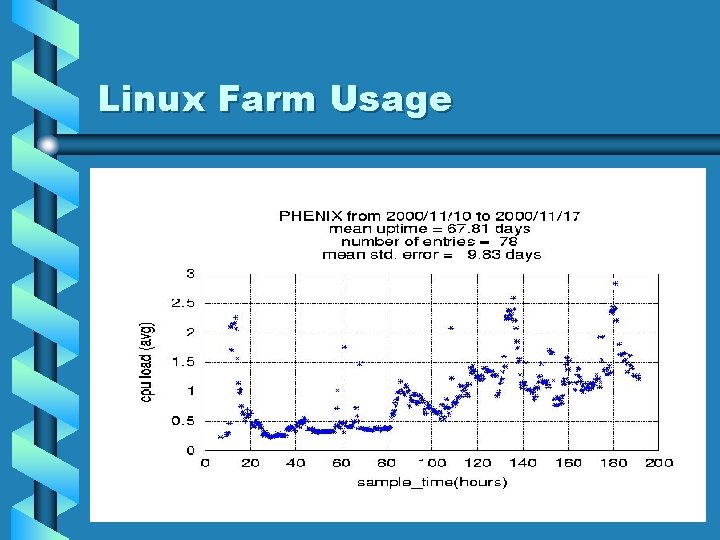

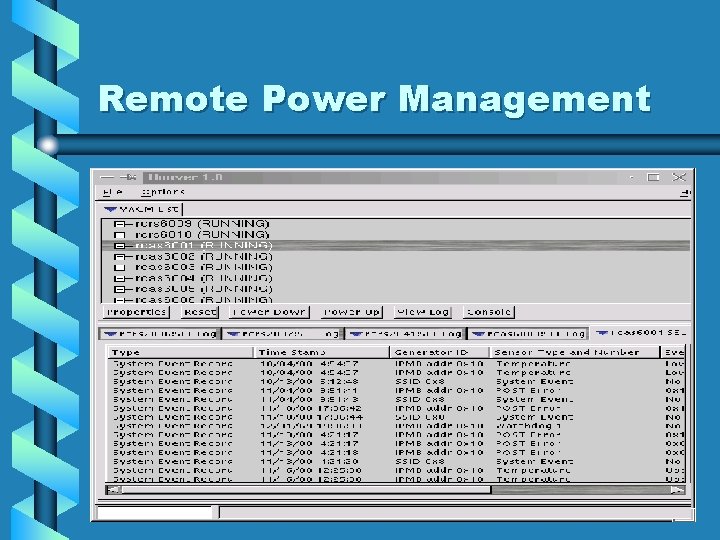

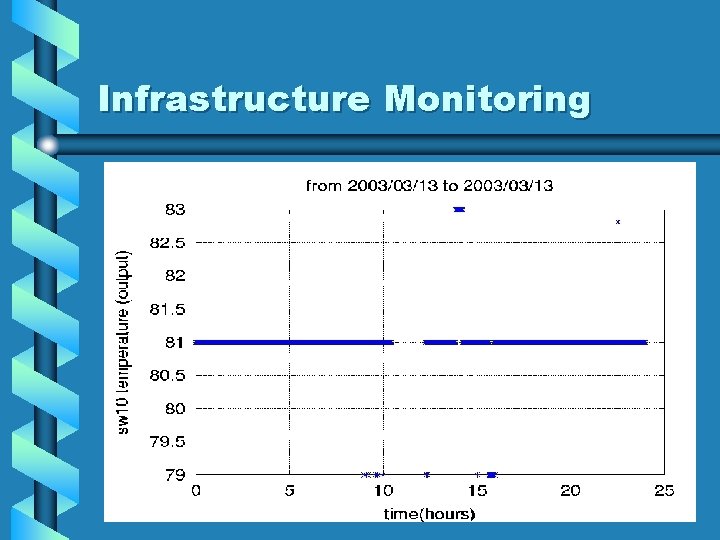

Software (cont.)
• Support for LSF and RCF-designed batch software
• Mix of open-source, RCF-built and vendor-supplied software to monitor and control hardware, software and infrastructure
• Scalability is an important operational requirement

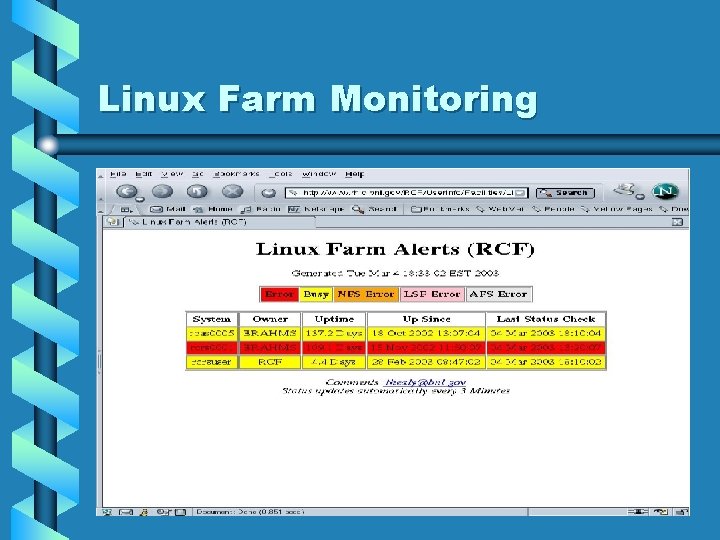

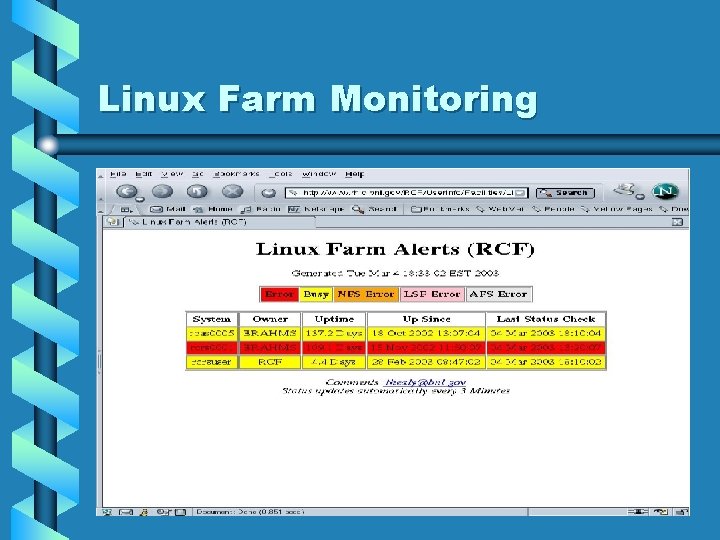

Linux Farm Monitoring

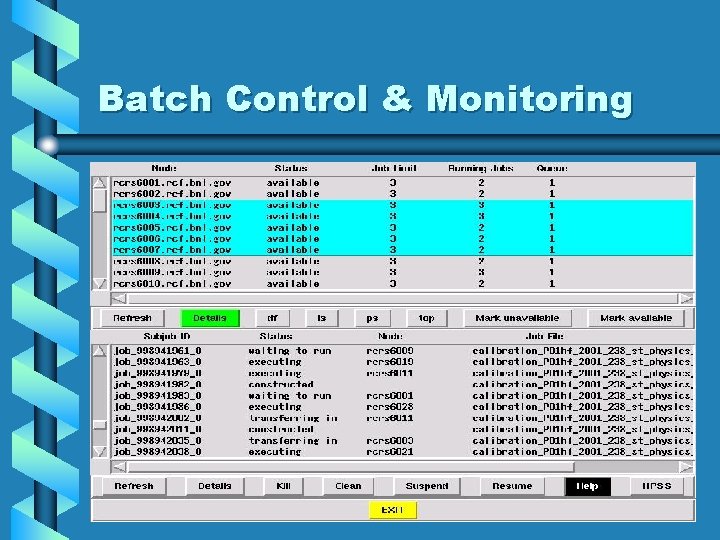

Batch Control & Monitoring

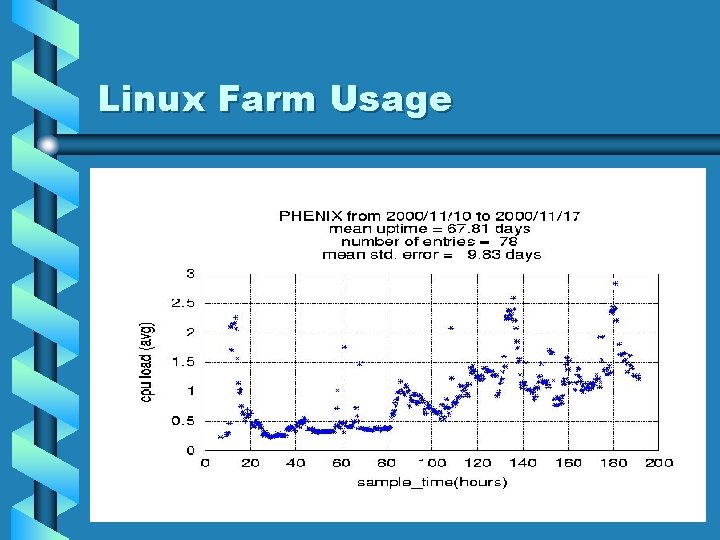

Linux Farm Usage

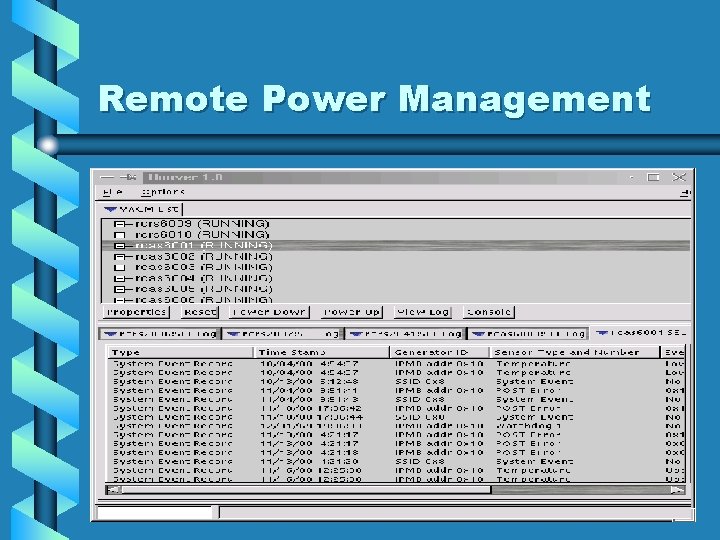

Remote Power Management

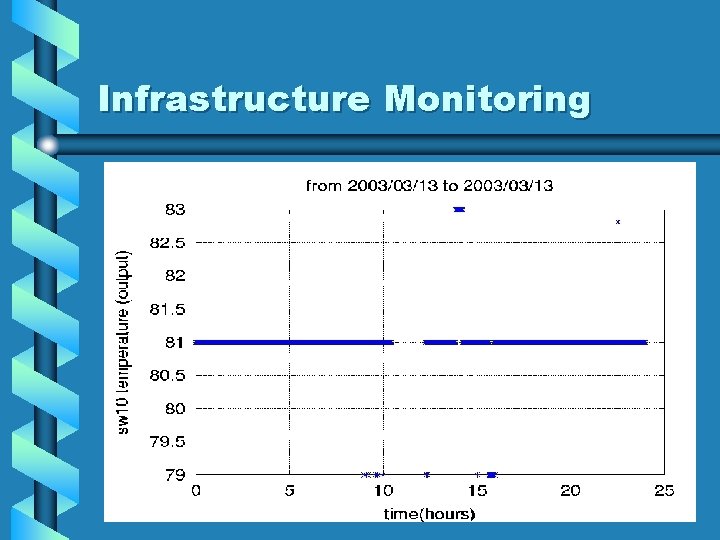

Infrastructure Monitoring

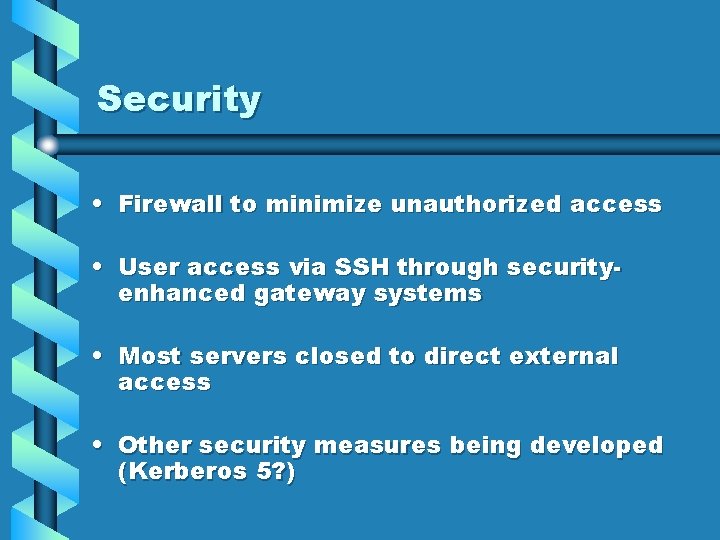

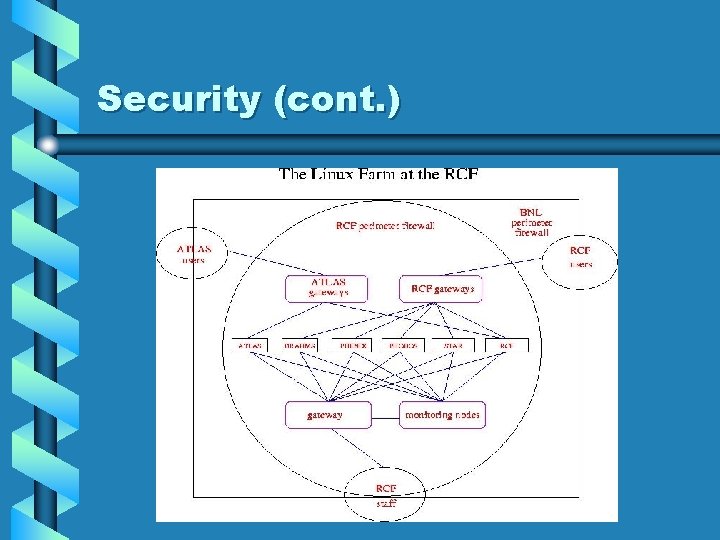

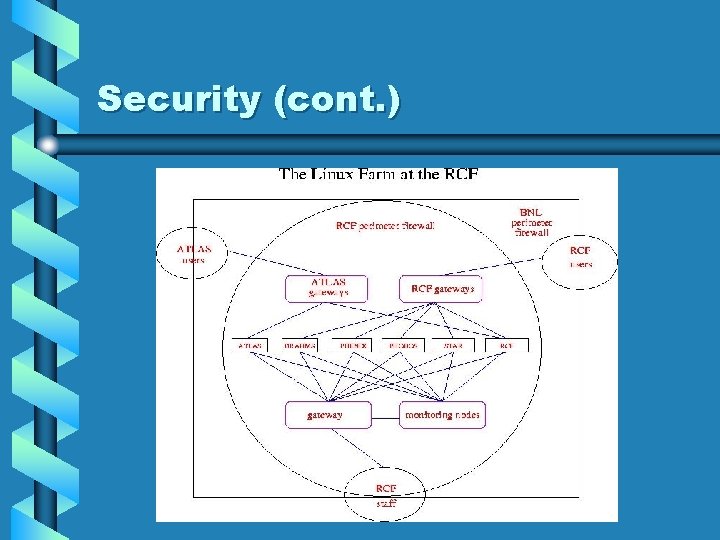

Security
• Firewall to minimize unauthorized access
• User access via SSH through security-enhanced gateway systems
• Most servers closed to direct external access
• Other security measures being developed (Kerberos 5?)
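The access model on this slide amounts to a default-deny rule for outside traffic: external users may reach only the gateway systems, and only over SSH, and then hop from a gateway to the farm nodes. The sketch below models that rule as a single predicate. The gateway names `gw01` and `gw02` are hypothetical, and treating all internal traffic as unfiltered is a simplifying assumption, not a description of the actual RCF firewall rules.

```python
from dataclasses import dataclass

# Hypothetical gateway host names; the slide does not name the RCF gateways.
GATEWAYS = {"gw01", "gw02"}
SSH_PORT = 22


@dataclass(frozen=True)
class Connection:
    source_is_external: bool  # True if the client is outside the firewall
    dest_host: str
    dest_port: int


def is_allowed(conn: Connection) -> bool:
    """Default-deny for external traffic: only SSH to a gateway passes.

    Assumption: internal traffic is not filtered in this simplified model.
    """
    if not conn.source_is_external:
        return True
    return conn.dest_host in GATEWAYS and conn.dest_port == SSH_PORT


if __name__ == "__main__":
    print(is_allowed(Connection(True, "gw01", 22)))       # SSH to a gateway: allowed
    print(is_allowed(Connection(True, "node0001", 22)))   # direct to a farm node: blocked
```

Under this model a remote user reaches a farm node in two steps: SSH to a gateway (allowed), then from the gateway to the node (internal, allowed).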

Security (cont.)

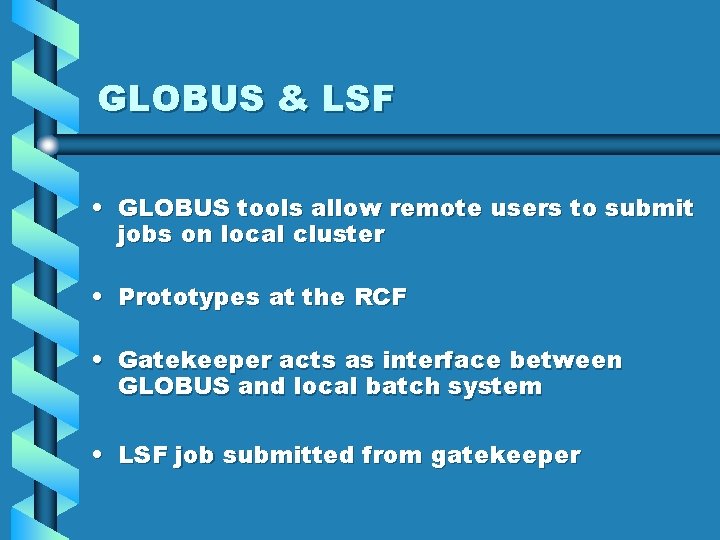

GRID-like capabilities
• Ganglia (monitoring & job scheduler)
• Condor (batch software)
• GLOBUS & LSF batch

Ganglia
• Open-source monitoring software (http://sourceforge.net/projects/ganglia)
• Can create a federation of clusters
• Keeps historical data
• Can be used as a job scheduler in a GRID-like environment
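To illustrate how Ganglia data could drive job placement across a federation, the sketch below reads the XML that a Ganglia daemon dumps when a client connects (gmond listens on TCP port 8649 by default) and picks the host with the lowest `load_one` across all clusters. The sample document is trimmed (real output carries more attributes per element), and the cluster and host names are hypothetical. Choosing the least-loaded host is a deliberately simple policy assumed for illustration, not how the RCF prototype schedules jobs.

```python
import socket
import xml.etree.ElementTree as ET


def fetch_ganglia_xml(host: str, port: int = 8649) -> str:
    """Read the full XML dump a Ganglia daemon sends on connect, until it closes."""
    chunks = []
    with socket.create_connection((host, port), timeout=10) as sock:
        while True:
            data = sock.recv(65536)
            if not data:
                break
            chunks.append(data)
    return b"".join(chunks).decode("utf-8", errors="replace")


def least_loaded_host(xml_text: str, metric: str = "load_one"):
    """Return (cluster, host, value) for the host with the lowest metric value."""
    root = ET.fromstring(xml_text)
    best = None
    for cluster in root.iter("CLUSTER"):
        for host in cluster.iter("HOST"):
            m = host.find(f"METRIC[@NAME='{metric}']")
            if m is None:
                continue  # host did not report this metric
            value = float(m.get("VAL"))
            if best is None or value < best[2]:
                best = (cluster.get("NAME"), host.get("NAME"), value)
    return best


# Trimmed, hypothetical federation of two clusters.
SAMPLE_XML = """\
<GANGLIA_XML>
  <GRID NAME="RCF">
    <CLUSTER NAME="RHIC">
      <HOST NAME="rhic001"><METRIC NAME="load_one" VAL="1.75"/></HOST>
      <HOST NAME="rhic002"><METRIC NAME="load_one" VAL="0.40"/></HOST>
    </CLUSTER>
    <CLUSTER NAME="ATLAS">
      <HOST NAME="atlas001"><METRIC NAME="load_one" VAL="2.10"/></HOST>
      <HOST NAME="atlas002"><METRIC NAME="load_one" VAL="0.10"/></HOST>
    </CLUSTER>
  </GRID>
</GANGLIA_XML>
"""

if __name__ == "__main__":
    print(least_loaded_host(SAMPLE_XML))  # ('ATLAS', 'atlas002', 0.1)
```

Because the federated view nests several `CLUSTER` elements under one document, the same loop covers one cluster or many.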

Ganglia (cont.)
• Web interface
• Prototype running for RHIC and ATLAS
• Scalability issues
• Downsides: cannot (yet) easily restrict data access; not easily customized

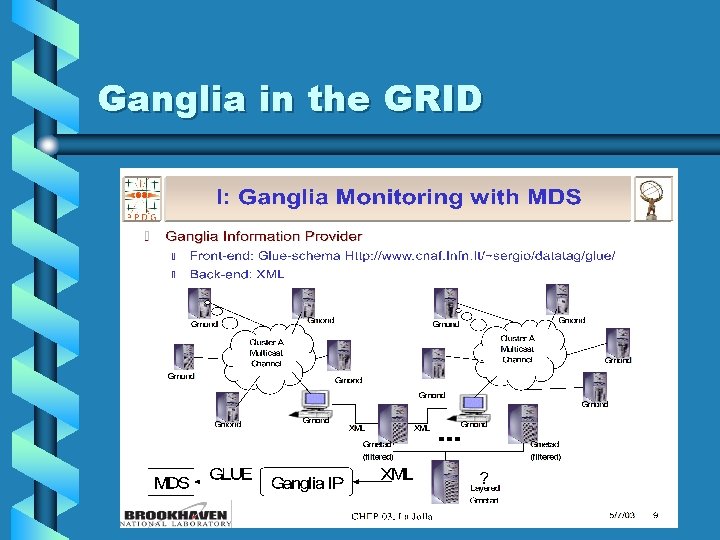

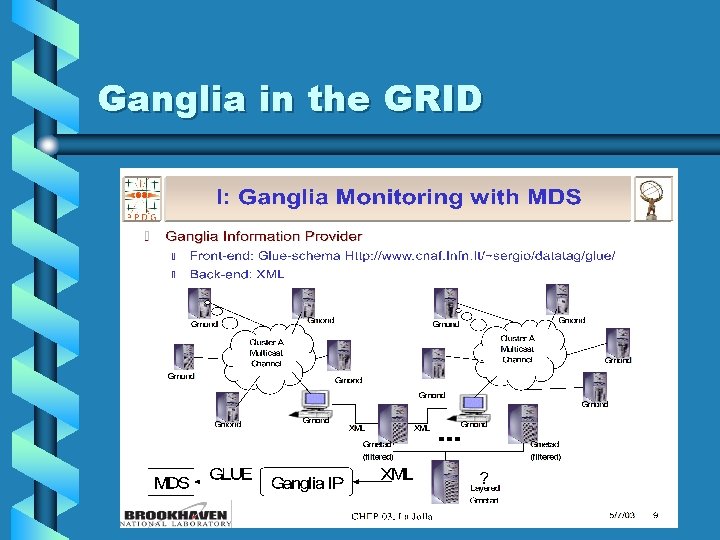

Ganglia in the GRID

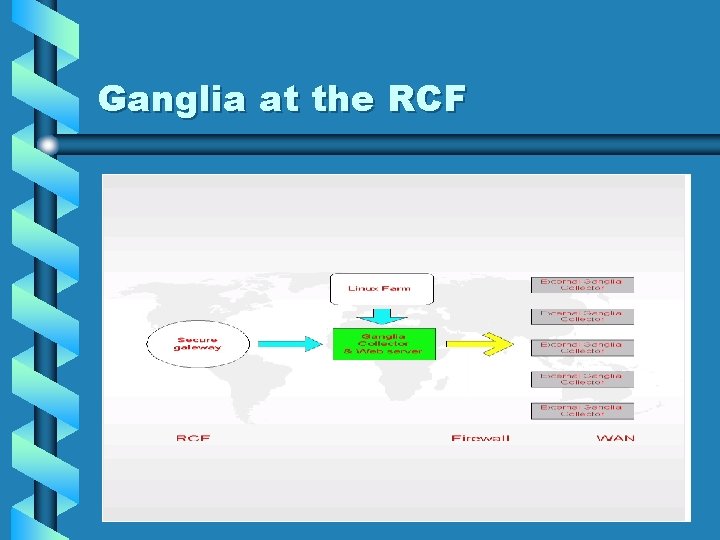

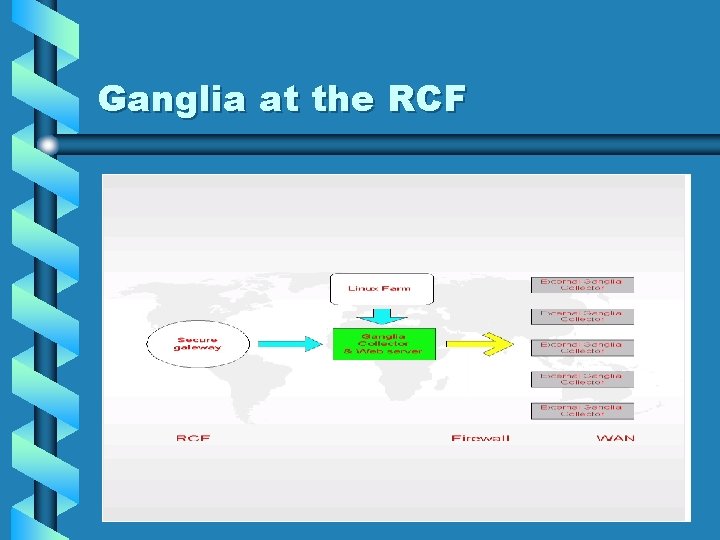

Ganglia at the RCF

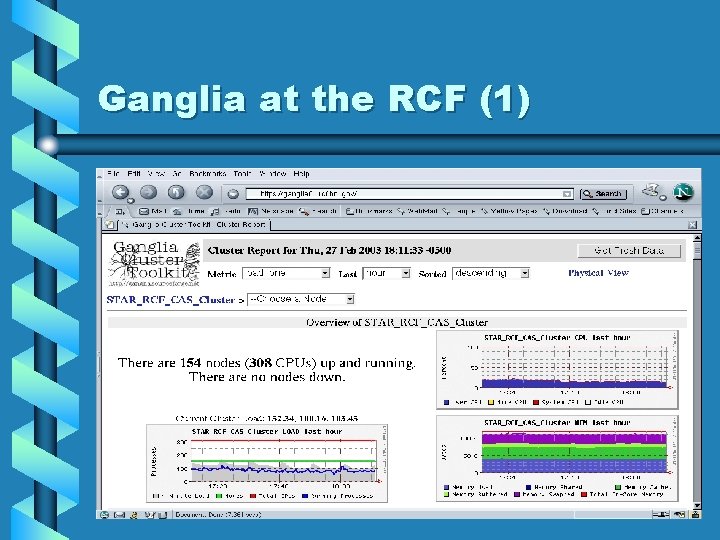

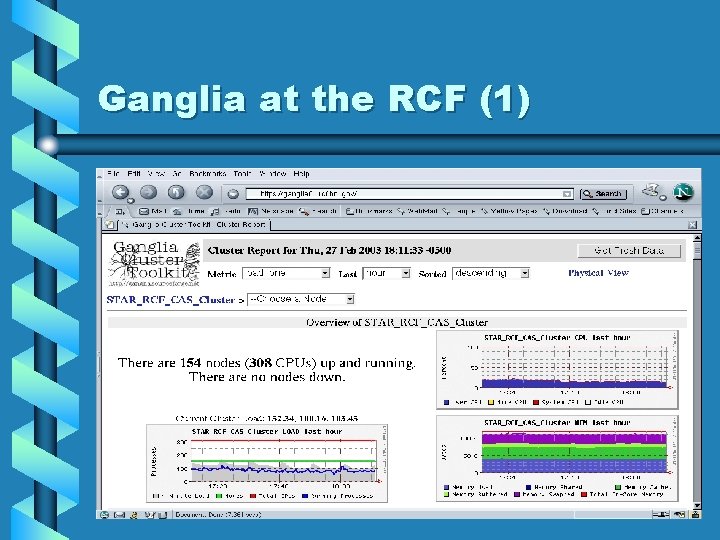

Ganglia at the RCF (1)

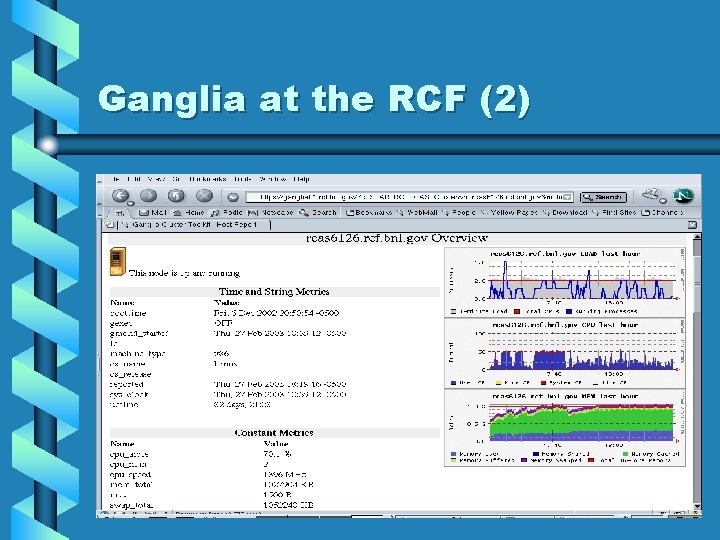

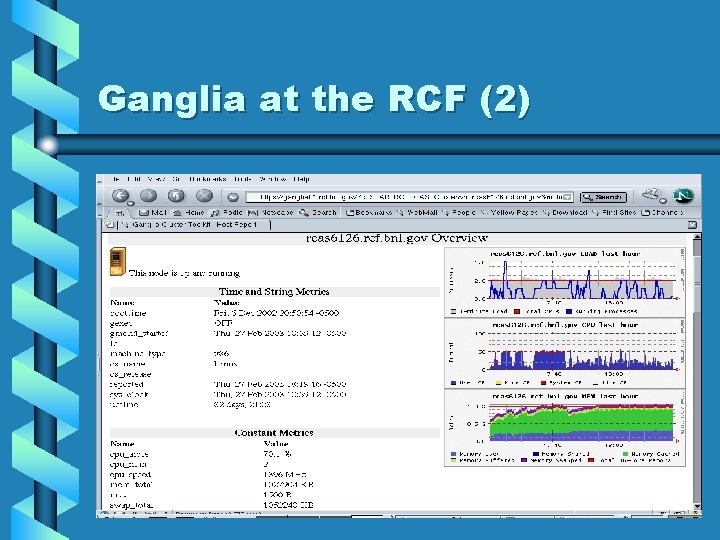

Ganglia at the RCF (2)

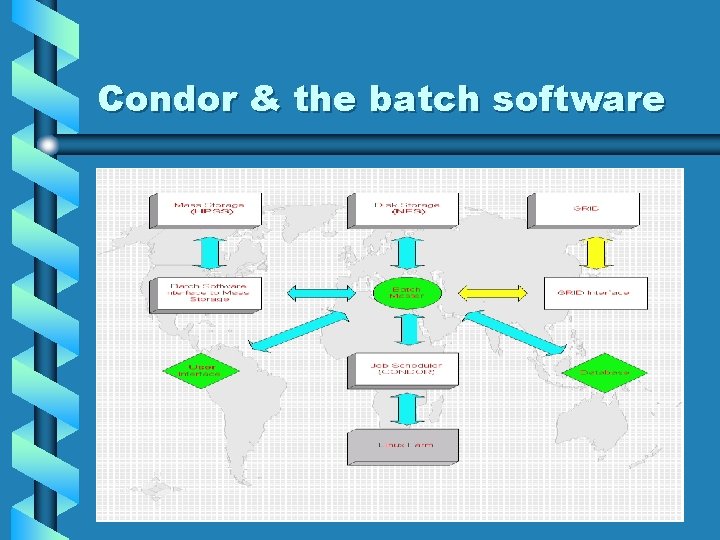

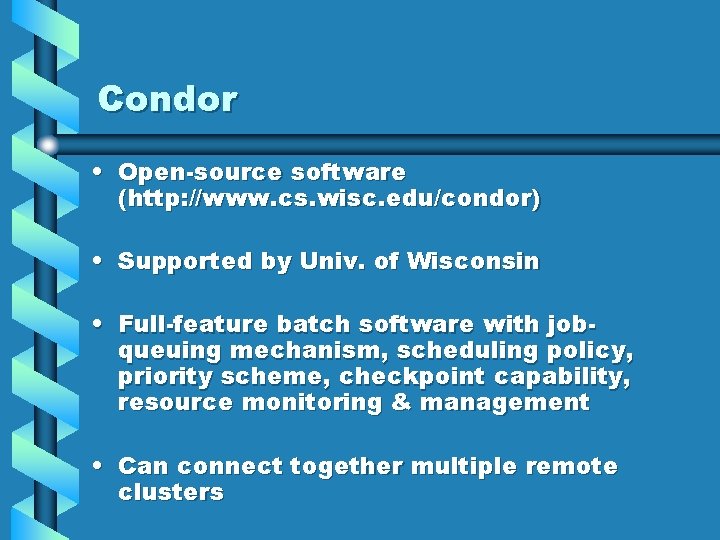

Condor
• Open-source software (http://www.cs.wisc.edu/condor)
• Supported by the University of Wisconsin
• Full-featured batch software with a job-queuing mechanism, scheduling policy, priority scheme, checkpoint capability, and resource monitoring & management
• Can connect multiple remote clusters together
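One way to picture Condor's priority scheme: each user has an effective user priority where a lower number is better, and machines are shared roughly in inverse proportion to that number, so a user at 5 gets about twice the share of a user at 10. The sketch below is a simplified model of that idea only; Condor's real negotiator also handles priority factors, preemption and matchmaking, none of which are modelled here. User names are hypothetical.

```python
from fractions import Fraction
from math import floor


def allocate_slots(effective_priority: dict[str, float], slots: int) -> dict[str, int]:
    """Split `slots` among users in proportion to 1 / effective priority.

    Lower priority values are better, so they receive more slots. Slots left
    over after flooring go to the users with the largest fractional remainder.
    Fractions keep the arithmetic exact, avoiding float rounding surprises.
    """
    weights = {user: 1 / Fraction(p) for user, p in effective_priority.items()}
    total = sum(weights.values())
    exact = {user: w / total * slots for user, w in weights.items()}
    alloc = {user: floor(x) for user, x in exact.items()}
    leftover = slots - sum(alloc.values())
    by_remainder = sorted(exact, key=lambda u: exact[u] - alloc[u], reverse=True)
    for user in by_remainder[:leftover]:
        alloc[user] += 1
    return alloc


if __name__ == "__main__":
    print(allocate_slots({"alice": 5, "bob": 10}, 30))  # {'alice': 20, 'bob': 10}
```

The largest-remainder step guarantees every slot is handed out, since the flooring losses always sum to fewer slots than there are users.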

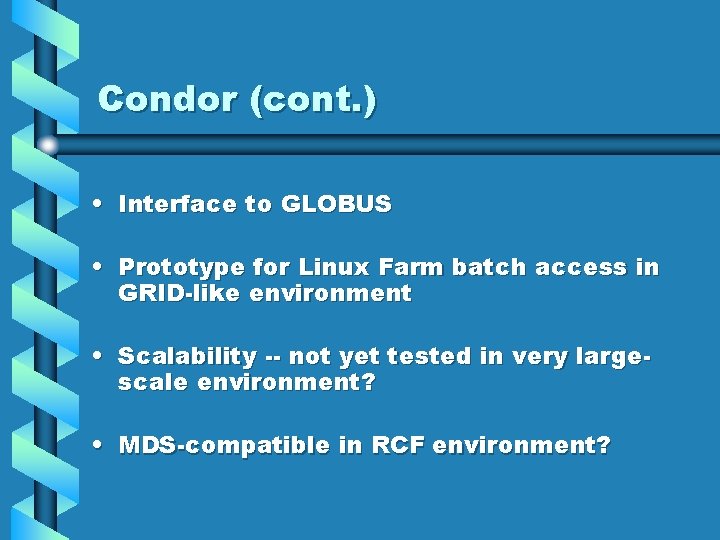

Condor (cont.)
• Interface to GLOBUS
• Prototype for Linux Farm batch access in a GRID-like environment
• Scalability: not yet tested in a very large-scale environment?
• MDS-compatible in the RCF environment?
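Condor's interface to GLOBUS (Condor-G) lets a user describe a job in an ordinary Condor submit description and have it forwarded to a remote GLOBUS gatekeeper. The sketch below generates such a description using the `globus` universe and `globusscheduler` keyword of Condor releases from this period (later releases express the same thing with `universe = grid` and `grid_resource`). The gatekeeper host name is hypothetical, and the default `jobmanager-lsf` assumes the gatekeeper hands jobs to LSF.

```python
def condor_g_submit(executable: str, gatekeeper: str,
                    jobmanager: str = "jobmanager-lsf",
                    arguments: str = "", log: str = "job.log") -> str:
    """Build a Condor-G submit description that targets a GLOBUS gatekeeper."""
    lines = [
        "universe = globus",
        f"globusscheduler = {gatekeeper}/{jobmanager}",
        f"executable = {executable}",
    ]
    if arguments:
        lines.append(f"arguments = {arguments}")
    lines += [
        "output = job.out",  # job stdout, copied back by Condor-G
        "error = job.err",   # job stderr
        f"log = {log}",      # Condor's own event log for this job
        "queue",             # submit one instance of the job
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Hypothetical gatekeeper name, for illustration only.
    print(condor_g_submit("/bin/hostname", "gatekeeper.example.org"), end="")
```

Saved to a file such as `job.sub`, the output would be handed to `condor_submit job.sub`; Condor then queues the job locally and tracks it while the remote gatekeeper runs it.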

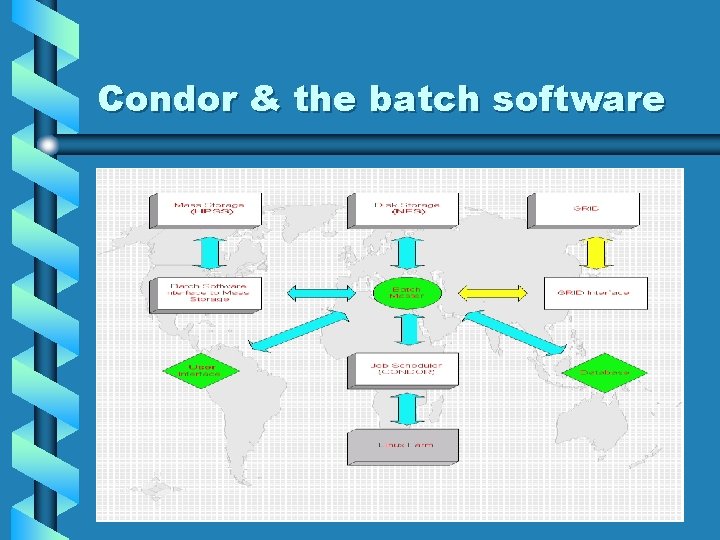

Condor & the batch software

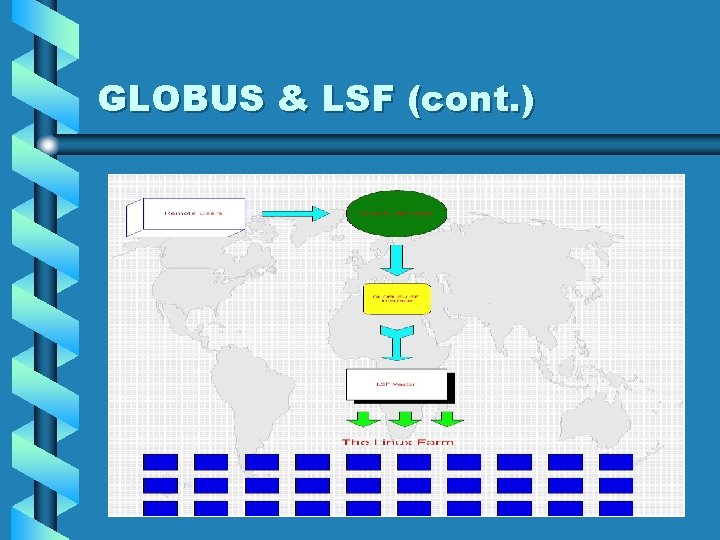

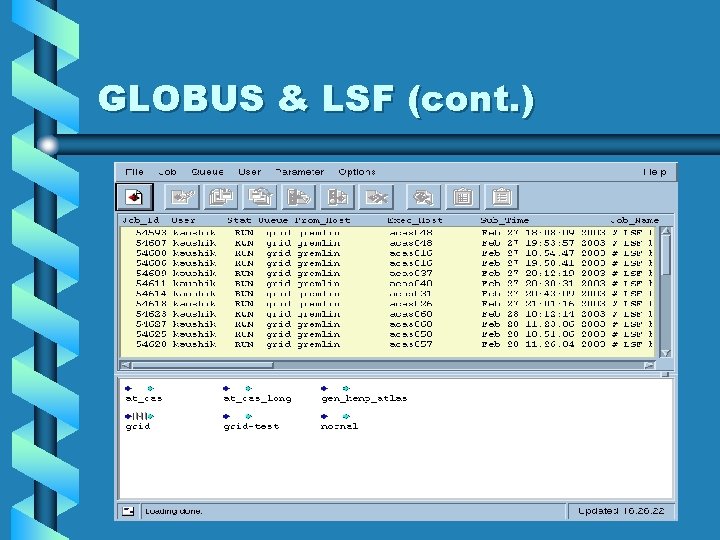

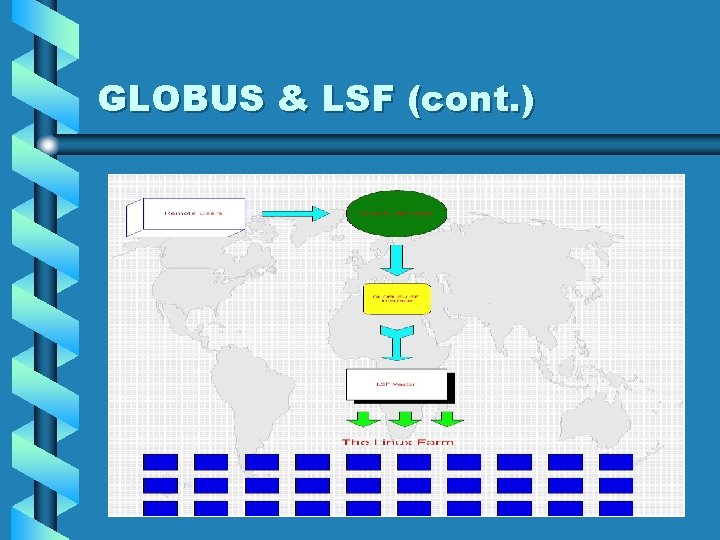

GLOBUS & LSF
• GLOBUS tools allow remote users to submit jobs to the local cluster
• Prototypes at the RCF
• Gatekeeper acts as the interface between GLOBUS and the local batch system
• LSF jobs submitted from the gatekeeper
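Conceptually, the gatekeeper's job manager translates a GLOBUS job request, written in RSL, into a local batch submission. The sketch below shows that translation for a flat RSL conjunction, mapping a few common attributes onto standard `bsub` options (`-q` queue, `-n` processor count, `-o`/`-e` output files). It is an illustration of the idea only, not the actual GLOBUS LSF job manager; it handles a single quoted `arguments` string, lowercases attribute names, and the queue name `short` is hypothetical.

```python
import re
import shlex

# Matches one RSL relation such as (count=2) or (executable="/bin/hostname").
_RELATION = re.compile(r'\(\s*(\w+)\s*=\s*("([^"]*)"|[^)\s]+)\s*\)')


def parse_rsl(rsl: str) -> dict[str, str]:
    """Parse a flat RSL conjunction like &(a=1)(b="x") into a dict."""
    rsl = rsl.strip()
    if not rsl.startswith("&"):
        raise ValueError("only simple '&' conjunctions are handled in this sketch")
    attrs = {}
    for m in _RELATION.finditer(rsl):
        # Group 3 holds the unquoted text of a quoted value; otherwise use group 2.
        value = m.group(3) if m.group(3) is not None else m.group(2)
        attrs[m.group(1).lower()] = value
    return attrs


def rsl_to_bsub(rsl: str) -> list[str]:
    """Translate a parsed RSL request into an LSF bsub argument vector."""
    attrs = parse_rsl(rsl)
    if "executable" not in attrs:
        raise ValueError("RSL request has no executable")
    cmd = ["bsub"]
    for rsl_name, flag in (("queue", "-q"), ("count", "-n"),
                           ("stdout", "-o"), ("stderr", "-e")):
        if rsl_name in attrs:
            cmd += [flag, attrs[rsl_name]]
    cmd.append(attrs["executable"])
    if "arguments" in attrs:
        cmd += shlex.split(attrs["arguments"])
    return cmd


if __name__ == "__main__":
    print(rsl_to_bsub('&(executable="/bin/hostname")(count=2)(queue="short")'))
    # ['bsub', '-q', 'short', '-n', '2', '/bin/hostname']
```

Returning an argument list rather than a shell string keeps the translation safe to pass to `subprocess.run` on the gatekeeper without re-quoting.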

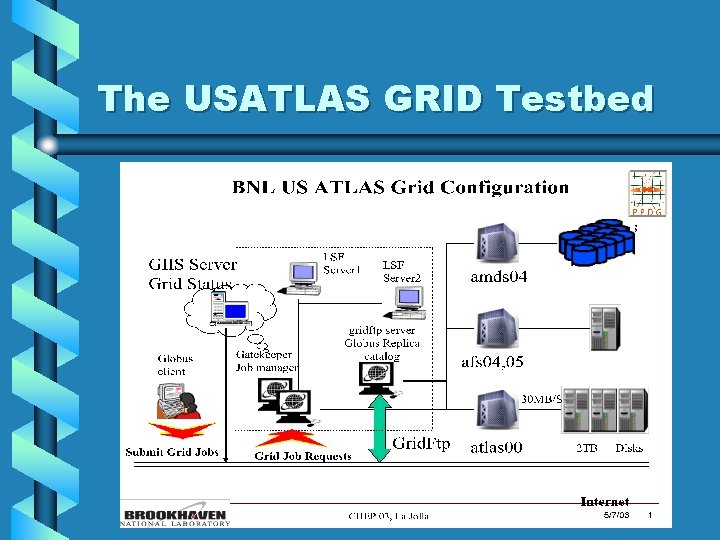

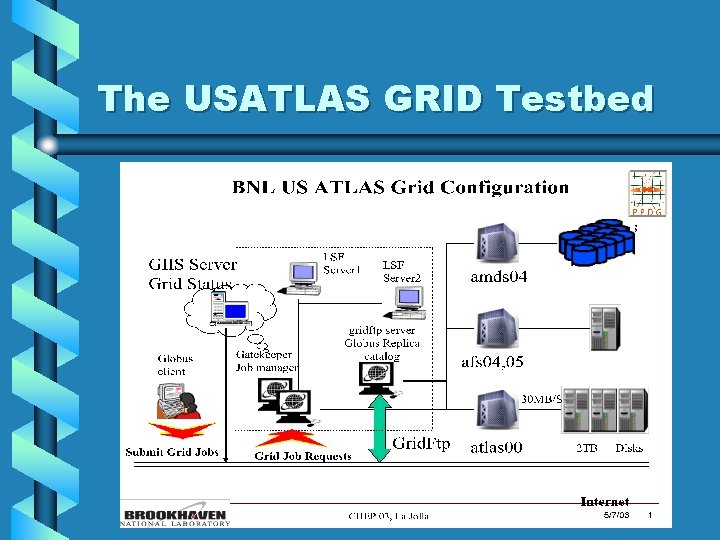

The USATLAS GRID Testbed

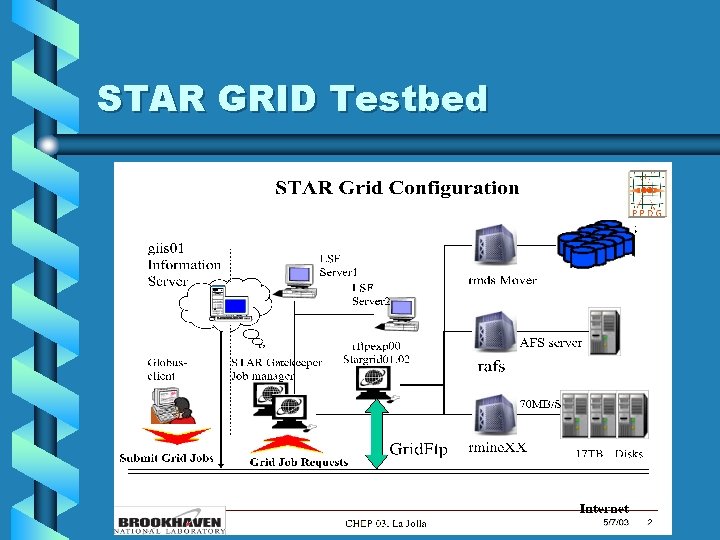

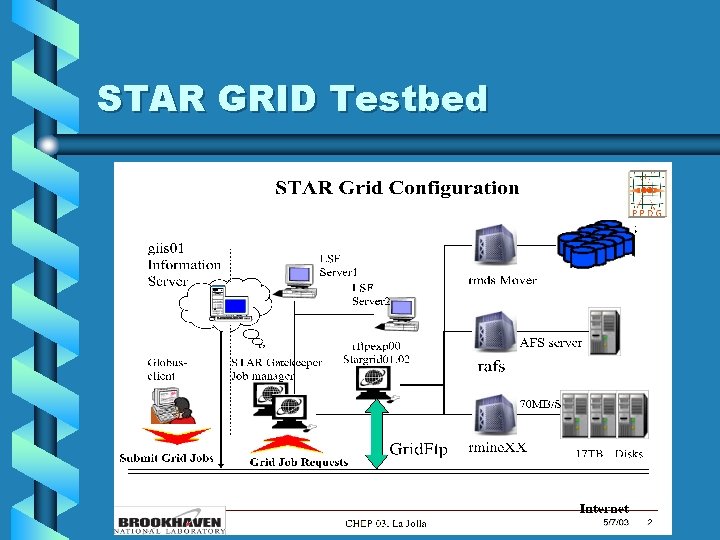

STAR GRID Testbed

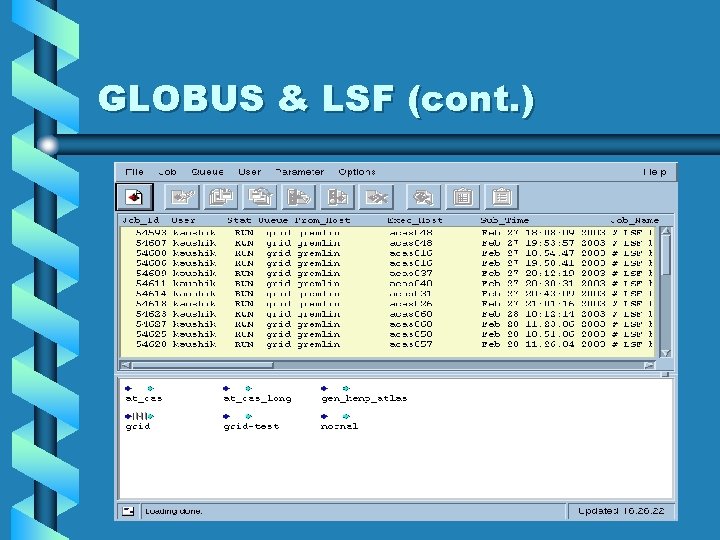

GLOBUS & LSF (cont.)

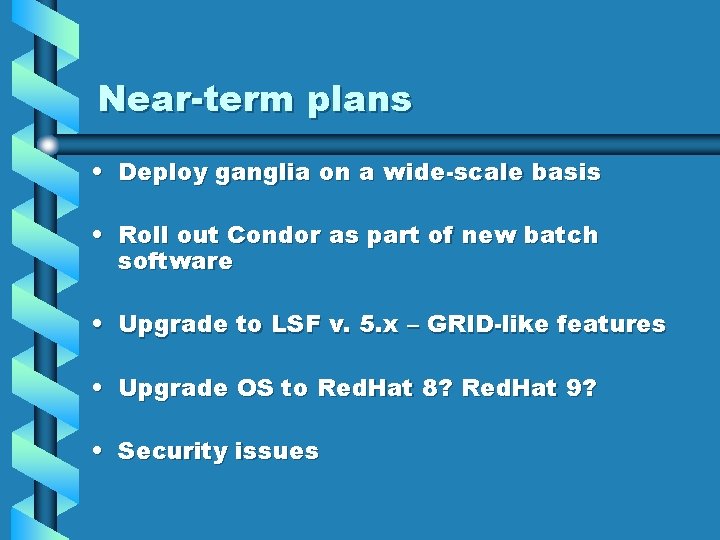

Near-term plans
• Deploy Ganglia on a wide-scale basis
• Roll out Condor as part of the new batch software
• Upgrade to LSF v5.x (GRID-like features)
• Upgrade OS to Red Hat 8? Red Hat 9?
• Address security issues