The Green Data Center and Energy Efficient Computing

- Slides: 25

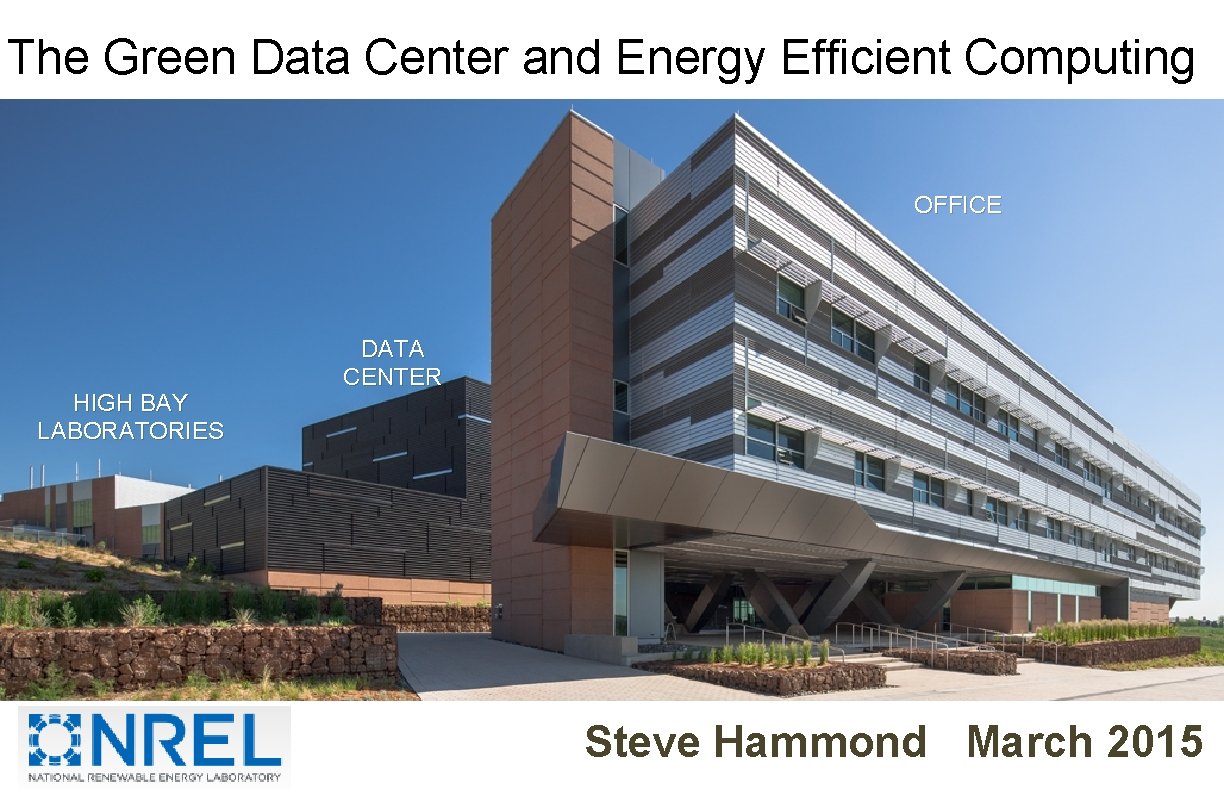

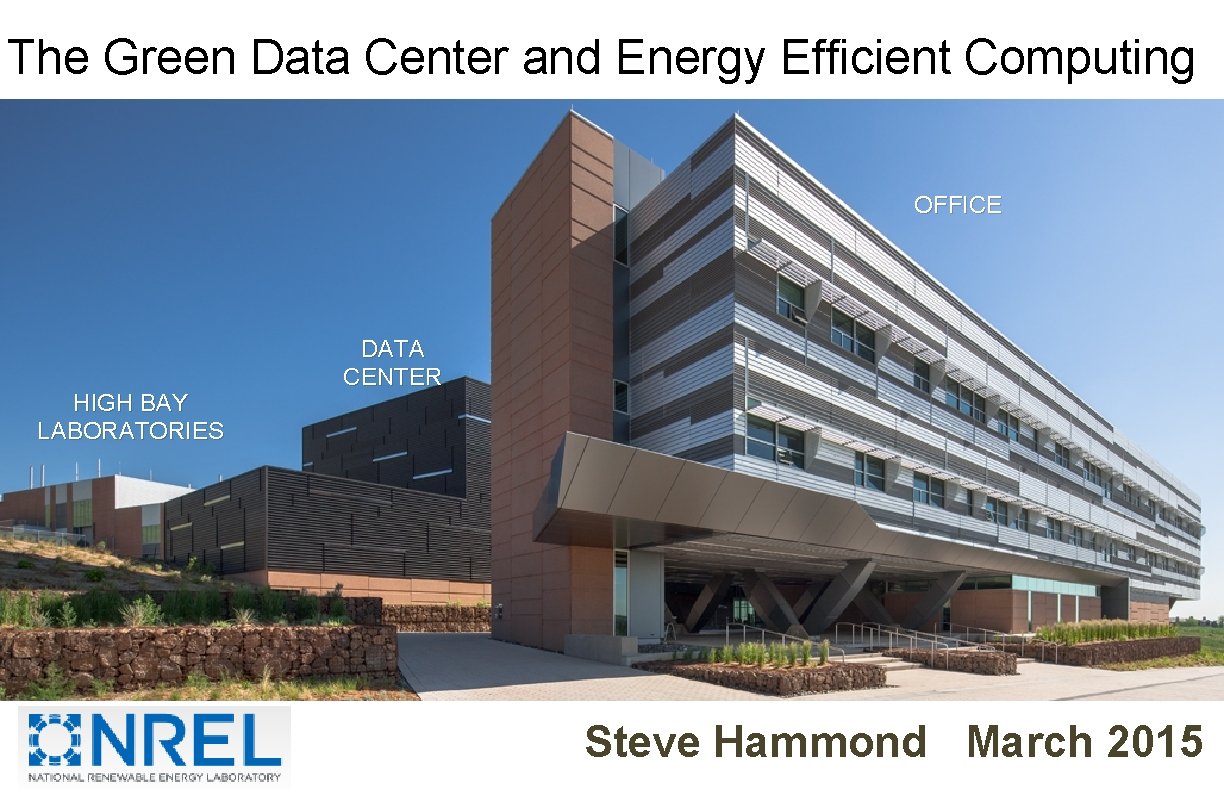

The Green Data Center and Energy Efficient Computing

Steve Hammond, March 2015

[Title slide image labels: Office, High Bay Laboratories, Data Center]


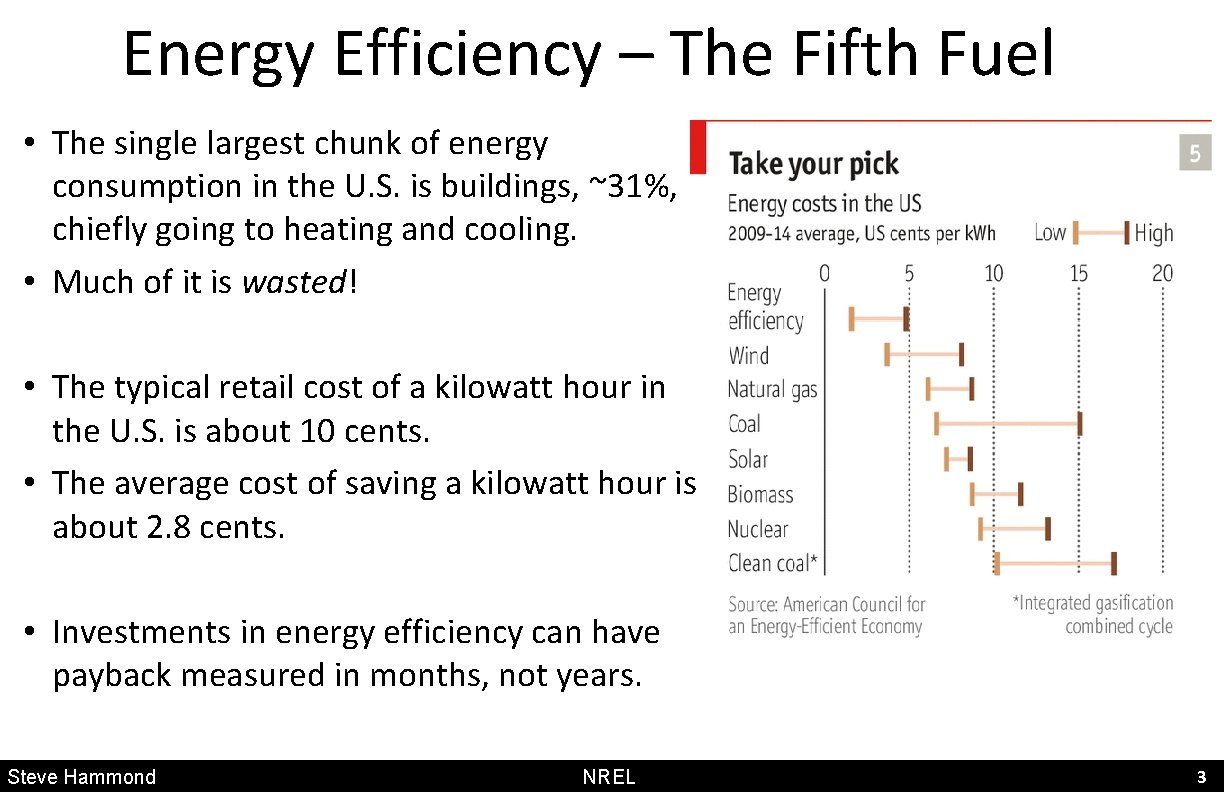

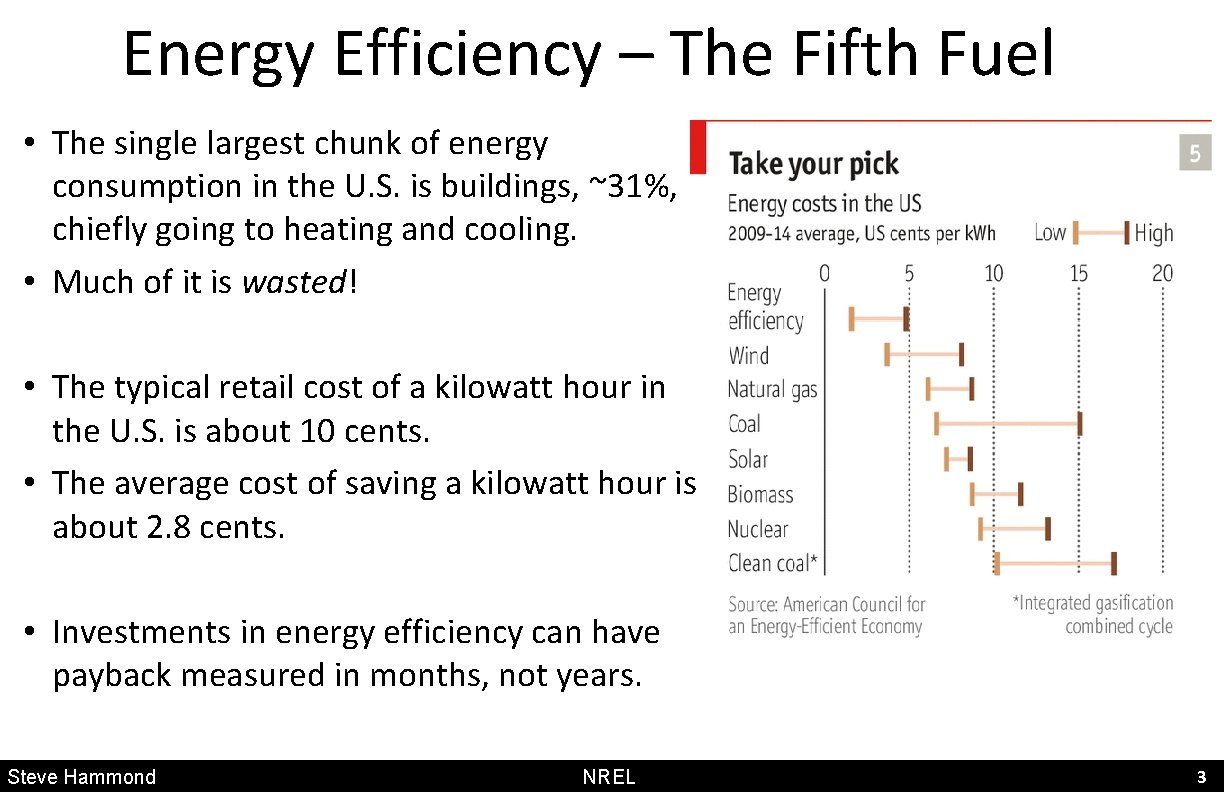

Energy Efficiency – The Fifth Fuel

- The single largest chunk of energy consumption in the U.S. is buildings, ~31%, chiefly going to heating and cooling.
- Much of it is wasted!
- The typical retail cost of a kilowatt hour in the U.S. is about 10 cents.
- The average cost of saving a kilowatt hour is about 2.8 cents.
- Investments in energy efficiency can have payback measured in months, not years.

Steve Hammond, National Renewable Energy Laboratory (NREL)
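The cost figures on this slide can be turned into a quick back-of-the-envelope check. The sketch below is illustrative only: the 10-cent and 2.8-cent figures come from the slide, while the function names and the example project (upfront cost and annual kWh saved) are hypothetical inputs, not numbers from the talk.

```python
RETAIL_USD_PER_KWH = 0.10    # typical U.S. retail cost, from the slide
SAVING_USD_PER_KWH = 0.028   # average cost of saving one kWh, from the slide


def net_benefit_per_kwh(retail=RETAIL_USD_PER_KWH, saving_cost=SAVING_USD_PER_KWH):
    """Dollars kept for every kWh that is saved instead of purchased."""
    return retail - saving_cost


def simple_payback_months(upfront_cost_usd, kwh_saved_per_year, retail=RETAIL_USD_PER_KWH):
    """Simple payback: upfront cost divided by the avoided monthly utility spend."""
    if kwh_saved_per_year <= 0:
        raise ValueError("kwh_saved_per_year must be positive")
    annual_savings_usd = kwh_saved_per_year * retail
    return 12.0 * upfront_cost_usd / annual_savings_usd


if __name__ == "__main__":
    # Hypothetical project: $50,000 upfront, saving 1,000,000 kWh per year.
    print(net_benefit_per_kwh())
    print(simple_payback_months(50_000, 1_000_000))
```

Simple payback ignores discounting and maintenance, so treat the result as a rough screening number.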

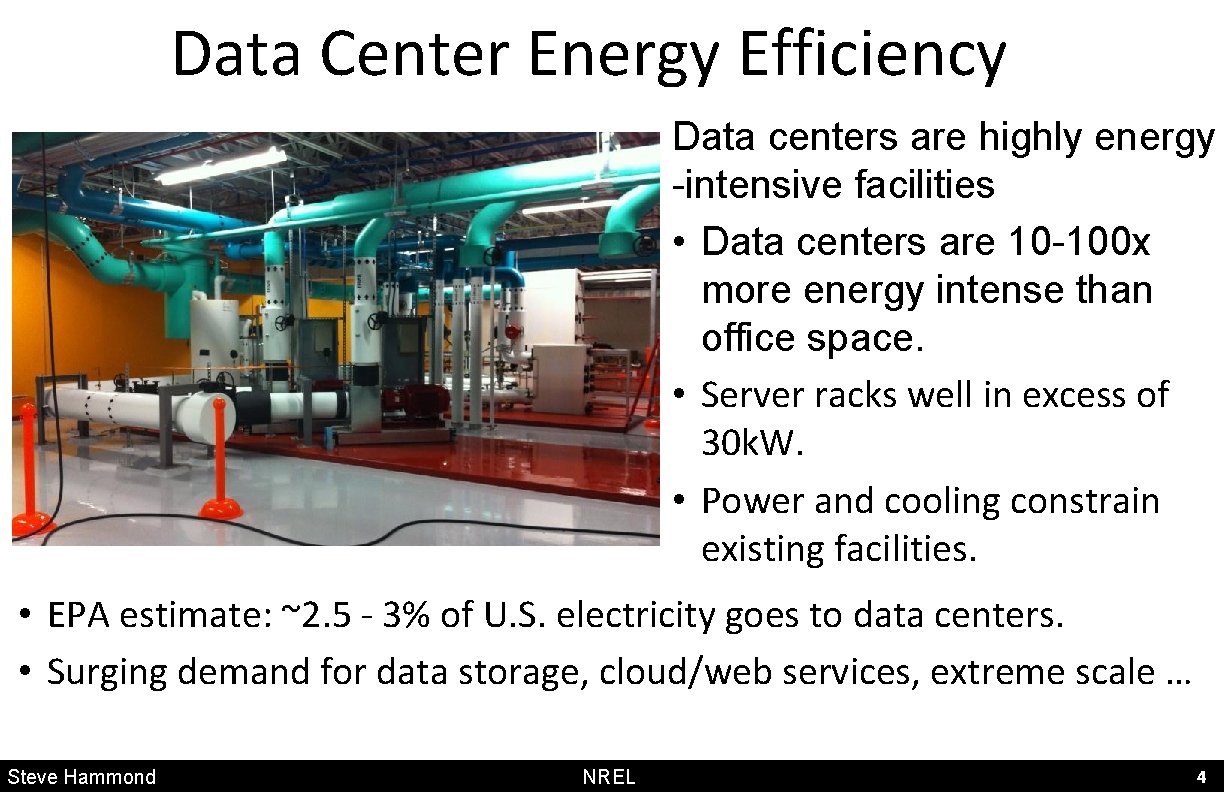

Data Center Energy Efficiency

Data centers are highly energy-intensive facilities:

- Data centers are 10-100x more energy intense than office space.
- Server racks well in excess of 30 kW.
- Power and cooling constrain existing facilities.
- EPA estimate: ~2.5-3% of U.S. electricity goes to data centers.
- Surging demand for data storage, cloud/web services, extreme scale …

Biggest challenge is not technical

- Data center best practices are well documented.
- However, the total cost of ownership (TCO) rests on three legs:
  - Facilities: “owns” the building and infrastructure.
  - IT: “owns” the compute systems.
  - CFO: “owns” the capital investments and utility costs.
- Why should “Facilities” invest in efficient infrastructure if the “CFO” pays the utility bills and reaps the benefit?
- Why should “IT” buy anything different if “CFO” benefits from reduced utility costs?
- Efficiency ROI is real and all stakeholders must benefit for it to work.
- Thus, organizational alignment is key.

Computing Integration Trends

- More cores, die-stacking: memory close to xPU.
- Fabric integration, greater package connectivity.
- Advanced switches with higher radix and higher speeds.
- Closer integration of compute and switch.
- Silicon photonics.
- Low cost, outstanding performance, but thermal issues still exist.
- All this drives continued increases in power density (heat).
  - Power: 400 Vac 3-phase, 480 Vac 3-phase.
  - Density: raised floor structural challenges.
  - Cooling: liquid cooling for 50-100+ kW racks!

Slide courtesy Mike Patterson, Intel

Energy Efficient Data Centers

- Choices regarding power, packaging, cooling, and energy recovery in data centers drive TCO.
- Why should we care?
  - Carbon footprint.
  - Water usage.
  - Limited utility power.
  - Mega$ per MW year.
  - Cost: OpEx ~ IT CapEx!
- Space premium: ten 100 kW racks take much, much less space than the equivalent fifty 20 kW air-cooled racks.

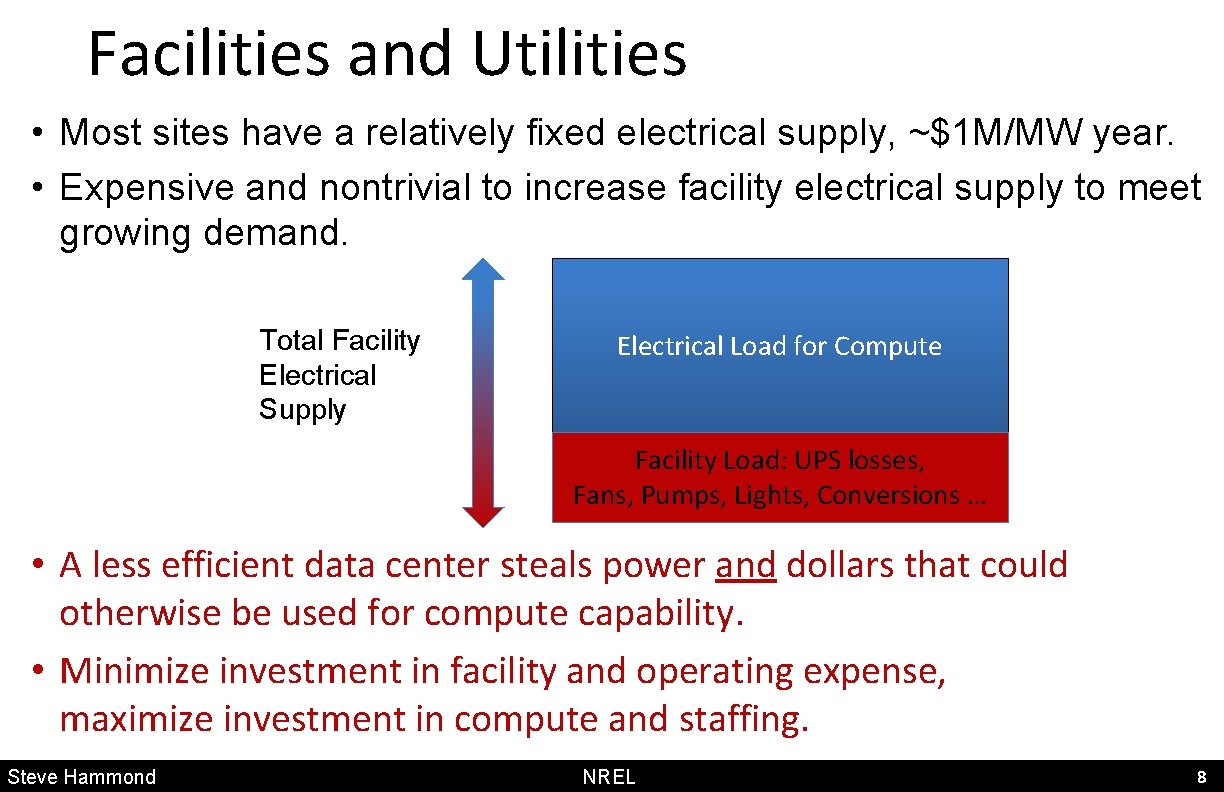

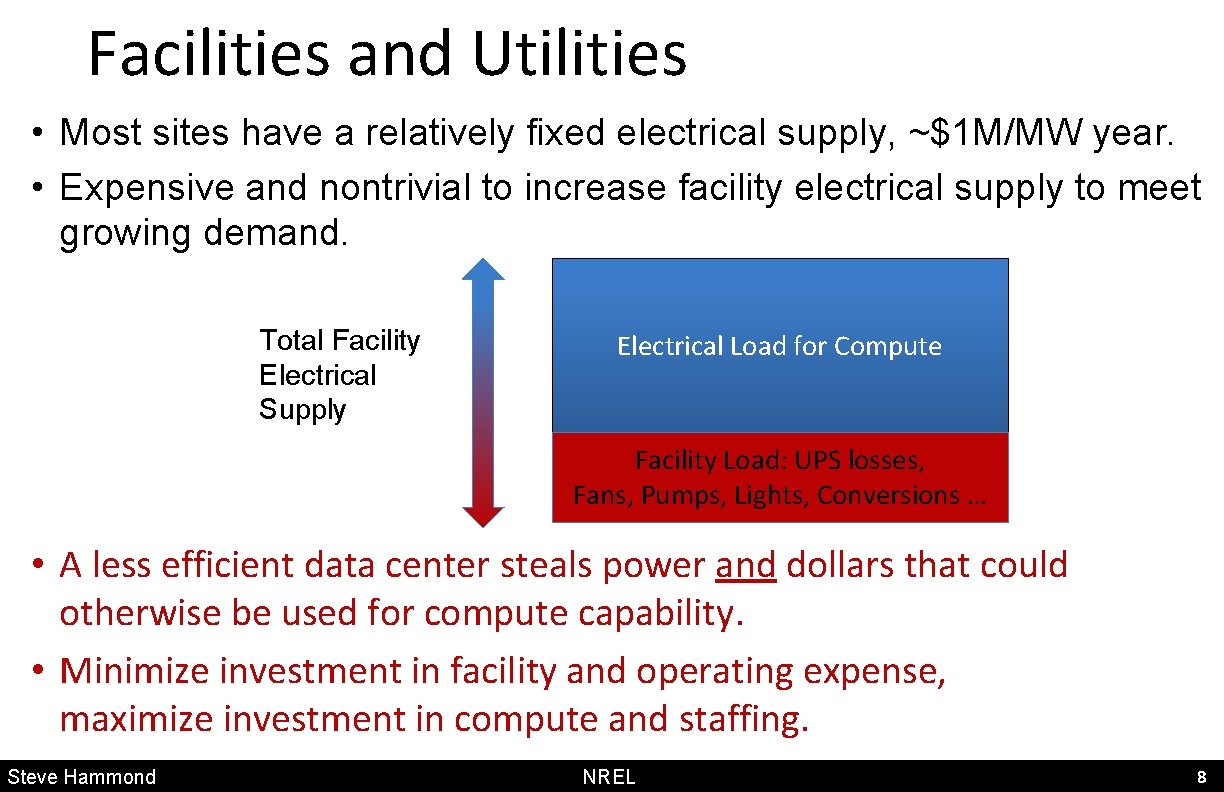

Facilities and Utilities

- Most sites have a relatively fixed electrical supply, ~$1M/MW year.
- Expensive and nontrivial to increase facility electrical supply to meet growing demand.
- A less efficient data center steals power and dollars that could otherwise be used for compute capability.
- Minimize investment in facility and operating expense, maximize investment in compute and staffing.

[Diagram: the total facility electrical supply is split between the electrical load for compute and the facility load (UPS losses, fans, pumps, lights, conversions …)]
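To make the "steals power" point concrete, the sketch below splits a fixed electrical supply into compute load and facility overhead using PUE (total facility power divided by IT power), the metric the deck uses on later slides. The function names are hypothetical, and the 10 MW supply and the two PUE values are illustrative inputs chosen to match figures used elsewhere in the talk.

```python
def it_power_mw(total_supply_mw, pue):
    """Power left for compute when the whole supply is used at a given PUE."""
    if pue < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    return total_supply_mw / pue


def facility_overhead_mw(total_supply_mw, pue):
    """Power consumed by UPS losses, fans, pumps, lights, conversions, etc."""
    return total_supply_mw - it_power_mw(total_supply_mw, pue)


if __name__ == "__main__":
    supply_mw = 10.0  # assumed fixed site supply
    for pue in (1.3, 1.06):
        print(pue, round(it_power_mw(supply_mw, pue), 2),
              round(facility_overhead_mw(supply_mw, pue), 2))
```

Under these assumptions, the lower-PUE facility leaves roughly 1.7 MW more of the same supply available for compute.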

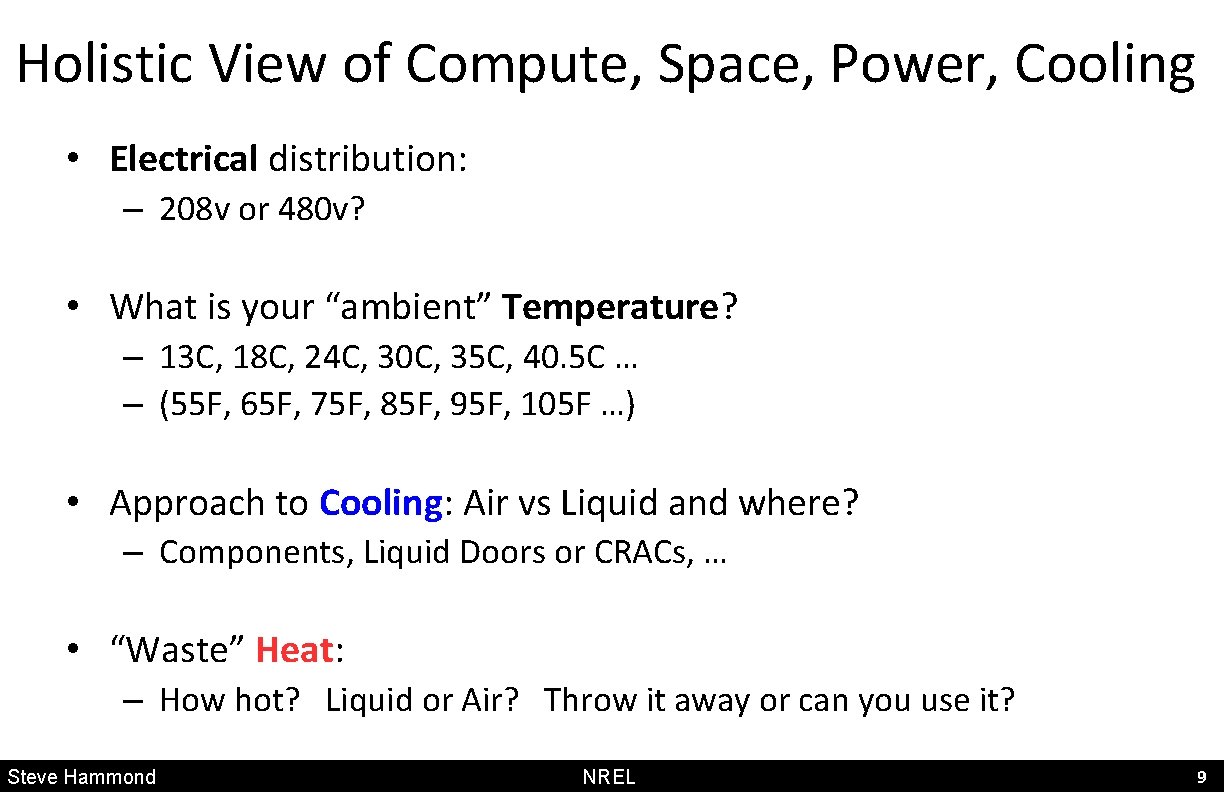

Holistic View of Compute, Space, Power, Cooling

- Electrical distribution:
  - 208 V or 480 V?
- What is your “ambient” temperature?
  - 13 °C, 18 °C, 24 °C, 30 °C, 35 °C, 40.5 °C …
  - (55 °F, 65 °F, 75 °F, 85 °F, 95 °F, 105 °F …)
- Approach to cooling: air vs. liquid, and where?
  - Components, liquid doors or CRACs, …
- “Waste” heat:
  - How hot? Liquid or air? Throw it away or can you use it?

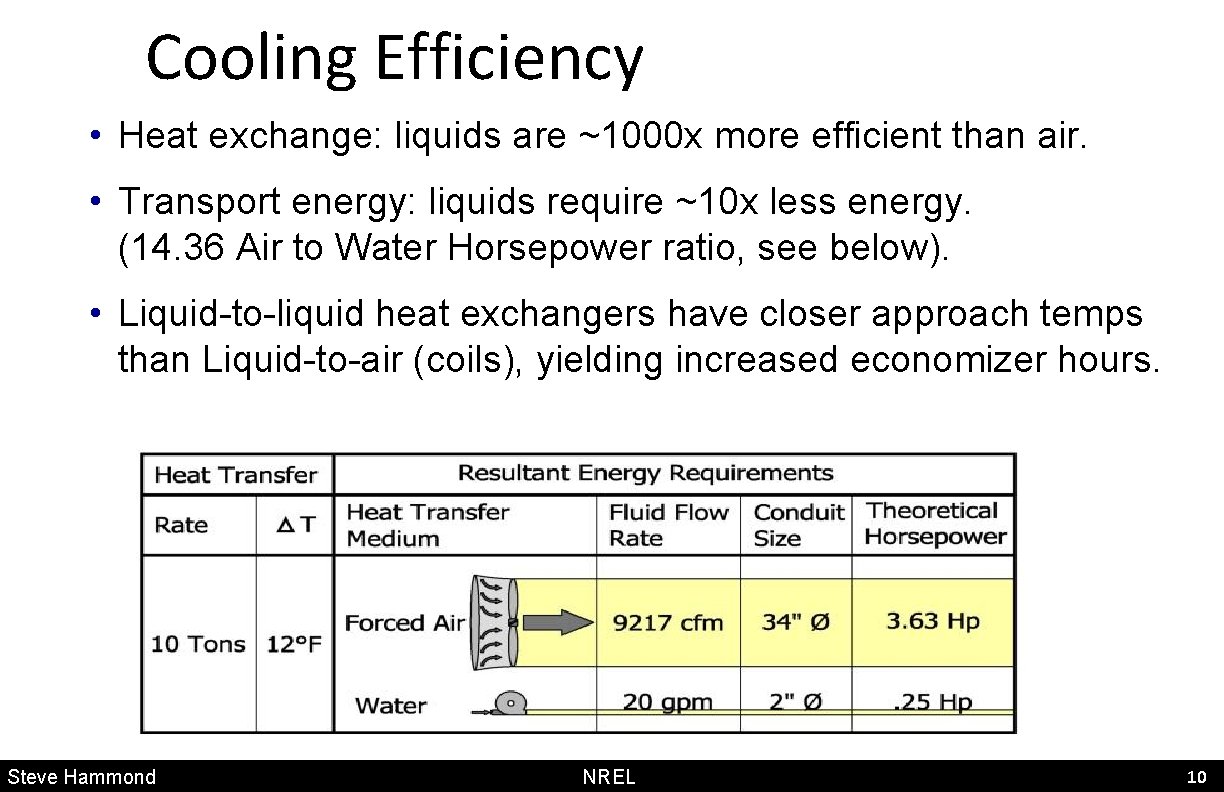

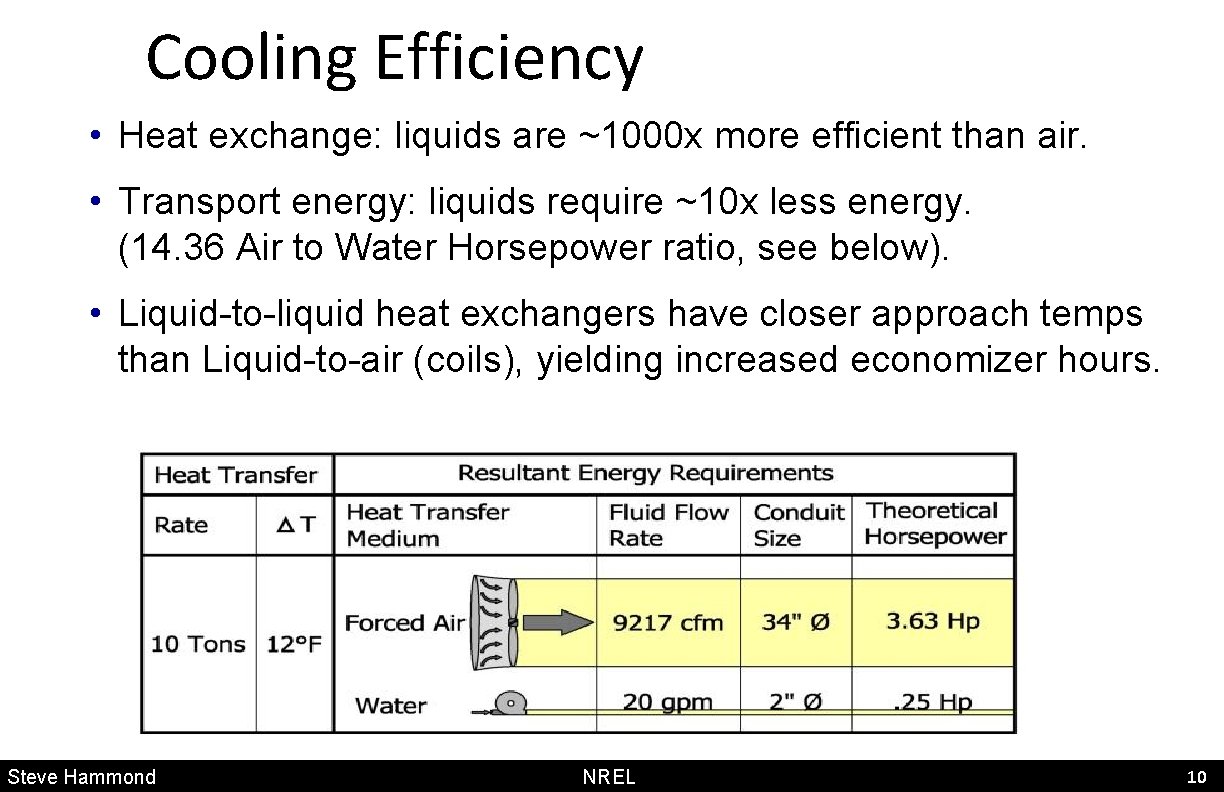

Cooling Efficiency

- Heat exchange: liquids are ~1000x more efficient than air.
- Transport energy: liquids require ~10x less energy (14.36 air-to-water horsepower ratio, see below).
- Liquid-to-liquid heat exchangers have closer approach temperatures than liquid-to-air (coils), yielding increased economizer hours.

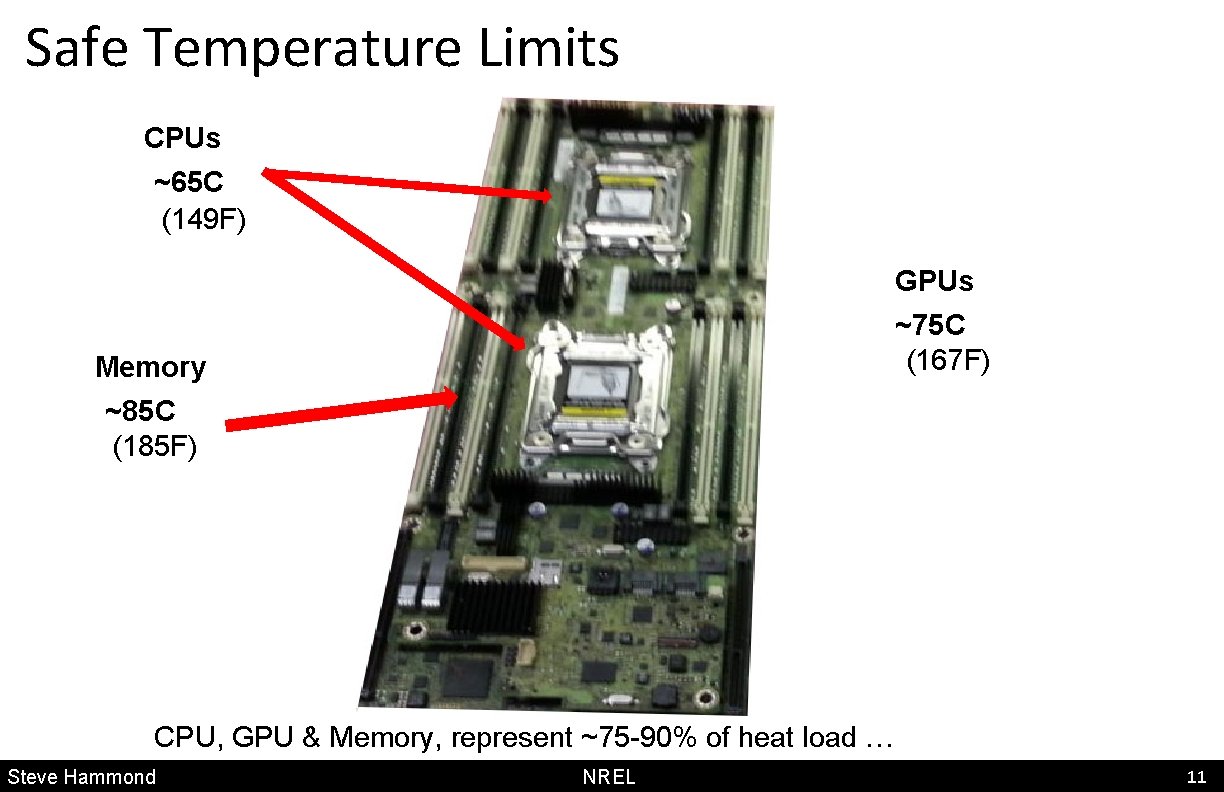

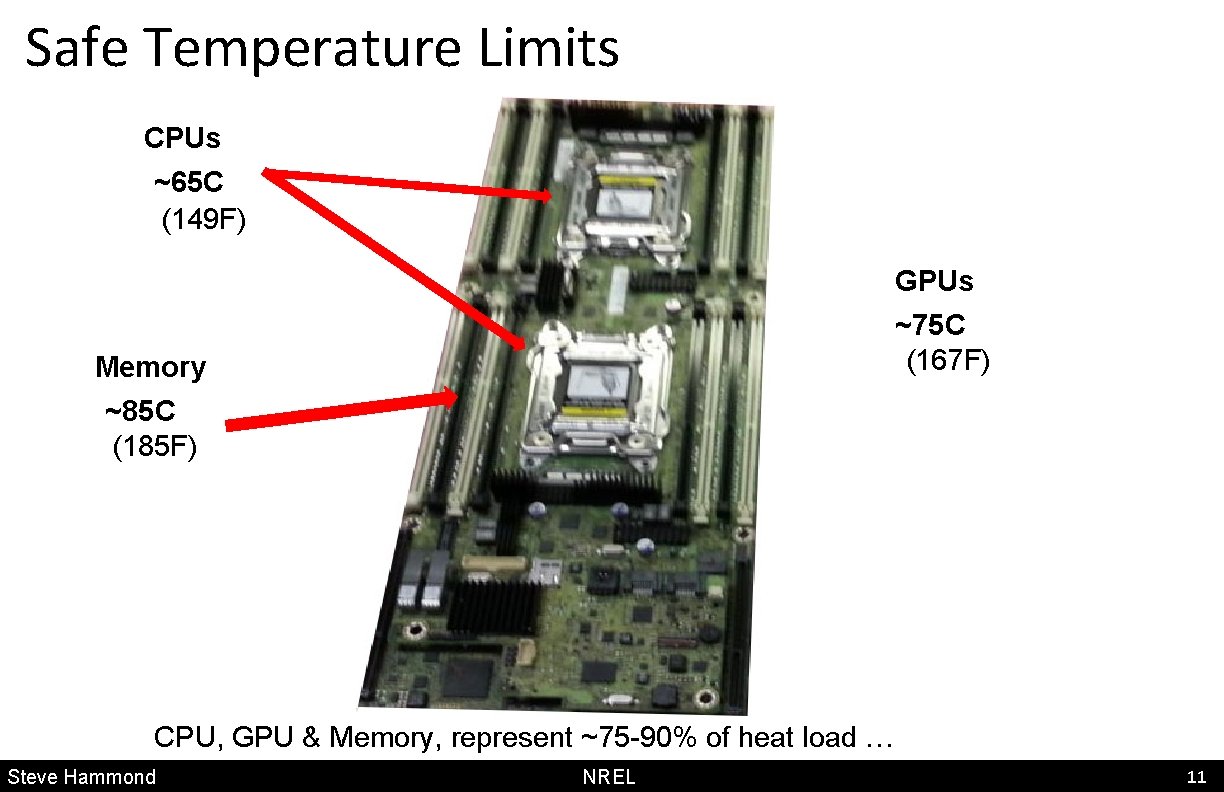

Safe Temperature Limits

- CPUs: ~65 °C (149 °F)
- GPUs: ~75 °C (167 °F)
- Memory: ~85 °C (185 °F)

CPU, GPU, and memory represent ~75-90% of heat load …

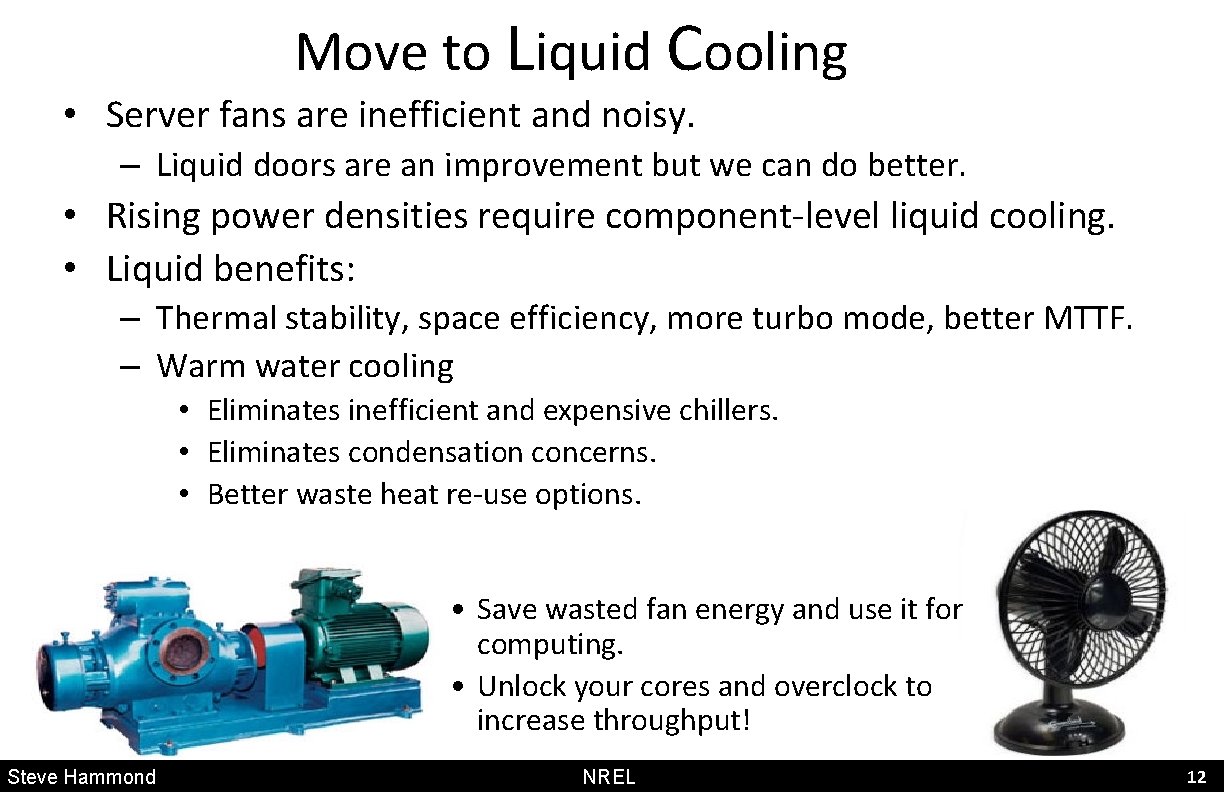

Move to Liquid Cooling

- Server fans are inefficient and noisy.
  - Liquid doors are an improvement but we can do better.
- Rising power densities require component-level liquid cooling.
- Liquid benefits:
  - Thermal stability, space efficiency, more turbo mode, better MTTF.
  - Warm water cooling:
    - Eliminates inefficient and expensive chillers.
    - Eliminates condensation concerns.
    - Better waste heat re-use options.
- Save wasted fan energy and use it for computing.
- Unlock your cores and overclock to increase throughput!

Liquid Cooling – New Considerations

- Air cooling:
  - Humidity, condensation, fan failures.
  - Cable blocks and grated floor tiles.
  - Mixing, hot spots, “top of rack” issues.
- Liquid cooling:
  - pH and bacteria, dissolved solids.
  - Type of pipes (black pipe, copper, stainless).
  - Corrosion inhibitors, etc.
- When considering liquid-cooled systems, insist that vendors adhere to the latest ASHRAE water quality spec or it could be costly.

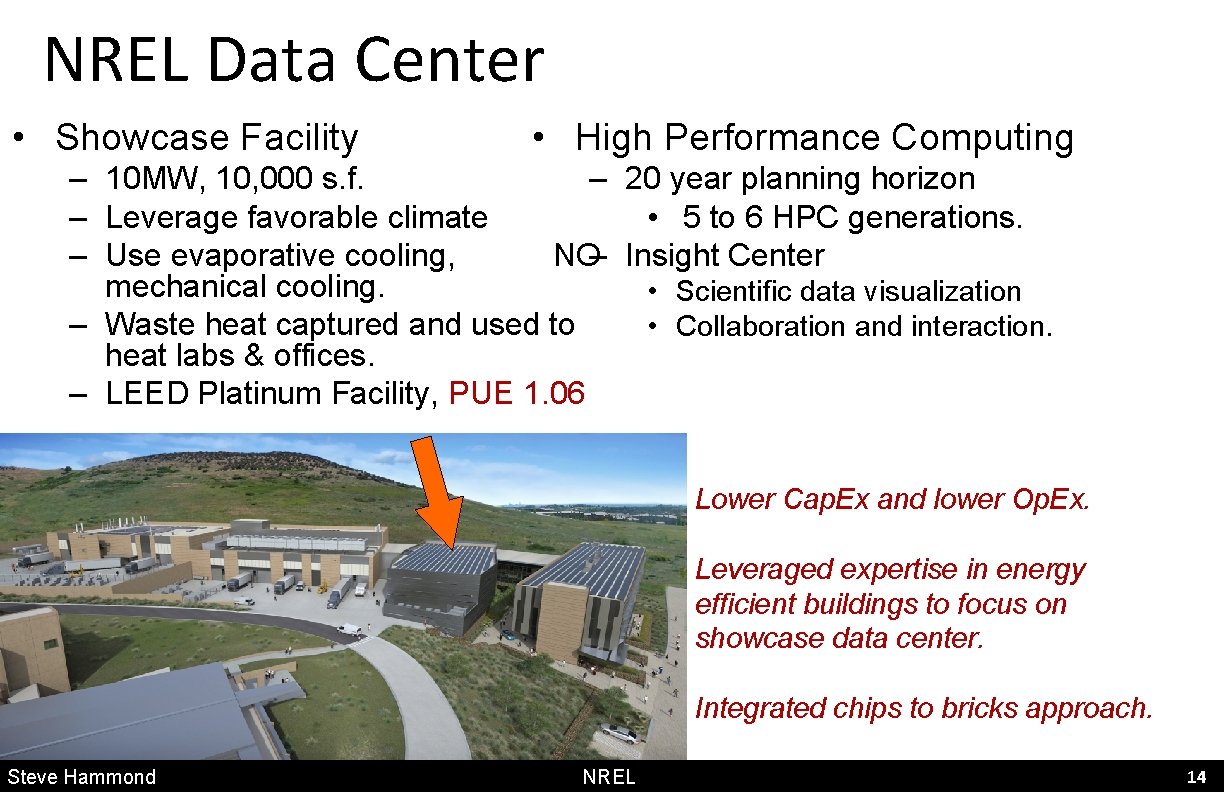

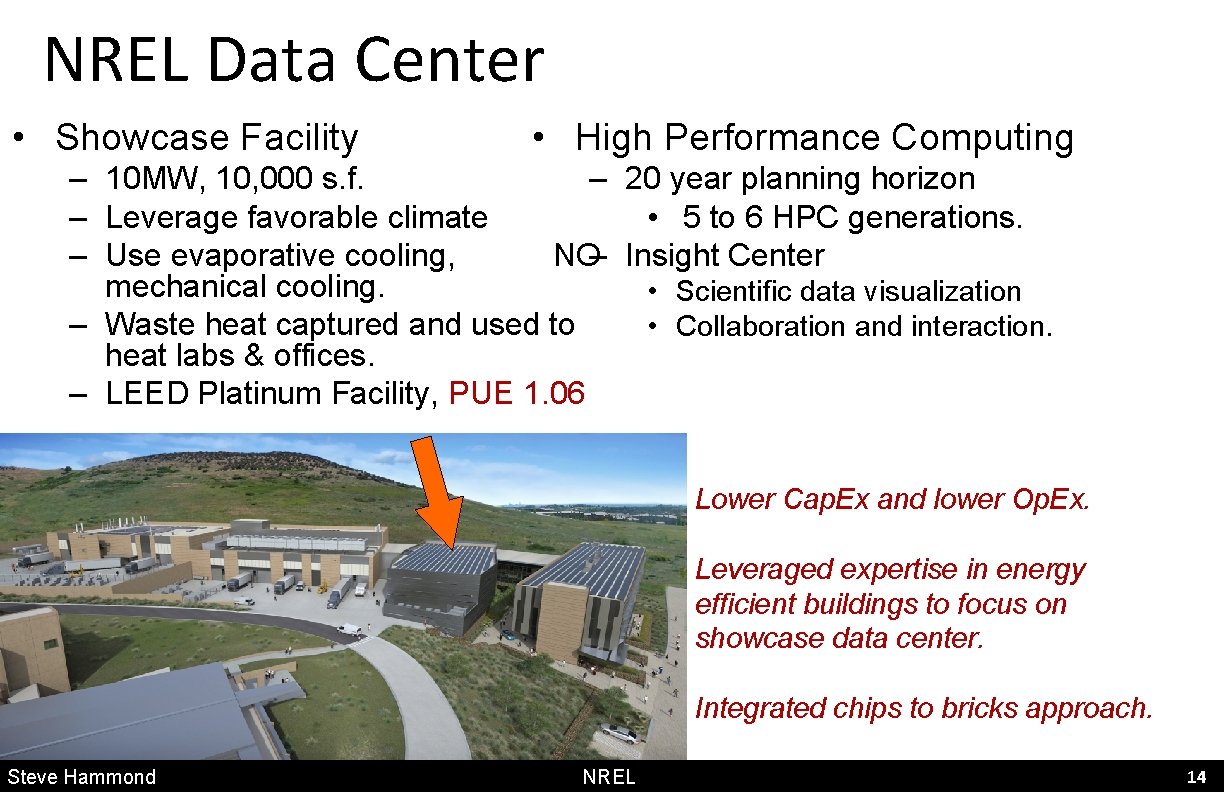

NREL Data Center

- Showcase facility
  - 10 MW, 10,000 s.f.
  - Leverage favorable climate.
  - Use evaporative cooling, NO mechanical cooling.
  - Waste heat captured and used to heat labs & offices.
  - LEED Platinum facility, PUE 1.06.
- High performance computing
  - 20-year planning horizon
    - 5 to 6 HPC generations.
  - Insight Center
    - Scientific data visualization.
    - Collaboration and interaction.

Lower CapEx and lower OpEx. Leveraged expertise in energy efficient buildings to focus on showcase data center. Integrated chips-to-bricks approach.

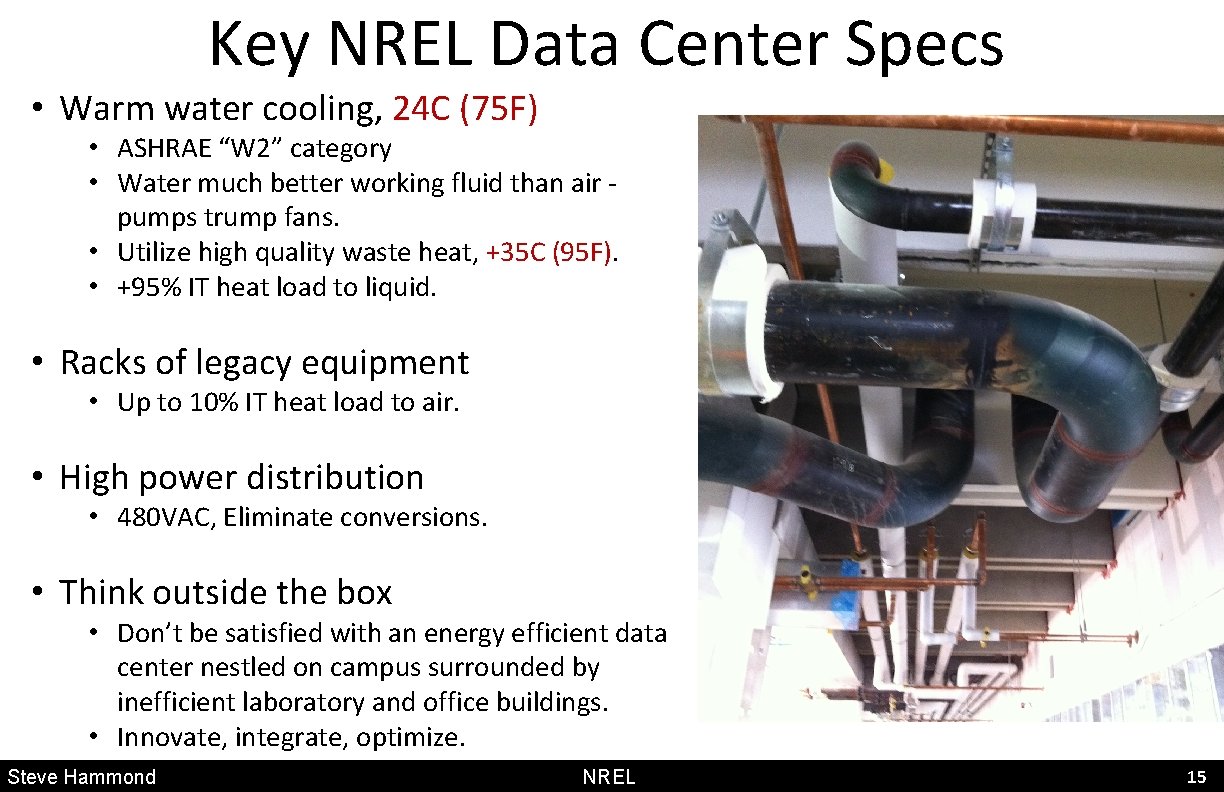

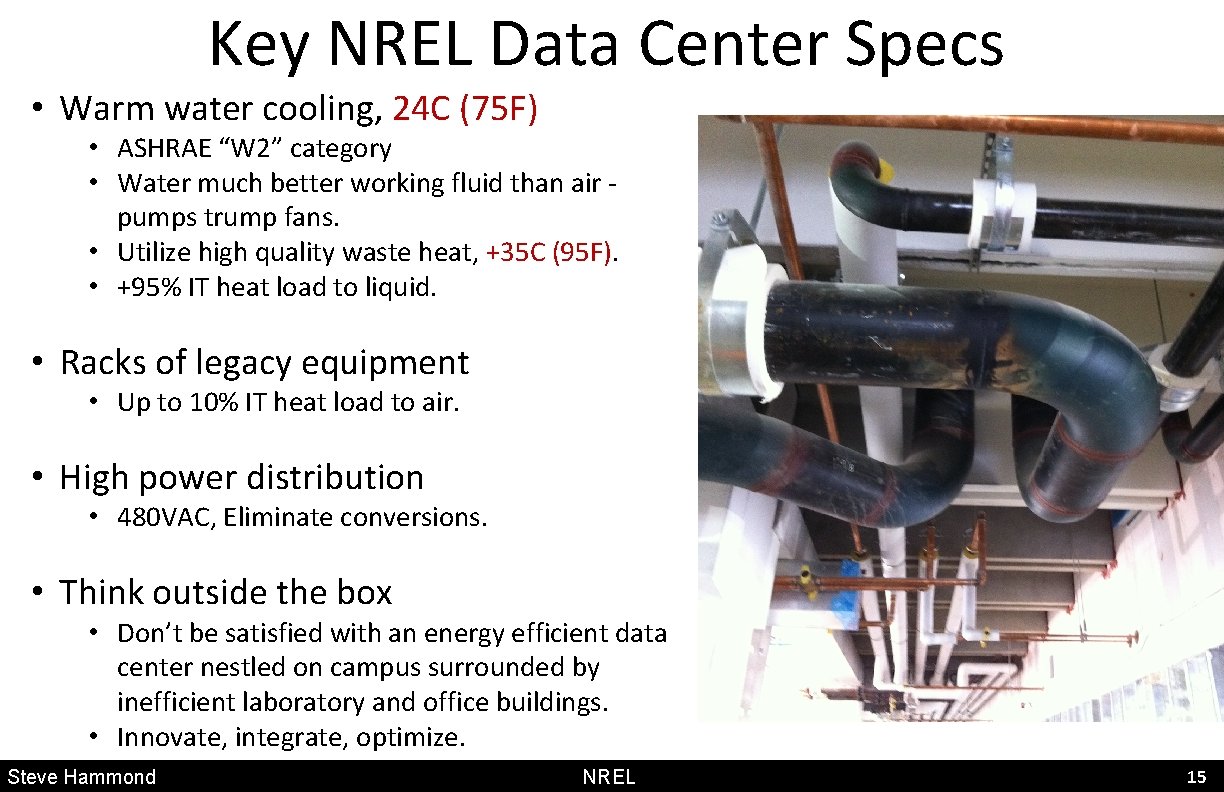

Key NREL Data Center Specs

- Warm water cooling, 24 °C (75 °F)
  - ASHRAE “W2” category.
  - Water is a much better working fluid than air: pumps trump fans.
  - Utilize high quality waste heat, +35 °C (95 °F).
  - +95% IT heat load to liquid.
- Racks of legacy equipment
  - Up to 10% IT heat load to air.
- High power distribution
  - 480 VAC, eliminate conversions.
- Think outside the box
  - Don’t be satisfied with an energy efficient data center nestled on campus surrounded by inefficient laboratory and office buildings.
  - Innovate, integrate, optimize.

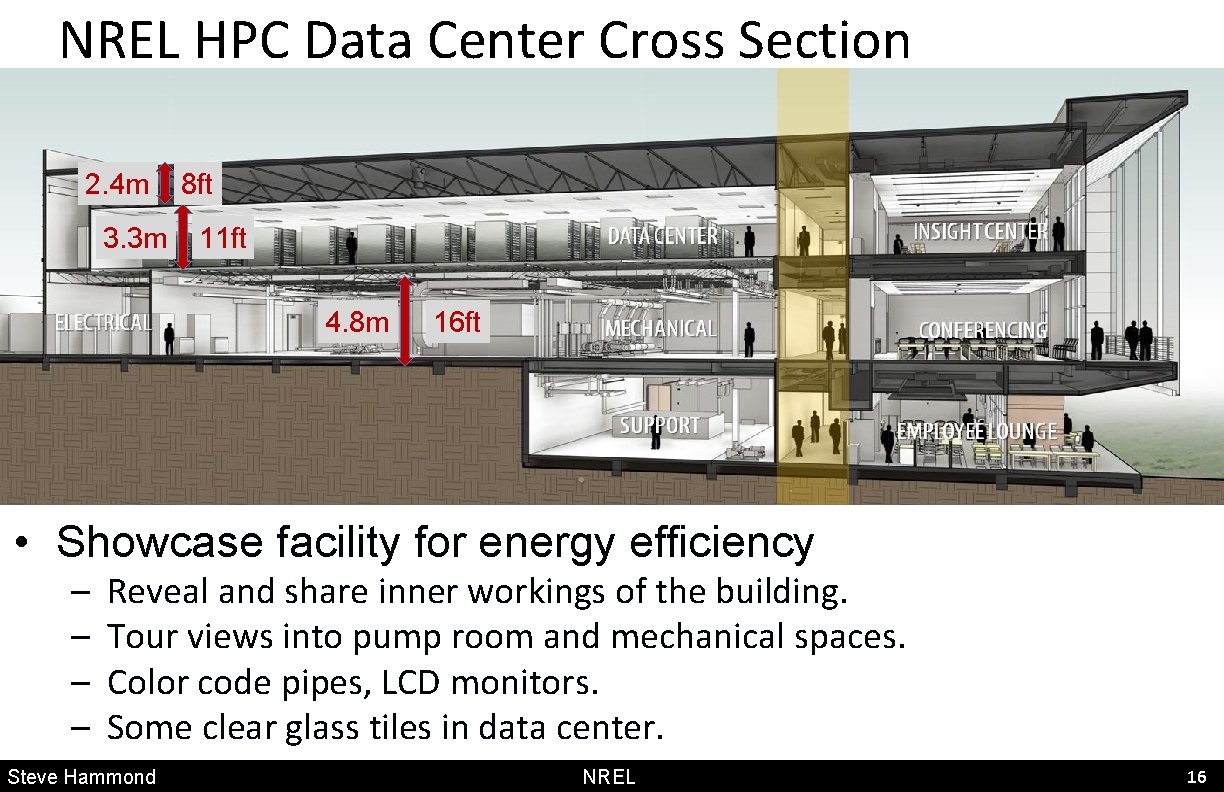

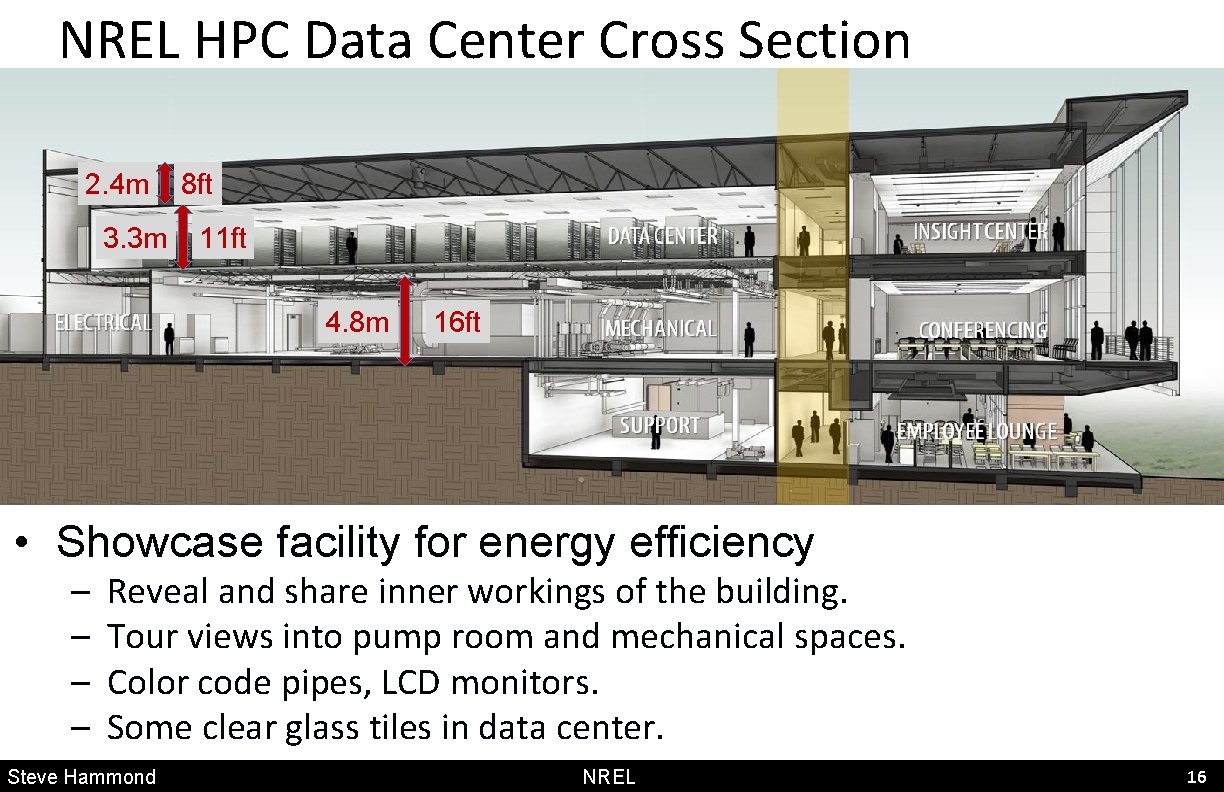

NREL HPC Data Center Cross Section

[Cross-section figure; dimension labels: 2.4 m (8 ft), 3.3 m (11 ft), 4.8 m (16 ft)]

- Showcase facility for energy efficiency
  - Reveal and share inner workings of the building.
  - Tour views into pump room and mechanical spaces.
  - Color code pipes, LCD monitors.
  - Some clear glass tiles in data center.

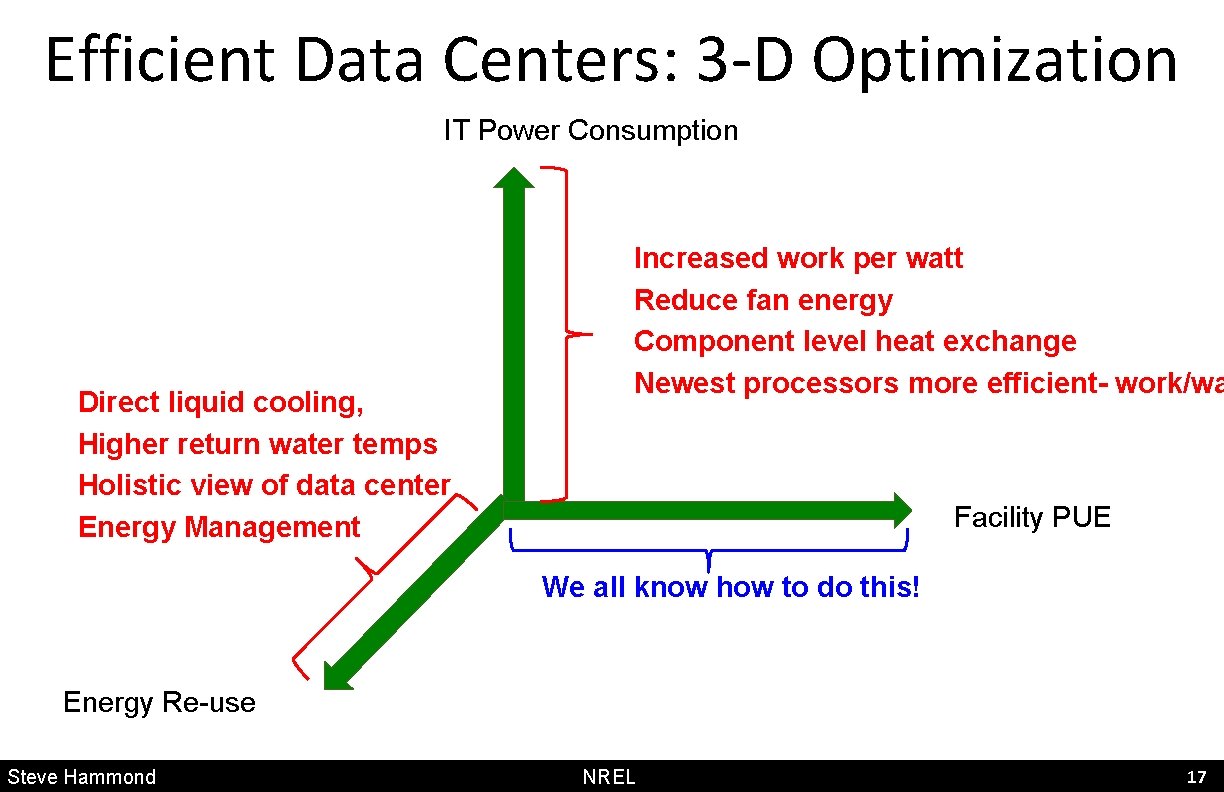

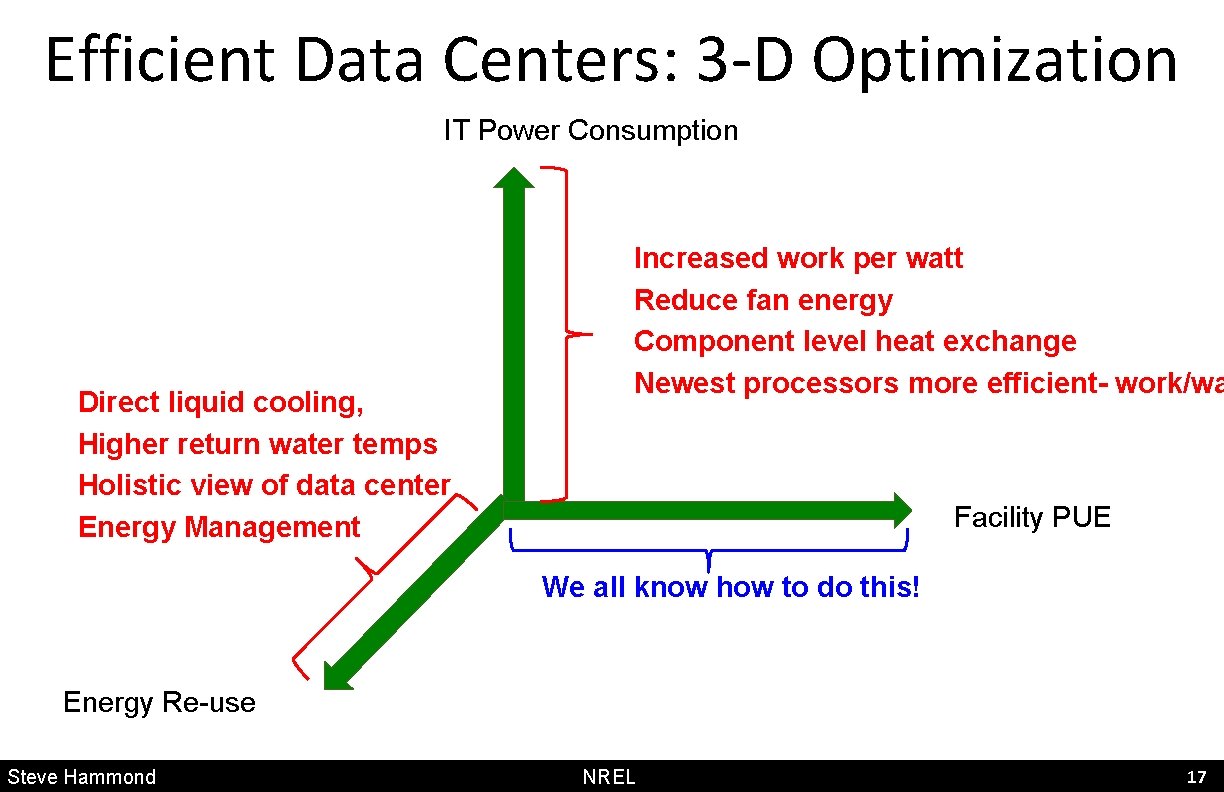

Efficient Data Centers: 3-D Optimization

[Diagram with three axes: IT Power Consumption, Facility PUE, Energy Re-use]

Labels on the diagram:

- Direct liquid cooling, higher return water temps.
- Holistic view of data center energy management.
- Increased work per watt.
- Reduce fan energy.
- Component-level heat exchange.
- Newest processors are more efficient (work/watt).
- Facility PUE: we all know how to do this!

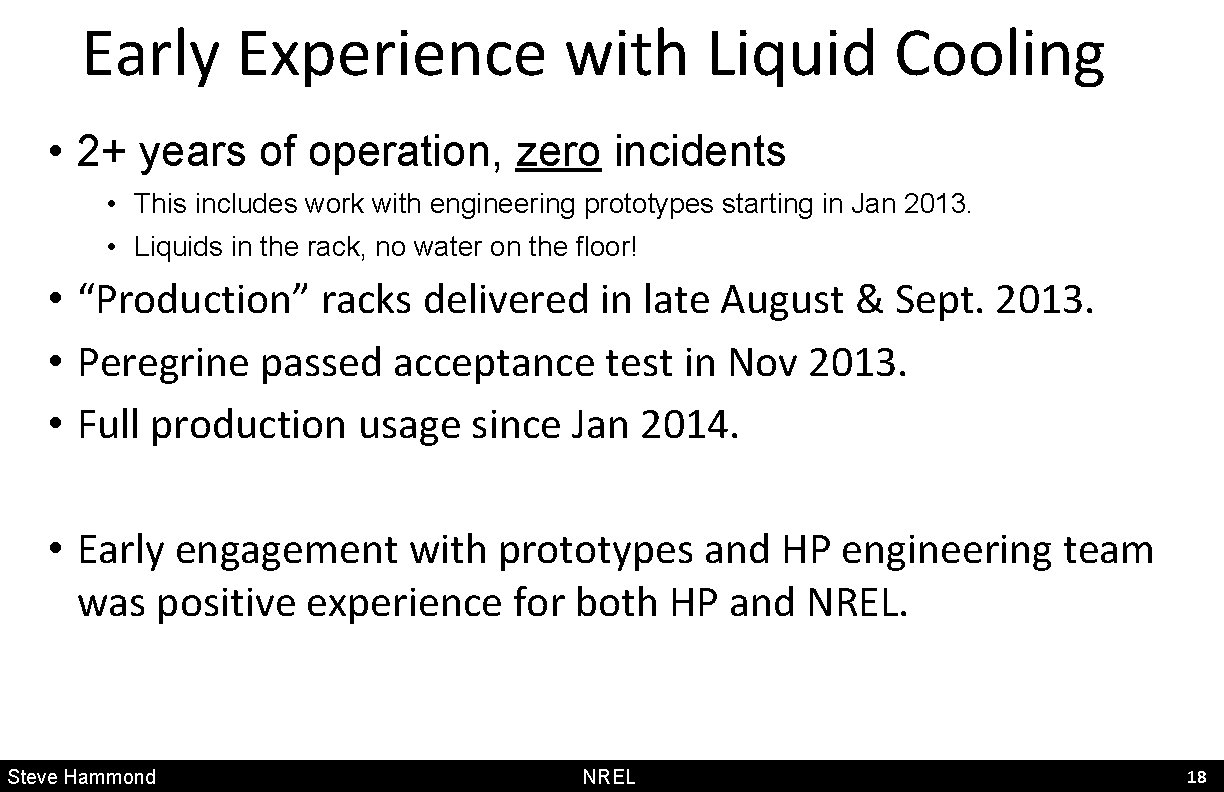

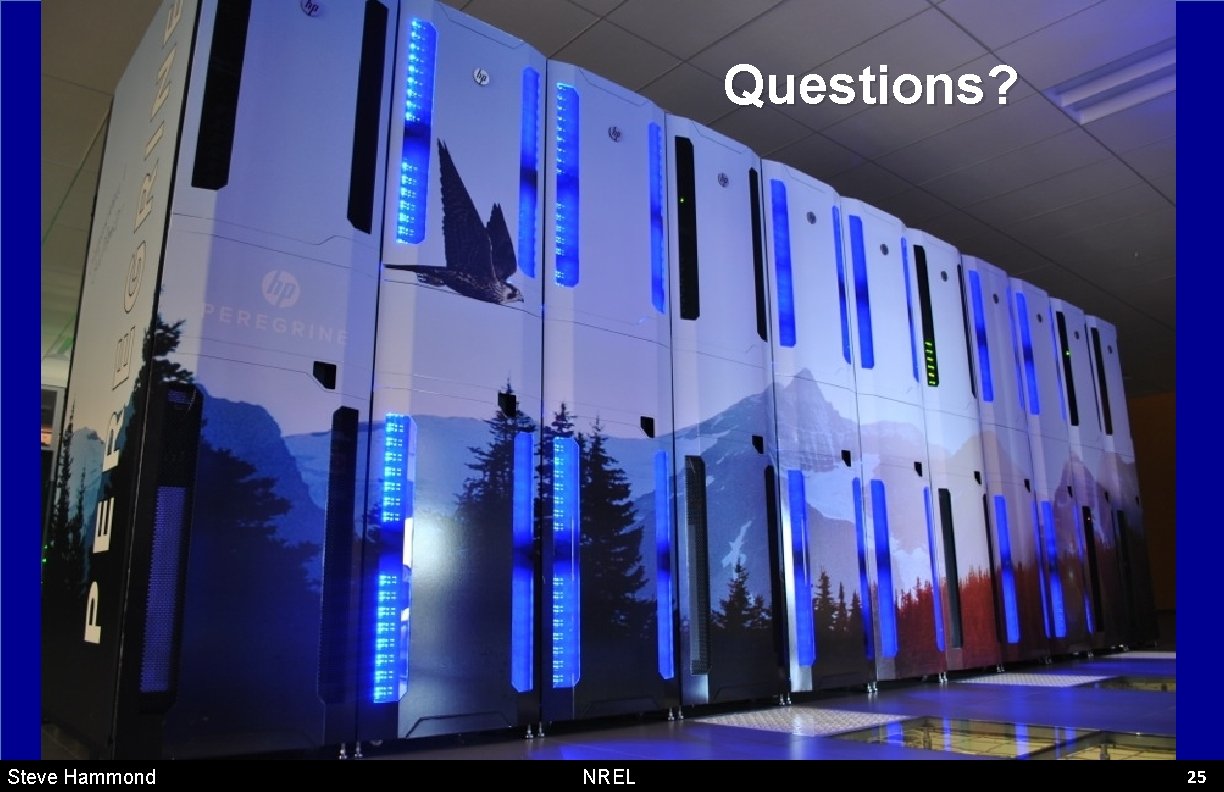

Early Experience with Liquid Cooling

- 2+ years of operation, zero incidents.
  - This includes work with engineering prototypes starting in Jan 2013.
- Liquids in the rack, no water on the floor!
- “Production” racks delivered in late August and Sept. 2013.
- Peregrine passed acceptance test in Nov 2013.
- Full production usage since Jan 2014.
- Early engagement with prototypes and the HP engineering team was a positive experience for both HP and NREL.

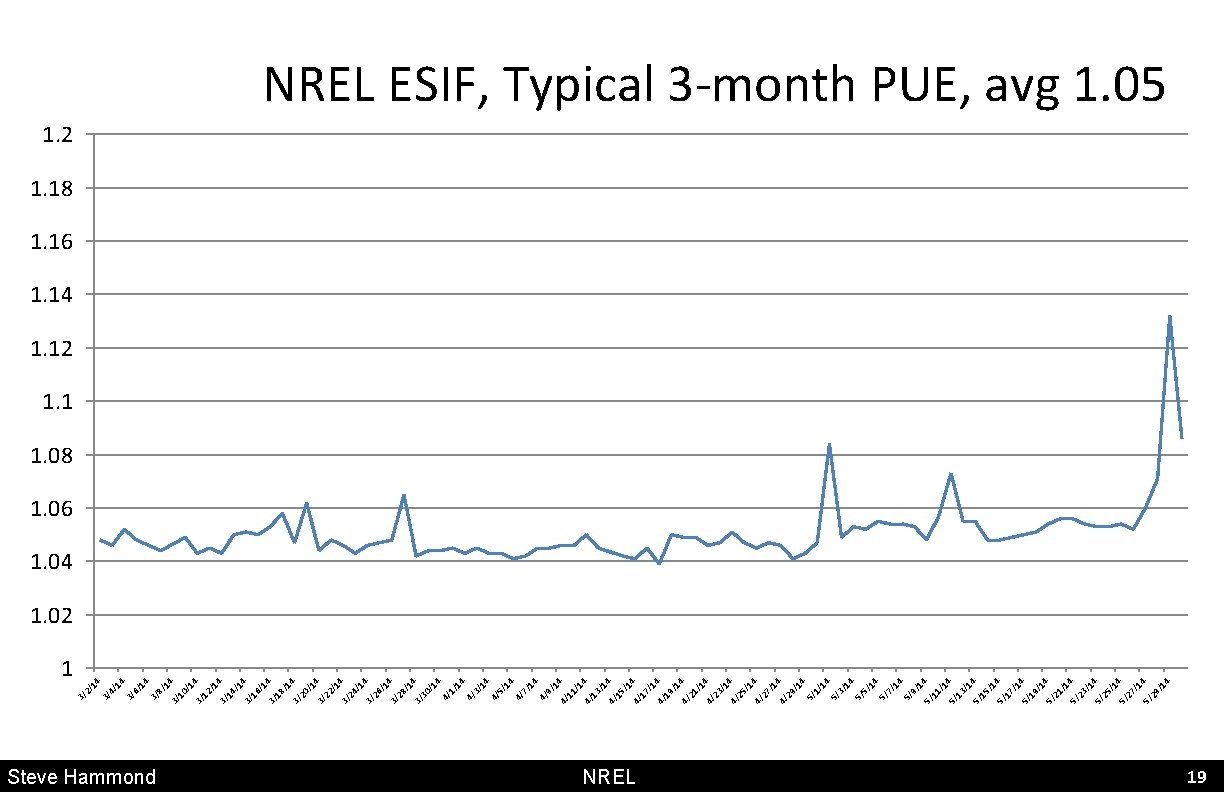

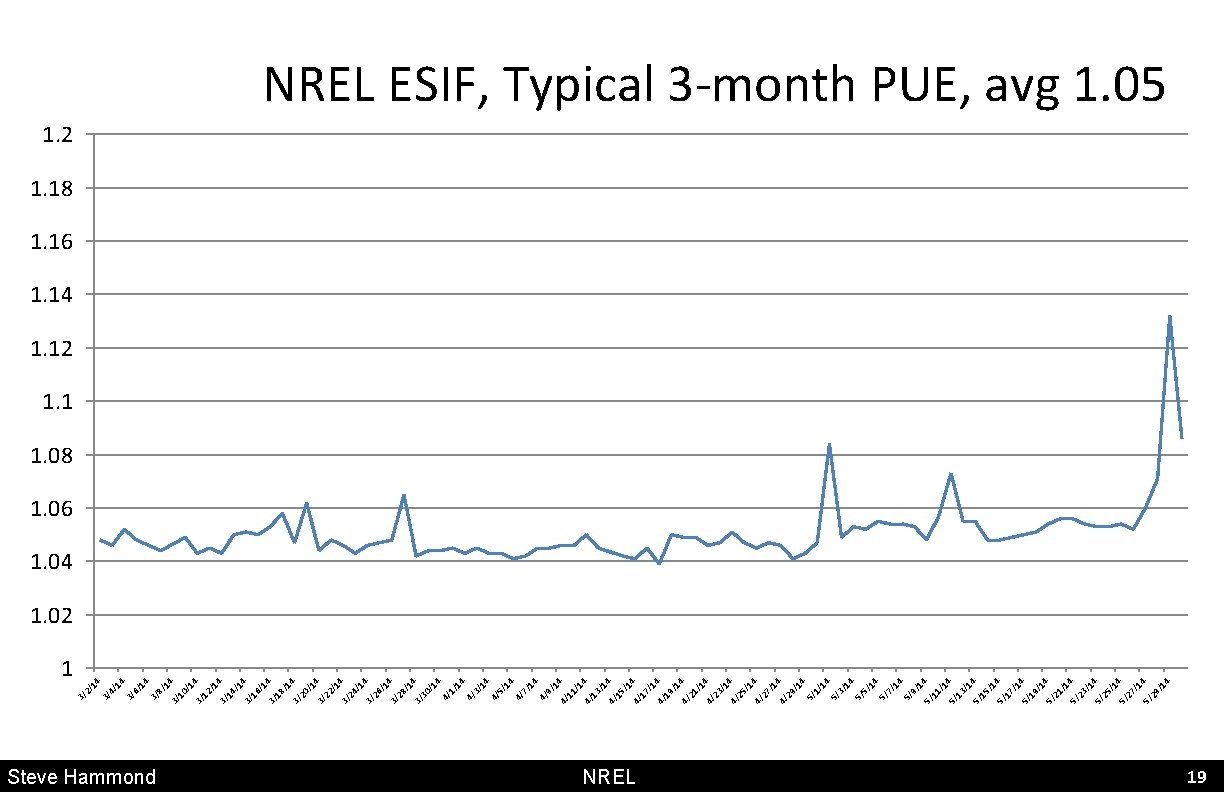

NREL ESIF, typical 3-month PUE, avg 1.05

[Chart: PUE over time; x-axis dates from early March to late May 2014, y-axis from 1.02 to 1.2]
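A period figure like the 1.05 average can be derived from metered energy. The sketch below assumes interval readings of total facility energy and IT energy are available and computes an energy-weighted PUE over the period; the slide does not say how its average was computed, and the function name and sample readings are hypothetical.

```python
def period_pue(samples):
    """Energy-weighted PUE over a period.

    samples: iterable of (total_facility_kwh, it_kwh) pairs, one per interval.
    """
    readings = list(samples)  # materialize so the data can be summed twice
    total_kwh = sum(total for total, _ in readings)
    it_kwh = sum(it for _, it in readings)
    if it_kwh <= 0:
        raise ValueError("IT energy must be positive")
    return total_kwh / it_kwh


if __name__ == "__main__":
    # Hypothetical interval readings: (total facility kWh, IT kWh).
    readings = [(105.0, 100.0), (212.0, 200.0), (52.0, 50.0)]
    print(round(period_pue(readings), 4))
```

Summing energy before dividing weights busy intervals more heavily than a plain mean of per-interval ratios would, which is usually what a period PUE is meant to capture.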

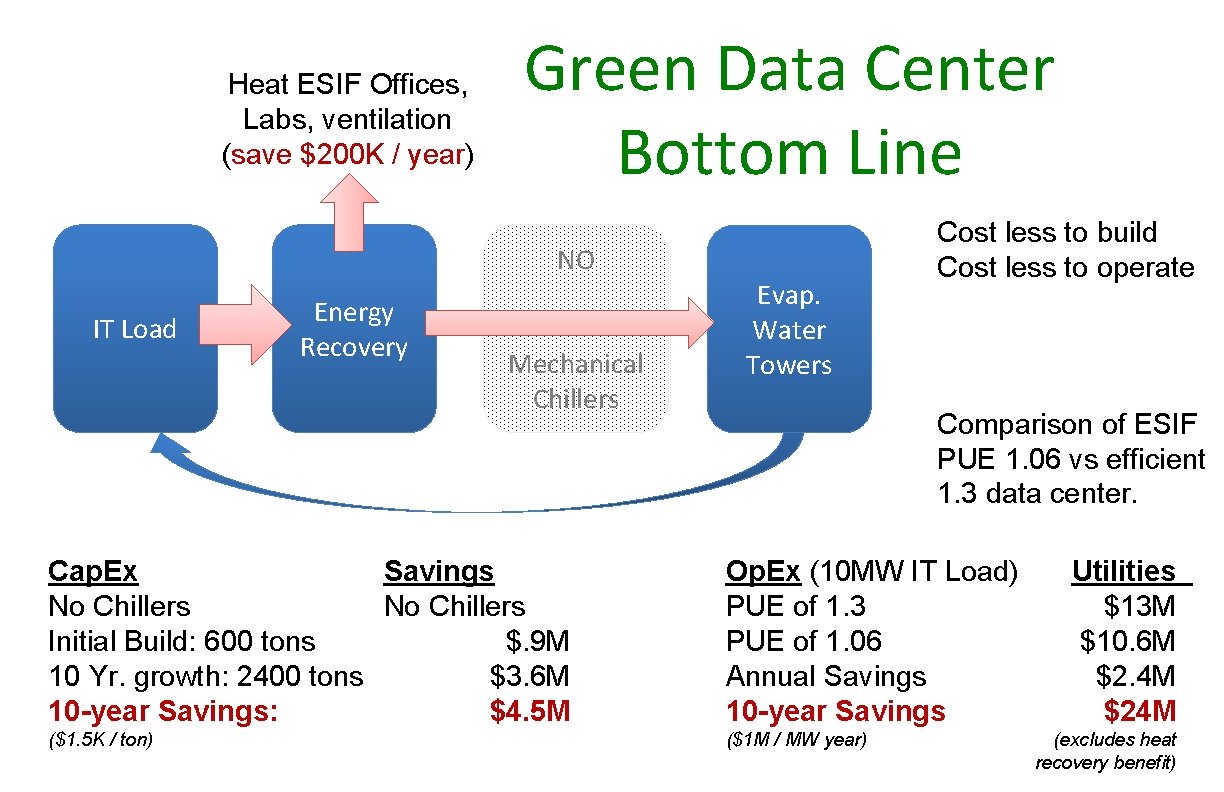

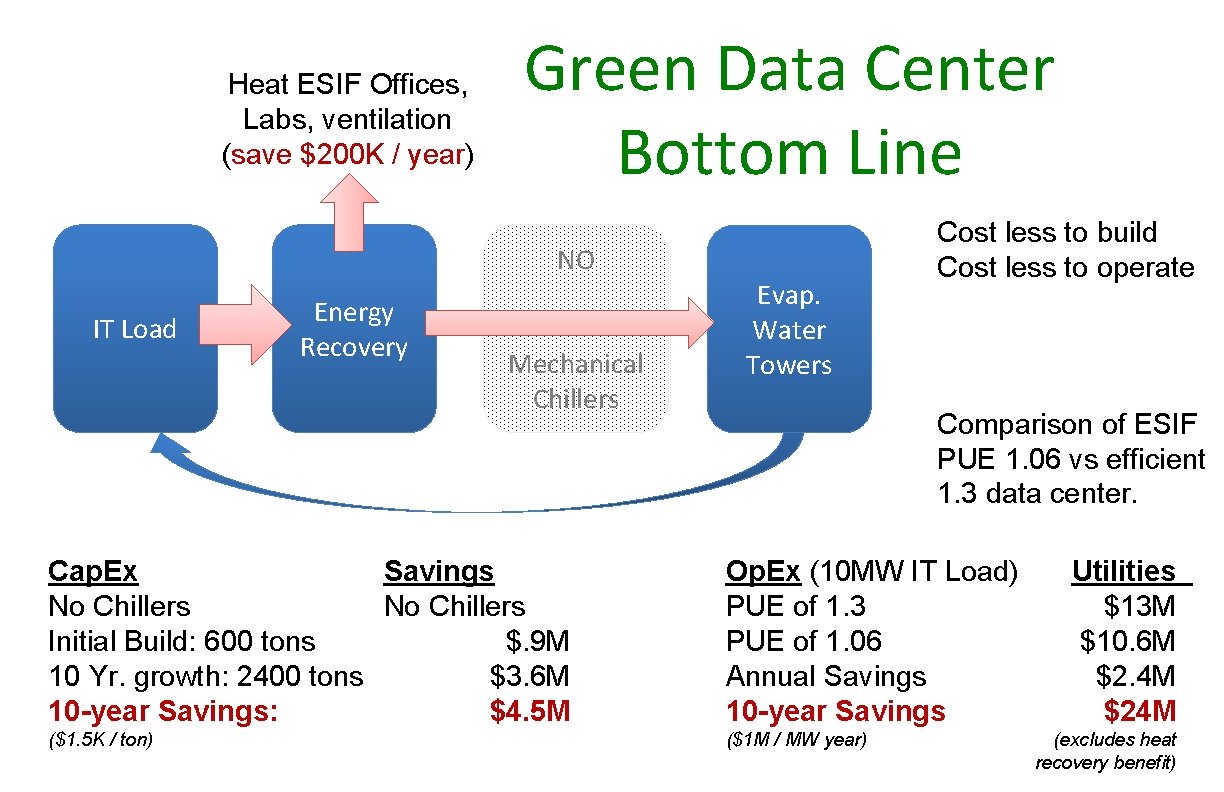

Green Data Center Bottom Line

[Diagram labels: IT Load; Energy Recovery; Heat ESIF offices, labs, ventilation (save $200K/year); NO Mechanical Chillers; Evap. Water Towers]

- Cost less to build
- Cost less to operate

Comparison of ESIF PUE 1.06 vs. efficient 1.3 data center.

CapEx savings, no chillers ($1.5K/ton):

| | Chiller capacity | Savings |
|---|---|---|
| Initial build | 600 tons | $0.9M |
| 10-yr growth | 2400 tons | $3.6M |
| 10-year savings | | $4.5M |

OpEx (10 MW IT load, $1M/MW year):

| | PUE of 1.3 | PUE of 1.06 | Annual savings | 10-year savings |
|---|---|---|---|---|
| Utilities | $13M | $10.6M | $2.4M | $24M |

(excludes heat recovery benefit)
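The savings figures on this slide follow from simple arithmetic, which the sketch below reproduces. The unit costs ($1.5K per ton of chiller capacity, $1M per MW-year), the 10 MW IT load, the tonnages, and the two PUE values are taken from the slide; the function names are hypothetical.

```python
def annual_utility_cost_musd(it_load_mw, pue, musd_per_mw_year=1.0):
    """Annual utility bill in $M: IT load scaled up by PUE, times the unit cost."""
    return it_load_mw * pue * musd_per_mw_year


def chiller_capex_musd(tons, kusd_per_ton=1.5):
    """Capital cost in $M of chiller capacity that a chiller-less design avoids."""
    return tons * kusd_per_ton / 1000.0


if __name__ == "__main__":
    it_load_mw = 10.0
    cost_pue_130 = annual_utility_cost_musd(it_load_mw, 1.3)
    cost_pue_106 = annual_utility_cost_musd(it_load_mw, 1.06)
    annual_savings = cost_pue_130 - cost_pue_106
    capex_savings = chiller_capex_musd(600) + chiller_capex_musd(2400)
    print(round(cost_pue_130, 2), round(cost_pue_106, 2))
    print(round(annual_savings, 2), round(10 * annual_savings, 2))
    print(round(capex_savings, 2))
```

Like the slide, this excludes the heat recovery benefit and assumes a constant 10 MW IT load for all ten years.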

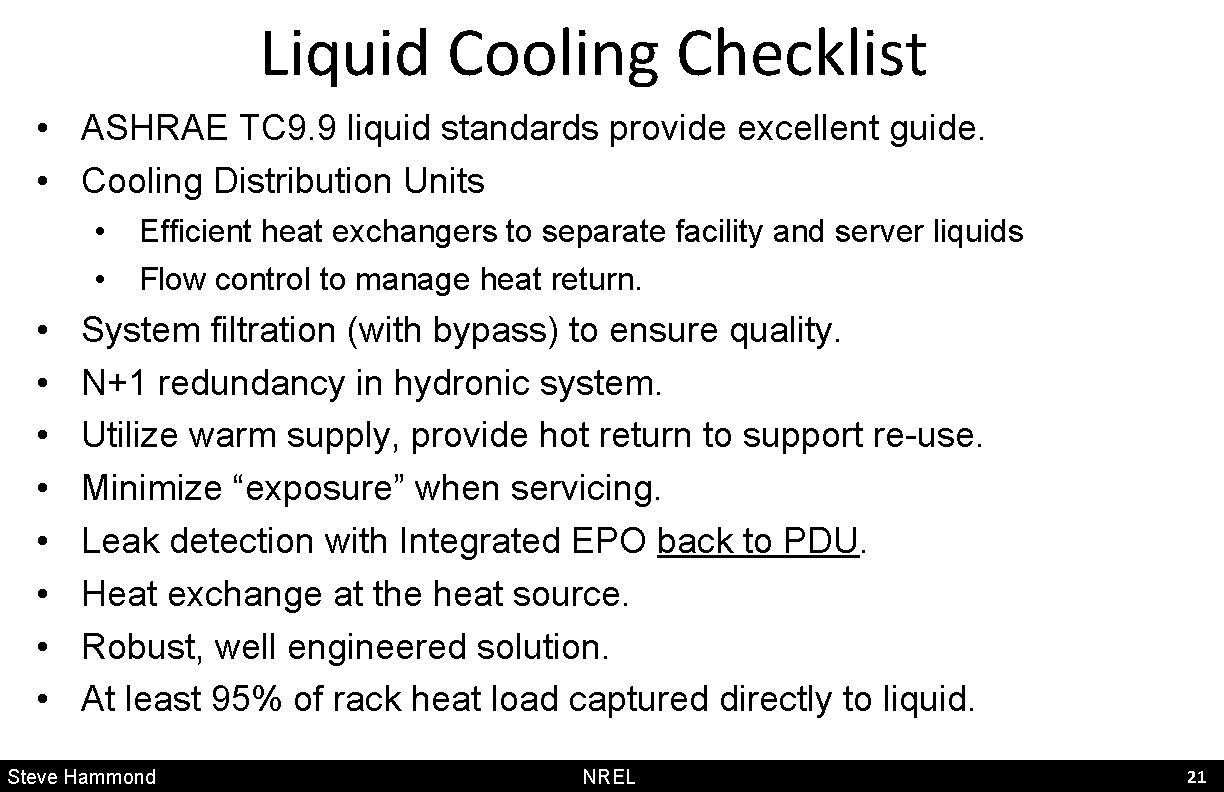

Liquid Cooling Checklist

- ASHRAE TC 9.9 liquid standards provide an excellent guide.
- Cooling Distribution Units
  - Efficient heat exchangers to separate facility and server liquids.
  - Flow control to manage heat return.
  - System filtration (with bypass) to ensure quality.
- N+1 redundancy in hydronic system.
- Utilize warm supply, provide hot return to support re-use.
- Minimize “exposure” when servicing.
- Leak detection with integrated EPO back to PDU.
- Heat exchange at the heat source.
- Robust, well engineered solution.
- At least 95% of rack heat load captured directly to liquid.

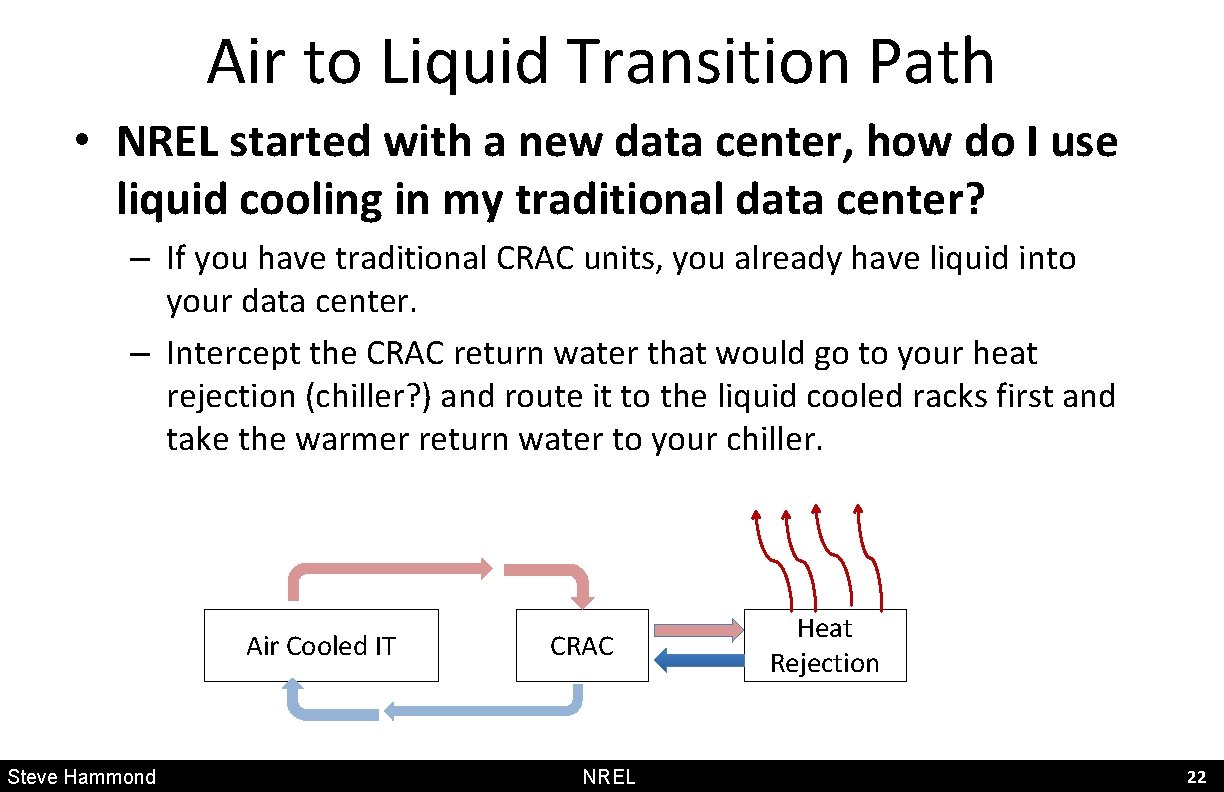

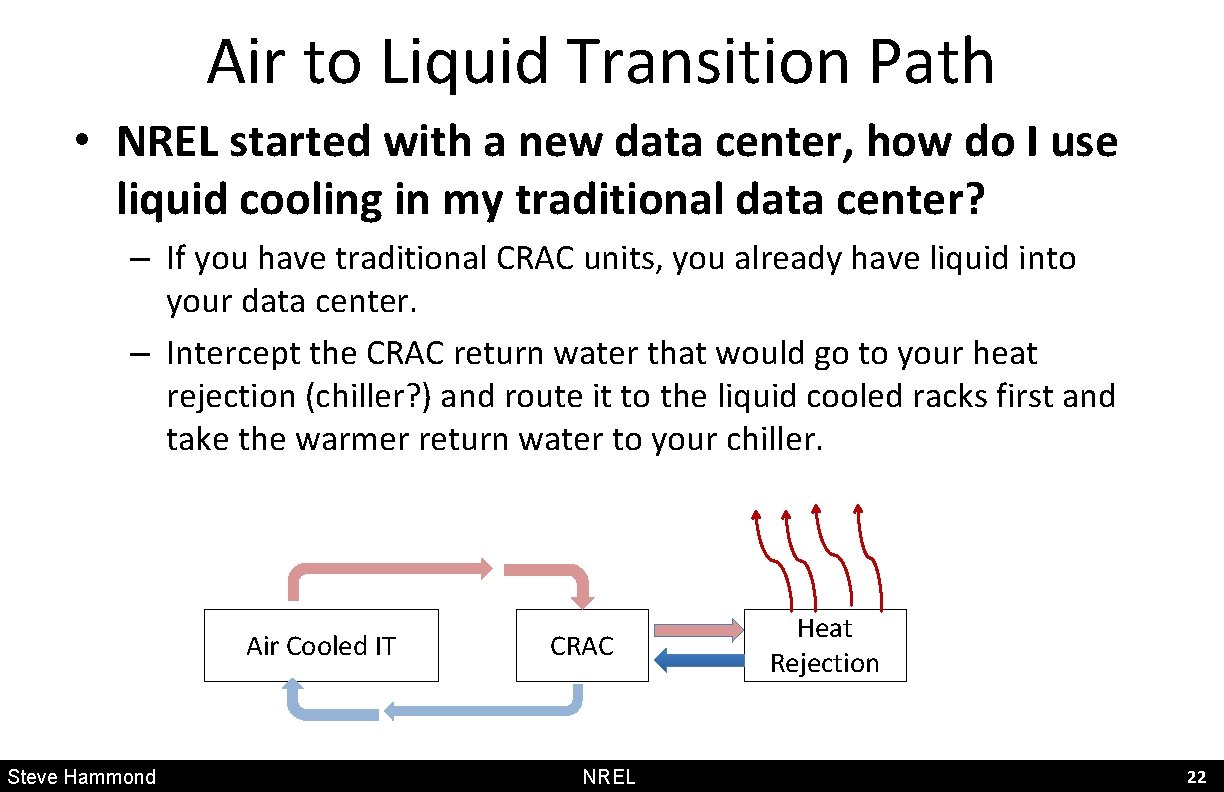


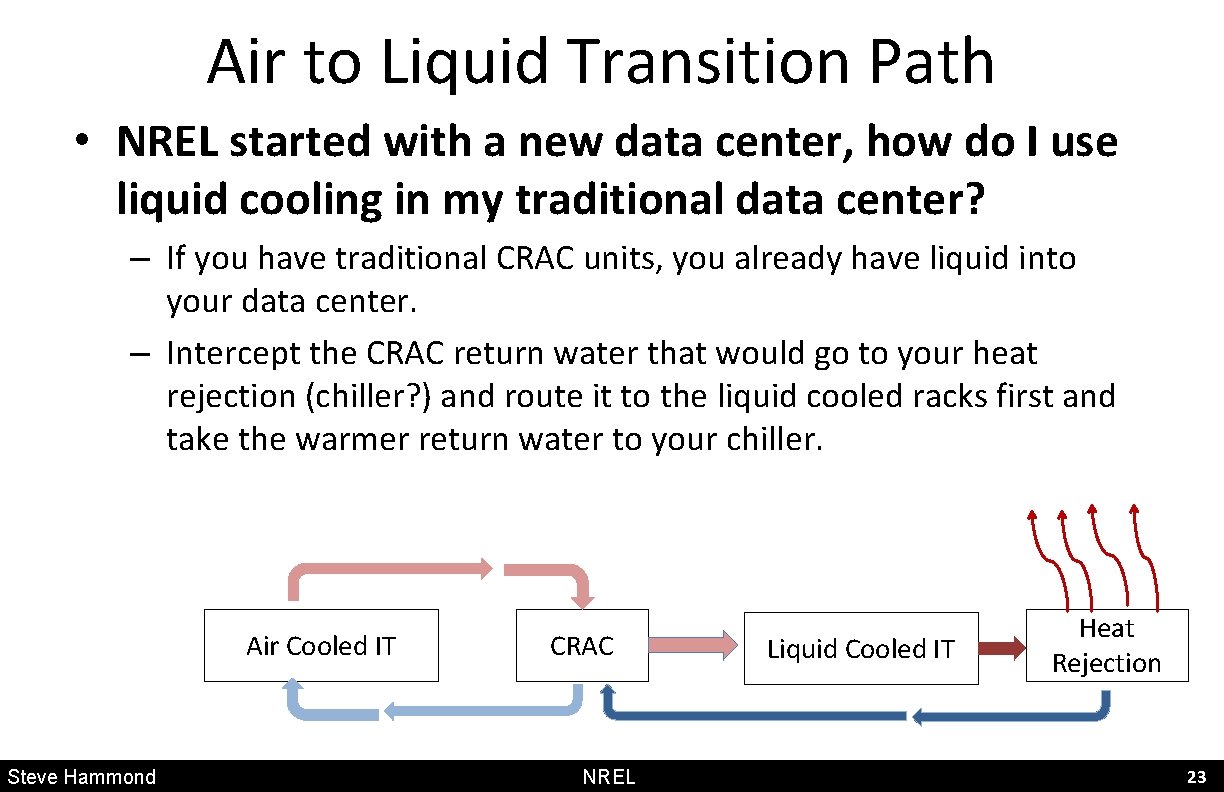

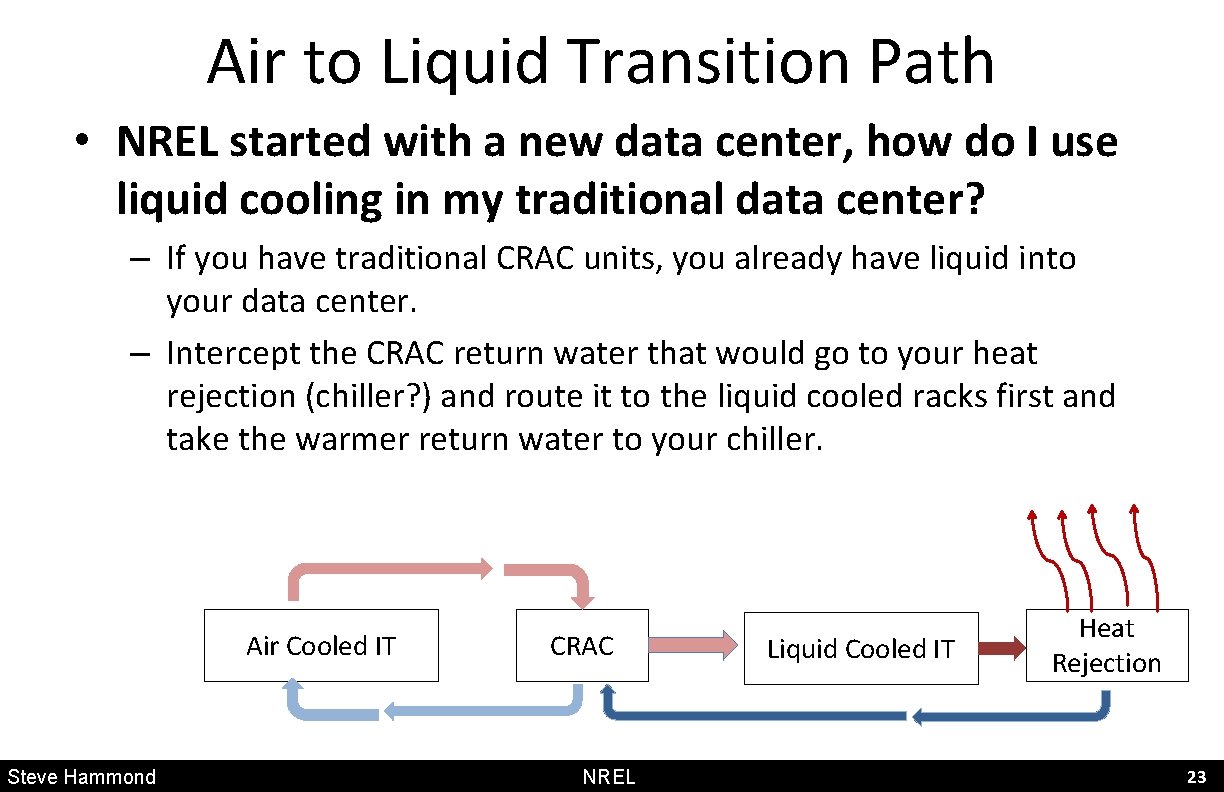

Air to Liquid Transition Path

- NREL started with a new data center; how do I use liquid cooling in my traditional data center?
  - If you have traditional CRAC units, you already have liquid coming into your data center.
  - Intercept the CRAC return water that would go to your heat rejection (chiller?), route it to the liquid-cooled racks first, and take the warmer return water to your chiller.

[Diagram labels: Air Cooled IT, CRAC, Liquid Cooled IT, Heat Rejection]

Final Thoughts

- Energy efficient data centers
  - Well documented, just apply best practices. It’s not rocket science.
  - Separate hot from cold, ditch your chiller.
  - Don’t fear H2O: liquid cooling will be increasingly prevalent.
  - PUE of 1.0X: focus on the “1” and find creative waste heat re-use.
- Organizational alignment is crucial
  - All stakeholders have to benefit: Facilities, IT, and CFO.
- Holistic approaches to workflow and energy management
  - Lots of open research questions.
  - Projects may get an energy allocation rather than a node-hour allocation.
  - Utility time-of-day pricing could drive how and when jobs are scheduled within a quality of service agreement.
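The time-of-day pricing idea can be illustrated with a toy scheduler. The talk lists this as an open research question and does not describe an algorithm, so everything below is an assumption for illustration: the function name, the hourly price list, the constant-power job model, and the use of a completion deadline to stand in for a quality of service agreement.

```python
def cheapest_start_hour(hourly_price, duration_h, deadline_h):
    """Pick the start hour that minimizes energy cost for a deferrable job.

    hourly_price: price per kWh for each hour, index 0 being the current hour.
    duration_h:   job run time in whole hours, assumed to draw constant power.
    deadline_h:   the job must finish by the end of hour index deadline_h - 1.
    Returns (start_hour, summed_price); ties go to the earliest start.
    """
    if duration_h <= 0 or duration_h > deadline_h or deadline_h > len(hourly_price):
        raise ValueError("infeasible duration/deadline for the given price horizon")
    best_start, best_cost = 0, float("inf")
    for start in range(deadline_h - duration_h + 1):
        cost = sum(hourly_price[start:start + duration_h])
        if cost < best_cost:
            best_start, best_cost = start, cost
    return best_start, best_cost


if __name__ == "__main__":
    prices = [0.12, 0.12, 0.08, 0.05, 0.05, 0.09]  # hypothetical $/kWh per hour
    print(cheapest_start_hour(prices, duration_h=2, deadline_h=6))
    print(cheapest_start_hour(prices, duration_h=2, deadline_h=4))
```

A tighter deadline narrows the set of candidate windows, which is one simple way the trade-off between energy cost and quality of service shows up.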

Questions?