The Google File System Presentation by Eric Frohnhoefer

CS 5204 – Operating Systems

Google File System: Assumptions
- Built from inexpensive commodity components
  - Cheap components frequently fail
- Modest number of large files
  - Few million files, each 100 MB or larger
- Support for large streaming reads and small random reads
- Files written once, then appended
- High sustained bandwidth favored over low latency

Google File System: Design Decisions
- Single master, multiple chunkservers
- File structure
  - Fixed-size 64 MB chunks
  - Each chunk divided into 64 KB blocks
  - 32-bit checksum computed for each block
  - Each chunk replicated across 3+ chunkservers
- Familiar interface
  - Create, delete, open, close, read, and write
  - Snapshot and record append
- No caching of file data

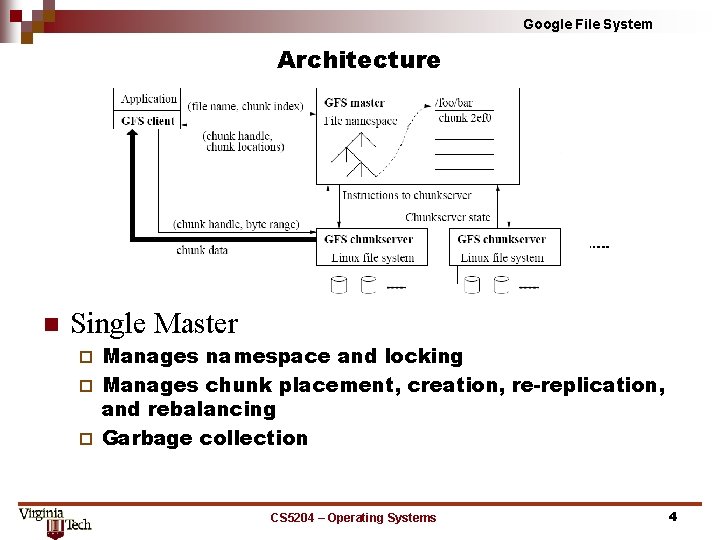

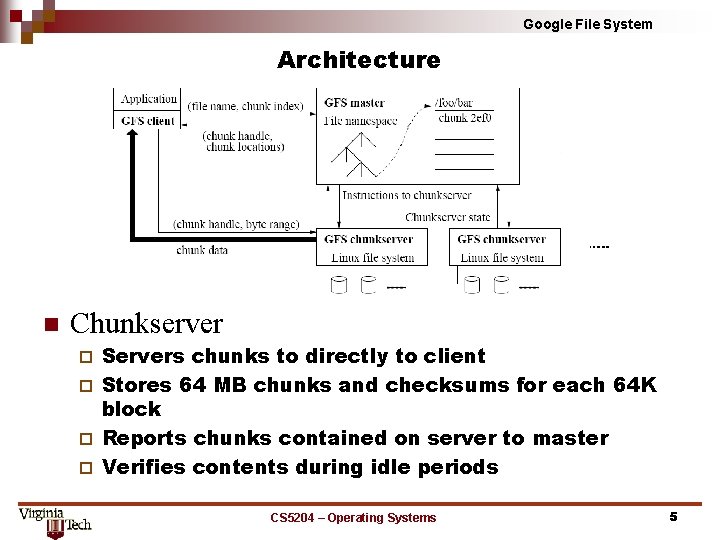

Google File System: Architecture
- Single master
  - Manages namespace and locking
  - Manages chunk placement, creation, re-replication, and rebalancing
  - Garbage collection

Google File System: Architecture
- Chunkserver
  - Serves chunks directly to clients
  - Stores 64 MB chunks and a checksum for each 64 KB block
  - Reports the chunks it holds to the master
  - Verifies chunk contents during idle periods
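A minimal sketch of per-block checksumming as a chunkserver might do it. The slides only say "32-bit checksum"; using CRC-32 via `zlib.crc32` is an assumption, and `block_checksums` and `corrupt_blocks` are hypothetical helper names.

```python
import zlib

BLOCK_SIZE = 64 * 1024  # 64 KB blocks, as on the slide


def block_checksums(chunk: bytes) -> list[int]:
    """Return one 32-bit CRC per 64 KB block of the chunk (CRC-32 is assumed)."""
    return [zlib.crc32(chunk[i:i + BLOCK_SIZE])
            for i in range(0, len(chunk), BLOCK_SIZE)]


def corrupt_blocks(chunk: bytes, expected: list[int]) -> list[int]:
    """Return the indices of blocks whose checksum no longer matches."""
    actual = block_checksums(chunk)
    return [i for i, (a, e) in enumerate(zip(actual, expected)) if a != e]


chunk = bytes(200 * 1024)            # 200 KB of zeros, i.e. 4 blocks
sums = block_checksums(chunk)
damaged = bytearray(chunk)
damaged[70 * 1024] ^= 0xFF           # flip bits inside block 1
print(corrupt_blocks(bytes(damaged), sums))  # [1]
```

Checking block by block means a single bad block can be detected without re-reading the whole 64 MB chunk.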

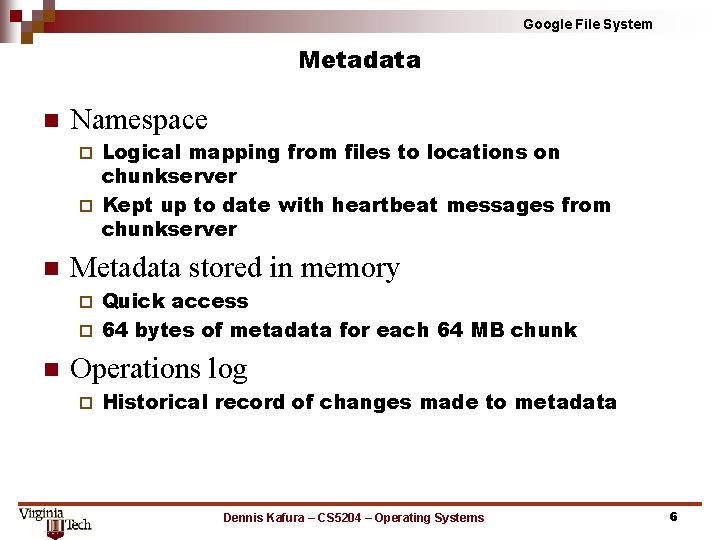

Google File System: Metadata
- Namespace
  - Logical mapping from files to locations on chunkservers
  - Kept up to date with heartbeat messages from chunkservers
- Metadata stored in memory
  - Quick access
  - 64 bytes of metadata for each 64 MB chunk
- Operations log
  - Historical record of changes made to metadata

Dennis Kafura – CS 5204 – Operating Systems
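As a rough worked example of why in-memory metadata is feasible, the sketch below applies the slide's figure of 64 bytes per 64 MB chunk. It counts chunk metadata only; namespace entries are ignored, and the function name is hypothetical.

```python
CHUNK_SIZE = 64 * 2**20   # 64 MB per chunk
META_PER_CHUNK = 64       # bytes of master metadata per chunk (slide figure)


def master_metadata_bytes(data_bytes: int) -> int:
    """Estimate chunk metadata held in master memory for a given data volume."""
    chunks = -(-data_bytes // CHUNK_SIZE)   # ceiling division
    return chunks * META_PER_CHUNK


one_pib = 2**50
print(master_metadata_bytes(one_pib) / 2**30)  # 1.0 (GiB)
```

Under these assumptions, a pebibyte of file data needs about a gibibyte of chunk metadata, which fits in the memory of a single machine.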

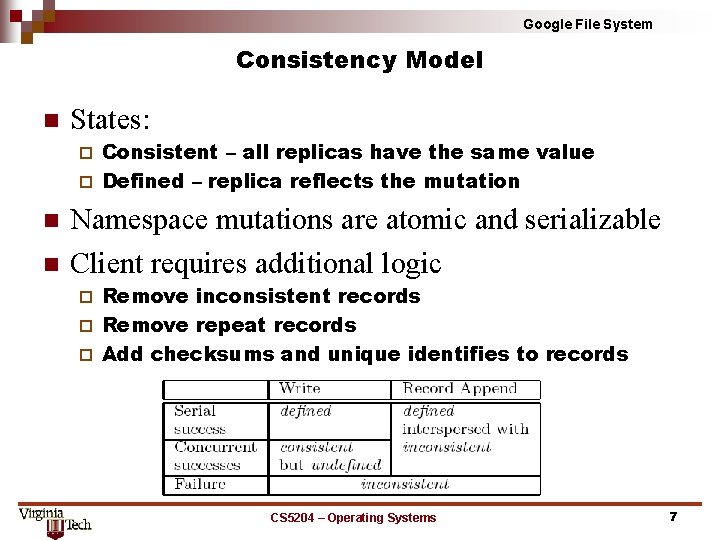

Google File System: Consistency Model
- States
  - Consistent: all replicas have the same value
  - Defined: consistent, and replicas reflect the mutation in its entirety
- Namespace mutations are atomic and serializable
- Client requires additional logic
  - Remove inconsistent records
  - Remove repeated records
  - Add checksums and unique identifiers to records
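The client-side logic listed above can be sketched as a reader that drops records failing their checksum and skips identifiers it has already seen. The `Record` layout, the use of CRC-32, and the helper names are illustrative assumptions, not a format given in the slides.

```python
import zlib
from dataclasses import dataclass


@dataclass(frozen=True)
class Record:
    record_id: str    # unique identifier chosen by the writer
    payload: bytes
    checksum: int     # checksum of the payload (CRC-32 assumed)


def make_record(record_id: str, payload: bytes) -> Record:
    return Record(record_id, payload, zlib.crc32(payload))


def clean(records):
    """Drop inconsistent records (bad checksum) and repeated records (same id)."""
    seen, out = set(), []
    for r in records:
        if zlib.crc32(r.payload) != r.checksum:
            continue              # inconsistent region or fragment
        if r.record_id in seen:
            continue              # duplicate left behind by a retried append
        seen.add(r.record_id)
        out.append(r)
    return out


a = make_record("a", b"first")
b = make_record("b", b"second")
garbage = Record("x", b"partial", zlib.crc32(b"partial") ^ 1)  # deliberately wrong
print([r.record_id for r in clean([a, garbage, a, b])])  # ['a', 'b']
```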

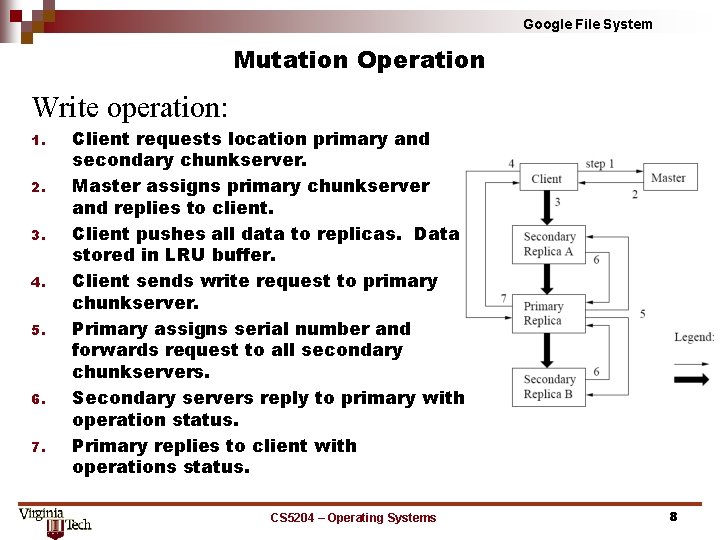

Google File System: Mutation Operation
Write operation:
1. Client asks the master for the locations of the primary and secondary chunkservers.
2. Master assigns the primary chunkserver and replies to the client.
3. Client pushes all data to the replicas. Data is stored in an LRU buffer.
4. Client sends the write request to the primary chunkserver.
5. Primary assigns a serial number and forwards the request to all secondary chunkservers.
6. Secondary chunkservers reply to the primary with the operation status.
7. Primary replies to the client with the operation status.
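Steps 3 to 7 can be sketched as a toy in-process model. The class and method names are hypothetical, the master lookup in steps 1 and 2 is omitted, and a plain dictionary stands in for the LRU buffer.

```python
class Replica:
    def __init__(self):
        self.buffer = {}     # data_id -> bytes, pushed but not yet written
        self.applied = []    # (serial, data) in the order applied

    def push(self, data_id, data):
        """Step 3: the client pushes data to every replica."""
        self.buffer[data_id] = data

    def apply(self, serial, data_id):
        """Apply a buffered write under the primary's serial number."""
        self.applied.append((serial, self.buffer.pop(data_id)))
        return True          # operation status


class Primary(Replica):
    def __init__(self, secondaries):
        super().__init__()
        self.secondaries = secondaries
        self.next_serial = 0

    def write(self, data_id):
        """Steps 4-7: assign a serial number, forward, gather statuses."""
        serial = self.next_serial
        self.next_serial += 1
        self.apply(serial, data_id)
        acks = [s.apply(serial, data_id) for s in self.secondaries]
        return all(acks)


secondaries = [Replica(), Replica()]
primary = Primary(secondaries)
for data_id, data in [("w1", b"alpha"), ("w2", b"beta")]:
    for r in [primary, *secondaries]:
        r.push(data_id, data)

# Write requests may arrive in any order; the primary's serial numbers fix one order.
ok = [primary.write("w2"), primary.write("w1")]
print(ok, primary.applied == secondaries[0].applied == secondaries[1].applied)
# [True, True] True
```

Separating the data push from the write request lets the primary order mutations without the data itself flowing through it.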

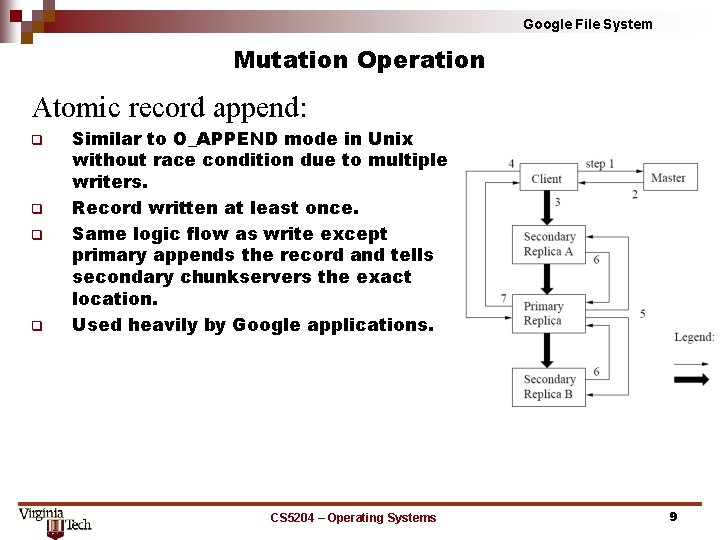

Google File System: Mutation Operation
Atomic record append:
- Similar to O_APPEND mode in Unix, but without the race conditions caused by multiple writers.
- Each record is written at least once.
- Same logic flow as a write, except that the primary appends the record and tells the secondary chunkservers the exact offset to use.
- Used heavily by Google applications.
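A small sketch of the append path, assuming a single chunk with unlimited room (chunk-boundary handling is left out). `ChunkReplica` and `record_append` are hypothetical names.

```python
class ChunkReplica:
    def __init__(self):
        self.data = bytearray()

    def write_at(self, offset: int, record: bytes) -> None:
        """Place the record at the exact offset, zero-padding any gap."""
        end = offset + len(record)
        if len(self.data) < end:
            self.data.extend(bytes(end - len(self.data)))
        self.data[offset:end] = record


def record_append(primary, secondaries, record: bytes) -> int:
    """The primary picks the offset; secondaries write at that same offset."""
    offset = len(primary.data)
    primary.write_at(offset, record)
    for s in secondaries:
        s.write_at(offset, record)
    return offset


p, s = ChunkReplica(), [ChunkReplica()]
offsets = [record_append(p, s, b"hello"),
           record_append(p, s, b"world"),
           record_append(p, s, b"world")]   # a client retry appends again
print(offsets, bytes(p.data))  # [0, 5, 10] b'helloworldworld'
```

The retried append shows why the guarantee is "at least once": the record may appear twice, and readers are expected to filter duplicates.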

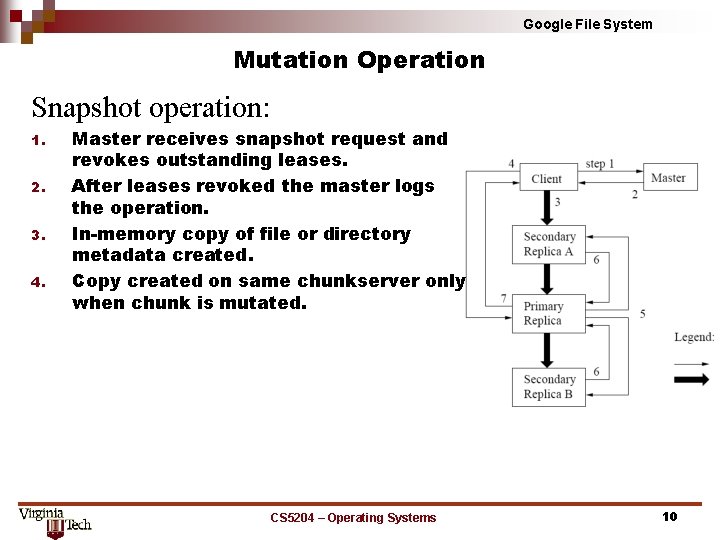

Google File System: Mutation Operation
Snapshot operation:
1. Master receives the snapshot request and revokes outstanding leases.
2. After the leases are revoked, the master logs the operation.
3. An in-memory copy of the file or directory metadata is created.
4. A chunk copy is created on the same chunkserver only when the chunk is mutated.
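Steps 3 and 4 amount to copy-on-write, sketched here with reference counts on chunk handles. Lease revocation and logging are omitted, the class is hypothetical, and chunk contents are kept in a dictionary as a stand-in for chunkserver storage.

```python
class Master:
    def __init__(self):
        self.files = {}       # path -> list of chunk handles
        self.refcount = {}    # chunk handle -> number of files sharing it
        self.chunks = {}      # chunk handle -> bytes (stand-in for chunkservers)
        self.next_handle = 0

    def _new_chunk(self, data: bytes) -> int:
        handle = self.next_handle
        self.next_handle += 1
        self.chunks[handle] = data
        self.refcount[handle] = 1
        return handle

    def create(self, path, chunk_data):
        self.files[path] = [self._new_chunk(d) for d in chunk_data]

    def snapshot(self, src, dst):
        """Step 3: copy metadata only; both files now share every chunk."""
        self.files[dst] = list(self.files[src])
        for handle in self.files[dst]:
            self.refcount[handle] += 1

    def write(self, path, index, data):
        """Step 4: copy a shared chunk the first time it is mutated."""
        handle = self.files[path][index]
        if self.refcount[handle] > 1:
            self.refcount[handle] -= 1
            handle = self._new_chunk(self.chunks[handle])
            self.files[path][index] = handle
        self.chunks[handle] = data


m = Master()
m.create("/home/user", [b"a", b"b"])
m.snapshot("/home/user", "/save/user")
m.write("/home/user", 0, b"A")
print(m.files)  # {'/home/user': [2, 1], '/save/user': [0, 1]}
```

Only the mutated chunk is duplicated; the untouched chunk (handle 1) stays shared between the file and its snapshot.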

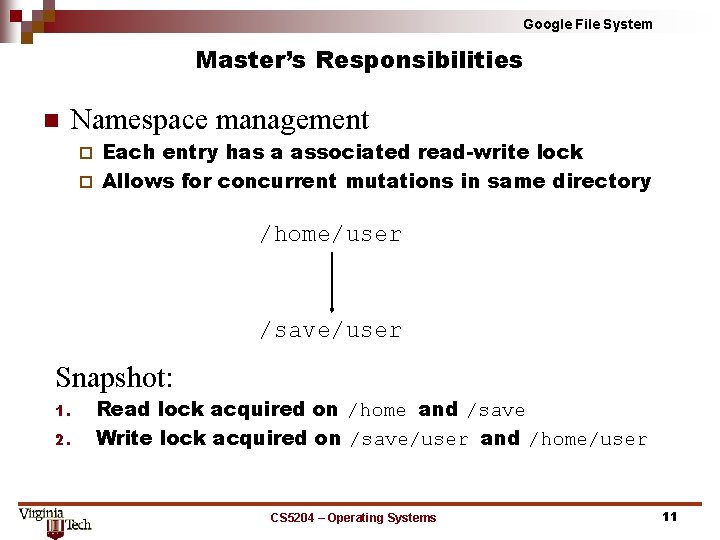

Google File System: Master's Responsibilities
- Namespace management
  - Each entry has an associated read-write lock
  - Allows for concurrent mutations in the same directory

Example: snapshot of /home/user to /save/user
1. Read locks acquired on /home and /save
2. Write locks acquired on /save/user and /home/user
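The lock sets in the example can be derived mechanically: read locks on every ancestor, write locks on the full paths. The sketch below only computes and compares lock sets and does no real locking; the function names are hypothetical, and the rule that a file creation takes a read lock on its parent directory is assumed from the GFS paper.

```python
def ancestors(path: str) -> list[str]:
    """'/home/user/foo' -> ['/home', '/home/user']"""
    parts = path.strip("/").split("/")
    return ["/" + "/".join(parts[:i]) for i in range(1, len(parts))]


def locks_for(*paths: str):
    """Read locks on all ancestors, write locks on the paths themselves."""
    write = set(paths)
    read = set()
    for p in paths:
        read.update(ancestors(p))
    return sorted(read - write), sorted(write)


def conflicts(a, b) -> bool:
    """Two operations conflict if one write-locks a path the other locks."""
    (read_a, write_a), (read_b, write_b) = a, b
    return bool(set(write_a) & (set(read_b) | set(write_b))
                or set(write_b) & set(read_a))


snapshot = locks_for("/home/user", "/save/user")
print(snapshot)  # (['/home', '/save'], ['/home/user', '/save/user'])

create_foo = locks_for("/home/user/foo")
print(conflicts(snapshot, create_foo))  # True: /home/user is write-locked

create_a = locks_for("/home/user/a")
create_b = locks_for("/home/user/b")
print(conflicts(create_a, create_b))    # False: same directory, both proceed
```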

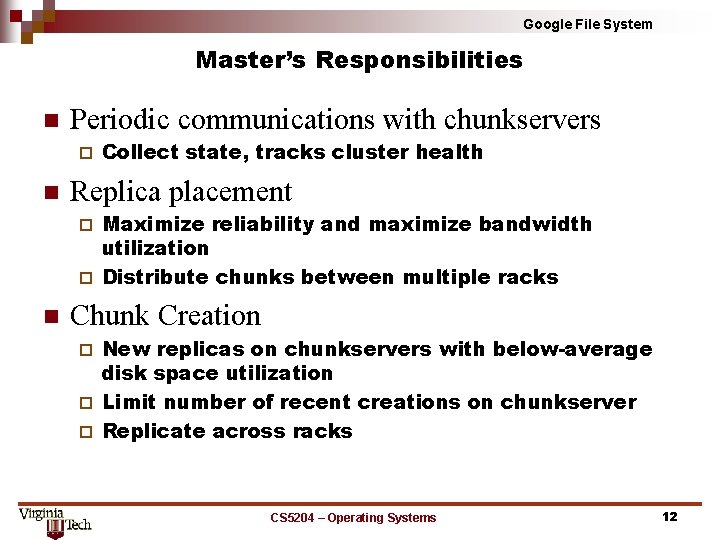

Google File System: Master's Responsibilities
- Periodic communication with chunkservers
  - Collects state, tracks cluster health
- Replica placement
  - Maximize reliability and maximize bandwidth utilization
  - Distribute chunks between multiple racks
- Chunk creation
  - New replicas on chunkservers with below-average disk space utilization
  - Limit number of recent creations on each chunkserver
  - Replicate across racks
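One way the three chunk-creation rules could combine is sketched below. The `Server` fields, the `max_recent` threshold, and the tie-breaking order are all illustrative assumptions rather than the master's actual policy.

```python
from dataclasses import dataclass


@dataclass
class Server:
    name: str
    rack: str
    utilization: int        # percent of disk space in use
    recent_creations: int   # chunks created here recently


def place_replicas(servers, n=3, max_recent=2):
    """Pick up to n servers: below-average disk use, few recent creations,
    and as many distinct racks as possible."""
    avg = sum(s.utilization for s in servers) / len(servers)
    candidates = sorted(
        (s for s in servers
         if s.utilization < avg and s.recent_creations < max_recent),
        key=lambda s: s.utilization,
    )
    chosen, racks = [], set()
    for s in candidates:                 # first pass: one server per rack
        if len(chosen) < n and s.rack not in racks:
            chosen.append(s)
            racks.add(s.rack)
    for s in candidates:                 # second pass: fill remaining slots
        if len(chosen) < n and s not in chosen:
            chosen.append(s)
    return chosen


servers = [
    Server("a", "r1", 20, 0), Server("b", "r1", 30, 0),
    Server("c", "r2", 35, 0), Server("d", "r2", 90, 0),
    Server("e", "r3", 10, 5), Server("f", "r3", 36, 0),
]
print([s.name for s in place_replicas(servers)])  # ['a', 'c', 'f']
```

Server `e` has the emptiest disk but is skipped because of its recent creations, which avoids directing a burst of new write traffic at one machine.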

Google File System: Master's Responsibilities
- Re-replication
  - Occurs when the number of replicas falls below the user-specified goal
  - Re-replication is prioritized
- Rebalancing
  - Master examines the current replica distribution and moves replicas for better disk space and load balancing
- Garbage collection
  - Master logs the deletion immediately
  - File is renamed and given a deletion timestamp
  - Files are actually deleted later, at a user-specified date
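The lazy deletion described under garbage collection can be sketched as follows. The hidden-name format and the `Namespace` class are hypothetical, and the user-specified date is modeled as a grace interval in seconds, which is an assumption.

```python
class Namespace:
    def __init__(self, grace_seconds: int):
        self.files = {}     # visible name -> contents
        self.hidden = {}    # hidden name -> (deleted_at, contents)
        self.log = []       # operation log
        self.grace = grace_seconds

    def delete(self, name: str, now: int) -> None:
        """Log the deletion at once, then rename the file to a hidden name."""
        self.log.append(("delete", name, now))
        self.hidden[f".deleted.{name}.{now}"] = (now, self.files.pop(name))

    def collect(self, now: int) -> list[str]:
        """Background scan: reclaim hidden files whose grace period has passed."""
        expired = [h for h, (t, _) in self.hidden.items()
                   if now - t >= self.grace]
        for h in expired:
            del self.hidden[h]
        return expired


ns = Namespace(grace_seconds=100)
ns.files["/a"] = b"x"
ns.delete("/a", now=0)
print(ns.collect(now=50))    # []
print(ns.collect(now=100))   # ['.deleted./a.0']
```

Until the scan reclaims it, the hidden file still exists, so an accidental deletion could be undone by renaming it back.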

Google File System: High Availability
- Fast recovery
- Chunk replication
  - Default 3 replicas
  - Distributed across multiple racks
- Shadow masters
  - Master state is fully replicated
  - Mutations are committed only once the log has been written on all replicas
  - Provide read-only access even when the master is down

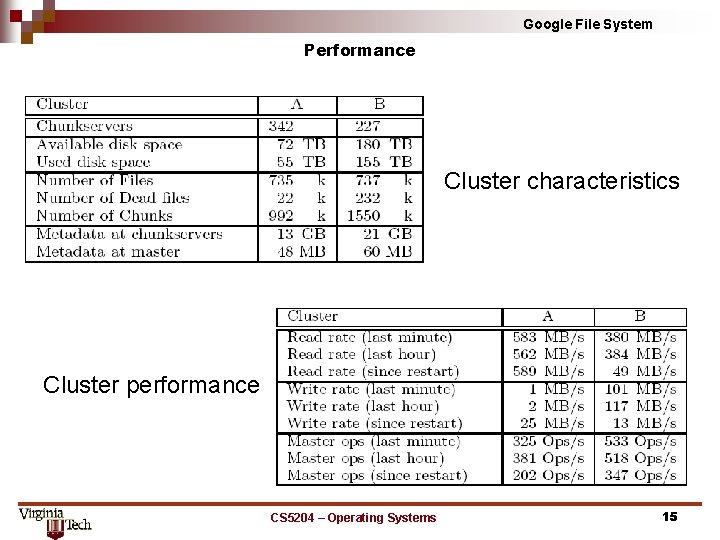

Google File System: Performance
- Cluster characteristics
- Cluster performance

Google File System: Amazon S3
- RESTful and SOAP style interface
- BitTorrent for distributed download
- 99.99999% durability and 99.99% uptime
  - Replicated 3 times across 2 datacenters
- Cost
  - Storage: $0.14 / GB / month
  - Bandwidth: $0.10 / GB
  - Requests: $0.01 / 1000 requests
- Permissions controlled by Access Control List (ACL)

Google File System: Conclusions
- Simple solution
- Seamlessly handles hardware failures
- Purpose-built for Google's needs
  - Large files
  - High read throughput
  - Record appends

