
The Georgia Tech Network Simulator (GTNetS)
ECE 6110, August 25, 2008
George F. Riley

Overview
- Network Simulation Basics
- GTNetS Design Philosophy
- GTNetS Details
- BGP++
- Scalability Results
- FAQ
- Future Plans
- Demos

Network Simulation Basics - 1
- Discrete Event Simulation
  - Events model packet transmission, receipt, timers, etc.
  - Future events are maintained in a sorted Event List
  - Processing an event results in zero or more new events; for example, a packet transmit event generates a future packet receipt event at the next hop
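The event-list mechanism can be sketched in a few lines of C++. This is a minimal illustration with hypothetical names (`Scheduler`, `Event`, `TransmitPacket`), not the GTNetS scheduler classes: future events are kept in a priority queue ordered by timestamp, the clock jumps to each event's time when it is processed, and a transmit event schedules the matching receipt event at the next hop after the transmission plus propagation delay.

```cpp
#include <cassert>
#include <functional>
#include <queue>
#include <utility>
#include <vector>

// Hypothetical sketch of a discrete-event scheduler (not the GTNetS API).
struct Event {
  double time;                   // simulation time at which the event fires
  std::function<void()> action;  // what happens when it fires
};

struct LaterFirst {
  bool operator()(const Event& a, const Event& b) const {
    return a.time > b.time;  // earliest timestamp ends up on top of the heap
  }
};

class Scheduler {
 public:
  double Now() const { return now_; }

  // Schedule an event `delay` seconds after the current simulation time.
  void Schedule(double delay, std::function<void()> action) {
    assert(delay >= 0.0);  // events may not be scheduled in the past
    events_.push(Event{now_ + delay, std::move(action)});
  }

  // Process events in timestamp order until none remain or `stopAt` is
  // passed; each processed event may schedule zero or more new events.
  void Run(double stopAt) {
    while (!events_.empty() && events_.top().time <= stopAt) {
      Event e = events_.top();
      events_.pop();
      now_ = e.time;  // advance the clock directly to the event time
      e.action();
    }
  }

 private:
  double now_ = 0.0;
  std::priority_queue<Event, std::vector<Event>, LaterFirst> events_;
};

// A packet transmit event: the receipt at the next hop is scheduled after
// the serialization time (bits / rate) plus the link propagation delay.
inline void TransmitPacket(Scheduler& s, int bytes, double rateBps,
                           double propDelay, std::vector<double>& receiveLog) {
  double txTime = bytes * 8.0 / rateBps;
  s.Schedule(txTime + propDelay, [&s, &receiveLog] {
    receiveLog.push_back(s.Now());  // packet receipt at the next hop
  });
}
```

With a 1000-byte packet on a 1 Mb/s link with 5 ms of propagation delay, the receipt event fires at 8 ms + 5 ms = 13 ms of simulation time, regardless of how long the computation takes in real time.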

Network Simulation Basics - 2
- Create Topology
  - Nodes, Links, Queues, Routing, etc.
- Create Data Demand on Network
  - Web Browsers, FTP transfers, Peer-to-Peer Searching and Downloads, On-Off Data Sources, etc.
- Run the Simulation
- Analyze Results

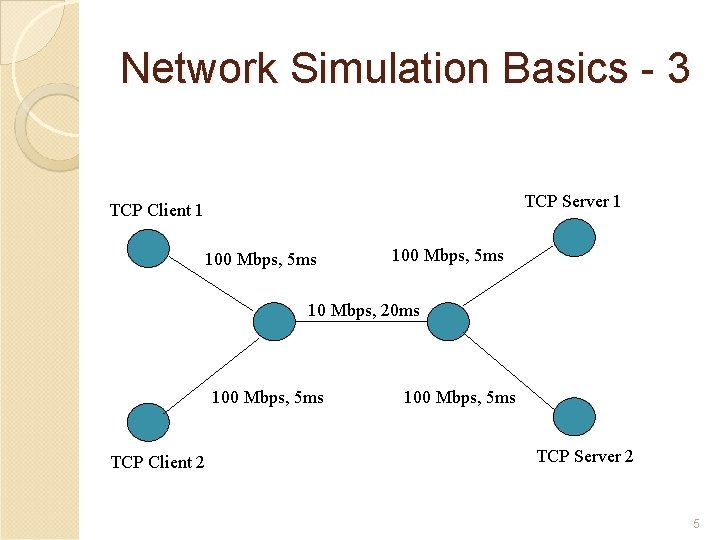

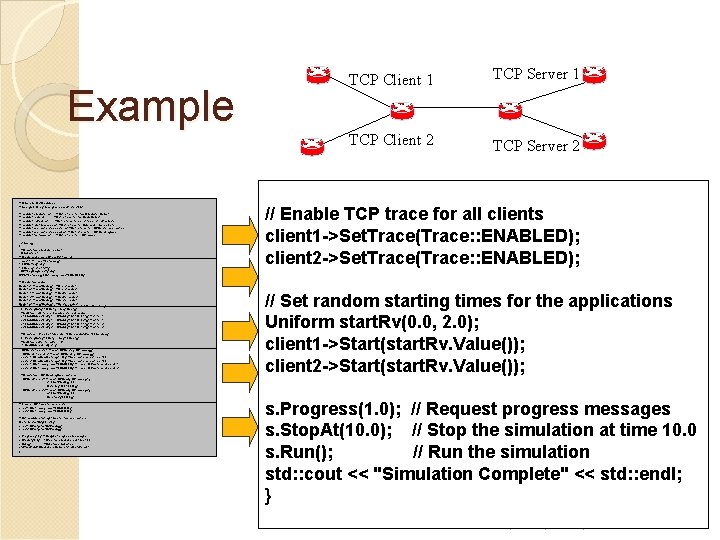

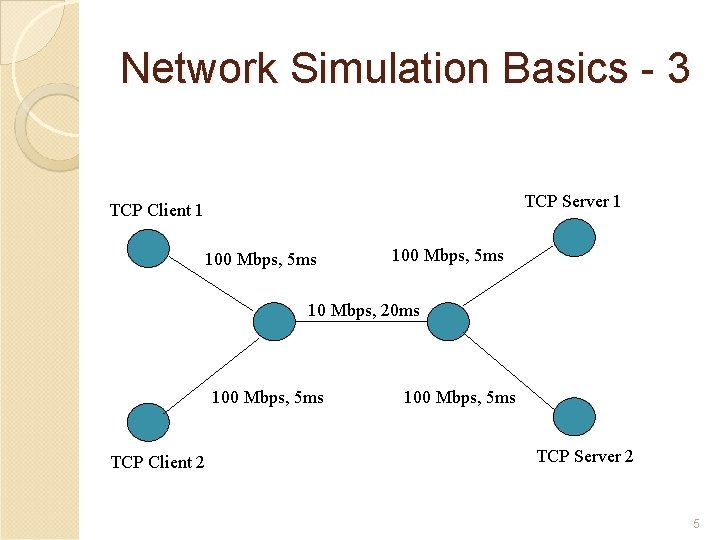

Network Simulation Basics - 3

(Figure: a dumbbell topology in which TCP Client 1 and TCP Client 2 reach TCP Server 1 and TCP Server 2 over a shared 10 Mbps, 20 ms link; the other links are 100 Mbps, 5 ms.)
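As a worked example, assuming the 10 Mbps, 20 ms link is the shared link between the two routers and ignoring transmission and queueing delays, the round-trip propagation delay and the bandwidth-delay product of that link for either client-server pair are:

```latex
\begin{aligned}
\text{one-way delay} &= 5\,\text{ms} + 20\,\text{ms} + 5\,\text{ms} = 30\,\text{ms}\\
\text{RTT} &= 2 \times 30\,\text{ms} = 60\,\text{ms}\\
\text{BDP} &= 10\,\text{Mb/s} \times 60\,\text{ms} = 600{,}000\,\text{bits} = 75{,}000\,\text{bytes}
\end{aligned}
```

Under these assumptions, the two TCP flows together need roughly 75,000 bytes in flight to keep the 10 Mbps link busy.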

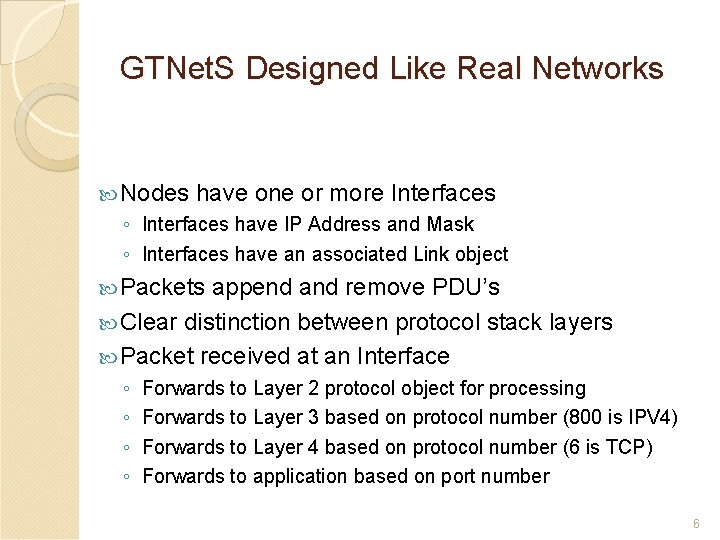

GTNetS Designed Like Real Networks
- Nodes have one or more Interfaces
  - Interfaces have an IP Address and Mask
  - Interfaces have an associated Link object
- Packets append and remove PDUs
- Clear distinction between protocol stack layers
- Packet received at an Interface
  - Forwards to the Layer 2 protocol object for processing
  - Forwards to Layer 3 based on protocol number (0x0800 is IPv4)
  - Forwards to Layer 4 based on protocol number (6 is TCP)
  - Forwards to the application based on port number
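The receive path above is a chain of lookups, each keyed by a number carried in the current layer's header. The sketch below is a hypothetical, simplified illustration (`RxPacket` and `Demux` are not GTNetS classes) that only follows the IPv4/TCP path: the EtherType selects Layer 3, the IP protocol number selects Layer 4, and the destination port selects the application.

```cpp
#include <cassert>
#include <cstdint>
#include <functional>
#include <map>
#include <string>
#include <utility>

// Hypothetical sketch of layered demultiplexing (not the GTNetS classes).
struct RxPacket {
  uint16_t etherType;  // Layer 2 field: 0x0800 means IPv4
  uint8_t ipProtocol;  // Layer 3 field: 6 means TCP
  uint16_t dstPort;    // Layer 4 field: selects the application
};

class Demux {
 public:
  using App = std::function<std::string(const RxPacket&)>;

  // Register an application on a TCP port (like a server's bind/listen).
  void BindPort(uint16_t port, App app) { ports_[port] = std::move(app); }

  // Called when a packet arrives at an interface; returns where the packet
  // was delivered or why it was dropped.
  std::string Receive(const RxPacket& p) const {
    if (p.etherType != 0x0800) return "drop: not IPv4";  // Layer 2 -> Layer 3
    if (p.ipProtocol != 6) return "drop: not TCP";       // Layer 3 -> Layer 4
    auto it = ports_.find(p.dstPort);                    // Layer 4 -> application
    if (it == ports_.end()) return "drop: no listener";
    return it->second(p);
  }

 private:
  std::map<uint16_t, App> ports_;  // port map: port number -> application
};
```

A real stack would keep a table at every layer (ARP at Layer 2, UDP and ICMP at Layer 3, and so on); the fixed `if` checks here just keep the sketch short.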

GTNetS Design Philosophy
- Written Completely in C++
- Released as Open Source
- All network modeling via C++ objects
- User Simulation is a C++ main program
  - Include our supplied "#include" files
  - Link with our supplied libraries
  - Run the resulting executable

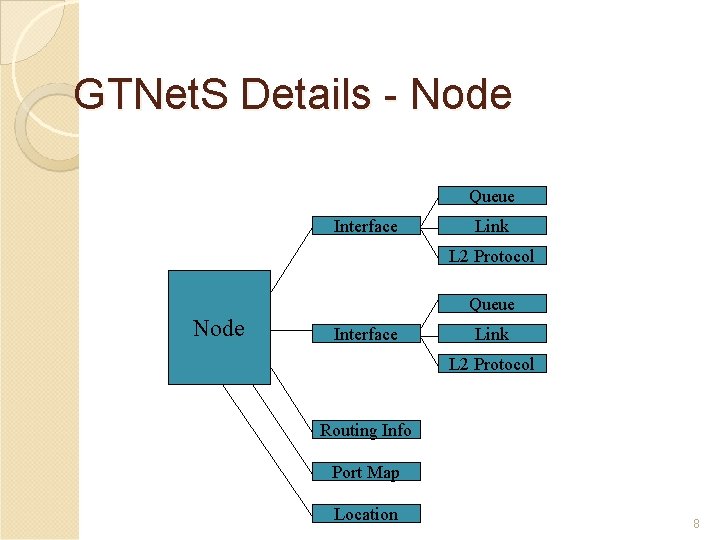

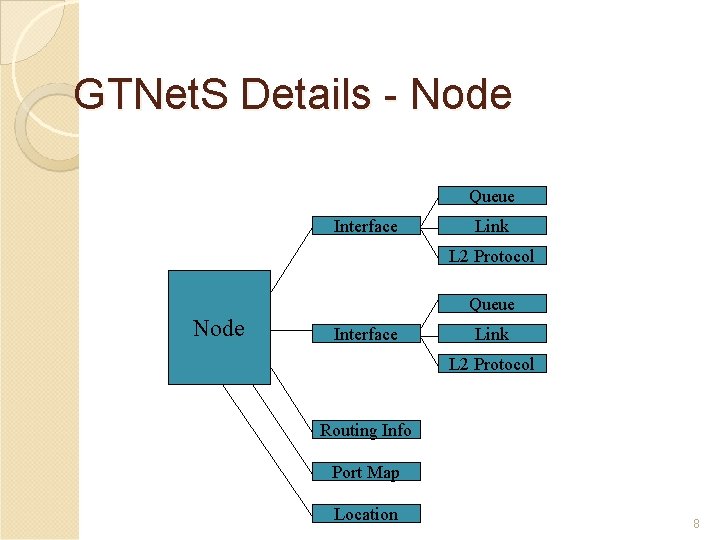

GTNetS Details - Node

(Figure: a Node with its Routing Info, Port Map, and Location, and two Interfaces, each shown with a Queue, a Link, and an L2 Protocol.)

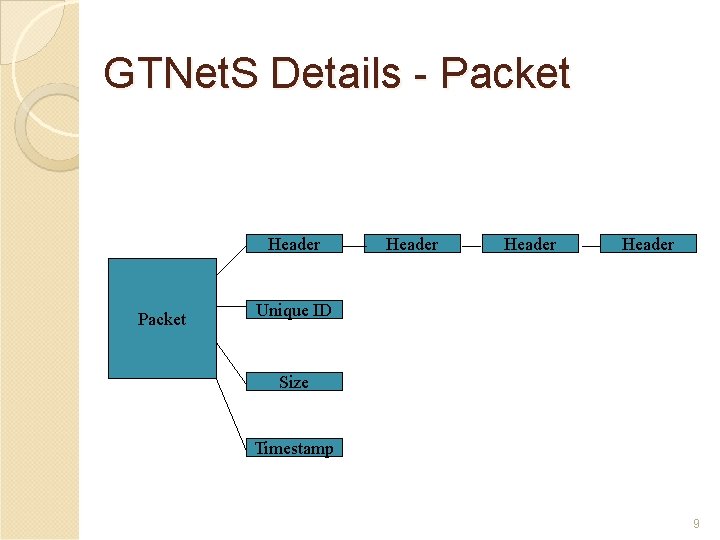

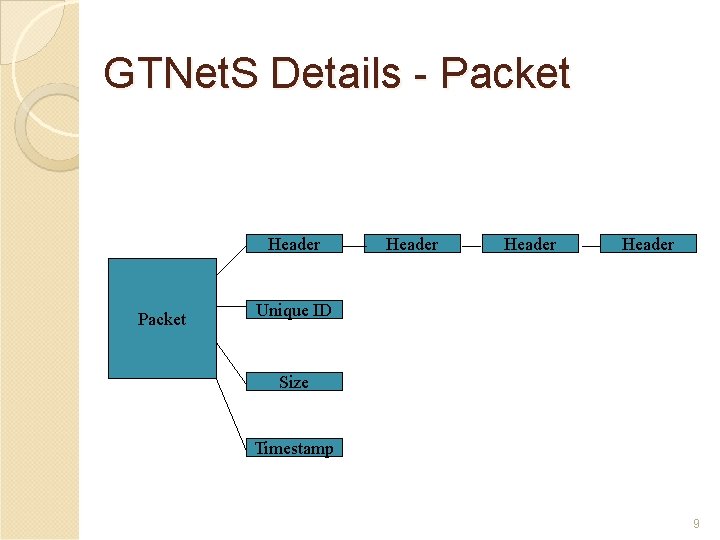

GTNetS Details - Packet
- Header
- Unique ID
- Size
- Timestamp
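Combined with the earlier point that packets append and remove PDUs, a packet can be modeled as a stack of headers plus a few bookkeeping fields. The class below is a hypothetical sketch (`Packet`, `PDU`, `PushPDU`, `PopPDU` are illustrative names, not the GTNetS declarations): each layer pushes its header on the way down and pops it on the way up, and the packet size is the sum of the attached PDUs.

```cpp
#include <cassert>
#include <cstdint>
#include <string>
#include <vector>

// Hypothetical sketch: a packet as a stack of protocol data units (PDUs).
struct PDU {
  std::string layer;  // e.g. "Data", "TCP", "IPv4"
  uint32_t bytes;     // size of this header or payload in bytes
};

class Packet {
 public:
  Packet(uint64_t uid, double timestamp) : uid_(uid), timestamp_(timestamp) {}

  // Sending side: each layer appends its header as the outermost PDU.
  void PushPDU(const PDU& p) { pdus_.push_back(p); }

  // Receiving side: each layer removes the outermost PDU.
  PDU PopPDU() {
    assert(!pdus_.empty());
    PDU top = pdus_.back();
    pdus_.pop_back();
    return top;
  }

  const PDU& Top() const { return pdus_.back(); }
  uint64_t Uid() const { return uid_; }
  double Timestamp() const { return timestamp_; }

  // Total size is the sum of all PDUs currently attached.
  uint32_t Size() const {
    uint32_t total = 0;
    for (const PDU& p : pdus_) total += p.bytes;
    return total;
  }

 private:
  uint64_t uid_;          // unique ID, lets a trace follow one packet hop by hop
  double timestamp_;      // creation time, e.g. for end-to-end delay statistics
  std::vector<PDU> pdus_; // headers, innermost first
};
```

Keeping headers as separate objects, rather than serialized bytes, is what allows each layer to inspect and remove only its own PDU.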

GTNetS Applications
- Web Browser (based on Mah 1997)
- Web Server, including Gnutella GCache
- On-Off Data Source
- FTP File Transfer
- Bulk Data Sending/Receiving
- Gnutella Peer-to-Peer
- SYN Flood
- UDP Storm
- Internet Worms
- VOIP

GTNetS Protocols
- TCP, complete client/server
  - Tahoe, Reno, New-Reno
  - SACK (in progress)
  - Congestion Window, Slow Start, Receiver Window
- UDP
- IPv4 (IPv6 Planned)
- IEEE 802.3 (Ethernet and point-to-point)
- IEEE 802.11 (Wireless)
- Address Resolution Protocol (ARP)
- ICMP (Partial)
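The Tahoe/Reno distinction is mostly in how the congestion window reacts to loss. The functions below are a simplified sketch in units of whole segments, with hypothetical names and an assumed initial `ssthresh` of 64 segments; they are not the GTNetS TCP classes. They ignore byte counting, RTO backoff, and Reno's temporary window inflation during fast recovery.

```cpp
#include <algorithm>
#include <cassert>

// Simplified congestion-control state, counted in segments (hypothetical).
struct CongestionState {
  double cwnd = 1.0;       // congestion window (segments)
  double ssthresh = 64.0;  // slow-start threshold; initial value is an assumption
};

// Called once per new (non-duplicate) ACK.
inline void OnNewAck(CongestionState& s) {
  if (s.cwnd < s.ssthresh)
    s.cwnd += 1.0;           // slow start: +1 segment per ACK (doubles per RTT)
  else
    s.cwnd += 1.0 / s.cwnd;  // congestion avoidance: about +1 segment per RTT
}

// Called when three duplicate ACKs signal a lost segment.
inline void OnTripleDupAck(CongestionState& s, bool reno) {
  s.ssthresh = std::max(s.cwnd / 2.0, 2.0);
  // Tahoe restarts slow start from one segment; Reno (after fast recovery)
  // continues from the halved window.
  s.cwnd = reno ? s.ssthresh : 1.0;
}

// Called on a retransmission timeout (same for Tahoe and Reno).
inline void OnTimeout(CongestionState& s) {
  s.ssthresh = std::max(s.cwnd / 2.0, 2.0);
  s.cwnd = 1.0;
}
```

For example, after seven ACKs in slow start the window grows from 1 to 8 segments; a triple duplicate ACK then sets `ssthresh` to 4, with Tahoe dropping `cwnd` to 1 and Reno to 4.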

GTNetS Routing
- Static (pre-computed routes)
- Nix-Vector (on-demand)
- Manual (specified by simulation application)
- EIGRP
- BGP
- OSPF
- DSR
- AODV
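As we understand Nix-Vector routing, a route is not stored in per-node routing tables; instead, it is computed when first needed and carried with the packet as the index of the neighbor to forward to at each hop ("neighbor index", hence "Nix"), using only ceil(log2(degree)) bits per hop. The class below is a hypothetical sketch of that encoding only (`NixVector`, `Add`, `Extract`, `BitsFor` are illustrative names); the on-demand route computation (for example, a breadth-first search) is left out.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Bits needed to name one of `degree` neighbors: ceil(log2(degree)).
inline unsigned BitsFor(std::size_t degree) {
  unsigned bits = 0;
  while ((std::size_t{1} << bits) < degree) ++bits;
  return bits;
}

// Hypothetical sketch of a nix-vector: one neighbor index per hop, packed.
class NixVector {
 public:
  // Append the chosen neighbor index for a node with `degree` neighbors.
  void Add(std::size_t neighborIndex, std::size_t degree) {
    assert(neighborIndex < degree);
    unsigned n = BitsFor(degree);
    for (unsigned i = 0; i < n; ++i)
      bits_.push_back(((neighborIndex >> (n - 1 - i)) & 1u) != 0);  // MSB first
  }

  // At each hop, read the next neighbor index (the node knows its degree).
  std::size_t Extract(std::size_t degree) {
    unsigned n = BitsFor(degree);
    assert(pos_ + n <= bits_.size());
    std::size_t index = 0;
    for (unsigned i = 0; i < n; ++i)
      index = (index << 1) | (bits_[pos_++] ? 1u : 0u);
    return index;
  }

  std::size_t SizeInBits() const { return bits_.size(); }

 private:
  std::vector<bool> bits_;  // packed route
  std::size_t pos_ = 0;     // read cursor, advanced hop by hop
};
```

A node with a single neighbor costs zero bits, and a three-hop route through nodes of degree 4, 1, and 8 fits in 2 + 0 + 3 = 5 bits.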

GTNetS Support Objects
- Random Number Generation
  - Uniform, Exponential, Pareto, Sequential, Empirical, Constant
- Statistics Collection
  - Histogram, Average/Min/Max
- Command Line Argument Processing
- Rate, Time, and IP Address Parsing
  - Rate("10Mb"), Time("10ms")
  - IPAddr("192.168.0.1")
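Rate and time strings such as those above pair a number with a unit suffix. The functions below are a hypothetical sketch of that parsing (`ParseRate`, `ParseTime`, and the suffix tables are assumptions for illustration, not the exact rules of the GTNetS `Rate` and `Time` classes): the leading number is read with `std::stod`, and the remaining suffix selects a scale factor to bits per second or seconds.

```cpp
#include <cassert>
#include <cstddef>
#include <stdexcept>
#include <string>

// Split "10Mb" into 10.0 and "Mb"; whitespace before the unit is ignored.
inline double SplitNumber(const std::string& s, std::string& suffix) {
  std::size_t used = 0;
  double value = std::stod(s, &used);  // leading number
  suffix = s.substr(used);
  std::size_t first = suffix.find_first_not_of(' ');
  suffix = (first == std::string::npos) ? "" : suffix.substr(first);
  return value;
}

// Returns bits per second. Suffix table is an illustrative assumption.
inline double ParseRate(const std::string& s) {
  std::string unit;
  double v = SplitNumber(s, unit);
  if (unit == "" || unit == "b" || unit == "bps") return v;
  if (unit == "Kb" || unit == "Kbps") return v * 1e3;
  if (unit == "Mb" || unit == "Mbps") return v * 1e6;
  if (unit == "Gb" || unit == "Gbps") return v * 1e9;
  throw std::invalid_argument("unknown rate unit: " + unit);
}

// Returns seconds. Suffix table is an illustrative assumption.
inline double ParseTime(const std::string& s) {
  std::string unit;
  double v = SplitNumber(s, unit);
  if (unit == "" || unit == "s") return v;
  if (unit == "ms") return v * 1e-3;
  if (unit == "us") return v * 1e-6;
  if (unit == "ns") return v * 1e-9;
  throw std::invalid_argument("unknown time unit: " + unit);
}
```

Rejecting unknown suffixes with an exception, rather than silently treating them as base units, catches typos such as "10Mbs" before a simulation runs with a wrong link speed.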

GTNetS Distributed Simulation
- Split the topology model into several parts
- Each part runs on a separate workstation or a separate CPU in an SMP
- Each simulator has the complete topology picture
  - "Real" nodes and "Ghost" nodes
- Time management and message exchange via the Georgia Tech "Federated Developers Kit"
- Allows larger topologies than a single simulation
- May run faster
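The real/ghost split can be pictured as follows: every simulator instance knows which instance owns each node, fully simulates only the nodes it owns, and turns traffic for a ghost node into a message for the owner. This is a hypothetical sketch (`Federate`, `RemoteMessage`, `Deliver` are illustrative names); the outbox merely stands in for the message exchange and time management that GTNetS delegates to the Federated Developers Kit.

```cpp
#include <cassert>
#include <cstddef>
#include <utility>
#include <vector>

// A packet that must be handed to another simulator instance.
struct RemoteMessage {
  std::size_t destFederate;  // instance that owns the destination node
  std::size_t destNode;
  double timestamp;          // simulation time at which it must be delivered
};

// Hypothetical sketch of one simulator instance in a distributed run.
class Federate {
 public:
  Federate(std::size_t id, std::vector<std::size_t> owner)
      : id_(id), owner_(std::move(owner)) {}

  // A node is "real" here if this instance owns it, otherwise a "ghost".
  bool IsReal(std::size_t node) const {
    assert(node < owner_.size());
    return owner_[node] == id_;
  }

  // Real nodes are handled locally; ghost-node traffic goes to the owner.
  void Deliver(std::size_t node, double t) {
    if (IsReal(node))
      localDeliveries_.push_back(node);
    else
      outbox_.push_back(RemoteMessage{owner_[node], node, t});
  }

  const std::vector<std::size_t>& LocalDeliveries() const { return localDeliveries_; }
  const std::vector<RemoteMessage>& Outbox() const { return outbox_; }

 private:
  std::size_t id_;
  std::vector<std::size_t> owner_;  // complete topology picture: owner per node
  std::vector<std::size_t> localDeliveries_;
  std::vector<RemoteMessage> outbox_;
};
```

Because every instance holds the full owner table, any instance can compute routes across the whole topology even though it only simulates its own part.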

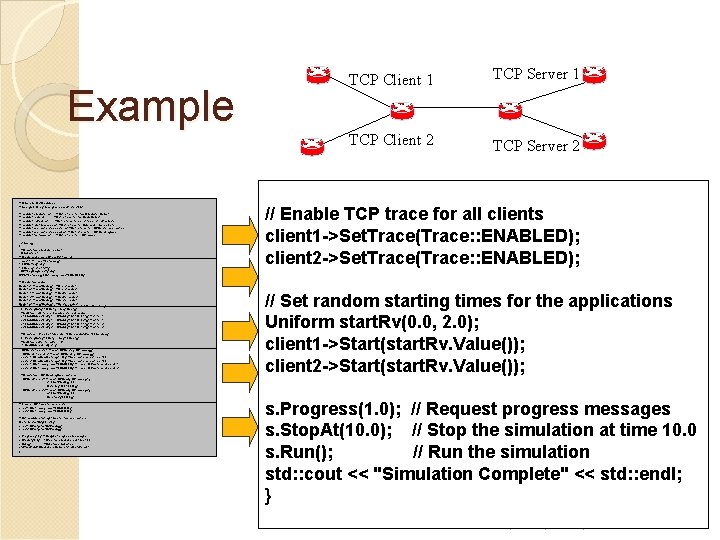

Example

(Figure: the topology built below, with TCP Client 1 and TCP Client 2 connected through two routers to TCP Server 1 and TCP Server 2.)

```cpp
// Simple GTNetS example
// George F. Riley, Georgia Tech, Winter 2002

#include <iostream>

#include "simulator.h"             // Definitions for the Simulator Object
#include "node.h"                  // Definitions for the Node Object
#include "linkp2p.h"               // Definitions for point-to-point link objects
#include "ratetimeparse.h"         // Definitions for Rate and Time objects
#include "application-tcpserver.h" // Definitions for TCPServer application
#include "application-tcpsend.h"   // Definitions for TCP Sending app
#include "tcp-tahoe.h"             // Definitions for TCP Tahoe

int main()
{
  // Create the simulator object
  Simulator s;

  // Create and enable IP packet tracing
  Trace* tr = Trace::Instance();              // Get a pointer to global trace object
  tr->IPDotted(true);                         // Trace IP addresses in dotted notation
  tr->Open("intro1.txt");                     // Create the trace file
  TCP::LogFlagsText(true);                    // Log TCP flags in text mode
  IPV4::Instance()->SetTrace(Trace::ENABLED); // Enable IP tracing all nodes

  // Create the nodes
  Node* c1 = new Node(); // Client node 1
  Node* c2 = new Node(); // Client node 2
  Node* r1 = new Node(); // Router node 1
  Node* r2 = new Node(); // Router node 2
  Node* s1 = new Node(); // Server node 1
  Node* s2 = new Node(); // Server node 2

  // Create a link object template, 100Mb bandwidth, 5ms delay
  Linkp2p l(Rate("100Mb"), Time("5ms"));
  // Add the links to client and server leaf nodes
  c1->AddDuplexLink(r1, l, IPAddr("192.168.0.1")); // c1 to r1
  c2->AddDuplexLink(r1, l, IPAddr("192.168.0.2")); // c2 to r1
  s1->AddDuplexLink(r2, l, IPAddr("192.168.1.1")); // s1 to r2
  s2->AddDuplexLink(r2, l, IPAddr("192.168.1.2")); // s2 to r2

  // Create a link object template, 10Mb bandwidth, 100ms delay
  Linkp2p r(Rate("10Mb"), Time("100ms"));
  // Add the router to router link
  r1->AddDuplexLink(r2, r);

  // Create the TCP Servers
  TCPServer* server1 = new TCPServer(TCPTahoe());
  TCPServer* server2 = new TCPServer(TCPTahoe());
  server1->BindAndListen(s1, 80);    // Application on s1, port 80
  server2->BindAndListen(s2, 80);    // Application on s2, port 80
  server1->SetTrace(Trace::ENABLED); // Trace TCP actions at server1
  server2->SetTrace(Trace::ENABLED); // Trace TCP actions at server2

  // Create the TCP Sending Applications
  TCPSend* client1 = new TCPSend(TCPTahoe(c1), s1->GetIPAddr(), 80,
                                 Uniform(1000, 10000));
  TCPSend* client2 = new TCPSend(TCPTahoe(c2), s2->GetIPAddr(), 80,
                                 Constant(100000));
  // Enable TCP trace for all clients
  client1->SetTrace(Trace::ENABLED);
  client2->SetTrace(Trace::ENABLED);

  // Set random starting times for the applications
  Uniform startRv(0.0, 2.0);
  client1->Start(startRv.Value());
  client2->Start(startRv.Value());

  s.Progress(1.0); // Request progress messages
  s.StopAt(10.0);  // Stop the simulation at time 10.0
  s.Run();         // Run the simulation
  std::cout << "Simulation Complete" << std::endl;
}
```

UNC Chapel Hill, Feb 3, 2006

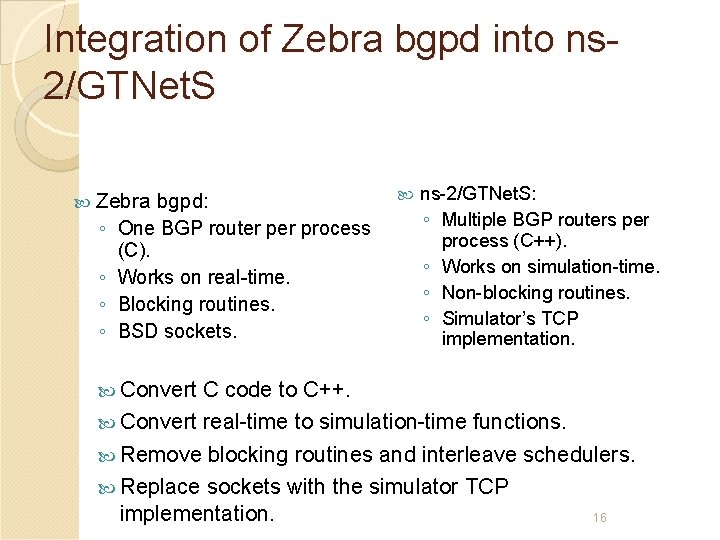

Integration of Zebra bgpd into ns-2/GTNetS
- Zebra bgpd:
  - One BGP router process (C)
  - Works in real time
  - Blocking routines
  - BSD sockets
- ns-2/GTNetS version:
  - Multiple BGP routers per process (C++)
  - Works in simulation time
  - Non-blocking routines
  - Simulator's TCP implementation
- Conversion steps:
  - Convert the C code to C++
  - Convert real-time functions to simulation-time functions
  - Remove blocking routines and interleave the schedulers
  - Replace sockets with the simulator's TCP implementation
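The "remove blocking routines" and "real time to simulation time" steps can be illustrated with a periodic timer. A stand-alone daemon might loop on `sleep()` and then send a KEEPALIVE; inside a simulator that would stall every other router in the process, so the same behavior becomes a callback that reschedules itself in simulation time. This is a hypothetical sketch (`SimClock`, `BgpSpeaker`, and the keepalive intervals are illustrative assumptions, not BGP++ code); it also shows per-object state replacing process-wide globals so several routers can share one process.

```cpp
#include <cassert>
#include <functional>
#include <map>
#include <utility>
#include <vector>

// Simulation-time clock: replaces real-time clock calls and sleep().
class SimClock {
 public:
  double Now() const { return now_; }
  void Schedule(double delay, std::function<void()> fn) {
    assert(delay >= 0.0);
    pending_.emplace(now_ + delay, std::move(fn));
  }
  void RunUntil(double stop) {
    while (!pending_.empty() && pending_.begin()->first <= stop) {
      auto it = pending_.begin();
      now_ = it->first;
      std::function<void()> fn = std::move(it->second);
      pending_.erase(it);
      fn();  // may schedule further events
    }
  }

 private:
  double now_ = 0.0;
  std::multimap<double, std::function<void()>> pending_;  // time-ordered
};

// One of possibly many BGP speakers in the same process; all state is
// per-object instead of global.
class BgpSpeaker {
 public:
  BgpSpeaker(SimClock& clock, double keepaliveInterval)
      : clock_(clock), interval_(keepaliveInterval) {}
  void Start() { clock_.Schedule(interval_, [this] { KeepaliveTimer(); }); }
  const std::vector<double>& SentAt() const { return sentAt_; }

 private:
  // Non-blocking replacement for: while (true) { sleep(interval); send(); }
  void KeepaliveTimer() {
    sentAt_.push_back(clock_.Now());  // stands in for sending a KEEPALIVE
    clock_.Schedule(interval_, [this] { KeepaliveTimer(); });
  }

  SimClock& clock_;
  double interval_;
  std::vector<double> sentAt_;
};
```

Two speakers with 30 s and 20 s intervals, run to 100 s of simulation time, fire at 30/60/90 s and 20/40/60/80/100 s respectively, interleaved by a single scheduler.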

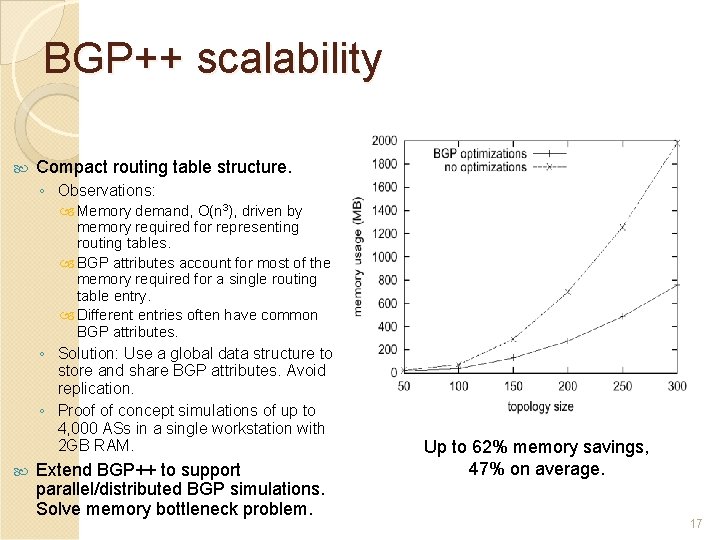

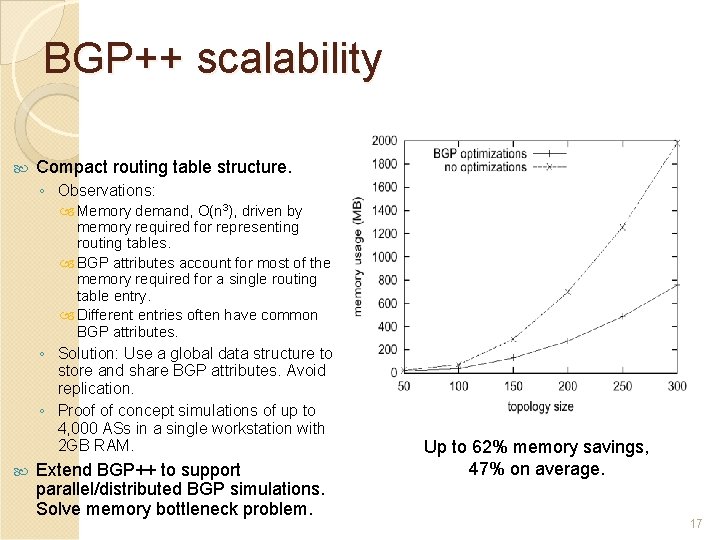

BGP++ scalability
- Compact routing table structure
  - Observations: memory demand, O(n^3), is driven by the memory required to represent routing tables. BGP attributes account for most of the memory required for a single routing table entry, and different entries often have common BGP attributes.
  - Solution: use a global data structure to store and share BGP attributes, avoiding replication.
  - Proof-of-concept simulations of up to 4,000 ASes on a single workstation with 2 GB RAM.
- Extend BGP++ to support parallel/distributed BGP simulations
  - Solves the memory bottleneck problem
- Up to 62% memory savings, 47% on average
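Sharing attributes through a global structure is a form of interning: each distinct attribute set is stored once, and routing-table entries hold a pointer to the shared copy. The sketch below is a hypothetical illustration with a deliberately small attribute set (`PathAttributes`, `AttributePool`, `RouteEntry` are not BGP++ types); a production version would also reference-count entries so unused attribute sets can be freed.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <set>
#include <tuple>
#include <vector>

// Simplified BGP path attributes (real BGP has more fields).
struct PathAttributes {
  std::vector<uint32_t> asPath;
  uint32_t nextHop;
  uint32_t localPref;
  bool operator<(const PathAttributes& o) const {
    return std::tie(asPath, nextHop, localPref) <
           std::tie(o.asPath, o.nextHop, o.localPref);
  }
};

// Global pool: identical attribute sets are stored once and shared by every
// routing-table entry, in every simulated router, that uses them.
class AttributePool {
 public:
  const PathAttributes* Intern(const PathAttributes& a) {
    return &*pool_.insert(a).first;  // returns the existing copy if present
  }
  std::size_t UniqueCount() const { return pool_.size(); }

 private:
  std::set<PathAttributes> pool_;  // std::set elements never move on insert
};

// A routing-table entry stores only a pointer to the shared attributes.
struct RouteEntry {
  uint32_t prefix;
  uint8_t length;
  const PathAttributes* attrs;
};
```

Since most of an entry's size is in its attributes, the per-entry cost drops to a prefix, a length, and one pointer whenever many entries carry the same attributes.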

Other BGP++ features
- BGP++ inherits Zebra's Cisco-like configuration language.
- Develop a tool to automatically generate ns-2/GTNetS configuration from simple user input.
- Develop a tool to automatically partition the topology and generate pdns configuration from an ns-2 configuration, or a distributed GTNetS topology.
  - Model the simulation topology as a weighted graph: node weights reflect expected workload, link weights reflect expected traffic.
  - Graph partitioning problem: find a partition into k parts that minimizes the edge-cut, under the constraint that the sum of the node weights in each part is balanced.
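The two quantities in the partitioning problem are easy to compute for a candidate assignment. The functions below are a hypothetical sketch (`EdgeCut`, `PartWeights`, `WeightedEdge` are illustrative names) that only evaluates a given partition; finding a good one is left to a graph partitioner, for example a multilevel tool such as METIS, which is our assumption since the slide does not name a tool.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

struct WeightedEdge {
  std::size_t u, v;
  double weight;  // expected traffic on the link
};

// Edge-cut: total weight of edges whose endpoints fall in different parts.
// Low cut means little cross-partition (inter-simulator) traffic.
inline double EdgeCut(const std::vector<WeightedEdge>& edges,
                      const std::vector<std::size_t>& part) {
  double cut = 0.0;
  for (const WeightedEdge& e : edges)
    if (part[e.u] != part[e.v]) cut += e.weight;
  return cut;
}

// Sum of node weights (expected workload) in each of the k parts; the
// balance constraint asks these sums to be roughly equal.
inline std::vector<double> PartWeights(const std::vector<double>& nodeWeight,
                                       const std::vector<std::size_t>& part,
                                       std::size_t k) {
  assert(part.size() == nodeWeight.size());
  std::vector<double> w(k, 0.0);
  for (std::size_t n = 0; n < nodeWeight.size(); ++n) {
    assert(part[n] < k);
    w[part[n]] += nodeWeight[n];
  }
  return w;
}
```

For the dumbbell topology used earlier (clients and r1 in one part, r2 and servers in the other), only the router-to-router link is cut, and each part carries half of the workload when all nodes have equal weight.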

Scalability Results - PSC
- Pittsburgh Supercomputer Center
- 128 Systems, 512 CPUs, 64-bit HP Systems
- Topology Size
  - 15,064 Nodes per System
  - 1,928,192 Nodes Total Topology
  - 1,820,672 Total Flows
  - 18,650,757,866 Simulation Events
  - 1,289 Seconds Execution Time
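The reported figures are consistent with one another, and they imply an aggregate event rate. Averaged over the whole run, and assuming all 512 CPUs participate throughout:

```latex
\begin{aligned}
128 \times 15{,}064 &= 1{,}928{,}192\ \text{nodes}\\
\frac{18{,}650{,}757{,}866\ \text{events}}{1{,}289\ \text{s}} &\approx 1.45 \times 10^{7}\ \text{events/s}\\
\frac{1.45 \times 10^{7}\ \text{events/s}}{512\ \text{CPUs}} &\approx 2.8 \times 10^{4}\ \text{events/s per CPU}
\end{aligned}
```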

Questions?