The Extraction Transformation and Load ETL Process The

- Slides: 52

The Extraction Transformation and Load (ETL) Process

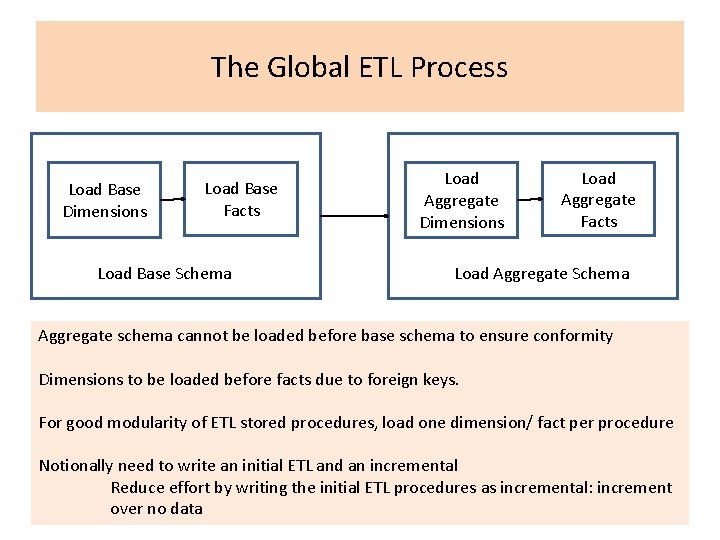

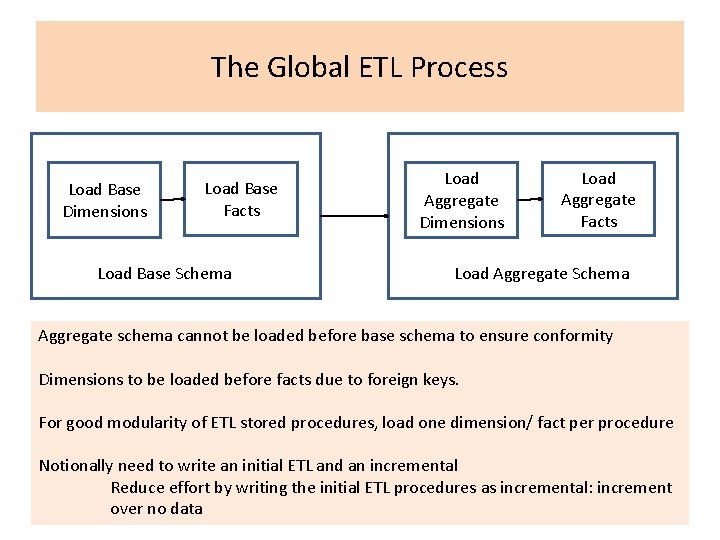

The Global ETL Process Load Base Dimensions Load Base Facts Load Base Schema Load Aggregate Dimensions Load Aggregate Facts Load Aggregate Schema Aggregate schema cannot be loaded before base schema to ensure conformity Dimensions to be loaded before facts due to foreign keys. For good modularity of ETL stored procedures, load one dimension/ fact per procedure Notionally need to write an initial ETL and an incremental Reduce effort by writing the initial ETL procedures as incremental: increment over no data

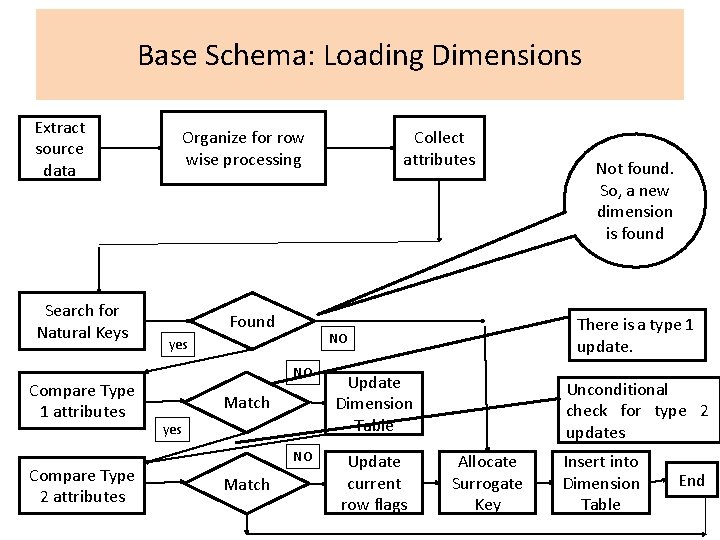

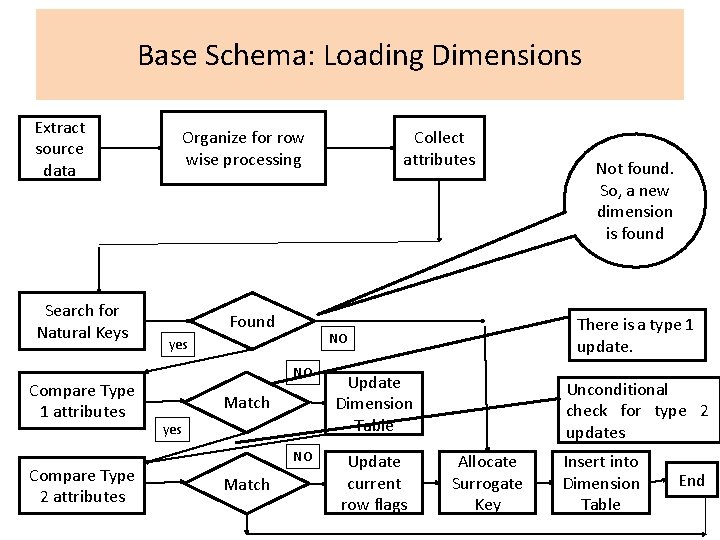

Base Schema: Loading Dimensions Extract source data Search for Natural Keys Compare Type 1 attributes Compare Type 2 attributes Organize for row wise processing Found NO Update Dimension Table NO Update current row flags Match yes Not found. So, a new dimension is found There is a type 1 update. NO yes Match Collect attributes Unconditional check for type 2 updates Allocate Surrogate Key Insert into Dimension Table End

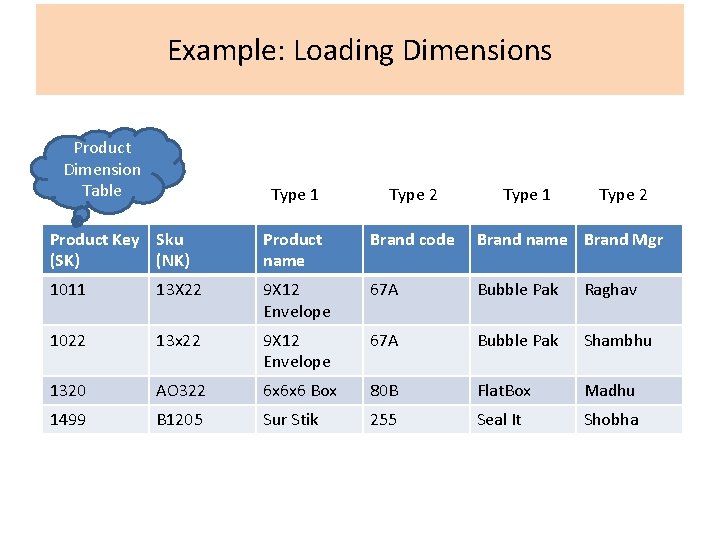

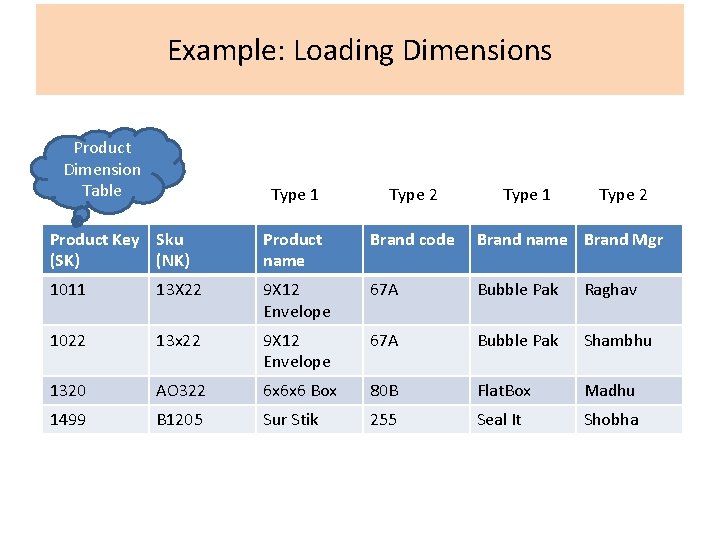

Example: Loading Dimensions Product Dimension Table Type 1 Type 2 Product Key Sku (SK) (NK) Product name Brand code Brand name Brand Mgr 1011 13 X 22 9 X 12 Envelope 67 A Bubble Pak Raghav 1022 13 x 22 9 X 12 Envelope 67 A Bubble Pak Shambhu 1320 AO 322 6 x 6 x 6 Box 80 B Flat. Box Madhu 1499 B 1205 Sur Stik 255 Seal It Shobha

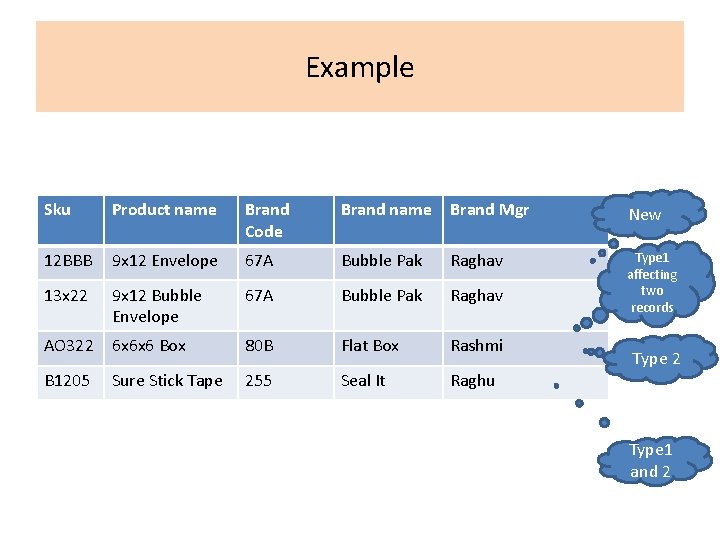

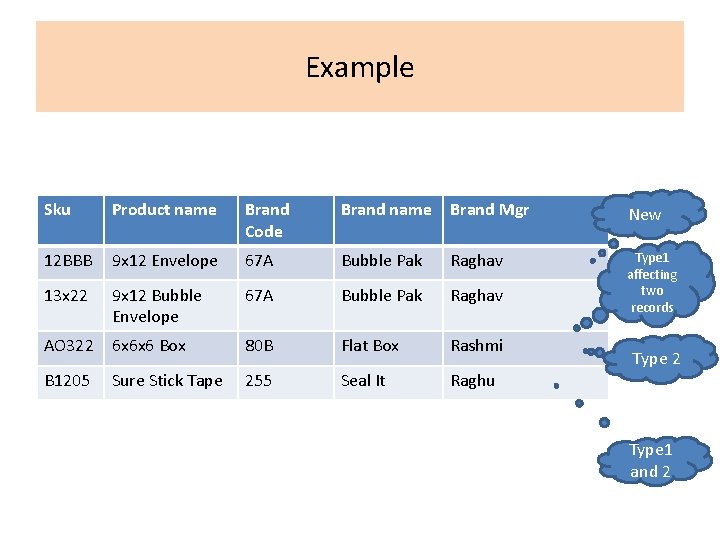

Example Sku Product name Brand Code Brand name Brand Mgr New 12 BBB 9 x 12 Envelope 67 A Bubble Pak Raghav 13 x 22 9 x 12 Bubble Envelope 67 A Bubble Pak Raghav Type 1 affecting two records AO 322 6 x 6 x 6 Box 80 B Flat Box Rashmi B 1205 Sure Stick Tape 255 Seal It Raghu Type 2 Type 1 and 2

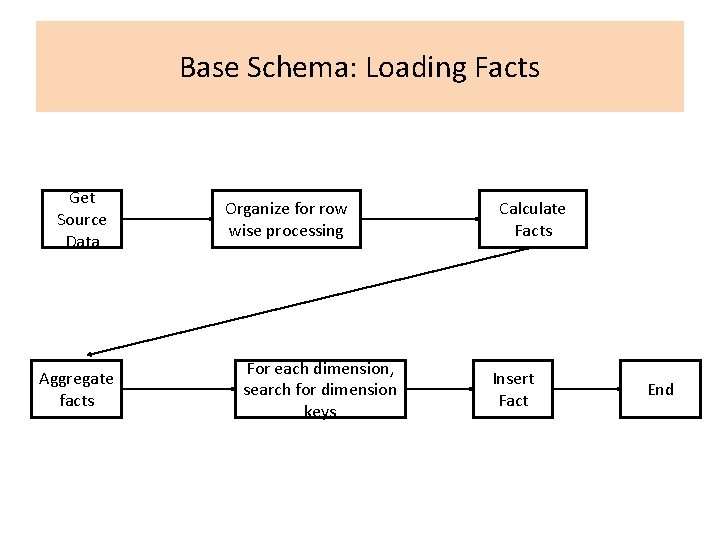

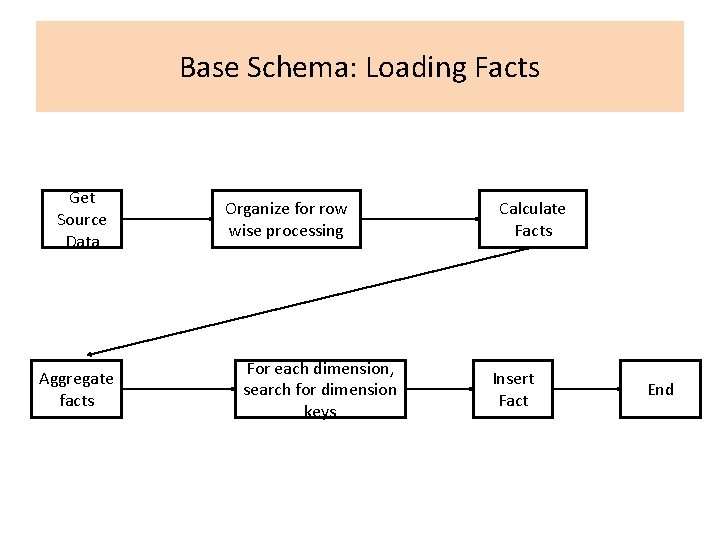

Base Schema: Loading Facts Get Source Data Aggregate facts Organize for row wise processing For each dimension, search for dimension keys Calculate Facts Insert Fact End

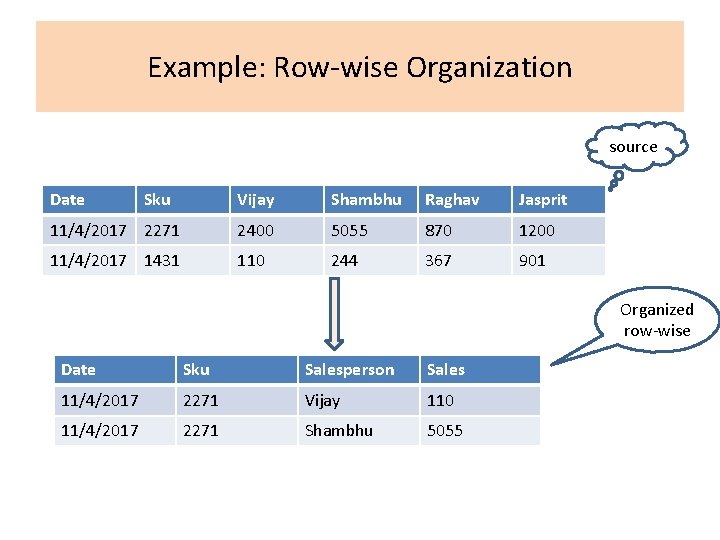

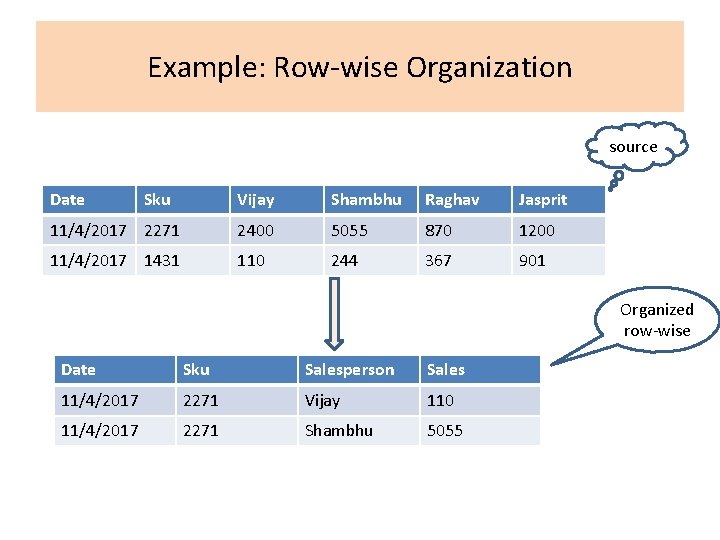

Example: Row-wise Organization source Date Sku Vijay Shambhu Raghav Jasprit 11/4/2017 2271 2400 5055 870 1200 11/4/2017 1431 110 244 367 901 Organized row-wise Date Sku Salesperson Sales 11/4/2017 2271 Vijay 110 11/4/2017 2271 Shambhu 5055

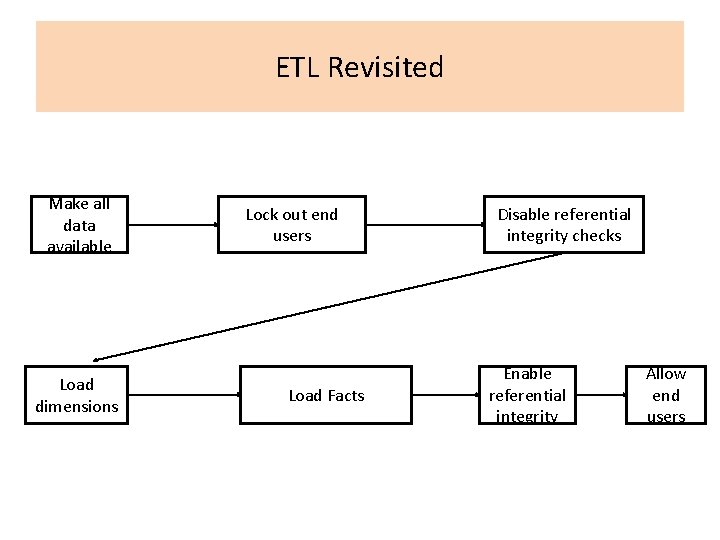

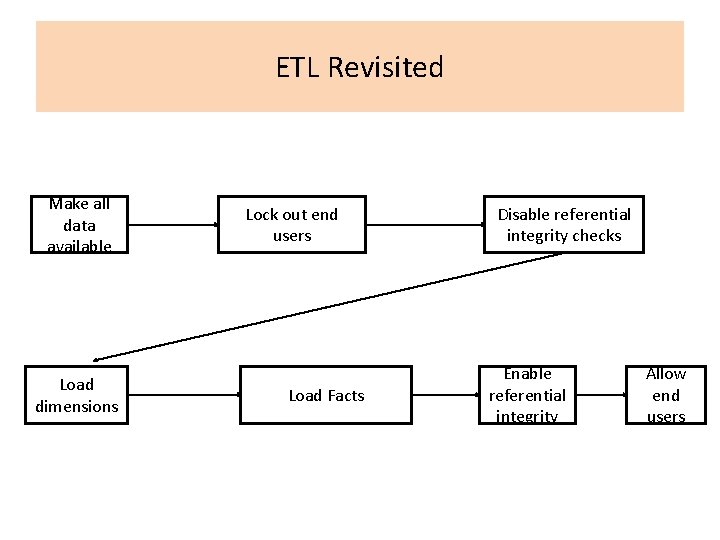

ETL Revisited Make all data available Load dimensions Lock out end users Load Facts Disable referential integrity checks Enable referential integrity Allow end users

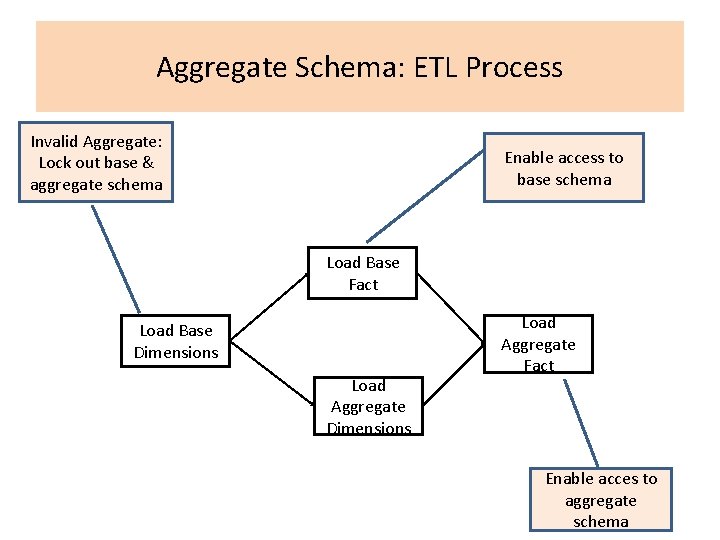

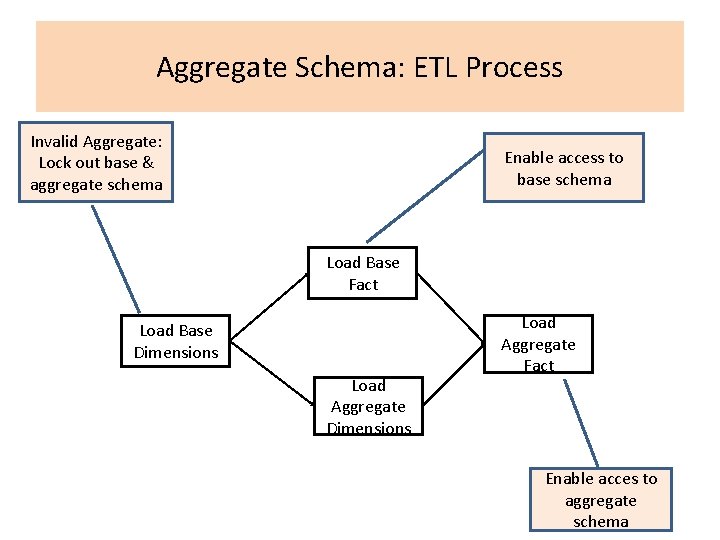

Aggregate Schema: ETL Process Invalid Aggregate: Lock out base & aggregate schema Enable access to base schema Load Base Fact Load Base Dimensions Load Aggregate Fact Enable acces to aggregate schema

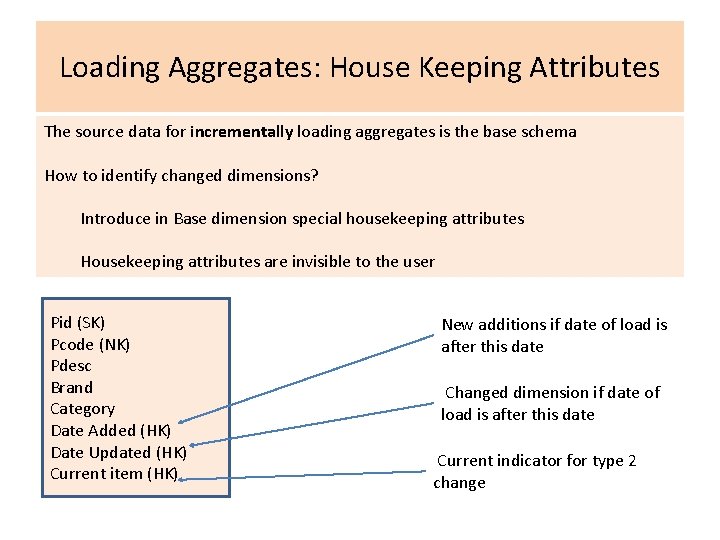

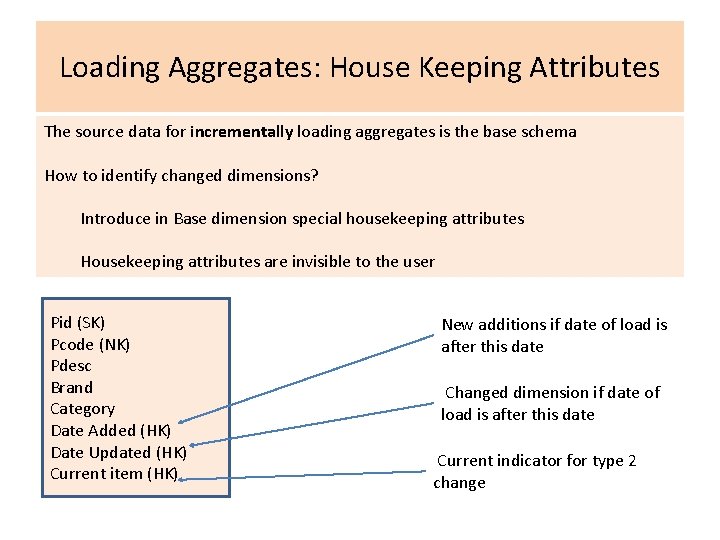

Loading Aggregates: House Keeping Attributes The source data for incrementally loading aggregates is the base schema How to identify changed dimensions? Introduce in Base dimension special housekeeping attributes Housekeeping attributes are invisible to the user Pid (SK) Pcode (NK) Pdesc Brand Category Date Added (HK) Date Updated (HK) Current item (HK) New additions if date of load is after this date Changed dimension if date of load is after this date Current indicator for type 2 change

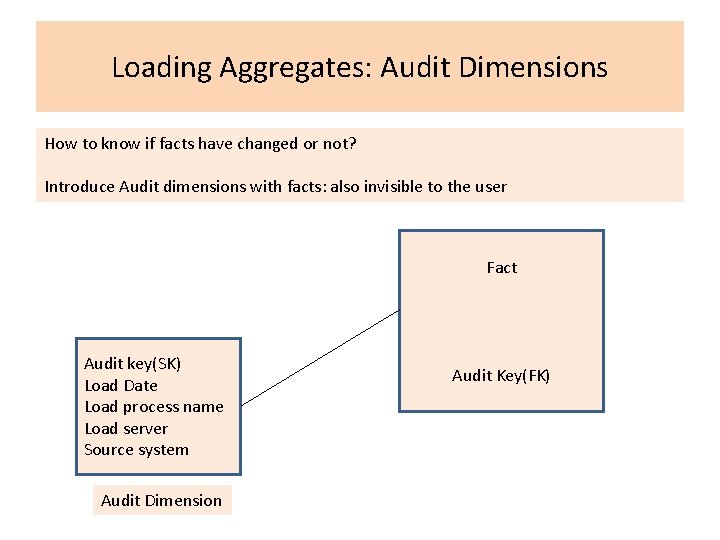

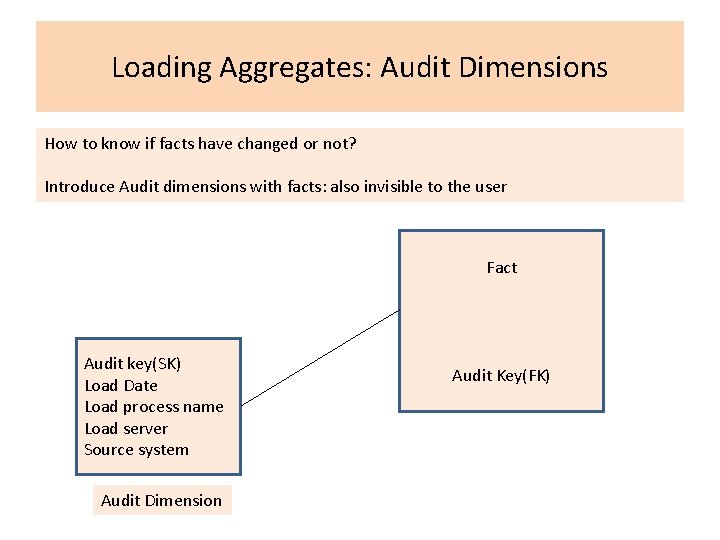

Loading Aggregates: Audit Dimensions How to know if facts have changed or not? Introduce Audit dimensions with facts: also invisible to the user Fact Audit key(SK) Load Date Load process name Load server Source system Audit Dimension Audit Key(FK)

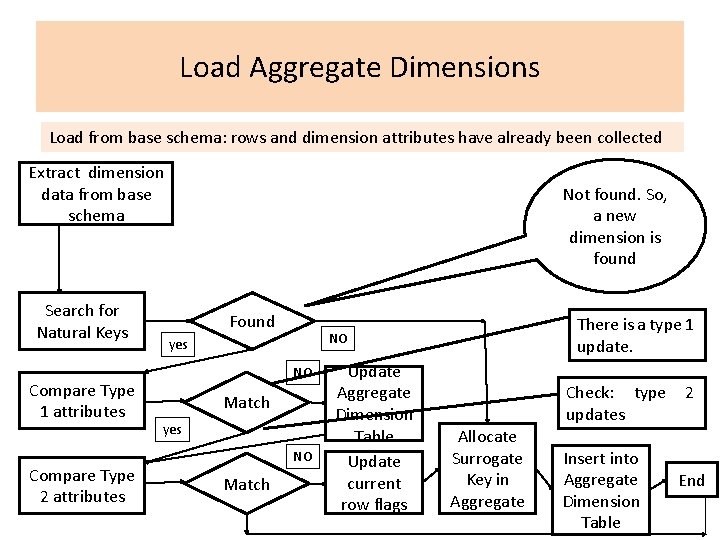

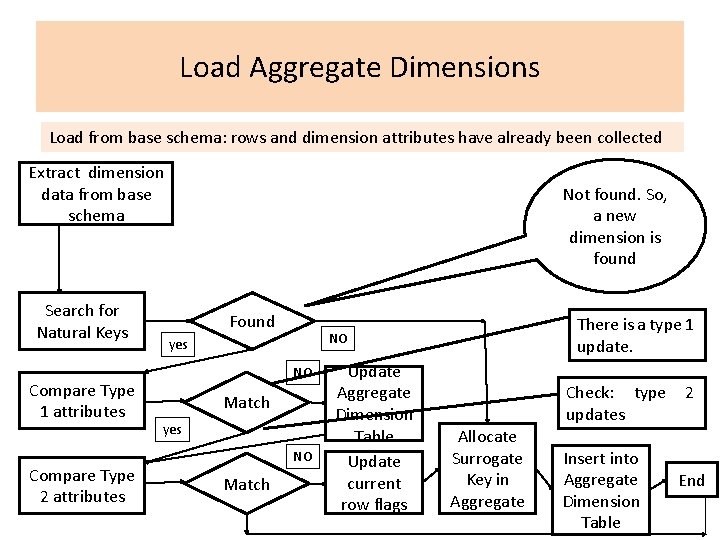

Load Aggregate Dimensions Load from base schema: rows and dimension attributes have already been collected Extract dimension data from base schema Search for Natural Keys Compare Type 1 attributes Compare Type 2 attributes Not found. So, a new dimension is found Found yes NO Match There is a type 1 update. NO Update Aggregate Dimension Table Update current row flags Check: type updates Allocate Surrogate Key in Aggregate Insert into Aggregate Dimension Table 2 End

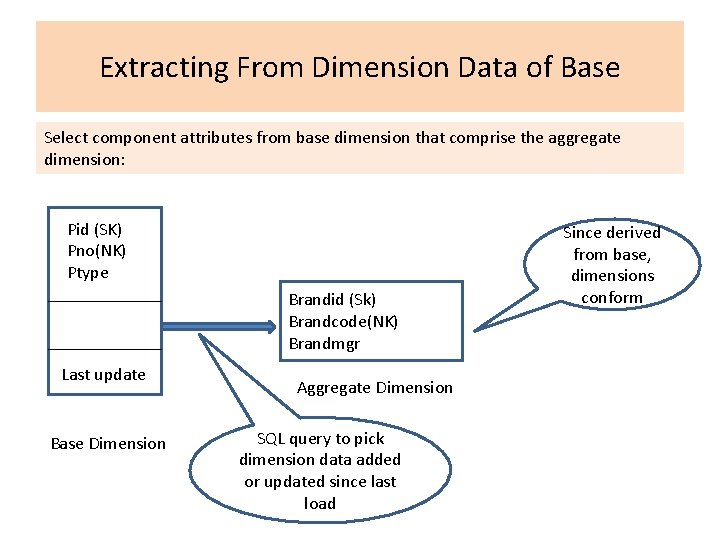

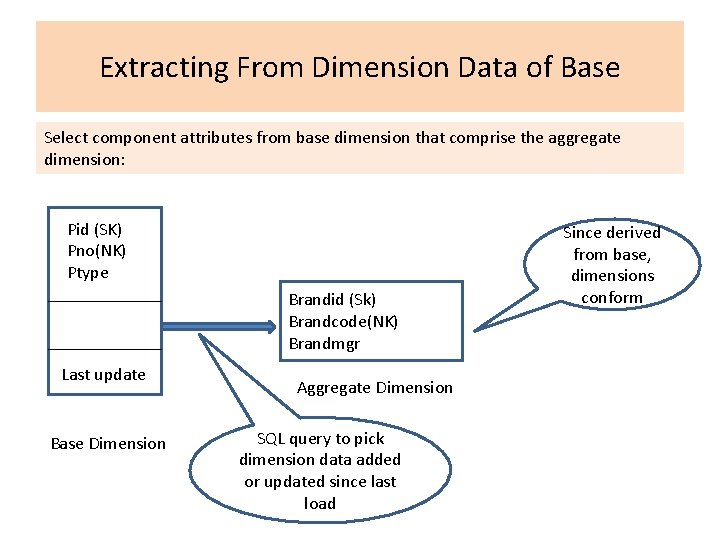

Extracting From Dimension Data of Base Select component attributes from base dimension that comprise the aggregate dimension: Pid (SK) Pno(NK) Ptype Brandid (Sk) Brandcode(NK) Brandmgr Last update Base Dimension Aggregate Dimension SQL query to pick dimension data added or updated since last load Since derived from base, dimensions conform

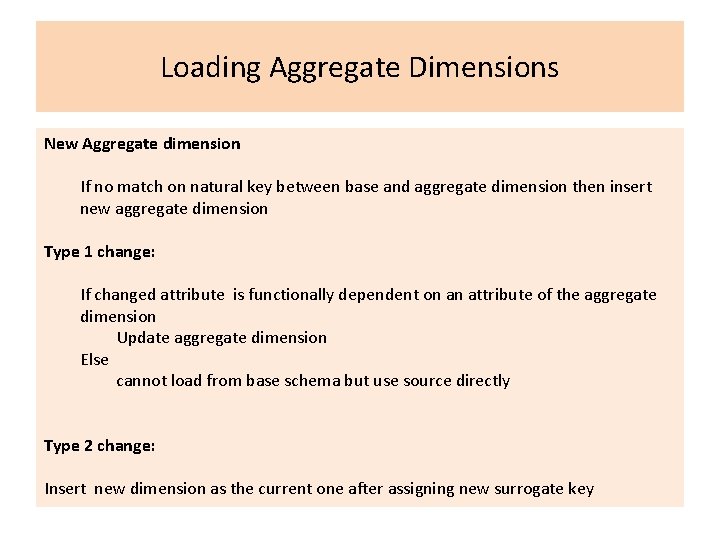

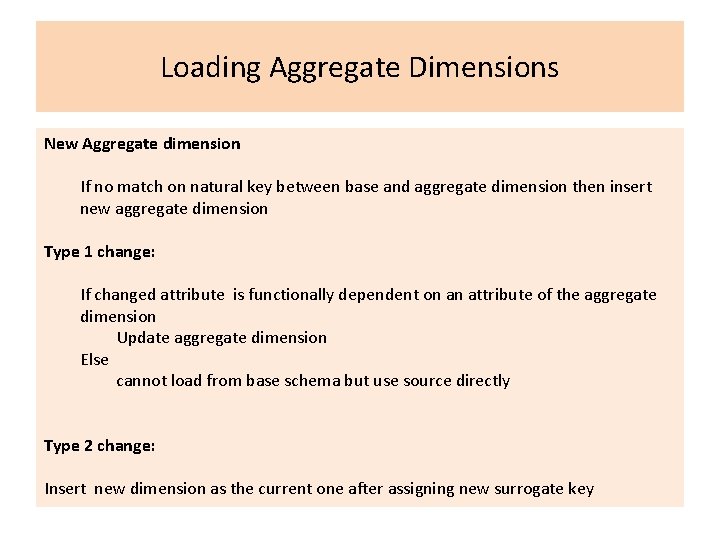

Loading Aggregate Dimensions New Aggregate dimension If no match on natural key between base and aggregate dimension then insert new aggregate dimension Type 1 change: If changed attribute is functionally dependent on an attribute of the aggregate dimension Update aggregate dimension Else cannot load from base schema but use source directly Type 2 change: Insert new dimension as the current one after assigning new surrogate key

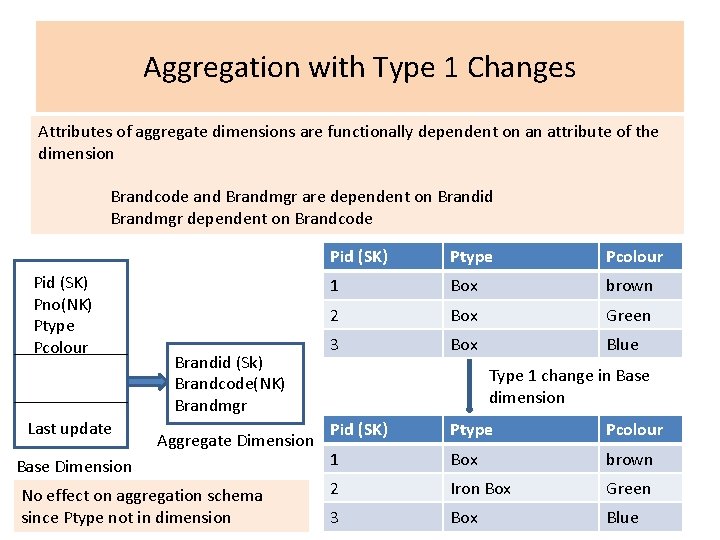

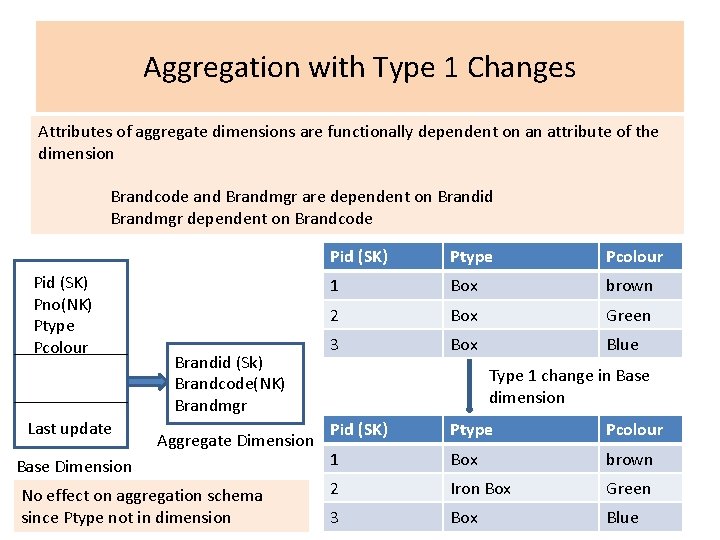

Aggregation with Type 1 Changes Attributes of aggregate dimensions are functionally dependent on an attribute of the dimension Brandcode and Brandmgr are dependent on Brandid Brandmgr dependent on Brandcode Pid (SK) Pno(NK) Ptype Pcolour Last update Brandid (Sk) Brandcode(NK) Brandmgr Aggregate Dimension Base Dimension No effect on aggregation schema since Ptype not in dimension Pid (SK) Ptype Pcolour 1 Box brown 2 Box Green 3 Box Blue Type 1 change in Base dimension Pid (SK) Ptype Pcolour 1 Box brown 2 Iron Box Green 3 Box Blue

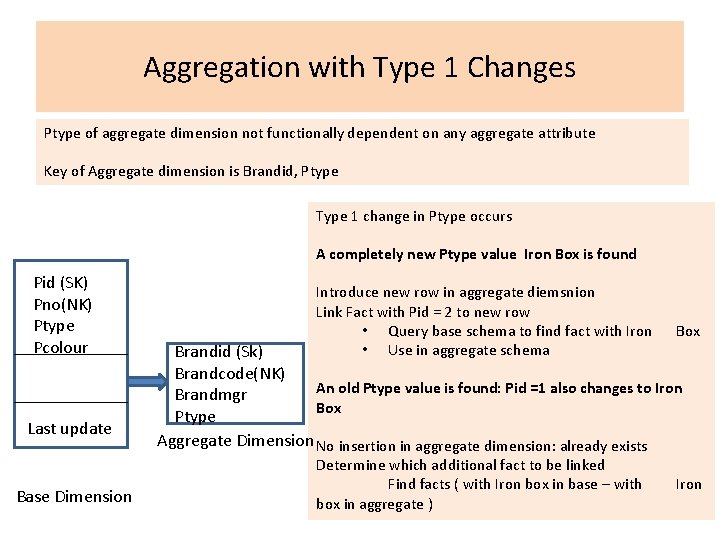

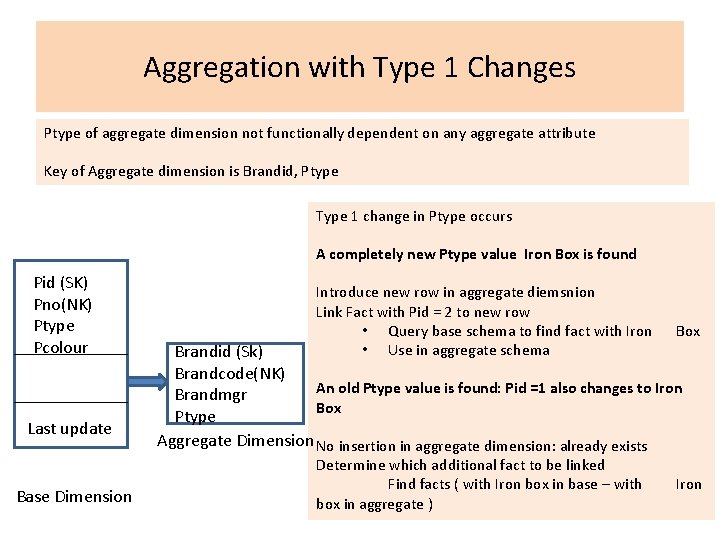

Aggregation with Type 1 Changes Ptype of aggregate dimension not functionally dependent on any aggregate attribute Key of Aggregate dimension is Brandid, Ptype Type 1 change in Ptype occurs A completely new Ptype value Iron Box is found Pid (SK) Pno(NK) Ptype Pcolour Last update Base Dimension Introduce new row in aggregate diemsnion Link Fact with Pid = 2 to new row • Query base schema to find fact with Iron • Use in aggregate schema Box Brandid (Sk) Brandcode(NK) An old Ptype value is found: Pid =1 also changes to Iron Brandmgr Box Ptype Aggregate Dimension No insertion in aggregate dimension: already exists Determine which additional fact to be linked Find facts ( with Iron box in base – with box in aggregate ) Iron

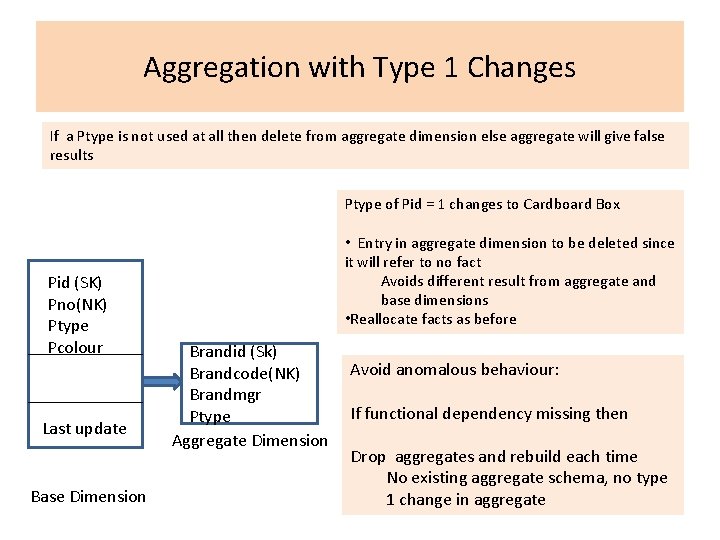

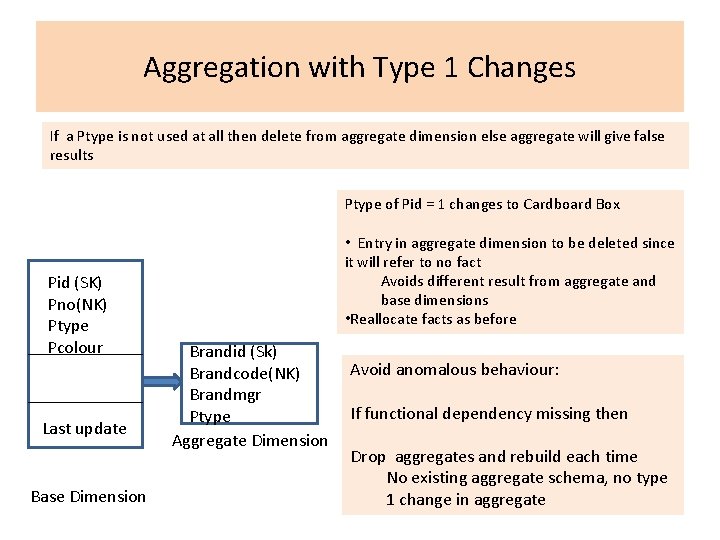

Aggregation with Type 1 Changes If a Ptype is not used at all then delete from aggregate dimension else aggregate will give false results Ptype of Pid = 1 changes to Cardboard Box Pid (SK) Pno(NK) Ptype Pcolour Last update Base Dimension • Entry in aggregate dimension to be deleted since it will refer to no fact Avoids different result from aggregate and base dimensions • Reallocate facts as before Brandid (Sk) Brandcode(NK) Brandmgr Ptype Aggregate Dimension Avoid anomalous behaviour: If functional dependency missing then Drop aggregates and rebuild each time No existing aggregate schema, no type 1 change in aggregate

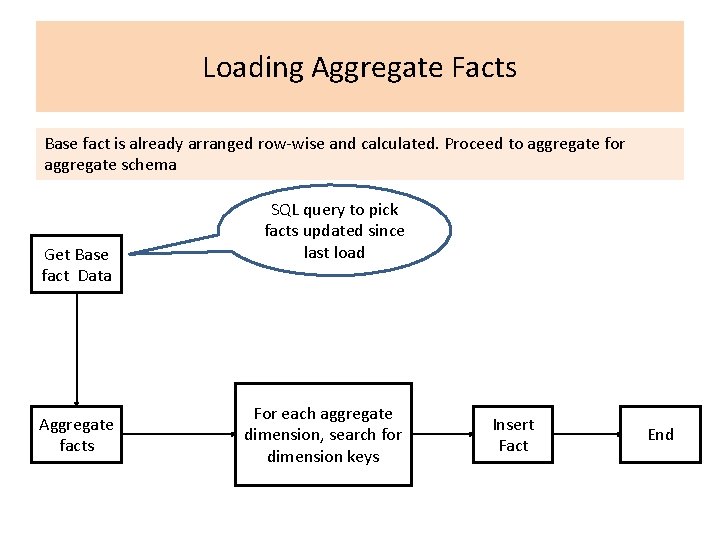

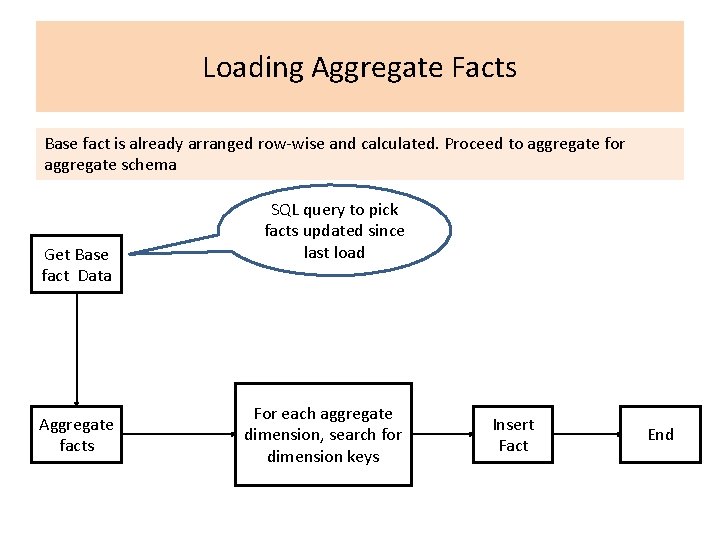

Loading Aggregate Facts Base fact is already arranged row-wise and calculated. Proceed to aggregate for aggregate schema Get Base fact Data Aggregate facts SQL query to pick facts updated since last load For each aggregate dimension, search for dimension keys Insert Fact End

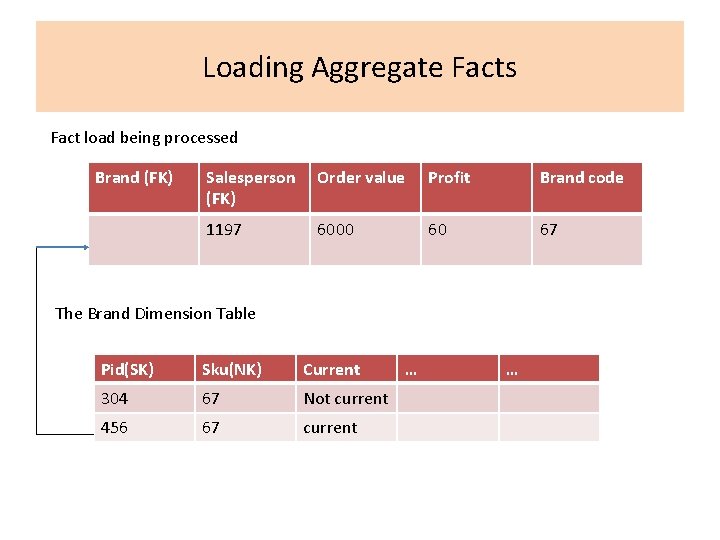

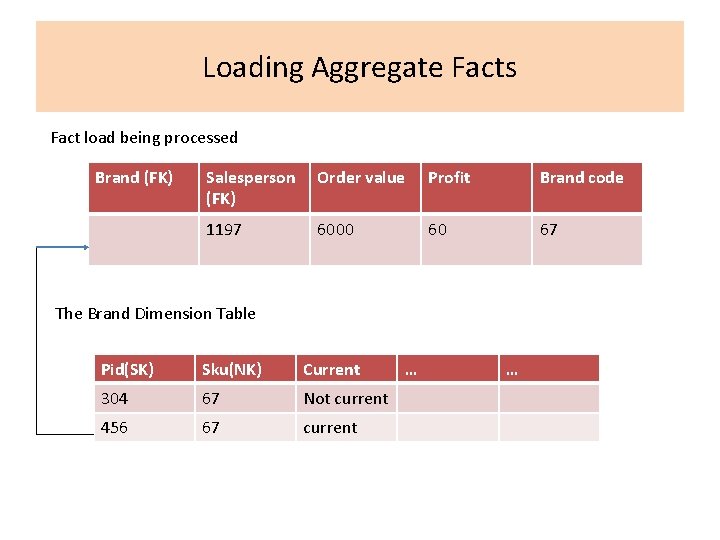

Loading Aggregate Facts Fact load being processed Brand (FK) Salesperson (FK) Order value Profit Brand code 1197 6000 60 67 The Brand Dimension Table Pid(SK) Sku(NK) Current 304 67 Not current 456 67 current … …

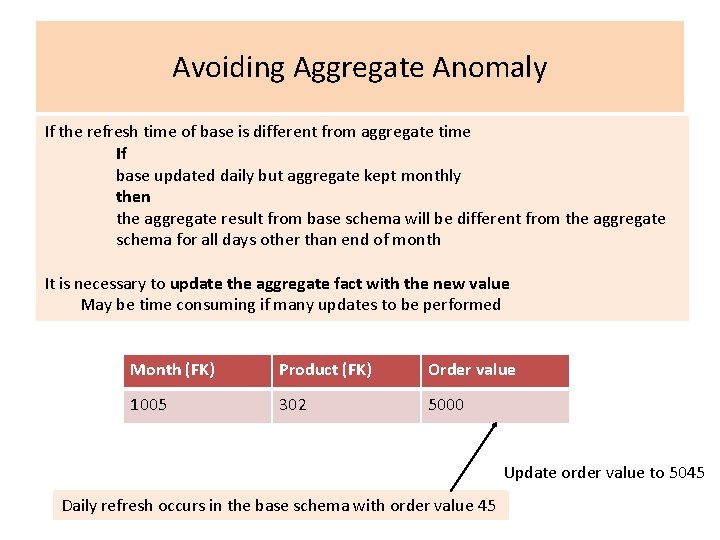

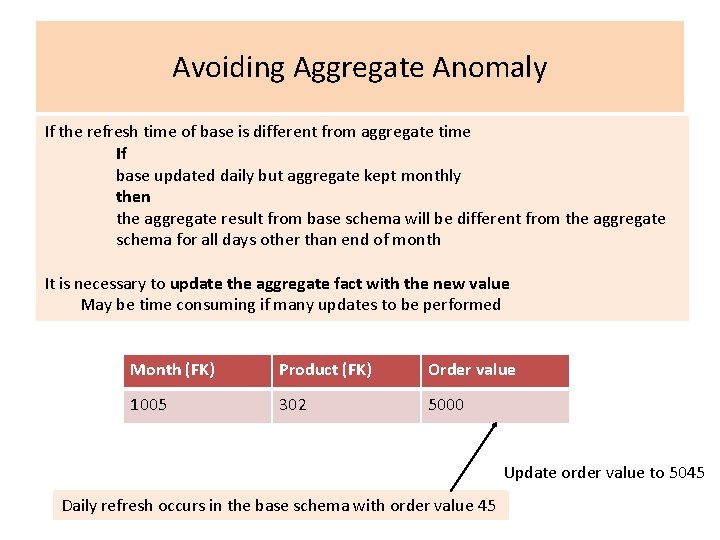

Avoiding Aggregate Anomaly If the refresh time of base is different from aggregate time If base updated daily but aggregate kept monthly then the aggregate result from base schema will be different from the aggregate schema for all days other than end of month It is necessary to update the aggregate fact with the new value May be time consuming if many updates to be performed Month (FK) Product (FK) Order value 1005 302 5000 Update order value to 5045 Daily refresh occurs in the base schema with order value 45

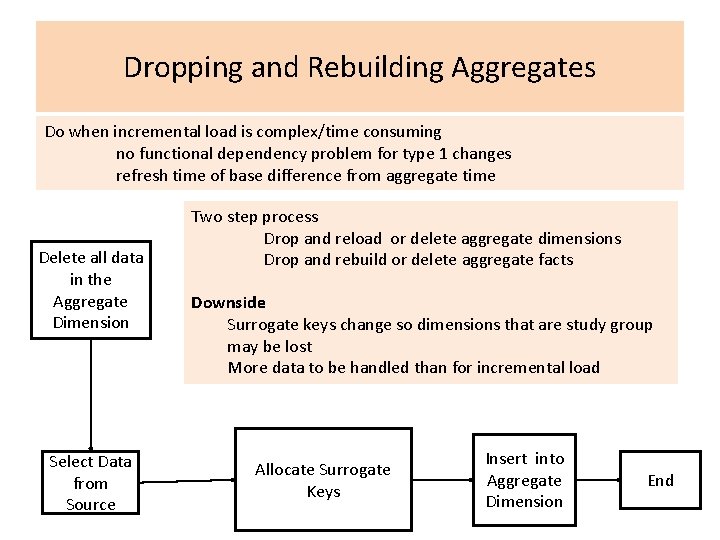

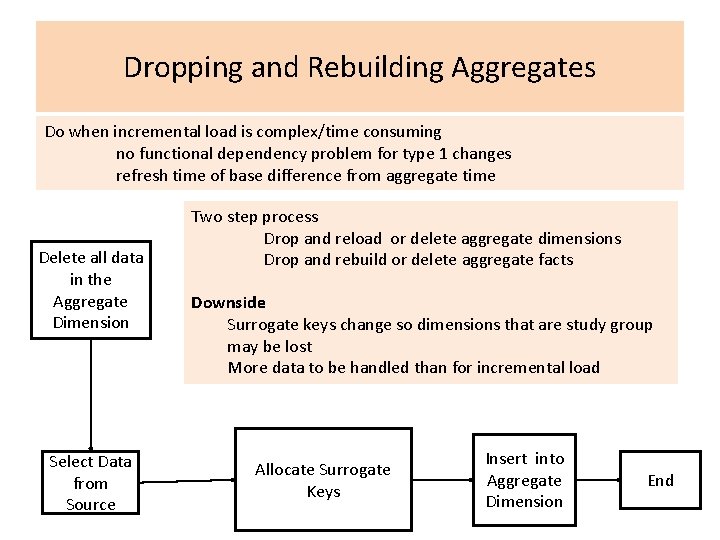

Dropping and Rebuilding Aggregates Do when incremental load is complex/time consuming no functional dependency problem for type 1 changes refresh time of base difference from aggregate time Delete all data in the Aggregate Dimension Select Data from Source Two step process Drop and reload or delete aggregate dimensions Drop and rebuild or delete aggregate facts Downside Surrogate keys change so dimensions that are study group may be lost More data to be handled than for incremental load Allocate Surrogate Keys Insert into Aggregate Dimension End

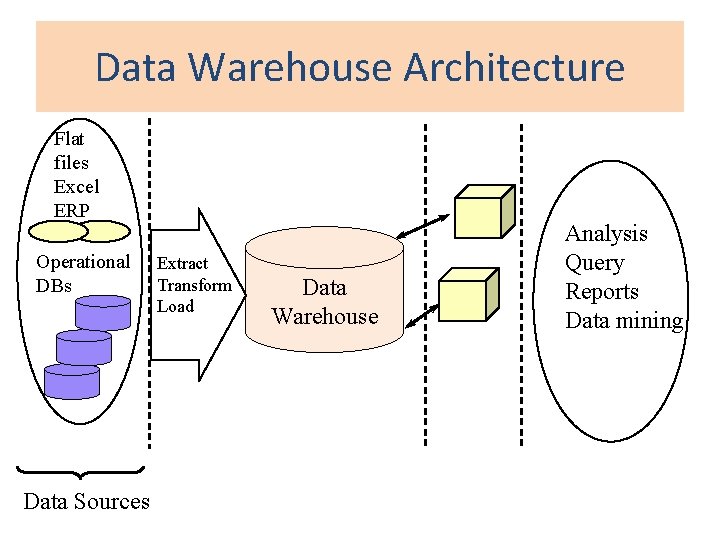

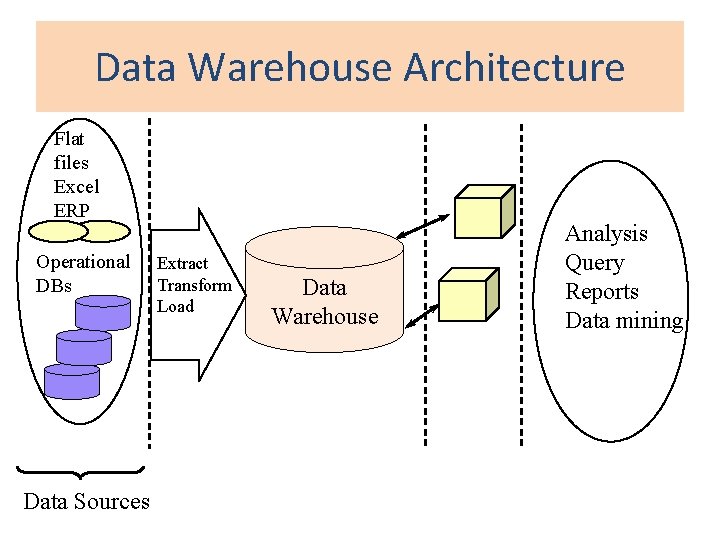

Data Warehouse Architecture Flat files Excel ERP Operational DBs Data Sources Extract Transform Load Data Warehouse Analysis Query Reports Data mining

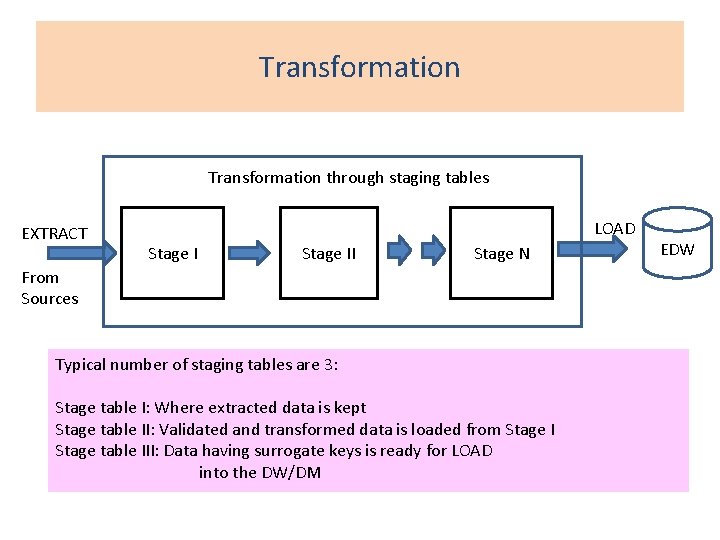

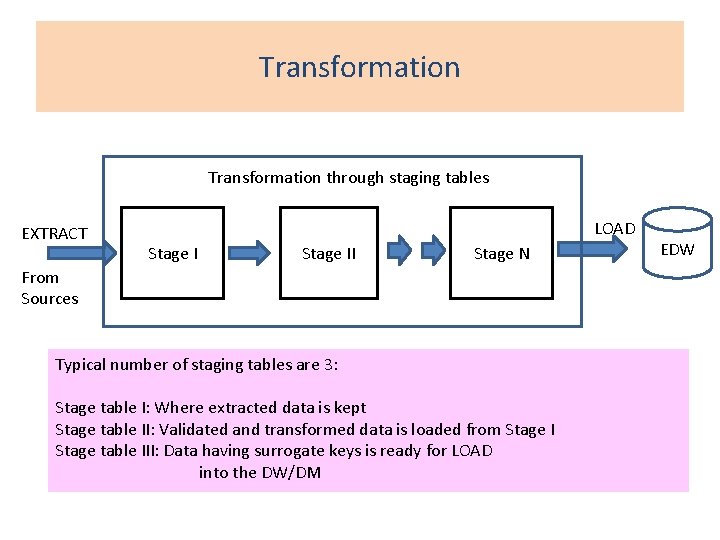

Transformation through staging tables EXTRACT LOAD Stage II Stage N From Sources Typical number of staging tables are 3: Stage table I: Where extracted data is kept Stage table II: Validated and transformed data is loaded from Stage I Stage table III: Data having surrogate keys is ready for LOAD into the DW/DM EDW

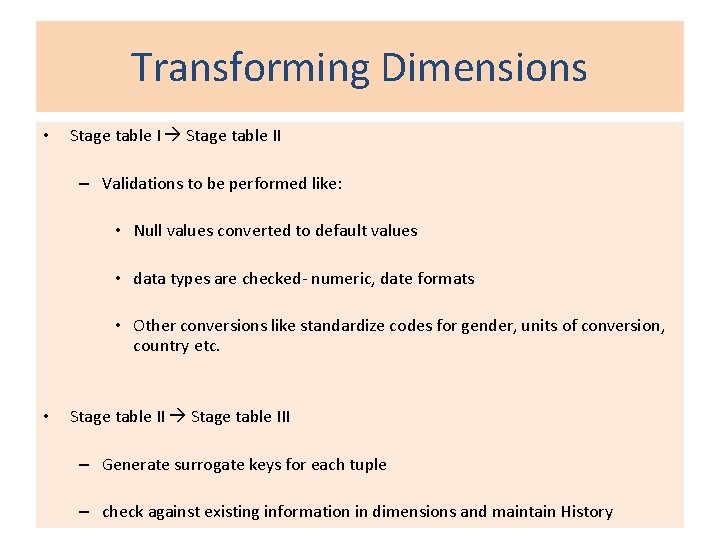

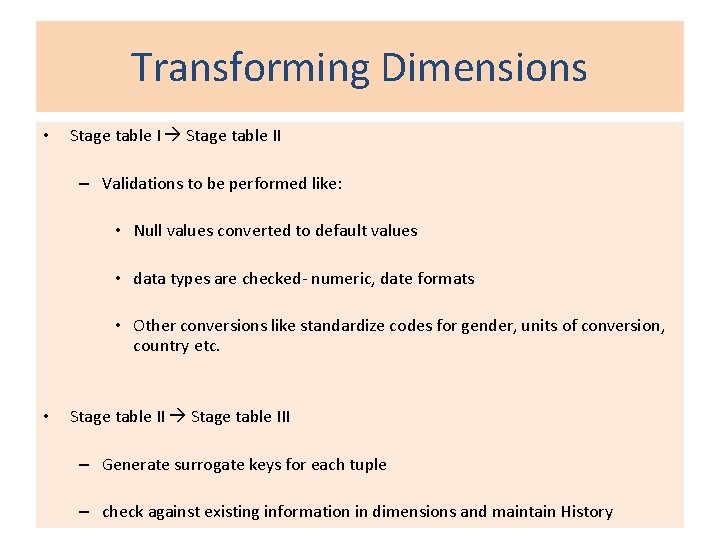

Transforming Dimensions • Stage table II – Validations to be performed like: • Null values converted to default values • data types are checked- numeric, date formats • Other conversions like standardize codes for gender, units of conversion, country etc. • Stage table III – Generate surrogate keys for each tuple – check against existing information in dimensions and maintain History

Transforming Facts • Stage table II – Validations (same like with dimensions) • Stage table III – Generate surrogate keys for each tuple – Handle Null values – Store History

Maintaining History for Dimensions The key does not change Changes in other attributes may occur with low frequency or rapidly Changes in source systems overwrite old values Overwriting in dimension tables is not always desirable product category changes in the source analysis under the old and the new category is still required

Slowly Changing Dimension Tables • • Type I Change (overwrite) Type II Change (new record) Type III Change (new attribute) Hybrid Approach – Predictable changes with multiple version overlays – Unpredictable changes with single version overlays

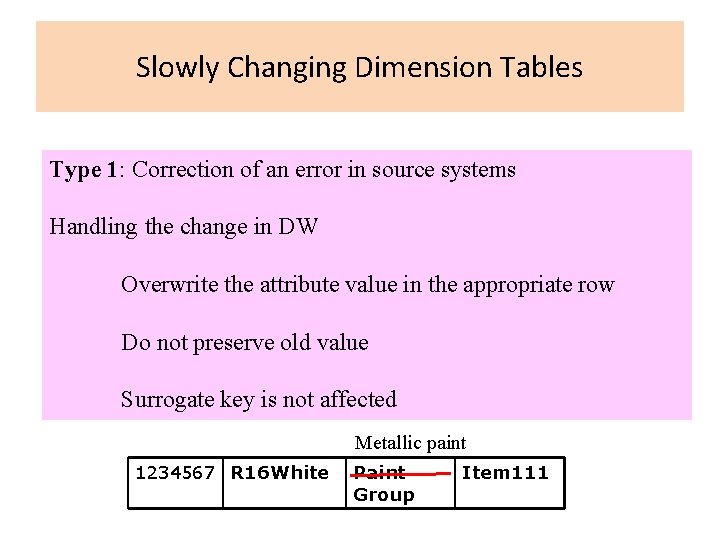

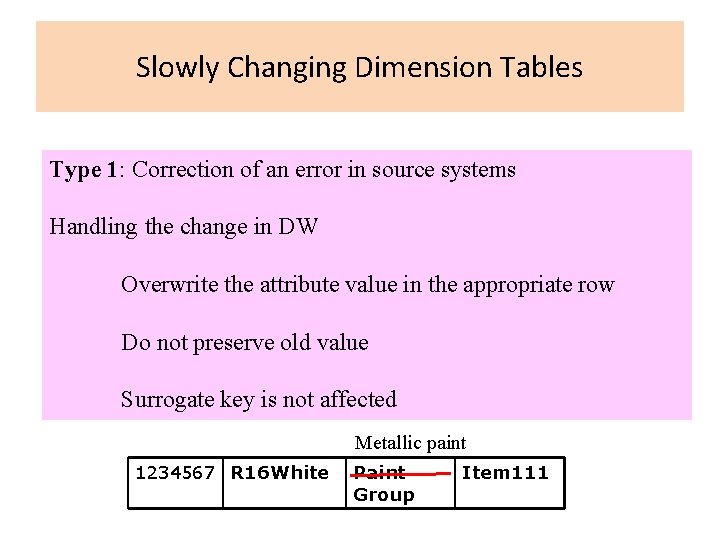

Slowly Changing Dimension Tables Type 1: Correction of an error in source systems Handling the change in DW Overwrite the attribute value in the appropriate row Do not preserve old value Surrogate key is not affected Metallic paint 1234567 R 16 White Paint Group Item 111

Slowly Changing Dimension Tables Type I ¡ Fast & Easy to implement ¡ Attribute value always reflect the latest assignment ¡ No history of prior attribute values ¡ In DW environment, can we afford to do that? ¡ NO!!!

Slowly Changing Dimension Tables Type 2: A true change scholarship earned: there is a change to yes from no in the source system Decision: Analyze performance of scholarship holders and others need both old status and new status Handling in DW Introduce another row with new data Add an ’effective from date’ attribute No changes in original row New surrogate key for the row Cannot be implemented without the help of SKs!!

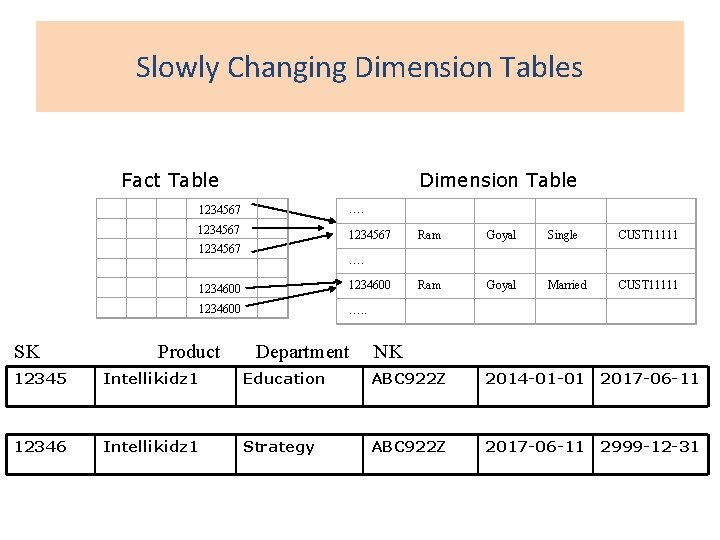

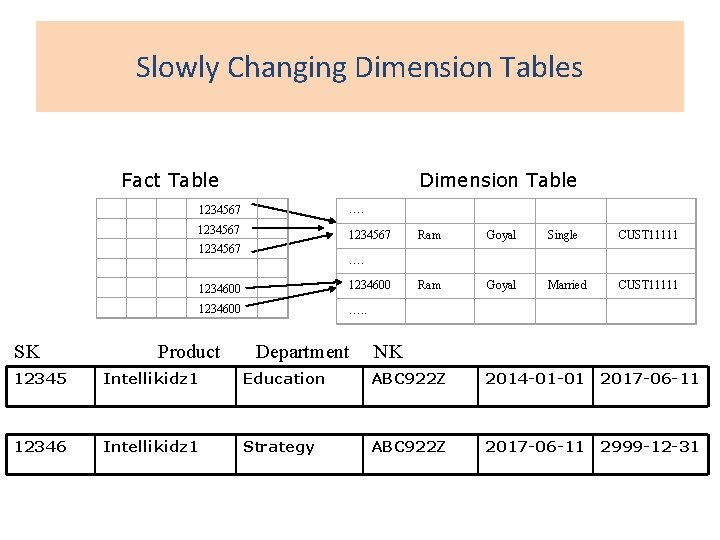

Slowly Changing Dimension Tables Fact Table Dimension Table 1234567 …. 1234567 Ram Goyal Single CUST 11111 1234567 …. 1234600 Ram Goyal Married CUST 11111 1234600 …. . SK Product Department NK 12345 Intellikidz 1 Education ABC 922 Z 2014 -01 -01 2017 -06 -11 12346 Intellikidz 1 Strategy ABC 922 Z 2017 -06 -11 2999 -12 -31

Slowly Changing Dimension Tables Type II Change: Advantages ¡ Automatically partitions history in the fact table ¡ Customer profile is easily differentiated ¡ Tracks as many dimension changes as required ¡ No need to rebuild aggregates

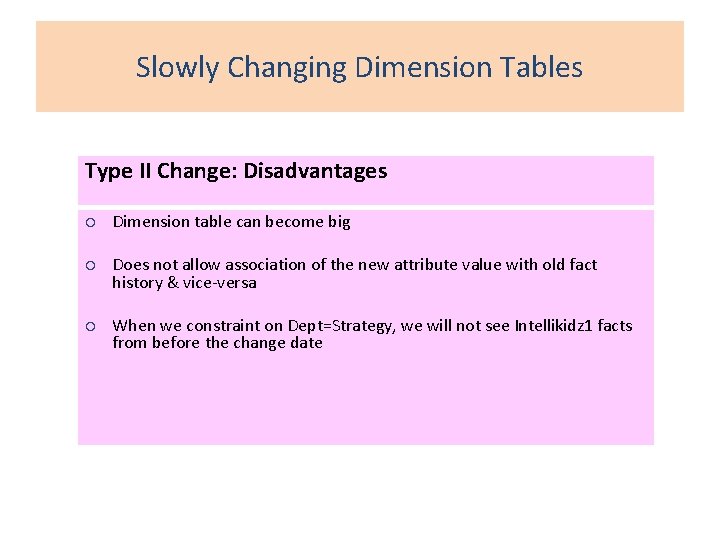

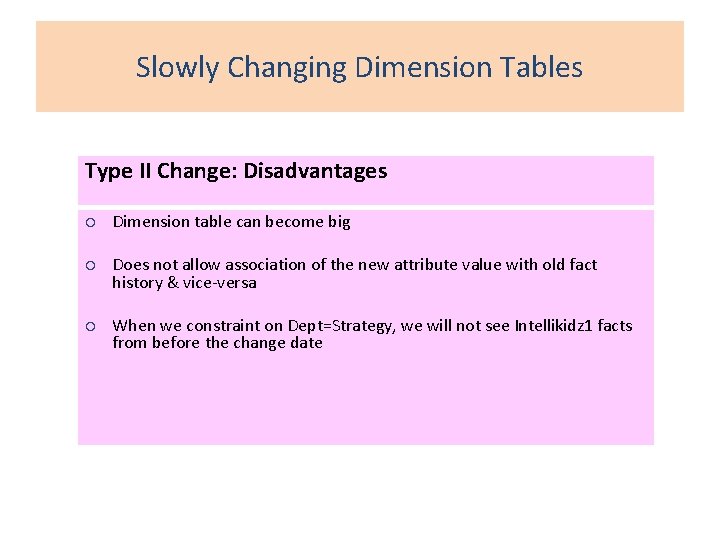

Slowly Changing Dimension Tables Type II Change: Disadvantages ¡ Dimension table can become big ¡ Does not allow association of the new attribute value with old fact history & vice-versa ¡ When we constraint on Dept=Strategy, we will not see Intellikidz 1 facts from before the change date

Slowly Changing Dimension Tables Type 3: Soft tentative change, ‘what if’ change What if we change a salesperson from one territory to another Change territory temporarily Analyze performance in both territories if result encouraging then change territory Handling in DW Add ‘old’ attribute, ‘effective date’ attribute in dimension table Least Common Change Copy current attribute value in old attribute Introduce new value in current attribute Change queries to use old and new attributes

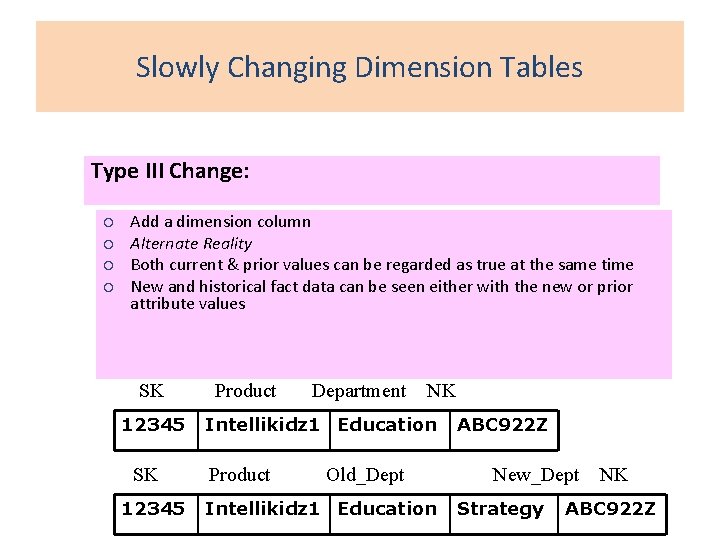

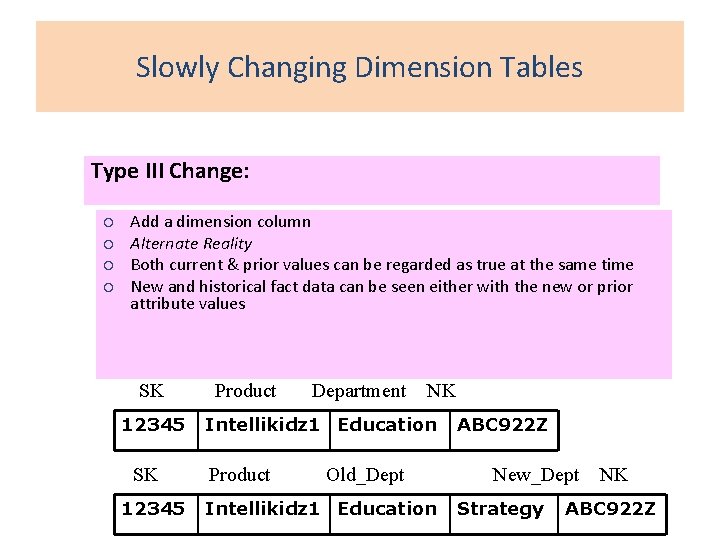

Slowly Changing Dimension Tables Type III Change: ¡ ¡ Add a dimension column Alternate Reality Both current & prior values can be regarded as true at the same time New and historical fact data can be seen either with the new or prior attribute values SK Product Department 12345 NK Intellikidz 1 Education SK Product Old_Dept 12345 Intellikidz 1 Education ABC 922 Z New_Dept NK Strategy ABC 922 Z

Slowly Changing Dimension Tables Type III Change: Problems ¡ ¡ Good for handling predictable changes Can lead to lot of wastage of space Myriad of unpredictable changes Cannot track the impact of numerous intermediate attribute values

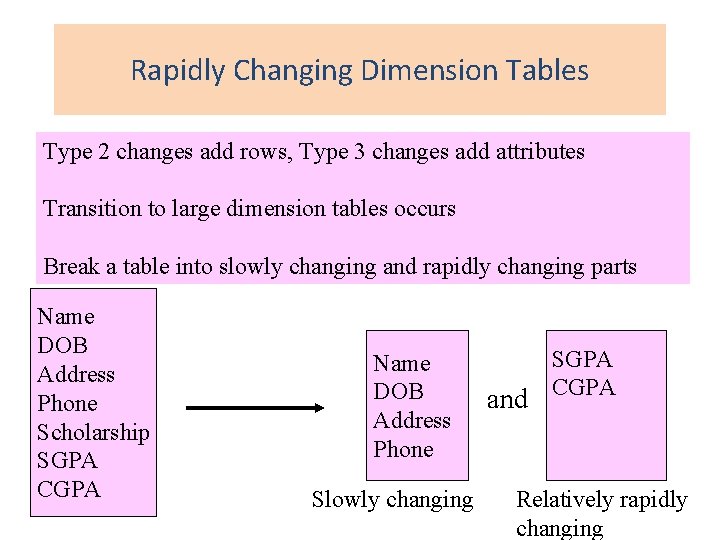

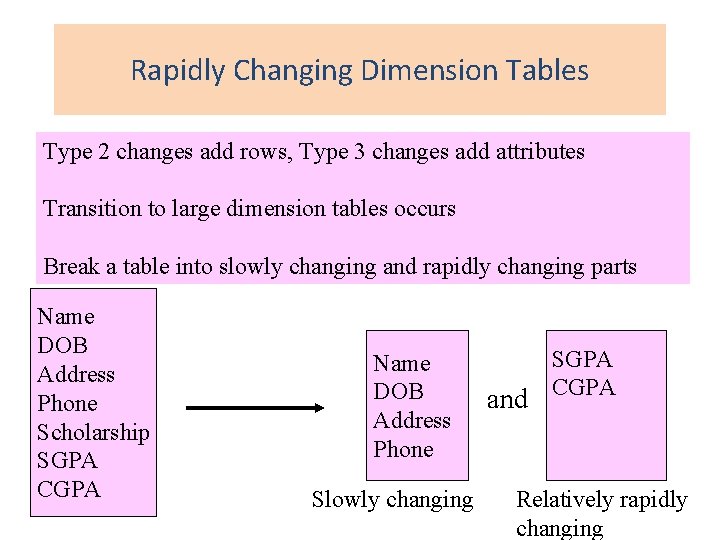

Rapidly Changing Dimension Tables Type 2 changes add rows, Type 3 changes add attributes Transition to large dimension tables occurs Break a table into slowly changing and rapidly changing parts Name DOB Address Phone Scholarship SGPA CGPA Name DOB Address Phone Slowly changing and SGPA CGPA Relatively rapidly changing

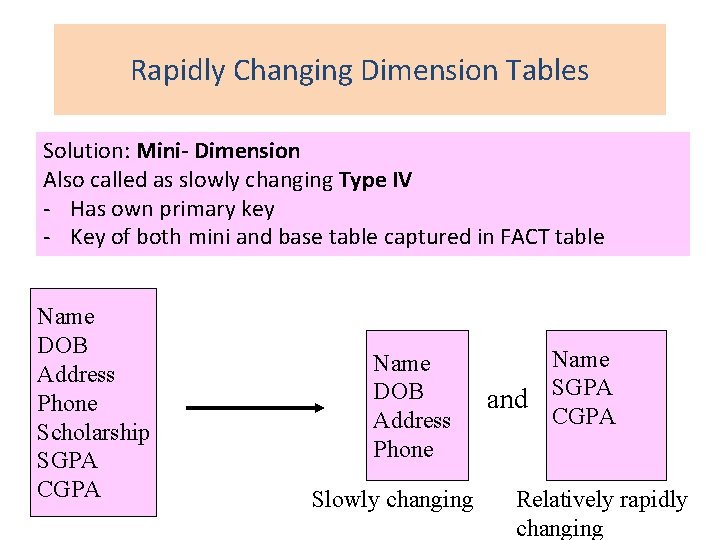

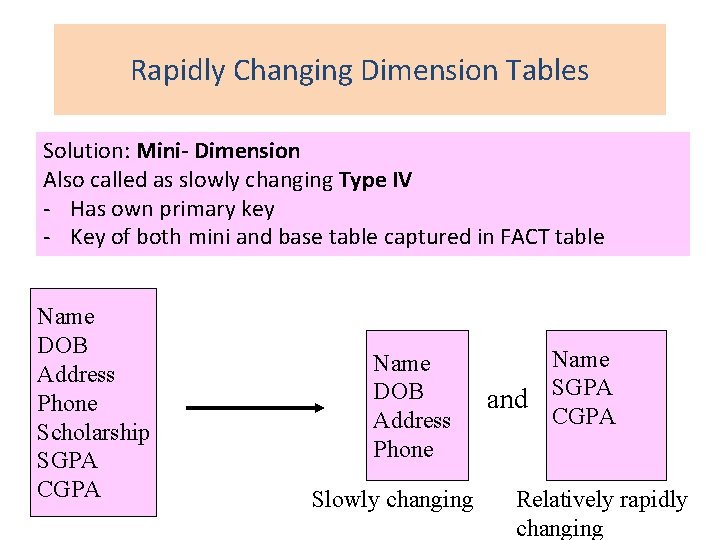

Rapidly Changing Dimension Tables Solution: Mini- Dimension Also called as slowly changing Type IV - Has own primary key - Key of both mini and base table captured in FACT table Name DOB Address Phone Scholarship SGPA CGPA Name DOB Address Phone Slowly changing and Name SGPA CGPA Relatively rapidly changing

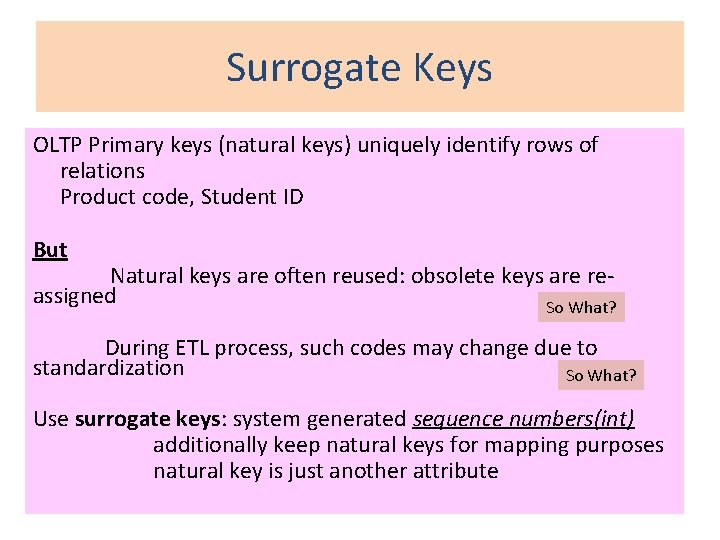

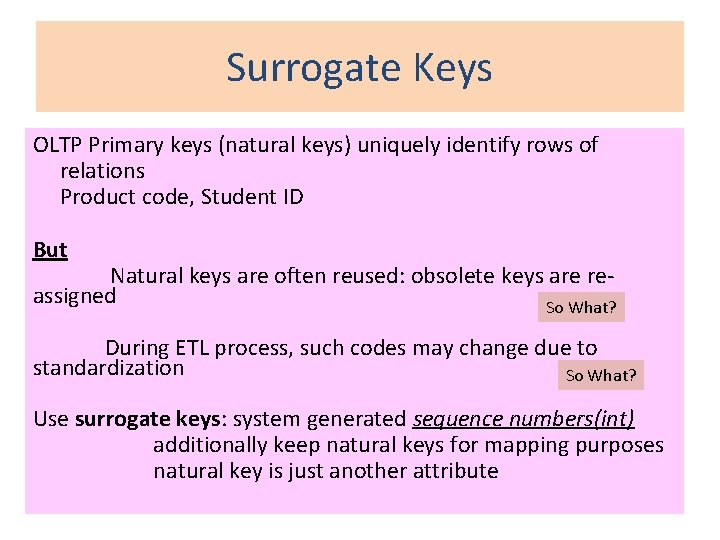

Surrogate Keys OLTP Primary keys (natural keys) uniquely identify rows of relations Product code, Student ID But Natural keys are often reused: obsolete keys are reassigned So What? During ETL process, such codes may change due to standardization So What? Use surrogate keys: system generated sequence numbers(int) additionally keep natural keys for mapping purposes natural key is just another attribute

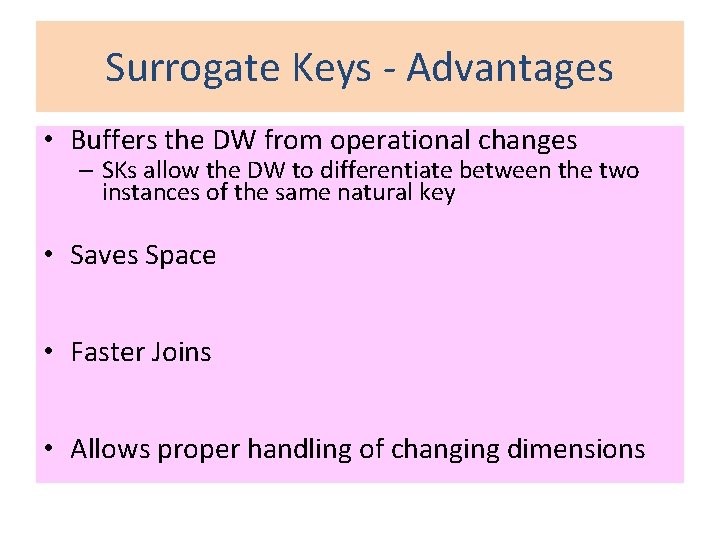

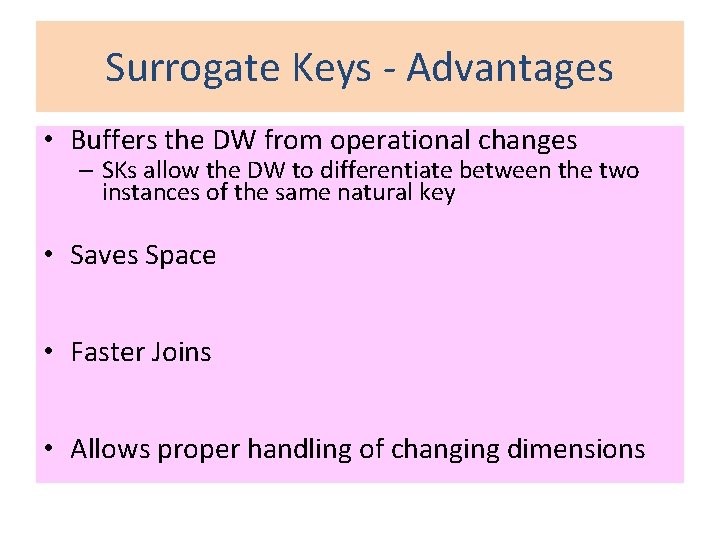

Surrogate Keys - Advantages • Buffers the DW from operational changes – SKs allow the DW to differentiate between the two instances of the same natural key • Saves Space • Faster Joins • Allows proper handling of changing dimensions

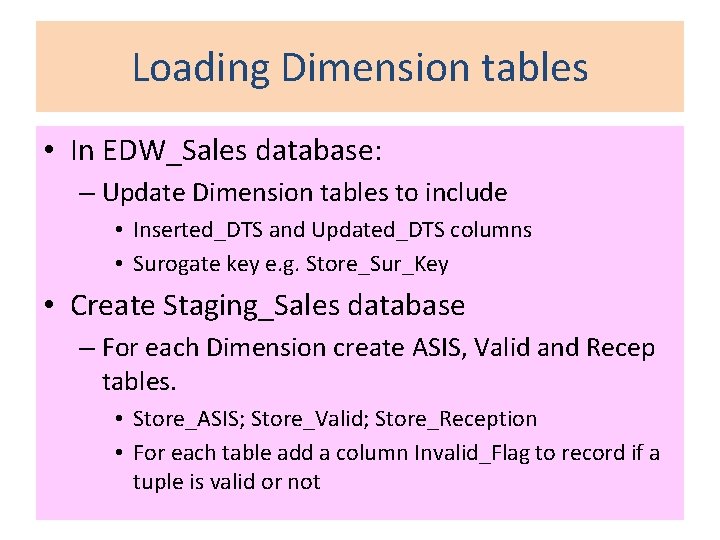

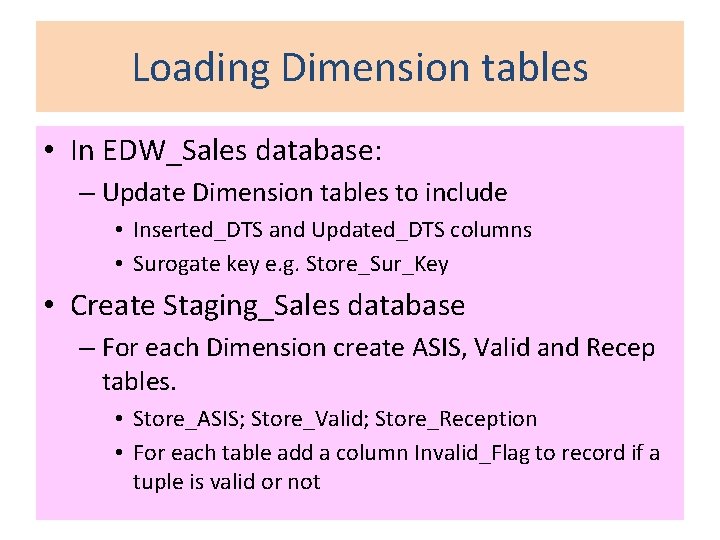

Loading Dimension tables • In EDW_Sales database: – Update Dimension tables to include • Inserted_DTS and Updated_DTS columns • Surogate key e. g. Store_Sur_Key • Create Staging_Sales database – For each Dimension create ASIS, Valid and Recep tables. • Store_ASIS; Store_Valid; Store_Reception • For each table add a column Invalid_Flag to record if a tuple is valid or not

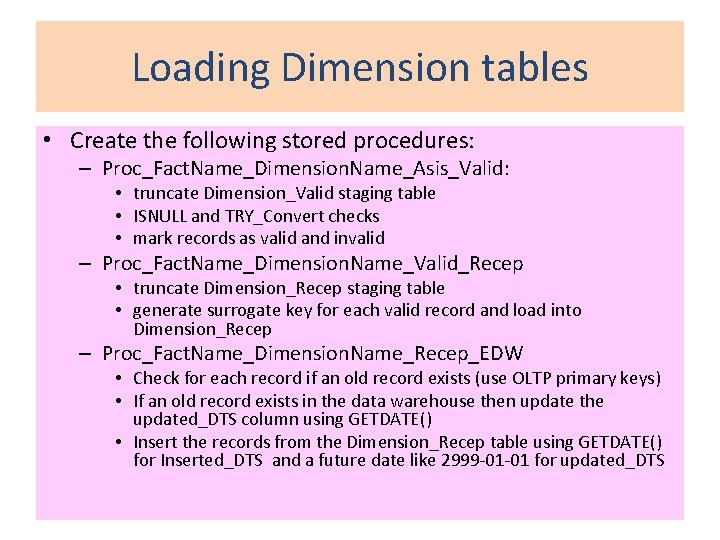

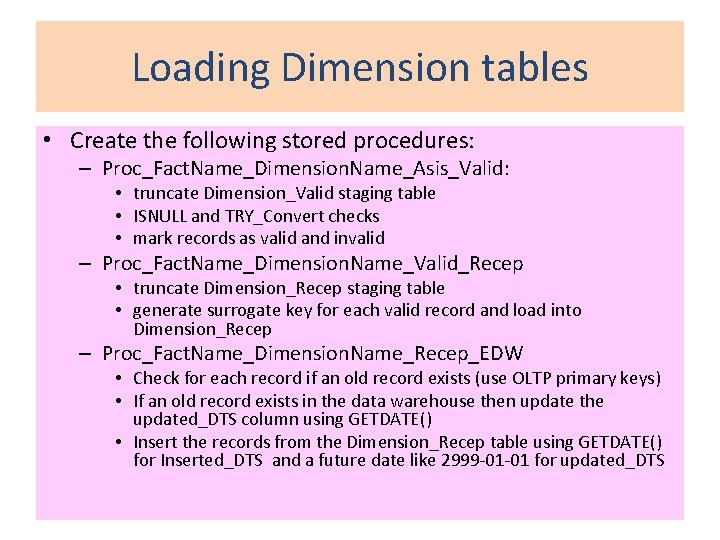

Loading Dimension tables • Create the following stored procedures: – Proc_Fact. Name_Dimension. Name_Asis_Valid: • truncate Dimension_Valid staging table • ISNULL and TRY_Convert checks • mark records as valid and invalid – Proc_Fact. Name_Dimension. Name_Valid_Recep • truncate Dimension_Recep staging table • generate surrogate key for each valid record and load into Dimension_Recep – Proc_Fact. Name_Dimension. Name_Recep_EDW • Check for each record if an old record exists (use OLTP primary keys) • If an old record exists in the data warehouse then update the updated_DTS column using GETDATE() • Insert the records from the Dimension_Recep table using GETDATE() for Inserted_DTS and a future date like 2999 -01 -01 for updated_DTS

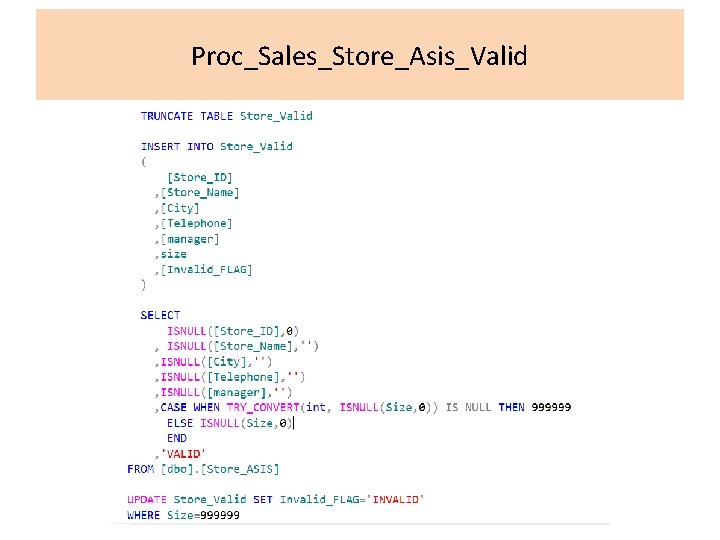

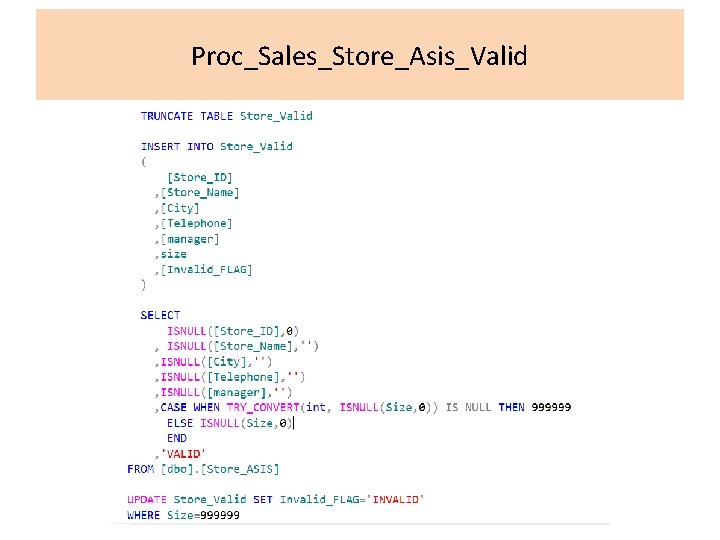

Proc_Sales_Store_Asis_Valid

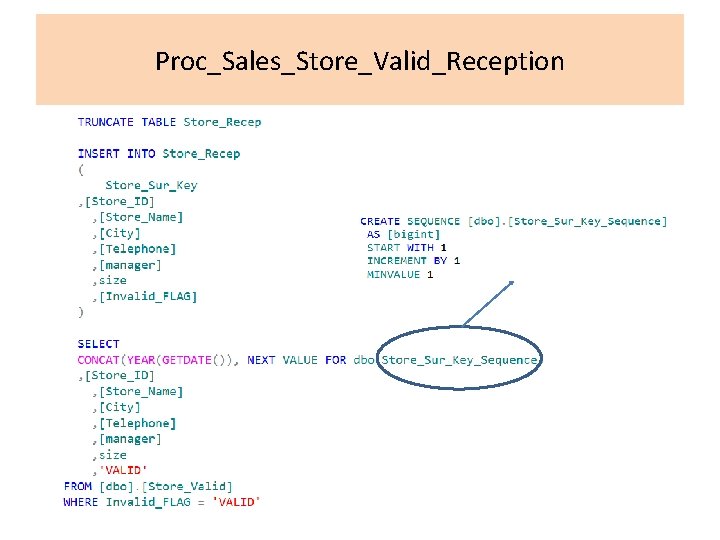

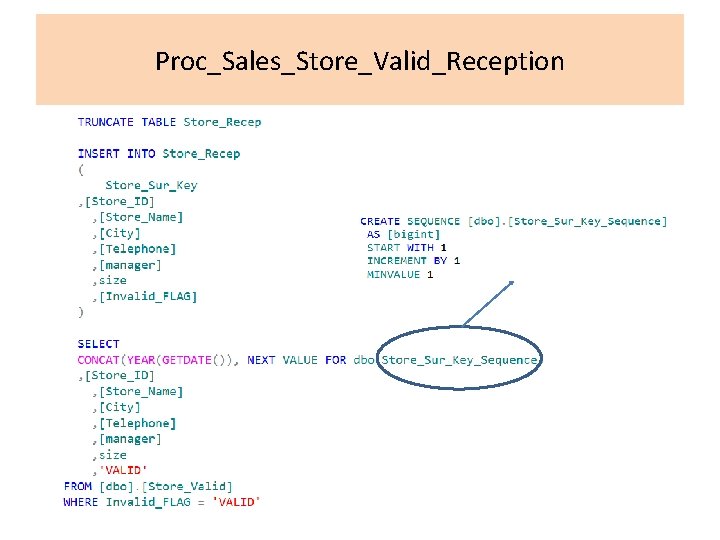

Proc_Sales_Store_Valid_Reception

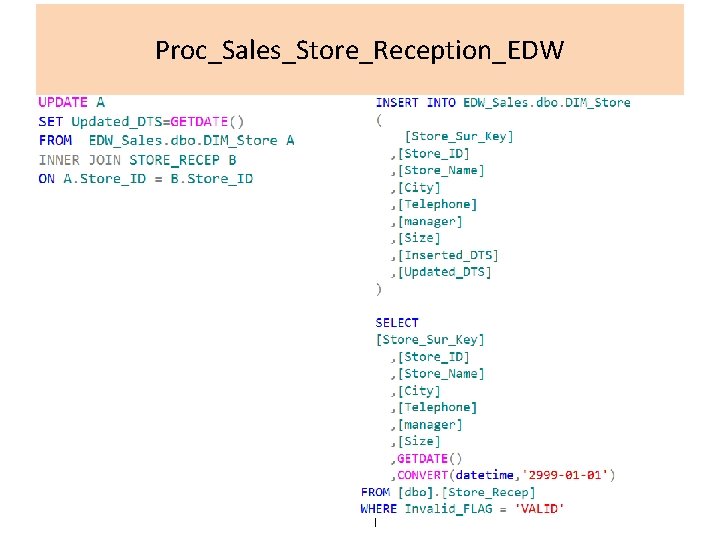

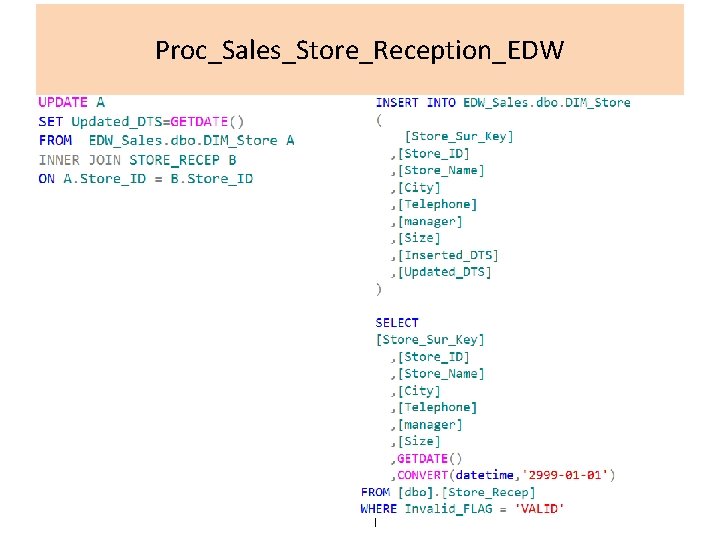

Proc_Sales_Store_Reception_EDW

Maintaining History for Facts • Several options: – timestamp – Flags to indicate valid, obsolete, pending, cancelled transaction records • e. g. normal invoice – C for current cancelled invoice – D for deleted usually single character used as flags keep it meaningful so that it can be understood by all

Handling NULL in Facts • What if there is an invoice where customer details are unavailable? SOLUTION Maintain a default surrogate key in all dimension tables while uploading Facts use this default key to indicate NULL data

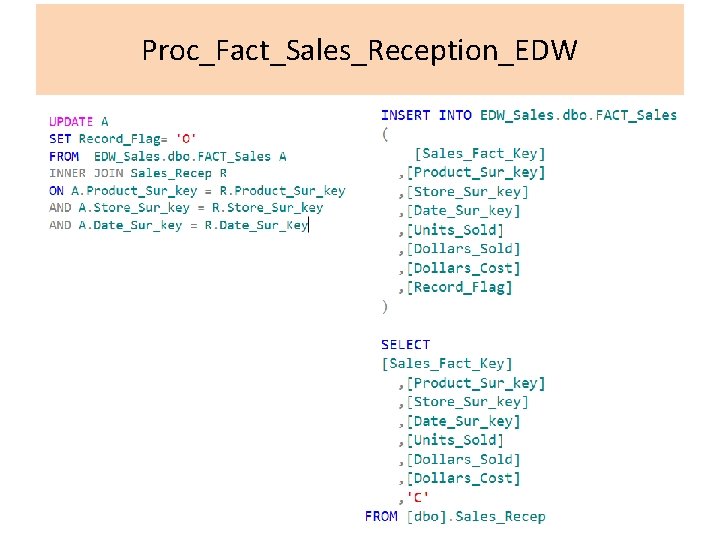

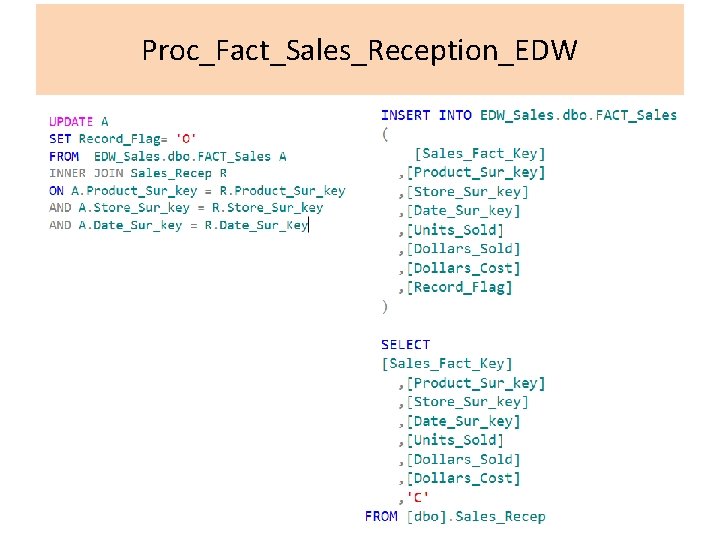

Loading FACT tables • In EDW_Sales database: – Create the Fact table • Create Staging_Sales database – For each FACT create ASIS, Valid and Recep tables. • Sales_ASIS; Sales_Valid; Sales_Reception • For each table add a column Invalid_Flag to record if a tuple is valid or not

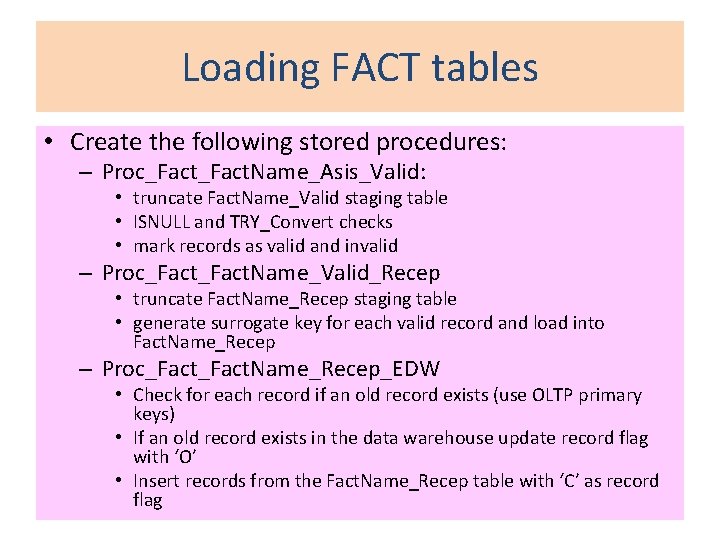

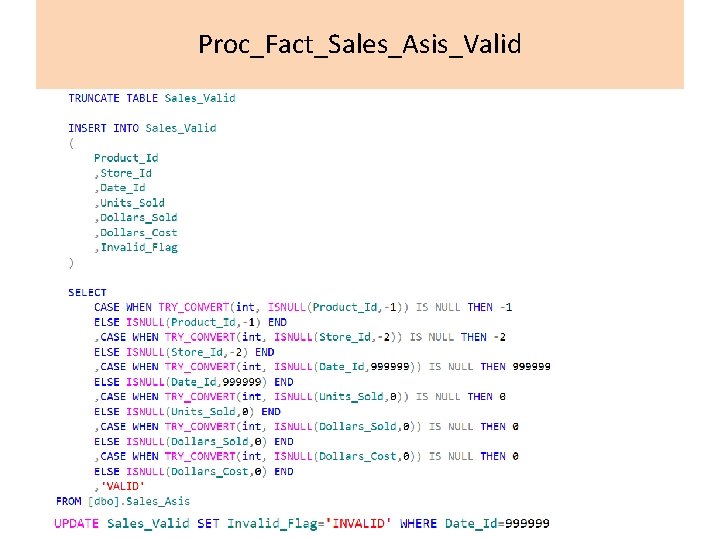

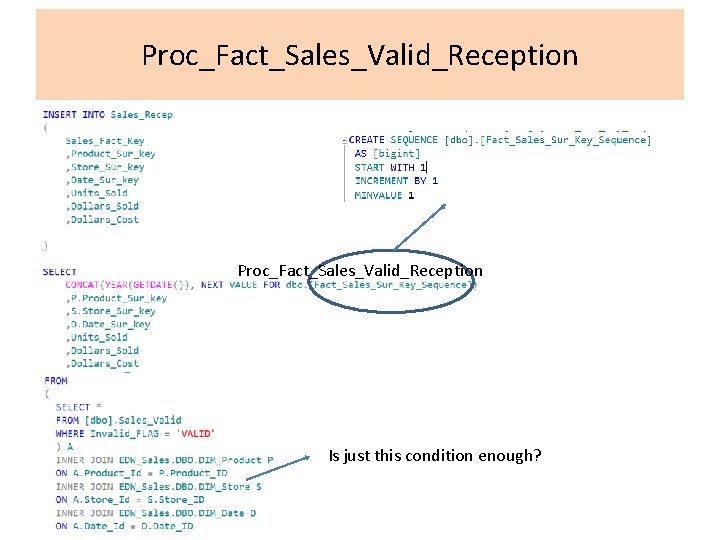

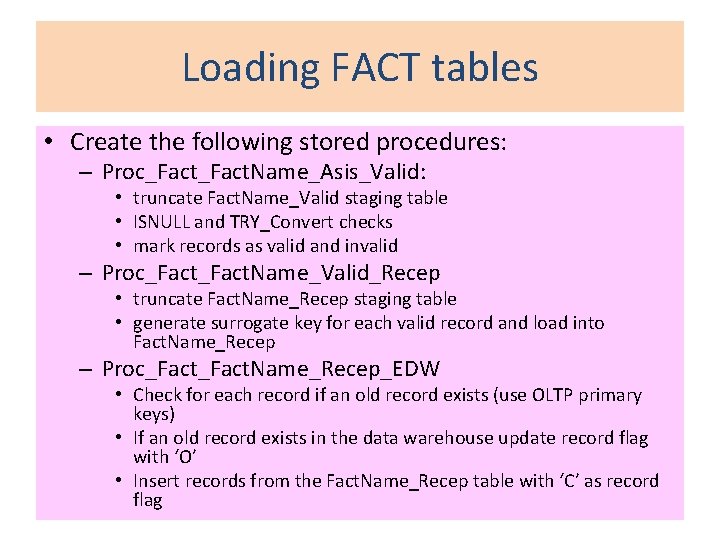

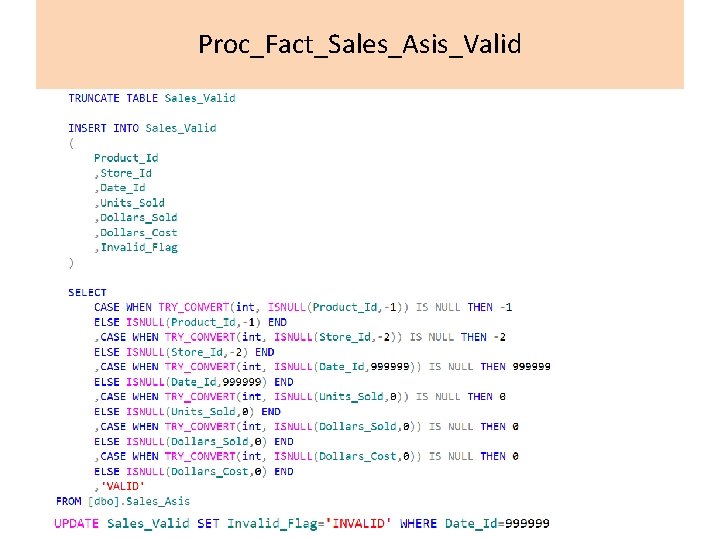

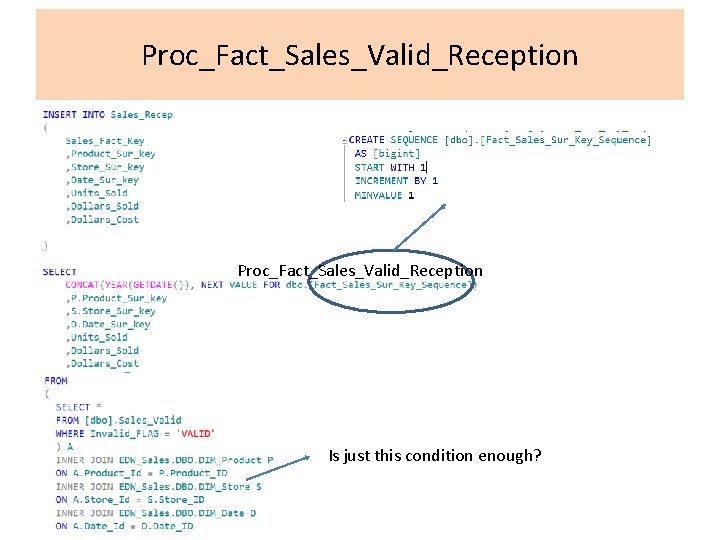

Loading FACT tables • Create the following stored procedures: – Proc_Fact. Name_Asis_Valid: • truncate Fact. Name_Valid staging table • ISNULL and TRY_Convert checks • mark records as valid and invalid – Proc_Fact. Name_Valid_Recep • truncate Fact. Name_Recep staging table • generate surrogate key for each valid record and load into Fact. Name_Recep – Proc_Fact. Name_Recep_EDW • Check for each record if an old record exists (use OLTP primary keys) • If an old record exists in the data warehouse update record flag with ‘O’ • Insert records from the Fact. Name_Recep table with ‘C’ as record flag

Proc_Fact_Sales_Asis_Valid

Proc_Fact_Sales_Valid_Reception Is just this condition enough?

Proc_Fact_Sales_Reception_EDW