The Efficiency of Algorithms, Chapter 7

- Slides: 25


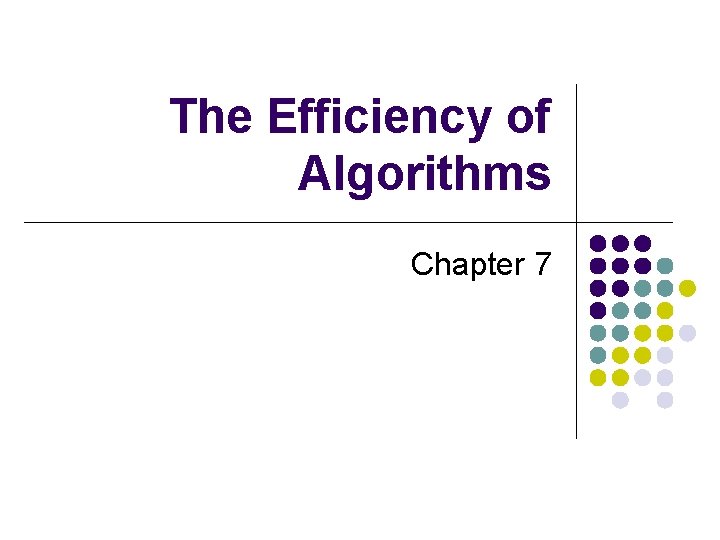

Chapter Contents

- Motivation
- Measuring an Algorithm's Efficiency
- Big Oh Notation
- Formalities
- Picturing Efficiency
- The Efficiency of Implementations of the ADT List
  - The Array-Based Implementation
  - The Linked Implementation
  - Comparing Implementations

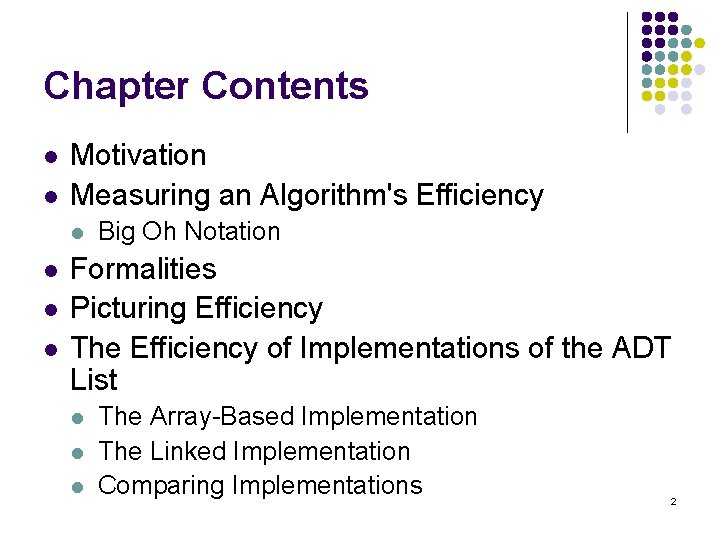

Measuring Algorithm Efficiency

- An algorithm has both time and space requirements, called its complexity, that we can measure.
- Types of complexity:
  - Space complexity
  - Time complexity
- Analysis of algorithms: measuring either the time complexity or the space complexity of an algorithm.
- We measure time complexity here, since it is typically the more important of the two.
- We cannot compute the actual time an algorithm takes, because that depends on the computer, the programming language, and the compiler. Instead, we give a function of the problem size that is directly proportional to the time requirement: the growth-rate function.
  - This function measures how the time requirement grows as the problem size grows.
- We usually measure the worst-case time.

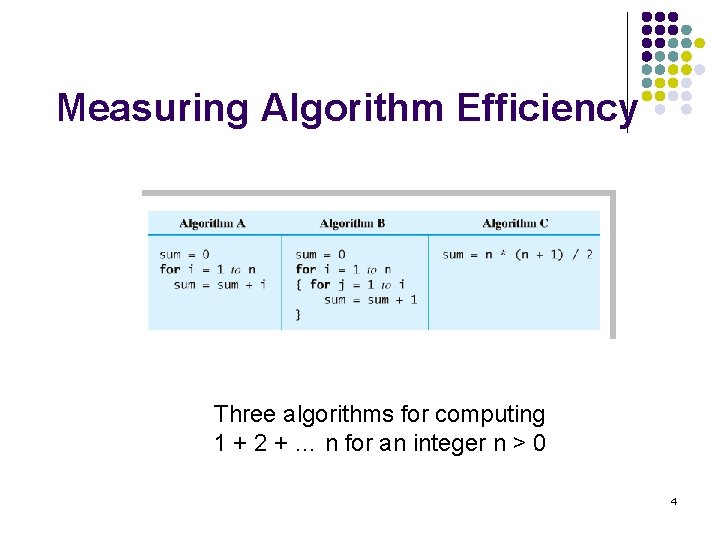

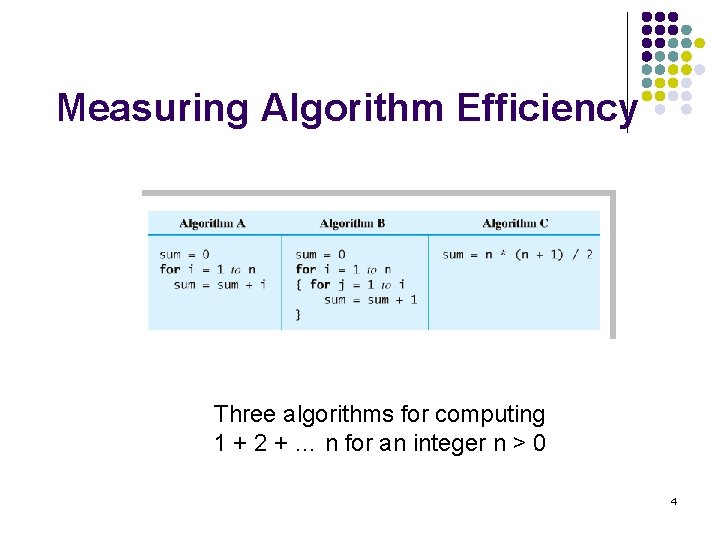

Measuring Algorithm Efficiency

Three algorithms for computing 1 + 2 + … + n for an integer n > 0
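Because the algorithms themselves appear only as an image, here is a minimal Java sketch of three ways to compute the sum. It assumes the usual textbook shapes: Algorithm A uses one loop, Algorithm B uses nested loops that add 1 repeatedly, and Algorithm C uses the closed form n(n + 1) / 2. The method names `sumA`, `sumB`, and `sumC` are hypothetical, and the figure's versions may differ in detail.

```java
// A sketch of three ways to compute 1 + 2 + ... + n for n > 0.
// Assumption: these mirror the usual textbook Algorithms A, B, and C;
// the method names are hypothetical.
public class SumAlgorithms {

    // Algorithm A: one loop that adds each integer from 1 to n.
    static long sumA(int n) {
        long sum = 0;
        for (int i = 1; i <= n; i++) {
            sum = sum + i;
        }
        return sum;
    }

    // Algorithm B: nested loops; the inner loop adds 1 to sum, i times.
    static long sumB(int n) {
        long sum = 0;
        for (int i = 1; i <= n; i++) {
            for (int j = 1; j <= i; j++) {
                sum = sum + 1;
            }
        }
        return sum;
    }

    // Algorithm C: the closed form n(n + 1) / 2, with no loops at all.
    static long sumC(int n) {
        return (long) n * (n + 1) / 2;
    }

    public static void main(String[] args) {
        int n = 100;
        System.out.println(sumA(n) + " " + sumB(n) + " " + sumC(n)); // 5050 5050 5050
    }
}
```

All three return the same value; they differ only in how much work they do as n grows.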

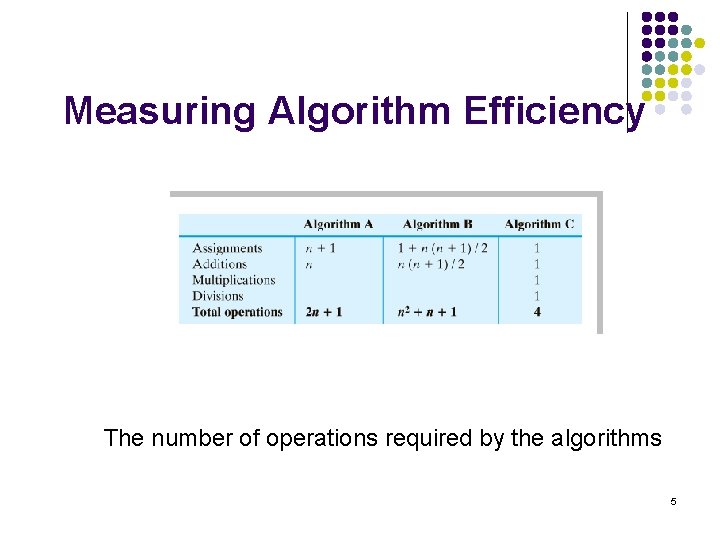

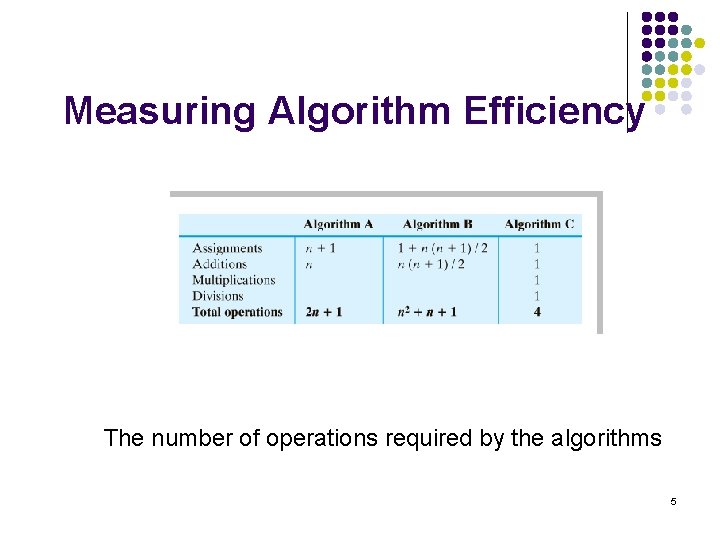

Measuring Algorithm Efficiency

The number of operations required by the algorithms

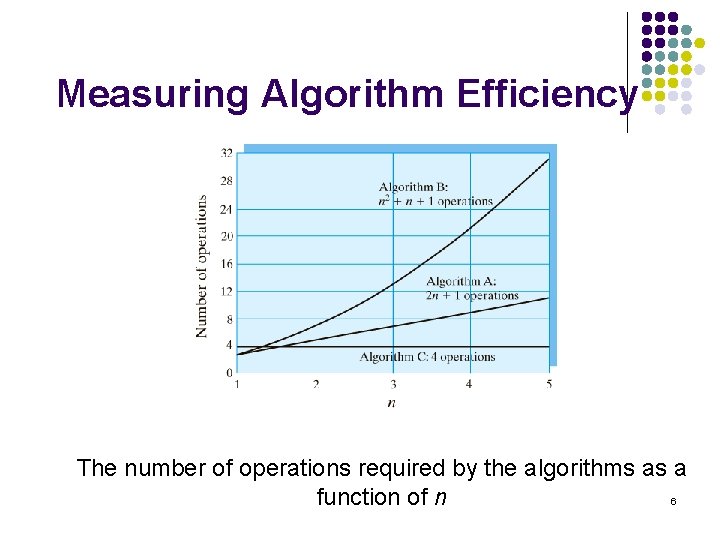

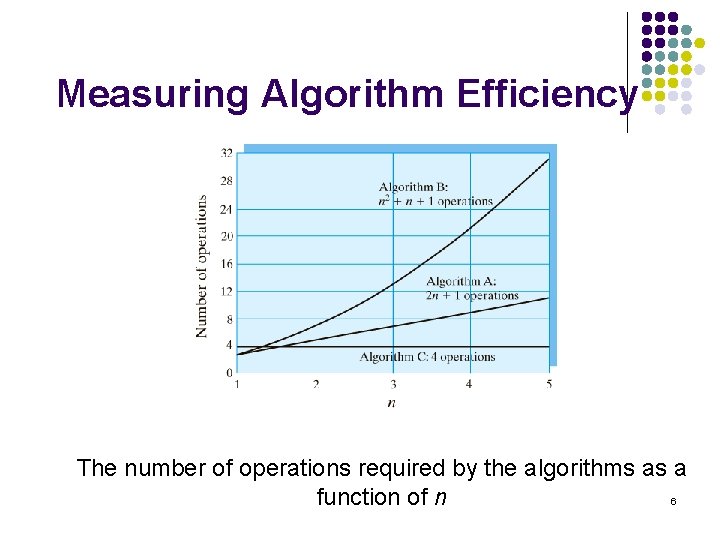

Measuring Algorithm Efficiency

The number of operations required by the algorithms as a function of n
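A small program can produce comparable numbers for several values of n. This sketch assumes the usual textbook versions of Algorithms A, B, and C and counts only the additions to `sum`, ignoring loop-control work such as `i++`; the figure may also count assignments or other operations, so its totals can differ. The class and method names are hypothetical.

```java
// A sketch that counts only the additions to sum performed by each
// algorithm. Assumption: loop-control operations (i++, i <= n) are
// ignored, so these counts may differ from the figure's totals.
public class OperationCounts {

    // Algorithm A: sum = sum + i executes once per iteration, n times.
    static long additionsA(int n) {
        long additions = 0;
        for (int i = 1; i <= n; i++) {
            additions++;          // stands for sum = sum + i
        }
        return additions;
    }

    // Algorithm B: the inner body runs 1 + 2 + ... + n = n(n + 1) / 2 times.
    static long additionsB(int n) {
        long additions = 0;
        for (int i = 1; i <= n; i++) {
            for (int j = 1; j <= i; j++) {
                additions++;      // stands for sum = sum + 1
            }
        }
        return additions;
    }

    // Algorithm C: n * (n + 1) / 2 uses one addition whatever n is
    // (plus one multiplication and one division).
    static long additionsC(int n) {
        return 1;
    }

    public static void main(String[] args) {
        for (int n : new int[] {10, 100, 1000}) {
            System.out.println("n=" + n + "  A=" + additionsA(n)
                    + "  B=" + additionsB(n) + "  C=" + additionsC(n));
        }
    }
}
```

For n = 10 this reports 10, 55, and 1 additions; for n = 1000 it reports 1000, 500500, and 1, which shows A growing linearly, B growing quadratically, and C staying constant.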

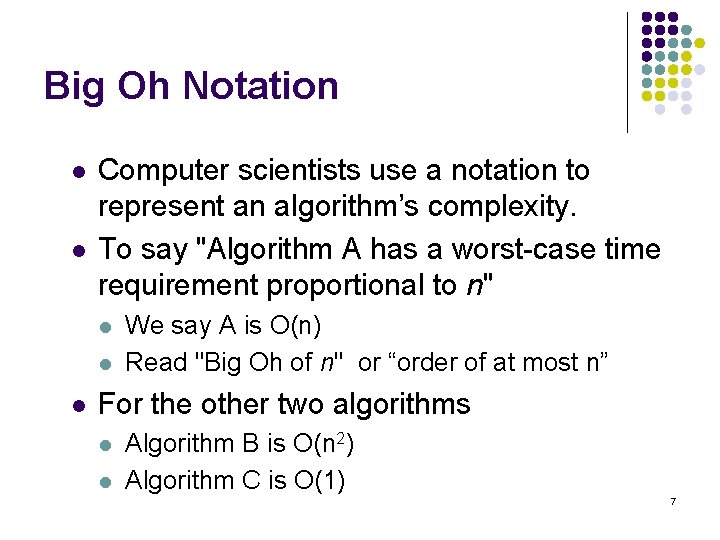

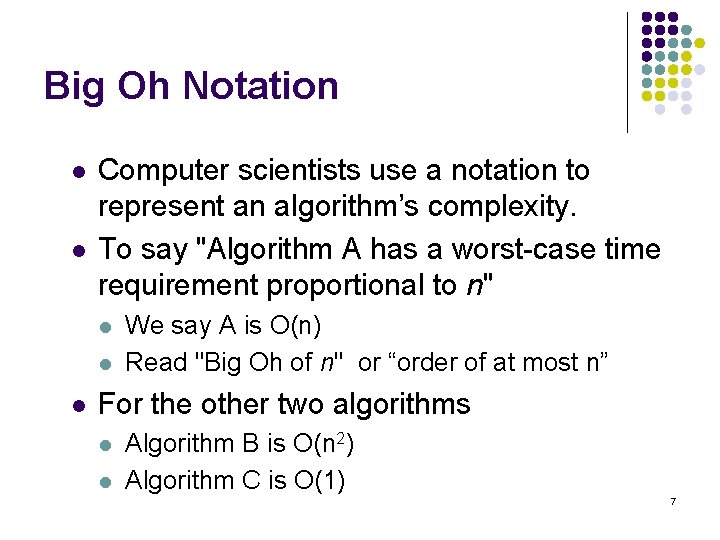

Big Oh Notation

- Computer scientists use a notation to represent an algorithm's complexity.
- To say "Algorithm A has a worst-case time requirement proportional to n":
  - We say A is O(n).
  - Read this as "Big Oh of n" or "order of at most n".
- For the other two algorithms:
  - Algorithm B is O(n^2).
  - Algorithm C is O(1).

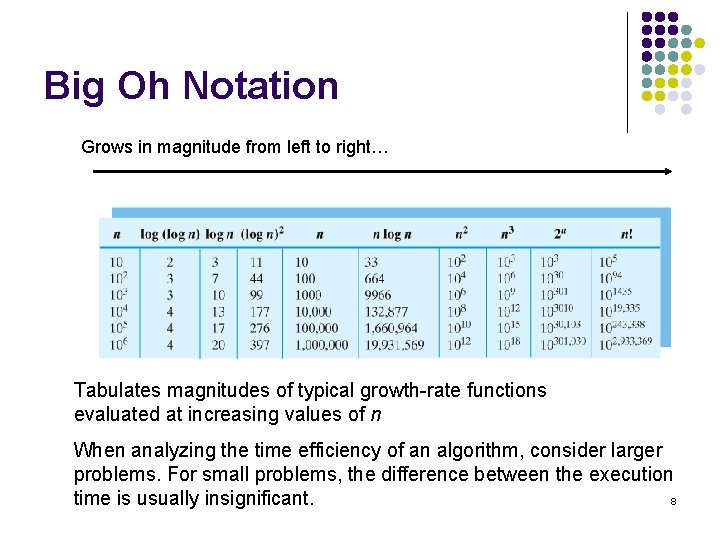

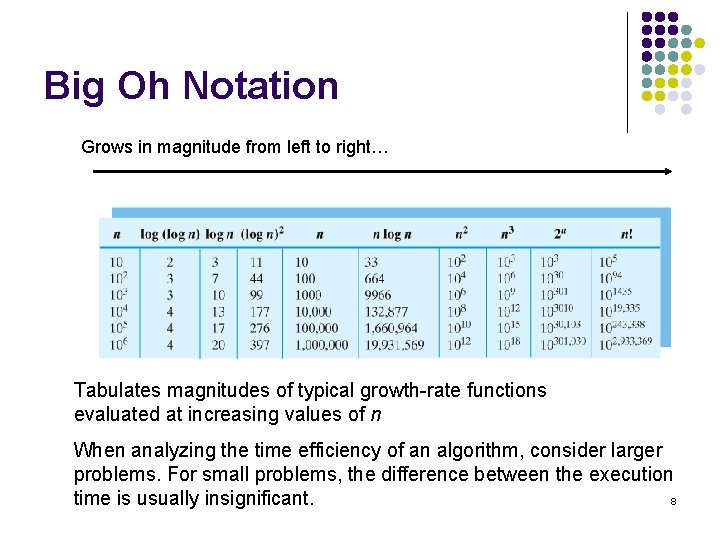

Big Oh Notation

The figure tabulates the magnitudes of typical growth-rate functions evaluated at increasing values of n; the functions grow in magnitude from left to right.

- When analyzing the time efficiency of an algorithm, consider large problems. For small problems, the difference between the execution times of algorithms is usually insignificant.
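Since the table is an image, this Java sketch regenerates similar magnitudes for n = 10 through 10^6. It assumes the columns log₂ n, n log₂ n, n^2, n^3, and 2^n; the figure's exact columns and rounding may differ. Because 2^n overflows a `double` once n exceeds 1023, the sketch reports 2^n as a power of ten using log10(2^n) = n · log10(2).

```java
// A sketch that tabulates typical growth-rate functions for n = 10 to 10^6.
// 2^n overflows a double once n exceeds 1023, so it is reported as a power
// of ten, using log10(2^n) = n * log10(2).
public class GrowthTable {

    static double log2(double x) {
        return Math.log(x) / Math.log(2);
    }

    // Exponent e such that 2^n is approximately 10^e.
    static long powerOfTenForTwoToThe(double n) {
        return Math.round(n * Math.log10(2));
    }

    public static void main(String[] args) {
        System.out.printf("%9s %8s %10s %10s %10s %10s%n",
                "n", "log2 n", "n log2 n", "n^2", "n^3", "2^n");
        for (int k = 1; k <= 6; k++) {
            double n = Math.pow(10, k);
            System.out.printf("%9.0f %8.1f %10.1e %10.0e %10.0e %10s%n",
                    n, log2(n), n * log2(n), n * n, n * n * n,
                    "10^" + powerOfTenForTwoToThe(n));
        }
    }
}
```

The 2^n column reaches 10^301 by n = 1000, while log₂ n has only grown to about 10, which is why large problem sizes dominate the comparison.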

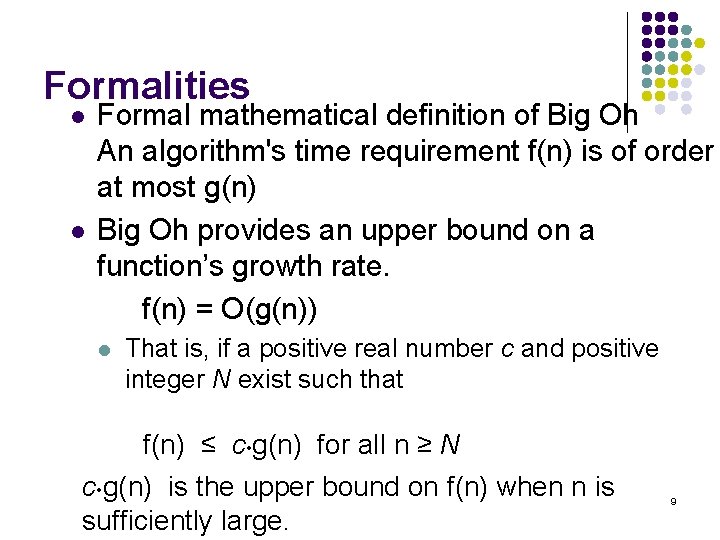

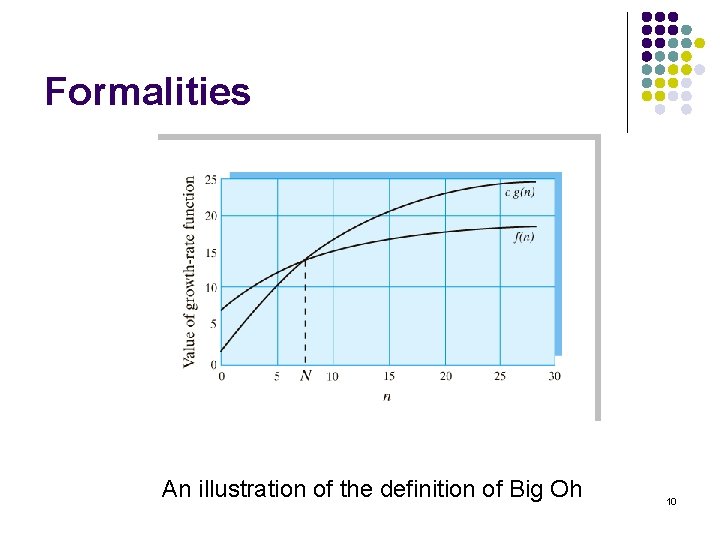

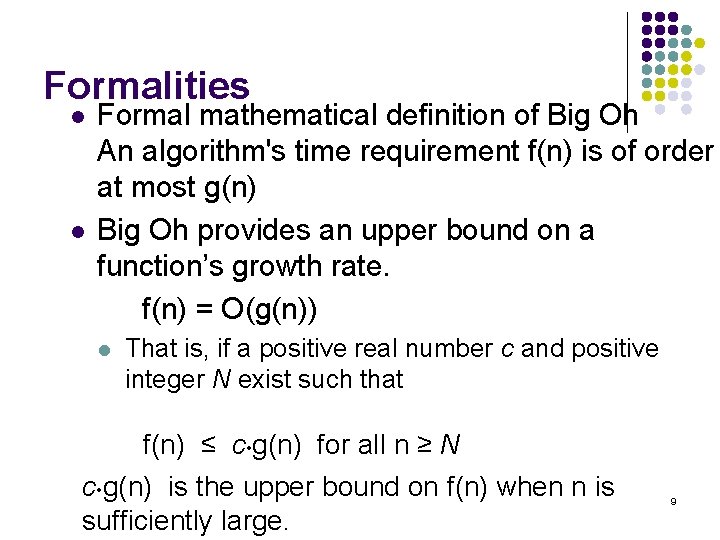

Formalities

- Formal mathematical definition of Big Oh: an algorithm's time requirement f(n) is of order at most g(n), written f(n) = O(g(n)), if a positive real number c and a positive integer N exist such that f(n) ≤ c · g(n) for all n ≥ N.
- Big Oh provides an upper bound on a function's growth rate: c · g(n) is an upper bound on f(n) when n is sufficiently large.

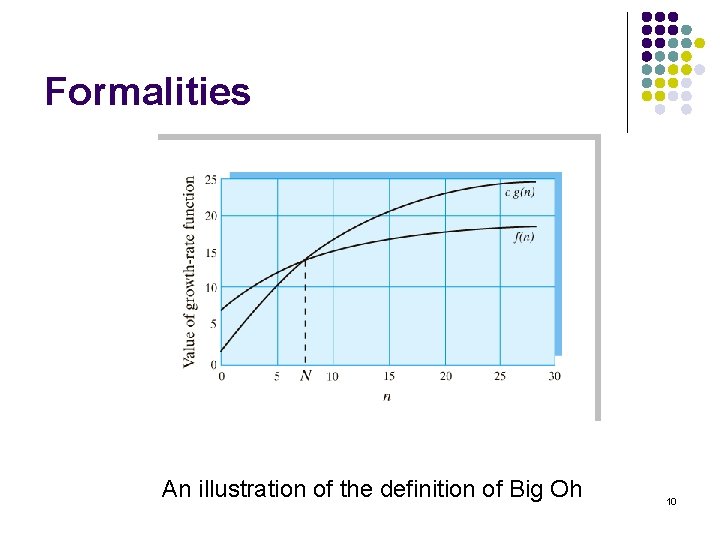

Formalities

An illustration of the definition of Big Oh

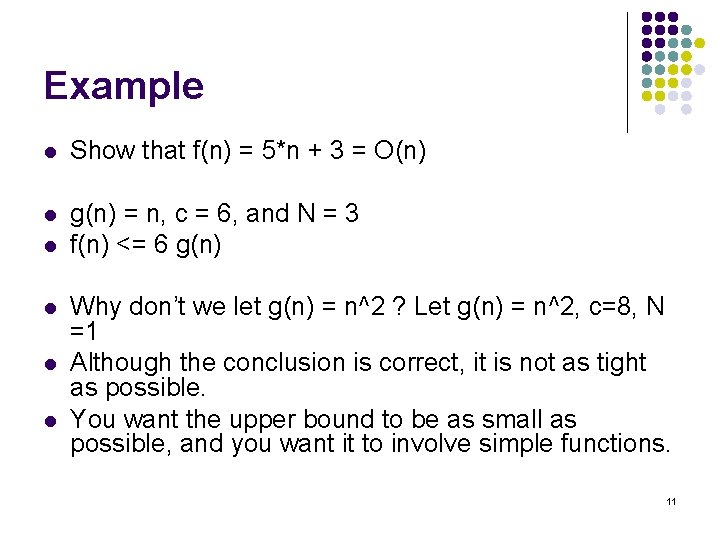

Example

- Show that f(n) = 5*n + 3 = O(n).
  - Let g(n) = n, c = 6, and N = 3. For n ≥ 3 we have 3 ≤ n, so f(n) = 5*n + 3 ≤ 5*n + n = 6*g(n).
- Why don't we let g(n) = n^2?
  - With g(n) = n^2, c = 8, and N = 1, we also have f(n) ≤ 8*g(n) for all n ≥ 1. Although this conclusion is correct, the bound is not as tight as possible.
  - You want the upper bound to be as small as possible, and you want it to involve simple functions.
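The witnesses c and N can be spot-checked numerically. This Java sketch tests f(n) ≤ c · g(n) over a finite range of n, so it illustrates the definition but is not a proof; the helper name `holds` and the upper limit of the range are hypothetical choices.

```java
import java.util.function.LongUnaryOperator;

// A sketch that spot-checks Big Oh witnesses (c, N) numerically.
// It only tests a finite range of n; it illustrates the definition
// but does not prove it.
public class BigOhWitness {

    // Returns true if f(n) <= c * g(n) for every n in [bigN, limit].
    static boolean holds(LongUnaryOperator f, LongUnaryOperator g,
                         long c, long bigN, long limit) {
        for (long n = bigN; n <= limit; n++) {
            if (f.applyAsLong(n) > c * g.applyAsLong(n)) {
                return false;
            }
        }
        return true;
    }

    public static void main(String[] args) {
        LongUnaryOperator f = n -> 5 * n + 3;
        System.out.println(holds(f, n -> n, 6, 3, 1_000_000));     // true
        System.out.println(holds(f, n -> n, 6, 1, 1_000_000));     // false: 5*1 + 3 > 6*1
        System.out.println(holds(f, n -> n * n, 8, 1, 1_000_000)); // true
    }
}
```

The second call fails because N = 1 is too small for c = 6: at n = 1 and n = 2, 5n + 3 still exceeds 6n.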

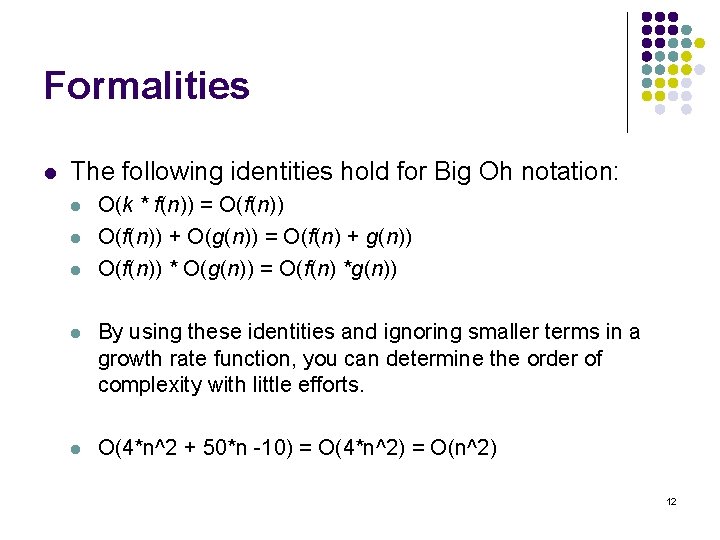

Formalities

- The following identities hold for Big Oh notation, where k is a constant:
  - O(k * f(n)) = O(f(n))
  - O(f(n)) + O(g(n)) = O(f(n) + g(n))
  - O(f(n)) * O(g(n)) = O(f(n) * g(n))
- By using these identities and ignoring smaller terms in a growth-rate function, you can determine the order of complexity with little effort:
  - O(4*n^2 + 50*n - 10) = O(4*n^2) = O(n^2)
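One way to see why the smaller terms can be ignored is to watch the ratio f(n) / n^2 for f(n) = 4*n^2 + 50*n - 10. This Java sketch prints that ratio for increasing n; it settles toward the constant 4, which the first identity then absorbs. The class name is hypothetical.

```java
// A sketch showing why smaller terms can be ignored: the ratio
// f(n) / n^2 for f(n) = 4n^2 + 50n - 10 approaches the constant 4
// as n grows, so f(n) = O(n^2).
public class DominantTerm {

    static double f(double n) {
        return 4 * n * n + 50 * n - 10;
    }

    static double ratio(double n) {
        return f(n) / (n * n);
    }

    public static void main(String[] args) {
        for (double n = 10; n <= 1_000_000; n *= 10) {
            System.out.printf("n = %9.0f   f(n)/n^2 = %.6f%n", n, ratio(n));
        }
    }
}
```

At n = 10 the ratio is 8.9, because the 50*n term still matters; by n = 1000 it is about 4.05.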

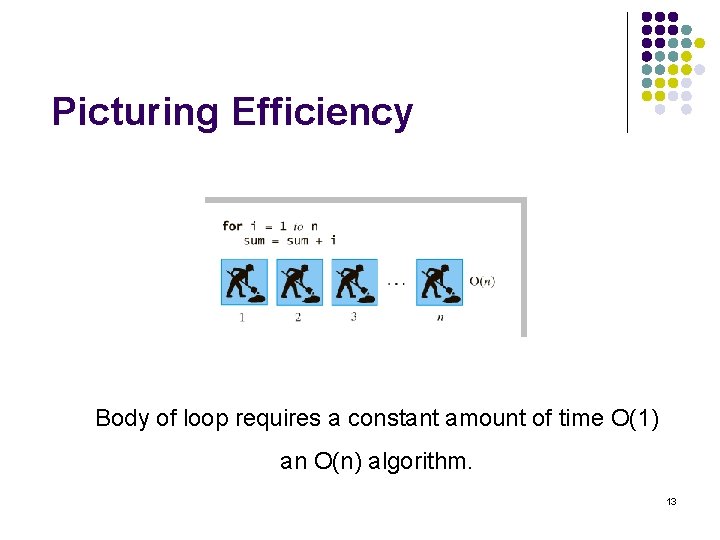

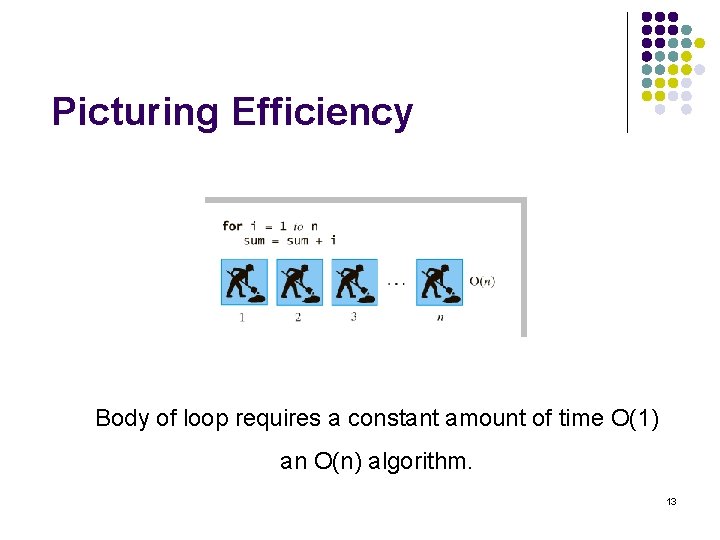

Picturing Efficiency

An O(n) algorithm. The body of the loop requires a constant amount of time, O(1).

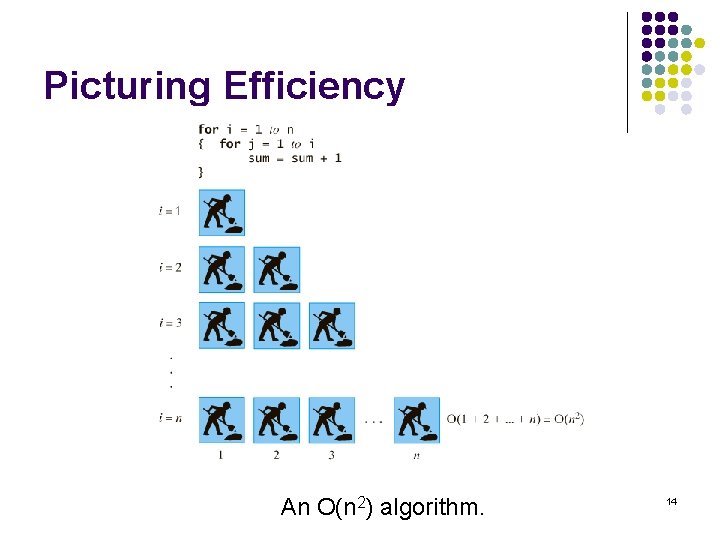

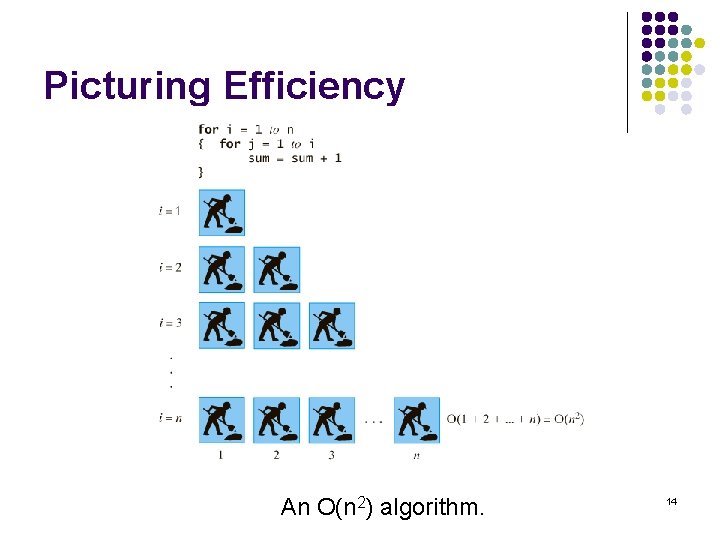

Picturing Efficiency

An O(n^2) algorithm.

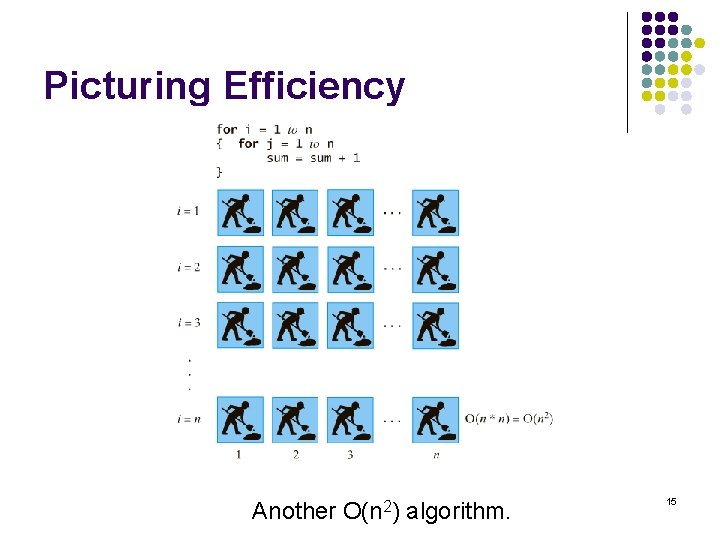

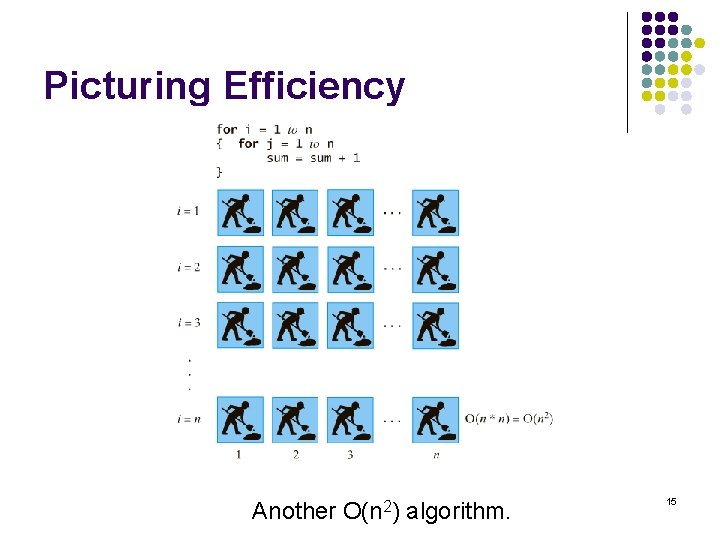

Picturing Efficiency

Another O(n^2) algorithm.
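The loops pictured are only available as images, so this Java sketch uses two common nested-loop shapes that are both O(n^2): a "triangular" one whose inner loop runs i times, and a "square" one whose inner loop runs n times. It is an assumption that the figures show loops like these. The sketch counts body executions and divides by n^2; the ratios approach 0.5 and 1, so the two differ only by a constant factor.

```java
// A sketch that counts how often the body of two nested-loop shapes runs.
// Assumption: the pictured loops resemble these; the slides' exact loops
// are in the images. Both counts grow like n^2.
public class NestedLoopCounts {

    // Inner loop runs i times: 1 + 2 + ... + n = n(n + 1) / 2 executions.
    static long triangular(int n) {
        long count = 0;
        for (int i = 1; i <= n; i++) {
            for (int j = 1; j <= i; j++) {
                count++;
            }
        }
        return count;
    }

    // Inner loop runs n times on every pass: n * n executions.
    static long square(int n) {
        long count = 0;
        for (int i = 1; i <= n; i++) {
            for (int j = 1; j <= n; j++) {
                count++;
            }
        }
        return count;
    }

    public static void main(String[] args) {
        for (int n : new int[] {10, 100, 1000}) {
            double nSquared = (double) n * n;
            System.out.printf("n=%d  triangular=%d (%.3f n^2)  square=%d (%.3f n^2)%n",
                    n, triangular(n), triangular(n) / nSquared,
                    square(n), square(n) / nSquared);
        }
    }
}
```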

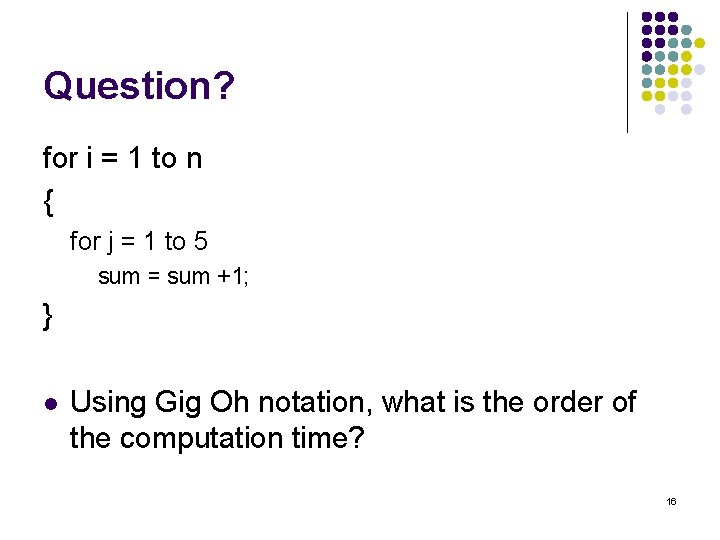

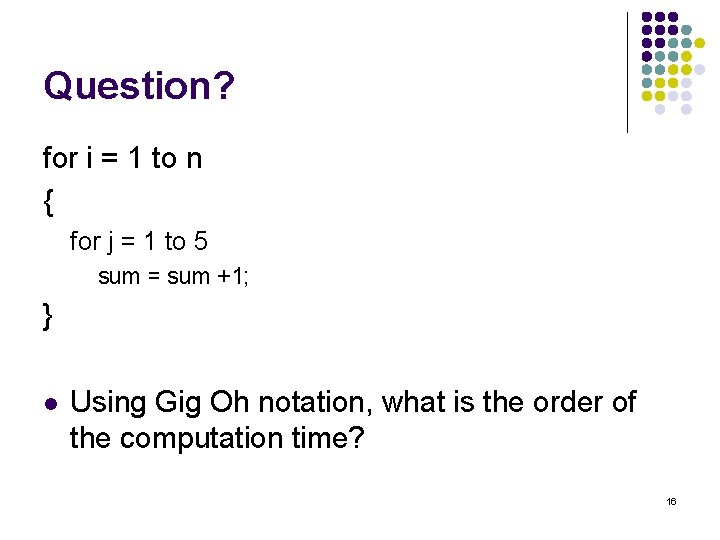

Question?

```
for i = 1 to n
{
   for j = 1 to 5
      sum = sum + 1;
}
```

- Using Big Oh notation, what is the order of the computation time?

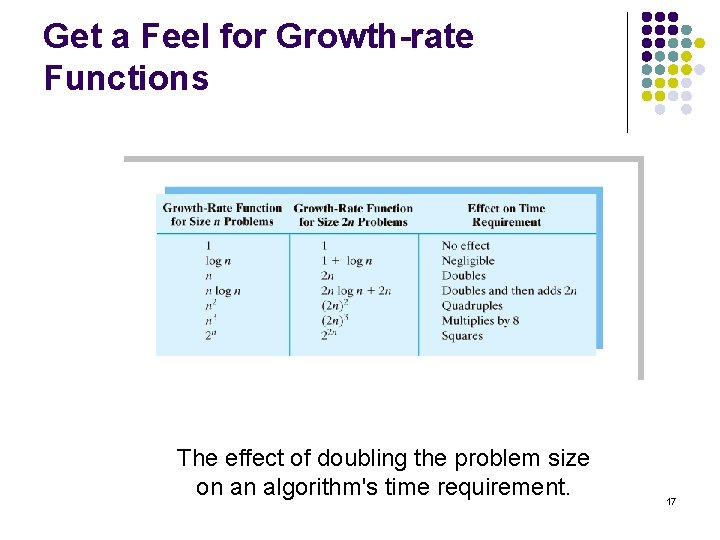

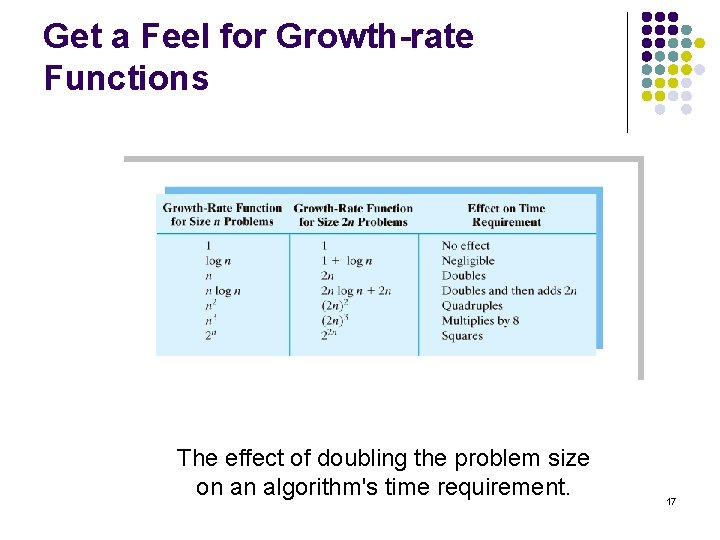

Get a Feel for Growth-rate Functions

The effect of doubling the problem size on an algorithm's time requirement.
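The doubling effect can be computed directly as f(2n) / f(n). This Java sketch evaluates that factor at n = 100 for the typical growth-rate functions; n = 100 is an arbitrary choice that keeps 2^(2n) within the range of a `double`. The class and method names are hypothetical.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.function.DoubleUnaryOperator;

// A sketch computing f(2n) / f(n): the factor by which the time
// requirement grows when the problem size doubles, at n = 100.
public class DoublingEffect {

    static double log2(double x) {
        return Math.log(x) / Math.log(2);
    }

    // Factor by which f grows when n is doubled.
    static double doublingFactor(DoubleUnaryOperator f, double n) {
        return f.applyAsDouble(2 * n) / f.applyAsDouble(n);
    }

    public static void main(String[] args) {
        Map<String, DoubleUnaryOperator> functions = new LinkedHashMap<>();
        functions.put("1", n -> 1);
        functions.put("log2 n", DoublingEffect::log2);
        functions.put("n", n -> n);
        functions.put("n log2 n", n -> n * log2(n));
        functions.put("n^2", n -> n * n);
        functions.put("n^3", n -> n * n * n);
        functions.put("2^n", n -> Math.pow(2, n));

        double n = 100;
        for (Map.Entry<String, DoubleUnaryOperator> e : functions.entrySet()) {
            System.out.printf("%-9s grows by a factor of %.4g%n",
                    e.getKey(), doublingFactor(e.getValue(), n));
        }
    }
}
```

Doubling n leaves a constant-time algorithm unchanged, multiplies a quadratic one by 4 and a cubic one by 8, but multiplies an exponential one by 2^n itself.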

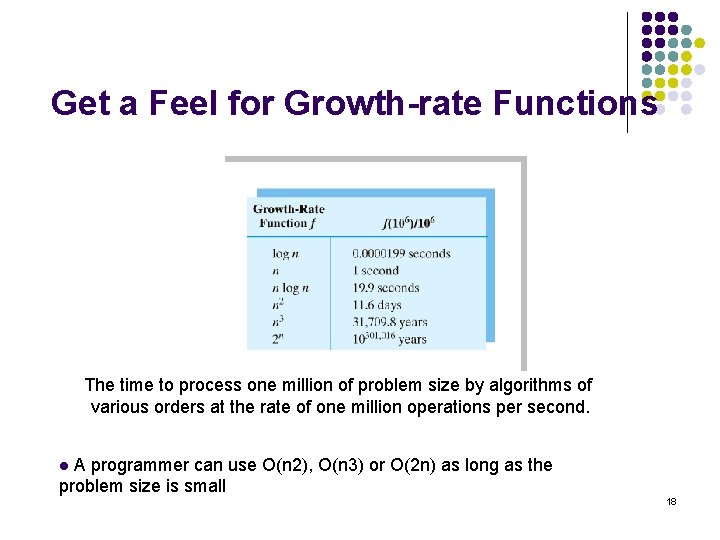

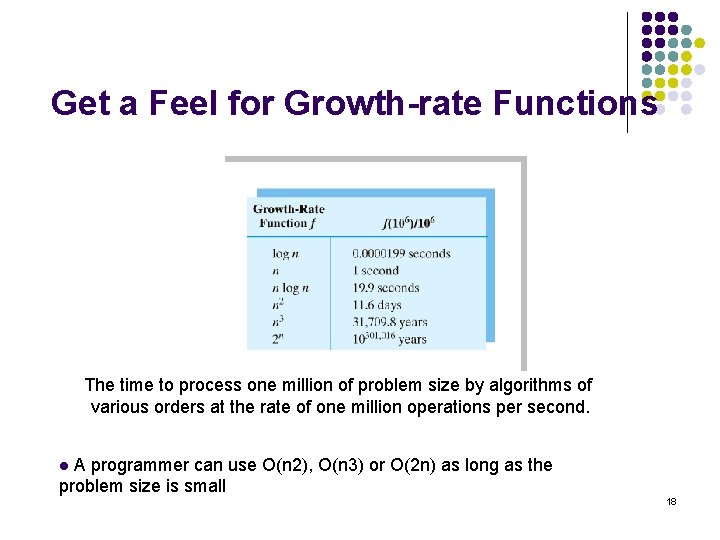

Get a Feel for Growth-rate Functions

The time needed to process a problem of size one million (n = 10^6) by algorithms of various orders, at a rate of one million operations per second.

- A programmer can use an O(n^2), O(n^3), or O(2^n) algorithm as long as the problem size is small.
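The arithmetic behind such a table is just f(n) divided by the operation rate. This Java sketch assumes exactly f(n) operations for n = 10^6 at 10^6 operations per second and prints the resulting times; the figure may round differently. Because 2^(10^6) is far beyond the range of a `double`, that row is reported as a power of ten.

```java
// A sketch of the estimate: seconds needed to perform f(n) operations
// for n = 1,000,000 at 1,000,000 operations per second.
// 2^n is far beyond the range of a double, so its time is reported
// as a power of ten instead.
public class TimeForOneMillion {

    static final double N = 1_000_000;
    static final double OPS_PER_SECOND = 1_000_000;

    static double seconds(double operations) {
        return operations / OPS_PER_SECOND;
    }

    public static void main(String[] args) {
        double log2n = Math.log(N) / Math.log(2);
        System.out.printf("log2 n  : %.2e s%n", seconds(log2n));
        System.out.printf("n       : %.2e s%n", seconds(N));
        System.out.printf("n log2 n: %.2e s%n", seconds(N * log2n));
        System.out.printf("n^2     : %.2e s (about %.1f days)%n",
                seconds(N * N), seconds(N * N) / 86_400);
        System.out.printf("n^3     : %.2e s (about %.0f years)%n",
                seconds(N * N * N), seconds(N * N * N) / (365.25 * 86_400));
        // log10(2^n / 10^6) = n * log10(2) - 6
        System.out.printf("2^n     : about 10^%d s%n",
                Math.round(N * Math.log10(2) - 6));
    }
}
```

The linear algorithm finishes in one second, the quadratic one needs 10^6 seconds (over eleven days), and the cubic one needs about 10^12 seconds, which is tens of thousands of years.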

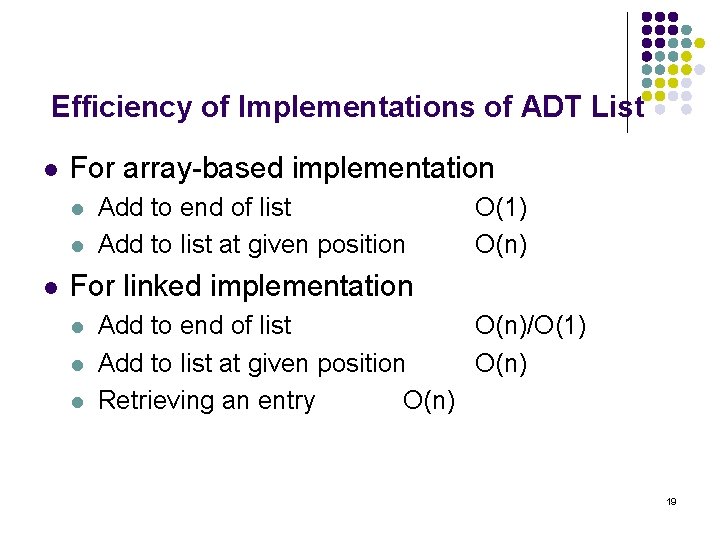

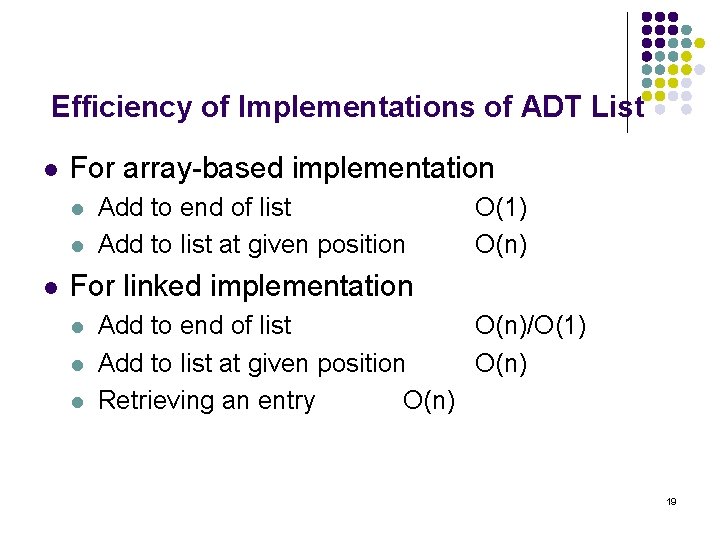

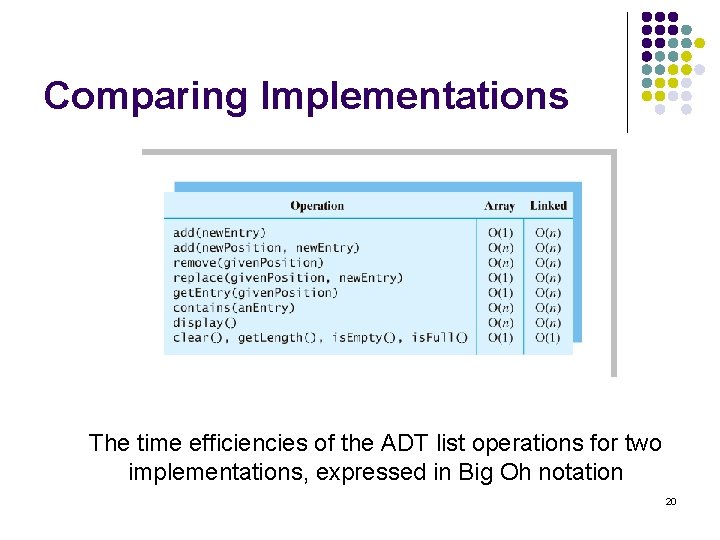

Efficiency of Implementations of ADT List

- For the array-based implementation:
  - Add to end of list: O(1)
  - Add to list at given position: O(n)
- For the linked implementation:
  - Add to end of list: O(n) if the chain has only a head reference; O(1) if it also keeps a tail reference
  - Add to list at given position: O(n)
  - Retrieving an entry: O(n)
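To see where the array-based costs come from, here is a minimal Java sketch of an array-backed list. `ArrayListSketch` is a hypothetical class, not the book's implementation; it assumes 1-based positions and a capacity that doubles when the array is full, which makes add-to-end O(1) amortized rather than O(1) on every call. Adding at a position shifts later entries, so `add(position, entry)` returns the number of shifts to make that O(n) cost visible.

```java
import java.util.Arrays;

// A minimal array-based list sketch (hypothetical class, not the book's
// implementation). Positions are 1-based.
public class ArrayListSketch<T> {
    private T[] entries;
    private int numberOfEntries;

    @SuppressWarnings("unchecked")
    public ArrayListSketch() {
        entries = (T[]) new Object[4];
    }

    // Add to end: O(1), except for an occasional O(n) resize
    // (O(1) amortized because the capacity doubles).
    public void add(T newEntry) {
        ensureCapacity();
        entries[numberOfEntries] = newEntry;
        numberOfEntries++;
    }

    // Add at a given position: entries from newPosition onward shift one
    // slot toward the end, so the worst case (position 1) is O(n).
    // Returns the number of entries shifted.
    public int add(int newPosition, T newEntry) {
        if (newPosition < 1 || newPosition > numberOfEntries + 1) {
            throw new IndexOutOfBoundsException("Illegal position " + newPosition);
        }
        ensureCapacity();
        int shifts = 0;
        for (int index = numberOfEntries; index >= newPosition; index--) {
            entries[index] = entries[index - 1];
            shifts++;
        }
        entries[newPosition - 1] = newEntry;
        numberOfEntries++;
        return shifts;
    }

    // Retrieval by position is a single array access: O(1).
    public T getEntry(int givenPosition) {
        if (givenPosition < 1 || givenPosition > numberOfEntries) {
            throw new IndexOutOfBoundsException("Illegal position " + givenPosition);
        }
        return entries[givenPosition - 1];
    }

    public int getLength() {
        return numberOfEntries;
    }

    private void ensureCapacity() {
        if (numberOfEntries == entries.length) {
            entries = Arrays.copyOf(entries, 2 * entries.length);
        }
    }
}
```

For example, adding at position 1 of a five-entry list shifts all five entries, while adding at position 6 (the end) shifts none.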

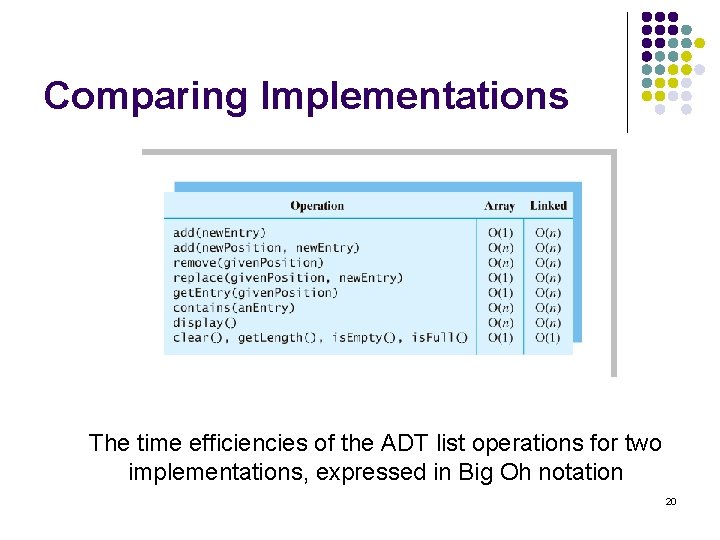

Comparing Implementations

The time efficiencies of the ADT list operations for two implementations, expressed in Big Oh notation
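The linked side of the comparison can be sketched the same way. `LinkedListSketch` is a hypothetical class, not the book's code; it assumes 1-based positions and keeps both a head and a tail reference. The tail reference makes add-to-end O(1), while retrieval must still follow links from the head, which is O(n) in the worst case.

```java
// A minimal linked-chain sketch (hypothetical class, not the book's code)
// that keeps both a head and a tail reference. Positions are 1-based.
public class LinkedListSketch<T> {

    private class Node {
        T data;
        Node next;

        Node(T data) {
            this.data = data;
        }
    }

    private Node firstNode;
    private Node lastNode;   // tail reference: makes add-to-end O(1)
    private int numberOfEntries;

    // Add to end: O(1) because lastNode locates the last node directly.
    // Without a tail reference we would walk the whole chain, which is O(n).
    public void add(T newEntry) {
        Node newNode = new Node(newEntry);
        if (firstNode == null) {
            firstNode = newNode;
        } else {
            lastNode.next = newNode;
        }
        lastNode = newNode;
        numberOfEntries++;
    }

    // Retrieval: O(n) in the worst case, since we follow
    // givenPosition - 1 links starting at the head.
    public T getEntry(int givenPosition) {
        if (givenPosition < 1 || givenPosition > numberOfEntries) {
            throw new IndexOutOfBoundsException("Illegal position " + givenPosition);
        }
        Node current = firstNode;
        for (int i = 1; i < givenPosition; i++) {
            current = current.next;
        }
        return current.data;
    }

    public int getLength() {
        return numberOfEntries;
    }
}
```

Compared with an array, the chain trades O(1) retrieval by position for cheap insertion once the right node has been found.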

Choose Implementation for ADT

- Consider the operations that your application requires.
- If your application uses a particular operation frequently, its implementation has to be efficient.
- Conversely, if you rarely use an operation, you can afford to use one that has an inefficient implementation.

Typical Growth-rate Functions

- 1: implies a problem whose time requirement is constant and, therefore, independent of the problem size n.
- log₂ n: the time requirement for a logarithmic algorithm increases slowly as the problem size increases. If you square the problem size, you only double its time requirement.
- n: the time requirement for a linear algorithm increases directly with the size of the problem. If you square the problem size, you also square its time requirement.
- n * log₂ n: the time requirement increases more rapidly than that of a linear algorithm. Such algorithms usually divide a problem into smaller problems that are each solved separately.
- n^2: the time requirement for a quadratic algorithm increases rapidly with the size of the problem. Algorithms that use two nested loops are often quadratic. Such algorithms are practical only for small problems.
- n^3: the time requirement for a cubic algorithm increases more rapidly than that of a quadratic algorithm. Algorithms that use three nested loops are often cubic.
- 2^n: the time requirement for an exponential algorithm usually increases too rapidly to be practical.
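The claim that squaring the problem size only doubles a logarithmic algorithm's time can be checked with binary search, a standard logarithmic algorithm (our choice of example; the slide does not name one). This Java sketch counts probes in the worst case, a search for a value larger than every element, on arrays of size 2^10 and 2^20 = (2^10)^2. The helper names are hypothetical.

```java
// A sketch of a logarithmic algorithm: binary search on a sorted array.
// It counts probes (middle elements examined) to show that squaring the
// array size roughly doubles the worst-case work.
public class BinarySearchProbes {

    // Returns the number of probes needed to search for target in sorted.
    static int probes(int[] sorted, int target) {
        int low = 0;
        int high = sorted.length - 1;
        int count = 0;
        while (low <= high) {
            int mid = low + (high - low) / 2;
            count++;
            if (sorted[mid] == target) {
                return count;
            } else if (sorted[mid] < target) {
                low = mid + 1;
            } else {
                high = mid - 1;
            }
        }
        return count; // target absent
    }

    // Builds a sorted array of even numbers, so any odd target is absent.
    static int[] sortedArray(int size) {
        int[] a = new int[size];
        for (int i = 0; i < size; i++) {
            a[i] = 2 * i;
        }
        return a;
    }

    public static void main(String[] args) {
        int small = 1 << 10;   // 1,024 = 2^10
        int large = 1 << 20;   // 1,048,576 = small squared
        // Searching past the last element forces the longest path.
        System.out.println(probes(sortedArray(small), 2 * small + 1)); // 11
        System.out.println(probes(sortedArray(large), 2 * large + 1)); // 21
    }
}
```

Squaring the size from 2^10 to 2^20 raises the worst case from 11 to 21 probes, roughly double, as log₂ n predicts.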

Exercises

Using Big Oh notation, indicate the time requirement of each of the following tasks in the worst case. Describe any assumptions that you make.

- a. After arriving at a party, you shake hands with each person there.
- b. Each person in a room shakes hands with everyone else in the room.
- c. You climb a flight of stairs.
- d. You slide down the banister.
- e. After entering an elevator, you press a button to choose a floor.
- f. You ride the elevator from the ground floor up to the nth floor.
- g. You read a book twice.

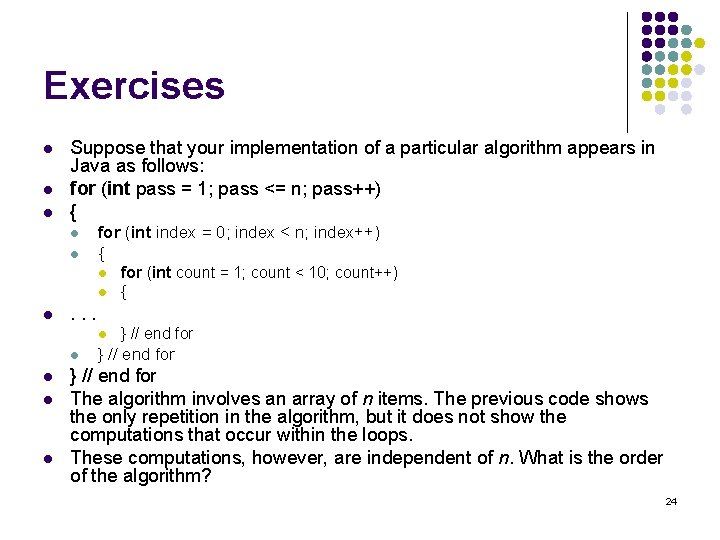

Exercises

Suppose that your implementation of a particular algorithm appears in Java as follows:

```java
for (int pass = 1; pass <= n; pass++)
{
   for (int index = 0; index < n; index++)
   {
      for (int count = 1; count < 10; count++)
      {
         // . . .
      } // end for
   } // end for
} // end for
```

The algorithm involves an array of n items. The previous code shows the only repetition in the algorithm, but it does not show the computations that occur within the loops. These computations, however, are independent of n. What is the order of the algorithm?

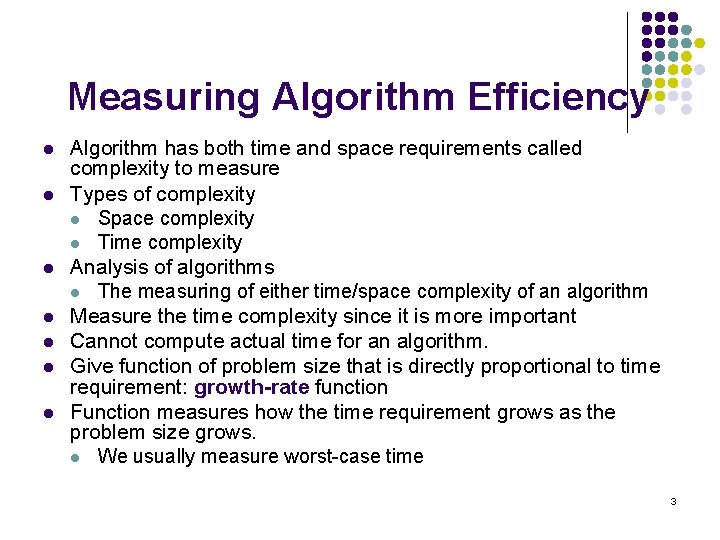

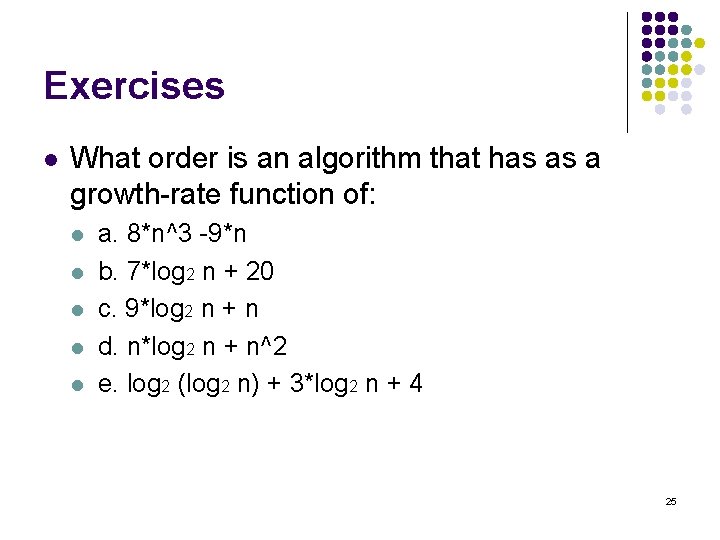

Exercises

What order is an algorithm that has the following growth-rate function?

- a. 8*n^3 - 9*n
- b. 7*log₂ n + 20
- c. 9*log₂ n + n
- d. n*log₂ n + n^2
- e. log₂(log₂ n) + 3*log₂ n + 4